Abstract

Matrix completion aims to recover the missing entries of a partially observed matrix by approximating it with a low-rank structure. Two common approaches, singular value thresholding and matrix factorization with alternating least squares, often become prohibitively expensive for large matrices or when high accuracy is demanded. To address these issues, we propose a rank-restricted hierarchical alternating least squares algorithm with orthogonality and sparsity constraints, which includes a novel shrinkage function. Specifically, to speed up execution, the algorithm updates truncated factor matrices so that the costly shrinkage step is restricted to a smaller problem, and it employs boundary-condition heuristics. Experiments on image completion and recommender systems show that the proposed method converges quickly while achieving comparable or superior reconstruction accuracy relative to state-of-the-art matrix completion methods. For example, in the image completion problem, the proposed algorithm produced outputs approximately 15 times faster on average than the most accurate reference algorithm, while achieving 98% of its accuracy.

1. Introduction

The matrix completion problem, which aims to recover missing or corrupted entries in a partially observed matrix, arises in various fields, such as recommender systems [1,2], computer vision [3,4,5], machine learning [6,7,8], and signal processing [9,10,11]. However, filling in the missing entries of a matrix is challenging because it is inherently ill-posed, as infinitely many completion results may exist. To address this issue, constraints are typically imposed on the complete matrix to capture its inherent structure or regularity. A common constraint is that the underlying matrix is of low rank, meaning that only a few linearly independent latent factors can be used to approximate the missing entries. This assumption is natural in many real-world scenarios. For example, in movie recommender systems, users’ preferences are often explained by only a few underlying factors, such as genres, actors, or directors. This low-rank property makes the matrix completion problem tractable, as it significantly reduces the degrees of freedom. Another important challenge is scalability. In real-world applications, the data matrices to be completed are often extremely large. Even though the data matrix is sparse, matrix completion algorithms typically need to perform computations on the entire matrix to recover the missing entries, requiring prohibitively expensive computational resources. Given the importance of matrix completion in practical applications, numerous methods have been developed to solve the low-rank matrix completion problem, which include optimization-based approaches and machine learning algorithms [6,7,8]. Furthermore, computationally more efficient algorithms have been extensively studied to handle large-scale data matrices [12,13].

In this paper, we focus on enhancing the performance of matrix completion by proposing a novel optimization algorithm and providing empirical evidence of its performance in popular applications, such as image completion and recommender systems. The remainder of this paper is organized as follows. In Section 2, we introduce popular low-rank matrix completion algorithms and highlight our contributions. In Section 3, we propose a new rank-restricted hierarchical alternating least squares algorithm for matrix completion. Section 4 presents an evaluation of the numerical performance of the proposed algorithm using image completion and recommender system data. We conclude with a discussion in Section 5.

2. Related Works and Our Contribution

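The low-rank matrix completion problem is stated as follows. Assume that an incomplete matrix $M \in \mathbb{R}^{m \times n}$ is given whose observed entries are indexed by the set $\Omega$. Matrix completion problems aim to obtain the low-rank matrix X that matches M on the observed positions by solving the optimization problem [14]:

$$\min_{X} \ \operatorname{rank}(X) \quad \text{subject to} \quad X_{ij} = M_{ij}, \ (i,j) \in \Omega. \tag{1}$$

Introducing the sampling operator $P_{\Omega}$ for any matrix G, defined by

$$[P_{\Omega}(G)]_{ij} = \begin{cases} G_{ij}, & (i,j) \in \Omega, \\ 0, & \text{otherwise}, \end{cases} \tag{2}$$

the problem (1) can be rewritten as

$$\min_{X} \ \operatorname{rank}(X) \quad \text{subject to} \quad P_{\Omega}(X) = P_{\Omega}(M). \tag{3}$$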

Unfortunately, direct rank minimization in (3) is NP-hard [15]. As a result, a close approximation that relaxes the rank minimization problem in (3) is required. For example, relaxations based on the nuclear norm and related norms have been studied [12,14,16,17]. Candès et al. [14] introduced a convex relaxation of the non-convex problem in (3) by employing nuclear norm regularization, such that

and showed that the optimal solution of (4) is equivalent to solving the following problems iteratively, such that

where indicates the step length, k represents the iteration step, and the function is the soft singular value thresholding (SVT) operator with the predefined threshold value . Inspired by the work of SVT, several methods based on nonlinear SVT algorithms have been proposed [12,18,19,20].
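For readers unfamiliar with SVT, the operator and the iteration are commonly written as follows in the SVT literature. The symbols $\mathcal{D}_{\tau}$, $\tau$, $\delta_k$, $Y^{k}$, and $P_{\Omega}$ are notation assumed here for illustration and may differ from the symbols used in (4) and (5).

```latex
% Soft singular value thresholding of a matrix Y with SVD Y = U \Sigma V^T
\mathcal{D}_{\tau}(Y) = U \,\mathrm{diag}\big(\max(\sigma_i - \tau,\, 0)\big)\, V^{T},
\qquad \Sigma = \mathrm{diag}(\sigma_1, \ldots, \sigma_r)

% One SVT iteration with step length \delta_k, starting from Y^0 = 0
X^{k} = \mathcal{D}_{\tau}\big(Y^{k-1}\big), \qquad
Y^{k} = Y^{k-1} + \delta_k \, P_{\Omega}\big(M - X^{k}\big)
```

Each iteration requires an SVD of a matrix of the same size as M, which is the cost that factorization-based methods try to avoid.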

The method proposed by Candès et al. provided rigorous mathematical derivations of the problems and yielded excellent low-rank approximations of M. However, it demands substantial computational resources because it requires a singular value decomposition (SVD) in every iteration. To alleviate this burden, alternating minimization-based matrix factorization techniques have been explored. Assuming that the rank of X is known, one seeks two factor matrices A and B from M that minimize

A basic alternating minimization scheme updates one factor matrix while fixing the other, such that

Tanner and Wei proposed an alternating steepest-descent method to solve (7), which iteratively updates A and B using the steepest descent [13]. Hastie et al. [21] considered a nuclear norm-based matrix completion algorithm combined with the matrix factorization method, based on the following equation:

Equation (8) implies that the nuclear norm of a matrix can be approximated by the sum of the Frobenius norms of its factor matrices. By substituting (8) into their matrix completion formulation, Hastie et al. obtained

Subsequently, they employed alternating minimization techniques to determine the optimal factor matrices A and B. Since solving (9) does not require an SVD, and the sizes of the factor matrices are smaller than that of X, the algorithm is expected to run faster than SVT in (5). However, the algorithm proposed by Hastie et al. is highly dependent on an accurate estimate of the rank; an incorrect value can cause the loss of crucial low-rank information or unnecessary increases in computational time. Later, Yang et al. and Li et al. proposed non-negative matrix-factorization algorithms with an adaptive graph to mitigate sensitivity to noise or outliers [22,23]. Xu et al. combined rank-1 matrix completion with an adaptive local filter to simultaneously incorporate local information and low-rank features [24]. Specifically, Xu et al. incrementally added dominant singular triplets to X and integrated it with locally filtered outputs to obtain the completed matrix. Xiao et al. proposed the tight-and-flexible rank (TFR) function, a tunable non-convex surrogate that approximates the true rank more closely than existing convex or non-convex penalties, and integrated it into a proximal alternating minimization algorithm [17]. Depending on the data characteristics, deep learning-based matrix completion approaches can also be considered [6,8,25]. Specifically, deep learning-based methods offer significant advantages for highly sparse, nonlinear, and complex data matrices compared with the matrix factorization approaches introduced in this section.
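The factorization identity that the approach of Hastie et al. relies on, and the regularized objective that results from it, are commonly stated as follows in the matrix factorization literature. The notation below (factor matrices A and B with r columns, regularization weight $\lambda$, sampling operator $P_{\Omega}$) is assumed for illustration and may differ in scaling from (8) and (9); the identity holds when r is at least the rank of X.

```latex
% Nuclear norm as a minimum over factorizations X = A B^T
\|X\|_{*} = \min_{A,\,B \,:\, X = A B^{T}} \ \frac{1}{2}\left( \|A\|_{F}^{2} + \|B\|_{F}^{2} \right)

% Regularized factorized completion objective obtained by the substitution
\min_{A,\,B} \ \frac{1}{2}\left\| P_{\Omega}\!\left(M - A B^{T}\right) \right\|_{F}^{2}
 + \frac{\lambda}{2}\left( \|A\|_{F}^{2} + \|B\|_{F}^{2} \right)
```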

This study concentrates on computing an accurate low-rank approximation of the incomplete matrix while maintaining computational efficiency. To attain this goal, a rank-restricted hierarchical alternating least squares algorithm with orthogonality and sparsity constraints is proposed. Specifically, the main contributions of the proposed algorithm are as follows:

- A novel optimal relaxation of (3) that enforces adjustable sparsity and incorporates an orthogonality constraint is proposed, yielding more accurate and computationally efficient results than the procedure in (9). Specifically, the orthogonality constraint is included to enhance computational efficiency, while a new shrinkage function is derived to enforce sparsity in the solution.

- To improve the computational efficiency within a single iteration, we apply hierarchical alternating minimization to the proposed model, enabling faster computation through column-wise updates rather than full-matrix updates. This method remains effective even with a relatively rough estimate of the rank and is faster than the procedure in (7).
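To make the sampling operator and the basic alternating minimization scheme reviewed in this section concrete, the following minimal Python sketch fits a rank-1 factorization to the observed entries only. It is a toy illustration, not the proposed algorithm: the function names `sampling_operator` and `als_rank1`, the representation of the observed index set as a Python set of `(row, column)` pairs, and the example matrix are all assumptions made for this example.

```python
def sampling_operator(G, omega):
    """Keep the entries of G indexed by omega; set every other entry to zero."""
    return [
        [G[i][j] if (i, j) in omega else 0.0 for j in range(len(G[i]))]
        for i in range(len(G))
    ]


def als_rank1(M, omega, n_iter=50):
    """Fit M[i][j] ~ a[i] * b[j] on the observed entries by alternating least squares."""
    m, n = len(M), len(M[0])
    a = [1.0] * m
    b = [1.0] * n
    for _ in range(n_iter):
        # Update a with b fixed: each a[i] solves a scalar least-squares problem.
        for i in range(m):
            num = sum(M[i][j] * b[j] for j in range(n) if (i, j) in omega)
            den = sum(b[j] ** 2 for j in range(n) if (i, j) in omega)
            if den > 0:
                a[i] = num / den
        # Update b with a fixed, again using observed entries only.
        for j in range(n):
            num = sum(M[i][j] * a[i] for i in range(m) if (i, j) in omega)
            den = sum(a[i] ** 2 for i in range(m) if (i, j) in omega)
            if den > 0:
                b[j] = num / den
    return a, b


# Hypothetical rank-1 matrix: the outer product of [1, 2, 3] and [1, 2].
M_full = [[1.0, 2.0],
          [2.0, 4.0],
          [3.0, 6.0]]
omega = {(0, 0), (0, 1), (1, 0), (1, 1), (2, 0)}   # entry (2, 1) is hidden
M_obs = sampling_operator(M_full, omega)
a, b = als_rank1(M_obs, omega)
estimate = a[2] * b[1]   # prediction for the hidden entry
```

In this toy case the observed entries determine the rank-1 structure, so the prediction for the hidden entry approaches its true value of 6. Each update here is a scalar division; for higher ranks, the classical scheme solves a small linear system per row instead.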

3. Rank-Restricted Hierarchical Alternating Least Squares Algorithm

In this section, an efficient matrix completion algorithm, which employs hierarchical alternating least squares, is proposed. Also, orthogonality and sparsity constraints are incorporated to improve the accuracy of the matrix completion problem.

3.1. Rank-Restricted Hierarchical Alternating Least Squares with Orthogonality Constraint

Here, the hierarchical alternating least squares algorithm for the matrix completion problem is derived. Consider the matrix completion problem in (4). By substituting (8) into (4), the Lagrange multiplier equation of (4) is given as follows:

In (10), the scalar coefficient is the Lagrange multiplier. Because the factor matrices A and B can be interpreted as $U\Sigma$ and V, respectively, where $X = U\Sigma V^{T}$ denotes the SVD of X, it is reasonable to impose the orthogonality constraint on B in (10), such that

where a matrix denotes the identity matrix and represents another Lagrange multiplier [26,27]. The orthogonality constraint encourages B to be orthogonal, an assumption used in the derivation of the proposed low-rank optimal relaxation method; details are provided in Section 3.2.

Remark 1.

The orthogonality constraint in (11) does not strictly enforce orthogonality; rather, it helps to avoid the zero-lock problem, which can cause intermediate results to stall at zero values and lead to convergence to a suboptimal solution [28]. Therefore, the orthogonality constraint in (11) employs an adjustable degree of orthogonality to impose a relaxed constraint on B. The Supplementary Materials present experimental results demonstrating the role of the constraint by comparing the number of iterations required for convergence with and without the constraint.

Instead of directly computing the optimal solution of (11) using the classical alternating least squares method, we employ the hierarchical alternating least squares technique to avoid matrix inversions [29]. Let $A = [a_1, \ldots, a_r]$ and $B = [b_1, \ldots, b_r]$. The column-wise minimization of (11) can be written as follows:

The optimal solution of the column-wise minimization in (12) is obtained by taking derivatives with respect to each column $a_i$ and $b_i$ for $i = 1, \ldots, r$ in an alternating manner. For instance, the optimal i-th columns $a_i$ and $b_i$ are obtained by solving the alternating least squares problem derived from (12), such that

where , and the constants and in (13) are defined as

For large-scale matrix completion problems, a further reduction in computational cost is crucial. To this end, a factor-by-factor procedure, which is better suited to modern parallel computer architectures, is preferred to the column-by-column procedure in (13). Consequently, the updates for A and B can be obtained as

where the matrix operations in (15) can be pre-computed. The detailed procedure of this algorithm is described in the following section.
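The reason column-wise updates avoid matrix inversions can be seen on a simplified objective. The derivation below is a sketch only: it omits the orthogonality term of (11) and assumes that the missing entries of M have been filled with current estimates, so it is not the exact update in (13). The residual $R_i$ and the weight $\lambda$ are symbols introduced here for illustration.

```latex
% Simplified column-wise objective for the i-th pair (a_i, b_i)
R_i = M - \sum_{j \neq i} a_j b_j^{T}, \qquad
f(a_i, b_i) = \frac{1}{2}\left\| R_i - a_i b_i^{T} \right\|_{F}^{2}
 + \frac{\lambda}{2}\left( \|a_i\|_2^{2} + \|b_i\|_2^{2} \right)

% Setting the gradient with respect to a_i to zero (b_i fixed)
\nabla_{a_i} f = -\left( R_i - a_i b_i^{T} \right) b_i + \lambda a_i = 0
\;\;\Longrightarrow\;\;
a_i = \frac{R_i b_i}{b_i^{T} b_i + \lambda}

% By symmetry, with a_i fixed
b_i = \frac{R_i^{T} a_i}{a_i^{T} a_i + \lambda}
```

Both denominators are scalars, so each column update costs a matrix-vector product and a division rather than the solution of a linear system.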

3.2. Sparsity Constraint and Imposing the Boundary Condition

In this section, we discuss techniques for improving the performance of the proposed algorithm. One technique is to incorporate a sparsity constraint. The sparsity constraint enhances the quality of image denoising or filtering [30,31], and it is an essential prerequisite for recommender system problems [1]. Specifically, rather than truncating the SVD of A with a predefined threshold, it is reasonable to enforce sparsity by applying a suitable nonlinear element-wise projection or filtering function, known as a shrinkage function, with the same threshold, because the missing entries of the incomplete matrix act like sparse, high-magnitude noise when constructing . In this regard, we propose a novel shrinkage function for the sparsity constraint in the matrix completion problem.

Consider the optimization problem of the surrogate function obtained from (10), defined as follows:

for some fixed . Then, the optimal solution to the matrix completion problem with a sparsity constraint can be obtained by solving

Proposition 1.

Let under the assumption in the previous section. Then, the solution of is obtained by applying the shrinkage operator to X, which is defined as

where is the i-th element of the diagonal matrix Σ, , and .

Proof.

Taking the derivative of with respect to B while keeping A fixed yields

Rearranging (19) and taking the -th root to the equation gives

Hence,

where . Let and . If we rewrite (21) as a sum of vectors such that

then X is obtained by

Let the SVD of M be , where , , and . Because we assumed earlier in this section that , we can assume that , , and for . Thus, X is computed as

Therefore, based on (24), the solution of is obtained by applying the shrinkage operator defined in (18), and that completes the proof. □

Proposition 2.

Proof.

See [14]. □

Remark 2.

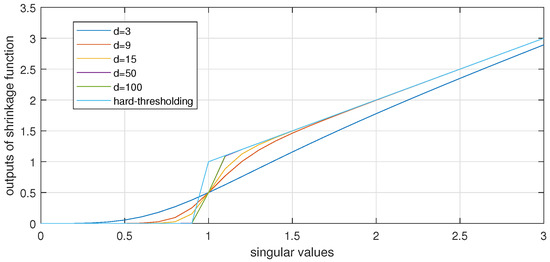

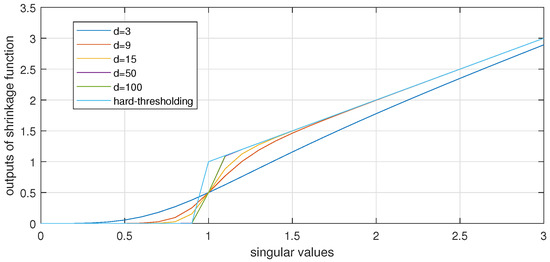

The shrinkage function (18) sharply filters out the small singular values ,

when d is sufficiently large. Contrarily, for large singular values, since , . Figure 1 shows this behavior for singular values uniformly distributed in ; as d increases, the curve approaches that of the Iterative Hard Thresholding (IHT) operator, which enforces sparsity to the results [32].
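For comparison, the two classical behaviors referred to here, soft thresholding as used in SVT and hard thresholding as used in IHT, can be sketched on a list of singular values. This is not the shrinkage function (18); the function names and the threshold `tau` are assumptions introduced for illustration.

```python
def soft_threshold(singular_values, tau):
    """Soft thresholding: shrink every value by tau and clip at zero."""
    return [max(s - tau, 0.0) for s in singular_values]


def hard_threshold(singular_values, tau):
    """Hard thresholding: keep values above tau unchanged, zero out the rest."""
    return [s if s > tau else 0.0 for s in singular_values]


sigma = [5.0, 2.0, 0.5]
soft = soft_threshold(sigma, tau=1.0)   # large values are biased downward by tau
hard = hard_threshold(sigma, tau=1.0)   # large values pass through unchanged
```

Soft thresholding reduces every retained singular value by the threshold, whereas hard thresholding leaves large singular values intact, which is the limiting behavior the text attributes to the proposed function for large d.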

Figure 1.

Shrinkage function with different d.

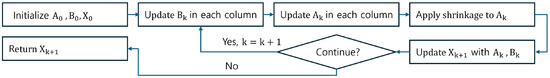

The proposed hierarchical alternating least squares minimization algorithm for the matrix completion problem (HALMC) is summarized in Algorithm 1, and Figure 2 provides a high-level workflow for additional clarity.

| Algorithm 1 | |
|---|---|
| 1: | |
| 2: and , where | |
| 3: for k = 1,2,… do | |
| 4: , , and compute defined in (14) | ▷ |
| 5: for j = 1,2,…,r do | |
| 6: | ▷ |
| 7: end for | |
| 8: , and compute defined in (14) | ▷ |
| 9: for j = 1,2,…,r do | |
| 10: | ▷ |
| 11: end for | |
| 12: | ▷ |
| 13: where shrinkage operator is defined in (18) | |
| 14: Set | ▷ |
| 15: | ▷ |
| 16: if then | |
| 17: break | |
| 18: end if | |
| 19: end for | |
| 20: return | |

Figure 2.

Diagram of proposed algorithm.

Another technique is to impose a boundary condition on M as a preprocessing step for HALMC. It is well known that images reconstructed from truncated singular values are affected by the boundary values [33]. Therefore, considering the boundary condition may improve the performance of image completion, for example, by reducing ringing artifacts, thus saving computational resources. For a detailed mathematical formulation imposing a boundary condition on low-rank matrix recovery, readers may refer to [34,35]. For the same reason, in the recommender system application, it is possible to improve the performance of HALMC by considering the boundary condition. Typical boundary conditions include zero (i.e., Dirichlet), periodic, and reflective (i.e., Neumann) boundary conditions. Among them, the reflective boundary condition, where the entries outside the matrix form a mirror image of the interior, is preferred in many image processing applications because it prevents discontinuity near the boundary. For example, a matrix M and its reflective extension of radius 3 are

In this study, a reflective boundary condition is applied to each incomplete matrix before executing HALMC. Subsequently, the restored matrix is cropped back to its original size.
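The pad-then-crop preprocessing described above can be sketched as follows. This is a minimal illustration under stated assumptions: the edge entries are repeated in the mirror image (symmetric reflection), the radius is at least 1 and no larger than either matrix dimension, and the names `reflect_pad` and `crop` are hypothetical. The exact mirroring convention used for HALMC may differ.

```python
def reflect_pad(M, radius):
    """Extend M by `radius` rows and columns on each side with mirrored entries.

    Assumes 1 <= radius <= min(number of rows, number of columns) and that
    the edge entries themselves are repeated in the mirror image.
    """
    def mirror(seq):
        return seq[:radius][::-1] + seq + seq[-radius:][::-1]

    padded_rows = [mirror(list(row)) for row in M]        # reflect left/right
    return [list(row) for row in mirror(padded_rows)]     # reflect top/bottom


def crop(P, radius):
    """Undo reflect_pad by removing `radius` rows and columns from each side."""
    return [row[radius:-radius] for row in P[radius:-radius]]


M = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
P = reflect_pad(M, radius=1)   # 5 x 5 extension
restored = crop(P, radius=1)   # back to the original 3 x 3 size
```

In a completion pipeline, the completion algorithm would run on the padded matrix (with the observed index set padded in the same way) before cropping.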

3.3. Computational and Memory Complexity

HALMC performs an SVD at each iteration, but the decomposition is applied to the smaller factor matrix rather than to , thereby reducing computational cost. If HALMC converges at iteration k, the overall complexity is approximately flops. Detailed operation counts appear as comments in the pseudocode. For the memory cost analysis, we assume that only the entries of X in are required, resulting in a cost of . The memory required for the intermediate factor matrices is . Therefore, the total memory cost is .

4. Numerical Experiments

This section presents numerical experiments designed to demonstrate the efficiency and accuracy of the proposed algorithm. For performance comparison with HALMC, we evaluated the following reference algorithms: Singular Value Thresholding (SVT) [14], Modified Schatten 2/3-Norm Minimization with Reweighting (TSNMR) [12], Rank-Restricted Efficient Minimum-Margin Matrix Factorization: SoftImpute-ALS (SoftImp) proposed by Hastie et al. [21] (p. 3375), Low-Rank Matrix Completion (LAMC) proposed by Xu et al. [24], and matrix completion with Tight and Flexible Rank (TFR) proposed by Xiao et al. [17] (https://jin-liangxiao.github.io/, accessed on 1 August 2025). Note that SVT, TSNMR, and TFR focus on developing novel shrinkage functions for matrix completion, while SoftImp and LAMC employ rank-restricted matrix factorization. All experiments were implemented using MATLAB version 9.10.00.1710957. The algorithms were tested on two common applications, image completion and recommender systems, and were executed on an Intel Core i9-11900K processor with 64 GB of memory.

4.1. Image Completion Problem

One of the most popular applications of matrix completion is image completion. The image completion problem, also known as image inpainting, involves repairing defects in individual pixel values or regions of digital images caused by dirt, scratches, or cracks on a charge-coupled device (CCD), or by impulse-type noise [3,36]. Given the locations of the contaminated pixels, these pixels are treated as missing. An intuitive approach to restoring the missing pixels is to replace their values with values interpolated from adjacent pixels. Bertalmio et al. enhanced this approach by iteratively diffusing values from the surrounding region, exploiting the idea of the thermal diffusion equation in physics [37]. Later, the computational cost of the algorithm proposed by Bertalmio et al. was reduced by considering a total variation model [38]. Another strategy for image completion involves exploiting the low-rank structure inherent in many images. Low-rank image completion algorithms predict missing pixels using a low-rank approximation of the partially observed pixels from the image matrix. In this work, we focus on reconstructing the incomplete images based on low-rank image completion methods. We also demonstrate that the proposed algorithm is effective for image completion problems. For the experiments, we used popular natural images with randomly generated missing pixels to evaluate the performance of the algorithms.

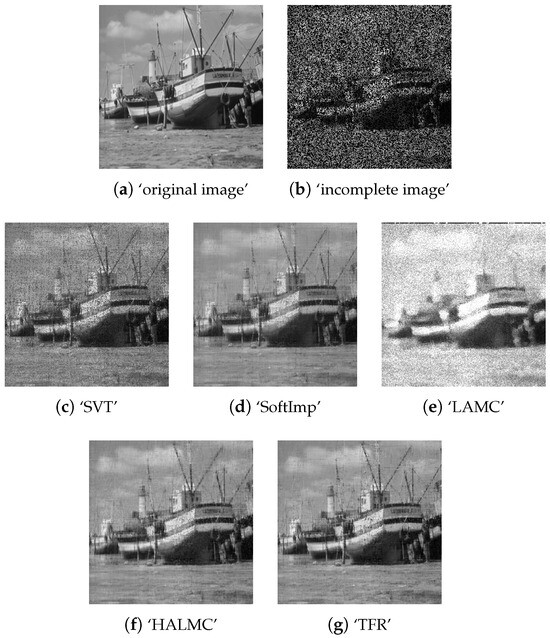

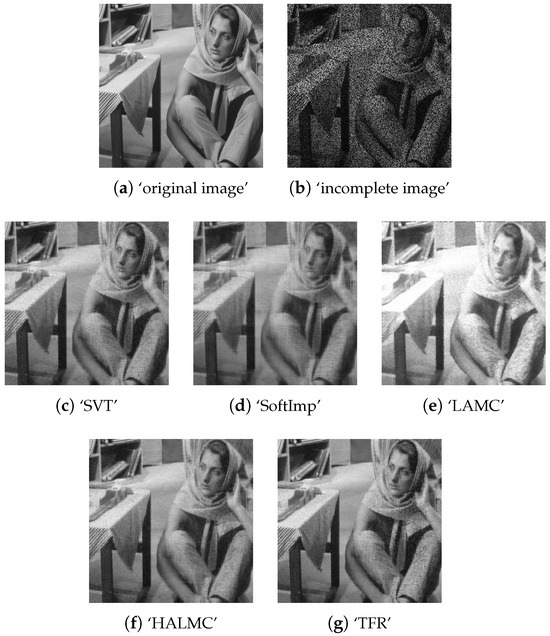

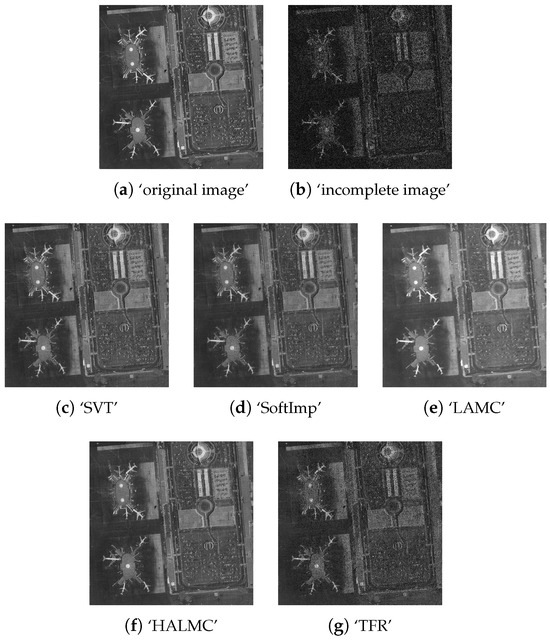

Figure 3a, Figure 4a, Figure 5a and Figure 6a depict the natural images used in our experiments. The images have dimensions of , , , and , respectively. To compare the performance of the algorithms, we measured execution time, the number of iterations to converge, mean-squared error (MSE), peak signal-to-noise ratio (PSNR), structural similarity index measure (SSIM), and feature similarity index (FSIM) [39]. PSNR is defined as

and SSIM is defined as

In (27) and (28), represents the original image without missing pixels, while X denotes the image reconstructed by the algorithms. The term denotes the average of an arbitrary matrix M, denotes its variance, and represents the covariance between two arbitrary matrices M and N. The constants and are included to stabilize the division in cases where the denominator is small. While MSE and PSNR provide basic pixel-wise error measurements, SSIM evaluates structural fidelity, and FSIM captures perceptually important features, particularly in textured regions. Therefore, higher SSIM and FSIM values, along with lower MSE and higher PSNR, indicate better perceptual and numerical similarity to the ground truth.
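The pixel-wise metrics can be sketched as follows, assuming the common definition PSNR = 10 log10(peak² / MSE) with a peak value of 255 for 8-bit images. The exact constants in (27) may differ, and the function names and the small test images are hypothetical.

```python
import math


def mse(X_true, X_rec):
    """Mean-squared error between two equally sized images (lists of rows)."""
    total = sum((t - r) ** 2
                for row_t, row_r in zip(X_true, X_rec)
                for t, r in zip(row_t, row_r))
    return total / (len(X_true) * len(X_true[0]))


def psnr(X_true, X_rec, peak=255.0):
    """PSNR in dB, where `peak` is the assumed maximum possible pixel value."""
    err = mse(X_true, X_rec)
    return math.inf if err == 0 else 10.0 * math.log10(peak ** 2 / err)


original = [[0.0, 255.0], [128.0, 64.0]]
restored = [[0.0, 250.0], [128.0, 69.0]]
error = mse(original, restored)    # two pixels are off by 5 each
value = psnr(original, restored)   # roughly 37 dB
```

A perfect reconstruction has zero MSE, which this sketch reports as an infinite PSNR rather than dividing by zero.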

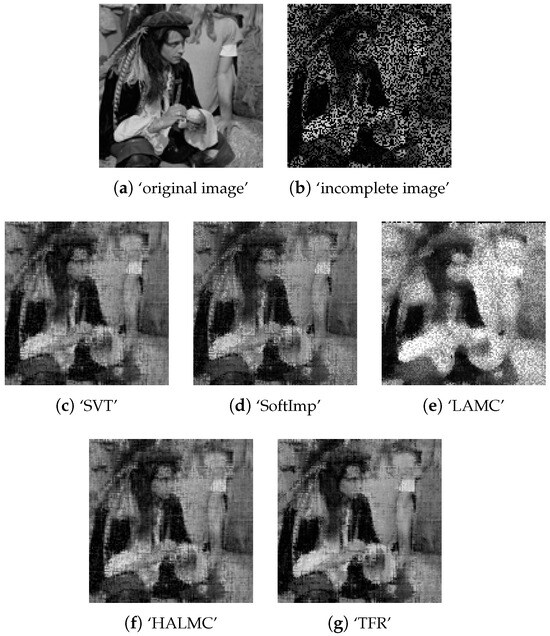

Figure 3.

Experimental results of the algorithm on ‘indian’ image with 50% missing pixels.

Figure 4.

Experimental results of the algorithm on ‘boat’ image with 50% missing pixels.

Figure 5.

Experimental results of the algorithm on ‘barbara’ image with 50% missing pixels.

Figure 6.

Experimental results of the algorithm on ‘airport’ image with 50% missing pixels.

To simulate incomplete images, we randomly selected missing pixels with various missing rates. Specifically, we randomly removed 30%, 50%, and 70% of the pixels from the entire image area and treated them as missing. To determine the optimal hyperparameters, we conducted empirical experiments by varying one hyperparameter at a time while keeping the others fixed. Based on these experiments, we set defined in (12), the truncation level as for both HALMC and SoftImp (where m is the size of the square test image), as defined in (18), and the boundary condition radius to 3. For the detailed procedure used to select the hyperparameters for HALMC, readers may refer to the Supplementary Materials.
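The random removal of pixels at a given missing rate can be sketched as follows. The function name `random_observed_set`, the fixed seed, and the 10 x 10 image size are assumptions made for illustration; the original experiments were run in MATLAB and their sampling procedure may differ.

```python
import random


def random_observed_set(n_rows, n_cols, missing_rate, seed=0):
    """Return the set of (row, col) positions that remain observed.

    Exactly round(missing_rate * n_rows * n_cols) positions are removed,
    chosen uniformly at random with a fixed seed for reproducibility.
    """
    positions = [(i, j) for i in range(n_rows) for j in range(n_cols)]
    n_missing = round(missing_rate * len(positions))
    rng = random.Random(seed)
    missing = set(rng.sample(positions, n_missing))
    return set(positions) - missing


# Hypothetical 10 x 10 image with 30% of its pixels removed.
omega = random_observed_set(10, 10, missing_rate=0.3)
```

Averaging over independent trials, as done in the experiments, would correspond to calling this function with a different seed for each trial.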

To ensure a fair comparison among all algorithms, we define the stopping criterion as follows:

where is the predefined threshold. All experimental results were averaged over 20 independent trials.

Table 1, Table 2, Table 3 and Table 4 present the experimental results of all compared algorithms. To highlight the best performance, the top two results in each table are boldfaced. Since TSNMR produced meaningless output due to its inaccuracy and failure to converge, its results were excluded from the analysis. According to the experimental results, HALMC, TFR, and SVT achieved the highest visual quality across all test images and incompletion ratios. In some cases, such as ‘indian’ and ‘barbara’ under specific incompletion ratios, SVT produced highly accurate image restorations. TFR, in particular, achieved the best accuracy for the ‘indian’ image with 30% missing pixels. However, overall, the most accurate results across the experiments were achieved by HALMC. Specifically, HALMC consistently outperformed the other methods in terms of overall pixel-level differences (measured by MSE and PSNR) and structural differences (measured by SSIM and FSIM). Moreover, HALMC produced stable outputs regardless of the incompletion ratios, unlike SVT and TFR, which tended to yield less accurate results as the missing pixel rate increased. LAMC showed relatively higher FSIM values compared to the other accuracy metrics, such as MSE and PSNR. This suggests that the local-filtering module in LAMC improved the local feature similarities, particularly under severe image degradation.

Table 1.

Performance comparisons of the algorithms on the ‘indian’ image. The hyperparameters are set to and for HALMC.

Table 2.

Performance comparisons of the algorithms on ‘boat’ image. The hyperparameters are set to and for HALMC.

Table 3.

Performance comparisons of the algorithms on ‘barbara’ image. The hyperparameters are set to and for HALMC.

Table 4.

Performance comparisons of the algorithms on ‘airport’ image. The hyperparameters are set to and for HALMC.

In terms of execution time, HALMC demonstrated significant efficiency while maintaining high accuracy. Although SoftImp achieved the fastest execution time, its performance degraded more rapidly than that of HALMC as the incompletion ratio increased. Overall, considering the execution time, robustness, and accuracy in image completion, HALMC emerges as the most effective of the evaluated algorithms. Example output images generated by the algorithms for the case of 50% missing pixels are shown in Figure 3c–g, Figure 4c–g, Figure 5c–g and Figure 6c–g.

4.2. Recommender System

Another application of matrix completion is the recommender system. The primary goal of recommender systems is to provide personalized item recommendations to users based on their explicit or implicit preference information. Specifically, user preferences are typically estimated using ratings of similar items, indicating the user’s affinity for related items. As the rating data of two entities (users and items) are often placed in matrix form, and users tend to rate only a small subset of items, estimating the preferences can be considered as predicting missing entries of a large and sparse matrix. To address this challenge, matrix completion algorithms have been extensively studied for decades. One widely adopted approach involves low-rank approximation of the rating matrix, providing an effective solution for predicting missing entries and enhancing the accuracy of recommender systems [1,2].

The low-rank matrix completion algorithms predict missing values by using the low-rank approximation of partially observed rating values in the rating matrix. Given a set of users and items , if we let be the rating of user u and item i, then the rating matrix can be defined as

where the set is observed. Hence, the proposed low-rank matrix completion algorithm is applicable to recommender systems to fill in the missing rating values. To measure the performance of the proposed algorithm on recommender systems, we used the MovieLens 100k dataset, which has a rating matrix , the MovieLens 1M dataset, which has a rating matrix , and the Bookcrossing dataset, which has a rating matrix of size . In the MovieLens 100k dataset, 97.48% of the entries are unrated; the corresponding figures are 98.32% for MovieLens 1M and 99.95% for Bookcrossing. The rating values range from 1 to 5 for MovieLens 100k and MovieLens 1M, and from 1 to 10 for Bookcrossing. To evaluate performance, we randomly chose 20%, 40%, and 60% of the non-zero rating values in the datasets and considered them as missing values. After completing the rating matrices, we calculated the mean absolute error (MAE) and the root mean square error (RMSE) with respect to the ground truth data, where MAE and RMSE are defined as follows [2]:

Here, represents the set of randomly chosen missing values to be predicted, indicates the ground-truth rating matrix, and N denotes the number of missing values in . A lower MAE or RMSE indicates that the predicted ratings are closer to the ground truth. As in the image completion experiments, we empirically chose the hyperparameters of each algorithm, as well as the common parameters, including the stopping criterion.
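The two error measures can be sketched as follows, assuming the usual definitions: MAE is the mean absolute difference and RMSE is the square root of the mean squared difference, both taken over the held-out ratings only. The function name `mae_rmse` and the small rating matrices are hypothetical.

```python
import math


def mae_rmse(R_true, R_pred, held_out):
    """MAE and RMSE over the held-out (user, item) positions only."""
    errors = [R_pred[u][i] - R_true[u][i] for (u, i) in held_out]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    return mae, rmse


# Hypothetical 2 x 2 rating matrices with two held-out ratings.
R_true = [[5.0, 3.0], [4.0, 1.0]]
R_pred = [[4.0, 3.0], [4.0, 3.0]]
held_out = [(0, 0), (1, 1)]
mae, rmse = mae_rmse(R_true, R_pred, held_out)
```

Because RMSE squares the errors before averaging, it penalizes a few large mispredictions more heavily than MAE does, which is why the two metrics are usually reported together.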

Table 5, Table 6 and Table 7 present the experimental results. As with the image completion experiments, SoftImp demonstrated relatively fast computation speed but yielded unsatisfactory accuracy. SVT produced highly accurate results; however, it suffered from excessive computation time, which makes it less viable for large recommender system datasets. Additionally, SVT showed limited robustness in terms of convergence. TSNMR achieved excellent accuracy across nearly all recommender system datasets, but it failed to converge within the predefined maximum number of iterations. The convergence history for TSNMR is shown in the Supplementary Materials. This resulted in prohibitively high computational costs, rendering TSNMR impractical for large-scale applications. In contrast, HALMC consistently demonstrated the best execution speed across all recommender system datasets and varying missing rates, making it well-suited for large datasets. Although HALMC did not achieve the highest accuracy in all cases, its significantly faster execution speed and reasonable accuracy make it a practical and scalable option for a recommender system.

Table 5.

Performance comparisons of the algorithms on ‘Movielens 100K’ dataset. The hyperparameters are set to and for HALMC.

Table 6.

Performance comparisons of the algorithms on ‘Movielens 1M’ dataset. The hyperparameters are set to and for HALMC.

Table 7.

Performance comparisons of the algorithms on ‘Bookcrossing’ dataset. The hyperparameters are set to and for HALMC.

Consequently, HALMC stands out as the most attractive algorithm for recommender system applications, offering a strong balance between accurate restoration of missing entries and significantly reduced computation time compared to other matrix completion algorithms.

5. Conclusions and Future Works

This study introduces a rank-restricted hierarchical alternating least squares algorithm for matrix completion applications. Typical algorithms for matrix completion often demand substantial computational resources while iteratively computing the extremely expensive SVD, particularly when using large-sized images. To overcome these difficulties, we employ a rank-restricted matrix factorization with an orthogonality constraint using hierarchical alternating least squares. Additionally, we propose a novel shrinkage function of singular values to enforce the sparsity constraint. The experimental results demonstrate that the proposed algorithm restores an incomplete matrix significantly faster than existing methods, while maintaining similar or even superior accuracy in image completion problems and recommender systems. In future work, we plan to explore two directions to enhance the applicability and scalability of the proposed algorithm. First, we aim to incorporate an adaptive selection of the hyperparameters to enable more robust optimization. Second, to further address the computational demands of large-scale applications, we intend to develop a scalable implementation of the proposed algorithm. This includes parallelization strategies using GPU computing and distributed frameworks.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/app15168876/s1, Figure S1: Empirical analysis for selecting the hyperparameters and used in HALMC with 30% randomly missing pixels. Figure S2: Empirical analysis for selecting the hyperparameters and boundary condition number used in HALMC with 30% randomly missing pixels. Figure S3: Empirical analysis for selecting the hyperparameters truncation level and d defined in (23) for HALMC with 30% randomly missing pixels. Truncation level is the ratio of the image size. Figure S4: Empirical analysis for selecting the hyperparameters and used in HALMC with 50% randomly missing pixels. Figure S5: Empirical analysis for selecting the hyperparameters and boundary condition number used in HALMC with 50% randomly missing pixels. Figure S6: Empirical analysis for selecting the hyperparameters truncation level and d defined in (23) for HALMC with 50% randomly missing pixels. Truncation level is the ratio of the image size. Figure S7: Empirical analysis for selecting the hyperparameters and used in HALMC with 70% randomly missing pixels. Figure S8: Empirical analysis for selecting the hyperparameters and boundary condition number used in HALMC with 70% randomly missing pixels. Figure S9: Empirical analysis for selecting the hyperparameters truncation level and d defined in (23) for HALMC with 70% randomly missing pixels. Truncation level is the ratio of the image size. Figure S10: Convergence history of TSNMR when MovieLens 100k dataset with 40% missing entries is used.

Funding

This work was supported by the Hankuk University of Foreign Studies Research Fund.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The author declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| HALMC | Hierarchical Alternating Least Squares for Matrix Completion |

References

- Ramlatchan, A.; Yang, M.; Liu, Q.; Li, M.; Wang, J.; Li, Y. A Survey of Matrix Completion Methods, for Recommendation Systems. Big Data Min. Anal. 2018, 1, 308–323. [Google Scholar] [CrossRef]

- Chen, Z.; Wang, S. A review on matrix completion for recommender systems. Knowl. Inf. Syst. 2022, 64, 1–34.

- Jam, J.; Kendrick, C.; Walker, K.; Drouard, V.; Hsu, J.G.; Yap, M.H. A comprehensive review of past and present image inpainting methods. Comput. Vis. Image Underst. 2021, 203, 103147.

- Li, J.; Li, M.; Fan, H. Image Inpainting Algorithm Based on Low-Rank Approximation and Texture Direction. Math. Prob. Eng. 2014.

- Xu, J.; Chen, Y.; Zhang, X. Color image inpainting based on low-rank quaternion matrix factorization. J. Ind. Manag. Optim. 2024, 20, 825–837.

- Fan, J.; Cheung, J. Matrix completion by deep matrix factorization. Neural Netw. 2018, 98, 34–41.

- Xu, M.; Jin, R.; Zhou, Z. Speedup matrix completion with side information: Application to multi-label learning. In Proceedings of the NIPS’13: 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; Volume 2, pp. 2301–2309.

- Radhakrishnan, A.; Stefanakis, G.; Belkin, M.; Uhler, C. Simple, fast, and flexible framework for matrix completion with infinite width neural networks. Proc. Natl. Acad. Sci. USA 2022, 119, e2115064119.

- Candès, E.; Eldar, Y.; Strohmer, T. Phase retrieval via matrix completion. SIAM Rev. 2015, 57, 225–251.

- Kalogerias, D.S.; Petropulu, A.P. Matrix Completion in Colocated MIMO Radar: Recoverability, Bounds & Theoretical Guarantees. IEEE Trans. Signal Process. 2013, 62, 309–321.

- Sun, S.; Zhang, Y.D. 4D Automotive Radar Sensing for Autonomous Vehicles: A Sparsity-Oriented Approach. IEEE J. Sel. Top. Signal Process. 2021, 15, 879–891.

- Ha, J.; Li, C.; Luo, X.; Wang, Z. Matrix completion via modified Schatten 2/3-norm. EURASIP J. Adv. Signal Process. 2023, 2023, 62.

- Tanner, J.; Wei, K. Low rank matrix completion by alternating steepest descent methods. Appl. Comput. Harmon. Anal. 2016, 40, 417–429.

- Cai, J.F.; Candès, E.J.; Shen, Z. A Singular Value Thresholding Algorithm for Matrix Completion. SIAM J. Optim. 2010, 20, 1956–1982.

- Recht, B.; Fazel, M.; Parrilo, P.A. Guaranteed minimum-rank solution of linear matrix equations via nuclear norm minimization. SIAM Rev. 2010, 52, 471–501.

- Shi, Q.; Lu, H.; Cheung, Y. Rank-One Matrix Completion With Automatic Rank Estimation via L1-Norm Regularization. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 4744–4757.

- Xiao, J.; Huang, T.; Deng, L.; Dou, H. A Novel Nonconvex Rank Approximation with Application to the Matrix Completion. East Asian J. Appl. Math. 2025, 15, 741–769.

- Cui, A.; Peng, J.; Li, H. Exact recovery low-rank matrix via transformed affine matrix rank minimization. Neurocomputing 2018, 319, 1–12.

- Josse, J.; Sardy, S. Adaptive shrinkage of singular values. Stat. Comput. 2016, 26, 715–724.

- Zhang, H.; Gong, C.; Qian, J.; Zhang, B.; Xu, C.; Yang, J. Efficient recovery of low-rank via double nonconvex nonsmooth rank minimization. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2916–2925.

- Hastie, T.; Mazumder, R.; Lee, J.D.; Zadeh, R. Matrix Completion and Low-Rank SVD via Fast Alternating Least Squares. J. Mach. Learn. Res. 2015, 16, 3367–3402.

- Li, C.; Che, H.; Leung, M.F.; Liu, C.; Yan, Z. Robust multi-view non-negative matrix factorization with adaptive graph and diversity constraints. Inf. Sci. 2023, 634, 587–607.

- Yang, X.; Che, H.; Leung, M.F.; Liu, C. Adaptive graph nonnegative matrix factorization with the self-paced regularization. Appl. Intell. 2023, 53, 15818–15835.

- Xu, K.; Zhang, Y.; Dong, Z.; Li, Z.; Fang, B. Hybrid Matrix Completion Model for Improved Images Recovery and Recommendation Systems. IEEE Access 2021, 9, 149349–149359.

- Xu, D.; Ruan, C.; Korpeoglu, E.; Kumar, S.; Achan, K. Rethinking Neural vs. Matrix-Factorization Collaborative Filtering: The Theoretical Perspectives. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; PMLR: Cambridge, UK, 2021; Volume 139.

- Ding, C.; Li, T.; Peng, W.; Park, H. Orthogonal nonnegative matrix t-factorizations for clustering. In Proceedings of the KDD ’06: 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Philadelphia, PA, USA, 20–23 August 2006; pp. 126–135.

- Gan, J.; Liu, T.; Li, L.; Zhang, J. Non-negative Matrix Factorization: A Survey. Comput. J. 2021, 64, 1080–1092.

- Kimura, K.; Tanaka, Y.; Kudo, M. A Fast Hierarchical Alternating Least Squares Algorithm for Orthogonal Nonnegative Matrix Factorization. In Proceedings of the Sixth Asian Conference on Machine Learning, Ho Chi Minh City, Vietnam, 20–22 November 2015; Volume 39, pp. 129–141.

- Cichocki, A.; Zdunek, R.; Phan, A.H.; Amari, S.I. Nonnegative Matrix and Tensor Factorizations: Applications to Exploratory Multi-Way Data Analysis and Blind Source Separation; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2009.

- Mairal, J.; Bach, F.; Ponce, J.; Sapiro, G.; Zisserman, A. Non-local sparse models for image restoration. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2272–2279.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image Denoising by Sparse 3-D Transformation-Domain Collaborative Filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

- Blumensath, T.; Davies, M.E. Iterative hard thresholding for compressed sensing. Appl. Comput. Harmon. Anal. 2009, 27, 265–274.

- Hansen, P.C.; Nagy, J.G.; O’Leary, D.P. Deblurring Images: Matrices, Spectra, and Filtering; SIAM: Philadelphia, PA, USA, 2006.

- Fan, Y.W.; Nagy, J.G. Synthetic boundary conditions for image deblurring. Linear Algebra Appl. 2011, 434, 2244–2268.

- Zhou, X.; Zhou, F.; Bai, X.; Xue, B. A boundary condition based deconvolution framework for image deblurring. J. Comput. Appl. Math. 2014, 261, 14–29.

- Nguyen, L.T.; Kim, J.; Shim, B. Low-Rank Matrix Completion: A Contemporary Survey. IEEE Access 2019, 7, 94215–94237.

- Bertalmio, M.; Sapiro, G.; Caselles, V.; Ballester, C. Image inpainting. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, New Orleans, LA, USA, 23–28 July 2000; ACM Press/Addison-Wesley Co.: New Orleans, LA, USA, 2000; pp. 417–424.

- Chan, T.F.; Shen, J. Nontexture inpainting by curvature-driven diffusions. J. Vis. Commun. Image Represent. 2001, 12, 436–449.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).