1. Introduction

The rapid advancement of generative artificial intelligence (AI) has catalyzed a significant transformation across creative technologies, with AI-based music generation emerging as an active research field due to its broad range of applications. Traditional methods relying on rule-based composition systems or simple probabilistic methods often lack the ability to represent diverse musical structures and contextual expressiveness [1]. However, with the emergence of deep learning-based generative models, particularly latent diffusion models (LDMs), it is now possible to generate high-quality music that more accurately reflects the user’s intent [2]. Among these models, text-to-music generation has drawn considerable interest, enabling users to generate music from natural language prompts without prior musical expertise. Various models, such as MusicLM [3], MusicGen [4], and the LDM-based AudioLDM [5], have demonstrated the ability to map textual inputs into coherent musical outputs. Despite these advances, current text-to-music models still face several unresolved limitations that hinder their scalability in real-world applications.

There are three significant problems. First, training large-scale text-to-music models requires massive, paired datasets. Most musical data are subject to copyright restrictions, limiting access and legal use. Furthermore, the ownership of AI-generated music remains a legally ambiguous area, raising concerns over fair use, licensing, and authorship [6].

Second, existing models are effective at generating short musical phrases; however, they struggle to maintain structural coherence over longer durations (e.g., several minutes). Many models prioritize music quality over musical structure, resulting in outputs that may sound realistic but lack meaningful development or narrative form, limiting their usability in professional media production [7].

Third, most models are generalist by design, aiming to cover a wide range of musical genres. However, they struggle to capture accurate, fine-grained stylistic elements of specific genres. For example, boom bap, trap, and gangsta rap within the hip-hop genre differ significantly in rhythm, instrumentation, and tempo. The current models often fail to reflect these distinctions, producing generic outputs that may not align with the user’s creative intent [8].

As a representative text-to-audio generation model based on the LDM, AudioLDM aims to address several challenges in the domain. First, this model enables generation without relying on large-scale explicit text–audio paired datasets by applying contrastive language–audio pretraining (CLAP) embeddings to capture the semantic alignment between text and audio, partially alleviating data collection burdens and copyright concerns. Second, this model improves the coherence of the generated music by aligning the semantic meaning between the text and audio rather than relying solely on simple conditional prompts. Third, this model incorporates architectural flexibility that allows it to respond to a wide range of text conditions. However, despite these improvements, several limitations remain. For instance, the model still struggles with fine-grained music generation tailored to specific domains or genres. Moreover, its ability to control detailed musical style remains limited, and it lacks an efficient fine-tuning mechanism that enables lightweight adaptation to specialized data. This paper aims to address these challenges by introducing a Parameter-Efficient Fine-Tuning (PEFT) strategy by integrating low-rank adaptation (LoRA) into the self-attention blocks of the AudioLDM U-Net.

First, AudioLDM lacks the ability to express fine-grained subgenres accurately. The model maps textual semantics into conditioning embeddings using CLAP and generates audio via a U-Net-based diffusion process. Although CLAP is effective at modality-level semantic alignment, it struggles to capture subtle musical elements, such as rhythmic patterns, instrumentation, and timbre, that distinguish subgenres. More critically, most generative operations are performed within the U-Net, which is trained to reflect conditions uniformly; hence, the model fails to adapt its generation process to subgenre-specific characteristics. For example, within the hip-hop genre, styles (e.g., boom bap, trap, and gangsta rap) differ significantly in rhythm and instrumentation, but AudioLDM tends to produce musically generic outputs that fail to reflect these distinctions. Similar limitations have been observed in other text-to-music models, such as MusicLM and MusicGen [3,4].

Second, the model exhibits inconsistency in reflecting user-defined conditions. While AudioLDM incorporates CLAP embeddings via cross-attention layers in the U-Net, it fails to consistently align high-level conditions (e.g., emotion or atmosphere) with musical components across the entire generation process. For instance, prompts containing emotional terms (e.g., “melancholic” or “dreamy”) may appropriately influence chord progressions but often contradict the tempo or instrumentation choices. This misalignment results in a perceptual gap between the user expectations and the generated output, forcing users to revise prompts or perform manual post-processing repeatedly. MusicLM exhibits similar problems when translating emotional input into coherent musical structure [9].

Third, the use of full fine-tuning imposes significant barriers to fast adaptation and reusability. The AudioLDM framework is built on a large-scale pretrained diffusion model, and any adaptation to new genres or user preferences requires completely retraining the U-Net. This retraining results in inefficiency in real-world deployment. For commercial applications that require rapid music generation, full-model retraining is impractical due to its associated high computational costs. Furthermore, the lack of modularity requires each style to be trained from scratch, delaying the development pipeline. Finally, no mechanism exists to transfer the learned parameters across related domains, limiting reuse, experimentation, and task switching. These limitations have been well-documented in prior work, which has called for lightweight adaptation methods to improve training efficiency and transferability [10].

The AudioLDM framework generates audio in the latent space of a variational autoencoder based on mel spectrograms and conditioned on the CLAP embedding, which learns the semantic alignment between text and audio. However, fine-tuning the original AudioLDM is challenging due to the difficulty of updating all model parameters, which incurs high computational costs and complicates fine-grained adaptation for specific genres.

In this paper, we propose a method to address the challenges of genre-specific music generation by applying LoRA to AudioLDM, a text-to-music generation model based on the LDM. The proposed method applies LoRA only to the core components of AudioLDM (i.e., the self-attention and projection layers) while selectively adding learnable parameters. LoRA adopts a low-rank matrix decomposition to update only a small portion of the parameters while keeping the remaining model parameters fixed. This approach enables efficient fine-tuning for specific domains or styles while maintaining the overall model structure, making it practical for environments with limited computational resources. By reducing the number of learned parameters, the method offers faster training and lower memory usage. It therefore allows quick adaptation to new genres or styles, making it effective for user-customized music generation. Additionally, the proposed method enables control of detailed musical elements, such as rhythm, tempo, timbre, instrument composition, and dynamics, allowing a precise adaptation to subgenres or specific artist styles via parameter-efficient fine-tuning of AudioLDM on genre-specific datasets. Overall, the contributions of this work are as follows:

This work is the first to apply LoRA-based lightweight fine-tuning to AudioLDM, overcoming the limitations of full-model fine-tuning.

The proposed method achieves genre-specific music generation with fine-grained control of detailed musical conditions.

We propose a method that maintains high generation quality even with small datasets and limited computational resources.

In summary, this paper introduces a lightweight LoRA-based fine-tuning method to enhance the genre adaptation capabilities of AudioLDM. The proposed method satisfies the training efficiency and music generation quality requirements, indicating its potential for future expansion in various music generation and editing domains.

2. Related Work

This section surveys prior work in three interconnected areas that form the basis of our study. We begin with Parameter-Efficient Fine-Tuning (PEFT) strategies, which aim to reduce training overhead while maintaining performance, focusing on LoRA and its variants. We then introduce key models in text-conditioned music generation, which serve as the foundation for applying PEFT techniques to audio generation. Finally, we review recent efforts to extend LoRA for personalization and domain adaptation in diffusion models, providing a rationale for our application to AudioLDM.

2.1. Parameter-Efficient Fine-Tuning

As large-scale pre-trained models become increasingly prevalent, Parameter-Efficient Fine-Tuning (PEFT) has emerged as a practical solution to reduce training cost and memory footprint. This subsection introduces core PEFT techniques, with a focus on LoRA and its evolving ecosystem, and sets the stage for their use in multimodal domains.

Parameter-efficient fine-tuning methods aim to reduce the number of trainable parameters required for adapting large pre-trained models to downstream tasks, offering significant savings in compute and memory while maintaining competitive performance [11]. Among the earliest approaches, prefix tuning [12] prepends trainable prefix vectors to the key and value inputs of each Transformer layer, enabling task adaptation without modifying base parameters. Prompt tuning [13] simplifies this by learning a fixed input embedding prepended to the model’s token sequence, though its performance often lags behind under low-resource settings. BitFit [14] updates only the bias terms in Transformer layers, demonstrating surprising effectiveness in classification tasks with minimal parameter cost. LoRA [10] introduces a low-rank decomposition into weight update paths, achieving state-of-the-art performance with significantly fewer trainable parameters and preserving the frozen base model. Recent variants such as AdaLoRA [15] dynamically allocate ranks during training based on parameter importance, while QLoRA [16] combines quantization with LoRA to scale fine-tuning to 65B models on a single GPU. These advancements collectively position LoRA and its extensions as a dominant family in PEFT, widely adopted across LLMs and increasingly explored in multimodal and generative domains.

2.2. Text-to-Music Generation Models

Building on the foundations of PEFT, this subsection turns to recent advances in text-to-music generation. We outline three leading models—MusicLM, MusicGen, and AudioLDM—that illustrate the progression from hierarchical token decoding to diffusion-based synthesis and establish a motivating context for applying LoRA to music generation tasks.

Text-conditioned music generation has emerged as a key frontier in multimodal AI, with models seeking to align audio outputs with rich semantic descriptions. MusicLM [3] generates audio via a hierarchical sequence-to-sequence framework: textual input is first mapped into MuLan semantic embeddings, followed by coarse-to-fine decoding into audio using SoundStream. This design enables high-fidelity synthesis but relies on large-scale data and complex pipelines. In contrast, MusicGen [4] streamlines generation by directly predicting EnCodec-quantized audio tokens using a Transformer decoder conditioned on text features from a pretrained T5 text encoder, reducing latency while preserving semantic controllability. AudioLDM [5] adopts a latent diffusion approach, encoding mel spectrograms into a latent space via a VAE and aligning audio–text representations through CLAP embeddings. This enables noise-conditioned generation with high diversity and fidelity. Each model leverages different conditioning strategies and audio tokenization schemes: while MusicLM employs cascaded quantizers and MuLan, MusicGen integrates EnCodec for token compression, and AudioLDM relies on VAE-based latents and CLAP for joint embedding. Collectively, these models reflect a trend toward increasingly efficient, semantically aligned, and flexible text-to-music systems, offering fertile ground for integration with PEFT techniques such as LoRA.

2.3. LoRA for Personalization and Subdomain Adaptation in Diffusion Models

With the diffusion-based AudioLDM model providing a suitable backbone, this subsection investigates how LoRA and its extensions have been employed in diffusion models for fine-grained control and personalization. These advances motivate our proposed integration of LoRA into the attention layers of the AudioLDM U-Net for genre-specific adaptation.

LoRA [10] has gained traction as a scalable PEFT method for diffusion-based generative models, enabling efficient adaptation through rank-constrained parameter injection. In the context of music generation, LoRA facilitates selective tuning of key modules, particularly the self-attention blocks in U-Net backbones, while keeping the majority of the model frozen. Recent studies have extended LoRA for personalization and subdomain control.

DiffLoRA [17] employs a hypernetwork to generate LoRA weights from user-specific references, enabling sample-wise personalization in text-to-image models without additional fine-tuning. Blockwise-LoRA [18] introduces structural modularity by grouping LoRA weights into concept-specific blocks, enhancing controllability and compositionality. LoRA Fusion [19] further advances stylistic control by integrating multiple LoRA modules based on prompt-derived attention scores, allowing soft blending across learned domains. These methods vary in architectural strategy: DiffLoRA emphasizes dynamic generation of adaptation weights, Blockwise-LoRA focuses on hierarchical modularity, and LoRA Fusion enables multi-style interpolation via softmax-weighted key–query mechanisms. In this work, we extend these approaches to text-to-music generation by integrating LoRA into the attention layers of the AudioLDM U-Net, targeting the $W_q$ and $W_v$ projection matrices for subgenre-specific adaptation. This enables efficient fine-tuning without full model retraining, supporting genre conditioning, domain alignment, and personalization.

These architectural innovations collectively demonstrate that LoRA is scalable and highly adaptable for use in domain-specific generation. In the context of text-to-music systems, this flexible control of model outputs is essential for respecting genre conventions, user intent, and stylistic coherence. Thus, LoRA-based techniques provide a compelling solution for personalized and expressive music generation in diffusion frameworks.

2.4. LoRA in Transformer-Based Large Language Models

The effectiveness of LoRA has been validated across various transformer-based language models, such as RoBERTa, DeBERTa, and the GPT family. The primary focus of this method lies in modifying the self-attention mechanism, specifically the query ($W_q$) and value ($W_v$) projection matrices. Although AudioLDM is fundamentally designed as a diffusion model, its U-Net backbone integrates self-attention layers that resemble those in transformer decoders. This architectural similarity offers a clear rationale for adapting LoRA techniques (initially developed for language models) to the attention layers in AudioLDM [10]. In terms of target modules, prior research has consistently found that applying LoRA to $W_q$ and $W_v$ preserves contextual modeling abilities while keeping the feedforward network and other components frozen. This strategy effectively minimizes the computational overhead without compromising performance. Several critical studies support the practicality and efficiency of LoRA. For instance, in RoBERTa [20], LoRA achieved an accuracy score within 0.3% to 0.7% of the full fine-tuning accuracy on GLUE tasks, outperforming other lightweight methods, such as adapters and BitFit, in terms of parameter efficiency. In the case of DeBERTa XXL (1.5B) [21], rank-8 LoRA maintained state-of-the-art performance in classification and natural language inference tasks. The LoRA method also displayed strong results on GPT-2 [22], surpassing prefix tuning and adapter-based approaches in various tasks (e.g., E2E NLG and DART), even with only 0.35 million trainable parameters, which is comparable to the performance of the fully fine-tuned 774 million-parameter GPT-2. Moreover, when applied to GPT-3 (175B) [23], LoRA demonstrated impressive scalability, achieving superior performance over full fine-tuning in datasets (e.g., WikiSQL and MNLI), with only 4.7 million trainable parameters (rank $r$ = 1 or 2). These results collectively validate the applicability of LoRA to the attention layers of AudioLDM, establishing a strong foundation for efficient genre-specific adaptation in text-to-music generation.

3. Applying LoRA to AudioLDM

This section proposes a lightweight fine-tuning approach for genre-specific music generation by integrating LoRA [

10] into AudioLDM [

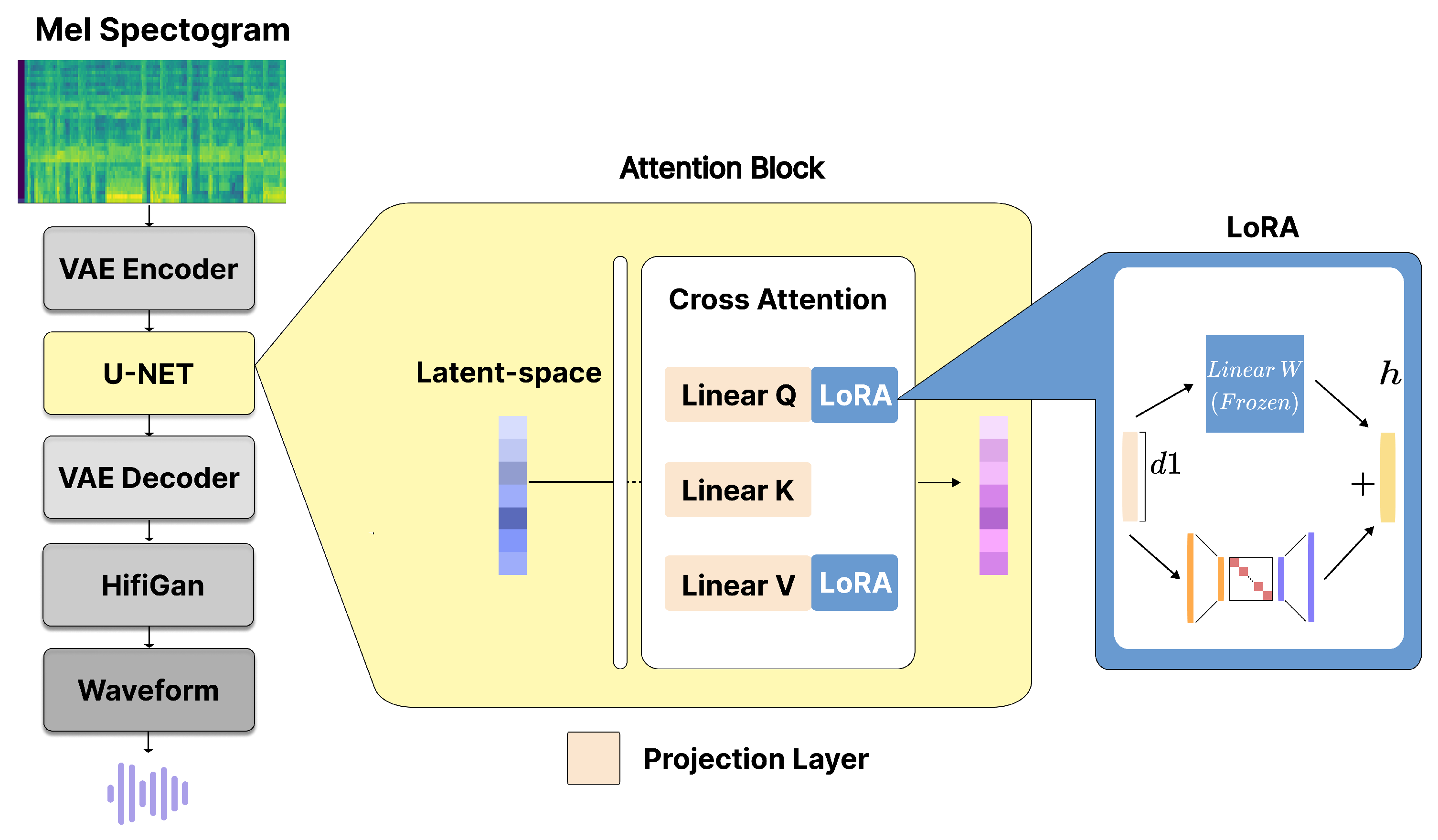

5], a text-conditional music generation model based on latent diffusion. We analyze the U-Net architecture of AudioLDM, identifying the query and value in its cross-attention mechanisms as optimal insertion points for LoRA. The proposed method builds on the parameter-efficient adaptation strategies of LoRA and customizes them for the unique properties of diffusion-based architectures, aiming to enable fine-grained control of music generation through textual inputs. The training is conducted using pairs comprising a mel spectrogram and caption, where the captions were enhanced to include detailed descriptions of subgenres.

3.1. Justification for Applying LoRA to Query and Value

The AudioLDM framework is based on a latent diffusion model and uses a U-Net structure to generate music. Within the U-Net, both self-attention and cross-attention are used extensively to improve the accuracy of conditional generation. Each attention block includes layer normalization, self-attention, cross-attention, and a feedforward module, with skip connections after each step. These blocks help the model understand both short- and long-range patterns in the data while combining guidance information at different levels.

Both self- and cross-attention mechanisms rely on three core linear projection layers: the query, key, and value. These layers model inter-dependencies by computing similarity scores using the dot product between the query and key and propagating contextual information via the value. Among them, the query and value serve as the primary conduits of information flow in each attention block, directly determining the focus of the model and which information is transmitted via U-Net.

This structural design is critical in diffusion-based music generation, where the conditioning signal from text embeddings must precisely guide the denoising process at each step. The attention mechanism enables dynamic alignment between textual semantics and audio features across varying resolution levels in the U-Net. Therefore, modifying the most influential components (i.e., the query and value) offers an effective means of controlling and refining the generative process.

The query determines which elements of the input the model should address, whereas the value projection dictates what information is delivered as a result of the attention layer. These layers directly affect the final output quality. In contrast, the key primarily serves to compute similarity with the query and plays a more auxiliary role. Similarly, the output projection layer following the cross-attention layer primarily post-processes the attention results without significantly influencing semantic alignment.

Based on this architectural analysis, we adopt a strategy of applying LoRA exclusively to the query and value. This design choice preserves the expressive capacity of the model while minimizing the number of additional parameters. This choice enables performance gains or retention with only partial fine-tuning, avoiding the computational cost of updating the entire model.
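The query/value adaptation described above can be illustrated with a minimal single-head cross-attention sketch in plain Python. It is not the AudioLDM implementation: the function names, the toy dimensions, the shared width for latent and context features, and the zero initialization of the up-projection matrices are all assumptions made for illustration.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols, rng, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def lora_project(x, w, down, up, alpha, rank):
    """Return x @ w + (alpha / rank) * (x @ down) @ up; w is frozen, down/up are trainable."""
    base = matmul(x, w)
    update = matmul(matmul(x, down), up)
    scale = alpha / rank
    return [[b + scale * u for b, u in zip(b_row, u_row)] for b_row, u_row in zip(base, update)]


def cross_attention(latent, context, weights, lora, alpha, rank):
    """Single-head cross-attention with LoRA on the query and value projections only."""
    q = lora_project(latent, weights["q"], lora["q_down"], lora["q_up"], alpha, rank)
    k = matmul(context, weights["k"])  # the key projection stays frozen, with no adapter
    v = lora_project(context, weights["v"], lora["v_down"], lora["v_up"], alpha, rank)
    d = len(q[0])
    scores = matmul(q, transpose(k))
    attn = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(attn, v)


rng = random.Random(0)
d, r = 4, 2  # toy hidden width and LoRA rank
weights = {name: random_matrix(d, d, rng) for name in ("q", "k", "v")}
lora = {
    "q_down": random_matrix(d, r, rng), "q_up": [[0.0] * d for _ in range(r)],
    "v_down": random_matrix(d, r, rng), "v_up": [[0.0] * d for _ in range(r)],
}
latent = random_matrix(3, d, rng, scale=1.0)   # 3 latent positions
context = random_matrix(5, d, rng, scale=1.0)  # 5 conditioning tokens
out = cross_attention(latent, context, weights, lora, alpha=2.0, rank=r)
```

Because the up-projection matrices start at zero in this sketch, the adapted layer initially reproduces the frozen layer exactly, and only the small `down`/`up` matrices would receive gradient updates during fine-tuning.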

3.2. Parameter Efficiency of LoRA Integration

This section presents a quantitative analysis of the parameter reduction achieved by applying LoRA solely to the query and value in the cross-attention layers of AudioLDM. The objective is to minimize computational overhead and maximize training efficiency by introducing only a few trainable parameters to layers that play a core role in information flow. The AudioLDM U-Net includes multiple attention layers with varying hidden dimensions (Figure 1).

These variable-width cross-attention layers enable a hierarchical representation of music features, increasing the overall capacity and resolution of the model. Employing multiple attention mechanisms also presents several opportunities for targeted adaptation. Thus, inserting LoRA modules into each cross-attention layer provides a distributed and fine-grained mechanism for controlling generative behavior. The LoRA method modifies the linear transformation by adding a trainable low-rank matrix that compensates for the original weight matrix $W$ (Figure 1) [10].
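For reference, the low-rank update can be written in the notation of the original LoRA formulation [10]. The symbols $A$, $B$, $\alpha$, and $x$ are taken from that formulation rather than from the figure in this paper, and the square $d \times d$ shape is assumed for simplicity.

```latex
h = W x + \Delta W x = W x + \frac{\alpha}{r}\, B A x,
\qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times d},\quad r \ll d
```

Here $W$ stays frozen and only $A$ and $B$ are trained, so each adapted projection contributes $2dr$ trainable parameters instead of $d^2$.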

For a single query or value projection of width $d$, LoRA adds the two matrices $A \in \mathbb{R}^{r \times d}$ and $B \in \mathbb{R}^{d \times r}$, so the number of additional trainable parameters introduced by LoRA is given by $2dr$. Each cross-attention layer introduces $4dr$ additional parameters, as LoRA is inserted in parallel for the query and value. Consequently, the total number of LoRA parameters in all attention layers is $\sum_{l} 4 d_l r$, where $d_l$ denotes the hidden dimension of the $l$-th cross-attention layer. In this case, assuming the channel number in the baseline model is 128, the cross-attention layers follow a symmetric encoder–decoder structure, resulting in a total dimension sum of $\sum_{l} d_l = 3456$. Each LoRA projection layer (query and value) adds $2 d_l r$ parameters; hence, the total number of additional parameters between both projection layers in all cross-attention layers can be expressed as $4r \sum_{l} d_l = 13{,}824\,r$. Thus, the number of additional parameters linearly increases with the rank $r$ of LoRA. For example, when $r = 2$, 27,648 parameters are introduced. This number represents only about 0.015% of the total model parameters (about 181 million), indicating an extremely lightweight fine-tuning scheme. Such parameter efficiency enables rapid and cost-effective adaptation to domains with limited data or to specific musical genres, without compromising the generalizability of the pretrained model. Furthermore, freezing the original weights and training only the LoRA modules ensures training stability and computational efficiency.
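The parameter count can be checked with a few lines of Python. The width profile below (base channels of 128 with multipliers 1, 2, 3, and 5, mirrored across encoder and decoder with one bottleneck layer) is a hypothetical choice made for this sketch because it reproduces the 27,648 figure at rank 2; it is not taken from the model configuration.

```python
def lora_param_count(hidden_dims, rank, projections_per_layer=2):
    """Count LoRA parameters when each adapted d x d projection gets A (r x d) and B (d x r)."""
    per_projection = [2 * d * rank for d in hidden_dims]
    return projections_per_layer * sum(per_projection)


# Hypothetical width profile (an assumption, see the note above).
encoder = [128 * m for m in (1, 2, 3, 5)]
hidden_dims = encoder + [encoder[-1]] + encoder[::-1]

total = lora_param_count(hidden_dims, rank=2)
share = total / 181_000_000  # total model size of about 181 million parameters

print(sum(hidden_dims), total, f"{share:.4%}")  # 3456 27648 0.0153%
```

Doubling the rank doubles the count, which is the linear dependence on the rank noted above.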

3.3. Training Procedure

The dataset is reconstructed based on the Google MusicCaps [3] music caption dataset. Each sample consists of a mel-spectrogram, derived via a time-frequency transformation, and a corresponding textual description. Music clips are padded to a uniform duration, and text inputs are tokenized at the word level after appending sub-genre information to the original description. The resulting data are organized into batches, each comprising paired music representations and textual descriptions, which are fed into the model.

The training is performed in a multi-process environment using data parallelism. The loss function, specifically the mean squared error (MSE) loss, is computed based on the discrepancy between the predicted and ground-truth noise (Figure 2). Specifically, LoRA is configured with a rank of $r$, a scaling factor of $\alpha$, and a Gaussian initialization for the additional weights. The adaptation is targeted at the query and value projections within all attention layers. During training, only the LoRA parameters are updated, whereas the rest of the model remains frozen. Text input is encoded into high-dimensional embeddings using a pre-trained encoder and serves as a conditioning input for music generation. The music is converted into latent representations, and noise is added in randomly selected time steps. The model is trained to denoise and recover the original signal.
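The noising step and the MSE objective described above correspond to the standard latent diffusion training objective, sketched here under assumed notation: $z_0$ is the clean latent, $c$ the text embedding, $\bar{\alpha}_t$ the cumulative noise schedule (unrelated to the LoRA scaling factor), $\theta$ the frozen pretrained weights, and $\phi$ the trainable LoRA parameters.

```latex
\begin{align}
z_t &= \sqrt{\bar{\alpha}_t}\, z_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I) \\
\mathcal{L}(\phi) &= \mathbb{E}_{z_0,\, c,\, \epsilon,\, t}
\left[ \left\| \epsilon - \epsilon_{\theta, \phi}(z_t, t, c) \right\|_2^2 \right]
\end{align}
```

Gradients are taken with respect to $\phi$ only, which matches the statement that the rest of the model remains frozen.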

4. Experiments

In this experiment, we evaluated the performance of the proposed method, which integrates LoRA [10] into AudioLDM [5] for text-conditional music generation. The primary objectives of the experiment were to assess the efficiency of the model and the quality of the generated output. First, this section describes the dataset, preprocessing methods, training parameter settings, and the overall training environment. Then, objective evaluation metrics, such as the CLAP [24] scores and kernel audio distance (KAD) [25] scores, along with subjective evaluation metrics, such as the mean opinion score (MOS) [26], were applied to the generated audio to conduct a comprehensive performance analysis.

4.1. Dataset Setup

In this section, a dataset based on Google MusicCaps [3] was employed to evaluate the performance of the text-to-music generation model. We selected music samples from the hip-hop genre, which includes a wide range of subgenres. The primary reason for this choice is that hip-hop, compared with other genres of music, exhibits a high degree of diversity in its subgenre characteristics and expressive styles. Subgenres such as boom bap and trap differ significantly in terms of rhythm patterns, harmonic structures, instrumentation, and vocal delivery.

This diversity makes hip-hop an ideal candidate for evaluating the proposed LoRA-based model’s ability to perform fine-grained, subgenre-specific conditional generation. By focusing on a single overarching genre that includes multiple distinct substyles, we can more effectively assess how well the model captures and reflects nuanced musical differences in response to conditional prompts.

Furthermore, within the MusicCaps dataset, the hip-hop category contained a sufficient number of music–text pairs, enabling meaningful experimentation. The structure of the dataset also allowed for manual annotation of subgenre information, which is essential for training and evaluating conditional generation performance with greater reliability and scalability. During this process, entries whose captions were marked as “low quality” were removed to enhance the quality of the training data, and 193 hip-hop samples were selected from the remaining entries. Subgenre information was manually added to each caption to enable more fine-grained conditional generation; the prompts appended for each subgenre are summarized in Table 1. All music clips were resampled to 16 kHz and preprocessed into the mel-spectrogram format to match the input requirements for AudioLDM (see Figure 3).

4.2. Experimental Environment

The baseline model in this experiment was AudioLDM, a text-to-music generation model based on the latent diffusion architecture. The experiments were conducted to evaluate the proposed method by varying the rank of the LoRA weight matrices, which determines their dimensionality, and varying the alpha value, which controls the scaling ratio of the LoRA weights applied to the pretrained weights. The choices of rank and alpha values were based on the original LoRA paper [10], where typical configurations (e.g., rank is 1 to 8) and alpha scaling (e.g., $\alpha$ is $r$ or $2r$) were shown to provide performance gains while maintaining efficiency. The experiments were performed using different settings, where the alpha was set equal to the rank or twice the rank. Table 2 summarizes the details on the LoRA configuration, optimization method, learning rate, and batch size. The training process was monitored using Weights & Biases (wandb), and the generated music samples, spectrogram images, and corresponding text prompts were stored during training for later analysis and evaluation. The experiment was conducted on a high-performance computing server with an AMD EPYC 7352 (24-core) CPU and NVIDIA RTX 3080 Ti GPU (12 GB VRAM). This hardware offered sufficient resources and memory capacity to enable stable and efficient LoRA-based training. Table 3 details the hardware specifications.

This training setup enabled efficient experimentation across various LoRA configurations, allowing us to generate diverse audio outputs conditioned on different text prompts.

4.3. Evaluation Metrics

To rigorously assess the quality and fidelity of the generated music, we adopted a multifaceted evaluation strategy. Since musical quality cannot be fully captured by a single scalar value, we employed a combination of objective and subjective metrics to reflect different dimensions of performance.

Specifically, we used three complementary metrics: CLAP to measure alignment between the generated audio and text prompts, KAD to assess statistical realism compared with real-world music distributions, and MOS to evaluate perceptual quality based on human listener judgments. Together, these metrics provide a comprehensive view of how well the generated music reflects both semantic intent and listener experience.

The first metric, the CLAP score, quantifies how well the generated audio semantically aligns with the input text. This work uses the CLAP model to extract high-dimensional embeddings for the audio and the text and computes the cosine similarity between these vectors. The resulting similarity, which lies in $[-1, 1]$, was linearly rescaled so that higher scores indicate better semantic alignment between the audio and its corresponding text prompt.
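A minimal sketch of this score is given below, assuming the audio and text embeddings have already been extracted by a CLAP model. The embedding values are made up, and the target range of the linear rescaling (here $[0, 1]$) is an assumption chosen for illustration.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rescale(similarity, low=0.0, high=1.0):
    """Map a cosine similarity from [-1, 1] linearly onto [low, high] (assumed target range)."""
    return low + (similarity + 1.0) / 2.0 * (high - low)


# Hypothetical embeddings standing in for CLAP audio and text outputs.
audio_embedding = [0.2, 0.9, 0.1]
text_embedding = [0.1, 0.8, 0.3]
score = rescale(cosine_similarity(audio_embedding, text_embedding))
```

Any monotonic linear rescaling preserves the ranking between configurations, so the choice of target range affects only how the scores are reported.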

The second metric, the KAD score, evaluates the statistical similarity between the distributions of the generated and real audio samples. Unlike the Fréchet audio distance [27], KAD does not assume Gaussianity in the embedding space and remains robust even when the sample size is small. This score is based on the maximum mean discrepancy [28] framework using a kernel-based approach. The KAD score considers three types of similarities: between generated samples, between real samples, and between generated and real samples. The kernel acts as a similarity measure: the closer two embedding vectors are, the larger their kernel value, and the more dissimilar they are, the closer this value is to zero. A KAD score closer to zero indicates better performance, reflecting a high degree of similarity between the generated and real audio distributions; a value of zero signifies perfect alignment between the distributions, whereas the upper bound is not explicitly defined.
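The three similarity terms can be illustrated with a generic unbiased estimator of the squared maximum mean discrepancy. This is a sketch of the underlying framework, not the reference KAD implementation: the Gaussian kernel, the fixed bandwidth, and the absence of any additional scaling are assumptions.

```python
import math


def rbf_kernel(x, y, bandwidth):
    """Gaussian kernel: 1.0 for identical vectors, decaying toward 0.0 with distance."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2.0 * bandwidth ** 2))


def mmd_squared(generated, real, bandwidth=1.0):
    """Unbiased estimate of squared MMD between two sets of embedding vectors."""
    m, n = len(generated), len(real)
    if m < 2 or n < 2:
        raise ValueError("each set needs at least two embeddings")
    # Similarity among generated samples (self-pairs excluded).
    gen_term = sum(rbf_kernel(generated[i], generated[j], bandwidth)
                   for i in range(m) for j in range(m) if i != j) / (m * (m - 1))
    # Similarity among real samples (self-pairs excluded).
    real_term = sum(rbf_kernel(real[i], real[j], bandwidth)
                    for i in range(n) for j in range(n) if i != j) / (n * (n - 1))
    # Similarity between generated and real samples.
    cross_term = sum(rbf_kernel(g, r, bandwidth)
                     for g in generated for r in real) / (m * n)
    return gen_term + real_term - 2.0 * cross_term


# Toy two-dimensional embeddings, for illustration only.
generated_set = [[0.0, 0.0], [0.1, 0.0]]
nearby_real_set = [[0.0, 0.1], [0.1, 0.1]]
distant_real_set = [[5.0, 5.0], [5.1, 5.0]]
near_score = mmd_squared(generated_set, nearby_real_set)
far_score = mmd_squared(generated_set, distant_real_set)
```

In this toy example `near_score` is much smaller than `far_score`, which mirrors the reading that values near zero indicate closely matching distributions. Note that the unbiased estimator can come out slightly negative on small samples.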

The third metric, MOS, is a subjective evaluation method that reflects listeners’ perceived quality of the generated audio. It captures dimensions such as naturalness, audio fidelity, and prompt alignment that are often underrepresented in objective metrics [26,29]. To conduct this evaluation (see Table 4), we recruited a total of 45 verified participants. The survey was administered via Google Forms, and participants were required to log in with a Google account to ensure one response per person. Duplicate or anonymous entries were excluded to maintain data integrity. The evaluation was conducted in a blind setup, where the raters were not informed of the model identity behind each sample, minimizing cognitive bias and anchoring effects. Participants consisted of undergraduate and graduate students with general listening experience but no formal training in music or audio engineering, ensuring that the evaluation reflects perceptual judgments of typical users. Each participant evaluated three instrumental hip-hop tracks, each conditioned on a distinct text prompt and generated using different LoRA configurations. For each track, listeners provided independent ratings along three criteria (naturalness, audio quality, and alignment with the given prompt) on a five-point Likert scale (1: very poor, 5: excellent). The final MOS was calculated by averaging scores across participants for each dimension per sample.
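The averaging step can be sketched as follows. The record layout, the field names, and the example ratings are hypothetical and only illustrate how per-sample, per-criterion means are formed from five-point ratings.

```python
from statistics import mean

CRITERIA = ("naturalness", "audio_quality", "prompt_alignment")


def mean_opinion_scores(ratings):
    """Average 1-5 Likert ratings per (sample, criterion) across participants.

    `ratings` is a list of dicts with a "sample" key and one key per criterion.
    """
    collected = {}
    for row in ratings:
        for criterion in CRITERIA:
            score = row[criterion]
            if not 1 <= score <= 5:
                raise ValueError(f"rating out of range: {score}")
            collected.setdefault(row["sample"], {}).setdefault(criterion, []).append(score)
    return {
        sample: {criterion: mean(scores) for criterion, scores in per_criterion.items()}
        for sample, per_criterion in collected.items()
    }


# Hypothetical responses from two participants for one track.
example_ratings = [
    {"sample": "track_1", "naturalness": 4, "audio_quality": 3, "prompt_alignment": 5},
    {"sample": "track_1", "naturalness": 5, "audio_quality": 4, "prompt_alignment": 4},
]
mos = mean_opinion_scores(example_ratings)
```

Keeping the three criteria separate, rather than collapsing them into one number, preserves the distinction between perceived quality and prompt alignment.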

This evaluation protocol follows established practice in generative audio research with respect to rater expertise, interface transparency, and the reliability of subjective assessments [

26,

29].

In addition to the MOS evaluation, we conducted further analyses to assess the practicality and generalizability of our proposed method.

First, we compared the generation speed of the baseline model and our proposed method to evaluate inference efficiency. To this end, we measured the average time required to generate five samples per prompt under identical conditions. The evaluation included three metrics: the actual generation time in seconds, the length of the generated audio, and the Real-Time Factor (RTF), defined as the generation time divided by the duration of the generated audio, so that values above 1 indicate slower-than-real-time generation.

Second, to assess performance on underrepresented genres, we employed hand-crafted prompts for non-Western music (listed in

Table 5). For each prompt, five audio samples were generated using both the baseline and our proposed method, and the average CLAP and KAD scores were calculated. This experiment tests whether our method maintains controllability for non-Western genres that are sparsely represented in the training data.

4.4. Results

Based on the evaluation metrics described earlier, we present the results of experiments using two objective scores (CLAP and KAD) and a subjective assessment using MOS to evaluate the performance of the AudioLDM model with integrated LoRA under various parameter settings. The CLAP and KAD scores were recorded at five training checkpoints using different combinations of rank and alpha values. For each checkpoint, we generated audio five times with different random seeds and reported the average score. The MOS scores were collected through a user study involving 45 participants without formal training in music or audio engineering. Furthermore, to facilitate a comprehensive comparison, the proposed method was evaluated alongside the original AudioLDM and a fully fine-tuned MusicGen under identical experimental conditions. This evaluation allowed the effectiveness of LoRA integration to be assessed in terms of both objective performance and subjective perceptual quality.

Table 6 shows the CLAP scores for each rank and alpha configuration. Across all settings, the scores ranged from approximately 0.57 to 0.70. The highest score, 0.6995, was achieved with rank = 8 and alpha = 16 at 800 epochs, while the lowest score, 0.5717, appeared in the rank = 1, alpha = 1 configuration at 400 epochs. These results suggest that increasing rank and alpha tends to improve CLAP performance, indicating better alignment between the generated audio and the input text prompt.

Table 7 shows the KAD scores for each rank and alpha configuration. Lower scores indicate better alignment with the reference audio. The lowest score, 0.2906, was observed for rank = 1, alpha = 1 at 200 epochs, suggesting a strong similarity to the reference audio. In contrast, the highest score, 0.9936, was observed for rank = 8, alpha = 8 at 400 epochs, indicating a substantial deviation from the reference audio. Overall, configurations with larger rank and alpha values tended to result in higher KAD scores, implying a trade-off between semantic alignment with the prompt and distributional similarity to the reference audio.

To evaluate practical performance differences in music generation, we compared the CLAP and KAD scores of the proposed method with those of the original AudioLDM and a fully fine-tuned MusicGen model on the same dataset.

Table 8 presents the detailed results. For the CLAP score, the MusicGen model recorded a score of 0.5699, while the original AudioLDM achieved a score of 0.6497. The proposed method, depending on the LoRA configuration, achieved scores ranging from 0.5717 to 0.6995, with the best configuration (rank = 8, alpha = 16) showing a 7.7% improvement over the original AudioLDM. In terms of the KAD score, MusicGen scored 1.0022, while the original AudioLDM scored 1.1255, reflecting a high deviation from the reference audio. The proposed method substantially reduced the KAD score, achieving values between 0.2906 (rank = 1, alpha = 1) and 0.9936 (rank = 8, alpha = 8), depending on the configuration. The lowest score of 0.2906 represents a reduction of 74.2% compared with the original AudioLDM, while even the highest score achieved by the proposed method shows an improvement of 11.7%. Overall, the proposed method consistently outperformed MusicGen in terms of CLAP scores and showed varying performance compared with AudioLDM depending on the configuration, whereas for the KAD scores it achieved better results than both MusicGen and AudioLDM in all settings. These results demonstrate that, with a suitable configuration, the proposed method can achieve better semantic alignment and audio consistency than both MusicGen and the original AudioLDM.
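
The percentages quoted above follow from a simple relative-change calculation against the original AudioLDM scores. The short sketch below reproduces that arithmetic from the reported values; the helper name is illustrative.

```python
def relative_change_pct(baseline, value):
    """Signed relative change of `value` with respect to `baseline`, in percent."""
    return (value - baseline) / baseline * 100.0


# Scores reported in the text for the original AudioLDM and the proposed method.
clap_gain = relative_change_pct(0.6497, 0.6995)            # higher CLAP is better
kad_best_reduction = -relative_change_pct(1.1255, 0.2906)  # lower KAD is better
kad_worst_reduction = -relative_change_pct(1.1255, 0.9936)

print(f"CLAP improvement (rank = 8, alpha = 16): {clap_gain:.1f}%")
print(f"KAD reduction (rank = 1, alpha = 1):     {kad_best_reduction:.1f}%")
print(f"KAD reduction (rank = 8, alpha = 8):     {kad_worst_reduction:.1f}%")
```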

A subjective evaluation was conducted using the MOS to assess the perceptual quality of the generated audio. A total of 45 participants rated the generated audio samples via Google Forms on a scale from 1 (very poor) to 5 (excellent), based on three criteria: naturalness of the music, overall sound quality, and alignment with the input text prompt.

Table 9 presents the detailed results of this evaluation.

Table 10 summarizes the CLAP and KAD scores for the baseline and our proposed method across three non-Western prompts. Our method consistently outperformed the baseline, with higher CLAP scores and lower KAD scores, indicating improved semantic alignment and distributional similarity to real music.

In addition, we compared the generation latency to evaluate the computational overhead introduced by our approach. As shown in

Table 11, the average generation time increased from 6.72 s to 7.64 s, with the real-time factor rising from 1.68× to 1.91×. Although this reflected a modest computational cost, the gain in controllability and musical quality justified the trade-off.
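
For reference, the real-time factor is the generation time divided by the duration of the generated audio. The sketch below recomputes it from the reported timings; the 4.0 s clip length is an assumption inferred from the reported numbers (6.72 s / 1.68) rather than a value stated in the text.

```python
def real_time_factor(generation_seconds, audio_seconds):
    """Generation time divided by audio duration; values above 1 are slower than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio duration must be positive")
    return generation_seconds / audio_seconds


# Assumption: clip length inferred from the reported timing and RTF, not stated directly.
CLIP_SECONDS = 4.0

baseline_rtf = real_time_factor(6.72, CLIP_SECONDS)
proposed_rtf = real_time_factor(7.64, CLIP_SECONDS)
# Relative increase in generation time introduced by the LoRA-augmented model.
overhead_pct = (7.64 - 6.72) / 6.72 * 100.0

print(f"baseline RTF: {baseline_rtf:.2f}x")
print(f"proposed RTF: {proposed_rtf:.2f}x")
print(f"latency overhead: {overhead_pct:.1f}%")
```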

5. Discussion

This paper applies LoRA [

10] to the AudioLDM [

5] model to verify the possibility of maintaining or improving music generation performance without full fine-tuning. The experimental results revealed that CLAP [

24] scores gradually increased with higher rank and alpha values, with the best configurations outperforming the baseline model. The KAD [

25] scores were lower for the proposed method than for the baseline model in every configuration, demonstrating improved performance in terms of statistical distribution similarity. In particular, the LoRA settings with rank = alpha = 1 and rank = alpha = 2 displayed the best KAD performance during the early training stages.

These results suggest that lightweight fine-tuning using LoRA can be efficiently applied to music generation models. The LoRA method significantly reduces the number of trainable parameters while maintaining generation quality, making it a practical approach in resource-constrained environments. Furthermore, by applying genre-specific LoRA modules, various domain-specific music generation functions can be flexibly expanded on a single base model. This proposed method enables flexibility and scalability in music generation models.
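
To give a sense of the scale of this reduction, the sketch below compares the trainable parameters of a LoRA adapter with those of a fully fine-tuned projection matrix. The 768-dimensional projection is a hypothetical size chosen for illustration and is not taken from the AudioLDM architecture; bias terms are ignored.

```python
def full_params(d_in, d_out):
    """Parameters of a dense projection matrix that full fine-tuning would update."""
    return d_in * d_out


def lora_trainable_params(d_in, d_out, rank):
    """Parameters of the two low-rank factors: A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


# Hypothetical projection size, for illustration only.
D_MODEL = 768

for rank in (1, 2, 8):
    lora = lora_trainable_params(D_MODEL, D_MODEL, rank)
    fraction = lora / full_params(D_MODEL, D_MODEL)
    print(f"rank = {rank}: {lora} trainable parameters ({fraction:.2%} of the dense layer)")
```

Since the alpha value only rescales the low-rank update, it does not change the parameter count; only the rank does.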

To further enhance the effectiveness and expand the applicability of LoRA in music generation, future studies could investigate its application to other components, such as cross-attention layers, variational autoencoders, or embedding adjustment layers related to the CLAP model. Additionally, systematic ablation studies that vary factors beyond rank and alpha (e.g., dropout rates, initialization methods, and parallel configurations) could help identify optimal architectures via quantitative and qualitative analyses, improve stability, and mitigate the overfitting suggested by the inverse relationship observed between CLAP and KAD scores. Visualizing loss curves across configurations would also improve interpretability and provide insight into training.

Furthermore, due to its low computational overhead, LoRA is highly suitable for transfer learning across genres or themes. A promising research direction is to develop a transfer learning framework that reuses LoRA weights trained on base genres to adapt rapidly to subgenres or hybrid styles. Another promising direction is to extend LoRA integration to support multimodal inputs, such as combining textual prompts with audio references or visual cues (e.g., mood boards). This could enhance controllability for hybrid applications like film scoring, where prompt fusion across modalities plays a crucial role. Moreover, extending the controllable generation capabilities to support hybrid musical styles or emotion-based conditioning could enable more expressive and user-aligned outputs. To this end, incorporating larger and more diverse datasets—including underrepresented genres such as non-Western music—would be essential. This would help reduce potential cultural or stylistic biases, improve generalizability, and broaden the applicability of generative music systems to global music traditions. In addition, future work could systematically quantify genre and cultural representation within training data to identify underrepresented styles. Based on such analysis, LoRA modules could be selectively adapted or trained to amplify these genres, functioning as lightweight debiasing components within the generation pipeline.

These future directions aim to address the current limitations and promote the broader adoption of generative music systems in both artistic and commercial contexts.

6. Conclusions

Integrating LoRA [

10] into AudioLDM [

5], as proposed in this paper, demonstrates meaningful progress in improving the controllability and training efficiency of genre-specific music generation. Selectively fine-tuning only the core components of the model showed that the generation quality of the baseline architecture can be maintained while enabling rapid domain adaptation with minimal computational resources. Because LoRA is applied only to the query and value projection layers, training updates only a small subset of the total parameters and therefore remains efficient and lightweight.
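
The mechanism can be illustrated with a minimal, dependency-free sketch of a LoRA-augmented projection, in which a frozen weight matrix W is combined with a trainable low-rank update scaled by alpha / rank. The class and helper names are hypothetical, and the code illustrates the general LoRA formulation rather than the implementation used in this study.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen projection W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight          # frozen pretrained weights (never updated)
        self.scale = alpha / rank     # LoRA scaling factor
        # A starts as small random values and B as zeros, so the update is initially zero.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]


# A toy 2 x 2 "query" projection; in practice one adapter would wrap each
# query and value projection while all other weights stay frozen.
identity = [[1.0, 0.0], [0.0, 1.0]]
query_proj = LoRALinear(identity, rank=1, alpha=2)
print(query_proj.forward([2.0, 3.0]))  # equals the frozen output while B is zero
```

Only A and B would receive gradient updates during training, which is why the number of trainable parameters grows with the rank rather than with the size of the full projection.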

The experimental results indicate that the baseline model achieved a CLAP [

24] score of 0.6497 and a KAD [

25] score of 1.1255. With the proposed method, CLAP scores generally improved as rank and alpha increased, reaching a maximum of 0.6995. The KAD scores were substantially reduced, reaching a minimum of 0.2906 in the lower rank and alpha LoRA settings, indicating improved statistical similarity to real music. These results suggest that LoRA is a practical and scalable approach to music generation, especially in resource-constrained environments. Genre-specific LoRA modules can be flexibly combined with a shared base model, supporting a wide range of domain-specific music generation tasks.

However, as the rank and alpha values increase, the risk of overfitting also increases, and an inverse relationship between CLAP and KAD scores emerges, indicating limitations in performance optimization. Therefore, future research should focus on refining parameter optimization techniques to ensure stable and consistent performance across diverse music generation tasks.