ROS-Compatible Robotics Simulators for Industry 4.0 and Industry 5.0: A Systematic Review of Trends and Technologies

Abstract

1. Introduction

Related Work

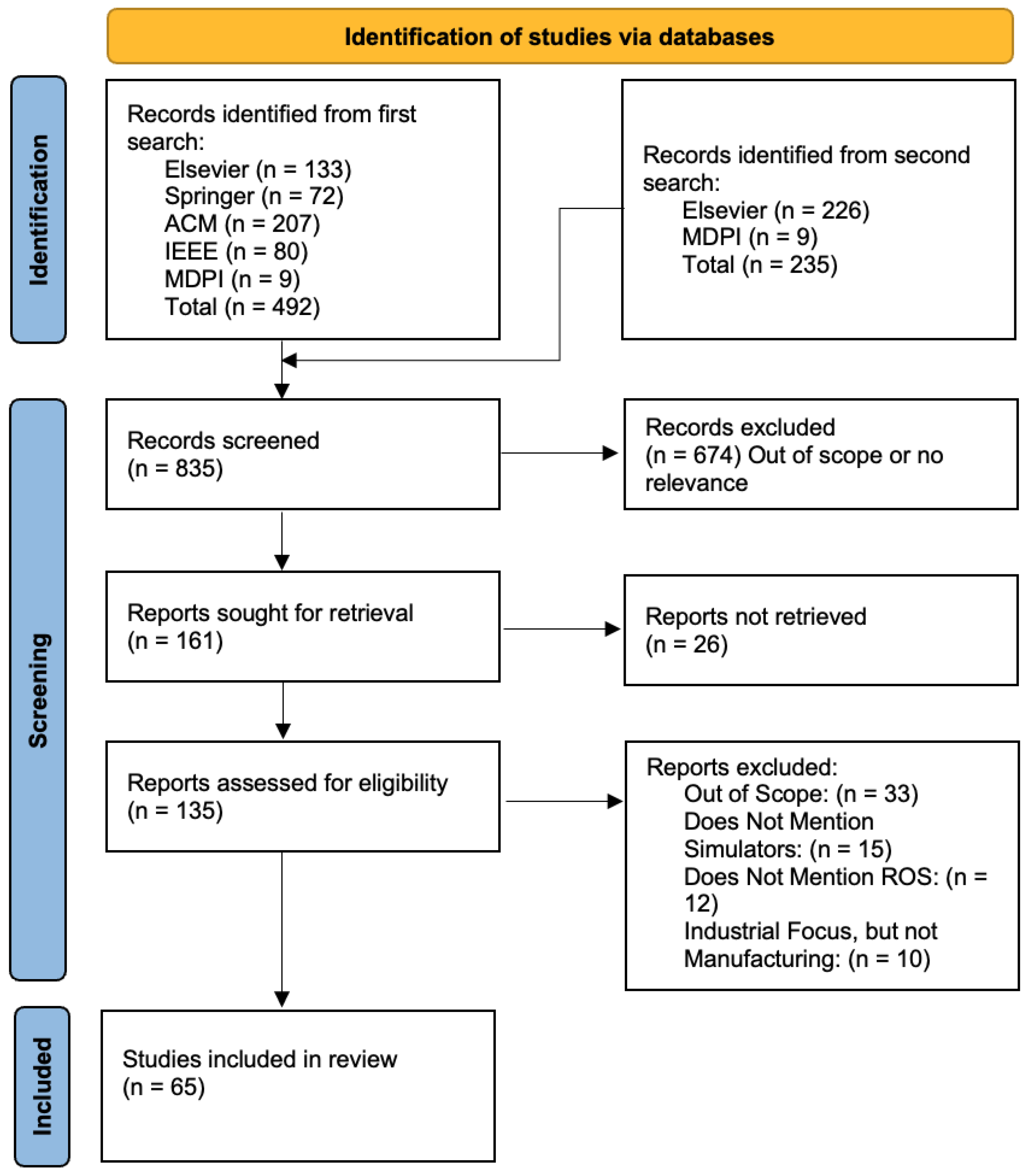

2. Methodology

2.1. Research Questions

2.2. Search Strategy and Study Selection

- Was the article published in a reputable, peer-reviewed journal (preferably indexed and with an impact factor)?

- Does the study present a clearly described system architecture or implementation?

- Is the research grounded in applied science and relevant to real-world applications?

| Inclusion Criteria |

|---|

| (1) Studies proposing robotics simulation frameworks for intelligent robotics, HRI, human–robot collaboration, or industrial automation. |

| (2) Research integrating simulation-related technologies such as digital twins, cyber–physical systems, or extended reality. |

| Exclusion Criteria |

| (1) Articles that lack a clear application within Industry 4.0 (automation and smart manufacturing) or Industry 5.0 (human-centric collaboration). |

| (2) Studies focusing primarily on robot learning or data augmentation without practical validation in industrial settings. |

| (3) Articles focusing solely on unrelated domains, including entertainment, education, search and rescue, aerial robotics, or medical robotics. |

| (4) Studies lacking explicit details about the used simulator, its integration with robotic systems, or its role in an industrial workflow. |

| (5) Research that does not use or integrate ROS, ROS 2, or other widely adopted robotics middleware for industrial applications. |

| (6) Studies relying primarily on 3D modeling tools (e.g., Blender) without actual simulation or control of robotic systems. |

| (7) Articles unavailable in full text or published in languages other than English, as well as conference articles, book chapters, technical reports, and short articles (fewer than six pages). |
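Some of the exclusion criteria above are mechanical enough to be pre-checked before manual screening. The following is a minimal sketch of such a pre-check, not the procedure used in this review. The `Record` fields, the `ROS_NAMES` set, and the `flag_exclusions` helper are hypothetical names introduced for illustration. Only criteria (5) and (7) are covered; the remaining criteria require reading the study.

```python
from dataclasses import dataclass

# Hypothetical record layout; field names are illustrative only.
@dataclass(frozen=True)
class Record:
    title: str
    language: str          # e.g. "English"
    pub_type: str          # e.g. "journal", "conference", "book chapter"
    pages: int
    full_text: bool        # True if the full text is available
    middleware: frozenset  # middleware named in the study, e.g. {"ROS 2"}

# Assumption: only ROS and ROS 2 are checked automatically; "other widely
# adopted robotics middleware" in criterion (5) is left to the reviewer.
ROS_NAMES = frozenset({"ROS", "ROS 2"})

def flag_exclusions(rec: Record) -> list:
    """Return the exclusion-criterion numbers that a record appears to trigger."""
    flags = []
    # Criterion (5): no ROS or ROS 2 named in the study.
    if not (rec.middleware & ROS_NAMES):
        flags.append(5)
    # Criterion (7): no full text, not in English, not a journal article,
    # or shorter than six pages.
    if (not rec.full_text or rec.language != "English"
            or rec.pub_type != "journal" or rec.pages < 6):
        flags.append(7)
    return flags
```

A record flagged under criterion (5) is a candidate for exclusion rather than a final decision, since the study may rely on another middleware that a reviewer would accept.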

2.3. Data Extraction and Synthesis

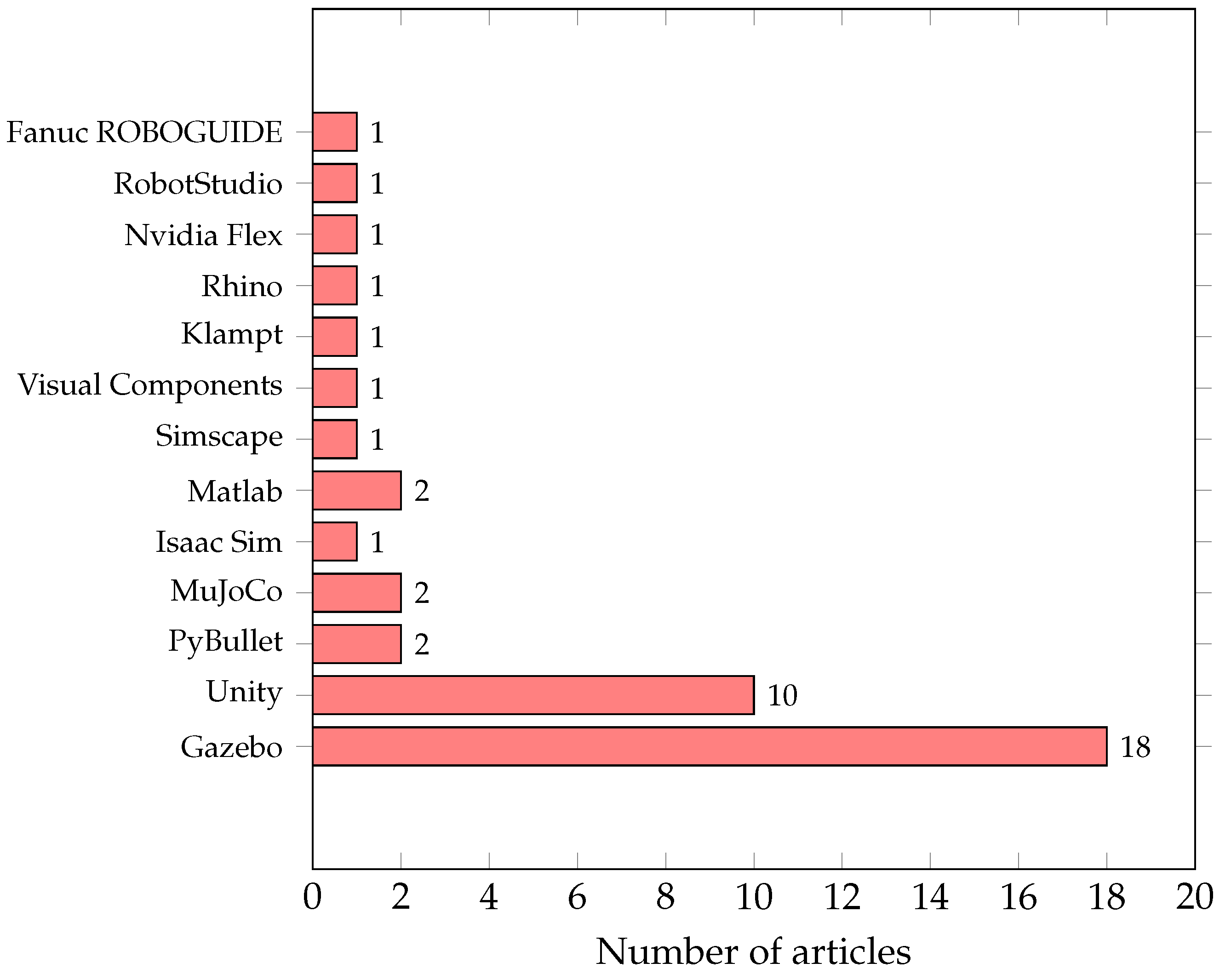

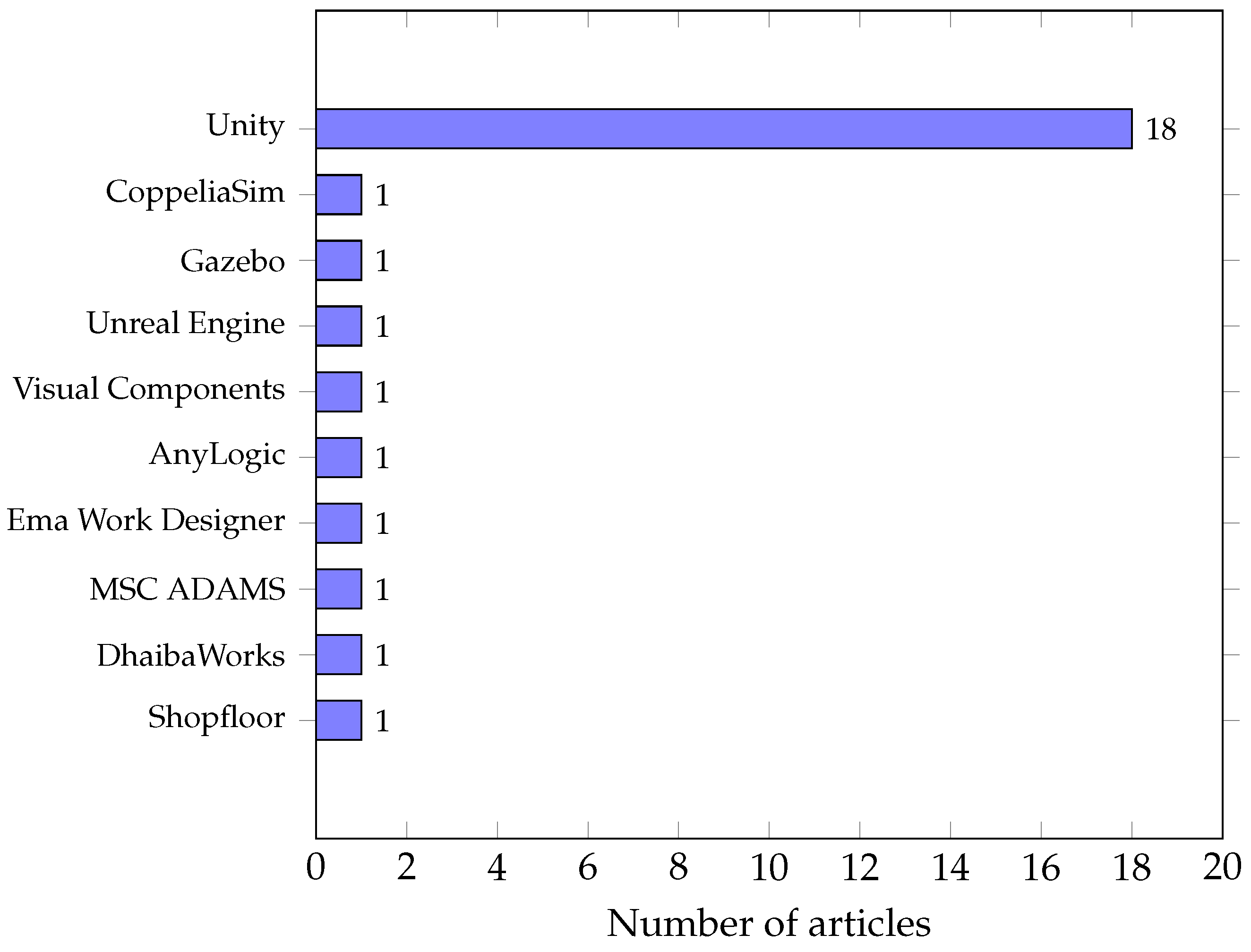

3. RQ1: What ROS-Compatible Simulator Platforms Have Been Used for Developing Industry 4.0/5.0 Applications?

3.1. Description of Common Simulation Platforms

3.2. Domain-Specific Robotic Simulation Tools

4. RQ2: What Are the Most Common Industry 4.0/5.0 Applications That Have Been Developed Using These Simulators?

| Reference | Simulator | Application |

|---|---|---|

| [59] | PyBullet | Assembly, force-based control, vision-guided manipulation |

| [19] | Gazebo | Multi-robot navigation, autonomous path planning, obstacle avoidance |

| [25] | Gazebo | Monitoring, inspection, mapping |

| [22] | MuJoCo | Assembly |

| [57] | PyBullet | Assembly, force-based control, vision-guided manipulation |

| [49] | MATLAB | Shape control, large deformation, co-manipulation |

| [60] | Visual Components | Reconfiguration, assembly, layout planning, digital twin simulation |

| [61] | Gazebo | Object detection, quality inspection, process automation |

| [34] | Unity | Robot teaching, motion planning, AR-assisted control |

| [26] | Gazebo | Reconfigurable cell programming, skill modification, automated layout adaptation |

| [58] | Gazebo | Multi-robot collaboration, resource synchronization, edge-based control, conflict resolution |

| [27] | Gazebo | Path planning, obstacle avoidance, maintenance automation |

| [50] | Klampt | Assembly planning, collision avoidance, autonomous sequence optimization |

| [36] | Unity | Pick-and-place, positioning optimization, reinforcement learning-based control |

| [51] | Rhino-GH, Nvidia Flex | Additive manufacturing, block stacking, tool path planning, autonomous construction |

| [35] | Unity3D | Object detection, camera pose optimization, collision avoidance |

| [52] | Fanuc ROBOGUIDE | Welding, task sequencing, collision avoidance |

| [62] | Gazebo | Localization, tracking |

| [28] | Gazebo | Exploration, surveillance, path planning |

| [29] | Gazebo | Collision avoidance, pick-and-place |

| [30] | Gazebo | Pickup, delivery, navigation |

| [63] | Gazebo, Unity3D | Containerization, task orchestration, resource management in edge-cloud architecture, mission programming |

| [64] | Unity | Digital twin analysis |

| [65] | Unity, Gazebo | Digital twin analysis |

| [31] | Gazebo | Deployment of smart sensors in a confined space |

| [43] | MuJoCo | Screw-tightening and screw-loosening |

| [56] | Gazebo | Pick-and-place |

| [53] | MATLAB, Rviz/Gazebo | Pick-and-place |

| [32] | Gazebo | Pick-and-place |

| [66] | Gazebo | Robotic prefabrication tasks such as milling, gluing, and nailing |

| [42] | Isaac Sim | Pick-and-place |

| [33] | Unity | Assembly |

| [67] | Gazebo | Navigation, assistive robotics |

| [68] | Gazebo | Pick-and-place, transportation, inspection |

| [69] | Unity | Logistics/Material Handling |

| [42] | Isaac Sim | Sorting and manipulation |

| [54] | RobotStudio | Welding |

| [70] | Unity | Pick-and-place, HRI |

| [71] | Unity | Pick-and-place, digital twin, human-in-the-loop |

| Reference | Simulator | Application |

|---|---|---|

| [37] | Unity | Inspection, spraying |

| [18] | Unreal Engine 4 | Enhancing safety in manufacturing, human–robot collaboration |

| [21] | Unity | Collaborative robotic assembly, task coordination |

| [16] | Gazebo | Cooperative tele-recovery during manufacturing failure, task coordination |

| [76] | Unity3D | Assembly, human–robot collaboration |

| [17] | Visual Components | Resource sharing, feasibility testing, flexible automation |

| [38] | Unity | Inspection, remote handling |

| [45] | MSC ADAMS | Human–robot interaction |

| [46] | ema Work Designer (EMA) | Human–robot interaction |

| [77] | Unity3D | Collaborative robotic assembly and surface following |

| [20] | Unity | Task distribution, on-site assembly, collaborative task execution, human–robot interaction |

| [39] | Unity | Latency mitigation, teleoperation, predictive motion modeling, and remote welding |

| [40] | Unity | Task guidance, physical collaboration, workspace sharing, human–robot interaction |

| [41] | Unity | Remote manipulation |

| [9] | Unity | Telemanipulation, human-in-the-loop, peg-in-hole |

| [73] | Unity | Assembly assisted by AR interface, human–robot collaboration |

| [78] | Unity | Human–robot collaboration, natural language commands |

| [75] | Unity | Collaborative robotic assembly |

| [79] | Unity | Exoskeleton-based teleoperation for pick-and-place |

| [74] | Unity | VR-based simulation for human–robot collaboration |

| [47] | AnyLogic | Human–robot collaboration in assembly |

| [80] | Unity | Programming assistance and visualization |

| [48] | Shopfloor Digital Representation | Collaborative robotic assembly |

| [81] | CoppeliaSim | Human–robot collaboration |

| [82] | Unity | Teleoperation |

| [83] | Unity | Digital twin |

| [55] | DhaibaWorks | Ergonomic evaluation |

5. RQ3: What Are the Most Commonly Used Robot Configurations and Key Algorithms That Are Integrated in These Applications?

5.1. Robot Configurations

5.2. Sensing

5.3. Perception

5.4. Mapping

5.5. Cognition and Control

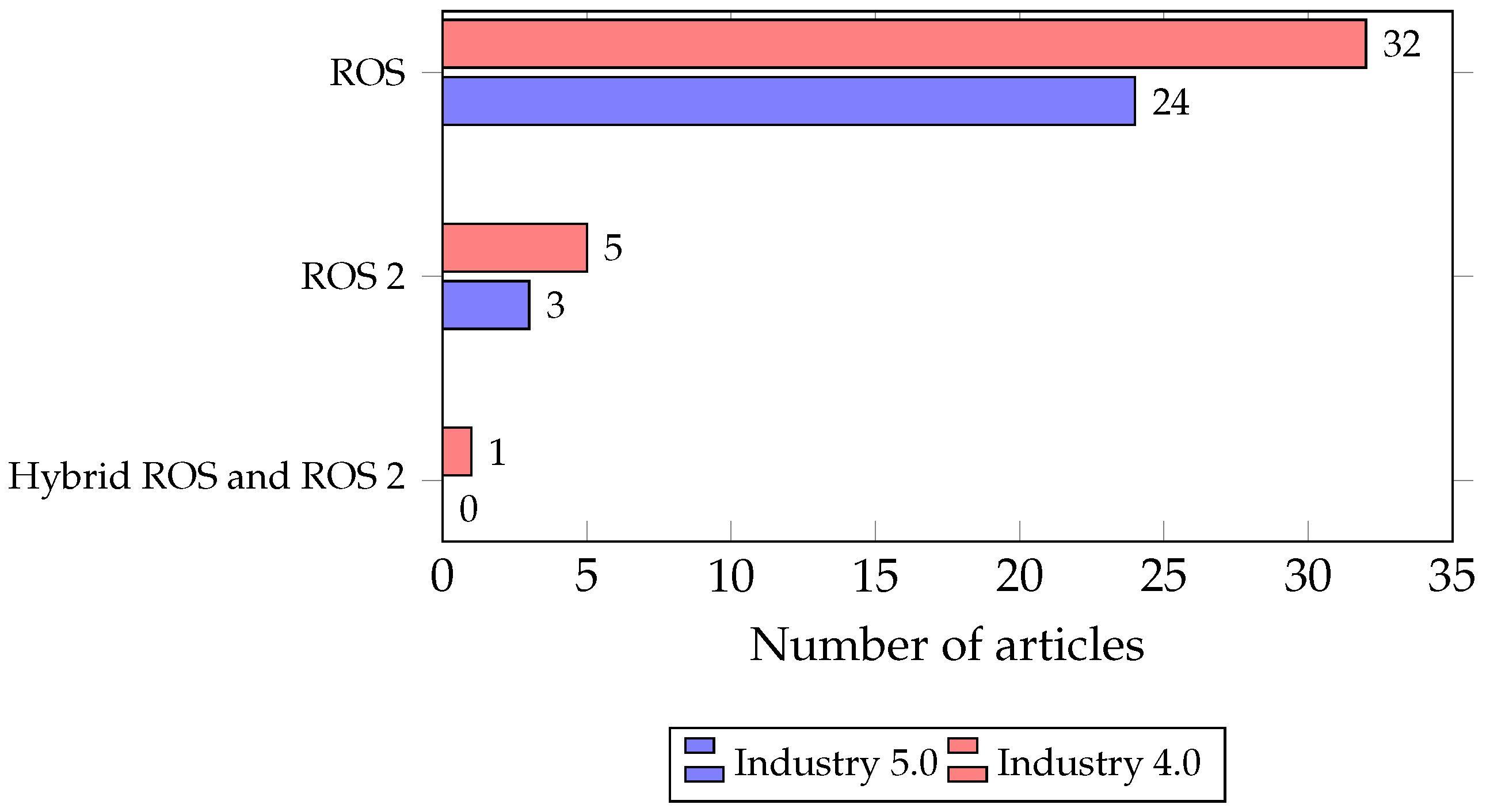

6. RQ4: How Have ROS 1 and ROS 2 Been Adopted in Industry 4.0/5.0 Applications? What Are Their Comparative Advantages and Limitations?

7. RQ5: What Are the Additional Communication and Integration Technologies That Enable Compatibility Between Simulators and Modules Used for Developing Industry 4.0/5.0 Applications?

8. Challenges and Opportunities

9. Limitations

9.1. Methodological Limitations

9.2. Technical Limitations

9.3. Contextual Limitations

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhong, R.Y.; Xu, X.; Klotz, E.; Newman, S.T. Intelligent Manufacturing in the Context of Industry 4.0: A Review. Engineering 2017, 3, 616–630.

- Yang, T.; Yi, X.; Lu, S.; Johansson, K.H.; Chai, T. Intelligent manufacturing for the process industry driven by industrial artificial intelligence. Engineering 2021, 7, 1224–1230.

- Huang, S.; Wang, G.; Lei, D.; Yan, Y. Toward digital validation for rapid product development based on digital twin: A framework. Int. J. Adv. Manuf. Technol. 2022, 119, 2509–2523.

- Maddikunta, P.K.R.; Pham, Q.V.; B, P.; Deepa, N.; Dev, K.; Gadekallu, T.R.; Ruby, R.; Liyanage, M. Industry 5.0: A survey on enabling technologies and potential applications. J. Ind. Inf. Integr. 2022, 26, 100257.

- Xu, X.; Lu, Y.; Vogel-Heuser, B.; Wang, L. Industry 4.0 and Industry 5.0—Inception, conception and perception. J. Manuf. Syst. 2021, 61, 530–535.

- Huang, S.; Wang, B.; Li, X.; Zheng, P.; Mourtzis, D.; Wang, L. Industry 5.0 and Society 5.0—Comparison, complementation and co-evolution. J. Manuf. Syst. 2022, 64, 424–428.

- Coronado, E.; Kiyokawa, T.; Ricardez, G.A.G.; Ramirez-Alpizar, I.G.; Venture, G.; Yamanobe, N. Evaluating quality in human–robot interaction: A systematic search and classification of performance and human-centered factors, measures and metrics towards an industry 5.0. J. Manuf. Syst. 2022, 63, 392–410.

- Wang, B.; Zhou, H.; Li, X.; Yang, G.; Zheng, P.; Song, C.; Yuan, Y.; Wuest, T.; Yang, H.; Wang, L. Human Digital Twin in the context of Industry 5.0. Robot. Comput.-Integr. Manuf. 2024, 85, 102626.

- Coronado, E.; Ueshiba, T.; Ramirez-Alpizar, I.G. A Path to Industry 5.0 Digital Twins for Human–Robot Collaboration by Bridging NEP+ and ROS. Robotics 2024, 13, 28.

- Baratta, A.; Cimino, A.; Longo, F.; Nicoletti, L. Digital twin for human–robot collaboration enhancement in manufacturing systems: Literature review and direction for future developments. Comput. Ind. Eng. 2024, 187, 109764.

- Kargar, S.; Yordanov, B.; Harvey, C.; Asadipour, A. Emerging Trends in Realistic Robotic Simulations: A Comprehensive Systematic Literature Review. IEEE Access 2023, 11, 1–25.

- Liu, C.K.; Negrut, D. The role of physics-based simulators in robotics. Annu. Rev. Control. Robot. Auton. Syst. 2021, 4, 35–58.

- Collins, J.; Chand, S.; Vanderkop, A.; Howard, D. A Review of Physics Simulators for Robotic Applications. IEEE Access 2021, 9, 51416–51431.

- Budgen, D.; Brereton, P. Evolution of secondary studies in software engineering. Inf. Softw. Technol. 2022, 145, 106840.

- Kitchenham, B.; Madeyski, L.; Budgen, D. SEGRESS: Software Engineering Guidelines for REporting Secondary Studies. IEEE Trans. Softw. Eng. 2023, 49, 1273–1298.

- Itadera, S.; Domae, Y. Motion priority optimization framework toward automated and teleoperated robot cooperation in industrial recovery scenarios. Robot. Auton. Syst. 2024, 184, 104833.

- Ribeiro da Silva, E.; Schou, C.; Hjorth, S.; Tryggvason, F.; Sørensen, M.S. Plug & Produce robot assistants as shared resources: A simulation approach. J. Manuf. Syst. 2022, 63, 107–117.

- Wang, S.; Zhang, J.; Wang, P.; Law, J.; Calinescu, R.; Mihaylova, L. A deep learning-enhanced Digital Twin framework for improving safety and reliability in human–robot collaborative manufacturing. Robot. Comput.-Integr. Manuf. 2024, 85, 102608.

- Horelican, T. Utilizability of navigation2/ros2 in highly automated and distributed multi-robotic systems for industrial facilities. IFAC-PapersOnLine 2022, 55, 109–114.

- Alexi, E.V.; Kenny, J.C.; Atanasova, L.; Casas, G.; Dörfler, K.; Mitterberger, D. Cooperative augmented assembly (CAA): Augmented reality for on-site cooperative robotic fabrication. Constr. Robot. 2024, 8, 28.

- Xie, J.; Liu, Y.; Wang, X.; Fang, S.; Liu, S. A new XR-based human–robot collaboration assembly system based on industrial metaverse. J. Manuf. Syst. 2024, 74, 949–964.

- Ehrmann, C.; Min, J.; Zhang, W. Highly flexible robotic manufacturing cell based on holistic real-time model-based control. Procedia CIRP 2024, 127, 20–25.

- Castellini, A.; Marchesini, E.; Farinelli, A. Partially Observable Monte Carlo Planning with state variable constraints for mobile robot navigation. Eng. Appl. Artif. Intell. 2021, 104, 104382.

- Pascher, M.; Goldau, F.F.; Kronhardt, K.; Frese, U.; Gerken, J. AdaptiX-A Transitional XR Framework for Development and Evaluation of Shared Control Applications in Assistive Robotics. Proc. ACM Hum.-Comput. Interact. 2024, 8, 1–28.

- Kivrak, H.; Karakusak, M.Z.; Watson, S.; Lennox, B. Cyber–physical system architecture of autonomous robot ecosystem for industrial asset monitoring. Comput. Commun. 2024, 218, 72–84.

- Asif, S.; Bueno, M.; Ferreira, P.; Anandan, P.; Zhang, Z.; Yao, Y.; Ragunathan, G.; Tinkler, L.; Sotoodeh-Bahraini, M.; Lohse, N.; et al. Rapid and automated configuration of robot manufacturing cells. Robot. Comput.-Integr. Manuf. 2025, 92, 102862.

- Mi, K.; Fu, Y.; Zhou, C.; Ji, W.; Fu, M.; Liang, R. Research on path planning of intelligent maintenance robotic arm for distribution lines under complex environment. Comput. Electr. Eng. 2024, 120, 109711.

- Romero, A.; Delgado, C.; Zanzi, L.; Li, X.; Costa-Pérez, X. OROS: Online Operation and Orchestration of Collaborative Robots using 5G. IEEE Trans. Netw. Serv. Manag. 2023, 20, 4216–4230.

- Gafur, N.; Kanagalingam, G.; Wagner, A.; Ruskowski, M. Dynamic collision and deadlock avoidance for multiple robotic manipulators. IEEE Access 2022, 10, 55766–55781.

- Camisa, A.; Testa, A.; Notarstefano, G. Multi-robot pickup and delivery via distributed resource allocation. IEEE Trans. Robot. 2022, 39, 1106–1118.

- Putranto, A.; Lin, T.H.; Tsai, P.T. Digital twin-enabled robotics for smart tag deployment and sensing in confined space. Robot. Comput.-Integr. Manuf. 2025, 95, 102993.

- Li, X.; Liu, G.; Sun, S.; Yi, W.; Li, B. Digital twin model-based smart assembly strategy design and precision evaluation for PCB kit-box build. J. Manuf. Syst. 2023, 71, 206–223.

- Terei, N.; Wiemann, R.; Raatz, A. ROS-Based Control of an Industrial Micro-Assembly Robot. Procedia CIRP 2024, 130, 909–914.

- Liu, C.; Tang, D.; Zhu, H.; Nie, Q.; Chen, W.; Zhao, Z. An augmented reality-assisted interaction approach using deep reinforcement learning and cloud-edge orchestration for user-friendly robot teaching. Robot. Comput.-Integr. Manuf. 2024, 85, 102638.

- Roveda, L.; Maroni, M.; Mazzuchelli, L.; Praolini, L.; Shahid, A.A.; Bucca, G.; Piga, D. Robot end-effector mounted camera pose optimization in object detection-based tasks. J. Intell. Robot. Syst. 2022, 104, 16.

- Iriondo, A.; Lazkano, E.; Ansuategi, A.; Rivera, A.; Lluvia, I.; Tubío, C. Learning positioning policies for mobile manipulation operations with deep reinforcement learning. Int. J. Mach. Learn. Cyber 2023, 14, 3003–3023.

- Yun, H.; Jun, M.B. Immersive and interactive cyber-physical system (I2CPS) and virtual reality interface for human involved robotic manufacturing. J. Manuf. Syst. 2022, 62, 234–248.

- Fontanelli, G.A.; Sofia, A.; Fusco, S.; Grazioso, S.; Di Gironimo, G. Preliminary architecture design for human-in-the-loop control of robotic equipment in remote handling tasks: Case study on the NEFERTARI project. Fusion Eng. Des. 2024, 206, 114586.

- Su, Y.; Lloyd, L.; Chen, X.; Chase, J.G. Latency mitigation using applied HMMs for mixed reality-enhanced intuitive teleoperation in intelligent robotic welding. Int. J. Adv. Manuf. Technol. 2023, 126, 2233–2248.

- Chan, W.P.; Hanks, G.; Sakr, M.; Zhang, H.; Zuo, T.; Van der Loos, H.M.; Croft, E. Design and evaluation of an augmented reality head-mounted display interface for human robot teams collaborating in physically shared manufacturing tasks. ACM Trans. Hum.-Robot Interact. (THRI) 2022, 11, 1–19.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. Closed-loop robotic arm manipulation based on mixed reality. Appl. Sci. 2022, 12, 2972.

- Nambiar, S.; Jonsson, M.; Tarkian, M. Automation in Unstructured Production Environments Using Isaac Sim: A Flexible Framework for Dynamic Robot Adaptability. Procedia CIRP 2024, 130, 837–846.

- Xu, W.; Yang, H.; Ji, Z.; Ba, M. Cognitive digital twin-enabled multi-robot collaborative manufacturing: Framework and approaches. Comput. Ind. Eng. 2024, 194, 110418.

- Ostanin, M.; Zaitsev, S.; Sabirova, A.; Klimchik, A. Interactive Industrial Robot Programming based on Mixed Reality and Full Hand Tracking. IFAC-PapersOnLine 2022, 55, 2791–2796.

- Zhou, Z.; Yang, X.; Wang, H.; Zhang, X. Coupled dynamic modeling and experimental validation of a collaborative industrial mobile manipulator with human–robot interaction. Mech. Mach. Theory 2022, 176, 105025.

- Glogowski, P.; Böhmer, A.; Hypki, A.; Kuhlenkötter, B. Robot speed adaption in multiple trajectory planning and integration in a simulation tool for human–robot interaction. J. Intell. Robot. Syst. 2021, 102, 25.

- Cimino, A.; Longo, F.; Nicoletti, L.; Solina, V. Simulation-based Digital Twin for enhancing human–robot collaboration in assembly systems. J. Manuf. Syst. 2024, 77, 903–918.

- Katsampiris-Salgado, K.; Dimitropoulos, N.; Gkrizis, C.; Michalos, G.; Makris, S. Advancing human–robot collaboration: Predicting operator trajectories through AI and infrared imaging. J. Manuf. Syst. 2024, 74, 980–994.

- Almaghout, K.; Cherubini, A.; Klimchik, A. Robotic co-manipulation of deformable linear objects for large deformation tasks. Robot. Auton. Syst. 2024, 175, 104652.

- de Winter, J.; El Makrini, I.; van de Perre, G.; Nowé, A.; Verstraten, T.; Vanderborght, B. Autonomous assembly planning of demonstrated skills with reinforcement learning in simulation. Auton Robot 2021, 45, 1097–1110.

- Felbrich, B.; Schork, T.; Menges, A. Autonomous robotic additive manufacturing through distributed model-free deep reinforcement learning in computational design environments. Constr. Robot. 2022, 6, 15–37.

- Touzani, H.; Séguy, N.; Hadj-Abdelkader, H.; Suárez, R.; Rosell, J.; Palomo-Avellaneda, L.; Bouchafa, S. Efficient industrial solution for robotic task sequencing problem with mutual collision avoidance & cycle time optimization. IEEE Robot. Autom. Lett. 2022, 7, 2597–2604.

- Antonelli, D.; Aliev, K.; Soriano, M.; Samir, K.; Monetti, F.M.; Maffei, A. Exploring the limitations and potential of digital twins for mobile manipulators in industry. Procedia Comput. Sci. 2024, 232, 1121–1130.

- Löppenberg, M.; Yuwono, S.; Diprasetya, M.R.; Schwung, A. Dynamic robot routing optimization: State–space decomposition for operations research-informed reinforcement learning. Robot. Comput.-Integr. Manuf. 2024, 90, 102812.

- Maruyama, K.; Yamaoka, M.; Yamazaki, Y.; Hoshi, Y. Digital Twin-Driven Human Robot Collaboration Using a Digital Human. Sensors 2021, 21, 8266.

- Niermann, D.; Doernbach, T.; Petzoldt, C.; Isken, M.; Freitag, M. Software framework concept with visual programming and digital twin for intuitive process creation with multiple robotic systems. Robot. Comput.-Integr. Manuf. 2023, 82, 102536.

- Zhang, Z.; Wang, Y.; Zhang, Z.; Wang, L.; Huang, H.; Cao, Q. A residual reinforcement learning method for robotic assembly using visual and force information. J. Manuf. Syst. 2024, 72, 245–262.

- Keung, K.L.; Chan, Y.; Ng, K.K.; Mak, S.L.; Li, C.H.; Qin, Y.; Yu, C. Edge intelligence and agnostic robotic paradigm in resource synchronisation and sharing in flexible robotic and facility control system. Adv. Eng. Inform. 2022, 52, 101530.

- Zhang, Z.; Zhang, Z.; Wang, L.; Zhu, X.; Huang, H.; Cao, Q. Digital twin-enabled grasp outcomes assessment for unknown objects using visual-tactile fusion perception. Robot. Comput.-Integr. Manuf. 2023, 84, 102601.

- Arnarson, H.; Mahdi, H.; Solvang, B.; Bremdal, B.A. Towards automatic configuration and programming of a manufacturing cell. J. Manuf. Syst. 2022, 64, 225–235.

- Yang, X.; Zhou, Z.; Sørensen, J.H.; Christensen, C.B.; Ünalan, M.; Zhang, X. Automation of SME production with a Cobot system powered by learning-based vision. Robot. Comput.-Integr. Manuf. 2023, 83, 102564.

- D’Avella, S.; Unetti, M.; Tripicchio, P. RFID gazebo-based simulator with RSSI and phase signals for UHF tags localization and tracking. IEEE Access 2022, 10, 22150–22160.

- Lumpp, F.; Panato, M.; Bombieri, N.; Fummi, F. A design flow based on Docker and Kubernetes for ROS-based robotic software applications. ACM Trans. Embed. Comput. Syst. 2024, 23, 1–24.

- Singh, M.; Kapukotuwa, J.; Gouveia, E.L.S.; Fuenmayor, E.; Qiao, Y.; Murray, N.; Devine, D. Unity and ROS as a Digital and Communication Layer for Digital Twin Application: Case Study of Robotic Arm in a Smart Manufacturing Cell. Sensors 2024, 24, 5680.

- Singh, M.; Kapukotuwa, J.; Gouveia, E.L.S.; Fuenmayor, E.; Qiao, Y.; Murray, N.; Devine, D. Comparative Study of Digital Twin Developed in Unity and Gazebo. Electronics 2025, 14, 276.

- Kaiser, B.; Reichle, A.; Verl, A. Model-based automatic generation of digital twin models for the simulation of reconfigurable manufacturing systems for timber construction. Procedia CIRP 2022, 107, 387–392.

- Szabó, G.; Peto, J. Intelligent wireless resource management in industrial camera systems: Reinforcement Learning-based AI-extension for efficient network utilization. Comput. Commun. 2024, 216, 68–85.

- Wang, Y.; Xie, Y.; Xu, D.; Shi, J.; Fang, S.; Gui, W. Heuristic dense reward shaping for learning-based map-free navigation of industrial automatic mobile robots. ISA Trans. 2025, 156, 579–596.

- Zhang, L.; Yang, C.; Yan, Y.; Cai, Z.; Hu, Y. Automated guided vehicle dispatching and routing integration via digital twin with deep reinforcement learning. J. Manuf. Syst. 2024, 72, 492–503.

- Gallala, A.; Kumar, A.A.; Hichri, B.; Plapper, P. Digital Twin for Human–Robot Interactions by Means of Industry 4.0 Enabling Technologies. Sensors 2022, 22, 4950.

- Llopart, A.; Bou, M.; Ribas-Xirgo, J. Unity and ROS as a Digital and Communication Layer for the Implementation of a Cyber-Physical System for Industry 4.0. In Proceedings of the 2020 IEEE 16th International Conference on Automation Science and Engineering (CASE), Hong Kong, China, 20–21 August 2020; pp. 1069–1074.

- Tsuji, C.; Coronado, E.; Osorio, P.; Venture, G. Adaptive contact-rich manipulation through few-shot imitation learning with Force-Torque feedback and pre-trained object representations. IEEE Robot. Autom. Lett. 2024, 10, 240–247.

- Pang, J.; Zheng, P. ProjecTwin: A digital twin-based projection framework for flexible spatial augmented reality in adaptive assistance. J. Manuf. Syst. 2025, 78, 213–225.

- Dianatfar, M.; Järvenpää, E.; Siltala, N.; Lanz, M. Template concept for VR environments: A case study in VR-based safety training for human–robot collaboration. Robot. Comput.-Integr. Manuf. 2025, 94, 102973.

- Dimosthenopoulos, D.; Basamakis, F.P.; Mountzouridis, G.; Papadopoulos, G.; Michalos, G.; Makris, S. Towards utilising Artificial Intelligence for advanced reasoning and adaptability in human–robot collaborative workstations. Procedia CIRP 2024, 127, 147–152.

- Koukas, S.; Kousi, N.; Aivaliotis, S.; Michalos, G.; Bröchler, R.; Makris, S. ODIN architecture enabling reconfigurable human–robot based production lines. Procedia CIRP 2022, 107, 1403–1408.

- Tuli, T.; Manns, M.; Zeller, S. Human motion quality and accuracy measuring method for human–robot physical interactions. Intell. Serv. Robot. 2022, 15, 503–512.

- Konstantinou, C.; Antonarakos, D.; Angelakis, P.; Gkournelos, C.; Michalos, G.; Makris, S. Leveraging Generative AI Prompt Programming for Human-Robot Collaborative Assembly. Procedia CIRP 2024, 128, 621–626.

- Park, H.; Shin, M.; Choi, G.; Sim, Y.; Lee, J.; Yun, H.; Jun, M.B.G.; Kim, G.; Jeong, Y.; Yi, H. Integration of an exoskeleton robotic system into a digital twin for industrial manufacturing applications. Robot. Comput.-Integr. Manuf. 2024, 89, 102746.

- Adler, F.; Gusenburger, D.; Blum, A.; Müller, R. Conception of a Robotic Digital Shadow in Augmented Reality for Enhanced Human-Robot Interaction. Procedia CIRP 2024, 130, 407–412.

- Fellin, T.; Pagetti, C.; Beschi, M.; Caselli, S. A Framework for Enhanced Human–Robot Collaboration during Aircraft Fuselage Assembly. Machines 2022, 10, 390.

- Mueller, J.; Georgilas, I.; Visala, A.; Hentout, A.; Thomas, U. A Robotic Teleoperation System with Integrated Augmented Reality and Digital Twin Capabilities for Enhanced Human-Robot Interaction. In Proceedings of the 2021 IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vasteras, Sweden, 7–10 September 2021; pp. 1–8.

- Erdei, T.I.; Krakó, R.; Husi, G. Design of a Digital Twin Training Centre for an Industrial Robot Arm. Appl. Sci. 2022, 12, 8862.

- Chen, S.C.; Pamungkas, R.S.; Schmidt, D. The Role of Machine Learning in Improving Robotic Perception and Decision Making. Int. Trans. Artif. Intell. 2024, 3, 32–43.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Trommnau, J.; Kühnle, J.; Siegert, J.; Inderka, R.; Bauernhansl, T. Overview of the state of the art in the production process of automotive wire harnesses, current research and future trends. Procedia CIRP 2019, 81, 387–392.

- Macenski, S.; Jambrecic, I. SLAM Toolbox: SLAM for the dynamic world. J. Open Source Softw. 2021, 6, 2783.

- Quigley, M.; Gerkey, B.; Smart, W.D. Programming Robots with ROS: A Practical Introduction to the Robot Operating System; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2015.

- Maruyama, Y.; Kato, S.; Azumi, T. Exploring the Performance of ROS2. In Proceedings of the 13th International Workshop on Embedded Multicore Systems (MCSoC), Lyon, France, 21–23 September 2016; pp. 1–6.

- Open Robotics. ROS 2 Documentation. 2024. Available online: https://docs.ros.org/en/ros2_documentation/ (accessed on 27 January 2025).

- Klotzbucher, B.; Klotzbuecher, D.; Bruyninckx, H. ROS 2 for Real-Time Applications: Performance and Security Considerations. IEEE Robot. Autom. Mag. 2019, 26, 49–60.

- Open Robotics. ROS 1: Architecture and Concepts. 2024. Available online: http://wiki.ros.org/ROS/Introduction (accessed on 27 January 2025).

- AWS Robomaker Team. Migrating from ROS 1 to ROS 2: A Technical Guide. 2023. Available online: https://docs.ros.org/en/foxy/The-ROS2-Project/Contributing/Migration-Guide.html#migrating-to-ros-2 (accessed on 27 January 2025).

- Wang, T.; Tan, C.; Huang, L.; Shi, Y.; Yue, T.; Huang, Z. Simplexity testbed: A model-based digital twin testbed. Comput. Ind. 2023, 145, 103804.

- Yun, J.; Li, G.; Jiang, D.; Xu, M.; Xiang, F.; Huang, L.; Jiang, G.; Liu, X.; Xie, Y.; Tao, B.; et al. Digital twin model construction of robot and multi-object under stacking environment for grasping planning. Appl. Soft Comput. 2023, 149, 111005.

| Year | Reference | Scope | Simulator Description or Comparison | Research Question Focus | Methodology |

|---|---|---|---|---|---|

| 2021 | Liu and Negrut [12] | Physics-based simulation | Dynamics engines and platforms described | Limitations of physics-based simulation in robotic systems (implicit) | Narrative review |

| 2021 | Collins et al. [13] | Field, soft, and medical robotics, manipulation and learning | Comparison of sensors, actuators, fluid/soft-body dynamics, IK, ROS, rendering, VR | Capabilities, limitations, and application areas of physics simulators in robotics (implicit) | Narrative review |

| 2024 | Kargar et al. [11] | AI-driven perception/control for wheeled mobile robots | Comparison of physics engines, languages, open-source availability | Identifies WMR applications, tasks, commonly used ROS-compatible simulators | PRISMA (systematic) |

| 2024 | Baratta et al. [10] | Digital-twin in manufacturing | Comparison of HRC simulation factors: human operator, robot agent, ergonomics, timing, interoperability | Identifies digital twin applications, tools, barriers in HRC | PRISMA (systematic) |

| 2025 | This study | Industry 4.0/5.0 technologies | Technical comparison: requirements, documentation, pricing, physics engines, learning curve, connectivity, scalability, advantages/limitations of simulators | Identifies ROS-compatible simulators, applications, robots, sensing/perception/control technologies | SEGRESS (systematic) |

| Database | Search Terms and Filters |

|---|---|

| IEEE Xplore | robot AND (simulation OR simulators OR “digital twin”) AND ROS AND industry. Filters: 2021–2025. |

| ScienceDirect (first search) | robot AND (simulation OR simulators OR “digital twin”) AND ROS AND (industrial OR service OR assistive) AND human robot interaction. Filters: 2021–2025, Research Articles, Engineering. |

| ScienceDirect (second search) | robot AND (simulation OR simulators OR “digital twin”) AND “Robot Operating System” AND (“industry 5.0” OR “industry 4.0”). Filters: 2021–2025, Research Articles, Engineering. |

| SpringerLink | robot AND (simulation OR simulators OR “digital twin”) AND ROS AND (“industry 5.0” OR “industry 4.0”). Filters: Article, 2021–2025, English. |

| ACM Digital Library | robot AND (simulation OR simulators OR “digital twin”) AND ROS AND (“industry 5.0” OR “industry 4.0”). Filters: 2021–2025. |

| MDPI (first search) | robot AND (simulation OR simulators OR “digital twin”) AND ROS AND (“industry 5.0” OR “industry 4.0”). Filters: 2021–2025. |

| MDPI (second search) | robot AND (simulation OR simulators OR “digital twin”) AND (“industry 5.0” OR “industry 4.0”). Filters: 2021–2025. |
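The search strings above share one boolean structure. As a rough illustration of how that structure can be applied to exported titles or abstracts, the sketch below evaluates the SpringerLink/ACM/MDPI (first search) expression against a text string. It is an approximation under stated assumptions: the helper names `has_term` and `matches_query` are hypothetical, matching is case-insensitive on whole words or phrases, and no stemming is performed, so the result will not reproduce the databases' own search engines.

```python
import re

def has_term(text: str, term: str) -> bool:
    """Case-insensitive whole-word (or whole-phrase) match of term in text."""
    pattern = r"\b" + re.escape(term) + r"\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None

def matches_query(text: str) -> bool:
    """Evaluate: robot AND (simulation OR simulators OR "digital twin")
    AND ROS AND ("industry 5.0" OR "industry 4.0")."""
    return (
        has_term(text, "robot")
        and any(has_term(text, t) for t in ("simulation", "simulators", "digital twin"))
        and has_term(text, "ROS")
        and any(has_term(text, t) for t in ("industry 5.0", "industry 4.0"))
    )
```

Because matching is on whole words, a text containing only "robotic" or "robots" does not satisfy the `robot` term here, whereas a database search engine may treat these as equivalent.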

| Feature | Isaac Sim | Gazebo | Unity |

|---|---|---|---|

| OS (Minimum) | Ubuntu 20.04/22.04, Windows 10/11 | Ubuntu | Windows, macOS, Ubuntu |

| RAM (Minimum) | 32 GB | – | – |

| VRAM (Minimum) | 8 GB | – | – |

| Supported Languages | C++, Python | C++, Python | C#, UnityScript |

| ROS Compatibility | Yes | Yes | Yes (via ROS-TCP) |

| Docker Compatibility | Yes | Yes | No |

| Main Characteristics | Multi-robot simulation with AI | Realistic control simulation | Multi-platform support |

| Price | Free/Business Version | Free | Free/Pro: USD 2200 |

| Physics Accuracy | High | Varies (engine dependent) | Medium (extra config) |

| Learning Curve | Advanced | Moderate | Easy |

| Scalability | Highly scalable | Moderate scalability | Limited scalability |

| Feature | MuJoCo | Unreal Engine | PyBullet |

|---|---|---|---|

| OS (Minimum) | – | Windows, macOS, Ubuntu | Windows, Linux, macOS, Android |

| RAM (Minimum) | – | 16 GB | 2 GB |

| VRAM (Minimum) | – | 8 GB | 512 MB |

| Supported Languages | Python, API: C++ | C++, Python, Lua, JavaScript | Python |

| ROS Compatibility | Yes | Requires additional middleware | Yes |

| Docker Compatibility | Yes | Yes | Yes |

| Main Characteristics | Advanced physics simulation | Advanced graphics for XR, PC | Physics simulation for learning |

| Price | Free | Free/Business Version | Free |

| Physics Accuracy | High (advanced dynamics) | Chaos Physics (advanced setup) | High |

| Learning Curve | Difficult | Difficult | Moderate |

| Scalability | Highly scalable | Highly scalable | Moderate scalability |
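
The two feature tables above can be read as a simple lookup when shortlisting simulators for a given workstation. The sketch below encodes only the minimum RAM, minimum VRAM, and Docker columns as stated in the tables; `SimulatorSpec`, `meets_stated_minimums`, and `candidates` are hypothetical names. Where the tables give no value, the sketch assumes no stated constraint, which is not the same as no real requirement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SimulatorSpec:
    name: str
    min_ram_gb: Optional[float]   # None where the table gives no value
    min_vram_gb: Optional[float]  # None where the table gives no value
    docker: bool


# Values copied from the feature tables; 512 MB is written as 0.5 GB.
SPECS = [
    SimulatorSpec("Isaac Sim", 32, 8, True),
    SimulatorSpec("Gazebo", None, None, True),
    SimulatorSpec("Unity", None, None, False),
    SimulatorSpec("MuJoCo", None, None, True),
    SimulatorSpec("Unreal Engine", 16, 8, True),
    SimulatorSpec("PyBullet", 2, 0.5, True),
]


def meets_stated_minimums(spec, ram_gb, vram_gb):
    """True if the machine meets every minimum the tables actually state."""
    ram_ok = spec.min_ram_gb is None or ram_gb >= spec.min_ram_gb
    vram_ok = spec.min_vram_gb is None or vram_gb >= spec.min_vram_gb
    return ram_ok and vram_ok


def candidates(ram_gb, vram_gb, need_docker=False):
    """Names of simulators whose stated minimums fit the given machine."""
    return [
        spec.name
        for spec in SPECS
        if meets_stated_minimums(spec, ram_gb, vram_gb)
        and (spec.docker or not need_docker)
    ]


if __name__ == "__main__":
    print(candidates(ram_gb=16, vram_gb=8))
    print(candidates(ram_gb=16, vram_gb=8, need_docker=True))
```

With 16 GB of RAM and 8 GB of VRAM, only Isaac Sim is excluded by its stated minimums; requiring Docker support additionally removes Unity.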

| Simulator | Advantages | Disadvantages |

|---|---|---|

| Gazebo | High flexibility, ROS integration, multi-robot support, and extensive plugin ecosystem [19]. | High computational demands, real-time performance challenges, steep learning curve [19]. |

| Unity 3D | High-fidelity visualization, support for XR applications, modular simulation capabilities [20]. | Limited physics accuracy for robotics, high computational requirements for XR, synchronization issues in VR/AR setups [21]. |

| Isaac Sim | Advanced physics via NVIDIA PhysX, GPU-accelerated simulation, reinforcement learning support [22]. | Requires high-end hardware, proprietary platform limits accessibility [22]. |

| CoppeliaSim | Intuitive interface, real-time simulation capabilities, multi-robot interaction support [23]. | Limited physics realism, scalability constraints in complex environments [23]. |

| MuJoCo | High-precision multi-body physics, efficient for reinforcement learning [22]. | Slow performance for large-scale simulations, stability issues in long-running tasks [22]. |

| Visual Components | Optimized for industrial automation, supports flexible DT modeling [24]. | Limited adoption outside industrial manufacturing, restricted extensibility [24]. |

| Feature | ROS 1 | ROS 2 |

|---|---|---|

| Communication Middleware | Uses a custom TCP/UDP-based transport layer (ros_comm) [89]. | Uses the Data Distribution Service (DDS) for improved real-time performance, scalability, and security [90]. |

| Real-Time Support | Limited real-time capabilities; requires external modifications (e.g., Orocos) [90]. | Built-in real-time support with execution management, priority scheduling, and deterministic behavior [91]. |

| Multi-Robot Support | Limited multi-robot capabilities, requiring additional workarounds for managing distributed systems [89]. | Native support for multi-robot applications with improved node discovery and communication [91]. |

| Security | No built-in security features; security relies on external tools and configurations [90]. | Built-in security features (authentication, encryption, access control) following SROS 2 (Secure ROS 2) [91,92]. |

| Middleware Flexibility | Uses a single communication layer (ros_comm), making it less adaptable to different network environments [89]. | DDS abstraction allows selection of different middleware implementations based on application needs [91]. |

| Modularity and Scalability | Designed primarily for single-system robots; lacks flexibility for distributed systems [93]. | Modular architecture enabling distributed systems, allowing cloud-based and EC applications [91]. |

| API and Node Management | Uses a centralized master node (roscore) for service discovery [89]. | Decentralized node discovery and communication, eliminating the need for a master node [91]. |

| Compatibility with ROS 1 | Fully self-contained; does not support ROS 2 natively [89]. | Supports ROS 1 via the ROS 1 bridge, enabling hybrid deployments [91]. |

| Best Use Cases | Suitable for research, prototyping, and single-robot applications [89]. | Suitable for industrial, large-scale, real-time, and multi-robot applications [92,94]. |
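
The "API and Node Management" row contrasts a centralized master with decentralized discovery. The toy model below illustrates only that architectural difference. It is plain Python, it does not use or imitate the ROS or DDS APIs, and the classes `Master` and `PeerNode` are hypothetical.

```python
class Master:
    """Toy central name service, standing in for the role of a master node."""

    def __init__(self):
        self.alive = True
        self._publishers = {}  # topic -> list of publisher names

    def register(self, topic, node_name):
        self._publishers.setdefault(topic, []).append(node_name)

    def lookup(self, topic):
        # Every lookup depends on the master being reachable.
        if not self.alive:
            raise ConnectionError("master unreachable")
        return list(self._publishers.get(topic, []))


class PeerNode:
    """Toy node that learns about publishers directly from its peers."""

    def __init__(self, name):
        self.name = name
        self.known = {}  # topic -> list of publisher names heard so far

    def announce(self, topic, peers):
        # Each peer records the announcement locally; no central registry.
        for peer in peers:
            peer.known.setdefault(topic, []).append(self.name)


if __name__ == "__main__":
    # Centralized: discovery fails once the master is down.
    master = Master()
    master.register("/joint_states", "arm_driver")
    print(master.lookup("/joint_states"))
    master.alive = False
    try:
        master.lookup("/joint_states")
    except ConnectionError as err:
        print("lookup failed:", err)

    # Decentralized: peers keep what they heard; there is nothing to go down.
    camera, planner = PeerNode("camera"), PeerNode("planner")
    camera.announce("/image", [planner])
    print(planner.known["/image"])
```

In the centralized variant the master is a single point of failure for discovery, whereas in the peer variant each node holds its own view of the network, which is the property the table attributes to ROS 2.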

| Challenge | Description |

|---|---|

| Computational efficiency and scalability | High-fidelity simulators like Isaac Sim and Gazebo require significant computational resources, limiting real-time performance and accessibility [19,22]. |

| Sim-to-real transfer limitations | Ensuring that behaviors simulated in environments like Gazebo and Isaac Sim translate effectively to real-world deployment remains a key challenge [95]. |

| Interoperability and standardization | The lack of standardized APIs and middleware complicates system integration across different hardware and software platforms, increasing development overhead [17,59,96]. |

| Latency and synchronization issues | High-frequency data exchange in distributed environments introduces delays, impacting real-time control and sensor-actuator synchronization [17,19]. |

| Human–robot interaction (HRI) modeling | Simulating both the physical and cognitive aspects of human–robot interactions in real-time remains an open problem [20]. |

| Learning curve and accessibility | Simplifying simulator setup and configuration can lower the entry barrier for researchers and developers without extensive robotics expertise. Some simulators, such as Unity and CoppeliaSim, offer more accessible learning environments, while Isaac Sim and MuJoCo require advanced configuration knowledge [19,40]. |

| Physics fidelity | Simulators like Isaac Sim and MuJoCo provide high-fidelity physics modeling for robotic manipulation and dynamic interaction tasks. In contrast, lighter simulators like CoppeliaSim prioritize efficiency at the cost of reduced physical accuracy [19,22]. |

| Integration with robotics frameworks | Gazebo and Isaac Sim are deeply integrated with ROS, enabling streamlined transitions from simulation to real-world deployment. Unity and Unreal Engine, while powerful in visualization, require additional middleware to interface effectively with robotics frameworks [20,89]. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Flores Gonzalez, J.M.; Coronado, E.; Yamanobe, N. ROS-Compatible Robotics Simulators for Industry 4.0 and Industry 5.0: A Systematic Review of Trends and Technologies. Appl. Sci. 2025, 15, 8637. https://doi.org/10.3390/app15158637
