1. Introduction

The Navier–Stokes (NS) equations are the cornerstone of fluid dynamics, providing a mathematical description of the motion of viscous, incompressible fluids [1]. Derived from the conservation of mass and momentum, they have implications across a wide spectrum of scientific and engineering disciplines. Applications range from aerospace engineering, in the design of aircraft and spacecraft [2], and weather forecasting, where they model atmospheric and oceanic currents [3], to biofluid mechanics, for understanding blood flow in the cardiovascular system [4], and even the creative industries, for realistic visual effects [5]. Despite this ubiquity, the nonlinearity of the convective term, together with the pressure–velocity coupling, means that analytical solutions are available only in a few highly simplified cases [1,3]. Consequently, science and engineering rely increasingly on numerical approximations to the NS equations, a field known as computational fluid dynamics (CFD) [2].
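
For reference, the two difficulties named above can be read off the incompressible NS system in its usual non-dimensional form. The notation here (velocity $\mathbf{u}$, pressure $p$, body force $\mathbf{f}$) is the common textbook convention and is an assumption; Section 2 may use different symbols.

$$
\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\mathbf{u} - \frac{1}{Re}\,\Delta\mathbf{u} + \nabla p = \mathbf{f}, \qquad \nabla\cdot\mathbf{u} = 0 .
$$

The convective term $(\mathbf{u}\cdot\nabla)\mathbf{u}$ is quadratic in the unknown velocity, and the pressure has no evolution equation of its own: it acts as a Lagrange multiplier enforcing the constraint $\nabla\cdot\mathbf{u} = 0$.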

Among the various numerical discretization techniques, the finite element method (FEM) has emerged as a powerful and versatile tool for CFD [4,6]. Its strength lies in its ability to handle complex geometries and in its solid mathematical foundation, rooted in the Galerkin method [7]. The standard Galerkin FEM seeks an approximate solution in a finite-dimensional function space by requiring the residual of the governing equation to be orthogonal to the test function space [6]. For Stokes flow or low-Reynolds-number flows, this approach, when paired with appropriate velocity and pressure approximation spaces such as the Taylor–Hood element ($P_2$–$P_1$) [8], which satisfies the crucial inf-sup (or Ladyzhenskaya–Babuška–Brezzi) condition, provides stable and accurate results [9,10].
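
In its usual discrete form, the inf-sup condition requires the velocity space and the pressure space to be balanced in the sense below. The symbols $V_h$, $Q_h$, and $\beta$ are generic notation assumed here for illustration, not taken from the paper.

$$
\inf_{q_h \in Q_h \setminus \{0\}} \; \sup_{\mathbf{v}_h \in V_h \setminus \{0\}} \frac{(q_h,\, \nabla\cdot\mathbf{v}_h)}{\|\mathbf{v}_h\|_{1}\,\|q_h\|_{0}} \;\ge\; \beta > 0 ,
$$

with $\beta$ independent of the mesh size. Equal-order pairs such as $P_1$–$P_1$ do not satisfy this bound without additional stabilization, which is why the Taylor–Hood pair uses a richer velocity space than pressure space.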

However, the landscape changes dramatically when the Reynolds number (Re) becomes high, as is common in practical applications involving turbulence and boundary layer separation. In such regimes, the nonlinear convection term dominates the viscous diffusion term, leading to two major, intertwined challenges for numerical simulation. The first is stability: the standard Galerkin method becomes inadequate for convection-dominated problems, often producing spurious, non-physical oscillations that can corrupt the solution over the entire domain [11,12]. To counteract this, stabilized finite element methods were developed. A pioneering and highly influential approach is the Streamline Upwind Petrov–Galerkin (SUPG) method [13], which adds a term to the weighting function that acts along the streamline, introducing artificial diffusion only where needed and thus suppressing oscillations without overly compromising accuracy [14]. Building on these ideas, the Variational Multiscale Stabilization (VMS) method offers a more sophisticated framework [15,16]. While SUPG addresses oscillations by adding artificial diffusion along streamlines, VMS offers a more physically consistent approach by explicitly modeling the effect of unresolved subgrid scales on the resolved scales. This difference often leads to better accuracy and less intrusive stabilization, particularly in complex, high-Reynolds-number flows where the interaction between scales is significant, and it preserves the physical fidelity of the solution without excessive artificial diffusion. The core concept of VMS is the decomposition of the solution into large (resolved) scales and small (unresolved) subgrid scales. The effect of the unresolved scales on the resolved scales is then modeled, which damps the numerical oscillations that arise at high Reynolds numbers [17].
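
The stability issue can be reproduced on a much simpler problem than the NS equations. The sketch below is a toy illustration and not the method of this paper: it solves the steady 1D convection-diffusion equation $-\varepsilon u'' + a u' = 0$ with linear elements, once with plain Galerkin and once with SUPG using the classical "optimal" parameter $\tau = \frac{h}{2a}(\coth Pe - 1/Pe)$. The function name `solve_1d` and all parameter values are assumptions chosen for the demonstration.

```python
import numpy as np

def solve_1d(n_el, a, eps, supg):
    """Linear FEM for -eps*u'' + a*u' = 0 on (0, 1) with u(0)=0, u(1)=1."""
    h = 1.0 / n_el
    pe = a * h / (2.0 * eps)  # element Peclet number
    # Classical "optimal" SUPG parameter; tau = 0 recovers plain Galerkin.
    tau = (h / (2.0 * a)) * (1.0 / np.tanh(pe) - 1.0 / pe) if supg else 0.0
    # For linear elements and zero source, the SUPG term reduces to an
    # extra diffusion tau*a^2 acting along the flow direction.
    k_eff = eps + tau * a * a
    k_diff = (k_eff / h) * np.array([[1.0, -1.0], [-1.0, 1.0]])
    k_conv = (a / 2.0) * np.array([[-1.0, 1.0], [-1.0, 1.0]])
    A = np.zeros((n_el + 1, n_el + 1))
    for e in range(n_el):
        A[e:e + 2, e:e + 2] += k_diff + k_conv
    b = np.zeros(n_el + 1)
    # Dirichlet boundary conditions imposed by row replacement.
    A[0, :] = 0.0
    A[0, 0] = 1.0
    A[-1, :] = 0.0
    A[-1, -1] = 1.0
    b[-1] = 1.0
    return np.linalg.solve(A, b)

a, eps, n_el = 1.0, 0.01, 10  # element Peclet number = 5
x = np.linspace(0.0, 1.0, n_el + 1)
u_exact = np.expm1(a * x / eps) / np.expm1(a / eps)
u_gal = solve_1d(n_el, a, eps, supg=False)
u_supg = solve_1d(n_el, a, eps, supg=True)
print("Galerkin minimum nodal value:", u_gal.min())
print("SUPG maximum nodal error:", np.abs(u_supg - u_exact).max())
```

With an element Péclet number above one, the Galerkin solution oscillates and takes negative values although the exact solution lies in $[0, 1]$, while the SUPG solution stays monotone. In 1D with this choice of $\tau$ the SUPG nodal values coincide with the exact solution; that property does not carry over to multidimensional or nonlinear problems.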

The second major challenge is computational efficiency. High-Reynolds-number flows are inherently multiscale, featuring a wide range of spatial and temporal scales, from large energy-containing eddies down to the smallest dissipative structures at the Kolmogorov scale [18]. Resolving all these scales on a uniformly fine computational grid, a technique known as Direct Numerical Simulation (DNS), is prohibitively expensive and often computationally intractable for practical engineering problems [19]. This calls for a more economical distribution of mesh resolution, and adaptive mesh refinement (AMR) provides a compelling solution [20]. Guided by a posteriori error estimators, which measure the local error of the numerical solution, AMR techniques dynamically adjust the mesh resolution, placing smaller elements in regions of high flow complexity (e.g., steep gradients, vortices, and boundary layers) and coarser elements elsewhere [21,22]. This strategy significantly reduces the total number of degrees of freedom and, consequently, the computational cost, while maintaining a high level of accuracy where it is most needed [23].
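
The mark-and-refine loop behind AMR can be sketched in one dimension. The example below is a simplified stand-in, not the estimator of this paper: it uses a hypothetical indicator (the deviation of a known boundary-layer profile from its linear interpolant at each element midpoint) in place of a residual-based a posteriori estimator, and plain interval bisection in place of triangle refinement. The names `midpoint_indicator` and `adapt_mesh`, the marking fraction `theta`, and the tolerance are all assumptions.

```python
import numpy as np

def midpoint_indicator(f, x):
    """Per-element indicator: |f(midpoint) - linear interpolant at midpoint|."""
    mid = 0.5 * (x[:-1] + x[1:])
    return np.abs(f(mid) - 0.5 * (f(x[:-1]) + f(x[1:])))

def adapt_mesh(f, x, tol, theta=0.5, max_iter=50):
    """Bisect every element whose indicator exceeds theta * (largest indicator)."""
    for _ in range(max_iter):
        eta = midpoint_indicator(f, x)
        if eta.max() <= tol:
            break
        marked = eta > theta * eta.max()  # maximum marking strategy
        mid = 0.5 * (x[:-1] + x[1:])
        x = np.sort(np.concatenate([x, mid[marked]]))
    return x

eps = 0.01  # layer thickness parameter (illustrative value)

def layer(s):
    """Boundary-layer profile with a steep gradient near s = 1."""
    return np.expm1(s / eps) / np.expm1(1.0 / eps)

x_adapt = adapt_mesh(layer, np.linspace(0.0, 1.0, 5), tol=1e-3)
h = np.diff(x_adapt)
print("elements:", len(h), "smallest h:", h.min(), "largest h:", h.max())
```

The refined mesh concentrates small elements in the layer near $s = 1$ and leaves the smooth region coarse, so it needs far fewer elements than a uniform mesh with the same smallest element size.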

While both VMS and AMR are powerful techniques in their own right, the existing research has often applied them independently, thereby failing to fully exploit their potential synergistic advantages. For instance, many studies have successfully applied VMS to simulate turbulent flows, but without adaptive meshing, they often require globally fine grids, leading to a significant increase in computational effort that contributes little to the accuracy of the results [24]. Conversely, adaptive methods that lack a proper stabilization treatment, such as the one described by Verfürth (2013) [23], can easily lead to numerical oscillations in convection-dominated scenarios, even on an adapted mesh. The true potential for a breakthrough in efficiency and accuracy lies in their combination: using AMR to focus computational power on the dynamically important regions of the flow, while employing VMS to ensure the stability and correctness of the solution on the resulting anisotropic and non-uniform grids.

While previous works have explored VMS or AMR independently, or combined them in a less integrated fashion, the novelty of this paper lies in the deep and self-consistent coupling of the two techniques. Unlike traditional approaches, in which the error estimator operates on the original Navier–Stokes residuals (as in some standard AMR frameworks), this framework introduces a novel a posteriori error estimator formulated from the dual residuals of the VMS-stabilized large-scale equations. This ensures that the adaptive mesh refinement is directly guided by the quality of the VMS solution itself, including the effects of the subgrid-scale model. This integrated design, in which stabilization and adaptation work in concert rather than as separate, sequential steps, represents a significant advance over existing methodologies.

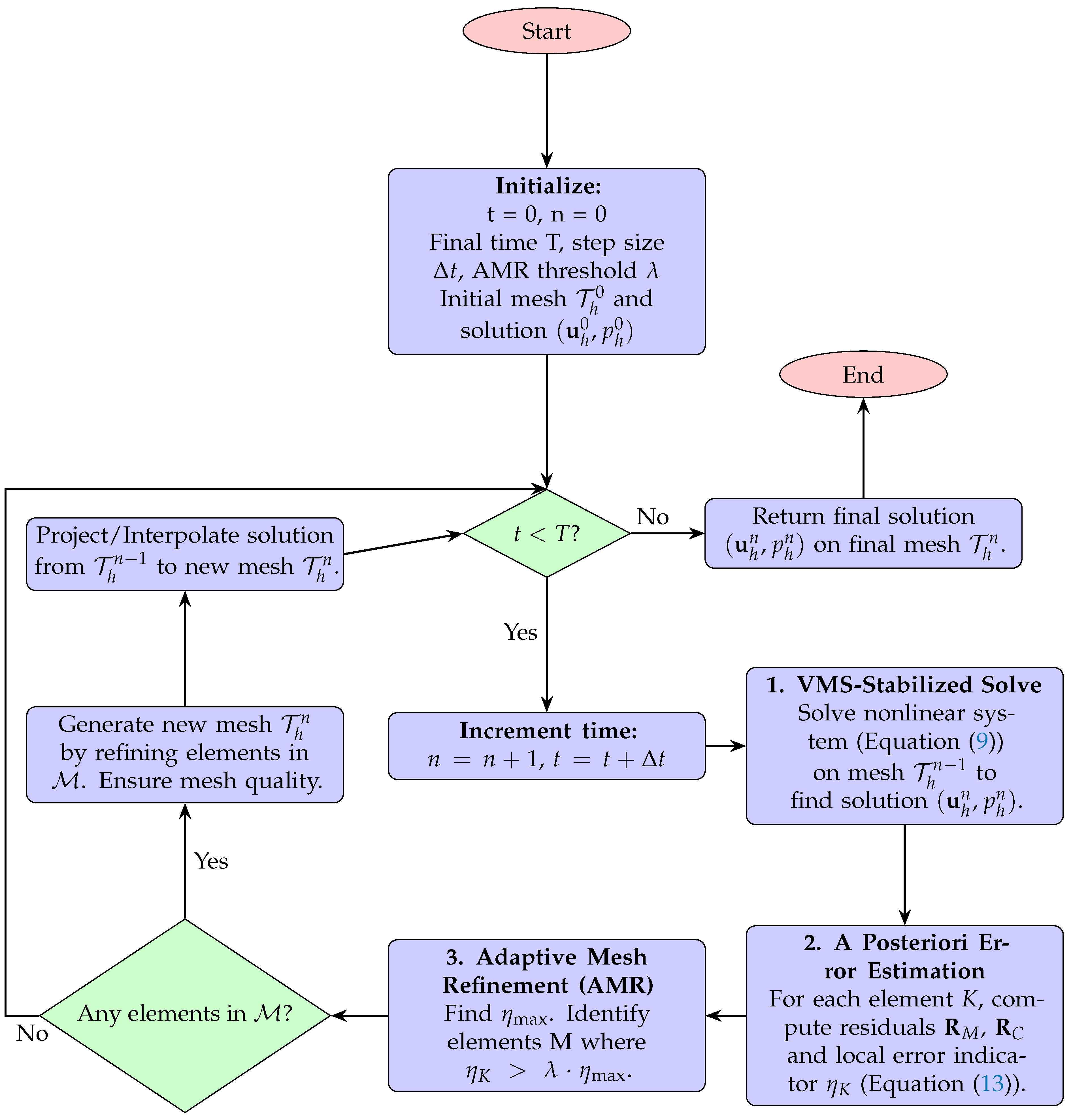

The innovation of this paper lies in the construction of an enhanced Galerkin framework that deeply couples VMS with an AMR strategy. This synergy is realized through a novel a posteriori error estimator designed specifically for the VMS system: rather than acting on the original Navier–Stokes residuals, the estimator (detailed in Section 3.3) is formulated from the dual residuals of the stabilized large-scale equations, so that mesh refinement is guided by the quality of the VMS solution itself, including the effects of the subgrid-scale model. Based on the standard Ritz–Galerkin finite element method and the stable Taylor–Hood element, a VMS term is introduced, derived from a generalized -scheme for temporal discretization. This explicitly constructs a subgrid-scale model that addresses the numerical oscillations of traditional Galerkin methods in convection-dominated regions. The dual-residual estimator provides accurate error localization by dynamically weighting local momentum residuals, continuity residuals, and inter-element jump terms. It guides a dynamic mesh refinement strategy that combines longest-edge bisection with local Delaunay re-triangulation to ensure mesh quality. The feasibility and superiority of this coupled approach are verified through numerical experiments on high-Reynolds-number flows, demonstrating its capability to provide a robust, accurate, and efficient solution for multiscale problems in computational fluid dynamics.
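
As a rough illustration of the refinement primitive named above, the following sketch bisects a single triangle along its longest edge. It is a minimal example under stated assumptions, not the implementation used in this work: the helper names `longest_edge_bisect` and `triangle_area` are hypothetical, and the sketch omits both the propagation needed to keep a full mesh conforming (the neighbor sharing the bisected edge must also be split) and the local Delaunay re-triangulation step.

```python
import numpy as np

def longest_edge_bisect(tri):
    """Split a triangle (3x2 vertex array) at the midpoint of its longest edge."""
    tri = np.asarray(tri, dtype=float)
    # Edge k is the edge opposite vertex k.
    lengths = [np.linalg.norm(tri[(k + 1) % 3] - tri[(k + 2) % 3]) for k in range(3)]
    k = int(np.argmax(lengths))
    apex, p, q = tri[k], tri[(k + 1) % 3], tri[(k + 2) % 3]
    mid = 0.5 * (p + q)
    # Both children keep the orientation of the parent triangle.
    return np.array([apex, p, mid]), np.array([apex, mid, q])

def triangle_area(tri):
    """Unsigned area from the 2D cross product of two edge vectors."""
    (x0, y0), (x1, y1), (x2, y2) = np.asarray(tri, dtype=float)
    return 0.5 * abs((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0))

parent = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 3.0]])
child_a, child_b = longest_edge_bisect(parent)
print(child_a)
print(child_b)
```

Bisecting the longest edge, rather than an arbitrary one, keeps the smallest angle of the children bounded away from zero under repeated refinement, which is the usual motivation for this rule when mesh quality matters.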

The remainder of this paper is organized as follows. Section 2 details the mathematical model, presenting the strong and weak forms of the Navier–Stokes equations and discussing the theoretical foundation of the inf-sup condition for mixed finite element methods. In Section 3, the proposed numerical framework is elaborated upon, including the Taylor–Hood element discretization, the formulation of the VMS method, and the design of the a posteriori error estimator that drives the adaptive mesh refinement strategy. Sections 4.1–4.4 present numerical experiments, focusing on the lid-driven cavity flow benchmark at both high and low Reynolds numbers, to validate the accuracy, stability, and efficiency of the method. Section 4.5 introduces a classical cylinder flow benchmark at Re = 5000 to further demonstrate the framework’s robustness in handling complex, unsteady flows. Finally, Section 5 concludes the paper with a summary of the findings and the key contributions of this work.

5. Conclusions

In this paper, an improved Galerkin framework is presented, designed to effectively address the persistent challenges of numerical stability and computational efficiency inherent in the simulation of unsteady, high-Reynolds-number Navier–Stokes equations. The work successfully demonstrates that a deep, synergistic coupling of Variational Multiscale Stabilization (VMS) with an adaptive mesh refinement (AMR) strategy provides a robust and powerful solution.

The key contributions of this study are threefold. First, a VMS method is introduced, derived from a generalized -scheme, which explicitly constructs a subgrid-scale model to suppress the non-physical oscillations that plague standard Galerkin methods in convection-dominated regimes. Unlike methods such as Streamline Upwind Petrov–Galerkin (SUPG), which primarily add artificial diffusion, VMS provides a more physically consistent stabilization by directly accounting for subgrid-scale effects, thereby preserving the physical fidelity of the solution without excessive smearing. Second, to reduce computational expense, a novel and efficient a posteriori error estimator is developed based on dual residuals and tailored specifically to the VMS formulation. This estimator assesses the residuals of the large-scale stabilized equations, ensuring that the AMR strategy is directly guided by the accuracy of the VMS solution itself. This creates a feedback loop in which the stabilization and mesh refinement work in concert, a key aspect of this synergistic framework. Third, and most crucially, the framework is built upon the Taylor–Hood element, whose stability and satisfaction of the essential inf-sup condition are well established. This preserves the mathematical rigor of the velocity–pressure coupling, as the AMR strategy incorporates local re-triangulation to maintain the mesh quality necessary for the inf-sup condition to hold on the non-uniform, adaptively generated grids.

The performance of the proposed method was validated on a sequence of test cases. After verifying accuracy against an analytical solution at Re = 1, the framework demonstrated its robustness on the benchmark lid-driven cavity flow at Re = 1000. Its full potential became apparent on a highly complex case of a lid-driven cavity with an offset cylinder at Re = 5000. The framework’s ability to simulate the classical cylinder flow at Re = 5000 accurately and stably, a benchmark known for its complex von Kármán vortex street, further underscores its versatility and robustness. Even in this chaotic regime, the integrated approach maintained stability, captured the intricate multiscale vortex dynamics, and achieved stable convergence on grids that were, on average, significantly coarser than those required by traditional methods. This led to a substantial reduction in computational cost without compromising the physical fidelity of the final solution.

Looking forward, the developed framework serves as a powerful and extensible foundation for future research. Immediate next steps include extending the methodology to three-dimensional (3D) simulations. While the extension to 3D introduces significant challenges, particularly concerning the substantial increase in computational cost and the complexity of mesh generation and management (e.g., efficient 3D Delaunay re-triangulation and load balancing for anisotropic meshes), the fundamental principles of VMS and dual-residual error estimation remain applicable. Addressing these challenges will necessitate advanced parallel computing strategies and optimized data structures to fully leverage the benefits of adaptive refinement in a 3D context. Beyond 3D, the framework can be applied to more complex multiphysics problems, such as fluid–structure interaction or flows with heat transfer. Furthermore, optimizing the implementation for parallel computing architectures could unlock its potential for tackling large-scale industrial and scientific applications. In summary, the integrated VMS-AMR approach presented herein offers a robust, accurate, and highly efficient pathway for the simulation of challenging high-Reynolds-number flows, significantly advancing the capabilities of computational fluid dynamics for both academic research and practical engineering analysis.