Abstract

Deep Reinforcement Learning (DRL) algorithms often exhibit significant performance variability across different training runs, even with identical settings. This paper investigates the hypothesis that a key contributor to this variability is the divergence in the observation spaces explored by individual learning agents. We conducted an empirical study using Proximal Policy Optimization (PPO) agents trained on eight Atari environments. We analyzed the collected agent trajectories by qualitatively visualizing and quantitatively measuring the divergence in their explored observation spaces. Furthermore, we cross-evaluated the learned actor and value networks, measuring the average absolute TD-error, the RMSE of value estimates, and the KL divergence between policies to assess their functional similarity. We also conducted experiments where agents were trained from identical network initializations to isolate the source of this divergence. Our findings reveal a strong correlation: environments with low performance variance (e.g., Freeway) showed high similarity in explored observation spaces and learned networks across agents. Conversely, environments with high performance variability (e.g., Boxing, Qbert) demonstrated significant divergence in both explored states and network functionalities. This pattern persisted even when agents started with identical network weights. These results suggest that differences in experiential trajectories, driven by the stochasticity of agent–environment interactions, lead to specialized agent policies and value functions, thereby contributing substantially to the observed inconsistencies in DRL performance.

1. Introduction

Deep reinforcement learning (DRL) has demonstrated an extraordinary ability to solve high-dimensional decision-making problems, from Atari video games [1] to the board game Go [2]. Among modern on-policy methods, Proximal Policy Optimization (PPO) [3] has emerged as a de facto baseline due to its empirical performance and implementation simplicity, and it remains the algorithm of choice in a wide range of recent studies. Yet, a persistent challenge plagues the field. Despite identical hyperparameters and training budgets, independent runs of the same DRL algorithm can yield markedly different returns [4], complicating the fair evaluation and deployment of new methods.

Existing research has identified several key sources contributing to this performance variability. One line of inquiry has focused on the profound impact of implementation details. Foundational work by Henderson et al. [4] demonstrated that differences in codebases, hyperparameter settings, and even random seeds could lead to drastically different outcomes. This was further emphasized by Engstrom et al. [5], who showed that seemingly minor code-level decisions—such as the choice of activation function or the order of operations—can alter performance by orders of magnitude. Another perspective has examined the dynamics of the training process itself. Bjorck et al. [6] provided evidence that much of this variability originates early in training, as a few “outlier” runs drift onto low-reward trajectories and never recover. This aligns with the work by Jang et al. [7], which explored how entropy-aware initialization can foster more effective exploration from the outset, thereby preventing early stagnation. A third approach has delved into the internal mechanics of the learning agent. For instance, Moalla et al. [8] recently established a connection between performance instability in PPO and internal “representation collapse,” where the network learns insufficiently diverse features, leading to trust issues in policy updates.

While these studies provide crucial insights into implementation, training dynamics, and internal representations, a complementary perspective remains less explored: the divergence in the observation space that each agent actually experiences. RL agents learn from the specific trajectories of states and rewards they encounter. If two agents, due to chance events early in training, begin to explore different regions of the state space, they are effectively training on different datasets. This can lead them to converge to substantially different—and unequally performing—policies. This paper investigates the hypothesis that this divergence in lived experience is a primary cause of performance variability. Unlike prior work focused on implementation choices or internal network states [8], we aim to directly quantify the differences in state visitation distributions among independently trained agents. And while studies like [6,7] identify that runs diverge, we characterize how they diverge in terms of the states they encounter and explicitly link this to the functional dissimilarity of the resulting policies.

In this work, we perform a controlled empirical study of five PPO agents, each trained with an independent random seed, across eight Atari environments—Alien, Boxing, Breakout, Enduro, Freeway, KungFuMaster, Pong, and Qbert—using the Arcade Learning Environment [9] and Gymnasium [10]. Training five independent PPO agents per game yields a spectrum of outcomes, from highly successful to comparatively poor policies. We then ask the following: Do higher-performing agents encounter a broader or different set of states than lower-performing agents? And do such differences manifest in the learned representations of the actor (policy) and critic (value function) networks? To answer these questions, we employ a range of analysis techniques. First, we visualize and quantify each agent’s observation distribution using dimensionality-reduction methods and statistical measures of dispersion and similarity. Second, we cross-evaluate the learned actor and value networks, applying them to states experienced by other agents to assess functional differences. Our findings reveal a clear correlation between divergence in explored observation spaces, dissimilarity of the learned networks, and variance in achieved performance across agents within each environment.

The remainder of this paper is organized as follows. Section 2 details our methodology. Section 3 presents the experimental results, featuring analyses of observation space characteristics and the discrepancies observed in actor–critic networks. In Section 4, we discuss the implications of our findings. Finally, Section 5 concludes the paper by outlining potential avenues for future research.

2. Methodology

To investigate the relationship between observation space divergence, network differences, and performance variability, a series of experiments were conducted using PPO agents trained on eight Atari environments. For this investigation, we specifically selected Proximal Policy Optimization (PPO) and the Atari 2600 benchmark suite. PPO was chosen due to its status as a robust, high-performing, and widely adopted baseline algorithm in the DRL community, making our findings on its variability highly relevant. The Atari suite, via the Arcade Learning Environment, offers a diverse collection of environments with varying complexities and reward structures. This diversity is crucial for our study, as it allows us to systematically compare the divergence phenomenon in games known to produce both highly consistent and highly variable performance outcomes.

2.1. Experimental Setup

All experiments were conducted on a workstation with the following specifications:

- Hardware: Intel(R) Core(TM) i7-14700K (20 cores, 28 threads), 128 GB RAM.

- Software: Python 3.10.16, along with key scientific computing libraries including NumPy 2.2.3, Pandas 2.2.3, SciPy 1.15.3, and Scikit-learn 1.7.0.

2.2. Training Environment and Agents

We trained five independent PPO agents for each of the eight Atari environments shown in Figure 1: Alien, Boxing, Breakout, Enduro, Freeway, KungFuMaster (KFM), Pong, and Qbert. Training was conducted using Stable-Baselines3 [11], and the agents used the PPO hyperparameters for Atari games as released in the RL Baselines3 Zoo [12]. Each agent within an environment was trained from scratch, with the random seed as the only source of variation.

Figure 1.

The eight Atari 2600 environments used for agent training: (a) Alien, (b) Boxing, (c) Breakout, (d) Enduro, (e) Freeway, (f) KungFuMaster (KFM), (g) Pong, and (h) Qbert.

2.3. Data Collection

After the training phase concluded for all agents, each of the five converged agents per environment was rolled out for 30 episodes. The performance, measured as the average reward over these 30 evaluation episodes, was recorded for each agent. During these rollouts, at each step t, a tuple containing the current observation ($o_t$), the received reward ($r_t$), the value estimate from the agent’s own critic ($V(o_t)$), and the action logits from the agent’s own actor ($z_t$) was collected and stored. This process generated a dataset of trajectories specific to each agent’s learned policy.

2.4. Analysis of Observation Space

The collected observation data was analyzed to characterize the extent and nature of exploration by each agent and to compare observation distributions across agents.

2.4.1. Visualization

To qualitatively assess the similarity of explored observation spaces, the high-dimensional observation data (raw pixel frames) was visualized in a 2D space using t-SNE. For each environment, observations from all five agents were combined. From each agent’s collected observations, 5000 frames were randomly subsampled. These observations were then flattened into vectors before being projected into two dimensions using t-SNE. The perplexity hyperparameter for t-SNE was set to 30, a choice informed by a trustworthiness analysis (presented in Section 3.5) to ensure high-quality embeddings. The resulting scatter plots were color-coded by agent ID to reveal patterns of overlap and separation.
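The projection step described above can be sketched as follows. This is a minimal illustration rather than the authors' code: it assumes scikit-learn's `TSNE`, and the function name `embed_observations`, the seed handling, and the input layout (one array of frames per agent) are hypothetical choices made for the example.

```python
import numpy as np
from sklearn.manifold import TSNE


def embed_observations(frames_per_agent, n_per_agent=5000, perplexity=30, seed=0):
    """Subsample frames per agent, flatten them, and embed all agents jointly in 2D."""
    rng = np.random.default_rng(seed)
    feats, agent_ids = [], []
    for agent_id, frames in enumerate(frames_per_agent):
        # Random subsample without replacement (capped by the available frames).
        n = min(n_per_agent, len(frames))
        idx = rng.choice(len(frames), size=n, replace=False)
        # Flatten each frame into a single feature vector.
        feats.append(frames[idx].reshape(n, -1).astype(np.float32))
        agent_ids.append(np.full(n, agent_id))
    X = np.concatenate(feats)
    ids = np.concatenate(agent_ids)
    # One joint embedding so that all agents share the same 2D space.
    emb = TSNE(n_components=2, perplexity=perplexity, random_state=seed).fit_transform(X)
    return emb, ids
```

The returned `ids` array can be passed as the color argument of a scatter plot to reproduce the agent-wise color coding.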

2.4.2. Single-Agent Observation Dispersion

To quantify the diversity of observations encountered by each individual agent, a multi-step process was employed:

- Data Loading and Preprocessing: For each agent, its collected observations over 30 episodes were loaded, and then 5000 frames were subsampled and flattened into vectors.

- Dimensionality Reduction and Denoising: Principal Component Analysis (PCA) was applied to reduce the dimensionality of the flattened observations to 50 dimensions. This step serves to denoise and compress the data, focusing on the directions of highest variance, which can lead to more robust and meaningful clustering in the subsequent step. Working with high-dimensional raw pixel data directly for clustering can be computationally expensive and sensitive to noise; PCA mitigates these issues by capturing the most salient features.

- State Space Discretization via Clustering: The PCA-transformed features from all agents within an environment were pooled together. K-means clustering was then performed on this pooled set of features to define a common set of discrete state categories. To assess the sensitivity of our metrics to the granularity of discretization, we performed this step with K values of 50, 100, and 200. The results for K = 100 are presented in the main analysis, with K = 50 and K = 200 used for comparison.

- Occupancy Histogram Generation: For each agent, an occupancy histogram (probability distribution, $p$) over the K clusters was computed.

- Dispersion Metrics Calculation: Based on this probability distribution $p(c)$ (where c is a cluster index), the following metrics were calculated:

- Entropy (H(p)): Measures the uncertainty or diversity of the visited clusters. Higher entropy indicates that an agent visits a wider range of distinct state categories more uniformly.

- Effective Support Size ($N_{\mathrm{eff}} = e^{H(p)}$): Estimates the number of effectively visited clusters, providing an interpretable scale for diversity.

- Coverage Ratio (Cov): The proportion of the K-defined clusters that were visited at least once.

- Gini–Simpson Index (G): Measures diversity, with values closer to 1 indicating higher diversity (i.e., probabilities are spread out more evenly across many clusters).
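The four dispersion metrics can be computed from cluster labels as in the sketch below. It assumes the labels come from K-means on the pooled PCA features, and it uses the standard definitions (natural-log Shannon entropy, its exponential as effective support size, and one minus the sum of squared probabilities for Gini–Simpson). The function names are illustrative, not taken from the paper.

```python
import numpy as np


def occupancy_histogram(labels, n_clusters):
    """Normalized count of how often each of the K clusters was visited."""
    counts = np.bincount(labels, minlength=n_clusters).astype(float)
    return counts / counts.sum()


def dispersion_metrics(p):
    """Entropy, effective support size, coverage ratio, and Gini-Simpson index."""
    nz = p[p > 0]  # skip empty clusters to avoid log(0)
    entropy = float(-np.sum(nz * np.log(nz)))
    return {
        "entropy": entropy,
        "effective_support": float(np.exp(entropy)),
        "coverage": float(nz.size / p.size),
        "gini_simpson": float(1.0 - np.sum(p ** 2)),
    }
```

For an agent that visits 4 of 8 clusters uniformly, this gives an entropy of ln 4, an effective support size of 4, a coverage of 0.5, and a Gini–Simpson index of 0.75.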

2.4.3. Pairwise Observation Distribution Comparison

To quantify the similarity between the observation distributions of pairs of agents, the following metrics were computed. The cluster definitions and the individual agent occupancy histograms (e.g., $p_i$ and $p_j$) used for these pairwise comparisons were computed according to the procedure detailed in Section 2.4.2.

- Pairwise Total Variation (TV) Distance: For each pair of agents $(i, j)$, the TV distance between their cluster occupancy histograms $p_i$ and $p_j$ was calculated as $\mathrm{TV}(p_i, p_j) = \frac{1}{2} \sum_{c=1}^{K} |p_i(c) - p_j(c)|$. This ranges from 0 (identical) to 1 (disjoint).

- Pairwise Maximum Mean Discrepancy (MMD): MMD was computed between the PCA-reduced feature sets of each pair of agents using a Gaussian kernel [13]. MMD is the statistic of a two-sample test for whether two samples are drawn from the same distribution, and its magnitude provides a measure of their dissimilarity. It operates directly on the feature representations rather than relying on the explicit cluster histograms. For distributions $P$ and $Q$, $\mathrm{MMD}^2(P, Q) = \mathbb{E}_{x, x' \sim P}[k(x, x')] + \mathbb{E}_{y, y' \sim Q}[k(y, y')] - 2\,\mathbb{E}_{x \sim P,\, y \sim Q}[k(x, y)]$, where $k$ is a positive-definite kernel. We choose a Gaussian kernel $k(x, y) = \exp\left(-\|x - y\|^2 / (2\sigma^2)\right)$. The kernel bandwidth $\sigma$ is set to the median pairwise distance among all states in the combined dataset (median heuristic), which provides a reasonable scale. MMD is a distance in a reproducing kernel Hilbert space and is 0 if and only if the two distributions are identical (for characteristic kernels).
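Both pairwise metrics are short to compute. The sketch below assumes the biased (V-statistic) MMD estimate and returns the square root of the squared statistic. The paper does not state which estimator or which form (MMD or MMD squared) it reports, so both choices are assumptions, and all function names are hypothetical.

```python
import numpy as np


def tv_distance(p, q):
    """Total variation distance between two histograms over the same clusters."""
    return 0.5 * float(np.abs(np.asarray(p) - np.asarray(q)).sum())


def sq_dists(a, b):
    """Pairwise squared Euclidean distances between rows of a and rows of b."""
    d = (a ** 2).sum(1)[:, None] + (b ** 2).sum(1)[None, :] - 2.0 * a @ b.T
    return np.maximum(d, 0.0)  # clamp small negatives caused by rounding


def median_bandwidth(x, y):
    """Median heuristic: median pairwise distance over the combined sample."""
    z = np.concatenate([x, y])
    d = np.sqrt(sq_dists(z, z))
    return float(np.median(d[np.triu_indices(len(z), k=1)]))


def mmd_gaussian(x, y, sigma=None):
    """Biased MMD estimate with a Gaussian kernel exp(-||x - y||^2 / (2 sigma^2))."""
    if sigma is None:
        sigma = median_bandwidth(x, y)
    g = 1.0 / (2.0 * sigma ** 2)
    kxx = np.exp(-g * sq_dists(x, x)).mean()
    kyy = np.exp(-g * sq_dists(y, y)).mean()
    kxy = np.exp(-g * sq_dists(x, y)).mean()
    return float(np.sqrt(max(kxx + kyy - 2.0 * kxy, 0.0)))
```

Here `x` and `y` would be the PCA-reduced feature matrices of two agents, and `p` and `q` their occupancy histograms over the shared clusters.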

2.5. Analysis of Actor and Value Network Differences

To assess how the differences in explored observation spaces translate to differences in the learned actor and value networks, a cross-evaluation methodology was employed. For each pair of agents within an environment, the observations collected by agent i during its rollouts were used as input to the actor and value networks of agent j. The following metrics were calculated:

- Absolute TD-Error: The absolute TD-error was computed over agent i’s trajectory data using agent j’s value network, measuring how well agent j’s value function generalizes to agent i’s experiences.

- Root Mean Squared Error (RMSE) of Value Estimates: For each state in agent i’s trajectory, the value estimated by agent i’s critic, $V_i(o_t)$, was compared to the value predicted by agent j’s critic, $V_j(o_t)$. This directly measures how much the outputs of the two value functions differ on states that agent i visits.

- Kullback–Leibler Divergence (KLD) of Action Logits: For each state in agent i’s trajectory, KLD was computed between the action probability distributions (derived from the logits) produced by agent i’s actor, $\pi_i(\cdot \mid o_t)$, and agent j’s actor, $\pi_j(\cdot \mid o_t)$. This quantifies how far the two policies diverge on states that agent i visits.

These metrics were computed for all 5 × 5 agent pairings in each of the eight environments, resulting in matrices that reveal the extent of network generalization and similarity.
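The three cross-evaluation metrics can be sketched as below. Several details are assumptions, since the text does not specify them: a one-step TD error $|r_t + \gamma V_j(o_{t+1}) - V_j(o_t)|$ with $\gamma = 0.99$, bootstrapping cut at episode ends, and the KL direction $\mathrm{KL}(\pi_i \,\|\, \pi_j)$. Function and argument names are illustrative.

```python
import numpy as np


def abs_td_error(rewards, values, next_values, dones, gamma=0.99):
    """Per-step |r + gamma * V(o') - V(o)|; no bootstrapping past episode ends."""
    targets = rewards + gamma * next_values * (1.0 - dones)
    return np.abs(targets - values)


def value_rmse(values_i, values_j):
    """RMSE between two critics' estimates on the same states."""
    return float(np.sqrt(np.mean((values_i - values_j) ** 2)))


def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def policy_kl(logits_i, logits_j):
    """Per-state KL(pi_i || pi_j) computed from raw action logits."""
    lp_i, lp_j = log_softmax(logits_i), log_softmax(logits_j)
    return np.sum(np.exp(lp_i) * (lp_i - lp_j), axis=-1)
```

To fill one cell (i, j) of a cross-evaluation matrix, `values` and `next_values` would be agent j's critic outputs on agent i's observations, and `logits_j` agent j's actor outputs on the same observations. The per-step results are then averaged.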

2.6. Trained Agents with Identically Initialized Networks

To further investigate the sources of performance variability, we conducted an additional experiment. For four of the eight environments, namely Boxing and Qbert (high performance variance), Alien (medium variance), and Freeway (low variance), we trained five new agents starting from the same initial network weights. The only source of variation in these runs was the stochasticity inherent in the agent–environment interaction loop (e.g., action sampling, environment responses). This setup allows us to determine whether different weight initializations primarily drive performance divergence or whether it emerges naturally from the training process itself. These agents were then analyzed using the same data collection and analysis pipeline described above.

3. Results

3.1. Performance Variation Across Agents and Environments

As detailed in Table 1, the five PPO agents trained independently in each of the eight environments achieved markedly different final scores. The agents are consistently listed from #1 (highest score) to #5 (lowest score). The performance gap between the best and worst agents varied dramatically across environments.

Table 1.

Performance of trained agents (mean and standard deviation of rewards).

Environments such as Freeway and Pong showed high consistency. In Freeway, scores were tightly clustered between 21.27 and 22.03, a minimal difference. In contrast, other environments displayed extreme variability. In KFM, the top agent scored 37,453, while the lowest-scoring agent achieved only 3600. Breakout also showed a massive spread, from 343.6 down to 41.2. Boxing and Qbert demonstrated significant, though less extreme, variance. This wide range of performance disparities provides a strong basis for investigating the correlation with observation space divergence. Table 2 shows the mean episode lengths, which often correlate inversely with performance (e.g., in Boxing, higher-scoring agents have shorter episodes).

Table 2.

Collected data size from trained agents (mean and standard deviation of episode length).

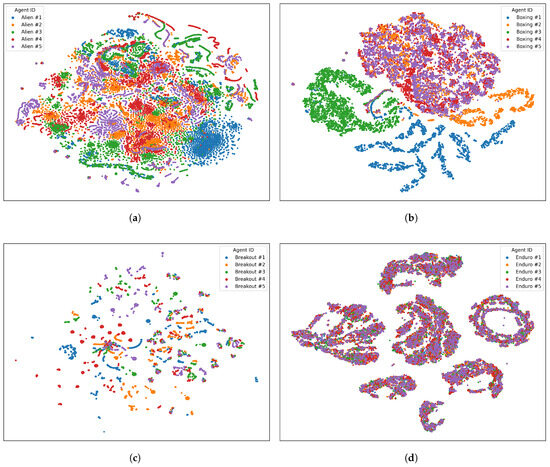

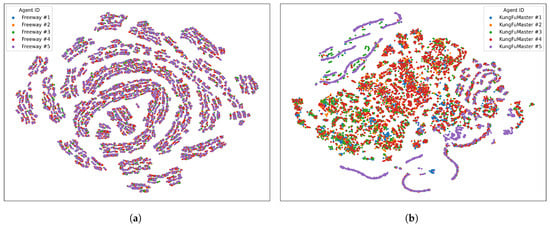

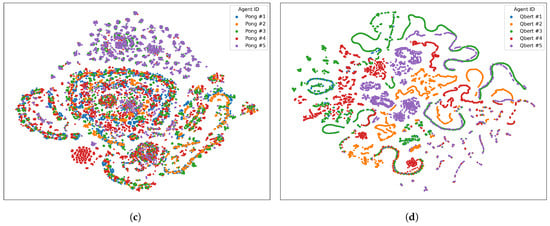

3.2. Qualitative Analysis of Observation Space via t-SNE

The t-SNE visualizations in Figure 2 and Figure 3 provide a qualitative view of the explored state manifolds, color-coded by agent. The degree of overlap and separation between agent distributions varies significantly across games, correlating strongly with performance variance.

- Low-Variance Environments (Freeway, Enduro): In Freeway, the agent observations are thoroughly intermingled, forming a dense, homogeneous cloud with ring-like structures. This indicates that all agents explore nearly identical state spaces, consistent with their minimal performance variance. Enduro shows a similar pattern of high overlap, with points from all agents mixed together within several large clusters.

- High-Variance Environments (Boxing, Qbert, KFM, Breakout): These environments show clear signs of divergent exploration. In Boxing, agents form highly distinct clusters; for example, agent #1 (blue) and agent #3 (green) occupy almost completely separate regions. In Qbert, agents trace out unique, winding paths with little overlap, suggesting highly specialized policies. In KFM, the lowest-performing agent #5 (purple) forms tight, isolated clusters, while the higher-performing agents explore a much larger, albeit still differentiated, central region. Breakout shows less distinct clustering, but agents still carve out visibly different trajectories.

- Moderate-Variance Environments (Alien, Pong): These games represent an intermediate case. Alien agents share a large central cluster but also have unique “tendrils” of exploration. Pong agents form a series of concentric rings, but some agents are more confined to inner rings while others explore the outer regions more, and some agents (e.g., #5, purple) have more diffuse distributions.

Figure 2.

Qualitative t-SNE analysis of observation space representations from five distinct agents in four Atari games: (a) Alien, (b) Boxing, (c) Breakout, and (d) Enduro.

Figure 3.

Qualitative t-SNE analysis of observation space representations from five distinct agents in four Atari games: (a) Freeway, (b) KungFuMaster, (c) Pong, and (d) Qbert.

3.3. Quantitative Analysis of Observation Space

3.3.1. Single-Agent Observation Dispersion

The dispersion metrics in Table 3 (calculated with K = 100 clusters) quantify the patterns seen in the t-SNE visualizations. In Freeway and Enduro, all five agents exhibit consistently high entropy (H), effective support size ($N_{\mathrm{eff}}$), and coverage (Cov), indicating they all explore the state space broadly and similarly. For example, all Freeway agents cover nearly 100% of the defined clusters. In high-variance games, these metrics exhibit significant differences between agents. In KFM, the lowest-performing agent (#5) has a much lower entropy (3.52) and effective support size (33.74) compared to the others (H > 4.2, $N_{\mathrm{eff}}$ > 69), quantitatively confirming its limited exploration seen in the t-SNE plot. In Boxing, there is no simple correlation between performance and dispersion (e.g., agent #3 has the lowest dispersion, while agent #1 has moderate dispersion), suggesting that the quality and uniqueness of the explored region, not just its size, are critical. In Qbert, the top three agents (which all achieved the max score) have higher dispersion than the lower-performing agents #4 and #5.

Table 3.

Single agent dispersion statistics (K = 100).

3.3.2. Pairwise Observation Distribution Comparison

The Pairwise Total Variation (TV) distance (Table 4) and Maximum Mean Discrepancy (MMD) (Table 5) confirm the qualitative findings.

Table 4.

Pairwise Total Variation (TV) distance between agent observation distributions.

Table 5.

Pairwise Maximum Mean Discrepancy (MMD) between agent observation distributions.

- Freeway and Enduro show extremely low TV distances (mostly <0.1 for Freeway, <0.15 for Enduro) and MMD values (mostly <0.015 for Freeway, <0.025 for Enduro), confirming that all agents have statistically very similar observation distributions.

- Boxing, Qbert, and KFM show very high TV distances, often approaching 1.0, indicating that many agent pairs explore almost entirely different sets of state clusters. For instance, the TV distance between Qbert #1 and Qbert #2 is 0.831. In KFM, the low-performing agent #5 has TV distances > 0.7 against all other agents. The MMD values are correspondingly high, quantitatively demonstrating the stark divergence in exploration.

3.3.3. Sensitivity to Cluster Number K

To ensure our findings were not an artifact of the chosen cluster number (K = 100), we repeated the analysis for K = 50 and K = 200. While the absolute values of the dispersion and TV distance metrics changed with K (as expected), the relative trends remained consistent. For instance, across all values of K, Freeway and Enduro agents consistently showed high, uniform dispersion and low pairwise TV distances. Conversely, Boxing and Qbert agents consistently exhibited significant differences in dispersion and high pairwise TV distances. This indicates that our core conclusion, that performance variance correlates with observation space divergence, is robust to the specific granularity of state space discretization. The complete tables for this sensitivity analysis, which confirm these trends, are provided in Appendix A.

3.4. Analysis of Actor and Value Network Differences

Cross-evaluation of the learned networks (Table 6, Table 7 and Table 8) shows that divergence in observation space corresponds to functional divergence in the actor and value networks.

Table 6.

Mean and standard deviation of absolute TD-error (value network cross-evaluation).

Table 7.

RMSE of value predictions (value network cross-evaluation).

Table 8.

Mean and standard deviation of KL divergence of action logits (actor network cross-evaluation).

- For Freeway and Enduro, the off-diagonal values in the TD-error, RMSE, and KLD tables are very low, often close to the diagonal (self-evaluation) values. This means any agent’s networks can accurately predict values and replicate policies for states experienced by any other agent, indicating functional convergence.

- For Boxing, Qbert, and KFM, the off-diagonal values are substantially higher than the diagonal values. The KLD values in Boxing are frequently above 5.0, and the RMSE values in Qbert can be in the hundreds. This signifies that the agents have learned profoundly different policies and value functions that are highly specialized to their own unique experiences and do not generalize to those of their peers.

This provides strong evidence that when agents see different things, they learn to do different things, and their internal models of the world (value functions) become incompatible.

3.5. Analysis of Methodology Choices

3.5.1. Trustworthiness of t-SNE

To validate our choice of perplexity 30 for the t-SNE visualizations, we computed the trustworthiness metric for a range of perplexity values. Trustworthiness measures how well the local structure of the original high-dimensional data is preserved in the low-dimensional embedding. As shown in Table 9, a perplexity of 30 consistently yields high trustworthiness scores across most environments, indicating a reliable visualization.
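A perplexity sweep of this kind can be sketched with scikit-learn's `trustworthiness` function. The perplexity grid and the neighborhood size `n_neighbors=5` (the library default) are placeholders here, since the values used in the study are not given in the text. The function name is hypothetical.

```python
import numpy as np
from sklearn.manifold import TSNE, trustworthiness


def trustworthiness_by_perplexity(X, perplexities=(5, 30, 50), n_neighbors=5, seed=0):
    """Embed X with t-SNE at each perplexity and score local-structure preservation."""
    scores = {}
    for perp in perplexities:
        emb = TSNE(n_components=2, perplexity=perp, random_state=seed).fit_transform(X)
        # Trustworthiness lies in [0, 1]; higher means neighbors in the
        # embedding are also neighbors in the original space.
        scores[perp] = float(trustworthiness(X, emb, n_neighbors=n_neighbors))
    return scores
```

Each perplexity must be smaller than the number of samples, so the grid should be chosen with the subsample size in mind.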

Table 9.

Trustworthiness scores of t-SNE embeddings across varying perplexity values. Columns denote different perplexity settings, and cell entries are the corresponding trustworthiness metrics, with the highest score highlighted in bold.

3.5.2. Computation Time

Our analysis pipeline is computationally efficient. As shown in Table 10, PCA and K-means clustering take only a few seconds to a minute on the subsampled data. The main bottleneck is memory; increasing the number of samples per agent beyond 5000 becomes challenging, highlighting a limitation for even more fine-grained analyses on standard hardware.

Table 10.

Mean and standard deviation of computation time (seconds) for PCA and K-means with K = 50, 100, and 200.

3.6. Impact of Identical Network Initialization

To test whether performance variability stems solely from different random initializations or from the training process itself, we ran experiments where all five agents in an environment started with the same network weights. The results show that performance variance, while sometimes reduced, remains substantial in high-variance environments (Table 11). In Boxing, scores still ranged from 100 down to 90.97. In Qbert, the gap was even larger, from 4050 down to 800. In contrast, Freeway remained highly consistent. Crucially, the observation space and network divergence metrics for these agents mirrored the results from the randomly initialized experiments. For instance, in high-variance environments like Boxing and Qbert, the single-agent dispersion statistics showed considerable spread between agents, unlike in Freeway where all agents explored consistently (Table 12). This divergence in experience led to functionally different networks; the cross-agent absolute TD-error was substantial in high-variance games but negligible in Freeway (Table 13). This pattern of divergence is also confirmed by the high pairwise dissimilarity (high TV and MMD values, see Table 14 and Table 15) and network error metrics (high RMSE and KLD values, see Table 16 and Table 17). The key finding is that the stochasticity of the agent–environment interaction loop itself is a powerful driver of divergence. Even from an identical starting point, chance exploration events can set agents on different learning pathways from which they do not recover, leading to the same pattern of specialized, non-transferable policies and significant performance gaps.

Table 11.

Performance of trained agents with identically initialized networks (mean and standard deviation of rewards).

Table 12.

Single agent dispersion statistics of trained agents with identically initialized networks.

Table 13.

Mean and standard deviation of absolute TD-error for agents with identically initialized networks (value network cross-evaluation).

Table 14.

Pairwise TV distance for agents with identically initialized networks.

Table 15.

Pairwise MMD for agents with identically initialized networks.

Table 16.

RMSE of value predictions for agents with identically initialized networks (value network cross-evaluation).

Table 17.

Mean and standard deviation of KL divergence of action logits for agents with identically initialized networks (actor network cross-evaluation).

4. Discussion

The results from our expanded study across eight Atari games provide compelling and robust evidence that divergence in explored observation spaces is a primary driver of performance variability in DRL. Our analysis indicates that the degree of this divergence is not random but is strongly tied to the intrinsic characteristics of the environment itself. The Freeway environment serves as a crucial control case. The consistent performance of Freeway agents is tightly linked to their consistent exploration patterns. All agents are guided through a similar, comprehensive set of experiences, which leads to the development of functionally equivalent policies and value functions. In stark contrast, high-variance environments like Boxing, Breakout, KFM, and Qbert reveal the consequences of divergent exploration. In these more complex settings, agents can and do find different niches within the state space. The t-SNE plots and quantitative metrics show that agents often specialize in distinct sub-regions, becoming experts in local areas while remaining naive about others. This specialization is path-dependent; early stochastic events steer an agent toward a particular trajectory, and the actor–critic learning loop reinforces this direction. An agent’s value network becomes more accurate for its frequented states, which in turn biases its policy to continue visiting them. This creates a feedback loop that amplifies initial small differences into significant chasms in both experience and capability.

4.1. Environment Characteristics and Their Impact on Divergence

The consistent patterns of divergence and stability observed across the eight Atari games can be primarily attributed to their intrinsic mechanics and objectives. By categorizing the environments, we can provide more targeted guidance for future DRL applications.

4.1.1. Low-Divergence Environments: Structured and Convergent

Environments that foster low divergence and stable performance, such as Freeway, Pong, and Enduro, often share characteristics like a clear, singular objective and a functionally narrow state space that guides agents toward a single dominant strategy.

- In Freeway, the goal is simple and monotonic: move up. There are no complex sub-tasks or branching strategic paths. This structure naturally channels all agents toward the same optimal behavior, leading to highly overlapping observation spaces and consistent performance.

- Pong is a purely reactive game where the optimal policy is to mirror the ball’s vertical movement. The simplicity and deterministic nature of this strategy mean there is little room for meaningful strategic variation to emerge.

- Enduro, while more complex visually, is also driven by a primary objective of continuous forward progress and overtaking. The core gameplay loop does not contain significant strategic “bottlenecks” that could send agents down wildly different learning paths.

In these games, the path to high rewards is straightforward, causing all agent trajectories and policies to converge. For real-world problems with similar characteristics (e.g., simple process optimization), we can expect DRL training to be relatively stable and reproducible.

4.1.2. High-Divergence Environments: Strategic Bottlenecks and Divergent Policies

Conversely, environments prone to high divergence, such as Qbert, Boxing, KFM, and Breakout, often feature strategic bottlenecks, multiple viable strategies, or complex state dependencies that amplify the effects of stochasticity.

- Boxing is a highly interactive, opponent-dependent game. An agent might learn an aggressive rushing strategy, while another learns a defensive, counter-punching style. These are two distinct but viable approaches that lead to entirely different patterns of interaction, creating separate clusters in the observation space and varied performance outcomes.

- Qbert and KFM contain significant exploration challenges and “bottlenecks.” In KFM, an agent that fails to learn how to defeat a specific enemy type will be trapped in early-level states, while an agent that succeeds will unlock a vast new region of the observation space. This creates a sharp bifurcation in experience and performance. Similarly, the unique board structure in Qbert presents many locally optimal paths, causing agents to specialize in different sections of the pyramid.

- Breakout is a classic example of an environment with a critical strategic bottleneck: learning to tunnel the ball behind the brick wall. Agents that discover this strategy enter a new, high-scoring phase of the game with a completely different set of observations. Agents that fail to discover it remain trapped in a low-scoring, repetitive gameplay loop, leading to extreme performance variance.

4.2. Potential Applications of Observation Space Divergence Analysis

The observation space divergence analysis presented in this study does more than explain performance variability in DRL; it can also be used to improve evaluation performance in practical applications. Specifically, the findings of this research can be applied to ensemble methods, a widely used technique for surpassing the performance of single agents [14], from two perspectives.

First, the analysis can be used to improve evaluation performance via ensemble methods. When ensembling N agents to boost performance, conventional techniques combine their outputs by majority voting, simple averaging, or weighted sums based on rewards. An alternative is a weighted sum in which each agent's weight is based on the similarity between the representation of the current observation and that agent's observation space representation. This method would give priority to the actions of agents whose experiences are most similar to the current situation, potentially yielding better-informed action selection and higher performance.
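The similarity-weighted ensemble described above could be sketched as follows. This is a minimal illustration under stated assumptions, not the method evaluated in this study: each agent's observation space is summarized by a single centroid in an embedding space, similarity is a softmax over negative squared Euclidean distances, and the names `similarity_weights`, `ensemble_action`, and `temperature` are hypothetical.

```python
import numpy as np

def similarity_weights(obs_embedding, agent_centroids, temperature=1.0):
    """Softmax over negative squared distances to each agent's centroid (assumed similarity)."""
    diffs = agent_centroids - obs_embedding      # shape (N, D)
    sq_dist = np.sum(diffs ** 2, axis=1)         # shape (N,)
    logits = -sq_dist / temperature
    logits -= logits.max()                       # numerical stability
    w = np.exp(logits)
    return w / w.sum()

def ensemble_action(obs_embedding, agent_centroids, agent_action_probs, temperature=1.0):
    """Mix per-agent action distributions using similarity weights, then act greedily."""
    w = similarity_weights(obs_embedding, agent_centroids, temperature)
    mixed = w @ agent_action_probs               # shape (A,)
    return int(np.argmax(mixed)), mixed

# Toy example: three agents, 2-D embeddings, three discrete actions (all values made up).
centroids = np.array([[0.0, 0.0], [5.0, 5.0], [-5.0, 5.0]])
probs = np.array([
    [0.8, 0.1, 0.1],   # agent 0 prefers action 0
    [0.1, 0.7, 0.2],   # agent 1 prefers action 1
    [0.2, 0.2, 0.6],   # agent 2 prefers action 2
])
obs = np.array([4.5, 5.2])                       # closest to agent 1's centroid
action, mixed = ensemble_action(obs, centroids, probs)
print(action, mixed)
```

In practice, a single centroid is a coarse summary; a cluster histogram or a kernel density over each agent's visited observations would be a natural refinement of the same idea.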

Second, the analysis helps explain why ensemble effectiveness varies across environments. Our findings provide insight into predicting whether an ensemble will be effective in a given environment. For instance, in an environment like Freeway, where all agents explore a highly similar observation space, we can predict that the benefit of ensembling will be minimal because the individual agent policies are already convergent. In contrast, in an environment like Boxing, where agents learn distinct strategies (e.g., aggressive vs. defensive) resulting in clearly separated observation spaces, we can anticipate a significant performance boost from ensembling, as combining their different specializations would be highly complementary. This provides a practical guideline for determining where to focus limited computational resources when constructing ensembles.
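As a rough illustration of this guideline, the mean pairwise TV distance per environment can serve as a simple divergence score. The sketch below uses the Freeway and Boxing values reported in Table A3 (K = 50); the helper name `mean_pairwise` is hypothetical, and using this score as a predictor of ensemble benefit is an assumption rather than a validated rule.

```python
import numpy as np

# Pairwise TV distances copied from Table A3 (K = 50).
freeway_tv = np.array([
    [0.000, 0.051, 0.047, 0.051, 0.050],
    [0.051, 0.000, 0.049, 0.050, 0.051],
    [0.047, 0.049, 0.000, 0.047, 0.046],
    [0.051, 0.050, 0.047, 0.000, 0.052],
    [0.050, 0.051, 0.046, 0.052, 0.000],
])
boxing_tv = np.array([
    [0.000, 0.959, 0.913, 0.979, 0.985],
    [0.959, 0.000, 0.967, 0.502, 0.457],
    [0.913, 0.967, 0.000, 0.978, 0.984],
    [0.979, 0.502, 0.978, 0.000, 0.148],
    [0.985, 0.457, 0.984, 0.148, 0.000],
])

def mean_pairwise(dist):
    """Average of the strictly upper-triangular entries of a symmetric distance matrix."""
    iu = np.triu_indices_from(dist, k=1)
    return float(dist[iu].mean())

scores = {"Freeway": mean_pairwise(freeway_tv), "Boxing": mean_pairwise(boxing_tv)}
# Environments with a higher score are candidates where ensembling may pay off more.
for env, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{env}: mean pairwise TV = {score:.4f}")
```

Under this score, Boxing ranks far above Freeway, which matches the qualitative expectation stated above.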

4.3. General Implications and Study Limitations

The most significant finding comes from the identical initialization experiment. The persistence of high performance variance and observation space divergence in games like Boxing and Qbert, even when starting from the same network weights, demonstrates that the problem is not merely one of poor initialization. The stochastic nature of the RL interaction process itself is sufficient to drive agents onto divergent paths. This suggests that achieving reproducible performance in complex environments requires more than just fixing random seeds; it may require fundamentally new approaches to guide exploration or to make learning more robust to variations in experience.
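The mechanism can be illustrated with a deliberately small toy problem. The sketch below is not the PPO/Atari setup used in this study: it assumes a three-armed bandit with two equally good arms, a softmax policy trained by a plain REINFORCE update, and hypothetical names (`train_bandit`, `arm_means`). Both runs start from the same parameters and differ only in the random seed that drives action sampling and reward noise.

```python
import numpy as np

def train_bandit(theta0, seed, steps=200, lr=0.5):
    """REINFORCE on a 3-armed bandit; only the interaction randomness depends on the seed."""
    rng = np.random.default_rng(seed)
    theta = theta0.copy()                        # identical initialization across runs
    arm_means = np.array([1.0, 1.0, 0.2])        # two equally good arms, one poor arm
    for _ in range(steps):
        probs = np.exp(theta - theta.max())
        probs /= probs.sum()
        a = rng.choice(len(theta), p=probs)      # stochastic action selection
        r = arm_means[a] + 0.1 * rng.standard_normal()  # noisy reward
        grad = -probs                            # gradient of log pi(a) w.r.t. theta
        grad[a] += 1.0
        theta += lr * r * grad
    probs = np.exp(theta - theta.max())
    return probs / probs.sum()

theta0 = np.zeros(3)                             # shared initial parameters
policy_a = train_bandit(theta0, seed=0)
policy_b = train_bandit(theta0, seed=1)
print("run A policy:", np.round(policy_a, 3))
print("run B policy:", np.round(policy_b, 3))
```

Because the two good arms are interchangeable, early sampling noise can push each run toward a different arm, a small-scale analogue of agents specializing in different strategies despite identical starting weights.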

Our comprehensive set of metrics, from t-SNE visualizations to quantitative measures of dispersion (entropy, TV distance, MMD) and network function (TD-error, RMSE, KLD), presents a unified narrative. When agents see different things (high TV/MMD), their understanding of the world diverges (high value RMSE), and their resulting behaviors diverge (high policy KLD). This work highlights that reporting only the mean and standard deviation of final scores can obscure the rich and varied behaviors that contribute to those scores. A deeper analysis of the underlying state visitation distributions is crucial for a comprehensive understanding of DRL algorithm behavior.
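For readers who want to reproduce metrics of this kind, the sketch below shows one plausible way to compute entropy and TV distance from cluster assignments, and a mean KL divergence between two policies on shared observations. The function names are hypothetical, natural logarithms are assumed, and the exact definitions used in this study may differ. The G values tabulated in Appendix A appear numerically consistent with exp(H), so the example also prints that quantity; this reading of G is an inference, not a definition taken from the text.

```python
import numpy as np

def cluster_histogram(labels, k):
    """Empirical distribution over K clusters from integer cluster labels."""
    counts = np.bincount(labels, minlength=k).astype(float)
    return counts / counts.sum()

def entropy(p):
    """Shannon entropy in nats, ignoring empty clusters."""
    nz = p[p > 0]
    return float(-np.sum(nz * np.log(nz)))

def tv_distance(p, q):
    """Total variation distance between two discrete distributions."""
    return 0.5 * float(np.abs(p - q).sum())

def mean_policy_kl(probs_p, probs_q, eps=1e-12):
    """Mean KL(p || q) over a batch of action distributions, shape (B, A)."""
    p = np.clip(probs_p, eps, 1.0)
    q = np.clip(probs_q, eps, 1.0)
    return float(np.mean(np.sum(p * (np.log(p) - np.log(q)), axis=1)))

# Toy example: two agents that visit disjoint clusters (labels are made up).
labels_a = np.array([0, 0, 1, 1])
labels_b = np.array([2, 2, 3, 3])
p = cluster_histogram(labels_a, k=4)
q = cluster_histogram(labels_b, k=4)
print("H(p) =", entropy(p), " exp(H) =", np.exp(entropy(p)))
print("TV(p, q) =", tv_distance(p, q))

pi_a = np.array([[0.5, 0.5]])
pi_b = np.array([[0.9, 0.1]])
print("KL(pi_a || pi_b) =", mean_policy_kl(pi_a, pi_b))
```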

We acknowledge that the empirical results of our study focus on the PPO algorithm within the Atari domain. This deliberate choice of scope enabled a deep and multifaceted analysis of the divergence phenomenon. However, it is reasonable to question how these findings generalize to other algorithms and environments. We hypothesize that the core mechanism—stochastic agent–environment interactions leading to divergent experiential trajectories and specialized policies—is a fundamental aspect of the reinforcement learning process and is not unique to PPO. For instance, off-policy algorithms like DQN or SAC, which utilize a replay buffer, might exhibit different dynamics. A replay buffer could mitigate divergence by averaging experiences across different trajectories. Conversely, it could also exacerbate the issue if certain types of “lucky” trajectories become overrepresented early in training. Similarly, extending this analysis to domains with continuous action spaces, such as robotics tasks in MuJoCo, or environments with sparser rewards, represents an important next step. Exploring how the structure of the state space and the nature of the reward function influence the degree of observation divergence is a compelling avenue for future research.

5. Conclusions and Future Work

This paper investigated the role of observation space divergence as a contributing factor to performance variability in deep reinforcement learning. Through a series of experiments on PPO agents across eight Atari environments, we demonstrated a strong link between the similarity of states explored by different agents, the functional similarity of their learned networks, and the consistency of their final performance. The key findings include the following:

- We expanded the analysis to eight Atari games, confirming that environments with low variance in performance (e.g., Freeway, Enduro) exhibit highly similar state space exploration across agents. In contrast, high-variance environments (e.g., Boxing, KFM, Qbert) show significant divergence.

- Cross-evaluation of actor and value networks confirmed that agents with divergent observation distributions learn functionally different networks that do not generalize well to each other’s experiences.

- A new experiment with identically initialized networks revealed that performance variability and observation space divergence persist even without different initial weights, highlighting that stochasticity in the agent–environment interaction is a primary source of this divergence.

- Our analysis was shown to be robust to the choice of the number of clusters (K) used for state discretization, and the computational cost of the analysis pipeline was found to be modest.

This work highlights the importance of examining not only the performance levels achieved but also how agents attain them. Building on this foundational analysis in PPO and Atari, future research should extend this investigation in several key directions:

- Temporal and Causal Analysis: Study the evolution of observation space differences during the training process. Analyzing the temporal relationship between when divergence occurs and when agent performance begins to vary would help establish a more direct causal link and better explain the dynamic nature of performance fluctuations.

- Systematic Component Analysis: Conduct a comparative analysis of how different DRL components influence exploration behavior and performance variability. This includes systematically varying hyperparameters, network architectures, and regularization techniques to understand their impact on the observation space distribution of trained agents.

- Broadening Algorithmic and Environmental Scope: Apply this analysis pipeline to a broader range of algorithms, including other on-policy variants and prominent off-policy algorithms (e.g., SAC, DQN), to determine how mechanisms like replay buffers affect divergence. Furthermore, expanding the study to other domains, such as continuous control benchmarks or environments with sparse rewards, is crucial to test the generality of our findings.

- Developing Mitigation Strategies: Based on the insights from a component-level analysis, develop and benchmark novel exploration or regularization methods specifically designed to reduce undesirable trajectory divergence, promote more consistent learning outcomes, and directly mitigate DRL performance variability.

- Theoretical Foundations: Work towards proposing a theoretical framework that can interpret these diverse experimental results, providing a more formal understanding of the relationship between stochastic exploration, state space coverage, and performance instability in DRL.

Author Contributions

Conceptualization, S.J. and A.L.; methodology, S.J.; software, S.J.; validation, S.J. and A.L.; formal analysis, S.J.; investigation, S.J.; resources, S.J.; data curation, S.J.; writing—original draft preparation, S.J.; writing—review and editing, S.J. and A.L.; visualization, S.J.; supervision, A.L.; project administration, A.L.; funding acquisition, S.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the research fund of Hanbat National University in 2023.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on reasonable request to the corresponding author.

Acknowledgments

During the preparation of this manuscript, the authors used ChatGPT and Gemini for the purposes of formatting and English grammar correction. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1. Single agent dispersion statistics (K = 50).

| Agent | H | G | | |
|---|---|---|---|---|
| Alien #1 | 2.82 | 16.70 | 0.64 | 0.90 |
| Alien #2 | 3.23 | 25.23 | 0.78 | 0.95 |
| Alien #3 | 3.18 | 24.05 | 0.86 | 0.94 |
| Alien #4 | 2.87 | 17.67 | 0.66 | 0.91 |
| Alien #5 | 2.92 | 18.51 | 0.64 | 0.92 |
| Boxing #1 | 2.61 | 13.62 | 0.48 | 0.91 |
| Boxing #2 | 3.17 | 23.82 | 0.74 | 0.95 |
| Boxing #3 | 2.31 | 10.08 | 0.32 | 0.90 |
| Boxing #4 | 3.06 | 21.29 | 0.66 | 0.95 |
| Boxing #5 | 3.09 | 22.02 | 0.60 | 0.95 |
| Breakout #1 | 2.61 | 13.61 | 0.56 | 0.87 |
| Breakout #2 | 2.56 | 12.88 | 0.64 | 0.86 |
| Breakout #3 | 2.55 | 12.86 | 0.56 | 0.87 |
| Breakout #4 | 2.63 | 13.92 | 0.62 | 0.88 |
| Breakout #5 | 2.55 | 12.80 | 0.52 | 0.88 |
| Enduro #1 | 3.71 | 41.00 | 0.96 | 0.97 |
| Enduro #2 | 3.70 | 40.26 | 1.00 | 0.97 |
| Enduro #3 | 3.72 | 41.25 | 1.00 | 0.97 |
| Enduro #4 | 3.74 | 41.97 | 0.98 | 0.97 |
| Enduro #5 | 3.71 | 40.77 | 1.00 | 0.97 |
| Freeway #1 | 3.84 | 46.69 | 1.00 | 0.98 |
| Freeway #2 | 3.84 | 46.33 | 1.00 | 0.98 |
| Freeway #3 | 3.84 | 46.56 | 1.00 | 0.98 |
| Freeway #4 | 3.84 | 46.61 | 1.00 | 0.98 |
| Freeway #5 | 3.83 | 46.25 | 1.00 | 0.98 |
| KFM #1 | 3.51 | 33.50 | 1.00 | 0.96 |
| KFM #2 | 3.42 | 30.62 | 0.92 | 0.96 |
| KFM #3 | 3.61 | 36.88 | 0.98 | 0.97 |
| KFM #4 | 3.42 | 30.68 | 0.96 | 0.96 |
| KFM #5 | 3.05 | 21.16 | 0.64 | 0.94 |
| Pong #1 | 3.58 | 35.95 | 0.82 | 0.97 |
| Pong #2 | 3.60 | 36.46 | 0.82 | 0.97 |
| Pong #3 | 3.79 | 44.34 | 1.00 | 0.98 |
| Pong #4 | 3.60 | 36.62 | 0.94 | 0.97 |
| Pong #5 | 3.00 | 20.07 | 0.98 | 0.93 |
| Qbert #1 | 2.93 | 18.77 | 0.64 | 0.92 |
| Qbert #2 | 2.96 | 19.21 | 0.60 | 0.93 |
| Qbert #3 | 2.96 | 19.36 | 0.64 | 0.93 |
| Qbert #4 | 2.76 | 15.73 | 0.60 | 0.92 |
| Qbert #5 | 2.28 | 9.78 | 0.60 | 0.85 |

Table A2. Single agent dispersion statistics (K = 200).

| Agent | H | G | | |
|---|---|---|---|---|
| Alien #1 | 3.94 | 51.66 | 0.43 | 0.97 |
| Alien #2 | 4.34 | 76.73 | 0.70 | 0.98 |
| Alien #3 | 4.32 | 75.09 | 0.66 | 0.98 |
| Alien #4 | 3.98 | 53.56 | 0.57 | 0.97 |
| Alien #5 | 4.25 | 69.93 | 0.50 | 0.98 |
| Boxing #1 | 3.60 | 36.72 | 0.29 | 0.97 |
| Boxing #2 | 4.37 | 79.35 | 0.64 | 0.98 |
| Boxing #3 | 3.44 | 31.29 | 0.21 | 0.97 |
| Boxing #4 | 4.50 | 89.92 | 0.63 | 0.99 |
| Boxing #5 | 4.63 | 102.24 | 0.61 | 0.99 |
| Breakout #1 | 3.12 | 22.61 | 0.39 | 0.88 |
| Breakout #2 | 3.02 | 20.47 | 0.37 | 0.87 |
| Breakout #3 | 2.93 | 18.70 | 0.32 | 0.88 |
| Breakout #4 | 3.45 | 31.55 | 0.40 | 0.93 |
| Breakout #5 | 2.98 | 19.70 | 0.31 | 0.89 |
| Enduro #1 | 5.13 | 169.43 | 0.99 | 0.99 |
| Enduro #2 | 5.11 | 165.93 | 0.99 | 0.99 |
| Enduro #3 | 5.12 | 167.66 | 0.99 | 0.99 |
| Enduro #4 | 5.15 | 172.38 | 0.99 | 0.99 |
| Enduro #5 | 5.14 | 170.26 | 0.99 | 0.99 |
| Freeway #1 | 5.24 | 187.79 | 1.00 | 0.99 |
| Freeway #2 | 5.23 | 186.22 | 1.00 | 0.99 |
| Freeway #3 | 5.23 | 186.07 | 1.00 | 0.99 |
| Freeway #4 | 5.23 | 187.14 | 1.00 | 0.99 |
| Freeway #5 | 5.23 | 187.21 | 1.00 | 0.99 |
| KFM #1 | 4.91 | 135.12 | 0.93 | 0.99 |
| KFM #2 | 4.94 | 139.90 | 0.91 | 0.99 |
| KFM #3 | 4.96 | 142.28 | 0.94 | 0.99 |
| KFM #4 | 4.92 | 137.49 | 0.91 | 0.99 |
| KFM #5 | 4.01 | 55.20 | 0.42 | 0.98 |
| Pong #1 | 4.81 | 122.46 | 0.72 | 0.99 |
| Pong #2 | 4.69 | 108.97 | 0.66 | 0.99 |
| Pong #3 | 5.08 | 160.98 | 0.97 | 0.99 |
| Pong #4 | 4.81 | 122.53 | 0.85 | 0.99 |
| Pong #5 | 4.51 | 90.51 | 0.83 | 0.99 |
| Qbert #1 | 4.12 | 61.31 | 0.51 | 0.97 |
| Qbert #2 | 3.72 | 41.29 | 0.35 | 0.96 |
| Qbert #3 | 4.10 | 60.58 | 0.51 | 0.97 |
| Qbert #4 | 3.59 | 36.13 | 0.42 | 0.95 |
| Qbert #5 | 3.60 | 36.64 | 0.42 | 0.96 |

Table A3. Pairwise Total Variation (TV) distance between agent observation distributions (K = 50).

| | Alien #1 | Alien #2 | Alien #3 | Alien #4 | Alien #5 |
|---|---|---|---|---|---|
| Alien #1 | 0.000 | 0.825 | 0.527 | 0.818 | 0.835 |
| Alien #2 | 0.825 | 0.000 | 0.679 | 0.600 | 0.361 |
| Alien #3 | 0.527 | 0.679 | 0.000 | 0.707 | 0.748 |
| Alien #4 | 0.818 | 0.600 | 0.707 | 0.000 | 0.618 |
| Alien #5 | 0.835 | 0.361 | 0.748 | 0.618 | 0.000 |

| | Boxing #1 | Boxing #2 | Boxing #3 | Boxing #4 | Boxing #5 |
|---|---|---|---|---|---|
| Boxing #1 | 0.000 | 0.959 | 0.913 | 0.979 | 0.985 |
| Boxing #2 | 0.959 | 0.000 | 0.967 | 0.502 | 0.457 |
| Boxing #3 | 0.913 | 0.967 | 0.000 | 0.978 | 0.984 |
| Boxing #4 | 0.979 | 0.502 | 0.978 | 0.000 | 0.148 |
| Boxing #5 | 0.985 | 0.457 | 0.984 | 0.148 | 0.000 |

| | Breakout #1 | Breakout #2 | Breakout #3 | Breakout #4 | Breakout #5 |
|---|---|---|---|---|---|
| Breakout #1 | 0.000 | 0.724 | 0.777 | 0.780 | 0.815 |
| Breakout #2 | 0.724 | 0.000 | 0.812 | 0.740 | 0.832 |
| Breakout #3 | 0.777 | 0.812 | 0.000 | 0.717 | 0.687 |
| Breakout #4 | 0.780 | 0.740 | 0.717 | 0.000 | 0.711 |
| Breakout #5 | 0.815 | 0.832 | 0.687 | 0.711 | 0.000 |

| | Enduro #1 | Enduro #2 | Enduro #3 | Enduro #4 | Enduro #5 |
|---|---|---|---|---|---|
| Enduro #1 | 0.000 | 0.125 | 0.079 | 0.104 | 0.088 |
| Enduro #2 | 0.125 | 0.000 | 0.116 | 0.120 | 0.108 |
| Enduro #3 | 0.079 | 0.116 | 0.000 | 0.103 | 0.079 |
| Enduro #4 | 0.104 | 0.120 | 0.103 | 0.000 | 0.098 |
| Enduro #5 | 0.088 | 0.108 | 0.079 | 0.098 | 0.000 |

| | Freeway #1 | Freeway #2 | Freeway #3 | Freeway #4 | Freeway #5 |
|---|---|---|---|---|---|
| Freeway #1 | 0.000 | 0.051 | 0.047 | 0.051 | 0.050 |
| Freeway #2 | 0.051 | 0.000 | 0.049 | 0.050 | 0.051 |
| Freeway #3 | 0.047 | 0.049 | 0.000 | 0.047 | 0.046 |
| Freeway #4 | 0.051 | 0.050 | 0.047 | 0.000 | 0.052 |
| Freeway #5 | 0.050 | 0.051 | 0.046 | 0.052 | 0.000 |

| | KFM #1 | KFM #2 | KFM #3 | KFM #4 | KFM #5 |
|---|---|---|---|---|---|
| KFM #1 | 0.000 | 0.171 | 0.183 | 0.180 | 0.748 |
| KFM #2 | 0.171 | 0.000 | 0.198 | 0.155 | 0.791 |
| KFM #3 | 0.183 | 0.198 | 0.000 | 0.219 | 0.686 |
| KFM #4 | 0.180 | 0.155 | 0.219 | 0.000 | 0.773 |
| KFM #5 | 0.748 | 0.791 | 0.686 | 0.773 | 0.000 |

| | Pong #1 | Pong #2 | Pong #3 | Pong #4 | Pong #5 |
|---|---|---|---|---|---|
| Pong #1 | 0.000 | 0.074 | 0.214 | 0.216 | 0.766 |
| Pong #2 | 0.074 | 0.000 | 0.198 | 0.213 | 0.766 |
| Pong #3 | 0.214 | 0.198 | 0.000 | 0.245 | 0.574 |
| Pong #4 | 0.216 | 0.213 | 0.245 | 0.000 | 0.740 |
| Pong #5 | 0.766 | 0.766 | 0.574 | 0.740 | 0.000 |

| | Qbert #1 | Qbert #2 | Qbert #3 | Qbert #4 | Qbert #5 |
|---|---|---|---|---|---|
| Qbert #1 | 0.000 | 0.742 | 0.032 | 0.599 | 0.737 |
| Qbert #2 | 0.742 | 0.000 | 0.739 | 0.839 | 0.792 |
| Qbert #3 | 0.032 | 0.739 | 0.000 | 0.589 | 0.733 |
| Qbert #4 | 0.599 | 0.839 | 0.589 | 0.000 | 0.833 |
| Qbert #5 | 0.737 | 0.792 | 0.733 | 0.833 | 0.000 |

Table A4. Pairwise Total Variation (TV) distance between agent observation distributions (K = 200).

| | Alien #1 | Alien #2 | Alien #3 | Alien #4 | Alien #5 |
|---|---|---|---|---|---|
| Alien #1 | 0.000 | 0.883 | 0.573 | 0.898 | 0.909 |
| Alien #2 | 0.883 | 0.000 | 0.787 | 0.724 | 0.579 |
| Alien #3 | 0.573 | 0.787 | 0.000 | 0.805 | 0.856 |
| Alien #4 | 0.898 | 0.724 | 0.805 | 0.000 | 0.752 |
| Alien #5 | 0.909 | 0.579 | 0.856 | 0.752 | 0.000 |

| | Boxing #1 | Boxing #2 | Boxing #3 | Boxing #4 | Boxing #5 |
|---|---|---|---|---|---|
| Boxing #1 | 0.000 | 0.970 | 0.939 | 0.981 | 0.984 |
| Boxing #2 | 0.970 | 0.000 | 0.974 | 0.757 | 0.637 |
| Boxing #3 | 0.939 | 0.974 | 0.000 | 0.980 | 0.984 |
| Boxing #4 | 0.981 | 0.757 | 0.980 | 0.000 | 0.323 |
| Boxing #5 | 0.984 | 0.637 | 0.984 | 0.323 | 0.000 |

| | Breakout #1 | Breakout #2 | Breakout #3 | Breakout #4 | Breakout #5 |
|---|---|---|---|---|---|
| Breakout #1 | 0.000 | 0.870 | 0.905 | 0.885 | 0.916 |
| Breakout #2 | 0.870 | 0.000 | 0.927 | 0.891 | 0.935 |
| Breakout #3 | 0.905 | 0.927 | 0.000 | 0.847 | 0.817 |
| Breakout #4 | 0.885 | 0.891 | 0.847 | 0.000 | 0.848 |
| Breakout #5 | 0.916 | 0.935 | 0.817 | 0.848 | 0.000 |

| | Enduro #1 | Enduro #2 | Enduro #3 | Enduro #4 | Enduro #5 |
|---|---|---|---|---|---|
| Enduro #1 | 0.000 | 0.193 | 0.165 | 0.166 | 0.169 |
| Enduro #2 | 0.193 | 0.000 | 0.197 | 0.190 | 0.172 |
| Enduro #3 | 0.165 | 0.197 | 0.000 | 0.179 | 0.170 |
| Enduro #4 | 0.166 | 0.190 | 0.179 | 0.000 | 0.166 |
| Enduro #5 | 0.169 | 0.172 | 0.170 | 0.166 | 0.000 |

| | Freeway #1 | Freeway #2 | Freeway #3 | Freeway #4 | Freeway #5 |
|---|---|---|---|---|---|
| Freeway #1 | 0.000 | 0.102 | 0.109 | 0.109 | 0.104 |
| Freeway #2 | 0.102 | 0.000 | 0.108 | 0.107 | 0.107 |
| Freeway #3 | 0.109 | 0.108 | 0.000 | 0.093 | 0.105 |
| Freeway #4 | 0.109 | 0.107 | 0.093 | 0.000 | 0.107 |
| Freeway #5 | 0.104 | 0.107 | 0.105 | 0.107 | 0.000 |

| | KFM #1 | KFM #2 | KFM #3 | KFM #4 | KFM #5 |
|---|---|---|---|---|---|
| KFM #1 | 0.000 | 0.278 | 0.305 | 0.289 | 0.801 |
| KFM #2 | 0.278 | 0.000 | 0.253 | 0.197 | 0.825 |
| KFM #3 | 0.305 | 0.253 | 0.000 | 0.292 | 0.720 |
| KFM #4 | 0.289 | 0.197 | 0.292 | 0.000 | 0.805 |
| KFM #5 | 0.801 | 0.825 | 0.720 | 0.805 | 0.000 |

| | Pong #1 | Pong #2 | Pong #3 | Pong #4 | Pong #5 |
|---|---|---|---|---|---|
| Pong #1 | 0.000 | 0.287 | 0.367 | 0.330 | 0.817 |
| Pong #2 | 0.287 | 0.000 | 0.375 | 0.402 | 0.836 |
| Pong #3 | 0.367 | 0.375 | 0.000 | 0.385 | 0.607 |
| Pong #4 | 0.330 | 0.402 | 0.385 | 0.000 | 0.754 |
| Pong #5 | 0.817 | 0.836 | 0.607 | 0.754 | 0.000 |

| | Qbert #1 | Qbert #2 | Qbert #3 | Qbert #4 | Qbert #5 |
|---|---|---|---|---|---|
| Qbert #1 | 0.000 | 0.853 | 0.113 | 0.800 | 0.840 |
| Qbert #2 | 0.853 | 0.000 | 0.853 | 0.906 | 0.902 |
| Qbert #3 | 0.113 | 0.853 | 0.000 | 0.823 | 0.840 |
| Qbert #4 | 0.800 | 0.906 | 0.823 | 0.000 | 0.914 |
| Qbert #5 | 0.840 | 0.902 | 0.840 | 0.914 | 0.000 |

Table A5. Pairwise Maximum Mean Discrepancy (MMD) between agent observation distributions (K = 50).

| | Alien #1 | Alien #2 | Alien #3 | Alien #4 | Alien #5 |
|---|---|---|---|---|---|
| Alien #1 | 0.000 | 0.323 | 0.205 | 0.329 | 0.354 |
| Alien #2 | 0.323 | 0.000 | 0.273 | 0.243 | 0.180 |
| Alien #3 | 0.205 | 0.273 | 0.000 | 0.285 | 0.278 |
| Alien #4 | 0.329 | 0.243 | 0.285 | 0.000 | 0.237 |
| Alien #5 | 0.354 | 0.180 | 0.278 | 0.237 | 0.000 |

| | Boxing #1 | Boxing #2 | Boxing #3 | Boxing #4 | Boxing #5 |
|---|---|---|---|---|---|
| Boxing #1 | 0.000 | 0.476 | 0.557 | 0.542 | 0.536 |
| Boxing #2 | 0.476 | 0.000 | 0.363 | 0.219 | 0.195 |
| Boxing #3 | 0.557 | 0.363 | 0.000 | 0.437 | 0.430 |
| Boxing #4 | 0.542 | 0.219 | 0.437 | 0.000 | 0.074 |
| Boxing #5 | 0.536 | 0.195 | 0.430 | 0.074 | 0.000 |

| | Breakout #1 | Breakout #2 | Breakout #3 | Breakout #4 | Breakout #5 |
|---|---|---|---|---|---|
| Breakout #1 | 0.000 | 0.288 | 0.352 | 0.295 | 0.361 |
| Breakout #2 | 0.288 | 0.000 | 0.418 | 0.332 | 0.434 |
| Breakout #3 | 0.352 | 0.418 | 0.000 | 0.220 | 0.209 |
| Breakout #4 | 0.295 | 0.332 | 0.220 | 0.000 | 0.213 |
| Breakout #5 | 0.361 | 0.434 | 0.209 | 0.213 | 0.000 |

| | Enduro #1 | Enduro #2 | Enduro #3 | Enduro #4 | Enduro #5 |
|---|---|---|---|---|---|
| Enduro #1 | 0.000 | 0.023 | 0.017 | 0.020 | 0.021 |
| Enduro #2 | 0.023 | 0.000 | 0.023 | 0.023 | 0.015 |
| Enduro #3 | 0.017 | 0.023 | 0.000 | 0.019 | 0.014 |
| Enduro #4 | 0.020 | 0.023 | 0.019 | 0.000 | 0.019 |
| Enduro #5 | 0.021 | 0.015 | 0.014 | 0.019 | 0.000 |

| | Freeway #1 | Freeway #2 | Freeway #3 | Freeway #4 | Freeway #5 |
|---|---|---|---|---|---|
| Freeway #1 | 0.000 | 0.011 | 0.013 | 0.014 | 0.013 |
| Freeway #2 | 0.011 | 0.000 | 0.012 | 0.011 | 0.011 |
| Freeway #3 | 0.013 | 0.012 | 0.000 | 0.010 | 0.013 |
| Freeway #4 | 0.014 | 0.011 | 0.010 | 0.000 | 0.012 |
| Freeway #5 | 0.013 | 0.011 | 0.013 | 0.012 | 0.000 |

| | KFM #1 | KFM #2 | KFM #3 | KFM #4 | KFM #5 |
|---|---|---|---|---|---|
| KFM #1 | 0.000 | 0.067 | 0.060 | 0.078 | 0.315 |
| KFM #2 | 0.067 | 0.000 | 0.076 | 0.078 | 0.334 |
| KFM #3 | 0.060 | 0.076 | 0.000 | 0.098 | 0.282 |
| KFM #4 | 0.078 | 0.078 | 0.098 | 0.000 | 0.317 |
| KFM #5 | 0.315 | 0.334 | 0.282 | 0.317 | 0.000 |

| | Pong #1 | Pong #2 | Pong #3 | Pong #4 | Pong #5 |
|---|---|---|---|---|---|
| Pong #1 | 0.000 | 0.113 | 0.137 | 0.114 | 0.476 |
| Pong #2 | 0.113 | 0.000 | 0.152 | 0.120 | 0.485 |
| Pong #3 | 0.137 | 0.152 | 0.000 | 0.119 | 0.358 |
| Pong #4 | 0.114 | 0.120 | 0.119 | 0.000 | 0.444 |
| Pong #5 | 0.476 | 0.485 | 0.358 | 0.444 | 0.000 |

| | Qbert #1 | Qbert #2 | Qbert #3 | Qbert #4 | Qbert #5 |
|---|---|---|---|---|---|
| Qbert #1 | 0.000 | 0.225 | 0.013 | 0.156 | 0.225 |
| Qbert #2 | 0.225 | 0.000 | 0.225 | 0.272 | 0.255 |
| Qbert #3 | 0.013 | 0.225 | 0.000 | 0.159 | 0.225 |
| Qbert #4 | 0.156 | 0.272 | 0.159 | 0.000 | 0.255 |
| Qbert #5 | 0.225 | 0.255 | 0.225 | 0.255 | 0.000 |

Table A6. Pairwise Maximum Mean Discrepancy (MMD) between agent observation distributions (K = 200).

| | Alien #1 | Alien #2 | Alien #3 | Alien #4 | Alien #5 |
|---|---|---|---|---|---|
| Alien #1 | 0.000 | 0.323 | 0.205 | 0.329 | 0.354 |
| Alien #2 | 0.323 | 0.000 | 0.273 | 0.243 | 0.180 |
| Alien #3 | 0.205 | 0.273 | 0.000 | 0.285 | 0.278 |
| Alien #4 | 0.329 | 0.243 | 0.285 | 0.000 | 0.237 |
| Alien #5 | 0.354 | 0.180 | 0.278 | 0.237 | 0.000 |

| | Boxing #1 | Boxing #2 | Boxing #3 | Boxing #4 | Boxing #5 |
|---|---|---|---|---|---|
| Boxing #1 | 0.000 | 0.476 | 0.557 | 0.542 | 0.536 |
| Boxing #2 | 0.476 | 0.000 | 0.363 | 0.219 | 0.195 |
| Boxing #3 | 0.557 | 0.363 | 0.000 | 0.437 | 0.430 |
| Boxing #4 | 0.542 | 0.219 | 0.437 | 0.000 | 0.074 |
| Boxing #5 | 0.536 | 0.195 | 0.430 | 0.074 | 0.000 |

| | Breakout #1 | Breakout #2 | Breakout #3 | Breakout #4 | Breakout #5 |
|---|---|---|---|---|---|
| Breakout #1 | 0.000 | 0.288 | 0.352 | 0.295 | 0.361 |
| Breakout #2 | 0.288 | 0.000 | 0.418 | 0.332 | 0.434 |
| Breakout #3 | 0.352 | 0.418 | 0.000 | 0.220 | 0.209 |
| Breakout #4 | 0.295 | 0.332 | 0.220 | 0.000 | 0.213 |
| Breakout #5 | 0.361 | 0.434 | 0.209 | 0.213 | 0.000 |

| | Enduro #1 | Enduro #2 | Enduro #3 | Enduro #4 | Enduro #5 |
|---|---|---|---|---|---|
| Enduro #1 | 0.000 | 0.023 | 0.017 | 0.020 | 0.021 |
| Enduro #2 | 0.023 | 0.000 | 0.023 | 0.023 | 0.015 |
| Enduro #3 | 0.017 | 0.023 | 0.000 | 0.019 | 0.014 |
| Enduro #4 | 0.020 | 0.023 | 0.019 | 0.000 | 0.019 |
| Enduro #5 | 0.021 | 0.015 | 0.014 | 0.019 | 0.000 |

| | Freeway #1 | Freeway #2 | Freeway #3 | Freeway #4 | Freeway #5 |
|---|---|---|---|---|---|
| Freeway #1 | 0.000 | 0.011 | 0.013 | 0.014 | 0.013 |
| Freeway #2 | 0.011 | 0.000 | 0.012 | 0.011 | 0.011 |
| Freeway #3 | 0.013 | 0.012 | 0.000 | 0.010 | 0.013 |
| Freeway #4 | 0.014 | 0.011 | 0.010 | 0.000 | 0.012 |
| Freeway #5 | 0.013 | 0.011 | 0.013 | 0.012 | 0.000 |

| | KFM #1 | KFM #2 | KFM #3 | KFM #4 | KFM #5 |
|---|---|---|---|---|---|
| KFM #1 | 0.000 | 0.067 | 0.060 | 0.078 | 0.315 |
| KFM #2 | 0.067 | 0.000 | 0.076 | 0.078 | 0.334 |
| KFM #3 | 0.060 | 0.076 | 0.000 | 0.098 | 0.282 |
| KFM #4 | 0.078 | 0.078 | 0.098 | 0.000 | 0.317 |
| KFM #5 | 0.315 | 0.334 | 0.282 | 0.317 | 0.000 |

| | Pong #1 | Pong #2 | Pong #3 | Pong #4 | Pong #5 |
|---|---|---|---|---|---|
| Pong #1 | 0.000 | 0.113 | 0.137 | 0.114 | 0.476 |
| Pong #2 | 0.113 | 0.000 | 0.152 | 0.120 | 0.485 |
| Pong #3 | 0.137 | 0.152 | 0.000 | 0.119 | 0.358 |
| Pong #4 | 0.114 | 0.120 | 0.119 | 0.000 | 0.444 |
| Pong #5 | 0.476 | 0.485 | 0.358 | 0.444 | 0.000 |

| | Qbert #1 | Qbert #2 | Qbert #3 | Qbert #4 | Qbert #5 |
|---|---|---|---|---|---|
| Qbert #1 | 0.000 | 0.225 | 0.013 | 0.156 | 0.225 |
| Qbert #2 | 0.225 | 0.000 | 0.225 | 0.272 | 0.255 |
| Qbert #3 | 0.013 | 0.225 | 0.000 | 0.159 | 0.225 |
| Qbert #4 | 0.156 | 0.272 | 0.159 | 0.000 | 0.255 |
| Qbert #5 | 0.225 | 0.255 | 0.225 | 0.255 | 0.000 |

References

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-Level Control through Deep Reinforcement Learning. Nature 2015, 518, 529–533.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the Game of Go with Deep Neural Networks and Tree Search. Nature 2016, 529, 484–489.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

- Henderson, P.; Islam, R.; Bachman, P.; Pineau, J.; Precup, D.; Meger, D. Deep Reinforcement Learning That Matters. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), New Orleans, LA, USA, 2–7 February 2018; pp. 3207–3214.

- Engstrom, L.; Ilyas, A.; Santurkar, S.; Tsipras, D.; Janoos, F.; Rudolph, L.; Madry, A. Implementation Matters in Deep Policy Gradients: A Case Study on PPO and TRPO. In Proceedings of the International Conference on Learning Representations (ICLR), Virtually, 26–30 April 2020.

- Bjorck, J.; Gomes, C.P.; Weinberger, K.Q. Is High Variance Unavoidable in Reinforcement Learning? A Case Study in Continuous Control. In Proceedings of the International Conference on Learning Representations (ICLR), Virtually, 25–29 April 2022.

- Jang, S.; Kim, H.I. Entropy-Aware Model Initialization for Effective Exploration in Deep Reinforcement Learning. Sensors 2022, 22, 5845.

- Moalla, S.; Miele, A.; Pyatko, D.; Pascanu, R.; Gulcehre, C. No Representation, No Trust: Connecting Representation, Collapse, and Trust Issues in PPO. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 10–15 December 2024; Volume 37, pp. 69652–69699.

- Bellemare, M.G.; Naddaf, Y.; Veness, J.; Bowling, M. The Arcade Learning Environment: An Evaluation Platform for General Agents. J. Artif. Intell. Res. 2013, 47, 253–279.

- Towers, M.; Kwiatkowski, A.; Terry, J.; Balis, J.U.; Cola, G.D.; Deleu, T.; Goulão, M.; Kallinteris, A.; Krimmel, M.; Arjun, K.G.; et al. Gymnasium: A Standard Interface for Reinforcement Learning Environments. arXiv 2024, arXiv:2407.17032.

- Raffin, A.; Hill, A.; Gleave, A.; Kanervisto, A.; Ernestus, M.; Dormann, N. Stable-Baselines3: Reliable Reinforcement Learning Implementations. J. Mach. Learn. Res. 2021, 22, 1–8.

- Raffin, A. RL Baselines3 Zoo. 2020. Available online: https://github.com/DLR-RM/rl-baselines3-zoo (accessed on 5 June 2025).

- Roy, A.; Ghosh, A.K. Some tests of independence based on maximum mean discrepancy and ranks of nearest neighbors. Stat. Probab. Lett. 2020, 164, 108793.

- Ganaie, M.; Hu, M.; Malik, A.; Tanveer, M.; Suganthan, P. Ensemble deep learning: A review. Eng. Appl. Artif. Intell. 2022, 115, 105151.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).