Enhancing Secure Software Development with AZTRM-D: An AI-Integrated Approach Combining DevSecOps, Risk Management, and Zero Trust

Abstract

1. Introduction

Organization of the Paper

2. Related Work and Foundational Concepts

2.1. Software and System Development Life Cycles

2.1.1. Waterfall Method

2.1.2. Agile Method

2.1.3. V-Model

2.1.4. Spiral Method

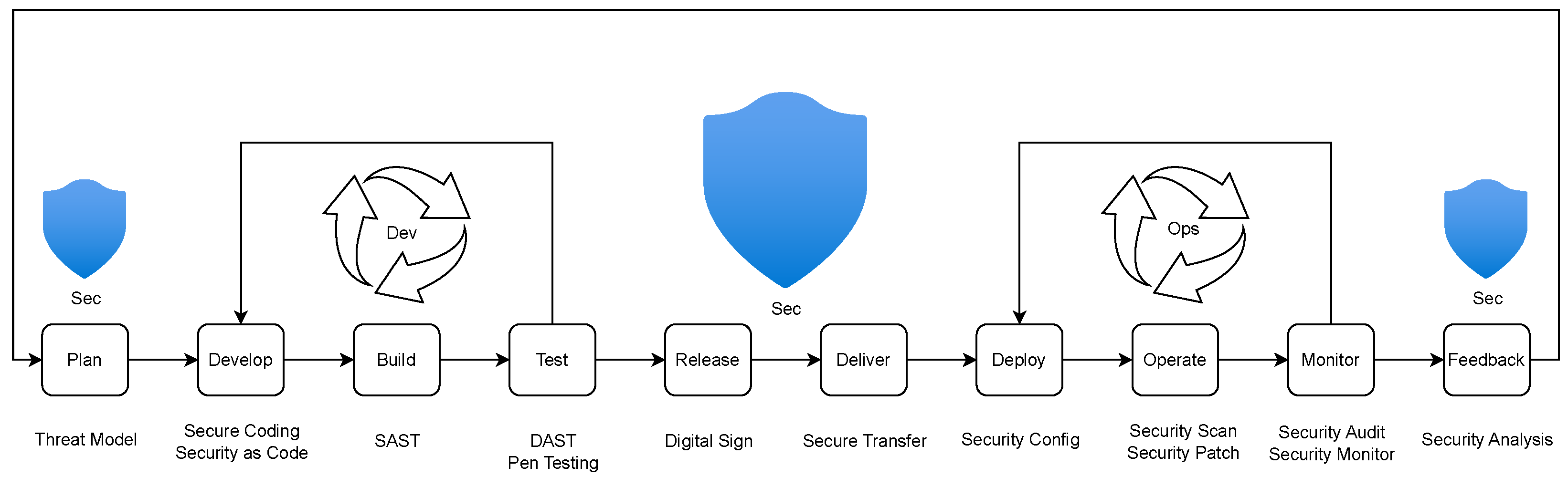

2.1.5. DevSecOps Method

2.2. Current Landscape of Secure Development Methodologies and Frameworks

2.3. The Rationale for AZTRM-D’s Integrated Approach

2.4. NIST Risk Management Framework

2.4.1. Preparation Phase

2.4.2. Categorization Phase

2.4.3. Selection Phase

2.4.4. Implementation Phase

2.4.5. Assessment Phase

2.4.6. Authorization Phase

2.4.7. Monitoring Phase

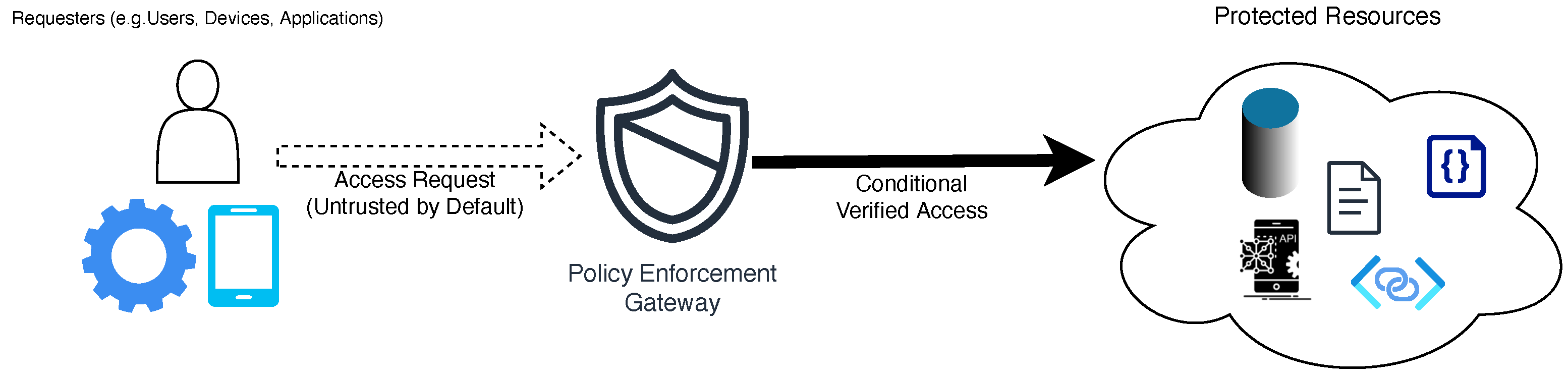

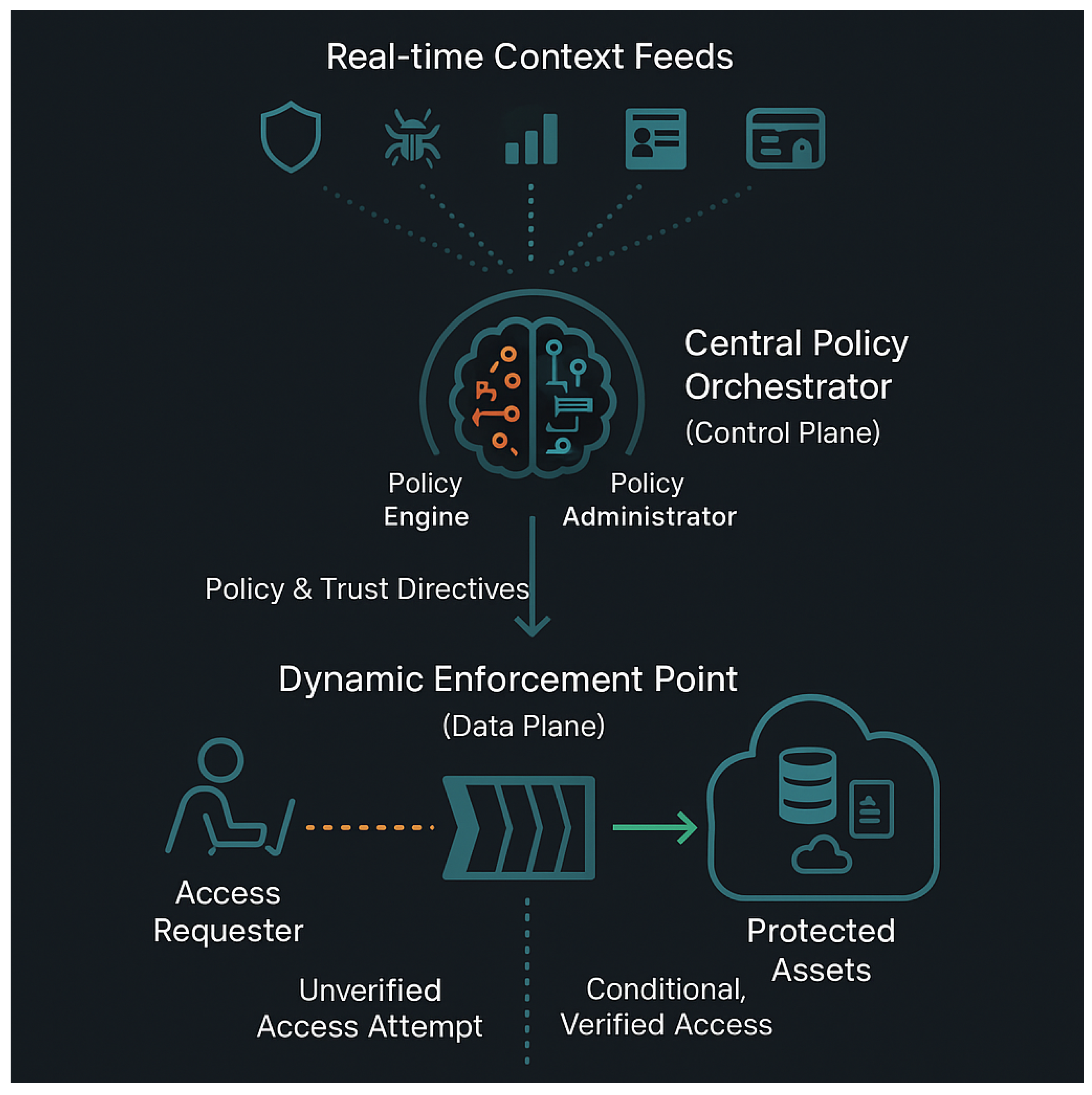

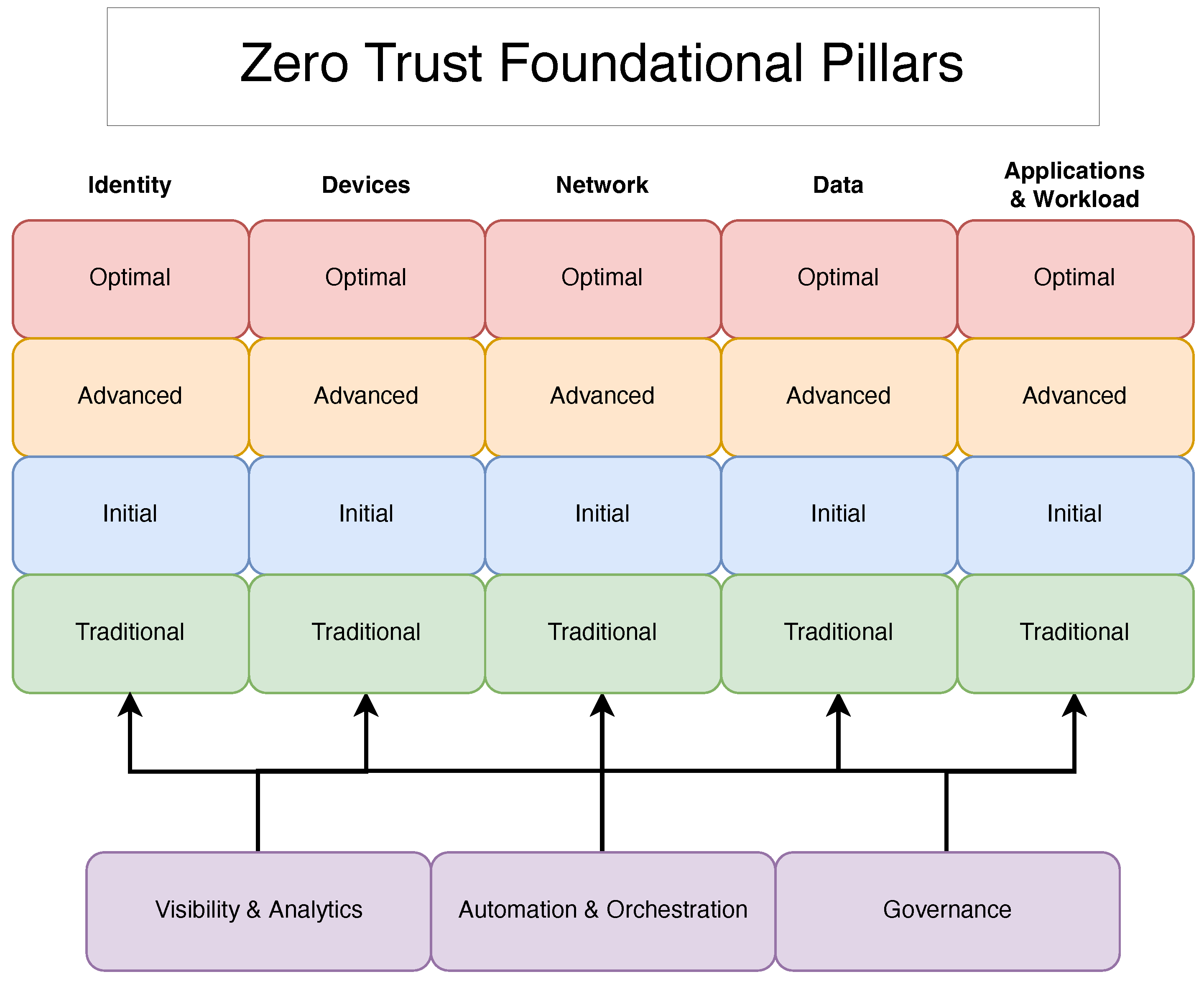

3. The Guiding Philosophy: Zero Trust Architecture in AZTRM-D

3.1. Identity

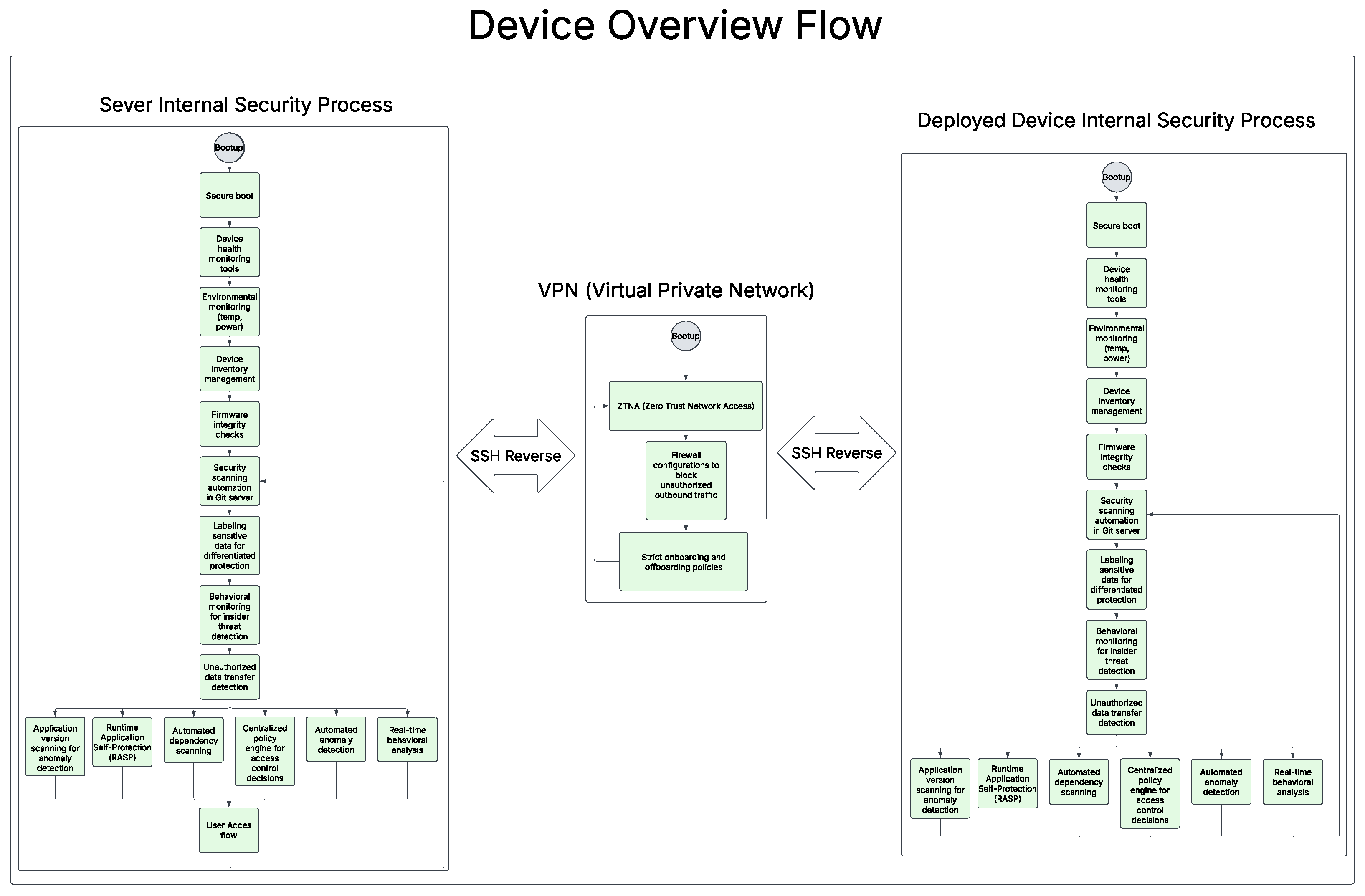

3.2. Devices

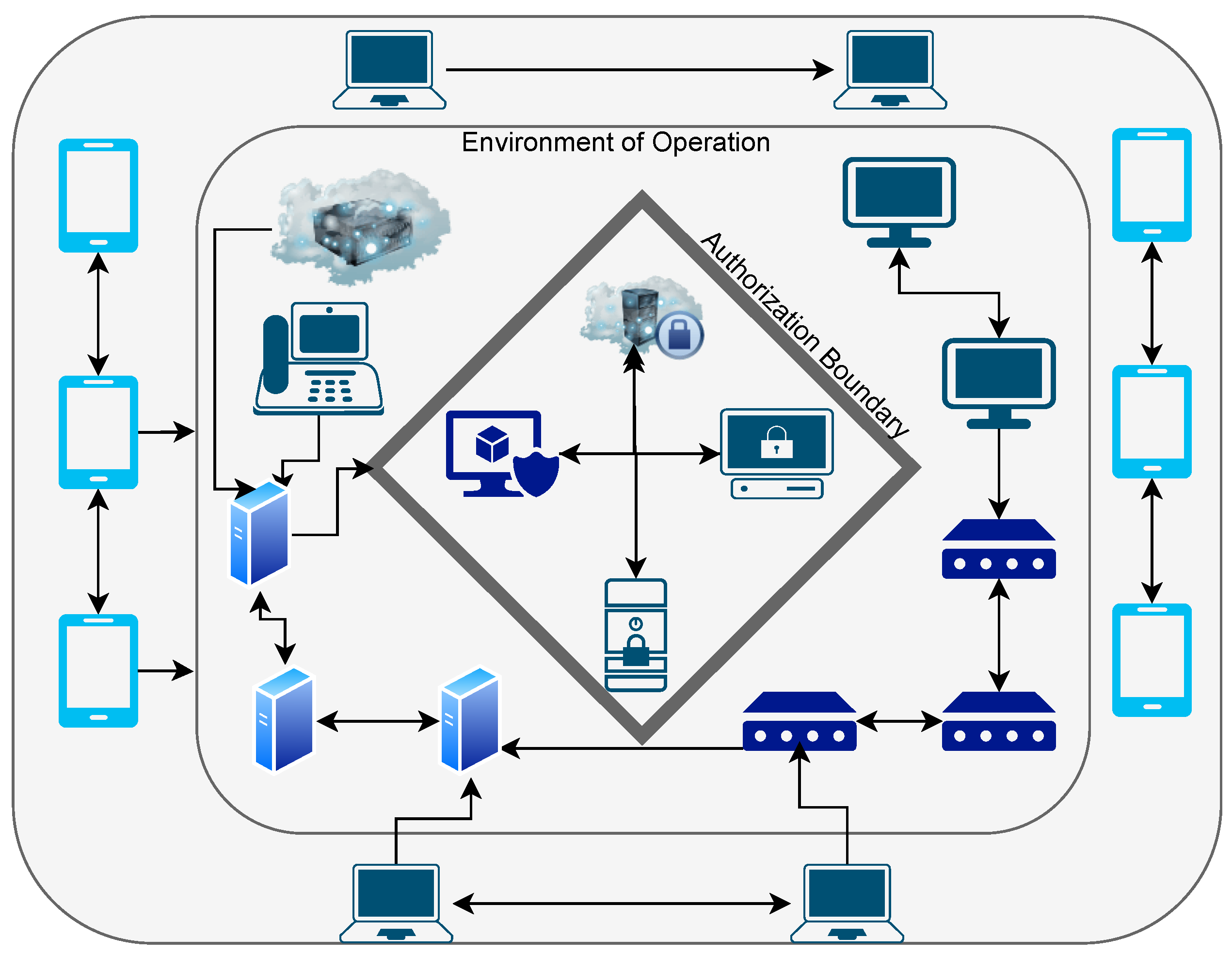

3.3. Networks

3.4. Applications and Workloads

3.5. Data

3.6. Cross-Cutting Capabilities: Governance, Automation, and Visibility

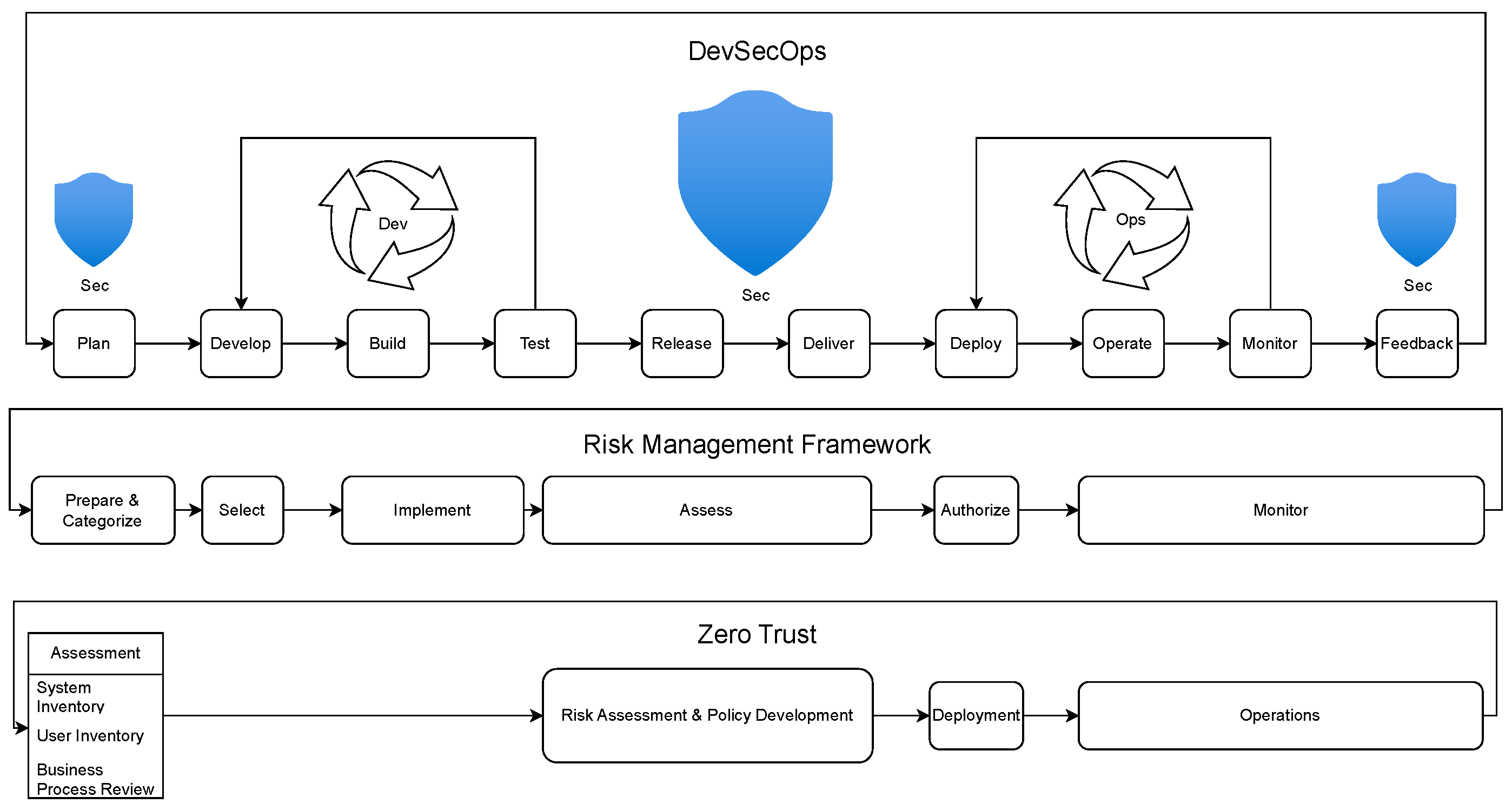

3.7. Integrating RMF, ZT, and DevSecOps in AZTRM-D

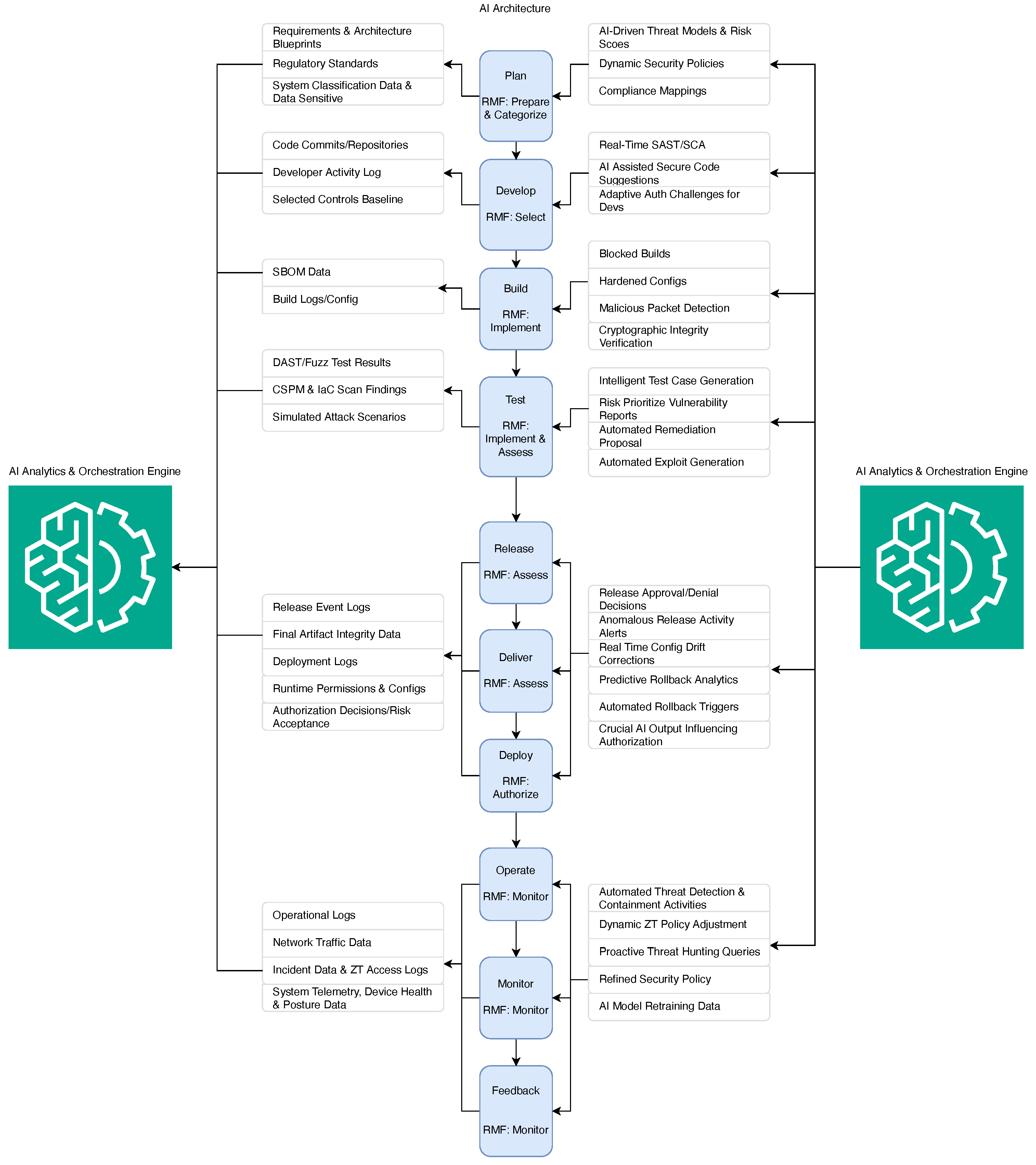

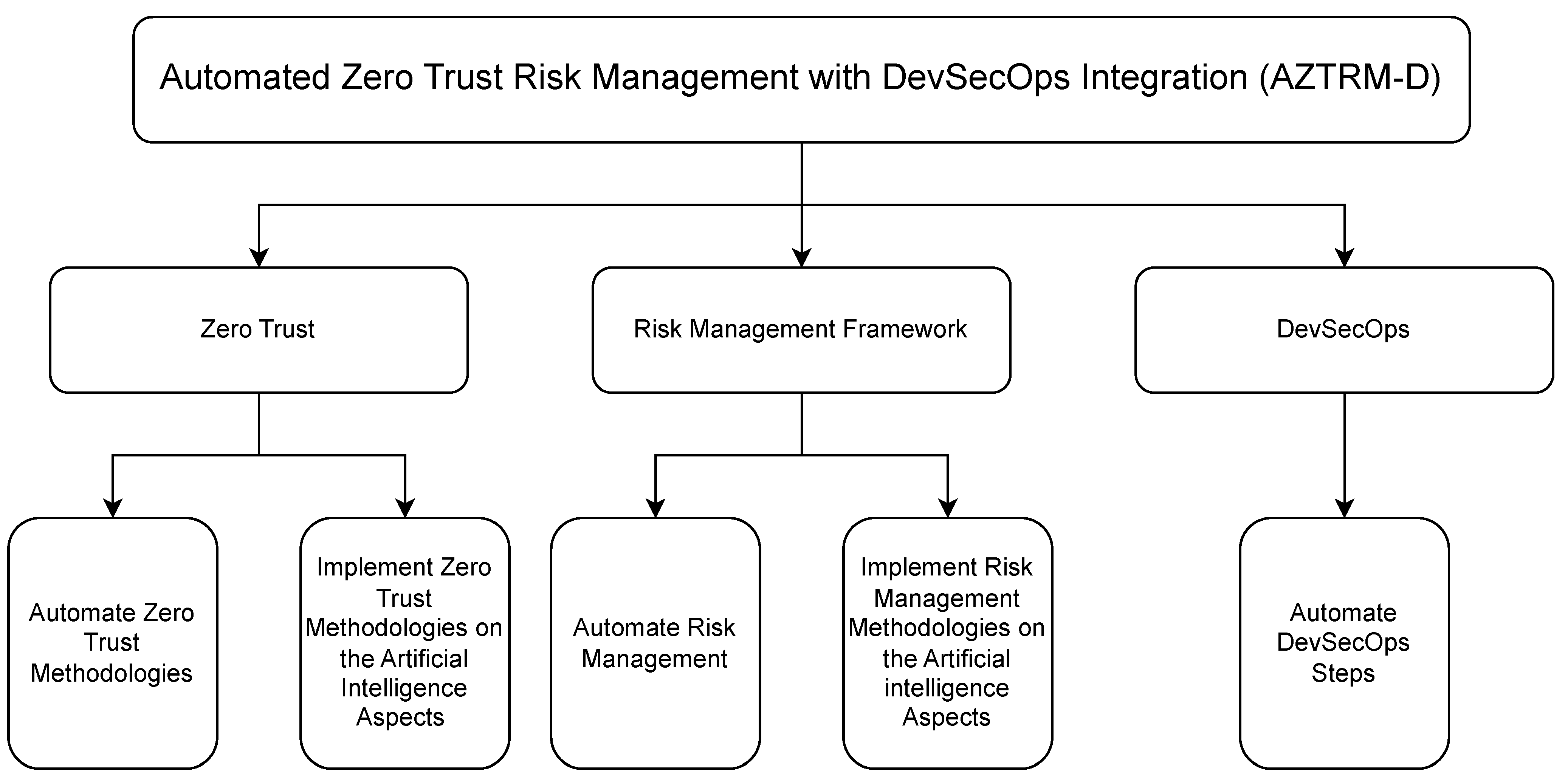

4. Automated Zero Trust Risk Management with DevSecOps Integration (AZTRM-D)

4.1. Phases of AZTRM-D

4.1.1. Planning Phase

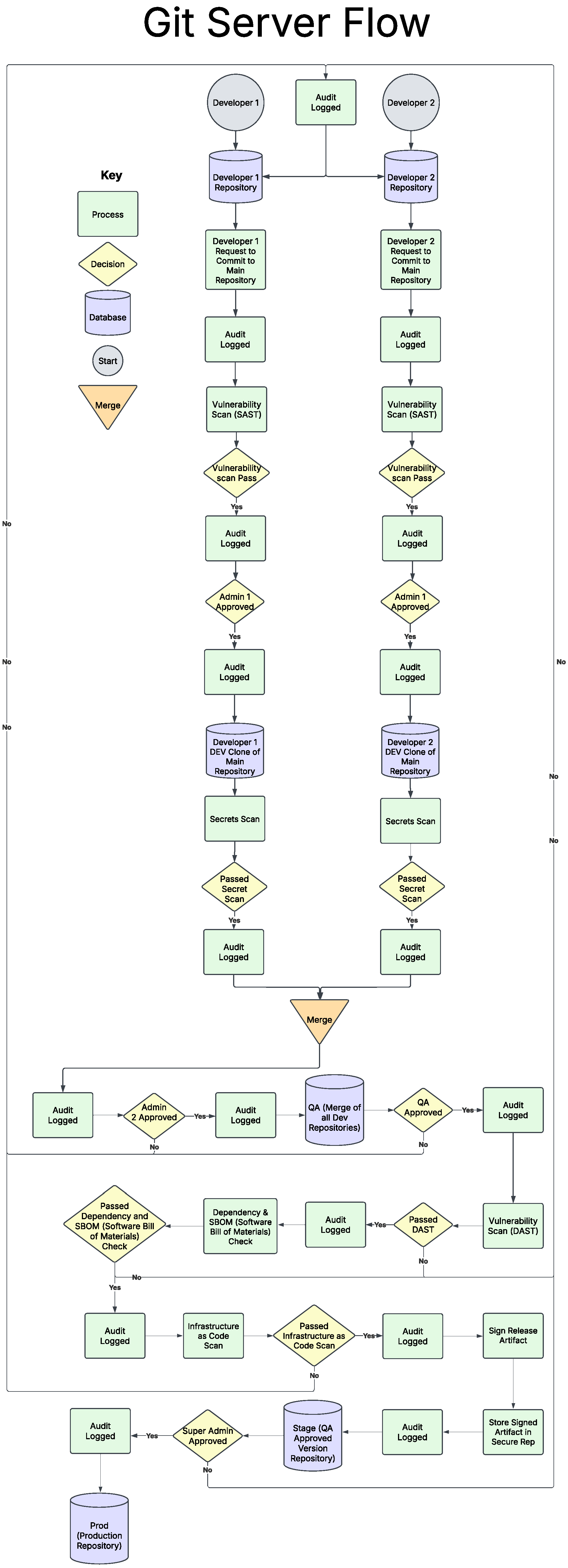

4.1.2. Development Phase

4.1.3. Building Phase

4.1.4. Testing Phase

4.1.5. Release and Deliver Phase

4.1.6. Deployment Phase

4.1.7. Operate, Monitor and Feedback Phase

4.2. NIST Artificial Intelligence Risk Management Framework

4.3. Implementation of Zero Trust Methodologies on Artificial Intelligence Models/Features

5. AZTRM-D Simulated Real-World Scenario

5.1. Initial Scenario

5.2. AZTRM-D Walkthrough

5.3. AZTRM-D Enabled System Versus the Hacker

6. Lab-Built Scenario and Benchmarking

6.1. Implemented Security Posture

6.2. Security Testing Journey

6.2.1. Security Testing Scenario: Factory Default Configuration

- Outsider Perspective:

- Insider Perspective:

6.2.2. Security Testing Scenario: After Initial Hardening

- Outsider Perspective:

- Insider Perspective:

6.2.3. Security Testing Scenario: After Final Hardening

- Outsider Perspective:

- Insider Perspective:

6.3. Quantitative Benchmarking Results

6.3.1. System Technical Overview

6.3.2. User Interaction with AZTRM-D Implemented System

6.3.3. Future Setup Additions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Spell Out | Abbreviation | Spell Out |
|---|---|---|---|
| 2FA | Two-Factor Authentication | AES | Advanced Encryption Standard |
| AI | Artificial Intelligence | AI RMF | AI Risk Management Framework |
| ANN-ISM | Artificial Neural Network-Interpretive Structural Modeling | API | Application Programming Interface |
| AZTRM-D | Automated Zero Trust Risk Management with DevSecOps Integration | BYOD | Bring Your Own Device |
| cATO | Continuous Authorization to Operate | C2 | Command and Control |
| CAE | Continuous Access Evaluation | CI/CD | Continuous Integration/Continuous Deployment |
| CFO | Chief Financial Officer | CISA | Cybersecurity and Infrastructure Security Agency |
| CIS | Center for Internet Security | CSPM | Cloud Security Posture Management |
| CPU | Central Processing Unit | DAST | Dynamic Application Security Testing |
| DevSecOps | Development, Security, and Operations | DLP | Data Loss Prevention |
| EDR | Endpoint Detection and Response | FedRAMP | Federal Risk and Authorization Management Program |
| GDPR | General Data Protection Regulation | GPIO | General Purpose Input/Output |
| HIPAA | Health Insurance Portability and Accountability Act | HSM | Hardware Security Module |
| HTTP | Hypertext Transfer Protocol | HTTPS | Hypertext Transfer Protocol Secure |
| IaC | Infrastructure as Code | IAM | Identity and Access Management |
| ICMP | Internet Control Message Protocol | ICS/SCADA | Industrial Control Systems/Supervisory Control and Data Acquisition |
| IDE | Integrated Development Environment | IoBT | Internet of Battlefield Things |
| IOCs | Indicators of Compromise | IoT | Internet of Things |
| ISO | International Organization for Standardization | ISO/IEC | International Organization for Standardization/International Electrotechnical Commission |
| JIT | Just-in-Time | MAC | Media Access Control |
| MFA | Multi-Factor Authentication | ML | Machine Learning |
| MITM | Man-in-the-Middle | MTTR | Mean Time to Remediate |
| NIST | National Institute of Standards and Technology | OS | Operating System |
| OWASP | Open Web Application Security Project | PAM | Privileged Access Management |
| PCI DSS | Payment Card Industry Data Security Standard | PDP/PEP | Policy Decision/Enforcement Point |
| PII | Personally Identifiable Information | PQC | Post-Quantum Cryptography |
| QA | Quality Assurance | RASP | Runtime Application Self-Protection |
| RBAC | Role-Based Access Control | RF | Radio Frequency |
| RMF | Risk Management Framework | SAST | Static Application Security Testing |
| SCA | Software Composition Analysis | S-SDLC | Secure Software and System Development Life Cycle |
| SDL | Security Development Life Cycle | SFTP | Secure File Transfer Protocol |
| SHAP | SHapley Additive exPlanations | SIEM | Security Information and Event Management |
| SOAR | Security Orchestration, Automation, and Response | SOX | Sarbanes-Oxley Act |
| SSH | Secure Shell | TEE | Trusted Execution Environment |
| TLS | Transport Layer Security | UART | Universal Asynchronous Receiver-Transmitter |
| UML | Unified Modeling Language | USB | Universal Serial Bus |
| VNC | Virtual Network Computing | VPN | Virtual Private Network |
| WSN | Wireless Sensor Network | WPA3 | Wi-Fi Protected Access 3 |
| XAI | eXplainable Artificial Intelligence | ZT | Zero Trust |
| ZTNA | Zero Trust Network Access | | |

References

1. Gupta, A.; Rawal, A.; Barge, Y. Comparative Study of Different SDLC Models. Int. J. Res. Appl. Sci. Eng. Technol. 2021, 9, 73–80.

2. Olorunshola, O.E.; Ogwueleka, F.N. Review of system development life cycle (SDLC) models for effective application delivery. In Proceedings of the Information and Communication Technology for Competitive Strategies (ICTCS 2020) ICT: Applications and Social Interfaces, Udaipur, India, 11–12 December 2020; Springer: Berlin/Heidelberg, Germany, 2022; pp. 281–289.

3. Albahar, M. A systematic literature review of the current state and challenges of DevSecOps. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 7253–7268.

4. Chahar, S.; Singh, S. Analysis of SDLC Models with Web Engineering Principles. In Proceedings of the 2024 2nd International Conference on Advancements and Key Challenges in Green Energy and Computing (AKGEC), Ghaziabad, India, 21–23 November 2024; IEEE: New York, NY, USA, 2024; pp. 1–7.

5. de Vicente Mohino, J.; Bermejo Higuera, J.; Bermejo Higuera, J.R.; Sicilia Montalvo, J.A. The application of a new secure software development life cycle (S-SDLC) with agile methodologies. Electronics 2019, 8, 1218.

6. Jeganathan, S. DevSecOps: A Systemic Approach for Secure Software Development. ISSA J. 2019, 17, 20.

7. Shahin, M.; Babar, M.A.; Zahedi, M. DevSecOps: A multivocal literature review. arXiv 2017, arXiv:1705.02856.

8. Fu, M.; Lyu, M.R.; Lou, J.G. AI for DevSecOps: A Landscape and Future Opportunities. arXiv 2024, arXiv:2404.10173.

9. Christanto, H.J.; Singgalen, Y.A. Analysis and design of student guidance information system through software development life cycle (sdlc) and waterfall model. J. Inf. Syst. Inform. 2023, 5, 259–270.

10. Saravanos, A.; Curinga, M.X. Simulating the software development lifecycle: The Waterfall model. Appl. Syst. Innov. 2023, 6, 108.

11. Pargaonkar, S. A comprehensive research analysis of software development life cycle (SDLC) agile & waterfall model advantages, disadvantages, and application suitability in software quality engineering. Int. J. Sci. Res. Publ. 2023, 13, 345–358.

12. Kristanto, E.B.; Andrayana, S.; Benramhman, B. Application of Waterfall SDLC Method in Designing Student’s Web Blog Information System at the National University. J. Mantik 2020, 4, 472–482.

13. Agarwal, P.; Singhal, A.; Garg, A. SDLC model selection tool and risk incorporation. Int. J. Comput. Appl. 2017, 172, 6–10.

14. Doğan, O.; Bitim, S.; Hızıroğlu, K. A v-model software development application for sustainable and smart campus analytics domain. Sak. Univ. J. Comput. Inf. Sci. 2021, 4, 111–119.

15. Shylesh, S. A study of software development life cycle process models. In Proceedings of the National Conference on Reinventing Opportunities in Management, IT, and Social Sciences, Bangalore, India, 3–4 March 2017; pp. 534–541.

16. Mahmood, S.; Niazi, M.; Alshayeb, M. Security in DevOps: A systematic mapping study. In Proceedings of the 17th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE), online, 25–26 April 2022; pp. 145–156.

17. Al-Zubaidi, M.; Abdullah, A. A systematic literature review on security issues and challenges in DevSecOps. IEEE Access 2023, 11, 83597–83615.

18. Rahman, M.; Noll, J. Realizing security as code for DevSecOps. J. Sens. Actuator Netw. 2021, 10, 44.

19. Department of Defense Chief Information Officer (DoD CIO). DoD Enterprise DevSecOps Strategy Guide; Department of Defense Chief Information Officer (DoD CIO): Washington, DC, USA, 2021.

20. Khari, M.; Vaishali; Kumar, P. Embedding security in software development life cycle (SDLC). In Proceedings of the 2016 3rd International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 16–18 March 2016; IEEE: New York, NY, USA, 2016; pp. 2182–2186.

21. Arrey, D.A. Exploring the Integration of Security into Software Development Life Cycle (SDLC) Methodology. Ph.D. Thesis, Colorado Technical University, Colorado Springs, CO, USA, 2019.

22. Chun, T.J.; En, L.J.; Xuen, M.T.Y.; Xuan, Y.M.; Muzafar, S. Secured Software Development and Importance of Secure Software Development Life Cycle; TechRxiv Preprints. 2023. Available online: https://www.techrxiv.org/doi/full/10.36227/techrxiv.24548416.v1 (accessed on 13 June 2025).

23. Lee, M.G.; Sohn, H.J.; Seong, B.M.; Kim, J.B. Secure Software Development Lifecycle Which Supplements Security Weakness for CC Certification; International Information Institute (Tokyo): Tokyo, Japan, 2016; Volume 19, p. 297.

24. Portell Pareras, O. DevSecOps: S-SDLC. Bachelor’s Thesis, Universitat Politècnica de Catalunya, Barcelona, Spain, 2023.

25. Mohammed, N.M.; Niazi, M.; Alshayeb, M.; Mahmood, S. Exploring software security approaches in software development lifecycle: A systematic mapping study. Comput. Stand. Interfaces 2017, 50, 107–115.

26. Horne, D.; Nair, S. Introducing zero trust by design: Principles and practice beyond the zero trust hype. In Advances in Security, Networks, and Internet of Things; Springer: Cham, Switzerland, 2021; pp. 512–525.

27. Yaseen, A. Reducing industrial risk with AI and automation. Int. J. Intell. Autom. Comput. 2021, 4, 60–80.

28. Pan, Z.; Shen, W.; Wang, X.; Yang, Y.; Chang, R.; Liu, Y.; Liu, C.; Liu, Y.; Ren, K. Ambush from all sides: Understanding security threats in open-source software CI/CD pipelines. IEEE Trans. Dependable Secur. Comput. 2023, 21, 403–418.

29. Wickramasinghe, C.; Falkner, K.; Falkner, N. Automating threat modeling for DevSecOps using machine learning. Autom. Softw. Eng. 2023, 30, 9.

30. Collier, Z.A.; Sarkis, J. The zero trust supply chain: Managing supply chain risk in the absence of trust. Int. J. Prod. Res. 2021, 59, 3430–3445.

31. Zhu, P.; Zhang, H.; Shi, Y.; Xie, W.; Pang, M.; Shi, Y. A novel discrete conformable fractional grey system model for forecasting carbon dioxide emissions. Environ. Dev. Sustain. 2024, 27, 13581–13609.

32. Khan, H.U.; Khan, R.A.; Alwageed, H.S.; Almagrabi, A.O.; Ayouni, S.; Maddeh, M. AI-driven cybersecurity framework for software development based on the ANN-ISM paradigm. Sci. Rep. 2025, 15, 13423.

33. National Institute of Standards and Technology. Guide for Applying the Risk Management Framework to Federal Information Systems: A Security Life Cycle Approach; Technical Report NIST Special Publication (SP) 800-37r2; U.S. Department of Commerce: Gaithersburg, MD, USA, 2018.

34. Locascio, L.E.; Director, N. NIST Risk Management Framework (RMF) Small Enterprise Quick Start Guide; National Institute of Standards and Technology. 2024. Available online: https://www.nist.gov/publications/nist-risk-management-framework-rmf-small-enterprise-quick-start-guide (accessed on 20 July 2025).

35. Majumder, S.; Dey, N. Risk Management Procedures. In A Notion of Enterprise Risk Management: Enhancing Strategies and Wellbeing Programs; Emerald Publishing Limited: Bradford, UK, 2024; pp. 25–40.

36. McCarthy, C.; Harnett, K. National Institute of Standards and Technology (NIST) Cybersecurity Risk Management Framework Applied to Modern Vehicles; Technical report, United States; Department of Transportation. National Highway Traffic Safety: Washington, DC, USA, 2014.

37. Kohnke, A.; Sigler, K.; Shoemaker, D. Strategic risk management using the NIST risk management framework. EDPACS 2016, 53, 1–6.

38. Reimanis, D. Risk Management Framework. In Realizing Complex Integrated Systems; CRC Press: Boca Raton, FL, USA, 2025; pp. 367–382.

39. Stoltz, M. The Road to Compliance: Executive Federal Agencies and the NIST Risk Management Framework. arXiv 2024, arXiv:2405.07094.

40. Ajish, D. The significance of artificial intelligence in zero trust technologies: A comprehensive review. J. Electr. Syst. Inf. Technol. 2024, 11, 30.

41. Ferreira, L.; Pilastri, A.L.; Martins, C.; Santos, P.; Cortez, P. An Automated and Distributed Machine Learning Framework for Telecommunications Risk Management. In Proceedings of the ICAART (2), Valletta, Malta, 22–24 February 2020; pp. 99–107.

42. Althar, R.R.; Samanta, D.; Kaur, M.; Singh, D.; Lee, H.N. Automated risk management based software security vulnerabilities management. IEEE Access 2022, 10, 90597–90608.

43. Pandey, P.; Katsikas, S. The future of cyber risk management: AI and DLT for automated cyber risk modelling, decision making, and risk transfer. In Handbook of Research on Artificial Intelligence, Innovation and Entrepreneurship; Edward Elgar Publishing: Cheltenham, UK, 2023; pp. 272–290.

44. Swaminathan, N.; Danks, D. Application of the NIST AI Risk Management Framework to Surveillance Technology. arXiv 2024, arXiv:2403.15646.

45. Sterbak, M.; Segec, P.; Jurc, J. Automation of risk management processes. In Proceedings of the 2021 19th International Conference on Emerging eLearning Technologies and Applications (ICETA), Kosice, Slovakia, 11–12 November 2021; IEEE: New York, NY, USA, 2021; pp. 381–386.

46. Rios, E.; Al-Kaswan, A.; Sun, H. Automating security analysis of infrastructure as code. In Proceedings of the 13th International Conference on Availability, Reliability and Security, Hamburg, Germany, 27–30 August 2018; pp. 1–10.

47. Hutchison, D.; Lang, C.; Wagner, J. Automating the risk management framework (RMF) for agile systems. In Proceedings of the 2022 Systems and Information Engineering Design Symposium (SIEDS), Charlottesville, VA, USA, 28–29 April 2022; IEEE: New York, NY, USA, 2022; pp. 196–201.

48. Granata, D.; Rak, M.; Salzillo, G. Risk analysis automation process in IT security for cloud applications. In Proceedings of the International Conference on Cloud Computing and Services Science; Springer: Berlin/Heidelberg, Germany, 2021; pp. 47–68.

49. Rose, S.; Borchert, O.; Mitchell, S.; Connelly, S. Zero Trust Architecture; Technical Report 800-207; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2020.

50. Garbis, J.; Chapman, J.W. Zero Trust Security: An Enterprise Guide; Springer: Berlin/Heidelberg, Germany, 2021.

51. Gbohunmi, O.; Abayomi-Alli, A.; Abayomi-Alli, O.; Misra, S. Zero Trust Architecture: A Systematic Literature Review. arXiv 2025, arXiv:2503.11659.

52. He, Y.; Huang, D.; Chen, L.; Ni, Y.; Ma, X. A survey on zero trust architecture: Challenges and future trends. Wirel. Commun. Mob. Comput. 2022, 2022, 1–13.

53. Edo, O.C.; Tenebe, T.; Etu, E.E.; Ayuwu, A.; Emakhu, J.; Adebiyi, S. Zero Trust Architecture: Trend and Impact on Information Security. Int. J. Emerg. Technol. Adv. Eng. 2022, 12, 140.

54. Simpson, W.R. Zero trust philosophy versus architecture. In Proceedings of the World Congress on Engineering, London, UK, 6–8 July 2022.

55. Cybersecurity and Infrastructure Security Agency (CISA). Zero Trust Maturity Model; v2; Cybersecurity and Infrastructure Security Agency (CISA): Arlington, VA, USA, 2023.

56. Nurkhamid, N.; Hussin, H.; Zulhalim, H. AI-driven identity and access management (IAM): The future of zero trust security. World J. Adv. Res. Rev. 2024, 21, 1138–1144.

57. Gupta, A.; Gupta, P.; Pandey, U.P.; Kushwaha, P.; Lohani, B.P.; Bhati, K. ZTSA: Zero Trust Security Architecture a Comprehensive Survey. In Proceedings of the 2024 International Conference on Communication, Computer Sciences and Engineering (IC3SE), Gautam Buddha Nagar, India, 9–11 May 2024; IEEE: New York, NY, USA, 2024; pp. 378–383.

58. Meng, L.; Huang, D.; An, J.; Zhou, X.; Lin, F. A continuous authentication protocol without trust authority for zero trust architecture. China Commun. 2022, 19, 198–213.

59. Tsai, M.; Lee, S.; Shieh, S.W. Strategy for implementing of zero trust architecture. IEEE Trans. Reliab. 2024, 73, 93–100.

60. Weinberg, A.I.; Cohen, K. Zero Trust Implementation in the Emerging Technologies Era: Survey. arXiv 2024, arXiv:2401.09575.

61. Zanasi, C.; Russo, S.; Colajanni, M. Flexible zero trust architecture for the cybersecurity of industrial IoT infrastructures. Ad Hoc Netw. 2024, 156, 103414.

62. Annabi, M.; Zeroual, A.; Messai, N. Towards zero trust security in connected vehicles: A comprehensive survey. Comput. Secur. 2024, 145, 104018.

63. Sims, R. Implementing a Zero Trust Architecture For ICS/SCADA Systems. Doctoral Dissertation, Dakota State University, Madison, SD, USA, 2024; p. 445. Available online: https://scholar.dsu.edu/theses/445 (accessed on 20 July 2025).

64. Kim, Y.; Sohn, S.G.; Jeon, H.S.; Lee, S.M.; Lee, Y.; Kim, J. Exploring Effective Zero Trust Architecture for Defense Cybersecurity: A Study. KSII Trans. Internet Inf. Syst. 2024, 18, 2665–2691.

65. Yao, Q.; Wang, Q.; Zhang, X.; Fei, J. Dynamic access control and authorization system based on zero-trust architecture. In Proceedings of the 2020 1st International Conference on Control, Robotics and Intelligent System, Xiamen, China, 27–29 October 2020; pp. 123–127.

66. Greenwood, D. Applying the principles of zero-trust architecture to protect sensitive and critical data. Netw. Secur. 2021, 2021, 7–9.

67. Schwartz, R. Informing an Artificial Intelligence Risk Aware Culture with the NIST AI Risk Management Framework; American Bar Association: Chicago, IL, USA, 2024.

68. Ahn, G.; Jang, J.; Choi, S.; Shin, D. Research on Improving Cyber Resilience by Integrating the Zero Trust security model with the MITRE ATT&CK matrix. IEEE Access 2024, 12, 89291–89309.

69. Hosney, E.S.; Halim, I.T.A.; Yousef, A.H. An Artificial Intelligence Approach for Deploying Zero Trust Architecture (ZTA). In Proceedings of the 2022 5th International Conference on Computing and Informatics (ICCI), New Cairo, Egypt, 9–10 March 2022; pp. 343–350.

70. Salah, M.; Al-Kuwaiti, M.; Al-Mulla, M.; El-Sayed, H. Threat modeling for AI/ML systems: The a-z of threat modeling. IEEE Secur. Priv. 2023, 21, 6–14.

71. Hernandez, J.; Mondragon, O.; Gil, R. A systematic review on threat modeling for the software development lifecycle. Comput. Secur. 2023, 132, 103348.

72. Levene, M.; Adel, T.; Alsuleman, M.; George, I.; Krishnadas, P.; Lines, K.; Luo, Y.; Smith, I.; Duncan, P. A Life Cycle for Trustworthy and Safe Artificial Intelligence Systems; NPL Report MS 57; National Physical Laboratory: Teddington, UK, 2024.

73. Hoffman, R.; Mueller, J.; Klein, G.; Litman, J. Four Principles of Explainable Artificial Intelligence; Technical Report NIST.AI.100-1; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2022.

74. Xu, C.; Zhu, P.; Wang, J.; Fortino, G. Improving the local diagnostic explanations of diabetes mellitus with the ensemble of label noise filters. Inf. Fusion 2025, 117, 102928.

75. Akitra. The Ethical Considerations of AI in Cybersecurity: Balancing Security Needs with Algorithmic Bias and Transparency; Medium; Akitra: Sunnyvale, CA, USA, 2024.

76. Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 4765–4774.

77. Louragli, I.; El Oualkadi, A.; El Hanafi, H. A survey of security in zero trust network architectures. GSC Adv. Res. Rev. 2025, 28, 130–148.

78. Shanmugavadivelu, P. Next-Gen VM Security: Confidential Computing, Trusted Launch, and Zero Trust at Scale. Technix Int. J. Eng. Res. 2025, 12, b881–b891.

| Method | Key Focus/Approach | Core Characteristics |
|---|---|---|
| Embedded Security in Traditional SDLC [20] | This method integrates security deeply into each phase of the traditional SDLC. | It identifies security requirements early, uses threat modeling for design, prioritizes secure coding and static analysis, employs layered testing, and focuses on continuous monitoring and patch management. |
| Early and Continuous Integration [21] | This approach emphasizes early and continuous security integration through stakeholder involvement and education. | It aligns security requirements with business objectives, involves security experts and stakeholders, fosters a culture of security awareness, and integrates automated, continuous security testing throughout all phases. |
| Agile-Adapted Security [5] | This method integrates security into Agile methodologies, making it well-suited for dynamic and iterative development. | It embeds security activities like threat modeling and reviews directly into Agile sprints. Security testing is performed iteratively, and a “security backlog” helps prioritize and manage tasks. |
| Common Criteria (CC) Certification Aligned [23] | This approach is tailored to meet the rigorous requirements of CC certification. | It emphasizes aligning with specific CC security controls and extensive documentation. Formal risk management is key, requiring detailed risk assessments (e.g., ISO/IEC 27005) to systematically identify and mitigate threats. |
| DevSecOps Transformation [6,24] | This method transforms the DevOps process into a holistic DevSecOps approach, ensuring security is a shared responsibility across all stages. | Key features include automated security integration into the CI/CD pipeline, fostering a collaborative culture between development, operations, and security teams, and using continuous monitoring with real-time feedback for rapid remediation. |
| Categorized Software Security Strategies [25] | This method is based on a comprehensive study that categorized software security strategies into five main approaches. | These include Secure Requirements Modeling; Vulnerability Identification via static and dynamic analysis; Software Security-Focus Processes using frameworks like Microsoft SDL; Extended UML-Based Secure Modeling; and Non-UML-Based Secure Modeling. |
| Proposed AZTRM-D Methodology (This Work) [26,27] | This is a unified, AI-augmented process that builds upon existing S-SDLC methods. | It integrates proactive DevSecOps, structured NIST RMF, and stringent Zero Trust (ZT) access controls. A unique aspect is its direct application of ZT principles to the AI systems within the SDLC, ensuring automation tools are continuously verified. This creates a more resilient security framework. |
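The Agile-adapted row above relies on a “security backlog” to prioritize work, and the Common Criteria row calls for systematic risk assessment. The following is a minimal Python sketch of how such a backlog might be ordered, assuming a simple qualitative likelihood × impact matrix. The five-level scale, the `SecurityItem` class, and the `prioritize` function are hypothetical illustrations, not part of any cited method or of ISO/IEC 27005 itself.

```python
from dataclasses import dataclass

# Hypothetical 1-5 ordinal scale; none of the cited methods prescribes these levels.
LEVELS = {"very low": 1, "low": 2, "moderate": 3, "high": 4, "very high": 5}


@dataclass(frozen=True)
class SecurityItem:
    """One entry in a sprint's security backlog (illustrative fields only)."""
    title: str
    likelihood: str  # qualitative likelihood of exploitation
    impact: str      # qualitative impact if exploited

    @property
    def risk_score(self) -> int:
        # Qualitative risk matrix: score = likelihood x impact, range 1..25.
        return LEVELS[self.likelihood] * LEVELS[self.impact]


def prioritize(backlog: list[SecurityItem]) -> list[SecurityItem]:
    """Order items by descending risk; ties keep their input order (sorted is stable)."""
    return sorted(backlog, key=lambda item: item.risk_score, reverse=True)


if __name__ == "__main__":
    backlog = [
        SecurityItem("Tighten log retention policy", "low", "moderate"),
        SecurityItem("Rotate default device credentials", "very high", "high"),
        SecurityItem("Add TLS to internal telemetry API", "moderate", "high"),
    ]
    for item in prioritize(backlog):
        print(f"{item.risk_score:>2}  {item.title}")
```

Re-running the ordering at each sprint boundary keeps the backlog aligned with the latest assessment; a real program would usually feed likelihood from threat modeling and impact from the system's security categorization rather than from hand-entered labels.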

| Framework/Approach | Core Principles | AI/Automation Focus | Gap Addressed by AZTRM-D |
|---|---|---|---|
| Standard DevSecOps [17] | Integration of security into CI/CD; automation of security checks; shared responsibility culture. | Automated SAST/DAST scanning; CI/CD pipeline automation. | Lacks a prescribed, formal risk management structure (like RMF) and a guiding access control philosophy (like ZT). Security can still be ad hoc. |
| AI-Enhanced Threat Modeling [29] | Using ML/AI to predict and identify design-level security threats early in the life cycle. | Automated generation of threat models from system artifacts; predictive vulnerability analysis. | Primarily focused on the “left side” (design/planning). Does not extend across the full operational life cycle, including runtime monitoring and incident response. |
| Zero Trust Supply Chain [30] | Extending ZT principles (“never trust, always verify”) to all third-party software components, dependencies, and build processes. | Automated dependency scanning (SCA); cryptographic verification of software artifacts (signing). | Focuses heavily on supply chain and artifact integrity but does not inherently include continuous operational risk management or a full DevSecOps pipeline structure. |
| AZTRM-D (This Work) | Synergistic integration of DevSecOps, NIST RMF, and ZT principles. | Pervasive AI orchestration across all phases, from AI-driven risk assessment and policy generation to automated ZT enforcement and adaptive threat response. | Provides a single, holistic framework that unifies agile development, structured risk governance, and adaptive access control, ensuring security is continuous, risk-based, and context-aware from planning to operations. |
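The Zero Trust Supply Chain row describes cryptographic verification of software artifacts before they are trusted. As a hedged illustration only, the sketch below pins a SHA-256 digest for every expected artifact and rejects anything missing or altered. Digest pinning is a simplified stand-in: production pipelines would typically also verify asymmetric signatures (for example with Sigstore tooling), which this standard-library-only example does not attempt. The function names and the name-to-digest manifest format are assumptions.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash the file in chunks so large artifacts never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(pinned: dict[str, str], root: Path) -> dict[str, str]:
    """Check each pinned artifact under `root`; nothing is trusted by default.

    Returns artifact name -> "ok", "missing", or "digest-mismatch".
    """
    results = {}
    for name, expected in pinned.items():
        path = root / name
        if not path.is_file():
            results[name] = "missing"
        # Constant-time comparison of hex digests (both are ASCII strings).
        elif hmac.compare_digest(sha256_of(path), expected.lower()):
            results[name] = "ok"
        else:
            results[name] = "digest-mismatch"
    return results


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "firmware.bin").write_bytes(b"trusted build output")
        pinned = {
            "firmware.bin": hashlib.sha256(b"trusted build output").hexdigest(),
            "agent.tar.gz": "0" * 64,  # pinned but never produced, so reported as missing
        }
        print(verify_artifacts(pinned, root))
```

A build step would fail closed whenever any status other than `"ok"` appears, so a tampered or unexpected dependency stops the pipeline instead of being silently accepted.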

| Pillar | Traditional Weakness | Zero Trust Objective (Optimal Maturity) | AI-Enhanced Role in AZTRM-D |
|---|---|---|---|
| Identity | Static credentials (passwords) and roles grant broad, persistent access once authenticated. | Grant access on a per-session basis based on dynamic risk assessments of the user and their context. Enforce least privilege. | AI provides continuous, risk-based authentication by analyzing user behavior in real time. It automates adaptive controls, such as triggering MFA or revoking sessions upon detecting anomalous activity [56]. |
| Devices | Any device on the “trusted” network is allowed access, with only periodic checks for compliance (e.g., antivirus). | Continuously verify the security posture of every device attempting to access resources and grant access based on device health. | AI-driven endpoint analytics continuously validate device posture (patch level, integrity, running processes). It can automatically isolate non-compliant or compromised devices to prevent them from accessing resources [8]. |
| Networks | A hard outer perimeter with a soft, trusted interior (“castle-and-moat”). Attackers can move laterally with ease once inside. | Use micro-segmentation to create granular security zones. Encrypt all traffic and log every access request. | AI-powered network traffic analysis detects anomalous east-west traffic patterns indicative of lateral movement. It can dynamically adjust firewall rules and micro-segments to contain threats in real time. |
| Applications & Workloads | Applications are trusted by default once deployed. Security is focused on the perimeter, not the application itself. | Secure application access for all users, secure application-to-application communication, and harden workloads against runtime threats. | AI enhances runtime application self-protection (RASP) by detecting and blocking exploits in real time. It automates vulnerability scanning and patching within the CI/CD pipeline, ensuring secure configurations [29]. |
| Data | Data is protected primarily by controlling access to the network or server where it resides. | Categorize and classify data based on sensitivity. Enforce access controls and encrypt data at rest, in transit, and in use. | AI automates data discovery and classification across all systems. It monitors data access patterns to detect and block potential exfiltration attempts before they succeed [42]. |
| Cross-Cutting Capabilities | Manual governance, fragmented visibility, and reactive, human-driven security responses. | Achieve comprehensive visibility, automate security orchestration, and maintain dynamic governance across all pillars. | AI serves as the engine for all three: providing advanced analytics for visibility, orchestrating automated incident response, and dynamically updating governance policies based on emerging threats and risks [8]. |
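To make the pillar table more concrete, here is a sketch of how a policy decision point might combine signals from the Identity, Devices, and Data pillars into a per-request decision. Everything in it is an assumption for illustration: `user_risk` stands in for the output of the AI behavioral model described in the Identity row, and the thresholds, field names, and three-way allow/step-up/deny outcome are hypothetical rather than taken from AZTRM-D.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up_mfa"  # allow only after a fresh MFA challenge
    DENY = "deny"


@dataclass(frozen=True)
class AccessRequest:
    user_risk: float        # 0.0 (typical) .. 1.0 (highly anomalous); hypothetical model score
    device_compliant: bool  # posture check passed (patch level, integrity, running processes)
    device_isolated: bool   # endpoint already quarantined by device analytics
    data_sensitivity: int   # 0 public, 1 internal, 2 confidential, 3 restricted
    mfa_fresh: bool         # MFA completed recently in this session


# Hypothetical tolerances: the more sensitive the data, the less user risk is tolerated.
MAX_RISK_BY_SENSITIVITY = {0: 0.8, 1: 0.7, 2: 0.5, 3: 0.3}
HARD_DENY_RISK = 0.9


def decide(req: AccessRequest) -> Decision:
    """Evaluate one request from scratch; no earlier decision is cached as trusted."""
    # Devices pillar: non-compliant or quarantined endpoints are denied outright.
    if req.device_isolated or not req.device_compliant:
        return Decision.DENY
    # Identity pillar: extreme behavioral risk is denied even with MFA.
    if req.user_risk >= HARD_DENY_RISK:
        return Decision.DENY
    # Data pillar: restricted data always needs a fresh MFA challenge.
    needs_step_up = req.data_sensitivity >= 3
    # Identity + Data pillars: risk above the tolerance for this data class.
    if req.user_risk > MAX_RISK_BY_SENSITIVITY[req.data_sensitivity]:
        needs_step_up = True
    if needs_step_up and not req.mfa_fresh:
        return Decision.STEP_UP
    return Decision.ALLOW


if __name__ == "__main__":
    request = AccessRequest(user_risk=0.6, device_compliant=True,
                            device_isolated=False, data_sensitivity=2, mfa_fresh=False)
    print(decide(request))  # risk 0.6 exceeds the 0.5 tolerance for confidential data
```

Because `decide` keeps no state and re-evaluates every request from its inputs, a session that was allowed a minute ago is denied as soon as its device falls out of compliance, which is the per-session, “never trust, always verify” behavior the table describes.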

| Security Metric | Baseline (Factory Default NVIDIA Orin) | AZTRM-D Hardened Configuration (NVIDIA Orin) |
|---|---|---|
| Open Network Ports | 4 ports (SSH, VNC, HTTP, HTTPS). | 0 ports (complete elimination of network attack surface). |
| Initial Access Time (External) | <5 min (via default credential brute-force). | Not possible (no remote or physical vectors found). |
| Privilege Escalation | Immediate (single `sudo su` command from default `nvidia` user). | Blocked (requires explicit, multi-step validation; default paths removed). |
| Vulnerability Detection Rate (CI/CD) | 0% (no automated scanning integrated into development pipeline). | 98% (automated SAST, DAST, SCA, CSPM, and IaC scans). |
| Mean Time to Remediate (MTTR) | Weeks to months (manual patching, firmware updates, or re-flashing). | 1–3 days (automated alerting and patching pipeline, with AI-driven remediation or automated rollback). |
| Supply Chain Vulnerability | High (no dependency scanning or artifact signing for device software). | Low (mandatory SBOM validation and cryptographic signing for all software components). |
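The MTTR row compares “weeks to months” with 1–3 days, and that comparison only holds if both figures use the same definition. The source does not state how MTTR was computed, so the sketch below assumes the common definition: the mean of (remediation time minus detection time) over findings that have been remediated. The function name, input format, and example timestamps are hypothetical.

```python
from datetime import datetime, timedelta
from statistics import fmean


def mean_time_to_remediate(findings: list[tuple[datetime, datetime]]) -> timedelta:
    """MTTR over remediated findings, each given as (detected_at, remediated_at)."""
    if not findings:
        raise ValueError("MTTR is undefined when no findings have been remediated")
    hours = []
    for detected_at, remediated_at in findings:
        if remediated_at < detected_at:
            raise ValueError("remediation timestamp precedes detection")
        # Dividing two timedeltas yields a float number of hours.
        hours.append((remediated_at - detected_at) / timedelta(hours=1))
    return timedelta(hours=fmean(hours))


if __name__ == "__main__":
    t0 = datetime(2025, 1, 6, 9, 0)
    # Illustrative timestamps only, not measurements from the lab setup.
    remediated = [
        (t0, t0 + timedelta(hours=20)),  # e.g., an automated dependency update
        (t0, t0 + timedelta(days=3)),    # e.g., an automated configuration rollback
    ]
    print(mean_time_to_remediate(remediated))
```

Findings that are still open are excluded by this definition, so a growing backlog of unresolved issues can make MTTR look better than the real exposure; reporting the open-finding count alongside MTTR avoids that blind spot.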

| Performance Metric | Measurement | Significance |
|---|---|---|
| Resource Utilization (AI Scans) | 12–18% average CPU overhead during CI/CD pipeline scans (SAST/SCA). | Demonstrates that continuous security scanning is computationally feasible on edge devices without severely degrading performance. |
| Policy Enforcement Latency | <40 ms for ZT access policy evaluation at the Policy Enforcement Point. | Ensures that stringent, real-time access checks do not introduce significant delays that would impact user experience or system-to-system communication. |
| AI Model Training Time (Initial) | 14 h to train the insider threat model using three months of log data on a standard cloud GPU instance. | Quantifies the initial setup cost for the AI components, providing a baseline for resource planning. |
| Scalability Test (Endpoints) | Successfully managed and monitored up to 1000 concurrent IoT devices per orchestrator instance in a simulated environment. | Validates that the framework’s architecture can scale to support large enterprise deployments. |
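The Policy Enforcement Latency row reports evaluation times below 40 ms. The sketch below shows one way such a figure could be gathered and gated in CI, assuming the policy check can be called in-process; it reports the mean and the 95th percentile and checks the tail against the budget. The 40 ms value comes from the table, while the function names, the run count, and the choice of p95 as the gating statistic are assumptions. Timing an in-process call excludes network round trips to a remote PDP, so the result is a lower bound on what a user would observe.

```python
import statistics
import time
from collections.abc import Callable

LATENCY_BUDGET_MS = 40.0  # budget from the table; gating on p95 is an assumption


def measure_latency_ms(evaluate: Callable[[], object], runs: int = 1000) -> dict[str, float]:
    """Call `evaluate` `runs` times and summarize wall-clock latency in milliseconds."""
    if runs < 2:
        raise ValueError("need at least two samples to estimate a percentile")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        evaluate()
        samples.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(samples, n=20)[-1]
    return {"mean_ms": statistics.fmean(samples), "p95_ms": p95, "max_ms": max(samples)}


def within_budget(stats: dict[str, float], budget_ms: float = LATENCY_BUDGET_MS) -> bool:
    """Gate on the tail rather than the mean, since slow requests are what users notice."""
    return stats["p95_ms"] < budget_ms


if __name__ == "__main__":
    # Hypothetical stand-in for a PEP check: a (role, resource) allow-list lookup.
    allowed = {("analyst", "telemetry"), ("operator", "firmware")}
    stats = measure_latency_ms(lambda: ("analyst", "telemetry") in allowed)
    print(stats, "within budget:", within_budget(stats))
```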

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Coston, I.; Hezel, K.D.; Plotnizky, E.; Nojoumian, M. Enhancing Secure Software Development with AZTRM-D: An AI-Integrated Approach Combining DevSecOps, Risk Management, and Zero Trust. Appl. Sci. 2025, 15, 8163. https://doi.org/10.3390/app15158163

Coston I, Hezel KD, Plotnizky E, Nojoumian M. Enhancing Secure Software Development with AZTRM-D: An AI-Integrated Approach Combining DevSecOps, Risk Management, and Zero Trust. Applied Sciences. 2025; 15(15):8163. https://doi.org/10.3390/app15158163

Chicago/Turabian Style: Coston, Ian, Karl David Hezel, Eadan Plotnizky, and Mehrdad Nojoumian. 2025. "Enhancing Secure Software Development with AZTRM-D: An AI-Integrated Approach Combining DevSecOps, Risk Management, and Zero Trust" Applied Sciences 15, no. 15: 8163. https://doi.org/10.3390/app15158163

APA Style: Coston, I., Hezel, K. D., Plotnizky, E., & Nojoumian, M. (2025). Enhancing Secure Software Development with AZTRM-D: An AI-Integrated Approach Combining DevSecOps, Risk Management, and Zero Trust. Applied Sciences, 15(15), 8163. https://doi.org/10.3390/app15158163