1. Introduction

Public interest in experiencing and appreciating natural ecotourism resources has grown steadily with the continuous advancement of socio-economic development. However, due to their inherent vulnerability and ecological sensitivity, these resources often prove incompatible with intrusive economic activities such as tourism development. Wildlife, as a critical component of ecosystems, is particularly susceptible, with human activities posing direct threats to their habitats and long-term survival. As a result, achieving a balance between ecological conservation and economic growth has become an increasingly urgent focus in contemporary research [1,2,3,4]. Recent advancements in computer graphics have introduced promising solutions to this challenge, particularly through the development of digital conservation methods and non-invasive display technologies. These approaches help minimize disruptions to wildlife habitats while offering the public engaging, high-quality environmental education. This study centers on the digital conservation of wildlife in the Sanjiangyuan region of the Tibetan Plateau, an ecologically vital area often referred to as the “Third Pole of the Earth” due to its unique geography and global environmental significance. This high-altitude ecosystem is home to a rich diversity of flora and fauna, including endangered species such as the white-lipped deer, Przewalski’s gazelle, and snow leopard, along with distinctive endemic vegetation. The Sanjiangyuan region is not only a sensitive indicator of climate change but also a critical area for nature conservation. However, tensions between the demands of cultural tourism and the imperative of ecological preservation persist [5]. Traditional methods of wildlife display, such as field observations and static imagery, often fail to meet the increasing public demand for immersive, informative experiences while preserving fragile ecosystems. In this context, our research aims to explore innovative digital strategies that reconcile the dual goals of ecological protection and cultural communication.

In recent years, the rapid evolution of augmented reality (AR) and virtual reality (VR) technologies has presented new possibilities for digital wildlife representation. Nevertheless, existing approaches still face significant limitations [6,7,8]. For instance, a VR-based virtual simulation of the Chinese white dolphin combines morphological data and field survey results with conventional texture mapping [9]. Similarly, an AR application simulates six rare wildlife species using traditional textures but lacks fine rendering of skin and fur details [10]. A hybrid hair rendering method with realistic lighting effects has been developed, though it requires high-performance hardware, which limits its applicability on mobile platforms [11]. Virtual animal companions have been presented in mixed-reality contexts, but without an emphasis on conservation [12]. The role of extended reality (XR) in sustainable tourism with a focus on education has been explored, but that work used overly simplistic animal models [13]. The influence of AR/VR technologies on perceptions of nature has been analyzed; however, that study did not engage with the critical tension between ecotourism development and wildlife conservation [14]. Common limitations across these studies include insufficient realism in fur rendering, limited mobile performance, and a lack of rich user interaction. These constraints significantly hinder their effectiveness in supporting ecological conservation efforts.

To address the limitations of existing fur rendering techniques for digital wildlife conservation, this study introduces an innovative fur simulation algorithm based on the Texture Procedural Overlay Technique (TPOT), integrated with AR technology, utilizing the white-lipped deer of the Sanjiangyuan region as a case study. The TPOT algorithm leverages multi-layered procedural texture overlays and physically based rendering (PBR) principles to significantly enhance the visual realism of animal fur by accurately simulating physical properties, including anisotropic light interactions and natural textural variations. Additionally, an AR-driven interactive framework is developed to seamlessly integrate high-fidelity 3D models into real-world environments, thereby enhancing user immersion and engagement. Comparative experiments demonstrate that this approach surpasses conventional methods, such as traditional texture mapping and particle-based hair systems, in both static visual fidelity and animation rendering efficiency. User evaluations from diverse academic groups further validate the method’s practical significance for advancing scientific research and environmental education, highlighting its potential as a transformative solution for non-invasive wildlife representation and sustainable ecotourism.

2. Materials and Methods

The Texture Procedural Overlay Technique (TPOT)-based fur simulation algorithm and associated case study were implemented between March and June 2024. A physically rendered 3D model of the white-lipped deer was developed based on detailed hair property data obtained from a specimen housed at the Qinghai-Tibet Nature and Wildlife Museum. Two control models were created for comparative analysis using conventional texture mapping and particle hair simulation techniques. The three models were evaluated regarding fur realism and rendering efficiency, with assessments conducted at both global and local levels. Subsequently, the models were deployed using AR technology on Android platforms to facilitate a non-invasive, interactive wildlife observation experience. User testing and evaluation involved university students from a range of academic disciplines. The objective of this methodological framework was to assess the effectiveness of the proposed approach in enhancing wildlife representation while contributing to the broader goals of ecological conservation and sustainable cultural tourism on the Tibetan Plateau.

2.1. Experimental Environment Configuration

The experimental setup utilized Blender open-source software (version 4.0) for 3D modeling and Unity3D (version 2021.3.34f1c1) for integration with the augmented reality platform. The hardware configuration included a 13th generation Intel Core i5-13600KF CPU operating at 3.5 GHz, paired with an NVIDIA GeForce RTX 3060 GPU featuring 8 GB of dedicated video memory. Mobile deployment was tested on a Samsung Galaxy S8 (64 GB model), ensuring compatibility and performance validation on mainstream Android devices.

2.2. TPOT Algorithm Methodology

Physically Based Rendering (PBR) is a rendering technique that simulates the interaction between light and materials based on real-world physical laws, thereby achieving high visual realism [15]. PBR models a surface as an aggregation of countless microscopic, randomly oriented specular facets, known as microfacets, whose varying orientations affect the surface normals and ultimately influence how light is reflected and refracted, as illustrated in Figure 1.

2.3. Core Principles of the TPOT-Based Fur Simulation Algorithm

The TPOT algorithm integrates real-time procedural texture generation with physically informed rendering principles to construct dynamic multi-layered hair textures. Unlike traditional approaches that rely on static, high-resolution texture maps, TPOT generates and overlays texture layers in real time. This method significantly reduces memory consumption and computational overhead while enhancing visual fidelity.

2.4. Algorithm Workflow

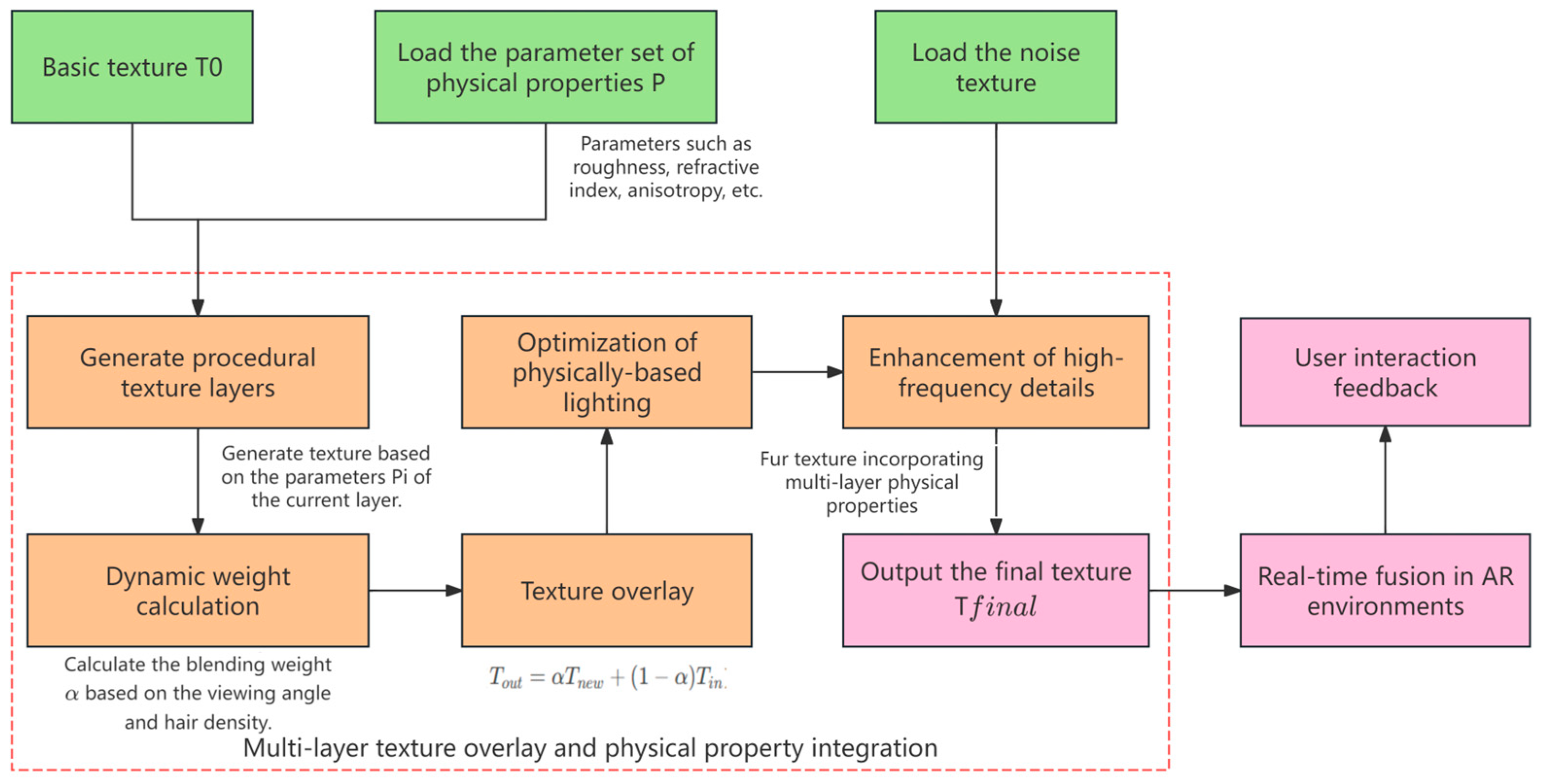

The TPOT-based fur simulation algorithm follows a structured multi-step workflow, as depicted in Figure 2.

2.4.1. Physical Attribute Mapping

An initial texture layer with physical attributes is generated based on a defined set of physical property parameters P = {p1, p2, …, pn}.

2.4.2. Procedural Texture Overlay

Procedural texture overlay is performed using the weighted blend in Equation (1): the texture layer carrying the physical attributes is procedurally overlaid on the base texture T0.

In Equation (1), Tin is the base texture T0, onto which a new texture layer Tnew is overlaid by weighted blending. The parameter α controls the blending weight between Tnew and T0, ensuring that the base texture T0 contributes to the final composite texture Tout. Thus, T0 enters Equation (1) explicitly as Tin and forms the foundation of the multi-layered texture overlay process.
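The weighted blend described above can be illustrated with a minimal Python sketch. It assumes the common linear form Tout = α · Tnew + (1 − α) · Tin, which matches the verbal description but is not confirmed by the source; the function names `blend` and `overlay_layers` and the list-of-lists grayscale texture representation are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence, Tuple

Texture = List[List[float]]  # grayscale texture, values assumed in [0, 1]


def blend(t_in: Texture, t_new: Texture, alpha: float) -> Texture:
    """Assumed form of Equation (1): T_out = alpha * T_new + (1 - alpha) * T_in."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [
        [alpha * new + (1.0 - alpha) * base for base, new in zip(row_in, row_new)]
        for row_in, row_new in zip(t_in, t_new)
    ]


def overlay_layers(t0: Texture, layers: Sequence[Tuple[Texture, float]]) -> Texture:
    """Overlay (layer, alpha) pairs one after another, starting from base texture T0."""
    t_out = t0
    for layer, alpha in layers:
        # The output of each blend becomes T_in for the next layer.
        t_out = blend(t_out, layer, alpha)
    return t_out
```

With α strictly below 1 for every layer, the base texture always retains a non-zero contribution to the composite, which is the property the text attributes to Equation (1).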

2.4.3. Lighting Optimization

A physics-based lighting model is applied to simulate anisotropic reflection behavior characteristic of hair fibers. The lighting model includes both diffuse and specular reflection components, represented as follows:

Here, Kd · cosθ is the diffuse reflection term, the term weighted by Ks is the specular (highlight) reflection term, and Kd and Ks are the diffuse and specular reflection coefficients, each determined by the physical properties of the fur.
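As a rough illustration of a two-term lighting model of this kind, the sketch below evaluates a diffuse term Kd · cosθ plus a specular term. The specular form used here, Ks · (cos φ)^n with a shininess exponent n, is a Phong-style assumption standing in for the specular term, which is not recoverable from the text; the names `fur_intensity`, `phi`, and `shininess` are hypothetical. A hair-specific anisotropic model such as Kajiya-Kay would instead measure angles relative to the fiber tangent.

```python
import math


def fur_intensity(kd: float, ks: float, theta: float, phi: float,
                  shininess: float) -> float:
    """Diffuse plus specular intensity for one light (illustrative only).

    theta: angle between surface normal and light direction, in radians.
    phi:   angle between reflected light and view direction, in radians
           (assumption: Phong-style specular lobe).
    """
    # Clamp cosines at zero so light arriving from behind contributes nothing.
    diffuse = kd * max(0.0, math.cos(theta))
    specular = ks * max(0.0, math.cos(phi)) ** shininess
    return diffuse + specular
```

For example, `fur_intensity(0.8, 0.5, 0.0, 0.0, 16)` evaluates both terms at their maximum, while increasing `phi` narrows the highlight at a rate set by `shininess`.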

2.4.4. Microscopic Detail Enhancement

To enrich visual detail and realism, stochastic noise functions are employed to introduce fine-scale texture variations, simulating natural fur irregularities.
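One simple way to realize such stochastic variation is lattice value noise, sketched below in plain Python. The source does not name the noise function it uses, so value noise, the parameter names (`cell`, `seed`, `strength`), and the helper `perturb` are assumptions made for illustration.

```python
import random
from typing import List

Texture = List[List[float]]


def value_noise(width: int, height: int, cell: int, seed: int = 0) -> Texture:
    """Smoothly interpolated random lattice values, one per pixel, in [0, 1]."""
    rng = random.Random(seed)  # seeded so the pattern is reproducible
    grid_w, grid_h = width // cell + 2, height // cell + 2
    grid = [[rng.random() for _ in range(grid_w)] for _ in range(grid_h)]

    def smooth(t: float) -> float:
        return t * t * (3.0 - 2.0 * t)  # smoothstep easing between lattice points

    out = []
    for y in range(height):
        gy, fy = divmod(y, cell)
        ty = smooth(fy / cell)
        row = []
        for x in range(width):
            gx, fx = divmod(x, cell)
            tx = smooth(fx / cell)
            top = grid[gy][gx] + (grid[gy][gx + 1] - grid[gy][gx]) * tx
            bottom = grid[gy + 1][gx] + (grid[gy + 1][gx + 1] - grid[gy + 1][gx]) * tx
            row.append(top + (bottom - top) * ty)
        out.append(row)
    return out


def perturb(texture: Texture, noise: Texture, strength: float) -> Texture:
    """Add zero-centered noise to a texture and clamp the result to [0, 1]."""
    return [
        [min(1.0, max(0.0, t + strength * (n - 0.5))) for t, n in zip(t_row, n_row)]
        for t_row, n_row in zip(texture, noise)
    ]
```

A small `cell` size yields fine, strand-scale irregularities, whereas a larger one produces broader patches of variation; summing several scales is a common refinement.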

3. Results and Discussion

Prior to conducting comparative experiments to validate the performance of the proposed TPOT-based fur simulation algorithm, we performed a comprehensive review of mainstream techniques in 3D animal fur rendering. Specifically, we analyzed three representative approaches: (1) traditional texture mapping-based 3D modeling [16], (2) particle-based dynamic fur simulation [17], and (3) curve function-based hair morphology modeling [18]. Each method was evaluated under identical conditions using Blender and Unity AR platforms [19], focusing on rendering time, computational efficiency, and resource consumption during the generation of complex fur models. The experimental results, summarized in Table 1, provided a quantitative foundation for assessing algorithmic performance and guided the selection of two benchmark methods for direct comparison with the TPOT algorithm.

Based on the experimental data, the traditional texture mapping algorithm exhibited the highest rendering efficiency, offering significantly faster processing speeds compared to the other two approaches. Its low computational demands make it particularly suitable for AR applications on mobile platforms. However, this method demonstrates the lowest performance in replicating realistic fur textures. In contrast, although particle-based and curve function-based hair modeling methods show comparable rendering times, the particle system provides superior visual detail and is relatively easier to implement, positioning it as a strong alternative. Consequently, traditional texture mapping and particle-based hair simulation were selected as control methods for constructing the white-lipped deer 3D model and its AR interactive display.

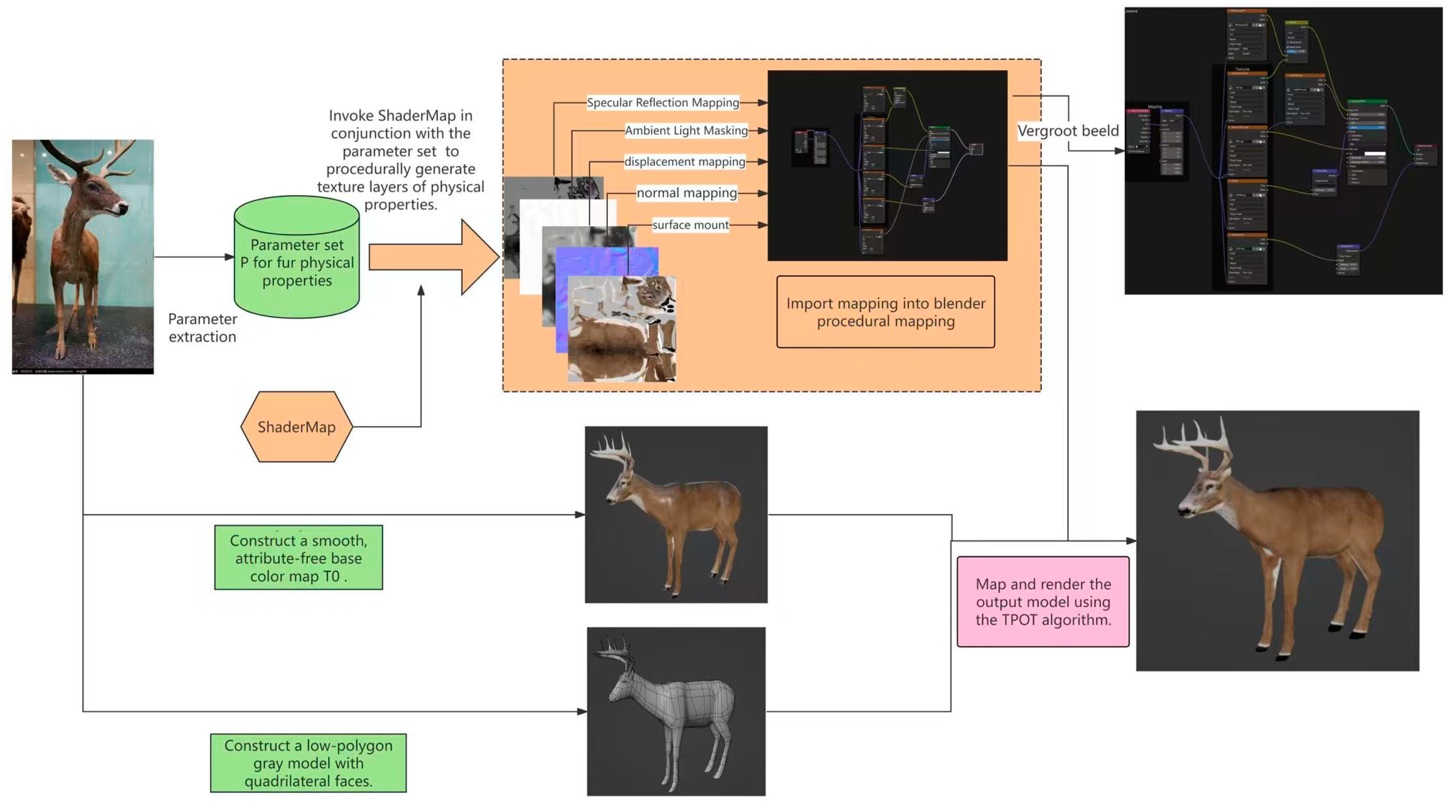

To ensure biological accuracy, a detailed physical examination of a white-lipped deer specimen was conducted at the Qinghai-Tibet Nature and Wildlife Museum. Observations revealed that the deer’s fur comprises long, coarse hairs with hollow medullae, offering thermal insulation and resistance to harsh alpine conditions. Male specimens also exhibit a prominent “creased collar” of stiff, reversed hairs along the shoulders and upper back. Based on these morphological features, a comprehensive set of physical parameters (P) was extracted. Leveraging these parameters, the TPOT algorithm, in conjunction with ShaderMap [20], was employed to generate multiple physically based texture layers, including metallic, ambient occlusion, and hair height maps. These layers simulate microfaceted surfaces that realistically reproduce the fur’s interaction with light. Each texture layer Pi was then blended with the base texture T0 by weighted blending to achieve a seamless composite texture. Final refinements involved the addition of microscopic hair details to further enhance visual fidelity. The overall workflow is depicted in Figure 3.

3.1. Three-Dimensional Reconstruction of Control Models for the White-Lipped Deer

In computer graphics, animal fur simulation is commonly achieved using either the particle hair algorithm or traditional texture mapping. The particle hair algorithm offers high realism by closely replicating the natural structure and dynamics of animal hair. However, its significant resource consumption restricts its applicability on mobile platforms. Conversely, traditional texture mapping provides superior rendering performance and lower computational demand but lacks volumetric depth and accurate lighting feedback, resulting in a less realistic visual experience.

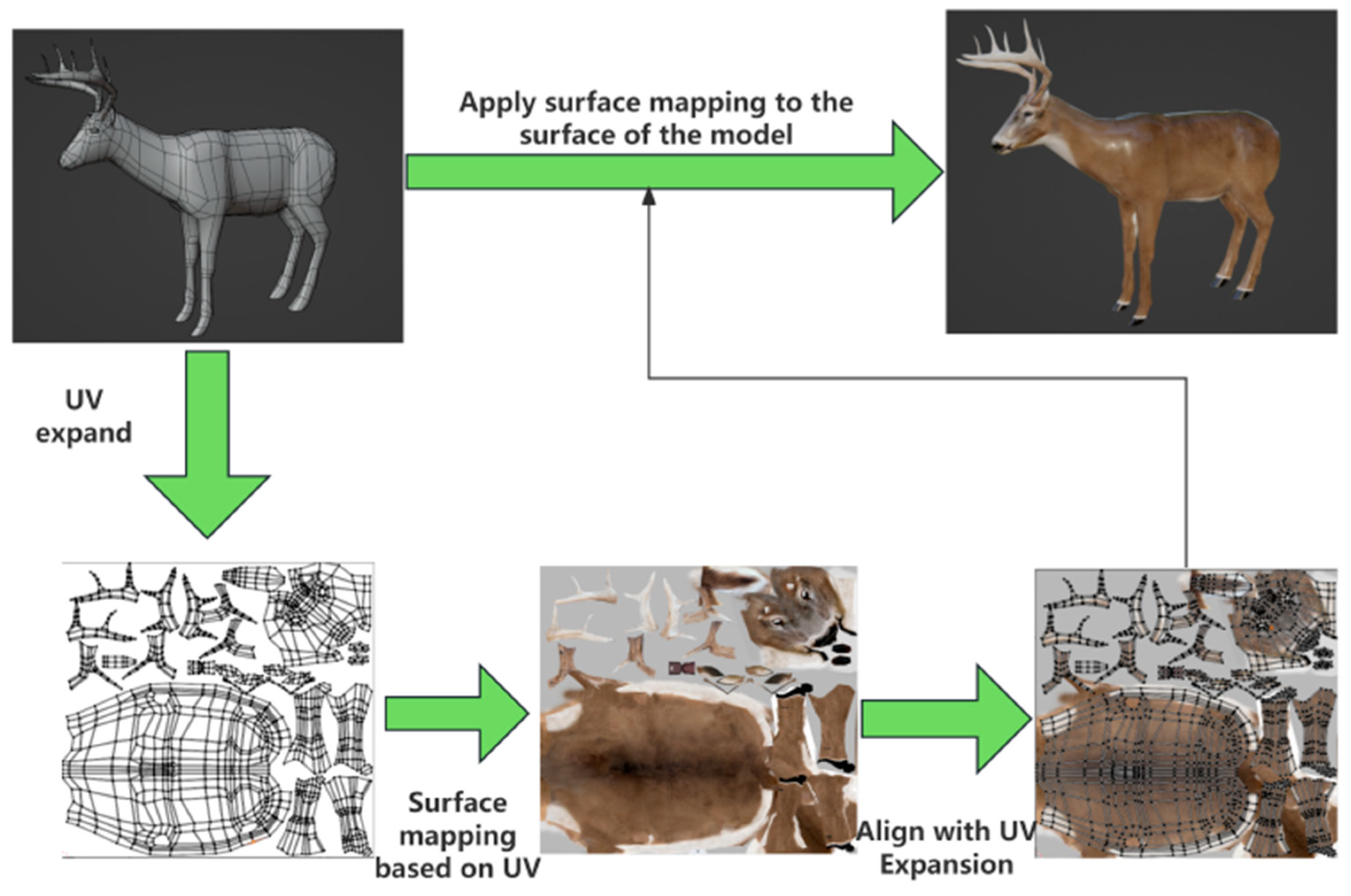

Only a low-polygon base model was required for the control group reconstruction of the white-lipped deer model using the traditional texture mapping method. The final visual effect was achieved by manually painting textures and applying them to the model surface. This streamlined approach emphasizes efficiency over realism. The production workflow for this method is illustrated in Figure 4.

The particle hair algorithm extends traditional 3D texture mapping by incorporating particle systems to simulate complex fur structures. This approach involves distributing particles across the surface of the model to form clusters of hair strands. The physical properties, orientation, and distribution of the hair are then finely controlled using advanced simulation algorithms. Detailed morphological adjustments and weight optimization techniques are applied to enhance the realism and ensure that the fur closely replicates the natural characteristics of wild animals. The modeling process using the particle hair algorithm is illustrated in Figure 5.

3.2. Comparison of Animation Rendering Results for 3D Models Generated Using Three Algorithms

In this subsection, we present the development of a mobile AR interactive display system for the white-lipped deer, implemented using the widely adopted Unity3D platform [21]. A customized skeletal rig, adapted from a standard tetrapod framework, was applied to each of the three white-lipped deer models within Blender. Interactive animations (walking, running, foraging, and kneeling) were created using inverse kinematics (IK) techniques to ensure realistic motion and adaptability across different models [22,23,24,25,26]. These animations were seamlessly integrated into the Unity3D AR environment, establishing a robust foundation for performance benchmarking.

To comprehensively assess the efficiency of the 3D model constructed using the TPOT-based physically rendered fur simulation algorithm, comparative performance tests were conducted alongside models developed using the traditional texture mapping and particle hair simulation approaches. The animation sequences were rendered in both Blender and Unity3D AR environments on Android mobile devices to evaluate rendering quality and system resource demands. The experimental results are summarized in Table 2, providing quantitative evidence to support the effectiveness and applicability of each algorithm and offering a theoretical basis for future research and optimization.

The experimental data in Table 2 show that the TPOT-based fur simulation algorithm achieves rendering efficiency and system resource utilization comparable to those of the traditional texture mapping method on both platforms. Although the particle hair algorithm offers greater visual realism, the TPOT-based model is 22.3% more efficient in GPU rendering and 71.5% more efficient in CPU computation than the particle hair model, so our approach achieves a well-balanced trade-off between visual realism and computational efficiency.

To further emphasize the robust performance of the proposed algorithm on mobile platforms, we conducted an additional test by increasing the number of white-lipped deer rendered within the same Unity Android AR environment. Using a ten-second head-down grazing animation as a benchmark, we computed the average frame rates corresponding to varying numbers of models. The results are illustrated in Figure 6.
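For readers reproducing this kind of benchmark, the sketch below shows one way an average frame rate could be derived from per-frame durations logged over a fixed animation clip. The paper does not describe its measurement procedure, so the function name `average_fps` and the logging format (a list of frame times in seconds) are assumptions, and the sample values in the usage lines are illustrative rather than measured.

```python
from typing import Sequence


def average_fps(frame_times_s: Sequence[float]) -> float:
    """Average frame rate = number of frames / total elapsed time in seconds."""
    total = sum(frame_times_s)
    if not frame_times_s or total <= 0.0:
        raise ValueError("need at least one frame with positive duration")
    return len(frame_times_s) / total


# Illustrative input only: three frames taking 0.25 s, 0.25 s, and 0.5 s.
sample = [0.25, 0.25, 0.5]
fps = average_fps(sample)  # 3 frames over 1.0 s
```

Dividing the frame count by the total time, rather than averaging the instantaneous rates 1/Δt, keeps a few very short frames from inflating the reported figure.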

The average frame rate results on the Unity Android AR platform indicate that the white-lipped deer model generated using the proposed TPOT-based algorithm achieves runtime performance comparable to that of the traditional texture mapping method while significantly outperforming the particle hair approach. This demonstrates the method’s distinctive advantage in maintaining high visual fidelity with minimal performance overhead, making it particularly well-suited for mobile deployment and the broader promotion of realistic digital wildlife representations of the Sanjiangyuan region to the public.

3.3. Comparison of Local Details and Overall Visual Quality Across Models

To thoroughly assess the effectiveness of the TPOT-based fur simulation algorithm in replicating the hair characteristics of the white-lipped deer, we conducted a detailed comparative analysis of four representative regions: the face, tail, abdomen, and torso. Each region was magnified and examined in models produced using the proposed method, traditional texture mapping, and the particle hair simulation approach. The comparative results are presented in Table 3.

A total of 40 participants were invited to evaluate the simulation quality of the different modeling approaches based on the comparative results presented in Table 3. This group included 30 undergraduate students from various academic disciplines, five postgraduate students specializing in Plateau Ecological Engineering, and five animal protection professionals from the Qinghai-Tibet Plateau Natural Museum. Among them, 28 undergraduates indicated that the traditional texture mapping method delivered only average performance in simulation realism, while 20 participants favored the TPOT-based approach for its improved overall simulation accuracy. Additionally, 26 participants identified the particle hair method as providing the most realistic facial rendering effect. Specifically, four postgraduate students and three animal protection professionals expressed the view that the TPOT-based model outperformed the traditional method in terms of visual fidelity, although it was slightly less realistic than the particle hair approach. A detailed summary of the evaluation results is presented in Figure 7.

3.4. Evaluation of the Virtual White-Lipped Deer Interactive Display

The physically rendered white-lipped deer model and its associated interactive animations were packaged using the Unity3D AR platform into an Android-compatible APK installation file. This application was subsequently deployed and tested on 15 Android smartphones of the same brand and model. Figure 8 illustrates the operational performance and visual effects observed during actual runtime.

An evaluation study was conducted involving 30 college students from diverse academic backgrounds. Participants were randomly assigned to two equal groups: Group A and Group B, each comprising 15 individuals. Group A interacted with an AR application that featured a virtual white-lipped deer to explore and learn about wildlife in the Sanjiangyuan region. Group B served as the control group and viewed a 2D video demonstration created using a traditional texture-mapping method. Both groups were presented with identical educational content, including an introduction to protected wildlife species on the Qinghai-Tibet Plateau, a visual display of the white-lipped deer, magnified anatomical details, and model-based interaction. To assess learning outcomes, all participants completed pre- and post-intervention evaluations focusing on four dimensions: perceived realism of the white-lipped deer model, animation fluency, comprehension of ecological conservation in the Sanjiangyuan region, and awareness of digital wildlife protection initiatives. It was hypothesized that Group A, exposed to an immersive and interactive 3D experience, would demonstrate higher engagement, interest, and motivation toward wildlife conservation compared to Group B, which interacted with static 2D content. The comparative results of the pre- and post-assessments are presented in Figure 9 and Figure 10.

The comparative analysis of the assessment results indicates that Group A exhibited significantly enhanced responses following their interaction with the Sanjiangyuan wildlife AR application developed in this study, relative to Group B, which engaged with the 2D video format. Notably, 80% of participants in Group A reported a high level of interest in the AR product and demonstrated a more comprehensive and nuanced understanding of ecological conservation in the Sanjiangyuan region. Furthermore, a survey assessing acceptance of non-intrusive wildlife viewing methods revealed that the majority of Group A participants preferred the AR-based interactive approach. In contrast, fewer than 30% of Group B participants expressed a similar preference.

These findings suggest that the AR interactive platform not only enriches user engagement but also serves as an effective medium for ecological education regarding the Sanjiangyuan ecosystem. Additionally, the technology offers a promising and well-received approach to non-invasive wildlife observation, which is essential for safeguarding local biodiversity and supporting sustainable tourism initiatives.

4. Conclusions

In summary, the TPOT-based fur simulation algorithm integrated with AR technology presented in this study demonstrates significant advancements in the digital conservation of wildlife. This algorithm substantially enhances the realism of the white-lipped deer model by accurately replicating texture, coloration, and light interactions, thereby contributing to increased public awareness and appreciation of the species. Its optimized performance and low resource consumption ensure compatibility with a wide range of mobile devices, facilitating seamless integration with AR platforms to deliver an immersive, non-invasive interactive experience. This approach enables users to engage with the wildlife and ecological environment of Sanjiangyuan at any time and location via smartphones or tablets, effectively overcoming geographic constraints and expanding the accessibility of environmental education. Future research may explore the incorporation of artificial intelligence to imbue virtual wildlife with autonomous behaviors, reducing the need for scripted interactions and further enhancing user immersion. Such developments would help minimize disturbances to natural habitats, striking a better balance between ecological preservation and the promotion of cultural and ecotourism activities.