Generative Adversarial Networks in Histological Image Segmentation: A Systematic Literature Review

Abstract

1. Introduction

- A thorough synthesis of the current state of the art in the use of generative models, particularly GANs, for histological image segmentation;

- An analysis of GAN use from several angles, including yearly publication trends, the specific tasks addressed, the organs involved, the datasets used, and the evaluation criteria employed in the reviewed studies;

- Practical guidance for future research, intended to help shape the development of generative models for histological imaging.

2. Related Review Works

3. Fundamentals and Basic Definitions

3.1. Histological Image Segmentation

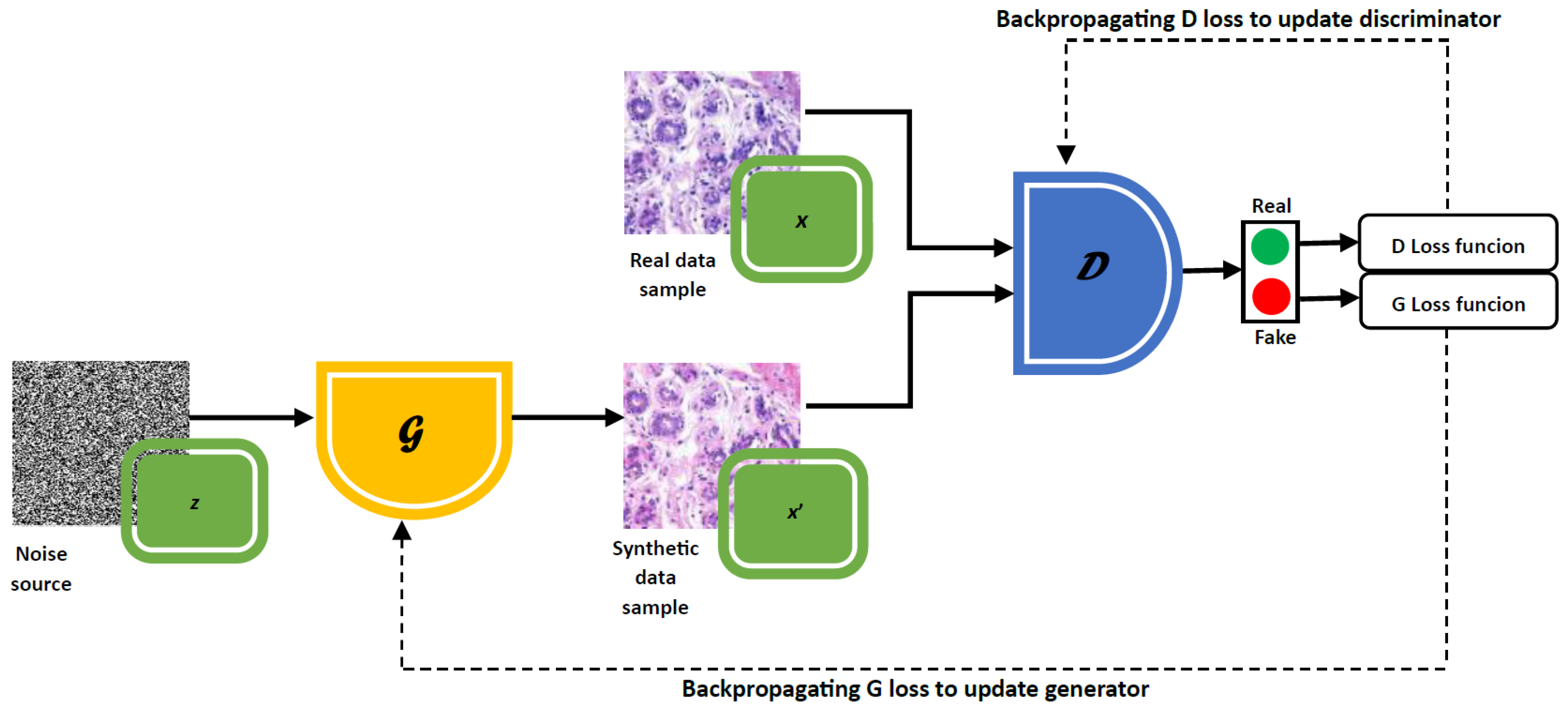

3.2. Introduction to Generative Adversarial Networks
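For reference, the original formulation by Goodfellow et al. [35] trains a generator $G$ and a discriminator $D$ as a two-player minimax game, where $p_{\text{data}}$ is the distribution of real images and $p_z$ is a noise prior from which $G$ draws its input:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

For a fixed generator with sample distribution $p_g$, the optimal discriminator is $D^*(x) = p_{\text{data}}(x) / \left(p_{\text{data}}(x) + p_g(x)\right)$, so the game pushes $p_g$ toward $p_{\text{data}}$.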

3.3. Types of GANs

3.3.1. cGAN
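Conditional GANs [41] extend the standard GAN minimax objective by conditioning both networks on auxiliary information $y$, such as a class label or, in image-to-image settings, an input image or label map:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x \mid y)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z \mid y) \mid y)\right)\right]
$$

Because the discriminator also sees $y$, the generator is rewarded only for outputs that are both realistic and consistent with the condition.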

3.3.2. CycleGAN
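CycleGAN [42] learns two mappings, $G: X \to Y$ and $F: Y \to X$, from unpaired images and adds a cycle-consistency term so that translating an image to the other domain and back recovers the original. The NumPy sketch below computes only that term, assuming images are arrays on a common intensity scale and averaging the L1 error over pixels as common implementations do; $\lambda = 10$ follows the original paper, and the intensity-shift "generators" in the usage example are hypothetical stand-ins for trained networks.

```python
import numpy as np


def cycle_consistency_loss(x, y, G, F, lam=10.0):
    """Cycle-consistency term of CycleGAN (Zhu et al., 2017).

    x   -- batch of images from domain X (e.g. tiles stained in lab A)
    y   -- batch of images from domain Y (e.g. tiles stained in lab B)
    G   -- callable mapping X -> Y
    F   -- callable mapping Y -> X
    lam -- weight of the cycle term relative to the adversarial losses
    """
    forward_cycle = np.mean(np.abs(F(G(x)) - x))   # x -> G(x) -> F(G(x)) should return x
    backward_cycle = np.mean(np.abs(G(F(y)) - y))  # y -> F(y) -> G(F(y)) should return y
    return lam * (forward_cycle + backward_cycle)


# Hypothetical toy "generators" that shift intensities.
rng = np.random.default_rng(0)
x = rng.random((2, 8, 8, 3))
y = rng.random((2, 8, 8, 3))
G = lambda a: a + 0.1          # toy X -> Y mapping
F_inverse = lambda a: a - 0.1  # exact inverse of G, so the cycle closes
F_identity = lambda a: a       # not an inverse: each cycle is off by 0.1

print(cycle_consistency_loss(x, y, G, F_inverse))   # close to 0.0
print(cycle_consistency_loss(x, y, G, F_identity))  # close to 2.0
```

The term is what allows training without pixel-aligned image pairs, which is why CycleGAN is a frequent choice for stain transfer between laboratories.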

3.3.3. StyleGAN
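StyleGAN [43] maps a latent code to an intermediate style vector and injects it at each generator layer through adaptive instance normalization (AdaIN), which normalizes every feature channel and then rescales and shifts it with style-dependent parameters, $\mathrm{AdaIN}(x_i, y) = y_{s,i}\,(x_i - \mu(x_i))/\sigma(x_i) + y_{b,i}$. The NumPy sketch below implements only this operation for one feature map of shape (channels, height, width); in StyleGAN the scale and bias come from a learned affine transform of the style vector, replaced here by hypothetical fixed values. StyleGAN2 [44] later replaced AdaIN with weight modulation and demodulation.

```python
import numpy as np


def adain(features, style_scale, style_bias, eps=1e-8):
    """Adaptive instance normalization as used in the StyleGAN generator.

    features    -- array of shape (C, H, W), one feature map per channel
    style_scale -- array of shape (C,), per-channel scale y_s
    style_bias  -- array of shape (C,), per-channel bias y_b
    """
    mean = features.mean(axis=(1, 2), keepdims=True)      # per-channel mean
    std = features.std(axis=(1, 2), keepdims=True) + eps  # per-channel std
    normalized = (features - mean) / std
    # Broadcast the per-channel style parameters over the spatial axes.
    return style_scale[:, None, None] * normalized + style_bias[:, None, None]


# After AdaIN each channel carries the statistics dictated by the style.
rng = np.random.default_rng(1)
feats = rng.normal(loc=5.0, scale=3.0, size=(4, 16, 16))
scale = np.array([1.0, 2.0, 0.5, 3.0])  # hypothetical style values
bias = np.array([0.0, -1.0, 2.0, 0.5])
out = adain(feats, scale, bias)
print(out.mean(axis=(1, 2)))  # approximately equal to bias
print(out.std(axis=(1, 2)))   # approximately equal to scale
```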

3.3.4. PGGAN
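PGGAN [45] starts training at low resolution and progressively adds layers for higher resolutions. To avoid disturbing the layers that are already trained, each new block is faded in: the output blends the upsampled lower-resolution image with the new block's output, using a weight $\alpha$ that grows linearly from 0 to 1. The NumPy sketch below shows only this blending step, assuming single-channel images and nearest-neighbour upsampling by a factor of 2; the arrays stand in for the outputs of hypothetical generator blocks.

```python
import numpy as np


def upsample_nearest_2x(image):
    """Double the height and width of an (H, W) image by pixel repetition."""
    return np.repeat(np.repeat(image, 2, axis=0), 2, axis=1)


def fade_in(low_res_output, high_res_output, alpha):
    """Blend outputs while a new high-resolution block is being faded in.

    low_res_output  -- (H, W) image from the previous, already trained stage
    high_res_output -- (2H, 2W) image from the newly added block
    alpha           -- weight in [0, 1], increased linearly during training
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return (1.0 - alpha) * upsample_nearest_2x(low_res_output) + alpha * high_res_output


# alpha = 0 reproduces the old stage; alpha = 1 uses only the new block.
low = np.array([[0.0, 1.0],
                [1.0, 0.0]])
high = np.full((4, 4), 0.5)
print(fade_in(low, high, 0.0))
print(fade_in(low, high, 0.5))
print(fade_in(low, high, 1.0))
```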

3.3.5. WGAN
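WGAN [49] replaces the discriminator with a critic $f$ that outputs unbounded scores and trains it to estimate the Wasserstein-1 distance, $\max_{\|f\|_L \le 1} \mathbb{E}_{x \sim p_{\text{data}}}[f(x)] - \mathbb{E}_{\tilde{x} \sim p_g}[f(\tilde{x})]$. The original paper enforces the Lipschitz constraint by clipping the critic weights (to $[-0.01, 0.01]$ by default), whereas WGAN-GP, used by Hu et al. [59], adds a gradient penalty $\lambda(\|\nabla_{\hat{x}} f(\hat{x})\|_2 - 1)^2$ instead, with $\lambda = 10$ as the commonly used value. The NumPy sketch below writes out these losses for a hypothetical linear critic $f(x) = x^\top w$, chosen only because its input gradient is simply $w$, so the penalty can be evaluated without automatic differentiation; real critics are deep networks trained with autograd.

```python
import numpy as np


def critic_scores(w, x):
    """Hypothetical linear critic f(x) = x @ w, one score per sample (row)."""
    return x @ w


def critic_loss(w, real, fake):
    """Critic maximizes E[f(real)] - E[f(fake)], so it minimizes the negation."""
    return np.mean(critic_scores(w, fake)) - np.mean(critic_scores(w, real))


def generator_loss(w, fake):
    """Generator minimizes -E[f(fake)], i.e. it raises the critic's score on its samples."""
    return -np.mean(critic_scores(w, fake))


def clip_weights(w, c=0.01):
    """Original WGAN: clip critic weights to [-c, c] after each update."""
    return np.clip(w, -c, c)


def gradient_penalty_linear(w, lam=10.0):
    """WGAN-GP penalty lam * (||grad_x f||_2 - 1)^2 for the linear critic.

    WGAN-GP evaluates the gradient at random interpolates between real and
    generated samples; for f(x) = x @ w that gradient is w everywhere.
    """
    return lam * (np.linalg.norm(w) - 1.0) ** 2


# Toy 2-D "images": real samples at 1, generated samples at 0.
real = np.ones((4, 2))
fake = np.zeros((4, 2))
w = np.array([0.5, 0.5])
print(critic_loss(w, real, fake))                # -1.0
print(critic_loss(clip_weights(w), real, fake))  # about -0.02
print(gradient_penalty_linear(w))                # about 0.858
```

Clipping shrinks the critic's range and hence its usable signal, which is the practical motivation given for replacing it with the gradient penalty.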

3.3.6. Pix2Pix
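Pix2Pix [52] is a conditional GAN for paired image-to-image translation: the discriminator (a PatchGAN that scores local patches) sees the input image together with either the real or the generated output, and the full objective adds an L1 reconstruction term to the adversarial term, $G^* = \arg\min_G \max_D \mathcal{L}_{cGAN}(G, D) + \lambda \mathcal{L}_{L1}(G)$, with $\lambda = 100$ in the original experiments. The NumPy sketch below evaluates only the generator side of this loss, assuming the discriminator's patch probabilities on the (input, generated output) pairs are already available and using the non-saturating $-\log D$ form of the adversarial term; the arrays in the usage example are hypothetical.

```python
import numpy as np


def pix2pix_generator_loss(d_fake_patches, generated, target, lam=100.0, eps=1e-12):
    """Generator loss of Pix2Pix: non-saturating adversarial term + lam * L1.

    d_fake_patches -- discriminator probabilities (one per patch) that the pair
                      (input, generated) is real
    generated      -- generator output G(x)
    target         -- paired ground-truth image y
    """
    adversarial = -np.mean(np.log(d_fake_patches + eps))  # push D(x, G(x)) toward 1
    l1 = np.mean(np.abs(generated - target))              # stay close to the paired target
    return adversarial + lam * l1


# A perfect reconstruction that fools a hypothetical discriminator half the time.
patches = np.full((30, 30), 0.5)  # hypothetical patch-wise probabilities
target = np.zeros((8, 8, 3))
print(pix2pix_generator_loss(patches, target.copy(), target))  # about 0.693 (= log 2)
```

The large L1 weight keeps the output anchored to the paired target, while the patch-level adversarial term mainly sharpens local texture.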

4. Review Methodology

4.1. Research Questions

- (RQ1) Which types of histological tissue are used in studies that apply generative adversarial networks (GANs) to image segmentation tasks?

- (RQ2) Which datasets are used in the studies?

- (RQ3) What are the main goals of applying GANs to histological segmentation tasks?

- (RQ4) Which GAN architectures are used in the studies?

- (RQ5) Which metrics are used to evaluate the performance of GANs in the proposed tasks?

- (RQ6) What is the specific goal of the segmentation performed in the reviewed studies?

- (RQ7) Which segmentation architectures are used in the studies?

- (RQ8) Which metrics are used to evaluate segmentation quality?

4.2. Search Strategy

4.3. Selection Criteria

4.4. Data Extraction and Synthesis

5. Results

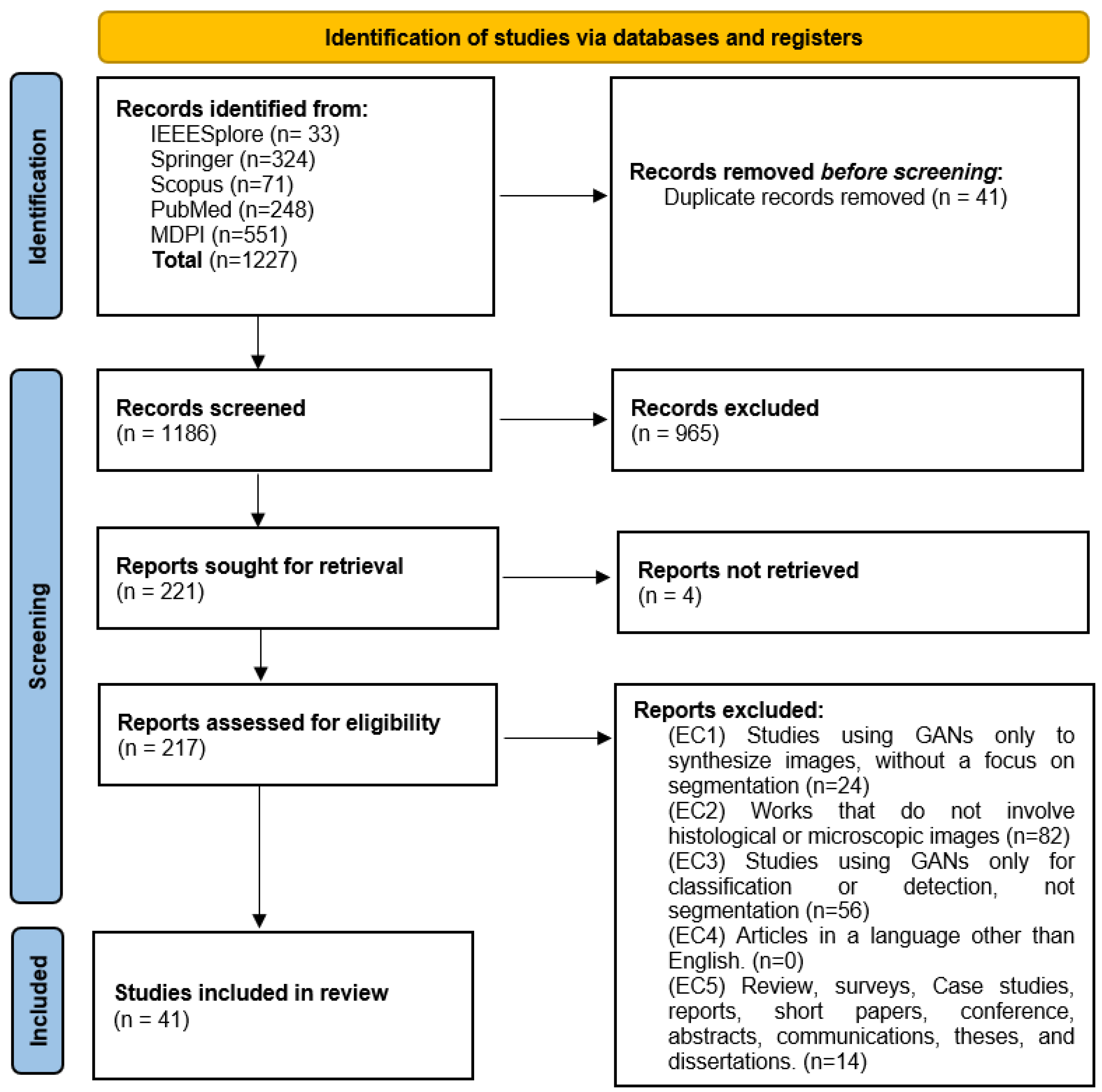

5.1. Search Results

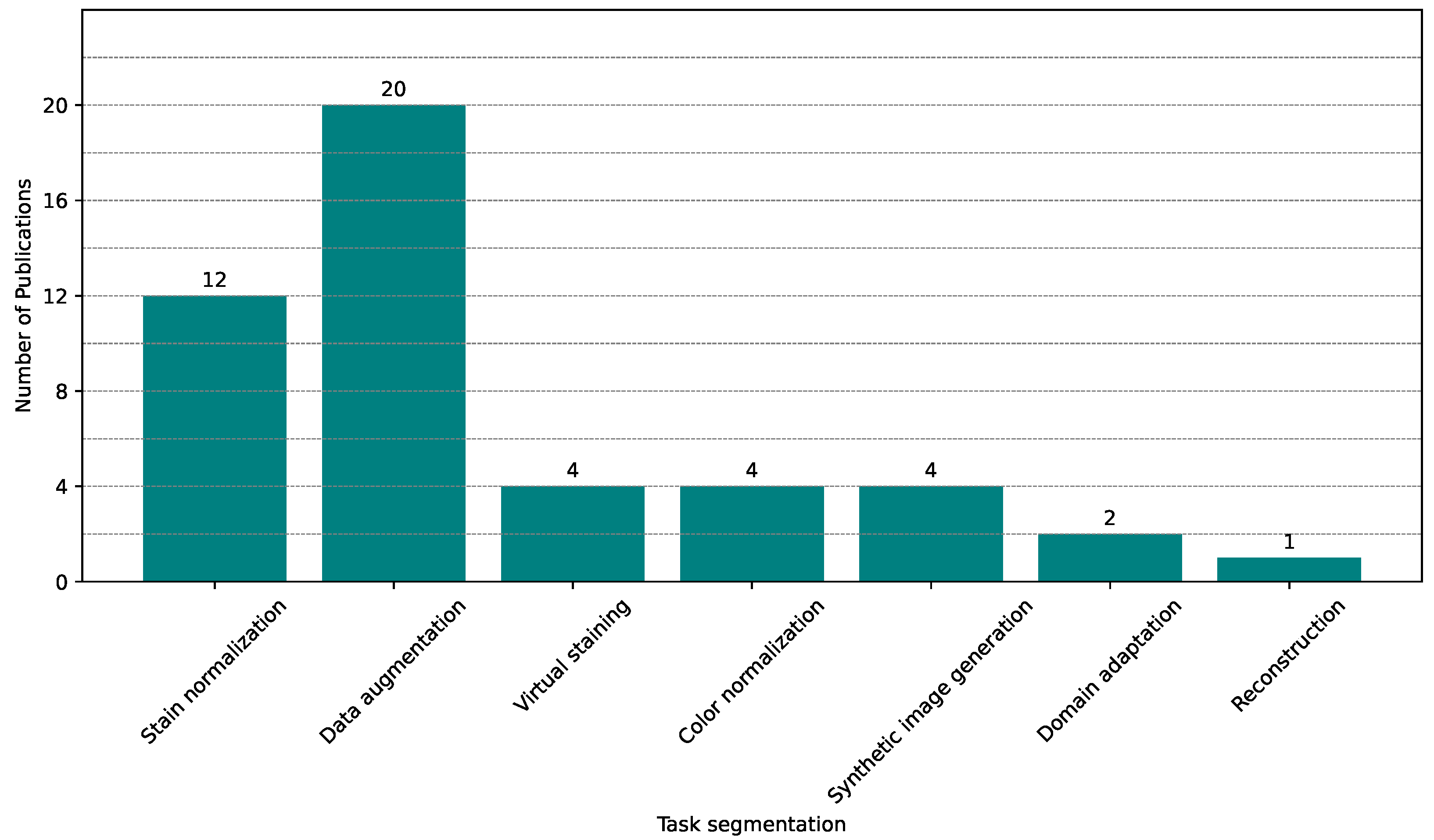

5.2. Applications and Trends of GANs in Histopathological Image Segmentation

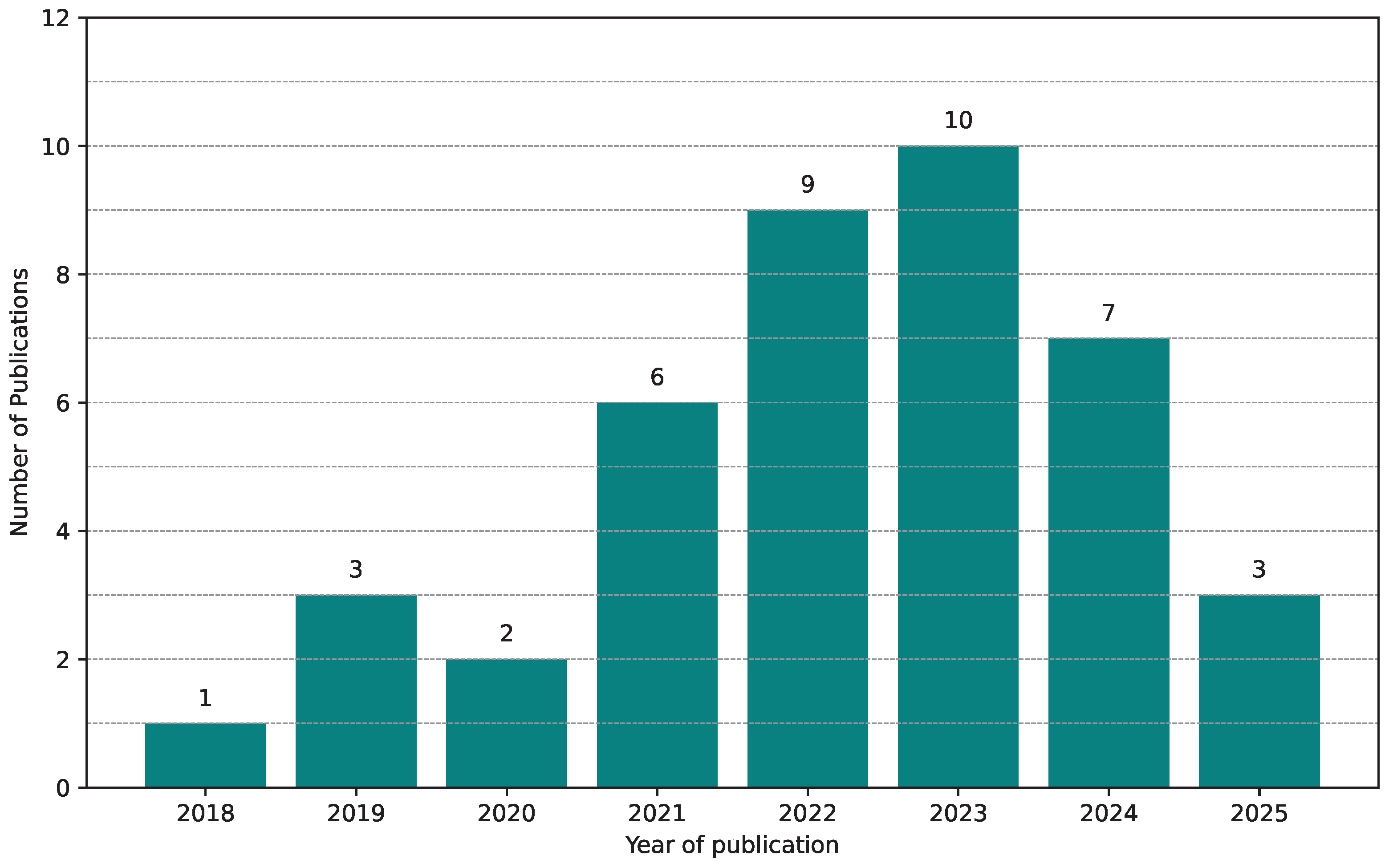

Yearly Distribution

5.3. Datasets

5.4. GANs in Histological Images

5.4.1. Data Augmentation

5.4.2. Stain Normalization

| Work | Task | Model | Hardware Setting | Performance |
|---|---|---|---|---|
| Azam et al. (2024) [84] | Virtual staining | Pix2Pix-GAN, CUT | - | |
| Baykal Kablan (2023) [76] | Data augmentation, stain normalization | CycleGAN, RRAGAN | Google Colaboratory, GPU Tesla K80, 12 GB RAM | |
| BenTaieb and Hamarneh (2017) [75] | Stain normalization | GAN | NVIDIA Titan X GPU | |
| Bouteldja et al. (2022) [69] | Data augmentation, stain normalization | CycleGAN | GPU NVIDIA A100, 10 GB VRAM | - |
| De Bel et al. (2021) [93] | Data augmentation, stain normalization | CycleGAN | - | - |
| Deshpande et al. (2022) [66] | Synthetic image generation | GAN | Single GPU NVIDIA GTX TITAN X, 12 GB RAM | |
| Deshpande et al. (2024) [62] | Data augmentation | SynCLay | - | CoNiC- , PanNuke- |
| Du et al. (2025) [70] | Stain normalization | P2P-GAN, DSTGAN | GPU NVIDIA RTX 4090 | TUPAC: ; MITOS: ; ICIAR: ; MICCAI: . |
| Falahkheirkhah et al. (2023) [95] | Synthetic image generation | Pix2Pix-GAN | GPU NVIDIA 2080 | |
| Fan et al. (2024) [65] | Color normalization | CycleGAN | - | - |
| Gadermayr et al. (2019) [97] | Stain normalization | CycleGAN | GPU NVIDIA GTX 1080 Ti | - |
| Guan et al. (2024) [56] | Stain normalization and virtual staining | GramGAN | NVIDIA RTX 3090 GPU, Intel(TM) i5-10400 CPU, 16 GB RAM | CSS = 0.714, FID = 52.086, KID = 5.815 |
| He et al. (2022) [61] | Synthetic image generation | cGAN | GPU NVIDIA TITAN RTX, 24 GB VRAM | |
| Hossain et al. (2023) [91] | Data augmentation | CycleGAN | - | |
| Hossain et al. (2024) [73] | Data augmentation | CycleGAN | - | |
| Hou et al. (2020) [87] | Data augmentation | GAN | - | - |
| Hu et al. (2018) [59] | Data augmentation and stain normalization | WGAN-GP | NVIDIA Tesla V100 GPU with 32 GB of memory, Intel Xeon CPU, 128 GB of RAM | - |
| Juhong et al. (2022) [81] | High-resolution image reconstruction | SRGAN-ResNeXt | GPU NVIDIA RTX 2060, CPU Intel Core i7-9750H, 16 GB RAM | |
| Kapil et al. (2021) [83] | Data augmentation and domain transformation | CycleGAN, DASGAN | GPU NVIDIA V100, 32 GB RAM and GPU NVIDIA K80 | - |
| Kweon et al. (2022) [86] | Data augmentation | PGGAN | Intel Core i7-10700, NVIDIA RTX 3090 GPU, 24 GB RAM | |
| Lafarge et al. (2019) [77] | Stain normalization and data augmentation | DANN | - | - |
| Lahiani et al. (2020) [85] | Virtual staining | CycleGAN | GPU NVIDIA Tesla V100 | |
| Li et al. (2023) [63] | Data augmentation | G-SAN | AMD Ryzen 7 5800X, 32 GB of RAM, NVIDIA RTX 3090 GPU, 24 GB of memory | - |
| Liu, Wagner and Peng (2022) [57] | Data augmentation | GAN | GPU Tesla V100, CPU Intel Xeon 6230, 128 GB RAM | - |
| Lou et al. (2022) [79] | Data augmentation | CSinGAN | NVIDIA Tesla V100 | - |
| Mahapatra and Maji (2023) [90] | Color normalization | LSGAN, TredMiL | - | |
| Mahmood et al. (2019) [88] | Stain normalization | cGAN | Intel Xeon E5-2699 v4, 256 GB of RAM, NVIDIA Tesla V100 GPU | - |
| Naglah et al. (2022) [92] | Data augmentation, color normalization, domain transformation | cGAN, CycleGAN | - | cGAN: ; CycleGAN: . |
| Purma et al. (2024) [68] | Stain normalization | CycleGAN | GPU NVIDIA Tesla V100, 16 GB of memory, Intel Xeon CPU E5-2680 v4 | FID (ClaS) = 82.44, FID (MoNuSeg) = 15.32, FID (HN cancer) = 6.80, FID (combined) = 7.63 |
| Razavi et al. (2022) [71] | Data augmentation and stain normalization | cGAN, MiNuGAN | GPU NVIDIA 2080 Ti, 11 GB RAM | - |
| Rong et al. (2023) [89] | Color normalization | Restore-GAN | GPU NVIDIA P100, 16 GB RAM | |
| Shahini et al. (2025) [78] | Synthetic image generation | ViT-P2P | - | - |
| Song et al. (2023) [67] | Stain normalization | CycleGAN | NVIDIA RTX 3090 GPU, 24 GB of GPU memory, Intel Xeon, 64 GB of RAM | - |
| Vasiljević et al. (2021) [94] | Data augmentation | CycleGAN, StarGAN | - | |
| Vasiljević et al. (2023) [72] | Data augmentation and virtual staining | CycleGAN, HistoStarGAN | - | |
| Wang et al. (2021) [82] | Stain normalization | ACCP-GAN | GPU NVIDIA RTX, CPU Intel Xeon Silver 4110, 128 GB RAM | |
| Wang et al. (2022) [80] | Data augmentation | GAN | NVIDIA GTX 1070, 16 GB RAM | - |
| Ye et al. (2025) [60] | Stain normalization | SASSL | - | - |
| Yoon et al. (2024) [74] | Virtual staining | CUT, E-CUT, CycleGAN, E-CycleGAN | GPU NVIDIA RTX 3090, AMD EPYC 7302 CPU, 346 GB of RAM | FID: CycleGAN = 67.76, E-CycleGAN = 61.91, CUT = 54.87, E-CUT = 50.91; KID: CycleGAN = 2.290, E-CycleGAN = 1.631, CUT = 0.642, E-CUT = 0.245 |
| Zhang et al. (2021) [64] | Data augmentation | MASG-GAN | GPU NVIDIA RTX 2080 Ti, CPU Intel(TM) i9-10850K, 32 GB RAM | - |
| Zhang et al. (2022) [58] | Color normalization and virtual staining | CSTN | NVIDIA RTX 3090 GPU | |
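Several studies in the table above report the Fréchet Inception Distance (FID), and some also the Kernel Inception Distance (KID), to quantify how close generated images are to real ones. FID fits a Gaussian to the feature embeddings of each image set and computes $\mathrm{FID} = \|\mu_r - \mu_g\|_2^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2(\Sigma_r \Sigma_g)^{1/2}\right)$, where lower is better. The NumPy sketch below evaluates this formula from two feature matrices. In practice the features are 2048-dimensional Inception-v3 pool activations; here they are hypothetical random vectors, so the resulting numbers are not comparable with published FID values. The trace of the matrix square root is taken from the eigenvalues of $\Sigma_r \Sigma_g$, which are real and non-negative for covariance matrices, rather than from a dedicated matrix square-root routine.

```python
import numpy as np


def frechet_distance(features_real, features_gen):
    """FID-style distance between two sets of feature vectors (rows = samples)."""
    mu_r, mu_g = features_real.mean(axis=0), features_gen.mean(axis=0)
    sigma_r = np.cov(features_real, rowvar=False)
    sigma_g = np.cov(features_gen, rowvar=False)

    # Tr((Sigma_r Sigma_g)^{1/2}) = sum of square roots of the eigenvalues of
    # Sigma_r Sigma_g; clip tiny negative values caused by round-off.
    eigvals = np.linalg.eigvals(sigma_r @ sigma_g)
    trace_sqrt = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))

    mean_term = np.sum((mu_r - mu_g) ** 2)
    return mean_term + np.trace(sigma_r) + np.trace(sigma_g) - 2.0 * trace_sqrt


# Hypothetical 16-D embeddings standing in for Inception-v3 features.
rng = np.random.default_rng(42)
real_feats = rng.normal(size=(500, 16))
close_feats = rng.normal(size=(500, 16))             # same distribution
shifted_feats = rng.normal(loc=1.0, size=(500, 16))  # mean shifted by 1 per dimension
print(frechet_distance(real_feats, real_feats))      # about 0
print(frechet_distance(real_feats, close_feats))     # small
print(frechet_distance(real_feats, shifted_feats))   # roughly 16, the squared mean shift
```

Because FID depends on the feature extractor and on the number of samples, values are only comparable when both are held fixed, which is worth keeping in mind when reading the FID columns above side by side.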

5.4.3. Virtual Staining

5.4.4. Synthetic Image Generation

5.4.5. Color Normalization

5.4.6. Domain Transformation and High-Resolution Image Reconstruction

5.5. Segmentation Task in Histological Image Analysis

5.5.1. Nuclei Segmentation

5.5.2. Cell Segmentation

5.5.3. Tissue Segmentation

5.5.4. Multiclass Segmentation: Tissues + Nuclei or Cells

5.6. Overview of Evaluation Metrics
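For segmentation quality (RQ8), two widely used overlap metrics compare a predicted mask $P$ with a ground-truth mask $T$: the Dice similarity coefficient, $\mathrm{Dice} = 2|P \cap T| / (|P| + |T|)$, and the Intersection over Union (Jaccard index), $\mathrm{IoU} = |P \cap T| / |P \cup T|$. The NumPy sketch below computes both for binary masks. Returning 1.0 when both masks are empty is a convention assumed here, and instance-level metrics used for nuclei segmentation need additional object-matching logic that is not shown.

```python
import numpy as np


def dice_and_iou(pred, target):
    """Dice coefficient and IoU (Jaccard index) for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    total = pred.sum() + target.sum()
    if total == 0:  # both masks empty: treated as perfect agreement (assumed convention)
        return 1.0, 1.0
    return 2.0 * intersection / total, intersection / union


pred = np.array([[1, 1, 0, 0],
                 [0, 1, 0, 0]])
target = np.array([[1, 0, 0, 0],
                   [0, 1, 1, 0]])
dice, iou = dice_and_iou(pred, target)
print(dice, iou)  # about 0.667 and 0.5
```

For a single pair of masks the two scores are monotonically related, $\mathrm{Dice} = 2\,\mathrm{IoU}/(1 + \mathrm{IoU})$, so they rank one prediction identically; they can diverge once averaged over many images.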

5.7. Study Summary

6. Discussion

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| GAN | Generative Adversarial Network |
| WSI | Whole Slide Image |
| CUT | Contrastive Unpaired Translation |
| LSGAN | Least Squares Generative Adversarial Network |
| DASGAN | Domain Adaptation and Segmentation GAN |
| cGAN | Conditional Generative Adversarial Network |
| SRGAN | Super-Resolution Generative Adversarial Network |
| DANN | Domain-Adversarial Neural Network |
| WGAN | Wasserstein Generative Adversarial Network |
| ACCP-GAN | Automatic Consecutive Context Perceived Transformer GAN |
| RRAGAN | Regional Realness-Aware GAN |
| SASSL | Stain-Adaptive Self-Supervised Learning |
| E-CUT | Enhanced Contrastive Unpaired Translation |
| PGGAN | Progressive Growing of GANs |
| G-SAN | Generative Stain Augmentation Network |
| CSTN | Cycle-consistent Stain Transfer Network |
| UDA | Unsupervised Domain Adaptation |
| CB-MTDA | Cross-Boosted Multi-Target Domain Adaptation |
| H&E | Hematoxylin and Eosin |

References

1. Mezei, T.; Kolcsár, M.; Joó, A.; Gurzu, S. Image Analysis in Histopathology and Cytopathology: From Early Days to Current Perspectives. J. Imaging 2024, 10, 252.

2. Ali, M.; Benfante, V.; Basirinia, G.; Alongi, P.; Sperandeo, A.; Quattrocchi, A.; Giannone, A.G.; Cabibi, D.; Yezzi, A.; Raimondo, D.D.; et al. Applications of Artificial Intelligence, Deep Learning, and Machine Learning to Support the Analysis of Microscopic Images of Cells and Tissues. J. Imaging 2025, 11, 59.

3. Chan, J. The Wonderful Colors of the Hematoxylin–Eosin Stain in Diagnostic Surgical Pathology. Int. J. Surg. Pathol. 2014, 22, 12–32.

4. Hoque, M.Z.; Keskinarkaus, A.; Nyberg, P.; Seppänen, T. Stain normalization methods for histopathology image analysis: A comprehensive review and experimental comparison. Inf. Fusion 2024, 102, 101997.

5. Chen, Y.; Yang, X.H.; Wei, Z.; Heidari, A.A.; Zheng, N.; Li, Z.; Chen, H.; Hu, H.; Zhou, Q.; Guan, Q. Generative Adversarial Networks in Medical Image Augmentation: A Review. Comput. Biol. Med. 2022, 144, 105382.

6. Hou, L.; Samaras, D.; Kurc, T.M.; Gao, Y.; Davis, J.E.; Saltz, J.H. Robust Histopathology Image Analysis: To Label or to Synthesize? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8533–8542.

7. Vo, T.; Khan, N. Edge-preserving image synthesis for unsupervised domain adaptation in medical image segmentation. In Proceedings of the 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 3753–3757.

8. Qu, H.; Liu, L.; Zhang, D.; Li, B.; Wang, W.; Gorriz, J.M. Weakly supervised deep nuclei segmentation using points annotation in histopathology images. Med. Image Anal. 2020, 63, 101699.

9. Saad, M.; Hossain, M.A.; Tizhoosh, H.R. A Survey on Training Challenges in Generative Adversarial Networks for Biomedical Image Analysis. arXiv 2022, arXiv:2201.07646.

10. Lee, M. Recent Advances in Generative Adversarial Networks for Gene Expression Data: A Comprehensive Review. Mathematics 2023, 11, 3055.

11. Liu, L.; Xia, Y.; Tang, L. An overview of biological data generation using generative adversarial networks. In Proceedings of the 2020 IEEE Conference on Telecommunications, Optics and Computer Science (TOCS), Shenyang, China, 11–13 December 2020; pp. 141–144.

12. Iqbal, A.; Sharif, M.; Yasmin, M.; Raza, M.; Aftab, S. Generative adversarial networks and its applications in the biomedical image segmentation: A comprehensive survey. Int. J. Multimed. Inf. Retr. 2022, 11, 333–368.

13. Hörst, F.; Rempe, M.; Heine, L.; Seibold, C.; Keyl, J.; Baldini, G.; Ugurel, S.; Siveke, J.; Grünwald, B.; Egger, J.; et al. CellViT: Vision Transformers for Precise Cell Segmentation and Classification. arXiv 2023, arXiv:2306.15350.

14. Obeid, A.; Boumaraf, S.; Sohail, A.; Hassan, T.; Javed, S.; Dias, J.; Bennamoun, M.; Werghi, N. Advancing Histopathology with Deep Learning Under Data Scarcity: A Decade in Review. arXiv 2024, arXiv:2410.19820.

15. Lahreche, F.; Moussaoui, A.; Oulad-Naoui, S. Medical Image Semantic Segmentation Using Deep Learning: A Survey. In Proceedings of the International Conference on Emerging Intelligent Systems for Sustainable Development (ICEIS 2024), Aflou, Algeria, 26–27 June 2024; pp. 324–345.

16. Sultan, B.; Rehman, A.; Riyaz, L. Generative Adversarial Networks in the Field of Medical Image Segmentation. In Deep Learning Applications in Medical Image Segmentation: Overview, Approaches, and Challenges; Wiley: Hoboken, NJ, USA, 2024; pp. 185–225.

17. Gui, J.; Sun, Z.; Wen, Y.; Tao, D.; Ye, J. A Review on Generative Adversarial Networks: Algorithms, Theory, and Applications. IEEE Trans. Knowl. Data Eng. 2023, 35, 3313–3332.

18. Alhumaid, M.; Alhumaid, M.; Fayoumi, A. Transfer Learning-Based Classification of Maxillary Sinus Using Generative Adversarial Networks. Appl. Sci. 2024, 14, 3083.

19. Islam, S.; Aziz, M.T.; Nabil, H.R.; Jim, J.R.; Mridha, M.F.; Kabir, M.M.; Asai, N.; Shin, J. Generative Adversarial Networks (GANs) in Medical Imaging: Advancements, Applications, and Challenges. IEEE Access 2024, 12, 35728–35753.

20. Jose, L.; Liu, S.; Russo, C.; Nadort, A.; Ieva, A.D. Generative Adversarial Networks in Digital Pathology and Histopathological Image Processing: A Review. J. Pathol. Inform. 2021, 12, 43.

21. Zhao, J.; Hou, X.; Pan, M.; Zhang, H. Attention-based generative adversarial network in medical imaging: A narrative review. Comput. Biol. Med. 2022, 149, 105948.

22. Hussain, J.; Båth, M.; Ivarsson, J. Generative adversarial networks in medical image reconstruction: A systematic literature review. Comput. Biol. Med. 2025, 191, 110094.

23. Xun, S.; Li, D.; Zhu, H.; Chen, M.; Wang, J.; Li, J.; Chen, M.; Wu, B.; Zhang, H.; Chai, X.; et al. Generative adversarial networks in medical image segmentation: A review. Comput. Biol. Med. 2022, 140, 105063.

24. Harari, M.B.; Parola, H.R.; Hartwell, C.J.; Riegelman, A. Literature searches in systematic reviews and meta-analyses: A review, evaluation, and recommendations. J. Vocat. Behav. 2020, 118, 103377.

25. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

26. Ibrahim, M.; Khalil, Y.A.; Amirrajab, S.; Sun, C.; Breeuwer, M.; Pluim, J.; Elen, B.; Ertaylan, G.; Dumontier, M. Generative AI for synthetic data across multiple medical modalities: A systematic review of recent developments and challenges. Comput. Biol. Med. 2025, 189, 109834.

27. Haggerty, J.; Wang, X.; Dickinson, A.; O’Malley, C.; Martin, E. Segmentation of epidermal tissue with histopathological damage in images of haematoxylin and eosin stained human skin. BMC Med. Imaging 2014, 14, 7.

28. Mahbod, A.; Schaefer, G.; Dorffner, G.; Hatamikia, S.; Ecker, R.; Ellinger, I. A dual decoder U-Net-based model for nuclei instance segmentation in hematoxylin and eosin-stained histological images. Front. Med. 2022, 9, 978146.

29. He, L.; Long, L.; Antani, S.; Thoma, G. Histology image analysis for carcinoma detection and grading. Comput. Methods Programs Biomed. 2012, 107, 538–556.

30. Fu, X.; Liu, T.; Xiong, Z.; Smaill, B.; Stiles, M.; Zhao, J. Segmentation of histological images and fibrosis identification with a convolutional neural network. Comput. Biol. Med. 2018, 98, 147–158.

31. Wesdorp, N.J.; Zeeuw, J.M.; Postma, S.C.; Roor, J.; van Waesberghe, J.H.T.; van den Bergh, J.E.; Nota, I.M.; Moos, S.; Kemna, R. Deep learning models for automatic tumor segmentation and total tumor volume assessment in patients with colorectal liver metastases. Eur. Radiol. Exp. 2023, 7, 75.

32. Schoenpflug, L.A.; Lafarge, M.W.; Frei, A.L.; Koelzer, V.H. Multi-task learning for tissue segmentation and tumor detection in colorectal cancer histology slides. arXiv 2023, arXiv:2304.03101.

33. Gudhe, N.R.; Kosma, V.M.; Behravan, H.; Mannermaa, A. Nuclei instance segmentation from histopathology images using Bayesian dropout based deep learning. BMC Med. Imaging 2023, 23, 162.

34. Li, Y.J.; Chou, H.H.; Lin, P.C.; Shen, M.R.; Hsieh, S.Y. A novel deep learning-based algorithm combining histopathological features with tissue areas to predict colorectal cancer survival from whole-slide images. J. Transl. Med. 2023, 21, 731.

35. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Adv. Neural Inf. Process. Syst. 2014, 27.

36. Heng, Y.; Ma, Y.; Khan, F.G.; Khan, A.; Ali, F.; AlZubi, A.A.; Hui, Z. Survey: Application and analysis of generative adversarial networks in medical images. Artif. Intell. Rev. 2025, 58, 39.

37. Kodali, N.; Abernethy, J.; Hays, J.; Kira, Z. On Convergence and Stability of GANs. arXiv 2017, arXiv:1705.07215.

38. Fedus, W.; Goodfellow, I.; Dai, A.M. Many Paths to Equilibrium: GANs Do Not Need to Decrease a Divergence at Every Step. arXiv 2023, arXiv:2002.05616.

39. Lucic, M.; Kurach, K.; Michalski, M.; Gelly, S.; Bousquet, O. Are GANs Created Equal? A Large-Scale Study. Adv. Neural Inf. Process. Syst. 2018, 31.

40. Zhang, H.; Yu, Y.; Jojic, N.; Xiao, H.; Wang, Y.; Liang, Y.; Hsieh, C.J. Overfitting in Adversarially Robust Deep Learning. In Proceedings of the 37th International Conference on Machine Learning (ICML), Virtual, 13–18 July 2020; PMLR: Cambridge, MA, USA, 2020; pp. 11134–11143.

41. Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

42. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

43. Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4401–4410.

44. Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of StyleGAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8110–8119.

45. Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive growing of GANs for improved quality, stability, and variation. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

46. Han, C.C.; Rundo, L.; Araki, R.; Tang, Y.C.; Peng, S.L.; Nakayama, H.; Lin, C.C.; Li, C.H. Infinite Brain MR Images: PGGAN-based Data Augmentation for Tumor Detection. arXiv 2019, arXiv:1903.12564.

47. Korkinof, D.; Rijken, T.; O’Neill, M.; Matthew, J.; Glocker, B. High-Resolution Mammogram Synthesis using Progressive Generative Adversarial Networks. arXiv 2018, arXiv:1807.03401.

48. Xue, Y.; Ye, J.; Zhou, Q.; You, H.; Ouyang, W.; Do, Q.V. Selective Synthetic Augmentation with HistoGAN for Improved Histopathology Image Classification. arXiv 2021, arXiv:2111.06399.

49. Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. arXiv 2017, arXiv:1701.07875.

50. Kazeminia, S.; Baur, C.; Kuijper, A.; van Ginneken, B.; Navab, N.; Albarqouni, S.; Mukhopadhyay, A. GANs for medical image analysis. Artif. Intell. Med. 2020, 109, 101938.

51. Yi, X.; Walia, E.; Babyn, P. Generative adversarial network in medical imaging: A review. Med. Image Anal. 2019, 58, 101552.

52. Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

53. Hillis, C.; Bagheri, E.; Marshall, Z. The Role of Protocol Papers, Scoping Reviews, and Systematic Reviews in Responsible AI Research. IEEE Technol. Soc. Mag. 2025, 44, 72–76.

54. PROSPERO. International Prospective Register of Systematic Reviews. Centre for Reviews and Dissemination, University of York. 2025. Available online: https://www.crd.york.ac.uk/prospero/ (accessed on 10 February 2025).

55. Frid-Adar, M.; Klang, E.; Amitai, M.; Goldberger, J.; Greenspan, H. GAN-based synthetic medical image augmentation for increased CNN performance in liver lesion classification. Neurocomputing 2018, 321, 321–331.

56. Guan, X.; Wang, Y.; Lin, Y.; Li, X.; Zhang, Y. Unsupervised multi-domain progressive stain transfer guided by style encoding dictionary. IEEE Trans. Image Process. 2024, 33, 767–779.

57. Liu, Y.; Wagner, S.J.; Peng, T. Multi-modality microscopy image style augmentation for nuclei segmentation. J. Imaging 2022, 8, 71.

58. Zhang, H.; Liu, J.; Wang, P.; Yu, Z.; Liu, W.; Chen, H. Cross-boosted multi-target domain adaptation for multi-modality histopathology image translation and segmentation. IEEE J. Biomed. Health Inform. 2022, 26, 3197–3208.

59. Hu, B.; Tang, Y.; Chang, E.I.C.; Fan, Y.; Lai, M.; Xu, Y. Unsupervised learning for cell-level visual representation in histopathology images with generative adversarial networks. IEEE J. Biomed. Health Inform. 2018, 23, 1316–1328.

60. Ye, H.; Yang, Y.Y.; Zhu, S.; Wang, D.H.; Zhang, X.Y.; Yang, X.; Huang, H. Stain-adaptive self-supervised learning for histopathology image analysis. Pattern Recognit. 2025, 161, 111242.

61. He, Y.; Li, J.; Shen, S.; Liu, K.; Wong, K.K.; He, T.; Wong, S.T. Image-to-image translation of label-free molecular vibrational images for a histopathological review using the UNet+/seg-cGAN model. Biomed. Opt. Express 2022, 13, 1924–1938.

62. Deshpande, S.; Dawood, M.; Minhas, F.; Rajpoot, N. SynCLay: Interactive synthesis of histology images from bespoke cellular layouts. Med. Image Anal. 2024, 91, 102995.

63. Li, F.; Hu, Z.; Chen, W.; Kak, A. A Laplacian pyramid based generative H&E stain augmentation network. IEEE Trans. Med. Imaging 2023, 43, 701–713.

64. Zhang, H.; Liu, J.; Yu, Z.; Wang, P. MASG-GAN: A multi-view attention superpixel-guided generative adversarial network for efficient and simultaneous histopathology image segmentation and classification. Neurocomputing 2021, 463, 275–291.

65. Fan, J.; Liu, D.; Chang, H.; Cai, W. Learning to generalize over subpartitions for heterogeneity-aware domain adaptive nuclei segmentation. Int. J. Comput. Vis. 2024, 132, 2861–2884.

66. Deshpande, S.; Minhas, F.; Graham, S.; Rajpoot, N. SAFRON: Stitching across the frontier network for generating colorectal cancer histology images. Med. Image Anal. 2022, 77, 102337.

67. Song, Z.; Du, P.; Yan, J.; Li, K.; Shou, J.; Lai, M.; Fan, Y.; Xu, Y. Nucleus-aware self-supervised pretraining using unpaired image-to-image translation for histopathology images. IEEE Trans. Med. Imaging 2023, 43, 459–472.

68. Purma, V.; Srinath, S.; Srirangarajan, S.; Kakkar, A.; Prathosh, A. GenSelfDiff-HIS: Generative Self-Supervision Using Diffusion for Histopathological Image Segmentation. IEEE Trans. Med. Imaging 2024, 44, 618–631.

69. Bouteldja, N.; Hölscher, D.L.; Bülow, R.D.; Roberts, I.S.; Coppo, R.; Boor, P. Tackling stain variability using CycleGAN-based stain augmentation. J. Pathol. Inform. 2022, 13, 100140.

70. Du, Z.; Zhang, P.; Huang, X.; Hu, Z.; Yang, G.; Xi, M.; Liu, D. Deeply supervised two stage generative adversarial network for stain normalization. Sci. Rep. 2025, 15, 7068.

71. Razavi, S.; Khameneh, F.D.; Nouri, H.; Androutsos, D.; Done, S.J.; Khademi, A. MiNuGAN: Dual segmentation of mitoses and nuclei using conditional GANs on multi-center breast H&E images. J. Pathol. Inform. 2022, 13, 100002.

72. Vasiljević, J.; Feuerhake, F.; Wemmert, C.; Lampert, T. HistoStarGAN: A unified approach to stain normalisation, stain transfer and stain invariant segmentation in renal histopathology. Knowl.-Based Syst. 2023, 277, 110780.

73. Hossain, M.S.; Armstrong, L.J.; Cook, D.M.; Zaenker, P. Application of histopathology image analysis using deep learning networks. Hum.-Centric Intell. Syst. 2024, 4, 417–436.

74. Yoon, C.; Park, E.; Misra, S.; Kim, J.Y.; Baik, J.W.; Kim, K.G.; Jung, C.K.; Kim, C. Deep learning-based virtual staining, segmentation, and classification in label-free photoacoustic histology of human specimens. Light Sci. Appl. 2024, 13, 226.

75. BenTaieb, A.; Hamarneh, G. Adversarial stain transfer for histopathology image analysis. IEEE Trans. Med. Imaging 2017, 37, 792–802.

76. Baykal Kablan, E. Regional realness-aware generative adversarial networks for stain normalization. Neural Comput. Appl. 2023, 35, 17915–17927.

77. Lafarge, M.W.; Pluim, J.P.; Eppenhof, K.A.; Veta, M. Learning domain-invariant representations of histological images. Front. Med. 2019, 6, 162.

78. Shahini, A.; Gambella, A.; Molinari, F.; Salvi, M. Semantic-driven synthesis of histological images with controllable cellular distributions. Comput. Methods Programs Biomed. 2025, 261, 108621.

79. Lou, W.; Li, H.; Li, G.; Han, X.; Wan, X. Which pixel to annotate: A label-efficient nuclei segmentation framework. IEEE Trans. Med. Imaging 2022, 42, 947–958.

80. Wang, H.; Xu, G.; Pan, X.; Liu, Z.; Lan, R.; Luo, X. Multi-task generative adversarial learning for nuclei segmentation with dual attention and recurrent convolution. Biomed. Signal Process. Control 2022, 75, 103558.

81. Juhong, A.; Li, B.; Yao, C.Y.; Yang, C.W.; Agnew, D.W.; Lei, Y.L.; Huang, X.; Piyawattanametha, W.; Qiu, Z. Super-resolution and segmentation deep learning for breast cancer histopathology image analysis. Biomed. Opt. Express 2022, 14, 18–36.

82. Wang, L.; Zhang, S.; Gu, L.; Zhang, J.; Zhai, X.; Sha, X.; Chang, S. Automatic consecutive context perceived transformer GAN for serial sectioning image blind inpainting. Comput. Biol. Med. 2021, 136, 104751.

83. Kapil, A.; Meier, A.; Steele, K.; Rebelatto, M.; Nekolla, K.; Haragan, A.; Silva, A.; Zuraw, A.; Barker, C.; Scott, M.L.; et al. Domain adaptation-based deep learning for automated tumor cell (TC) scoring and survival analysis on PD-L1 stained tissue images. IEEE Trans. Med. Imaging 2021, 40, 2513–2523.

84. Azam, A.B.; Wee, F.; Väyrynen, J.P.; Yim, W.W.Y.; Xue, Y.Z.; Chua, B.L.; Lim, J.C.T.; Somasundaram, A.C.; Tan, D.S.W.; Takano, A.; et al. Training immunophenotyping deep learning models with the same-section ground truth cell label derivation method improves virtual staining accuracy. Front. Immunol. 2024, 15, 1404640.

85. Lahiani, A.; Klaman, I.; Navab, N.; Albarqouni, S.; Klaiman, E. Seamless virtual whole slide image synthesis and validation using perceptual embedding consistency. IEEE J. Biomed. Health Inform. 2020, 25, 403–411.

86. Kweon, J.; Yoo, J.; Kim, S.; Won, J.; Kwon, S. A novel method based on GAN using a segmentation module for oligodendroglioma pathological image generation. Sensors 2022, 22, 3960.

87. Hou, L.; Gupta, R.; Van Arnam, J.S.; Zhang, Y.; Sivalenka, K.; Samaras, D.; Kurc, T.M.; Saltz, J.H. Dataset of segmented nuclei in hematoxylin and eosin stained histopathology images of ten cancer types. Sci. Data 2020, 7, 185.

88. Mahmood, F.; Borders, D.; Chen, R.J.; McKay, G.N.; Salimian, K.J.; Baras, A.; Durr, N.J. Deep adversarial training for multi-organ nuclei segmentation in histopathology images. IEEE Trans. Med. Imaging 2019, 39, 3257–3267.

89. Rong, R.; Wang, S.; Zhang, X.; Wen, Z.; Cheng, X.; Jia, L.; Yang, D.M.; Xie, Y.; Zhan, X.; Xiao, G. Enhanced pathology image quality with restore–generative adversarial network. Am. J. Pathol. 2023, 193, 404–416.

90. Mahapatra, S.; Maji, P. Truncated normal mixture prior based deep latent model for color normalization of histology images. IEEE Trans. Med. Imaging 2023, 42, 1746–1757.

91. Hossain, M.S.; Armstrong, L.J.; Abu-Khalaf, J.; Cook, D.M. The segmentation of nuclei from histopathology images with synthetic data. Signal Image Video Process. 2023, 17, 3703–3711.

92. Naglah, A.; Khalifa, F.; El-Baz, A.; Gondim, D. Conditional GANs based system for fibrosis detection and quantification in Hematoxylin and Eosin whole slide images. Med. Image Anal. 2022, 81, 102537.

93. de Bel, T.; Bokhorst, J.M.; van der Laak, J.; Litjens, G. Residual CycleGAN for robust domain transformation of histopathological tissue slides. Med. Image Anal. 2021, 70, 102004.

94. Vasiljević, J.; Feuerhake, F.; Wemmert, C.; Lampert, T. Towards histopathological stain invariance by unsupervised domain augmentation using generative adversarial networks. Neurocomputing 2021, 460, 277–291.

95. Falahkheirkhah, K.; Tiwari, S.; Yeh, K.; Gupta, S.; Herrera-Hernandez, L.; McCarthy, M.R.; Jimenez, R.E.; Cheville, J.C.; Bhargava, R. Deepfake histologic images for enhancing digital pathology. Lab. Investig. 2023, 103, 100006.

96. Ruiz-Casado, J.L.; Molina-Cabello, M.A.; Luque-Baena, R.M. Enhancing Histopathological Image Classification Performance through Synthetic Data Generation with Generative Adversarial Networks. Sensors 2024, 24, 3777.

97. Gadermayr, M.; Gupta, L.; Appel, V.; Boor, P.; Klinkhammer, B.M.; Merhof, D. Generative adversarial networks for facilitating stain-independent supervised and unsupervised segmentation: A study on kidney histology. IEEE Trans. Med. Imaging 2019, 38, 2293–2302.

98. Bai, B.; Yang, X.; Li, Y.; Zhang, Y.; Pillar, N.; Ozcan, A. Deep Learning-enabled Virtual Histological Staining of Biological Samples. arXiv 2022, arXiv:2211.06822.

99. Xu, Z.; Huang, X.; Fernández Moro, C.; Bozóky, B.; Zhang, Q. GAN-based Virtual Re-Staining: A Promising Solution for Whole Slide Image Analysis. arXiv 2019, arXiv:1901.04059.

100. Smith, J.; Doe, J. High-Resolution Generative Adversarial Neural Networks Applied to Histological Images Generation. In Proceedings of the ICANN 2018, Rhodes, Greece, 4–7 October 2018; pp. 195–202.

101. Vu, Q.D.; Graham, S.; Kurc, T.; To, M.N.N.; Shaban, M.; Qaiser, T.; Koohbanani, N.A.; Khurram, S.A.; Kalpathy-Cramer, J.; Zhao, T.; et al. Methods for Segmentation and Classification of Digital Microscopy Tissue Images. Front. Bioeng. Biotechnol. 2019, 7, 53.

102. Zhang, W.; Li, M.; Chen, Y. Color Normalization Techniques to Improve Consistency in Histopathological Image Analysis. J. Med. Imaging Anal. 2023, 45, 102341.

103. Shaban, M.T.; Baur, C.; Navab, N.; Albarqouni, S. StainGAN: Stain style transfer for digital histological images. Comput. Methods Programs Biomed. 2020, 184, 105245.

104. Zhang, Y.; Huang, L.; Pillar, N.; Li, Y.; Migas, L.G.; Van de Plas, R.; Spraggins, J.M.; Ozcan, A. Virtual Staining of Label-Free Tissue in Imaging Mass Spectrometry. arXiv 2024, arXiv:2411.13120.

105. Taneja, N.; Zhang, W.; Basson, L.; Aerts, H.; Orlov, N. High-resolution histopathology image generation and segmentation through adversarial training. In Proceedings of the European Conference on Computer Vision (ECCV), Montreal, QC, Canada, 11 October 2021.

106. Chen, J.; Yu, L.; Ma, Z. Unsupervised domain adaptation for histopathological image segmentation with adversarial learning. Pattern Recognit. 2023, 135, 109150.

107. Fahoum, I.; Tsuriel, S.; Rattner, D.; Greenberg, A.; Zubkov, A.; Naamneh, R.; Greenberg, O.; Zemser-Werner, V.; Gitstein, G.; Hagege, R.; et al. Automatic analysis of nuclear features reveals a non-tumoral predictor of tumor grade in bladder cancer. Diagn. Pathol. 2024, 19, 1–10.

| Study | Year | Image Type | Datasets | Task Investigated | GAN Types | Metrics | Objective |
|---|---|---|---|---|---|---|---|
| Jose et al. [20] | 2021 | Histopathology (WSI) | TCGA, Camelyon | Histological image processing | CycleGAN, Pix2Pix, CGAN | Qualitative | GANs in digital pathology and histology |
| Chen et al. [5] | 2022 | Radiology, microscopy | BRATS, LUNA, ISIC | Augmentation | CycleGAN, StyleGAN, DCGAN | Limited | GANs for data augmentation |
| Xun et al. [23] | 2022 | General medical imaging | ACDC, DRIVE | Segmentation | CGAN, U-GAN, SegAN | Limited | Review of segmentation using GANs |
| Zhao et al. [21] | 2022 | Radiology, pathology | Not detailed | Attention-enhanced segmentation | AttentionGAN, TransGAN | Limited | Emerging GAN architectures with attention |
| Gui et al. [17] | 2023 | General (incl. medical) | Not detailed | General overview | DCGAN, WGAN, InfoGAN, BigGAN | Theoretical | Broad review of GANs, including healthcare |
| Islam et al. [19] | 2024 | Radiology, pathology | Public/private datasets | Augmentation, synthesis, segmentation | DCGAN, CGAN, CycleGAN, StyleGAN | Limited | Comprehensive review of GANs in medical imaging |
| Sultan et al. [16] | 2024 | MRI, CT, ultrasound | Not specified | Segmentation | Vanilla GAN, CGAN, CycleGAN | Limited | Conceptual overview of GANs in medical image segmentation |
| Hussain et al. [22] | 2025 | CT, MRI | Multiple (e.g., BraTS) | Reconstruction (incl. segmentation) | Progressive GANs, CycleGAN | Performance evaluation | Systematic review of GANs in image reconstruction |
| Ibrahim et al. [26] | 2025 | Multimodal (MRI, histology, EHR) | Multimodal datasets | Synthetic data generation | Diffusion, GANs, VAEs | Limited | Synthetic data generation in medicine |
| This review | 2025 | Histology and histopathology | Multiple datasets | Segmentation | GAN, CycleGAN, PGGAN, Pix2Pix, StyleGAN, StarGAN, cGAN, WGAN | Qualitative and quantitative | Systematic review focused on GANs in histological image segmentation |

| Inclusion Criteria (IC) | Exclusion Criteria (EC) |
|---|---|
| (IC1) Studies using GANs for segmentation of histological images. | (EC1) Studies using GANs only to synthesize images, without a focus on segmentation. |
| (IC2) Studies with experimental validation (quantitative or qualitative). | (EC2) Works that do not involve histological or microscopic images. |
| (IC3) Published between 2014 and 2025. | (EC3) Studies using GANs only for classification or detection, not segmentation. |
| (IC4) Articles in English. | (EC4) Articles in a language other than English. |
| (IC5) Full articles. | (EC5) Reviews, surveys, case studies, reports, short papers, conference, abstracts, communications, theses, and dissertations. |

| Dataset | Paper | Type of Tissue | Size | Magnification | Labeled | Availability |
|---|---|---|---|---|---|---|
| ANHIR | [56] | Kidney, breast, colon, lung and gastric mucosa | 231 images (WSI) (100,000 × 200,000) | 20× and 40× | Yes | Public |
| BBBC-data | [57,58] | Liver, Brain, Colon, Lung, Ovary, Kidney, Blood, Immune System, Embryo, Heart and Nervous System | 700 images | 20× | Yes | Public |
| BMCD-FGCD | [59] | Bone marrow | 29 images (WSI) (1500 × 800) | — | Yes | Public |
| CAMELYON16 | [60] | Breast (lymph nodes) | 400 images (WSI) (100,000 × 200,000) | 20× and 40× | Yes | Public |
| CARS | [61] | Thyroid | 200 images (256 × 256) | 40× | Yes | Private |
| CoNiC | [62] | Colon | 4981 patches (256 × 256) | — | Yes | Public |
| CoNSep | [58,63,64] | Adrenal, Larynx, Lymph node, Mediastinum | 30 images (512 × 512) | 40× | Yes | Public |
| CPM17 | [58,63,64,65] | Colon | 64 images (500 × 500) | 20× and 40× | Yes | Public |
| Crag | [66,67] | Colorectal carcinoma | 213 images (1512 × 1512) | 20× | Yes | Public |
| CryoNuSeg | [63] | Adrenal gland, larynx, lymph node, mediastinum, pancreas, pleura, testis, thymus and thyroid gland | 30 images (512 × 512) | 20× | Yes | Public |
| Digestpath | [66] | Colorectal carcinoma | 46 images (5000 × 5000) | 20× | Yes | Public |
| Glomeruli | [56] | Kidney | 200 images (WSI) (1000 × 1000) | 40× | Yes | Public |
| HN Cancer | [68] | Head and Neck | 1562 images (1024 × 1280) | 10× | Yes | Private |
| HuBMAP | [69] | Kidney | 9 images (WSI) | 40× | Yes | Public |
| ICIAR-BACH | [70] | Breast | 400 images (2048 × 1536) | 20× | Yes | Public |
| In-House | [67] | Colorectal | 1093 images (WSI) (512 × 512) | 40× | Yes | Public |
| In-House-MIL | [67] | Kidney, Lung, Breast, Prostate, Endometrium | 260 images (WSI) (10,000 × 10,000) | 40× | Yes | Public |
| ICPR14 and 12 | [59,71] | Breast | 163 images (WSI) (2000 × 2000) | 40× | Yes | Public |
| Kather | [67] | Large intestine (colon and rectum) | 25,058 images (224 × 224) | 20× | Yes | Public |
| KidneyArtPathology | [72] | Kidney | 5000 images (512 × 512) | 20× and 40× | Yes | Public |
| KIRC | [58,63,64,73] | Kidney | >190,000 images (WSI) (100,000 × 100,000) | 20× | No | Public |
| KPMP | [69] | Kidney | 85 images (WSI) | 40× | Yes | Public |
| Kumar | [58,64,65,67,74] | Breast, Liver, Kidney, Prostate, Bladder, Colon and Stomach | 30 images (1000 × 1000) | 40× | Yes | Public |
| Lizard | [67] | Large intestine (colon and rectum) | 133 images (1016 × 917) | 20× | Yes | Public |
| Lung dataset | [56] | Lung | 23,744 patches | 40× | — | Private |
| LYON19 (IHC) | [58] | Breast, colon, prostate | 871 images (256 × 256) | 40× | Yes | Public |
| MICCAI’16 GlaS | [68,70,75,76,77] | Large intestine (colon) | 165 images (775 × 522) | 20× | Yes | Public |
| MITOS-ATYPIA 14 | [70,76] | Breast | 1200 images (1539 × 1376) | 10×, 20× and 40× | Yes | Public |
| MoNuSAC | [63,78] | Lung, prostate, kidney and breast | 294 images (113 × 81 and 1398 × 1956) | 40× | Yes | Public |
| MoNuSeg | [63,68,79,80] | Prostate, Lung, Kidney, Colon, Breast, Pancreas, Oral cavity, Stomach, Liver and Bladder | 44 images (1000 × 1000) | 40× | Yes | Public |
| MThH | [58] | Colon | 36,000 images (256 × 256) | 20× and 40× | Yes | Public |
| MUC1 | [81] | Breast | 13,000 images (256 × 266) | 40× | No | Private |
| N7, E17, N5 | [82] | Kidney (mouse) | 1917 patches (128 × 128) | — | — | Private |
| NSCLC | [83] | Lung | 269,000 patches (128 × 128) | 10× | Yes | Public |
| Onco-SG | [84] | Lung | 57 images (1792 × 768) | 20× | Yes | Public |
| PanNuke | [62,67,80] | Breast, Lung, Prostate, Kidney, Brain, Colon and Liver | 7901 images (256 × 256) | 20× and 40× | Yes | Public |
| Roche | [85] | Liver (colorectal) | 50 images (WSI) (512 × 512) | 10× | — | Private |
| RWTH Aachen | [69] | Kidney | 1009 images (WSI) | 40× | Yes | Private |
| TCGA | [73,77,79,81,86,87,88,89,90,91] | Breast, Liver, Kidney, Prostate, Bladder, Brain, Colon and Stomach | >20,000 WSIs (100,000 × 100,000) | 20× and 40× | No | Public |
| TNBC | [58,63,64,74,79] | Breast | 50 images (512 × 512) | 20× and 40× | Yes | Public |
| TUPAC16 | [70,71,77] | Breast | 821 images (WSI) | 40× | Yes | Public |
| VALIGA | [69] | Kidney | 648 images (WSI) | 40× | Yes | Private |
| — | [92] | Liver | 64, 128, 256, 512 and 1024 patches | 40× | Yes | Private |
| — | [93] | Colon and Kidney | 100 images (WSI) | — | Yes | Private |
| — | [94] | Kidney | 10 images | 40× | Yes | Private |
| — | [95] | Prostate and Colon | 102 images (WSI) (1024 × 1024) | 10× | Yes | Public |

| Paper | Task | Model | Learning | Data Available * | Performance |
|---|---|---|---|---|---|
| Azam et al. (2024) [84] | Nuclei | U-Net | Supervised | http://github.com/abubakrazam/Pix2Pix_TIL_H-E.git | |
| Bouteldja et al. (2022) [69] | Cell and Tissue | U-Net | Unsupervised | https://github.com/NBouteldja/KidneyStainAugmentation | |
| De Bel et al. (2021) [93] | Tissue | U-Net | Unsupervised | - | |
| Deshpande et al. (2022) [66] | Cell and Tissue | U-Net | Supervised | http://warwick.ac.uk/TIALab/SAFRON | |
| Deshpande et al. (2024) [62] | Cell | HoVer-Net | Supervised | https://github.com/Srijay/SynCLay-Framework | - |
| Du et al. (2025) [70] | Tissue and Nuclei | U-Net | Semi-supervised | - | MICCAI: |
| Falahkheirkhah et al. (2023) [95] | Tissue | U-Net, ResNet | Supervised | https://github.com/kiakh93/Synthesizing-histological-images | Real images + Synthesized— |
| Fan et al. (2024) [65] | Nuclei | Mask R-CNN | Unsupervised | - | Kumar: ; CPM17: |
| Gadermayr et al. (2019) [97] | Cells and Nuclei | U-Net | Supervised and Unsupervised | - | Supervised— |
| Guan, Li and Zhang (2024) [56] | Cell | Mask R-CNN | Unsupervised | https://github.com/xianchaoguan/GramGAN | |
| He et al. (2022) [61] | Tissue | U-Net+ | Supervised | - | |
| Hou et al. (2020) [87] | Nuclei | Mask R-CNN | - | - | |
| Hossain et al. (2023) [91] | Nuclei | U-Net | Supervised | - | |
| Hossain et al. (2024) [73] | Nuclei | Mask R-CNN, CNN, U-Net | - | - | Real images: ; Synthetic image: . |
| Hu et al. (2018) [59] | Cell | - | Unsupervised | https://github.com/bohu615/nu_gan | |
| Juhong et al. (2022) [81] | Nuclei | Inception U-Net | Supervised | - | |
| Kablan (2023) [76] | Cell and Tissue | U-Net | Unsupervised | https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix | |
| Kapil et al. (2021) [83] | Cell and Tissue | SegNet | Supervised | - | Two class-, Three class |
| Kweon et al. (2022) [86] | Cell and Tissue | U-Net | - | - | - |
| Lafarge et al. (2019) [77] | Nuclei | CNN | Supervised | - | |
| Lahiani et al. (2020) [85] | Tissue | ResNet | Unsupervised | - | Tumor: , No Tumor: |
| Li, Hu and Kak (2023) [63] | Nuclei | CNN | Unsupervised | https://github.com/lifangda01/GSAN-Demo | |
| Liu, Wagner and Peng (2022) [57] | Nuclei | Mask R-CNN | Supervised | http://www.kaggle.com/c/data-science-bowl-2018/submit | |
| Lou Wei et al. (2022) [79] | Nuclei | Mask R-CNN | Semi-supervised and Supervised | - | |
| Mahapatra and Maji (2023) [90] | Nuclei | Watershed | Unsupervised | - | |
| Mahmood et al. (2019) [88] | Nuclei | U-Net | Supervised | https://github.com/mahmoodlab/NucleiSegmentation | |
| Naglah et al. (2022) [92] | Cell and Tissue | U-Net | Unsupervised | - | |
| Purma et al. (2024) [68] | Tissue and Nuclei | U-Net | Supervised and Self-supervised | https://github.com/suhas-srinath/GenSelfDiff-HIS | GlaS: ; MoNuSeg—; HN Cancer— |
| Razavi et al. (2022) [71] | Cell and Nuclei | - | Supervised | - | Mitosis—, Nuclei—, |
| Rong et al. (2023) [89] | Nuclei | Mask R-CNN | Unsupervised | http://pytorch.org/get-started/previous-versions/#v1101 | |
| Shahini et al. (2025) [78] | Nuclei | - | Unsupervised | - | |
| Song et al. (2023) [67] | Nuclei | Mask R-CNN | Self-supervised | https://github.com/zhiyuns/UNITPathSSL | |
| Taieb and Hamarneh (2017) [75] | Nuclei | AlexNet | Unsupervised | - | MITOSIS: ; COLON: ; OVARY: . |
| Vasiljevic et al. (2021) [94] | Cell and Tissue | U-Net | Unsupervised | - | |
| Vasiljevic et al. (2023) [72] | Cell and Tissue | U-Net | Supervised | - | |
| Wang et al. (2021) [82] | Tissue | U-Net | Unsupervised | - | N5: |
| Wang et al. (2022) [80] | Nuclei | RCSAU-Net | Supervised | https://github.com/antifen/Nuclei-Segmentation | MoNuSeg: ; PanNuke: . |
| Ye et al. (2025) [60] | Tissue | PRANet | Self-supervised | http://github.com/YeahHighly/SASSL_PR_2024 | |
| Yoon et al. (2024) [74] | Cell area, count and distance | U-Net | - | https://github.com/YoonChiHo/DL-based-frameworkfor-automated-HIA-of-label-free-PAH-images | - |
| Zhang et al. (2021) [64] | Nuclei | U2-Net | Supervised | - | TNBC: . |
| Zhang et al. (2022) [58] | Nuclei | DSCN | Supervised and Unsupervised | http://github.com/wangpengyu0829 | |

| GAN Metric | Paper | Segmentation Metric | Paper |
|---|---|---|---|
| Fréchet Inception Distance (FID) | [56,58,62,66,68,74,82,86,94] | Accuracy (Acc) | [73,75,76,80,82,84,89,91,92] |
| Structural Similarity Index (SSIM) | [61,62,70,73,76,81,82,89,91] | Dice Similarity Coefficient (DSC) | [58,60,61,64,65,66,67,69,70,71,76,78,79,80,81,82,87,90,91,92,93] |
| Peak Signal-to-Noise Ratio (PSNR) | [70,73,76,81,82,89,91] | F1-Score | [59,64,67,68,71,72,77,80,83,85,88,90,94,97] |
| Mean Squared Error (MSE) | [61,73,76,81,91] | Intersection over Union (IoU) | [57,59,60,64,68,70,81,82,95] |
| Average Human Rank (AHR) | [58] | Aggregated Jaccard Index (AJI) | [58,63,65,68,73,79,80,88] |
| Pearson Correlation Coefficient (PCC) | [70] | Jaccard Index (JI) | [91] |
| Root Mean Squared Error (RMSE) | [76] | Pixel Accuracy (PA) | [58,60,64,70,95] |
| Kernel Inception Distance (KID) | [56,58,74] | Precision (Pre) | [63,72,73,76,82,94,97] |
| Inception Score (IS) | [75,86] | Recall (Rec)/Sensitivity (Sen) | [64,72,76,82,94,97] |
| Contrast-Structure Similarity (CSS) | [56] | Average Pompeiu–Hausdorff Distance (aHD) | [88] |
| Normalized Mutual Information (NMI) | [90,92] | Hausdorff Distance (HD) | [68,80] |
| Complex Wavelet Structural Similarity (CWSSIM) | [85] | Mean Absolute Error (MAE) | [58] |
| Bhattacharyya Distance (BCD) | [92] | Panoptic Quality (PQ) | [63,65] |
| Histogram Correlation (HC) | [92] | Probabilistic Rand Index (PRI) | [64] |
| Feature Similarity Index (FSIM) | [76,82] | Mean Average Precision (mAP) | [56,95] |
| Multi-scale Structural Similarity Index (MS-SSIM) | [76,82] | Pearson Correlation Coefficient (PCC) | [84] |
| Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS) | [76] | Segmentation Quality (SQ) | [65] |
| Universal Quality Index (UQI) | [76] | Average Precision (AP) | [63] |
| Erreur Relative Globale Adimension (Misep) | [76] | | |
| Erreur Relative Globale Adimension (Miss) | [76] | | |
| Relative Average Spectral Error (RASE) | [76] | | |
| Between-image Color Constancy (BiCC) | [90] | | |
| Within-set Color Constancy (WsCC) | [90] | | |
| Measuring Mutual Information (MMI) | [92] | | |
| Visual Information Fidelity (VIF) | [82] | | |
| Standard Deviation (SD) | [95] | | |
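
The pixel-overlap scores in the segmentation column of the metrics table above (DSC, IoU/Jaccard, PA, precision and recall) are all ratios of the same four pixel-wise confusion counts, while MSE and PSNR, frequent on the GAN side, compare the intensities of a generated image with those of a reference image. The following is a minimal Python sketch of these textbook definitions, assuming flattened binary masks (1 = foreground) and flattened grayscale images passed as equal-length sequences. The function names, the 8-bit default for `max_value`, and the conventions used when a denominator is zero are illustrative assumptions, not taken from any of the reviewed studies or from a specific library.

```python
from __future__ import annotations

import math
from typing import Sequence


def confusion_counts(pred: Sequence[int], target: Sequence[int]) -> tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for two flattened binary masks of equal length."""
    if len(pred) != len(target):
        raise ValueError("pred and target must contain the same number of pixels")
    tp = fp = fn = tn = 0
    for p, t in zip(pred, target):
        if p and t:
            tp += 1  # foreground predicted and present in the ground truth
        elif p:
            fp += 1  # foreground predicted but absent from the ground truth
        elif t:
            fn += 1  # ground-truth foreground that the model missed
        else:
            tn += 1  # background correctly left as background
    return tp, fp, fn, tn


def _safe_div(num: float, den: float, empty: float) -> float:
    # Assumed convention: return `empty` when the denominator is zero.
    return num / den if den else empty


def segmentation_metrics(pred: Sequence[int], target: Sequence[int]) -> dict[str, float]:
    """Pixel-wise DSC, IoU (Jaccard), pixel accuracy, precision and recall."""
    tp, fp, fn, tn = confusion_counts(pred, target)
    return {
        # Two empty masks are treated as perfect agreement (assumption).
        "dice": _safe_div(2 * tp, 2 * tp + fp + fn, empty=1.0),
        "iou": _safe_div(tp, tp + fp + fn, empty=1.0),
        "pixel_accuracy": _safe_div(tp + tn, tp + fp + fn + tn, empty=1.0),
        # No predicted (or no true) foreground yields 0.0 here (assumption).
        "precision": _safe_div(tp, tp + fp, empty=0.0),
        "recall": _safe_div(tp, tp + fn, empty=0.0),
    }


def mse(image: Sequence[float], reference: Sequence[float]) -> float:
    """Mean squared error between two flattened images of equal length."""
    if len(image) != len(reference) or not image:
        raise ValueError("images must be non-empty and have the same number of pixels")
    return sum((a - b) ** 2 for a, b in zip(image, reference)) / len(image)


def psnr(image: Sequence[float], reference: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; max_value=255 assumes 8-bit intensities."""
    err = mse(image, reference)
    if err == 0:
        return math.inf  # identical images have unbounded PSNR
    return 10 * math.log10(max_value ** 2 / err)


if __name__ == "__main__":
    pred = [1, 1, 0, 0, 1, 0]
    target = [1, 0, 0, 1, 1, 0]
    print(segmentation_metrics(pred, target))
    print(psnr([0, 0, 0, 0], [0, 0, 0, 255]))
```

For binary pixel masks, pixel-level F1 is algebraically identical to DSC, since both reduce to 2TP / (2TP + FP + FN), and DSC can be recovered from IoU as 2·IoU / (1 + IoU). Object-level F1 and instance-aware scores such as AJI and PQ additionally require matching predicted and ground-truth instances, so they are not covered by this sketch.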

| Paper | GAN Model | Segmentation Model | Datasets | GAN Metrics | Segmentation Metrics |
|---|---|---|---|---|---|
| Azam et al. (2024) [84] | Pix2Pix-GAN, CUT | U-Net | Onco-SG | FID | Pearson correlation, Acc |
| Bouteldja et al. (2022) [69] | CycleGAN | U-Net | RWTH Aachen, VALIGA | - | IDSC |
| De Bel et al. (2021) [93] | CycleGAN | U-Net | - | - | DSC |
| Deshpande et al. (2022) [66] | GAN | U-Net | - | FID | DSC |
| Deshpande et al. (2024) [62] | SynCLay | HoVer-Net | Crag, Digestpath | FID, SSIM | - |
| Du et al. (2025) [70] | P2P-GAN, DSTGAN | U-Net | ICIAR-BACH, MICCAI’16 GlaS, MITOS-ATYPIA 14, TUPAC16 | SSIM, PCC, PSNR | DSC, IoU, PA |
| Falahkheirkhah et al. (2023) [95] | Pix2Pix-GAN | U-Net, ResNet | - | Mean, SD | PA, mPA, IoU |
| Fan et al. (2024) [65] | CycleGAN | Mask R-CNN | CPM17, Kumar | - | DSC, AJI, SQ, PQ |
| Gadermayr et al. (2019) [97] | CycleGAN | U-Net | - | - | F1-Score, Precision, Recall |
| Guan, Li and Zhang (2024) [56] | GramGAN | Mask R-CNN | ANHIR, Glomeruli, Lung dataset | CSS, FID, KID | mAP |
| He et al. (2022) [61] | cGAN | U-Net+ | CARS | SSIM, MSE | DSC |
| Hou et al. (2020) [87] | GAN | Mask R-CNN | TCGA | - | DSC |
| Hossain et al. (2023) [91] | CycleGAN | U-Net | TCGA | SSIM, MSE, PSNR | Acc, DSC, JI |
| Hossain et al. (2024) [73] | CycleGAN | Mask R-CNN, CNN, U-Net | KIRC, TCGA | SSIM, MSE, PSNR | Acc, DSC, AJI |
| Hu et al. (2018) [59] | WGAN-GP | - | BMCD-FGCD, Dataset A, B, C and D | - | IoU, F1-Score |
| Juhong et al. (2022) [81] | SRGAN-ResNeXt | Inception U-Net | MUC1, TCGA | PSNR, SSIM, MSE | IoU, DSC |
| Kablan (2023) [76] | CycleGAN, RRAGAN | U-Net | MICCAI’16 GlaS, MITOS-ATYPIA 14 | FSIM, PSNR, SSIM, MSE, MS-SSIM, RMSE, ERGAS, UQI, RASE | DSC, Acc, Precision, Recall |
| Kapil et al. (2021) [83] | CycleGAN, DASGAN | SegNet | NSCLC | - | F1-Score |
| Kweon et al. (2022) [86] | PGGAN | U-Net | TCGA | FID, IS | - |
| Lafarge et al. (2019) [77] | DANN | CNN | MICCAI’16 GlaS, TCGA, TUPAC16 | - | F1-Score |
| Lahiani et al. (2020) [85] | CycleGAN | ResNet | Roche | CWSSIM | F1-Score |
| Li, Hu and Kak (2023) [63] | G-SAN | CNN | CPM17, CoNSep, CryoNuSeg, KIRC, MoNuSAC, MoNuSeg, TNBC | - | PQ, AP, AJI |
| Liu, Wagner and Peng (2022) [57] | GAN | Mask R-CNN | BBBC-data | - | IoU |
| Lou Wei et al. (2022) [79] | CSinGAN | Mask R-CNN | MoNuSeg, TCGA, TNBC | - | AJI, DSC |
| Mahapatra and Maji (2023) [90] | LSGAN, TredMiL | Watershed | TCGA | NMI, BiCC, WsCC | DSC |
| Mahmood et al. (2019) [88] | cGAN | U-Net | TCGA | - | aHD, F1-Score, AJI |
| Naglah et al. (2022) [92] | cGAN, CycleGAN | U-Net | - | MMI, NMI, HC, BCD | Acc, DSC |
| Purma et al. (2024) [68] | CycleGAN | U-Net | HN Cancer, MICCAI’16 GlaS, MoNuSeg | FID | AJI, IoU, HD, F1-Score |
| Razavi et al. (2022) [71] | cGAN, MiNuGAN | - | ICPR14 and 12, TUPAC16 | - | DSC, F1-Score |
| Rong et al. (2023) [89] | Restore-GAN | Mask R-CNN | TCGA | SSIM, PSNR | Acc |
| Shahini et al. (2025) [78] | ViT-P2P | - | MoNuSAC | - | DSC |
| Song et al. (2023) [67] | CycleGAN | Mask R-CNN | Kather, Kumar, Lizard, In-House, In-House-MIL, PanNuke | - | F1-Score, DSC, AJI, PQ |
| Taieb and Hamarneh (2017) [75] | GAN | AlexNet | MICCAI’16 GlaS | IS | Acc |
| Vasiljevic et al. (2021) [94] | CycleGAN, StarGAN | U-Net | - | FID | Precision, Recall, F1-Score |
| Vasiljevic et al. (2023) [72] | CycleGAN, HistoStarGAN | U-Net | KidneyArtPathology | FID, SSIM | Precision, Recall, F1-Score |
| Wang et al. (2021) [82] | ACCP-GAN | U-Net | N7, E17, N5 | FSIM, MS-SSIM, PSNR, VIF, FID | Acc, Recall, Precision, DSC, IoU |
| Wang et al. (2022) [80] | GAN | RCSAU-Net | MoNuSeg, PanNuke | - | Acc, F1-Score, DSC, AJI, HD, Recall |
| Ye et al. (2025) [60] | SASSL | PRANet | CAMELYON16 | - | PA, DSC, IoU |
| Yoon et al. (2024) [74] | CUT, E-CUT, CycleGAN, E-CycleGAN | U-Net | CPM17, Kumar, TNBC | FID, KID | - |
| Zhang et al. (2021) [64] | MASG-GAN | U2-Net | CoNSep, CPM17, KIRC, Kumar, TNBC | - | PA, Recall, Specificity, F1-Score, IoU, DSC, PRI |
| Zhang et al. (2022) [58] | CSTN | U-Net | BBBC-data, CoNSep, CPM17, KIRC, Kumar, LYON19 (IHC), MThH, TNBC | FID, KID, AHR | PA, DSC, AJI, MAE |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cruz, Y.L.K.F.; Silva, A.F.M.; Santana, E.E.C.; Costa, D.G. Generative Adversarial Networks in Histological Image Segmentation: A Systematic Literature Review. Appl. Sci. 2025, 15, 7802. https://doi.org/10.3390/app15147802
