Abstract

This systematic review synthesizes 82 peer-reviewed studies published between 2014 and 2024 on the use of audio features in educational research. We define audio features as descriptors extracted from audio recordings of educational interactions, including low-level acoustic signals (e.g., pitch and MFCCs), speaker-based metrics (e.g., talk-time and participant ratios), and linguistic indicators derived from transcriptions. Our analysis contributes to the field in three key ways: (1) it offers targeted mapping of how audio features are extracted, processed, and functionally applied within educational contexts, covering a wide range of use cases from behavior analysis to instructional feedback; (2) it diagnoses recurrent limitations that restrict pedagogical impact, including the scarcity of actionable feedback, low model interpretability, fragmented datasets, and limited attention to privacy; (3) it proposes actionable directions for future research, including the release of standardized, anonymized feature-level datasets, the co-design of feedback systems involving pedagogical experts, and the integration of fine-tuned generative AI to translate complex analytics into accessible, contextualized recommendations for teachers and learners. While current research demonstrates significant technical progress, its potential has yet to be translated into real-world educational impact. We argue that unlocking this potential requires shifting from isolated technical achievements to ethically grounded pedagogical implementations.

1. Introduction

Audio analysis in educational research can be traced back to the early 1960s when analog recording technologies first enabled the capture of classroom interactions [1]. Initially, researchers used tape recorders to document teacher–student communication, relying on manual transcription and qualitative observations to understand the dynamics of teaching and learning. The transition to digital recording in the 1990s brought a new era, as emerging digital signal processing techniques allowed for more systematic, quantitative analyses of speech patterns and interaction modalities. This evolution marked a shift from purely observational studies to data-driven investigations, enabling the systematic application of computational modeling in educational settings. By the early 2000s, as computational power increased, researchers began employing machine learning techniques to extract and analyze audio features more effectively. In the 2010s and beyond, deep learning and sophisticated artificial intelligence algorithms have further refined the process, enabling the detection of nuanced features such as emotional tone, speaker diarization, and linguistic patterns.

Audio features have emerged as a pivotal component in enhancing educational experiences, leveraging the rich information embedded within sound to facilitate learning and engagement. In educational contexts, audio features encompass a range of elements such as speech patterns, acoustic signals, and auditory cues that can be analyzed to assess student performance, provide feedback, and tailor instructional strategies. The integration of audio features into learning analytics and educational contexts has opened new opportunities for personalized learning, accessibility, and real-time assessment [2,3]. In this review, we define audio features as the measurable descriptors derived from raw audio recordings that are used to analyze educational interactions. These features may represent different levels of abstraction, with different tools for each task:

- Low-level acoustic features, such as pitch, intensity, or spectral representations (e.g., MFCCs or spectrograms), are directly extracted from the audio waveform using libraries such as Librosa [4].

- Diarization features, including speaker turn-taking, speaking time, and participant ratios, are obtained by segmenting and labeling who speaks when. PyAnnote [5], for example, is a widely used tool for extracting diarization information.

- Linguistic features, such as word usage, syntactic structure, or discourse-level indicators, are derived from automatic transcriptions of speech. Here, we must differentiate between extracting the transcription (e.g., using Whisper [6]) and analyzing its content (e.g., using spaCy [7]).
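To make the diarization-level descriptors above more concrete, the following minimal sketch derives speaking time, participant ratios, and turn counts from a list of speaker segments. The segment layout (speaker label, start, end) and the function name `speaker_features` are assumptions made for illustration; they do not reproduce the output format of PyAnnote or any other specific tool.

```python
from collections import defaultdict

# Hypothetical diarization output: (speaker label, start in seconds, end in seconds).
# Real tools emit richer structures; this tuple layout is an assumption.
segments = [
    ("teacher", 0.0, 12.0),
    ("student_1", 12.0, 15.0),
    ("teacher", 15.0, 25.0),
    ("student_2", 25.0, 30.0),
]


def speaker_features(segments):
    """Return talk time, share of total talk time, and turn count per speaker."""
    talk_time = defaultdict(float)
    turns = defaultdict(int)
    previous = None
    for speaker, start, end in sorted(segments, key=lambda s: s[1]):
        talk_time[speaker] += end - start
        # A new turn starts whenever the active speaker changes.
        if speaker != previous:
            turns[speaker] += 1
        previous = speaker
    total = sum(talk_time.values())
    return {
        speaker: {
            "talk_time": talk_time[speaker],
            "ratio": talk_time[speaker] / total if total else 0.0,
            "turns": turns[speaker],
        }
        for speaker in talk_time
    }


features = speaker_features(segments)
```

Because such descriptors depend only on who speaks when, they can be computed without access to the spoken content itself.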

Despite their varying degrees of abstraction, all these features share a common origin: they are extracted from audio recordings of classroom or educational interactions. They serve as inputs for computational analyses aimed at modeling pedagogical behaviors, classroom climate, or learner engagement. Throughout this review, we use the term ‘audio features’ to refer collectively to this spectrum of descriptors, regardless of their proximity to the original waveform.

Although several reviews discuss multimodal analytics in education, none treat audio as a distinct and detailed data source. Most prior studies group audio under a generic “sensor” label or confine it to limited use cases, such as transcription or emotion detection, without examining the full range of acoustic, diarization, or linguistic metrics and their pedagogical implications. As a result, researchers lack a consolidated view of which audio feature types (e.g., prosodic versus syntactic analysis of transcripts) have proven effective for specific tasks, such as real-time feedback or modeling classroom interaction, and which remain challenging. Our review fills this gap by (1) comprehensively disaggregating the different categories of audio features used in educational research, (2) revealing how those metrics are extracted and combined, and (3) exposing methodological and ethical voids, such as limited interpretability and the scarcity of anonymized datasets, that have so far prevented findings from translating into practical solutions.

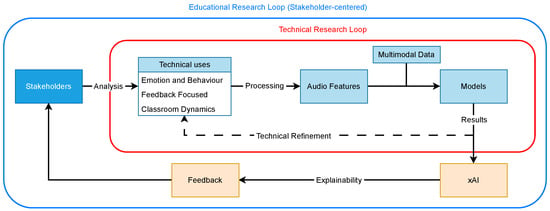

To synthesize the current landscape and expose the structural gaps that motivate this review, we present a conceptual diagram outlining two interconnected but misaligned workflows in the field (Figure 1). The current research loop (in red) captures the dominant, technology-driven approach, where audio features are extracted, processed, and modeled to produce analytical results. While this pipeline has yielded significant technical progress, it often operates in isolation from pedagogical practice. Crucial components, such as model explainability, feedback mechanisms, and, above all, the involvement of educational stakeholders, are frequently omitted from this loop. In contrast, the educational impact loop (in blue) represents a broader, stakeholder-centered vision in which research findings are made explainable and translated into feedback that informs educational practice. The disconnect between these loops, particularly the absence of pedagogical validation and stakeholder engagement, underscores the need for a critical synthesis of how audio features are currently used in educational contexts and how they could be better aligned with real-world needs.

Figure 1.

Conceptual overview of current and idealized research workflows involving audio features in educational contexts. The red loop represents the dominant technical pipeline, which focuses on extracting and modeling audio features but largely excludes explainability, feedback, and the participation of educational stakeholders. The blue loop reflects a more complete, stakeholder-centered perspective, where analysis leads to actionable outcomes through explainable models and feedback mechanisms. The dashed arrow highlights the lack of pedagogical validation connecting research outputs back to educational use cases.

Using audio features in education extends beyond signal processing or speech recognition, as it encompasses the broader challenge of extracting relevant information from audio streams to support computational analysis of educational settings. While previous work has explored trends in data-driven education more generally [8], no existing review has systematically examined how audio-derived data are functionally applied across studies. In this review, we focus on the technological uses of audio features, i.e., how they are applied within computational pipelines to detect, classify, or model phenomena observed in educational contexts. These uses reflect the current technical framing of audio features rather than their pedagogical purpose per se. Our goal is to uncover the functional roles that audio features play in existing research, establishing a typology of use cases that guides the subsequent, more technical questions. This leads to our first question: What are the main technological uses of audio features in educational research? (RQ1).

To comprehensively explore the technical landscape of audio analytics in education, we identify the most commonly used audio features and the methods employed for their extraction. Recent trends have shifted from traditional statistical approaches to AI-driven techniques, which enable the direct use of raw or minimally processed data while maintaining strong performance across diverse tasks [9,10]. Although these elements are central to audio-based analytics, no prior review has offered a systematic categorization of the features and extraction techniques used in educational contexts. With this in mind, we mapped the audio features found in the literature, classified them, and described them in our second research question: What are the most common audio features used in educational studies and how are they extracted? (RQ2).

Educational data are increasingly being collected from a variety of sources, reflecting the inherently multimodal nature of learning environments. Multimodal learning analytics (MMLA) has emerged as a research area that seeks to integrate data from diverse modalities (e.g., audio, video, text, and physiological signals) to gain deeper insight into learning processes [11]. Within this context, audio features are rarely used in isolation; they are often combined with other forms of data to capture different dimensions of classroom interaction and learner behavior. These combinations allow researchers to explore richer representations of educational phenomena, though the methods and rationales for such integration vary widely. This leads us to our third research question: How do researchers combine audio features with other data sources? (RQ3).

As audio features become more prevalent in educational research, a key concern is how they are computationally processed. In recent years, there has been a growing reliance on both traditional machine learning algorithms and deep learning models to handle tasks such as classification, clustering, and prediction [12]. These techniques are often applied to features derived from audio, such as speaker diarization outputs and automatic transcriptions, features that, despite being abstracted through additional processing, still originate from the raw audio stream. While these methods can yield strong performance, their complexity raises concerns about interpretability, which is a crucial factor when insights are intended to inform pedagogical decisions. Although explainable AI (xAI) has gained attention in other domains, its adoption within educational audio analytics remains limited. To examine this relationship between performance and transparency, we ask the following: What techniques are employed to process audio features in educational studies, and to what extent are these solutions interpretable? (RQ4).

Finally, we turn to the practical implications of audio-based research in education by examining the extent to which these studies lead to actionable outcomes in real-world settings. Specifically, we explore whether researchers implement mechanisms to provide feedback to participants, such as teachers or students, based on insights derived from audio analysis. Feedback loops are essential for translating research into practice and fostering impact in educational environments [13]. This forms the basis of our final research question: Which studies provide feedback for participants derived from obtained results? (RQ5).

This systematic review synthesizes the literature on the use of audio features in educational contexts, addressing how these features are applied, extracted, and interpreted across studies. The research questions are organized to reflect a gradual progression, from general trends and uses of audio data in education to the specific types of features most frequently extracted and the techniques used to process them.

This review not only synthesizes the current state of research on audio-based methods in educational contexts but also advances the field through three core contributions:

- Targeted analysis of audio features. Unlike earlier reviews that broadly categorize audio under “multimodal” or “sensor” headings, our review treats audio as a standalone modality and examines the full range of audio features used in educational research, including low-level acoustic properties, linguistic indicators extracted via NLP, and speaker-based metrics obtained through diarization. As far as we are aware, no previous study has provided a systematic identification, categorization, and definition of the audio features employed in educational settings.

- Diagnosis of field-level limitations. While some existing papers describe individual case studies or isolated tools, few have highlighted the systemic obstacles that impede pedagogical impact. Our synthesis identifies four such obstacles: the scarcity of actionable feedback, low interpretability of AI models, fragmented and non-replicable datasets, and limited attention to privacy. These gaps highlight a misalignment between technical capability and practical utility.

- Actionable directions for future research. To advance the field, we propose three strategic directions: (1) the release of anonymized, standardized feature-level datasets; (2) the participatory design of feedback systems that actively involve educational practitioners and pedagogical experts; (3) the use of generative AI, particularly fine-tuned LLMs, to translate analytics into tailored, context-aware guidance for teachers and learners.

Summarizing, our research questions for this systematic review are as follows:

- RQ1: What are the main technological uses of audio features in educational research?

- RQ2: What are the most common audio features used in educational studies, and how are they extracted?

- RQ3: How do researchers combine audio features with other data sources?

- RQ4: What techniques are employed to process audio features in educational studies, and to what extent are these solutions interpretable?

- RQ5: Which studies provide feedback for participants derived from obtained results?

The rest of the paper is organized as follows: Section 2 describes the methodology, including some terminology clarifications, the research questions, databases and search terms, research selection, and the review process. Section 3 presents the analysis and synthesis of our results. Then, we end the paper with an analysis of our findings in Section 4 and conclusions in Section 5.

2. Methodology

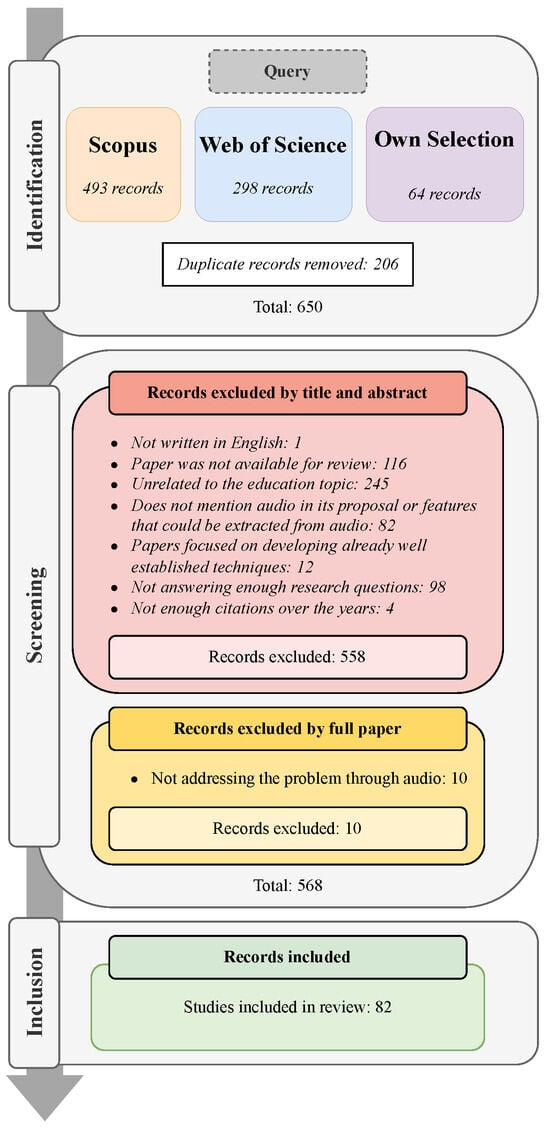

This systematic review was performed in accordance with the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines [14] (see Supplementary Materials), which are widely used for structuring evidence-based syntheses. Figure 2 shows the diagram representing the different stages of our systematic review. The methodology includes the following stages:

Figure 2.

Flow diagram of the PRISMA methodology followed.

2.1. Identification of Research Works

The literature data search was conducted on 27 November 2024. Scopus and Web of Science (WoS) were selected due to their broad coverage of peer-reviewed literature in education, technology, and computational sciences [15], making them appropriate for interdisciplinary reviews.

To perform the search on both databases, we restricted the query to the title and abstract to balance precision and recall, as full-text searches produced an unmanageable number of irrelevant results.

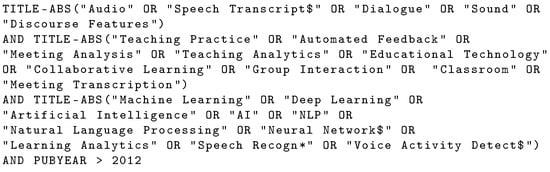

We used a structured query consisting of three conceptual blocks, along with a publication date restriction covering studies published after 2012.

- Data source: This component targets studies where audio serves as a primary or derived source of data. The query includes terms such as audio and sound to capture explicit references to raw audio. To encompass studies that use features extracted from audio rather than the waveform itself, it also includes terms like speech transcript, dialogue, and discourse features. This increased the likelihood of including work analyzing linguistic or prosodic features even when the term “audio” is not explicitly mentioned.

- Educational context: This block captures the environments in which learning interactions occur. Keywords such as collaborative learning, group interaction, and teaching practice reflect classroom-based and peer-to-peer learning scenarios. Additionally, terms like meeting transcription are included to capture studies focusing on structured interactions in educational or academic contexts. This broader scope helps cover both formal classroom settings and informal learning environments such as workshops and seminars.

- Techniques: This component targets the computational methods used to process audio and audio-derived data. The query includes terms related to core technologies such as machine learning, deep learning, and artificial intelligence, along with domain-specific methods like speech recognition, voice activity detection, and natural language processing (NLP). These terms ensure the inclusion of studies applying advanced analytical frameworks. The inclusion of learning analytics further ensures alignment with educational objectives, emphasizing the intersection between computational processing and pedagogical insight.
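The three-block structure described above can be sketched as a small query builder. The sketch assumes the usual convention of joining terms within a block with OR and joining the blocks with AND; the term lists are only the examples named in the text, and the exact syntax and field restrictions used in the actual search are not reproduced here.

```python
# Example terms taken from the block descriptions above; the full term lists
# and the exact field syntax of the real search are not reproduced here.
blocks = {
    "data_source": ["audio", "sound", "speech transcript$"],
    "educational_context": [
        "collaborative learning",
        "group interaction",
        "teaching practice",
    ],
    "techniques": ["machine learning", "deep learning", "speech recogn*"],
}


def build_query(blocks):
    """OR the terms inside each block, then AND the blocks together (assumed logic)."""
    groups = []
    for terms in blocks.values():
        quoted = " OR ".join(f'"{term}"' for term in terms)
        groups.append(f"({quoted})")
    return " AND ".join(groups)


query = build_query(blocks)
print(query)
```

Under this convention, a record is retrieved only if it matches at least one term from every block, which is what keeps the search at the intersection of audio data, educational settings, and computational techniques.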

The specific query terms used in this systematic review are presented in Figure 3. To account for terminological variation in a still-evolving research domain, we incorporated wildcard characters into search terms such as Speech Transcript$ and Speech Recogn*. The dollar sign ($) and asterisk (*) act as wildcard operators, allowing retrieval of multiple morphological variants of a base term. For instance, ‘Transcript$’ allows for both ‘Transcript’ and ‘Transcription’, while ‘Recogn*’ retrieves terms like ‘Recognize’, ‘Recognition’, and similar word forms. This strategy increases recall, ensuring that semantically related works are not excluded due to minor wording differences. The initial search returned a total of 791 records: 493 from Scopus and 298 from Web of Science (WoS). To enhance completeness, we added 64 relevant papers that were not captured by the automated query but were identified through snowballing by reviewing the references and citations of initially selected articles, as well as through prior work by the authors. All additional papers were manually verified to meet the same inclusion criteria. After removing 206 duplicate entries from the combined dataset, the final corpus included 650 unique studies.

Figure 3.

Boolean search query used to identify relevant studies in Scopus and Web of Science. The query targets audio-related terms, educational contexts, and computational techniques in titles and abstracts, restricted to publications after 2012. Wildcards were included to account for morphological variation.
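The merging and duplicate-removal step described in this subsection can be illustrated with a short sketch. The matching rule used here (DOI when present, otherwise a normalized title) is an assumption, since the text does not specify how duplicates were detected; the records and field names are likewise hypothetical.

```python
import re

# Hypothetical records; field names ("title", "doi", "source") are illustrative.
records = [
    {"title": "Audio Features in Classrooms", "doi": "10.1000/abc", "source": "Scopus"},
    {"title": "Audio features in classrooms.", "doi": "10.1000/ABC", "source": "WoS"},
    {"title": "Speaker Diarization for Group Work", "doi": None, "source": "Scopus"},
    {"title": "Speaker diarization for group work", "doi": None, "source": "snowballing"},
    {"title": "Question Detection in Teacher Talk", "doi": "10.1000/xyz", "source": "WoS"},
]


def record_key(record):
    """Prefer the DOI as identity; otherwise fall back to a normalized title."""
    if record["doi"]:
        return ("doi", record["doi"].lower())
    title = re.sub(r"[^a-z0-9]+", " ", record["title"].lower()).strip()
    return ("title", title)


def deduplicate(records):
    """Keep the first record seen for each key and count the rest as duplicates."""
    seen = {}
    for record in records:
        seen.setdefault(record_key(record), record)
    unique = list(seen.values())
    return unique, len(records) - len(unique)


unique, removed = deduplicate(records)
```

In practice, a rule of this kind would still need manual checking, because the same paper can appear with and without a DOI across databases.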

2.2. Screening of Articles

To ensure the relevance and quality of the included papers, we established a set of mandatory exclusion criteria designed to filter out studies misaligned with the review’s objectives or lacking academic rigor. The screening process was conducted independently by two of the co-authors, using the available abstracts as the basis for screening. If reviewers were not sure whether any of the criteria applied, they used the full text to verify. Discrepancies were resolved through discussion until a consensus was reached. The exclusion criteria were applied sequentially, and a study was excluded as soon as it met any one of them:

- The paper was not written in English (excluded owing to language constraints in the review team).

- The full text of the paper was not accessible for review, either due to restricted institutional access, paywall limitations, or the unavailability of a preprint or author-supplied version upon request.

- The paper did not focus on educational settings or learning processes as a primary context or objective.

- The paper used neither audio nor features that could be extracted from audio (e.g., text transcriptions).

- The paper focused exclusively on technical improvements to well-established audio processing methods (e.g., diarization or speech transcription) without applying them to educational data.

- The paper did not address at least one of our five core research questions. During title/abstract screening, a paper whose title or abstract showed no substantive discussion of audio-feature methodology or feedback-oriented use of audio was excluded under “Not answering enough research questions”. A total of 98 papers fell into this category. For instance, some studies mentioned the presence of audio but did not analyze or extract features from it, nor did they integrate it into the research objectives in a meaningful way.

- The paper was published in 2022 or earlier and had no citations on Google Scholar as of 4 December 2024. Given the volume of records and the goal of identifying impactful contributions, we applied this criterion as a secondary filtering mechanism to exclude papers that had not generated scholarly attention over time. This step affected only four papers.
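The sequential application of the criteria above can be sketched as an ordered filter in which each record is assigned to the first exclusion reason it meets. The criterion labels, record fields, and sample records below are hypothetical and cover only a subset of the criteria; the sketch shows the control flow, not the authors' actual tooling.

```python
from collections import Counter

# Ordered criteria: each pairs a label with a predicate that returns True when
# the record should be excluded. Labels and record fields are hypothetical.
CRITERIA = [
    ("not in English", lambda r: r["language"] != "en"),
    ("full text unavailable", lambda r: not r["full_text"]),
    ("not educational", lambda r: not r["educational"]),
    ("no audio-derived data", lambda r: not r["uses_audio"]),
]


def screen(records):
    """Apply criteria in order; a record is excluded at the first one it meets."""
    included, reasons = [], Counter()
    for record in records:
        for label, should_exclude in CRITERIA:
            if should_exclude(record):
                reasons[label] += 1
                break
        else:
            # No criterion matched, so the record is retained.
            included.append(record)
    return included, reasons


records = [
    {"id": 1, "language": "en", "full_text": True, "educational": True, "uses_audio": True},
    {"id": 2, "language": "es", "full_text": False, "educational": True, "uses_audio": True},
    {"id": 3, "language": "en", "full_text": True, "educational": False, "uses_audio": False},
]

included, reasons = screen(records)
```

Because each record is counted under a single reason, the per-criterion counts sum to the total number of excluded records, which is the property a PRISMA flow diagram relies on.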

2.3. Inclusion of Papers for Review

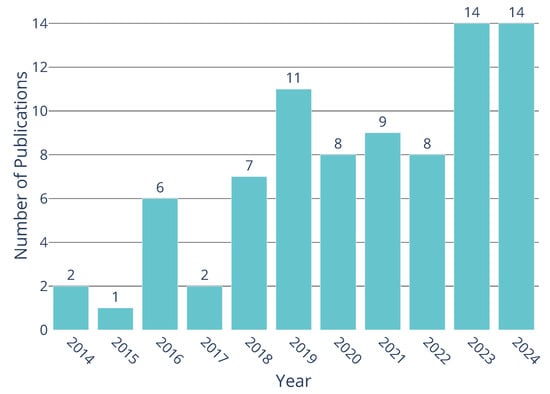

After applying the exclusion criteria to the 650 unique records, a final sample of 82 studies was retained for review. The final set comprised 43 journal articles, 37 conference papers, 1 technical report, and 1 book chapter. A detailed overview of all 82 studies, including the author, title, year, educational level, and learning context, is provided in Appendix A. Regarding the educational level analyzed in the papers of this review, 42 were focused on K-12, 27 on higher education, 4 focused on toddlers, 3 included multiple educational levels, and 6 did not specify the educational level where the research took place. Figure 4 shows the annual distribution of selected studies by publication year. The number of studies using audio-related methodologies generally increased over the 2014–2024 period. This trend reflects the broader emergence of AI technologies capable of processing unstructured data, such as audio and images, which are increasingly adopted in various educational and analytical domains [16].

Figure 4.

Number of selected studies per year of publication.
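As a quick consistency check on the sample composition reported in this subsection, the counts can be tallied and converted to shares. The counts are those given in the text; the helper name `shares` is an assumption.

```python
# Counts as reported in Section 2.3.
publication_types = {
    "journal article": 43,
    "conference paper": 37,
    "technical report": 1,
    "book chapter": 1,
}
educational_levels = {
    "K-12": 42,
    "higher education": 27,
    "toddlers": 4,
    "multiple levels": 3,
    "not specified": 6,
}


def shares(counts):
    """Return each category's percentage of the total, rounded to one decimal."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


# Both breakdowns should account for all 82 retained studies.
assert sum(publication_types.values()) == 82
assert sum(educational_levels.values()) == 82

level_shares = shares(educational_levels)
type_shares = shares(publication_types)
```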

2.4. Data Analysis

Once all articles were selected, we prepared an Excel spreadsheet with columns corresponding to each element required to address our research questions. Two researchers independently coded each article by filling in all columns based on the full-text information. For each study, we extracted all available data relevant to our RQs, rather than selecting only subsets of results, ensuring that every feature, method, or feedback format mentioned was captured. After the initial coding, we held consensus meetings to compare both coders’ entries, resolve any discrepancies, and harmonize the category labels (tags) so that the final structure accurately reflected the annotated data in each column. The agreed-upon version of the spreadsheet was then used to generate preliminary tables and figures, from which the narrative synthesis of results was developed.
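The comparison of the two coders' entries ahead of the consensus meetings can be sketched as a cell-by-cell diff. The dictionary layout, study identifiers, and tags below are hypothetical stand-ins for the spreadsheet columns, and the simple agreement rate is shown only for illustration; it is not a figure reported in this review.

```python
# Hypothetical coding sheets: study id -> {column name -> assigned tag}.
coder_a = {
    "S01": {"use": "feedback", "feature_type": "acoustic"},
    "S02": {"use": "classroom dynamics", "feature_type": "diarization"},
    "S03": {"use": "emotion and behavior", "feature_type": "linguistic"},
}
coder_b = {
    "S01": {"use": "feedback", "feature_type": "acoustic"},
    "S02": {"use": "emotion and behavior", "feature_type": "diarization"},
    "S03": {"use": "emotion and behavior", "feature_type": "acoustic"},
}


def find_discrepancies(a, b):
    """List every (study, column) cell where the two coders disagree."""
    rows = []
    for study in sorted(a.keys() & b.keys()):
        for column in sorted(a[study].keys() | b[study].keys()):
            value_a = a[study].get(column)
            value_b = b[study].get(column)
            if value_a != value_b:
                rows.append((study, column, value_a, value_b))
    return rows


discrepancies = find_discrepancies(coder_a, coder_b)
total_cells = sum(len(columns) for columns in coder_a.values())
# Share of cells on which both coders assigned the same tag.
agreement = 1 - len(discrepancies) / total_cells
```

The resulting list gives the consensus meeting a concrete agenda: each row is one cell that needs to be discussed and harmonized.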

Regarding the analysis of each research question, every paper was considered for every research question as long as the paper provided some information regarding that specific research question.

2.5. Synthesis of Results

Given the substantial heterogeneity across study designs, types of audio-based features, and educational outcomes, we did not perform a formal meta-analysis. Instead, we adopted a narrative synthesis approach to integrate findings. This choice was driven by three main factors: (1) variability in audio-feature extraction methods (e.g., raw waveform vs. transcript-based vs. prosodic features), (2) diverse machine learning and AI techniques applied, and (3) differing educational contexts and measured outcomes (e.g., classroom participation vs. automated feedback accuracy). By using a structured narrative framework, we grouped studies according to their primary research questions, types of audio data, and analytical methods. Within each group, we summarized key findings, methodological strengths, and limitations. This narrative strategy allowed us to highlight patterns and gaps in the literature.

Within this narrative framework, we also explored possible sources of heterogeneity by comparing studies across factors such as educational level (e.g., K–12 vs. higher education), modality (in-person vs. online), and type of audio features used (e.g., prosodic features vs. transcription-based features).

2.6. Assessment of Bias and Certainty

We qualitatively acknowledge the potential for publication bias, since this emerging area often gives greater visibility to studies reporting positive or innovative findings. Therefore, we conducted additional “snowball” searches (reviewing references and citations) to capture relevant papers that might not appear in standard databases, aiming to reduce bias toward highly cited or “headline” studies.

Additionally, we are aware of the risk that some null or less-“exciting” results may remain under-represented. This critical stance helps readers interpret our conclusions in light of possible reporting biases.

To gauge confidence in the collective findings, we applied a qualitative certainty assessment based on three key factors: (1) the presence of multiple independent studies reporting similar results, (2) transparency and reproducibility of methods, and (3) adequate sample sizes or dataset volumes.

3. Results

The following subsections present the findings of our systematic review, structured around five research questions. Each subsection synthesizes evidence from the selected studies to provide a focused analysis of the role of audio features in educational contexts.

3.1. What Are the Main Technological Uses of Audio Features in Educational Research? (RQ1)

Before presenting our classification, we clarify what we mean by the technological use of audio features. In this review, a use refers not to the pedagogical goal per se but to the function that audio-derived data fulfills within the study’s analytical design. This includes tasks such as identifying question types, detecting emotional cues, inferring group behavior, or producing automated feedback. The key contribution lies in how audio features drive analytical understanding and facilitate automated processes.

This perspective allows us to frame audio usage as a continuum of increasing abstraction. At one end are studies that focus on low-level classification tasks (e.g., detecting specific interventions); at the other end are those that synthesize audio-derived insights into feedback intended for stakeholders.

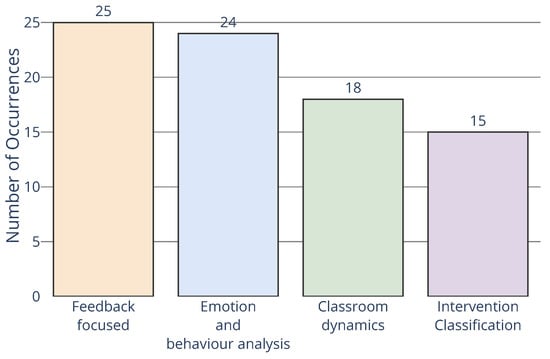

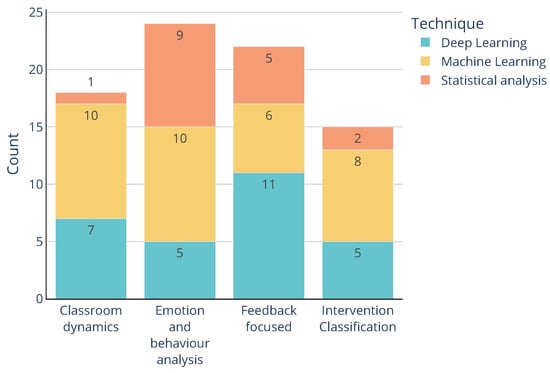

Our classification scheme thus aims to make sense of the current research landscape by analyzing what problems audio features are being applied to solve. While many studies touch on multiple goals, we assigned each to a single primary category to support consistent comparison. The distribution of studies across these categories is shown in Figure 5.

Figure 5.

Main uses detected in the analyzed literature (RQ1).

The most common applications fall into two categories: feedback provision and behavioral/emotional analysis, which together account for more than half of the reviewed studies.

A smaller but still significant portion of the literature (18 out of 82 studies) concentrates on classroom dynamics, particularly in characterizing classroom climate and identifying teaching strategies. Finally, intervention classification emerges as the least frequent category in our review. These studies focus on assigning teacher or student interventions to predefined categories established during the research process.

The four categories follow a conceptual hierarchy based on the level of abstraction of the information extracted. At the lowest level, studies on intervention classification focus on isolated speech events; these feed into analyses of emotions and behaviors, which in turn contribute to understanding broader classroom dynamics. The highest level of abstraction is found in feedback-oriented studies, which synthesize insights from the previous categories to inform educational decision-making.

3.1.1. Intervention Classification

This category encompasses studies focused on the automated classification of individual interventions, typically utterances made by teachers or students. These approaches aim to identify pedagogically relevant patterns at the utterance level, such as the presence of questions, argument components, or instructional strategies.

A common objective is question detection, with early work such as [17] developing machine learning models to identify teacher questions that promote student participation. This direction has evolved to emphasize the detection of authentic questions, as explored by [18,19] and further discussed in [20,21].

Beyond questions, other studies apply natural language processing (NLP) techniques to classify rhetorical or argumentative structures. For instance, Lugini and Litman [22] investigate the identification of argument components within student interventions to better understand the structure of classroom discourse. Others, like [23], use low-level acoustic features for the classification.

Although these studies focus on isolated speech units, their findings often serve as the foundation for higher-level analyses of classroom interaction and engagement.

3.1.2. Emotion and Behavior Analysis

This category encompasses studies that analyze group behavior, student engagement, and emotional dynamics in classroom settings. Audio features are leveraged to extract information about interaction patterns, social regulation processes, and affective states, offering insights into how students collaborate and how emotions manifest during learning.

A subset of studies focuses on collaborative behavior and group dynamics. For instance, Dang et al. [24] investigate socially shared regulation in small groups, emphasizing the pedagogical role of silent pauses. Other studies, such as [25], aim to identify different interaction phases (cognitive, metacognitive, and social) during group work. Diarization-based methods are employed by [26] to analyze participation while preserving student privacy.

Another line of work targets engagement and attention. Refs. [27,28] examine acoustic cues to infer students’ interest and concentration levels throughout lessons, aiming to identify moments of disengagement or heightened attention.

Emotional analysis represents a parallel but related focus. Hou et al. [29] examine classroom expressions of encouragement and warmth, while Yuzhong [30] explores student emotions in politically oriented instruction. Teacher emotions are also considered in [31], which classifies emotional tones in teacher speech to understand how effective expression contributes to classroom climate.

Taken together, these studies illustrate how behavioral and emotional insights derived from audio features can illuminate aspects of classroom functioning that are not easily observable through traditional metrics. By capturing how students interact, regulate, and respond emotionally, this category provides critical inputs for the design of supportive learning environments. Moreover, these analyses often serve as a foundational layer for more complex educational interventions, such as those aimed at providing personalized feedback or informing pedagogical adjustments.

3.1.3. Classroom Dynamics

Classroom dynamics captures the evolving nature of classroom interactions by focusing on how teacher strategies, student responses, and the structure of classroom activities unfold over time. Unlike studies that examine static emotional states or isolated events, these works investigate how multiple signals (e.g., verbal, acoustic, or behavioral) interact across temporal sequences to shape the overall learning environment.

A significant focus within this category is the assessment of classroom climate. For example, James et al. [32] combine audio and video features to model the overall atmosphere of a class session. This line of work continues in [33,34], where the authors use the Classroom Assessment Scoring System (CLASS) framework to automatically classify sessions as exhibiting either positive or negative climate characteristics.

Other studies emphasize the temporal structure of lessons and how teaching unfolds in real time. Uzelac et al. [35], for instance, use student feedback to evaluate the perceived quality of lectures, implicitly assessing the classroom dynamic from the learner’s perspective. In more technical approaches, Siddhartha et al. [36] explore the detection of classroom events under noisy conditions, while Cánovas and García [37] apply audio diarization techniques to classify different teaching methodologies based on the structure of the interactions observed.

These studies reveal that classroom dynamics are not reducible to isolated actions or emotions but rather emerge from the ongoing interplay of multiple pedagogical and communicative signals. By capturing this temporal complexity, the works in this category provide a crucial lens for understanding teaching effectiveness and learning climate, factors that can guide the design of more adaptive and responsive educational practices.

3.1.4. Feedback Focused

This category includes studies that explicitly use audio-derived information to deliver feedback to educational stakeholders. Unlike works that remain at the descriptive or observational level, these studies aim to close the loop by translating insights into instructional guidance for teachers or learning support for students.

A prominent line of work centers on teacher feedback. Refs. [38,39] quantify teachers’ uptake of student ideas, highlighting how conversational patterns can either reinforce or hinder student contributions. Similarly, Cánovas et al. [40] investigate how competitive versus non-competitive response systems influence teacher behavior, providing reflective feedback that helps educators adjust their instructional strategies.

Other studies focus more narrowly on improving questioning techniques. For example, Liu et al. [41] analyze teachers’ focus questions and provide targeted feedback to enhance student engagement. In the same vein, Hunkins et al. [42] examine how specific teacher interventions affect students’ motivation, sense of identity, and classroom belonging, while Dale et al. [43] examine teacher speech to enable self-reflection and support instructional improvement.

On the student side, feedback mechanisms are used to guide learning progress. Gerard et al. [44] introduce an automated support system that helps students answer science questions, while Varatharaj et al. [45] propose a predictive model to assess fluency and accuracy in language learning. These tools aim to replicate or augment teacher evaluations, pointing learners to specific areas for improvement.

3.2. What Are the Most Common Audio Features Used in Educational Studies, and How Are They Extracted? (RQ2)

The studies examined employ a diverse array of audio features, which can be systematically grouped into three categories: acoustic features, diarization-based features, and NLP-based features. These categories not only reflect the level of abstraction of the extracted information but also correspond to different analytical strategies employed across studies. To support interpretability, we provide a structured summary of representative features within each category, offering readers a reference point for the more technical descriptions that follow. To our knowledge, this synthesis constitutes the first structured mapping of audio features and their extraction methods within educational research contexts.

3.2.1. Acoustic Features

Acoustic features are computed directly from the raw audio waveform, capturing attributes such as pitch, energy, spectral properties, and Mel-frequency cepstral coefficients (MFCCs). These features allow researchers to analyze classroom environments without relying on text transcriptions, making them particularly useful in noisy settings or when privacy concerns limit transcription. Time-frequency representations (e.g., Mel-spectrograms) are often computed using established audio and signal processing tools such as Praat, PyAudio, openSMILE, or librosa [31,36,46,47,48].

The main applications of acoustic features relate to classroom climate and emotion and behavior analysis. Variations in prosodic features, such as pitch, energy, or spectral envelopes, have been associated with teacher enthusiasm or student disengagement [49], as well as with shifts in instructional delivery [34,50]. In some cases, acoustic indicators are combined with NLP techniques to refine the detection of emotional states [51] or used as the basis for diarization techniques [52].

Extraction workflows for acoustic features typically rely on digital signal processing (DSP) techniques to compute metrics such as MFCCs, pitch, energy, and zero-crossing rate (ZCR). More recent approaches leverage deep learning models, such as wav2vec2 or OpenL3, to generate acoustic embeddings that capture complex prosodic and paralinguistic information [36,53]. These features have also proven effective for tasks such as teaching methodology classification [10,54] or as a basis for voice activity detection (VAD), which supports downstream processes like diarization [55].
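To make the DSP step concrete, the following minimal sketch computes two of the frame-level descriptors mentioned above, short-time energy and zero-crossing rate, using only the Python standard library. It is an illustration rather than the workflow of any reviewed study: the synthetic 440 Hz tone, the 16 kHz sampling rate, and the 25 ms window with a 10 ms hop are assumptions chosen for the example, and the helper names are hypothetical. In practice, researchers would typically obtain these values (and MFCCs or pitch) from a dedicated toolkit.

```python
import math


def frame_signal(samples, frame_len, hop_len):
    """Split a list of samples into overlapping frames (a trailing partial frame is dropped)."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop_len)]


def rms_energy(frame):
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


# Assumed input for illustration: one second of a 440 Hz tone sampled at 16 kHz.
SAMPLE_RATE = 16000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE)
        for n in range(SAMPLE_RATE)]

# 25 ms windows (400 samples) with a 10 ms hop (160 samples).
frames = frame_signal(tone, frame_len=400, hop_len=160)
features = [(rms_energy(f), zero_crossing_rate(f)) for f in frames]
```

Each entry of `features` is one (energy, ZCR) pair per frame; statistics over these sequences (means, variances, ranges) are the kind of engineered descriptors that downstream classifiers consume.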

3.2.2. Diarization-Based Features

Diarization-based features aim to determine who is speaking and when, enabling detailed turn-taking analyses within classroom interactions. By segmenting audio into speaker-specific tracks, these features capture metrics such as speaking time, the number of interventions, and the distribution of talk among participants [56]. Voice activity detection (VAD) often serves as a preliminary step to isolate speech segments from silence or background noise [57]. These segments are then grouped into speaker clusters using acoustic similarity, typically via embedding-based techniques (e.g., x-vectors) and clustering algorithms. This turn-level information supports the analysis of engagement patterns, such as who initiates discussions or how often students respond to peers [58].

The main applications of diarization-based features fall within emotion and behavior analysis, particularly in the study of classroom participation and collaboration. Knowing who talks and how often provides insight into whether students engage equitably or whether discussions are dominated by specific individuals [59,60]. These same metrics are also used in feedback-focused studies where the ratio of teacher-to-student speaking time or the distribution of teacher attention across the class is quantified [61]. When combined with emotional or linguistic indicators, diarization features can contribute to the detection of classroom climate patterns, such as identifying which speakers tend to offer encouragement or whether teacher-led sequences reflect particular instructional strategies.
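The participation metrics described above can be derived from diarization output with very little code. The sketch below assumes that a diarization tool has already produced a list of (speaker, start, end) segments; the segment values, the speaker labels, and the function name `participation_metrics` are hypothetical and serve only to illustrate the computation of talk-time, number of turns, talk share, and the teacher-to-student ratio.

```python
from collections import defaultdict

# Hypothetical diarization output: (speaker label, start in seconds, end in seconds).
segments = [
    ("teacher", 0.0, 12.0),
    ("student_1", 12.0, 15.0),
    ("teacher", 15.0, 20.0),
    ("student_2", 20.0, 22.0),
    ("student_1", 22.0, 23.0),
]


def participation_metrics(segments):
    """Aggregate talk-time, turn count, and talk share per speaker."""
    talk_time = defaultdict(float)
    turns = defaultdict(int)
    for speaker, start, end in segments:
        talk_time[speaker] += end - start
        turns[speaker] += 1
    total = sum(talk_time.values())
    return {
        speaker: {
            "talk_time": talk_time[speaker],
            "turns": turns[speaker],
            "share": talk_time[speaker] / total,
        }
        for speaker in talk_time
    }


metrics = participation_metrics(segments)

# Teacher-to-student speaking-time ratio, treating every non-teacher label as a student.
teacher_time = metrics["teacher"]["talk_time"]
student_time = sum(m["talk_time"] for s, m in metrics.items() if s != "teacher")
teacher_student_ratio = teacher_time / student_time
```

Because only labels and timestamps are used, metrics of this kind can be shared without exposing what was said, which is one reason diarization-based features are attractive when privacy is a concern.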

Diarization pipelines typically include a voice activity detection module, followed by segmentation and speaker clustering. These steps are commonly implemented using automated toolkits such as pyannote, Kaldi, or Whisper-based diarization, often complemented by pre-trained speaker embedding models. To improve accuracy, some studies incorporate manual corrections or use auxiliary inputs such as individual microphones or spatial data [9,62].
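As a rough illustration of the first stage of such a pipeline, the sketch below implements a simple energy-threshold VAD that turns per-frame energies into speech segments. This is a deliberately simplified stand-in and not the method used by pyannote, Kaldi, or any other toolkit named above, which rely on trained models; the function name, threshold, hop size, and energy values are all assumptions made for the example.

```python
def energy_vad(frame_energies, threshold, hop_s):
    """Return (start_s, end_s) speech segments from per-frame energies.

    A frame counts as speech when its energy is at or above `threshold`;
    consecutive speech frames are merged into one segment.
    """
    segments = []
    start = None
    for i, energy in enumerate(frame_energies):
        if energy >= threshold and start is None:
            start = i  # speech onset
        elif energy < threshold and start is not None:
            segments.append((start * hop_s, i * hop_s))  # speech offset
            start = None
    if start is not None:  # audio ended while speech was still active
        segments.append((start * hop_s, len(frame_energies) * hop_s))
    return segments


# Assumed per-frame energies, one value every 0.5 s.
energies = [0.01, 0.02, 0.30, 0.35, 0.28, 0.02, 0.01, 0.40, 0.38, 0.02]
speech = energy_vad(energies, threshold=0.1, hop_s=0.5)
```

In a full pipeline, each detected segment would then be mapped to a speaker embedding and clustered, which is the step that assigns the speaker labels used for turn-taking analysis.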

Notably, five studies combine diarization and acoustic features, as reported in Table 1. These combinations are often used to detect engagement patterns or to differentiate between teaching methodologies [9,63]. By aligning speaker turns with acoustic variations, these studies offer a more comprehensive understanding of classroom dynamics than using either feature type alone. For instance, detecting frequent short student utterances alongside shifts in pitch and energy may signal active but uneven participation, while extended teacher monologues with low vocal variability might suggest a more transmissive teaching style. Such multimodal insights provide a nuanced lens through which researchers can infer not just who is speaking, but how that speaking behavior relates to pedagogical effectiveness.

Table 1.

Number of papers with every feature category and combination found (RQ2).

3.2.3. NLP-Based Features

Natural language processing (NLP)-based features are often derived from the textual representation of speech. Typically, the first step involves automatically transcribing classroom oral interactions using a speech recognition system (e.g., [64,65]), although in some cases, manual transcriptions are used [66,67,68]. The quality of transcription, whether automated or manual, critically influences the reliability of downstream NLP analyses. Based on these transcripts, linguistic analysis techniques are applied to extract various indicators, such as word frequency, syntactic complexity, question usage, or keyword identification [18,19,38,69].
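A minimal sketch of such transcript-level indicators is given below. It assumes a single transcribed utterance as input and computes token counts, first-person pronoun counts, interrogative-word counts, and a naive question flag. The function name, the interrogative word list, and the question heuristic are assumptions for illustration; in particular, ASR output frequently lacks punctuation, so a question-mark test of this kind would be unreliable on raw automatic transcripts.

```python
import re
from collections import Counter

# Assumed, non-exhaustive list of English interrogative words.
INTERROGATIVES = {"who", "what", "when", "where", "why", "how", "which"}


def lexical_indicators(utterance):
    """Compute simple count-based indicators for one transcribed utterance."""
    tokens = re.findall(r"[a-z']+", utterance.lower())
    counts = Counter(tokens)
    starts_with_interrogative = bool(tokens) and tokens[0] in INTERROGATIVES
    return {
        "n_tokens": len(tokens),
        "first_person_singular": counts["i"],
        "first_person_plural": counts["we"],
        "interrogatives": sum(counts[w] for w in INTERROGATIVES),
        # Naive heuristic: trailing question mark or leading interrogative word.
        "is_question": utterance.strip().endswith("?") or starts_with_interrogative,
    }


example = lexical_indicators("Why do we think I got a different answer?")
```

Aggregating these indicators per speaker or per lesson segment yields the kind of word-frequency and question-usage features described above.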

Many studies focus primarily on examining how teachers formulate questions, provide feedback, or acknowledge student contributions [70,71]. This emphasis on linguistic dynamics allows for the analysis of teacher support (feedback-focused), as well as aspects of classroom climate and underlying emotions (emotion and behavior) when expressions of encouragement or positive comments are detected [42].

Beyond simple counts of specific words or phrases (e.g., “I”, “we”, or interrogative words) [19], some studies adopt more advanced NLP approaches, such as TF-IDF, transformer-based classification, or semantic embeddings, to classify intervention types, identify authentic questions, or map discourse flow [38,72]. These methodologies enable researchers to detect specific “pedagogical moves” (e.g., rephrasing, requests for justification, or corrective feedback).
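To illustrate the simplest of these approaches, the sketch below computes TF-IDF weights for a handful of utterances using only the standard library. It uses the basic unsmoothed formulation, tf(t, d) · log(N / df(t)), which differs from the smoothed variants implemented in common libraries; the example utterances and the function name `tf_idf` are hypothetical.

```python
import math
from collections import Counter

# Hypothetical transcribed teacher utterances.
utterances = [
    "why do you think the ice melts faster",
    "open your books to page ten",
    "can you explain why that happens",
]


def tf_idf(docs):
    """Return one {term: weight} dict per document (unsmoothed TF-IDF)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


weights = tf_idf(utterances)
```

Terms that occur in few utterances (here, "books") receive higher weights than terms shared across utterances (here, "why"), and the resulting vectors can be passed to a classifier of intervention types.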

The processing pipeline often begins with an automatic speech recognition system (e.g., Google Speech-to-Text, Otter.ai, IBM Watson, or Whisper) that generates the transcription [64,73]. Next, NLP algorithms are applied to extract linguistic features (e.g., keyword counts, sentiment analysis, grammatical tagging, or discourse segmentation). In more complex cases, deep learning models such as BERT are commonly employed for question classification and semantic encoding, while recurrent architectures (e.g., LSTM) are often used for emotion recognition or discourse modeling [58,74,75,76].

Finally, as with acoustic and diarization features, NLP features can also be combined with the other feature types, as shown in Table 1. The combination with acoustic features is more common than the combination with diarization features, as diarization information is typically already integrated into ASR systems that identify individual speakers. Examples of this integration include analyzing socially shared regulation in collaborative learning or understanding how teacher interventions affect student motivation [24,42].

This range of NLP-based strategies provides researchers with tools to quantify participation and interaction (emotion and behavior analysis), as well as to uncover patterns of teacher feedback and their potential effect on student performance (feedback-focused). Furthermore, NLP is the main group of features used in our defined intervention classification category. Given that verbal content is central to most educational exchanges, NLP-based features offer perhaps the most direct lens into instructional intent and pedagogical nuance, explaining their dominant role across multiple use categories.

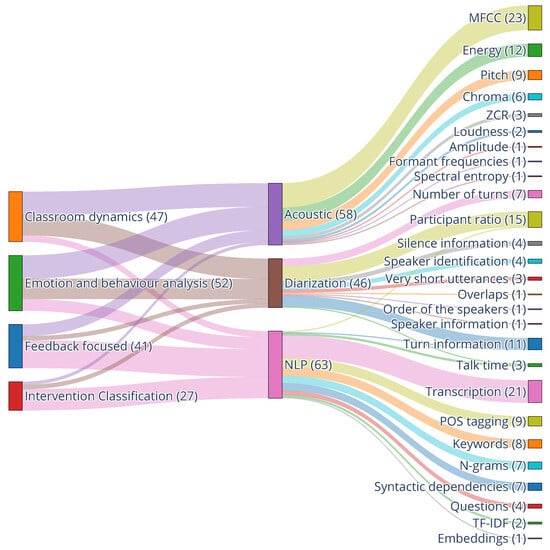

To provide a comprehensive overview, we constructed a Sankey diagram (Figure 6) to visualize how the usage categories defined in RQ1 (e.g., feedback-focused and emotion and behavior analysis) relate to the audio feature types employed across studies. The diagram illustrates how studies connect these categories to the primary audio feature groups (acoustic, diarization, and NLP-based) and, subsequently, to specific extracted features, such as MFCCs, transcriptions, and participant ratios.

Figure 6.

Relationships between types of usage categories (RQ1) and types of audio features identified (RQ2).

It is important to note that this visualization reflects every unique combination of usage and feature types; thus, the total number of connections exceeds the number of studies included. A disaggregated summary of feature counts by usage category is presented in Table 2. Notably, classroom dynamics and emotion and behavior analysis are more frequently associated with acoustic and diarization features, reflecting a focus on capturing environmental and interactional dynamics. In contrast, studies under feedback-focused and intervention classification categories predominantly rely on NLP features, emphasizing the analysis of linguistic content.

Table 2.

Number of papers that use each kind of feature by RQ1 category (RQ2).

A summary of the most common acoustic, diarization, and NLP-based features and their functions is presented in Table 3.

Table 3.

Description of the main features identified in the literature for acoustic, diarization, and natural language processing (NLP) approaches (RQ2).

3.3. How Do Researchers Combine Audio Features with Other Data Sources? (RQ3)

Audio features, while rich in information, are often insufficient to capture the full complexity of classroom interactions on their own. Educational settings are inherently multimodal, involving not only spoken language but also gestures, visual cues, physiological signals, and contextual data. To address this, researchers frequently integrate audio with other data sources, a practice widely recognized under the umbrella of multimodal learning analytics (MMLA) [11,77].

This integration is not merely additive but complementary: while audio may capture intonation or turn-taking, video can provide facial expressions and body posture, and log data can contextualize student actions and engagement. Machine learning models, particularly those based on deep learning, have enabled the joint modeling of such heterogeneous signals [78].

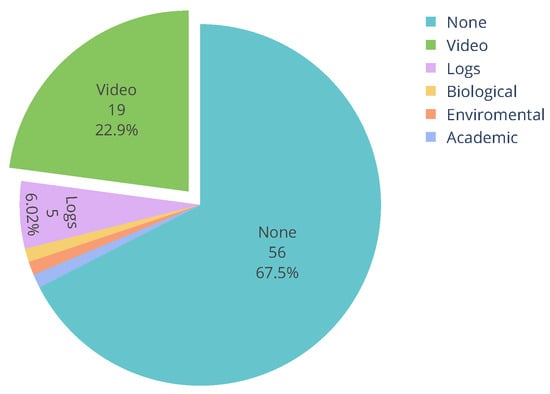

In our review, we found that nearly one-third of the analyzed studies adopt a multimodal approach, most commonly combining audio with video features. Other studies also incorporate contextual, environmental, biological, or academic performance data. Figure 7 shows the proportion of multimodal studies, while Table 4 summarizes the frequency and function of each data type across the reviewed literature.

Figure 7.

Distribution of analyzed papers that use a multimodal approach (RQ3).

Table 4.

Types of non-audio features used in multimodal studies and their role in modeling or support tasks (RQ3).

It is important to note that not every educational task requires multimodal data. In many cases, audio alone suffices to answer the research questions, for example, identifying teacher question types or measuring talk-time distributions. Adding video, physiological signals, or logs could enrich the analysis but also raise the cost and complexity of data collection (e.g., camera setup, synchronization, and classroom consent) and processing. Therefore, although multimodal pipelines are on the rise thanks to modern deep-learning models, a majority of studies remain unimodal because their core goals can be met with audio-only features, which are faster, cheaper, and easier to obtain in real-world school settings.

3.3.1. Combination of Audio and Video Features

Several studies integrate audio and video features to build richer representations of classroom dynamics. While audio captures elements such as speech content, turn-taking, and prosody, video provides complementary signals, including facial expressions, gaze, and posture, offering insights that are otherwise inaccessible through audio alone.

Some works use video primarily as a tool to support the labeling or segmentation of audio data. For example, D’Angelo and Rajarathinam [57] combine diarized audio with video labels to analyze teaching assistant interventions during collaborative problem-solving, allowing for detailed mapping of intervention timing and response. Similarly, refs. [56,60,79,80] use video to segment turn-taking episodes in engineering classrooms, contextualizing audio-based interaction markers with visual cues.

Other studies extract concrete video features that are combined with audio in multimodal machine learning models. For instance, Ramakrishnan et al. [48] use face count and facial emotion recognition to estimate classroom climate alongside acoustic features. Ma et al. [53] incorporate facial action units (FAUs), eye gaze, and body pose to detect confusion and conflict during pair collaboration. Likewise, Heng et al. [9] fuse audio features with pose estimation data to identify teaching methodologies, while Chan et al. [81] include face detection and actions like note-taking to assess student engagement.
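One practical step behind such models is aligning the two modalities in time before they are combined. The sketch below shows one simple possibility, feature-level (early) fusion, in which each audio window is paired with the nearest video observation and the feature vectors are concatenated. The reviewed studies do not necessarily fuse features this way; the timestamps, feature values, the 0.5 s tolerance, and the function names are all assumptions for illustration.

```python
from bisect import bisect_left


def nearest_index(sorted_times, t):
    """Index of the timestamp in `sorted_times` closest to `t`."""
    i = bisect_left(sorted_times, t)
    if i == 0:
        return 0
    if i == len(sorted_times):
        return len(sorted_times) - 1
    return i if sorted_times[i] - t < t - sorted_times[i - 1] else i - 1


def early_fusion(audio, video, max_gap_s=0.5):
    """Concatenate each audio feature vector with the nearest video vector.

    Audio windows with no video observation within `max_gap_s` are skipped.
    """
    video_times = [t for t, _ in video]
    fused = []
    for t, audio_feats in audio:
        j = nearest_index(video_times, t)
        if abs(video_times[j] - t) <= max_gap_s:
            fused.append((t, audio_feats + video[j][1]))
    return fused


# Hypothetical windows: (timestamp in s, [energy, ZCR]) and (timestamp in s, [face count, gaze score]).
audio = [(0.0, [0.12, 0.05]), (1.0, [0.30, 0.07]), (2.0, [0.28, 0.06])]
video = [(0.1, [3, 0.2]), (0.9, [3, 0.6]), (2.2, [4, 0.4])]

fused = early_fusion(audio, video)
```

The tolerance parameter makes the synchronization requirement explicit: if the streams drift apart or video frames are missing, windows are dropped rather than silently paired with stale observations.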

Overall, the combination of audio and video data enables a multidimensional view of classroom interactions, supporting the identification of affective states, collaborative dynamics, and pedagogical patterns that would be challenging to infer from a single modality.

3.3.2. Combination of Audio and Contextual Data

Beyond video, several studies integrate audio features with contextual data to deepen their understanding of educational processes. These contextual signals, ranging from log traces of student activity to physiological or environmental measurements, offer complementary information that enriches audio-based analyses.

Log data are some of the most commonly used forms of contextual information. For example, refs. [59,82] combine audio features with student-level editing traces (e.g., number of characters added or deleted) to estimate collaboration quality. Similarly, studies like [83,84] integrate student interaction logs with audio inputs to evaluate the accuracy and usability of teacher dashboards and socially shared regulation.

Some studies also incorporate biological or environmental signals. Prieto et al. [47] combine audio with physiological indicators such as pupil diameter and blink rate to classify instructional formats. In another approach, Uzelac et al. [35] use environmental data (e.g., CO2 levels, noise, temperature, and air pressure) alongside audio to assess lecture quality. Academic data are also used as a secondary context; for instance, Cánovas et al. [40] integrate students’ academic performance with audio-derived group behavior indicators to contextualize responses in audience response systems.

The distribution of feature types used across multimodal studies is summarized in Table 4. Video features dominate the landscape, with 13 instances used in modeling tasks and 6 as support information. In contrast, academic, biological, and environmental data appear more sporadically (each only once), reflecting their specialized use cases. Log data, meanwhile, play a more balanced role, contributing to both modeling and contextual enrichment.

3.4. What Techniques Are Employed to Process Audio Features in Educational Studies, and to What Extent Are These Solutions Interpretable? (RQ4)

In analyzing how audio features are computationally processed in educational research, we identified three broad methodological categories: statistical analysis, machine learning, and deep learning. These techniques are applied across the full spectrum of audio features discussed in RQ2, including acoustic properties, speaker diarization metrics, and linguistic indicators derived from transcriptions.

This section examines both the nature of these techniques and the degree to which they incorporate strategies for interpretability or explainable AI (xAI). While technical details of the models are not revisited here (see [85] for a comprehensive overview), our focus lies in categorizing the methodological choices made by researchers and evaluating how transparently these models support pedagogical interpretation.

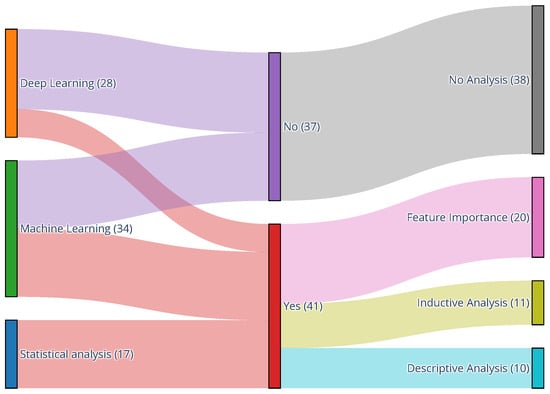

Figure 8 presents an overview of the relationship between each methodological category and its typical level of interpretability. Interpretability was assessed based on whether studies included correlation-based insights, feature importance rankings, or advanced xAI techniques (e.g., SHAP and LIME). As expected, statistical approaches offer the greatest transparency, while deep learning models, though powerful, generally lack interpretive mechanisms.

Figure 8.

Sankey diagram illustrating the relationship between the analytical methods employed (deep learning, machine learning, and statistical analysis) and the presence and type of explainability techniques. This visualization highlights how interpretability varies across methodological families, showing which types of explainability (if any) are applied in each case (RQ4).

To explore how these methodological choices map to educational purposes, Figure 9 presents the distribution of techniques across the usage categories defined in RQ1. A clear trend emerges: deep learning and machine learning are more frequently applied in domains like classroom dynamics and emotion and behavior analysis, where patterns are abstract or subtle. In contrast, studies categorized under feedback-focused and intervention classification continue to predominantly employ statistical approaches, likely reflecting their closer alignment with the principles of transparency and the generation of actionable feedback.

Figure 9.

Relationship between RQ1 categories and RQ4 techniques used (RQ4).

For the purposes of this review, we distinguish machine learning algorithms from deep learning models using two objective criteria: the input representation and the number of trainable parameters. Traditional ML methods (e.g., support vector machines, random forests, and XGBoost) operate on carefully engineered feature vectors (e.g., MFCCs, prosodic statistics, and diarization parameters) and generally contain on the order of 10,000 trainable parameters or fewer. In contrast, deep learning models ingest raw or minimally processed audio representations, such as spectrograms or learned embeddings, and learn hierarchical features end-to-end. These architectures typically contain several million trainable parameters or more and achieve high performance without relying on manual feature selection or preprocessing.
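The parameter-count criterion can be illustrated with a short calculation. The sketch below counts weights and biases for fully connected models, comparing a linear classifier on a 39-dimensional engineered feature vector with a small dense network that takes a flattened spectrogram as input. Both configurations are hypothetical and chosen only to show the orders of magnitude involved; parameter counts for SVMs or tree ensembles are defined differently and are not covered by this helper.

```python
def dense_param_count(layer_sizes):
    """Trainable parameters (weights plus biases) of a fully connected network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Assumed setup: 39 engineered features (e.g., MFCCs with deltas), 4 output classes.
linear_on_features = dense_param_count([39, 4])

# Assumed setup: flattened 128 x 100 Mel-spectrogram, two hidden layers of 512 units.
dense_on_spectrogram = dense_param_count([128 * 100, 512, 512, 4])
```

Under these assumptions, the first model has 160 parameters and the second about 6.8 million, which places them on opposite sides of the thresholds stated above.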

3.4.1. Machine Learning Techniques

Traditional machine learning (ML) pipelines typically rely on engineered features extracted from raw audio, such as prosodic attributes, spectral coefficients, or lexical indicators. These features are then fed into algorithms such as random forests, support vector machines (SVMs), decision trees, or logistic regression to perform classification, regression, or clustering tasks. A core strength of these approaches lies in their relative transparency compared to deep learning approaches: many models allow for the direct inspection of feature importance, aiding both explainability and pedagogical interpretation.

Numerous studies in this category focus on classifying aspects of classroom discourse and instructional style. For instance, Tsalera et al. [86] introduce a PCA-based feature selection strategy to manage high-dimensional audio in noisy classrooms, subsequently comparing multiple classifiers. Random forests are especially popular: Donnelly et al. [87] use them to infer teaching methodologies (e.g., lecture vs. group work) by identifying the most salient acoustic features, while Prieto et al. [47] apply a similar strategy to detect instructional formats, discussing the most predictive feature sets for each activity type.

Some researchers go further by combining audio with other data streams. Donnelly et al. [88], for example, fuse audio with wearable sensor data to segment teacher–student interactions using naive Bayes classifiers. In contexts with limited technological resources, simpler classifiers such as KNN or naive Bayes have been tested for low-cost classroom monitoring and analysis [89,90]. Similarly, Sandanayake and Bandara [91] propose a multimodal summarization pipeline combining KNN, speech-to-text models, and textual clustering to automatically generate lecture summaries.

Feature engineering remains central to these ML-based studies, and authors frequently report which features (e.g., pitch variation and lexical tokens) contribute most to the model’s predictions [32]. This emphasis on transparency distinguishes ML from deep learning approaches. However, more advanced explainability techniques, such as SHAP [92] and LIME [93], are rarely used in practice. One notable exception is [29], which leverages SHAP to visualize the contribution of emotional features to model decisions.
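One model-agnostic way to report which features matter is permutation importance: the values of one feature are shuffled and the resulting drop in accuracy is recorded. The sketch below implements this idea with the standard library only. It is not the built-in importance of any particular library, nor SHAP or LIME; the toy model, the two feature names, and the data are hypothetical and were constructed so that the model depends on the first feature alone.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows for which the model predicts the true label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean accuracy drop per feature when that feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical features per row: [pitch_variation, zero_crossing_rate].
def toy_model(row):
    """Toy classifier that looks only at pitch variation."""
    return 1 if row[0] > 0.5 else 0


X = [[0.9, 0.1], [0.8, 0.4], [0.7, 0.2], [0.2, 0.3], [0.1, 0.1], [0.3, 0.4]]
y = [1, 1, 1, 0, 0, 0]

importances = permutation_importance(toy_model, X, y)
```

Here the second feature receives an importance of zero because the model never reads it, while shuffling the first feature degrades accuracy. For real studies, importance should be estimated on held-out data, and correlated features can share or mask each other's contribution.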

Overall, while ML pipelines strike a useful balance between performance and interpretability, the integration of explicit xAI practices remains inconsistent. There is considerable room for improvement in using these tools to strengthen transparency and trust, especially when outputs are intended to guide pedagogical decision-making.

3.4.2. Deep Learning Techniques

Deep learning (DL) approaches, such as convolutional neural networks (CNNs), long short-term memory (LSTM) networks, and transformers, typically learn representations of audio directly from spectrograms or even raw waveforms. This reduces reliance on handcrafted features but often comes at the cost of increased model opacity, given the layered and non-linear nature of these architectures.

In educational contexts, CNNs and LSTMs are frequently applied to tasks such as classroom sound classification and speech transcription under noisy conditions. For example, Mou et al. [94] train both CNN and LSTM architectures on spectrogram inputs to categorize classroom events, while Siddhartha et al. [36] design a custom network to handle the interruptions and acoustic challenges typical of early childhood environments. Transformers, particularly BERT, are used in studies involving transcribed classroom discourse. For example, Wang and Chen [64] investigate the impact of removing specific word categories on classification accuracy, while Alic et al. [95] employ BERT to differentiate between funneling and focusing questions.

More complex architectures are also explored. Alkhamali et al. [46], for instance, combine CNNs, LSTMs, and transformers in an ensemble to predict emotional states from classroom audio, reflecting a growing interest in modeling subtle affective cues. Multimodal integration is another emerging trend, as discussed in Section 3.3: Heng et al. [9] fuse audio and video streams to model classroom climate, showcasing how DL architectures naturally accommodate multimodal inputs.

Despite these technical advances, interpretability remains a major limitation. Most DL studies prioritize performance metrics (e.g., accuracy and F1-score) over explainability. When attempts are made, they are typically superficial, such as inspecting attention weights in transformers or correlating predictions with input features. Rigorous explainability frameworks, such as SHAP or integrated gradients, are rarely used.

This lack of transparency is particularly problematic in educational settings, where stakeholders must understand and trust system outputs to make informed decisions. Without interpretable outputs, DL models risk becoming “black boxes” that may perform well technically but lack pedagogical legitimacy.

3.4.3. Statistical Analyses

A third methodological category relies on classical statistical methods, which often focus on describing, correlating, or regressing measured audio variables against educational outcomes. Unlike machine learning or deep learning models, these approaches typically avoid predictive modeling. Instead, they aim to uncover interpretable relationships between acoustic or discourse-related metrics and constructs such as student engagement or teacher–student dynamics.

Several studies exemplify this approach. For instance, D’Angelo and Rajarathinam [57] analyze the talk-time of teaching assistants and relate it to collaborative group performance using basic correlation measures. The same authors [56] similarly link instructor talk patterns to student responses through correlation coefficients. Others apply regression-based methods: Demszky et al. [58] model how real-time measures of teacher talk-time predict engagement levels. Experimental comparisons also appear, such as in [63], where an “engagement index” derived from acoustic and visual cues is compared statistically across baseline and intervention conditions. Studies like [37] use descriptive and correlational statistics to examine the structure of instructional discourse, while Hardman [96] interprets teacher-to-student talk ratios as indicators of classroom authority and control. Along similar lines, France [97] analyzes how teachers value dialogue and how they implement it in classroom practice, drawing on statistical analyses to explore its perceived importance and actual use.

These methods offer a key advantage: immediate interpretability. Coefficients, effect sizes, and p-values provide clear, direct evidence of how specific features relate to educational variables. This transparency makes statistical analysis particularly useful when communicating findings to educators and stakeholders who may lack technical expertise.
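As an example of how directly such results can be read, the sketch below computes a Pearson correlation coefficient between a talk-time measure and a group outcome. The numbers are made up for illustration and do not come from any reviewed study; the variable names and the helper `pearson_r` are likewise hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Made-up data: share of session time in which the teaching assistant speaks,
# and the score each group obtained on the collaborative task.
ta_talk_share = [0.10, 0.20, 0.30, 0.40, 0.50]
group_score = [9.0, 8.0, 8.5, 6.0, 5.0]

r = pearson_r(ta_talk_share, group_score)
```

A single coefficient such as this one (strongly negative for the made-up data) can be communicated to educators without reference to model internals, although with so few observations it would need a significance test and a cautious, non-causal interpretation.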

However, this interpretability often comes at the expense of modeling complexity. Statistical approaches may overlook nuanced patterns or interactions that more sophisticated machine learning techniques can detect. Nonetheless, their use remains widespread, especially in studies prioritizing theoretical validation or descriptive insight over predictive accuracy.

3.4.4. Extent of Interpretability and xAI Practices

Overall, most articles focus on improving predictive accuracy or illustrating empirical relationships rather than detailing how each audio feature drives model decisions. Nevertheless, three broad approaches to interpretability appear with varying frequencies:

1. Inductive (Correlation-Based) Analysis: Several studies employ correlation and regression analyses to explore associations between audio features and educational outcomes. While not true explainability methods in the xAI sense, these inductive approaches are used as proxies to validate whether model decisions align with expected behavioral patterns. For example, Chejara et al. [82] correlate audio-based collaboration features with model outcomes to check generalizability, while Chejara et al. [98] similarly rely on correlation metrics to validate whether ML models remain robust across different classroom environments. These methods provide initial evidence of construct validity but fall short of revealing how individual predictions are made.

2. Feature Importance: Tree-based ML algorithms such as random forests or gradient-boosted trees enable direct analysis of feature contributions, often through built-in importance metrics. These have been used to highlight which acoustic or linguistic features drive model predictions. For instance, Donnelly et al. [99] identify paraverbal signals as key markers for detecting teacher questions, and James et al. [32] use feature importance to analyze contributors to perceived classroom climate. In a more advanced case, Hou et al. [29] apply SHAP values to clarify how emotional features contribute to warm or encouraging feedback. While useful, such practices are applied inconsistently across studies and often without methodological transparency or justification for the selected xAI technique.

3. Descriptive Analysis: Some studies enhance interpretability by comparing model outputs with human-annotated ground truth or classroom observations. These comparisons, while not algorithmic explanations, provide qualitative insight into how predictions align with real-world phenomena. For instance, Cook et al. [18] illustrate discrepancies between their regression-tree predictions and human-coded discourse segments, while Kelly et al. [19] analyze how the model compares with the performance of human annotators. These approaches can build trust among end users, particularly educators, by revealing whether the system’s reasoning offers pedagogical value. However, they remain anecdotal and rarely constitute a systematic framework for interpretability.
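As an illustration of the feature-importance idea in the second item, the sketch below implements permutation importance, a model-agnostic technique that is distinct from the built-in tree importances and SHAP values used in the cited studies. It is an assumption-laden toy: the two features (`pitch_variability`, `mean_pause_seconds`), the data, and the rule-based `toy_question_detector` are all hypothetical and stand in for a trained classifier.

```python
import random


def accuracy(predict, rows, labels):
    """Fraction of rows for which predict(row) matches the label."""
    hits = sum(1 for row, y in zip(rows, labels) if predict(row) == y)
    return hits / len(labels)


def permutation_importance(predict, rows, labels, n_repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild every row with only feature j replaced by a shuffled value.
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(rows, column)]
            drops.append(baseline - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical utterance features: [pitch_variability, mean_pause_seconds]
rows = [[0.9, 0.4], [0.8, 1.1], [0.2, 0.5], [0.1, 1.0], [0.7, 0.9], [0.3, 0.3]]
labels = [1, 1, 0, 0, 1, 0]  # 1 = question, 0 = statement (hypothetical)


def toy_question_detector(row):
    """Stand-in model that looks only at the first feature."""
    return 1 if row[0] > 0.5 else 0


importances = permutation_importance(toy_question_detector, rows, labels)
for name, value in zip(["pitch_variability", "mean_pause_seconds"], importances):
    print(f"{name}: {value:.3f}")
```

Because the stand-in model ignores the second feature, shuffling it cannot change any prediction and its importance is zero, whereas shuffling the first feature degrades accuracy. A report of this kind states which features a model relies on, but not why a particular prediction was made.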

Across the reviewed literature, statistical models offer inherent transparency by design, while ML models provide moderate interpretability depending on the availability of feature attribution methods. In contrast, DL approaches tend to sacrifice transparency in favor of performance, with only rare instances incorporating formal xAI tools. This trade-off between predictive power and explainability is a key concern for the deployment of these systems in real educational settings, where understanding the basis of decisions is often as critical as the output itself. In our corpus, only 7 out of 27 deep learning studies (26%) explicitly reported the use of explainability techniques, compared to 17 out of 34 machine learning studies (50%). These figures highlight a significant interpretability gap in the most complex and widely adopted approaches, one that deserves more attention if educational stakeholders are to trust and adopt AI-powered systems in practice.

3.5. Which Studies Provide Feedback for Participants Derived from Obtained Results? (RQ5)

Providing feedback to teachers and students is a key mechanism for translating analytical insights into pedagogical action. In educational settings, feedback serves as a bridge between data and practice, allowing teachers to refine their instructional strategies, students to reflect on their learning behaviors, and both groups to engage in more effective classroom interactions. Without such mechanisms, the potential of audio-based analytics remains largely theoretical, disconnected from the real-world contexts they are intended to support.

In this question, we examine how studies in our corpus integrate feedback into their design. Specifically, we analyze who receives the feedback, what type of information is shared, and when it is delivered. This approach allows us to map the practical utility of audio-derived data across educational scenarios.

However, it is important to highlight a critical limitation in the current literature: very few studies discuss the perception, acceptance, or impact of feedback mechanisms from the perspective of the actual stakeholders, namely teachers and students. While some papers mention user-facing dashboards or post-session summaries, most do not include empirical evaluations of how these outputs were received or used in practice, nor whether they resulted in actual pedagogical changes. This lack of user-centered assessment restricts our ability to draw conclusions about the effectiveness, usability, or educational value of these systems. We return to this gap in the discussion as a key avenue for future research.

Of the 82 reviewed articles, only 11 (approximately 13%) explicitly report delivering feedback derived from audio features to participants. Despite being a minority, these studies provide valuable insights into the current state of feedback integration in audio-based educational research. To structure our analysis, we organize the findings according to three dimensions: who receives the feedback, what kind of information is shared, and when the feedback is delivered.

3.5.1. Who Receives the Feedback?

Most studies delivering feedback target teachers as the primary recipients. The goal is typically to help them refine pedagogical strategies using insights derived from their own classroom discourse, for example, their use of specific talk moves, questioning patterns, or instructional vocabulary. In one case, teachers received personalized statistics on their use of mathematics terminology and the distribution of teacher–student talk-time, with comparisons against their own past data and the behavior of other platform users [100]. Other studies similarly provide post-session feedback summarizing discourse patterns or question types, encouraging gradual, data-informed pedagogical refinement [41,71,101,102].

While teacher-focused feedback dominates, a smaller group of studies extends feedback to students, either directly or through mediated teacher actions. Some systems benefit both teachers and students simultaneously by visualizing classroom participation in real time, for example, dashboards that display talk-time proportions or overlapping speech, prompting more balanced turn-taking [58]. Other systems offer individualized feedback to students, such as metrics on pronunciation accuracy or fluency scores. In these cases, teachers may also receive alerts when students fall below performance thresholds, allowing for timely instructional interventions [44,45]. This dual-feedback approach can support not only student reflection but also responsive teaching.

3.5.2. What Kind of Feedback Is Delivered?

Most feedback systems in the reviewed literature rely on quantitative metrics to make classroom dynamics visible. These include measures such as talk-time, frequency of authentic questions, or discipline-specific vocabulary use, which serve as interpretable baselines for reflection and instructional refinement. For example, one study provides real-time speaking ratios that help teachers and students rebalance participation mid-lesson [58]. Another tracks how often teachers pose authentic questions and examines the relationship between these frequencies and student engagement levels [101].
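The speaking ratios mentioned above can be derived from speaker diarization output with very little computation. The following is a minimal sketch under stated assumptions: the `(speaker, start, end)` segment format, the speaker labels, and the timings are hypothetical, and overlapping speech is simply counted once per speaker rather than handled specially.

```python
from collections import defaultdict


def talk_time_ratios(segments):
    """Share of total speaking time per speaker.

    segments: iterable of (speaker, start_seconds, end_seconds) tuples,
    for example as produced by a diarization step (format assumed here).
    """
    totals = defaultdict(float)
    for speaker, start, end in segments:
        if end < start:
            raise ValueError(f"segment ends before it starts: {speaker}")
        totals[speaker] += end - start
    spoken = sum(totals.values())
    if spoken == 0:
        return {}
    return {speaker: duration / spoken for speaker, duration in totals.items()}


# Hypothetical diarized excerpt of a lesson (times in seconds).
segments = [
    ("teacher", 0.0, 30.0),
    ("student_a", 30.0, 40.0),
    ("teacher", 40.0, 70.0),
    ("student_b", 70.0, 80.0),
]

ratios = talk_time_ratios(segments)
for speaker, share in sorted(ratios.items()):
    print(f"{speaker}: {share:.1%}")
```

A dashboard could recompute these shares over a recent window of segments to show how participation shifts during a lesson; the metric itself remains a raw proportion that still requires pedagogical interpretation.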

Beyond raw counts, several platforms enhance quantitative feedback with interpretative guidance. They highlight specific moments where teachers used effective discourse strategies and offer actionable suggestions, such as rephrasing or elaboration moves, that may promote deeper student reasoning [41,100]. Dashboards frequently incorporate color-coded visualizations to identify zones of high or low engagement, helping educators focus attention where it is most needed [27,83].

Notably, while quantitative feedback dominates, few studies attempt to provide qualitative or normative feedback, for example, judgments about whether a teacher’s interaction style aligns with pedagogical best practices. This absence may reflect an implicit reluctance to define what constitutes ‘good teaching.’ Educational contexts vary widely, and there is little consensus on ideal instructional behavior. In practice, providing such evaluative guidance would require not only technical robustness but also normative frameworks capable of accounting for differences in subject matter, age group, and cultural setting. As a result, most systems remain focused on reporting metrics rather than interpreting them in light of pedagogical theory or instructional goals.

3.5.3. When Is Feedback Delivered?

Feedback timing varies considerably across studies, revealing trade-offs between immediate interventions and reflective practice.

Real-Time Feedback

A small subset of studies implement real-time feedback, offering live insights during classroom sessions. In these scenarios, both teachers and students make immediate adjustments: one system updates a display of talk-time balances every 20 min, leading to a quick rebalancing of classroom discourse [58]. Another approach visualizes student interest levels in real time, allowing instructors to quickly pivot if engagement appears to wane [27].

Post-Session Feedback

More commonly, feedback is delivered after a session or across multiple sessions. Teachers might receive summaries of their questioning techniques, discourse moves, or engagement metrics after each class [71,100,102]. This setup favors reflective practice, allowing educators to review and adapt without the pressure of real-time classroom management. Longitudinal feedback, where data is collected and returned across weeks or months, has also been explored to track instructional change over time [41,101].

Single Exposure or Irregular Delivery

A few studies deliver feedback only once or at irregular intervals, often within pilot trials or prototype demonstrations. For instance, [83] uses vignette-based dashboards to gauge educators’ trust in feedback systems but does not implement sustained use. These instances serve more as proof-of-concept explorations than fully integrated classroom tools.

4. Discussion of Findings and Implications

This section critically synthesizes the main findings of our review, highlighting key limitations and opportunities in the current use of audio features within educational research. While the reviewed studies demonstrate considerable technical sophistication, spanning acoustic, diarization, and linguistic features, multimodal integration, and advanced processing techniques, we identify three recurring issues that constrain the field’s practical impact.

4.1. Challenges in Explainability

This section addresses a critical pattern that cuts across multiple research questions: despite the richness of audio-derived data (RQ2), its combination with other modalities (RQ3), the sophistication of processing techniques (RQ4), and even its intended use for feedback (RQ1 and RQ5), few studies succeed in translating analytics into pedagogical action.

As seen in RQ2, researchers extract a wide array of features, from low-level acoustic metrics and speaker diarization to sophisticated NLP indicators, offering a detailed representation of classroom discourse. These features are then processed (RQ4) either with machine learning, including explainable models such as decision trees and feature attribution methods, or with deep learning pipelines that promise high performance but offer limited interpretability. For audio analytics in particular, misidentified prosodic cues or diarization errors can quickly erode teacher confidence; without transparent rationales, educators are unlikely to trust or act on the model’s recommendations. While a few studies do attempt to provide actionable feedback, often through visual dashboards or targeted recommendations, these remain exceptions. The vast majority stop at reporting descriptive indicators, shifting the responsibility for interpretation and pedagogical action entirely to the user.

This limitation is particularly evident in RQ1, where feedback provision emerges as one of the most common use cases. However, as shown in RQ5, the feedback offered is typically generic, quantitative, and delivered post hoc. While teachers may receive dashboards displaying metrics such as talk ratios or the frequency of authentic questions, these indicators are seldom accompanied by an explanation of their relevance or instructional implications. Moreover, when feedback is provided, it often lacks evidence of real-world deployment or user adoption, as most systems are confined to academic prototypes and remain untested in practical classroom environments [103].

Embedding a human-in-the-loop (HITL) methodology can address both the need for explainability and meaningful stakeholder involvement. By design, HITL systems require transparent, interpretable outputs so that educators can review, correct, and refine model predictions in real time. This process helps ensure that each step, from feature extraction to final recommendations, is accompanied by an explanation that teachers can understand and trust. Simultaneously, involving teachers (and, when possible, students) in iterative model refinement grounds development in actual classroom practices, which should lead to tools that align with pedagogical goals and are more likely to be adopted. For example, Qui et al. [104] demonstrate the use of GenAI in teaching and learning systems in which instructors monitor and adjust LLM-generated feedback during live sessions, ensuring that suggested interventions remain contextually appropriate.

A further challenge lies in the widespread reluctance to make normative claims about what constitutes “good” teaching. This hesitation is understandable, given the diversity of educational contexts and pedagogical philosophies. However, in the absence of interpretive scaffolds or evaluative frameworks, feedback risks becoming either meaningless or misinterpreted. Translating analytical outputs into actionable insights would require the integration of explainable models with established pedagogical principles, an approach that remains rare in the current literature.

Yet the problem may not lie in the individual contributions of each study but in their isolation. When viewed collectively, the field has already developed many of the building blocks needed for real-world applications. Tools like TeachFX (https://teachfx.com) demonstrate that it is possible to deliver personalized, real-time feedback on teacher discourse using audio analytics. These tools draw upon many of the same techniques and features found in our reviewed corpus, suggesting that research findings are indeed translatable if properly integrated.

4.2. Data Availability and Privacy Constraints

One of the most striking patterns across the reviewed literature is the widespread use of locally collected, non-public datasets, often recorded in specific classrooms or institutions to support experimental prototypes [105]. While this is understandable given the logistical and ethical complexities of educational data collection, it has resulted in a highly fragmented research landscape, particularly when it comes to audio and multimodal data (RQ2 and RQ3).