Series Arc Fault Detection Based on Improved Artificial Hummingbird Algorithm Optimizer Optimized XGBoost

Abstract

1. Introduction

2. Collection of Series Arc Fault Data

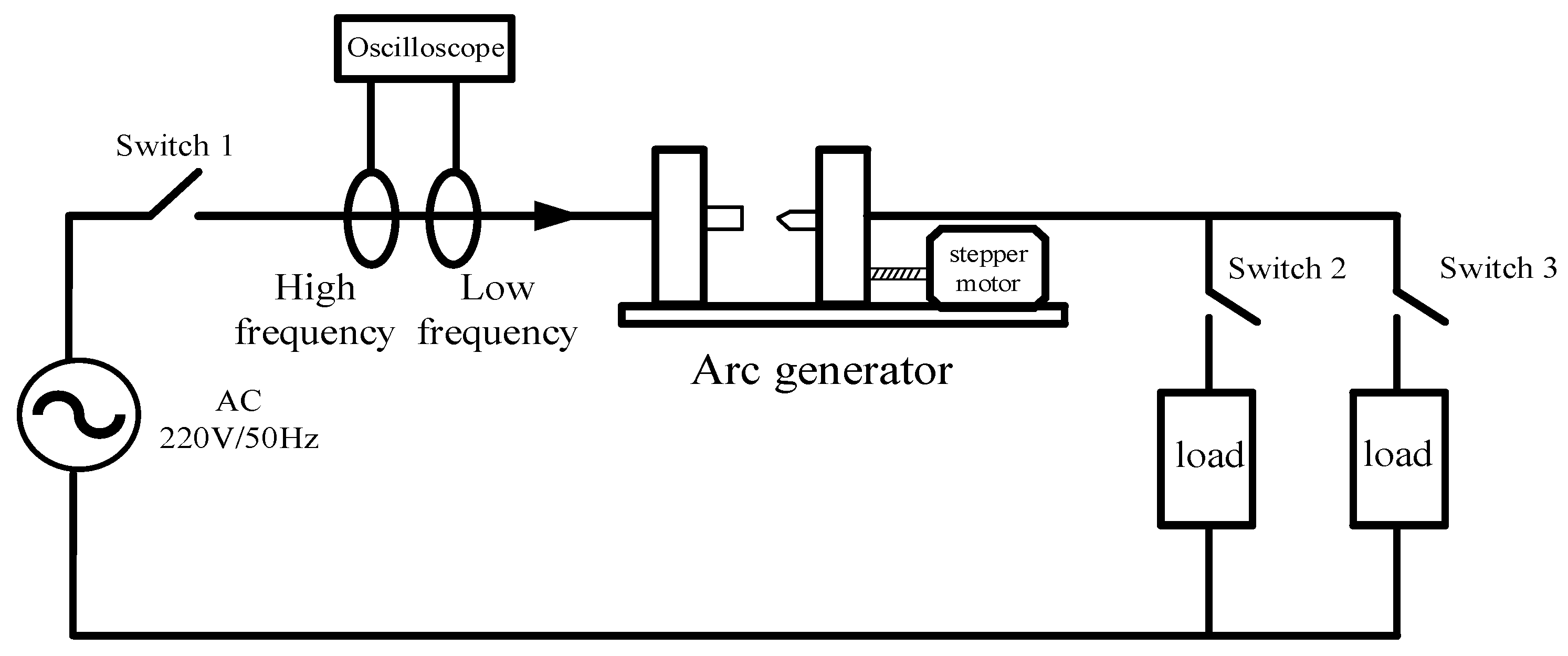

2.1. Arc Occurrence Platform Construction and Current Signal Collection

2.2. Analysis of Fault Arc Current Characteristics

3. Current Signal Processing and Feature Extraction

3.1. Feature Processing of the High-Frequency Current Signal

3.1.1. Maximum Rate of Current Rise
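As a rough illustration, the maximum rate of current rise can be taken as the largest forward difference between consecutive current samples, scaled by the sampling rate to give A/s. This is a minimal sketch under that assumption. The function name `max_rate_of_rise` and its parameters are hypothetical, and the paper's exact definition (for example, absolute slope versus rising slope only, or a smoothed derivative) is not stated in this text.

```python
def max_rate_of_rise(samples, fs):
    """Return the largest di/dt (A/s) between consecutive samples.

    samples: current samples in amperes (hypothetical input format)
    fs: sampling rate in Hz
    Assumption: a simple forward difference, (i[k+1] - i[k]) / dt with dt = 1 / fs.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    # Multiply by fs instead of dividing by dt to avoid an extra rounding step.
    return max((b - a) * fs for a, b in zip(samples, samples[1:]))


# Example: a 2 A jump between two samples taken at 10 kHz
print(max_rate_of_rise([0.0, 1.0, 3.0, 2.0], fs=10_000))  # → 20000.0
```

An arc re-ignition produces a steep current step, so this quantity tends to be larger for arcing cycles than for smooth sinusoidal ones.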

3.1.2. Peak Value
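A minimal sketch of a per-cycle peak value: the record is split into complete fundamental cycles and the largest absolute current in each cycle is kept. The per-cycle framing and the parameter `samples_per_cycle` (for example, fs / 50 on a 50 Hz supply) are assumptions for illustration, not details taken from the paper.

```python
def peak_per_cycle(samples, samples_per_cycle):
    """Largest |i| in each complete fundamental cycle; a trailing partial cycle is ignored."""
    if samples_per_cycle <= 0:
        raise ValueError("samples_per_cycle must be positive")
    n_cycles = len(samples) // samples_per_cycle
    return [
        max(abs(x) for x in samples[k * samples_per_cycle:(k + 1) * samples_per_cycle])
        for k in range(n_cycles)
    ]


# Two 4-sample cycles plus one leftover sample; the second cycle has a larger negative excursion
print(peak_per_cycle([0.0, 1.0, 0.0, -1.0, 0.0, 1.5, 0.0, -2.0, 0.3], 4))  # → [1.0, 2.0]
```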

3.1.3. Pulse Indicators
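In the fault-diagnosis literature, the pulse (impulse) indicator is usually defined as the peak value divided by the mean absolute value, I = X_peak / ((1/N) Σ|x_i|). The sketch below assumes that standard definition; the paper may normalize differently, and the function name is hypothetical.

```python
def pulse_indicator(samples):
    """Impulse factor: peak |x| divided by mean |x| (standard textbook definition)."""
    if not samples:
        raise ValueError("empty signal")
    abs_vals = [abs(x) for x in samples]
    mean_abs = sum(abs_vals) / len(abs_vals)
    if mean_abs == 0:
        raise ValueError("signal is identically zero")
    return max(abs_vals) / mean_abs


print(pulse_indicator([1.0, -1.0, 1.0, -1.0]))  # square wave → 1.0
print(pulse_indicator([0.0, 0.0, 0.0, 4.0]))    # isolated spike → 4.0
```

A short arc-induced spike raises the peak while barely moving the mean, which is why this ratio grows for impulsive waveforms.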

3.1.4. Margin Indicators
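The margin (clearance) indicator is commonly defined as the peak value divided by the squared mean of the square roots of the absolute samples, CL = X_peak / ((1/N) Σ√|x_i|)². The sketch assumes this standard definition; the function name is hypothetical.

```python
import math


def margin_indicator(samples):
    """Clearance factor: peak |x| / (mean of sqrt|x|)^2 (standard textbook definition)."""
    if not samples:
        raise ValueError("empty signal")
    root_mean = sum(math.sqrt(abs(x)) for x in samples) / len(samples)
    if root_mean == 0:
        raise ValueError("signal is identically zero")
    return max(abs(x) for x in samples) / root_mean ** 2


print(margin_indicator([1.0, -1.0, 1.0, -1.0]))  # → 1.0
print(margin_indicator([0.0, 0.0, 0.0, 4.0]))    # → 16.0
```

Because the square root compresses large samples, the denominator is even less sensitive to a single spike than the mean absolute value, so this indicator reacts more strongly to isolated impulses than the pulse indicator does.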

3.1.5. Total Harmonic Distortion Rate
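A minimal sketch of the usual THD definition, THD = √(Σ_{h≥2} A_h²) / A_1, where A_h is the amplitude of the h-th harmonic. It assumes the record spans an exact whole number of fundamental cycles, so each harmonic falls on a single DFT bin; the harmonic limit of 40 and the function names are illustrative assumptions. Multiply the result by 100 for a percentage.

```python
import cmath
import math


def harmonic_amplitude(samples, bin_index):
    """Amplitude of one DFT bin, scaled so a unit-amplitude sinusoid gives 1.0."""
    n = len(samples)
    acc = sum(x * cmath.exp(-2j * math.pi * bin_index * k / n) for k, x in enumerate(samples))
    return 2.0 * abs(acc) / n


def total_harmonic_distortion(samples, cycles, max_harmonic=40):
    """THD = sqrt(sum_{h>=2} A_h^2) / A_1.

    Assumes the record spans exactly `cycles` fundamental periods, so harmonic h
    lies on DFT bin h * cycles (no spectral leakage).
    """
    n = len(samples)
    fundamental = harmonic_amplitude(samples, cycles)
    if fundamental == 0:
        raise ValueError("no fundamental component")
    harmonics = [
        harmonic_amplitude(samples, h * cycles)
        for h in range(2, max_harmonic + 1)
        if h * cycles < n / 2  # stay below the Nyquist bin
    ]
    return math.sqrt(sum(a * a for a in harmonics)) / fundamental


# Example: 2 cycles in 400 samples, a 1 A fundamental plus a 0.1 A third harmonic
N, CYCLES = 400, 2
signal = [
    math.sin(2 * math.pi * CYCLES * k / N) + 0.1 * math.sin(2 * math.pi * 3 * CYCLES * k / N)
    for k in range(N)
]
print(round(total_harmonic_distortion(signal, CYCLES), 6))  # → 0.1
```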

3.2. Feature Extraction of the Low-Frequency Current Signal

- (1) The mean value of the average current over E full waves, I_Mean_Mean, is:

- (2) The instantaneous current of E full waves, I_IN, is:

- (3) The peak value of the average current over E full waves, I_Mean_Max, is:
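The two averaged quantities in the list above, I_Mean_Mean and I_Mean_Max, can be sketched as follows. This assumes the "average current" of one full wave is the mean of |i| over that wave (the plain mean of a symmetric AC wave would be close to zero) and that each wave spans a known, integer number of samples. The instantaneous current I_IN is left out because its exact definition cannot be recovered from this text. The function name is hypothetical.

```python
def low_frequency_features(samples, samples_per_wave, e_waves):
    """Mean and peak of the per-wave average current over E full waves.

    Assumption: the average current of one full wave is the mean of |i| over that wave.
    """
    if samples_per_wave <= 0 or e_waves <= 0:
        raise ValueError("samples_per_wave and e_waves must be positive")
    if len(samples) < samples_per_wave * e_waves:
        raise ValueError("record shorter than E full waves")
    wave_means = [
        sum(abs(x) for x in samples[k * samples_per_wave:(k + 1) * samples_per_wave]) / samples_per_wave
        for k in range(e_waves)
    ]
    return {
        "I_Mean_Mean": sum(wave_means) / e_waves,  # mean of the per-wave averages
        "I_Mean_Max": max(wave_means),             # peak of the per-wave averages
    }


print(low_frequency_features([1.0, -1.0, 1.0, -1.0, 2.0, -2.0, 2.0, -2.0], 4, 2))
# → {'I_Mean_Mean': 1.5, 'I_Mean_Max': 2.0}
```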

4. Artificial Hummingbird Algorithm (AHA) and XGBoost

4.1. Artificial Hummingbird Algorithm (AHA)

4.1.1. Initialization
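In the original AHA formulation, each hummingbird is placed at a random food source x_i = Low + r · (Up − Low), with r uniform in [0, 1] per dimension, and a visit table records how long each hummingbird has not visited each food source: 0 for every other source and null on the diagonal. The sketch follows that original scheme; the improvements the paper makes to AHA are not reflected, and the function name is hypothetical.

```python
import random


def initialize_population(n, low, up, rng=random):
    """Original AHA initialization (not the paper's improved variant).

    Position: x_i = Low + r * (Up - Low), r ~ U(0, 1) per dimension.
    Visit table: VT[i][j] = 0 for j != i, None (null) on the diagonal.
    """
    if len(low) != len(up):
        raise ValueError("bounds must have equal length")
    population = [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(low, up)]
        for _ in range(n)
    ]
    visit_table = [[None if i == j else 0 for j in range(n)] for i in range(n)]
    return population, visit_table


pop, vt = initialize_population(3, low=[0.0, 0.0], up=[1.0, 10.0], rng=random.Random(42))
print(len(pop), vt)
# → 3 [[None, 0, 0], [0, None, 0], [0, 0, None]]
```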

4.1.2. Guided Foraging
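In the original AHA, guided foraging chooses as the target the food source with the highest visit level (ties broken by the best nectar-refilling rate, i.e., the lowest fitness when minimizing) and proposes v_i = x_tar + a · D · (x_i − x_tar), with a ~ N(0, 1) and D a 0/1 direction vector for axial, diagonal, or omnidirectional flight. The sketch below follows that description with a simplified way of sampling D; function names are hypothetical, and the paper's improved variant may differ.

```python
import random


def flight_direction(dim, rng=random):
    """Simplified 0/1 direction vector D for the three AHA flight patterns (1/3 each)."""
    d = [0] * dim
    r = rng.random()
    if dim == 1:
        d[0] = 1                                   # only axial flight is possible
    elif r < 1 / 3:                                 # diagonal: a random subset of coordinates
        k = rng.randint(2, max(2, dim - 1))
        for j in rng.sample(range(dim), k):
            d[j] = 1
    elif r > 2 / 3:                                 # omnidirectional: every coordinate
        d = [1] * dim
    else:                                           # axial: one random coordinate
        d[rng.randrange(dim)] = 1
    return d


def choose_target(i, visit_table, fitness):
    """Source with the highest visit level for bird i; ties go to the lowest fitness."""
    others = [j for j in range(len(fitness)) if j != i]
    top = max(visit_table[i][j] for j in others)
    tied = [j for j in others if visit_table[i][j] == top]
    return min(tied, key=lambda j: fitness[j])


def guided_candidate(x_i, x_tar, direction, rng=random):
    """v_i = x_tar + a * D * (x_i - x_tar), with a ~ N(0, 1)."""
    a = rng.gauss(0.0, 1.0)
    return [t + a * d * (x - t) for x, t, d in zip(x_i, x_tar, direction)]


vt = [[None, 3, 3], [0, None, 1], [2, 2, None]]
print(choose_target(0, vt, fitness=[5.0, 2.0, 1.0]))  # → 2 (tie on visit level, lower fitness wins)
```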

4.1.3. Territorial Foraging
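In the original AHA, territorial foraging is a local move around the hummingbird's own food source, v_i = x_i + b · D · x_i with b ~ N(0, 1), followed by greedy replacement if the candidate is better. The sketch below assumes minimization; clipping to the search bounds and all function names are illustrative assumptions.

```python
import random


def territorial_candidate(x_i, direction, rng=random):
    """v_i = x_i + b * D * x_i, with b ~ N(0, 1): a local move around bird i's own source."""
    b = rng.gauss(0.0, 1.0)
    return [x + b * d * x for x, d in zip(x_i, direction)]


def clip(position, low, up):
    """Keep a candidate inside the search bounds (assumed boundary handling)."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(position, low, up)]


def greedy_update(x_i, f_i, candidate, objective):
    """Accept the candidate only if its fitness is lower (minimization)."""
    f_c = objective(candidate)
    if f_c < f_i:
        return candidate, f_c, True
    return x_i, f_i, False


def sphere(v):
    """Toy objective used only for the example."""
    return sum(x * x for x in v)


rng = random.Random(0)
x = [0.5, -0.5]
cand = clip(territorial_candidate(x, [1, 0], rng), low=[-1.0, -1.0], up=[1.0, 1.0])
print(cand[1])  # the coordinate with D = 0 is unchanged → -0.5
```

In the original algorithm, each iteration chooses between guided and territorial foraging with equal probability.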

4.1.4. Migration Foraging
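In the original AHA, migration is triggered every M = 2n iterations (n being the population size): the hummingbird at the worst food source abandons it and moves to a random new position x_wor = Low + r · (Up − Low). The sketch assumes minimization and 1-based iteration counting, and omits the accompanying visit-table update for brevity; function names are hypothetical.

```python
import random


def should_migrate(iteration, n):
    """Migration coefficient M = 2n: migrate on every 2n-th iteration (1-based)."""
    return iteration % (2 * n) == 0


def migrate_worst(population, fitness, low, up, objective, rng=random):
    """Replace the hummingbird at the worst (highest-fitness) source with a random one."""
    worst = max(range(len(fitness)), key=fitness.__getitem__)
    population[worst] = [lo + rng.random() * (hi - lo) for lo, hi in zip(low, up)]
    fitness[worst] = objective(population[worst])
    return worst


pop = [[0.0, 0.0], [5.0, 5.0], [1.0, 1.0]]
fit = [sum(v * v for v in p) for p in pop]
print(migrate_worst(pop, fit, [0.0, 0.0], [1.0, 1.0], lambda p: sum(v * v for v in p), random.Random(3)))
# → 1
```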

4.2. Extreme Gradient Boosting Tree (XGBoost)
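For reference, the regularized second-order objective and split criterion of XGBoost (as introduced by Chen and Guestrin) are summarized below. Here g_i and h_i are the first and second derivatives of the loss with respect to the previous round's prediction, T is the number of leaves, w_j is the weight of leaf j, and G_j, H_j are the sums of g_i, h_i over the samples in leaf j (subscripts L and R denote the left and right children of a candidate split). This is the standard library formulation, not notation taken from the paper.

$$
\mathcal{L}^{(t)} \simeq \sum_{i=1}^{n}\left[g_i f_t(\mathbf{x}_i) + \tfrac{1}{2} h_i f_t^2(\mathbf{x}_i)\right] + \gamma T + \tfrac{1}{2}\lambda \sum_{j=1}^{T} w_j^2
$$

$$
w_j^{*} = -\frac{G_j}{H_j + \lambda}, \qquad \tilde{\mathcal{L}}^{(t)} = -\frac{1}{2}\sum_{j=1}^{T}\frac{G_j^2}{H_j+\lambda} + \gamma T
$$

$$
\text{Gain} = \frac{1}{2}\left[\frac{G_L^2}{H_L+\lambda} + \frac{G_R^2}{H_R+\lambda} - \frac{(G_L+G_R)^2}{H_L+H_R+\lambda}\right] - \gamma
$$

In the XGBoost library, λ and γ correspond to the `reg_lambda` and `gamma` parameters; a split is kept only when its gain is positive.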

4.3. AHA-XGBoost Arc Fault Detection Process
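One common way to couple a metaheuristic with XGBoost is to let each hummingbird position encode a hyperparameter vector and to use a validation error as the fitness. The sketch below assumes that coupling. The search ranges, the choice of `learning_rate`, `max_depth`, and `n_estimators`, the fitness 1 − accuracy, and the callback `train_and_score` are hypothetical; the paper's actual ranges and fitness are not given in this text.

```python
# Hypothetical search space; the paper's actual bounds are not stated here.
SEARCH_SPACE = [
    ("learning_rate", 0.01, 0.3, float),
    ("max_depth", 2, 10, int),
    ("n_estimators", 50, 500, int),
]


def decode(position):
    """Map a continuous AHA position vector to XGBoost hyperparameters."""
    if len(position) != len(SEARCH_SPACE):
        raise ValueError("position length does not match the search space")
    params = {}
    for value, (name, lo, hi, kind) in zip(position, SEARCH_SPACE):
        value = min(max(value, lo), hi)  # clip to bounds
        params[name] = int(round(value)) if kind is int else float(value)
    return params


def make_fitness(train_and_score):
    """Wrap a scoring callback into a fitness to minimize: 1 - validation accuracy.

    train_and_score(params) -> accuracy in [0, 1]  (hypothetical callback)
    """
    def fitness(position):
        return 1.0 - train_and_score(decode(position))
    return fitness


print(decode([0.5, 3.6, 120.2]))
# → {'learning_rate': 0.3, 'max_depth': 4, 'n_estimators': 120}
```

In practice, `train_and_score` could build `xgboost.XGBClassifier(**params)`, fit it on the training split, and return accuracy on a held-out validation split, so that the test set stays untouched until the final evaluation.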

5. Fault Arc Identification

5.1. Feature Importance and Interpretability via SHAP Analysis
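SHAP attributes a prediction to the input features through Shapley values: each feature's contribution is its marginal effect averaged over all coalitions of the other features. The `shap` library computes these efficiently for tree ensembles; the brute-force sketch below only illustrates the definition for a handful of features. Replacing "absent" features with a single baseline vector is a simplifying assumption, and library implementations may handle absent features differently.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline), by enumeration."""
    n = len(x)

    def value(subset):
        # Features in `subset` take their value from x; the rest come from the baseline.
        z = [x[j] if j in subset else baseline[j] for j in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for s in combinations(others, size):
                s = set(s)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


print(shapley_values(lambda z: 2 * z[0] + 3 * z[1], [1.0, 1.0], [0.0, 0.0]))  # → [2.0, 3.0]
```

The values always sum to model(x) − model(baseline) (the additivity property), which is what makes them usable as per-feature contributions.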

5.2. Parameter Configuration

5.3. Fault Arc Identification Results and Analysis

5.4. Comparison with Other Identification Methods
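The comparison metrics follow from a binary confusion matrix. The sketch assumes the fault (arc) class is the positive class, so sensitivity equals recall, consistent with the identical Se and Recall columns of the results table. As a consistency check, recomputing F1 from the reported precision and recall of AHA-XGBoost (97.523% and 95.648%) reproduces the reported 96.576%. Function names are hypothetical.

```python
def binary_metrics(tp, fn, tn, fp):
    """Metrics from a binary confusion matrix, with the fault (arc) class as positive."""
    sensitivity = tp / (tp + fn)  # also the recall
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
        "precision": precision,
        "f1": f1,
    }


def f1_from(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


print(round(f1_from(97.523, 95.648), 3))  # AHA-XGBoost row → 96.576
```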

6. Conclusions

- (1) Feature selection optimization: Exploiting the global search ability and dynamic adaptive mechanism of AHA, the method screens out the key feature subsets most sensitive to arcing from high-dimensional time–frequency domain features (such as wavelet packet entropy and the high-frequency harmonic distortion rate), thereby overcoming the tendency of traditional filter-based feature selection methods (such as the mutual information method) to settle on locally optimal subsets.

- (2) Lightweight ensemble modeling: Combining the parallel computing advantages and regularization strategy of XGBoost, a low-complexity, highly interpretable fault classification model is constructed. Using AHA to jointly optimize XGBoost's hyperparameters (such as the learning rate and tree depth) improves both detection accuracy and real-time performance; in the comparison with other identification methods, AHA-XGBoost reaches an accuracy of 98.098%, versus 96.474% for the best-performing baseline (decision tree).

- (3) The AHA-optimized XGBoost converges faster and achieves better identification performance.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Load Category | Load | Rated Electrical Parameters |
|---|---|---|
| Linear load | Electric kettle | 220 V/1600 W |
| | Halogen lamp | 220 V/600 W |
| | Hair dryer | 220 V/1000 W |
| | Vacuum cleaner | 220 V/1300 W |
| Nonlinear load | Voltage regulating circuit | 220 V/800 W |
| | Computer | 220 V/100 W |
| | Electric fan | 220 V/60 W |
| Mixed load | Hair dryer + electric kettle | 220 V/1000 W + 220 V/1600 W |
| | Hair dryer + computer | 220 V/1000 W + 220 V/100 W |
| | Induction cooker + voltage regulating circuit | 220 V/1300 W + 220 V/800 W |

| Data Mode | Dataset | Number of Samples | Total |
|---|---|---|---|
| Normal (experimental label: 1) | Training set | 13,368 | 15,456 |
| | Test set | 2088 | |
| Fault (experimental label: 2) | Training set | 13,248 | 15,462 |
| | Test set | 2214 | |

| Model | Specificity (Sp, %) | Sensitivity (Se, %) | Accuracy (Acc, %) | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|---|---|
| BP neural network | 96.811 | 85.324 | 93.173 | 92.536 | 85.324 | 88.784 |
| Extreme learning machine | 96.904 | 75.607 | 90.160 | 91.882 | 75.607 | 82.954 |
| Random forest | 97.139 | 94.940 | 96.442 | 93.894 | 94.940 | 94.415 |
| Decision tree | 97.608 | 94.028 | 96.474 | 94.796 | 94.028 | 94.411 |
| AHA-XGBoost | 98.874 | 95.648 | 98.098 | 97.523 | 95.648 | 96.576 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qi, L.; Kawaguchi, T.; Hashimoto, S. Series Arc Fault Detection Based on Improved Artificial Hummingbird Algorithm Optimizer Optimized XGBoost. Appl. Sci. 2025, 15, 6861. https://doi.org/10.3390/app15126861
