Transforming Monochromatic Images into 3D Holographic Stereograms Through Depth-Map Extraction

Abstract

1. Introduction

2. Related Work

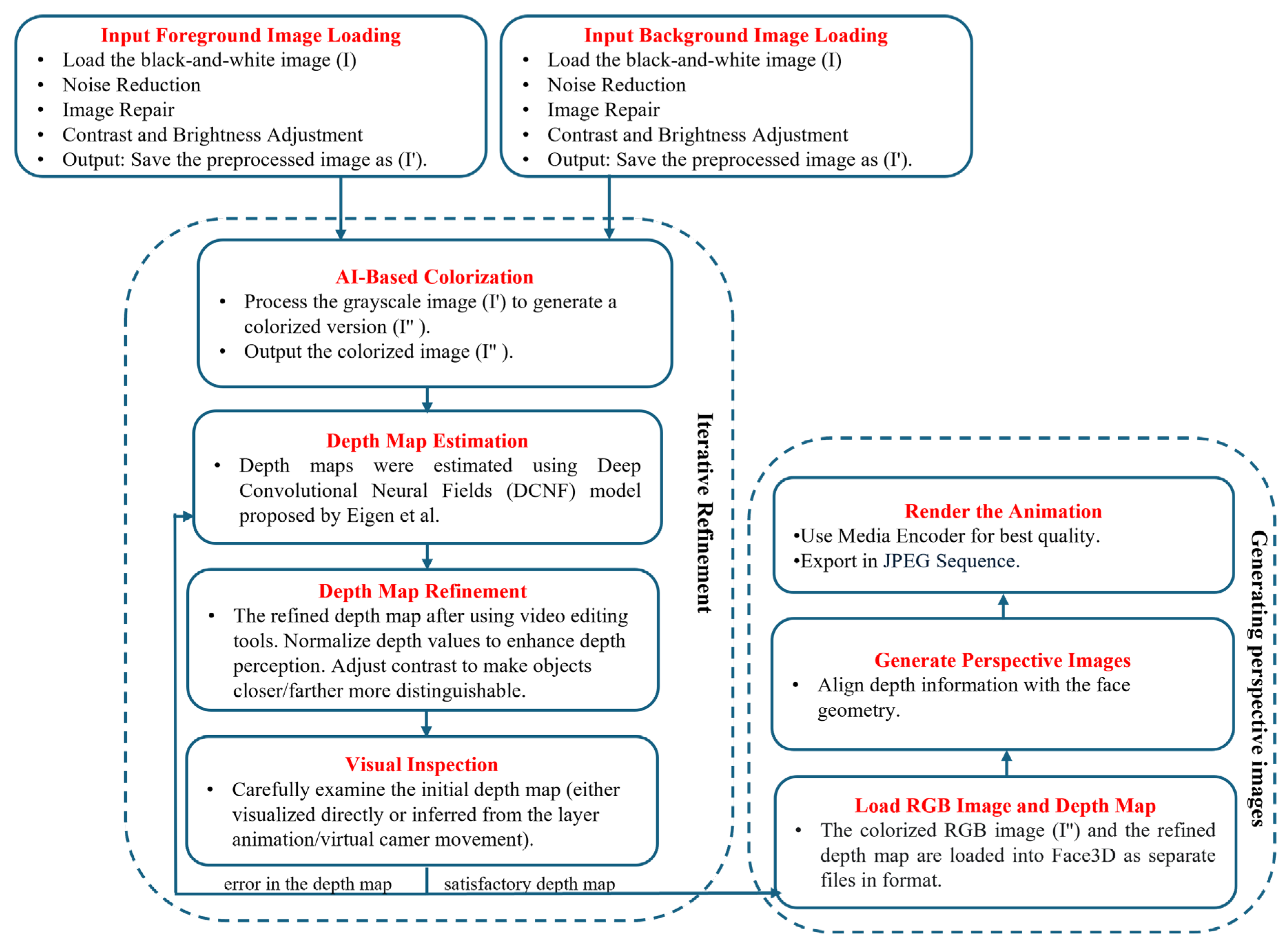

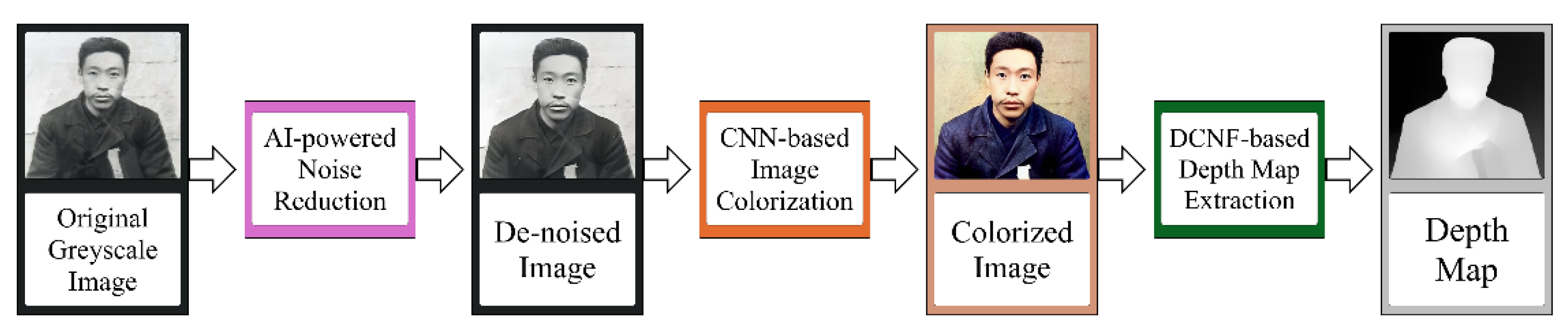

3. Proposed Method

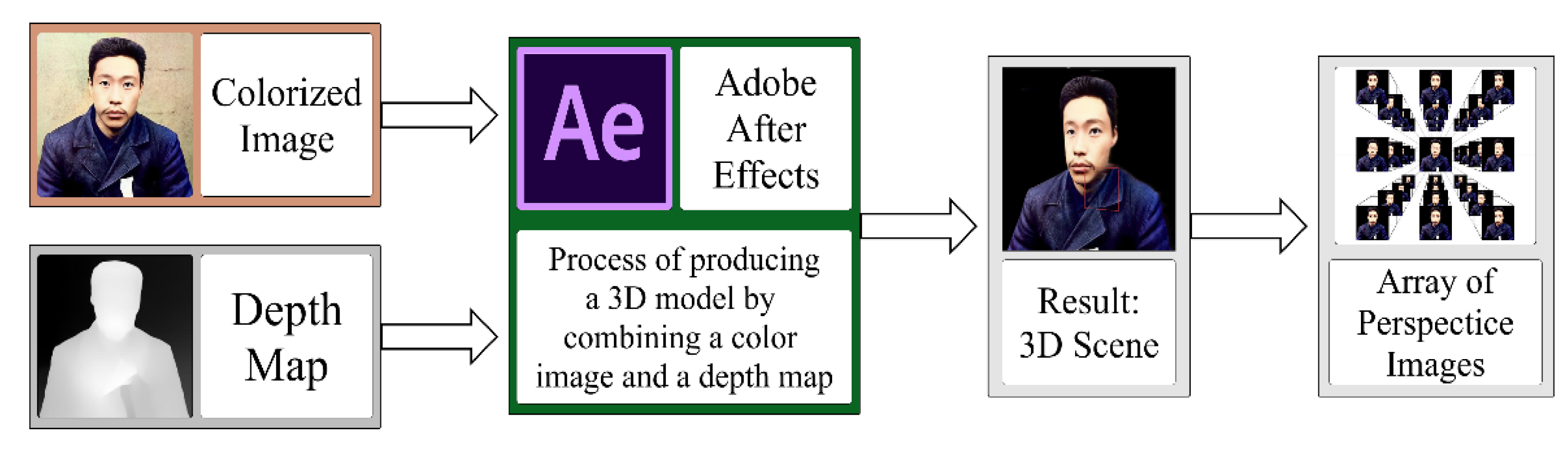

3.1. Generation of the 3D Model from a Single Image Based on Depth Maps

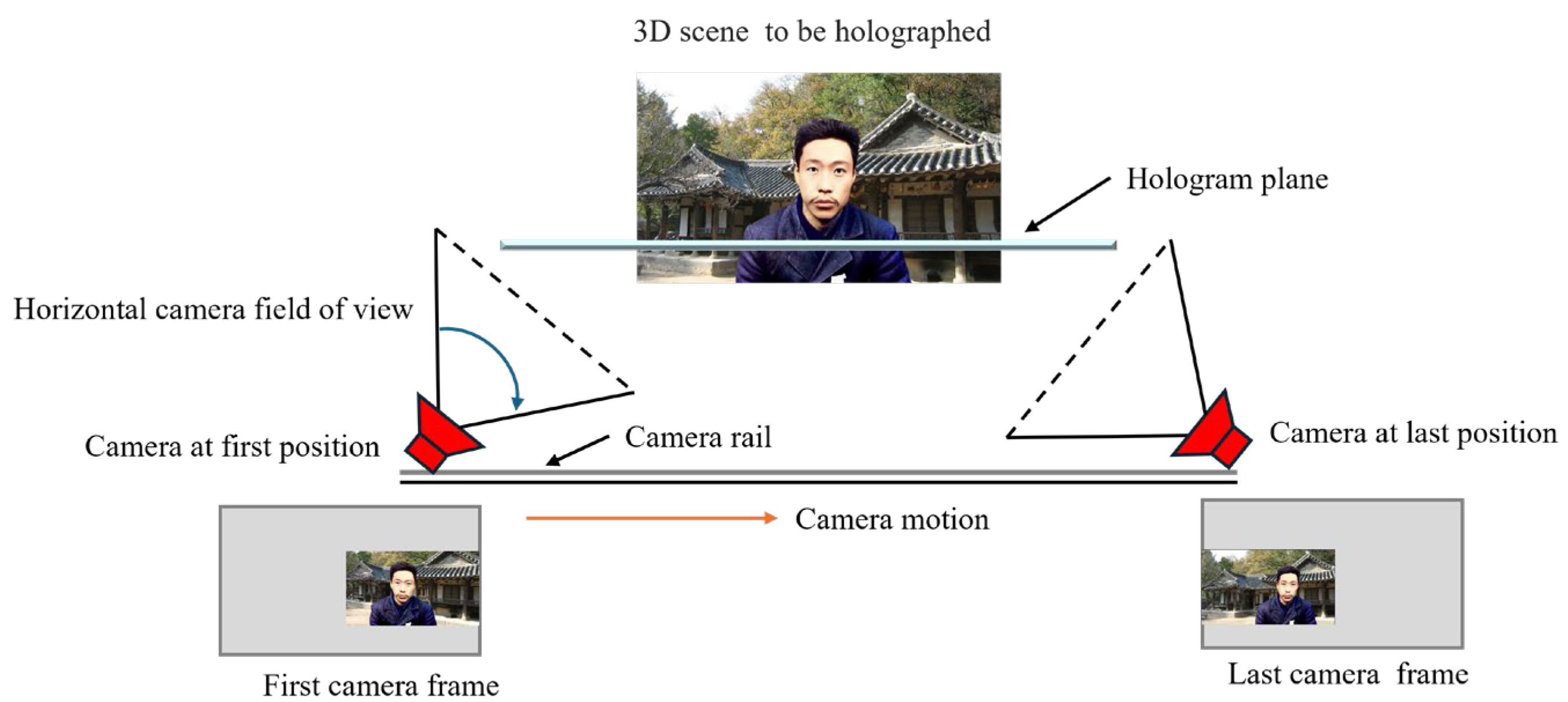
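As a generic illustration of how a single depth map can be turned into a 3D model, the Python sketch below lifts every pixel to a vertex and connects neighbouring pixels with two triangles per grid cell. It is not necessarily the authors' procedure. The function names `depth_to_vertices` and `grid_faces` are hypothetical. The sketch also assumes that larger depth values are nearer the viewer, that square pixels are mapped onto a plane of a chosen width (150 mm in the demo, matching the hologram width in the rendering-parameter table), and that depth is applied as a linear, orthographic displacement up to a chosen maximum relief rather than through a calibrated camera model.

```python
import numpy as np


def depth_to_vertices(depth: np.ndarray, width_mm: float, max_relief_mm: float) -> np.ndarray:
    """Lift a depth map into an (H, W, 3) grid of (x, y, z) vertices in mm.

    Assumptions (illustrative only): larger depth values are nearer the viewer,
    pixels are square, and depth is normalised to [0, 1] and scaled linearly
    to at most max_relief_mm (orthographic displacement, no camera model).
    """
    h, w = depth.shape
    d = depth.astype(np.float64)
    span = d.max() - d.min()
    d = (d - d.min()) / span if span > 0 else np.zeros_like(d)
    pitch = width_mm / w                          # mm per pixel on the model plane
    xs = (np.arange(w) - (w - 1) / 2) * pitch     # centred horizontally
    ys = ((h - 1) / 2 - np.arange(h)) * pitch     # image rows grow downwards, y grows upwards
    X, Y = np.meshgrid(xs, ys)
    Z = d * max_relief_mm
    return np.stack([X, Y, Z], axis=-1)


def grid_faces(h: int, w: int) -> np.ndarray:
    """Two triangles per grid cell, as indices into the flattened vertex grid.

    Both triangles are counter-clockwise when viewed from +z (the viewer side).
    """
    idx = np.arange(h * w).reshape(h, w)
    a, b = idx[:-1, :-1].ravel(), idx[:-1, 1:].ravel()   # top-left, top-right
    c, d = idx[1:, :-1].ravel(), idx[1:, 1:].ravel()     # bottom-left, bottom-right
    return np.concatenate([np.stack([a, c, b], axis=1), np.stack([b, c, d], axis=1)])


if __name__ == "__main__":
    demo = np.random.default_rng(0).random((4, 6))       # stand-in depth map
    verts = depth_to_vertices(demo, width_mm=150.0, max_relief_mm=20.0)
    faces = grid_faces(*demo.shape)
    print(verts.shape, faces.shape)  # (4, 6, 3) (30, 3)
```

The resulting vertex and face arrays can be written to any mesh format and rendered from multiple viewpoints. A regular grid keeps the topology trivial at the cost of stretched triangles at depth discontinuities.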

3.2. Acquisition of Perspective Images

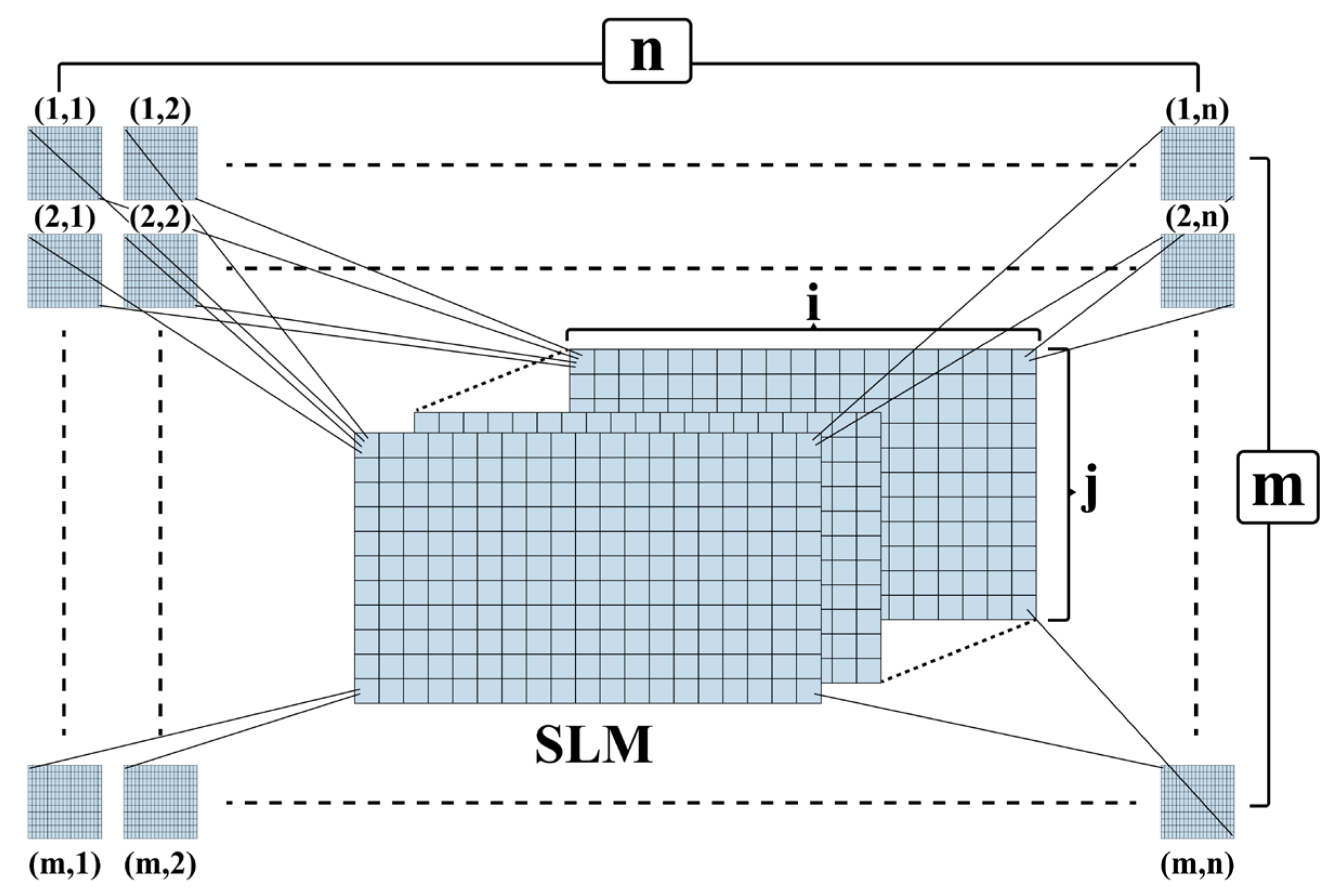

3.3. Digital Hologram Encoding and Hogel Generation

4. Results and Discussion

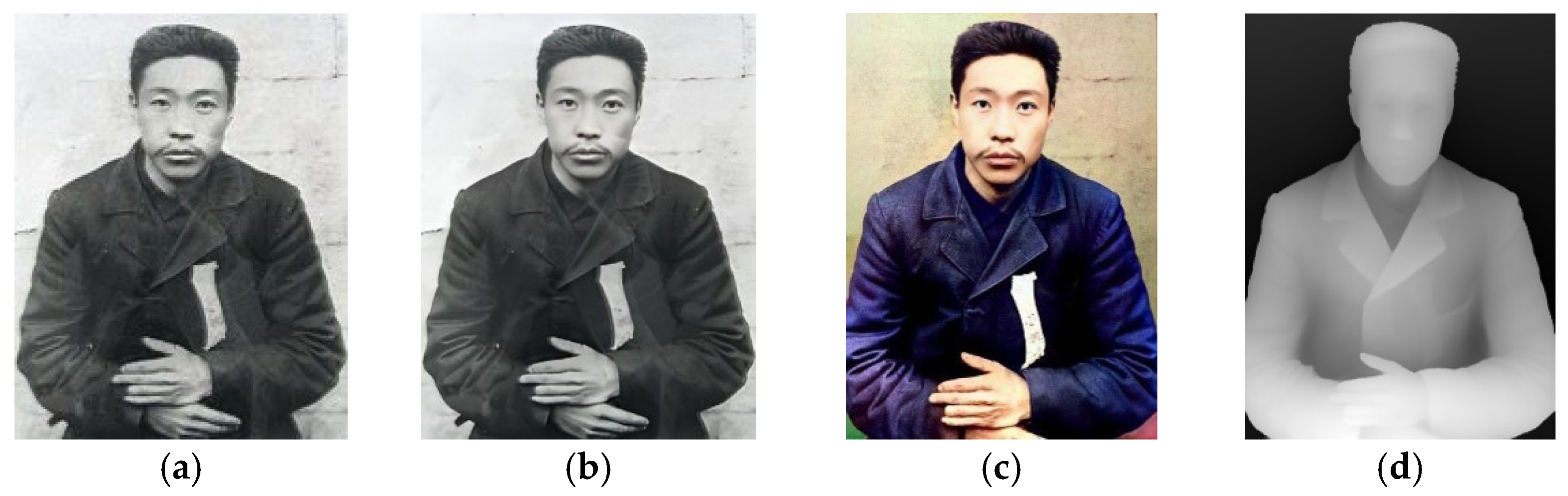

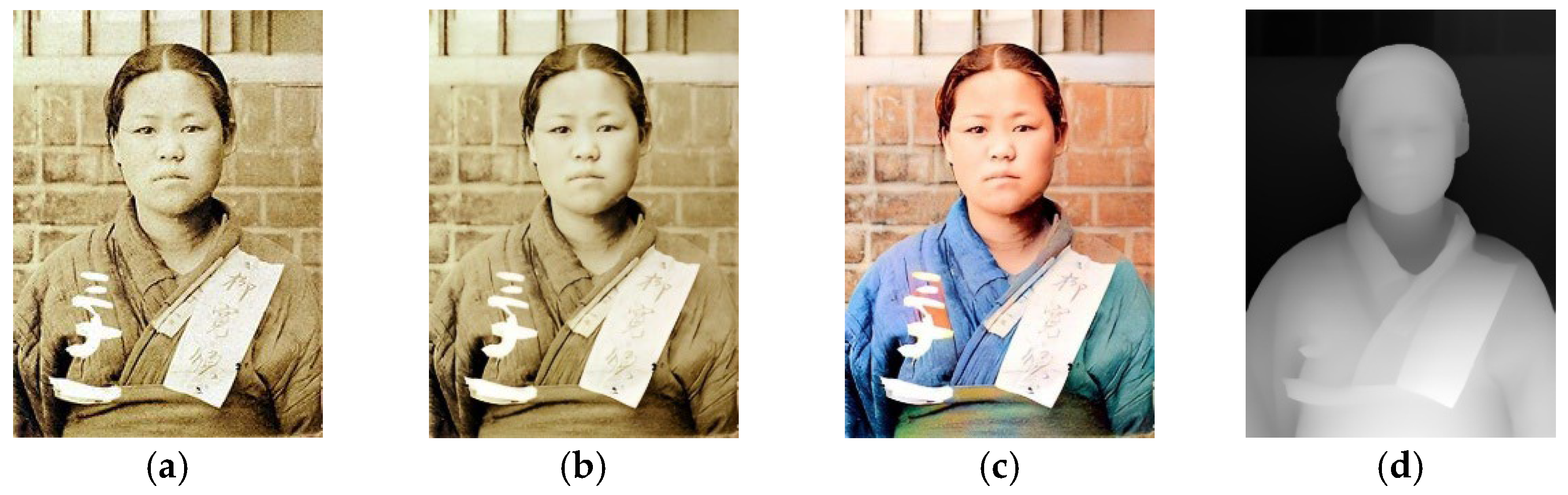

4.1. Dataset

4.2. Holographic Plates for Holographic Stereogram Printer Recordings

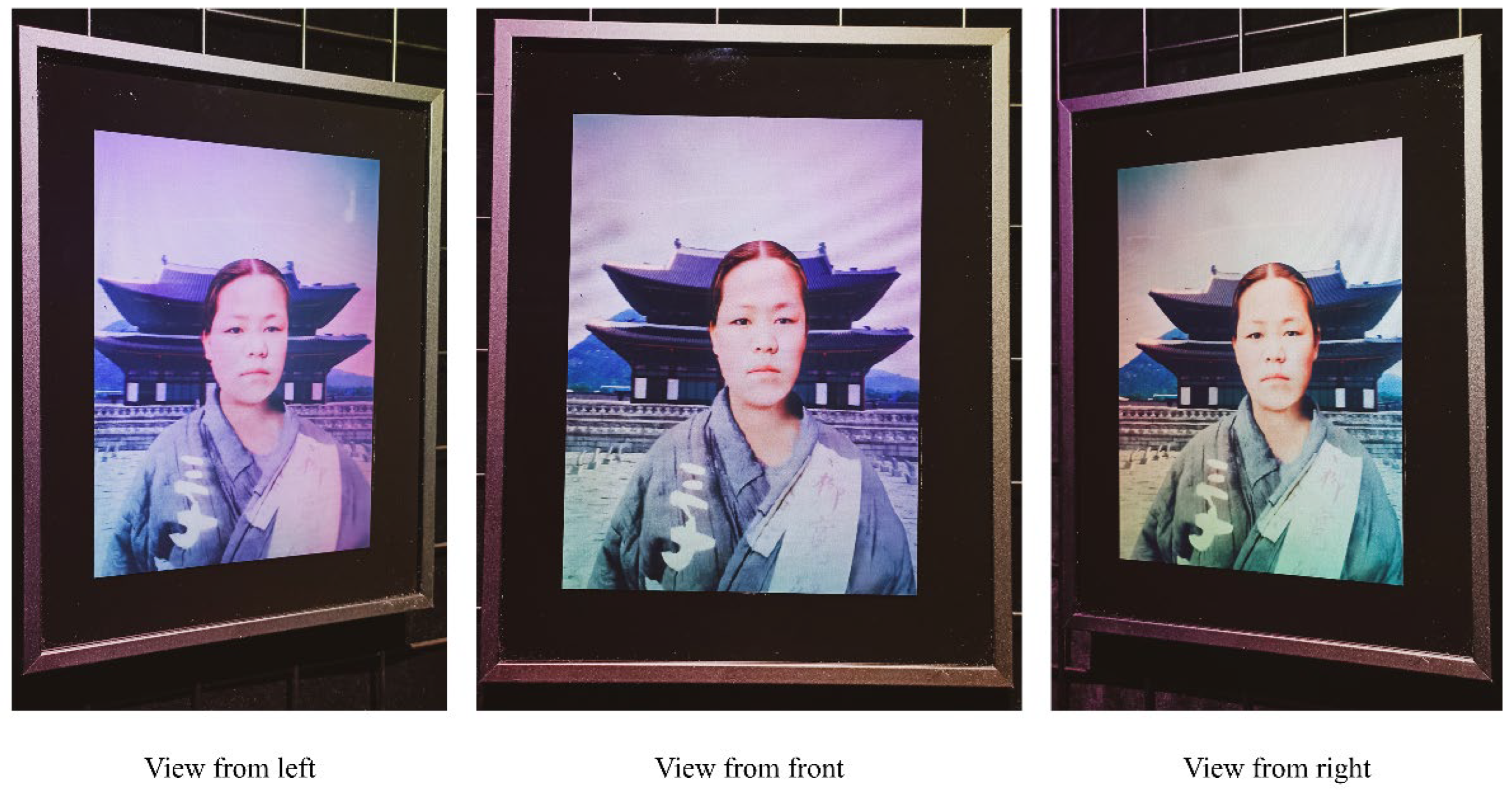

4.3. Final Holograms

4.4. Results and Analysis

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional neural network |
| DCNF | Deep convolutional neural field |
| CRF | Conditional random field |
| SLM | Spatial light modulator |
| FOV | Field of view |


| Image Width, 250 μm Hogel (pixels) | Image Height, 250 μm Hogel (pixels) | Frames Rendered | Camera Distance (mm) | Camera Track (mm) | Horizontal Camera FOV (°) | Hologram Width (mm) | Hologram Height (mm) |
|---|---|---|---|---|---|---|---|
| 880 | 660 | 180 | 350 | 488 | 27.82 | 150 | 200 |
| 880 | 660 | 300 | 480 | 795 | 27.82 | 150 | 200 |
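The camera parameters above can be turned into per-frame camera positions. The sketch below assumes, since the table does not state it, that the camera moves along a straight track and that the rendered frames are evenly spaced from one end of the track to the other. It also applies the standard pinhole relation w = 2d·tan(FOV/2) for the width visible at camera distance d. The helper names `camera_positions` and `visible_width_mm` are hypothetical.

```python
import math


def camera_positions(track_mm: float, frames: int) -> list[float]:
    """Evenly spaced x-positions (mm) along a straight track centred on 0.

    Assumption: frames are taken at equal intervals that include both ends
    of the track.
    """
    if frames < 2:
        raise ValueError("need at least two frames")
    step = track_mm / (frames - 1)
    return [-track_mm / 2 + i * step for i in range(frames)]


def visible_width_mm(distance_mm: float, fov_deg: float) -> float:
    """Width of the plane seen at distance_mm by a camera with this horizontal FOV."""
    return 2.0 * distance_mm * math.tan(math.radians(fov_deg) / 2.0)


# The two configurations from the table: (frames rendered, camera distance, camera track).
for frames, distance, track in [(180, 350, 488), (300, 480, 795)]:
    xs = camera_positions(track, frames)
    print(f"{frames} frames: spacing {xs[1] - xs[0]:.2f} mm, "
          f"visible width at {distance} mm: {visible_width_mm(distance, 27.82):.1f} mm")
```

Under these assumptions, both configurations work out to a spacing of roughly 2.7 mm between neighbouring camera positions.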

| Metric | Original Image | Proposed Methods |
|---|---|---|
| Resolution (pixels) | 500 × 393 | 880 × 660 |
| Brightness | 133.50 | 165.85 |
| Contrast | 79.26 | 86.24 |
| Sharpness | 58.34 | 313.27 |
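The table does not define its metrics. One common set of definitions, assumed here rather than taken from the paper, is:

- brightness as the mean gray level
- contrast as the standard deviation of gray levels (RMS contrast)
- sharpness as the variance of the Laplacian

The NumPy sketch below computes all three for a grayscale array. `image_metrics` is a hypothetical helper, and its numbers need not reproduce the values in the table.

```python
import numpy as np


def image_metrics(gray: np.ndarray) -> dict[str, float]:
    """Brightness, contrast and sharpness of a grayscale image (0-255 scale).

    Assumed definitions: brightness = mean intensity, contrast = standard
    deviation of intensity (RMS contrast), sharpness = variance of the
    4-neighbour Laplacian over interior pixels.
    """
    g = np.asarray(gray, dtype=np.float64)
    # Discrete Laplacian with kernel [[0, 1, 0], [1, -4, 1], [0, 1, 0]]; border pixels are skipped.
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return {
        "brightness": float(g.mean()),
        "contrast": float(g.std()),
        "sharpness": float(lap.var()),
    }


if __name__ == "__main__":
    # Synthetic 8x8 checkerboard of 0/255 pixels as a stand-in image.
    board = (np.indices((8, 8)).sum(axis=0) % 2) * 255
    print(image_metrics(board))
```

A frequently used OpenCV equivalent for the sharpness term is `cv2.Laplacian(gray, cv2.CV_64F).var()`. By default it uses the same 3 × 3 kernel but pads the borders instead of skipping them, so results can differ slightly.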

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Narzulloev, O.M.; Choi, J.; Aralov, J.F.U.; Hwang, L.; Gentet, P.; Lee, S. Transforming Monochromatic Images into 3D Holographic Stereograms Through Depth-Map Extraction. Appl. Sci. 2025, 15, 5699. https://doi.org/10.3390/app15105699

Narzulloev OM, Choi J, Aralov JFU, Hwang L, Gentet P, Lee S. Transforming Monochromatic Images into 3D Holographic Stereograms Through Depth-Map Extraction. Applied Sciences. 2025; 15(10):5699. https://doi.org/10.3390/app15105699

Chicago/Turabian Style: Narzulloev, Oybek Mirzaevich, Jinwon Choi, Jumamurod Farhod Ugli Aralov, Leehwan Hwang, Philippe Gentet, and Seunghyun Lee. 2025. "Transforming Monochromatic Images into 3D Holographic Stereograms Through Depth-Map Extraction" Applied Sciences 15, no. 10: 5699. https://doi.org/10.3390/app15105699

APA Style: Narzulloev, O. M., Choi, J., Aralov, J. F. U., Hwang, L., Gentet, P., & Lee, S. (2025). Transforming Monochromatic Images into 3D Holographic Stereograms Through Depth-Map Extraction. Applied Sciences, 15(10), 5699. https://doi.org/10.3390/app15105699