Abstract

A novel electromyography (EMG)-based wheelchair interface was developed that uses contractions from the temporalis muscle to control a wheelchair. To aid in the training process for users of this interface, a serious training game, Limbitless Journey, was developed to support patients. Amyotrophic Lateral Sclerosis (ALS) is a condition that causes progressive motor function loss, and while many people with ALS use wheelchairs as mobility devices, a traditional joystick-based wheelchair interface may become inaccessible as the condition progresses. Limbitless Journey simulates the wheelchair interface by utilizing the same temporalis muscle contractions for control of in-game movements, but in a low-stress learning environment. A usability study was conducted to evaluate the serious-game-based training platform. A major outcome of this study was qualitative data gathered through a concurrent think-aloud methodology. Three cohorts of five participants participated in the study. Audio recordings of participants using Limbitless Journey were transcribed, and a sentiment analysis was performed to evaluate user perspectives. The goal of the study was twofold: first, to perform a think-aloud usability study on the game; second, to determine whether accessible controls could be as effective as manual controls. The user comments were coded into the following categories: game environment, user interface interactions, and controller usability. The game environment category had the most positive comments, while the most negative comments were primarily related to usability challenges with the flexion-based controller. Interactions with the user interface were the main topic of feedback for improvement in future game versions. This game will be utilized in subsequent trials conducted at the facility to test its efficacy as a novel training system for the ALS population. The feedback collected will be implemented in future versions of the game to improve the training process.

1. Introduction

This research utilizes electromyography (EMG) technology as an alternative wheelchair control option. Other options that are available for patients with mobility and dexterity challenges include brain–computer interfaces (BCIs), head-tracking interfaces, and eye-tracking interfaces [1,2,3,4,5,6]. Specific BCI methods often convert electroencephalography (EEG) signals from the brain into commands that can be used for wheelchair movements [1]. A BCI equips patients with an intuitive system that accurately projects user intentionality in controlled settings [2]. However, EEG signals are sensitive to a variety of factors and need to be effectively amplified due to background noise when used in real-world environments [2]. The tracking of head movement is another alternative interface for hands-free wheelchairs [3]. Although this system has been shown to provide freedom of control, additional considerations should be taken into account for specific populations. In the case of neurodegenerative disorders, the eventual loss of muscle control can cause difficulty in changing head positions. With an eye-tracking interface, virtual buttons displaying wheelchair directions (forward, backward, left, right) can be pressed with a glance [4]. The eye-tracking abilities of this interface require minimal head movement and can be applied to a wide range of populations, although users with complex conditions or those with low vision may face difficulties in operating the system. EMG interfaces measure electrical signals generated during muscular contraction [5,7,8]. Leveraging hardware and software signal processing, each intentional burst of muscle contraction can be isolated. The relative magnitude of these bursts, calibrated to the patient, can be assessed and then used to trigger movement of a wheelchair based on set threshold values.
The authors have been advancing research on this EMG–wheelchair interface, which has been shown to be useful for improving mobility among ALS patients [6]. Challenges with EMG-based technology include difficulty with muscle isolation and the need for training methods [6]. The advantages of EMG systems include the minimally invasive nature of implementation and the flexibility of placement, which may be required among populations with muscle deterioration.

Early advancements in BCI, EMG, and eye-tracking interfaces laid the groundwork for later developments. More recently, hybrid brain–computer interfaces that combined BCI systems with steady-state visual evoked potentials (SSVEP) showed promise in practical applications. The multi-modality of this system increases accuracy and decreases fatigue compared to an EEG-based system only [9]. Another study sought to evaluate the usability of a BCI system coupled with eye tracking, determining that this hybrid system allows compensation when a single-modality decreases in performance [10]. Augmented reality offers another channel of user input, which further shows how multi-modal system integration is increasingly being implemented to improve the user experience [11].

The need for these types of alternative devices for mobility and dexterity is heightened for patient groups with complex and progressive pathologies, including patients with neurodegenerative disorders. Neurodegenerative disorders are progressive diseases caused by the degeneration of the central nervous system (CNS) and can result in a decline in independent mobility and hand dexterity [12]. Amyotrophic lateral sclerosis (ALS) is the most common motor neuron disease, with a prevalence rate of 3.40/100,000 in North America and 5.40/100,000 in Europe [13]. The onset and progression of the disease lead to weakness in the limbs and trunk, which can negatively impact a person’s ability to perform activities of daily living [14]. Oftentimes, movement of the upper and lower extremities is severely compromised and can limit the use of a traditional joystick for powered wheelchairs.

Previous research investigated the development and assessment of a temporalis EMG wheelchair interface that was evaluated with ALS patients for hands-free control [6]. The temporalis muscle is responsible for the elevation of the mandible and is located laterally on the head [15]. Despite the motor neuron degeneration that occurs in ALS progression, the temporalis muscle has a lesser decrease in its contraction force over time than the muscles of the digits [16]. The wheelchair interface uses signal discretization of muscle contraction magnitude to control steering and movement. For instance, a calibrated “hard” flex corresponds to a forward movement, “medium” flex to a right turn, and a “light” flex to a left turn [6]. This interface was tested among ALS patients in a clinical setting, and although independent control was established, patients found reliable discretization of temporalis flexions difficult [6]. In contrast to other wheelchair control systems, the utilization of EMG technology allows a high degree of modulation. Though the temporalis muscle was selected because of the ease of electrode placement alongside a lessened impact on contraction force, other muscles could be also used. Because surface electromyography is utilized, individuals with ALS or other disorders may benefit from the selection of other muscle groups. Additionally, the EMG–wheelchair interface can be attached to any power wheelchair system, as a housing is placed over the traditional joystick in order to provide navigational control. Training prior to real-world implementation of the interface could reduce this initial barrier to using the wheelchair [17].
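The discretization described above can be illustrated with a minimal sketch. The threshold values, the function name `classify_flex`, and the normalized 0 to 1 magnitude scale are hypothetical and chosen only for illustration; the actual calibration procedure and values used by the interface are not specified here.

```python
# Hypothetical calibrated thresholds on a normalized 0-1 magnitude scale.
# Real values would come from a per-user calibration step.
THRESHOLDS = {"light": 0.2, "medium": 0.5, "hard": 0.8}


def classify_flex(peak, thresholds=THRESHOLDS):
    """Map the peak magnitude of one contraction burst to a command.

    Follows the mapping described in the text: hard -> forward,
    medium -> right turn, light -> left turn.
    """
    if peak >= thresholds["hard"]:
        return "forward"
    if peak >= thresholds["medium"]:
        return "right"
    if peak >= thresholds["light"]:
        return "left"
    return None  # below the lowest threshold: treated as no command


commands = [classify_flex(p) for p in (0.1, 0.3, 0.6, 0.9)]
```

Because the three commands share one muscle and differ only in magnitude, a contraction that lands slightly above or below a boundary yields a different command, which is consistent with the difficulty patients reported in reliably discretizing their flexions.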

As training with the hands-free wheelchair can be challenging, serious training games were explored to support patients in learning how to control the electromyography-actuated system [6]. Serious games are video games used for a purpose in addition to entertainment [18]. There have been many recent advancements in gamified training, which has been increasingly implemented in clinical settings. Most recently, PhantomAR was developed to improve rehabilitation outcomes for patients with phantom limb pain [19]. This platform allowed users to immerse themselves in a task-based mixed reality environment. A systematic study of motorized, virtual reality, and electrical simulation games shows how these platforms can be useful for stroke rehabilitation [20], specifically in improving cognitive, emotional, and social outcomes. One study tested racing, dexterity, and rhythm-based video games against a standardized EMG training tool (MyoBoy) [21]. Users expressed more motivation and engagement when playing the gamified training games [21]. In an analysis comparing equivalent gamified learning and traditional learning methods, gamified methods promoted more learning, and this difference was attributed to higher engagement and flow levels [22].

The authors’ prior research has focused on using gamified training to improve training efficacy for electromyography-controlled accessibility devices, including pediatric prostheses [23]. Early evaluations of EMG control mechanisms led to a variety of gamified control training schemes, which were created to improve usability and reduce rejection rates among the patients [24]. Early games such as “Sushi Slap”, “Crazy Meteor Cleaner”, and “Beeline Border Collie” utilized a flex threshold for gameplay [23]. For more complex hands-free wheelchair controls to steer the device, a new training platform was created. Limbitless Journey is a serious game designed to practice flexion control of the temporalis muscles in an engaging but low-stress environment. This virtual environment is controlled by muscle flexions that parallel the controls required to operate the wheelchair. Muscle activity is read by a novel EMG video game “flex controller” placed on the temporalis muscle that controls in-game character movements.

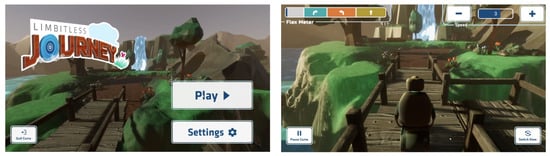

Limbitless Journey was developed as a response to the need for a safe training space for new users of the novel temporalis-muscle-based wheelchair control system. The game’s home screen and environment are pictured in Figure 1. The earliest iteration took the form of a racing game, but it was quickly determined that this category of game was incompatible with the wheelchair controls. As such, racing mechanics made the game less engaging and less realistic to the training practice needed by the users. The game then took form as a simulation of the wheelchair in an open space and was quickly adapted according to the users’ needs. This iteration of the game included a space to explore in a friendly environment with elements from cozy games, which are games known for an inviting and calming atmosphere [25], with challenges ranging from basic steering to managing deliberate obstacles. As the system evolved, it became obvious that the users would not be able to use the user interface (UI) being presented to them using a conventional keyboard and mouse. The eye-tracking interface was added to alleviate the need to manipulate controls by hand and the need for a proctor to constantly help with UI issues.

Figure 1.

Limbitless Journey pictured. Home screen (Left) and game environment (Right). The controls leverage both eye tracking and an EMG flexion schema. Participants are able to customize game settings such as player point of view and character speed using on-screen buttons.

2. Materials and Methods

This investigation is a usability study to both improve the overall usability of the system and determine if the eye-tracking interface is a suitable alternative for users with diminished hand dexterity. This study is underpinned by Flow Theory as a theoretical framework for a think-aloud study [26]. Flow Theory is grounded in users achieving optimal states of focus, control, and enjoyment. This methodology is well suited to the evaluation of a video game, and this study evaluated user experiences and opinions of a serious video game, Limbitless Journey, in order to refine the game prior to its release to patients. This research serves as a continuation of preliminary analysis of the game, which quantitatively evaluated the efficacy of the game’s design, specifically its eye-tracking interface [17].

The recruited participants met specific inclusion criteria: they were between the ages of 18 and 65, were able to consent, had never used the wheelchair EMG interface or played the EMG-actuated training video game, and had the ability to voluntarily contract their temporalis muscle. Participants granted informed, verbal consent in accordance with a procedure approved by the University of Central Florida’s Institutional Review Board (STUDY00004664).

While Limbitless Journey’s intended demographic consists of those needing hands-free control of wheelchairs, including ALS patients, the able-bodied population was selected for the completion of this study. It is important to note that the intention of the study was to examine the eye-tracking interface’s usability efficacy as an alternative method for in-game navigation. As such, it was necessary for the selected population to be capable of using both traditional (mouse-and-keyboard) and alternative (eye-tracking) methods for comparison. Identifying usability and interface issues prior to interaction with patients is critical for optimizing patient-focused research. Game-based improvement prior to real-world introduction may help to increase user engagement and training.

2.1. Electromyographic Signal Processing

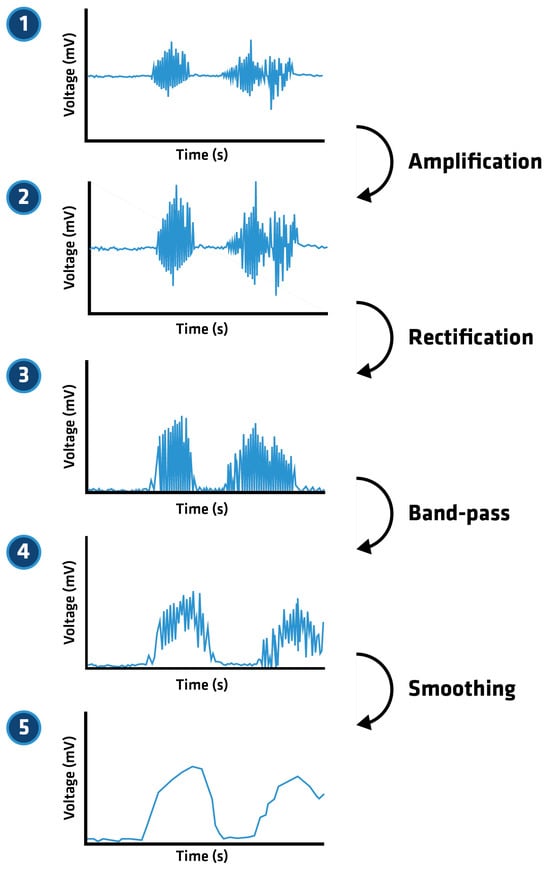

The assessment of electromyographic signals utilizes both hardware and software to create a refined signal. Depending on the size of the muscle motions, the body will recruit more or fewer motor units [7,8], resulting in variable magnitudes of voltage. The processing routine uses amplification, rectification, band-pass filtering, and smoothing to achieve a signal that can be usable for magnitude peak finding. A representation of this process can be seen in Figure 2. A calibration threshold set is applied on top of the signal, with different thresholds corresponding to activation of different action states in the training system. In the corresponding wheelchair control system, this triggers different motor combination states to enable steering control.

Figure 2.

Graphic depicting the EMG processing performed by the EMG interface for the hands-free flexion-based wheelchair controller. Note that this graphic is a representation of electromyographic signal processing and not a collected signal.
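As a rough illustration of the software side of this routine, the sketch below applies a gain, removes the DC offset, full-wave rectifies the signal, smooths it with a moving average, and reads off the peak magnitude. It is a simplified stand-in, not the interface's implementation: band-pass filtering is assumed to have been applied upstream, and the gain, window length, and synthetic test signal are arbitrary assumptions.

```python
import math


def envelope(samples, gain=1.0, window=25):
    """Amplify, remove DC offset, rectify, and smooth a raw signal.

    Band-pass filtering is assumed to have happened before this step.
    """
    amplified = [gain * s for s in samples]
    mean = sum(amplified) / len(amplified)
    rectified = [abs(s - mean) for s in amplified]  # full-wave rectification

    smoothed = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            running -= rectified[i - window]  # drop sample leaving the window
        smoothed.append(running / min(i + 1, window))
    return smoothed


# Synthetic signal (illustrative only): quiet baseline with one stronger burst.
signal = [0.05 * math.sin(0.9 * n) for n in range(300)]
for n in range(100, 200):
    signal[n] = 0.8 * math.sin(0.9 * n)

env = envelope(signal, gain=2.0, window=25)
peak = max(env)  # magnitude that would be compared against calibrated thresholds
```

The smoothed envelope stays low during the baseline and rises during the burst, so a single peak value per contraction can be compared against the calibration thresholds.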

2.2. Procedure Overview

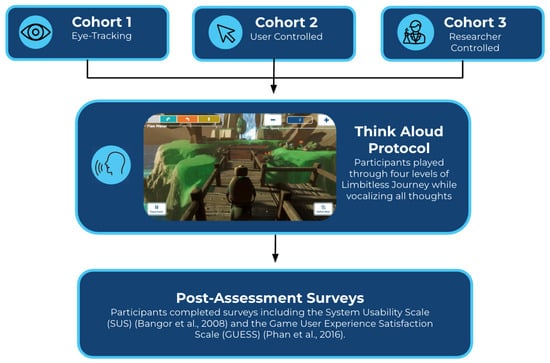

The study divided participants into three cohorts to test different methods of controlling the video game. Each cohort was composed of five individuals, who used unilateral contractions of the temporalis muscle to control in-game movements; however, the user interface to establish in-game control varied. Cohort 1 used the eye-tracking interface to navigate, Cohort 2 required participants to use a traditional mouse to make their own selections, and Cohort 3 allowed researchers to make selections at the request of the participants. Past research in the field has shown that groups of five are capable of identifying 85% of usability issues in testing [27]. Figure 3 highlights the focus of the cohorts in which the participants were placed.

Figure 3.

Procedural overview. Participants are assigned to one of three cohorts based on in-game navigation modalities. Cohort 1 utilizes the eye-tracking interface to allow users to interact with game elements. Cohort 2 involves users interacting with the game via mouse control. Cohort 3 requires the user to prompt a researcher to select game commands. All participants then play through the game while vocalizing their thoughts and feedback. Upon completion of the game, participants complete the System Usability Scale (SUS) [28] and the Game User Experience and Satisfaction Scale (GUESS) [29].

Quantitative data were collected via the Game User Experience and Satisfaction Scale (GUESS) [29] and the System Usability Scale (SUS) [28] questionnaires after gameplay. Qualitative data collection used a concurrent think-aloud methodology [17].

2.3. Data Collection

Participants completed post-assessment surveys after playing through all four levels of the game. The Game User Experience and Satisfaction Scale, designed to evaluate overall user satisfaction with video games, consists of 55 items further divided into nine categories [29]. Each of the items is evaluated using a seven-point Likert Scale. A sample size of five users responding on the Likert Scale has been shown to be effective in finding usability issues [27].

The System Usability Scale (SUS) is a ten-item scale evaluated via five response options, modeled after the Likert Scale [28]. The items allow a consistent measure of usability, and scores are displayed on a scale from 0 to 100.
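For readers unfamiliar with how ten five-point responses become a 0 to 100 score, the sketch below follows the standard SUS scoring rule: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5. The function name `sus_score` and the example responses are illustrative and are not study data.

```python
def sus_score(responses):
    """Compute a SUS score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item responses")
    total = 0
    for item, response in enumerate(responses, start=1):
        if item % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5


# Illustrative responses only (not from the study).
neutral = sus_score([3] * 10)
best = sus_score([5, 1] * 5)
```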

The qualitative portion of the study consisted of a concurrent think-aloud protocol, in which the subjects complete the key task while simultaneously verbally conveying their thoughts [30]. Concurrent think-aloud protocols have been shown to result in accurate verbalization of the working memory, as per Cognitive Load Theory, and are a viable method of collecting qualitative information on participants’ thought processes [31,32]. Additionally, think-aloud requirements have not been shown to influence cognitive processes or the results of the tasks, allowing participants to remain in a focused state as per Flow Theory [32]. Current theories imply that decision-making thinking is dual-process and often is conducted through two pathways: Type 1, which is rapid and automatic, and Type 2, which is slower-paced and contemplative [33]. Based on the chosen concurrent think-aloud methodology, and the design of the game, it was expected that only Type 1 responses would be elicited from participants. The think-aloud protocol has also been shown to have no impact on cognitive load from a self-regulation perspective, and exercising working memory during the think-aloud process should not have a negative impact on participants [34]. Furthermore, the system is calibrated to have a low-pass filter zone, such that users can make minor motions, talk, or even gently drink water while using the system. This may add complexity for a user issuing a new command while talking, but it does not hinder the ability to complete the protocol while controlling the game.

Users were instructed to provide their comments on factors of their choosing and were prompted as needed. Researchers silently pointed to their mouth for prompting in order to avoid the distraction of a verbal reminder. Participants were encouraged to share all thoughts that occurred during the play-through. Once gameplay was completed, participants were asked to share any final thoughts with the researchers. The audio was recorded through Zoom® [35], transcribed via Temi™ [36], and subsequently analyzed in ATLAS.ti [37]. Think-aloud procedures are useful in detecting errors in interfaces in a time-efficient manner. This allows conditions to be retested after major interface errors are corrected [38].

2.4. Analysis Methods

ATLAS.ti is a qualitative analysis software designed to assist researchers in managing and drawing conclusions from large datasets through features such as code building and data visualization [39]. While the software has the potential for AI-assisted coding and sentiment analysis, the data in this study were opted out of this service to preserve confidentiality. As an additional confidentiality measure, all identifying data of users were removed before the transcripts were uploaded into the software. Each transcript was only identifiable by a secure user ID number.

Coding was created by the researchers and organized through the qualitative analysis software in order to determine a general sentiment analysis. Compared to machine learning or dictionary-based coding systems, manual coding was found to have superior accuracy [40]. Qualitative coding of textual data involves examining individual quotations within a dataset and sorting them into predetermined categories referred to as codes [41]. These codes act as tags for specific comments made in the transcripts. For this study’s purpose, each user comment was coded as positive, neutral, or negative. Each transcript was individually coded, and three major themes emerged from the data. These themes were then split into further categories according to the codes. The final coded categories were positive, neutral, or negative within each of the three themes: environment, usability, and user interface (UI). Coding was a collaborative effort between a pair of researchers in an attempt to reduce potential bias. Consensus coding is a team-based approach to analyzing qualitative data sets and has been shown to yield reliable results [42].

The categories had rigid outlines that contained pre-set elements of the game. The environment included the user’s gameplay experience, graphics, audio, in-game features, and level theming. Usability referred to the users’ ability to navigate the game controls, as well as the perceived difficulty of the flexion-based controller. UI categorization was reserved for interactions with on-screen commands, game setup, and the eye-tracking interface.

3. Results

Following the completion of the first 15 data points of the study, an error was noted within the user interface regarding the implementation of the eye-tracking interface. Due to the inability to complete in-game levels without additional assistance, a revised version of the game build was developed for continued evaluation. The initial usability bug only affected one cohort, as it was limited to the eye-tracking interface. Five additional consenting participants were added to compensate for the lack of applicable data from one cohort, yielding a total of 20 participants, with 15 participants’ data analyzed.

The overall comments consisted of 58.9% positive sentiments, 26.3% negative sentiments, and 14.8% neutral sentiments. A binomial test was used to show that positively coded comments accounted for a higher proportion than expected (0.33). The expected count was based on the assumption that there would be an even distribution of negative, positive, and neutral comments, leading to a proportion of approximately 0.33 in each category. Based on this, there were a significantly greater number of positive sentiments than negative sentiments within each cohort (p < 0.01). Moreover, chi-squared test results showed that the overall numbers of negative and positive sentiments between each of the cohorts were also significantly different (p < 0.01), with the environmental perceptions contributing the most to this difference. However, no statistically significant differences between the cohorts could be identified. A proportion test also showed that there was no statistical significance in negative perceptions, indicating that all cohorts contained proportionally similar negative sentiments.
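A minimal sketch of such a binomial test is given below. It assumes an exact one-sided test against the stated expected proportion of 0.33; the source does not specify whether the test was one- or two-sided or which software was used. The counts (179 positive comments out of 304) are obtained by summing the per-cohort counts reported in Sections 3.2 to 3.4, and they agree with the 58.9% figure above.

```python
from math import comb

EXPECTED = 0.33  # expected proportion under an even three-way split, as stated


def binomial_upper_tail(k, n, p0):
    """Exact one-sided p-value: P(X >= k) for X ~ Binomial(n, p0)."""
    return sum(
        comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(k, n + 1)
    )


positive, total = 179, 304  # summed from the per-cohort counts in Section 3
observed_proportion = positive / total
p_value = binomial_upper_tail(positive, total, EXPECTED)
```

Under these assumptions the p-value falls far below 0.01, in line with the reported result.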

3.1. Reporting SUS and GUESS Scores

A quantitative analysis of the SUS [28] and GUESS [29] scores from this study has been previously reported in a conference manuscript [17]. Table 1 is included here to report the scores for the overall surveys and the GUESS subscale categories. The reporting showed an “acceptable” score for the SUS reporting, while the GUESS score was 48.88 out of a possible 63. Individually calculating means for each cohort resulted in 5.49 for Cohort 1, 5.19 for Cohort 2, and 5.31 for Cohort 3. Furthermore, reviewing the subscale scores, which were rated on a seven-point Likert scale, the scoring provides valuable insight on the relative strengths of the training software. As anticipated, the scoring for “social connectivity” was low. This is attributable to it being a single-person game without features that would lead to perceived connectivity. Overall, the GUESS scores are considered positive indications of the usability of the system.

Table 1.

Average System Usability Scale (SUS) scores, which range from 0 to 100 [28], and average Game User Experience and Satisfaction Scale (GUESS) scores, with individual sub-scores ranging between 1 and 7 [29]. The overall SUS score of 59 is categorized in the “OK” range of the designated scale [28]. All GUESS sub-scores were above 4, with the exception of social connectivity [29]. GUESS sub-scores above 4 indicate positive perceptions regarding the given category [29].

3.2. Environmental Factors

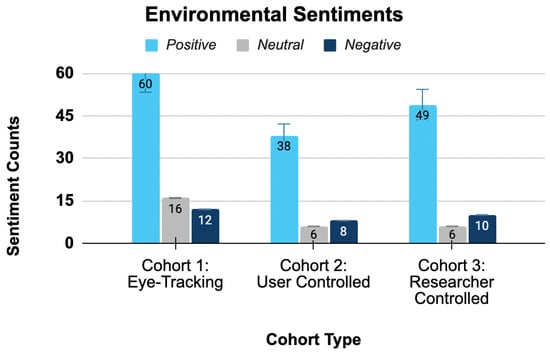

Environmental sentiments were the most frequently recorded statements by participants, with 208 pieces of feedback, and the effect size was small (Cramér’s V = 0.083). Cohort 1, which utilized the eye-tracking interface, was found to have made 60 positive, 16 neutral, and 12 negative remarks when reporting their perceptions of Limbitless Journey. Cohort 2, with a participant-controlled mouse and keyboard, reported thirty-eight positive, six neutral, and eight negative sentiments. The researcher-assisted mouse and keyboard group, Cohort 3, made forty-nine positive, six neutral, and ten negative sentiments. The results are shown in Figure 4.

Figure 4.

Environmental sentiment breakdown by cohort. Cohort 1 provided the largest number of positive environmental sentiments. However, there was no statistically significant difference between cohorts for positive, negative, or neutral sentiments. The proportion of positive sentiments within each cohort was statistically larger than an evenly distributed comment count.

The proportion of positive sentiments in Cohort 1 was 68.2% (95% CI: 59%, 78%). The proportion of positive sentiments in Cohort 2 was 73.1% (95% CI: 61%, 85%). The proportion of positive sentiments in Cohort 3 was 75.4% (95% CI: 65%, 86%). A binomial test showed that there was a significantly greater number of positive than negative sentiments, contrary to what would be expected (0.33 in each category) (p < 0.01), but no statistical significance was found when comparing positives, negatives, and neutrals between environmental sentiments in each cohort. However, Cohort 1 did have a higher count of positive comments (60) for environmental sentiments than any other cohort.
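The proportions and intervals above can be approximated with a normal-approximation (Wald) interval, as sketched below. The source does not state which interval method was used, so the choice of a Wald interval is an assumption; it gives intervals close to those reported. The counts are the environmental sentiment counts given in this section.

```python
from math import sqrt

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal distribution


def wald_interval(successes, n, z=Z_95):
    """Return (proportion, lower, upper) using the normal approximation."""
    p = successes / n
    half_width = z * sqrt(p * (1 - p) / n)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


# (positive comments, total environmental comments) per cohort
environment_counts = {
    "Cohort 1": (60, 60 + 16 + 12),
    "Cohort 2": (38, 38 + 6 + 8),
    "Cohort 3": (49, 49 + 6 + 10),
}
intervals = {
    name: wald_interval(k, n) for name, (k, n) in environment_counts.items()
}
```

Note that a Wald interval collapses to zero width when the observed proportion is 0 or 1, which is one reason it is often avoided for very small samples.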

3.3. Usability Factors

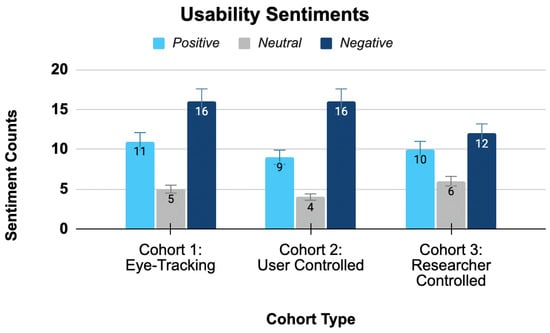

Usability sentiments included a lower count of positive sentiments with a small effect size (Cramér’s V = 0.078). Cohort 1 expressed eleven positive, five neutral, and sixteen negative sentiments on usability factors. Cohort 2 reported a total of nine positive, four neutral, and sixteen negative sentiments. Cohort 3 communicated ten positive, six neutral, and twelve negative sentiments in regards to the game. A bar chart displaying the feedback breakdown between cohorts is shown in Figure 5.

Figure 5.

Usability sentiment breakdown by cohort. All cohorts had similar counts of negative sentiments. While there was no statistically significant difference between the cohorts in terms of sentiment counts, the overall positive sentiments had a higher proportion than expected (0.33).
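The effect size reported for this category can be reproduced from the cohort-by-sentiment counts using the usual definition of Cramér's V, the square root of the chi-squared statistic divided by n times (min(rows, columns) minus 1). The sketch below is illustrative; the function name `cramers_v` is an assumption, and the source does not state which software computed the reported values.

```python
from math import sqrt


def cramers_v(table):
    """Cramér's V for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)

    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected

    k = min(len(table), len(table[0]))
    return sqrt(chi2 / (n * (k - 1)))


# Rows: Cohorts 1-3; columns: positive, neutral, negative usability comments.
usability = [
    [11, 5, 16],
    [9, 4, 16],
    [10, 6, 12],
]
v_usability = cramers_v(usability)  # approximately 0.078
```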

The proportion of positive sentiments in Cohort 1 was 34.4% (95% CI: 18%, 51%). The proportion of positive sentiments in Cohort 2 was 31.0% (95% CI: 14%, 48%). The positive sentiment proportion in Cohort 3 was 35.7% (95% CI: 18%, 54%). A binomial test showed that there were a significantly larger number of overall positive perceptions than the expected count (0.33) (p < 0.01). All cohorts had similar counts of negatives and positives for usability factor sentiments, and so no statistically significant difference between the cohorts was found.

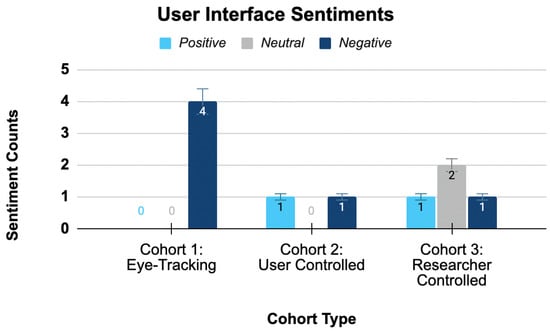

3.4. User Interface Factors

Feedback across all cohorts in regards to user interface was limited, as shown in Figure 6, and had a large effect size (Cramér’s V = 0.577). Cohort 1 expressed zero positive, zero neutral, and four negative sentiments in regards to their eye-tracking interface. Cohort 2 reported a total of one positive, zero neutral, and one negative sentiment in regards to the user interface while having autonomous mouse and keyboard control. Cohort 3 communicated one positive, two neutral, and one negative UI sentiment with a researcher controlling the mouse and keyboard.

Figure 6.

User interface sentiment breakdown by cohort. Cohort 1 had more comments, as eye-tracking comments were categorized under UI. No statistical significance was found between cohorts in terms of sentiment counts. There were also no within-cohort differences between positive, negative, and neutral sentiments.

The proportion of positive sentiments in Cohort 1 was 0% (95% CI: 0%, 0%). The proportion of positive sentiments in Cohort 2 was 50.0%, but the confidence interval was too wide for statistical interpretation. The positive sentiment proportion in Cohort 3 was 25.0%, but once again, the confidence interval was too broad for interpretation. When a binomial test was performed, the overall positive, negative, and neutral sentiment proportions were not significantly higher than expected. Notably, Cohort 1 gave the largest number of negative sentiments, which mainly pertained to the eye tracking and UI compatibility. Overall, there was an even distribution of negative, positive, and neutral sentiments between all cohorts, without a statistically significant difference.

4. Discussion

Although statistical significance was not established across the full sample, there does seem to be an observed difference between the cohorts. Overall, there were more environmental comments than comments in any other category. Since many participants did not recalibrate during the game, players spent the majority of the gameplay period in the environment, leading to more comments in this category overall. The user interface category had the fewest comments. Since the UI category primarily encompasses the eye-tracking interface, Cohort 1 participants were among the only players who contributed comments in that category.

4.1. Environmental Factors

Comparisons between the sentiments of cohorts were not found to be significant, suggesting that all participants perceived the environment similarly. Effect size was measured via Cramér’s V and indicated a small effect size (0.083). This indicates consistently high proportions of positive sentiments across the groups, with little variation. This is also reflected in the non-significant p-value for between-cohort differences, showing an even distribution of sentiments throughout. Interestingly, it was found that Cohort 1, which utilized eye tracking, provided the most feedback, followed by Cohort 3 and Cohort 2, respectively. Such a finding may be due to the increased visual attention that is necessary for successful use of the eye-tracking interface. As such, participants may be more intentional and vigilant with their gaze when completing levels and expressing their perceptions.

Based on the participant comments, the general consensus was that the environment is aesthetically pleasing, engaging, and fun. Some participants pointed out specific elements such as “I like the bioluminescent water”, “I like the deer over there”, and “…really pretty purple crystals”. Another participant noted, “I think the visuals [and] environments are really nice”.

Negative comments were centered around the character point of view (POV) in the game. One participant directly stated “I did not like the first person view”. It should be noted, however, that this aspect could be changed by the user. Moreover, some participants felt as though the baseline and lower speeds were too fast for them to effectively play through the levels, stating: “It does not go any lower, okay, So I’m stuck at this speed”.

Moving forward, these changes will be implemented. The speed will be normalized in every level, with an additional option to navigate more slowly if desired. Another change being implemented is starting the characters in the first-person POV rather than the third-person POV. The feedback suggests this is more intuitive for gameplay purposes.

4.2. Usability Factors

Statistical analysis across cohorts showed no statistical significance at this sample size. The effect size was small (0.078), indicating a weak association between cohort type and participant sentiments regarding usability; the p-value for between-cohort differences was likewise non-significant. This implies that participants, regardless of the method of in-game navigation, had a similar user experience while playing Limbitless Journey. As such, integration of the eye-tracking interface into the menu was found to be comparable in quality to traditional mouse-and-keyboard input. The researchers believe this is an important step toward ensuring that hands-free calibration and actuation of the game form a cohesive training experience, as the true population for the training will require those features. Without the eye-tracking interface, patient populations with low upper extremity control would not be able to play the game completely autonomously. Early testing and validation with this sample population play an important role in advancing the platform and streamlining testing with a more vulnerable population.

Cohort 3, which received calibration and other in-game selection through researcher assistance, reported fewer negative sentiments. This finding may have been due to the shifting of responsibility from the participant to the researcher. This third-party input while troubleshooting may have shifted user perception in a more positive direction.

A persistent issue was difficulty playing the game while complying with the think-aloud protocol. The temporalis muscle would activate when the participant was talking, which led to some frustration. For instance, one participant stated:

“Some of the flexes were a little bit difficult ’cause I think I was like accidentally flexing and then I would get stuck”.

However, the overall feedback was that the flex controller became easier to operate over time. One participant stated:

“It was definitely challenging at times, but I think it helped me get used to the controller more”.

In an effort to improve usability, the game now uses dry electrodes instead of wet gel electrodes. Dry electrodes are frequently associated with increased usability due to their mobility [43]. Another feature, already implemented in other games, is auto-calibration, which can customize the flex meter to best fit the user. This will be implemented in future iterations of the game to improve usability.
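The text does not describe how auto-calibration is performed. As one possible illustration only, the sketch below derives flex-meter zone boundaries from a short resting recording and a short maximal-contraction recording. The function name `auto_calibrate`, the use of mean amplitudes, the equal-width zones, and the sample values are all assumptions of this sketch rather than the method used in the game.

```python
from statistics import mean


def auto_calibrate(rest_samples, flex_samples, zone_count=3):
    """Return zone boundaries spanning the user's resting-to-maximal EMG range.

    Hypothetical approach: average each recording, then split the range
    between the two averages into `zone_count` equal-width zones.
    """
    rest_level = mean(rest_samples)
    flex_level = mean(flex_samples)
    if flex_level <= rest_level:
        raise ValueError("maximal contraction must exceed the resting level")

    zone_width = (flex_level - rest_level) / zone_count
    # zone_count zones need zone_count + 1 boundaries.
    return [rest_level + zone_width * i for i in range(zone_count + 1)]


# Hypothetical rectified EMG amplitudes in arbitrary units.
boundaries = auto_calibrate(rest_samples=[0.0, 0.0], flex_samples=[3.0, 3.0])
print(boundaries)
```

A per-user range of this kind would let the same zone layout fit users with weak and strong contractions, which is the stated purpose of customizing the flex meter.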

The population utilized in this study should also be considered in the interpretation of these results. While all participants were able-bodied, this was done intentionally to ensure that an optimized version of the game was developed prior to release to the ALS population.

4.3. User Interface Factors

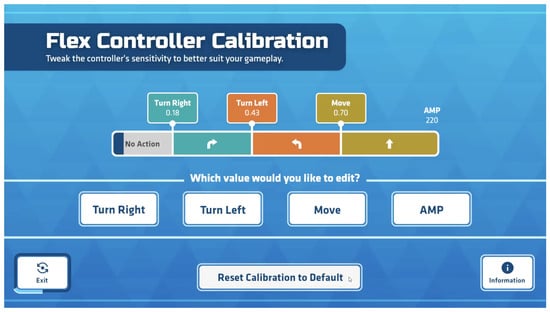

Though no statistical significance was found across cohorts, the information conveyed during data collection is of particular note. The effect size was large (0.577), suggesting a strong relationship between cohort type and UI sentiments; however, this is not reflected in the p-value, as the between-cohort results were not significant. Participants from Cohort 1 gave feedback specific to the eye-tracking interface alone. As eye-tracking is the novel inclusion within the game, such attention throughout gameplay is to be expected. Eye-tracking feedback focused on specific areas of the screen that proved difficult: all such comments described difficulty selecting controls at the edges of the screen. This feedback will be considered in improving the formatting of Limbitless Journey’s calibration menus and other control schemes. Additionally, another notable piece of feedback referred to the flex control, which can be seen in Figure 7, with one participant stating:

“I would say the main thing that got me confused was that for some reason I kept thinking the, the orange one was turned right and the blue one was turning left”.

This comment points to a more intuitive possibility for the discretization of muscle contraction levels. The first flexion level requires a softer flex and is shown as the leftmost value. However, the in-game flex meter places the right turn at this leftmost position. The individuals responsible for developing the hardware of the wheelchair interface will be consulted on this change. As the main purpose of the training game is to provide a safe practice environment for wheelchair control, the flex controller should be analogous between the two. As such, the current aim is to change both systems so that low levels of muscular contraction cause a left turn, while medium levels cause a right turn.

Figure 7. Calibration system for the flex controller. The visualization of the eye-tracking can be seen with the selection of the “exit” button. Players are able to adjust the bounds of each zone as needed prior to starting the game. “Amp” refers to the overall magnitude of the calibration scale and can be adjusted based on user comfort. Overall, the calibration screen allows for signal customization and can be adjusted to the user’s needs.
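The zone-based control described above can be sketched as a simple threshold lookup. The example below is illustrative only: the boundary values, the `amp` scaling, and the names `classify_flex`, `ZONE_BOUNDS`, and `ZONE_COMMANDS` are assumptions, and the mapping shown is the proposed one (low contraction turns left, medium contraction turns right). The text does not state what stronger contractions map to, so they are reported as `above_range` here.

```python
from bisect import bisect_right

# Hypothetical zone edges on a normalized 0-1 flex meter.
ZONE_BOUNDS = [0.2, 0.5, 0.8]
# One label per interval: below the first edge, between edges, above the last edge.
ZONE_COMMANDS = ["rest", "left", "right", "above_range"]


def classify_flex(raw_amplitude, amp=1.0, bounds=ZONE_BOUNDS, commands=ZONE_COMMANDS):
    """Map a rectified EMG amplitude to a discrete command.

    `amp` is a hypothetical overall scale factor: the raw amplitude is divided
    by it before being compared with the zone edges.
    """
    if len(commands) != len(bounds) + 1:
        raise ValueError("need exactly one command per interval")
    normalized = raw_amplitude / amp
    # bisect_right returns the index of the interval containing the value.
    return commands[bisect_right(bounds, normalized)]


for sample in (0.1, 0.3, 0.6, 0.9):
    print(sample, classify_flex(sample))
```

Keeping the zone edges and the command labels in separate lists means that swapping the left and right assignments, as the participant feedback suggests, requires changing only the label order.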

An additional point of feedback lies within providing clearer instructions for players. One participant stated that for the settings and calibration:

“It was a little difficult to know what to do when you click on those menus”.

Though there is a tutorial portion of the game labeled “information”, which can be seen in Figure 7, most participants did not select this control during the playthrough. While it is unclear whether a name change or a change in location on the screen would best draw user attention, the game developers will use this feedback moving forward to provide a better experience.

4.4. Considerations for Patients’ Symptom Progression

The initial study [6] included some patients with more advanced disease symptoms. To accommodate the symptom variations associated with different disease states, the EMG interface was revised to offer two potential input methods. Participants in the study were able to select between bilateral EMG sensors (placed on both temporalis muscles) and a unilateral input system (EMG sensors on a single temporalis muscle). Though some participants were able to quickly adapt to the bilateral system and isolate each muscle, difficulty with bilateral muscle contraction was observed among others, and an alternative system was developed to ensure an inclusive design. Past research in the field of ALS has shown that neurodegeneration is typically focal and asymmetric in nature [44], though a more formalized, specific analysis regarding dystrophy of facial muscles is yet to be completed. The unilateral EMG input alternative enables users to control directionality, both in the game and in the wheelchair, through discretization of the contraction magnitude of their preferred temporalis muscle. This training game has been developed to minimize training complexity and to provide options for refined calibration that may adapt as the disease progresses. The research data collected has provided valuable usability and human–machine interface feedback prior to implementation with the vulnerable population, in particular where variations across patients’ reported symptoms may be extensive.

4.5. Impact of Think-Aloud on EMG Signal Integrity

A component that should be considered in the interpretation of our results is the nature of the think-aloud protocol. The flex controller system featured in the game is responsible for directional control in the game environment. The target muscle, the temporalis, is responsible for elevation, retraction, and side-to-side movement of the mandible [15]. These actions also occur during speech, producing electrical signals that are picked up by the electromyographic electrodes and cause unintentional character movement. Many individuals within the study experienced difficulty completing the game while verbalizing their thoughts. This may have caused other thoughts and feedback about the game to be missing from their responses. As participants were encouraged to provide a steady stream of thought, this likely compounded any frustrations that they experienced. Though a retrospective think-aloud paradigm may provide benefits in some cases [45], the intention of this usability study was to receive comprehensive feedback on the eye-tracking interface. Retrospective think-aloud studies have been shown to reduce participant feedback because of lapses in memory [46]. Retrospective think-aloud protocols may also cause participants to be more biased in their feedback, as participants can more easily conceal their thoughts [47]. The speech-related interference would likely not occur when our target population, individuals with ALS, is utilizing the gaming platform for training purposes. Future studies of usability testing in alternative wheelchair systems may be able to adapt the methodology of this trial for more optimized results. Additionally, previous work in the field suggests that concurrent think-aloud may only slow down task execution and reduce its efficacy [45], which is consistent with dual-task interference theory.
Though concurrent think-aloud was ultimately our preferred methodology, as time on task was not a priority and the immediate verbalization of working memory prevented overload as per cognitive load theory, modifications may prove useful. Specifically, adding clearly delineated operational periods in which participants work in silence, alternating with verbalization periods, may help. This technique may allow the benefits of both concurrent and retrospective think-aloud methods to come into play.

4.6. Study Limitations

Using think-aloud as a data collection tool, together with the Nielsen number, limits the number of participants by design [27]. With a larger sample size and improved statistical power, the confidence intervals would be more likely to be valid, though they currently calculate as negative. After the conclusion of usability testing, a larger validation study could rectify this and decrease the possibility of a Type II statistical error.

Another potential limitation for the results of the study lies in the selection of non-disabled participants as the sample population. As this study aims to show that an eye-tracking interface can be implemented to provide navigational control in the game, it is important to ensure that findings from our sample population can be generalized [48]. The chosen population, individuals without disabilities aged 18 to 65, was selected to ensure that there would be no confounding variables. Though many individuals with ALS, especially early in the course of the disease, may have the control of their extremities required for in-game selections, this choice may introduce an additional limitation.

The feedback from the pilot mobility research study validated the steering control potential of this new hands-free control system. Commentary from patients and caregivers focused on the challenges of learning to use the system reliably while driving in physical space. This was the driver for the stress-free gamified training system. The focus has been to refine the training software prior to working with ALS patients. Throughout the clinical research, the recurring reminder was that every difficulty the vulnerable patient group encounters because of design or implementation problems has a significant time cost for the patient. This necessitates usability and efficacy benchmarking throughout the training platform to minimize such potential burdens.

This study sought to determine whether an eye-tracking interface was as effective as traditional in-game navigation. Individuals living with disability provide insight unique to their own experiences that will benefit the usability design. The video game’s design and implementation continue through an iterative process, with playtesting and evaluation being critical to the process.

The results of this study suggest that a traditional mouse and keyboard and the eye-tracking interface are similar in usability. The results are not necessarily generalizable to the ALS patient population.

Each cohort was composed of five individuals, as past research in usability has found that the majority of issues can be identified in this manner [27]. Though other sources argue that a larger number of individuals should be included to find usability errors [49], it should be noted that this is not the first time this game has been played through. The game was played through by the game creators as well as researchers prior to administration of the study. As such, past prototypes of the game were tested iteratively prior to admittance of users in the formal usability study. It should additionally be noted that because the designers of each level were comfortable with the interface, the game was not played through in its entirety, and therefore, prior build errors were not caught. This error is common in expert reviews that are interface specific rather than task specific [50]. Further iterations are likely to be made in subsequent studies with ALS patients, where Limbitless Journey will be utilized as a training platform for the EMG–wheelchair interface.
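The rationale for five participants per cohort can be illustrated with the problem-discovery model of Nielsen and Landauer [27], in which the expected proportion of usability problems found by n participants is 1 - (1 - λ)^n. The sketch below assumes λ = 0.31, the average per-participant discovery rate commonly quoted from that work; the rate for Limbitless Journey was not measured in this study and may differ.

```python
def proportion_found(n_users, discovery_rate=0.31):
    """Expected share of usability problems found by n_users participants.

    Implements 1 - (1 - rate)^n. The default rate of 0.31 is an assumed
    average, not a value measured for this game.
    """
    return 1.0 - (1.0 - discovery_rate) ** n_users


for n in (1, 3, 5, 15):
    print(n, round(proportion_found(n), 2))
# With the assumed rate, five participants are expected to find about 0.84
# of the problems.
```

Under this assumption, returns diminish quickly after the first few participants, which is why small cohorts combined with iterative fixes are often preferred over a single large test.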

During initial testing, the eye-tracking cohort (Cohort 1) experienced software issues. These problems included freezing in the midst of gameplay and when selecting menu options during the calibration period. Buttons towards the edges of the screen were unable to be selected via the eye-tracking interface. Other software bugs included on-screen buttons being permanently selected regardless of the user’s gaze. Since these errors interfered with the user’s ability to play through the game effectively, the errors had to be addressed in order to best complete this study. As these coding errors only affected Cohort 1’s performance, Cohort 2 and 3 data were not re-collected. Errors were identified and a new version of the game was developed that minimized these bugs. Five additional participants were consented and played through the game. The data from this second set of Cohort 1 participants was used for analysis purposes and is presented in the dataset. It is important to note that the think-aloud protocol allows for this type of revision to be made. The goal of these types of procedures is to quickly identify interface errors and resolve those that obstruct effective feedback from being recorded.

5. Conclusions

Previous quantitative analysis studies have demonstrated that the serious video game Limbitless Journey is a viable method of practice to learn how to use a hands-free EMG wheelchair interface, regardless of the UI [17]. This study aimed to analyze the qualitative data in order to determine user sentiments and opinions regarding the game. This analysis examined different aspects of the game through a think-aloud protocol and determined that a completely autonomous version of Limbitless Journey was comparable to playing the game with a mouse and keyboard. The researchers anticipate that this is critical for a cohesive training experience, as the true population for the training will require those features or support from caregivers or attendants. Early testing and validation with this sample population revealed the importance of minimizing the effort and frustration required in start-up testing with a more vulnerable population. Though ALS was once believed to only impact individuals’ motor capacities, further research has shown that cognition can also be impacted [51]. Individuals with classic ALS do not always experience the frontotemporal dementia that may be associated with the disease [52]; however, this subset is one that should be considered. The iteration of the game utilized in this study was not designed to address other forms of disability, such as cognitive impairment; however, this will be considered in later versions. Frontotemporal dementia may have various disease presentations depending on the subset. Previous usability studies have been completed for individuals with frontotemporal dementia in digital spaces, with an emphasis put on simplistic design, personalization, and age-sensitive design [53]. To address such issues, modifications to Limbitless Journey’s interface will be considered, such as customization of text sizes for the display. Additionally, each level is meant to build on the difficulty of previous levels and proceeds automatically.
Adding a free-roam mode or the ability to replay levels as desired may allow more comfort for newer users, as they can remain at lower difficulty settings for longer. The Limbitless Solutions facility will continue to work on improving assistive technology products aimed at universal usability.

A previous study assessed the quantitative data from the GUESS [29] and SUS [28] measures in this study [17]. The quantitative and qualitative measures both indicate that for patients with limited control of their extremities, Limbitless Journey could provide an independent way to train the temporalis muscle for wheelchair usage purposes [17]. The think-aloud protocol provides a range of data beyond what can be discerned from quantitative methods alone, making it a beneficial method of analyzing serious training games. These data have provided valuable insights into the applicability of the game, and the findings will be implemented. Continued testing of this gaming platform can help improve dignity and independence for people who depend on caregivers because of limited mobility.

The EMG–wheelchair interface provides an additional option for individuals with ALS or those with other ambulatory disorders. As a highly modular system, the aim is to provide individuals with accessible options for navigation. As the system itself can be adapted to power wheelchairs with relative ease, this option can easily be added to individual wheelchairs when traditional wheelchair control is no longer an option. While other usability studies have been performed on novel wheelchair interfaces, a concurrent think-aloud has very rarely been included [54,55]. By utilizing traditional usability questionnaires alongside think-aloud methodology, researchers obtained more comprehensive and directed feedback. Both methodological techniques will allow increased usability of the eye-tracking interface alongside an improved user experience for Limbitless Journey.

Future research with the training platform will expand to include new groups of patients who could benefit from hands-free wheelchair controls. A new virtual/augmented reality version of the game is being developed to increase the translation of the training into a real-life environment, with augmented reality projecting the user interface over the real-world terrain. This seamless integration of the game environment and the real world will allow users to play while sitting in the EMG–wheelchair interface. Past work has shown the efficacy of virtual reality training for wheelchair skills [56]. Additionally, research has shown that, in some cases, VR training combined with AR can lead to improved training performance on real-world tasks compared to real-world training alone [57]. Implementing virtual and augmented reality options in the serious training game provides an additional option for future clinical trial participants with ALS to use the hands-free wheelchair system. Moving forward, to increase the usability of Limbitless Journey, the addition of mini-games within the primary training levels will be implemented. Additionally, the level design feedback will be implemented to improve playability.

Author Contributions

Conceptualization, P.S., M.D., A.M., M.P. and V.R.; methodology, D.G. and M.P.; software, P.S., M.D. and J.S.; validation, P.S. and A.M.; formal analysis, D.G. and M.P.; investigation, D.G., M.P. and V.R.; resources, A.M.; data curation, A.M. and V.R.; writing, D.G., M.P., V.R., P.S. and A.M.; visualization, D.G., M.P., V.R., P.S. and A.M.; supervision, A.M., J.S., M.D. and P.S.; project administration, P.S. and A.M.; funding acquisition, A.M., P.S. and M.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of the University of Central Florida (STUDY00004664, approved on 20 December 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

The authors would like to thank the interdisciplinary team members at Limbitless Solutions who have supported and contributed to this research. The researchers appreciate the philanthropic support for undergraduate research from the Paul B. Hunter & Constance D. Hunter Charitable Foundation and the Pabst Steinmetz Foundation.

Conflicts of Interest

The authors declare that they have no competing interests and want to disclose that the technology used for this research has been awarded Patent #10,426,370. Game development at Limbitless Solutions has been supported by philanthropic grants from Unity Technologies® and Epic Games®. Neither company was involved with this publication, and their funding was not used for the game development or the study presented in this article.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ALS | Amyotrophic Lateral Sclerosis |
| BCI | Brain–Computer Interface |
| CNS | Central Nervous System |
| EEG | Electroencephalography |
| EMG | Electromyography |
| UI | User Interface |

References

- Limchesing, T.J.C.; Vidad, A.J.P.; Munsayac, F.E.T.; Bugtai, N.T.; Baldovino, R.G. Application of EEG Device and BCI for the Brain-controlled Automated Wheelchair. In Proceedings of the 2021 IEEE 13th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Manila, Philippines, 28–30 November 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Felzer, T. On the Possibility of Developing a Brain-Computer Interface (BCI). 2001. Available online: https://api.semanticscholar.org/CorpusID:18261749 (accessed on 5 March 2025).

- Solea, R.; Margarit, A.; Cernega, D.; Serbencu, A. Head movement control of powered wheelchair. In Proceedings of the 2019 23rd International Conference on System Theory, Control and Computing (ICSTCC), Sinaia, Romania, 9–11 October 2019; IEEE: New York, NY, USA, 2019; pp. 632–637. [Google Scholar]

- Palumbo, A.; Ielpo, N.; Calabrese, B.; Garropoli, R.; Gramigna, V.; Ammendolia, A.; Marotta, N. An Innovative Device Based on Human-Machine Interface (HMI) for Powered Wheelchair Control for Neurodegenerative Disease: A Proof-of-Concept. Sensors 2024, 24, 4774. [Google Scholar] [CrossRef] [PubMed]

- Rash, G.S.; Quesada, P. Electromyography Fundamentals. 2002. Available online: https://people.stfx.ca/smackenz/courses/HK474/Labs/EMG%20Lab/EMGfundamentals.pdf (accessed on 13 November 2024).

- Manero, A.C.; McLinden, S.L.; Sparkman, J.; Oskarsson, B. Evaluating surface EMG control of motorized wheelchairs for amyotrophic lateral sclerosis patients. J. Neuroeng. Rehabil. 2022, 19, 88. [Google Scholar] [CrossRef]

- Soderberg, G.L. Selected Topics in Surface Electromyography for Use in the Occupational Setting: Expert Perspectives; DHHS (NIOSH) Publication Number 91-100; U.S. Department of Health and Human Services, Public Health Service, Centers for Disease Control, National Institute for Occupational Safety and Health: Washington, DC, USA, 1992.

- Zheng, M.; Crouch, M.S.; Eggleston, M.S. Surface electromyography as a natural human–machine interface: A review. IEEE Sens. J. 2022, 22, 9198–9214. [Google Scholar] [CrossRef]

- Zhang, J.; Gao, S.; Zhou, K.; Cheng, Y.; Mao, S. An online hybrid BCI combining SSVEP and EOG-based eye movements. Front. Hum. Neurosci. 2023, 17, 1103935. [Google Scholar] [CrossRef] [PubMed]

- Tan, Y.; Lin, Y.; Zang, B.; Gao, X.; Yong, Y.; Yang, J.; Li, S. An autonomous hybrid brain-computer interface system combined with eye-tracking in virtual environment. J. Neurosci. Methods 2022, 368, 109442. [Google Scholar] [CrossRef]

- Dillen, A.; Omidi, M.; Díaz, M.A.; Ghaffari, F.; Roelands, B.; Vanderborght, B.; Romain, O.; De Pauw, K. Evaluating the real-world usability of BCI control systems with augmented reality: A user study protocol. Front. Hum. Neurosci. 2024, 18, 1448584. [Google Scholar] [CrossRef] [PubMed]

- Hardiman, O.; Kiernan, M.C.; van den Berg, L.H. Amyotrophic Lateral Sclerosis. In Neurodegenerative Disorders: A Clinical Guide; Hardiman, O., Doherty, C.P., Elamin, M., Bede, P., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 145–165. [Google Scholar] [CrossRef]

- Grad, L.I.; Rouleau, G.A.; Ravits, J.; Cashman, N.R. Clinical Spectrum of Amyotrophic Lateral Sclerosis (ALS). Cold Spring Harb. Perspect. Med. 2017, 7, a024117. [Google Scholar] [CrossRef]

- Trail, M.; Nelson, N.; Van, J.N.; Appel, S.H.; Lai, E.C. Wheelchair use by patients with amyotrophic lateral sclerosis: A survey of user characteristics and selection preferences. Arch. Phys. Med. Rehabil. 2001, 82, 98–102. [Google Scholar] [CrossRef]

- Moore, K.L.; Dalley, A.F. Clinically Oriented Anatomy; Wolters Kluwer India Pvt Ltd.: Gurugram, India, 2018. [Google Scholar]

- Riera-Punet, N.; Martinez-Gomis, J.; Paipa, A.; Povedano, M.; Peraire, M. Alterations in the masticatory system in patients with amyotrophic lateral sclerosis. J. Oral Facial Pain Headache 2018, 32, 84–90. [Google Scholar] [CrossRef]

- Smith, P.; Dombrowski, M.; MacDonald, C.; Williams, C.; Pradeep, M.; Barnum, E.; Rivera, V.P.; Sparkman, J.; Manero, A. Initial Evaluation of a Hybrid eye tracking and Electromyography Training Game for Hands-Free Wheelchair Use. In Proceedings of the 2024 Symposium on Eye Tracking Research and Applications, Glasgow, UK, 4–7 June 2024; pp. 1–8. [Google Scholar]

- Susi, T.; Johannesson, M.; Backlund, P. Serious Games: An Overview. 2007. Available online: https://www.diva-portal.org/smash/get/diva2:2416/fulltext01.pdf (accessed on 5 March 2025).

- Prahm, C.; Eckstein, K.; Bressler, M.; Wang, Z.; Li, X.; Suzuki, T.; Daigeler, A.; Kolbenschlag, J.; Kuzuoka, H. PhantomAR: Gamified mixed reality system for alleviating phantom limb pain in upper limb amputees—Design, implementation, and clinical usability evaluation. J. Neuroeng. Rehabil. 2025, 22, 21. [Google Scholar] [CrossRef]

- Sánchez-Gil, J.J.; Sáez-Manzano, A.; López-Luque, R.; Ochoa-Sepúlveda, J.J.; Cañete-Carmona, E. Gamified devices for stroke rehabilitation: A systematic review. Comput. Methods Programs Biomed. 2024, 258, 108476. [Google Scholar] [CrossRef]

- Prahm, C.; Kayali, F.; Sturma, A.; Aszmann, O. PlayBionic: Game-Based Interventions to Encourage Patient Engagement and Performance in Prosthetic Motor Rehabilitation. PM R 2018, 10, 1252–1260. [Google Scholar] [CrossRef] [PubMed]

- Thomas, N.J.; Baral, R. Mechanism of gamification: Role of flow in the behavioral and emotional pathways of engagement in management education. Int. J. Manag. Educ. 2023, 21, 100718. [Google Scholar] [CrossRef]

- Smith, P.A.; Dombrowski, M. Designing games to help train children to use Prosthetic Arms. In Proceedings of the 2017 IEEE 5th International Conference on Serious Games and Applications for Health (SeGAH), Perth, Australia, 2–4 April 2017; pp. 1–4. [Google Scholar] [CrossRef]

- McLinden, S.; Smith, P.; Dombrowski, M.; MacDonald, C.; Lynn, D.; Tran, K.; Robinson, K.; Courbin, D.; Sparkman, J.; Manero, A. Utilizing Electromyographic Video Games Controllers to Improve Outcomes for Prosthesis Users. Appl. Psychophysiol. Biofeedback 2024, 49, 63–69. [Google Scholar] [CrossRef]

- Wäppling, A.; Walchshofer, L.; Lewin, R. What Makes a Cozy Game? A Study of Three Games Considered Cozy. Bachelor’s Thesis, Uppsala University, Uppsala, Sweden, 2022. [Google Scholar]

- Csikszentmihalyi, M.; Csikzentmihaly, M. Flow: The Psychology of Optimal Experience; Harper & Row: New York, NY, USA, 1990; Volume 1990. [Google Scholar]

- Nielsen, J.; Landauer, T.K. A mathematical model of the finding of usability problems. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems—CHI’93, Amsterdam, The Netherlands, 24–29 April 1993; pp. 206–213. [Google Scholar] [CrossRef]

- Bangor, A.; Kortum, P.T.; Miller, J.T. An Empirical Evaluation of the System Usability Scale. Int. J. Hum. Comput. Interact. 2008, 24, 574–594. [Google Scholar] [CrossRef]

- Phan, M.H.; Keebler, J.R.; Chaparro, B.S. The development and validation of the game user experience satisfaction scale (GUESS). Hum. Factors 2016, 58, 1217–1247. [Google Scholar] [CrossRef] [PubMed]

- Prokop, M.; Pilař, L.; Tichá, I. Impact of Think-Aloud on Eye-Tracking: A Comparison of Concurrent and Retrospective Think-Aloud for Research on Decision-Making in the Game Environment. Sensors 2020, 20, 2750. [Google Scholar] [CrossRef]

- Johnson, W.; Artino, A.; Durning, S. Using the think aloud protocol in health professions education: An interview method for exploring thought processes: AMEE Guide No. 151. Med. Teach. 2022, 45, 937–948. [Google Scholar] [CrossRef] [PubMed]

- Fox, M.C.; Ericsson, K.A.; Best, R. Do Procedures for Verbal Reporting of Thinking Have to Be Reactive? A Meta-Analysis and Recommendations for Best Reporting Methods. Psychol. Bull. 2011, 137, 316–344. [Google Scholar] [CrossRef]

- Bellini-Leite, S.C. Dual process theory: Embodied and predictive; symbolic and classical. Front. Psychol. 2022, 13, 805386. [Google Scholar] [CrossRef]

- Park, B.; Korbach, A.; Brünken, R. Does thinking-aloud affect learning, visual information processing and cognitive load when learning with seductive details as expected from self-regulation perspective? Comput. Hum. Behav. 2020, 111, 106411. [Google Scholar] [CrossRef]

- Zoom. 2011. Available online: https://www.zoom.com/ (accessed on 12 February 2025).

- Temi. 2017. Available online: https://www.temi.com/ (accessed on 12 February 2025).

- Muhr, T. ATLAS. ti 6.0, version 6; ATLAS. ti Scientific Software Development GmbH: Berlin, Germany, 2004.

- Cooke, L. Assessing concurrent think-aloud protocol as a usability test method: A technical communication approach. IEEE Trans. Prof. Commun. 2010, 53, 202–215. [Google Scholar] [CrossRef]

- Ñañez-Silva, M.V.; Quispe-Calderón, J.C.; Huallpa-Quispe, P.M.; Larico-Quispe, B.N. Analysis of academic research data with the use of ATLAS. ti. Experiences of use in the area of Tourism and Hospitality Administration. Data Metadata 2024, 3, 306. [Google Scholar] [CrossRef]

- van Atteveldt, W.; van der Velden, M.A.C.G.; Boukes, M. The Validity of Sentiment Analysis: Comparing Manual Annotation, Crowd-Coding, Dictionary Approaches, and Machine Learning Algorithms. Commun. Methods Meas. 2021, 15, 121–140. [Google Scholar] [CrossRef]

- Becker, I.; Parkin, S.; Sasse, M.A. Combining qualitative coding and sentiment analysis: Deconstructing perceptions of usable security in organisations. In Proceedings of the the LASER Workshop: Learning from Authoritative Security Experiment Results (LASER 2016), Boston, MA, USA, 30 October–3 November 2016; pp. 43–53. [Google Scholar]

- Cascio, M.A.; Lee, E.; Vaudrin, N.; Freedman, D.A. A team-based approach to open coding: Considerations for creating intercoder consensus. Field Methods 2019, 31, 116–130. [Google Scholar] [CrossRef]

- Meziane, N.; Webster, J.; Attari, M.; Nimunkar, A. Dry electrodes for electrocardiography. Physiol. Meas. 2013, 34, R47. [Google Scholar] [CrossRef]

- Yoganathan, K.; Dharmadasa, T.; Northall, A.; Talbot, K.; Thompson, A.G.; Turner, M.R. Asymmetry in amyotrophic lateral sclerosis: Clinical, neuroimaging and histological observations. Brain 2025, awaf121.

- Ericsson, K.A.; Simon, H.A. Protocol Analysis: Verbal Reports as Data; EUA Massachusetts Institute of Technology: Cambridge, MA, USA, 1993.

- Van Den Haak, M.; De Jong, M.; Jan Schellens, P. Retrospective vs. concurrent think-aloud protocols: Testing the usability of an online library catalogue. Behav. Inf. Technol. 2003, 22, 339–351.

- Hoc, J.M.; Leplat, J. Evaluation of different modalities of verbalization in a sorting task. Int. J. Man Mach. Stud. 1983, 18, 283–306.

- Lu, T.; Franklin, A.L. A protocol for identifying and sampling from proxy populations. Soc. Sci. Q. 2018, 99, 1535–1546.

- Lindgaard, G.; Chattratichart, J. Usability testing: What have we overlooked? In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 1415–1424.

- Lazar, J.; Feng, J.H.; Hochheiser, H. Research Methods in Human-Computer Interaction; Morgan Kaufmann: Burlington, MA, USA, 2017.

- Phukan, J.; Pender, N.P.; Hardiman, O. Cognitive impairment in amyotrophic lateral sclerosis. Lancet Neurol. 2007, 6, 994–1003.

- Von Braunmuhl, A. Picksche Krankheit und Amyotrophische Lateralsklerose. Allg. Z. Psychiat Psych. Med. 1932, 96, 364–366.

- Chien, S.Y.; Zaslavsky, O.; Berridge, C. Technology Usability for People Living with Dementia: Concept Analysis. JMIR J. Med. Internet Res. Aging 2024, 7, e51987.

- Borges, L.R.; Naves, E.L.; Sa, A.A. Usability evaluation of an electric-powered wheelchair driven by eye tracking. Univers. Access Inf. Soc. 2022, 21, 1013–1022.

- Kutbi, M.; Du, X.; Chang, Y.; Sun, B.; Agadakos, N.; Li, H.; Hua, G.; Mordohai, P. Usability studies of an egocentric vision-based robotic wheelchair. ACM Trans. Hum. Robot. Interact. THRI 2020, 10, 1–23.

- Lam, J.F.; Gosselin, L.; Rushton, P.W. Use of virtual technology as an intervention for wheelchair skills training: A systematic review. Arch. Phys. Med. Rehabil. 2018, 99, 2313–2341.

- Cooper, N.; Millela, F.; Cant, I.; White, M.D.; Meyer, G. Transfer of training—Virtual reality training with augmented multisensory cues improves user experience during training and task performance in the real world. PLoS ONE 2021, 16, e0248225.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).