1. Introduction

Hybrid evolutionary algorithms, which integrate the advantages of multiple search strategies, have proven robust and efficient in solving complex optimization problems. When combined with generative adversarial networks (GANs), they show significant potential and have achieved notable success in fields such as advanced prototyping [1], robotic functionality enhancement [2], logistics optimization [3], and predictive maintenance [4]. In recent years, with increasing attention on sustainable optimization, researchers have begun to incorporate sustainability principles into the integration of GANs and evolutionary algorithms, aiming to reduce computational resource consumption, improve model efficiency, and balance environmental responsibility and social impact while advancing technological progress. Despite these promising prospects, practical applications of evolutionary-algorithm-based GANs still face challenges, including deformation and distortion in the generated samples and a lack of diversity that makes the outputs appear monotonous. To overcome these issues, researchers have developed improved models such as DCGAN [5], Wasserstein GAN [6], and StyleGAN [7], which significantly enhance the quality and diversity of the outputs and thus the realism and novelty of the results.

GANs are among the technologies that have advanced most significantly in industry in recent years. Existing research on GANs can be grouped into three categories: (1) objective function-based methods, (2) model structure-based methods, and (3) latent space-based methods. Objective function-based methods improve the stability of adversarial training, mitigate mode collapse and gradient vanishing, enhance the quality of generated images, and promote model convergence; examples include the relativistic standard GAN (RSGAN), the spectrally normalized GAN (SNGAN), and the least squares GAN (LSGAN). However, the design of an objective function is often problem dependent, so different tasks and datasets require different optimization strategies. Model structure-based methods exploit the decisive role that the model structure plays in GAN performance: a more complex structure can significantly enhance the expressive power of the generator and the discriminator. The generator then learns the real data distribution more effectively, which improves the quality of the generated images. A well-designed structure also helps alleviate gradient vanishing and mode collapse during training. However, complex model structures make training more computationally expensive. Latent space-based methods optimize the latent space through interpolation, transformation, and traversal, which improves the robustness of the model input and the controllability of the generated samples. The result is a more expressive latent space, which improves the quality and diversity of the generated images.

Compared with objective function-based and model structure-based methods, latent space optimization has two advantages. First, adjusting the latent vector along different dimensions or directions gives finer control over the generated samples. Second, the latent space of a GAN is independent of its other components, such as the objective function and the model structure. The latent space of GANs has therefore emerged as a pivotal research frontier, and optimizing it with evolutionary algorithms offers notable advantages. By simulating biological evolution, evolutionary algorithms systematically explore the latent space instead of relying on a single random initialization. In contrast to gradient descent, which often converges to local optima and may therefore yield samples that are not globally optimal, evolutionary algorithms search the space more broadly and can identify latent vectors that are closer to the global optimum.

This paper focuses on optimizing the latent space with evolutionary algorithms and proposes a novel hybrid evolutionary algorithm, called improved crisscross optimization (ICSO), that optimizes random latent vectors to enhance the quality and diversity of generated images. On the one hand, the quality fitness and the diversity fitness are balanced through normalization. Because the discriminator gradient affects the diversity of the generated images, a gradient penalty is also introduced to constrain it. On the other hand, ICSO extends the traditional crisscross optimization (CSO) algorithm by incorporating the local optimal solution and the global optimal solution from particle swarm optimization (PSO). The contributions of this paper are summarized as follows:

Building on the original CSO, this paper introduces a strategy that fuses the local and global optimal solutions of PSO and proposes an improved evolutionary algorithm, ICSO. The algorithm is specifically designed to optimize the latent space of GANs, thereby enhancing the quality and diversity of the generated images.

This study applies normalization to balance the quality and diversity of generated images so that both are effectively accounted for in the fitness. It also introduces a gradient penalty for the discriminator, which constrains the discriminator's gradients to improve the stability and performance of the model during adversarial training.

The rest of this paper is organized as follows:

Section 2 introduces the related work.

Section 3 describes ICSO in detail, including the objective function and the improved crisscross optimization.

Section 4 compares ICSO with its competitors in terms of generated sample quality, using mainstream architectures on different datasets. ICSO is also compared with classical GANs to verify the diversity of its generated samples, and it is applied to StyleGAN3, a mainstream network model, to validate its applicability in industry.

Section 5 presents the conclusion of this paper.

3. Method

This paper proposes the ICSO algorithm, an enhanced version of the original crisscross optimization (CSO) algorithm that primarily consists of population initialization, improved horizontal crossover (HC), and vertical crossover (VC).

3.1. Overall Framework of ICSO

ICSO builds on the original CSO, which consists of HC and VC. HC manages information exchange among different individuals; this external exchange enhances exploration and helps the population find better solutions. VC, in contrast, operates on the interactions between different dimensions within a single individual, which helps the individual escape local optima and refines the search. Because the search direction of the original CSO is largely random, CSO struggles with search efficiency and with reaching the global optimum. To address this problem, ICSO incorporates the local and global optima from PSO, using the information of these optimal solutions as perturbation factors that guide the search process. This enhancement preserves the strong global search ability of the original CSO while exploring the search space more effectively by heuristically guiding the population toward known optimal positions.

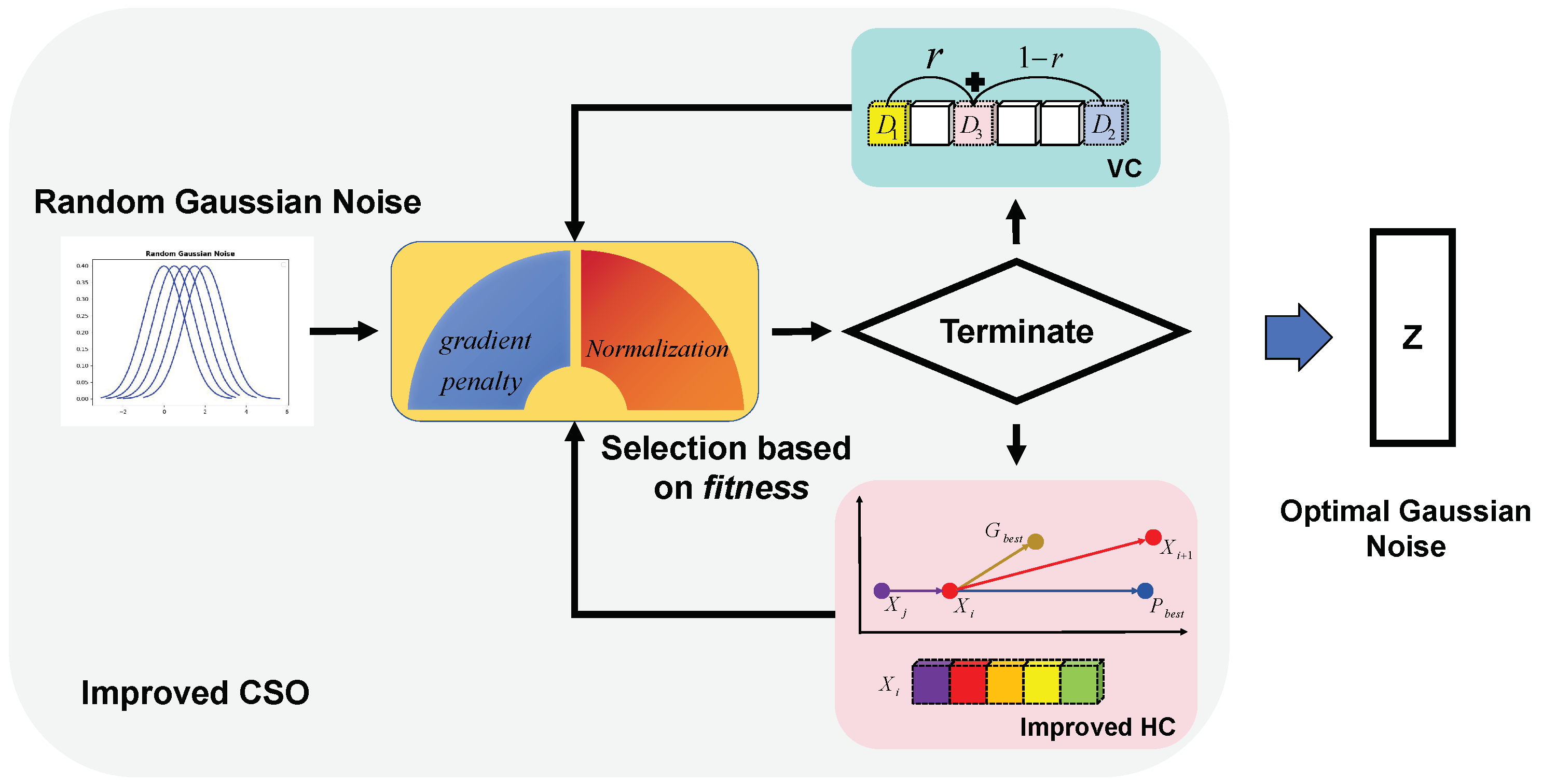

The pseudocode and architecture of ICSO are shown in Algorithm 1 and Figure 1, respectively; the overall process resembles that of traditional evolutionary algorithms. First, the population is initialized with random Gaussian noise (Line 1 in Algorithm 1 and “Random Gaussian Noise” in Figure 1). The iterative evolution then revolves around “Improved HC”, “VC”, and “Selection based on fitness”. In each iteration, the improved horizontal crossover computes new noise for each individual by crossing distinct individuals within the population, which broadens the exploration of the latent space (Line 9 in Algorithm 1 and “Improved HC” in Figure 1). The vertical crossover then performs a crossover across dimensions within the same individual, integrating information from different features (Line 17 in Algorithm 1 and “VC” in Figure 1). After either crossover operation, individuals undergo a new fitness evaluation, which updates both the local and global optimal solutions and keeps the population progressing toward the optimum (Lines 3, 10, and 18 in Algorithm 1 and “Selection based on fitness” in Figure 1). Finally, the algorithm terminates when it reaches a predefined iteration limit or satisfies a specific convergence criterion, and it outputs the optimal Gaussian noise (Line 27 in Algorithm 1 and “Optimal Gaussian Noise” in Figure 1).

Algorithm 1 The Overall Framework of ICSO

Input: the number of individuals $n$ and the maximum number of iterations $T$
Output: the optimal Gaussian noise $z^{*}$
Procedure:
1: Initialize the position $z_i$ ($i = 1, \dots, n$) of each individual using Gaussian noise
2: for $i = 1$ to $n$ do
3:     Calculate the fitness $\mathcal{F}(z_i)$ as formulated in Equation (13)
4:     Set the personal best position $p_i = z_i$
5: end for
6: Set the global best position $g = \arg\min_{p_i} \mathcal{F}(p_i)$
7: for $t = 1$ to $T$ do
8:     for $i = 1$ to $n$ do
9:         $z_i^{hc}$ = Improved Horizontal Crossover as formulated in Equation (14)
10:        $\mathcal{F}(z_i^{hc})$ = Calculate Fitness as formulated in Equation (13)
11:        if $\mathcal{F}(z_i^{hc}) < \mathcal{F}(p_i)$ then
12:            Update the personal best position $p_i = z_i^{hc}$
13:        end if
14:        if $\mathcal{F}(p_i) < \mathcal{F}(g)$ then
15:            Update the global best position $g = p_i$
16:        end if
17:        $z_i^{vc}$ = Vertical Crossover as formulated in Equation (15)
18:        $\mathcal{F}(z_i^{vc})$ = Calculate Fitness as formulated in Equation (13)
19:        if $\mathcal{F}(z_i^{vc}) < \mathcal{F}(p_i)$ then
20:            Update the personal best position $p_i = z_i^{vc}$
21:        end if
22:        if $\mathcal{F}(p_i) < \mathcal{F}(g)$ then
23:            Update the global best position $g = p_i$
24:        end if
25:    end for
26: end for
27: Set the optimal Gaussian noise $z^{*} = g$
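To make the control flow of Algorithm 1 concrete, the following is a minimal, dependency-free Python sketch; it is not the authors' implementation. The assumptions are: the fitness is any per-individual callable to be minimized (whereas the normalized Equation (13) depends on the whole population), the improved HC uses the per-dimension form $r z_i + (1-r) z_j + c_1 r (p_i - z_i) + c_2 r (g - z_i)$ with a random partner $j$, VC mixes two randomly chosen dimensions, and VC is applied with probability $t/T$. The function name `icso`, its parameters, and the toy `sphere` fitness are hypothetical.

```python
import random


def icso(fitness, n=10, dim=100, max_iter=10, c1=2.0, c2=2.0, seed=0):
    """Hypothetical sketch of Algorithm 1; returns (best noise vector, its fitness)."""
    rng = random.Random(seed)
    # Line 1: initialize every individual with standard Gaussian noise
    pop = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]
    # Lines 2-6: evaluate, then set the personal and global bests
    pbest = [z[:] for z in pop]
    pbest_fit = [fitness(z) for z in pop]
    g_idx = min(range(n), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g_idx][:], pbest_fit[g_idx]

    def select(i, cand):
        # Greedy selection: keep the offspring only if it beats individual i,
        # then refresh the personal and global bests (Lines 10-16 and 18-24).
        nonlocal gbest, gbest_fit
        f = fitness(cand)
        if f < pbest_fit[i]:
            pop[i] = cand
            pbest[i], pbest_fit[i] = cand[:], f
            if f < gbest_fit:
                gbest, gbest_fit = cand[:], f

    for t in range(1, max_iter + 1):
        p_vc = t / max_iter  # assumed VC probability schedule P = t / T
        for i in range(n):
            # Line 9: improved HC with a random partner j (assumed form of Eq. (14))
            j = rng.choice([k for k in range(n) if k != i])
            cand = []
            for d in range(dim):
                r = rng.random()
                cand.append(r * pop[i][d] + (1 - r) * pop[j][d]
                            + c1 * r * (pbest[i][d] - pop[i][d])
                            + c2 * r * (gbest[d] - pop[i][d]))
            select(i, cand)
            # Line 17: VC mixes two distinct dimensions of the same individual
            if dim > 1 and rng.random() < p_vc:
                d1, d2 = rng.sample(range(dim), 2)
                r = rng.random()
                cand = pop[i][:]
                cand[d1] = r * pop[i][d1] + (1 - r) * pop[i][d2]
                select(i, cand)
    # Line 27: the global best is returned as the optimal Gaussian noise
    return gbest, gbest_fit


def sphere(z):
    # Toy stand-in for Eq. (13): smaller is better
    return sum(v * v for v in z)


best, best_fit = icso(sphere, n=10, dim=5, max_iter=30)
print(best_fit)
```

Because selection is greedy, the returned fitness can never be worse than that of the best initial individual; in a real setting, `fitness` would wrap the generator, the discriminator, and the population-wise normalization.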

3.2. Problem Definition

The objective function of the GAN can be expressed as follows:

$$\min_{G}\max_{D} V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]$$

where $p_{data}(x)$ represents the distribution of real data and $p_z(z)$ denotes the prior distribution of the latent variable $z$. The discriminator $D$ is responsible for distinguishing between real data and data generated by the generator, and the generator $G$ is designed to create data that closely mimic the distribution of real data.

The latent variable $z$ is randomly generated during GAN training, which may lead to issues such as poor sample quality and mode collapse. The main objective of latent space optimization is to find the optimal latent variable $z$, thereby improving the quality and diversity of the samples produced by the generator. When evolutionary algorithms are used to optimize $z$, a fitness function can be defined to guide the optimization of the randomly generated latent variable:

$$\mathcal{F}(z) = \Phi\big(F_q(z),\, F_d(z),\, GP\big)$$

where $F_q$ and $F_d$ are evaluation functions that assess the quality and the diversity of generation, respectively, $GP$ is a gradient-based regularization term, and $\Phi$ combines the three terms (its concrete form is given in Equation (13)).

3.3. Population Initialization

Population initialization is a crucial first step in ICSO, in which $n$ individuals are generated to form the initial population $Z$:

$$Z = \{z_1, z_2, \dots, z_n\}$$

where each individual $z_i$ is a vector with a fixed dimensionality. A high-quality initialization not only increases the probability that the algorithm finds the global optimal solution but also reduces the risk of being trapped in local optima. The latent space of a GAN is usually sampled from a Gaussian distribution, so when an evolutionary algorithm optimizes this latent space, each individual is initialized as follows:

$$z_i = \mu + \sigma \cdot \epsilon \quad (4)$$

where $\mu$ represents the mean, i.e., the average value of the distribution:

$$\mu = \int x\, p(x)\, dx$$

where $p(x)$ denotes the probability density function. In Equation (4), $\sigma$ represents the standard deviation, which measures the dispersion of data points around the mean:

$$\sigma = \sqrt{\int (x - \mu)^2\, p(x)\, dx}$$

and $\epsilon$ represents a random value drawn from the standard normal distribution $\mathcal{N}(0, 1)$, whose probability density function is:

$$\varphi(\epsilon) = \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{\epsilon^{2}}{2}\right)$$
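A minimal Python sketch of the initialization in Equation (4), assuming $\mu = 0$ and $\sigma = 1$ (the standard Gaussian prior commonly used for GAN latent vectors) together with the population size 10 and dimension 100 listed in Section 4.2. The helper name `init_population` is hypothetical.

```python
import random
import statistics


def init_population(n, dim, mu=0.0, sigma=1.0, seed=None):
    """Eq. (4) sketch: every coordinate is mu + sigma * eps with eps ~ N(0, 1)."""
    rng = random.Random(seed)
    return [[mu + sigma * rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]


pop = init_population(10, 100, seed=42)   # 10 individuals, 100-dimensional noise
flat = [x for z in pop for x in z]
print(len(pop), len(pop[0]))              # 10 100
# The empirical mean and standard deviation should be close to mu and sigma.
print(statistics.fmean(flat), statistics.pstdev(flat))
```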

Evaluation is a key operation in an evolutionary algorithm. By measuring the fitness of individuals, evaluation provides an effective evolutionary direction for the population. This paper approaches the problem from the perspective of multi-objective optimization, focusing on two competing attributes of the generated samples: quality and diversity. On the one hand, the generated samples need to be of high quality, i.e., realistic enough to fool the discriminator. On the other hand, their diversity must be ensured so that the distribution of generated samples is broad enough to prevent mode collapse. These two attributes often conflict in practice: an excessive focus on quality may cause the model to generate samples concentrated on only a few modes, while an excessive emphasis on diversity may compromise the overall quality of the generated samples.

Following IE-GAN [15], the average output of the discriminator on images produced by the generator serves as the quality fitness function:

$$F_q = \mathbb{E}_{z}\big[D(G(z))\big]$$

where $D$ is the discriminator, $G$ is the generator, and $z$ is random Gaussian noise.

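Because the distributional distance of the generated samples cannot be evaluated directly, IE-GAN estimates diversity indirectly through the mean absolute error (MAE) between samples. The diversity fitness function is formally defined as follows:

$$F_d = \frac{1}{m k}\sum_{i=1}^{m}\sum_{j=1}^{k} \mathrm{MAE}\big(G(z_i),\, G(z_{i,j})\big)$$

where $m$ is the number of generated samples, $z_{i,j}$ is the $j$-th latent vector compared with $z_i$, and $k$ refers to the number of times that each sample is compared with other samples.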
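Both fitness terms can be sketched in plain Python as follows. The generator `G` and discriminator `D` are hypothetical toy functions standing in for trained networks, and pairing each sample with the next `k` samples (cyclically) is an assumption about how the `k` comparisons per sample are chosen.

```python
import math
import random


def quality_fitness(D, G, zs):
    """F_q sketch: average discriminator output on generated samples."""
    return sum(D(G(z)) for z in zs) / len(zs)


def diversity_fitness(G, zs, k=1):
    """F_d sketch: mean absolute error between each sample and k other samples.
    Comparing sample i with samples i+1, ..., i+k (cyclically) is an assumption."""
    xs = [G(z) for z in zs]
    m = len(xs)
    total = 0.0
    for i in range(m):
        for step in range(1, k + 1):
            a, b = xs[i], xs[(i + step) % m]
            total += sum(abs(u - v) for u, v in zip(a, b)) / len(a)
    return total / (m * k)


def G(z):
    # Hypothetical toy generator: squashes the noise into (-1, 1)
    return [math.tanh(v) for v in z]


def D(x):
    # Hypothetical toy discriminator: sigmoid "realness" score
    return 1.0 / (1.0 + math.exp(-sum(x)))


rng = random.Random(0)
zs = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(16)]
print(quality_fitness(D, G, zs), diversity_fitness(G, zs, k=3))
```

A fully collapsed generator, which maps every noise vector to the same output, yields a diversity fitness of exactly 0, which is the behavior the diversity term is meant to penalize.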

In general, balancing different objective functions with a weighted sum alone is not appropriate: $F_q$ and $F_d$ are two independent objective functions whose values sometimes differ by a factor of 10 or 100. Therefore, the $F_q$ and $F_d$ values of all individuals in the population are normalized, which brings the two fitnesses to the same order of magnitude, using min–max normalization:

$$\hat{F} = \frac{F - \min(F)}{\max(F) - \min(F)}$$

where $F$ stands for either $F_q$ or $F_d$, and $\min(\cdot)$ and $\max(\cdot)$ return the minimum and maximum values over the population, respectively.
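The population-wise min–max normalization can be sketched as below; the hypothetical fitness values show how two quantities on very different scales are mapped to the same $[0, 1]$ range. Returning zeros when all values are equal is an assumption made to avoid division by zero, since the text does not cover that case.

```python
def min_max(values):
    """Min-max normalization across the population: maps values into [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # assumption: a constant population maps to all zeros
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


f_q = [0.42, 0.47, 0.51]   # hypothetical quality fitness values
f_d = [12.0, 35.0, 80.0]   # hypothetical diversity values on a much larger scale
print(min_max(f_q))
print(min_max(f_d))
```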

Gradient-based regularization can stabilize the adversarial training of GANs and suppress mode collapse [16]. The gradient penalty constrains the gradient of the discriminator with respect to its input during training, which makes the training process more stable and smooth. It prevents exploding or vanishing gradients between the generator and the discriminator, thereby improving the quality and diversity of the generated samples. Moreover, when the generator produces realistic samples, the discriminator does not confidently reject them and is updated with small gradients; when the generator collapses to a small region, the discriminator confidently labels the collapsed points as fake and is updated with large gradients. Constraining the discriminator gradient therefore keeps the generated samples dispersed enough to avoid mode collapse. The penalty is formulated as follows:

$$GP = \mathbb{E}_{\hat{x} \sim p_{\hat{x}}}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2\Big]$$

where $\hat{x}$ is a sample drawn from the distribution $p_{\hat{x}}$, and $\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2$ computes the squared difference between the L2 norm of the gradient of the discriminator $D$ with respect to $\hat{x}$ and 1. Keeping the gradient norm close to 1 helps the discriminator satisfy the Lipschitz condition.
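The penalty can be illustrated with a dependency-free sketch under two assumptions: $\hat{x}$ is sampled uniformly on the line segment between a real and a generated sample, as in WGAN-GP [16], and the gradient is approximated by central finite differences instead of automatic differentiation (a PyTorch implementation would typically use `torch.autograd.grad`). The linear critics are hypothetical examples with known gradient norms 1 and 3.

```python
import math
import random


def numerical_grad(f, x, h=1e-5):
    """Central-difference approximation of the gradient of a scalar function f at x."""
    grad = []
    for d in range(len(x)):
        xp, xm = x[:], x[:]
        xp[d] += h
        xm[d] -= h
        grad.append((f(xp) - f(xm)) / (2 * h))
    return grad


def gradient_penalty(D, real, fake, rng):
    """Average of (||grad D(x_hat)||_2 - 1)^2 over interpolated samples x_hat."""
    total = 0.0
    for x, y in zip(real, fake):
        eps = rng.random()
        x_hat = [eps * a + (1 - eps) * b for a, b in zip(x, y)]
        norm = math.sqrt(sum(g * g for g in numerical_grad(D, x_hat)))
        total += (norm - 1.0) ** 2
    return total / len(real)


def d_lip1(x):
    # Hypothetical linear critic whose gradient norm is exactly 1
    return 0.6 * x[0] + 0.8 * x[1]


def d_steep(x):
    # Hypothetical linear critic whose gradient norm is 3
    return 3.0 * x[0]


rng = random.Random(0)
real = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(8)]
fake = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(8)]
gp_lip1 = gradient_penalty(d_lip1, real, fake, rng)
gp_steep = gradient_penalty(d_steep, real, fake, rng)
print(gp_lip1, gp_steep)   # near 0, and near (3 - 1)^2 = 4
```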

A fitness function that integrates both the quality and the diversity of generated images is designed and combined with GP to construct a multi-objective function that balances these two conflicting attributes. Normalization ensures fairness and consistency between the quality and diversity metrics, and the objective function is formulated for minimization:

$$\mathcal{F} = -\gamma\, \hat{F}_q - (1 - \gamma)\, \hat{F}_d + \lambda\, GP \quad (13)$$

where $\gamma$ is a scalar parameter that balances the contributions of $\hat{F}_q$ and $\hat{F}_d$. When $\gamma$ is close to 0, the model focuses more on $\hat{F}_d$, whereas when $\gamma$ is close to 1, it focuses more on $\hat{F}_q$. $\lambda$ is another scalar parameter that adjusts the weight of the gradient penalty term $GP$. A smaller $\mathcal{F}$ indicates that the evaluated individual has better generative performance.

3.4. Improved Crisscross Optimization

ICSO, an integration of PSO and CSO, primarily consists of improved HC and VC. Running the HC and VC operations alternately, as in the original CSO, speeds up the convergence of ICSO. In addition, ICSO introduces the local optimal search mechanism of PSO, which helps each particle quickly find the best solution in its own neighborhood. Similarly, ICSO incorporates the global optimal search mechanism of PSO, which guides the population toward better regions through information exchange between particles. This approach can accelerate the convergence of the population and help it avoid local optima [17].

HC randomly selects two particles from the population and performs crossover between their corresponding dimensions, promoting diversity and strengthening the search through information exchange among particles. Unlike the original CSO, this operator incorporates principles from PSO by introducing the local optimal solution of each individual and the global optimal solution of the population (as shown in Equation (14)). The perturbation formed by the local and global optimal solutions makes the particle search heuristic and steers the operator toward the global optimal solution. By combining the rapid convergence of the original CSO with the heuristic search strategy of PSO, this method accelerates the convergence of the population, reduces the risk of being trapped in local optima, and thereby improves the efficiency of the global search. The formula for the improved HC is as follows:

$$z_i^{hc} = r\, z_i + (1 - r)\, z_j + c_1 r\, (p_i - z_i) + c_2 r\, (g - z_i) \quad (14)$$

where $r$ is a random number in $[0, 1]$; $c_1$ is the individual learning factor, which controls the step size of the individual moving toward its personal best $p_i$; and $c_2$ is the social learning factor, which controls the step size of the individual moving toward the global best $g$. In the standard implementation of PSO, both $c_1$ and $c_2$ are commonly set to 2. $z_i$ and $z_j$ are the Gaussian noise of individuals $i$ and $j$, $p_i$ is the local optimal solution, and $g$ is the global optimal solution. The particles produced by the improved HC are compared with their parent particles, and only those with better fitness are retained for the VC operation.

Figure 2 illustrates the evolutionary trajectory of a particle during the improved HC. Operation ➀ is borrowed from the original CSO: the main part of the search space is the hypercube whose diagonal vertices are the two parent particles. To reduce unsearchable blind spots, the original CSO also searches the periphery of this hypercube with a lower probability. In this paper, we instead use the local and global optimal solutions from PSO as perturbations that replace this part of the original CSO. This mechanism exploits both the individual experience of each particle and the best experience of the population, heuristically guiding the search toward blind spots on the periphery of the hypercube and thereby enhancing the global search capability of the operation (➁ and ➂).

VC, an operation inherited from the original CSO, performs crossover between two different dimensions of the same particle to free stagnant dimensions and prevent particles from falling into local optima. The formula for VC is as follows:

$$z_i^{vc, d_1} = r\, z_i^{d_1} + (1 - r)\, z_i^{d_2}, \quad d_1 \neq d_2 \quad (15)$$

where $r$ is a random number in $[0, 1]$ and $z_i^{d}$ is the $d$-th dimension of particle $i$. As with HC, only the better particles are retained for the next iteration.

Because the number of stagnant particles grows in proportion to the number of iterations, the probability $P$, which determines how often VC is applied, increases as the iterations proceed:

$$P = \frac{t}{T}$$

where $t$ is the current iteration number and $T$ is the total number of iterations.
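The vertical crossover and its probability schedule can be sketched as follows, assuming the linear schedule $P = t/T$ and leaving out fitness-based selection to isolate the operator. The helper names and the vector with dimensions stuck at 0 (a stand-in for stagnant dimensions) are hypothetical.

```python
import random


def vc_probability(t, T):
    """Assumed schedule: the VC probability grows linearly with the iteration count."""
    return t / T


def vertical_crossover(z, rng):
    """Eq. (15) sketch: dimension d1 becomes a convex mix of dimensions d1 and d2."""
    d1, d2 = rng.sample(range(len(z)), 2)   # two distinct dimensions
    r = rng.random()
    child = z[:]
    child[d1] = r * z[d1] + (1 - r) * z[d2]
    return child, d1, d2


rng = random.Random(1)
z = [0.0, 0.0, 0.0, 3.0]   # three dimensions stuck at 0
T = 10
for t in range(1, T + 1):
    if rng.random() < vc_probability(t, T):
        z, _, _ = vertical_crossover(z, rng)
print(z)
```

Early iterations rarely trigger VC, while the last iteration triggers it with probability 1, matching the idea that stagnation becomes more likely as the search proceeds.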

As shown in Figure 3, VC removes stagnant dimensions by performing crossover among different dimensions of an individual. Once a stagnant dimension of an individual escapes from its stuck value, the improvement spreads rapidly through the whole population via HC, which in turn helps other stagnant dimensions escape local minima through VC. This crisscross operation in both the horizontal and vertical directions gives ICSO its strong global search ability on multi-modal problems with many local minima.

In each iteration, the algorithm checks whether the new position $z_i$ of the current individual is better than its historical best position $p_i$. If the objective function value at the new position is smaller, the personal best is updated as follows:

$$p_i = \begin{cases} z_i, & \mathcal{F}(z_i) < \mathcal{F}(p_i) \\ p_i, & \text{otherwise} \end{cases}$$

Similarly, the global best $g$ is the best personal best in the entire population and represents the global optimal solution within the current search space. In each iteration, the best among all individuals' $p_i$ is selected; that is,

$$g = \arg\min_{p_i \in \{p_1, \dots, p_n\}} \mathcal{F}(p_i)$$

4. Experimental Results and Analysis

In this section, ICSO is compared with some state-of-the-art algorithms to validate the quality and diversity of the generated images. In addition, we have integrated ICSO into StyleGAN3 and applied it in real industrial scenarios.

4.1. Benchmark Dataset

The CIFAR-10 dataset contains 10 classes with a total of 60,000 32 × 32 RGB images, namely bird, cat, plane, boat, car, truck, dog, deer, horse, and frog; each class contains 5000 training images and 1000 test images. The STL-10 dataset contains 10 classes with a total of 113,000 96 × 96 RGB images: 5000 training images, 8000 test images, and 100,000 unlabeled images. Because of its large unlabeled portion, STL-10 is suitable for semi-supervised and self-supervised learning research. LSUN is a large-scale image dataset comprising 10 scene categories, such as bedrooms, living rooms, and classrooms, and 20 object categories, totaling approximately 1 million labeled images with 512 × 512 resolution. LSUN-64 refers to a subset of LSUN at 64 × 64 resolution. CelebA is a large face attribute dataset containing more than 200,000 64 × 64 RGB images. It has been used for a range of face-related computer vision tasks, including facial attribute recognition, facial expression analysis, and face generation. CelebA-64 is a variant of CelebA in which the face in each image is centered and labeled with 40 attributes. ImageNet is a large image dataset containing more than 14 million images covering more than 20,000 categories. It is commonly used for classification, localization, and detection, and it is extensively employed in computer vision research and related industrial applications.

4.2. Parameter Setting

The hyperparameters of ICSO can be divided into those of the search strategy and those of the GAN. For the search strategy, the population size is 10, the maximum number of iterations is 10, the dimension of each individual (i.e., the Gaussian noise) is 100, and the horizontal crossover probability is 1. For the GAN, since the latent space studied in this paper is independent of the other components of the GAN, the model and parameter settings are consistent with [18]. The testing environment consists of PyTorch (version 1.13.1) and an Nvidia GeForce RTX 4090 GPU (Nvidia, Santa Clara, CA, USA).

4.3. Evaluation Metrics

In this study, we evaluate the performance of the generative models using the following metrics: Inception Score (IS) [19], Fréchet Inception Distance (FID) [20], Density [21], and Coverage [21]. IS measures the quality and diversity of generated images, with higher values indicating better performance. FID quantifies the similarity between the distributions of real and generated images, with lower values corresponding to higher generation quality. Density measures how densely the generated samples lie within the neighborhoods of real samples, reflecting fidelity without rewarding unrealistic samples, while Coverage measures the proportion of real samples whose neighborhoods contain at least one generated sample, reflecting how much of the real data manifold is captured. Additionally, we report computational efficiency as the number of GPU days required to reach convergence.
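For reference, Density and Coverage can be computed from k-nearest-neighbor balls around the real samples, following the definitions in [21]. The sketch below works on raw 2D points for readability, whereas in practice both metrics are computed on feature embeddings from a pretrained network; the choice $k = 5$, the strict inequality for ball membership, and the toy points are assumptions.

```python
import math


def knn_radii(real, k):
    """Distance from each real sample to its k-th nearest other real sample."""
    radii = []
    for i, x in enumerate(real):
        dists = sorted(math.dist(x, y) for j, y in enumerate(real) if j != i)
        radii.append(dists[k - 1])
    return radii


def density_coverage(real, fake, k=5):
    radii = knn_radii(real, k)
    # Density: number of real k-NN balls containing each fake sample, averaged and divided by k
    hits = sum(1 for y in fake for x, r in zip(real, radii) if math.dist(x, y) < r)
    density = hits / (k * len(fake))
    # Coverage: fraction of real samples whose k-NN ball contains at least one fake sample
    covered = sum(1 for x, r in zip(real, radii) if any(math.dist(x, y) < r for y in fake))
    coverage = covered / len(real)
    return density, coverage


real = [[float(i), float(j)] for i in range(4) for j in range(4)]   # 4 x 4 grid
print(density_coverage(real, real, k=5))          # generated == real: coverage 1.0
print(density_coverage(real, [[100.0, 100.0]]))   # (0.0, 0.0)
```

A single far-away sample lands in no neighborhood, so both metrics drop to zero; copying the real set gives full coverage because every real sample has a generated sample at distance 0.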

4.4. Generative Performance

As shown in Table 1, the experiments evaluate ICSO for latent space optimization through comprehensive comparisons with random Gaussian noise (Baseline) and AdvLatGAN [18] (iterative fast gradient sign method: I-FGSM) across various classic GAN architectures, including DCGAN [22], WGAN [23], WGAN-GP [16], SNGAN [24], LSGAN [25], WGAN-div [26], and ACGAN [27]. The experiments cover multiple benchmark datasets, from CIFAR-10 and STL-10 to LSUN-64/128, CelebA-64/128, and ImageNet, and ICSO performs consistently well on datasets of different scales and complexities. Specifically, on the CIFAR-10 and STL-10 datasets, ICSO achieves higher IS across multiple GANs, indicating better quality and diversity of the generated images, and its lower FID further confirms the authenticity and detail fidelity of the generated images. On more complex datasets such as LSUN-64/128, CelebA-64/128, and ImageNet, ICSO uses its evolutionary strategy to explore the latent space more globally and generates high-quality samples. ICSO shows strong optimization capability both in relatively simple architectures such as DCGAN and in classic GANs that employ more complex training mechanisms to improve stability and image quality, such as WGAN-GP and WGAN-div. However, when applied to SNGAN on the CIFAR-10, STL-10, and CelebA-64 datasets, ICSO does not perform as well as AdvLatGAN. We attribute this to SNGAN's use of spectral normalization to stabilize training, which provides more stable gradients that particularly benefit gradient descent-based methods such as the I-FGSM used by AdvLatGAN. Overall, the consistent performance across datasets and model architectures demonstrates the scalability of ICSO: it applies to both small-scale and large-scale datasets and to different foundational model architectures, providing a new approach to latent space optimization in GANs.

4.5. Mode Collapse

When evaluating the diversity of GANs, 2D Gaussian mixture distributions, typically the 8-Gaussian and 25-Gaussian mixtures, can visually reveal mode collapse. Mode collapse can be observed directly in kernel density estimation (KDE) plots, with side plots reflecting the probability distribution. To verify the effectiveness of ICSO, ICSO-GAN is compared with five classical GANs, i.e., GAN [28], NS-GAN [28], LS-GAN, E-GAN [29], and IE-GAN. For fairness, all GANs are trained with the same three-layer MLP architecture, and all results except those of ICSO-GAN are taken from [15]. As depicted in Figure 4, all classical GANs miss some modes on the two synthetic datasets. Taking the 8-Gaussian mixture as an example, GAN produces seven modes, NS-GAN produces six, and LS-GAN and E-GAN produce very few, indicating that they suffer from mode collapse. In contrast, ICSO-GAN learns all modes of the Gaussian mixture distribution, although some modes are weakly covered. Similar results are observed on the 25-Gaussian mixture. Compared with most classical GANs, ICSO-GAN has clear advantages, which suggests that our evolutionary strategy can effectively suppress mode collapse. This is corroborated by the side plots, which show that the probability distribution of ICSO-GAN is closest to that of the target dataset.
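The synthetic benchmark and a simple mode count can be sketched as follows. The circle radius of 2 and standard deviation of 0.02 are common choices for the 8-Gaussian mixture but are assumptions here, as is the rule that a mode counts as covered when a sample lands within three standard deviations of its center; the helper names are hypothetical.

```python
import math
import random


def eight_gaussians(n, radius=2.0, std=0.02, seed=0):
    """Sample n 2D points from 8 equally weighted Gaussians placed evenly on a circle."""
    rng = random.Random(seed)
    centers = [(radius * math.cos(2 * math.pi * k / 8), radius * math.sin(2 * math.pi * k / 8))
               for k in range(8)]
    points = []
    for _ in range(n):
        cx, cy = rng.choice(centers)
        points.append((rng.gauss(cx, std), rng.gauss(cy, std)))
    return centers, points


def covered_modes(points, centers, std, min_count=1):
    """Count modes that receive at least min_count samples within 3 std of their center."""
    counts = [0] * len(centers)
    for p in points:
        k = min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
        if math.dist(p, centers[k]) <= 3 * std:
            counts[k] += 1
    return sum(c >= min_count for c in counts)


centers, real_pts = eight_gaussians(2000)
print(covered_modes(real_pts, centers, std=0.02))    # 8 for samples of the target itself
collapsed = [centers[0]] * 50                        # a fully collapsed "generator"
print(covered_modes(collapsed, centers, std=0.02))   # 1
```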

4.6. Ablation Study and Analysis

Comparison with popular evolutionary algorithms: To demonstrate the advantages of ICSO, we select several well-recognized and widely applied optimization algorithms, including PSO [30], DE [31], CSO, BOA [32], and WOA [33], and systematically compare them with the proposed ICSO using the WGAN-GP framework on the CIFAR-10 dataset. In addition to IS and FID, the experiment reports Density and Coverage, which evaluate the quality and diversity of generated images from two perspectives: the degree of overlap between generated and real samples in the feature space, and the coverage of the real data. The comparison in Table 2 shows that ICSO outperforms the other evolutionary algorithms on multiple key metrics. Both DE and CSO generate new offspring through information exchange among individuals; however, relying solely on intra-population evolution often fails to maintain population diversity, which makes these algorithms prone to local optima. BOA and WOA both use a two-stage mechanism of global exploration followed by local exploitation to generate new candidate solutions; nevertheless, without mutation operators, they may suffer from limited population diversity and premature convergence. In contrast, ICSO incorporates the evolutionary mechanism of PSO, which allows it to track the global optimal solution more precisely and efficiently during evolution rather than simply performing crossover among individuals. In terms of overall efficiency, ICSO also performs well. Although its two-stage operation leads to a longer runtime than PSO and DE, the performance gain outweighs the additional computational cost, and ICSO runs faster than CSO, BOA, and WOA. Unlike CSO, which relies solely on cross-dimensional crossover to escape local optima, and BOA and WOA, which combine global and local search in two stages, ICSO integrates a global best mechanism into the evolutionary process. This guides the population more accurately toward the global optimal solution, improving both convergence speed and search quality.

Comparison with different objective functions: Comparing objective functions that combine different perspectives shows how the evolutionary process of ICSO can be guided more precisely to improve GAN performance. We conduct experiments similar to those above, using WGAN-GP on CIFAR-10 with IS, FID, Density, and Coverage as the evaluation metrics. Table 3 shows that ICSO is competitive across these metrics. Specifically, ICSO achieves a higher IS, indicating that its generated images are both realistic and diverse, and a lower FID, indicating high consistency between its generated samples and the real data distribution. ICSO also performs well in terms of Density and Coverage: it generates dense sample points within the real data distribution while covering a broader range of it, which avoids mode collapse. In summary, by enhancing the quality, diversity, and coverage of the generated samples, ICSO outperforms the other compared objective functions in optimizing GANs.

4.7. Industrial Application of StyleGAN3 with ICSO

To evaluate the performance and applicability of ICSO in industrial settings, we integrate it into the StyleGAN3 [34] network architecture, which is well suited to industrial use, and apply it to the inspection of traditional electric poles. This addresses challenges brought by grid expansion, such as the growing demand for inspection personnel and the misjudgments or omissions caused by extended inspection cycles. Applying ICSO to power line inspection, a typical industrial scenario, not only validates its effectiveness in enhancing infrastructure resilience but also demonstrates its potential for making inspection tasks more automated and intelligent. At the same time, the generative AI capabilities of this method give traditional industrial systems new means of data generation and augmentation, supporting industrial digital transformation. Especially in the current context, which emphasizes green development, low carbon emissions, and efficient resource utilization, ICSO can generate large-scale, high-quality image data at a relatively low real-world acquisition cost. This offers a feasible pathway toward building data reuse mechanisms, alleviating data acquisition bottlenecks, and achieving sustainable operations [35,36].

By employing ICSO-GAN to generate images of electric poles captured by an unmanned aerial vehicle (UAV), we aim to construct a dataset specifically for the automated inspection of electric poles. The experiment uses the stylegan3-r-ffhqu-256x256 model as the pre-trained model and trains it for an additional 5000 kimg. The original dataset contains 1000 images of a single category (electric poles). To assess the proposed algorithm, we compare it with random Gaussian noise and with a latent space optimized by I-FGSM in StyleGAN3. The evaluation metrics are fid50k_full (FID against the full dataset), kid50k_full (Kernel Inception Distance [37] against the full dataset), pr50k3_full (precision and recall [38] against the full dataset, i.e., pr50k3_full_precision and pr50k3_full_recall), ppl2_wend (perceptual path length [39] in latent space, endpoints, full image), eqt50k_int (equivariance [34] w.r.t. integer translation, EQ-T), eqt50k_frac (equivariance [34] w.r.t. fractional translation, EQ-Tfrac), and eqr50k (equivariance [34] w.r.t. rotation, EQ-R).
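Both fid50k_full and kid50k_full measure a distance between feature statistics of real and generated images. The sketch below shows the underlying formulas on pre-extracted feature arrays: FID fits a Gaussian to each set and computes ||mu_r - mu_f||^2 + Tr(S_r + S_f - 2 (S_r S_f)^(1/2)), and KID is an unbiased squared-MMD estimate with the cubic polynomial kernel k(x, y) = (x.y / d + 1)^3. This is an illustration under stated assumptions, not the StyleGAN3 metric code: the official metrics use Inception-v3 features of 50k generated images, and KID is averaged over random subsets, which this single-estimate version omits.

```python
import numpy as np


def _sqrtm_psd(m):
    """Matrix square root of a symmetric positive semi-definite matrix via eigh."""
    vals, vecs = np.linalg.eigh(m)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def fid(feat_real, feat_fake):
    """Frechet distance between Gaussians fitted to two feature sets (rows are samples)."""
    mu_r, mu_f = feat_real.mean(axis=0), feat_fake.mean(axis=0)
    cov_r = np.cov(feat_real, rowvar=False)
    cov_f = np.cov(feat_fake, rowvar=False)
    # Tr((S_r S_f)^(1/2)) equals the sum of square roots of the eigenvalues of
    # S_r^(1/2) S_f S_r^(1/2), which is symmetric PSD and numerically stable.
    s = _sqrtm_psd(cov_r)
    eig = np.linalg.eigvalsh(s @ cov_f @ s)
    tr_sqrt = np.sum(np.sqrt(np.clip(eig, 0.0, None)))
    mean_term = np.sum((mu_r - mu_f) ** 2)
    return float(mean_term + np.trace(cov_r) + np.trace(cov_f) - 2.0 * tr_sqrt)


def kid(feat_real, feat_fake):
    """Unbiased squared MMD with the cubic polynomial kernel (x.y / d + 1)^3."""
    d = feat_real.shape[1]
    k_rr = (feat_real @ feat_real.T / d + 1.0) ** 3
    k_ff = (feat_fake @ feat_fake.T / d + 1.0) ** 3
    k_rf = (feat_real @ feat_fake.T / d + 1.0) ** 3
    m, n = len(feat_real), len(feat_fake)
    # Drop the diagonal (self-similarity) terms to make the estimate unbiased.
    term_rr = (k_rr.sum() - np.trace(k_rr)) / (m * (m - 1))
    term_ff = (k_ff.sum() - np.trace(k_ff)) / (n * (n - 1))
    return float(term_rr + term_ff - 2.0 * k_rf.mean())
```

Lower is better for both. Because the KID estimate is unbiased, it can be slightly negative when the two feature sets come from the same distribution, whereas FID is biased upward for small sample sizes, which is one reason the full-dataset (50k) variants are reported.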

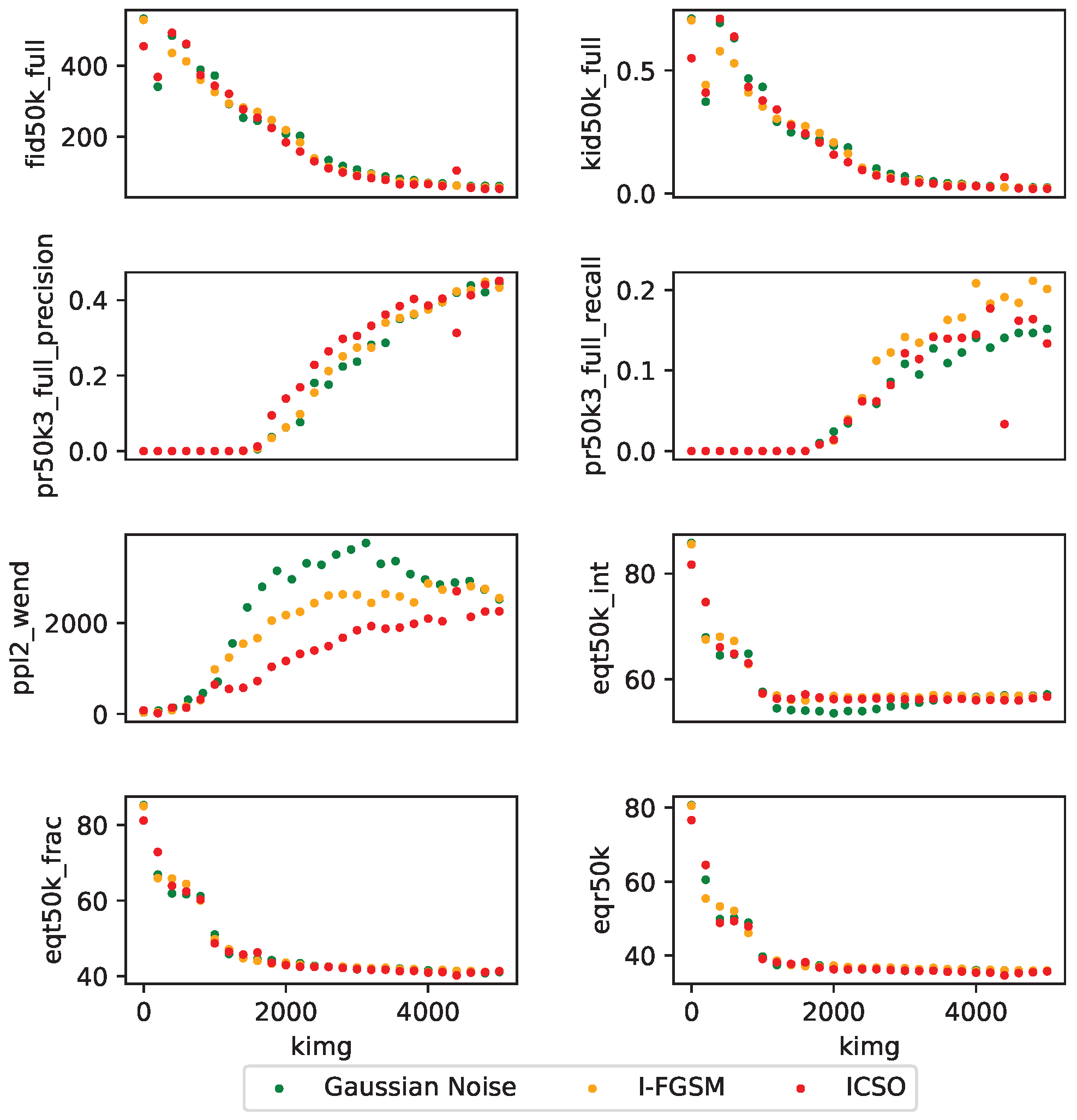

Quantitative comparison: As can be seen from Figure 5 and Table 4, ICSO achieves superior performance across multiple evaluation metrics. Specifically, ICSO achieves the best results on fid50k_full, kid50k_full, pr50k3_full_precision, eqt50k_int, and eqr50k. These metrics cover several aspects of generative models, including image quality (FID and KID), precision, and equivariance to integer translation and rotation. Although ICSO is slightly inferior to other methods on some metrics (pr50k3_full_recall, ppl2_wend, and eqt50k_frac), it remains competitive on most metrics overall. This suggests that ICSO is robust and effective in handling complex tasks.

Qualitative results: StyleGAN3 generates random images from a latent space that has been optimized by each algorithm. As shown in Figure 6, the images generated with the other algorithms are more distorted than those generated with ICSO. Because ICSO adds the global-best and local-best guidance mechanisms of PSO to the original CSO algorithm, it consistently steers the evolution of the population toward the global optimum instead of relying solely on heuristic iterations.

5. Conclusions

This paper proposes the improved crisscross optimization (ICSO) algorithm for optimizing the latent space of GAN. In the algorithm, normalization is employed to balance the orders of magnitude of two independent attributes, quality and diversity, in the objective function. The gradient of the discriminator is then constrained by adding a gradient regularization term (i.e., GP) to the discriminator's loss function, which helps avoid problems such as mode collapse and exploding gradients during GAN training. By combining the local and global search mechanisms of PSO with the rapid convergence of the original crisscross optimization, ICSO effectively directs the evolution of the population toward the optimal individuals. The experiments show that the proposed algorithm has significant advantages in the quality and diversity of the generated images and shows promise for industrial applications, specifically UAV image generation.
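The normalization step summarized above can be sketched as follows. The section does not specify the normalization scheme or how the two terms are combined, so min-max scaling over the current population, the weight `alpha`, and the function names are assumptions, and the scores in the example are made-up toy values rather than results. The point is that when diversity scores are in the hundreds and quality scores lie in [0, 1], an unnormalized sum is dominated by diversity.

```python
import numpy as np


def minmax(v, eps=1e-12):
    """Rescale one attribute's scores to [0, 1] across the current population."""
    v = np.asarray(v, dtype=float)
    span = v.max() - v.min()
    if span < eps:
        return np.zeros_like(v)  # all candidates tie on this attribute
    return (v - v.min()) / span


def normalized_fitness(quality, diversity, alpha=0.5):
    """Hypothetical combined objective: weighted sum of normalized quality and diversity."""
    return alpha * minmax(quality) + (1.0 - alpha) * minmax(diversity)


# Toy scores for three candidate latent vectors (illustrative values only).
quality = np.array([0.62, 0.70, 0.55])
diversity = np.array([250.0, 300.0, 400.0])
raw_best = int(np.argmax(quality + diversity))  # the diversity scale decides the winner
balanced_best = int(np.argmax(normalized_fitness(quality, diversity)))
```

With these toy scores, the raw sum simply picks the most diverse candidate, while the normalized objective prefers the candidate that scores highest on quality and moderately on diversity.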

In the future, we will study how to leverage attention mechanisms, wavelet analysis, and other technologies to explore the impact of latent space on the generation performance of GAN in greater depth.