Exploiting Frequency Characteristics for Boosting the Invisibility of Adversarial Attacks

Abstract

1. Introduction

- We propose an invisible adversarial attack method based on Fourier analysis. Adversarial perturbations are both derived and superimposed in the frequency domain, which avoids the drawbacks that norm constraints impose on adversarial attacks.

- We propose a new optimization objective for adversarial attacks in the Fourier domain that jointly optimizes an adversarial loss and a frequency loss. Previous works mainly generate adversarial examples in the spatial domain and constrain the perturbation strength with a norm, which is insufficient to make adversarial examples invisible. Our analysis of the Fourier-domain characteristics of adversarial examples offers a new perspective for further research.

- We have conducted extensive experiments to evaluate the proposed method and compare it with existing transferable adversarial attack methods. The results show that the proposed method significantly enhances the invisibility of adversarial attacks.
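The idea behind the first two contributions can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the squared-magnitude form of the frequency loss, and the `weight` parameter are assumptions made for illustration, and the adversarial loss is passed in as a placeholder callable because the paper's loss definitions are not reproduced in this text.

```python
import numpy as np


def apply_fourier_perturbation(image, delta_freq):
    """Add a complex perturbation to the 2-D spectrum of `image`.

    `image` is an H x W x C float array in [0, 1]; `delta_freq` is a complex
    array of the same shape (hypothetical parameterization).
    """
    spectrum = np.fft.fft2(image, axes=(0, 1))
    # Return to the spatial domain; the imaginary part is discarded.
    perturbed = np.fft.ifft2(spectrum + delta_freq, axes=(0, 1)).real
    return np.clip(perturbed, 0.0, 1.0)


def frequency_loss(image, adv_image):
    """Mean squared magnitude of the spectral difference (assumed form)."""
    diff = np.fft.fft2(adv_image, axes=(0, 1)) - np.fft.fft2(image, axes=(0, 1))
    return float(np.mean(np.abs(diff) ** 2))


def joint_objective(image, delta_freq, adversarial_loss, weight=1.0):
    """Adversarial loss plus a weighted frequency loss (assumed combination)."""
    adv_image = apply_fourier_perturbation(image, delta_freq)
    return adversarial_loss(adv_image) + weight * frequency_loss(image, adv_image)
```

In practice `adversarial_loss` would wrap a classifier and the objective would be minimized with an autodiff framework; both are left out here to keep the sketch self-contained.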

2. Related Works

2.1. Black-Box Adversarial Attacks

2.2. Frequency Principle of Adversarial Examples

3. Methodology

3.1. Preliminaries

3.1.1. Adversarial Attack

3.1.2. Frequency Domain Robustness

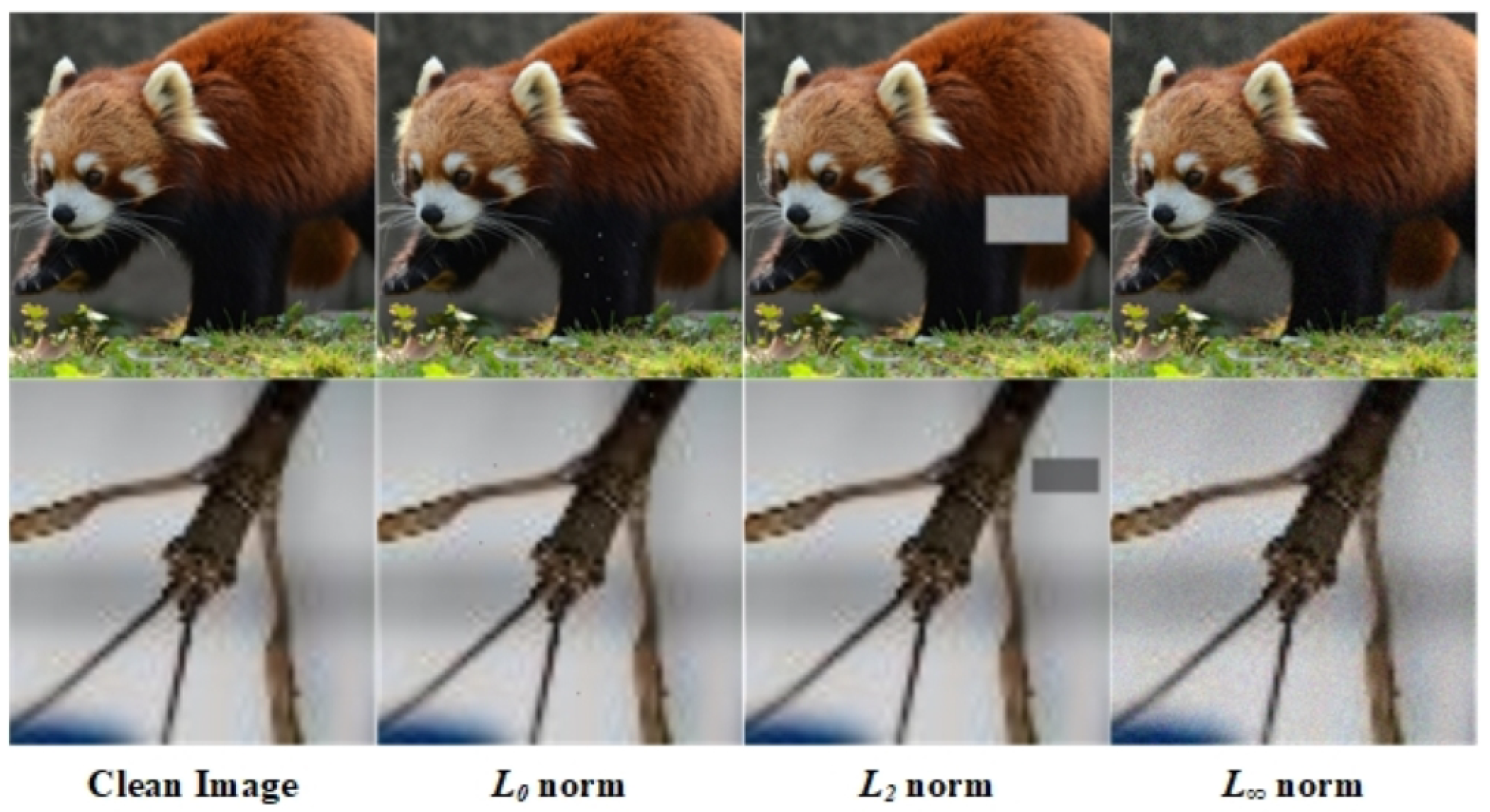

3.2. Motivation

3.3. Fourier Domain Analysis

3.4. Attack Algorithm

Algorithm 1. Fourier invisible adversarial attack.

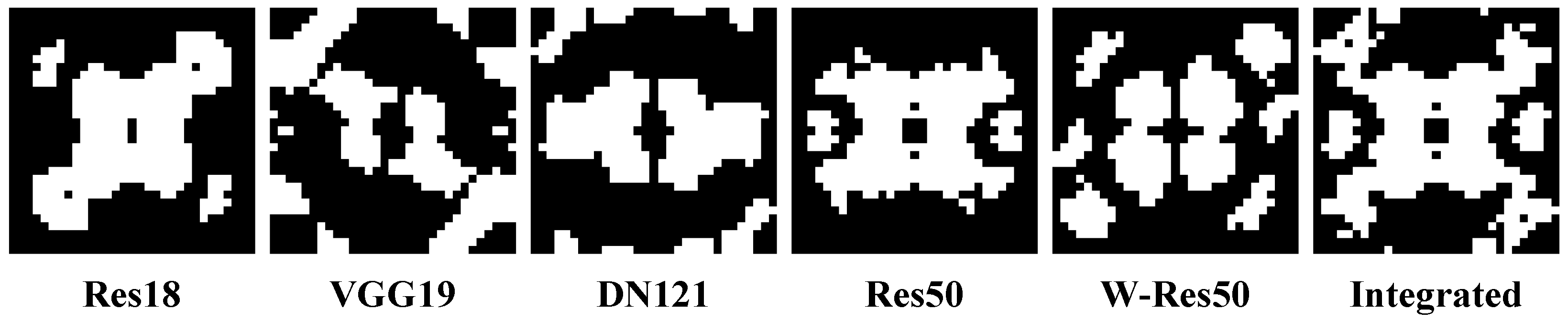

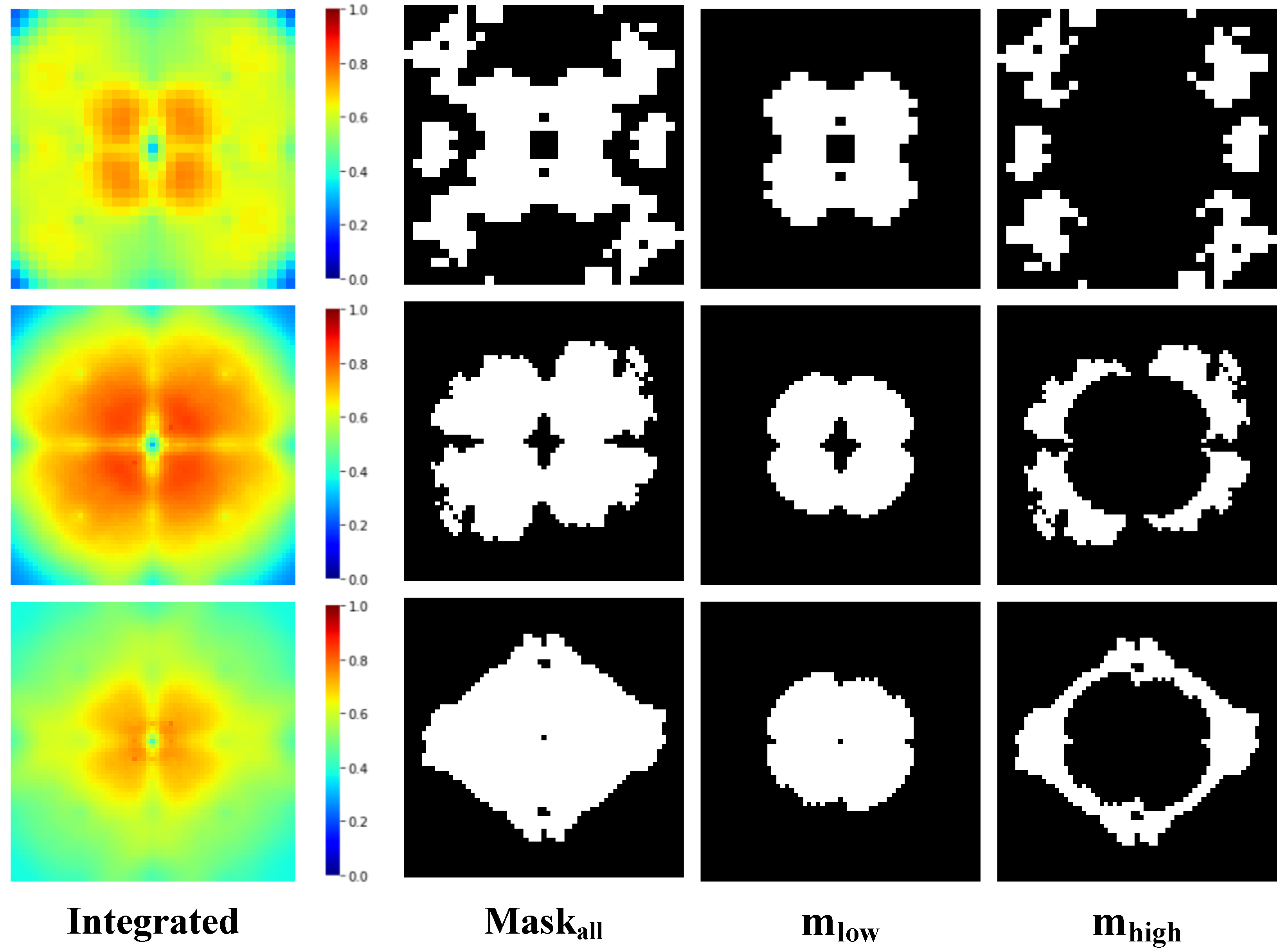

3.5. Cluster Analysis

3.6. Frequency Domain Loss

4. Experimental Results and Analysis

4.1. Experiment Setup

4.1.1. Datasets

4.1.2. Backbone Network

4.1.3. Comparative Methods

4.1.4. Parameter Settings

4.1.5. Attack Scenarios

4.1.6. Evaluation Metrics

4.2. Evaluation of Image Quality

4.2.1. Tiny-ImageNet

4.2.2. CIFAR100 and CIFAR10

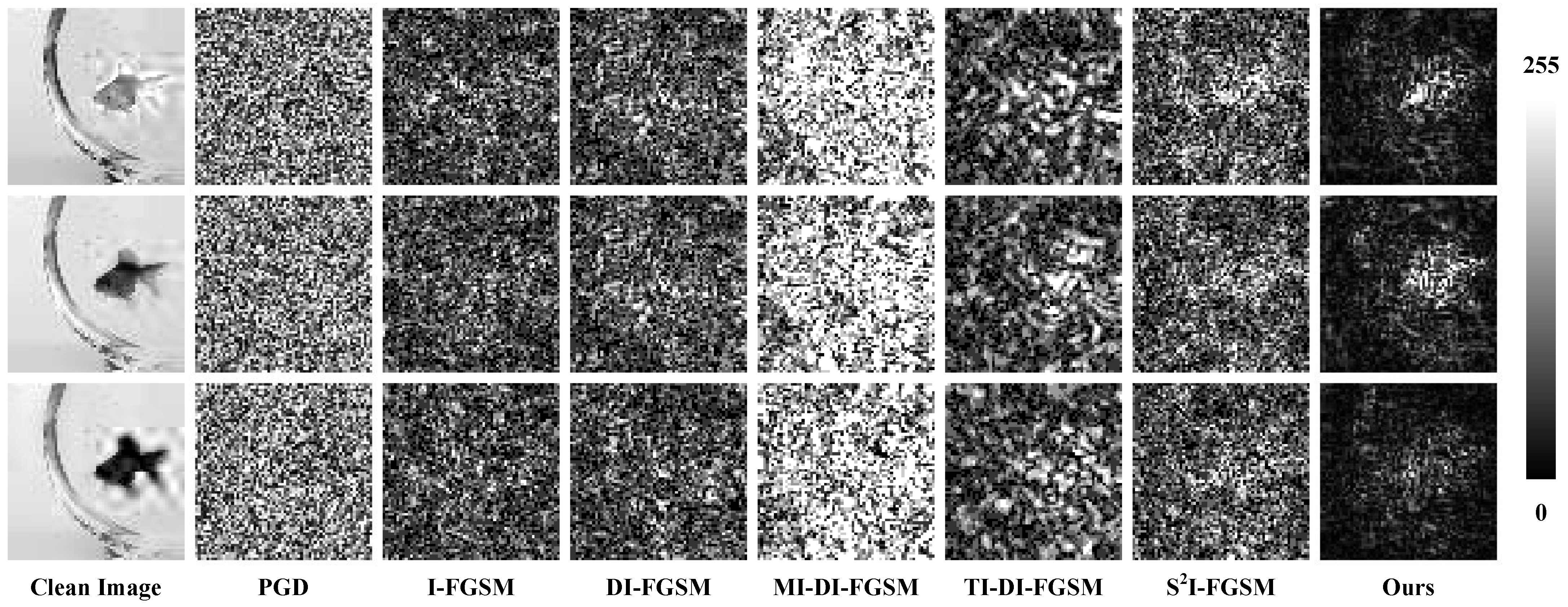

4.3. Visualization Analysis

4.3.1. Detail Comparison

4.3.2. Difference Analysis

4.4. Evaluation of Transferability

4.5. Ablation Study

4.5.1. Quantitative Analysis in the Frequency Domain

4.5.2. Fourier Perturbation Hotspot Map

5. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Settings | Methods | L2(↓) | PSNR(↑) | SSIM(↑) | VIF(↑) | LPIPS(↓) | RMSE(↓) | UQI(↑) | ERGAS(↓) |
|---|---|---|---|---|---|---|---|---|---|
| Targeted Res50 | PGD | 3.970 | 28.90 | 0.9219 | 0.4775 | 0.0873 | 0.0359 | 0.9808 | 5477.7 |
| | I-FGSM | 2.403 | 33.29 | 0.9619 | 0.6087 | 0.101 | 0.0217 | 0.9933 | 3551.9 |
| | DI-FGSM | 2.779 | 32.03 | 0.9617 | 0.5727 | 0.1139 | 0.0251 | 0.9888 | 3889.6 |
| | TI-DI-FGSM | 2.728 | 32.19 | 0.9577 | 0.5783 | 0.1091 | 0.0246 | 0.9902 | 4020.1 |
| | MI-DI-FGSM | 3.517 | 29.97 | 0.9309 | 0.5011 | 0.1765 | 0.0317 | 0.9863 | 5145.7 |
| | -FGSM | 3.043 | 31.24 | 0.9572 | 0.5444 | 0.1222 | 0.0275 | 0.9885 | 4170.3 |
| | Ours | 1.169 | 39.60 | 0.9902 | 0.7894 | 0.0478 | 0.0105 | 0.9991 | 1375.8 |
| Targeted VGG19 | PGD | 3.973 | 28.91 | 0.922 | 0.4781 | 0.0946 | 0.0358 | 0.9809 | 5467.3 |
| | I-FGSM | 2.299 | 33.68 | 0.9709 | 0.6273 | 0.1015 | 0.0207 | 0.9923 | 3209.3 |
| | DI-FGSM | 2.593 | 32.64 | 0.9655 | 0.5917 | 0.1258 | 0.0234 | 0.9907 | 3588.3 |
| | TI-DI-FGSM | 2.664 | 32.40 | 0.9651 | 0.5843 | 0.1093 | 0.024 | 0.9891 | 3692.9 |
| | MI-DI-FGSM | 3.426 | 30.20 | 0.9436 | 0.512 | 0.1762 | 0.0309 | 0.9853 | 4717.9 |
| | -FGSM | 2.862 | 31.78 | 0.9611 | 0.5646 | 0.1369 | 0.0258 | 0.9898 | 3903.8 |
| | Ours | 1.109 | 40.07 | 0.9938 | 0.8062 | 0.0422 | 0.01 | 0.9985 | 1548.0 |
| Untargeted Res50 | PGD | 4.002 | 28.85 | 0.9228 | 0.4753 | 0.0916 | 0.0361 | 0.983 | 5378.5 |
| | I-FGSM | 2.775 | 32.10 | 0.9601 | 0.5733 | 0.0918 | 0.025 | 0.9889 | 3723.5 |
| | DI-FGSM | 2.807 | 31.95 | 0.9622 | 0.5686 | 0.107839 | 0.0253 | 0.9888 | 3754.8 |
| | TI-DI-FGSM | 2.960 | 31.50 | 0.9607 | 0.5569 | 0.1026 | 0.0267 | 0.9876 | 3876.3 |
| | MI-DI-FGSM | 5.199 | 26.57 | 0.8888 | 0.3856 | 0.2224 | 0.0469 | 0.9748 | 6738.5 |
| | -FGSM | 3.287 | 30.57 | 0.9525 | 0.5207 | 0.1341 | 0.0297 | 0.9872 | 4355.0 |
| | Ours | 1.694 | 36.36 | 0.9855 | 0.7005 | 0.0578 | 0.0153 | 0.9946 | 2423.9 |
| Untargeted VGG19 | PGD | 4.024 | 28.80 | 0.9208 | 0.4718 | 0.1083 | 0.0363 | 0.9824 | 5452.3 |
| | I-FGSM | 2.979 | 30.88 | 0.9605 | 0.5593 | 0.1348 | 0.0269 | 0.9889 | 3877.1 |
| | DI-FGSM | 3.288 | 30.59 | 0.9534 | 0.525 | 0.1636 | 0.0297 | 0.9873 | 4285.7 |
| | TI-DI-FGSM | 3.382 | 30.36 | 0.9525 | 0.5155 | 0.1428 | 0.0305 | 0.9858 | 4403.9 |
| | MI-DI-FGSM | 5.152 | 26.66 | 0.8916 | 0.3872 | 0.2416 | 0.0465 | 0.975 | 6706.0 |
| | -FGSM | 3.724 | 29.50 | 0.9435 | 0.4886 | 0.1988 | 0.0336 | 0.9853 | 4835.8 |
| | Ours | 2.097 | 34.61 | 0.9789 | 0.6485 | 0.1170 | 0.0189 | 0.9938 | 2953.4 |
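The PSNR and RMSE columns of the table above appear to be linked by the standard definition of PSNR. The short sketch below shows that relation; the peak value of 1.0 (pixel values scaled to [0, 1]) is an assumption inferred from the reported numbers rather than something stated in this text, and `psnr_from_rmse` is a name chosen here for illustration.

```python
import math


def psnr_from_rmse(rmse, peak=1.0):
    """PSNR in dB from a root-mean-square error, for a given peak pixel value."""
    # Standard definition: PSNR = 20 * log10(peak / RMSE).
    return 20.0 * math.log10(peak / rmse)


# PGD row, Targeted Res50: the reported RMSE is 0.0359 and the reported PSNR is 28.90.
pgd_psnr = psnr_from_rmse(0.0359)
```

Under this assumption the computed value agrees with the reported PSNR to within the rounding of the tabulated RMSE, so the two columns carry the same information on different scales.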

| Settings | Methods | L2(↓) | PSNR(↑) | SSIM(↑) | VIF(↑) | LPIPS(↓) | RMSE(↓) | UQI(↑) | ERGAS(↓) |
|---|---|---|---|---|---|---|---|---|---|
| Targeted CIFAR100 | PGD | 3.9592 | 28.9501 | 0.8506 | 0.4207 | 0.2248 | 0.0357 | 0.9846 | 4861.313 |
| | I-FGSM | 2.212 | 34.0041 | 0.9422 | 0.5856 | 0.1769 | 0.02 | 0.9925 | 2753.327 |
| | DI-FGSM | 2.3564 | 33.459 | 0.9392 | 0.5677 | 0.1988 | 0.0213 | 0.9923 | 2896.558 |
| | TI-DI-FGSM | 2.4783 | 33.0247 | 0.9359 | 0.5492 | 0.1718 | 0.0224 | 0.9907 | 3108.424 |
| | MI-DI-FGSM | 3.1938 | 30.8156 | 0.8981 | 0.4791 | 0.2727 | 0.0288 | 0.9887 | 3833.376 |
| | -FGSM | 2.653 | 32.4333 | 0.9253 | 0.5263 | 0.2128 | 0.0239 | 0.9907 | 3291.363 |
| | Ours | 1.1808 | 39.555 | 0.985 | 0.7468 | 0.0866 | 0.0107 | 0.9971 | 1554.388 |
| Targeted CIFAR10 | PGD | 2.033 | 28.7168 | 0.9544 | 0.4836 | 0.0978 | 0.0367 | 0.9945 | 4020.453 |
| | I-FGSM | 1.4367 | 31.794 | 0.978 | 0.5789 | 0.0861 | 0.0259 | 0.997 | 2826.112 |
| | DI-FGSM | 1.843 | 29.5851 | 0.9651 | 0.5106 | 0.1359 | 0.0333 | 0.9952 | 3630.055 |
| | TI-DI-FGSM | 2.0019 | 28.8683 | 0.9623 | 0.5042 | 0.1334 | 0.0361 | 0.9936 | 3923.169 |
| | MI-DI-FGSM | 2.7379 | 26.1326 | 0.928 | 0.4048 | 0.2124 | 0.0494 | 0.9901 | 5389.967 |
| | -FGSM | 1.7697 | 29.9492 | 0.9673 | 0.5151 | 0.1229 | 0.0319 | 0.9956 | 3526.703 |
| | Ours | 0.6173 | 39.2151 | 0.9948 | 0.755 | 0.021 | 0.0111 | 0.9992 | 1324.995 |
| Untargeted CIFAR100 | PGD | 3.9664 | 28.9342 | 0.8503 | 0.4199 | 0.2278 | 0.0358 | 0.9846 | 4866.471 |
| | I-FGSM | 2.2014 | 34.0471 | 0.9433 | 0.5878 | 0.1725 | 0.0199 | 0.9924 | 2738.272 |
| | DI-FGSM | 2.3082 | 33.64 | 0.9403 | 0.5741 | 0.1925 | 0.0208 | 0.9921 | 2857.357 |
| | TI-DI-FGSM | 2.4504 | 33.126 | 0.9371 | 0.554 | 0.1682 | 0.0221 | 0.991 | 3042.448 |
| | MI-DI-FGSM | 4.8833 | 27.1276 | 0.8064 | 0.351 | 0.3608 | 0.0441 | 0.9793 | 5848.935 |
| | -FGSM | 2.7332 | 32.179 | 0.9226 | 0.5176 | 0.2297 | 0.0247 | 0.9903 | 3393.043 |
| | Ours | 1.5578 | 37.1052 | 0.9751 | 0.674 | 0.1267 | 0.0141 | 0.9959 | 2023.021 |
| Untargeted CIFAR10 | PGD | 2.0425 | 28.6772 | 0.9552 | 0.486 | 0.1005 | 0.0369 | 0.9944 | 4051.093 |
| | I-FGSM | 1.7972 | 29.8239 | 0.9697 | 0.5227 | 0.1191 | 0.0324 | 0.9955 | 3546.147 |
| | DI-FGSM | 1.8363 | 29.6212 | 0.9673 | 0.5155 | 0.1307 | 0.0331 | 0.9952 | 3635.69 |
| | TI-DI-FGSM | 2.0438 | 28.6842 | 0.9625 | 0.5019 | 0.1329 | 0.0369 | 0.9933 | 4006.551 |
| | MI-DI-FGSM | 2.7362 | 26.138 | 0.93 | 0.406 | 0.2075 | 0.0494 | 0.9901 | 5398.472 |
| | -FGSM | 1.9634 | 29.0394 | 0.9613 | 0.488 | 0.1528 | 0.0354 | 0.9946 | 3947.055 |
| | Ours | 1.1618 | 33.6425 | 0.9825 | 0.5969 | 0.0747 | 0.021 | 0.9976 | 2495.726 |

| Methods | Res18 | VGG19 | Dense | W-Res50 |
|---|---|---|---|---|
| Targeted Attack | | | | |
| PGD | 3.20% | 0.45% | 0.05% | 0.15% |
| I-FGSM | 1.50% | 0.10% | 0.50% | 0.50% |
| TI-DI-FGSM | 18.50% | 1.20% | 3.00% | 13.50% |
| Ours | 21.00% | 10.00% | 6.00% | 16.50% |
| | | | | |
| PGD | 4.05% | 3.55% | 4.65% | 0.95% |
| I-FGSM | 8.45% | 4.90% | 8.30% | 2.60% |
| TI-DI-FGSM | 27.85% | 20.40% | 30.25% | 10.55% |
| Ours | 28.85% | 22.10% | 32.15% | 12.90% |
| | | | | |
| PGD | 6.89% | 6.61% | 7.22% | 7.44% |
| I-FGSM | 15.11% | 11.89% | 13.56% | 15.94% |
| TI-DI-FGSM | 22.94% | 17.39% | 21.44% | 19.94% |
| Ours | 32.22% | 27.78% | 28.44% | 32.22% |
| Untargeted Attack | | | | |
| PGD | 40.05% | 11.25% | 1.15% | 4.10% |
| I-FGSM | 50.45% | 20.15% | 4.80% | 17.70% |
| TI-DI-FGSM | 63.54% | 40.82% | 20.52% | 47.02% |
| Ours | 69.15% | 41.10% | 21.40% | 48.65% |
| | | | | |
| PGD | 46.50% | 52.00% | 54.00% | 12.50% |
| I-FGSM | 54.00% | 47.50% | 59.00% | 21.00% |
| TI-DI-FGSM | 66.33% | 66.83% | 73.92% | 31.21% |
| Ours | 67.00% | 68.50% | 75.50% | 35.50% |
| | | | | |
| PGD | 31.50% | 37.00% | 33.50% | 34.50% |
| I-FGSM | 58.50% | 56.50% | 60.50% | 62.50% |
| TI-DI-FGSM | 53.50% | 46.00% | 57.00% | 58.00% |
| Ours | 69.00% | 61.50% | 63.00% | 68.50% |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, C.; Liu, Y.; Zhang, X.; Wu, H. Exploiting Frequency Characteristics for Boosting the Invisibility of Adversarial Attacks. Appl. Sci. 2024, 14, 3315. https://doi.org/10.3390/app14083315
