Abstract

Human activity recognition (HAR) identifies people’s motions and actions in daily life. HAR research has grown with the popularity of internet-connected, wearable sensors that capture human movement data to detect activities. Recent deep learning advances have enabled more HAR research and applications using data from wearable devices. However, prior HAR research often focused on a few sensor locations on the body. Recognizing real-world activities poses challenges when device positioning is uncontrolled or initial user training data are unavailable. This research analyzes the feasibility of deep learning models for both position-dependent and position-independent HAR. We introduce an advanced residual deep learning model called Att-ResBiGRU, which excels at accurate position-dependent HAR and delivers excellent performance for position-independent HAR. We evaluate this model using three public HAR datasets: Opportunity, PAMAP2, and REALWORLD16. Comparisons are made to previously published deep learning architectures for addressing HAR challenges. The proposed Att-ResBiGRU model outperforms existing techniques in accuracy, cross-entropy loss, and F1-score across all three datasets. We assess the model using k-fold cross-validation. The Att-ResBiGRU achieves F1-scores of 86.69%, 96.23%, and 96.44% on the PAMAP2, REALWORLD16, and Opportunity datasets, surpassing state-of-the-art models across all datasets. Our experiments and analysis demonstrate the exceptional performance of the Att-ResBiGRU model for HAR applications.

1. Introduction

Recently, significant advances in sensing technologies have enabled the creation of intelligent systems capable of supporting various applications that can be useful in daily life. Such applications include those for assisted-living smart homes [1], healthcare monitoring [2], and security surveillance [3]. These systems aim to develop enhanced artificial intelligence that promptly recognizes people’s everyday activities, like walking or jogging. This technology can improve the quality of life for those in poor health by closely tracking their movements. For example, elderly, disabled, or diabetic individuals often need to follow strict daily physical activity regimens as part of their medical care. Thus, healthcare providers can use these intelligent systems to monitor patient behaviors, identifying actions like ambulation, stair climbing, and reclining. Moreover, even healthy individuals can utilize the systems proactively. Studying the activities of healthy people could aid early disease detection in some cases [4]. The systems could also improve well-being by providing insights into daily activity patterns. This research explicitly examines human activity recognition (HAR), which accurately determines a person’s activity using sensors embedded in wearable devices worn on the body.

HAR analyzes people’s movements using technology and sensors. Automatically identifying physical activities has become vital in ubiquitous computing, interpersonal communication, and behavioral analysis research. Extensively applying HAR can enhance human safety and well-being. In hospitals, patients can wear devices that detect mobility, heart rate, and sleep quality, enabling remote monitoring without constant expert oversight. Such tools also facilitate tracking elderly and young patients. HAR-powered smart home solutions provide energy savings and personalized comfort by recognizing whether individuals are present and adapting lights or temperature accordingly. Some personal safety gadgets can automatically notify emergency responders or designated contacts when required. Additionally, many other applications need automated coordination based on identifying user activities.

Technological advances have enabled the creation of wearable devices with sensing, processing, and communication capabilities that are more compact, affordable, and power-efficient. Sensors embedded in these wearables, commonly leveraged for Internet of Things (IoT) applications, can rapidly capture various body motions and recognize human behaviors. In particular, inertial measurement unit (IMU) sensors containing accelerometers and gyroscopes have improved drastically in recent years, simplifying the tracking and detection of human movements. Undoubtedly, sensor-based HAR furnishes a highly versatile and cost-effective approach for continual monitoring while better addressing privacy concerns.

HAR is not a new research field, with over a decade of discussions. Consequently, numerous published studies have focused on developing activity recognition systems. Earlier systems relied on machine learning approaches, which yielded some success. However, these machine learning methods depended heavily on manual feature engineering techniques [5,6]. As such, they were time-intensive and laborious, necessitating substantial human input. Additionally, they failed to guarantee generalization to novel, unseen scenarios [7,8]. Due to these drawbacks, the field has witnessed a progressive shift from machine learning to deep learning techniques, creating many new research opportunities. A key advantage of deep learning is its layered data processing architecture, which enables models to learn from raw, unprocessed input data without complex preprocessing. This facilitates the analysis of multimodal sensor data [9]. A primary reason for the prevalence of deep learning in HAR is that machine learning algorithms depend greatly on human input and preprocessing of data before implementation [10]. In contrast, deep learning models can autonomously extract knowledge and insights directly from sensor data gathered by devices [11] without manual effort or domain expertise.

Currently, many sensor-based HAR systems require wearable devices to be placed in fixed positions on the body [12,13,14]. These systems are referred to in research as position-specific HARs. However, people often move their wearable gadgets to different on-body locations. This decreases recognition accuracy since sensor readings for the same motion can vary substantially across body placement sites. As such, the primary challenge for sensor-based HAR platforms is developing position-independent solutions that work reliably, regardless of a device’s location [15].

The key objective of this research is to develop a position-independent HAR system using deep learning techniques. To accomplish this goal, we introduce Att-ResBiGRU, a novel deep residual neural network designed to efficiently categorize human activities irrespective of device placement on the body. The model is trained end-to-end within a deep learning framework, eliminating the need for manual feature engineering.

This research introduces several advancements contributing to position-independent HAR:

- Introduction of Att-ResBiGRU: We propose a novel deep learning architecture called Att-ResBiGRU. This innovative model combines convolutional layers for feature extraction, residual bidirectional gated recurrent unit (GRU) modules for capturing temporal dependencies, and attention mechanisms for focusing on crucial information. This combination enables highly accurate recognition of activities, regardless of the device’s position.

- State-of-the-art performance: Extensive testing on benchmark datasets (PAMAP2, Opportunity, and REALWORLD16) demonstrates that our proposed model achieves the best results reported for position-independent HAR. It achieves F1-scores of 86.69%, 96.44%, and 96.23% on these datasets.

- Robustness against position variations: We compare our model against existing position-dependent and -independent methods using various real-world scenarios to validate its robustness to sensor position changes. Att-ResBiGRU effectively addresses the challenges associated with position independence.

- Impact of model components: Through ablation studies, we analyze the influence of incorporating residual learning and attention blocks on the model’s performance compared to baseline recurrent models. This quantifies the specific contributions of each component to the overall accuracy.

- Simplified deployment: This work establishes a highly reliable single model for classifying complex activities, regardless of a device’s position. This simplifies deployment by eliminating the need for complex position-dependent models.

The remainder of this paper is structured as follows. Section 2 provides a review of recent related works in HAR research. Section 3 presents an in-depth description of the proposed Att-ResBiGRU model. Section 4 details the experimental outcomes and results. Section 5 discusses the conclusions drawn from the research findings. Finally, Section 6 concludes this paper by considering challenging avenues for future work.

2. Related Works

This section reviews relevant research in sensor-based HAR. The specific topics covered include position-specific and position-independent HAR systems, deep learning techniques applied in HAR, and the publicly available datasets for developing and evaluating HAR models.

2.1. Activity Recognition Constrained to Fixed Device Locations

HAR researchers have introduced innovative methods for activity identification using peripheral sensors. To collect accelerometer data, participants were instructed by Kwapisz et al. [16] to carry a smartphone in the front pocket of their trousers. They trained an activity recognition model with the data, producing 43 features and achieving an accuracy exceeding 90%. They omitted domain-specific frequency features despite proposing novel characteristics like the duration between peaks and binned distribution. Bieber et al. [17] improved activity identification by combining accelerometer and sound sensor data. They attached the smartphone to the trouser pocket, using the ambient noise as supplementary information about the user’s surroundings. However, challenges arose as the microphone introduced noise, and no solution was found. Kwon et al. [18] employed a smartphone in a specific pocket position for unsupervised learning methodologies in activity recognition. This approach addressed the issue of label generation. Although the approach demonstrated a 90% accuracy rate, it did not cover routine daily activities like ascending and descending stairs.

Harasimowicz et al. [19] classified eight user activities using smartphone accelerometers, including sitting, strolling, running, lying, ascending, descending, standing, and turning. They explored the impact of window size on identification, achieving a 98.56% accuracy with the KNN algorithm. However, the study focused solely on data from a smartphone in a trouser pocket, neglecting smartphone orientation issues. Ustev et al. [20] introduced a human activity recognition algorithm utilizing magnetic field, accelerometer, and gyroscope sensors in smartphones. They transformed the smartphone’s coordinate system to the Earth’s magnetic coordinate system to mitigate device orientation issues. Shoaib et al. [21] identified intricate human activities using wrist-worn motion sensors on smartphones and smartwatches. While effective, their approach was constrained by the position and orientation of the devices.

Each study maintained a fixed smartphone position for activity recognition, yielding high accuracy. However, in practical scenarios, users seldom confine their wearable devices to a single location.

2.2. Position-Independent Activity Recognition

In practical scenarios, the placement of sensors on an individual’s body is rarely fixed, and the device position is often unfamiliar or undisclosed. As a result, position-specific algorithms struggle to achieve high accuracy. Hence, it becomes crucial to develop HAR models that do not rely on specific positions and can adapt to various sensor locations, taking into account individual behaviors in real time [22].

The challenge becomes more complex when considering the placement of the smartphone. Henpraserttae et al. [23] conducted experiments involving six daily tasks with a smartphone positioned in three different ways. Models were created for each position using features insensitive to the device’s location. However, certain positions exhibited inaccuracies in activity recognition. Grokop et al. [24] explored the variability in smartphone positions by combining data from the accelerometer, ambient light sensor (ALS), proximity sensor, and camera intensity. This combination allowed them to classify movement conditions and device locations, achieving respective accuracies of 66.8% and 92.6%. Adding more sensors to a smartphone could enhance precision but at the cost of increased resource consumption. Notably, their study did not cover ascending and descending stairs, which are analogous to walking.

Coskun et al. [25] introduced an innovative method for categorizing position and activity using only an accelerometer. To improve position recognition accuracy, they incorporated angular acceleration, achieving an 85% accuracy in activity recognition. Miao et al. [26] positioned smartphones in various pockets and used light and proximity sensors to determine pocket placement. They then collected raw data and extracted position-insensitive features for model training. The decision tree with the highest effectiveness in recognition and classification was selected, conserving smartphone resources and boosting recognition accuracy to 89.6%. However, their approach did not account for hand and purse weight. Gu et al. [27] investigated user-independent movement detection using smartphone sensors, exploring positions such as holding with or without swaying motion and placement in trouser pockets during activity recognition.

2.3. Deep Learning Approaches for HAR

Advanced deep learning models like convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have demonstrated remarkable effectiveness in various recognition tasks, including HAR. These models leverage hierarchical representations from raw sensor data, enabling them to extract relevant features autonomously and understand intricate temporal dependencies within sequences of activities. Researchers have developed deep learning frameworks for HAR [28,29,30,31,32,33], achieving accuracy and robustness across diverse user groups and environments.

Shi et al. [28] constructed a dataset from body movement sensors using the Boulic kinematic model. They proposed a deep convolutional generative adversarial network (DCGAN) and a pre-trained deep CNN architecture, VGG-16, for identifying three distinct categories of walking actions based on velocity. Although this approach effectively broadens the training set to prevent overfitting, it is limited to three categories of walking activities (including fast walking and extremely fast walking). Ravi et al. [29] introduced CNN models with three distinct regularizations, achieving high accuracy in classifying four datasets: ActivMiles, WISDM v1.1, Daphnet FoG, and Skoda. Their focus on orientation-invariant and discriminative sensor features maintained consistent accuracy for real-time classification with low power consumption. However, accuracy and computation times were only comparable to previous state-of-the-art approaches. Hammerla et al. [30] examined three datasets (Opportunity, PAMAP2, and Daphnet Gait), developing five deep models for each. They achieved high accuracy but failed to demonstrate consistent success across all benchmark HAR datasets, and their recommendations were limited to a few deep models. Xu et al. [31] utilized Inception, GoogLeNet, and GRU on three datasets, achieving enhanced generalizability. However, they did not address the issue of class imbalance in data relevant to practical HAR applications. Bhattacharya et al. [32] introduced Ensem-HAR, a stacking meta-model consisting of random forest and various deep learning models. Although the approach outperformed other works, the cumulative training time of four distinct models made it unsuitable for real-time HAR implementations.

3. Proposed Methodology

This section outlines the position-independent HAR framework developed to fulfill this study’s goal. The framework applies deep learning algorithms to sensor data collected from wearable devices in order to recognize the activities of individuals regardless of where the devices are worn.

3.1. Data Acquisition

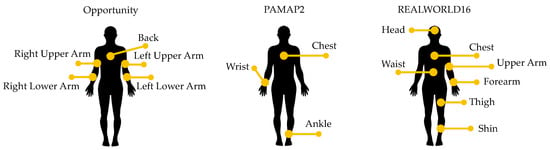

This study aimed to develop a HAR model that effectively deals with real-life situations where people often carry their wearable electronics in different positions. To explore position-independent HAR, three publicly accessible multi-position HAR datasets—Opportunity, PAMAP2, and REALWORLD16—were selected. Several factors support the choice of these datasets: (i) The data are gathered from diverse locations, as shown in Figure 1; (ii) The datasets are sufficiently large to support the application of deep learning algorithms; and (iii) The covered activities are diverse, including both small-scale activities (like gestures in the Opportunity dataset) and everyday daily activities.

Figure 1.

Positions of wearable sensors in the Opportunity, PAMAP2, and REALWORLD16 datasets.

3.1.1. Opportunity Dataset

Roggen et al. [34] introduced the Opportunity dataset for activity recognition, comprising 72 sensors capturing naturalistic activities in a sensor-rich setting. This dataset incorporates diverse sensors, including those embedded in objects, worn on the body, and placed in the environment. HAR research typically uses the data from the body-worn sensors, which are annotated with four types of movements and seventeen microactivities. For upper-body monitoring, five IMUs were placed on the back of the torso (Back), the left upper arm (LUA), the left lower arm (LLA), the right upper arm (RUA), and the right lower arm (RLA). Additionally, two sensors were attached to the user’s footwear. The study involved 12 subjects, utilizing 15 interconnected sensor systems with 72 sensors across ten modalities. Because these sensors are integrated into the subject’s surroundings, objects, and attire, the dataset is well suited for assessing a wide range of activity recognition approaches.

Initially framed as an 18-category multi-classification challenge, the Opportunity dataset was streamlined to 17 categories by excluding the null class, an extra label. Table 1 shows a comprehensive list of these categories. From the Opportunity dataset, we collected 90 samples for each window, employing 3-second intervals with a sampling rate of 30 Hz.

Table 1.

Summary of the three multi-position HAR datasets used in this work.

3.1.2. PAMAP2 Dataset

Reiss and Stricker [35] introduced the PAMAP2 physical activity monitoring dataset, containing recordings from nine participants, encompassing both male and female subjects. These participants were instructed to engage in eighteen diverse lifestyle activities, ranging from leisure pursuits to household tasks, such as climbing and descending stairs, ironing, vacuum cleaning, lying, standing, walking, driving a car, performing Nordic walks, and more. Household activities included lying, sitting, standing, ascending, and descending stairs.

Over ten hours, the participants’ activity data were collected using three IMUs positioned on the dominant wrist, thorax, and ankle. Each IMU operated at a sampling rate of 100 Hz, while a heart rate sensor collected data at a rate of 9 Hz. Additionally, a thermometer was utilized. A summary of the characteristics of the PAMAP2 dataset is presented in Table 1. The PAMAP2 sensor data were segmented using fixed-width sliding windows lasting 3 s without overlap. This approach yielded 300 readings per window, given that the data were captured at 100 Hz.

3.1.3. REALWORLD16 Dataset

The HAR process starts with collecting sensor data recorded by wearable devices. For this purpose, the REALWORLD16 dataset [36] was chosen as a standardized public HAR dataset. The dataset includes data from acceleration, GPS, gyroscope, light, magnetic field, and sound-level sensors sampled at 50 Hz. Activities such as ascending and descending stairs, jumping, lying, standing, sitting, running, and walking were performed in real-world conditions. Fifteen subjects of diverse ages and genders (average age 31.9 ± 12.4 years, height 173.1 ± 6.9 cm, weight 74.1 ± 13.8 kg; eight males and seven females) engaged in each activity for ten minutes, except for jumping, which was repeated for approximately 1.7 min.

Wearable sensors were attached to each subject’s waist, forearm, head, shin, chest, and thigh to capture signals from various body positions. A video camera was used to document every movement, aiding the subsequent analysis. A detailed summary of the distinctive features of the REALWORLD16 dataset is presented in Table 1. The REALWORLD16 sensor data were segmented using fixed-width sliding windows lasting 3 s without overlap. This process led to 150 readings per window, as the recording was conducted at 50 Hz.

3.2. Data Preprocessing

3.2.1. Data Denoising

The raw data collected from the sensors might contain unwanted system measurement noise or unexpected disturbances caused by the individual’s active movements during the experiments. This noise can compromise the valuable information embedded in the signal. Hence, it was crucial to mitigate the impact of noise to extract meaningful insights from the signal for subsequent analysis. Commonly utilized filtering techniques include mean, low-pass, wavelet, and Gaussian filters [37,38]. In our study, we opted for an average smoothing filter for signal denoising. The filter was applied to all three axes of the accelerometer and gyroscope signals.
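As an illustration of average smoothing, the following minimal sketch applies a centered moving-average filter to each axis of a triaxial signal. The function names and the window length of 5 samples are assumptions made for this example; the filter width used in this study is not stated here.

```python
def moving_average(signal, window=5):
    """Smooth a 1-D sequence with a centered moving-average filter.

    The window is truncated at the edges, so the output keeps the input length.
    An odd window is assumed (hypothetical default of 5 samples).
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    half = window // 2
    smoothed = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        segment = signal[lo:hi]
        smoothed.append(sum(segment) / len(segment))
    return smoothed


def denoise_triaxial(samples, window=5):
    """Filter each axis of [(x, y, z), ...] samples independently."""
    axes = list(zip(*samples))  # one tuple of readings per axis
    filtered = [moving_average(list(axis), window) for axis in axes]
    return [tuple(row) for row in zip(*filtered)]


spike = [(0, 0, 0), (3, 6, 9), (0, 0, 0)]
print(denoise_triaxial(spike, window=3))
```

A wider window removes more noise but also blurs short, sharp movements, so the width would normally be tuned to the sampling rate of each dataset.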

3.2.2. Data Normalization

Data obtained from various sensors frequently display uneven scales, and the existence of outlying samples in the data can result in extended training durations and potential challenges in reaching convergence. To mitigate the impact of variations in the values of each channel, min-max normalization was implemented for each feature, projecting their values into the [0, 1] interval.

As expressed in Equation (1), the initial sensor data undergo normalization prior to processing, bringing them within the range of 0 to 1. This method addresses the challenge in model learning by standardizing the entire range of data values. This standardization facilitates faster convergence of gradient descents.

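$$X_i^{norm} = \frac{X_i - x_i^{min}}{x_i^{max} - x_i^{min}}, \quad i = 1, 2, \ldots, n \tag{1}$$

where $X_i^{norm}$ represents the normalized data, $X_i$ represents the raw data of the i-th channel, n represents the number of channels, and $x_i^{max}$ and $x_i^{min}$ are the maximum and minimum values of the i-th channel, respectively.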
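The per-channel min-max normalization referred to as Equation (1) can be sketched as follows. The function name and the handling of constant channels are assumptions of this example rather than details taken from the study.

```python
def min_max_normalize(channels):
    """Scale each channel (a list of readings) into [0, 1] independently."""
    normalized = []
    for values in channels:
        lo, hi = min(values), max(values)
        span = hi - lo
        if span == 0:
            # Constant channel: map to 0.0 to avoid division by zero
            # (a choice made for this sketch).
            normalized.append([0.0 for _ in values])
        else:
            normalized.append([(v - lo) / span for v in values])
    return normalized


print(min_max_normalize([[2, 4, 6], [5, 5, 5]]))
```

In practice, the minimum and maximum would usually be computed on the training split only and then reused for the test split, so that no test information leaks into training.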

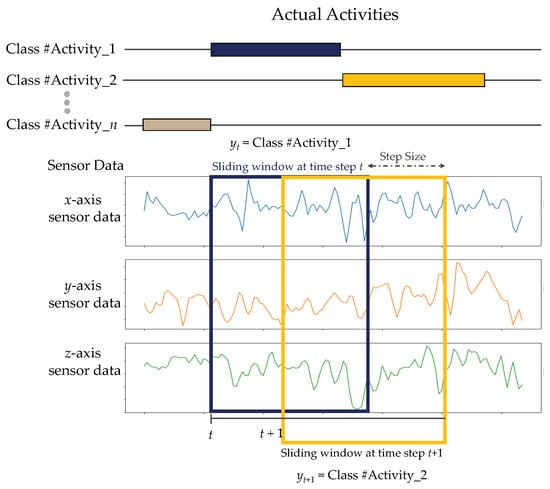

3.2.3. Data Segmentation

Considering the substantial amount of signal data captured by wearable sensors, it becomes impractical to input them into the HAR model simultaneously. Therefore, a crucial step is to conduct sliding-window segmentation before providing data to the model. This technique is widely employed in HAR to segment data and identify periodic and static activities, making it a popular method for this purpose [39]. The sensor signals are partitioned into fixed-length windows, employing a strategic approach to ensure comprehensive coverage of adjacent windows. This maximizes the number of training data samples and captures the smooth transition between different activities.

A sample segmented by a sliding window of length N has a size of $N \times K$. The sample $W_t$ is denoted as:

$$W_t = \left[a_t^1, a_t^2, \ldots, a_t^K\right] \in \mathbb{R}^{N \times K} \tag{2}$$

We represent the signal data for sensor channel k at window time t as the column vector $a_t^k = \left[a_{t,1}^k, a_{t,2}^k, \ldots, a_{t,N}^k\right]^{\mathsf{T}}$, where the superscript $\mathsf{T}$ denotes the transpose operation and N represents the length of the sliding window. In Equation (2), K denotes the number of sensor channels supplying input time-series data streams. Each sensor channel serves as one feature dimension for the dataset. For instance, a triaxial accelerometer embedded in a wearable device would supply K = 3 input acceleration features, representing the x-, y-, and z-axes. Likewise, a nine-axis IMU providing 3-axis gyroscope, accelerometer, and magnetometer data would encompass K = 9 overall features (three for each modality). To effectively leverage the relationships between windows and streamline the training process, the window data are segmented into sequences of windows, as shown in Figure 2, as follows:

$$S = \left\{(W_1, y_1), (W_2, y_2), \ldots, (W_T, y_T)\right\} \tag{3}$$

where T represents the length of the window sequence and $y_t$ signifies the corresponding activity label for window $W_t$. When a window encompasses multiple activity classes, the window label is determined based on the most prevalent activity among its samples.

Figure 2.

Illustration of fixed-length sliding-window technique used in this work.
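The fixed-length segmentation with majority-vote labeling described in this subsection can be sketched as follows. The function name and the `step` parameter are assumptions of this example; setting `step` equal to `window_size` reproduces non-overlapping windows, and a smaller value produces overlapping ones.

```python
from collections import Counter


def segment_windows(samples, labels, window_size, step=None):
    """Split a recording into fixed-length windows with majority-vote labels.

    samples: list of per-time-step readings (each a list of K channel values)
    labels:  list of per-time-step activity labels, same length as samples
    step:    hop between window starts; defaults to window_size (no overlap)
    """
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")
    if step is None:
        step = window_size
    windows, window_labels = [], []
    # Trailing samples that do not fill a whole window are dropped.
    for start in range(0, len(samples) - window_size + 1, step):
        end = start + window_size
        windows.append(samples[start:end])
        # The most frequent label inside the window becomes the window label.
        window_labels.append(Counter(labels[start:end]).most_common(1)[0][0])
    return windows, window_labels


readings = [[float(i)] for i in range(7)]
activity = ["walk", "walk", "sit", "sit", "sit", "walk", "walk"]
windows, window_labels = segment_windows(readings, activity, window_size=3)
print(window_labels)
```

For a 3 s window, `window_size` would be 150 at 50 Hz, 300 at 100 Hz, and 90 at 30 Hz, matching the readings per window reported for the three datasets.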

3.3. The Proposed Att-ResBiGRU Model

The initial phase of sensor-based HAR involves gathering activity data through intelligent wearable devices. Subsequently, these data undergo preprocessing before being passed to the recognition model. Current identification methods are time-consuming and struggle to distinguish similar activities, such as ascending and descending stairs.

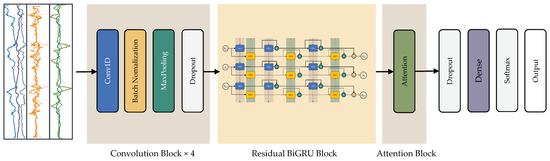

To address these issues, we propose a novel architecture named Att-ResBiGRU. The model’s network structure, depicted in Figure 3, consists of three key components: convolution, ResBiGRU, and attention blocks. The convolution block, the first component, is responsible for extracting spatial features from the preprocessed data. Adjusting the convolution stride efficiently reduces the time-series length, accelerating the recognition process. Furthermore, the improved ResBiGRU network is employed to extract time-series features from the data processed by the convolution block. The ResBiGRU component enhances the model’s capability to capture long-term dependencies in time-series data by combining the strengths of a bidirectional GRU (BiGRU) neural network and incorporating residual connections. This integration enhances the model’s grasp of complex temporal patterns, thus improving recognition accuracy.

Figure 3.

Detailed architecture of the proposed Att-ResBiGRU model.

We also introduce an attention mechanism to optimize the final recognition features’ efficiency. This mechanism calculates weights for the feature information generated by the ResBiGRU network, enabling the model to prioritize the most informative parts of the input data. By emphasizing critical features, the attention mechanism bolsters the model’s ability to differentiate between various activities, ultimately enhancing activity recognition accuracy.

The classification process of behavior information utilizes a fully connected layer and the SoftMax function. The outcome of this classification process is the recognition result, predicting the specific activity being performed. In the subsequent sections, we thoroughly explain each component, elucidating their functions and contributions within our proposed model.

3.3.1. Convolution Block

CNNs excel at extracting features from tensor data, making them particularly well suited for tasks like image analysis and behavioral recognition. This investigation employs the one-dimensional CNN (1D-CNN) for effective feature extraction. For the provided data, Figure 3 visually presents the representation of the time series on the vertical axis and the multi-axis channel features gathered by various sensors on the horizontal axis.

The 2D convolution is recognized for its localized nature, which can pose challenges when dealing with many sensors. This is due to the potential disruption of sensor channel integrity caused by local convolution. Conversely, the 1D convolution operates on behavioral units and processes all sensor channels concurrently. Consequently, when designing the model for spatial feature extraction, the 1D convolution is favored over the traditional 2D convolution. Utilizing the 1D convolution serves the purpose of feature extraction. The features obtained are considered spatial characteristics within an image, comprising sensor measurement samples. This perspective implies a spatial distribution connection among the samples instead of a time-series relationship.

For the computation of the 1D convolution, the input data undergo convolution with each filter and are subsequently activated through a non-linear activation function. This process is computed as follows:

$$y_i = f\left(x * w_i + b_i\right), \quad i = 1, 2, \ldots, n \tag{4}$$

Here, $y_i$ represents the activated output data, $x$ denotes the raw input data, and $w_i$ denotes the weights of the i-th filter. The input is first convolved with the filter weights, the bias value $b_i$ is then added to the convolved result, and finally the non-linear activation function f is applied. The variable n represents the number of filters.
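The following sketch illustrates the computation for a single 1D convolution layer, in which each filter slides along the time axis and spans all sensor channels at once. The function name, the list-based data layout, and the use of ReLU in the demonstration are assumptions of this example. As in most deep learning frameworks, the kernel is applied without flipping (cross-correlation).

```python
def conv1d(x, filters, biases, activation, stride=1):
    """1-D convolution over time without padding.

    x:       list of T time steps, each a list of K channel values
    filters: list of n kernels, each a list of `width` rows of K weights
    biases:  list of n bias values
    Returns a list of output time steps, each holding n activated features.
    """
    width = len(filters[0])
    output = []
    for start in range(0, len(x) - width + 1, stride):
        window = x[start:start + width]
        features = []
        for kernel, bias in zip(filters, biases):
            total = bias
            # Weighted sum over every time step and channel under the kernel.
            for row, weights in zip(window, kernel):
                total += sum(v * w for v, w in zip(row, weights))
            features.append(activation(total))
        output.append(features)
    return output


def relu(v):
    return max(0.0, v)


signal = [[1, 0], [2, 1], [3, 2], [4, 3]]          # T = 4 steps, K = 2 channels
kernels = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]  # n = 2 filters of width 2
print(conv1d(signal, kernels, [0, 0], relu))
```

Because every kernel row covers all K channels, the sensor channels are never split across separate local patches, which is the property that motivates the preference for 1D over 2D convolution here.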

This work utilizes a specialized activation function called the Smish function [40] in its convolutional layers. Compared to the commonly used ReLU activation function [41], which maps every negative input to zero, the Smish function retains a small non-zero response for negative input values, which occur frequently in sensor data. The Smish function is defined as:

$$\mathrm{Smish}(x) = x \cdot \tanh\left(\ln\left(1 + \mathrm{sigmoid}(x)\right)\right) \tag{5}$$
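To make the difference from ReLU concrete, the sketch below evaluates the published Smish form, x · tanh(ln(1 + sigmoid(x))), next to ReLU for a few inputs. The helper names are illustrative and are not taken from the authors' implementation.

```python
import math


def sigmoid(x):
    """Logistic function written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def smish(x):
    """Smish activation: x * tanh(ln(1 + sigmoid(x)))."""
    return x * math.tanh(math.log1p(sigmoid(x)))


def relu(x):
    return max(0.0, x)


for value in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"x={value:+.1f}  relu={relu(value):.4f}  smish={smish(value):.4f}")
```

For negative inputs, ReLU outputs exactly zero, whereas Smish returns a small negative value, so information carried by negative sensor readings is attenuated rather than discarded.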

The pooling layer downsamples the data after activation using the same padding to maintain a consistent output size. Within the convolution blocks, adjusting the stride parameter of the pooling filters shortens the time-series data length by altering how much the filters downsample, as follows:

$$L_{out} = \left\lceil \frac{L_{in}}{s} \right\rceil \tag{6}$$

The length of the input time series is denoted as $L_{in}$, the pooling stride is represented as s, and the length of the pooled time series is denoted as $L_{out}$.
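The effect of the pooling stride on the sequence length can be checked with a few lines of arithmetic. This sketch assumes the usual "same"-padding convention, in which the output length is the input length divided by the stride and rounded up. The stride of 2 is a hypothetical value chosen for illustration, and the function names are likewise assumptions.

```python
def pooled_length(input_length, stride):
    """Output length of a pooling layer that uses "same" padding."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    return -(-input_length // stride)  # integer ceiling division


def length_after_blocks(input_length, stride, num_blocks):
    """Sequence length after several stacked pooling stages."""
    length = input_length
    for _ in range(num_blocks):
        length = pooled_length(length, stride)
    return length


# Window lengths of the three datasets (3 s at 30, 100, and 50 Hz).
for name, samples in (("Opportunity", 90), ("PAMAP2", 300), ("REALWORLD16", 150)):
    print(name, samples, "->", length_after_blocks(samples, stride=2, num_blocks=4))
```

Under these assumptions, four stacked blocks shorten each window by roughly a factor of sixteen, which reduces the number of time steps the recurrent layers must process.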

Fluctuations in the input distributions of the neural network layers during training can cause gradient explosion or vanishing, a phenomenon known as the internal covariate shift problem [42]. To address this, Ioffe et al. [43] proposed batch normalization (BN). BN standardizes the batch data before each layer to stabilize training. It smooths gradient descent convergence, accelerating overall training. BN calculates the mean and variance of the input batches and then transforms them into standardized values for the next layer, as follows:

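$$\hat{x}^{(k)} = \frac{x^{(k)} - \mathrm{E}\left[x^{(k)}\right]}{\sqrt{\mathrm{Var}\left[x^{(k)}\right]}} \tag{7}$$

where $x^{(k)}$ represents the k-dimensional component of $x$ in the training set {x}, $\mathrm{E}\left[x^{(k)}\right]$ represents the mean of the k-dimensional component of all samples in the training set, and $\sqrt{\mathrm{Var}\left[x^{(k)}\right]}$ represents the standard deviation of the k-dimensional component of all samples in the training set.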
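The standardization step of BN can be sketched as below. This example covers only the mean and variance normalization described above; the learnable scale and shift parameters of a full BN layer are omitted, and the small constant `eps` is an assumption added for numerical stability.

```python
import math


def batch_standardize(batch, eps=1e-5):
    """Standardize each feature dimension across a batch.

    batch: list of samples, each a list of feature values.
    Returns samples whose features have roughly zero mean and unit variance.
    """
    n = len(batch)
    dims = len(batch[0])
    means = [sum(sample[k] for sample in batch) / n for k in range(dims)]
    variances = [
        sum((sample[k] - means[k]) ** 2 for sample in batch) / n
        for k in range(dims)
    ]
    return [
        [(sample[k] - means[k]) / math.sqrt(variances[k] + eps) for k in range(dims)]
        for sample in batch
    ]


print(batch_standardize([[1.0, 10.0], [3.0, 30.0]]))
```

The two features in the example differ in scale by a factor of ten, yet both map to values close to -1 and +1 after standardization.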

As shown in Figure 3, each convolution block contains Conv1D, BN, Smish activation, and max-pooling layers. These represent the core building blocks. In our proposed Att-ResBiGRU model, we utilize a four-layer architectural approach to stack these convolution blocks for the entire network. The detailed hyperparameters of the convolution blocks are shown in Table A6 in Appendix A.

3.3.2. Residual BiGRU Block

Accurate recognition of human activities necessitates more than relying on convolution blocks for spatial pattern extraction, as human behaviors unfold over time in an ordered sequence. Models designed for sequential data, like RNNs, are better suited for processing behavioral time-series inputs. However, as the length of the input time series grows, RNNs face challenges with gradients fading during backpropagation and losing information from earlier time steps.

As initially proposed by Hochreiter et al. [44], long short-term memory (LSTM) networks are a type of gated recurrent neural network able to retain long-range dependencies in sequence data. Unlike standard recurrent networks, LSTMs leverage internal gating mechanisms to store information over an extended time. This unique architecture enables LSTMs to model lengthy temporal contexts more effectively. However, human behavior exhibits complex bidirectional dynamics influenced by past and future actions.

Although LSTM shows promise as an alternative to RNNs for addressing the vanishing gradient problem, the memory cells in this architecture contribute to increased memory usage. In 2014, Cho et al. [45] introduced a new RNN-based model called the GRU network. GRU is a simplified version of LSTM that lacks a separate memory cell [46]. In a GRU network, update and reset gates are utilized to control the extent of modification for each hidden state. This means they determine which knowledge is essential for transmission to the next state and which is not. The BiGRU network, a two-way GRU network that considers both forward and backward information, enhances the extraction of time-series features compared to the GRU network by incorporating bidirectional dependencies. Consequently, a BiGRU network is appropriate for extracting time-series features from behavioral data.
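The gating logic described above can be sketched in a few lines of NumPy. This is an illustrative implementation, not the trained network: the parameter names, the random initialization, and the input and hidden sizes are assumptions, and the blending convention for the update gate varies between references.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru(input_dim, hidden_dim, rng):
    """Small random parameters for one GRU direction (illustrative only)."""
    params = {}
    for gate in ("z", "r", "h"):  # update gate, reset gate, candidate state
        params["W" + gate] = rng.normal(scale=0.1, size=(input_dim, hidden_dim))
        params["U" + gate] = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
        params["b" + gate] = np.zeros(hidden_dim)
    return params

def gru_step(p, x_t, h_prev):
    z = sigmoid(x_t @ p["Wz"] + h_prev @ p["Uz"] + p["bz"])  # update gate
    r = sigmoid(x_t @ p["Wr"] + h_prev @ p["Ur"] + p["br"])  # reset gate
    h_cand = np.tanh(x_t @ p["Wh"] + (r * h_prev) @ p["Uh"] + p["bh"])
    # Blend the old state and the candidate; some references swap z and 1 - z.
    return (1.0 - z) * h_prev + z * h_cand

def gru(p, x):
    """Run one direction over a window x of shape (T, input_dim)."""
    h = np.zeros(p["bz"].shape[0])
    states = []
    for x_t in x:
        h = gru_step(p, x_t, h)
        states.append(h)
    return np.stack(states)

def bigru(p_fwd, p_bwd, x):
    forward = gru(p_fwd, x)
    backward = gru(p_bwd, x[::-1])[::-1]  # process reversed time, then re-align
    return np.concatenate([forward, backward], axis=1)

rng = np.random.default_rng(0)
window = rng.normal(size=(20, 6))  # 20 time steps, 6 channels (assumed)
p_fwd, p_bwd = init_gru(6, 8, rng), init_gru(6, 8, rng)
features = bigru(p_fwd, p_bwd, window)
```

Each time step of `features` combines a summary of everything before it (forward half) with a summary of everything after it (backward half), which is what distinguishes the BiGRU from a unidirectional GRU.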

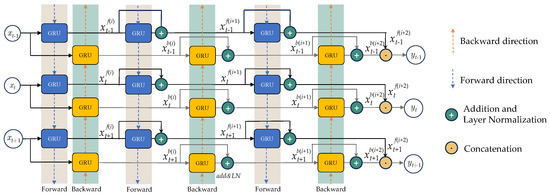

While the BiGRU network is proficient at deriving features from time-series data, its capability to capture spatial features is limited. Moreover, during training, the vanishing-gradient problem becomes more pronounced as the number of stacked layers increases. ResNet, a residual network, was introduced by the Microsoft Research team in 2015 as a solution to the vanishing-gradient problem [47]. Its 152-layer version won the ILSVRC championship in 2015. Each residual block is denoted as follows:

$$x_{i+1} = x_i + F(x_i, W_i)$$

The residual blocks are composed of two components: the direct mapping ($x_i$) and the residual portion ($F(x_i, W_i)$).

The encoder module of the Transformer model likewise uses a residual structure. Building on this idea, we propose an alternative framework that applies the residual design to the BiGRU network. Normalization techniques can also be applied within the BiGRU network. For recurrent neural networks, layer normalization (LN) is more advantageous than BN [48]. LN follows a computation similar to that of BN and can be expressed as follows:

$$\hat{x}_i = \frac{x_i - \mathrm{E}[x]}{\sqrt{\mathrm{Var}[x]}}$$

where $x_i$ denotes the input vector in the i-th dimension and $\hat{x}_i$ represents the output after layer normalization. Unlike BN, the mean and variance are computed over the feature dimensions of a single sample rather than over the batch.
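A short NumPy sketch may help show why LN suits recurrent networks: its statistics come from the features of each sample, so the result does not depend on what else is in the batch. The function below is a simplified illustration without the learnable gain and bias, and the array sizes are arbitrary.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Standardize each sample (row) with statistics taken over its own features."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
batch = rng.normal(loc=2.0, scale=4.0, size=(32, 16))  # 32 samples, 16 features (illustrative)

normalized_in_batch = layer_norm(batch)
normalized_alone = layer_norm(batch[:1])  # the first sample processed on its own
```

The first row of `normalized_in_batch` matches `normalized_alone`, so the same computation applies at every time step and for any batch size.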

This research presents ResBiGRU, a novel integration of a residual structure and layer normalization in a BiGRU network (see Figure 4). The recursive feature information y is computed as follows:

$$x_t^{f(i+1)} = \mathrm{LN}\left(x_t^{f(i)} + G\left(x_t^{f(i)}, W_i\right)\right)$$

$$x_t^{b(i+1)} = \mathrm{LN}\left(x_t^{b(i)} + G\left(x_t^{b(i)}, W_i\right)\right)$$

$$y_t = \mathrm{concat}\left(x_t^{f}, x_t^{b}\right)$$

Figure 4.

The structure of the ResBiGRU.

Here, LN denotes layer normalization, and G denotes the processing of input states within the GRU network. The variable t in $x_t^{f(i+1)}$ represents the t-th moment in the time series, f and b denote the forward and backward directions, and $(i+1)$ denotes the number of stacked layers. The encoded information at time t, denoted as $y_t$, is obtained by merging the corresponding forward and backward values.

The ResBiGRU component extracts features from the time-series data following the convolutional blocks. This component incorporates the advantages of BiGRUs, which can analyze data in both directions with residual connections. These connections help the model learn long-term dependencies within the data, allowing it to better understand complex temporal patterns and ultimately improve recognition accuracy.
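One plausible reading of this component is sketched below in NumPy: each stacked layer adds the GRU output back to its input and applies layer normalization, separately for the forward and backward directions, and the two directions are concatenated at the end. This is an assumption-laden illustration rather than the exact architecture: the feature width, the number of stacked layers, the random weights, and the choice to keep input and hidden sizes equal (so the residual sum is defined) are all hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def layer_norm(x, eps=1e-5):
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def init_gru(dim, rng):
    # Input and hidden sizes are equal so the residual addition is well defined.
    w = rng.normal(scale=0.1, size=(dim, 3 * dim))
    u = rng.normal(scale=0.1, size=(dim, 3 * dim))
    b = np.zeros(3 * dim)
    return w, u, b

def gru(params, x):
    w, u, b = params
    dim = u.shape[0]
    h = np.zeros(dim)
    states = []
    for x_t in x:
        xz, xr, xh = np.split(x_t @ w + b, 3)
        z = sigmoid(xz + h @ u[:, :dim])              # update gate
        r = sigmoid(xr + h @ u[:, dim:2 * dim])       # reset gate
        h_cand = np.tanh(xh + (r * h) @ u[:, 2 * dim:])
        h = (1.0 - z) * h + z * h_cand
        states.append(h)
    return np.stack(states)

def res_bigru(x, layers):
    """Stack residual GRU layers per direction, then concatenate both directions."""
    x_f, x_b = x, x
    for p_f, p_b in layers:
        x_f = layer_norm(x_f + gru(p_f, x_f))              # forward: LN(x + G(x))
        x_b = layer_norm(x_b + gru(p_b, x_b[::-1])[::-1])  # backward: reversed time
    return np.concatenate([x_f, x_b], axis=1)

rng = np.random.default_rng(0)
x = rng.normal(size=(62, 16))  # e.g. features from the convolution blocks (assumed shape)
layers = [(init_gru(16, rng), init_gru(16, rng)) for _ in range(2)]  # 2 layers (assumed)
y = res_bigru(x, layers)
```

Because each layer only adds a correction to its input, the identity path lets gradients reach earlier layers directly, which is the motivation for the residual design.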

3.3.3. Attention Block

The attention mechanism is widely used across many domains of deep learning. In addition to capturing positional relationships among data, models that rely on this mechanism can also weigh the significance of particular data features based on their intrinsic properties [49]. When RNNs are applied to activity recognition, conventional methods frequently use the final feature information ($y_T$) or the mean of the feature information ($\frac{1}{T}\sum_{t=1}^{T} y_t$), where T is the number of time steps. These traditional methods fail to account for the differing importance of individual feature vectors for behavior recognition, which is a substantial shortcoming. We therefore adopt the attention mechanism originally proposed by Raffel and Ellis [50] to improve the precision of behavior prediction. The feature information C is calculated as follows:

$$e_t = f(y_t), \qquad \alpha_t = \frac{\exp(e_t)}{\sum_{k=1}^{T} \exp(e_k)}, \qquad C = \sum_{t=1}^{T} \alpha_t y_t$$

In this context, a fully connected layer learns the function f through backpropagation. Throughout training, the weights $\alpha_t$ are continually updated, so the feature information C becomes progressively more representative.
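The attention pooling described above can be sketched in NumPy as a score per time step, a softmax over time, and a weighted sum. The sketch assumes the scoring function is a single dense unit with a tanh nonlinearity; the weight vector `w`, bias `b`, and array sizes are hypothetical stand-ins for learned parameters.

```python
import numpy as np

def attention_pool(y, w, b=0.0):
    """y: (T, H) recurrent features; returns context vector (H,) and weights (T,)."""
    e = np.tanh(y @ w + b)        # one score per time step from a one-unit dense layer
    exp_e = np.exp(e - e.max())   # shift by the maximum for numerical stability
    alpha = exp_e / exp_e.sum()   # softmax over time
    context = alpha @ y           # weighted sum of the time-step features
    return context, alpha

rng = np.random.default_rng(0)
y = rng.normal(size=(62, 32))     # 62 time steps, 32 features (illustrative)

context, alpha = attention_pool(y, rng.normal(scale=0.5, size=32))
uniform_context, uniform_alpha = attention_pool(y, np.zeros(32))
```

With all-zero scoring weights, every time step receives the same weight and the context vector reduces to plain mean pooling; learned weights let the model depart from that baseline and emphasize the most informative time steps.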

3.4. Evaluation Metrics

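Recognition effectiveness is assessed using a confusion matrix, which summarizes how the predictions of a deep learning model are distributed across classes. For a multiclass problem with n classes, the confusion matrix is written as follows, where the rows denote instances in a predicted class and the columns denote instances in the actual class:

$$M = \begin{bmatrix} c_{11} & c_{12} & \cdots & c_{1n} \\ c_{21} & c_{22} & \cdots & c_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ c_{n1} & c_{n2} & \cdots & c_{nn} \end{bmatrix}$$

The components of confusion corresponding to each class i are as follows:

- True positive: $TP_i = c_{ii}$;

- False positive: $FP_i = \sum_{j=1}^{n} c_{ij} - TP_i$;

- False negative: $FN_i = \sum_{j=1}^{n} c_{ji} - TP_i$;

- True negative: $TN_i = \sum_{j=1}^{n}\sum_{k=1}^{n} c_{jk} - TP_i - FP_i - FN_i$.

For each class, the accuracy, precision, recall, and F1-score are formulated as follows:

$$\mathrm{Accuracy}_i = \frac{TP_i + TN_i}{TP_i + TN_i + FP_i + FN_i}$$

$$\mathrm{Precision}_i = \frac{TP_i}{TP_i + FP_i}, \qquad \mathrm{Recall}_i = \frac{TP_i}{TP_i + FN_i}$$

$$\mathrm{F1}_i = \frac{2 \times \mathrm{Precision}_i \times \mathrm{Recall}_i}{\mathrm{Precision}_i + \mathrm{Recall}_i}$$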

The datasets employed in this study exhibit an imbalance in their distribution. In tackling the challenge of uneven class representation in the data, we adopted the F1-score as an evaluation metric to identify the optimal parameter combination for the model, as recommended in [51]. As indicated in [52], this metric measures the model’s accuracy, particularly in imbalanced dataset scenarios.
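The effect of class imbalance on these metrics can be illustrated with a small NumPy sketch. It follows the convention stated in this section (rows are predicted classes, columns are actual classes) and uses a macro-averaged F1-score; the averaging scheme and the example matrix are assumptions made for illustration only.

```python
import numpy as np

def per_class_counts(cm):
    """cm[i, j] = number of samples predicted as class i whose actual class is j."""
    tp = np.diag(cm)
    fp = cm.sum(axis=1) - tp      # predicted as class i but actually another class
    fn = cm.sum(axis=0) - tp      # actually class i but predicted as another class
    tn = cm.sum() - tp - fp - fn
    return tp, fp, fn, tn

def macro_f1(cm):
    tp, fp, fn, _ = per_class_counts(cm)
    precision = tp / np.maximum(tp + fp, 1)
    recall = tp / np.maximum(tp + fn, 1)
    f1 = 2 * precision * recall / np.maximum(precision + recall, 1e-12)
    return float(f1.mean())

# Hypothetical imbalanced example: 100, 15, and 5 actual samples per class.
cm = np.array([[90, 5, 0],
               [8, 10, 1],
               [2, 0, 4]])

accuracy = float(np.trace(cm) / cm.sum())
score = macro_f1(cm)
```

In this example, the overall accuracy is about 0.87, while the macro F1-score is about 0.75, because the two minority classes are recognized less reliably than the majority class. This gap is the reason the F1-score is preferred for imbalanced data.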

4. Experiments and Results

This section delineates the experimental configuration employed to implement the deep learning models utilized in this study.

4.1. Experimental Setup

The experiments in this research were performed on the Google Colab Pro+ platform [53]. A Tesla V100-SXM2-16GB GPU was used to accelerate the training and evaluation of the deep learning models. The baseline deep learning models and the proposed Att-ResBiGRU model were implemented in Python with the TensorFlow backend (version 3.9.1) and CUDA (version 8.0.6). The experiments used the following Python libraries:

- NumPy and Pandas were used to read, manipulate, and analyze the sensor data.

- Matplotlib and Seaborn were used to visualize the results of data exploration and model evaluation.

- Scikit-learn (Sklearn) was used for sampling and data generation.

- TensorFlow, Keras, and TensorBoard were used to build and train the deep learning models.

4.2. Experimental Results of Position-Dependent HAR

We first evaluated the proposed model separately for each on-body sensor position, that is, in the position-dependent setting. Table 2, Table 3 and Table 4 present the accuracies and F1-scores of the proposed Att-ResBiGRU model for the on-body positions in the Opportunity, PAMAP2, and REALWORLD16 datasets, respectively.

Table 2.

Accuracies and F1-scores of the proposed Att-ResBiGRU model for position-dependent HAR across all five positions on the Opportunity dataset.

Table 3.

Accuracies and F1-scores of the proposed Att-ResBiGRU model for position-dependent HAR across all three positions on the PAMAP2 dataset.

Table 4.

Accuracies and F1-scores of the proposed Att-ResBiGRU model for position-dependent HAR across all seven positions on the REALWORLD16 dataset.

The findings presented in Table 2 enable the following significant observations to be drawn:

- The model demonstrated commendable effectiveness when identifying position-dependent human activities on the Opportunity dataset. Accuracy scores spanned from 84.76% to 89.06% across the five sensor positions.

- The F1-score of 85.69% and accuracy of 89.06% for the back position indicate that it produced the most valuable signals for activity recognition on the Opportunity dataset.

- The effectiveness of the left lower arm (LLA) position was the lowest, with an accuracy of 84.76% and an F1-score of 79.84%. This finding implies that the left lower arm motion may not be as indicative of the actions designated in the Opportunity dataset.

- Recognition performance generally declined from the back and right-upper-arm positions to the left-arm positions (left upper arm, left lower arm). This suggests that signals from the back and right arm are more discriminative for many of the activities in this dataset.

- The LLA position exhibited the smallest standard deviation between trials, with approximately 0.7% for accuracy and 1.2% for the F1-score. Other positions showed larger variance, indicating greater inconsistency between trials.

The outcomes illustrate the model’s capacity to attain commendable performance in the HAR domain, with the performance varying according to the sensor positions. Significantly, variations in the efficacy of each sensor position for activity recognition can be observed. The most informative signals were obtained from the back and upper-arm positions in the provided dataset.

The following are the principal findings presented in Table 3:

- The model attained exceptionally high F1-scores and accuracies across all three sensor positions on the PAMAP2 dataset, with the accuracy varying from 97.11% to 97.59%.

- The chest position exhibited superior performance, achieving an accuracy of 97.59% and an F1-score of 97.46%. This finding implies that the signals produced by torso motion were the most valuable for activity recognition in this dataset.

- The effectiveness of the hand (97.12% accuracy) and ankle (97.25% accuracy) positions was comparable, indicating similar information provided by wrist and ankle movements for action recognition on PAMAP2.

- The mean values of accuracy and F1-score standard deviations for each trial were below 0.3% and 0.26%, respectively, indicating that the model’s effectiveness remained consistent across numerous iterations.

- All loss values were below 0.13, indicating that the model accurately represented the training data.

The Att-ResBiGRU model consistently exhibited outstanding HAR performance across the different positions when evaluated on the PAMAP2 dataset. The chest position yielded the most informative signals. The minimal deviations observed across the test trials substantiate the model’s robust generalization.

The key conclusions from the results presented in Table 4 are as follows:

- The model achieved high F1-scores and accuracies ranging from 94.74% to 98.59% across all seven sensor positions on the REALWORLD16 dataset. Notably, the thigh position achieved the highest accuracy of 98.59%, with an F1-score of 98.52%, suggesting that thigh motion provided the most valuable signals for activity recognition in this dataset.

- Conversely, the accuracy and F1-score for the forearm position were the lowest, at 94.74% and 94.75%, respectively, indicating that forearm motion was the least indicative of the activities.

- Upper-body positions, including the chest, upper arm, and waist, consistently performed well, with accuracies above 97%. This finding supports the notion that the movement of the upper body provides valuable information regarding numerous everyday activities.

- Notably, the standard deviations for the accuracies and F1-scores were less than 0.5% across all positions, suggesting minimal variance between trials and indicating the consistent effectiveness of the model.

- Generally, the low loss values indicate that the model effectively aligned with the training data across different positions.

To sum up, the model exhibited robust position-dependent HAR on the REALWORLD16 dataset, with the most distinct signals arising from the motion of the thigh. The low variance in performance emphasizes the model’s reliable generalization.

4.3. Experimental Findings of Position-Independent HAR

The model developed in this study demonstrated strong performance for position-independent HAR across multiple datasets, as shown in Table 5. Specifically, it achieved high accuracies and F1-scores, regardless of the on-body sensor position. The accuracies ranged from 90.27% on the Opportunity dataset to 96.61% on the PAMAP2 dataset. The highest scores were observed on the PAMAP2 dataset, indicating that it provides the most useful aggregated signals for position-independent HAR. In contrast, performance was lowest on the Opportunity dataset, indicating greater variability in informative signals across positions.

Table 5.

Accuracies and F1-scores of the proposed Att-ResBiGRU model for position-independent HAR across the three HAR datasets.

There was only slight variance between trials on all datasets, with accuracy and F1-score standard deviations under 0.3%. This points to consistent model performance across multiple test runs. Although loss values were relatively modest, they were slightly higher than those observed in the position-dependent setting; some decline is expected when aggregating signals across various positions. Overall, the model successfully enabled position-independent HAR by aggregating multi-position signals, especially on PAMAP2. The results demonstrate the viability of machine learning for both position-dependent and position-independent sensor-based HAR.

5. Discussion

This section examines the experimental results presented in Section 4. Specifically, it contrasts the performance of the Att-ResBiGRU model in this work with baseline deep learning approaches. Two contexts are analyzed: position-dependent HAR, where the sensor location is fixed, and position-independent HAR, where the sensor position varies.

5.1. Comparison Results with Baseline Deep Learning Models on Position-Dependent HAR

To evaluate the proposed Att-ResBiGRU model, five baseline deep learning models (CNN, LSTM, bidirectional LSTM (BiLSTM), GRU, and BiGRU) were trained and tested using 5-fold cross-validation on three datasets: Opportunity, PAMAP2, and REALWORLD16. These models identify human activities based on wearable sensor data from various on-body locations specific to each dataset. For Opportunity, these are the back, right upper/lower arm, and left upper/lower arm. PAMAP2 uses the hand, chest, and ankle. REALWORLD16 uses the chest, forearm, head, shin, thigh, upper arm, and waist. Two separate experiments were conducted to evaluate the efficacy of the proposed Att-ResBiGRU model: position-dependent activity recognition, where the sensor location was specified, and position-independent recognition, where the position varied.
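The 5-fold protocol mentioned above can be sketched as follows. This is a generic illustration in NumPy: the shuffling seed, the helper names, the synthetic labels, and the majority-class scorer are hypothetical, and the sketch does not assume whether the original splits were stratified or grouped by subject.

```python
import numpy as np

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; each sample is tested exactly once."""
    order = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(order, k)
    for i in range(k):
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]

def cross_validate(evaluate, n_samples, k=5):
    """Return the mean and standard deviation of a per-fold score."""
    scores = [evaluate(train, test) for train, test in k_fold_indices(n_samples, k)]
    return float(np.mean(scores)), float(np.std(scores))

# Synthetic labels and a trivial majority-class scorer, used only to exercise the loop.
labels = np.random.default_rng(1).integers(0, 3, size=103)

def majority_baseline(train_idx, test_idx):
    majority = np.bincount(labels[train_idx]).argmax()
    return float(np.mean(labels[test_idx] == majority))

mean_score, std_score = cross_validate(majority_baseline, len(labels))
```

In practice, `evaluate` would train a model on the training indices and return its accuracy or F1-score on the held-out fold; reporting the mean together with the standard deviation across folds shows how stable the model is between trials.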

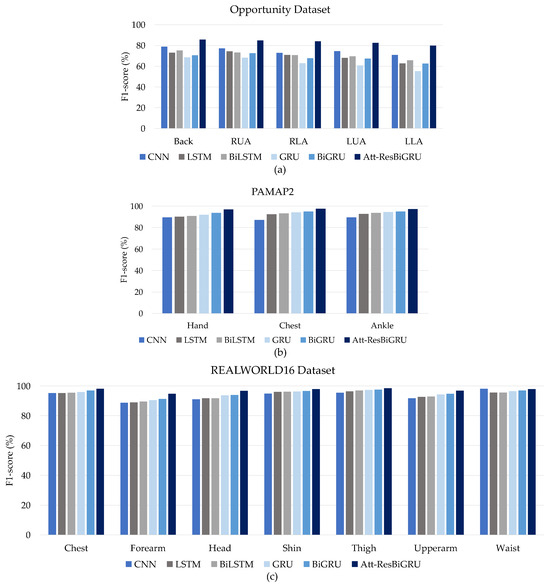

Compared to the CNN, LSTM, BiLSTM, GRU, and BiGRU models, the proposed Att-ResBiGRU model achieved superior F1-scores across all sensor locations and datasets, as shown in Figure 5. The most significant performance gains were observed on the Opportunity dataset, with F1-scores over 5% higher than those of the next-best model. On the PAMAP2 and REALWORLD16 datasets, Att-ResBiGRU showed modest but meaningful improvements of around 2% in the F1-score over alternative models. The CNN underperformed overall, highlighting the limitations of solely using convolutional layers for sequential sensor data classification. The close scores between BiGRU and Att-ResBiGRU demonstrate the advantages of bidirectional architectures over standard LSTM and GRU for human activity recognition. However, the additional residual connections and attention mechanisms in Att-ResBiGRU led to a significant performance boost compared to BiGRU, especially on the Opportunity dataset.

Figure 5.

Comparison results of five deep learning models and the proposed Att-ResBiGRU model in position-dependent HAR: (a) Opportunity, (b) PAMAP2, and (c) REALWORLD16 datasets.

The results demonstrate significant improvements in performance using the Att-ResBiGRU model compared to standard deep learning models for position-dependent HAR on various datasets. Incorporating residual connections and attention components substantially enhances the modeling of sequential sensor information.

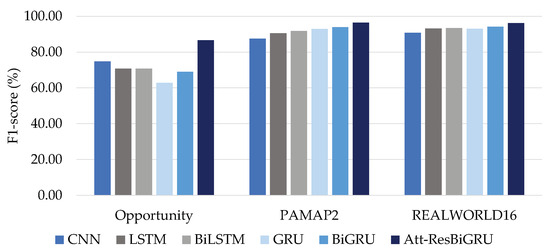

5.2. Comparison Results with Baseline Deep Learning Models on Position-Independent HAR

The preceding section concentrated on position-dependent HAR, in which the proposed Att-ResBiGRU was evaluated against five baseline deep neural networks (CNN, LSTM, BiLSTM, GRU, and BiGRU) and demonstrated superior performance. In this part, we assess the position-independent proficiency of Att-ResBiGRU by comparing it with the same recurrent and convolutional baseline architectures. The evaluation used the Opportunity, PAMAP2, and REALWORLD16 datasets, employing consistent experimental protocols.

The position-independent comparison presented in Figure 6 across the Opportunity, PAMAP2, and REALWORLD16 benchmark datasets revealed several noteworthy observations. First, our proposed Att-ResBiGRU framework consistently attained the highest average F1-scores, reaching 86.69%, 96.44%, and 96.23% on the three datasets, respectively. This underscores its advanced generalization capabilities.

Figure 6.

Comparison results of five baseline deep learning models and the proposed Att-ResBiGRU model in position-independent HAR.

Specifically, when confronted with the intricate activities in the Opportunity dataset, the performance of the proposed model surpassed that of RNN variants such as LSTM and GRU by over 15% while also outperforming the CNN baseline by nearly 10% in terms of the F1-score. The incorporation of residual bidirectionality and attention mechanisms demonstrably enhances disambiguation fidelity.

These advantages become even more evident when assessing the daily activity datasets of PAMAP2 and REALWORLD16. On PAMAP2, we surpassed the performance of BiGRU by 2.56%, and on REALWORLD16, we surpassed alternative fusion approaches by more than 1.95% in terms of the F1-score, substantiating our model’s efficacy in recognizing sequential daily living motions.

5.3. Comparison Results with State-of-the-Art Models

To underscore the importance and dependability of the proposed Att-ResBiGRU in both position-dependent and position-independent HAR, this research conducted a comparison of performance using the same benchmark HAR datasets employed in this study, namely the PAMAP2 and REALWORLD16 datasets, against state-of-the-art studies.

When scrutinizing the outcomes of position-dependent HAR on the PAMAP2 dataset, as presented in Table 6, several noteworthy observations can be made regarding the performance of our Att-ResBiGRU model compared to earlier state-of-the-art approaches. Most notably, our model achieved superior F1-scores across all three positions of wearable devices—wrist, chest, and ankle. Specifically, we obtained a 96.95% F1-score for the hand, 97.46% F1-score for the chest, and 97.17% F1-score for the ankle.

Table 6.

Comparison results with state of the art in position-dependent HAR using sensor data from the PAMAP2 dataset.

This signifies a noteworthy improvement of over 3% in absolute terms compared to the best-performing random forest (RF), support vector machine (SVM), and decision tree (DT) [22] models for the hand position. Similarly, enhancements of nearly 1% were observed compared to previous works [22] utilizing chest and ankle sensors.

Examining the outcomes of HAR based on sensor positions in the REALWORLD16 dataset, as displayed in Table 7, revealed consistent enhancements in performance through the application of our proposed Att-ResBiGRU methodology when compared to prior state-of-the-art approaches [54,55] across all seven sensor locations.

Table 7.

Comparison results with state of the art in position-dependent HAR using sensor data from the REALWORLD16 dataset.

Notably, we attained F1-scores exceeding 96% on the chest, upper-arm, and waist positions, surpassing 94% on the wrist/forearm, and exceeding 97% on the head and shin positions using the proposed Att-ResBiGRU model. This signifies substantial improvements in F1-scores ranging from 3% to 13% in absolute terms when compared to the previously top-performing two-stream CNN [54] and random forest (RF) model [55].

In position-independent HAR, our examination involved assessing the effectiveness of Att-ResBiGRU by comparing it with currently established leading techniques outlined in [54,55,56]. We opted for this comparison because the authors provided evaluations on the identical PAMAP2 and REALWORLD16 datasets. The comparative analysis is presented in Table 8, showcasing the superior results achieved by Att-ResBiGRU compared to previous studies’ findings.

Table 8.

Comparison results with state of the art in position-independent HAR using sensor data from the PAMAP2 and REALWORLD16 datasets.

Examining the outcomes presented in Table 8, which compares the performance of our proposed Att-ResBiGRU model with existing cutting-edge techniques in position-independent human activity recognition on the PAMAP2 and REALWORLD16 benchmark datasets, revealed highly promising advancements in performance.

On the PAMAP2 dataset, our methodology achieved an F1-score of 96.44%, surpassing all baseline methods such as RF, SVM, k-nearest neighbors (KNN), Bayes Net (NB), and DT, which exhibited F1-scores ranging from 78% to 91%. Similarly, on the REALWORLD16 dataset, we attained a 96.23% F1-score, outperforming previous works utilizing CNN, LSTM, and multi-layer perceptron (MLP) architectures that reported F1-scores between 88% and 90%. Notably, a multi-stream CNN architecture achieved an F1-score of 90%, a mark we surpassed through our comprehensive management of positional independence.

5.4. Effects of Complex Activities on Position-Independent HAR

The effectiveness of position-independent HAR systems may degrade when dealing with intricate and dynamic real-world activities. To assess the capacity of our Att-ResBiGRU model to handle more complex real-world activities, we conducted a multi-label evaluation encompassing locomotive movements and activities of daily living on the Opportunity, PAMAP2, and REALWORLD16 datasets.

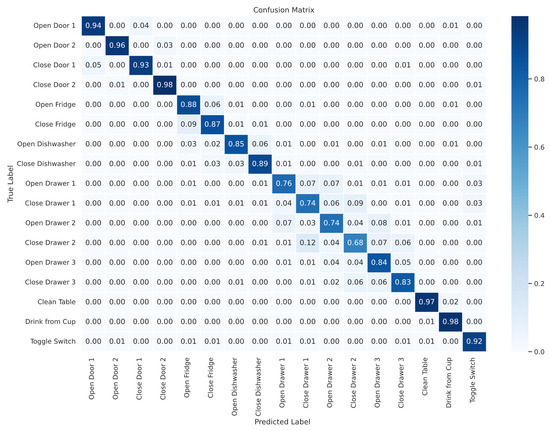

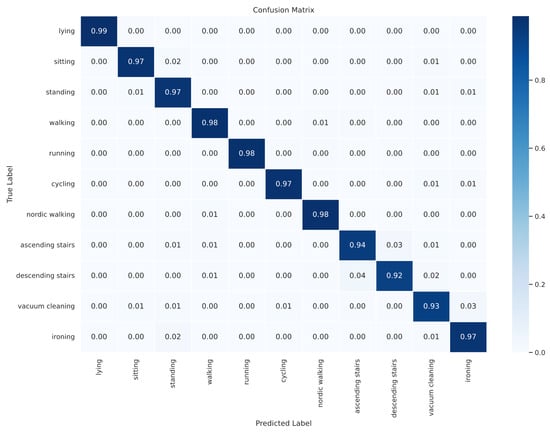

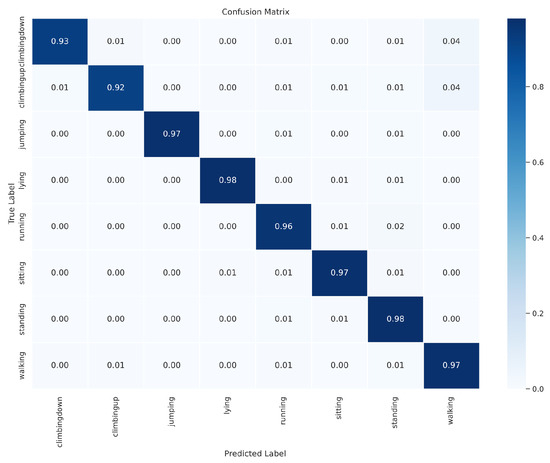

Upon scrutinizing the confusion matrices for the Opportunity and PAMAP2 datasets, we observed robust performance in accurately classifying a significant portion of complex ambulation, gesture, and daily living activities using our Att-ResBiGRU model. The predictions were concentrated along the main diagonals, with comparatively few off-diagonal misclassifications. Overall accuracy levels exceeded 90% for Opportunity and 96% for PAMAP2 across diverse activity sets, as illustrated in Figure 7 and Figure 8, respectively. The confusion matrix for the REALWORLD16 dataset is shown in Figure 9.

Figure 7.

Confusion matrix for the proposed Att-ResBiGRU model from the Opportunity dataset.

Figure 8.

Confusion matrix for the proposed Att-ResBiGRU model from the PAMAP2 dataset.

Figure 9.

Confusion matrix for the proposed Att-ResBiGRU model from the REALWORLD16 dataset.

Nevertheless, certain instances posed challenges in accurately distinguishing between subtle sequential pairs of activities, particularly those involving intricate limb or joint movements and swift transitions. To illustrate, about 4% of instances representing the “Open door 1” activity in the Opportunity dataset were incorrectly classified as the similar “Close door 1” activity, introducing difficulties in discerning the completion of complex interactions.

Moreover, in the case of PAMAP2, there was a 12.3% rate of confusion between “Ascending stairs” and “Descending stairs.” Predictably, activities with more consistent signals, such as Cycling, were easier to discern compared to these transient multi-stage sequences. This underscores the potential to incorporate additional positional constraints and environmental triggers to improve the detection of activity commencement and conclusion.

5.5. Ablation Studies

In the realm of neural networks, researchers often use ablation studies to understand how different parts of a model contribute to its overall performance [57]. This technique involves systematically removing or modifying components and observing the resulting impact. Following this approach, we conducted three ablation studies on our proposed Att-ResBiGRU model. In each case, we altered specific blocks or layers to assess their influence on the model’s accuracy [58,59]. By analyzing the results of these studies, we identified the configuration that yielded the best recognition performance, thus optimizing our model’s effectiveness.

In analyzing model variations, the F1-score serves as a crucial metric. This safeguards against inflated evaluation scores from merely selecting the predominant class within imbalanced datasets. Instead, it furnishes precise insights into the model’s proficiency in handling diverse class activities [12].

5.5.1. Impact of Convolution Blocks

To understand how adding convolution blocks affected our model’s performance, we conducted ablation experiments on the three datasets (Opportunity, PAMAP2, and REALWORLD16) using wearable sensor data.

We compared our proposed model with and without convolution blocks. The model without them served as the baseline. As shown in Table 9, the baseline model performed the worst, possibly due to difficulties capturing spatial relationships between data points. Conversely, the model with convolution blocks achieved significantly better results. The F1-score improved across all three datasets: Opportunity (from 86.56% to 86.69%), PAMAP2 (from 95.13% to 96.44%), and REALWORLD16 (from 95.80% to 96.23%). These findings demonstrate the positive impact of incorporating convolution blocks in our model for activity recognition tasks.

Table 9.

Impact of convolution blocks.

5.5.2. Impact of the ResBiGRU Block

We conducted another ablation experiment to assess the residual BiGRU block’s ability to capture temporal features. We compared our full model with a modified version that lacked the residual BiGRU block. This modified version served as the baseline.

Table 10 summarizes the results. The model with the residual BiGRU block achieved significantly better performance, demonstrating the effectiveness of this block in processing temporal information. The F1-score improvements were substantial across all datasets: Opportunity (1.66%), PAMAP2 (1.55%), and REALWORLD16 (2.07%). These findings highlight the importance of the residual BiGRU block in enhancing the model’s ability to recognize activities from time-series data.

Table 10.

Impact of the ResBiGRU block.

5.5.3. Effect of the Attention Block

Our proposed Att-ResBiGRU model incorporates an attention mechanism that directs focus toward crucial aspects of the learned spatial-temporal features before classification. This mechanism is vital in achieving position invariance by selectively reweighting signals and suppressing noise.

We conducted an ablation experiment to verify the attention block’s significance. We created a modified version of the model that lacked the attention block while keeping the convolutional and residual BiGRU modules intact. This modified model served as the baseline for comparison.

We evaluated both models on all three benchmark datasets. As shown in Table 11, the Att-ResBiGRU model with the attention block achieved superior performance across all datasets. The F1-score improvements were 0.38% for Opportunity, 1.37% for PAMAP2, and 1.00% for REALWORLD16. These results demonstrate the effectiveness of the attention mechanism in enhancing the model’s recognition accuracy.

Table 11.

Impact of the attention block.

Our Att-ResBiGRU model efficiently extracts informative features from wearable sensor data by combining convolutional blocks, a residual BiGRU block, and an attention mechanism. This combination effectively captures spatial relationships, temporal dependencies, and crucial aspects of the data, leading to superior performance in activity recognition tasks.

5.6. Complexity Analysis

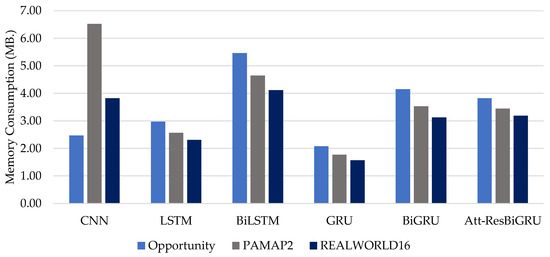

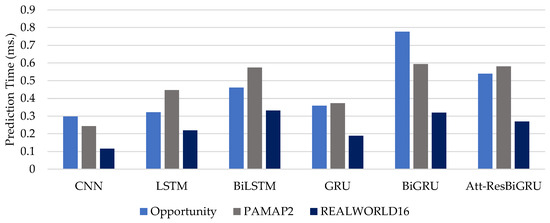

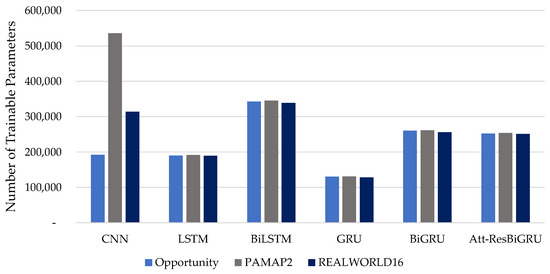

To assess the resource requirements of our proposed model, we conducted a complexity analysis inspired by the approach presented in [60] for HAR. This analysis evaluated the memory usage, average prediction time, and number of parameters that can be adjusted during training. All models, including the baseline versions, were tested on the same benchmark datasets (Opportunity, PAMAP2, and REALWORLD16).

5.6.1. Memory Consumption

We compared the memory usage of our Att-ResBiGRU model with several baseline architectures (CNN, LSTM, BiLSTM, GRU, and BiGRU) during prediction on the test datasets. We employed the compute unified device architecture (CUDA) profiling tools to measure memory consumption. Figure 10 shows the results. Our model required an average of 3 MB of RAM, which was used to store the encoder features, temporal hidden states, classifier weights, and outputs. This was lower than the baseline CNN, which consumed 4.27 MB, on average, for shorter sequences. However, the standalone BiGRU model did not capture spatial information through convolutions and used slightly less memory (3.60 MB, on average). Overall, Att-ResBiGRU offered a good balance between accuracy and memory efficiency.

Figure 10.

Memory consumption in megabytes of deep learning models used in this work.

5.6.2. Prediction Time

To delve deeper into the models’ resource usage, we compared their prediction speeds. We measured the average time each model took to process a set of samples from the test data. This average time represents the mean prediction time.

Figure 11 illustrates the average time in milliseconds each model took to process a single data window across the three datasets (Opportunity, PAMAP2, and REALWORLD16). The BiGRU models were the slowest, requiring 0.32–0.78 ms per window for all datasets. This is likely due to their sequential processing nature, where each step relies on the previous output. In contrast, convolutional layers, like those used in Att-ResBiGRU, can operate in parallel, leading to faster processing. Consequently, Att-ResBiGRU achieved faster prediction times, averaging 0.54 ms, 0.58 ms, and 0.27 ms for Opportunity, PAMAP2, and REALWORLD16, respectively.

Figure 11.

Mean prediction time in milliseconds of deep learning models used in this work.
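A mean per-window prediction time of this kind can be measured with a simple timing harness, sketched below. The `dummy_predict` function is a hypothetical stand-in for a trained model, and the window shape, number of windows, and number of repeats are illustrative assumptions; absolute timings depend on the hardware and are not comparable to the values reported here.

```python
import time
import numpy as np

def mean_prediction_time_ms(predict, windows, repeats=10):
    """Average wall-clock time per window, in milliseconds."""
    predict(windows[:1])                 # warm-up call, excluded from the timing
    start = time.perf_counter()
    for _ in range(repeats):
        predict(windows)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / (repeats * len(windows))

rng = np.random.default_rng(0)
weights = rng.normal(size=(128 * 6, 12))  # stand-in classifier with 12 classes (assumed)

def dummy_predict(batch):
    flat = batch.reshape(len(batch), -1)
    return np.argmax(flat @ weights, axis=1)

windows = rng.normal(size=(256, 128, 6))  # 256 windows of 128 steps x 6 channels (assumed)
ms_per_window = mean_prediction_time_ms(dummy_predict, windows)
```

Excluding a warm-up call and averaging over several repeats reduces the influence of one-off costs such as memory allocation on the reported figure.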

5.6.3. Trainable Parameters

After examining memory usage and prediction speed, we now turn to the third metric of model complexity: the number of trainable parameters. In deep learning models, each adjustable weight during training counts as a trainable parameter. Generally, a higher number of parameters allows a model to capture more intricate data patterns but also increases the risk of overfitting on limited training data.

Figure 12 displays the number of trainable parameters for the various deep learning models used in this study (CNN, LSTM, BiLSTM, GRU, BiGRU, and our proposed Att-ResBiGRU). These values were obtained from model summaries across the benchmark HAR datasets (Opportunity, PAMAP2, and REALWORLD16). As expected, based on their complexity, the baseline models showed varying parameter counts. The GRU emerged as the simplest model, with around 130,000 parameters for each dataset. Conversely, the CNN boasted the highest parameter count, signifying its greater complexity. Our proposed Att-ResBiGRU fell between these extremes, exhibiting a moderate parameter count of approximately 250,000 across all datasets. Att-ResBiGRU maintained a lower parameter count compared to CNN, BiLSTM, and BiGRU.

Figure 12.

Number of trainable parameters of deep learning models used in this work.
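
To make the notion of a trainable parameter concrete, the sketch below computes parameter counts for common layer types using the standard textbook formulas. It is an illustration only and does not reproduce the counts in Figure 12: the function names are hypothetical, the input channel count in the usage example is an assumed placeholder, and the GRU formula with `reset_after=True` assumes the Keras-style variant that keeps two bias vectors per gate.

```python
def conv1d_params(kernel_size, in_channels, filters):
    """Weights of every filter plus one bias per filter."""
    return kernel_size * in_channels * filters + filters


def dense_params(in_features, out_features):
    """Full weight matrix plus one bias per output unit."""
    return in_features * out_features + out_features


def lstm_params(input_dim, units):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    return 4 * (units * (input_dim + units) + units)


def gru_params(input_dim, units, reset_after=True):
    """Three gates; reset_after=True assumes two bias vectors per gate."""
    biases = 2 * units if reset_after else units
    return 3 * (units * (input_dim + units) + biases)


def bidirectional(params_one_direction):
    """A bidirectional wrapper holds one independent copy per direction."""
    return 2 * params_one_direction


if __name__ == "__main__":
    n_channels = 9  # assumed placeholder, not taken from the datasets
    print("GRU(128):  ", gru_params(n_channels, 128))
    print("BiGRU(128):", bidirectional(gru_params(n_channels, 128)))
    print("LSTM(128): ", lstm_params(n_channels, 128))
```

The formulas show why a bidirectional layer roughly doubles the count of its unidirectional counterpart, and why an LSTM with four gates carries more parameters than a GRU with three at the same number of units.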

5.7. Interpretation of the Proposed Model

Deep learning models are often regarded as black boxes, so explanation techniques such as LIME are needed to understand their decision-making processes. LIME helps analyze how a model arrives at specific predictions and how different features contribute to those outcomes [61]. Building trust in deep learning models for HAR requires examining how they classify individual data points across the activity categories. Such analysis can also reduce the number of features considered, potentially leading to faster training and improved accuracy by focusing on the most influential ones. To establish clinical trust in deep learning approaches for activity prediction, it is crucial to understand the role of the motion features captured by sensors such as accelerometers, gyroscopes, and magnetometers. Explainable AI (XAI) is valuable for exploring how these features contribute to the model’s classification decisions.

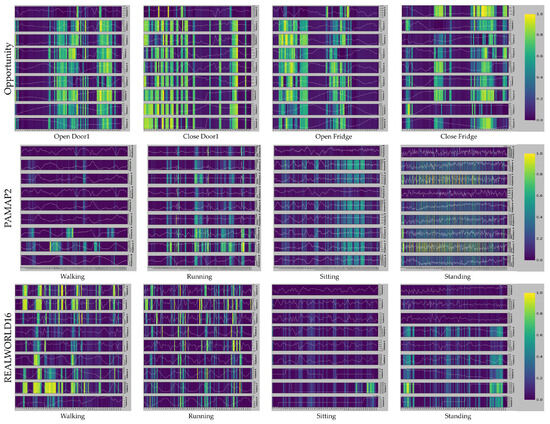

To make our model more interpretable and transparent, we applied Gradient-weighted Class Activation Mapping (Grad-CAM) [62]. This technique visualizes and pinpoints the regions of the sensor data that most strongly influence the model’s predictions.

For time-series data, Grad-CAM identified the most crucial moments within the sequence that influenced the network’s classification decision. Figure 13 showcases an example sequence, with a colormap highlighting these critical regions [63]. In this study, we extracted and normalized the activation features generated by Grad-CAM to a range between 0 and 1. Figure 13 displays the Grad-CAM activations for randomly chosen segments on the Opportunity, PAMAP2, and REALWORLD16 datasets using our proposed Att-ResBiGRU models. Lighter colors indicate higher activation values, signifying a more significant impact on the prediction. The results for each dataset (Opportunity, PAMAP2, and REALWORLD16) are presented in rows 1, 2, and 3, respectively.

Figure 13.

Visualization of the Grad-CAM method.
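
The computation behind such a map can be sketched as follows. This is a minimal, framework-free illustration of the generic Grad-CAM recipe applied to a 1D feature map, including the normalization to the range 0 to 1 described above; it is not the implementation used in this work. The function name `grad_cam_1d` and the toy inputs are hypothetical, and in practice the activations and gradients would be taken from a convolutional layer of the trained network for the predicted class.

```python
def grad_cam_1d(activations, gradients):
    """Grad-CAM importance per time step, scaled to [0, 1].

    activations, gradients: lists of T time steps, each a list of K channel
    values (feature maps and class-score gradients of the same layer).
    """
    n_steps = len(activations)
    n_channels = len(activations[0])
    # Channel weights: gradients averaged over time (global average pooling).
    weights = [
        sum(gradients[t][k] for t in range(n_steps)) / n_steps
        for k in range(n_channels)
    ]
    # Weighted sum of the feature maps at each time step, followed by ReLU.
    cam = [
        max(0.0, sum(weights[k] * activations[t][k] for k in range(n_channels)))
        for t in range(n_steps)
    ]
    # Min-max normalization; a constant map carries no ranking information.
    lo, hi = min(cam), max(cam)
    if hi == lo:
        return [0.0] * n_steps
    return [(value - lo) / (hi - lo) for value in cam]


if __name__ == "__main__":
    toy_activations = [[1.0, 0.0], [2.0, 1.0], [0.0, 3.0]]
    toy_gradients = [[1.0, -1.0], [1.0, -1.0], [1.0, -1.0]]
    print(grad_cam_1d(toy_activations, toy_gradients))
```

The ReLU step keeps only the time steps that push the score of the target class upward, which is why the resulting map highlights supporting evidence rather than all salient regions.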

6. Conclusions, Limitations, and Future Work

This study investigates the potential of deep learning models for recognizing human activities regardless of where the wearable device is positioned on the body. We propose a novel deep residual network, Att-ResBiGRU, to achieve high accuracy in activity identification. This network incorporates three key components:

- Convolutional blocks: These blocks extract spatial features from the sensor data. Each block uses a 1D convolutional layer to capture patterns, followed by batch normalization for stability and pooling to reduce data size while preserving key information.

- ResBiGRU block: This block captures temporal features by considering both past and future data points in the sequence. This bidirectional approach effectively models the dependencies and dynamics within the data.

- Attention mechanism: This mechanism assigns greater importance to informative features, enhancing the model’s ability to distinguish between similar activities and improving recognition accuracy.
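
The idea of assigning greater importance to informative features can be illustrated with a generic attention pooling step over a sequence of hidden states. This is a sketch under stated assumptions rather than the exact attention formulation of Att-ResBiGRU: the function `attention_pool`, the dot-product scoring with a single weight vector, and the toy values are all hypothetical choices made for illustration.

```python
import math


def attention_pool(hidden_states, score_weights):
    """Return (attention weights, context vector) for a sequence.

    hidden_states: list of T vectors (e.g., recurrent outputs per time step).
    score_weights: vector used to score each hidden state by dot product.
    """
    scores = [
        sum(h * w for h, w in zip(state, score_weights))
        for state in hidden_states
    ]
    # Numerically stable softmax turns scores into weights that sum to 1.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    # Context vector: attention-weighted average of the hidden states.
    dim = len(hidden_states[0])
    context = [
        sum(a * state[d] for a, state in zip(alphas, hidden_states))
        for d in range(dim)
    ]
    return alphas, context


if __name__ == "__main__":
    states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # toy hidden states
    alphas, context = attention_pool(states, [2.0, 0.0])
    print(alphas, context)
```

Time steps whose hidden state aligns with the scoring vector receive larger weights, so they dominate the context vector passed to the classifier.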

We evaluated Att-ResBiGRU on three public datasets, achieving overall F1-scores of 86.69%, 96.44%, and 96.23% for position-independent HAR on Opportunity, PAMAP2, and REALWORLD16, respectively. Ablation experiments confirmed the effectiveness of each component, demonstrating the model’s superior ability to differentiate between similar actions.

Furthermore, our proposed model outperformed existing state-of-the-art techniques in position-independent HAR. Notably, Att-ResBiGRU surpassed the current leading method, establishing itself as a robust single classifier for this task. We also examined the model’s practical utility in position- and subject-independent settings, a promising area that has yet to see much research.

6.1. Limitations

Although our experiments showcased promising results for position-independent activity recognition using affordable wearable sensors, there are challenges to consider when deploying this deep learning model in real-world settings:

- Computational limitations: The Att-ResBiGRU model requires up to 4 MB of memory, which exceeds the capabilities of low-power wearable devices such as smartwatches. Model compression and quantization techniques are therefore necessary for deployment on resource-constrained devices.

- Sensor drift: Sensor performance can degrade over time, leading to changes in signal distribution and potentially impacting the model’s accuracy. Implementing mechanisms for recalibration or adaptive input normalization would enhance robustness.

- Battery life: Continuous sensor data collection for long-term activity tracking rapidly depletes battery life. Optimizing duty-cycling strategies is crucial to enable more extended monitoring periods.

- Privacy concerns: Transmitting raw, multi-dimensional sensor data raises privacy concerns, as it could reveal sensitive information about daily activities or underlying health conditions. Federated learning and selective feature-sharing approaches could mitigate these concerns and encourage user adoption.
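
As an illustration of the quantization mentioned under computational limitations, the sketch below applies symmetric 8-bit quantization to a list of weights. It is a simplified example and not a deployment recipe evaluated in this work: the helper names and toy weights are hypothetical, and the sketch assumes the original weights are stored as 32-bit floats, in which case storing one byte per weight would reduce weight storage by roughly a factor of four (ignoring the stored scale and any activation memory).

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    toy_weights = [0.30, -0.70, 0.05, 0.90]  # placeholder values
    codes, scale = quantize_int8(toy_weights)
    restored = dequantize(codes, scale)
    worst = max(abs(a - b) for a, b in zip(toy_weights, restored))
    print(codes, f"max abs error = {worst:.5f}")
```

The rounding error per weight is bounded by half the scale, so the accuracy impact depends on how widely the weights are spread; whether that loss is acceptable for Att-ResBiGRU would need to be verified experimentally.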

6.2. Future Work

Although our research lays the groundwork, further efforts are needed to achieve higher accuracy in position-independent HAR. Here are some promising avenues for future exploration:

- Expanding data sources: Although this work focused on inertial sensors from wearable devices, future studies could incorporate data from environmental sources, such as pressure and humidity sensors, and even CCTV frames. This multi-modal approach could enhance the model’s ability to recognize activities by leveraging contextual information beyond the user’s body.

- Cross-domain generalizability: By incorporating data from diverse sources, we can assess the model’s ability to adapt and perform well in different environments. This could lead to more robust and generalizable solutions.

- User-centered design: Future studies should involve qualitative user experience studies to ensure user acceptance and comfort. Gathering feedback from relevant patient populations and clinical experts will be crucial in informing the design of unobtrusive and user-friendly systems.

These future directions hold the potential to significantly improve the accuracy, usability, and real-world applicability of position-independent HAR systems.

Author Contributions

Conceptualization, S.M. and A.J.; methodology, S.M.; software, A.J.; validation, A.J.; formal analysis, S.M.; investigation, S.M.; resources, A.J.; data curation, A.J.; writing—original draft preparation, S.M.; writing—review and editing, A.J.; visualization, S.M.; supervision, A.J.; project administration, A.J.; funding acquisition, S.M. and A.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Thailand Science Research and Innovation Fund; the University of Phayao (Grant No. FF67-UoE-Sakorn); and King Mongkut’s University of Technology North Bangkok under Contract No. KMUTNB-67-KNOW-12.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data are presented in the main text.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

A summary of the hyperparameters for the CNN network used in this work.

| Stage | Hyperparameters | Values |
|---|---|---|
| Architecture | 1D Convolution: Kernel Size | 5 |
| | 1D Convolution: Stride | 1 |
| | 1D Convolution: Filters | 64 |
| | Dropout | 0.25 |
| | Max Pooling | 2 |
| | Flatten | - |
| Training | Loss Function | Cross-entropy |
| | Optimizer | Adam |
| | Batch Size | 64 |
| | Number of Epochs | 200 |

Table A2.

A summary of the hyperparameters for the LSTM network used in this work.

| Stage | Hyperparameters | Values |
|---|---|---|
| Architecture | LSTM Unit | 128 |
| | Dropout | 0.25 |
| | Dense | 128 |
| Training | Loss Function | Cross-entropy |
| | Optimizer | Adam |
| | Batch Size | 64 |
| | Number of Epochs | 200 |

Table A3.

A summary of the hyperparameters for the BiLSTM network used in this work.

| Stage | Hyperparameters | Values |
|---|---|---|
| Architecture | BiLSTM Unit | 128 |
| | Dropout | 0.25 |
| | Dense | 128 |
| Training | Loss Function | Cross-entropy |
| | Optimizer | Adam |
| | Batch Size | 64 |
| | Number of Epochs | 200 |

Table A4.

A summary of the hyperparameters for the GRU network used in this work.

| Stage | Hyperparameters | Values |
|---|---|---|
| Architecture | GRU Unit | 128 |
| | Dropout | 0.25 |
| | Dense | 128 |
| Training | Loss Function | Cross-entropy |
| | Optimizer | Adam |
| | Batch Size | 64 |
| | Number of Epochs | 200 |

Table A5.

A summary of the hyperparameters for the BiGRU network used in this work.

| Stage | Hyperparameters | Values |
|---|---|---|
| Architecture | BiGRU Unit | 128 |
| | Dropout | 0.25 |
| | Dense | 128 |
| Training | Loss Function | Cross-entropy |
| | Optimizer | Adam |
| | Batch Size | 64 |
| | Number of Epochs | 200 |

Table A6.

A summary of the hyperparameters for the Att-ResBiGRU network used in this work.

| Stage | Hyperparameters | Values |
|---|---|---|
| Architecture | (Convolution Block) | |
| | 1D Convolution: Kernel Size | 5 |
| | 1D Convolution: Stride | 1 |
| | 1D Convolution: Filters | 256 |
| | Batch Normalization | - |
| | Activation | Smish |
| | Max Pooling | 2 |
| | Dropout | 0.25 |
| | 1D Convolution: Kernel Size | 5 |
| | 1D Convolution: Stride | 1 |
| | 1D Convolution: Filters | 128 |
| | Batch Normalization | - |
| | Activation | Smish |
| | Max Pooling | 2 |
| | Dropout | 0.25 |
| | 1D Convolution: Kernel Size | 5 |
| | 1D Convolution: Stride | 1 |
| | 1D Convolution: Filters | 64 |
| | Batch Normalization | - |
| | Activation | Smish |
| | Max Pooling | 2 |
| | Dropout | 0.25 |
| | 1D Convolution: Kernel Size | 5 |
| | 1D Convolution: Stride | 1 |
| | 1D Convolution: Filters | 32 |
| | Batch Normalization | - |
| | Activation | Smish |
| | Max Pooling | 2 |
| | Dropout | 0.25 |
| | (Residual BiGRU Block) | |
| | ResBiGRU_1: Neural | 128 |
| | ResBiGRU_2: Neural | 64 |
| | (Attention Block) | |
| | Dropout | 0.25 |
| | Dense | 128 |
| | Activation | SoftMax |
| Training | Loss Function | Cross-entropy |
| | Optimizer | Adam |
| | Batch Size | 64 |
| | Number of Epochs | 200 |

References

- Mekruksavanich, S.; Jitpattanakul, A. LSTM Networks Using Smartphone Data for Sensor-Based Human Activity Recognition in Smart Homes. Sensors 2021, 21, 1636.

- Diraco, G.; Rescio, G.; Caroppo, A.; Manni, A.; Leone, A. Human Action Recognition in Smart Living Services and Applications: Context Awareness, Data Availability, Personalization, and Privacy. Sensors 2023, 23, 6040.

- Maity, S.; Abdel-Mottaleb, M.; Asfour, S.S. Multimodal Low Resolution Face and Frontal Gait Recognition from Surveillance Video. Electronics 2021, 10, 1013.

- Khalid, A.M.; Khafaga, D.S.; Aldakheel, E.A.; Hosny, K.M. Human Activity Recognition Using Hybrid Coronavirus Disease Optimization Algorithm for Internet of Medical Things. Sensors 2023, 23, 5862.

- Stisen, A.; Blunck, H.; Bhattacharya, S.; Prentow, T.S.; Kjærgaard, M.B.; Dey, A.; Sonne, T.; Jensen, M.M. Smart Devices Are Different: Assessing and Mitigating Mobile Sensing Heterogeneities for Activity Recognition. In Proceedings of the 13th ACM Conference on Embedded Networked Sensor Systems (SenSys’15), Seoul, Republic of Korea, 1–4 November 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 127–140.

- Nweke, H.F.; Teh, Y.W.; Al-garadi, M.A.; Alo, U.R. Deep learning algorithms for human activity recognition using mobile and wearable sensor networks: State of the art and research challenges. Expert Syst. Appl. 2018, 105, 233–261.

- Ha, S.; Yun, J.M.; Choi, S. Multi-modal Convolutional Neural Networks for Activity Recognition. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015; pp. 3017–3022.

- Zebin, T.; Scully, P.J.; Ozanyan, K.B. Human activity recognition with inertial sensors using a deep learning approach. In Proceedings of the 2016 IEEE SENSORS, Orlando, FL, USA, 30 October–3 November 2016; pp. 1–3.

- Chen, K.; Zhang, D.; Yao, L.; Guo, B.; Yu, Z.; Liu, Y. Deep Learning for Sensor-Based Human Activity Recognition: Overview, Challenges, and Opportunities. ACM Comput. Surv. 2021, 54, 77.

- Fedorov, I.; Adams, R.P.; Mattina, M.; Whatmough, P.N. SpArSe: Sparse Architecture Search for CNNs on Resource-Constrained Microcontrollers. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Curran Associates Inc.: Red Hook, NY, USA, 2019.

- Zhang, Y.; Suda, N.; Lai, L.; Chandra, V. Hello Edge: Keyword Spotting on Microcontrollers. arXiv 2018, arXiv:1711.07128. Available online: http://xxx.lanl.gov/abs/1711.07128 (accessed on 5 January 2024).