Abstract

Existing algorithms for enhancing low-light images predominantly focus on the low-light region, which leads to over-enhancement of the glare region, and their high complexity makes them difficult to deploy on embedded devices. In this paper, a GS-AGC algorithm based on regional luminance perception is proposed. The indirect perception of the human eye’s luminance vision is taken into account: all pixels of similar luminance that fall within a luminance region are extracted, and adaptive adjustment is performed for the different luminance regions of low-light images. The proposed method was evaluated experimentally on real images, and objective evidence shows that its processing effect surpasses that of comparable methods. Furthermore, the potential practical value of GS-AGC is highlighted through its effective application in road pedestrian detection and face detection. The algorithm not only effectively suppresses glare but also enhances overall image quality. It can be easily combined with embedded FPGA hardware for acceleration to improve real-time image processing.

1. Introduction

In a low-light environment, light intensity is not sufficient. Additionally, the presence of vehicle high beams, street lights, illuminators, and other light sources can create glare, causing an image to appear stratified due to differences in luminance [1]. This results in some regions of the image being over-illuminated while the surrounding regions have very low brightness. As a result, the visibility of details and local contrast in these regions is affected. In real-life scenarios, the glare produced by high-intensity headlights of oncoming vehicles during night-time encounters [2] can easily cause discomfort to the driver and interfere with their judgment. Therefore, it is crucial to enhance image quality and utilize embedded device hardware to effectively suppress glare in real time, ensuring the algorithm’s practicality in real-world scenarios.

Various image enhancement algorithms have been proposed by researchers worldwide to improve image visibility in low-light environments. Each algorithm has its own implementation and varying effects. Notably, Mandal G. et al. [3] employed frame region segmentation combined with localized enhancement techniques, effectively highlighting enhancement information. However, while their approach achieves good overall visual enhancement and glare suppression, it involves more complex image processing. In the field of night-time low-light image defogging, several methods have been proposed to suppress the glow caused by haze or fog particles. Among them, W. Wang et al. [4] utilized luminescent color correction to address the issue of glow elimination during foggy nights. Y. Liu et al. [5,6] proposed a unified variational retina model for haze and glow removal. L. Tan et al. [7] eliminated haze and glow through the use of multi-scale saliency detection algorithms, as well as the fusion of ambient light and transmittance. Yan and colleagues [8] developed a semi-supervised approach using a generative adversarial network model for hazy atmospheric imaging to tackle foggy night glow. Although these techniques are effective in reducing haze or glow in foggy conditions, they do not address glare removal in low-light images.

Deep learning-based methods [9,10,11] for low-light image enhancement typically rely on supervised learning, where a large number of paired low/normal light image samples are required. Unsupervised methods [12,13], on the other hand, use unpaired low/normal light images and employ adversarial training techniques. Semi-supervised methods [14,15] use unlabeled samples with the help of unpaired high-quality images. In recent developments, zero-reference learning methods have been proposed for low-light enhancement [16,17]. However, most existing methods fail to effectively suppress glare while enhancing low-light regions in night-time images. To address this, Jin et al. [18] have proposed an unsupervised method that integrates a layer decomposition network and a light effect suppression network. This approach achieves both glare suppression and low-light enhancement without the need for image pairs while preserving image details. High-dynamic-range (HDR) imaging methods can improve low-light images and counteract overexposure. Sharma et al. [19] have explained that their method linearizes a single input night image and splits it into low-frequency (LF) and high-frequency (HF) feature maps. The processed HF feature maps are then combined with the LF feature maps to produce an output map with suppressed glare and improved dark lighting. By utilizing high-dynamic-range (HDR) imaging, the darker regions of the image are greatly improved while the brighter regions are reduced, resulting in an overall improvement in contrast.

The disadvantages of deep learning-based high-dynamic-range imaging methods and unsupervised methods include the need for large training samples and high computation requirements, making them impractical for embedded devices. Thus, this paper introduces a GS-AGC algorithm that operates on a hardware platform utilizing a field programmable gate array (FPGA). After conducting a theoretical analysis and FPGA hardware verification, the proposed algorithm emerged as an easily implementable solution under resource constraints, and at the same time, it meets the real-time processing requirement of glare suppression at a framerate of 52 f/s. The algorithm effectively adjusted low-light images while simultaneously reducing glare, thereby enhancing their visual quality. The primary contributions of this paper are as follows:

- Luminance region delineation for single and multiple light sources was carried out using a region luminance perception algorithm, which helped guide the subsequent image processing.

- The GS-AGC algorithm, based on region brightness perception, was proposed to effectively suppress glare from single and multiple light sources in complex real-life scenarios. This algorithm achieved a balanced brightness of low-light images and provided valuable reference ideas and inspiration for related research fields.

- Building upon this foundation, the GS-AGC algorithm was accelerated with hardware, and corresponding modules for algorithm acceleration were designed. The implementation was carried out using a field programmable gate array (FPGA).

The remainder of this paper is organized as follows.

Section 2 explores some related work. In Section 3, a thorough overview of the GS-AGC algorithm and its FPGA implementation is provided, including theoretical details. Then, our algorithm is compared to traditional and deep-learning algorithms using publicly available datasets in Section 4, evaluating implementation speed and energy consumption across both CPUs and FPGAs and presenting experimental results and analysis. Finally, in Section 5, the primary research in this paper is summarized, and potential avenues for future investigation are suggested.

2. Related Work

Low-light image enhancement: Low-light images are primarily caused by insufficient lighting in the shooting environment. They are characterized by a limited grayscale range, high spatial correlation among neighboring pixels, and minimal variation in grayscale, resulting in a narrow representation of objects, backgrounds, details, and noise. Two types of low-light images exist: dark-light images and wide-dynamic-range images. Dark-light images typically exhibit large regions of darkness, with gray values concentrated within a low range, leading to minimal image contrast. Wide-dynamic-range images, on the other hand, have an uneven distribution of light, with both extremely dark and extremely bright regions present. In both cases, a main characteristic of a low-light image is that dark light regions occupy most of the image. Techniques commonly used in recent years include Zero-DCE [20], gamma correction [21], the dark channel prior [22], and histogram equalization [23]. Histogram equalization enhances an image by adjusting the grayscale of each pixel. Gamma correction improves the grayscale value of darker regions through a nonlinear transformation. The dark channel prior leverages noise distribution characteristics, specifically the fog-like characteristics of glare noise, to enhance image color and visibility. Zero-DCE (Zero-Reference Deep Curve Estimation) uses a lightweight network (DCE-Net) to predict higher-order curves at the pixel level, which are then applied as adjustments to expand the input’s range of variation. All of the above methods perform well in low-light image enhancement but cannot satisfy the real-time requirements of low-light image processing.

Gamma correction algorithm: Gamma originates from the response curve of early CRT monitors [24], that is, the nonlinear relationship between output brightness and input voltage. Gamma-encoded data compensate for the Gamma characteristic of the CRT monitor, so that the product of the encoding and display Gamma values is exactly 1 [25]. The Gamma curve is a special kind of tone curve, and Gamma correction [26,27] is the editing of this curve for image processing; it is one of the grayscale transformation methods. The Gamma value is the crucial parameter in this process, as it determines the degree of change in brightness and contrast: Gamma values greater than 1 yield darker images, whereas Gamma values less than 1 yield brighter images. The mathematical expression for Gamma correction is:

$$V_{out} = V_{in}^{\gamma} \quad (1)$$

where Vout denotes the output luminance value, Vin denotes the input luminance value, and γ denotes the Gamma value. When the Gamma value is equal to 1, the output luminance value is equal to the input luminance value and the image does not change; when the Gamma value is greater than 1, the output luminance value is smaller than the input luminance value, and the image becomes darker; when the Gamma value is less than 1, the output luminance value is greater than the input luminance value, and the image becomes brighter. While Gamma correction aims to correct the image from a linear response to a response that aligns better with human eye perception through a nonlinear transformation, it should be noted that this global processing method does not have the ability to selectively adjust specific regions of interest in the image. There is a possibility of overlooking bright regions and solely focusing on dark light regions during the image processing stage.
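The behaviour described above can be checked with a short sketch. It assumes 8-bit input that is normalized to [0, 1] before the power is applied; the function name `gamma_correct` and the sample ramp are illustrative and not taken from the paper.

```python
import numpy as np


def gamma_correct(image_u8: np.ndarray, gamma: float) -> np.ndarray:
    """Apply V_out = V_in ** gamma on luminance normalized to [0, 1]."""
    v_in = image_u8.astype(np.float64) / 255.0   # normalize 8-bit values
    v_out = np.power(v_in, gamma)                # global nonlinear mapping
    return np.round(v_out * 255.0).astype(np.uint8)


# A small gray ramp standing in for an image (illustrative data).
ramp = np.array([[0, 64, 128, 192, 255]], dtype=np.uint8)

brighter = gamma_correct(ramp, 0.5)    # gamma < 1 raises mid-tones
darker = gamma_correct(ramp, 2.0)      # gamma > 1 lowers mid-tones
unchanged = gamma_correct(ramp, 1.0)   # gamma = 1 leaves the image as is
```

Because the same exponent is applied to every pixel, the mapping cannot brighten dark regions and darken bright regions at the same time, which is the limitation of global Gamma correction noted above.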

Luminance perception by the human eye: Most sensory and perceptual stimuli follow the Weber–Fechner law [28,29], a law in psychophysics that explains the relationship between the intensity of a stimulus and the corresponding perceived response in humans. It can be expressed as follows:

$$S = K \log R \quad (2)$$

where S is sensory intensity, K is a constant, and R is stimulus intensity, which indicates that sensory intensity is a logarithmic function of stimulus intensity. Since the brightness range of the scenery in daily life is very large, the human eye needs to adapt to a certain brightness difference, and the photographic ability is automatically adjusted with the strength of the external ambient light. Therefore, the Weber–Fechner law is also applied in the field of low-illumination image enhancement [30,31,32]. The brightness range that the human eye can perceive is 10⁻²–10⁶ cd/m² (candela per square meter), that is, a range of 10⁸. Under moderate ambient brightness, the brightness range recognized by the human eye can reach 10³. According to the Weber–Fechner law, the Log function [33] is considered similar to this property of the human eye, and its mathematical expression is as follows:

where a is a constant, I denotes luminance, and Se denotes human eye response. Equation (3) shows the correspondence between luminance and human eye response, indicating that the human eye is more sensitive to changes in darkness. The simulated human response to light has also appeared in various image processing [34,35].
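The claim that the eye is more sensitive to changes in darkness can be illustrated numerically. The sketch below assumes a response of the form Se = a · log10(I). This form is an assumption made only for illustration, since the exact expression of Equation (3) is not reproduced here, and the function name `eye_response` is hypothetical.

```python
import math


def eye_response(luminance: float, a: float = 1.0) -> float:
    """Assumed logarithmic response Se = a * log10(I); illustrative only."""
    return a * math.log10(luminance)


# The same absolute luminance increase, applied in a dark and a bright scene.
delta = 1.0
dark_step = eye_response(1.0 + delta) - eye_response(1.0)
bright_step = eye_response(100.0 + delta) - eye_response(100.0)

# Span of the perceivable range 10^-2 to 10^6 cd/m^2 on the log scale.
perceivable_span = eye_response(1e6) - eye_response(1e-2)
```

Under this assumed form, `dark_step` is much larger than `bright_step`, and `perceivable_span` equals the eight orders of magnitude quoted in the text.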

3. An Adaptive Glare Suppression Algorithm Based on Regional Brightness Perception

3.1. Regional Brightness Perception

The shortcomings of commonly used low-illumination image enhancement methods are still observed, such as the inability of traditional algorithms to effectively solve the problem of uneven brightness. Additionally, the contextual information of the image cannot be fully considered by deep learning-based algorithms [36,37,38], leading to the inability to establish pixel dependencies. This defect makes it difficult to resolve the problem of uneven illumination in each local region. To address these problems, our method of regional luminance perception is proposed, aiming to solve the problem of uneven luminance generated by local regions, accurately divide regional luminance, and eliminate the influence of the division of luminance range on the suppression effect of the algorithm.

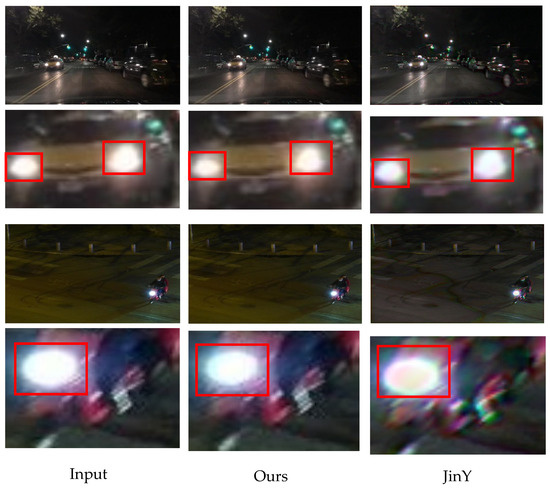

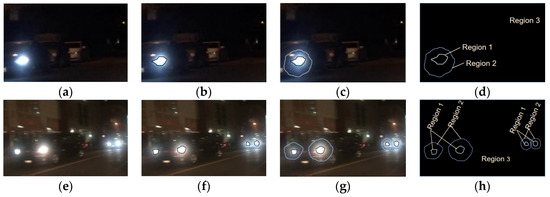

While the image segmentation algorithm [3] is capable of dividing an image’s brightness, it fails to fully consider the contextual information of the image, and a separate algorithm is needed to smooth the region boundaries. This becomes particularly challenging in real-life situations with complex backgrounds, where multiple light sources are present and must be accurately taken into account for proper brightness region division. Although the algorithm of Jin et al. [18] performs well on halo light, it does not explicitly differentiate between a single light source and multiple light sources, as illustrated in Figure 1. Its suppression is noticeably less effective when multiple light sources are present, a case in which our approach performs better. For both multiple and single light sources under a complex background, we propose dividing the brightness range into the dark light region, halo region, glare region, and other regions. The range of pixel brightness of the halo, glare, and dark light regions of an image is shown in Figure 2, where Figure 2a,e are the original frames, Figure 2b,f are the glare regions, Figure 2c,g are the glare regions plus the halo regions, and Figure 2d,h are visual representations of the division into halo, glare, and dark light regions.

Figure 1.

Comparison of the suppression effect of a single light source and multiple light sources, and its local magnification diagrams.

Figure 2.

Brightness region division range: (a) single-source original image; (b) glare regions; (c) glare + halo regions; (d) single-source region segmentation; (e) multi-source original image; (f) glare regions; (g) glare + halo regions; (h) multi-source region segmentation.

In the case of a single light source in a relatively dark background, the object’s brightness and contrast are more prominent, making details such as its shape and texture easier to observe and recognize. However, with multiple light sources, complex reflection effects may occur, making object recognition more challenging. To address this issue, we leveraged the powerful adaptability of region luminance perception to maintain object perception in different luminance environments, enabling recognition in a variety of lighting conditions. The convenience of region brightness perception in dividing image brightness was fully presented by exclusively applying the suppression algorithm to suppress the original frames of both single and multiple light sources in the image, as depicted in Figure 3—the complex light source suppression effect—where Figure 3a,c represent the original frame of the image and Figure 3b,d represent the resultant image suppression frame.

Figure 3.

Complex light source suppression effect: (a) single-source original image; (b) single light source suppression frames; (c) multi-source original image; (d) multiple light source suppression frames.

Before the luminance processing of low-illumination images, the limitations of image segmentation algorithms in dividing the luminance range were addressed. Inspired by the luminance perception function of the human eye [39], which covers a wide range of luminance sensations, we used this function to assist in selecting appropriate luminance thresholds for brightness division. Because the dark light region and the glare region have opposite brightness characteristics, each region needs the adjustment best suited to it; we therefore used the human eye luminance perception function R(L) [39] for the luminance adjustment of each region, defined as follows:

where R(L) is the retinal responsiveness of the input grayscale image, L is the original pixel intensity of the grayscale map, is the pixel intensity normalized to [0, 1], and k is a sensitivity parameter that determines how fast the retinal responsiveness grows with increasing luminance intensity. Since human retinal responsiveness is adaptively determined by background luminance, the sensitivity k can be expressed in the following functional form depending on luminance perception:

where CDFG(L) is the cumulative distribution function of the input histogram and p(x) denotes the normalized gray-level histogram.

In Equation (4), the human eye luminance perception function covers the full dynamic range of the low-illumination image [R(Lmin) = 0, R(Lmax) = 255], and the value range of L is [0, 255]; is the pixel intensity normalized to [0, 1], specifically:

where Lmax is the maximum pixel brightness of the grayscale image.
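The histogram quantities named above can be computed directly from a grayscale image. The sketch below covers only the normalized gray-level histogram p(x), its cumulative distribution function, and the intensity normalized by Lmax. It does not reproduce Equations (4) and (5), and the function name `histogram_statistics` and the sample image are illustrative.

```python
import numpy as np


def histogram_statistics(gray: np.ndarray):
    """Return (p, cdf, normalized) for an 8-bit grayscale image."""
    counts = np.bincount(gray.ravel(), minlength=256)
    p = counts / counts.sum()            # normalized gray-level histogram p(x)
    cdf = np.cumsum(p)                   # cumulative distribution function
    l_max = max(int(gray.max()), 1)      # guard against an all-black image
    normalized = gray.astype(np.float64) / l_max   # intensity in [0, 1]
    return p, cdf, normalized


# Tiny illustrative image: half dark pixels, half bright pixels.
gray = np.array([[0, 10, 10], [200, 250, 250]], dtype=np.uint8)
p, cdf, normalized = histogram_statistics(gray)
```

For this sample, `cdf[10]` is 0.5 because half of the pixels have a gray level of 10 or less, which is the kind of background-luminance statistic that the sensitivity k is described as depending on.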

To obtain a threshold parameter suited to the brightness of the current region, a one-dimensional search method from optimization was used, specifically the golden section search algorithm [40,41], because it is both fast and widely applicable. In the golden section method, the retained interval is shortened by a factor of 0.618 at each step. By computing and comparing the function values at a finite number of points, the original search interval is systematically narrowed until it meets the allowed interval error; that is, the interval containing the optimal solution is progressively shrunk until its length approaches 0. In this paper, the search was run four times, with the initial intervals [0, 50], [50, 150], [150, 200], and [200, 255]. Each search continued until it reached the maximum of 100 iterations. The thresholds in the [0, 50], [50, 150], [150, 200], and [200, 255] luminance intervals were then found in turn using only function values. All four luminance thresholds were derived in the same way, so the derivation of one of them is taken as an example; its acquisition method is shown in Algorithm 1.

Algorithm 1 Brightness threshold acquisition
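The golden section search described above can be sketched as follows. This is a generic minimizer, not the paper's Algorithm 1. The objective actually minimized in the paper is not available here, so a hypothetical quadratic with an arbitrary target is used in each interval purely to exercise the search. The tolerance `tol` is also an assumption.

```python
import math

GOLDEN_RATIO = (math.sqrt(5.0) - 1.0) / 2.0   # about 0.618


def golden_section_search(f, lo, hi, tol=1e-3, max_iter=100):
    """Minimize a unimodal function f on [lo, hi] by golden section search."""
    x1 = hi - GOLDEN_RATIO * (hi - lo)
    x2 = lo + GOLDEN_RATIO * (hi - lo)
    f1, f2 = f(x1), f(x2)
    for _ in range(max_iter):
        if hi - lo <= tol:
            break
        if f1 < f2:
            # Minimum lies in [lo, x2]; the old x1 becomes the new x2.
            hi, x2, f2 = x2, x1, f1
            x1 = hi - GOLDEN_RATIO * (hi - lo)
            f1 = f(x1)
        else:
            # Minimum lies in [x1, hi]; the old x2 becomes the new x1.
            lo, x1, f1 = x1, x2, f2
            x2 = lo + GOLDEN_RATIO * (hi - lo)
            f2 = f(x2)
    return 0.5 * (lo + hi)


# Initial intervals from the text; the targets are hypothetical placeholders.
SEARCH_INTERVALS = [(0, 50), (50, 150), (150, 200), (200, 255)]
HYPOTHETICAL_TARGETS = [30.0, 100.0, 180.0, 230.0]

thresholds = [
    golden_section_search(lambda x, c=c: (x - c) ** 2, lo, hi)
    for (lo, hi), c in zip(SEARCH_INTERVALS, HYPOTHETICAL_TARGETS)
]
```

Each iteration reuses one of the two previous function values, so only one new evaluation is needed per step. With a tolerance of 1e-3 on an interval of length 100, the search stops after roughly two dozen iterations, well below the 100-iteration cap.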

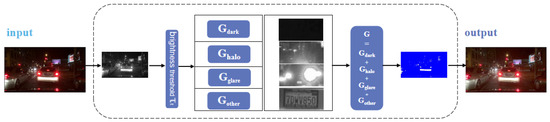

3.2. GS-AGC

In this paper, the proposed algorithm is based on the regional brightness perception function and exploits the nonlinear characteristics of the Gamma correction algorithm. Instead of employing region segmentation, the algorithm controls the change in image brightness data by identifying different brightness regions based on the perceived brightness results of the various types of regions. This is achieved through adaptive adjustment using the brightness threshold parameters. By doing so, the algorithm enables the adaptive adjustment of image brightness without relying on traditional region segmentation methods.

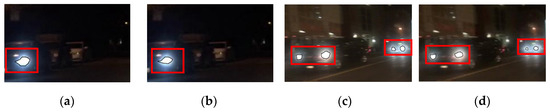

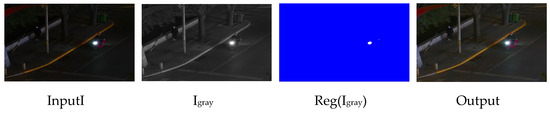

The detail information of the light and dark light regions was enhanced in this paper by extracting the brightness information of the image through the corresponding grayscale map Igray of the original image. Additionally, the algorithm expressed the brightness of the glare region that needed to be suppressed through the attention map Reg(Igray), where the white portion represents the regions that need to be suppressed, while the blue portion represents the regions that need to be enhanced. Afterwards, the luminance information presented in the attention map Reg(Igray) was integrated into the layer decomposition result of the algorithm by utilizing an iterative method to select the multi-level transition luminance threshold parameter. Additionally, a better visual processing effect for low-illumination images was achieved by processing the pixel luminance of different regions. It is important to note that the processing flow of this paper remained the same for both single and multiple light sources. In order to illustrate the detailed processing of GS-AGC, Figure 4 below showcases the layer decomposition results of the algorithm in the scenarios of both single and multiple light sources.

Figure 4.

Layer decomposition result of the algorithm.

In order to address the issue of uneven illumination caused by multiple light sources, in this paper, the brightness region of the image was divided into four parts based on the perceived brightness. The specific process involved traversing the overall grayscale image, starting from the grayscale value that corresponded to the brightness of the first pixel in each row, and ending at the grayscale value that corresponded to the brightness of the last pixel in each column. The GS-AGC algorithm was then applied to perform the necessary processing in different brightness regions. As a result, the algorithm enhanced the brightness in dark light regions and suppressed the brightness in glare regions, thereby improving the overall illumination uniformity of the image.

The core algorithm of this paper primarily comprised four parts:

In the formula, R1, R2, and R3 are pre-defined constant parameters, set as R1 = 2, R2 = 3, and R3 = 3. They are the powers to which the corresponding expressions are raised: the Gdark expression is raised to the second power, the Gglare expression to the third power, and the Ghalo expression to the third power. The Gother expression has a function value of 1, indicating that it does not directly modify image brightness, so no parameter is assigned to it. Experiments showed that these parameters markedly improve the image processing result, and that the result no longer changed once the value of a parameter R exceeded 5. The luminance region division was carried out on Igray over the overall gray range [0, 255] of the image: the gray values 0 and 255 together with the four luminance thresholds directly divide the image grayscale into the luminance regions of the image I. These are the dark light region, which starts at T0; the halo region; the glare region, which ends at T255; and the other regions, that is, the two remaining intervals that exclude the dark light, halo, and glare regions. To enhance the visual effect of low-illumination images with a narrow grayscale range, a nonlinear grayscale transformation was performed. This was necessary due to the limited visual distinction and high spatial correlation among adjacent pixels in such images. By adjusting the grayscale values, the image’s brightness range was expanded within a range more suitable for human eye observation. This process involved the following pixel-level operations:

where R1 = 2, is a dark light region threshold parameter, is a medium-bright scene threshold parameter, is a bright scene threshold parameter, T0 is a gray-level value of 0, and its dark light enhancement function Gdark indicates that the gray scale image pixels are stretched from the dark light region by stretching a narrow gray range to the medium-bright scene , and that all the corresponding Gamma values between the Gdark regions (T0,) are less than 1.

Besides the dark light region, the high-gray-value part of the image also requires halo suppression, so that image brightness stays within a consistent range while the information in the halo region is retained. The halo region is processed as follows:

wherein R3 = 3, is a highlight scene threshold parameter whose halo suppression Ghalo function indicates that the gray scale image pixels are lowered from the highlight scene to the mid-brightness scene , and the high gray scale values are corrected by the Ghalo function to make the corresponding Gamma value of the region between the highlight scene threshold parameter and the mid-brightness scene threshold parameter (, ) both greater than 1.

Uneven distribution of brightness in images is primarily caused by glare, a phenomenon that occurs in various forms and can be complex in real-life scenarios. Glare imposes a tremendous level of visual burden due to the significant increase in brightness, making glare inhibition a core issue for us to solve. Therefore, it is particularly important to consider the size and number of gray values that span the pixel values of the glare region. We treat the glare region as follows:

where R2 = 3 and T255 is the gray-level value 255. The glare suppression function Gglare pulls grayscale pixels near the maximum gray value of the bright scene toward the mid-bright scene to reduce the visual difference, so that the corresponding Gamma values in the glare region, which ends at T255, are all greater than 1.

A final function handles the other brightness regions, that is, the intervals that remain after the dark light, halo, and glare regions are excluded. These regions are left unchanged:

Gother = 1

The flowchart of the proposed GS-AGC algorithm is displayed in Figure 5, and its steps are given in Algorithm 2. Notably, the proposed suppression algorithm adjusts regional luminance pixels within specific luminance regions, so the overall low-light image is enhanced on the basis of local glare suppression while image luminance is kept balanced.

Algorithm 2 GS-AGC
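The region-wise idea, with Gamma below 1 in the dark light region, above 1 in the halo and glare regions, and equal to 1 elsewhere, can be sketched as follows. This is not the GS-AGC formulation: the expressions for Gdark, Ghalo, and Gglare are not reproduced here, so fixed per-region Gamma values stand in for them. The four threshold values and all names in the code are hypothetical.

```python
import numpy as np

# Hypothetical thresholds splitting [0, 255] into five intervals.
THRESHOLDS = np.array([40, 120, 180, 220])

# Interval index from np.digitize: 0 dark, 1 other, 2 halo, 3 other, 4 glare.
# Placeholder Gamma values: < 1 brightens, > 1 darkens, 1 leaves unchanged.
REGION_GAMMA = np.array([0.6, 1.0, 1.5, 1.0, 2.0])


def regionwise_gamma(gray: np.ndarray):
    """Apply a per-region Gamma to an 8-bit grayscale image."""
    region = np.digitize(gray, THRESHOLDS)       # interval index per pixel
    gamma = REGION_GAMMA[region]                 # per-pixel Gamma value
    normalized = gray.astype(np.float64) / 255.0
    out = np.power(normalized, gamma)
    return np.round(out * 255.0).astype(np.uint8), region


# One sample pixel from each of the five intervals.
sample = np.array([[10, 80, 150, 200, 250]], dtype=np.uint8)
adjusted, region = regionwise_gamma(sample)
```

With constant Gamma values per region, the mapping jumps at the threshold boundaries. A practical scheme would need Gamma to vary smoothly across each region, which is presumably what the region functions in the text provide.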

Figure 5.

Flow chart of the GS-AGC algorithm.

3.3. FPGA Implementation of the Algorithm

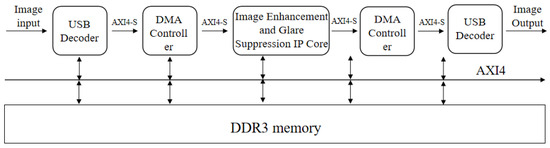

3.3.1. Image Processing Framework on FPGA Platforms

FPGA, a programmable logic device, was utilized in this study to accelerate the algorithms on the hardware platform. It offers programmability, high performance, low power consumption, and robust security. Leveraging FPGA’s hardware architecture characteristics, the entire system’s input and output were facilitated via a USB interface. The development methodology employed comprised High-Level Synthesis (HLS) description, along with co-programming using Python, C, and Verilog hardware description language. These approaches harnessed FPGA’s high data throughput, computational prowess, and parallelization capabilities to achieve accelerated execution of the algorithms discussed in this paper.

Figure 6 illustrates the image processing framework employed in the glare suppression system. The framework utilizes the AXI4 Stream protocol, which enables stream-oriented transmission without the need for memory addresses and without restricting burst length. This protocol is well-suited for high-speed big data applications. Consequently, the entire image processing framework is built upon the ARM AMBA AXI4 protocol. This choice ensures that the overall framework possesses high bandwidth, low latency, and low power consumption, among other characteristics. These qualities are crucial for meeting the real-time requirements of the algorithm.

Figure 6.

Image processing framework.

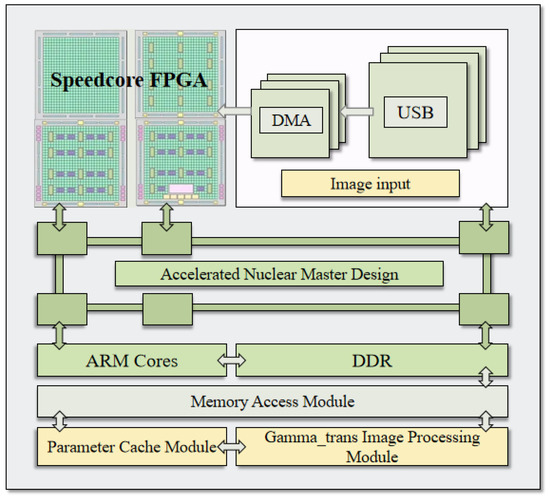

3.3.2. Modular Design of the Algorithm

Figure 7 presents the general architectural framework of the FPGA accelerator designed for the GS-AGC algorithm. The framework consists of two main components: the processing system and the FPGA acceleration core system. The processing system is responsible for access and control tasks and comprises ARM CPU cores and external memory. On the other hand, the FPGA acceleration core system is dedicated to implementing the algorithm’s acceleration tasks and is divided into three modules: the access module, the Gamma_trans image processing module, and the parameter cache module. To achieve higher processing speed in Vivado_HLS global processing and image processing, the framework employs a pipelined operation. This approach incorporates concurrent caching and processing of image data within the FPGA, enabling the implementation of IP cores with low latency and high bandwidth.

Figure 7.

Overall design framework of FPGA acceleration core.

- (1)

- Memory access module

During runtime, the CPU initializes the off-chip memory module and stores both the low-illumination image and the corresponding grayscale image data in the external DDR memory. Additionally, the CPU is in charge of transmitting the input image for processing to the memory access module via the AXI bus. The memory access module retrieves the input image from the external storage and caches it into the cache module.

- (2)

- Parameter Cache Module

During transmission, to ensure the integrity of the transmitted data, the parameter cache module incorporates block random access memory (BRAM) located on the chip. Within the FPGA, the BRAM resources consist of individual RAMs that are primarily responsible for caching the image data and the results obtained from the Gamma_trans module after processing.

- (3)

- Image processing module

The image processing module nonlinearizes the image through a brightness-based calculation of the pixels in the image, as well as by evaluating the brightness of the pixels in the surrounding region. The output of this operation is transferred to the image processing module to achieve the overall enhancement and regional suppression of the image.

To accelerate the algorithm, custom computation was combined with parallel computation. A dedicated circuit was designed using Vivado 2017.4 to perform the mathematical calculations of the custom computation, with multiple circuits computing in parallel. During the data-parallel computation stage, a pipeline fetched data from the cache module, performed the calculations, and wrote the results back to the cache module. When traversing the pixels, the #pragma HLS PIPELINE directive in Xilinx HLS guided the synthesis process: it was applied to the J loop, which was unrolled along the width direction of the input image, enabling HLS to process one pixel per clock cycle. HLS also fully unrolled the window calculation, allowing nine data values to be read at once and increasing the bandwidth. The algorithm is shown in Algorithm 3.

Algorithm 3 calculate loop

Input: in_channel, rows, cols

Output: gamma_val_1

For this purpose, the Gamma_trans nonlinearized operation was implemented as a pipelined multiply-add operation with the help of DSP Slice. The pipeline consists of fetching data from the cache module, performing the arithmetic operation, and writing the result back to the cache module. The pipelining cycle is determined by the execution time of the segment of the task with the longest execution time. By designing the pipelined system, the FPGA’s throughput could be improved, and the logic resources could be better utilized for the parallel processing of the two tasks of parameter caching and image processing.
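The streaming access pattern behind reading nine values at once can be emulated in software. The sketch below models a 3 × 3 window fed by two line buffers, consuming one new pixel per step, as a pipelined loop with an initiation interval of one would. It is a Python emulation written for illustration, not the HLS source of the Gamma_trans module, and the function name `stream_3x3_windows` is hypothetical.

```python
import numpy as np


def stream_3x3_windows(image: np.ndarray):
    """Yield (row, col, window) per interior pixel, reading one pixel per step."""
    rows, cols = image.shape
    line_buffers = np.zeros((2, cols), dtype=image.dtype)  # two previous rows
    window = np.zeros((3, 3), dtype=image.dtype)
    for r in range(rows):
        for c in range(cols):
            pixel = image[r, c]                     # the only new read this step
            window[:, :2] = window[:, 1:].copy()    # shift the window left
            window[0, 2] = line_buffers[0, c]       # pixel from row r - 2
            window[1, 2] = line_buffers[1, c]       # pixel from row r - 1
            window[2, 2] = pixel                    # pixel from row r
            line_buffers[0, c] = line_buffers[1, c]  # age the buffered rows
            line_buffers[1, c] = pixel
            if r >= 2 and c >= 2:                   # window is fully valid
                yield r - 1, c - 1, window.copy()


image = np.arange(20, dtype=np.int32).reshape(4, 5)
windows = list(stream_3x3_windows(image))
```

In hardware, the nine window registers are all available in the same cycle, so a neighbourhood brightness estimate can be formed without re-reading the cache. Here the same values are simply collected into a small array.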

4. Experimental Results and Analysis

4.1. Experimental Preparation

4.1.1. Experimental Dataset

In this paper, we primarily used the BDD dataset [42], created by the Berkeley Artificial Intelligence Research (BAIR) lab, as the source of low-light images. This dataset encompasses a variety of environments, ranging from city streets to highways and country roads, and involves numerous weather conditions and driving scenarios. As a result, it provides a comprehensive database for autonomous driving perception information and meets our requirements for low-light and glare scenes. Furthermore, we used the LLVIP public dataset to evaluate the algorithm’s performance.

4.1.2. Simulation Experiment Environment

PC: HP OMEN 8P; operating system: Windows 11 Home Chinese Edition; configuration: 12th Generation Intel® i7-12700H processor, NVIDIA GeForce RTX 3070Ti, 16 GB + 512 GB; development languages: Python, an object-oriented, dynamically typed programming language, and C, a procedural, statically typed programming language; development tools: the PyCharm editor from JetBrains and the Vivado Design Suite, an integrated design environment released by Xilinx.

4.2. Hardware Experimental Verification

The glare suppression system in this paper used a Xilinx Zynq-7020 (XC7Z020-1CLG400C) development board, which belongs to a family of SoCs featuring a 650 MHz dual-core ARM Cortex-A9 processor and an FPGA fabric. The device incorporates 13,300 logic slices, where each slice consists of four six-input LUTs and eight flip-flops. Additionally, it is equipped with 630 KB of fast block RAM, 220 DSP slices, and an integrated hard-core memory controller. These integrated features provide the benefits of parallel hardware execution, hardware-accelerated algorithms, low-latency control, and development flexibility.

To validate the effectiveness of the GS-AGC algorithm in enhancing low-light images and suppressing glare, an experimental environment was established using a USB interface. The implementation took advantage of the parallel computing and pipelining characteristics of the FPGA, where pipelining allows successive operations to overlap in time. For instance, executing data caching simultaneously with processing can significantly reduce computation time compared with serial processing in a software implementation. This showcases the advantages of our hardware design in terms of real-time performance and power consumption when compared to implementations on general-purpose platforms. Moreover, in resource-constrained scenarios, the GS-AGC algorithm is easier to port than solutions that rely on complex networks. It requires fewer FPGA resources, and its image processing capability meets the usage requirements of most projects.

4.3. Experimental Results

The GS-AGC algorithm proposed in this paper was compared with other representative methods, including Adaptive Gamma Correction (AGC) [21], Unsupervised Night Image Enhancement (Jin Y) [18], Zero-reference low-light enhancement (Zero-DCE) [20], and HWMNet [43], using test images from the two datasets. The experimental results were then evaluated both globally and locally in terms of quality to test the glare suppression and low-light enhancement performance of the proposed algorithm.

4.3.1. Global Image Quality Evaluation

For global image quality evaluation, the processed images were assessed using the image quality metrics PSNR, entropy, VIF, and MSE, as shown in Table 1 and Table 2. The Peak Signal-to-Noise Ratio (PSNR) measures image distortion: a larger PSNR value indicates less distortion between the image under evaluation and the reference image, and hence better image quality. From the perspective of information theory, entropy reflects the richness of image information: the larger the entropy, the richer the information and the better the quality. Visual Information Fidelity (VIF) and Mean Square Error (MSE) are used to assess the clarity and detail of the image. The larger the VIF value, the higher the clarity and the better the image quality. The smaller the MSE value, the closer the processed image is to the reference and the better the performance of the method.
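Three of the metrics above have simple closed forms, and the following sketch shows how they are commonly computed for 8-bit grayscale images stored as nested lists. It uses the standard textbook definitions (MSE as the mean squared pixel difference, PSNR as 10·log10(peak²/MSE), and Shannon entropy of the gray-level histogram); it is not the evaluation code used for Table 1 and Table 2, and the function names are chosen here for illustration. VIF is omitted because it requires a more involved multi-scale model.

```python
import math
from collections import Counter


def mse(ref, img):
    """Mean squared error between two equally sized grayscale images."""
    diffs = [(r - p) ** 2
             for row_r, row_p in zip(ref, img)
             for r, p in zip(row_r, row_p)]
    return sum(diffs) / len(diffs)


def psnr(ref, img, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(ref, img)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / err)


def entropy(img):
    """Shannon entropy (bits per pixel) of the gray-level distribution."""
    pixels = [p for row in img for p in row]
    counts = Counter(pixels)
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

A larger PSNR and a smaller MSE both indicate a result closer to the reference, while entropy depends only on the processed image itself.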

Table 1.

Evaluation of different algorithms on the BDD dataset. Bold indicates the best value.

Table 2.

Evaluation of different algorithms on the LLVIP dataset. Bold indicates the best value.

Table 1 and Table 2 present quantitative results on low-light data, comparing our proposed GS-AGC algorithm with both deep-learning methods and classical low-light image enhancement methods. GS-AGC achieved the best or tied-best value on most metrics, with information entropy on the BDD dataset as the exception.

On the BDD dataset, although GS-AGC was 0.25 lower than AGC on the information entropy metric, it was slightly better than AGC on all other metrics: its PSNR was 1.15 higher, its VIF was 0.01 higher, and its MSE was 25.47 lower. Compared to the remaining algorithms, our algorithm had the best scores on the PSNR, information entropy, VIF, and MSE metrics.

On the LLVIP dataset, our GS-AGC algorithm tied with histogram equalization and Zero-DCE on the VIF metric at 0.81, and had the best results on the remaining PSNR, information entropy, and MSE metrics. Compared to Jin Y, HWMNet, AGCgamma, and dark channel prior, it had the best scores on all four evaluation metrics, which indicates that the low-light images enhanced by our method have high visual quality.

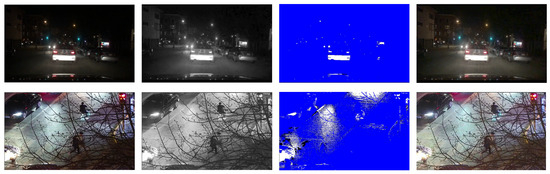

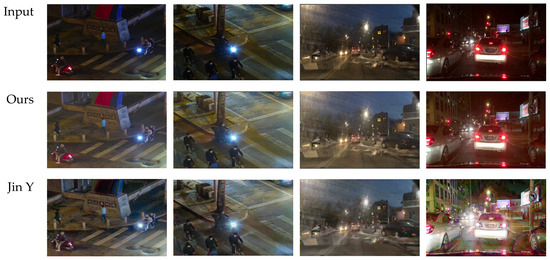

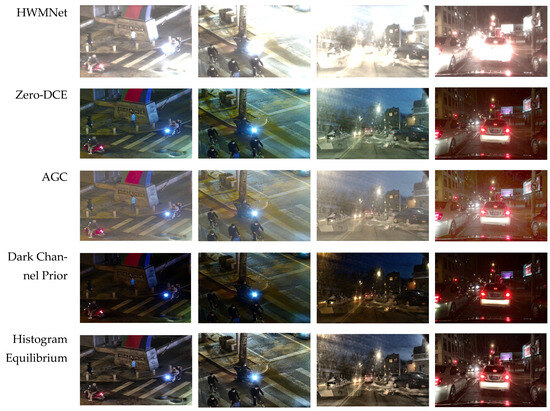

Figure 8 shows the visual results obtained with the various processing methods. It is evident from the figure that certain image enhancement algorithms, such as AGC, Zero-DCE, histogram equalization, and HWMNet, tended to over-enhance local regions in low-illumination images containing light sources. On the other hand, although the dark channel prior algorithm effectively suppressed glare, it reduced the overall brightness, resulting in unsatisfactory visual effects. This observation indicates that relying on a single image enhancement method makes it difficult to balance glare suppression against overall image brightness.

Figure 8.

Processing effect of different algorithms on the LLVIP dataset and the BDD dataset. The first two images are from the LLVIP dataset, while the latter two images are from the BDD dataset.

In practical experiments, our algorithm was comparable to Jin Y’s algorithm on the LLVIP dataset and produced better visual effects near the low-light regions on the BDD dataset, while the shape of the light source remained consistent. However, Jin Y’s method showed significant color bias near houses, resulting in a certain degree of image distortion. It is worth noting that our algorithm suppressed the glare present in the real low-light image datasets better than the other methods while remaining comparable to them in terms of enhancement effect.

4.3.2. Local Image Quality Evaluation

In this paper, two evaluation metrics, namely saliency-guided local quality evaluation (SG-ESSIM) [45] and global and local variation image quality evaluation (GLV-SIM) [44], were used together with a full-reference image evaluation algorithm to assess the effect of image glare suppression more accurately. The evaluation results are presented in Table 3. The SG-ESSIM algorithm uses visual saliency as the weighting mechanism, emphasizing image regions that are salient to human observers. It is then combined with a full-reference image quality evaluation algorithm to obtain a comprehensive evaluation of image quality. Similarly, the GLV-SIM algorithm employs fractional-order derivatives to quantify global changes in the image while using the gradient magnitude to measure local changes. These two measurements are then combined to calculate a similarity map between the reference image and the degraded image, resulting in an objective image score.
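The idea of using saliency as a weighting mechanism can be illustrated with a generic weighted-pooling step. The sketch below is not the SG-ESSIM implementation: it assumes that a per-pixel quality map and a per-pixel saliency map have already been computed by some other means, and the function name `saliency_weighted_score` is hypothetical. It only shows how salient regions can be made to dominate the final score.

```python
def saliency_weighted_score(quality_map, saliency_map):
    """Pool a local quality map into one score, weighting each pixel by saliency.

    Both inputs are equally sized nested lists. If the saliency map is zero
    everywhere, the function falls back to the plain (unweighted) mean.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    plain_sum = 0.0
    count = 0
    for q_row, s_row in zip(quality_map, saliency_map):
        for q, s in zip(q_row, s_row):
            weighted_sum += s * q
            weight_total += s
            plain_sum += q
            count += 1
    if weight_total == 0:
        return plain_sum / count
    return weighted_sum / weight_total
```

With this kind of pooling, a quality drop inside a salient region (for example, around a pedestrian or a light source) lowers the score more than the same drop in a non-salient background region.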

Table 3.

Results of different algorithms on localized assessment metrics. Bold indicates the best value.

Table 3 presents the results of comparing the different algorithms on the local target region. On the BDD dataset, our GS-AGC algorithm achieved a score of 0.96 on the GLV-SIM metric, the same as that of the AGC and histogram equalization algorithms. On the SG-ESSIM metric, our GS-AGC algorithm obtained the highest score compared to Jin Y, HWMNet, Zero-DCE, and dark channel prior.

On the LLVIP dataset, our GS-AGC algorithm achieved the same score as Jin Y on the GLV-SIM metric, and scored 0.02 higher than Jin Y on the SG-ESSIM metric. Although our GLV-SIM score was 0.01 lower than AGC’s, our SG-ESSIM score was 0.01 higher than AGC’s. Additionally, compared to the HWMNet, Zero-DCE, dark channel prior, and histogram equalization algorithms, our algorithm had the highest scores on both the GLV-SIM and SG-ESSIM metrics.

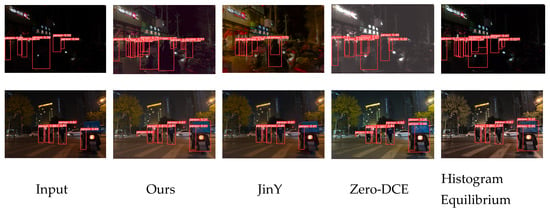

4.3.3. Road Pedestrian Detection

We used YOLOv5 [46], a well-known object detection algorithm, to evaluate road pedestrian detection performance. In the road pedestrian detection experiment, the COCO [47] dataset was used to train the YOLOv5 model, and the DARK FACE [48] dataset was used for testing. As depicted in Figure 9, our method produced more visually pleasing results and improved on the detection results obtained from the original image. Furthermore, our method demonstrated detection accuracy comparable to that of advanced deep-learning enhancement algorithms. For instance, in the first row of images, the detection accuracy of the image enhanced by our algorithm was 0.23 higher than that of the Zero-DCE algorithm and 0.22 higher than that of the histogram equalization algorithm, whereas the Jin Y algorithm failed to detect the pedestrian riding an electric vehicle in the enhanced image. In the second row of images, our algorithm and the Zero-DCE algorithm exhibited the same detection accuracy of 0.39 for pedestrians next to the fence, while the other algorithms failed to detect the pedestrians in this region. Our method therefore offers an effective solution for real-time pedestrian detection on dark roads in embedded systems.

Figure 9.

Comparison of the performance of various algorithms for road pedestrian detection.

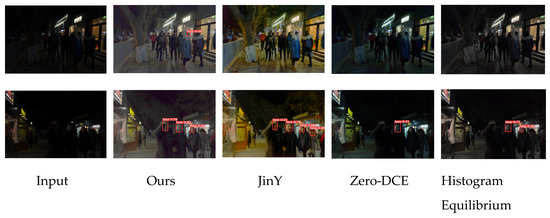

For night-time face detection performance evaluation, 500 face images from the training set of the WIDER FACE [49] dataset were labelled using a local labelling tool, LabelImg, as the COCO dataset does not include a face category with labelled detection boxes. Subsequently, the YOLOv5x model was trained using this labelled portion of the dataset, and testing was conducted using the DARK FACE [48] dataset. As shown in Figure 10, in the first row of images, face detection boxes appeared only in the image enhanced by our method. In the second row of images, although the image enhanced by the Jin Y algorithm had one more face detection box than ours, the detection accuracy of this box was low, measuring only 0.26, whereas the accuracy of all our other face detection boxes was higher than that of the Jin Y algorithm. In night-time face detection, our enhanced image demonstrated the best face detection accuracy when compared to other techniques such as Zero-DCE and histogram equalization. This suggests that our method can serve as a useful pre-processing step in advanced image processing systems.

Figure 10.

Comparison of the performance of various algorithms for face detection at night.

4.3.4. Hardware Energy Consumption and Real-Time Comparison Test

Since embedded systems place greater emphasis on energy consumption and real-time performance, we compared our design with accelerators on other general-purpose hardware platforms in terms of energy efficiency and real-time performance. Table 4 shows the test results. The test platforms were an Intel Core i7 and the Zynq 7020, and the test data were 1280 × 720 low-light image files.

Table 4.

Comparison of real time and energy consumption.

In terms of energy efficiency, FPGAs make full use of their parallel processing capabilities through careful design and configuration of hardware circuits to achieve faster runtimes. The PIPELINE directive was used in the FPGA design to exploit this parallelism by dividing the computation of the Gamma value in Equation (6) into multiple stages and executing them concurrently, resulting in a faster runtime than on the CPU platform. The FPGA could also be accelerated through hardware customization, with the hardware logic tailored to the requirements of the GS-AGC algorithm. In contrast, CPUs are general-purpose processors whose hardware cannot be tailored to a specific algorithm. Translating the algorithm into hardware circuits can eliminate some of the overheads incurred on the CPU and improve execution efficiency.

In terms of real-time performance, FPGAs have low latency, as demonstrated by the latency (1,900,172) and interval (1,900,173) reported by the HLS tool, both in clock cycles. In FPGAs, the circuitry and signal transmission paths are direct, without interference from the operating system and other software layers. As a result, algorithms executed on FPGAs can respond faster to inputs and outputs, thereby reducing overall execution time. In the test, the CPU platform took 680 ms to process a single frame, while the FPGA accelerator took only 19 ms. This corresponds to 1.47 frames per second on the CPU platform and 52 frames per second on the FPGA. Relative to the CPU, our accelerator achieved about a 35.3-fold performance improvement. In addition, our algorithm produces visual results comparable to those of a previous lightweight FPGA-based low-light image enhancement system [50]. Thus, by employing FPGAs as accelerators, we could leverage their advantages in terms of energy efficiency and real-time performance, resulting in higher computational performance and faster execution times.
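The frame-rate and speed-up figures follow directly from the two measured frame times, as the short calculation below shows. The helper names are chosen here for illustration. The clock frequency is not stated in this section; the value computed by `implied_clock_mhz` is only an inference from dividing the reported HLS latency by the 19 ms frame time, and should be read as an assumption rather than a reported specification.

```python
def frames_per_second(frame_time_ms):
    """Frame rate corresponding to a per-frame processing time in milliseconds."""
    return 1000.0 / frame_time_ms


def speedup(baseline_ms, accelerated_ms):
    """Ratio of baseline to accelerated per-frame time."""
    return baseline_ms / accelerated_ms


def implied_clock_mhz(latency_cycles, frame_time_ms):
    """Clock frequency (MHz) implied by a cycle count and a frame time.

    This is an inference, not a figure reported in the text.
    """
    return latency_cycles / (frame_time_ms * 1e-3) / 1e6


cpu_ms, fpga_ms = 680.0, 19.0
print(f"CPU:  {frames_per_second(cpu_ms):.2f} fps")
print(f"FPGA: {frames_per_second(fpga_ms):.2f} fps")
print(f"Speed-up from frame times: {speedup(cpu_ms, fpga_ms):.1f}x")
print(f"Implied clock: {implied_clock_mhz(1_900_172, fpga_ms):.1f} MHz")
```

The ratio of the raw frame times is slightly higher than the reported figure of about 35.3, which is close to the ratio of the rounded frame rates (52 / 1.47).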

The processing results show that the algorithm improved visibility in the low-light regions and retained more image detail, especially on the ground around cars and houses, meeting the visual information needs of pedestrians and drivers, while also effectively addressing the glare caused by headlights and other light sources in low-light images. The comparison of visual effects shows that the algorithm in this paper could better resolve the uneven illumination of low-light images caused by glare, enhancing the darker regions of the image while suppressing the brightness of the glare regions. It has clear advantages for this kind of image: the focus of the processed image was more prominent, image distortion was lower, and the detail information was richer. At the same time, the hardware implementation of the algorithm had lower power consumption and better real-time performance than accelerators on other general-purpose hardware platforms.

5. Conclusions

The GS-AGC algorithm proposed in this paper is based on regional brightness perception. Compared to other approaches, such as complex regional segmentation combined with local detail enhancement, foggy-night glow removal algorithms that target glare only to a limited extent, and deep learning algorithms that are challenging to implement on resource-constrained embedded devices, the algorithm introduced in this paper was effective in suppressing glare and enhancing the overall visual quality of low-light images. Importantly, these effects were achieved while preserving the detail information of the images. To address the real-time requirements of image processing, the algorithm was deployed on a PC and successfully ported to a Xilinx Zynq-7020 development board. This deployment allowed the algorithm to handle glare in real-time scenarios, overcoming the limitations of competing algorithms. Additionally, the algorithm demonstrated low execution time, requiring only 19 ms to process a 1280 × 720 low-light image, which was sufficient to meet real-time demands. The design approach adopted in this study was straightforward and effectively improved the sense of hierarchy in low-light images, leading to the desired results.

Currently, this work addresses uneven illumination and glare in low-light environments, yet it does not resolve excessive glare suppression in complex real-world situations: when glare sources are too numerous and too extensive, the glare is over-suppressed. This problem can be the main research direction in the future, and an unsupervised network-based approach can then be used to achieve glare suppression and low-light enhancement in low-light images. We plan to integrate the photodecomposition induction luminance network and the illumination learning network. In the photodecomposition induction luminance network, we will reduce the glare range by combining glare detection and brightness suppression using the brightness sensor module. This will guide the photodecomposition network to decompose the input image into the sensing layer, illumination layer, background layer, and output layer. Subsequently, the illumination learning network will further suppress overexposed regions and enhance low-light regions.

Author Contributions

Conceptualization, X.P. and W.W.; methodology, P.L. and W.W.; software, W.W.; validation, Y.M. and W.W.; formal analysis, H.W. and W.W.; investigation, W.W.; data curation, W.W.; writing—original draft preparation, W.W.; writing—review and editing, W.W.; visualization, W.W.; supervision, P.L.; funding acquisition, P.L. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the Key Industry Chain Technology Research Project of Xi’an: Key Technology Research on Artificial Intelligence (Key Technology Research on the Integration of 3D Virtual Live Character Scene) 23ZDCYJSGG0010-2023, and is also supported by the Shaanxi Provincial Key Research and Development Program 2022ZDLSF04-05.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Jiang, X.; Yao, H.; Liu, D. Nighttime image enhancement based on image decomposition. SIViP 2019, 13, 189–197.

2. Tao, R.; Zhou, T.; Qiao, J. Improved Retinex for low illumination image enhancement of nighttime traffic. In Proceedings of the 2022 International Conference on Computer Engineering and Artificial Intelligence (ICCEAI), Shijiazhuang, China, 22–24 July 2022; pp. 226–229.

3. Mandal, G.; Bhattacharya, D.; De, P. Real-time automotive night-vision system for drivers to inhibit headlight glare of the oncoming vehicles and enhance road visibility. J. Real-Time Image Process. 2021, 18, 2193–2209.

4. Wang, W.; Wang, A.; Liu, C. Variational single nighttime image haze removal with a gray haze-line prior. IEEE Trans. Image Process. 2022, 31, 1349–1363.

5. Liu, Y.; Yan, Z.; Tan, J.; Li, Y. Multi-purpose Oriented Single Nighttime Image Haze Removal Based on Unified Variational Retinex Model. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 1643–1657.

6. Liu, Y.; Yan, Z.; Wu, A.; Ye, T.; Li, Y. Nighttime Image Dehazing Based on Variational Decomposition Model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 640–649.

7. Tan, L.; Wang, S.; Zhang, L. Nighttime Haze Removal Using Saliency-Oriented Ambient Light and Transmission Estimation. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 1764–1768.

8. Yang, C.; Ke, X.; Hu, P.; Li, Y. NightDNet: A Semi-Supervised Nighttime Haze Removal Frame Work for Single Image. In Proceedings of the 2021 3rd International Academic Exchange Conference on Science and Technology Innovation (IAECST), Guangzhou, China, 10–12 December 2021; pp. 716–719.

9. Afifi, M.; Derpanis, K.G.; Ommer, B.; Brown, M.S. Learning multi-scale photo exposure correction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 9157–9167.

10. Zhou, Z.; Shi, Z.; Ren, W. Linear Contrast Enhancement Network for Low-illumination Image Enhancement. IEEE Trans. Instrum. Meas. 2022, 72, 5002916.

11. Yu, N.; Li, J.; Hua, Z. FLA-Net: Multi-stage modular network for low-light image enhancement. Vis. Comput. 2023, 39, 1251–1270.

12. Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. Enlightengan: Deep light enhancement without paired supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349.

13. Yu, W.; Zhao, L.; Zhong, T. Unsupervised Low-Light Image Enhancement Based on Generative Adversarial Network. Entropy 2023, 25, 932.

14. Kozłowski, W.; Szachniewicz, M.; Stypułkowski, M.; Zięba, M. Dimma: Semi-supervised Low Light Image Enhancement with Adaptive Dimming. arXiv 2023, arXiv:2310.09633.

15. Yang, W.; Wang, S.; Fang, Y.; Wang, Y.; Liu, J. Band representation-based semi-supervised low-light image enhancement: Bridging the gap between signal fidelity and perceptual quality. IEEE Trans. Image Process. 2021, 30, 3461–3473.

16. Li, W.; Xiong, B.; Ou, Q.; Long, X.; Zhu, J.; Chen, J.; Wen, S. Zero-Shot Enhancement of Low-Light Image Based on Retinex Decomposition. arXiv 2023, arXiv:2311.02995.

17. Li, C.; Guo, C.; Loy, C.C. Learning to enhance low-light image via zero-reference deep curve estimation. arXiv 2021, arXiv:2103.00860.

18. Jin, Y.; Yang, W.; Tan, R.T. Unsupervised night image enhancement: When layer decomposition meets light-effects suppression. In Proceedings of the Computer Vision–ECCV 2022, 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Proceedings, Part XXXVII. Springer Nature: Cham, Switzerland, 2022; pp. 404–421.

19. Sharma, A.; Tan, R.T. Nighttime visibility enhancement by increasing the dynamic range and suppression of light effects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 11977–11986.

20. Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 1780–1789.

21. Jeong, I.; Lee, C. An optimization-based approach to gamma correction parameter estimation for low-light image enhancement. Multimed. Tools Appl. 2021, 80, 18027–18042.

22. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

23. Stark, J.A. Adaptive image contrast enhancement using generalizations of histogram equalization. IEEE Trans. Image Process. 2000, 9, 889–896.

24. Braun, G.J. Visual display characterization using flicker photometry techniques. In Human Vision and Electronic Imaging VIII; SPIE: 2003; Volume 5007, pp. 199–209.

25. Haines, E.; Hoffman, N. Real-Time Rendering; CRC Press: Boca Raton, FL, USA, 2018.

26. Li, C.; Tang, S.; Yan, J.; Zhou, T. Low-light image enhancement via pair of complementary gamma functions by fusion. IEEE Access 2020, 8, 169887–169896.

27. Shi, Z.; Feng, Y.; Zhao, M.; Zhang, E.; He, L. Normalised gamma transformation-based contrast-limited adaptive histogram equalisation with colour correction for sand–dust image enhancement. IET Image Process. 2020, 14, 747–756.

28. Bee, M.A.; Vélez, A.; Forester, J.D. Sound level discrimination by gray treefrogs in the presence and absence of chorus-shaped noise. J. Acoust. Soc. Am. 2012, 131, 4188–4195.

29. Drösler, J. An n-dimensional Weber law and the corresponding Fechner law. J. Math. Psychol. 2000, 44, 330–335.

30. Wang, W.; Chen, Z.; Yuan, X. Simple low-light image enhancement based on Weber–Fechner law in logarithmic space. Signal Process. Image Commun. 2022, 106, 116742.

31. Wang, W.; Chen, Z.; Yuan, X.; Wu, X. Adaptive image enhancement method for correcting low-illumination images. Inf. Sci. 2019, 496, 25–41.

32. Kim, D. Weighted Histogram Equalization Method adopting Weber-Fechner’s Law for Image Enhancement. J. Korea Acad.-Ind. Coop. Soc. 2014, 15, 4475–4481.

33. Gonzales, R.C.; Wintz, P. Digital Image Processing; Addison-Wesley Longman Publishing Co., Inc.: Reading, MA, USA, 1987.

34. Drago, F.; Myszkowski, K.; Annen, T.; Chiba, N. Adaptive logarithmic mapping for displaying high contrast scenes. Comput. Graph. Forum 2003, 22, 419–426.

35. Thai, B.C.; Mokraoui, A.; Matei, B. Contrast enhancement and details preservation of tone mapped high dynamic range images. J. Vis. Commun. Image Represent. 2019, 58, 589–599.

36. Zhang, Y.; Di, X.; Zhang, B.; Ji, R.; Wang, C. Better than reference in low-light image enhancement: Conditional re-enhancement network. IEEE Trans. Image Process. 2021, 31, 759–772.

37. Zhang, Y.; Zhang, J.; Guo, X. Kindling the darkness: A practical low-light image enhancer. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1632–1640.

38. Wang, Y.; Yu, Y.; Yang, W.; Guo, L.; Chau, L.P.; Kot, A.C.; Wen, B. Exposurediffusion: Learning to expose for low-light image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 12438–12448.

39. Huang, S.C.; Cheng, F.C.; Chiu, Y.S. Efficient contrast enhancement using adaptive gamma correction with weighting distribution. IEEE Trans. Image Process. 2012, 22, 1032–1041.

40. Roh, S.; Choi, M.C.; Jeong, D.K. A Maximum Eye Tracking Clock-and-Data Recovery Scheme with Golden Section Search (GSS) Algorithm in 28-nm CMOS. In Proceedings of the 2021 18th International SoC Design Conference (ISOCC), Jeju Island, Republic of Korea, 6–9 October 2021; pp. 47–48.

41. Alrajoubi, H.; Oncu, S. PV Fed Water Pump System with Golden Section Search and Incremental Conductance Algorithms. In Proceedings of the 2023 15th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Bucharest, Romania, 29–30 June 2023; pp. 1–4.

42. Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. Bdd100k: A diverse driving dataset for heterogeneous multitask learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 2636–2645.

43. Fan, C.M.; Liu, T.J.; Liu, K.H. Half wavelet attention on M-Net+ for low-light image enhancement. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 3878–3882.

44. Gao, M.-J.; Dang, H.-S.; Wei, L.-L.; Wang, H.-L.; Zhang, X.-D. Combining global and local variation for image quality assessment. Acta Autom. Sin. 2020, 46, 2662–2671.

45. Varga, D. Saliency-Guided Local Full-Reference Image Quality Assessment. Signals 2022, 3, 483–496.

46. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv5, Improved real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 14–19 June 2020.

47. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014, 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

48. Yang, W.; Yuan, Y.; Ren, W.; Liu, J.; Scheirer, W.J.; Wang, Z.; Zhang, T.; Zhong, Q.; Xie, D.; Pu, S.; et al. Advancing image understanding in poor visibility environments: A collective benchmark study. IEEE Trans. Image Process. 2020, 29, 5737–5752.

49. Yang, S.; Luo, P.; Loy, C.C.; Tang, X. Wider face: A face detection benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 5525–5533.

50. Wang, W.; Xu, X. Lightweight CNN-Based Low-Light-Image Enhancement System on FPGA Platform. Neural Process. Lett. 2023, 55, 8023–8039.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).