Abstract

Impulse radio ultra-wideband (IR-UWB) radar, operating in the low-frequency band, can penetrate walls and utilize its high range resolution to recognize different human activities. Complex deep neural networks have demonstrated significant performance advantages in classifying radar spectrograms of various actions, but at the cost of substantial computational overhead. In response, this paper proposes a lightweight model named TG2-CAFNet. First, clutter suppression and time–frequency analysis are used to obtain range–time and micro-Doppler feature maps of human activities. Then, leveraging GhostV2 convolution, a lightweight feature extraction module, TG2, suitable for radar spectrograms is constructed. Using a parallel structure, the features of the two spectrograms are extracted separately. Finally, to further explore the correlation between the two spectrograms and enhance the feature representation capabilities, an improved nonlinear fusion method called coordinate attention fusion (CAF) is proposed based on attention feature fusion (AFF). This method extends the adaptive weighting fusion of AFF to a spatial distribution, effectively capturing the subtle spatial relationships between the two radar spectrograms. Experiments showed that the proposed method remains highly lightweight while achieving a recognition accuracy of 99.1%.

1. Introduction

As a form of information transmission, human activity recognition (HAR) has significant application value in security, counter-terrorism, disaster rescue, smart homes, intelligent driving, and traffic safety [1]. Traditional HAR technologies mainly rely on optical sensors and wearable devices [2]. Optical sensors, such as cameras, can provide intuitive visual information but are limited in low-light or occluded environments, and pose significant privacy concerns. Wearable devices, while capable of providing continuous physiological data monitoring, are highly intrusive and require the user to wear them constantly, which can affect user comfort and acceptance.

In contrast, radar-based HAR offers unique advantages. IR-UWB radar is an ultra-wideband radar that uses extremely short pulses to transmit data. When operating in the low-frequency band, the radar waves it emits can penetrate walls and other obstacles under non-contact conditions, achieving non-line-of-sight behavior recognition. This effectively addresses the challenge of HAR in complex occlusion environments where cameras are not viable, while also protecting personal privacy [3]. Due to its extremely narrow pulses, IR-UWB radar has a very high range resolution, sufficient to distinguish subtle human movements. Moreover, because signal transmission relies on brief pulses, IR-UWB radar has low power consumption, making it highly advantageous for constructing lightweight HAR systems [4].

The process of through-wall HAR using IR-UWB low-frequency radar consists of four main steps: clutter suppression, signal transformation, feature extraction, and classifier recognition. The echo data obtained from pulse radar are in the form of a two-dimensional range–time (RT) matrix, which directly reflects information about the target’s spatial position and temporal variations. By performing clutter suppression on the raw data, an RT matrix predominantly containing human motion information can be obtained. Feature visualization operations on the RT matrix result in a range–time map (RTM). It is possible to skip the signal transformation step and directly perform feature extraction on the RTM. For instance, Wang et al. [5] argued that the transformed signal data might not effectively represent the intrinsic features of the movement and, therefore, they directly used the RTM as the input feature for their proposed method, an autoencoder self-organized mapping network.

However, the mainstream perspective still holds that frequency information can reveal the periodic characteristics of signals and is key to analyzing signal variations. The micro-movements of different actions vary, leading to different micro-Doppler frequency features for different actions. By leveraging this characteristic, performing time–frequency transformation on the filtered RT data can yield a time–Doppler map (TDM), which depicts different actions. In the past, the features used for classification and recognition were typically manually extracted from TDMs and then input into classifiers built with traditional machine learning (ML) algorithms. Additionally, applying a Fourier transform to each range cell in the RT matrix results in a range–Doppler map (RDM), which reflects the relationship between the action frequency and range. However, the RDM is rarely used as a standalone feature map and is often fused with RTM and TDM features.

Radar-based HAR methods relying on manual feature extraction are heavily dependent on experience-based heuristic algorithms and prior knowledge. The selected features are often shallow features, which means the final recognition performance is significantly influenced by the quality of the extracted features and their independence. In recent years, deep learning has achieved remarkable success in feature extraction across multiple research fields, such as image recognition [6], image segmentation [7], and speech recognition [8]. Deep learning can automatically extract high-level features and has strong generalization capabilities, which provide unique advantages in processing radar spectrograms.

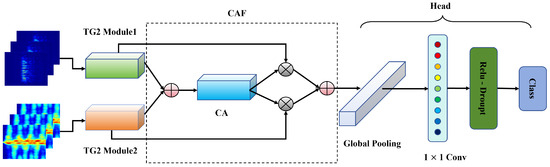

However, deep learning models typically require extensive computational resources and storage, conflicting with the size, power, and cost constraints of radar devices. This makes it challenging to deploy complex neural networks directly on radar devices. Furthermore, existing lightweight models often compromise the model’s representational capacity during compression and acceleration, impacting recognition accuracy. To address this issue, this paper proposes TG2-CAFNet, a through-wall radar-based HAR method leveraging a lightweight network. The proposed method constructs a lightweight feature extraction module, TG2, based on GhostV2 convolution, which extracts RTM and TDM features in parallel. Then, an attention feature fusion (AFF) method improved with coordinate attention (CA) is used to capture correlations between multi-domain features and adaptively fuse inter-map and intra-map spatial features.

The innovations of this paper can be summarized as follows:

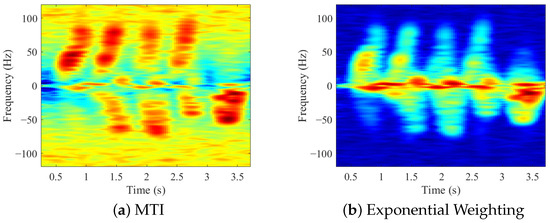

- This paper demonstrates that, under through-wall conditions, the exponential weighting method is more suitable than traditional moving target indication (MTI) for suppressing wall clutter. By employing exponential weighting, the multipath effect of a wall can be effectively mitigated, enhancing the resolution of target signals in the frequency domain, and thereby improving the signal quality and recognition accuracy of through-wall radar systems.

- The proposed TG2 module, based on GhostV2 convolution, is capable of generating rich feature representations with a small number of parameters and FLOPs. Unlike most existing lightweight models that are based on a single feature domain, the TG2 module is able to extract features from both range–time map (RTM) and time–Doppler map (TDM) domains in a parallel manner, fully utilizing multi-domain information and effectively enhancing feature representation capabilities. This approach addresses the limitation of the single-domain feature expression found in current lightweight models.

- This paper proposes a feature fusion method based on coordinate attention (CA), named CAF. CAF improves upon the traditional attention feature fusion (AFF) by extending the adaptive weighting mechanism to the spatial dimension, enabling information from different feature domains (RTM and TDM) to be fused at a finer spatial granularity. This method not only enhances the effectiveness of feature fusion but also significantly improves the model recognition accuracy, while maintaining lightweight model properties.

2. Related Works

2.1. Radar-Based HAR with Handcrafted Features

Handcrafted features generally refer to statistical quantities such as the mean, variance, frequency, amplitude, and bandwidth. Commonly used machine learning algorithms include support vector machine (SVM), dynamic time warping (DTW), and random forest. In reference [9], Çağlıyan et al. extracted ten different statistical features, such as the mean, extrema, and bandwidth of the torso, peaks, and envelope from a TDM of walking, running, and crawling actions. These features were then used as input to a K-nearest neighbors (KNN) classifier. In reference [10], Li et al. detailed 23 different statistical features of a micro-Doppler spectrogram, including experience-based features, image processing-based features, and transform-based features. From these 23 features, they selected the seven that had the greatest impact on classification performance and were least correlated with one another as input for an SVM. Finally, they proposed a custom hierarchical structure for activity classification, which improved the accuracy of multi-class classification by 2%.

2.2. Radar-Based HAR with Deep Learning

Kim et al. [11] were the first to apply deep convolutional neural networks (DCNNs) to radar-based human activity recognition. They used time–frequency images directly as input and successfully distinguished seven human activities with an accuracy of 90.9%, and human versus non-human targets with an accuracy of 97.6%. They treated the classification of time–frequency images as an image recognition problem, a method that has been widely adopted in subsequent research and has become the mainstream approach today. CNNs are particularly suitable for processing data rich in spatial features. However, radar data and human activity recognition typically involve time series analysis. Therefore, some researchers believe that models should be designed to leverage this temporal feature and handle radar time-series data. To address this, Du et al. [12] designed a segmented convolutional gated recurrent neural network. This structure computes implicit features from each time segment of the micro-Doppler images through convolution and then uses bidirectional GRUs to further extract features based on the current time step. This method can recognize and locate activities of varying durations, offering a finer temporal resolution and better generalization.

RTMs and RDMs can provide additional perspectives for explaining human activities. By combining complementary information from multiple domains, a more comprehensive representation of activities can be captured, enhancing the model’s ability to distinguish similar actions. Cao et al. [13], based on a parallel CNN structure and SE attention mechanism, fused TDM and RTM spectrograms. Their experimental results demonstrated that multi-domain feature fusion can effectively improve the accuracy of radar-based human activity recognition. He et al. [14], using MobileNet-V3, fused an RTM, TDM, and RDM at the data level, feature level, and decision level to complete their study on fall detection. Through experimental comparisons, they found that data fusion and decision fusion methods both outperformed single-domain approaches, with decision fusion achieving the best results under the same conditions.

Transformers have demonstrated excellent performance in handling long-range dependencies in sequential data, which is crucial for human activity recognition tasks where actions are distributed over time. Li et al. [15], based on an RTM, proposed a Swin Transformer encoder that combines cosine similarity attention and patch overlap to capture deep spatiotemporal features of human activity spectrograms. In experimental comparisons, this method achieved better performance than traditional CNNs and RNNs. Similarly, Zhang et al. [16] first used parallel CNNs to extract features from the RTM and TDM separately, then fused these features and fed them into a Swin Transformer for classification, enabling comprehensive extraction and modeling of the spatiotemporal features of radar spectrograms.

2.3. Lightweight Approaches for Radar-Based HAR

Although significant progress has been made in radar-based human activity recognition research, the study of lightweight models in this field still needs to be fully developed. Zhu et al. [17] targeted the TDM and constructed an efficient CNN, Mobile-RadarNet, using one-dimensional depthwise convolution and pointwise convolution. They indicated that this architecture is specifically designed for human activity classification based on micro-Doppler features. Chakraborty et al. [18] built a lightweight model based on depthwise separable convolution, weighting the feature maps and adding negligible training parameters to enhance the model’s generalization ability to suspicious behaviors. For fall detection, Ou et al. [19] employed a unique parallel one-dimensional depthwise convolution structure as the core module, achieving significant parameter reduction.

However, current lightweight research, constrained by parameters and FLOPs (floating-point operations), forces researchers to abandon multi-domain fusion methods. This approach requires a parallel structure in the network, doubling the parameters and FLOPs. To overcome this challenge, let us hypothesize the existence of a model with so few parameters and FLOPs that doubling them would still be comparable to the scale of current advanced lightweight models. In this case, multi-domain fusion technology could be naturally adopted to further improve the network performance. Therefore, we propose the TG2-CAFNet method to address this issue.

3. Multi-Domain Representation of Human Activity Features

3.1. Clutter Suppression and RTM

The data collected by IR-UWB radar are directly represented in range–time (RT) form, where the range dimension is the fast-time axis and the time dimension is the slow-time axis. At this stage, the data obtained are a raw RT numerical matrix without any processing.

In through-wall scenarios, the raw RT matrix contains not only the target echo components but also the strong direct current clutter and multipath components caused by the wall [20]. To obtain an RTM that represents human activity features, clutter suppression is necessary. In non-through-wall radar activity recognition research, moving target indication (MTI) [21] technology is widely used for static clutter suppression. However, under through-wall conditions, this method tends to excessively attenuate the target amplitude and is less effective at suppressing multipath components, resulting in a low signal-to-noise ratio after filtering. To better suppress wall-induced clutter, this paper employs an exponential weighting method, an improved version of the average background subtraction method [22]. The differences between MTI and the exponential weighting method are more evident in the TDM representation, so Section 3.2 demonstrates how the exponential weighting method outperforms MTI under through-wall conditions.

The average background subtraction method estimates the background of a detected area based on the echo signals. By subtracting the background estimate from the current echo, a filtered signal can be obtained. Let X denote the radar echo RT matrix, with elements indexed by the m-th row (slow-time) and n-th column (fast-time). The background estimate Y can be expressed as [22]

As can be seen from Equation (1), the current background estimate consists of the previous background estimate and the current echo. However, in through-wall environments, the radar echo background has significant fluctuations. To prevent the background from earlier times from excessively contributing to the current background, Equation (1) can be adjusted by adding a weighting factor to smooth the background estimate:

The method described in Equation (2) is known as the exponential weighting method. If the weighting factor is assigned a suitable fixed value, the contribution of the background from earlier times decreases as t increases. Using Equation (2), a radar echo matrix with suppressed clutter can be easily obtained:

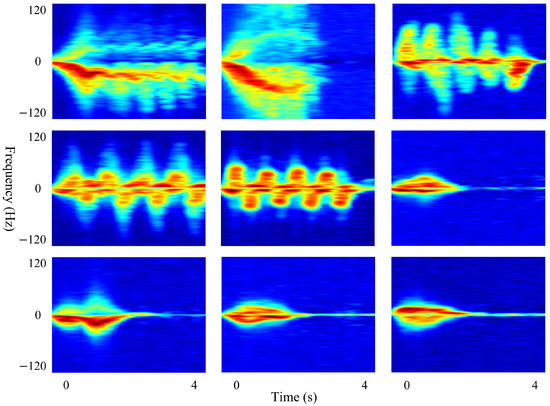
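The exponential weighting recursion described above can be sketched in NumPy. The update rule used here, in which the background becomes `alpha` times the previous background plus `(1 - alpha)` times the current pulse and is then subtracted from that pulse, is one common form of exponentially weighted background subtraction and is an assumption. The function name `exp_weight_clutter_suppress`, the default `alpha = 0.9`, and the initialization with the first pulse are likewise illustrative choices and are not taken from the paper.

```python
import numpy as np

def exp_weight_clutter_suppress(X, alpha=0.9):
    """Exponentially weighted background subtraction (illustrative sketch).

    X     : 2-D real array, rows = slow time (pulses), columns = fast time (range bins).
    alpha : assumed weighting factor in (0, 1); larger values forget old pulses more slowly.
    """
    X = np.asarray(X, dtype=float)
    Z = np.empty_like(X)
    background = X[0].copy()              # assumed initialization: first pulse
    for m in range(X.shape[0]):
        # Blend the previous background estimate with the current pulse.
        background = alpha * background + (1.0 - alpha) * X[m]
        # Subtract the background estimate from the current pulse.
        Z[m] = X[m] - background
    return Z

# Toy data: a strong static "wall" return plus a weak target that drifts in range.
n_pulses, n_bins = 200, 64
wall = 5.0 * np.exp(-0.5 * ((np.arange(n_bins) - 10) / 2.0) ** 2)
X = np.tile(wall, (n_pulses, 1))
for m in range(n_pulses):
    X[m, 20 + m // 10] += 1.0             # target moves one bin every 10 pulses
Z = exp_weight_clutter_suppress(X, alpha=0.9)
```

In this toy case the static wall return is cancelled, while the moving target survives with an amplitude that decays the longer it stays in one range bin, which is the behavior the weighting factor controls.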

By applying range compensation to the clutter-suppressed matrix and displaying it as a heatmap, a range–time map (RTM) of human activity can be obtained. Figure 1 shows the RTMs of nine different actions. The purpose of range compensation is to enhance the distant features of the RTM while, in relative terms, further suppressing near-range clutter. However, this suppression is only effective for RTMs, and the use of range compensation does not have a significant impact on TDMs, as discussed in Section 3.2 of this paper. Here, the range compensation can be expressed as

where the left-hand side is the element in the m-th row and n-th column of the range-compensated human activity RT matrix, and k is a constant, set to 2 in this paper.
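As a rough illustration of range compensation, the sketch below scales each fast-time bin by its normalized range raised to the power k. This power-law form, the use of the bin index as a stand-in for physical distance, and the function name `range_compensate` are assumptions made for illustration; only the value k = 2 comes from the text.

```python
import numpy as np

def range_compensate(Z, k=2.0):
    """Scale each fast-time bin by (normalized range) ** k (assumed form)."""
    Z = np.asarray(Z, dtype=float)
    n_bins = Z.shape[1]
    # Normalized range in (0, 1]; the bin index stands in for physical distance.
    r = np.arange(1, n_bins + 1) / n_bins
    # Broadcast the per-bin gain over all slow-time rows.
    return Z * r[np.newaxis, :] ** k

# A flat input makes the gain profile visible directly.
flat = np.ones((3, 4))
compensated = range_compensate(flat, k=2.0)
```

With a gain of this shape, far bins keep their amplitude while near bins are attenuated, which matches the stated purpose of enhancing distant features relative to near-range clutter.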

Figure 1.

RTM of nine different actions. From left to right and top to bottom, the actions represented are walking, running, punching, kicking, stepping, bending, sitting, squatting, and standing up.

The RTM represents the features of human activity in the range–time domain. Generally, different actions have different radial distances relative to the radar at different times. As shown in Figure 1, the actions of walking and punching exhibit significant differences in the range dimension, which is a very obvious feature difference. However, some actions like punching, kicking, and stepping display very similar features. Faced with such similar actions, RTMs, relying solely on the range–time representation, cannot effectively distinguish them. Therefore, we need to use a more effective feature representation map: the time–Doppler map (TDM).

3.2. Time–Frequency Transformation and TDMs

In reality, we cannot transmit complex-valued signals; thus, the values in the RT matrix Z obtained in the previous section are real-valued. To obtain a micro-Doppler feature map of human movements, performing time–frequency analysis on real-valued signals alone cannot capture negative frequency information. This limitation prevents us from intuitively understanding the physical meaning of the time–frequency map. Therefore, a Hilbert transform is applied to each pulse of Z, as follows

This yields the Hilbert transform of Z, which is taken as the imaginary part of a complex matrix whose real part is Z. The resulting matrix is the analytic signal of Z, which is the complex-valued RT matrix we expect.

The short-time Fourier transform (STFT) is simple and efficient for time–frequency analysis. According to the method in [23], the STFT is first performed on each range cell, and then the results are summed. This approach makes the generated time–frequency map very smooth:

where w is the window function, typically chosen to have localization properties; in this paper, a Hanning window is used. Here, t is the time index, used to determine the position of the window function in the signal. The frequency variable can be expressed as 2πk/N, where k is the frequency index and N is the FFT length. By processing the RT matrix using Equations (5)–(7), a TD matrix can be obtained. Figure 2 shows the TDMs of nine different actions.

Figure 2.

TDM of nine different actions. From left to right and top to bottom, the actions represented are walking, running, punching, kicking, stepping, bending, sitting, squatting, and standing up.
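The processing chain of this subsection (Hilbert transform of each pulse, an STFT along slow time for every range cell, then a sum over range cells) can be sketched with NumPy alone. The window length, hop size, FFT length, and function names are hypothetical, and summing STFT magnitudes rather than complex values is an assumption, since the exact summation used in [23] is not restated here.

```python
import numpy as np

def analytic_signal(Z, axis=-1):
    """Analytic signal via the FFT-based Hilbert transform along `axis`."""
    Z = np.asarray(Z, dtype=float)
    n = Z.shape[axis]
    spectrum = np.fft.fft(Z, axis=axis)
    # Keep DC (and Nyquist for even n), double positive bins, zero negative bins.
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    shape = [1] * Z.ndim
    shape[axis] = n
    return np.fft.ifft(spectrum * h.reshape(shape), axis=axis)

def time_doppler_map(Z, prf, win_len=64, hop=8, nfft=128):
    """Sum per-range-cell STFT magnitudes into one time-Doppler matrix."""
    Za = analytic_signal(Z, axis=1)                # Hilbert along fast time (each pulse)
    window = np.hanning(win_len)
    starts = range(0, Za.shape[0] - win_len + 1, hop)
    tdm = np.zeros((nfft, len(starts)))
    for j, s in enumerate(starts):
        frame = Za[s:s + win_len, :] * window[:, None]   # window along slow time
        spec = np.fft.fft(frame, n=nfft, axis=0)         # Doppler FFT per range cell
        tdm[:, j] = np.abs(np.fft.fftshift(spec, axes=0)).sum(axis=1)
    freqs = np.fft.fftshift(np.fft.fftfreq(nfft, d=1.0 / prf))
    return freqs, tdm

# Synthetic real-valued echo whose phase advances by +30 Hz (or -30 Hz) in slow time.
m = np.arange(480)[:, None]
n = np.arange(64)[None, :]
Z_pos = np.cos(2 * np.pi * 8 * n / 64 + 2 * np.pi * 30 * m / 240)
Z_neg = np.cos(2 * np.pi * 8 * n / 64 - 2 * np.pi * 30 * m / 240)
freqs, tdm_pos = time_doppler_map(Z_pos, prf=240)
_, tdm_neg = time_doppler_map(Z_neg, prf=240)
```

The two synthetic echoes differ only in the sign of their Doppler shift. Because the matrix is made analytic before the STFT, the peaks land on opposite sides of zero frequency, which is the negative-frequency information that a real-valued input cannot provide.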

Compared to RTMs, a TDM, which represents micro-Doppler features, often contains richer information about human activities. In addition to the overall movement, the motion of a human target also involves joint flexion and extension, adduction and abduction, and internal and external rotation, as well as small vibrations of the body surface. Together, these constitute more complex micro-movements, which introduce additional frequency modulation into the radar echo signal. These additional frequency components are called micro-Doppler frequencies and have an advantage in representing more detailed features. The actions that are relatively difficult to distinguish in Figure 1 show more obvious distinctions in the feature representation of Figure 2.

In Figure 3, we selected the time–frequency map of the punching action to compare the clutter suppression effects of MTI and exponential weighting. It is evident that the exponential weighting method is superior to MTI in terms of clutter suppression.

Figure 3.

Comparison of TDM results using MTI and exponential weighting methods.

4. Proposed Method

4.1. Overall Framework of the Model

In the field of radar-based human activity recognition, previous lightweight models often avoided using multi-domain feature fusion methods that significantly improve accuracy, because such fusion requires parallel structures, which substantially increase the number of parameters and FLOPs. However, our method constructs an efficient and lightweight feature extraction module using GhostV2 [24] convolution. This allows the model to remain lightweight, even when the number of parameters and FLOPs are doubled. Thus, we can utilize multi-domain feature fusion technology to achieve high recognition accuracy while keeping the computational cost comparable to that of existing lightweight models.

We propose a lightweight through-wall radar human activity recognition model named TG2-CAFNet. Specifically, we built a lightweight CNN feature extraction module, TG2, suitable for radar spectrograms, based on GhostV2 convolution. Using a parallel structure, we extract the features of the RTM and TDM separately, without sharing parameters. Since a TDM is another domain representation of an RTM, we cannot treat the two spectrograms as completely different types during feature fusion; they have certain correlations. Considering that traditional fusion methods find it challenging to capture this relationship, we employ AFF [25] to adaptively fuse the features of the spectrograms after the parallel feature extraction module has finished. Furthermore, given the physical significance of the spatial distribution of radar spectrograms, we improve AFF using coordinate attention (CA) [26], so that the weighted fusion can extend to a spatial distribution, effectively enhancing the expression capability of the fused features. Figure 4 shows the overall framework of TG2-CAFNet.

Figure 4.

Overall framework diagram of TG2-CAFNet. The content enclosed by the dashed line represents the CAF module, which performs nonlinear feature fusion.

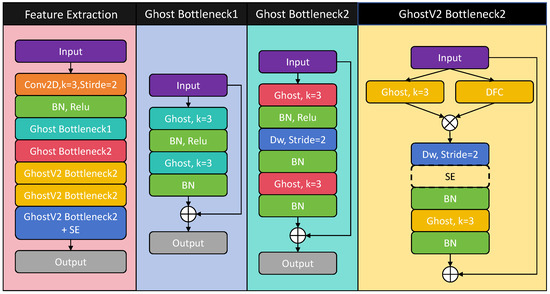

4.2. Optimized Feature Extraction Module for Radar Spectrograms: TG2

Because radar image backgrounds are simple, the recognition model does not need to be very complex. Therefore, through-wall radar human activity recognition is highly compatible with lightweight network models. Inspired by lightweight model construction ideas in the literature [18], we noticed that GhostNet [27] generates redundant intermediate features using inexpensive operations, significantly improving the performance compared to other lightweight models, with only a slight increase in the number of parameters and FLOPs. A radar human activity recognition model will actually be much smaller than an image recognition model, making this slight increase in computational overhead for improved performance entirely acceptable.

The core idea of GhostNet is that, in a well-trained deep neural network, rich and even redundant information in the feature maps often ensures a comprehensive understanding of the input data. Therefore, a ghost convolution can be completed in two steps. First, a 1 × 1 convolution is used to generate the intrinsic features [27]:

where X represents the input features and the convolution is pointwise. Then, a depthwise separable convolution generates multiple ghost features from the intrinsic features. By concatenating these ghost features with the intrinsic features, the ghost convolution is completed:

where the operation applied to the intrinsic features is the depthwise separable convolution. Figure 5 illustrates how a ghost convolution is performed.

Figure 5.

Ghost convolution. First, the input feature X is processed through a 1 × 1 convolution to generate intrinsic features. Then, the intrinsic features are used to produce ghost features through a cheaper operation. Finally, the intrinsic and ghost features are concatenated to form the output of the ghost convolution.
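To make the parameter saving of a ghost convolution concrete, the following sketch counts weights for a standard convolution and for a ghost convolution. It assumes that half of the output channels are intrinsic (ratio 2), that the cheap operation is a 3 × 3 depthwise convolution as in GhostNet [27], and that biases and normalization layers are ignored. The channel counts are hypothetical and are not those of TG2.

```python
def conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution without bias."""
    return c_in * c_out * k * k

def ghost_conv_params(c_in, c_out, ratio=2, dw_kernel=3):
    """Weights in a ghost convolution: 1 x 1 primary conv plus a depthwise cheap operation."""
    intrinsic = c_out // ratio                 # channels produced by the 1 x 1 convolution
    ghost = intrinsic * (ratio - 1)            # channels produced by the cheap operation
    primary = conv_params(c_in, intrinsic, 1)
    cheap = ghost * dw_kernel * dw_kernel      # one small filter per ghost channel
    return primary + cheap

standard_3x3 = conv_params(64, 128, 3)         # 73728 weights
standard_1x1 = conv_params(64, 128, 1)         # 8192 weights
ghost = ghost_conv_params(64, 128)             # 4096 + 576 = 4672 weights
```

Under these assumptions the ghost convolution needs roughly half the weights of a plain pointwise convolution with the same output width, which is why duplicating the branch for two input maps remains affordable.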

Radar spectrograms contain rich physical significance in their spatial distribution, but ghost convolution’s ability to capture spatial information is limited, due to the presence of pointwise convolution. Therefore, we chose GhostV2 to enhance this capability. In fact, the powerful spatial-capture ability of GhostV2 mainly comes from the introduction of DFC (decoupled fully connected) attention, which is a lightweight improvement of fully connected attention and can be specifically represented as

where the input element is taken at the h-th row and w-th column of X; the two weight tensors belong to the horizontal FC and vertical FC, respectively; ⊙ denotes element-wise multiplication; and the result is the attention map obtained through DFC.
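The decoupling idea behind DFC attention can be illustrated for a single channel: instead of one fully connected layer over all H × W positions, features are aggregated along one axis and then along the other. The sketch below follows the general description in [24]; the weight tensor layouts, the order of the two passes, and all names are assumptions chosen for illustration.

```python
import numpy as np

def dfc_attention(z, w_vertical, w_horizontal):
    """Decoupled fully connected attention for one channel (illustrative sketch).

    z            : (H, W) feature map.
    w_vertical   : (H, H, W) weights; w_vertical[h, k, w] mixes row k into row h of column w.
    w_horizontal : (W, H, W) weights; w_horizontal[w, h, k] mixes column k into column w of row h.
    """
    a_vert = np.einsum("hkw,kw->hw", w_vertical, z)           # aggregate along height
    return np.einsum("whk,hk->hw", w_horizontal, a_vert)      # then aggregate along width

H, W = 4, 6
z = np.arange(H * W, dtype=float).reshape(H, W)

# With all-ones weights, every output position sees the sum of the whole map.
a = dfc_attention(z, np.ones((H, H, W)), np.ones((W, H, W)))

decoupled_weights = H * H * W + W * H * W      # 240 weights for the two passes
full_fc_weights = (H * W) ** 2                 # 576 weights for a dense FC layer
```

Even in this small example the two passes together cover the full spatial extent of the map with fewer weights than a dense layer, and the gap widens as H and W grow.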

We use GhostV2 convolution to construct a feature extraction module and name it Tiny GhostV2 (TG2). The structure of a single TG2 is shown in Figure 6, and Table 1 provides the number of channels for each block of TG2. This design is inspired by the structure of ResNet18 and considers the appropriate number of feature channels for radar spectrograms, enabling TG2 to achieve the maximum performance with minimal parameters and FLOPs. In Section 6.2, we experimentally verify that even slight changes to this structure reduce the performance of the feature extraction module for radar spectrograms.

Figure 6.

The structure of the TG2 feature extraction module. The diagram consists of four columns: the first column represents the overall structure of TG2, and the remaining three columns illustrate the specific contents of the modules within TG2. Notably, the SE module enclosed by a dashed line in GhostV2 Bottleneck2 indicates that this module is optional.

Table 1.

The number of channels for each block of TG2.

It should be noted that we place an SE [28] module in the last bottleneck block of TG2. The DFC attention in GhostV2 already has strong spatial attention capabilities, and the SE module can further enhance the channel feature attention.

4.3. Improved Attention Feature Fusion: CAF

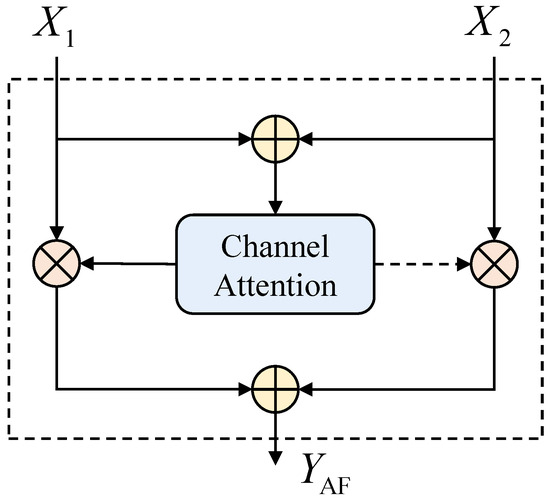

Feature fusion can effectively utilize the complementary characteristics between multiple features to enhance network performance. However, current research on feature fusion in the field of radar human activity recognition mostly remains at the level of simple addition, multiplication, or concatenation. These operations only provide fixed linear fusion, leaving us uncertain whether a combination is suitable for specific objects. Attention feature fusion (AFF) provides us with a dynamic and selective solution. Figure 7 illustrates the implementation process of AFF.

Figure 7.

AFF implementation process. The dashed arrow represents .

Given the high-level features of the two types of spectrograms and a channel attention module, the output after AFF can be represented as [25]:

Unless the task specifically requires otherwise, channel attention mechanisms are an excellent choice within AFF modules. However, radar spectrograms do not exhibit spatial invariance like visual images [29]. When observing the same target object, the spatial distribution of radar spectrograms does not change significantly, and this spatial information carries substantial physical significance. Therefore, it is necessary to improve AFF by extending weighted fusion to include spatial distribution.

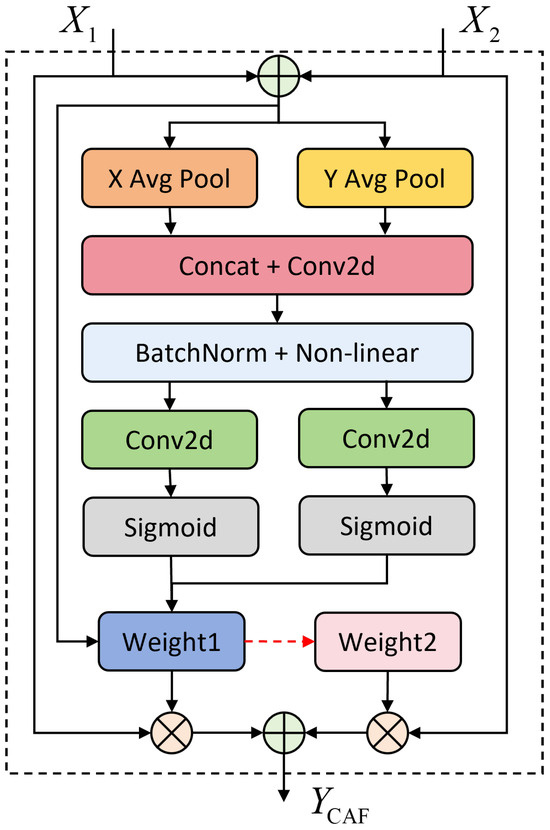

Global pooling in SE attention is used to encode channel information, but this global compression method cannot perform spatial encoding. Coordinate attention (CA) improves on SE attention, allowing it to not only focus on channel features but also accurately capture the location information of the target. Specifically, this method decomposes the global pooling operation into two 1D pooling operations. Pooling kernels that span the full height and the full width of the feature map are applied along the vertical and horizontal directions, respectively, to aggregate and generate the spatial coordinate encoding on the x-axis and y-axis for the c-th channel feature map:

where the input is the feature of the c-th channel. Then, to effectively capture the relationship between channels, these two features are concatenated along the channel dimension and input into a convolution, yielding the intermediate feature:

where the two encodings are concatenated along the channel dimension and the result is passed through a nonlinear activation function. Next, the intermediate feature is split into two 1D tensors of length W and H, respectively, which are input into two new convolutions to obtain two attention weights:

where the activation is the sigmoid function. Based on this, we modify Equation (14) to obtain the output of CAF:

where the two operands are elements of the two types of high-level features. To keep the formula readable, all variables in Equation (14) are assumed to belong to the c-th channel by default. Figure 8 shows a flow chart of CAF.

Figure 8.

CAF implementation process. In the diagram, weight2 represents .

The features extracted by the TG2 lightweight parallel feature extraction module can be adaptively weighted and fused with channel and spatial information through CAF. This not only achieves a lightweight design but also effectively enhances the network’s feature representation capability.
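A minimal NumPy sketch of the fusion idea follows. It assumes that the attention is computed from the element-wise sum of the two feature maps, as in AFF [25], and that the fusion weight at each position is the product of the height and width attention values, with its complement applied to the second input. The two pooled encodings are placed end to end (length H + W) so that they can be split again, ReLU stands in for the unspecified nonlinearity, the 1 × 1 convolutions are written as channel-mixing matrix products with random weights, and all names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv1x1(x, w):
    """1 x 1 convolution as channel mixing. x: (C_in, L), w: (C_out, C_in)."""
    return w @ x

def coordinate_attention_weights(u, w1, wh, ww):
    """Height and width attention for a feature map u of shape (C, H, W)."""
    H = u.shape[1]
    z_h = u.mean(axis=2)                       # (C, H): average over width
    z_w = u.mean(axis=1)                       # (C, W): average over height
    f = np.maximum(conv1x1(np.concatenate([z_h, z_w], axis=1), w1), 0.0)
    f_h, f_w = f[:, :H], f[:, H:]              # split back into the two directions
    g_h = sigmoid(conv1x1(f_h, wh))            # (C, H)
    g_w = sigmoid(conv1x1(f_w, ww))            # (C, W)
    return g_h, g_w

def caf_fuse(x, y, w1, wh, ww):
    """Fuse two feature maps with a spatially varying, per-channel weight."""
    g_h, g_w = coordinate_attention_weights(x + y, w1, wh, ww)
    weight = g_h[:, :, None] * g_w[:, None, :] # (C, H, W), each entry in (0, 1)
    return weight * x + (1.0 - weight) * y

rng = np.random.default_rng(0)
C, H, W, C_mid = 8, 6, 5, 4
x = rng.standard_normal((C, H, W))             # stand-in for RTM branch features
y = rng.standard_normal((C, H, W))             # stand-in for TDM branch features
w1 = rng.standard_normal((C_mid, C))
wh = rng.standard_normal((C, C_mid))
ww = rng.standard_normal((C, C_mid))
fused = caf_fuse(x, y, w1, wh, ww)
```

Because every weight lies strictly between 0 and 1, each fused value is a convex combination of the two inputs at that position. Unlike a purely channel-wise weight, the mixing ratio here changes from one position to the next.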

5. Data Collection Experiment and Dataset Description

Datasets for through-wall radar human activity recognition are currently scarce. Therefore, a custom dataset was created for this study.

5.1. Experiment Setup

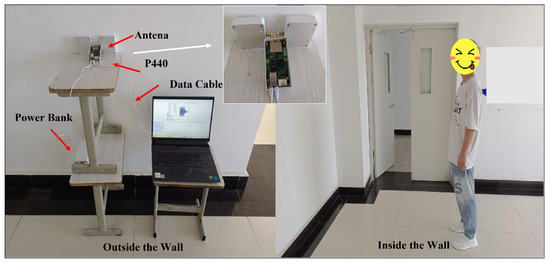

In this study, the P440 Monostatic Radar Module (MRM) developed by Time Domain, Huntsville, AL, USA was used. The P440 MRM is equipped with two UWB planar directional antennas. Additionally, a laptop was used as the system’s control and data receiving terminal. Figure 9 shows the experimental setup for through-wall radar human activity recognition in an indoor environment.

Figure 9.

The experimental setup for through-wall radar human activity recognition in an indoor environment.

The P440 MRM is a fully coherent short-range radar that emits high-bandwidth Gaussian pulses with centimeter-level waveform resolution. In this experiment, the radar operated in the 3.1–4.8 GHz frequency band with a center frequency of 4.3 GHz. This low-frequency radar has excellent capability to penetrate obstacles.

The radar captures the Doppler frequency shift and radial velocity of moving targets through the Doppler effect. By performing time–frequency transformation on a radar time-series signal, a graph showing the relationship between time and target velocity can be obtained. However, the target velocity may not always be measurable. According to the Shannon sampling theorem, in order for a radar signal to capture the maximum velocity information of a moving target, the radar’s pulse repetition frequency (PRF) needs to be greater than twice the maximum Doppler frequency produced by the moving target. Based on this principle, the maximum unambiguous velocity of a radar can be derived as

where is the radar center frequency, and c is the speed of light. We needed the maximum unambiguous velocity of the radar to be greater than the speed generated by most actions, which meant that the PRF should be greater than the micro-Doppler frequency of most human movements. It should be noted that, theoretically, a higher PRF is more beneficial for human activity recognition. However, given the constant total power of the radar system, increasing the PRF would lead to a decrease in the signal-to-noise ratio (SNR) of the radar echo, which is undesirable for through-wall tasks. Therefore, after comprehensive consideration, we set the PRF to 240 Hz, resulting in a maximum unambiguous velocity of approximately m/s. Additionally, to ensure a high SNR for the radar echo, we set the target activity range to within 4.38 m behind the wall.
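As a quick numeric check of the PRF choice, the sketch below evaluates the standard relation $v_{\max} = c \cdot \mathrm{PRF} / (4 f_c)$, which follows from requiring the PRF to exceed twice the Doppler shift $2 v f_c / c$. The function name and the rounded value of the speed of light are assumptions made for illustration.

```python
SPEED_OF_LIGHT = 3.0e8  # m/s, rounded value (assumption for illustration)


def max_unambiguous_velocity(prf_hz: float, center_freq_hz: float) -> float:
    """Largest radial speed whose Doppler shift 2*v*fc/c stays below PRF/2."""
    return SPEED_OF_LIGHT * prf_hz / (4.0 * center_freq_hz)


# Values taken from the text: PRF = 240 Hz, center frequency = 4.3 GHz.
v_max = max_unambiguous_velocity(prf_hz=240.0, center_freq_hz=4.3e9)
print(f"v_max = {v_max:.2f} m/s")
```

Doubling the PRF would double this limit, which makes the trade-off against SNR described above explicit.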

5.2. Dataset Description

In this experiment, the radar was positioned at a height of 1.08 m above the ground. The wall material was reinforced concrete with a thickness of 0.26 m. We designed a total of 9 actions, as described in Table 2. A key measure of a model’s ability to recognize actions is its capability to distinguish between similar actions. Therefore, the actions we designed included groups of similar actions. Among them, walking and running constituted one group; punching, kicking, and stepping constituted another group; and bending, sitting, squatting, and standing up constituted the third group.

Table 2.

Action description.

Volunteers were recruited for the experiment, totaling 12 participants. Each participant performed each action 20 times, resulting in 240 samples for a single action. In total, 2160 samples were collected for all actions. In a single sample, the radar data were represented as a matrix with a vertical dimension of slow time, totaling 960 timestamps, and a horizontal dimension of fast time, totaling 480 sampling points. The slow time dimension represents actual time, while the fast time dimension represents distance. This form of matrix, after applying the clutter suppression methods mentioned earlier, resulted in the RTM used in this study.
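The dataset dimensions quoted above can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the class and field names are hypothetical, and the sample duration assumes that each slow-time row corresponds to one pulse at the 240 Hz PRF, which the text does not state explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetSpec:
    """Hypothetical container for the figures quoted in the text."""
    participants: int = 12
    repetitions_per_action: int = 20
    num_actions: int = 9
    slow_time_bins: int = 960   # rows: actual time
    fast_time_bins: int = 480   # columns: range
    prf_hz: float = 240.0

    @property
    def samples_per_action(self) -> int:
        return self.participants * self.repetitions_per_action

    @property
    def total_samples(self) -> int:
        return self.samples_per_action * self.num_actions

    @property
    def sample_shape(self) -> tuple:
        return (self.slow_time_bins, self.fast_time_bins)

    @property
    def sample_duration_s(self) -> float:
        # Assumption: one slow-time row per transmitted pulse.
        return self.slow_time_bins / self.prf_hz


spec = DatasetSpec()
print(spec.samples_per_action, spec.total_samples)
print(spec.sample_shape, spec.sample_duration_s)
```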

6. Experimental Results and Discussion

6.1. Training Strategies

The proposed TG2-CAFNet model was implemented using the PyTorch 2.0 deep learning framework. We divided the dataset into a training set and a testing set in a 7:3 ratio. To fully leverage the advantages of the fusion network, we employed a fine-tuning strategy. Specifically, we first trained TG2Net, built with TG2, separately on the RTMs and TDMs using the Adam optimizer with an initial learning rate of 0.0001 for 60 epochs. The two trained TG2Net models then served as pre-trained networks: with their head layers removed, they formed the fine-tuned part of TG2-CAFNet. The remaining parts were then trained with a learning rate of 0.0002 using the Adam optimizer. This strategy ensured that each TG2 branch first learned to extract features from its own spectrogram type, so that fusion could build on already well-trained feature extraction modules. It also avoided the drop in accuracy that can result from the increased network complexity when TG2-CAFNet is trained directly.
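The two-stage schedule can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `make_branch` is a tiny hypothetical stand-in for TG2Net, the fusion is a plain addition rather than CAF, random tensors replace the radar maps, and each stage runs a single step instead of full epochs. The use of a lower learning rate (0.0001) for the pre-trained backbones in stage 2 is one possible reading of the text and is also an assumption.

```python
import torch
from torch import nn

NUM_CLASSES = 9


def make_branch() -> nn.Sequential:
    """Hypothetical stand-in for TG2Net: small backbone followed by a head."""
    backbone = nn.Sequential(
        nn.Conv2d(1, 8, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),
    )
    return nn.Sequential(backbone, nn.Linear(8, NUM_CLASSES))


class FusionNet(nn.Module):
    """Two pre-trained backbones plus newly initialised fusion/classifier parts."""

    def __init__(self, rt_backbone: nn.Module, td_backbone: nn.Module):
        super().__init__()
        self.rt_backbone = rt_backbone
        self.td_backbone = td_backbone
        self.classifier = nn.Linear(8, NUM_CLASSES)

    def forward(self, rt, td):
        # Placeholder fusion (addition); the paper uses CAF here.
        return self.classifier(self.rt_backbone(rt) + self.td_backbone(td))


def train_one_step(model, optimizer, inputs, labels) -> float:
    model.train()
    optimizer.zero_grad()
    loss = nn.functional.cross_entropy(model(*inputs), labels)
    loss.backward()
    optimizer.step()
    return loss.item()


torch.manual_seed(0)
rt_maps = torch.randn(4, 1, 32, 32)   # random stand-ins for RTMs
td_maps = torch.randn(4, 1, 32, 32)   # random stand-ins for TDMs
labels = torch.randint(0, NUM_CLASSES, (4,))

# Stage 1: train one TG2Net-like branch per map type (lr = 1e-4).
rt_net, td_net = make_branch(), make_branch()
for net, maps in ((rt_net, rt_maps), (td_net, td_maps)):
    stage1_opt = torch.optim.Adam(net.parameters(), lr=1e-4)
    train_one_step(net, stage1_opt, (maps,), labels)

# Stage 2: drop the heads (index 1), reuse the backbones (index 0),
# and train the new parts with lr = 2e-4.
fusion_net = FusionNet(rt_net[0], td_net[0])
stage2_opt = torch.optim.Adam([
    {"params": fusion_net.rt_backbone.parameters(), "lr": 1e-4},  # assumption
    {"params": fusion_net.td_backbone.parameters(), "lr": 1e-4},  # assumption
    {"params": fusion_net.classifier.parameters(), "lr": 2e-4},
])
stage2_loss = train_one_step(fusion_net, stage2_opt, (rt_maps, td_maps), labels)
```

Per-parameter-group learning rates keep the pre-trained backbones from drifting too quickly while the newly added layers catch up. Freezing the backbones entirely would be an alternative reading of the same description.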

6.2. Comparison of Feature Extraction Module Structures

The GhostNet series networks are lightweight networks designed for image classification, which may not be optimal for radar imagery recognition. Therefore, we designed TG2Net specifically to handle radar imagery. To demonstrate the advantages of TG2Net, we conducted a longitudinal comparison with the GhostNet series networks, as shown in Table 3. Here, the accuracy is based on TD as the input.

In this subsection and the subsequent experiments, the evaluation of lightweight network models is based on two categories of metrics. The first category encompasses model efficiency indicators, including the number of trainable parameters, FLOPs, and inference time, which assess the computational cost and operational efficiency. The second category includes performance metrics, namely accuracy, precision, recall, and F1 score, offering a comprehensive assessment of the model’s classification capabilities.
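The four performance metrics can all be derived from a confusion matrix. The sketch below assumes macro averaging over classes, which the text does not specify, and uses a two-class toy matrix rather than results from this study. The function name is hypothetical.

```python
def macro_metrics(confusion):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))
    precisions, recalls, f1_scores = [], [], []
    for k in range(n):
        true_pos = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        precision = true_pos / predicted_k if predicted_k else 0.0
        recall = true_pos / actual_k if actual_k else 0.0
        denom = precision + recall
        f1_scores.append(2 * precision * recall / denom if denom else 0.0)
        precisions.append(precision)
        recalls.append(recall)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1_scores) / n,
    }


toy_confusion = [[8, 2], [1, 9]]  # toy counts, not data from the paper
metrics = macro_metrics(toy_confusion)
print(metrics)
```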

By comparing TG1Net and TG2Net, as well as GhostNetV1-0.5 and GhostNetV2-0.5 in Table 3, it can be observed that adding DFC attention significantly improved the network performance, at the cost of a slight increase in the number of parameters and FLOPs. This improvement was mainly attributed to the DFC’s ability to capture the dependencies between pixels in distant spatial locations. However, there was an exception: the accuracy of GhostNetV2 was approximately 0.7% lower than that of GhostNetV1. We speculate that this may have been due to the fact that the radar spectrogram data were better suited for simple, fast convolution operations that extract local information, and the addition of DFC introduced redundant computation, leading to a decrease in the generalization ability.

To further investigate the impact of the model complexity on the recognition performance, we reduced the scale of GhostNetV1 and GhostNetV2 to half of their original size and added or removed a layer from TG2Net. The comparison in Table 3 reveals that, except for GhostNetV1-0.5 and TG2Net, the difference in accuracy among the remaining models was not significant. This suggests that by using GhostV2 convolution, it was possible to explore an optimal lightweight feature extraction module TG2 with minimal parameter costs. TG2 achieved 97.99% accuracy with only a 6-layer structure, 0.12 M parameters, and 52.4 M FLOPs. This remarkable performance is attributed to the TG2 structure, which draws inspiration from ResNet18. A key factor is the similarity to ResNet18 in the number and arrangement of bottleneck blocks. Thus, TG2 can be viewed as an improvement of the successful classic model ResNet18, leveraging GhostV2 convolution to create a lightweight network. The superior performance of ResNet18 on the dataset used in this paper will be demonstrated in Section 6.4.

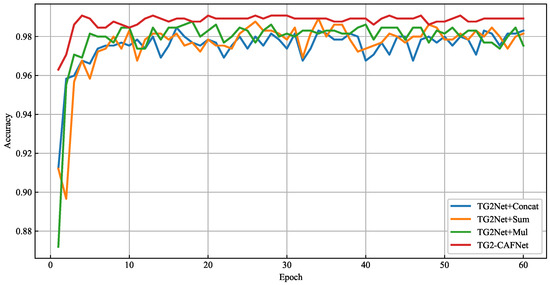

6.3. Analysis of Fusion Methods

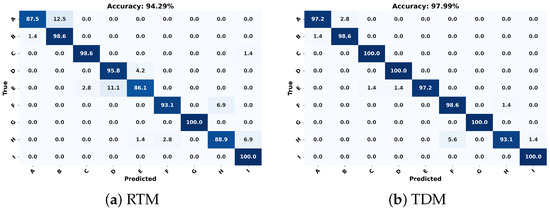

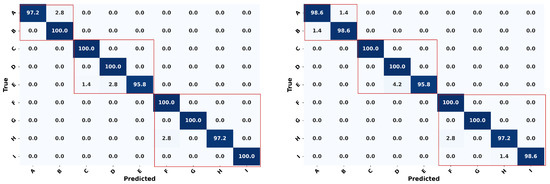

To verify the effectiveness of TG2-CAFNet, we conducted ablation experiments and compared different fusion methods. As shown in Figure 10, confusion matrices were used to observe the performance of TG2Net when the RTM and TDM were individually used as input features. Confusion matrices help identify the error patterns of different models on specific classes. By observing the confusion matrices, we could find out whether certain categories were easily confused, thereby conducting an in-depth analysis of the contribution of the different feature extraction methods to the different categories.

Figure 10.

Confusion matrices of the two types of spectrograms. (a) TG2Net extracting features solely from the RTM. (b) TG2Net extracting features solely from the TDM.

From Figure 10, we can see that the recognition performance of the RTM as a feature was not as good as that of the TDM. The RTM did not perform well in distinguishing similar actions; for example, 12.5% of “walking” was misclassified as “running”, 11.1% of “kicking” was misclassified as “punching”, 6.9% of “bending” was misclassified as “squatting”, and 6.9% of “squatting” was misclassified as “standing up”. This is because an RTM only shows the relationship between the target’s distance and time, which can only capture the target’s macro movement trajectory, but cannot effectively capture subtle differences between similar actions. In contrast, a TDM reflects the Doppler effect of the target’s movement over time, capturing both speed information and micro-movement features. Therefore, in Figure 10, the misclassification rates of the TDM are mostly below 6%.
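Percentages such as these are row-normalized entries of a confusion matrix. The sketch below lists the off-diagonal rates of a toy matrix in descending order. The counts are illustrative only: they assume 72 test samples per class (30% of 240, which holds only if the 7:3 split is balanced across classes), in which case 9 misclassified samples would give the 12.5% quoted for "walking". The function name is hypothetical.

```python
def confusion_rates(confusion, labels):
    """Return (true label, predicted label, rate) for each nonzero
    off-diagonal cell, sorted from most to least frequent."""
    pairs = []
    for i, row in enumerate(confusion):
        row_total = sum(row)
        for j, count in enumerate(row):
            if i != j and count:
                pairs.append((labels[i], labels[j], count / row_total))
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)


toy_labels = ["walking", "running"]
toy_counts = [[63, 9], [2, 70]]  # illustrative counts, not the paper's matrix
ranked = confusion_rates(toy_counts, toy_labels)
for true_label, predicted_label, rate in ranked:
    print(f"{true_label} -> {predicted_label}: {rate:.1%}")
```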

Combining an RTM and TDM can take advantage of both the macro-positioning information provided by the RTM and the speed and micro-movement features captured by the TDM, highlighting the necessity of feature fusion. In Figure 11, we compare traditional fusion methods with the proposed fusion method, CAF. As shown in the figure, concatenation fusion performed the worst overall. Although this method did not lose any information, it was prone to overfitting due to the increased dimensions and was insufficient for feature interactions. Addition fusion performed slightly better than concatenation fusion, and due to its involvement in feature interaction, addition fusion is more robust than concatenation. Multiplication fusion achieved a slightly lower overall accuracy compared to CAF but was far less robust than CAF and addition fusion. The CAF fusion method outperformed the traditional fusion methods in both accuracy and robustness. This is attributed to CAF’s nonlinear fusion mechanism, which effectively integrates multiple features, avoids the overfitting problem of concatenation fusion, and surpasses addition and multiplication fusion in feature interactions. Therefore, CAF demonstrated a superior performance in handling complex features.

Figure 11.

Comparison of traditional fusion methods and the proposed CAF method.
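The difference between the fusion families can be illustrated on small arrays. The sketch below is an assumption-laden simplification: feature maps have shape (channels, height, width), and `gated_fusion` shows only the AFF-style soft selection `w * x + (1 - w) * y`. In AFF and CAF the weights come from a learned attention module, whereas here the logits are simply passed in as a hypothetical input.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def concat_fusion(x, y):
    """Stack along the channel axis; keeps everything, doubles the channels."""
    return np.concatenate([x, y], axis=0)


def add_fusion(x, y):
    return x + y


def mul_fusion(x, y):
    return x * y


def gated_fusion(x, y, weight_logits):
    """Soft selection between x and y with per-position weights in (0, 1)."""
    w = sigmoid(weight_logits)
    return w * x + (1.0 - w) * y


x = np.arange(24, dtype=float).reshape(2, 3, 4)  # stand-in for RTM features
y = np.ones_like(x)                              # stand-in for TDM features

print(concat_fusion(x, y).shape)
print(gated_fusion(x, y, np.zeros_like(x))[0, 0])  # equal weights: average
```

With all logits at zero the gate reduces to a plain average, and with large positive logits it passes `x` through almost unchanged. This is the sense in which the weighted fusion can favor one spectrogram over the other at each spatial position.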

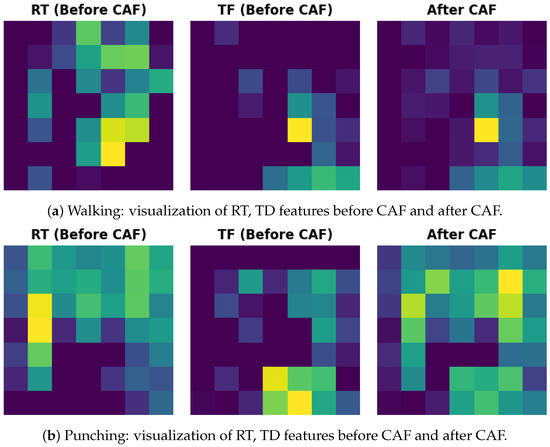

To better understand how CAF performs feature fusion, we visualized the features extracted by TG2-CAFNet in Figure 12, including the RT and TF features before CAF fusion and the features after fusion. We analyzed the visualized features of two actions: running and punching. As shown in the figure, in the case of running, the RT features before CAF fusion covered more dispersed regions, indicated by several low-brightness green blocks, whereas the TF features were more focused on specific key areas. After CAF fusion, the final feature map was primarily dominated by the TF features, with the RT features being diminished. This demonstrates that CAF effectively filters and retains features that are more important for action recognition, thereby enhancing the model’s accuracy. In contrast, for the punching action, both RT and TF features were significant before CAF fusion, and both were well preserved in the fused feature map, indicating a complementary fusion. Hence, CAF is not a simple linear fusion, but rather has an adaptive capability to balance different features. It can selectively fuse features based on their importance for different actions, allowing CAF to achieve a better recognition performance when handling complex actions.

Figure 12.

Feature maps before and after CAF. (a) shows the visualization for “walking” action, and (b) shows the visualization for “punching” action.

To demonstrate the significant advantages of CAF, a statistical significance test was conducted. In this study, a one-way analysis of variance (ANOVA) was first carried out to evaluate the differences between the fusion methods. The results of the ANOVA test showed that the F value was 51.6145 and the p-value was 2.88 × , which is much smaller than the significance level of 0.05. This indicates that the differences in accuracy among the different fusion methods were statistically significant, meaning that at least two groups had a significant difference.

While the ANOVA test showed that a statistically significant difference existed among the groups, it did not identify which pairs of groups differed. Therefore, a post hoc Tukey HSD (honest significant difference) test was performed, as shown in Table 6. The test indicated that the mean differences between CAF and each of the other three methods (concatenation, multiplication, and summation, i.e., addition fusion) were significant: the adjusted p-values (p-adj) were close to or equal to 0 (a reported p-adj of 0 indicates an extremely small p-value), and the mean differences (Mean Diff) were relatively large. In contrast, the difference between multiplication and summation was not significant (p-adj = 0.4044), suggesting that their performance was similar. The Mean Diff in Table 6 is the average accuracy difference between two fusion methods, so a larger value indicates a more pronounced performance gap. For CAF versus each of the other three methods, the confidence intervals did not include zero, which confirms that these differences were statistically significant. This is consistent with the ANOVA results and further supports the performance advantage of CAF over the other fusion methods.

Table 6.

Tukey HSD test results of fusion methods.
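For readers who want to reproduce this kind of analysis, the one-way ANOVA F statistic can be computed directly from per-method accuracy lists. The sketch below uses toy numbers chosen for easy hand verification, not accuracies from this study, and the function name is hypothetical. In practice, a library routine such as `scipy.stats.f_oneway` would also return the p-value, and a post hoc routine such as `pairwise_tukeyhsd` from statsmodels would give the pairwise comparisons.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of sample groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of samples around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = k - 1, n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Toy groups (e.g. repeated runs of three methods); purely illustrative.
toy_groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_value, df_between, df_within = one_way_anova_f(toy_groups)
print(f"F({df_between}, {df_within}) = {f_value:.2f}")
```

A large F value means the spread between method means is large relative to the run-to-run spread within each method, which is what the reported F value of 51.6145 expresses.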

Finally, in Figure 13, we present a detailed error analysis using two confusion matrices of TG2-CAFNet. Although TG2-CAFNet demonstrated an excellent recognition performance, it still produced misclassifications of certain actions. By observing the matrices, it can be seen that misclassification and omission errors mainly occurred in the three groups of actions enclosed in red rectangles. These three groups consisted of similar actions, where differences in amplitude, frequency, and period were not obvious. In fact, when a person performs one action, the characteristics of their movements may resemble those of another person performing a different action, further increasing the difficulty of recognition. Therefore, in order to reduce errors, it is important to enhance the distinction and extraction of features for similar behaviors. Methods that could be applied include performing radar-based temporal analysis, introducing complex radar data, and further integrating other data types. These approaches aim to provide a comprehensive analysis of human behaviors from multiple perspectives, thereby minimizing the model’s misclassification rate.

Figure 13.

Two confusion matrices of TG2-CAFNet used for detailed error analysis. The red rectangles highlight the groups of similar actions where misclassifications and omissions primarily occurred.

6.4. Comparison with Other Models

As shown in Table 7, we compared TG2-CAFNet with several mainstream models to validate its performance. Overall, accuracy was generally higher with the TDM as input than with the RTM. Regardless of the input type, DenseNet121 and ResNet18 achieved the highest recognition accuracy, primarily because their complex deep network structures allowed them to capture more detailed features. However, their large number of parameters makes them unsuitable for deployment in practical applications. For the comparison of lightweight models, we selected four classical lightweight architectures. In terms of parameters and FLOPs, MobileNetV3-Small-0.75 and ShuffleNetV2-0.5 were similar to TG2Net. However, MobileNetV3-Small-0.75 performed poorly in terms of accuracy, limiting its applicability in scenarios with high accuracy requirements. While ShuffleNetV2-0.5 had the best inference time, it fell slightly behind TG2Net in the other metrics. EfficientNetLite0 and MobileViTV3-0.5 were inferior to TG2Net in both lightweight design and accuracy. We also compared a CRNN, a temporal model. Although the CRNN had a relatively small number of parameters and FLOPs, it suffered from a longer inference time and lower accuracy compared to TG2Net. Finally, TG2-CAFNet, by fusing both RTM and TDM features, further boosted the recognition accuracy by approximately 1%. Although it did not have the lowest parameter count or FLOPs among all models, TG2-CAFNet achieved notable accuracy improvements at the cost of only a modest increase in parameters and FLOPs, so the model remains suitable for deployment. Its inference time is not its strongest point, but the focus of this research was deployability and accuracy rather than real-time performance.

Table 7.

Comparison of model performance.
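Two of the efficiency indicators in this comparison, the number of trainable parameters and the inference time, can be measured with plain PyTorch. The sketch below is illustrative: the helper names and the small `toy_model` are hypothetical, and the timing depends on hardware, so it is not comparable with the values in Table 7. FLOPs counting usually relies on a separate profiling tool and is not shown.

```python
import time

import torch
from torch import nn


def count_trainable_parameters(model: nn.Module) -> int:
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


def mean_inference_time_ms(model: nn.Module, example: torch.Tensor,
                           runs: int = 20, warmup: int = 5) -> float:
    """Average forward-pass time on the current device, in milliseconds."""
    model.eval()
    with torch.no_grad():
        for _ in range(warmup):       # warm-up passes are not timed
            model(example)
        start = time.perf_counter()
        for _ in range(runs):
            model(example)
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0 / runs


# Hypothetical toy classifier, unrelated to the architectures in Table 7.
toy_model = nn.Sequential(
    nn.Conv2d(1, 8, kernel_size=3, padding=1),  # 8*1*3*3 weights + 8 biases
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(8, 9),                            # 8*9 weights + 9 biases
)
num_params = count_trainable_parameters(toy_model)
latency_ms = mean_inference_time_ms(toy_model, torch.randn(1, 1, 64, 64))
print(num_params, f"{latency_ms:.3f} ms")
```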

In summary, TG2-CAFNet leverages GhostV2 convolution to construct a radar spectrogram feature extraction module, TG2, with a significantly lower parameter count and FLOPs compared to existing lightweight models. We believe that the lightweight nature and high recognition accuracy of TG2 for radar spectrograms can mainly be attributed to redundant features and DFC attention. RTMs and TDMs are representations of human actions in the range–time domain and time-Doppler domain, respectively. These representations are abstract and contain a lot of details. Redundant features enrich feature representation and help capture complex range–time and time–frequency relationships. The introduction of DFC further enhances the model’s focus on the spatial information of radar spectrograms. Then, multi-domain fusion technology is used to further improve the model’s performance. Traditional fusion methods do not allow for steady performance improvements, so the non-linear fusion method AFF is introduced, providing a dynamic adaptive weighting fusion approach. Meanwhile, the use of CA extends the weighted fusion to spatial distribution, which is of significant importance for radar spectrograms with a clear physical meaning.

7. Conclusions

Based on IR-UWB through-wall radar, this paper proposed a lightweight human activity recognition method that combines feature fusion with a design suited to mobile edge devices. First, an exponential weighting method is used to suppress static clutter and the clutter caused by wall multipath effects, generating range–time maps (RTMs). The Hilbert transform and short-time Fourier transform are then applied to the RTMs to generate time–Doppler maps (TDMs). This signal preprocessing is simple and efficient, laying a solid foundation for deploying the algorithm on mobile edge devices. Second, we use GhostV2 convolution to construct a TG2 feature extraction module suitable for radar spectrograms. Experiments verified that TG2Net, formed by adding a head layer to this module, achieved high recognition accuracy on both types of radar spectrograms. Moreover, compared with existing lightweight networks, TG2Net has an extremely low parameter count and FLOPs. Finally, the TG2 module is used to extract the two types of spectrogram features separately, and AFF is improved to CAF using CA, extending the nonlinear fusion to a spatial distribution. Experiments showed that TG2-CAFNet was superior to the current mainstream models in terms of its lightweight nature and recognition accuracy when performing through-wall radar human activity recognition.

Despite the satisfactory performance of TG2-CAFNet, there are still some limitations in both the method and the dataset used in this study. One of the main limitations lies in the reliance on the spatial features of radar spectrograms for action recognition. This means that to recognize an action, the action must be completed before generating the spectrogram, which limits the method’s real-time capabilities. Furthermore, the dataset used in this study mainly contains relatively isolated and standardized actions, whereas actions in real life are continuously evolving. TG2-CAFNet only handles isolated action segments, neglecting the temporal dependencies of these actions, which limits its ability to dynamically capture continuous movements. Additionally, when multiple individuals perform actions simultaneously, their spectrograms may overlap, leading to interference and reduced recognition performance.

To overcome these limitations, future research could focus on the following aspects:

- Improving real-time recognition: Developing algorithms that can recognize actions incrementally as they occur, rather than waiting for the entire action to be completed, would enhance the method’s applicability in real-time scenarios.

- Introducing continuous action recognition: Incorporating models capable of handling continuous actions based on time-series features, such as RNNs and transformers, would allow the method to better adapt to the dynamic nature of real-world behaviors.

- Addressing multi-person scenarios: Future work could explore advanced signal separation techniques or multi-target tracking to reduce interference when multiple individuals are performing actions simultaneously.

- Multi-modal integration: Combining radar data with inputs from other sensors (such as visual or inertial data) could help distinguish similar actions and reduce the impact of interference from multiple individuals.

Author Contributions

Conceptualization, L.H. and D.L.; methodology, L.H. and D.L.; software, D.L.; validation, B.Z., G.C. and H.A.; formal analysis, M.L.; investigation, D.L.; resources, L.H.; data curation, D.L. and B.Z.; writing—original draft preparation, D.L.; writing—review and editing, L.H.; visualization, D.L.; supervision, L.H.; project administration, L.H.; funding acquisition, L.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China Special Project, grant number 62341311, and the Lanzhou City Talent Innovation and Entrepreneurship Project, grant number 2022-RC-7.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are part of a proprietary dataset. Data are available from the corresponding author upon reasonable request and with permission of the original data holders. Interested researchers may contact the corresponding author via email.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wang, C.L.; Yan, J.J.; Zhang, Z.D. Review on Human Action Recognition Methods Based on Multimodal Data. Comput. Eng. Appl. 2024, 60, 1–18.

- Ullmann, I.; Guendel, R.G.; Kruse, N.C.; Fioranelli, F.; Yarovoy, A. A survey on radar-based continuous human activity recognition. IEEE J. Microwaves 2023, 3, 938–950.

- Li, J.X.; Zhang, Q.; Zheng, G.M. Overview of Human Posture Recognition by Ultra-wideband Radar. Comput. Eng. Appl. 2021, 57, 14–23.

- Cheraghinia, M.; Shahid, A.; Luchie, S.; Gordebeke, G.J.; Caytan, O.; Fontaine, J.; Van Herbruggen, B.; Lemey, S.; De Poorter, E. A Comprehensive Overview on UWB Radar: Applications, Standards, Signal Processing Techniques, Datasets, Radio Chips, Trends and Future Research Directions. arXiv 2024, arXiv:2402.05649.

- Wang, M.; Cui, G.; Huang, H.; Gao, X.; Chen, P.; Li, H.; Yang, H.; Kong, L. Through-wall human motion representation via autoencoder-self organized mapping network. In Proceedings of the 2019 IEEE Radar Conference (RadarConf), Boston, MA, USA, 22–26 April 2019; pp. 1–6.

- Zhang, Z.; Lei, Z.; Omura, M.; Hasegawa, H.; Gao, S. Dendritic learning-incorporated vision transformer for image recognition. IEEE/CAA J. Autom. Sin. 2024, 11, 539–541.

- Umirzakova, S.; Whangbo, T.K. Detailed feature extraction network-based fine-grained face segmentation. Knowl.-Based Syst. 2022, 250, 109036.

- Kheddar, H.; Hemis, M.; Himeur, Y. Automatic speech recognition using advanced deep learning approaches: A survey. Inf. Fusion 2024, 109, 102422.

- Çağlıyan, B.; Gürbüz, S.Z. Micro-Doppler-based human activity classification using the mote-scale BumbleBee radar. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2135–2139.

- Li, X.; Fioranelli, F.; Yang, S.; Romain, O.; Le Kernec, J. Radar-based hierarchical human activity classification. In Proceedings of the IET International Radar Conference (IET IRC 2020), Online, 4–6 November 2020; Volume 2020, pp. 1373–1379.

- Kim, Y.; Moon, T. Human detection and activity classification based on micro-Doppler signatures using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2015, 13, 8–12.

- Du, H.; Jin, T.; He, Y.; Song, Y.; Dai, Y. Segmented convolutional gated recurrent neural networks for human activity recognition in ultra-wideband radar. Neurocomputing 2020, 396, 451–464.

- Cao, L.; Liang, S.; Zhao, Z.; Wang, D.; Fu, C.; Du, K. Human Activity Recognition Method Based on FMCW Radar Sensor with Multi-Domain Feature Attention Fusion Network. Sensors 2023, 23, 5100.

- He, M.; Ping, Q.W.; Dai, R. Fall detection based on deep learning fusing ultrawideband radar spectrograms. J. Radars 2022, 12, 343–355.

- Li, X.; Chen, S.; Zhang, S.; Zhu, Y.; Xiao, Z.; Wang, X. Advancing IR-UWB radar human activity recognition with Swin-transformers and supervised contrastive learning. IEEE Internet Things J. 2023, 11, 11750–11766.

- Zhang, L.L.; Jia, D.Z.; Pan, T.P.; Liu, Y.J. Human Activity Classification Based on Distributed Ultra-wideband Radar Combined with CNN-win Transformer. Telecommun. Eng. 2024, 64, 830–839.

- Zhu, J.; Lou, X.; Ye, W. Lightweight deep learning model in mobile-edge computing for radar-based human activity recognition. IEEE Internet Things J. 2021, 8, 12350–12359.

- Chakraborty, M.; Kumawat, H.C.; Dhavale, S.V.; Raj, A.A.B. DIAT-RadHARNet: A lightweight DCNN for radar based classification of human suspicious activities. IEEE Trans. Instrum. Meas. 2022, 71, 1–10.

- Ou, Z.; Ye, W. Lightweight deep learning model for radar-based fall detection with metric learning. IEEE Internet Things J. 2022, 10, 8111–8122.

- An, Q.; Wang, S.; Yao, L.; Zhang, W.; Lv, H.; Wang, J.; Li, S.; Hoorfar, A. RPCA-based high resolution through-the-wall human motion feature extraction and classification. IEEE Sens. J. 2021, 21, 19058–19068.

- Huan, S.; Wu, L.; Zhang, M.; Wang, Z.; Yang, C. Radar human activity recognition with an attention-based deep learning network. Sensors 2023, 23, 3185.

- Yang, D.; Zhu, Z.; Zhang, J.; Liang, B. The overview of human localization and vital sign signal measurement using handheld IR-UWB through-wall radar. Sensors 2021, 21, 402.

- Ding, C.; Zhang, L.; Gu, C.; Bai, L.; Liao, Z.; Hong, H.; Li, Y.; Zhu, X. Non-contact human motion recognition based on UWB radar. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 8, 306–315.

- Tang, Y.; Han, K.; Guo, J.; Xu, C.; Xu, C.; Wang, Y. GhostNetv2: Enhance cheap operation with long-range attention. Adv. Neural Inf. Process. Syst. 2022, 35, 9969–9982.

- Dai, Y.; Gieseke, F.; Oehmcke, S.; Wu, Y.; Barnard, K. Attentional feature fusion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 3560–3569.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Zheng, Z.; Zhang, D.; Liang, X.; Liu, X.; Fang, G. RadarFormer: End-to-End Human Perception with Through-Wall Radar and Transformers. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–15.

- Khalid, H.-U.-R.; Gorji, A.; Bourdoux, A.; Pollin, S.; Sahli, H. Multi-view CNN-LSTM architecture for radar-based human activity recognition. IEEE Access 2022, 10, 24509–24519.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).