Influence of Camera Placement on UGV Teleoperation Efficiency in Complex Terrain

Abstract

1. Introduction

- The symbol recognition zone has a width of 10–30°.

- The color recognition zone has a width of 30–60°.

- The peripheral vision zone has a horizontal width of 180–200°; within it, only movement and changes in brightness are perceived.
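
The zone widths above lend themselves to a simple lookup. The sketch below is illustrative only. The function name `visual_zone` is hypothetical, and the code assumes each zone is centred on the line of sight, so a zone of total width w covers angles up to w/2 on either side. The text does not state this. Because each width is given as a range, the caller chooses whether to use the lower or the upper bound.

```python
from typing import Optional

# Visual-field zones from the list above: (name, (min width, max width)) in
# degrees of total horizontal angle, ordered from innermost to outermost.
ZONES = [
    ("symbol recognition", (10.0, 30.0)),
    ("color recognition", (30.0, 60.0)),
    ("peripheral", (180.0, 200.0)),
]


def visual_zone(eccentricity_deg: float, use_upper_bound: bool = False) -> Optional[str]:
    """Return the innermost zone containing a stimulus at the given horizontal
    angle from the line of sight, or None if it lies outside every zone.

    Assumption (not stated in the source): each zone is centred on the line of
    sight, so a zone of total width w covers angles |e| <= w / 2.
    """
    e = abs(eccentricity_deg)
    for name, (min_width, max_width) in ZONES:
        width = max_width if use_upper_bound else min_width
        if e <= width / 2.0:
            return name
    return None


# Example: a stimulus 12 degrees off-axis, and one far in the periphery
print(visual_zone(12.0))                        # color recognition
print(visual_zone(12.0, use_upper_bound=True))  # symbol recognition
print(visual_zone(95.0))                        # None
```

Whether a stimulus at 12° counts as lying in the symbol zone depends on which end of the 10–30° range applies. This shows why the widths are quoted as ranges rather than single values.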

2. Materials and Methods

2.1. Experimental Design

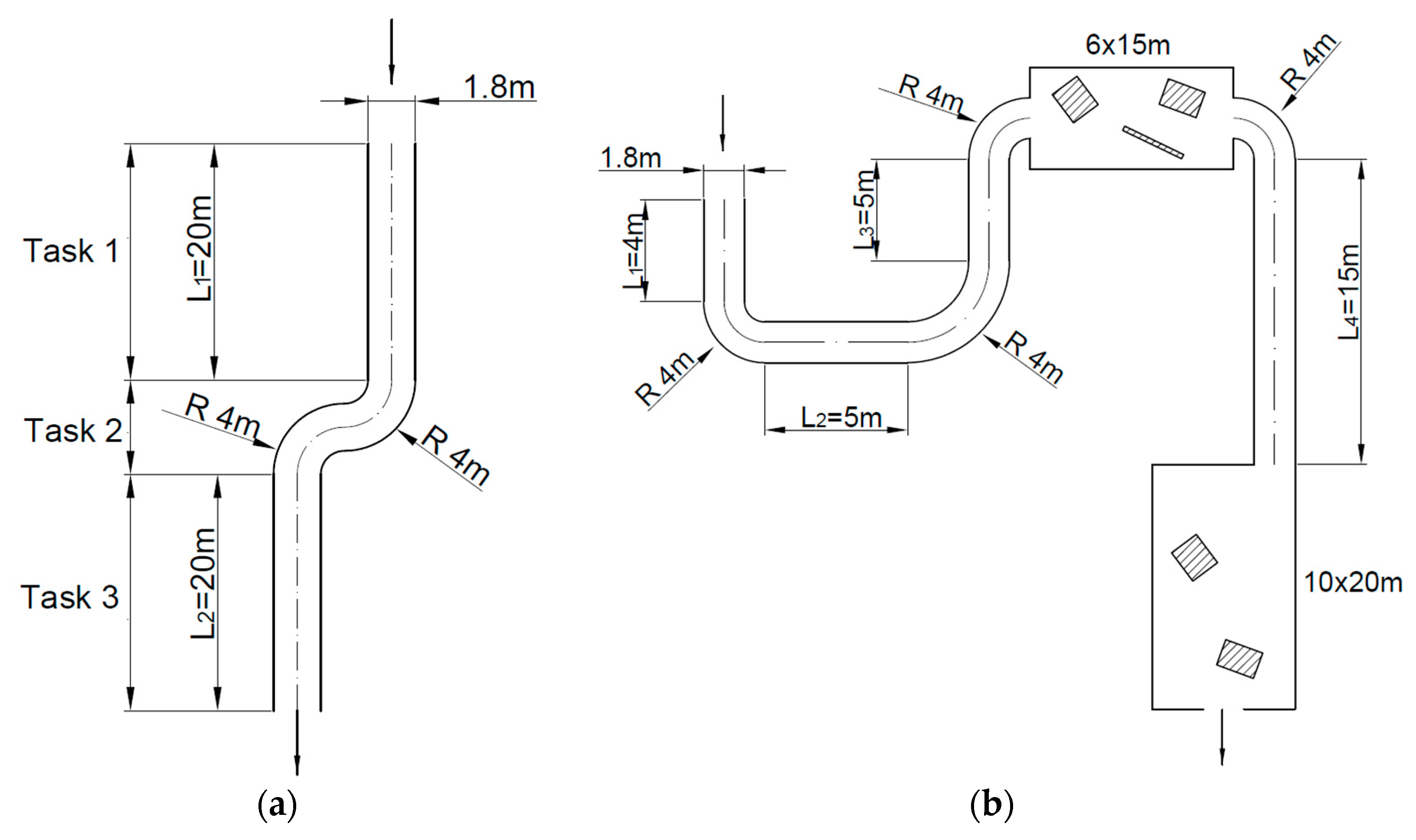

2.2. Experimental Setting and User Task

- Task 1 involves assessing how far, and at what speed, the platform can travel before changing its direction of movement to reach an obstacle or turn.

- Task 2 involves assessing the orientation of obstacles on the road, which in turn influences how the platform executes the turning maneuvers needed to overcome them (turns).

- Task 3 involves evaluating the platform’s ability to drive freely, without the need for maneuvers (departing into open space).

2.3. Participants

2.4. Used UGV and Interfaces

2.5. Experimental Procedure

2.6. Indicators of Evaluation of Conducted Tests

3. Results and Discussion

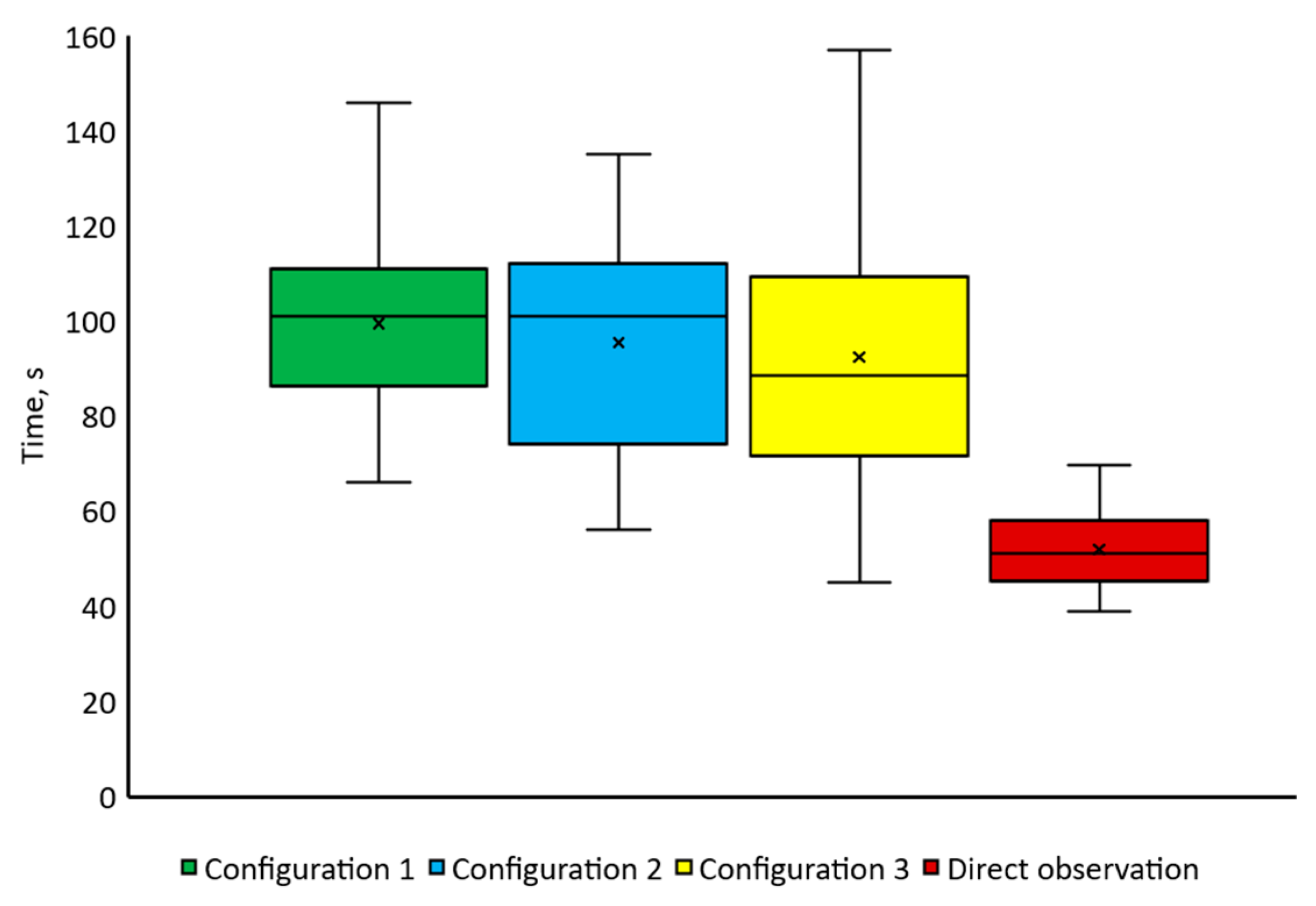

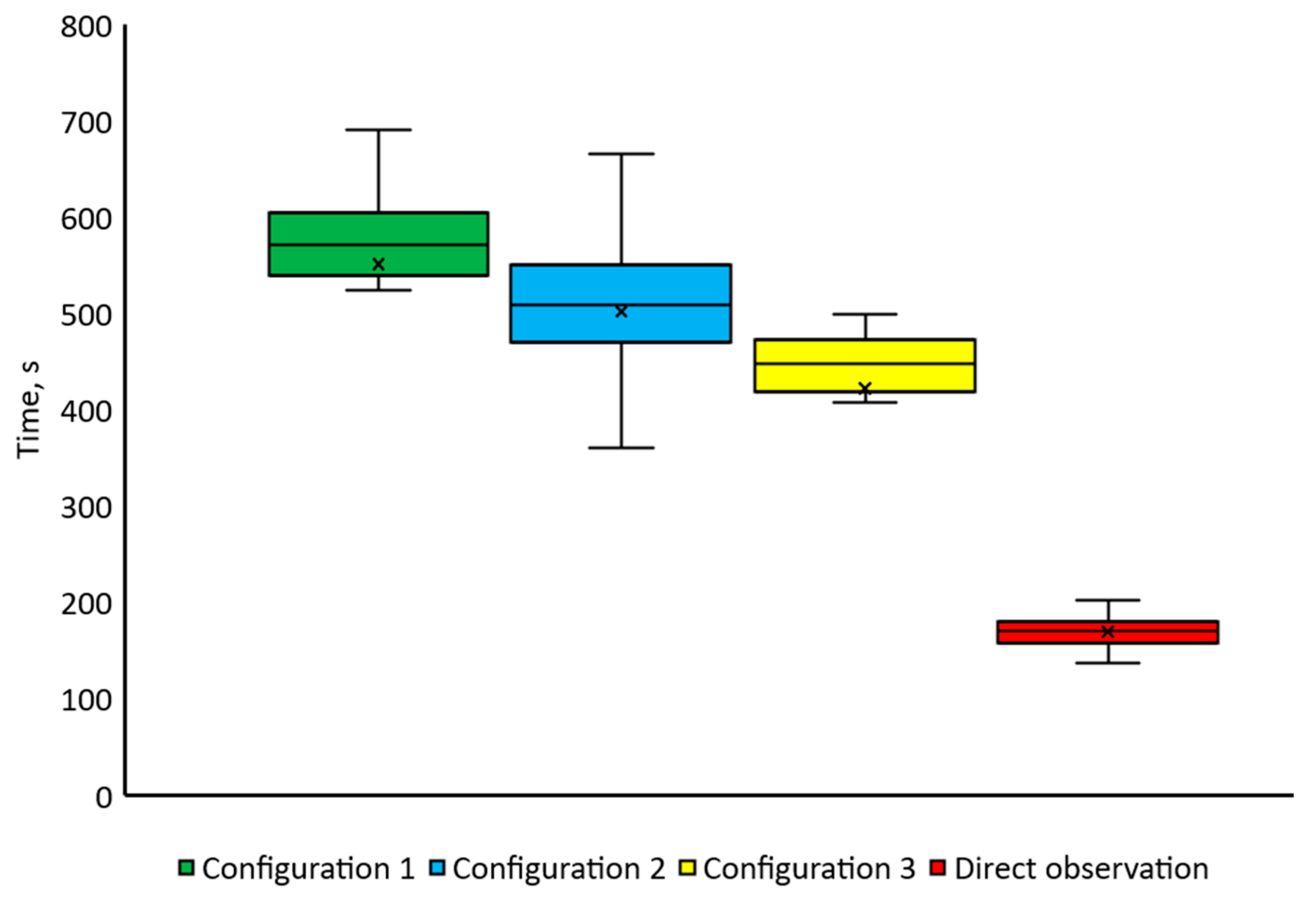

- The total time to complete trial 1 (t1);

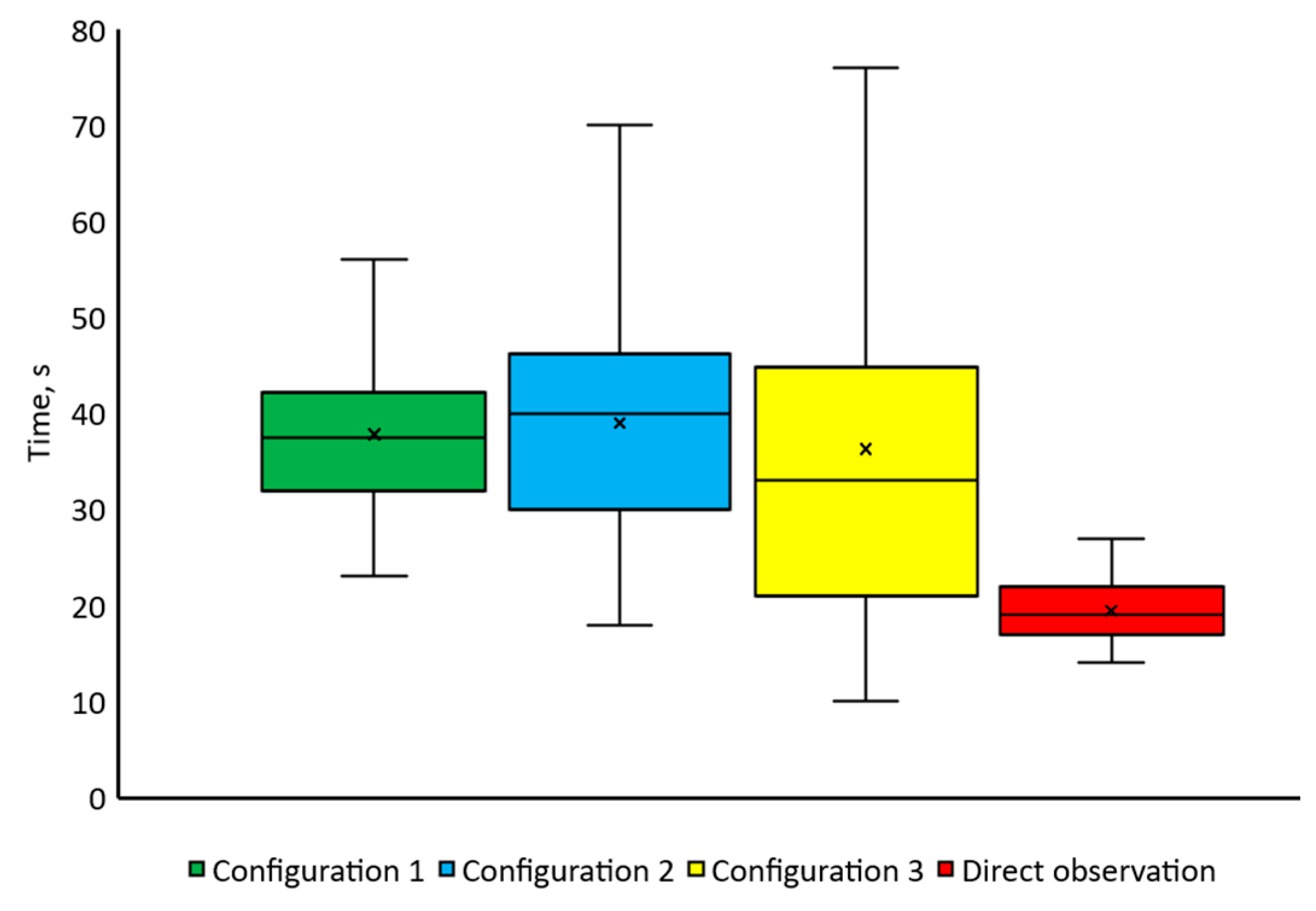

- The time to complete task 1 (t1_1);

- The time to complete task 2 (t1_2);

- The time to complete task 3 (t1_3);

- The total time to complete trial 2 (t2);

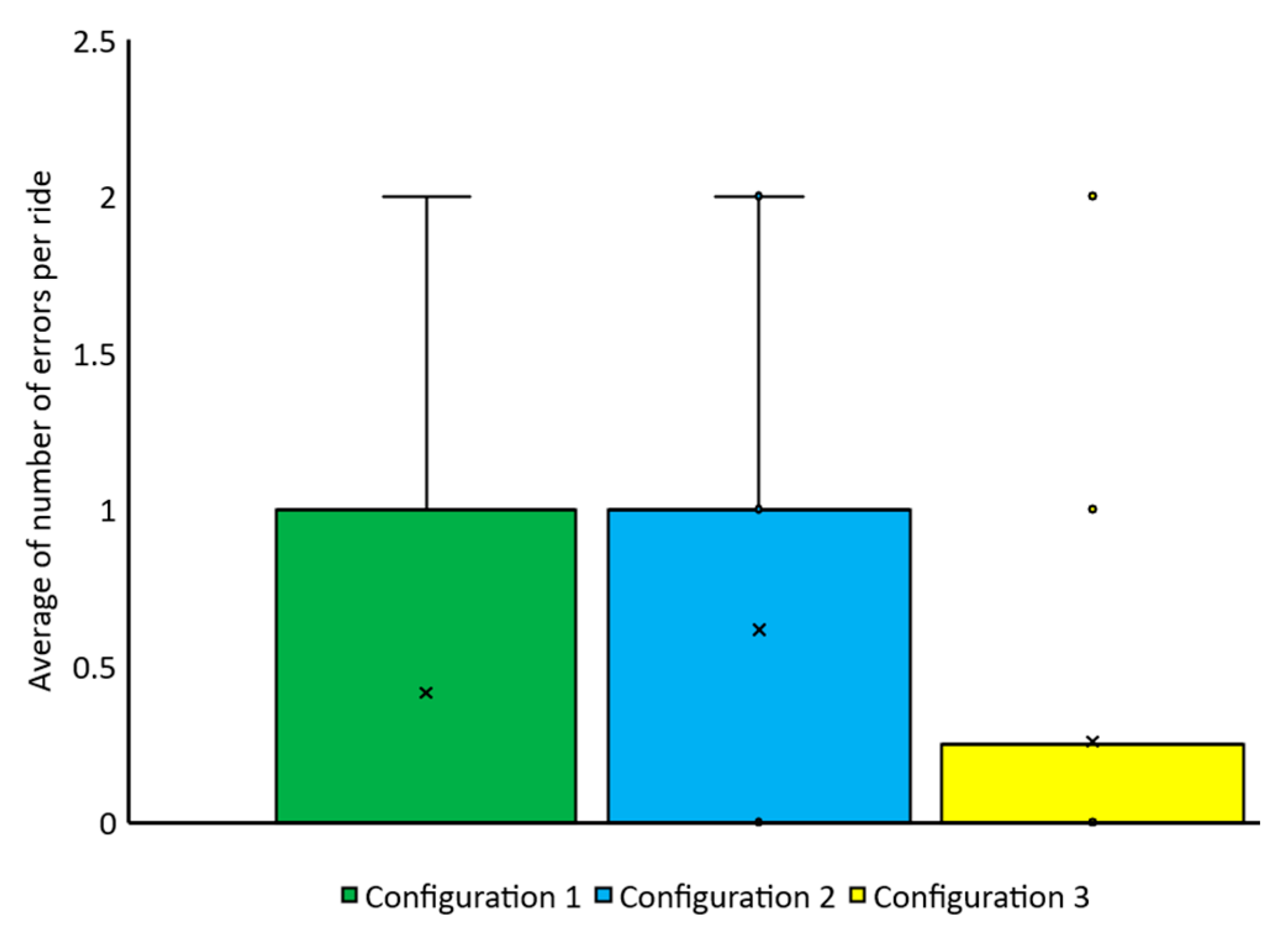

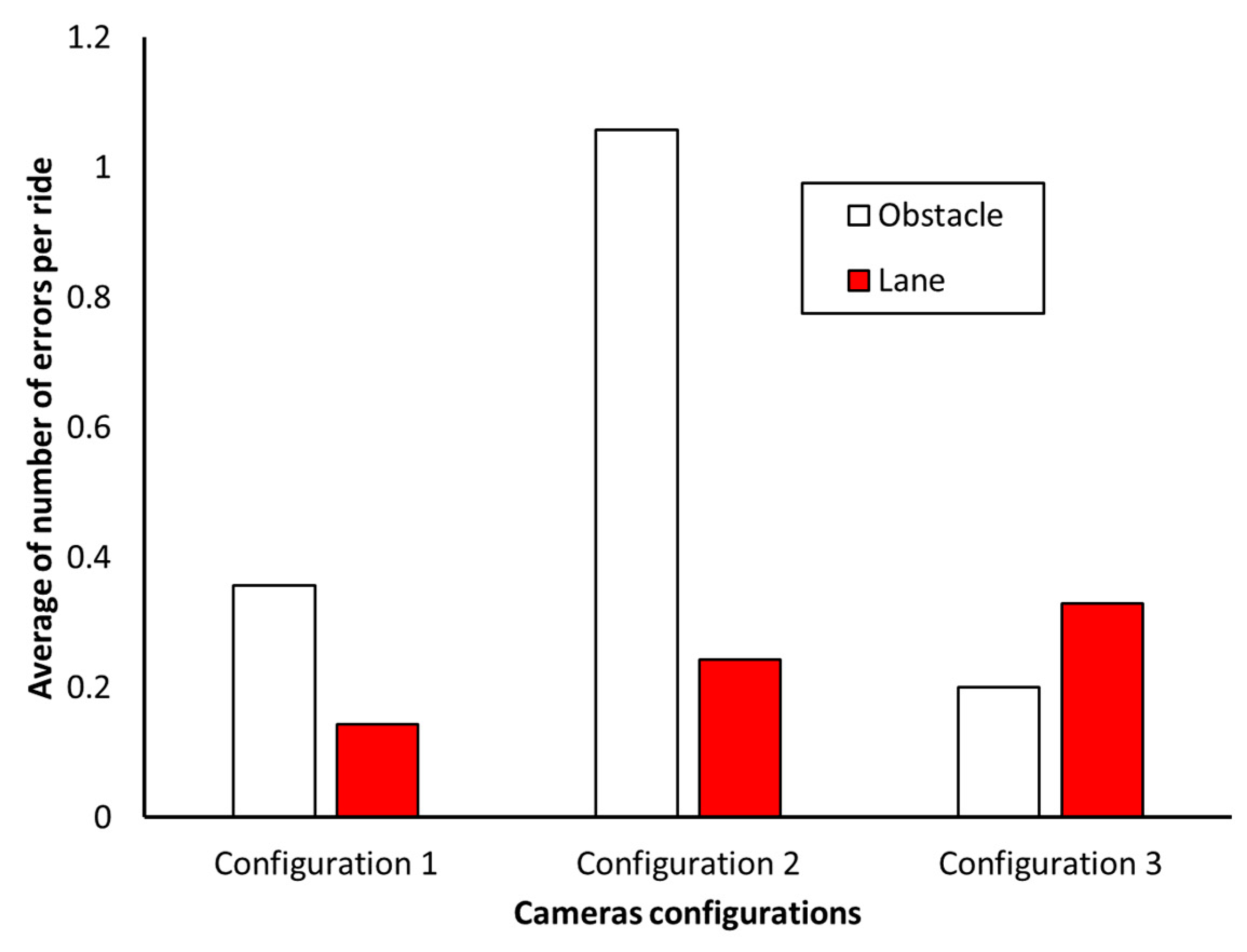

- The number of lane violations on trial 1 (n1);

- The number of lane violations on trial 2 (n2);

- The number of obstacles hit on trial 2 (n2_1);

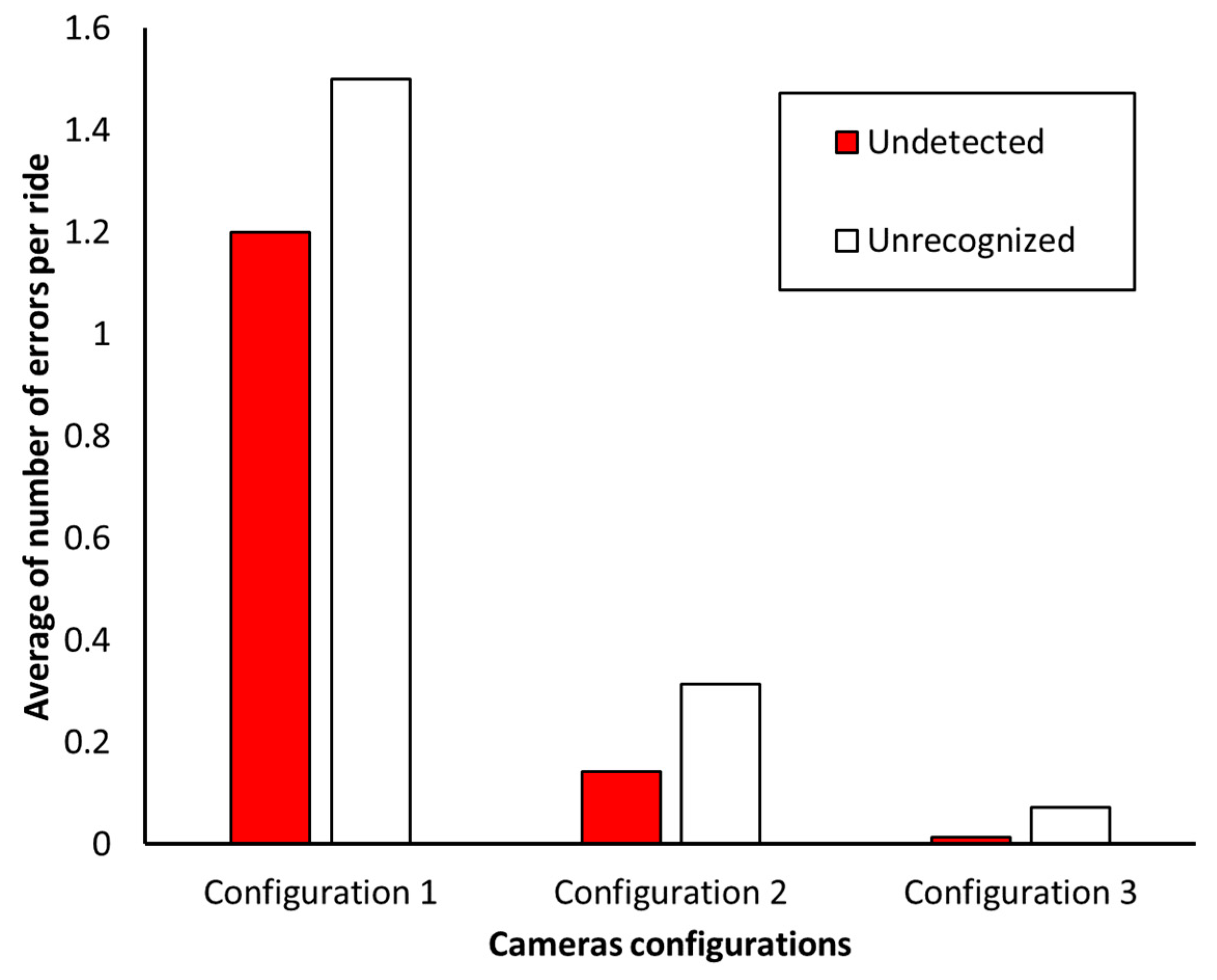

- The number of undetected obstacles on trial 2 (n2_2);

- The number of unrecognized obstacles on trial 2 (n2_3).

- ANOVA [52]: for parameters with a normal distribution (t1; t1_1; t1_2; t1_3; t2), to verify that the test has sufficient power to detect a relationship between camera configuration and completion time;

- Kruskal–Wallis [53]: for parameters that are not normally distributed (n1; n2; n2_1; n2_2; n2_3), to test the significance of the relationship between the number of errors made and the camera configuration. The critical value of the Kruskal–Wallis statistic is H = 5.9914 for α = 0.05 and df = 2 (the number of degrees of freedom equals the number of groups minus one).
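
As a reference point for the ANOVA bullet above, a one-way ANOVA compares the spread of the group means with the spread within groups. The sketch below computes only the F statistic, in plain Python. It is a generic textbook computation that assumes independent groups. It is not the authors' software, which the section does not name, and the function name is hypothetical. `scipy.stats.f_oneway` returns the same F statistic together with its p-value.

```python
from statistics import fmean
from typing import Sequence, Tuple


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA.

    Each inner sequence holds the measurements of one group, e.g. the
    completion times recorded with one camera configuration.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")
    group_means = [fmean(g) for g in groups]
    grand_mean = fmean([x for g in groups for x in g])
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variation; F is undefined")
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy data (not from the study): three groups of three values.
print(one_way_anova_f([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # (27.0, 2, 6)
```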

- For trial 1 (t1): α = 0.05, RMSSE (root mean square standardized effect) = 0.161698;

- For task 1 (t1_1): α = 0.05, RMSSE = 0.159098;

- For task 2 (t1_2): α = 0.05, RMSSE = 0.293171;

- For task 3 (t1_3): α = 0.05, RMSSE = 0.465155;

- For trial 2 (t2): α = 0.05, RMSSE = 0.699696.
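
The RMSSE values above are effect sizes for the ANOVA power analysis, but the section does not define the measure. The sketch below uses the definition commonly applied in one-way ANOVA power analysis: the root mean square of the standardized deviations of the group means from their mean, with k − 1 in the denominator. It assumes equal group sizes and a common within-group standard deviation σ. The function names and the example inputs are hypothetical and are not the study's data.

```python
import math
from typing import Sequence


def rmsse(group_means: Sequence[float], sigma: float) -> float:
    """Root mean square standardized effect for k group means and a common
    within-group standard deviation sigma:

        RMSSE = sqrt( sum_j (mu_j - mu_bar)^2 / ((k - 1) * sigma^2) )

    where mu_bar is the unweighted mean of the k group means.
    """
    k = len(group_means)
    if k < 2:
        raise ValueError("need at least two groups")
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    mu_bar = sum(group_means) / k
    ss = sum((m - mu_bar) ** 2 for m in group_means)
    return math.sqrt(ss / ((k - 1) * sigma ** 2))


def cohens_f(rmsse_value: float, k: int) -> float:
    """Convert RMSSE to Cohen's f, which divides by k instead of k - 1."""
    return rmsse_value * math.sqrt((k - 1) / k)


# Hypothetical inputs: three group means one standard deviation apart.
print(rmsse([1.0, 2.0, 3.0], sigma=1.0))  # 1.0
print(round(cohens_f(1.0, k=3), 4))        # 0.8165
```

The conversion to Cohen's f is included because many power tables are indexed by f rather than by RMSSE. The two measures differ only in the k versus k − 1 denominator.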

- The number of lane violations on trial 1 (n1): α = 0.05; df = 2; H = 12.33742; p = 0.0021;

- The number of lane violations on trial 2 (n2): α = 0.05; df = 2; H = 6.261478; p = 0.0058;

- The number of obstacles hit on trial 2 (n2_1): α = 0.05; df = 2; H = 47.59081; p < 0.00001;

- The number of undetected obstacles on trial 2 (n2_2): α = 0.05; df = 2; H = 96.78267; p < 0.00001;

- The number of unrecognized obstacles on trial 2 (n2_3): α = 0.05; df = 2; H = 95.40297; p < 0.00001.

- The number of observations in the Kruskal–Wallis analysis was N = 600.
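
The Kruskal–Wallis figures above can be traced with the short sketch below. The code ranks the pooled observations, with tied values sharing their average rank. Ties matter for error counts, where many runs are likely to share the same value. It then computes H with the standard tie correction and converts H to a p-value using the chi-square approximation. For df = 2 (three groups), the chi-square upper tail has the closed form exp(−H/2), so no statistics library is needed. This shortcut is valid only for df = 2. The helper names are hypothetical. `scipy.stats.kruskal` is the usual library alternative.

```python
import math
from collections import Counter
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..N; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2.0 + 1.0  # sorted positions i..j get ranks i+1..j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Kruskal-Wallis H statistic with the standard correction for ties."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_term = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        rank_term += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * rank_term - 3.0 * (n + 1)
    # Tie correction: 1 - sum(t^3 - t) / (N^3 - N) over sets of tied values.
    tie_sum = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1.0 - tie_sum / (n ** 3 - n)
    if correction == 0.0:
        raise ValueError("all observations are tied; H is undefined")
    return h / correction


def chi2_df2_p_value(h: float) -> float:
    """Upper-tail chi-square probability for df = 2, i.e. exp(-h / 2)."""
    return math.exp(-h / 2.0)


def chi2_df2_critical(alpha: float) -> float:
    """Critical value for df = 2: the x that solves exp(-x / 2) = alpha."""
    return -2.0 * math.log(alpha)


print(round(chi2_df2_critical(0.05), 3))     # 5.991
print(round(chi2_df2_p_value(12.33742), 4))  # 0.0021
```

With α = 0.05, `chi2_df2_critical` gives approximately 5.991, in line with the critical value quoted for the Kruskal–Wallis test. Applied to the H value reported for n1, `chi2_df2_p_value` gives approximately 0.0021.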

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kułakowski, K.; Kułakowski, P.; Klich, A.; Porzuczek, J. Application of the Infrared Thermography and Unmanned Ground Vehicle for Rescue Action Support in Underground Mine—The AMICOS Project. Remote Sens. 2022, 14, 2134.

- Wojtusiak, M.; Lenda, G.; Szczepański, M.; Mrówka, M. Configurations and Applications of Multi-Agent Hybrid Drone/Unmanned Ground Vehicle for Underground Environments: A Review. Drones 2023, 7, 136.

- Wu, L.; Wang, C.; Zhang, X. Robotic Systems for Underground Mining: Safety and Efficiency. Int. J. Min. Sci. Technol. 2017, 27, 855–860.

- Gao, S.; Shao, W.; Zhu, C.; Ke, J.; Cui, J.; Qu, D. Development of an Unmanned Surface Vehicle for the Emergency Response Mission of the ‘Sanchi’ Oil Tanker Collision and Explosion Accident. Appl. Sci. 2020, 10, 2704.

- Li, Q.; Zhang, Y.; Wang, J.; Yu, Z.; Xie, Z.; Meng, X. Study on the Autonomous Recovery System of a Field-Exploration Unmanned Surface Vehicle. Sensors 2020, 20, 3207.

- Smith, J.R.; Chen, H.; Johnson, L. Autonomous Navigation of Unmanned Ground Vehicles in Urban Military Operations. IEEE Trans. Rob. 2021, 37, 905–917.

- Williams, D.K.; Garcia, M.J.; Thompson, P.A. Development and Deployment of Unmanned Ground Vehicles for Defense Applications. J. Def. Technol. 2022, 18, 205–220.

- Patel, R.; Kumar, S.; Desai, T. Challenges and Opportunities in UGV Applications for Military Operations. J. Mil. Sci. 2023, 12, 45–59.

- Mahmud, M.S.; Dawood, A.; Goher, K.M.; Bai, X.; Morshed, N.M.; Bakar, A.A.; Boon, S.W. ResQbot 2.0: An Improved Design of a Mobile Rescue Robot with an Inflatable Neck Securing Device for Safe Casualty Extraction. Appl. Sci. 2024, 14, 4517.

- Smith, J.; Johnson, R.; Lee, T. Unmanned Ground Vehicles for Search and Rescue Operations: A Review. J. Rob. Autom. 2023, 15, 567–589.

- Brown, K.; Wang, L.; Patel, A. Design and Implementation of Autonomous Ground Robots for Emergency Response. Int. J. Rob. Res. 2022, 41, 210–225.

- Anderson, M.; Kumar, S.; Zhang, Y. Challenges and Opportunities in Using Unmanned Ground Vehicles for Disaster Response. IEEE Trans. Rob. 2021, 37, 1234–1245.

- Williams, P.; Martinez, R.; Tanaka, H. Autonomous Robotic Systems for Search and Rescue in Urban Environments. Adv. Intell. Syst. 2024, 6, 89–101.

- Krogul, P.; Cieślik, K.; Łopatka, M.J.; Przybysz, M.; Rubiec, A.; Muszyński, T.; Rykała, Ł.; Typiak, R. Experimental Research on the Influence of Size Ratio on the Effector Movement of the Manipulator with a Large Working Area. Appl. Sci. 2023, 13, 8908.

- Łopatka, M.J.; Krogul, P.; Przybysz, M.; Rubiec, A. Preliminary Experimental Research on the Influence of Counterbalance Valves on the Operation of a Heavy Hydraulic Manipulator during Long-Range Straight-Line Movement. Energies 2022, 15, 5596.

- Chen, X.; Liu, Y.; Wang, Z. A Review on Teleoperation Control Systems for Unmanned Vehicles. Sensors 2022, 22, 1398.

- Zhang, T.; Li, J.; Zhao, Q. Teleoperation Control Strategies for Mobile Robots: A Survey. Robotics 2021, 10, 275.

- Yang, H.; Liu, F.; Xu, J. Design and Implementation of a Teleoperation System for Ground Robots. Appl. Sci. 2023, 13, 1204.

- Wang, M.; Sun, Y.; Liu, Z. Real-Time Teleoperation Control for Autonomous Vehicles Using Advanced Communication Techniques. Electronics 2023, 12, 2022.

- Kim, H.; Park, J.; Lee, S. The Impact of Visual Systems on Situational Awareness in Remote Operation Systems. Sensors 2022, 22, 4434.

- Zhang, Y.; Liu, W.; Yang, X. Enhancing Operator Situational Awareness with Advanced Vision Systems in Autonomous Vehicles. Robotics 2021, 10, 180.

- Chen, L.; Wang, J.; Li, Q. Visual Perception and Situational Awareness in Remote Control Systems: A Comprehensive Review. Appl. Sci. 2023, 13, 789.

- Smith, R.; Brown, T.; Davis, M. The Role of Vision-Based Systems in Improving Situational Awareness for Drone Operators. Electronics 2022, 11, 1234.

- Xiang, Y.; Li, D.; Su, T.; Zhou, Q.; Brach, C.; Mao, S.S.; Geimer, M. Where Am I? SLAM for Mobile Machines on a Smart Working Site. Vehicles 2022, 4, 529–552.

- Khan, S.; Guivant, J. Design and Implementation of Proximal Planning and Control of an Unmanned Ground Vehicle to Operate in Dynamic Environments. IEEE Trans. Intell. Veh. 2023, 8, 1787–1799.

- Zhou, Z.; Wang, Y.; Zhou, G.; Nam, K.; Ji, Z.; Yin, C. A Twisted Gaussian Risk Model Considering Target Vehicle Longitudinal-Lateral Motion States for Host Vehicle Trajectory Planning. IEEE Trans. Intell. Transp. Syst. 2023, 24, 13685–13697.

- Ding, F.; Shan, H.; Han, X.; Jiang, C.; Peng, C.; Liu, J. Security-based resilient triggered output feedback lane keeping control for human–machine cooperative steering intelligent heavy truck under Denial-of-Service attacks. IEEE Trans. Fuzzy Syst. 2023, 31, 2264–2276.

- Taylor, J.H. Vision. In Bioastronautics Data Book; Scientific and Technical Information Office, NASA: Hampton, VA, USA, 1973; pp. 611–665.

- Howard, I.P. Binocular Vision and Stereopsis; Oxford University Press: Oxford, UK, 1995.

- Costella, J.P. A Beginner’s Guide to the Human Field of View; Technical Report; School of Physics, The University of Melbourne: Melbourne, Australia, 1995.

- Strasburger, H.; Rentschler, I.; Jüttner, M. Peripheral Vision and Pattern Recognition: A Review. J. Vis. 2011, 11, 13.

- Glumm, M.M.; Kilduff, P.W.; Masley, A.S. A Study of the Effects of Lens Focal Length on Remote Driver Performance; ARL-TR-25; Army Research Laboratory: Adelphi, MD, USA, 1992.

- van Erp, J.B.F.; Padmos, P. Image Parameters for Driving with Indirect Viewing Systems. Ergonomics 2003, 46, 1471–1499.

- Glumm, M.M.; Kilduff, P.W.; Masley, A.S.; Grynovicki, J.O. An Assessment of Camera Position Options and Their Effects on Remote Driver Performance; ARL-TR-1329; Army Research Laboratory: Adelphi, MD, USA, 1997.

- Scribner, D.R.; Gombash, J.W. The Effect of Stereoscopic and Wide Field of View Condition on Teleoperator Performance; Technical Report ARL-TR-1598; Army Research Laboratory: Adelphi, MD, USA, 1998.

- Shinoda, Y.; Niwa, Y.; Kaneko, M. Influence of Camera Position for a Remotely Driven Vehicle—Study of a Rough Terrain Mobile Unmanned Ground Vehicle. Adv. Rob. 1999, 13, 311–312.

- Shiroma, N.; Sato, N.; Chiu, Y.; Matsuno, F. Study on Effective Camera Images for Mobile Robot Teleoperation. In Proceedings of the International Workshop on Robot and Human Interactive Communication, Kurashiki, Okayama, Japan, 20–22 September 2004.

- Delong, B.P.; Colgate, J.E.; Peshkin, M.A. Improving Teleoperation: Reducing Mental Rotations and Translations. In Proceedings of the IEEE International Conference on Robotics and Automation, New Orleans, LA, USA, 26 April–1 May 2004.

- Scholtz, J.C. Human-Robot Interaction: Creating Synergistic Cyber Forces. In Multi-Robot Systems: From Swarms to Intelligent Automata; Springer: Boston, MA, USA, 2002; pp. 177–184.

- Thomas, L.C.; Wickens, C.D. Effects of Display Frames of Reference on Spatial Judgment and Change Detection; Technical Report ARL-00-14/FED-LAB-00-4; U.S. Army Research Laboratory: Adelphi, MD, USA, 2000.

- Witmer, B.G.; Sadowski, W.I. Nonvisually Guided Locomotion to a Previously Viewed Target in Real and Virtual Environments. Hum. Factors 1998, 40, 478–488.

- Voshell, M.; Woods, D.D.; Philips, F. Overcoming the Keyhole in Human-Robot Coordination. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Canberra, Australia, 21–23 November 2005; Sage CA: Los Angeles, CA, USA, 2005; Volume 49, pp. 442–446.

- Keys, B.; Casey, R.; Yanco, H.A.; Maxwell, B.M. Camera Placement and Multi-Camera Fusion for Remote Robot Operation. In Proceedings of the IEEE International Workshop on Safety, Security and Rescue Robotics, Sendai, Japan, 21–24 October 2008; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2006; pp. 22–24.

- Fiala, M. Pano-Presence for Teleoperation. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005.

- Brayda, L.; Ortiz, J.; Mollet, N.; Chellali, R.; Fontaine, J.G. Quantitative and Qualitative Evaluation of Vision-Based Teleoperation of a Mobile Robot. In Proceedings of the ICIRA 2009: Intelligent Robotics and Applications, Singapore, 16–18 December 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 792–801.

- Yamauchi, B.; Massey, K. Stingray: High-Speed Teleoperation of UGVs in Urban Terrain Using Driver-Assist Behaviors and Immersive Telepresence. In Proceedings of the 26th Army Science Conference, Orlando, FL, USA, 1–4 December 2008.

- Doisy, G.; Ronen, A.; Edan, Y. Comparison of Three Different Techniques for Camera and Motion Control of a Teleoperated Robot. Appl. Ergon. 2017, 58, 527–534.

- Johnson, S.; Rae, I.; Mutlu, B.; Takayama, L. Can You See Me Now? How Field of View Affects Collaboration in Robotic Telepresence. In Proceedings of the CHI 2015, Seoul, Republic of Korea, 18–23 April 2015.

- Adamides, G.; Katsanos, C.; Parmet, Y.; Christou, G.; Xenos, M.; Hadzilacos, T.; Edan, Y. HRI Usability Evaluation of Interaction Modes for a Teleoperated Agricultural Robotic Sprayer. Appl. Ergon. 2017, 62, 237–246.

- Hughes, S.; Manojlovich, J.; Lewis, M.; Gennari, J. Camera Control and Decoupled Motion for Teleoperation. In Proceedings of the 2003 IEEE International Conference on Systems, Man and Cybernetics (SMC’03), Washington, DC, USA, 5–8 October 2003; pp. 1820–1825.

- Tomšik, R. Power Comparisons of Shapiro-Wilk, Kolmogorov-Smirnov and Jarque-Bera Tests. Sch. J. Res. Math. Comput. Sci. 2019, 3, 238–243.

- Ogundipe, A.A.; Sha’ban, A.I. Statistics for Engineers: An Introduction to Hypothesis Testing and ANOVA; Springer: Berlin/Heidelberg, Germany, 2019.

- Jain, P.K. Applied Nonparametric Statistical Methods; CRC Press: Boca Raton, FL, USA, 2017.

| Parameter | Statistic | Configuration 1 | Configuration 2 | Configuration 3 | Direct Observation |
|---|---|---|---|---|---|
| t1 | W | 0.99283 | 0.98234 | 0.98997 | 0.96140 |
| | p | 0.43841 | 0.01289 | 0.17701 | 0.02992 |
| t1_1 | W | 0.99094 | 0.99229 | 0.98821 | 0.96836 |
| | p | 0.24430 | 0.37349 | 0.09694 | 0.07362 |
| t1_2 | W | 0.99470 | 0.99630 | 0.99495 | 0.95412 |
| | p | 0.70557 | 0.91468 | 0.74287 | 0.01198 |
| t1_3 | W | 0.99542 | 0.99212 | 0.97161 | 0.93721 |
| | p | 0.80934 | 0.35525 | 0.00045 | 0.00164 |
| t2 | W | 0.9841 | 0.98948 | 0.96881 | 0.99099 |
| | p | 0.02360 | 0.14991 | 0.00020 | 0.24777 |
| n1 | W | 0.77294 | 0.89553 | 0.88745 | - |
| | p | 0.02189 | 0.30478 | 0.26160 | - |
| n2 | W | 0.41608 | 0.53108 | 0.57489 | - |
| | p | 0.00001 | 0.00001 | 0.00001 | - |
| n2_1 | W | 0.59449 | 0.85219 | 0.42342 | - |
| | p | 0.00001 | 0.00001 | 0.00001 | - |
| n2_2 | W | 0.85158 | 0.41608 | 0.09816 | - |
| | p | 0.00001 | 0.00001 | 0.00001 | - |
| n2_3 | W | 0.87559 | 0.59456 | 0.28170 | - |
| | p | 0.00001 | 0.00001 | 0.00001 | - |
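
The table pairs a W statistic with a p-value for each parameter and configuration. This pairing is the form of a Shapiro–Wilk normality test, although the table itself does not name the test, so that attribution is an assumption. Under the usual reading, normality is rejected for a cell when p < α. The sketch below applies this rule at α = 0.05 to the p-values transcribed from the table. The names `P_VALUES` and `normality_rejected` are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

ALPHA = 0.05
COLUMNS = ("Configuration 1", "Configuration 2", "Configuration 3", "Direct Observation")

# p-values transcribed from the table; None marks cells shown as "-".
P_VALUES: Dict[str, Tuple[Optional[float], ...]] = {
    "t1": (0.43841, 0.01289, 0.17701, 0.02992),
    "t1_1": (0.24430, 0.37349, 0.09694, 0.07362),
    "t1_2": (0.70557, 0.91468, 0.74287, 0.01198),
    "t1_3": (0.80934, 0.35525, 0.00045, 0.00164),
    "t2": (0.02360, 0.14991, 0.00020, 0.24777),
    "n1": (0.02189, 0.30478, 0.26160, None),
    "n2": (0.00001, 0.00001, 0.00001, None),
    "n2_1": (0.00001, 0.00001, 0.00001, None),
    "n2_2": (0.00001, 0.00001, 0.00001, None),
    "n2_3": (0.00001, 0.00001, 0.00001, None),
}


def normality_rejected(
    p_values: Dict[str, Tuple[Optional[float], ...]], alpha: float = ALPHA
) -> List[Tuple[str, str]]:
    """List the (parameter, column) cells where p < alpha, i.e. where the
    null hypothesis of normality is rejected at level alpha."""
    flagged = []
    for parameter, row in p_values.items():
        for column, p in zip(COLUMNS, row):
            if p is not None and p < alpha:
                flagged.append((parameter, column))
    return flagged


for parameter, column in normality_rejected(P_VALUES):
    print(f"{parameter}: {column}")
```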

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cieślik, K.; Krogul, P.; Muszyński, T.; Przybysz, M.; Rubiec, A.; Typiak, R.K. Influence of Camera Placement on UGV Teleoperation Efficiency in Complex Terrain. Appl. Sci. 2024, 14, 8297. https://doi.org/10.3390/app14188297
