Steel Surface Defect Detection Algorithm Based on Improved YOLOv8n

Abstract

1. Introduction

2. Related Works

2.1. Model Architecture of YOLOv8

2.2. Based on the Improved YOLOv8 Algorithm (DDI-YOLO)

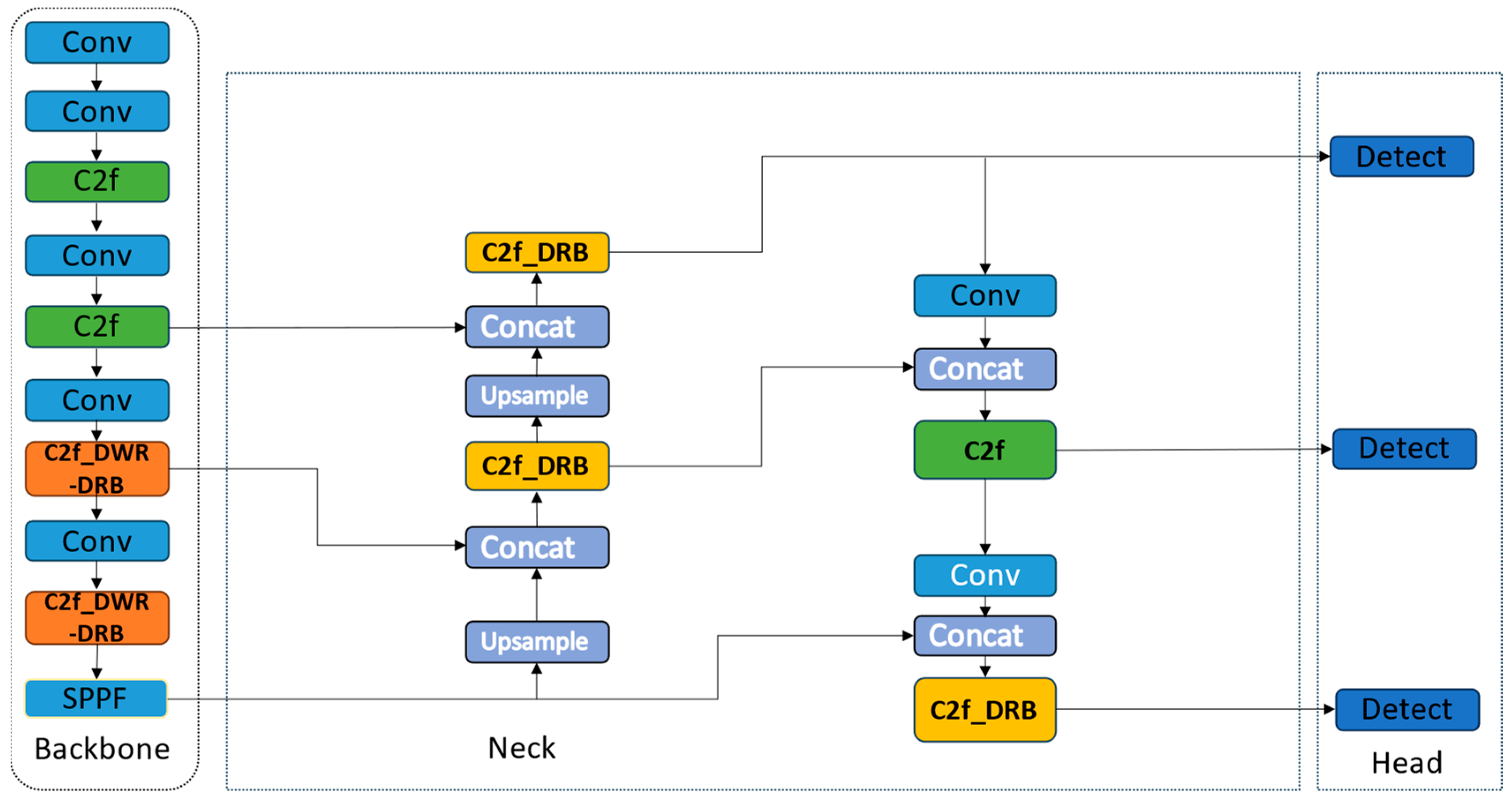

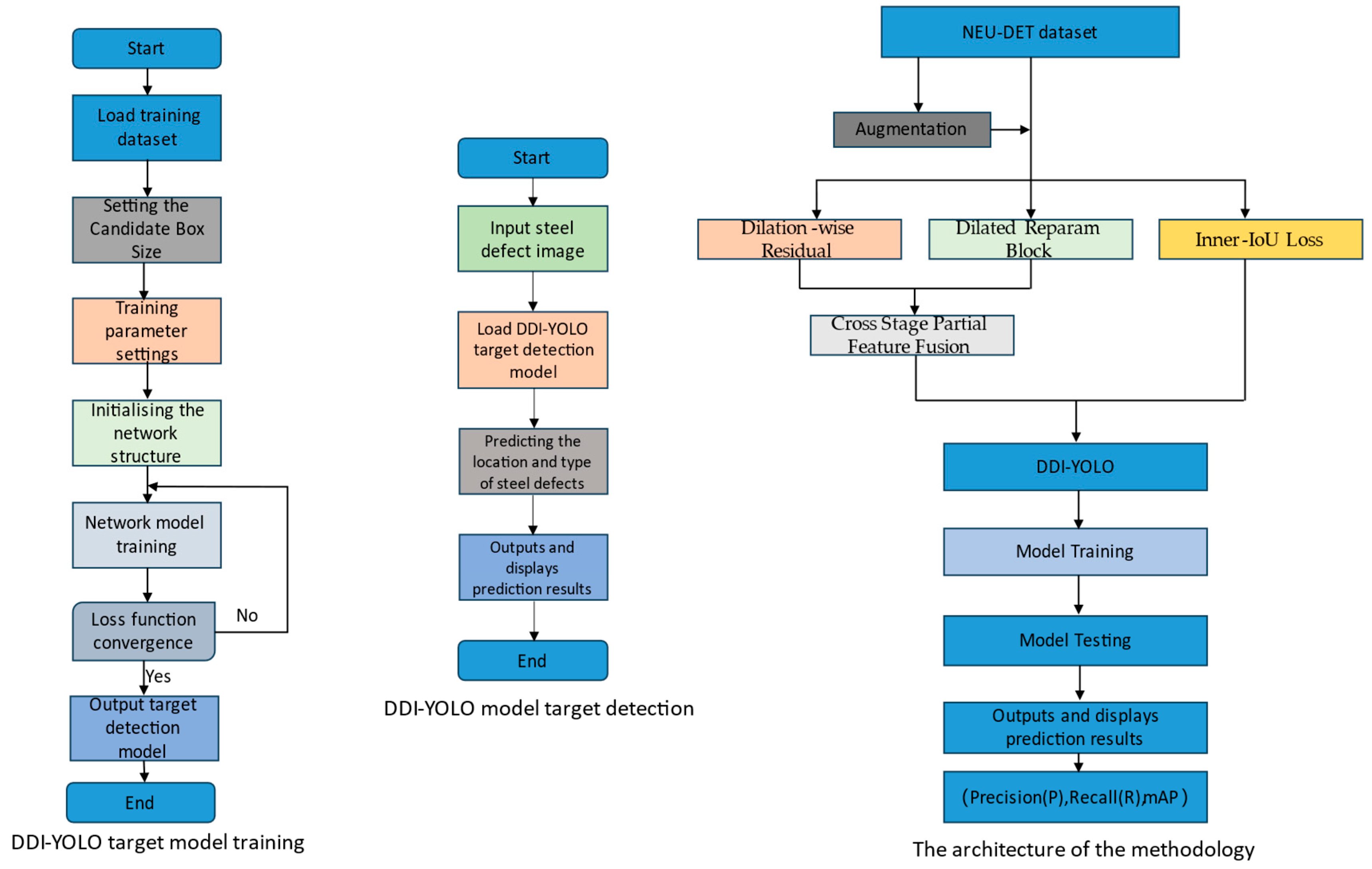

2.2.1. DDI-YOLO

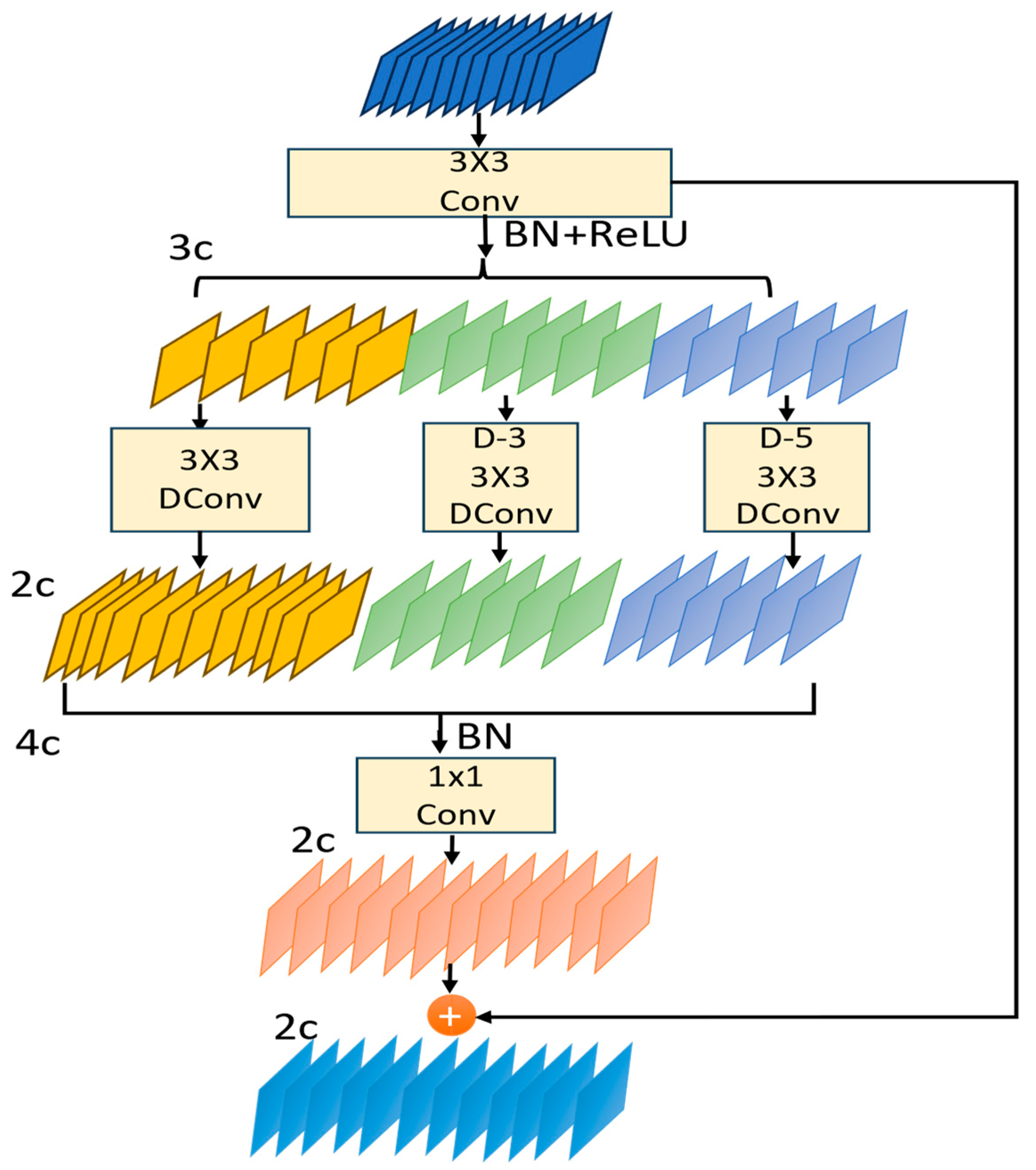

2.2.2. C2f-DWR Module

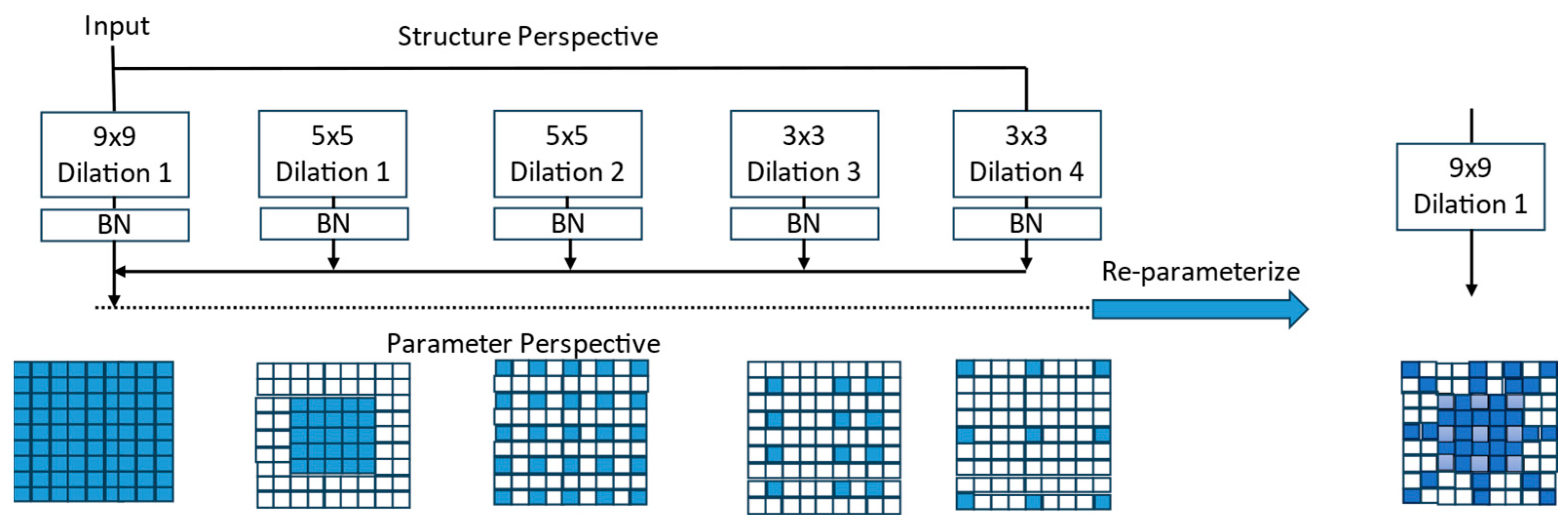

2.2.3. C2f-DRB Module

2.2.4. Inner-IoU Loss Functions

3. Results

3.1. Image Dataset

3.2. Experimental Environment

3.3. Experimental Metrics

3.4. Analysis of Experimental Results

3.4.1. Ablation Experiments

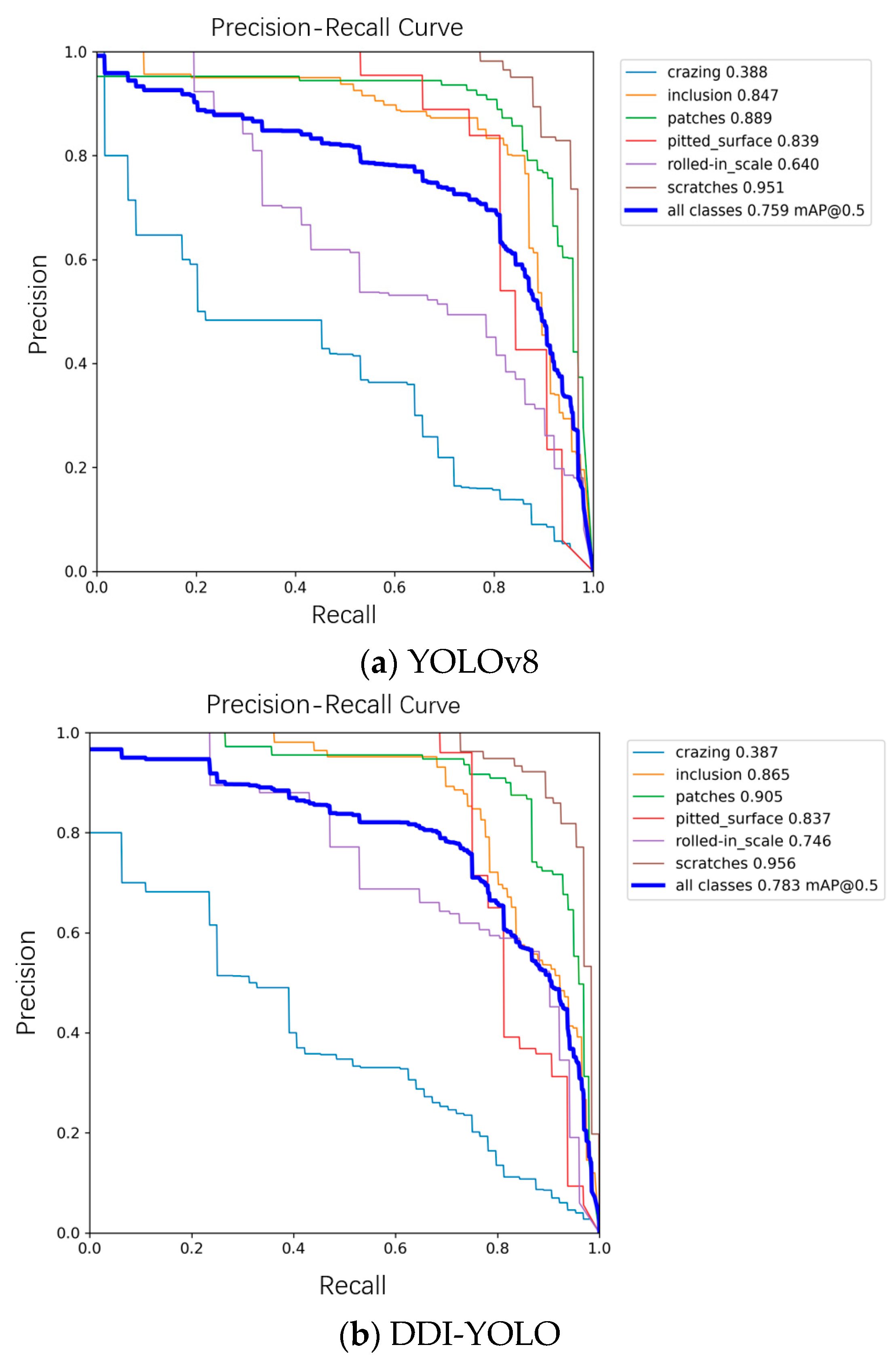

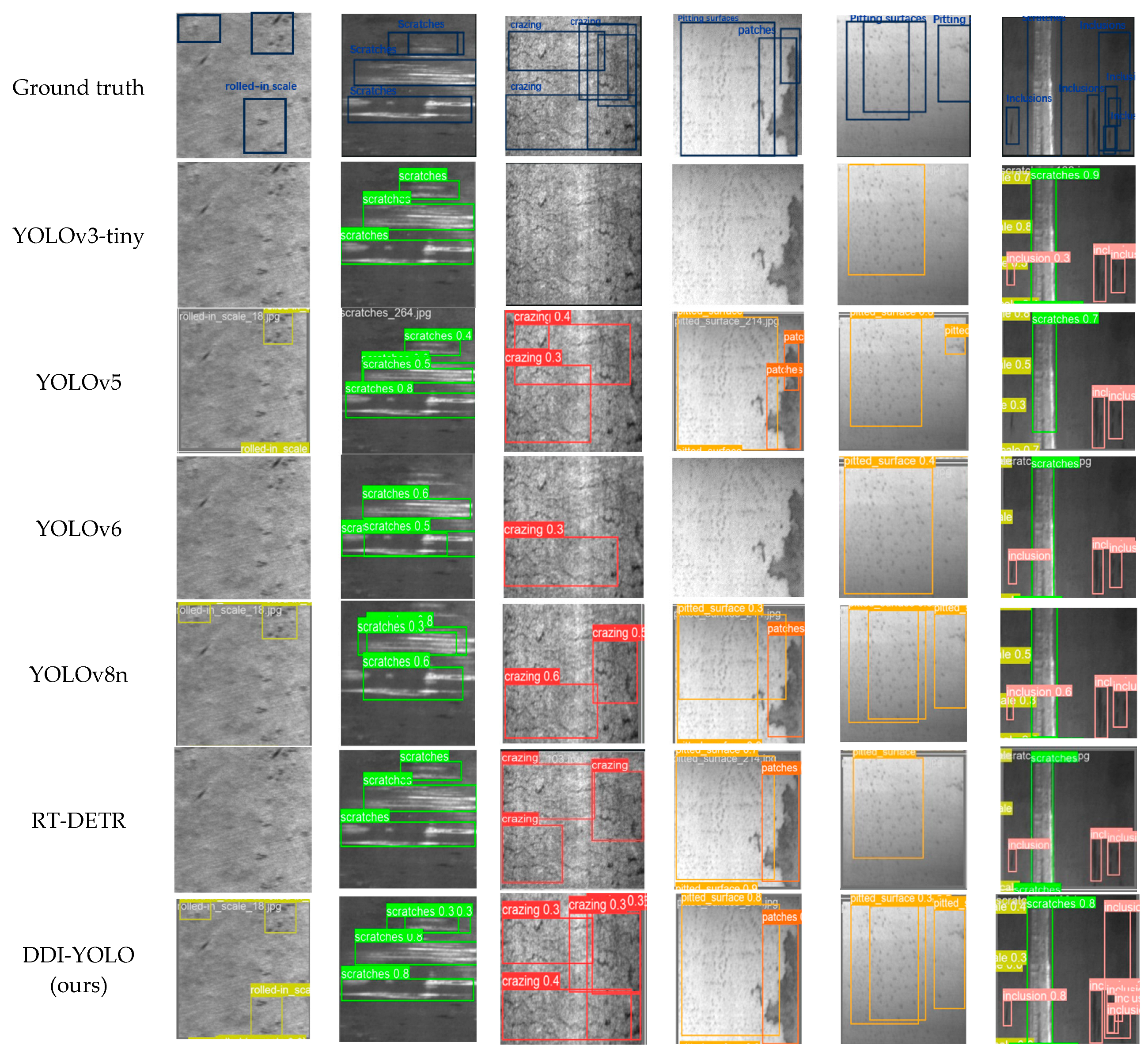

3.4.2. Comparative Experiments

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Yu, Q.; Wu, Q.; Liu, H. Research on X-Ray Contraband Detection and Overlapping Target Detection Based on Convolutional Network. In Proceedings of the 2022 4th International Conference on Frontiers Technology of Information and Computer (ICFTIC), Qingdao, China, 2–4 December 2022; pp. 736–741. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science. Springer International Publishing: Cham, Switzerland, 2016; Volume 9905, pp. 21–37, ISBN 978-3-319-46447-3. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Lecture Notes in Computer Science. Springer International Publishing: Cham, Switzerland, 2015; Volume 9351, pp. 234–241, ISBN 978-3-319-24573-7. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast R-Cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-Cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762. [Google Scholar]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Lecture Notes in Computer Science. Springer International Publishing: Cham, Switzerland, 2020; Volume 12346, pp. 213–229, ISBN 978-3-030-58451-1. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022. [Google Scholar]

- Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv 2016, arXiv:1511.06434. [Google Scholar]

- Applied Sciences|Free Full-Text|A Feature-Oriented Reconstruction Method for Surface-Defect Detection on Aluminum Profiles. Available online: https://www.mdpi.com/2076-3417/14/1/386 (accessed on 8 June 2024).

- Ren, F.; Fei, J.; Li, H.; Doma, B.T. Steel Surface Defect Detection Using Improved Deep Learning Algorithm: ECA-SimSPPF-SIoU-Yolov5. IEEE Access 2024, 12, 32545–32553. [Google Scholar] [CrossRef]

- Dou, Z.; Gao, H.; Liu, B.; Chang, F. Small sample steel plate defect detection algorithm of lightweight YOLOv8. Comput. Eng. Appl. 2024, 60, 90–100. [Google Scholar] [CrossRef]

- Guo, Z.; Wang, C.; Yang, G.; Huang, Z.; Li, G. Msft-Yolo: Improved Yolov5 Based on Transformer for Detecting Defects of Steel Surface. Sensors 2022, 22, 3467. [Google Scholar] [CrossRef]

- Cui, K.; Jiao, J. Steel surface defect detection algorithm based on MCB-FAH-YOLOv8. J. Graph. 2024, 45, 112–125. [Google Scholar]

- Zhou, Y.; Meng, J.; Wang, D.; Tang, Y. Steel defect detection based on multi-scale lightweight attention. Control Decis. 2024, 39, 901–909. [Google Scholar] [CrossRef]

- Zhu, W.; Zhang, H.; Zhang, C.; Zhu, X.; Guan, Z.; Jia, J. Surface Defect Detection and Classification of Steel Using an Efficient Swin Transformer. Adv. Eng. Inform. 2023, 57, 102061. [Google Scholar] [CrossRef]

- He, Y.; Song, K.; Meng, Q.; Yan, Y. An End-to-End Steel Surface Defect Detection Approach via Fusing Multiple Hierarchical Features. IEEE Trans. Instrum. Meas. 2019, 69, 1493–1504. [Google Scholar] [CrossRef]

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976. [Google Scholar]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Kwon, Y.; Michael, K.; Fang, J.; Yifu, Z.; Wong, C.; Montes, D. Ultralytics/Yolov5: V7. 0-Yolov5 Sota Realtime Instance Segmentation. Zenodo 2022. [Google Scholar] [CrossRef]

- Wei, H.; Liu, X.; Xu, S.; Dai, Z.; Dai, Y.; Xu, X. DWRSeg: Rethinking Efficient Acquisition of Multi-Scale Contextual Information for Real-Time Semantic Segmentation. arXiv 2023, arXiv:2212.01173. [Google Scholar]

- Ding, X.; Zhang, Y.; Ge, Y.; Zhao, S.; Song, L.; Yue, X.; Shan, Y. UniRepLKNet: A Universal Perception Large-Kernel ConvNet for Audio, Video, Point Cloud, Time-Series and Image Recognition. arXiv 2024, arXiv:2311.15599. [Google Scholar]

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000. [Google Scholar]

- Zhang, H.; Xu, C.; Zhang, S. Inner-IoU: More Effective Intersection over Union Loss with Auxiliary Bounding Box. arXiv 2023, arXiv:2311.02877. [Google Scholar]

- Song, K.; Yan, Y. A Noise Robust Method Based on Completed Local Binary Patterns for Hot-Rolled Steel Strip Surface Defects. Appl. Surf. Sci. 2013, 285, 858–864. [Google Scholar] [CrossRef]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. arXiv 2024, arXiv:2304.08069. [Google Scholar]

| Model | P% | R% | mAP% | Params/M | GFLOPs/G | FPS (Frames/s) |

|---|---|---|---|---|---|---|

| YOLOv8n | 69.2 | 77.4 | 75.9 | 3.01 | 8.1 | 99 |

| YOLOv8n + DWR | 71.7 | 76.8 | 78.4 | 2.95 | 8.0 | 95 |

| YOLOv8n + DRB | 68.1 | 75.6 | 76.6 | 2.62 | 7.4 | 104 |

| YOLOv8n + Inner-Iou | 74.6 | 72.5 | 77.4 | 3.01 | 8.1 | 108 |

| YOLOv8n + DWR + DRB | 73.8 | 71.6 | 78.0 | 2.66 | 7.5 | 126 |

| DDI-YOLO (ours) | 72.5 | 71.4 | 78.3 | 2.66 | 7.5 | 158 |

| Dataset | Methods | Defect Type | P% | R% | mAP% |

|---|---|---|---|---|---|

| NEU-DET | YOLOv8n | Cr | 35.1 | 54.7 | 38.8 |

| In | 78.9 | 86.2 | 84.7 | ||

| Pa | 83.7 | 85.7 | 88.9 | ||

| Ps | 82.9 | 81.2 | 83.9 | ||

| Rs | 52.1 | 64.0 | 64.0 | ||

| Sc | 82.5 | 92.7 | 95.1 | ||

| DDI-YOLO | Cr | 49.7 | 31.2 | 38.7 (↓0.1) | |

| In | 78.6 | 78.4 | 86.5 (↑1.8) | ||

| Pa | 81.1 | 86.7 | 90.5 (↑1.6) | ||

| Ps | 76.9 | 75.0 | 83.7 (↓0.2) | ||

| Rs | 67.4 | 64.7 | 74.6 (↑10.6) | ||

| Sc | 81.4 | 92.4 | 95.6 (↑0.5) |

| Model | P% | R% | mAP% | Params/M | GFLOPs/G | FPS/(Frames/s) |

|---|---|---|---|---|---|---|

| SSD | 75.50 | 61.12 | 73.03 | 21.5 | 31.5 | 42 |

| YOLOv3-tiny | 61.8 | 70.7 | 69.0 | 12.13 | 18.9 | 95 |

| YOLOv5n | 72.3 | 73.9 | 77.7 | 2.50 | 7.1 | 111 |

| YOLOv6n | 71.8 | 70.7 | 76.4 | 4.23 | 11.8 | 99 |

| YOLOv7-tiny | 70.5 | 61.2 | 66.8 | 6.03 | 13.2 | 89 |

| YOLOv8n | 69.2 | 77.4 | 75.9 | 3.01 | 8.1 | 99 |

| RT-DETR | 61.4 | 63.2 | 66.5 | 28.5 | 100.6 | 44 |

| DDI-YOLO (ours) | 72.5 | 71.4 | 78.3 | 2.66 | 7.5 | 158 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, T.; Pan, P.; Zhang, J.; Zhang, X. Steel Surface Defect Detection Algorithm Based on Improved YOLOv8n. Appl. Sci. 2024, 14, 5325. https://doi.org/10.3390/app14125325

Zhang T, Pan P, Zhang J, Zhang X. Steel Surface Defect Detection Algorithm Based on Improved YOLOv8n. Applied Sciences. 2024; 14(12):5325. https://doi.org/10.3390/app14125325

Chicago/Turabian StyleZhang, Tian, Pengfei Pan, Jie Zhang, and Xiaochen Zhang. 2024. "Steel Surface Defect Detection Algorithm Based on Improved YOLOv8n" Applied Sciences 14, no. 12: 5325. https://doi.org/10.3390/app14125325

APA StyleZhang, T., Pan, P., Zhang, J., & Zhang, X. (2024). Steel Surface Defect Detection Algorithm Based on Improved YOLOv8n. Applied Sciences, 14(12), 5325. https://doi.org/10.3390/app14125325