Abstract

The positioning error of the GPS method at close distances is relatively large, rendering it incapable of accurately guiding unmanned surface vehicles (USVs) back to the mother ship. Therefore, this study proposes a near-distance recovery method for USVs based on monocular vision. By deploying a monocular camera on the USV to identify artificial markers on the mother ship and subsequently leveraging the geometric relationships among these markers, precise distance and angle information can be extracted. This enables effective guidance for the USVs to return to the mother ship. The experimental results validate the effectiveness of this approach, with positioning distance errors of less than 40 mm within a 10 m range and positioning angle errors of less than 5 degrees within a range of ±60 degrees.

1. Introduction

Unmanned surface vehicles (USVs) are self-driving tools capable of performing tasks in a variety of complex environments without any human intervention. USVs exhibit significant practical value in both commercial and military applications [1]. In commercial sectors, they are utilized in environmental monitoring [2], resource exploration [3], shipping [4], and other related areas. In the military domain, they can execute missions such as mine countermeasures [5], antisubmarine warfare [6], and reconnaissance [7], among others. Autonomous recovery and guidance are essential capabilities for USVs. While GPS positioning can guide USVs to approach the mother ship when they are at a distance, it lacks precision when USVs are in close proximity. To address this issue, employing visual positioning enables more precise guidance for USVs to return to the mother ship, especially in close-range scenarios.

In recent years, visual guidance technology has gained significant attention in the field of computer vision and has been widely applied in domains such as autonomous driving [8], unmanned drone landing [9], robot guidance [10], and automatically guided vehicle (AGV) visual guidance [11]. Visual sensors can capture rich environmental scene information, enabling the precise positioning and guidance of targets. Additionally, they are cost-effective and user-friendly, making them highly favored in both academic and industrial sectors [12,13,14].

Visual guidance typically uses a monocular vision model or a multi-vision model to position the target and then uses the obtained position and posture of the target to guide its movement [15]. Multi-vision requires multiple cameras, which raises costs, and must also solve the feature matching problem between the different cameras, which complicates operation [16,17]. In contrast, monocular vision requires only a single camera and can be implemented with the pinhole imaging principle, resulting in lower costs and convenient operation [18,19]. The commonly used methods for monocular vision positioning include the Perspective-n-Point (PNP) method [20], the imaging model method [21], the data regression modeling method [22], and the geometric relationship method [23]. The imaging model method achieves positioning based on a similar triangle relationship and is typically utilized for forward vehicle positioning in the transportation field, but it is not suitable for the directional shifts of water surface targets. The data regression method achieves positioning based on a mapping model constructed from the pixel coordinates of feature points and the actual coordinate data. This method requires a large amount of training data and is significantly influenced by the environment, so it is not suitable for water surface target positioning. The geometric relationship method establishes a model based on the known vertical height of the camera installation, enabling accurate positioning of targets within a plane. However, water wave fluctuations can lead to significant positioning errors, making it unsuitable for water surfaces with pronounced ripples. The PNP method is a common technique in monocular vision positioning and guidance. It achieves positioning and guidance by identifying artificial markers and utilizing the geometric features of these markers. Therefore, this paper adopts the PNP method for the positioning and recovery guidance of unmanned surface vessels.

Visual positioning and guidance based on artificial markers have a wide range of applications. Lin [24] designed an artificial marker that consists of a circle and the letter “H”. They used a model-based enhancement approach to improve the quality and brightness of the target image. This enabled safe landing of unmanned drones in low-light conditions. Babinec [25] developed a specialized ArUco marker, which comprises black or white square blocks measuring 7 × 7 in size. The recognition of ArUco markers is used for robot localization. Huang [26] utilized the internal circular refueling port on rotary-wing aircraft as a marker for recognition. This marker is used in autonomous docking and refueling tasks for unmanned drones.

Autonomous recovery technology for USVs based on vision is currently a research focus in the field of marine technology. To solve the problem of large GPS positioning errors within a short range which prevent accurate guidance of unmanned surface vehicles (USVs) returning to their mother ships, this paper proposes a close-range recovery guidance method based on monocular vision for USVs. Firstly, a two-level circular artificial marker is designed for target localization at different distances. Secondly, the marker is initially extracted using color segmentation, and then, ellipse fitting based on least squares estimation is employed to obtain the center points of the eight ellipses corresponding to the marker. Subsequently, a correction method is proposed to address the issue of noncoincident center points of the two circles in the marker on the imaging plane. Finally, based on the corrected pixel coordinates of the four circular markers, the PNP method is utilized for localization, guiding the USV back to the mother ship. The main contributions of this paper are as follows:

- Designed an artificial marker consisting of a circular ring for close-range visual localization.

- Utilized the least squares method to obtain the pixel coordinates of the centers of the two circles within the ring and addressed the issue of noncoinciding centers caused by perspective transformation using the cross-ratio invariance.

- Analyzed the influence of different distances on positioning angles and the influence of different angles on positioning distances. Through experimental comparison, it has been demonstrated that the method proposed in this paper is practical for close-range positioning and can be applied to recovery guidance for unmanned boats.

The structure of this article consists of four parts. The first part is the introduction, which provides a detailed description of the research background and significance, and discusses the structure and contribution of the present study. The second part is the content of the research methods, which provides a detailed description of the content and innovation of the proposed methods. The third part is the experimental results and analysis, which validates and analyzes the proposed methods through experiments. The fourth part is the conclusion, which summarizes this study and outlines the limitations of the proposed methods and potential future directions.

2. Materials and Methods

This section describes the specific method for USV localization and guidance, which consists of four parts: the design of artificial markers, detection of artificial markers and extraction of ellipse centers, correction of circular ring center points, and visual localization of the USV.

2.1. Design of Artificial Markers

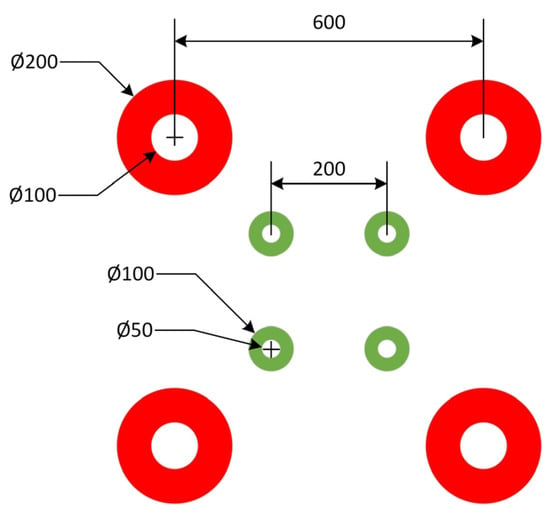

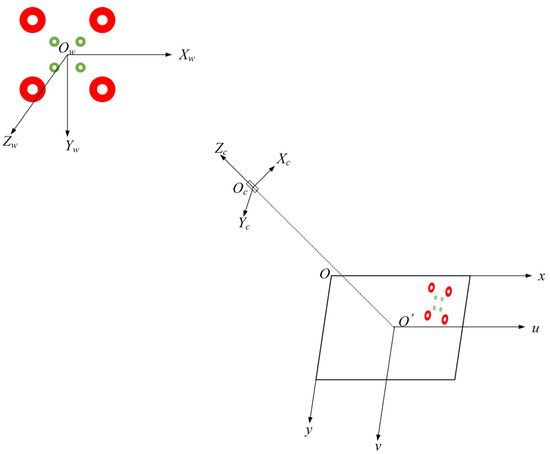

The structure and geometric characteristics of circular markers are simple, and their projection on the image plane is always approximated as an ellipse, making them easy to recognize. Therefore, this study introduces a two-tier artificial marker composed of circular rings for autonomous recovery guidance of USVs. The dimensional structure of the artificial marker is depicted in Figure 1. The first tier consists of four outer red circular rings, with diameters of 100 mm and 200 mm, and a center-to-center distance of 600 mm. The second tier comprises four inner green circular rings, with diameters of 50 mm and 200 mm, and a center-to-center distance of 200 mm. The design of the two-tier circular marker aims to facilitate recognition and positioning at different distances. When the distance is close, positioning can be achieved by identifying the four inner green circular rings. Conversely, in situations where the distance is far and the camera fails to identify the four green circular rings, positioning can be accomplished by recognizing the four outer red circular rings.

Figure 1.

Camera shooting scene.

2.2. Detection of Artificial Markers and Extraction of Ellipse Centers

The detection of artificial markers is a prerequisite for visual positioning. By detecting the artificial markers installed on the mother ship, the positioning of the USV can be achieved based on the characteristics of the artificial markers. Therefore, a method combining color segmentation and least squares ellipse fitting is proposed to achieve the detection of artificial markers and the extraction of the center points of the imaged ellipses. This method is aimed toward achieving accurate detection and localization of the artificial markers.

The color segmentation technique is employed to initially differentiate artificial markers from the background. Color segmentation is a method of image segmentation that relies on color features. Converting the acquired RGB image to the HSV color space enables better differentiation of color characteristics, facilitating the segmentation of artificial markers. The conversion formula for the HSV image is given by Equation (1), where H, S, and V represent the hue, saturation, and value, respectively. R, G, and B correspond to the values of the red, green, and blue channels, respectively.
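The color test described above can be sketched per pixel as follows. This is a minimal illustration, not the segmentation used in the experiments: it relies on the standard RGB-to-HSV conversion from Python's `colorsys` module, and the function name `classify_pixel` and all threshold values are assumptions that would need tuning for a real camera and lighting.

```python
import colorsys

# Hypothetical thresholds; real values depend on the camera and the lighting.
MIN_SATURATION = 0.4
MIN_VALUE = 0.2


def classify_pixel(r, g, b):
    """Return 'red', 'green', or None for an 8-bit RGB pixel."""
    # colorsys expects channel values in [0, 1] and returns H, S, V in [0, 1].
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    if s < MIN_SATURATION or v < MIN_VALUE:
        return None  # too grey or too dark to be a marker color
    hue_deg = h * 360.0
    if hue_deg <= 20.0 or hue_deg >= 340.0:  # red wraps around 0 degrees
        return "red"
    if 80.0 <= hue_deg <= 160.0:
        return "green"
    return None
```

Working on hue rather than on raw RGB values makes the red and green tests largely independent of brightness, which is the motivation for the conversion to HSV.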

Figure 2b shows the image of the artificial marker after color segmentation. However, interference regions other than the artificial marker are still present in Figure 2b. To eliminate this interference and extract the pixel coordinates of the center point of each imaged ellipse, a least squares ellipse fitting method is employed [27]. The final fitting result is illustrated in Figure 2c. Least squares ellipse fitting is used because the projection of a circle on the image plane is always approximately an ellipse. The principle of the method is to first assume the parameters of an ellipse and then calculate the sum of the squared distances between all of the points and the assumed ellipse. The parameters that minimize this sum are taken as the parameters of the fitted ellipse. The specific implementation process is as follows:

Figure 2.

Diagrams illustrating the process of detection and ellipse fitting of artificial markers: (a) the original image; (b) color segmentation; (c) least squares fitting of an ellipse.

First, an equation for the ellipse is assumed (Equation (2)), where the pixel coordinates of the points on the ellipse in the image plane are denoted as Pi(xi, yi). Based on the ellipse equation in Equation (2) and the principle of least squares, the objective function F(A, B, C, D, E) is derived. The objective function F is represented by Equation (3).

To obtain the minimum value of the objective function F, it is necessary to set the partial derivatives of F(A, B, C, D, E) with respect to each parameter to zero, as shown in Equation (4).
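Equations (2)–(4) are not reproduced in this copy of the text. One common form that is consistent with the five parameters A–E is given here as an assumption rather than as the exact expressions of the original: a general conic whose x² coefficient is normalized to 1, with N denoting the number of boundary points.

```latex
% Assumed form of Equation (2): normalized conic
x^2 + Axy + By^2 + Cx + Dy + E = 0

% Assumed form of Equation (3): sum of squared algebraic residuals
F(A,B,C,D,E) = \sum_{i=1}^{N} \left( x_i^2 + A x_i y_i + B y_i^2 + C x_i + D y_i + E \right)^2

% Assumed form of Equation (4): stationarity conditions
\frac{\partial F}{\partial A} = \frac{\partial F}{\partial B} = \frac{\partial F}{\partial C}
  = \frac{\partial F}{\partial D} = \frac{\partial F}{\partial E} = 0
```

Because F is quadratic in the five parameters, these conditions form a linear system of five equations in A, B, C, D, and E.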

According to the principle of solving partial derivatives, Equation (5) can be derived. Then, by substituting the acquired pixel coordinates of the ellipse in the image plane, Pi(xi, yi), into Equation (5), the unknown parameters of the ellipse, A, B, C, D, and E, can be solved.

Once the values of the ellipse parameters A, B, C, D, and E are solved, they can be substituted into Equation (6) to obtain the pixel coordinates of each ellipse’s center point. Equation (6) represents the formula for solving the coordinates of the ellipse’s center point, as well as the lengths of the major and minor axes. Here, (x0, y0) represents the center point of the ellipse, a represents the length of the major axis, and b represents the length of the minor axis.

Least squares ellipse fitting yields the pixel coordinates of the center points of eight ellipses in the image plane, because each of the four rings produces two ellipses in the image plane. Figure 2 illustrates the process of artificial marker detection and the extraction of the ellipse center points.
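The fitting procedure can be sketched in a few lines. The sketch assumes the normalized conic x² + Axy + By² + Cx + Dy + E = 0 (an assumption, since the equations are not reproduced here) and solves the resulting normal equations by Gaussian elimination. The helper names `solve_linear` and `fit_ellipse_center` are hypothetical, and the centroid shift is an added numerical precaution rather than a step described in the text.

```python
def solve_linear(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_ellipse_center(points):
    """Fit x^2 + A*x*y + B*y^2 + C*x + D*y + E = 0 to at least five
    boundary points and return the ellipse center (x0, y0)."""
    # Shift to the centroid first to keep the normal equations well conditioned.
    mx = sum(x for x, _ in points) / len(points)
    my = sum(y for _, y in points) / len(points)
    shifted = [(x - mx, y - my) for x, y in points]
    # Each point gives one linear equation in (A, B, C, D, E).
    rows = [[x * y, y * y, x, y, 1.0] for x, y in shifted]
    rhs = [-(x * x) for x, _ in shifted]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(5)] for i in range(5)]
    atb = [sum(r[i] * t for r, t in zip(rows, rhs)) for i in range(5)]
    A, B, C, D, E = solve_linear(ata, atb)
    # The center is the point where both partial derivatives of the conic vanish.
    den = A * A - 4.0 * B
    x0 = (2.0 * B * C - A * D) / den
    y0 = (2.0 * D - A * C) / den
    return (x0 + mx, y0 + my)
```

Applied to the inner and outer edge points of one ring, the function returns the two ellipse centers that serve as inputs to the correction step in Section 2.3.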

2.3. Correction of Circular Ring Center Points

While a circle is approximately represented as an ellipse on the imaging plane and is easier to recognize, there can still be deviations in the center points of the circles due to the effects of perspective transformation. Therefore, we designed a circular marker which allows us to correct the center points using the circular structure.

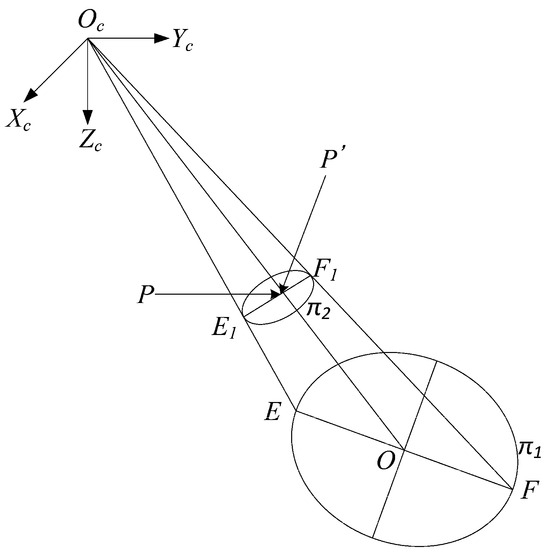

Figure 3 depicts the perspective projection image of an individual circle in the artificial marker. In Figure 3, XcYcOcZc represents the camera coordinate system, π1 denotes a circular plane within the artificial marker, O represents the center of the circle, EF represents the diameter of the circle, π2 indicates the ellipse of the circular marker after undergoing perspective transformation on the imaging plane, E1F1 represents the projection of EF on the imaging plane, P′ represents the intersection point between OcO and E1F1, and P represents a center point of an ellipse extracted in Section 2.2 on the imaging plane.

Figure 3.

Perspective transformation of a circle.

In the first case of projection imaging, the optical axis Zc of the camera is perpendicular to the plane π1. The planes π1 and π2 are then parallel to each other, the line EF is parallel to the line E1F1, and the triangle OcE1F1 is similar to the triangle OcEF. This similarity yields Equation (7). According to Equation (7) and the equality EO = OF, it can be concluded that E1P′ = P′F1, which implies that P and P′ coincide.
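Equation (7) is not reproduced in this copy. A form consistent with the similar triangles described above, offered here as an assumption, is:

```latex
% Assumed form of Equation (7): ratios from similar triangles O_c E_1 F_1 and O_c E F
\frac{E_1 P'}{EO} = \frac{P' F_1}{OF} = \frac{O_c P'}{O_c O}
```

With EO = OF, the first two ratios give E1P′ = P′F1, so P′ is the midpoint of E1F1.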

In the second case of projection imaging, when the camera’s optical axis Zc is not perpendicular to the plane π1, the point P′ and the ellipse center point P obtained through least squares fitting will not coincide, resulting in a center deviation.

In order to address the center deviation issue of the ellipse in the second case, this study proposes a center correction method using a ring marker. By utilizing the imaging relationship of the ring marker, a unique center point can be identified. The specific procedure is as follows:

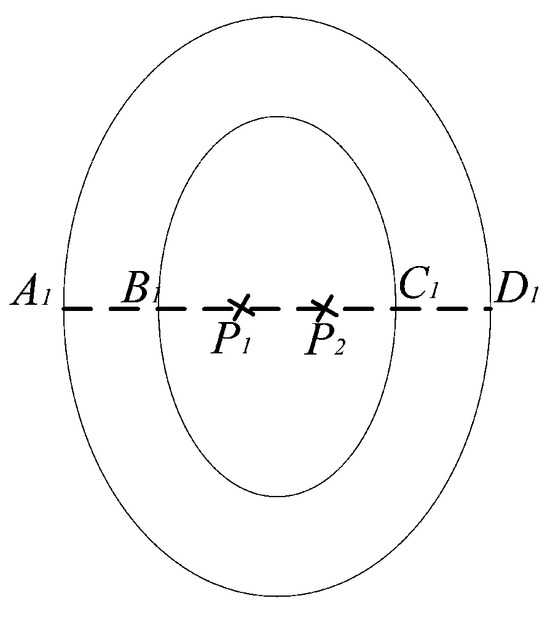

First, the method described in Section 2.2 is used to obtain the pixel coordinates of the center points P1 and P2 of the two ellipses on the image plane. Points P1 and P2 are then connected, and the line P1P2 is extended to intersect the two ellipses at points A1, B1, C1, and D1, as shown in Figure 4.

Figure 4.

Circular ring in image plane.

Next, the linear equation of the line A1D1 can be derived based on the points P1 and P2. Furthermore, utilizing Equation (2) from Section 2.2, the equations for the two ellipses can be obtained. Finally, by simultaneously solving the linear equation of the line and the equations of the ellipses, the pixel coordinates of points A1, B1, C1, and D1 can be determined.
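The simultaneous solution of the line and ellipse equations can be sketched as follows. The sketch assumes the ellipse is given in the normalized form x² + Axy + By² + Cx + Dy + E = 0 (an assumption, as the equations are not reproduced here) and writes the line through P1 and P2 in parametric form, which reduces the problem to one quadratic equation. The function name `line_ellipse_intersections` is hypothetical.

```python
import math


def line_ellipse_intersections(p1, p2, params):
    """Intersect the line through p1 and p2 (two distinct points) with the
    conic x^2 + A*x*y + B*y^2 + C*x + D*y + E = 0.

    Returns a list of two points, or an empty list if the line misses the conic.
    """
    A, B, C, D, E = params
    x1, y1 = p1
    dx, dy = p2[0] - x1, p2[1] - y1
    # Substituting x = x1 + t*dx, y = y1 + t*dy gives qa*t^2 + qb*t + qc = 0.
    qa = dx * dx + A * dx * dy + B * dy * dy
    qb = (2.0 * x1 * dx + A * (x1 * dy + y1 * dx) + 2.0 * B * y1 * dy
          + C * dx + D * dy)
    qc = x1 * x1 + A * x1 * y1 + B * y1 * y1 + C * x1 + D * y1 + E
    disc = qb * qb - 4.0 * qa * qc
    if disc < 0.0:
        return []
    root = math.sqrt(disc)
    ts = [(-qb - root) / (2.0 * qa), (-qb + root) / (2.0 * qa)]
    return [(x1 + t * dx, y1 + t * dy) for t in ts]
```

Calling the function once with the outer-ellipse parameters and once with the inner-ellipse parameters gives the four points A1, D1 and B1, C1, respectively. When P1 and P2 coincide (the frontal case), the line is undefined and no correction is needed.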

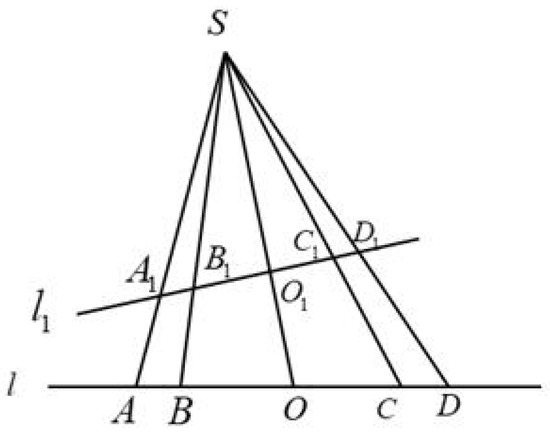

Finally, based on the cross-ratio invariance principle [28] in perspective projection, the pixel coordinates of the unique center point can be determined. Cross-ratio invariance states that, for any projective transformation, when collinear points A, B, C, and D on the ring are mapped to new points A1, B1, C1, and D1 on the image plane, their cross-ratio remains unchanged, i.e., CR(A1, B1, O1, C1) = CR(A, B, O, C), where O is the center of the ring. The principle of cross-ratio invariance is depicted in Figure 5, where S represents the camera position, l1 denotes the image plane, l represents the plane of the artificial marker, and O1 represents the unique center point of the ring on the pixel plane. The cross-ratio formula is shown as Equation (8).

Figure 5.

Principle diagram of cross-ratio invariance.
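The correction step can be sketched numerically. The sketch assumes the cross-ratio is defined as CR(A, B; O, C) = (AO · BC) / (BO · AC) with signed distances along the line, which is one standard convention and may differ in form from Equation (8). On the marker plane, with outer radius R and inner radius r, the points lie at −R, −r, 0, and r along the diameter, so CR = 2R / (R + r). The function name `corrected_center` and its argument names are hypothetical.

```python
import math


def corrected_center(a1, b1, c1, r_outer, r_inner):
    """Recover the image of the ring center O1 from three collinear image points.

    a1: intersection with the outer ellipse on the near side,
    b1: intersection with the inner ellipse on the same side,
    c1: intersection with the inner ellipse on the far side.
    """
    # Unit direction of the line, measured from a1 towards c1.
    ux, uy = c1[0] - a1[0], c1[1] - a1[1]
    length = math.hypot(ux, uy)
    ux, uy = ux / length, uy / length
    # Signed 1D positions of the three points along the line (a1 is the origin).
    a = 0.0
    b = (b1[0] - a1[0]) * ux + (b1[1] - a1[1]) * uy
    c = length
    # Cross-ratio on the marker plane for the points -R, -r, 0, r.
    k = 2.0 * r_outer / (r_outer + r_inner)
    # Solve (o - a)(c - b) = k (o - b)(c - a) for the unknown position o.
    o = (a * (c - b) - k * b * (c - a)) / ((c - b) - k * (c - a))
    return (a1[0] + o * ux, a1[1] + o * uy)
```

Only the ratio of the two radii enters the result, so the physical unit of `r_outer` and `r_inner` does not matter as long as both use the same one.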

2.4. Visual Localization of the USV

The positioning of USVs on the water surface is achieved by measuring the distance and angle between the camera and the artificial marker using monocular vision. The distance obtained from visual localization is the linear distance between the camera and the artificial marker, while the angle refers to the angle between the camera’s optical axis and the artificial marker. By calculating the distance and angle, the USV can be guided back to the mother ship.

The centers of the four circular rings in the artificial marker are coplanar, and no three of them are collinear. Based on the obtained four center points, the PNP method is utilized for visual localization. Given the known pixel coordinates of the four coplanar points and their corresponding world coordinates, a unique solution can be obtained. The scene captured by the camera is illustrated in Figure 6.

Figure 6.

The camera’s shooting scene.

2.4.1. Coordinate System Conversion

The PNP localization method involves the conversion of coordinates among four coordinate systems: the image coordinate system, pixel coordinate system, camera coordinate system, and world coordinate system. The image coordinate system uO′v is established with the image plane center as the coordinate origin. The pixel coordinate system xOy is established with the top-left corner of the image plane’s first pixel as the coordinate origin. The camera coordinate system XcYcOcZc is established with the camera’s optical center as the origin. The world coordinate system XwYwOwZw is established to determine the target position. Visual localization can be achieved through the transformation relationships between the four coordinate systems.

The transformation relationship between the world coordinate system and the camera coordinate system is described by Equation (9), where R and T represent the rotation matrix and translation matrix, respectively.

The transformation relationship between the camera coordinate system and the pixel coordinate system is described by Equation (10), where f represents the camera focal length.

The transformation relationship between the pixel coordinate system and the image coordinate system is described by Equation (11), where (dx, dy) represents the size of each pixel in the CCD/CMOS image sensor.

By combining Equations (9)–(11), the transformation relationship between the image coordinate system and the world coordinate system can be obtained, as shown in Equation (12). In Equation (12), M1 represents the camera’s intrinsic matrix, which contains parameters obtained through camera calibration. M2 represents the projection transformation matrix, also known as the extrinsic matrix, which mainly consists of the rotation matrix R and the translation matrix T.
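The chain of transformations can be illustrated with a small projection routine. This is a sketch under stated assumptions: the world-to-camera step is taken as Pc = R · Pw + T, lens distortion is ignored, and the intrinsic parameters are expressed as fx = f/dx, fy = f/dy together with a principal point (cx, cy) measured from the top-left corner of the image. The function and parameter names are hypothetical.

```python
def project_point(pw, rot, t, fx, fy, cx, cy):
    """Project a world point pw = (Xw, Yw, Zw) to coordinates measured in
    pixels from the top-left image corner.

    rot is a 3x3 rotation matrix (nested lists), t a translation of length 3.
    """
    # World -> camera: Pc = R * Pw + T.
    xc, yc, zc = (sum(rot[i][j] * pw[j] for j in range(3)) + t[i]
                  for i in range(3))
    if zc <= 0.0:
        raise ValueError("point is behind the camera")
    # Camera -> image plane (perspective division), then scale and shift
    # by the intrinsic parameters.
    return (fx * xc / zc + cx, fy * yc / zc + cy)
```

For example, with an identity rotation and the marker 1000 mm in front of the camera, the world origin projects onto the principal point (cx, cy).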

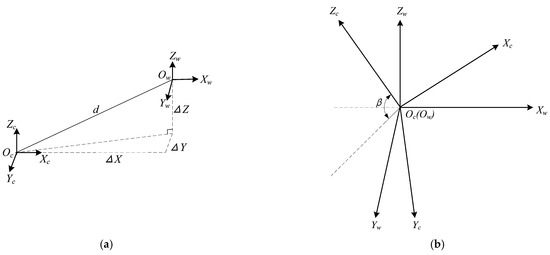

2.4.2. Visual Localization Process

Using the PNP method for visual localization, autonomous recovery guidance for USVs can be achieved. By employing the PNP method, the distance and angle for USV positioning can be determined. The distance refers to the distance between the origins of the world coordinate system and the camera coordinate system, while the angle represents the angle between the optical axis and the plane of the artificial marker. To solve for the distance and angle, the extrinsic matrix M2 is computed through coordinate transformation. Subsequently, M2 is decomposed to obtain the rotation matrix R and the translation matrix T. Finally, by using the R and T matrices, the distance and angle can be derived. Figure 7 shows the translation and rotation between the two coordinate systems, where XwYwOwZw and XcYcOcZc represent the world coordinate system and the camera coordinate system, respectively. In Figure 7a, ‘d’ represents the distance between the coordinate systems (i.e., the distance determined by the localization method in this paper). In Figure 7b, ‘β’ represents the angle between the camera optical axis Zc and the XwOwYw plane of the world coordinate system (i.e., the angle determined by the localization method in this paper).

Figure 7.

Translation and rotation of coordinate systems: (a) translation of coordinate systems; (b) rotation of coordinate systems.

The specific process for solving the rotation matrix R and the translation matrix T is as follows. The world coordinate system is established based on the center of the artificial marker as the origin. Thus, by utilizing the structural dimensions of the artificial marker as depicted in Figure 1 of Section 2.1, it is possible to calculate the world coordinates (Xw, Yw, Zw) for each circle’s center point on the artificial marker. Subsequently, employing the methodology outlined in Section 2.3 enables the determination of the corrected pixel coordinates for the center point. Afterwards, by incorporating the world coordinates and pixel coordinates of the center point, along with the intrinsic matrix M1 derived from camera calibration, into Formula (12), it becomes feasible to derive the projection transformation matrix M2. Ultimately, by decomposing the M2 matrix, the rotation matrix R and the translation matrix T can be obtained, as evidenced by Formula (13).
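For four coplanar points, one way to obtain R and T is through the plane-to-image homography. The sketch below uses that approach, which is not necessarily the solver used in this paper. It assumes Zw = 0 for all marker points, no lens distortion, noise-free correspondences, the convention Pc = R · Pw + T, and intrinsics given as fx, fy, cx, cy. All function names are hypothetical.

```python
import math


def solve_linear(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def planar_pose(world_xy, pixels, fx, fy, cx, cy):
    """Recover R (3x3 nested list) and T from four coplanar points (Zw = 0)."""
    # 1. Homography H (with h33 = 1) mapping (Xw, Yw, 1) to (u, v, 1).
    a, b = [], []
    for (X, Y), (u, v) in zip(world_xy, pixels):
        a.append([X, Y, 1.0, 0.0, 0.0, 0.0, -u * X, -u * Y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, X, Y, 1.0, -v * X, -v * Y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    # 2. Remove the intrinsics: the columns of K^-1 * H are scale * [r1, r2, T].
    cols = []
    for j in range(3):
        h1, h2, h3 = h[j], h[3 + j], h[6 + j]
        cols.append([(h1 - cx * h3) / fx, (h2 - cy * h3) / fy, h3])
    # 3. Fix the scale so that r1 and r2 have unit length on average.
    n1 = math.sqrt(sum(c * c for c in cols[0]))
    n2 = math.sqrt(sum(c * c for c in cols[1]))
    scale = 2.0 / (n1 + n2)
    r1 = [scale * c for c in cols[0]]
    r2 = [scale * c for c in cols[1]]
    t = [scale * c for c in cols[2]]
    if t[2] < 0.0:  # the marker must lie in front of the camera
        r1, r2, t = [-c for c in r1], [-c for c in r2], [-c for c in t]
    # 4. The third rotation column is the cross product r1 x r2.
    r3 = [r1[1] * r2[2] - r1[2] * r2[1],
          r1[2] * r2[0] - r1[0] * r2[2],
          r1[0] * r2[1] - r1[1] * r2[0]]
    rot = [[r1[i], r2[i], r3[i]] for i in range(3)]
    return rot, t
```

With the world origin at the marker center and a center-to-center distance of 600 mm, the four outer ring centers would be passed as `world_xy = [(-300, -300), (300, -300), (300, 300), (-300, 300)]`. With noisy measurements, the recovered R is only approximately orthogonal and would normally be refined.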

We then calculate the distance through the translation matrix T. The translation matrix T represents the translation relationship between two coordinate systems, as shown in Figure 7a. It mainly consists of three parameters, representing the translation amounts ΔX, ΔY, and ΔZ along the three coordinate axes. Based on the translation matrix T, the distance between the camera coordinate system and the world coordinate system can be determined by using the formula shown in (14).

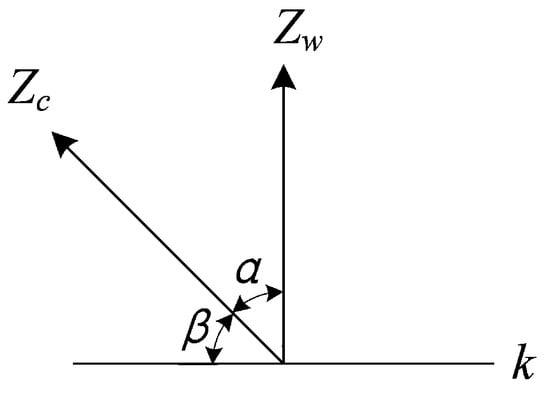

We then calculate the angle based on the rotation matrix R. The rotation matrix R represents the rotational transformation relationship between two coordinate systems, as shown in Figure 7b. Finding the angle between the optical axis (i.e., the Z-axis of the camera coordinate system) and the artificial marker plane is equivalent to finding the complement of the angle between the Z-axis of the camera coordinate system and the Z-axis of the world coordinate system, as shown in Figure 8. In Figure 8, Zc and Zw represent the Z-axes of the camera coordinate system and the world coordinate system, respectively, while the plane labeled k represents the artificial marker plane, which corresponds to the XwOwYw plane of the world coordinate system. In this study, we use vector methods to calculate the angle α between these two Z-axes and then take the complement of this angle to obtain the desired angle β.

Figure 8.

Angle solving diagram.

The specific procedure for calculating the angle is as follows:

Firstly, the third column of the rotation matrix R is considered the representation of the Z-axis of the camera coordinate system in the world coordinate system, as shown in Formula (15).

Next, by transposing the rotation matrix R, the vector representation of the Z-axis of the world coordinate system in the camera coordinate system can be obtained. This is achieved by calculating the transpose matrix RT of R and taking its third column as the vector representation of the Z-axis of the world coordinate system in the camera coordinate system, as shown in Formula (16).

Then, the angle α between the Z-axes of the camera coordinate system and the world coordinate system can be obtained from the two vectors, as shown in Formula (17).

Finally, by taking the complement of α, the angle β between the optical axis and the artificial marker plane can be obtained, as shown in Formula (18).
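The distance and angle computation can be sketched as follows. The sketch assumes the convention Pc = R · Pw + T and measures angles in degrees. Under that assumption, the cosine of the angle between the two Z-axes equals the entry in the third row and third column of R, regardless of the frame in which the two axis vectors are expressed. The function name `distance_and_angle` is hypothetical, and the exact form of Formulas (14)–(18) in the original may differ.

```python
import math


def distance_and_angle(rot, t):
    """Return (d, alpha, beta) from a 3x3 rotation matrix and a translation.

    d     : Euclidean norm of the translation (camera-to-marker distance),
    alpha : angle between the camera Z-axis and the world Z-axis, in degrees,
    beta  : 90 - alpha, the angle between the optical axis and the marker plane.
    """
    d = math.sqrt(sum(component * component for component in t))
    # Clamp against rounding so that acos never receives a value outside [-1, 1].
    cos_alpha = max(-1.0, min(1.0, rot[2][2]))
    alpha = math.degrees(math.acos(cos_alpha))
    beta = 90.0 - alpha
    return d, alpha, beta
```

For a camera facing the marker head-on (identity rotation), the routine gives α = 0° and β = 90°. The sign convention for β and the direction of the world Z-axis are assumptions that would need to match the marker setup.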

3. Experimental Results and Analysis

To validate the effectiveness of the proposed method and measure the accuracy of the distance and angle obtained, a simulated experiment was conducted. The accuracy of both distance and angle was determined using a controlled variable approach. With the angle fixed, images were captured at 1 m intervals within a 10 m range, and the distance d was obtained using the proposed method. With the distance fixed, images were captured at 20° intervals within a range of ±60°, and the angle β was obtained using the proposed method. The experimental equipment used is listed in Table 1.

Table 1.

Experimental equipment and configuration parameters.

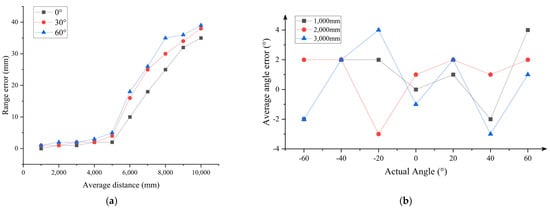

Figure 9 displays the experimental curve results, while Table 2 and Table 3 exhibit the numerical outcomes of the experiments. Figure 9a presents the distance error curves within a range of 10 m from three different angles. It can be observed from Figure 9a that the distance error increases with the actual distance between the camera and the artificial marker. Additionally, the results in Table 2 indicate that when the actual distance is less than 5 m, the measured distance error is within 5 mm. Within the range from 5 to 10 m, the distance error remains within 40 mm. Figure 9b demonstrates the angle measurement error curves for three different distances within a range of ±60°. Furthermore, the results in Table 3 show that the angle errors for all three distances are less than 5°.

Figure 9.

Measurement results of distance and angle: (a) measurement results of distance; (b) measurement results of angle.

Table 2.

Comparison of ranging results at different angles.

Table 3.

Angle measurement error at different distances.

The results of the experiment demonstrate that the proposed monocular visual localization method keeps the distance error within 40 mm over a 10 m range and the angle error below 5° over a range of ±60°. It can therefore provide effective guidance for the recovery of unmanned surface vehicles (USVs).

4. Conclusions

The recovery and guidance of USVs is a crucial technology for their operation. In this regard, the accurate localization of USVs within a short range where GPS positioning is not precise becomes a challenge. To address this issue, this paper proposes a monocular vision-based localization method to guide USVs back to the mother ship. The proposed method achieves localization and guidance by recognizing artificial markers installed on the mother ship based on their structural and feature relationships. Firstly, in terms of artificial marker recognition, this paper applies a color segmentation method to obtain the position of the marker in the image. Then, it uses least squares fitting to approximate the elliptical shape of the marker, allowing for the extraction of the center coordinate. Furthermore, to address the misalignment caused by perspective distortion, a center point calibration method based on circular ring structure and cross-ratio invariance has been proposed. This method obtains the pixel coordinates of the unique center points of each circle on the marker in the imaging plane. Finally, the distance and angle are obtained by utilizing the coordinate transformation relationship in the PNP method.

The results demonstrate that the proposed method can effectively recognize artificial markers and achieve accurate distance and angle measurements. Additionally, the center-point correction method presented in this paper successfully resolves the issue of misalignment between center points. The evaluation indicates that the distance error within 10 m is within 40 mm, and the angle error within ±60° is within 5°. These results validate the effectiveness of the proposed method, which can achieve precise localization and guide the USV back to the mother ship.

The proposed method in this paper aims to achieve precise recovery guidance for short-range targets, while for long-range target guidance, it is necessary to complement this approach with other methods such as GPS positioning. In addition, this paper designs a specific artificial marker to locate and guide unmanned surface vehicles (USVs) back to the mother ship. In the future, other markers on the mother ship can be utilized for visual localization. For example, the recovery hatch on the mother ship can be used to guide the USV by leveraging its structural dimensions and features.

Author Contributions

Conceptualization, Z.S., Z.L. and Q.X.; methodology, Z.S. and Q.X.; visualization, Z.S. and Q.X.; investigation, Z.L. and Q.X.; experiments, Z.S. and Q.X.; writing—original draft preparation, Z.S.; validation, Z.S.; project administration, Z.L.; funding acquisition, Z.L. and Q.W. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Key Research and Development Program of Jiangsu Province, grant number BE2022062; the Zhangjiagang Science and Technology Planning Project, grant number ZKYY2314; the Doctoral Scientific Research Start-up Fund Project of Nantong Institute of Technology, grant number 2023XK(B)02.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors thank all projects for the funding and the team members for their help and encouragement.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Woo, J.; Park, J.; Yu, C.; Kim, N. Dynamic model identification of unmanned surface vehicles using deep learning network. Appl. Ocean Res. 2018, 78, 123–133.

- Lewicka, O.; Specht, M.; Stateczny, A.; Specht, C.; Dardanelli, G.; Brčić, D.; Szostak, B.; Halicki, A.; Stateczny, M.; Widzgowski, S. Integration data model of the bathymetric monitoring system for shallow waterbodies using UAV and USV platforms. Remote Sens. 2022, 14, 4075.

- Mishra, R.; Koay, T.B.; Chitre, M.; Swarup, S. Multi-USV Adaptive Exploration Using Kernel Information and Residual Variance. Front. Robot. AI 2021, 8, 572243.

- Barrera, C.; Padron, I.; Luis, F.S.; Llinas, O. Trends and Challenges in Unmanned Surface Vehicles (USV): From Survey to Shipping. TransNav Int. J. Mar. Navig. Saf. Sea Transp. 2021, 15, 135–142.

- Yang, Q.; Yin, Y.; Chen, S.; Liu, Y. Autonomous exploration and navigation of mine countermeasures USV in complex unknown environment. In Proceedings of the 2021 33rd Chinese Control and Decision Conference (CCDC), Kunming, China, 22–24 May 2021; pp. 4373–4377.

- Feng, L. Research on Anti-submarine Warfare Scheme Design of Unmanned Surface Ship. In Proceedings of the 2022 IEEE 13th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 21–23 October 2022; pp. 133–137.

- Huang, Q.; Ma, X.; Liu, K.; Ma, X.; Pang, W. Autonomous Reconnaissance Action of Swarm Unmanned System Driven by Behavior Tree. In Proceedings of the 2022 IEEE International Conference on Unmanned Systems (ICUS), Guangzhou, China, 28–30 October 2022; pp. 1540–1544.

- Mo, H.; Zhao, X.; Wang, F.Y. Application of interval type-2 fuzzy sets in unmanned vehicle visual guidance. Int. J. Fuzzy Syst. 2019, 21, 1661–1668.

- Patruno, C.; Nitti, M.; Petitti, A.; Stella, E.; D’Orazio, T. A vision-based approach for unmanned aerial vehicle landing. J. Intell. Robot. Syst. 2019, 95, 645–664.

- Chou, J.S.; Cheng, M.Y.; Hsieh, Y.M.; Yang, I.; Hsu, H.T. Optimal path planning in real time for dynamic building fire rescue operations using wireless sensors and visual guidance. Autom. Constr. 2019, 99, 1–17.

- Pan, Y. Challenges in Visual Navigation of AGV and Comparison Study of Potential Solutions. In Proceedings of the 2021 International Conference on Signal Processing and Machine Learning (CONF-SPML), Stanford, CA, USA, 14 November 2021; pp. 265–270.

- Ortiz-Fernandez, L.E.; Cabrera-Avila, E.V.; Silva, B.M.F.; Gonçalves, L.M.G. Smart Artificial Markers for Accurate Visual Mapping and Localization. Sensors 2021, 21, 625.

- Khattar, F.; Luthon, F.; Larroque, B.; Dornaika, F. Visual localization and servoing for drone use in indoor remote laboratory environment. Mach. Vis. Appl. 2021, 32, 32.

- Wu, X.; Sun, C.; Zou, T.; Li, L.; Wang, L.; Liu, H. SVM-based image partitioning for vision recognition of AGV guide paths under complex illumination conditions. Robot. Comput. Integr. Manuf. 2020, 61, 101856.

- Li, H.; Zhang, Y.; Ye, B.; Zhao, H. A Monocular vision positioning and tracking system based on deep neural network. J. Eng. 2023, 3, e12246.

- Yu, R.; Fang, X.; Hu, C.; Yang, X.; Zhang, X.; Zhang, C.; Yang, W.; Mao, Q.; Wang, J. Research on positioning method of coal mine mining equipment based on monocular vision. Energies 2022, 15, 8068.

- Wang, H.; Sun, Y.; Wu, Q.M.J.; Lu, X.; Wang, X.; Zhang, Z. Self-supervised monocular depth estimation with direct methods. Neurocomputing 2021, 421, 340–348.

- Meng, C.; Bao, H.; Ma, Y.; Xu, X.; Li, Y. Visual Meterstick: Preceding vehicle ranging using monocular vision based on the fitting method. Symmetry 2019, 11, 1081.

- Ma, L.; Meng, D.; Zhao, S.; An, B. Visual localization with a monocular camera for unmanned aerial vehicle based on landmark detection and tracking using YOLOv5 and DeepSORT. Int. J. Adv. Robot. Syst. 2023, 20, 17298806231164831.

- Yin, X.; Ma, L.; Tan, X.; Qin, X. A Robust Visual Localization Method with Unknown Focal Length Camera. IEEE Access 2021, 9, 42896–42906.

- Huang, L.; Zhe, T.; Wu, J.; Wu, Q.; Pei, C.; Chen, D. Robust inter-vehicle distance estimation method based on monocular vision. IEEE Access 2019, 7, 46059–46070.

- Cao, X.; Shrikhande, N.; Hu, G. Approximate orthogonal distance regression method for fitting quadric surfaces to range data. Pattern Recognit. Lett. 1994, 15, 781–796.

- Yang, R.; Yu, S.; Yao, Q.; Huang, J.; Ya, F. Vehicle Distance Measurement Method of Two-Way Two-Lane Roads Based on Monocular Vision. Appl. Sci. 2023, 13, 3468.

- Lin, S.; Jin, L.; Chen, Z. Real-Time Monocular Vision System for UAV Autonomous Landing in Outdoor Low-Illumination Environments. Sensors 2021, 21, 6226.

- Babinec, A.; Jurisica, L.; Hubinský, P.; Duchoň, F. Visual localization of mobile robot using artificial markers. Procedia Eng. 2014, 96, 1–9.

- Huang, B.; Sun, Y.R.; Sun, X.D.; Liu, J.Y. Circular drogue pose estimation for vision-based navigation in autonomous aerial re-fueling. In Proceedings of the 2016 IEEE Chinese Guidance, Navigation and Control Conference (CGNCC), Nanjing, China, 12–14 August 2016; pp. 960–965.

- Gu, Y.; Lu, Y.; Yang, H.; Huo, J. Concentric Circle Detection Method Based on Minimum Enveloping Circle and Ellipse Fitting. In Proceedings of the 2019 IEEE 10th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 18–20 October 2019; pp. 523–527.

- Xu, X.; Fei, Z.; Yang, J.; Tan, Z.; Luo, M. Line structured light calibration method and centerline extraction: A review. Results Phys. 2020, 19, 103637.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).