Contextual Hypergraph Networks for Enhanced Extractive Summarization: Introducing Multi-Element Contextual Hypergraph Extractive Summarizer (MCHES)

Abstract

1. Introduction

- We propose a unique method for constructing a multi-element hypergraph that captures extensive semantic, narrative, and discourse relationships within texts, offering a more nuanced understanding of document structure.

- We develop the Contextual Homogenization Module (CHM), which effectively integrates the heterogeneous information present in different types of hyperedges, creating a uniform feature space that enhances data processing.

- Our Hypergraph Contextual Attention Module (HCA) implements a dual-level attention mechanism, refining the focus on the most informative parts of the hypergraph and significantly improving the relevance and coherence of the generated summaries.

- The Extractive Read-out Strategy we introduce optimizes the selection of sentences for the summary based on a combination of their intrinsic content value and their contextual importance, as mediated by their hypergraph connections.

2. Related Work

- Utilizing a hypergraph structure to capture complex relationships among sentences, including semantic, narrative, and discourse connections.

- Incorporating a Contextual Homogenization Module (CHM) to harmonize features from diverse hyperedges, enhancing data integration.

- Implementing a Hypergraph Contextual Attention Module (HCA) with a dual-level attention mechanism, focusing on the most salient information.

- Employing the innovative Extractive Read-out Strategy to ensure that summaries reflect the core themes and logical structure of the original text.

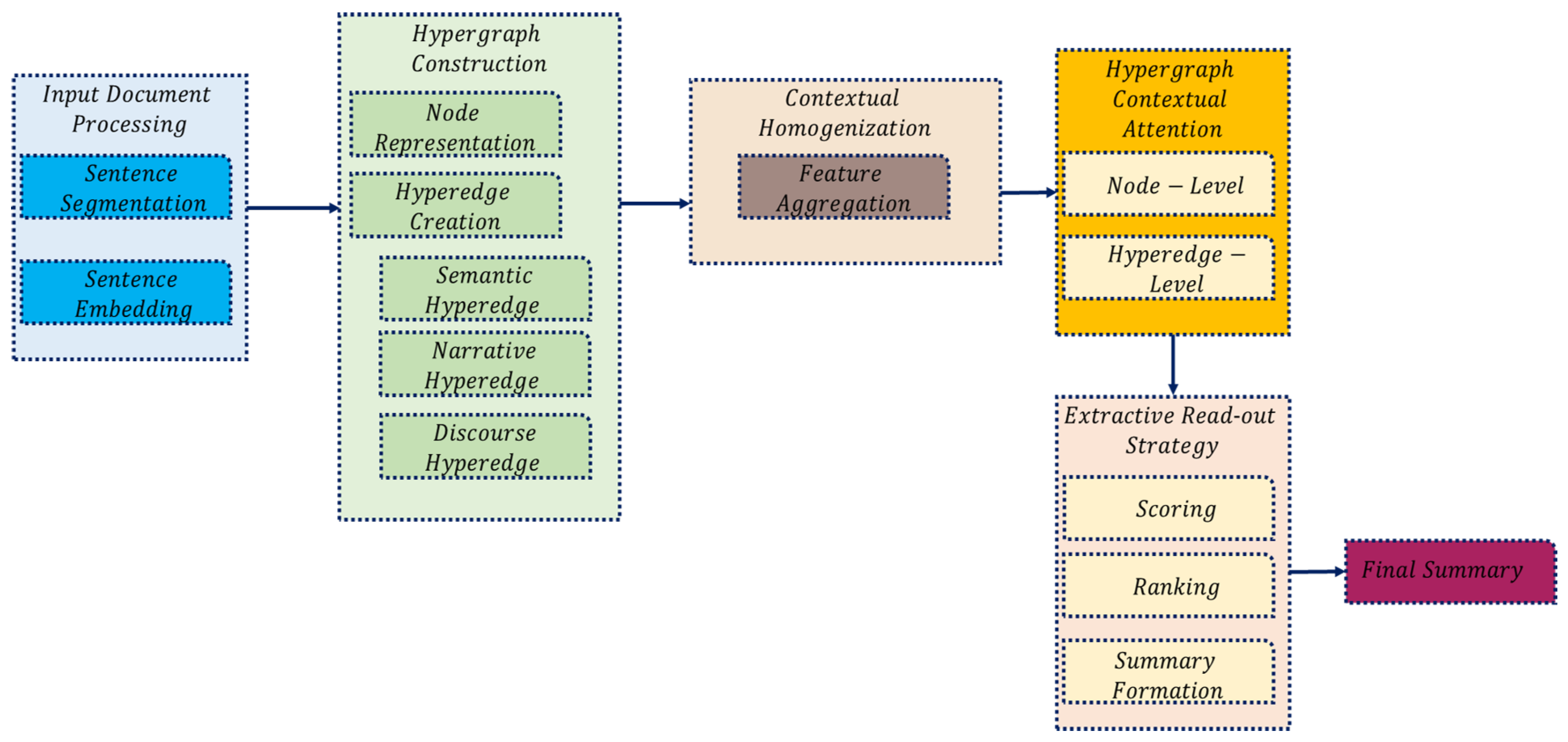

3. Methodology

- Input Document Processing: The document is segmented into individual sentences, which are then transformed into high-dimensional vector representations by using pre-trained embeddings.

- Hypergraph Construction: Sentences are represented as nodes in a hypergraph, with different types of hyperedges (semantic, narrative, and discourse) capturing various contextual relationships between sentences.

- Contextual Homogenization Module (CHM): This module harmonizes features from the different hyperedges to create a unified feature set for each node.

- Hypergraph Contextual Attention (HCA) Module: A dual-level attention mechanism is applied to focus on the most relevant sentences and their contextual relationships.

- Extractive Read-out Strategy: The most informative sentences are selected based on their relevance scores, ranked, and combined to form the final summary.

- Output Summary: The final summary is generated, capturing the core themes and logical structure of the original document.

3.1. Contextual Hypergraph Construction

3.1.1. Hypergraph Definition

- Each node represents a sentence in the document.

- Each hyperedge represents a group of sentences that share a specific contextual relationship, described further below.

3.1.2. Node Representation

3.1.3. Types of Hyperedges

- Semantic hyperedges: They connect nodes that share significant semantic similarities. The semantic similarity between two sentences and is quantified by using the cosine similarity of their embeddings:A semantic hyperedge includes all sentence pairs whose similarity exceeds a predefined threshold .

- Narrative hyperedges: They link consecutive sentences to maintain the narrative flow. Each narrative hyperedge connects sentences that are adjacent in the text:

- Discourse hyperedges: They are formed based on the discourse role of sentences within the document structure (e.g., argumentative structure and exposition). Sentences fulfilling similar roles are grouped under the same hyperedge:where identifies the discourse role of sentence .

3.1.4. Hypergraph construction algorithm

| Algorithm 1 Hypergraph Construction Algorithm |

|

3.2. Contextual Homogenization Module (CHM)

| Algorithm 2 Contextual Homogenization Module (CHM) |

|

3.2.1. Multimodal Factorized Bilinear Pooling (MFB)

3.2.2. Feature Integration across Hyperedges

- Semantic feature integration: For semantic hyperedges, the embeddings of connected nodes are pooled together by using an MFB-based approach, which enhances the feature representation by emphasizing shared semantic characteristics.where represents the set of node vectors in semantic hyperedge .

- Narrative feature integration: Narrative hyperedges connect consecutive sentences, capturing the flow of the narrative. The integration of these features aims to preserve the sequential integrity of the text.where includes the node vectors linked by narrative hyperedges.

- Discourse feature integration: Discourse hyperedges group sentences by their roles within the document’s structure. Integrating these features helps emphasize the rhetorical significance of each sentence grouping.where corresponds to nodes associated with a particular discourse role.

3.3. Hypergraph Contextual Attention Module (HCA)

| Algorithm 3 Hypergraph Contextual Attention (HCA) Module |

|

3.3.1. Attention Mechanism

3.3.2. Gating Mechanism

3.4. Integration and Output

3.5. Extractive Read-Out Strategy

3.5.1. Attention Pooling Mechanism

3.5.2. Sentence Selection for Summary

3.5.3. Summary Compilation

3.5.4. Optimization of Selection Criteria

4. Optimization and Training

Optimization Algorithm

| Algorithm 4 Optimization algorithm |

|

- Early stopping: Monitor the ROUGE score on the validation set after each epoch, and stop training when the score does not improve for a predefined number of consecutive epochs.

- Grid search: Systematically vary parameters within specified ranges, and select the configuration that achieves the highest ROUGE score on the validation set.

5. Experiments and Results

5.1. Datasets

- XSum: This dataset comprises news articles sourced from the British Broadcasting Corporation (BBC), known for their concise single-sentence summaries. It includes approximately 203,028 articles for training, 11,273 for validation, and 11,332 for testing. Each document is accompanied by a professionally written summary, making it ideal for evaluating summarization on short texts [36].

- CNN/DailyMail: This dataset features news articles paired with multi-point bullet summaries, serving as a moderate-length document source for summarization tasks. The non-anonymized version of the dataset was used, segmented into 287,084 training samples, 13,367 validation samples, and 11,489 test samples. The structure of these summaries provides a unique challenge in capturing essential narrative threads across multiple bullet points [37].

- PubMed: As a representative of scientific and technical document summarization, the PubMed dataset contains lengthy abstracts of biomedical articles. It is composed of 83,233 training documents, 4946 for validation, and 5025 for testing. The comprehensive and technical nature of these summaries tests the ability of summarization models to handle complex, information-dense content [38].

5.2. Evaluation Metrics

5.3. Baseline Models

- LEAD-3 is a heuristic baseline that extracts the first three sentences of a document, based on the assumption that the lead sentences contain key information. It serves as a simple yet effective comparison point.

- SummaRuNNer [41] is an RNN-based sequence model that evaluates the salience of sentences based on features extracted across the document to generate a summary.

- NeuSum [42] integrates sentence scoring and selection into a single joint model, using an RNN-based encoder–decoder framework to predict the saliency of sentence combinations directly.

- BanditSum [43] implements a reinforcement learning approach, treating the summarization task as a contextual bandit problem where the model learns to choose sentences that maximize the ROUGE metric.

- JECS [44] utilizes a joint extraction and compression strategy, employing a syntactic transformation of the input text to produce concise summaries.

- HIBERT [45] uses a hierarchical bidirectional transformer to encode long documents and perform document-level summarization.

- NeRoBERTa [47] is a variant that adapts RoBERTa embeddings specifically for nested hierarchical document structures to enhance summarization.

- MatchSum [48] formulates the summarization task as a semantic matching problem between candidate sentences and the document, using a contrastive learning framework.

- HAHSum [49] incorporates a hierarchical attention mechanism with heterogeneous graph representations to refine the summarization process across multiple document levels.

- GenCompareSum [50] is a hybrid model that combines unsupervised abstractive techniques with extractive methods, tailored for summarizing long and complex documents, like scientific papers.

5.4. Experimental Settings

- Preprocessing: Texts were tokenized by using spaCy, and sentences were encoded by using pre-trained BERT-base embeddings to capture deep semantic features.

- Hypergraph construction: A hypergraph was constructed for each document, where nodes represent sentences and hyperedges encapsulate semantic, narrative, and discourse relations.

- Model architecture: The CHM and the HCA module are central to our framework, focusing on homogenizing features and applying dual-level attention, respectively.

- Optimizer: Adam, with a learning rate of 1 × 10−4.

- Batch size: 32 documents per batch to balance computational efficiency and training stability.

- Early stopping: employed based on the validation loss to prevent overfitting, with a patience parameter of 10 epochs.

- Setup: All baselines were implemented with their standard configurations as reported in their respective publications.

- Preprocessing: Uniform preprocessing was applied across all models for a fair comparison, including the same tokenization and embedding methods used for MCHES.

- Hyperparameter tuning: Each model was tuned individually on the validation set of each dataset to optimize performance.

5.5. Model Variants

- MCHES-NoCHM: This variant removes the Contextual Homogenization Module to assess the impact of feature homogenization across different hyperedges:

- −

- Includes: contextual hypergraph, HCA, and Extractive Read-out.

- −

- Excludes: CHM.

- MCHES-NoHCA: This version excludes the Hypergraph Contextual Attention Module, testing the importance of dual-level attention in enhancing summary relevance:

- −

- Includes: contextual hypergraph, CHM, and Extractive Read-out.

- −

- Excludes: HCA.

- MCHES-NoReadout: By omitting the Extractive Read-out Strategy, this variant evaluates the efficacy of the attention-based sentence selection mechanism:

- −

- Includes: contextual hypergraph, CHM, and HCA.

- −

- Excludes: Extractive Read-out.

- MCHES-BasicGraph: This model uses a simplified graph structure without the multi-element enhancements to determine the baseline effectiveness of using any hypergraph structure:

- −

- Includes: basic hypergraph, CHM, HCA, and Extractive Read-out.

- −

- Replaces multi-element contextual hypergraph with basic hypergraph.

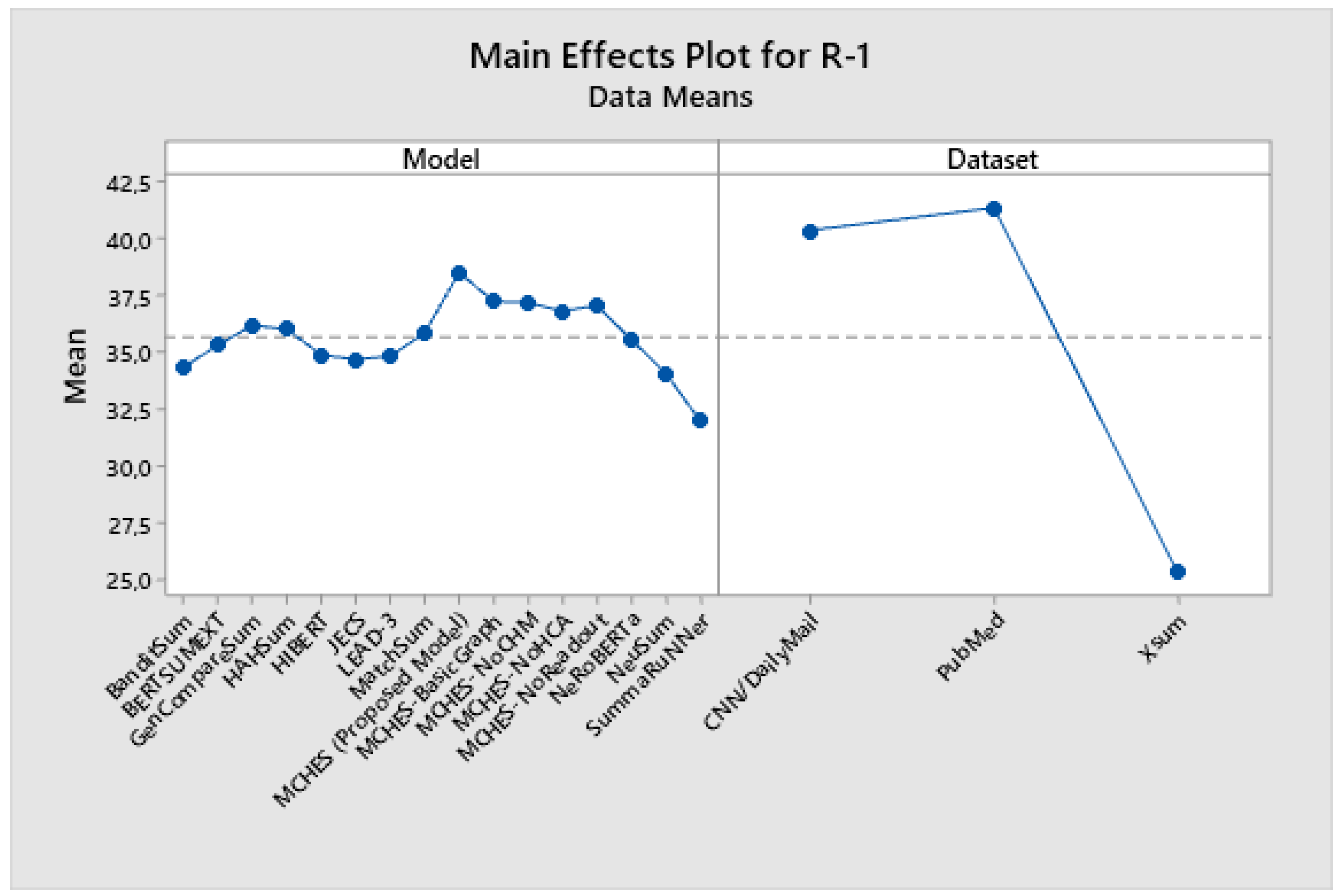

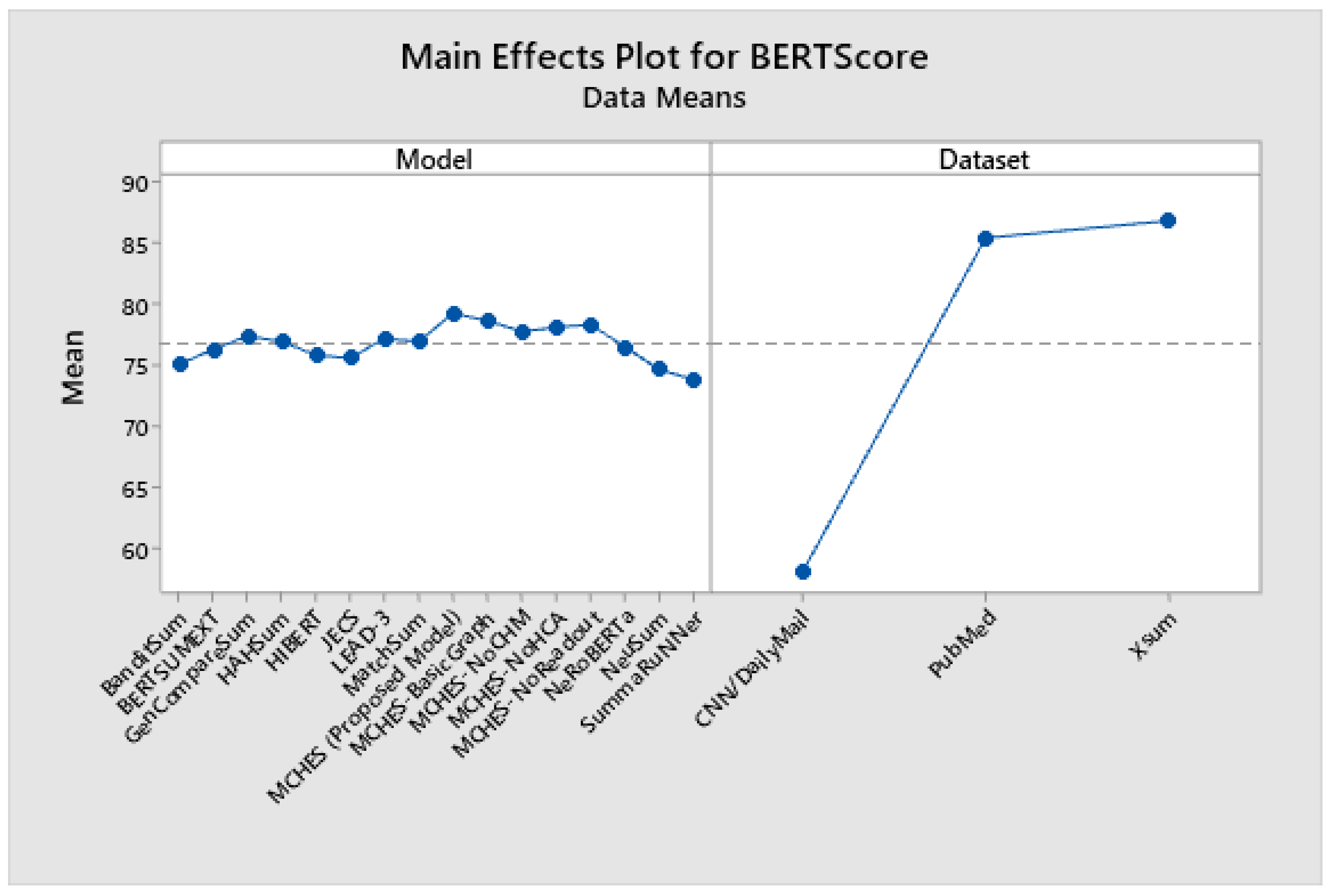

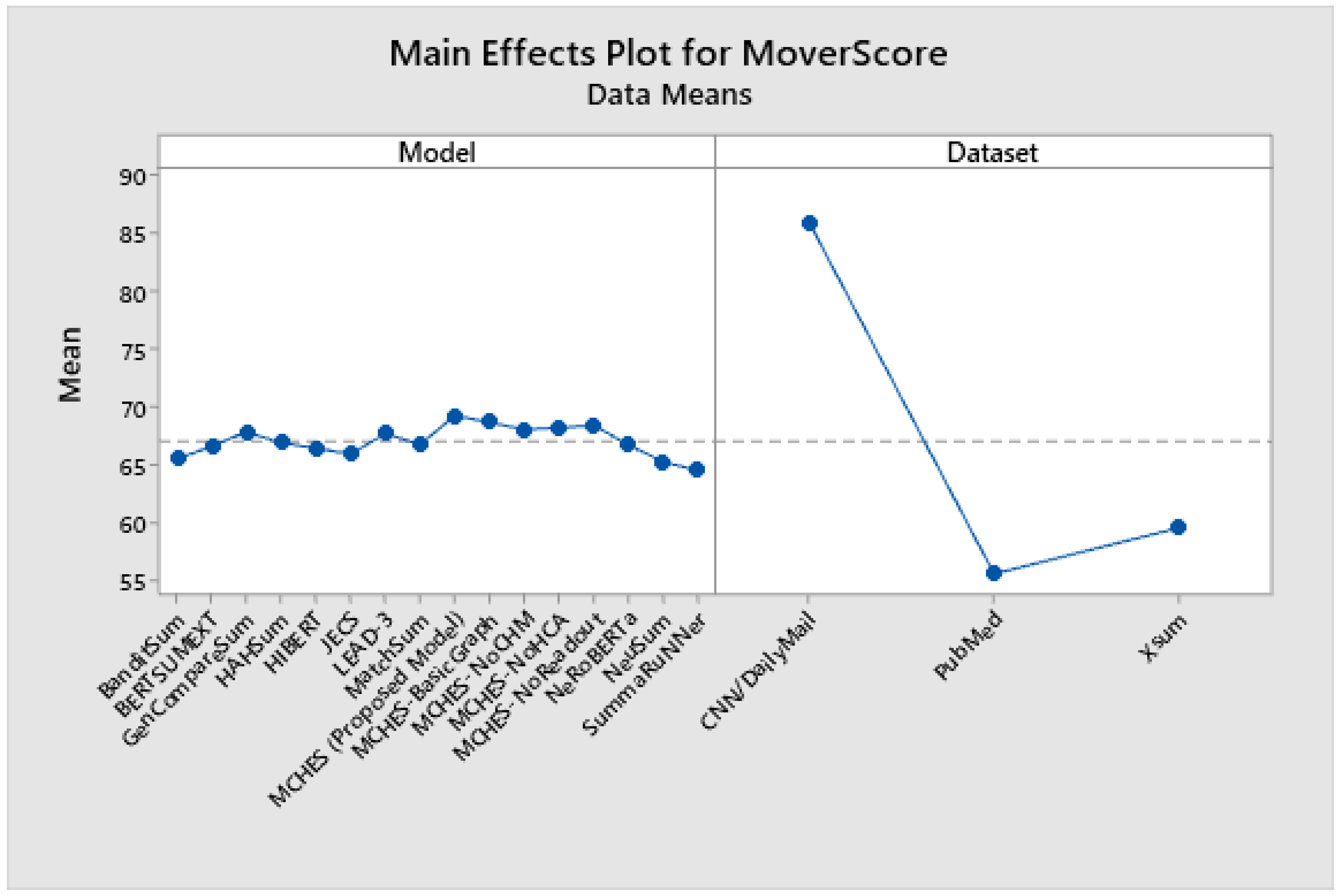

5.6. Experimental Results

5.7. Manual Evaluation

- Coherence: how logically connected and consistent the summary is.

- Readability: how easy it is to read and understand the summary.

- Informativeness: how well the summary captures the essential information from the original document.

- Relevance: the relevance of the content included in the summary to the main topics of the original document.

6. Discussion

- Outstanding performance of MCHES:

- −

- MCHES outperforms the baseline models on all datasets, indicating its effective capture of semantic content and structure.

- −

- The substantial lead in MoverScore on the PubMed dataset suggests pronounced strength in processing technical and domain-specific texts.

- Significance of MCHES components:

- −

- The minimal performance drop for the MCHES-NoCHM and MCHES-NoHCA variants suggests a strong base model that is enhanced, rather than defined, by its individual components.

- −

- The MCHES-NoReadout variant, despite the absence of the read-out strategy, maintains competitive scores, underlining the robustness of the attention mechanisms within the model.

- Performance consistency across datasets:

- −

- MCHES exhibits consistent performance across different datasets, reinforcing the model’s adaptability and generalizability.

- −

- The consistently high performance of MCHES-BasicGraph, particularly on the Xsum dataset, challenges the assumption that model complexity is a prerequisite for higher semantic alignment.

- Implications for future research:

- −

- The results prompt a re-evaluation of component contributions in complex models, suggesting potential areas for simplification without significant performance losses.

- −

- Future research might explore the trade-off between model complexity and performance, especially in the context of domain-specific summarization tasks.

7. Limitations

- Computational complexity: The construction and processing of hypergraphs, especially with multiple types of hyperedges (semantic, narrative, and discourse), can be computationally intensive. This complexity might limit the scalability of MCHES for very large datasets or real-time applications.

- Training data requirements: Like many advanced NLP models, MCHES requires a substantial number of training data to perform effectively. The requirement for large annotated datasets can be a barrier, particularly in specialized domains where such data may not be readily available.

- Domain specificity: While MCHES has shown superior performance across various datasets, the model’s effectiveness might vary when applied to domain-specific texts not represented in the training data. Adaptation to new domains may require additional fine tuning.

- Interpretability: The complexity of the hypergraph-based model and the dual-level attention mechanism may reduce the interpretability of the summarization process. Users might find it challenging to understand how specific sentences are selected for inclusion in the summary.

- Resource-intensive nature: The model’s requirements for computational resources, including memory and processing power, are higher compared with simpler models. This could be a limiting factor for deployment in resource-constrained environments.

8. Conclusions

- The MCHES framework significantly outperforms baseline models in terms of ROUGE scores and BERTScore, affirming its effectiveness in capturing salient information and maintaining the semantic integrity of the original texts.

- The ablation study revealed the importance of each component in the MCHES framework, illustrating their synergistic effect on the model’s overall performance.

- Despite the complexity of the summarization task, particularly within specialized domains such as the biomedical literature, MCHES maintained a consistent level of high performance, underscoring its robustness and versatility.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Liu, Y.; Lapata, M. Text summarization with pretrained encoders. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 3730–3740. Available online: https://aclanthology.org/D19-1387 (accessed on 1 April 2024).

- Moratanch, N.; Chitrakala, S. A survey on extractive text summarization. In Proceedings of the 2017 International Conference on Computer, Communication and Signal Processing (ICCCSP), IEEE, Chennai, India, 10–11 January 2017. [Google Scholar]

- Gupta, V.; Lehal, G.S. A survey of text summarization extractive techniques. J. Emerg. Technol. Web Intell. 2010, 2, 258–268. [Google Scholar] [CrossRef]

- El-Kassas, W.S.; Salama, C.R.; Rafea, A.A.; Mohamed, H.K. Automatic text summarization: A comprehensive survey. Expert Syst. Appl. 2021, 165, 113679. [Google Scholar] [CrossRef]

- Mao, R.; Chen, G.; Zhang, X.; Guerin, F.; Cambria, E. Gpteval: A survey on assessments of ChatGPT and GPT-4. arXiv 2023, arXiv:2308.12488. [Google Scholar]

- Yenduri, G.; Ramalingam, M.; Selvi, G.C.; Supriya, Y.; Srivastava, G.; Maddikunta, P.K.R.; Depti, R.G.; Rutvij, H.J.; Prabadevi, B.; Wang, W.; et al. GPT (Generative Pre-trained Transformer)–A Comprehensive Review on Enabling Technologies, Potential Applications, Emerging Challenges, and Future Directions. arXiv 2023, arXiv:2305.10435. [Google Scholar] [CrossRef]

- Kalyan, K.S. A survey of GPT-3 family large language models including ChatGPT and GPT-4. Nat. Lang. Process. J. 2023, 6, 100048. [Google Scholar] [CrossRef]

- Onan, A.; Balbal, K.F. Improving Turkish text sentiment classification through task-specific and universal transformations: An ensemble data augmentation approach. IEEE Access 2024, 12, 4413–4458. [Google Scholar] [CrossRef]

- Nasution, A.H.; Onan, A. ChatGPT Label: Comparing the Quality of Human-Generated and LLM-Generated Annotations in Low-resource Language NLP Tasks. IEEE Access 2024, 12, 71876–71900. [Google Scholar] [CrossRef]

- Yadav, A.K.; Ranvijay; Yadav, R.S.; Maurya, A.K. State-of-the-art approach to extractive text summarization: A comprehensive review. Multimed. Tools Appl. 2023, 82, 29135–29197. [Google Scholar] [CrossRef]

- Jin, H.; Zhang, Y.; Meng, D.; Wang, J.; Tan, J. A Comprehensive Survey on Process-Oriented Automatic Text Summarization with Exploration of LLM-Based Methods. arXiv 2024, arXiv:2403.02901. [Google Scholar]

- Van Lierde, H.; Chow, T.W. Query-oriented text summarization based on hypergraph transversals. Inf. Process. Manag. 2019, 56, 1317–1338. [Google Scholar] [CrossRef]

- Wang, W.; Wei, F.; Li, W.; Li, S. Hypersum: Hypergraph based semi-supervised sentence ranking for query-oriented summarization. In Proceedings of the 18th ACM Conference on Information and Knowledge Management, Hong Kong, China, 2–6 November 2009; pp. 1855–1858. [Google Scholar]

- Zhang, H.; Liu, X.; Zhang, J. Hegel: Hypergraph transformer for long document summarization. arXiv 2022, arXiv:2210.04126. [Google Scholar]

- Onan, A. GTR-GA: Harnessing the power of graph-based neural networks and genetic algorithms for text augmentation. Expert Syst. Appl. 2023, 232, 120908. [Google Scholar] [CrossRef]

- Onan, A. Hierarchical graph-based text classification framework with contextual node embedding and BERT-based dynamic fusion. J. King Saud-Univ.-Comput. Inf. Sci. 2023, 35, 101610. [Google Scholar] [CrossRef]

- Onan, A. SRL-ACO: A text augmentation framework based on semantic role labeling and ant colony optimization. J. King Saud-Univ.-Comput. Inf. Sci. 2023, 35, 101611. [Google Scholar] [CrossRef]

- Gulati, V.; Kumar, D.; Popescu, D.E.; Hemanth, J.D. Extractive article summarization using integrated TextRank and BM25+ algorithm. Electronics 2023, 12, 372. [Google Scholar] [CrossRef]

- Yadav, J.; Meena, Y.K. Use of fuzzy logic and WordNet for improving performance of extractive automatic text summarization. In Proceedings of the 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI), IEEE, Jaipur, India, 21–24 September 2016; pp. 2071–2077. [Google Scholar] [CrossRef]

- Kumar, A.; Sharma, A.; Nayyar, A. Fuzzy logic-based hybrid model for automatic extractive text summarization. In Proceedings of the 2020 5th International Conference on Intelligent Information Technology, Hanoi, Vietnam, 19–22 February 2020; pp. 7–15. [Google Scholar] [CrossRef]

- Grail, Q.; Perez, J.; Gaussier, E. Globalizing BERT-based transformer architectures for long document summarization. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, Association for Computational Linguistics, Virtual Event, 19–23 April 2021; pp. 1792–1810. Available online: https://aclanthology.org/2021.eacl-main.154 (accessed on 1 April 2024).

- Bharathi Mohan, G.; Prasanna Kumar, R.; Parathasarathy, S.; Aravind, S.; Hanish, K.B.; Pavithria, G. Text summarization for big data analytics: A comprehensive review of GPT-2 and BERT approaches. In Data Analytics for Internet of Things Infrastructure; Springer: Berlin/Heidelberg, Germany, 2023; pp. 247–264. [Google Scholar]

- Mallick, C.; Das, A.K.; Dutta, M.; Das, A.K.; Sarkar, A. Graph-based text summarization using modified TextRank. In Soft Computing in Data Analytics: Proceedings of International Conference on SCDA 2018; Springer: Singapore, 2019; pp. 137–146. [Google Scholar]

- Erkan, G.; Radev, D.R. LexRank: Graph-based lexical centrality as salience in text summarization. J. Artif. Intell. Res. 2004, 22, 457–479. [Google Scholar] [CrossRef]

- El-Kassas, W.S.; Salama, C.R.; Rafea, A.A.; Mohamed, H.K. EdgeSumm: Graph-based framework for automatic text summarization. Inf. Process. Manag. 2020, 57, 102264. [Google Scholar] [CrossRef]

- Belwal, R.C.; Rai, S.; Gupta, A. A new graph-based extractive text summarization using keywords or topic modeling. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 8975–8990. [Google Scholar] [CrossRef]

- Fatima, Q.; Cenek, M. New graph-based text summarization method. In Proceedings of the 2015 IEEE Pacific Rim Conference on Communications, Computers and Signal Processing (PACRIM), IEEE, Victoria, BC, Canada, 24–26 August 2015; pp. 396–401. [Google Scholar]

- Suleiman, D.; Awajan, A. Deep learning based abstractive text summarization: Approaches, datasets, evaluation measures, and challenges. Math. Probl. Eng. 2020, 2020, 9365340. [Google Scholar] [CrossRef]

- Joshi, A.; Fidalgo, E.; Alegre, E.; de León, U. Deep learning based text summarization: Approaches, databases and evaluation measures. In Proceedings of the International Conference of Applications of Intelligent Systems, Las Palmas de Gran Canaria, Spain, 10–12 January 2018. [Google Scholar]

- Song, S.; Huang, H.; Ruan, T. Abstractive text summarization using LSTM-CNN based deep learning. Multimed. Tools Appl. 2019, 78, 857–875. [Google Scholar] [CrossRef]

- Zhang, M.; Zhou, G.; Yu, W.; Huang, N.; Liu, W. A comprehensive survey of abstractive text summarization based on deep learning. Comput. Intell. Neurosci. 2022, 2022, 7132226. [Google Scholar] [CrossRef] [PubMed]

- Yu, Z.; Yu, J.; Fan, J.; Tao, D. Multi-modal factorized bilinear pooling with co-attention learning for visual question answering. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1821–1830. [Google Scholar]

- Ji, G.; Liu, K.; He, S.; Zhao, J. Distant supervision for relation extraction with sentence-level attention and entity descriptions. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 1. [Google Scholar]

- Lin, C.Y.; Och, F.J. Looking for a few good metrics: ROUGE and its evaluation. In Proceedings of the Ntcir Workshop, Tokyo, Japan, 2–4 June 2004. [Google Scholar]

- Barbella, M.; Tortora, G. Rouge Metric Evaluation for Text Summarization Techniques; SSRN 4120317; Elsevier: Rochester, NY, USA, 2022. [Google Scholar]

- Hasan, T.; Bhattacharjee, A.; Islam, M.S.; Samin, K.; Li, Y.F.; Kang, Y.B.; Rahman, S.M.; Shahriyar, R. XL-sum: Large-scale multilingual abstractive summarization for 44 languages. arXiv 2021, arXiv:2106.13822. [Google Scholar]

- Asif, M.H.; Yaseen, A.U. Comparative Evaluation of Text Similarity Matrices for Enhanced Abstractive Summarization on CNN/Dailymail Corpus. J. Comput. Biomed. Inform. 2023, 6, 208–215. [Google Scholar]

- Gupta, V.; Bharti, P.; Nokhiz, P.; Karnick, H. SumPubMed: Summarization dataset of PubMed scientific articles. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing: Student Research Workshop, Virtual Event, 1–6 August 2021; pp. 292–303. [Google Scholar]

- Colombo, P.; Staerman, G.; Clavel, C.; Piantanida, P. Automatic text evaluation through the lens of Wasserstein barycenters. arXiv 2021, arXiv:2108.12463. [Google Scholar]

- Zhao, W.; Peyrard, M.; Liu, F.; Gao, Y.; Meyer, C.M.; Eger, S. MoverScore: Text generation evaluating with contextualized embeddings and earth mover distance. arXiv 2019, arXiv:1909.02622. [Google Scholar]

- Narayan, S.; Cohen, S.B.; Lapata, M. Don’t give me the details, just the summary! Topic-aware convolutional neural networks for extreme summarization. arXiv 2018, arXiv:1808.08745. [Google Scholar]

- Zhou, Q.; Yang, N.; Wei, F.; Huang, S.; Zhou, M.; Zhao, T. Neural document summarization by jointly learning to score and select sentences. arXiv 2018, arXiv:1807.02305. [Google Scholar]

- Dong, Y.; Shen, Y.; Crawford, E.; van Hoof, H.; Cheung, J.C.K. BanditSum: Extractive summarization as a contextual bandit. arXiv 2018, arXiv:1809.09672. [Google Scholar]

- Xu, J.; Durrett, G. Neural extractive text summarization with syntactic compression. arXiv 2019, arXiv:1902.00863. [Google Scholar]

- Zhang, X.; Wei, F.; Zhou, M. HIBERT: Document level pre-training of hierarchical bidirectional transformers for document summarization. arXiv 2019, arXiv:1905.06566. [Google Scholar]

- Liu, Y.; Lapata, M. Text summarization with pretrained encoders. arXiv 2019, arXiv:1908.08345. [Google Scholar]

- Kwon, J.; Kobayashi, N.; Kamigaito, H.; Okumura, M. Considering nested tree structure in sentence extractive summarization with pre-trained transformer. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Virtual and Punta Cana, Dominican Republic, 7–11 November 2021; pp. 4039–4044. [Google Scholar]

- Zhong, M.; Liu, P.; Chen, Y.; Wang, D.; Qiu, X.; Huang, X. Extractive summarization as text matching. arXiv 2020, arXiv:2004.08795. [Google Scholar]

- Liu, Y.; Zhang, J.G.; Wan, Y.; Xia, C.; He, L.; Yu, P.S. HETFORMER: Heterogeneous transformer with sparse attention for long-text extractive summarization. arXiv 2021, arXiv:2110.06388. [Google Scholar]

- Bishop, J.; Xie, Q.; Ananiadou, S. GenCompareSum: A hybrid unsupervised summarization method using salience. In Proceedings of the 21st Workshop on Biomedical Language Processing, Dublin, Ireland, 26 May 2022; pp. 220–240. [Google Scholar]

| Reference | Technology Used | Performance/Advantages | Disadvantages |

|---|---|---|---|

| Liu and Lapata (2019) [1] | BERT-based Encoder–Decoder | High performance in capturing contextual nuances; improved ROUGE scores | Computationally expensive; requires large datasets for training |

| Moratanch and Chitrakala (2017) [2] | Statistical and linguistic features | Simple implementation; effective for basic tasks | Fail to capture deep semantic relationships; limited contextual understanding |

| Gupta and Lehal (2010) [3] | TF-IDF, frequency-based methods | Easy to implement; interpretable results | Do not capture document structure; poor performance on long texts |

| El-Kassas et al. (2021) [4] | Deep learning models (RNN, CNN, and Transformer) | Superior performance on complex texts; high accuracy | High computational cost; require extensive training data |

| Yadav et al. (2023) [10] | Graph-based models (TextRank and LexRank) | Capture relational information among sentences; improved coherence | May struggle with very large documents; computationally intensive |

| Van Lierde and Chow (2019) [12] | Hypergraph-based summarization | Flexible representation of relationships; better integration of document context | Complexity in construction and processing of hypergraphs; requires sophisticated algorithms |

| Dataset | Domain | # Pairs | Avg. Doc Tokens | Avg. Sum Tokens |

|---|---|---|---|---|

| XSum | News | 226,711 | 431 | 23 |

| CNN/DailyMail | News | 311,971 | 781 | 56 |

| PubMed | Scientific | 93,207 | 3011 | 203 |

| Model | R-1 | R-2 | R-L |

|---|---|---|---|

| LEAD-3 | 39.447 | 20.086 | 38.208 |

| SummaRuNNer | 34.862 | 17.258 | 33.516 |

| NeuSum | 37.573 | 18.106 | 33.968 |

| BanditSum | 37.898 | 18.287 | 33.986 |

| JECS | 37.956 | 19.051 | 35.499 |

| HIBERT | 38.378 | 20.029 | 35.806 |

| BERTSUMEXT | 39.638 | 20.161 | 38.269 |

| NeRoBERTa | 40.228 | 21.101 | 38.836 |

| MatchSum | 40.584 | 21.512 | 39.857 |

| HAHSum | 40.929 | 21.546 | 40.043 |

| GenCompareSum | 41.094 | 21.568 | 40.311 |

| MCHES (proposed model) | 44.756 | 24.963 | 42.477 |

| MCHES-NoCHM | 43.847 | 23.491 | 41.601 |

| MCHES-NoHCA | 42.236 | 21.629 | 40.616 |

| MCHES-NoReadout | 42.709 | 22.295 | 41.271 |

| MCHES-BasicGraph | 43.599 | 23.394 | 41.376 |

| Model | R-1 | R-2 | R-L |

|---|---|---|---|

| LEAD-3 | 25,512 | 7355 | 16,069 |

| SummaRuNNer | 23,194 | 5626 | 14,867 |

| NeuSum | 24,194 | 5699 | 15,056 |

| BanditSum | 24,577 | 6416 | 15,150 |

| JECS | 25,005 | 6709 | 15,332 |

| HIBERT | 25,145 | 6728 | 15,582 |

| BERTSUMEXT | 25,145 | 6747 | 15,631 |

| NeRoBERTa | 25,225 | 7011 | 15,723 |

| MatchSum | 25,431 | 7022 | 15,893 |

| HAHSum | 25,450 | 7239 | 15,901 |

| GenCompareSum | 25,593 | 7552 | 16,199 |

| MCHES (proposed model) | 26,422 | 8150 | 17,318 |

| MCHES-NoCHM | 25,775 | 7700 | 16,498 |

| MCHES-NoHCA | 26,059 | 7771 | 16,758 |

| MCHES-NoReadout | 26,174 | 7938 | 17,311 |

| MCHES-BasicGraph | 25,605 | 7576 | 16,260 |

| Model | R-1 | R-2 | R-L |

|---|---|---|---|

| LEAD-3 | 39,554 | 12,822 | 33,352 |

| SummaRuNNer | 37,949 | 12,759 | 32,537 |

| NeuSum | 40,496 | 13,909 | 33,353 |

| BanditSum | 40,601 | 13,987 | 33,983 |

| JECS | 41,120 | 14,095 | 34,129 |

| HIBERT | 41,152 | 14,410 | 34,186 |

| BERTSUMEXT | 41,161 | 14,964 | 34,207 |

| NeRoBERTa | 41,234 | 15,049 | 34,881 |

| MatchSum | 41,527 | 15,214 | 34,955 |

| HAHSum | 41,672 | 15,270 | 34,963 |

| GenCompareSum | 41,892 | 15,345 | 35,222 |

| MCHES (proposed model) | 44,321 | 19,129 | 37,505 |

| MCHES-NoCHM | 41,938 | 15,710 | 35,415 |

| MCHES-NoHCA | 42,148 | 16,605 | 35,683 |

| MCHES-NoReadout | 42,294 | 16,842 | 36,137 |

| MCHES-BasicGraph | 42,533 | 17,873 | 36,968 |

| Model | CNN/DailyMail | Xsum | PubMed |

|---|---|---|---|

| LEAD-3 | 58,261 | 87,199 | 86,073 |

| SummaRuNNer | 56,060 | 84,004 | 81,392 |

| NeuSum | 56,576 | 84,882 | 82,460 |

| BanditSum | 56,830 | 85,567 | 82,784 |

| JECS | 56,952 | 86,273 | 83,453 |

| HIBERT | 57,421 | 86,491 | 83,570 |

| BERTSUMEXT | 57,880 | 86,581 | 84,281 |

| NeRoBERTa | 57,999 | 86,766 | 84,684 |

| MatchSum | 58,130 | 87,036 | 85,566 |

| HAHSum | 58,130 | 87,077 | 85,603 |

| GenCompareSum | 58,661 | 87,257 | 86,180 |

| MCHES (proposed model) | 59,995 | 88,424 | 89,285 |

| MCHES-NoCHM | 58,862 | 87,299 | 87,121 |

| MCHES-NoHCA | 59,094 | 87,784 | 87,342 |

| MCHES-NoReadout | 59,153 | 87,833 | 87,980 |

| MCHES-BasicGraph | 59,260 | 88,193 | 88,430 |

| Model | CNN/DailyMail | Xsum | PubMed |

|---|---|---|---|

| LEAD-3 | 86,651 | 59,831 | 56,532 |

| SummaRuNNer | 83,318 | 58,486 | 51,685 |

| NeuSum | 84,648 | 58,577 | 52,471 |

| BanditSum | 84,878 | 58,757 | 52,739 |

| JECS | 85,281 | 58,961 | 53,570 |

| HIBERT | 85,396 | 59,286 | 54,607 |

| BERTSUMEXT | 85,397 | 59,429 | 54,971 |

| NeRoBERTa | 85,421 | 59,531 | 55,051 |

| MatchSum | 85,574 | 59,618 | 55,137 |

| HAHSum | 85,698 | 59,783 | 55,245 |

| GenCompareSum | 86,676 | 59,835 | 56,975 |

| MCHES (proposed model) | 87,432 | 60,549 | 59,739 |

| MCHES-NoCHM | 86,879 | 59,974 | 57,232 |

| MCHES-NoHCA | 86,882 | 60,101 | 57,643 |

| MCHES-NoReadout | 87,024 | 60,120 | 58,016 |

| MCHES-BasicGraph | 87,342 | 60,365 | 58,269 |

| Dataset | Algorithm | R-1 | R-2 | R-L | BERTScore |

|---|---|---|---|---|---|

| CNN/DailyMail | TF-IDF | 30.450 | 15.210 | 28.370 | 50.120 |

| MCHES (proposed) | 44.756 | 24.963 | 42.477 | 59.995 | |

| XSum | TF-IDF | 23.320 | 6.540 | 14.890 | 48.750 |

| MCHES (proposed) | 26.422 | 8.150 | 17.318 | 88.424 | |

| PubMed | TF-IDF | 32.140 | 16.890 | 30.120 | 52.450 |

| MCHES (proposed) | 44.321 | 19.129 | 37.505 | 89.285 |

| Criterion | HAHSum | GenCompareSum | MCHES |

|---|---|---|---|

| Coherence | 3.5 | 3.8 | 4.2 |

| Readability | 3.7 | 4.0 | 4.3 |

| Informativeness | 3.4 | 3.6 | 4.1 |

| Relevance | 3.6 | 3.7 | 4.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Onan, A.; Alhumyani, H. Contextual Hypergraph Networks for Enhanced Extractive Summarization: Introducing Multi-Element Contextual Hypergraph Extractive Summarizer (MCHES). Appl. Sci. 2024, 14, 4671. https://doi.org/10.3390/app14114671

Onan A, Alhumyani H. Contextual Hypergraph Networks for Enhanced Extractive Summarization: Introducing Multi-Element Contextual Hypergraph Extractive Summarizer (MCHES). Applied Sciences. 2024; 14(11):4671. https://doi.org/10.3390/app14114671

Chicago/Turabian StyleOnan, Aytuğ, and Hesham Alhumyani. 2024. "Contextual Hypergraph Networks for Enhanced Extractive Summarization: Introducing Multi-Element Contextual Hypergraph Extractive Summarizer (MCHES)" Applied Sciences 14, no. 11: 4671. https://doi.org/10.3390/app14114671

APA StyleOnan, A., & Alhumyani, H. (2024). Contextual Hypergraph Networks for Enhanced Extractive Summarization: Introducing Multi-Element Contextual Hypergraph Extractive Summarizer (MCHES). Applied Sciences, 14(11), 4671. https://doi.org/10.3390/app14114671