1. Introduction

Time-of-flight (ToF) cameras have recently been adopted in a variety of industrial applications. Because they actively project laser light, ToF cameras depend less on ambient lighting and deliver high-performance 3D measurements, which makes them important in fields such as industrial robots, autonomous vehicles, and industrial measuring equipment. However, ToF cameras still have room for improvement. Environmental factors such as the internal temperature of the camera, ambient temperature, and humidity can significantly degrade the accuracy of distance measurements. In addition, accuracy varies significantly with the distance to the target, so correction techniques are required for practical use [1,2].

Various studies have been conducted to address these issues. Performance has been improved through enhancements to the sensors and lens structures within ToF cameras, advances in software, and better calibration algorithms. In particular, recent research has focused on improving ToF camera performance with deep learning, which has shown high performance in image processing and can be applied to ToF sensor data for correction and filtering [3]. As the application possibilities of ToF cameras in industrial robots, autonomous vehicles, and industrial measuring equipment have expanded, the demand for more efficient sensor technologies has increased.

Industrial robots have primarily performed uniform tasks on automated production lines, where they play a significant role in raising the productivity and efficiency of manufacturing plants. However, there is a rapid transition from industrial robots to mobile robots, driven by productivity, efficiency, safety, and labor savings. Unlike industrial robots, mobile robots are designed to work alongside humans and prioritize safety and flexibility over repeatability. As their use expands, however, mobile robots are also expected to provide high positional repeatability and precise manipulator control. Industrial robots guarantee highly accurate position control, typically with a repeatability of less than 0.01 mm. In contrast, mobile robots, which operate in environments shared with humans, usually have a repeatability in the range of 20 mm to 50 mm [4].

This lack of positional repeatability and accuracy prevents mobile robots and mobile manipulators from being applied in some cases. To address this issue, this paper proposes a new method for improving the pixel-level sensing accuracy of ToF cameras by obtaining accurate ground truth distance values for depth data acquired with ToF cameras in real-world environments. To obtain these values, a simulation environment was constructed with the same ToF sensor viewpoint as the real environment, and the simulation was synchronized with the real environment to acquire depth values for 3D parts. The accuracy of the ToF camera’s depth data was then shown to be improvable through AI learning that uses the accurate depth values obtained from the simulation [3].

Using the method proposed in this paper, the accuracy of the ToF camera’s depth data was improved. Based on this, it was possible to enhance inspection accuracy in automotive manufacturing processes by replacing 2D vision systems with 3D vision inspections. Furthermore, even when positional accuracy errors occur in mobile robots, the ToF depth data can be utilized to accurately correct the measurement positions of mobile manipulators. The proposed ToF camera depth accuracy improvement method provides a foundation for achieving higher depth accuracy through additional environmental information and learning, contributing to the use of sensors for more precise and meticulous tasks in industrial settings.

2. Related Work

Time-of-flight (ToF) cameras provide high-resolution depth information at a low cost, making them widely used in various applications and robotic technologies. They measure the time it takes for light to travel to an object and back and calculate the distance from this time of flight. However, these cameras are sensitive to environmental factors such as temperature changes, dust, and distance variability, which can decrease the accuracy of the depth information. Various studies are underway to address these issues. Frangez’s research evaluated the impact of environmental factors on the sensors and laser depth measurements of ToF cameras and, based on this evaluation, aimed to eliminate the key environmental factors affecting ToF camera accuracy. However, the tests were conducted only in temperature-controlled laboratory environments, so they do not fully reflect the complex interactions of other variables [5]. Verdie’s research developed the Cromo algorithm to enhance depth accuracy by minimizing optical distortion and sensor noise, maintaining ToF camera performance under various lighting conditions. This method proved effective under highly controlled laboratory conditions but did not sufficiently reflect the variability of real environments [6]. Reitmann’s research used specially coated lenses and software algorithms to reduce interactions between laser beams and dust. This approach improved ToF performance under dusty conditions but did not address other environmental factors, making it inadequate for use in actual industrial settings [7].
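For reference, the time-of-flight principle described at the start of this section can be written as follows. The symbols $d$ (distance to the target), $c$ (speed of light), and $\Delta t$ (measured round-trip time) are introduced here for illustration and are not the paper's own notation.

```latex
d = \frac{c\,\Delta t}{2}, \qquad c \approx 2.998 \times 10^{8}\ \mathrm{m/s}
```

Under this relation, a depth difference of 1 mm corresponds to a round-trip time difference of roughly 6.7 ps, which gives a sense of why millimeter-level accuracy is demanding for this class of sensor.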

Given the limitations in improving depth accuracy through practical ToF performance enhancements, recent research has focused on using RGB-D technology to simultaneously collect RGB image information and depth information, combining color and spatial information to enhance depth accuracy. This technology aims to more accurately recognize, segment, and classify captured objects rather than directly improving ToF depth accuracy. For instance, RGB-D technology allows for clearer identification of objects within an image, enabling more precise reconstruction of object depth [8,9].

Furthermore, research is being conducted on algorithms that reconstruct identified objects into 3D models using RGB-D sensors [10,11]. This technology can create accurate 3D object models even with imprecise RGB-D data and is particularly useful for reconstructing complex-shaped objects in 3D. This expands the applicability in fields such as digital content creation, virtual reality, and augmented reality.

However, these deep learning-based RGB-D technologies primarily focus on utilizing approximate depth information to identify objects in images. This approach may not be suitable for industrial environments that require precise depth measurements. Considering the characteristics of ToF cameras, their use is recommended only in environments where a certain margin of error in measured depth is acceptable. If the sensing accuracy of ToF cameras can be improved, it is expected that ToF sensors can be utilized in industrial settings that require precise depth data measurement.

The difficulty in improving the depth accuracy of ToF cameras lies in knowing the exact distance values of depth data measured by actual ToF cameras. While it is possible to measure depth accuracy using high-precision equipment in specific environments during the ToF camera manufacturing stage, improving the accuracy of ToF cameras, which are influenced by various environmental factors and measurement target components, requires knowing the exact distance values of depth data measured in actual usage environments. Additionally, to develop AI algorithms, which have been actively researched recently, accurate depth values of depth data measured by ToF cameras must be used as labeling values. However, obtaining such precise depth values is virtually impossible, which has hindered research on improving the pixel-level accuracy of ToF cameras.

This paper proposes a new method for obtaining the exact distance ground truth values of depth data acquired in real environments with ToF cameras to improve pixel-level sensing accuracy. The proposed method fully utilizes the construction of 3D models of products and simulation environments in the design stage during product development in industrial settings. To obtain the exact distance ground truth values of depth data acquired by ToF cameras used in actual environments, simulations were set up with the same ToF sensor viewpoints as the real environment, synchronizing the real and simulated environments to obtain depth values for 3D components. Minor errors occurring during this process were resolved using preprocessing algorithms. Furthermore, the accuracy of ToF camera depth was demonstrated to be improvable through AI learning using the accurate depth values obtained from simulations.

This paper introduces the process for acquiring the exact distance values necessary for improving the accuracy of ToF sensors and proposes development approaches for each step. Section 3 introduces the AI learning process for ToF sensors, explaining preprocessing methods and the acquisition of accurate labeling through simulations. Section 4 presents performance evaluations and application results in industrial settings using the proposed method. Section 5 and Section 6 discuss future research directions and conclusions, respectively.

3. High-Quality ToF Camera Data Acquisition Strategies

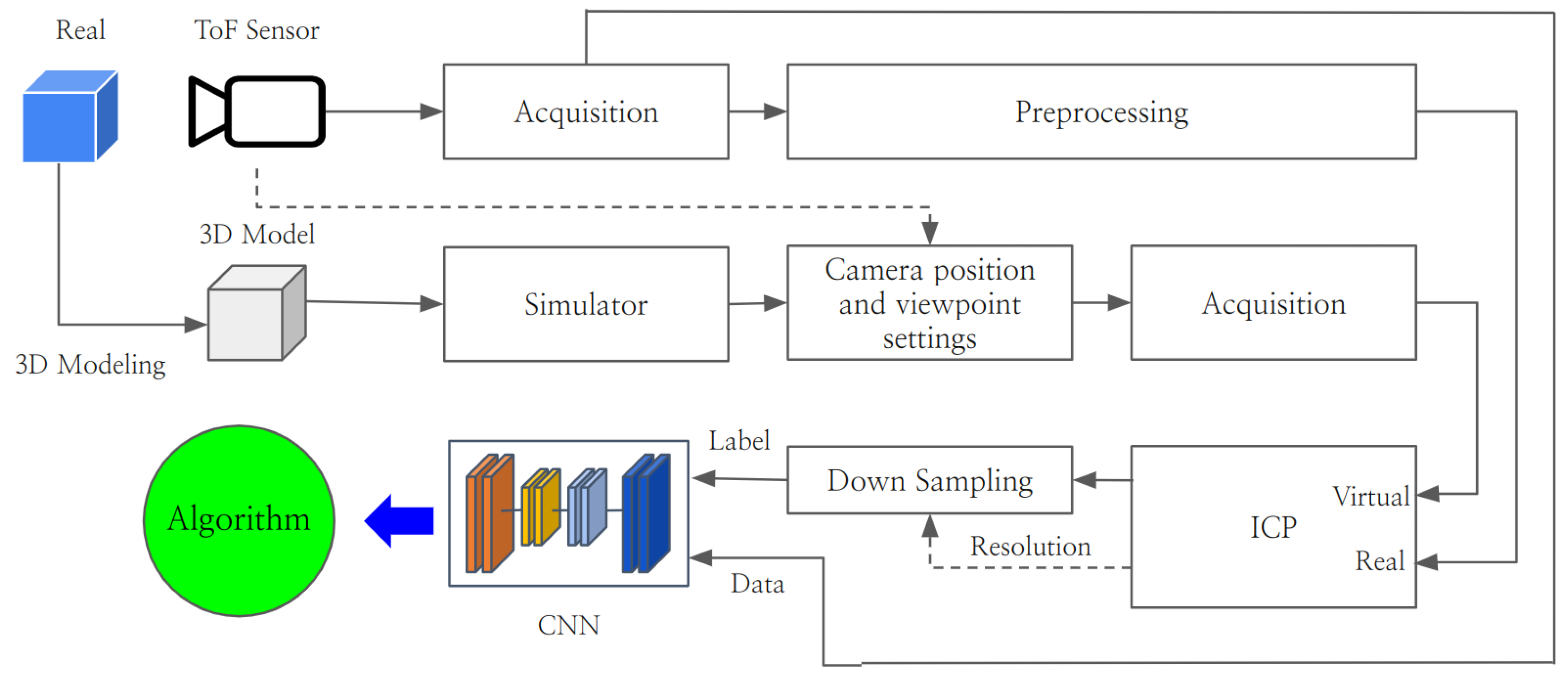

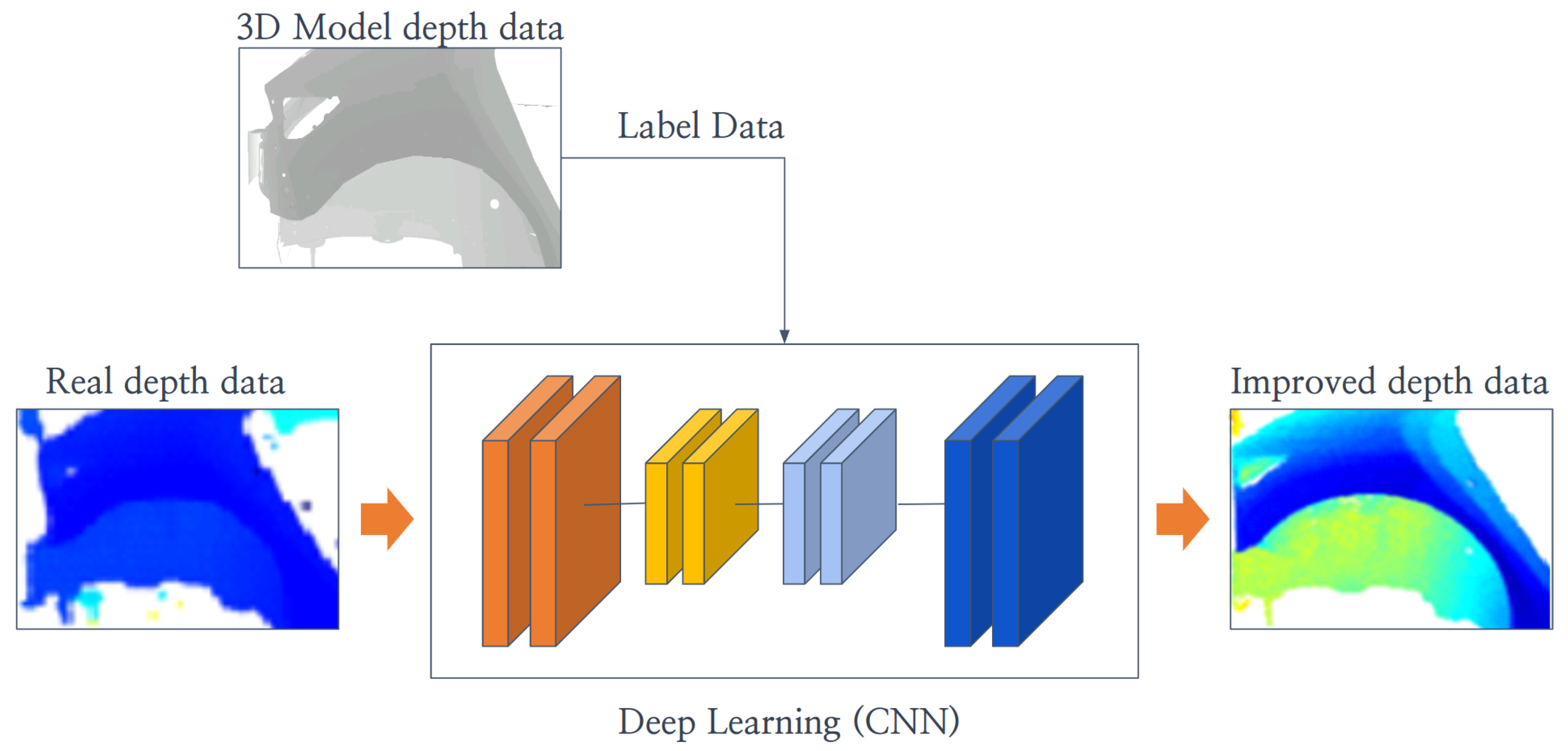

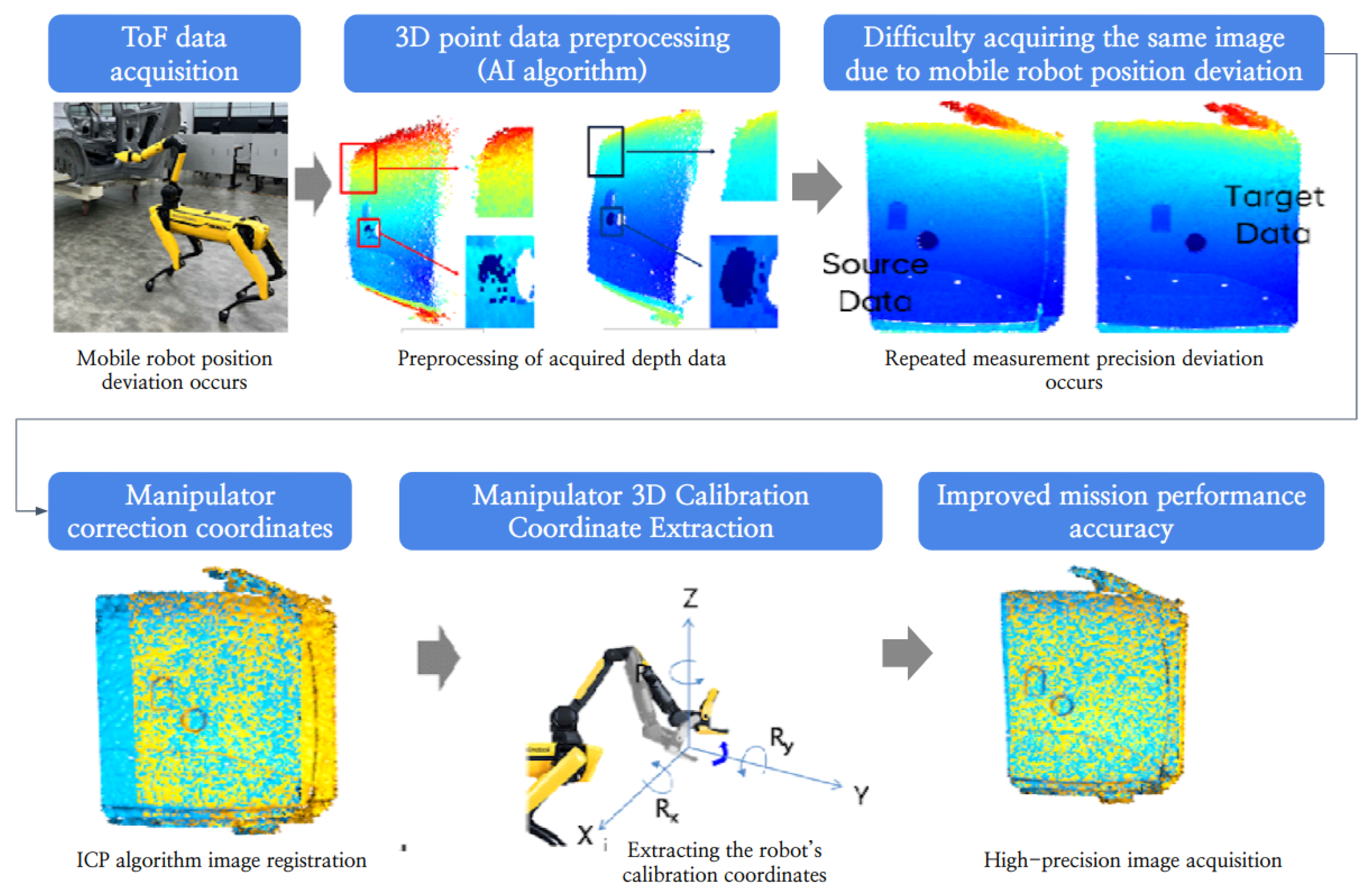

To improve the data acquisition accuracy of existing time-of-flight (ToF) cameras (Boston Dynamics, Waltham, MA, USA), deep learning algorithms have primarily been used to adjust sensor values based on the clustering of object shapes. However, this approach has concentrated on recognizing and differentiating the forms of general objects rather than on achieving the millimeter-level precision required in industrial settings. To obtain the more accurate and detailed depth information needed in industrial environments, deep learning algorithms that enhance the accuracy of the acquired sensor values must be developed. A practical method is to train on the depth of each ToF pixel obtained in the real environment, using the corresponding exact distance as the label, so that the accuracy of each pixel’s depth value improves. Such methods have not been attempted previously mainly because it is practically difficult to label the exact distance values of the pixels measured by ToF sensors (Boston Dynamics, Waltham, MA, USA). This paper proposes a method for acquiring precise millimeter-scale depth values of ToF sensor pixels for use as labels. A ToF sensor is simulated in a simulation environment that is set up exactly like the real environment using a 3D model, and the accurate depth values obtained there are used as labels, as shown in Figure 1. This approach takes advantage of the 3D models of parts and processes that already exist in industrial product development. For example, in automobile development, cars are produced based on the 3D models of parts created during the design and manufacturing processes. Furthermore, to automate the manufacturing process, 3D models and simulation environments of the production process and surrounding objects are established. By utilizing these existing industrial processes, precise millimeter-scale depth values of ToF sensor pixels can be easily labeled.

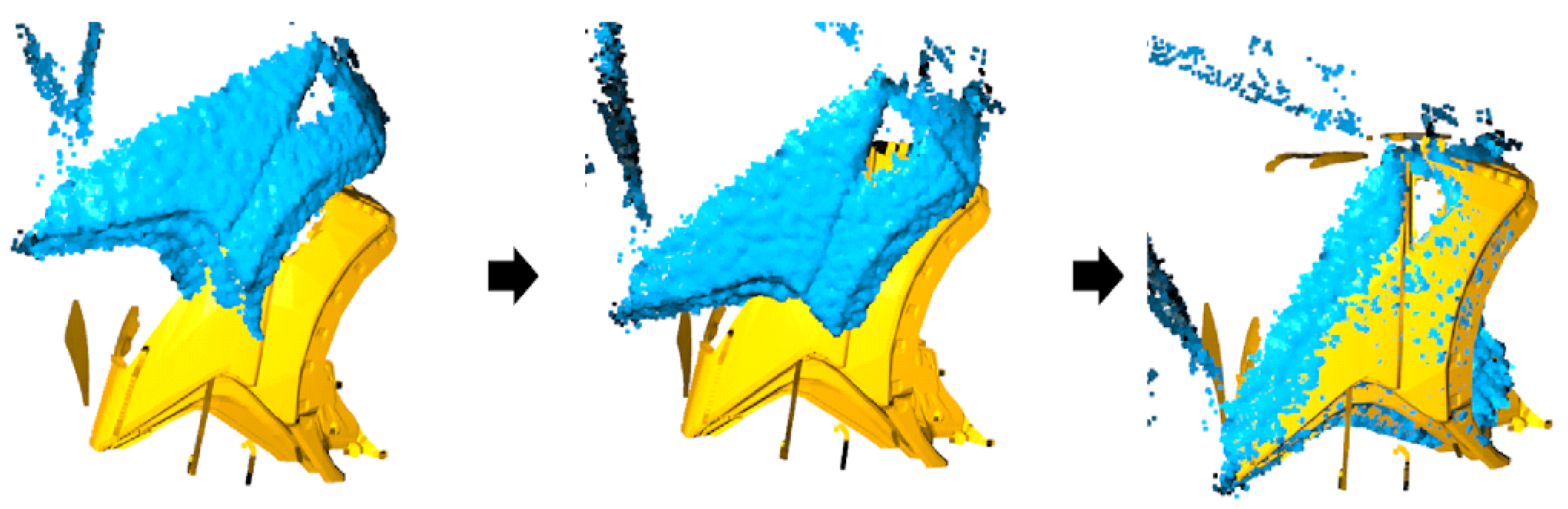

As shown in Figure 1, the entire process involves setting up a simulation environment using a 3D model identical to the actual product and simulating a ToF camera to acquire 3D depth information from the same location and viewpoint as in the real environment. Even with precise settings, discrepancies in acquisition position and viewpoint can occur. To resolve this issue, shape matching with the ICP algorithm is performed to align the data acquired in reality with the data acquired in simulation. However, noise from the ToF sensor can degrade the performance of the ICP algorithm, so the data must be preprocessed first. Additionally, to eliminate the resolution discrepancy between the high-resolution depth information obtained in the simulation and the 400 × 300 resolution of the ToF sensor, the simulated depth information is downsampled after the 3D model has been aligned using ICP. Finally, accurate labels for the depth information of the ToF pixels are extracted and used to train a deep learning algorithm, yielding an AI algorithm that enhances the accuracy of ToF sensing values.
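The shape-matching step can be illustrated with a minimal point-to-point ICP sketch in NumPy. This is not the authors' implementation: the function names `best_rigid_transform` and `icp`, the brute-force nearest-neighbour search, and the stopping tolerance are all assumptions chosen for a small, self-contained example.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t mapping src onto dst (Kabsch)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection solution.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(source, target, iterations=30, tol=1e-9):
    """Point-to-point ICP with brute-force matching (small clouds only)."""
    src = np.asarray(source, dtype=float).copy()
    target = np.asarray(target, dtype=float)
    R_total, t_total = np.eye(3), np.zeros(3)
    prev_err = np.inf
    for _ in range(iterations):
        # Match every source point to its closest target point.
        d2 = ((src[:, None, :] - target[None, :, :]) ** 2).sum(axis=-1)
        nearest = target[d2.argmin(axis=1)]
        R, t = best_rigid_transform(src, nearest)
        src = src @ R.T + t
        # Accumulate the overall transform applied to the original source.
        R_total, t_total = R @ R_total, R @ t_total + t
        err = np.sqrt(((src - nearest) ** 2).sum(axis=1)).mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total, src
```

In this sketch the real ToF point cloud would play the role of `source` and the simulated cloud the role of `target`. For clouds of realistic size, a k-d tree or an established point cloud library would be a more practical choice than the quadratic search used here.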

3.1. Integrated Preprocessing Techniques for Enhancing Depth Data Accuracy

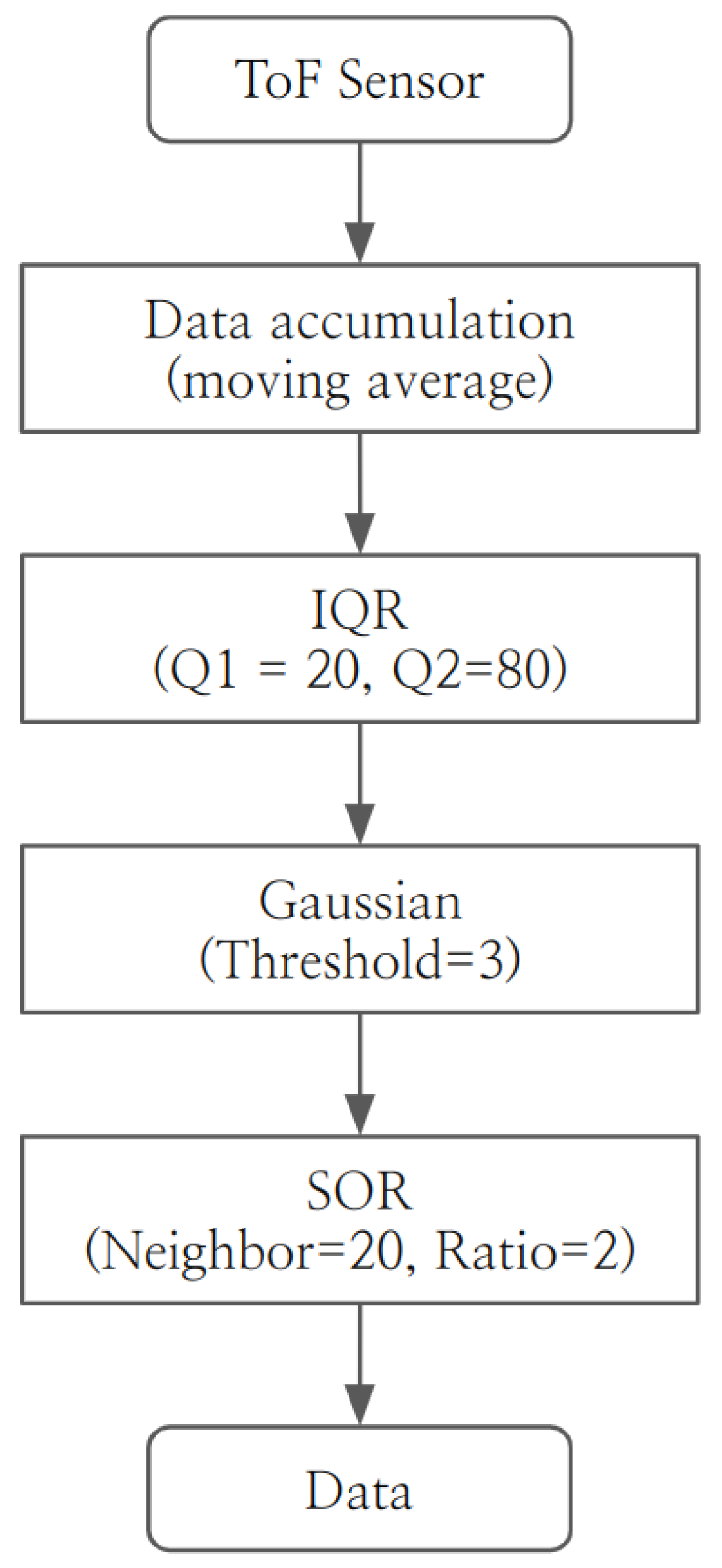

To match shapes between depth data acquired in the real environment and data acquired in the simulation environment, the noise and data loss of time-of-flight (ToF) sensors must first be handled by preprocessing. Because noise and missing pixel data occur frequently in ToF sensors, shape matching and alignment using unprocessed depth data is practically challenging. This paper proposes optimizing the preprocessing of ToF depth camera data by combining traditional methods into an integrated preprocessing algorithm that takes the characteristics of industrial sites into account, as shown in Figure 2. ToF sensors often experience noise and data loss in individual pixels because of the object being measured, the influence of light, and environmental changes. Contributing factors include instability of the light source, slight differences in illumination, and dust.

To prevent data omission and make the most of the acquired data, this study accumulates depth data over multiple captures and improves accuracy by averaging the accumulated values rather than simply summing them. When averaging, the captures in which a pixel's data were lost must be excluded from the count. There are two types of data loss: cases where no distance value can be obtained and cases where the sensor reports its maximum value due to saturation. Both types were filtered out during accumulation, and the conditional average of the accumulated data for each ToF sensor pixel was calculated with a moving average filter, as in Equation (1), to reduce data omission and improve accuracy. However, depth data obtained through accumulation may contain more noise. To resolve this, a normalization step is required that removes outliers and smooths the data.
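One possible reading of this conditional average is sketched below in NumPy. The encoding of lost samples (zero or NaN for "no return", a fixed `saturation_value` for saturation) and the function name `conditional_average` are assumptions made for illustration; the sensor's actual encoding may differ.

```python
import numpy as np

def conditional_average(frames, saturation_value):
    """Per-pixel mean over accumulated frames, ignoring lost samples.

    frames: array of shape (N, H, W) holding N depth captures.
    A sample is treated as lost if it is NaN, not positive (assumed
    "no return"), or at/above saturation_value (assumed saturation).
    Returns (mean_depth, valid_count); pixels with no valid sample are NaN.
    """
    frames = np.asarray(frames, dtype=float)
    valid = np.isfinite(frames) & (frames > 0) & (frames < saturation_value)
    valid_count = valid.sum(axis=0)
    depth_sum = np.where(valid, frames, 0.0).sum(axis=0)
    mean_depth = np.full(valid_count.shape, np.nan)
    # Divide only where at least one valid sample exists.
    np.divide(depth_sum, valid_count, out=mean_depth, where=valid_count > 0)
    return mean_depth, valid_count
```

Dividing by the number of valid samples rather than by the number of captures is what keeps lost samples from pulling the average toward zero or toward the saturation value.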

For this purpose, three main algorithms were used to preprocess the depth data:

- Interquartile Range (IQR): Represents the middle 50% range of the data, which is useful for identifying the distribution of data and outliers [12]. In this study, the first quartile (Q1) was set to 20% and the third quartile (Q3) to 80%, targeting the data between the lower 20% and upper 20% to eliminate noise.

- Gaussian Distribution: Uses the mean and standard deviation of the data to measure how far the data points are from the mean and to detect outliers [13]. In this research, data points that are more than three times the standard deviation from the mean were defined as outliers and considered as noise.

- Statistical Outliers Removal (SOR) Filter: Calculates the average distance among the K-nearest neighbors around each data point, and data points that are significantly farther from the average distance are considered outliers [14]. In this study, the number of neighbors was set to 20, and data points that are more than twice the standard deviation from the average distance were considered outliers. This filter is effective in smoothing the surface of 3D point clouds.
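The three filters can be sketched in NumPy as follows, using the thresholds stated above (20%/80% bounds, three standard deviations, 20 neighbors with a factor of two). The function names are hypothetical, the percentile filter is one interpretation of the modified quartile bounds, and the SOR variant uses a brute-force neighbour search that is only suitable for small point clouds.

```python
import numpy as np

def percentile_range_mask(values, lower=20.0, upper=80.0):
    """Keep samples lying between the lower and upper percentiles."""
    values = np.asarray(values, dtype=float)
    lo, hi = np.percentile(values, [lower, upper])
    return (values >= lo) & (values <= hi)

def sigma_mask(values, n_sigma=3.0):
    """Keep samples within n_sigma standard deviations of the mean."""
    values = np.asarray(values, dtype=float)
    return np.abs(values - values.mean()) <= n_sigma * values.std()

def sor_mask(points, k=20, std_ratio=2.0):
    """Statistical outlier removal on an (N, 3) point cloud.

    A point is kept if its mean distance to its k nearest neighbours is
    at most (global mean + std_ratio * global std) of that quantity.
    """
    points = np.asarray(points, dtype=float)
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    # After sorting, column 0 is each point's zero distance to itself.
    knn_mean = np.sort(dist, axis=1)[:, 1:k + 1].mean(axis=1)
    threshold = knn_mean.mean() + std_ratio * knn_mean.std()
    return knn_mean <= threshold
```

Each function returns a boolean mask, so the filters can be chained by combining masks or by indexing the data with one mask before applying the next.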

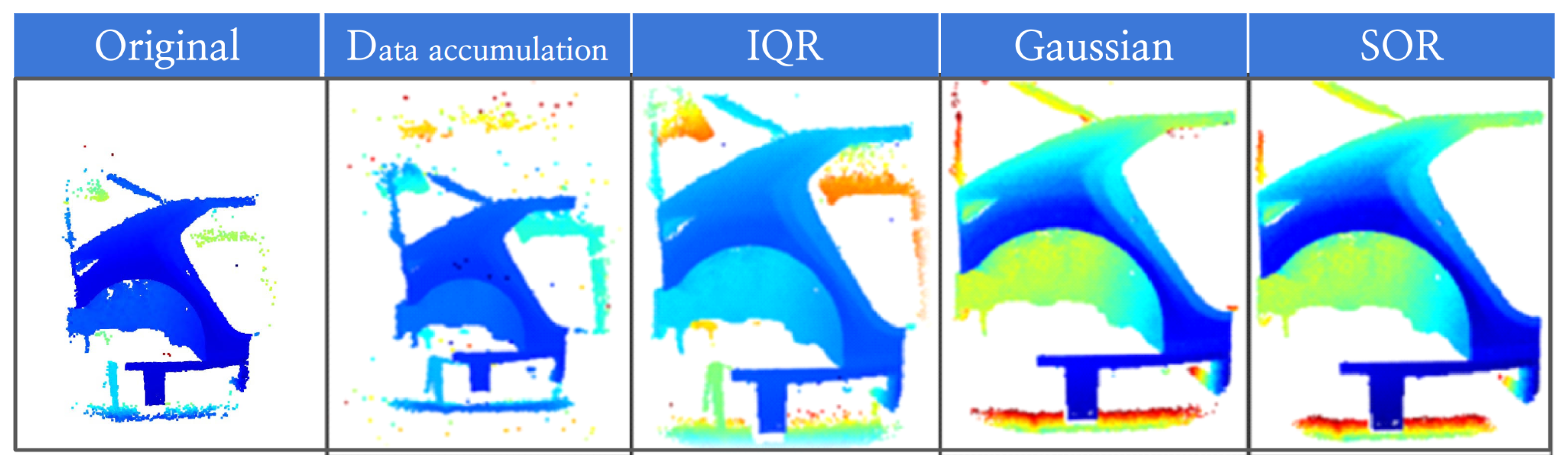

By applying these preprocessing techniques, we were able to effectively prevent data loss and remove noise from the ToF sensor data, as shown in Figure 3. Moreover, this preprocessing process allowed us to successfully apply the ICP algorithm without any issues for shape matching with depth data acquired in simulations.

3.2. Pixel-Level Depth Data Labeling Techniques Using 3D Simulation Data

Unlike segmentation and edge smoothing techniques based on RGB-D [15], this study enhances the pixel-level accuracy of time-of-flight (ToF) cameras by obtaining precise distance values corresponding to the pixel values captured by the camera. Conventionally, this would require highly precise measuring equipment, and obtaining real distance values for depth data captured in various environments is almost impossible. Even when additional equipment such as Lidar is installed and synchronized with the ToF sensor, discrepancies due to installation location can cause significant errors, making it difficult to label depth information with pixel-level distances [15,16].

In this research, a new method is proposed that compares point cloud data captured with a ToF camera with data obtained through 3D simulation to compensate for inaccuracies. This method leverages the simulation environment set up to check the accuracy and interference levels of part-by-part 3D drawings and production equipment easily obtainable during the design and manufacturing processes in industrial settings such as automotive factories. Precision 3D models are created to millimeter accuracy, and actual vehicle components are also precisely manufactured based on these 3D models, with tolerance management in place. The application environment that captures depth information using real ToF sensors is simulated, and the actual environment’s location and viewpoint captured by the ToF sensor are replicated to obtain simulation data. This approach ensures the accuracy of the depth data, even for automotive products with intricate curves and contours, making it sufficient for use as labeling values for training ToF sensor data. Furthermore, the accurate depth information obtained in the simulation environment can effectively enhance the accuracy of AI algorithms aimed at improving ToF sensor data.

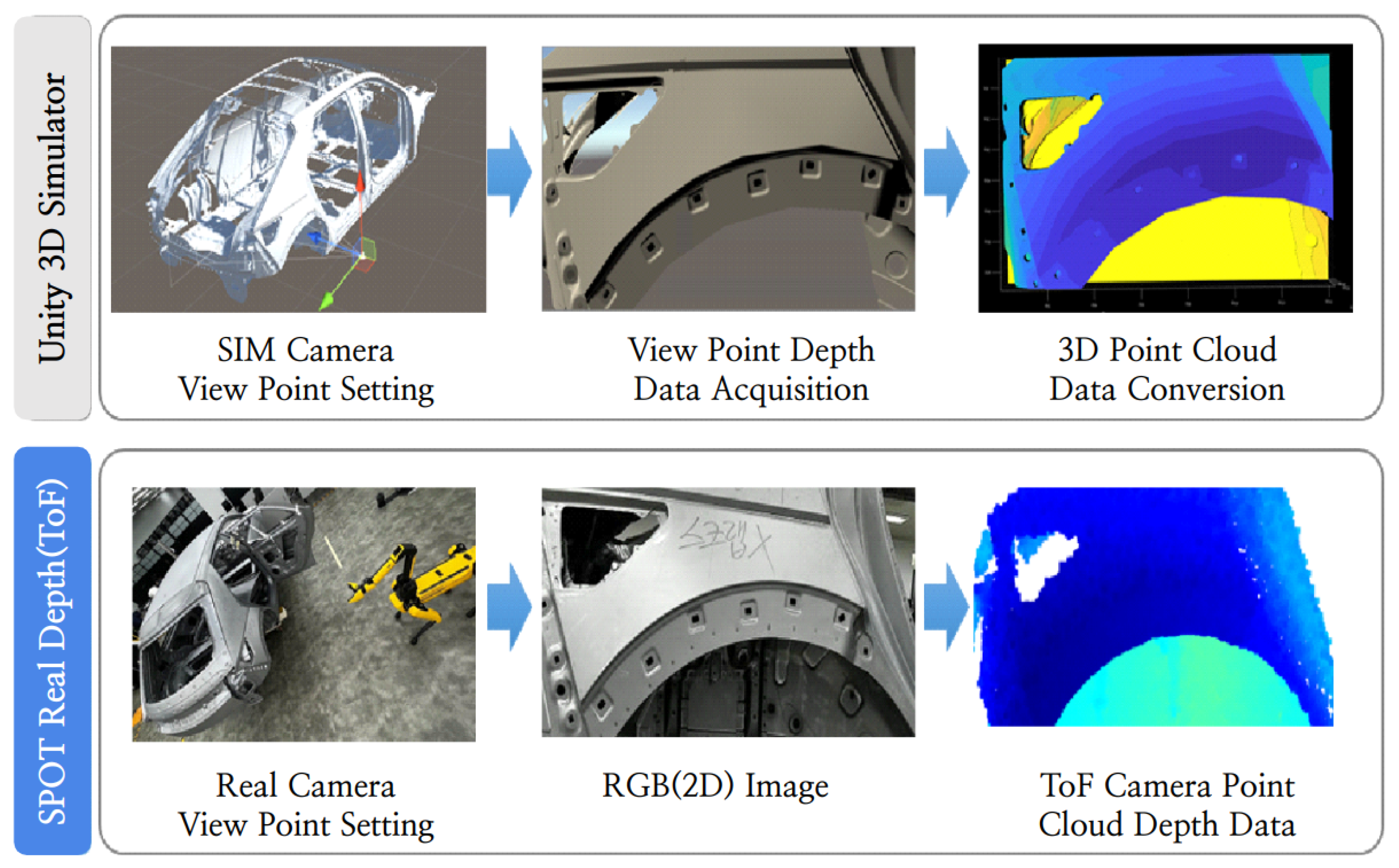

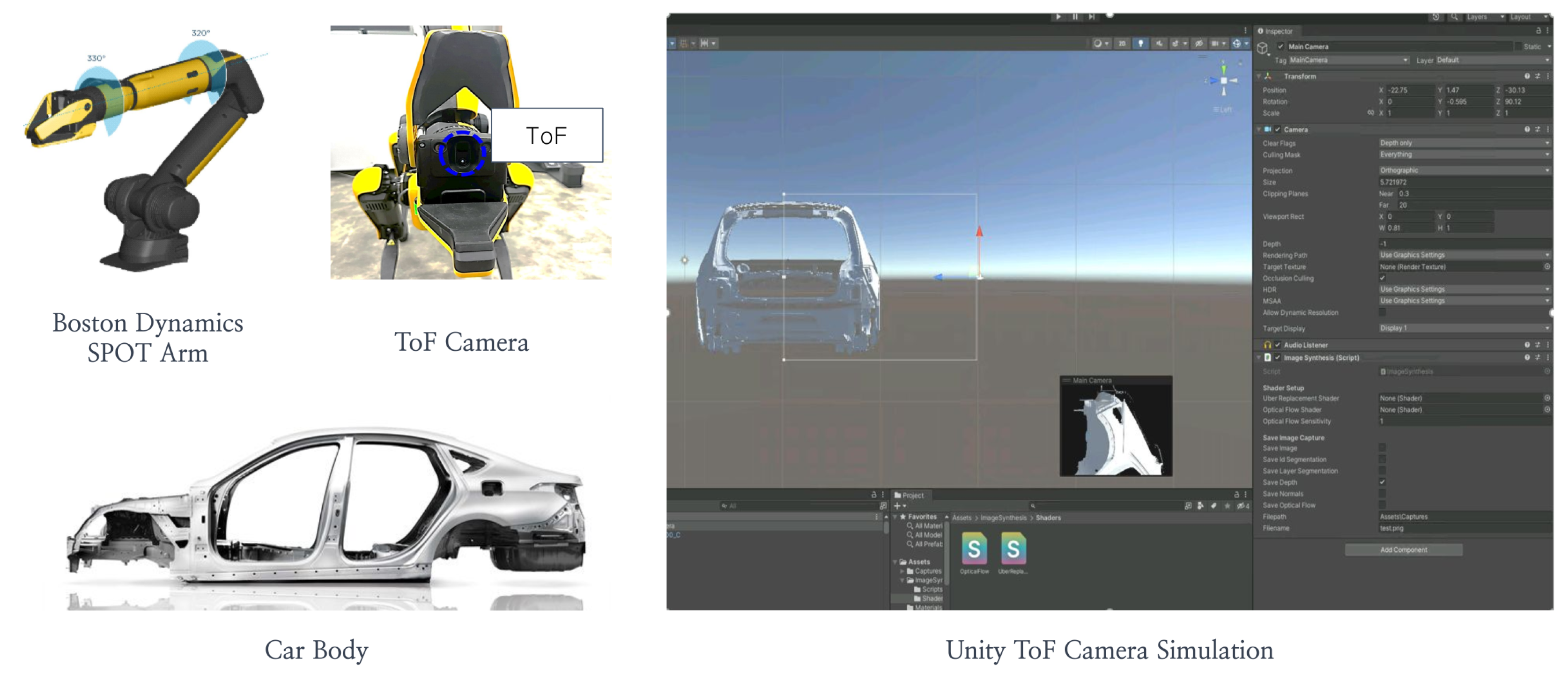

The Unity simulation environment was used for this study. In Unity, ToF sensors can be modeled and the viewpoint used by a ToF sensor in the real environment can be reproduced easily, which facilitates the acquisition of depth data in virtual environments. As illustrated in Figure 4, the ToF shooting location and viewpoint in the real environment are set identically in the Unity 3D simulation environment, so that depth values corresponding to those captured by the ToF sensor in the actual environment can be obtained. Depth values in the Unity virtual environment must be captured at the highest possible resolution so that the depth values of component surfaces match the actual component depths; an insufficient resolution can prevent curved surface data from being captured. Moreover, accurately synchronizing the ToF capture location and viewpoint from the real environment to the virtual environment is challenging, and this study identified the need for additional technological development to synchronize the real and simulation environments in real time using SLAM information from mobile robots in the actual environment.

The ToF depth data captured in the simulator and the noise-removed depth data from the actual captures in Section 3.1 do not match precisely because of discrepancies in location and viewpoint between the real and virtual environments. To use the virtual environment data as labeling values for the real environment data, each pixel must be matched precisely. To address this issue, a shape-matching process using the ICP algorithm was undertaken, as shown in Figure 5. After alignment, the higher-resolution virtual environment depth data were downsampled to match the real environment depth data on a 1:1 basis. This downsampling involved counting the pixels at overlapping locations found during shape matching with the actual captured depth data and downsampling the virtual environment depth data accordingly, which yielded labeling values that match the actual captured depth values.
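The paper does not specify the downsampling operator, so the following NumPy sketch only shows one simple possibility: averaging non-overlapping blocks of an already aligned high-resolution depth map so that it reaches the sensor resolution. The function name `downsample_depth` and the assumption of an integer scale factor are illustrative.

```python
import numpy as np

def downsample_depth(depth_hi, factor):
    """Average non-overlapping factor x factor blocks of a depth map."""
    depth_hi = np.asarray(depth_hi, dtype=float)
    h, w = depth_hi.shape
    if h % factor or w % factor:
        raise ValueError("image size must be a multiple of the factor")
    # Split rows and columns into (block index, offset within block).
    blocks = depth_hi.reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))
```

For example, a simulated depth map of 1200 rows by 1600 columns with `factor=4` yields a 300-row by 400-column map matching the 400 × 300 sensor. Block averaging blurs depth discontinuities at object edges, so a median or nearest-sample rule may be preferable there.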

3.3. ToF Depth Data AI Training

In Section 3.2, the depth data from the ToF (time-of-flight) sensor and the corresponding labeled values for the exact distance of each pixel were obtained. To develop an AI algorithm that improves the accuracy of ToF sensor values, a deep learning training process is required. The network model should take 400 × 300 pixel data as input and, using a CNN, produce an output of the same size. In this study, we conducted tests using U-Net [17], which is widely used for segmentation and has a symmetrical structure that allows input data to be transformed directly into output data. MATLAB (2023b) was utilized, together with the U-Net library provided by MATLAB. The training set included 40 images, and the remaining 10 images were used as the test set. The input layer of the network receives 400 × 300 pixel depth images, and the output layer provides images of the original size, 400 × 300 pixels. The loss function was the mean squared error (MSE). Training was performed over a total of 100 epochs with a learning rate of 0.001. During training, the loss value started at approximately 0.02 in the initial epoch and decreased to about 0.005 in the final epoch.
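For reference, the mean squared error over one depth image can be written as below. The symbols are introduced here for illustration and are not the paper's notation: $\hat{d}_{ij}$ is the network output at pixel $(i, j)$, $d_{ij}$ is the simulated label at the same pixel, and the image is assumed to be stored with $H = 300$ rows and $W = 400$ columns.

```latex
\mathcal{L}_{\mathrm{MSE}} = \frac{1}{HW} \sum_{i=1}^{H} \sum_{j=1}^{W} \left( \hat{d}_{ij} - d_{ij} \right)^{2}
```

The reported loss values of roughly 0.02 and 0.005 suggest that the depth values were scaled before training, although the scaling itself is not stated in the text.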

As shown in Figure 6, training was conducted using depth data obtained from real-world environments as input and depth data obtained from 3D simulations as the labels. With the inference executable file created through this process, depth data obtained from the ToF sensor can be input without preprocessing, and the AI algorithm outputs depth data in which the noise has been removed and the accuracy improved.

4. Results of Performance Evaluation and Industrial Field Application

This study utilized the SPOT robot by Boston Dynamics (Waltham, MA, USA), which is highly mobile and capable of autonomous navigation and mission execution in recent industrial settings [18]. This robot can be equipped with various payloads, making it suitable for fire monitoring, safety inspections, patrolling tasks, and quality inspections during product manufacturing. When equipped with an arm payload, the SPOT robot can use a multi-joint arm and, notably, the RGB and ToF cameras attached to the end effector to capture image data for vision inspection. In this research, as illustrated in Figure 7, tests were conducted on an unpainted car body using the ToF camera mounted on the SPOT robot. The 3D models created during car design and the simulation environment established in the production factory for robot operations were utilized. The viewpoint of the ToF camera was constructed using Unity [19], and depth information in the simulation was acquired.

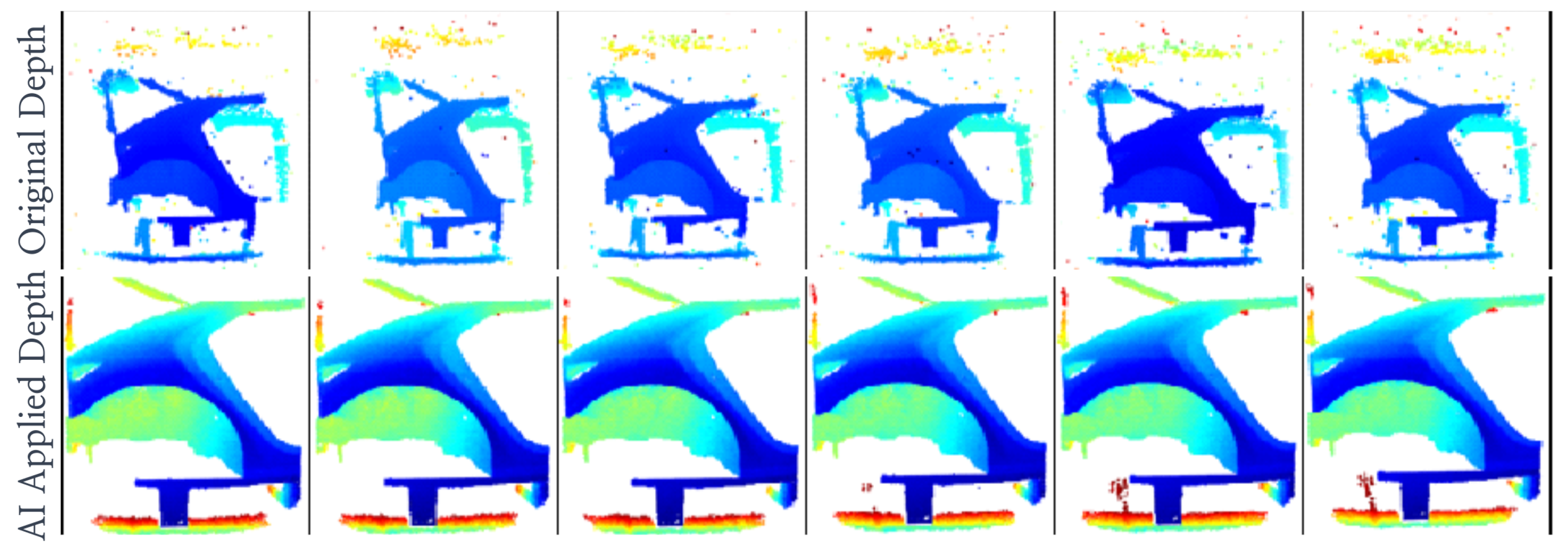

4.1. Performance Evaluation

In this study, we used the inference files generated through deep learning to feed real-world ToF data directly into the AI algorithm without any preprocessing, allowing the algorithm to perform preprocessing automatically and enhance accuracy. As a result, we obtained improved depth data through noise removal and flattening, as shown in Figure 8. Notably, learning could be conducted end to end without setting parameters such as threshold values, which are typically required in traditional preprocessing steps. However, the accuracy of the data obtained through this method has not yet surpassed the accuracy of data preprocessed by humans. Considering that there were fewer than 50 data samples, securing more data that reflect diverse environmental conditions could improve the AI algorithm. Additionally, incorporating specific parameters such as the characteristics of the ToF sensor or material information of the components is expected to yield better results. This research is significant in that it has solved the problem of labeling the necessary depth data.

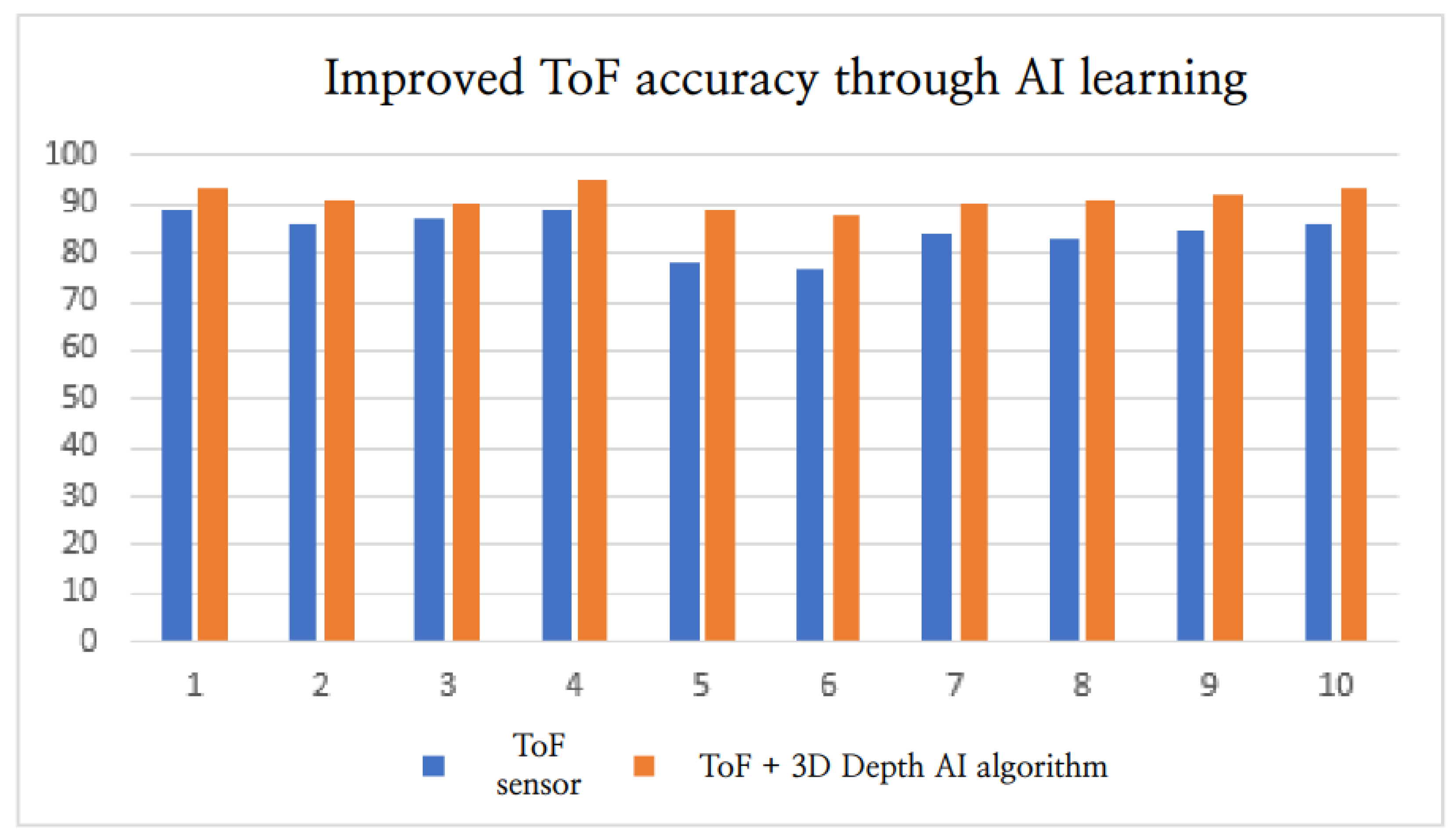

Additionally, to compare the alignment rate of the depth distance information, the per-pixel difference between the depth values obtained from the simulation (close to the ground truth) and the actual depth data values was expressed as a percentage. The average alignment rate over all pixels is shown in Figure 9. For Figure 9, the mobile robot manipulator captured ToF sensor data 10 times in the same environment by moving along the X-axis from 0° to 20° in 2° increments. Depth data were acquired using both the preprocessing algorithm and the trained AI algorithm. When these were compared with the accurate depth data obtained from the simulation, the accuracy of the data obtained through the AI algorithm was consistently higher in all 10 instances than that of the depth data processed by the rule-based preprocessing. This can be attributed to the fact that traditional algorithms involve manual adjustment of parameter values during preprocessing, making it difficult to find the optimal values. In contrast, the AI-based preprocessing method can automatically set the optimal parameter values during training to find depth values close to the ground truth. The study also points to the potential for better results when additional information, such as temperature data, the material properties and characteristics of the parts being measured, and environmental information, is provided as additional training input.
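The exact formula for the alignment rate is not given, so the NumPy sketch below assumes one plausible definition: 100 × (1 − |measured − reference| / reference) per pixel, clipped to the range 0 to 100 and averaged over pixels with a valid reference. Both the definition and the function name `alignment_rate` are assumptions for illustration.

```python
import numpy as np

def alignment_rate(measured, reference):
    """Mean per-pixel agreement (percent) between measured and reference depth.

    Assumed definition: 100 * (1 - |measured - reference| / reference),
    clipped to [0, 100]; pixels without a valid reference are skipped.
    """
    measured = np.asarray(measured, dtype=float)
    reference = np.asarray(reference, dtype=float)
    valid = np.isfinite(measured) & np.isfinite(reference) & (reference > 0)
    rel_err = np.abs(measured[valid] - reference[valid]) / reference[valid]
    return float(np.clip(100.0 * (1.0 - rel_err), 0.0, 100.0).mean())
```

Under this definition, the same function can score both the rule-based output and the AI output against the simulated depth map, which mirrors the comparison described for Figure 9.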

4.2. Industrial Field Application

This research developed an AI algorithm aimed at enhancing preprocessing and depth accuracy for ToF cameras. As a result, we were able to more easily perform noise removal and flattening of ToF depth data through AI learning, and we established a foundation for accuracy improvement, although only slight. However, it was evident that the ToF sensors still lack the millimeter-level accuracy and precision required for industrial applications. Through this research, we were able to enhance the accuracy and precision of ToF depth data using the AI algorithm, and conducted application evaluations using this data.

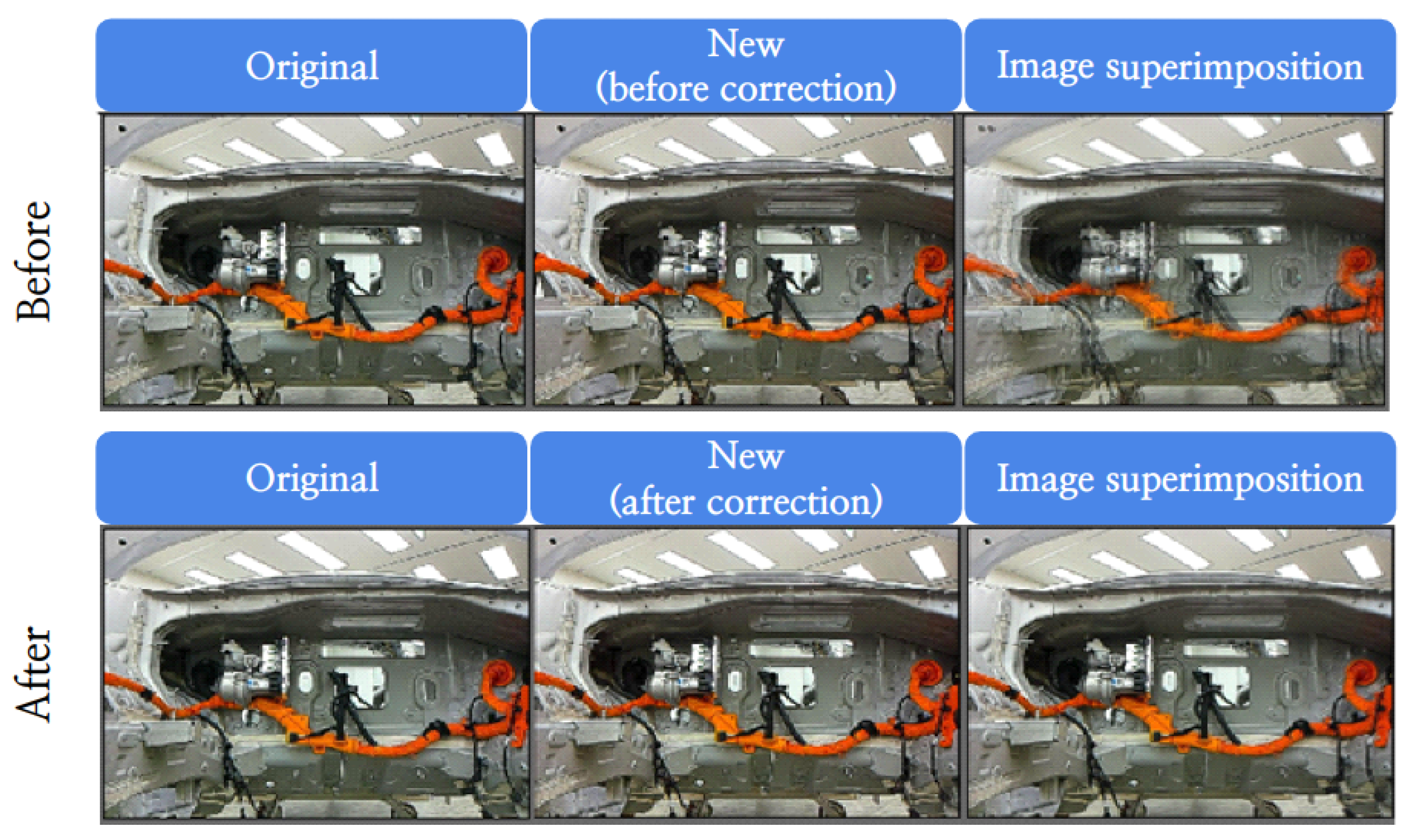

Firstly, as shown in Figure 10, vision inspections using mobile robots are being conducted in industrial settings [20]. Since the accuracy and precision of mobile robots are limited to tens of centimeters, the precision of repeated positioning during image acquisition is low. To improve this, we previously used a 2D-based image matching process to correct the inspection images themselves. However, because the alignment rate does not reach 100% when this correction algorithm is applied, pseudo defects often occur. The most effective solution is to acquire images under the same conditions as the original reference image. To achieve this, we used the ToF depth sensor (Boston Dynamics, Waltham, MA, USA) to calculate the positional error from depth data and used this information to extract the robot correction coordinates, moving the robot arm to the position of the initially captured image to acquire the same image. This process, illustrated in Figure 10, was conducted using the ToF depth data secured in this research.

As a result, as shown in Figure 11, the performance of the 3D data correction method using ToF depth was significantly improved compared to the conventional 2D image matching.

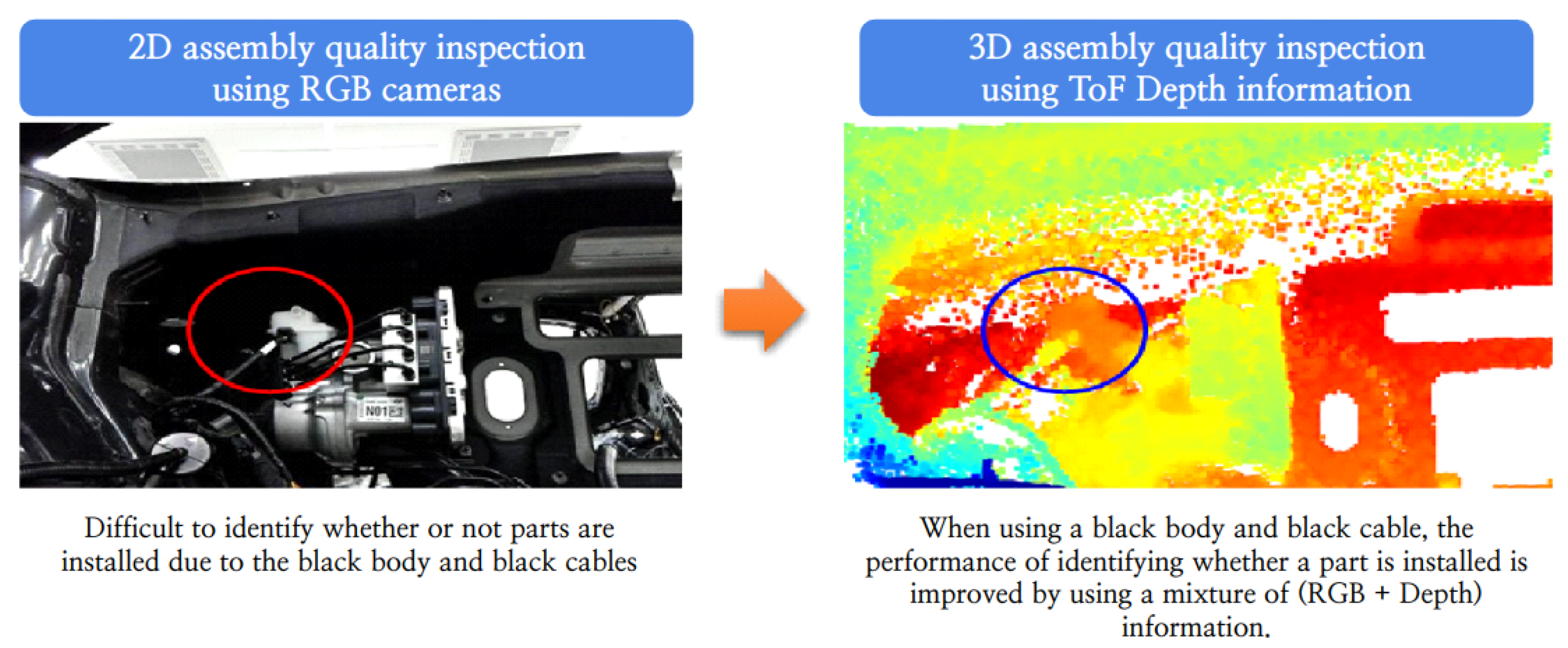

Secondly, some parts are difficult to inspect with 2D images alone during vision inspections. If the color of a part whose presence or absence is being checked matches the background color, the accuracy of the inspection results is significantly reduced. To improve this, as illustrated in Figure 12, we applied a parallel algorithm that calculates the difference between 2D and 3D data using ToF depth information to enhance the accuracy of the inspections.

In this paper, efforts to remove noise and enhance the accuracy of ToF sensors, which are relatively inexpensive and consume less power compared to Lidar, have proven that they are suitable sensors for various mobile platforms used in recent industrial settings. As research on performance improvement progresses, the value of their use increases, emphasizing that continuous research is needed to expand their application areas and to use them more effectively in industrial settings.

5. Future Work

This study has secured labeling data that can be used to train on the pixel-by-pixel depth data of ToF sensors. However, synchronizing the viewpoint of a ToF sensor in real environments with that in simulation environments requires cumbersome manual work. Research is needed to develop a system that can perform this synchronization accurately in real-time. Once such a system is established, mobile robots will be able to autonomously move around industrial sites, capturing depth data which will then be automatically secured and accumulated in a synchronized simulation environment. This will enable the collection of data necessary for developing more effective AI algorithms. Furthermore, ToF sensors calculate distance by emitting light to an object and measuring the time it takes for the light to return, but this becomes challenging with black objects or dark colors that absorb light. While methods such as using RGB cameras or stereo cameras in conjunction with enhanced lighting exist, their effectiveness is limited. Therefore, research into a cost-effective solution for measuring distances on dark colors in industrial settings is necessary.

6. Conclusions

This study utilized time-of-flight (ToF) sensors, which are cost-effective depth measurement sensors, for various applications in industrial settings where high accuracy and precision are required. To achieve this, a 3D model identical to the real environment was created in the simulation setting, and the position and viewpoint of the ToF sensor were aligned accordingly. This setup enabled the capture of pixel-specific distance values, which were previously challenging to obtain, and these values were used as labels for training data in deep learning processes. As a result, the AI algorithms developed were able to achieve data accuracy comparable to traditional methods, laying the groundwork for the development of higher-performance AI algorithms in the future. Additionally, the study generated simulation data that could replace the high-precision devices typically used for evaluating ToF sensor performance, and this data can be used as ground truth for ToF sensor performance evaluations. While not fully meeting all the varied application needs of the industrial sector, the enhanced accuracy and precision of the ToF sensors have demonstrated their potential in applications such as vision inspection by mobile robots. Recently, many companies have been conducting research to enhance productivity using various robotic platforms including mobile robots, industrial robots, and humanoids, with a focus on autonomous operations facilitated by AI technology. A crucial part of this research involves acquiring and understanding real-time distance information about the surrounding environment. For this purpose, a compact sensor capable of economically acquiring real-time depth data of the environment and objects is essential. The authors anticipate that ToF sensors will fulfill this role effectively.

Author Contributions

Conceptualization, C.Y. and J.K.; Software, C.Y.; Validation, C.Y.; Formal analysis, J.K.; Investigation, C.Y.; Resources, J.K.; Writing—original draft, C.Y. and J.K.; Writing—review and editing, D.-S.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Hyundai Motor Company under project number 2023_CSTG_0121.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are not publicly available due to Hyundai Motor Company’s internal policies but are available on request from the first author.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could appear to influence the work reported in this paper. However, Changmo Yang is an employee of Hyundai Motor Company, which provided funding and technical support for this work. The funder had no role in the design of the study; in the collection, analysis, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ToF | Time of Flight |
| AI | Artificial Intelligence |
| RGB-D | Red, Green, Blue-Depth |
| ICP | Iterative Closest Point |
| CNN | Convolutional Neural Network |
| SOR | Statistical Outliers Removal |
| IQR | Interquartile Range |
| 3D | Three-Dimensional |
| RGB | Red, Green, Blue |
| SLAM | Simultaneous Localization and Mapping |
| Q1 | First Quartile |
| Q3 | Third Quartile |

References

1. Thoman, P.; Hirsch, A.; Wippler, M.; Hranitzky, R. Optimizing Embedded Industrial Safety Systems Based on Time-of-flight Depth Imaging. In Proceedings of the 2021 IEEE 17th International Conference on eScience (eScience), Innsbruck, Austria, 20–23 September 2021; pp. 255–256.

2. May, S.; Werner, B.; Surmann, H.; Pervölz, K. 3D Time-of-Flight Cameras for Mobile Robotics. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 790–795.

3. Buratto, E.; Simonetto, A.; Agresti, G.; Schäfer, H.; Zanuttigh, P. Deep Learning for Transient Image Reconstruction from ToF Data. Sensors 2021, 21, 1962.

4. Yang, J.; Li, S.; Wang, Z.; Dong, H.; Wang, J.; Tang, S. Using deep learning to detect defects in manufacturing: A comprehensive survey and current challenges. Materials 2020, 13, 5755.

5. Frangez, R.; Reitmann, S.; Verd’ie, N. Assessment of Environmental Impact on Time-of-Flight Camera Accuracy. J. Sens. Technol. 2022, 15, 2389–2402.

6. Verd’ie, N.; Pujol, P.; Camara, J. Cromo: Enhancing Time-of-Flight Camera Depth Accuracy Under Varied Environmental Conditions. Sens. Actuators 2022, 29, 112–125.

7. Reitmann, S.; Frangez, R.; Verd’ie, N. Blainder: Addressing Dust Interference in Time-of-Flight Cameras. J. Adv. Opt. Technol. 2021, 10, 655–667.

8. Yang, J.; Zhang, L.; Chen, X. Color Assisted Depth Data Enhancement for RGB-D Cameras. Multimed. Tools Appl. 2014, 70, 2331–2346.

9. Ji, S.; Li, H.; Yang, J. Calibrated RGB-D Sensing: Improved Depth Accuracy with RGB Information. IEEE Sens. J. 2021, 21, 12905–12916.

10. Al-Masni, M.A.; Kim, D.H.; Kim, T.S. Local Feature-Based 3D Reconstruction of Complex Objects Using RGB-D Data. Comput. Vis. Image Underst. 2018, 169, 29–41.

11. Ruchay, A.; Anisimov, A.; Chekanov, N. Real-Time 3D Modeling Using RGB-D Sensors. J. Real-Time Image Process. 2020, 17, 203–214.

12. Vinutha, H.P.; Prakash, J.B.; Dinesh, A.C. Detection of Outliers through Interquartile Range Method in Statistical Data Analysis. J. Stat. Anal. 2018, 29, 445–456.

13. Buch, K.; Smith, S.; Jones, L. Decision Support Systems: Integrating Gaussian Distribution for Enhanced Data Analysis. Decis. Sci. J. 2014, 45, 981–1002.

14. Jassim, F.A.; Al-Taee, M.A.; Wright, W. Image Enhancement Using Statistical Outliers Removal. J. Digit. Imaging 2013, 26, 112–122.

15. Li, J.; Bi, Y.; Li, K.; Wu, L.; Cao, J.; Hao, Q. Improving the Accuracy of TOF LiDAR Based on Balanced Detection Method. Sensors 2023, 23, 4020.

16. Li, X.; Yang, B.; Xie, X.; Li, D.; Xu, L. Influence of Waveform Characteristics on LiDAR Ranging Accuracy and Precision. Sensors 2018, 18, 1156.

17. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Part III. Springer International Publishing: Berlin/Heidelberg, Germany, 2015; Volume 18, pp. 234–241.

18. Boston Dynamics. SPOT. Available online: https://dev.bostondynamics.com/ (accessed on 24 March 2024).

19. Unity. Unity Technologies Official Website. Available online: https://unity.com/kr (accessed on 27 April 2024).

20. Yang, C.; Kim, J.; Kang, D.; Eom, D.-S. Vision AI System Development for Improved Productivity in Challenging Industrial Environments: A Sustainable and Efficient Approach. Appl. Sci. 2024, 14, 2750.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).