Unbiased 3D Semantic Scene Graph Prediction in Point Cloud Using Deep Learning

Abstract

1. Introduction

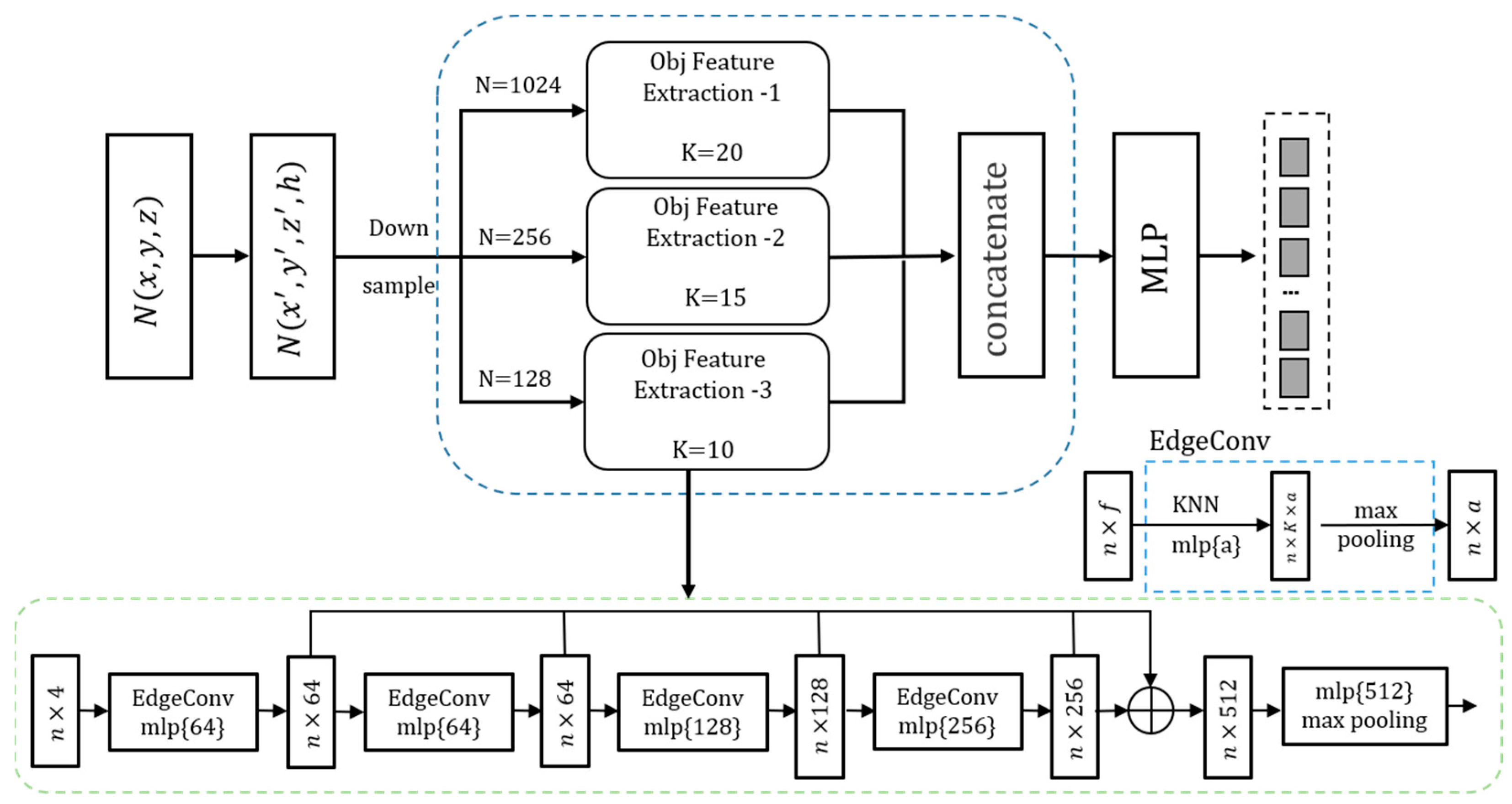

- A new feature extraction and encoding network, MP-DGCNN, extracts multi-scale features of scene entities from point clouds more robustly.

- ENA-GNN is introduced to perform node-edge cross-attention during message propagation, which strengthens the node-edge correlation and improves the prediction of complex relationships.

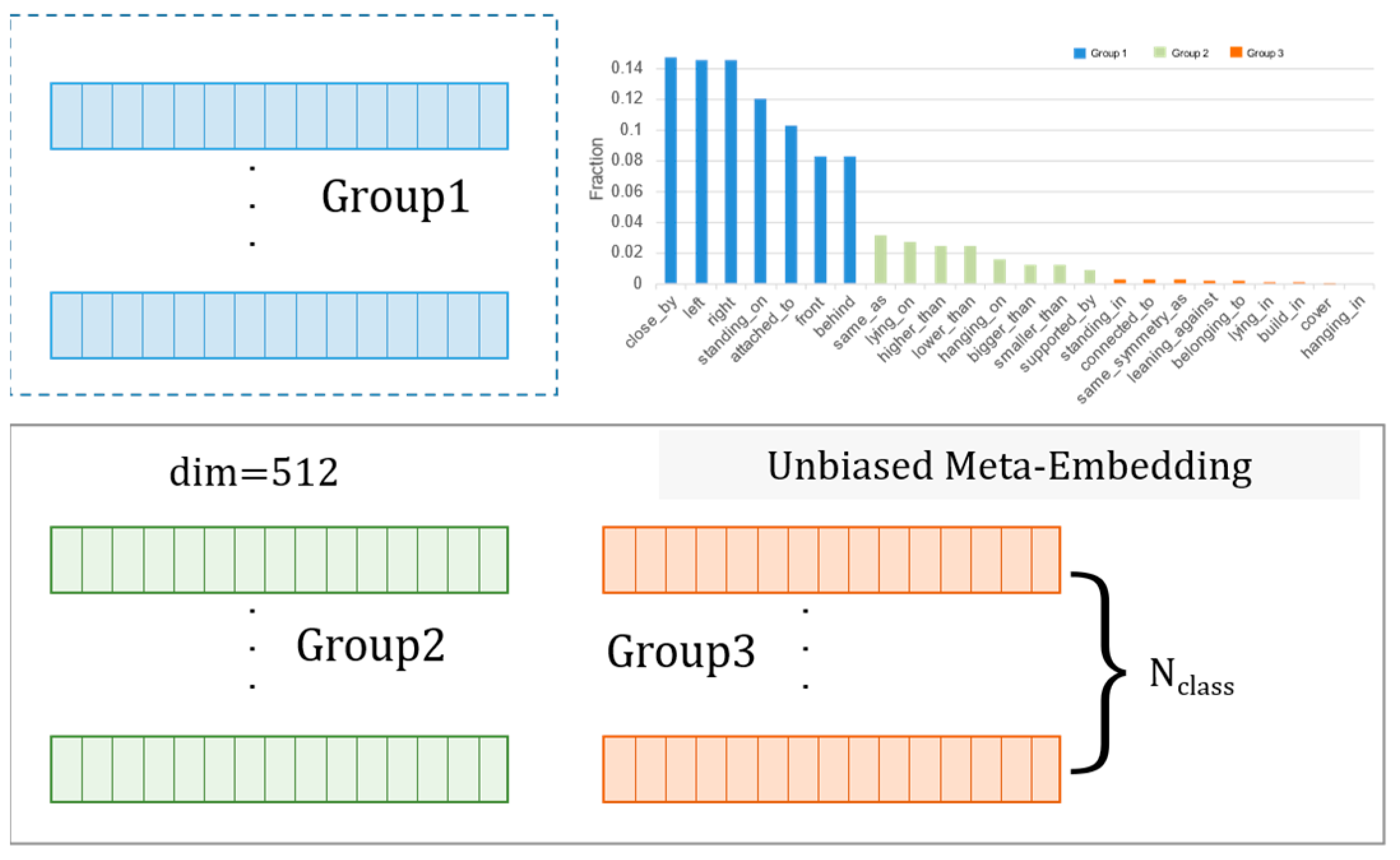

- The long-tail distribution of the dataset is addressed with a group-weighted scheme that combines category-related embedding vectors, used as prior knowledge, with a loss function for category balance.

- Experiments show that the proposed model achieves state-of-the-art performance.

2. Related Work

2.1. Image-Based Scene Graph

2.2. 3D Scene Understanding and Scene Graph

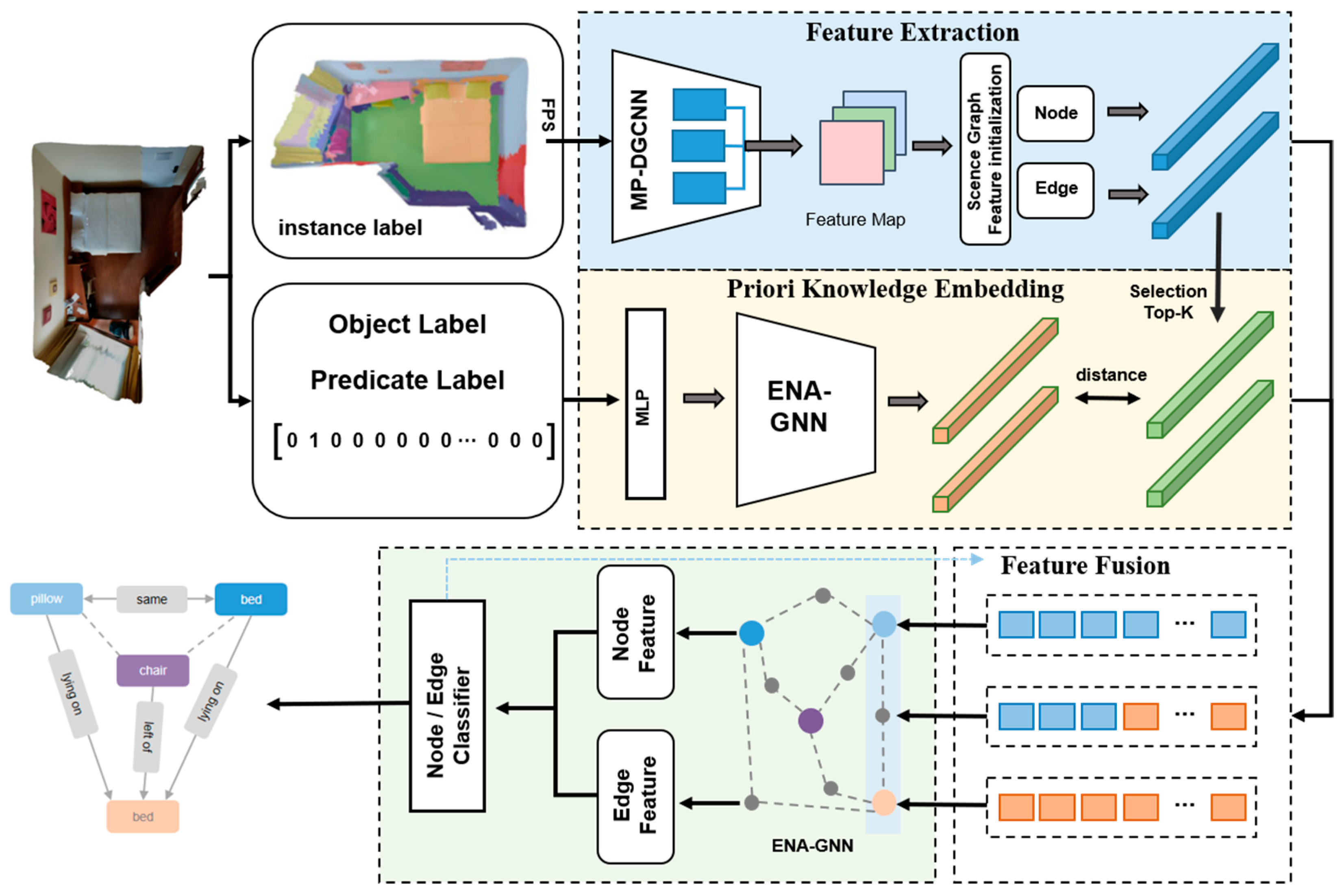

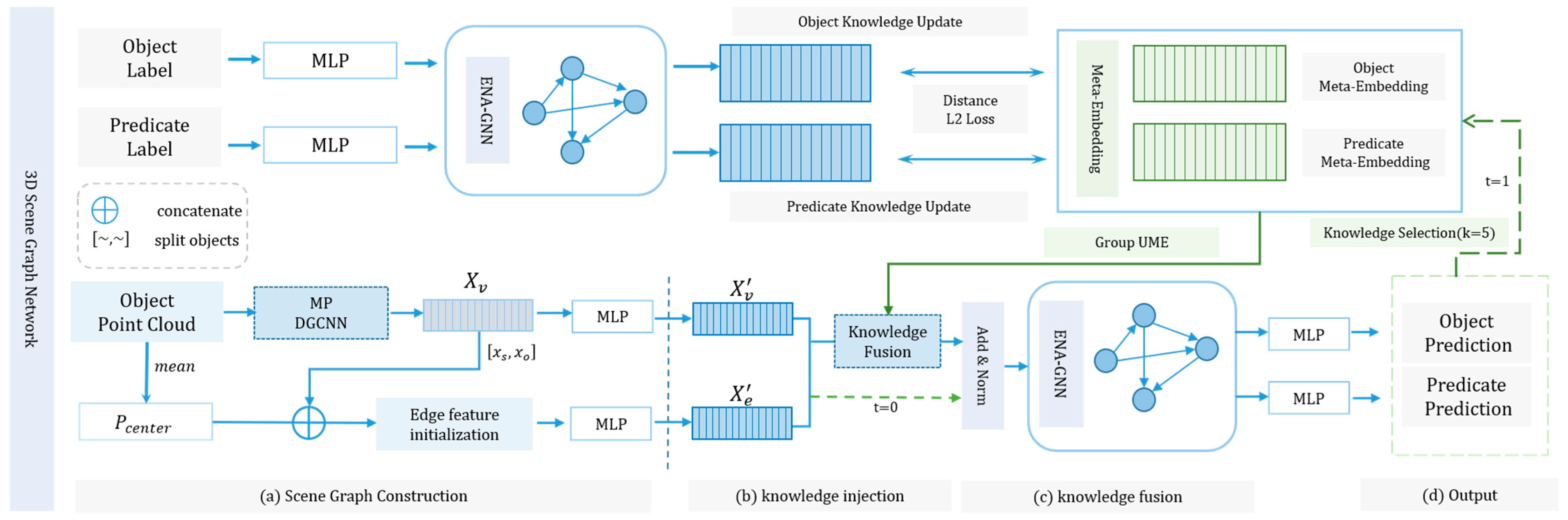

3. Methods

3.1. 3D Scene Graph

3.2. Network Design

3.2.1. Feature Extraction and Coding

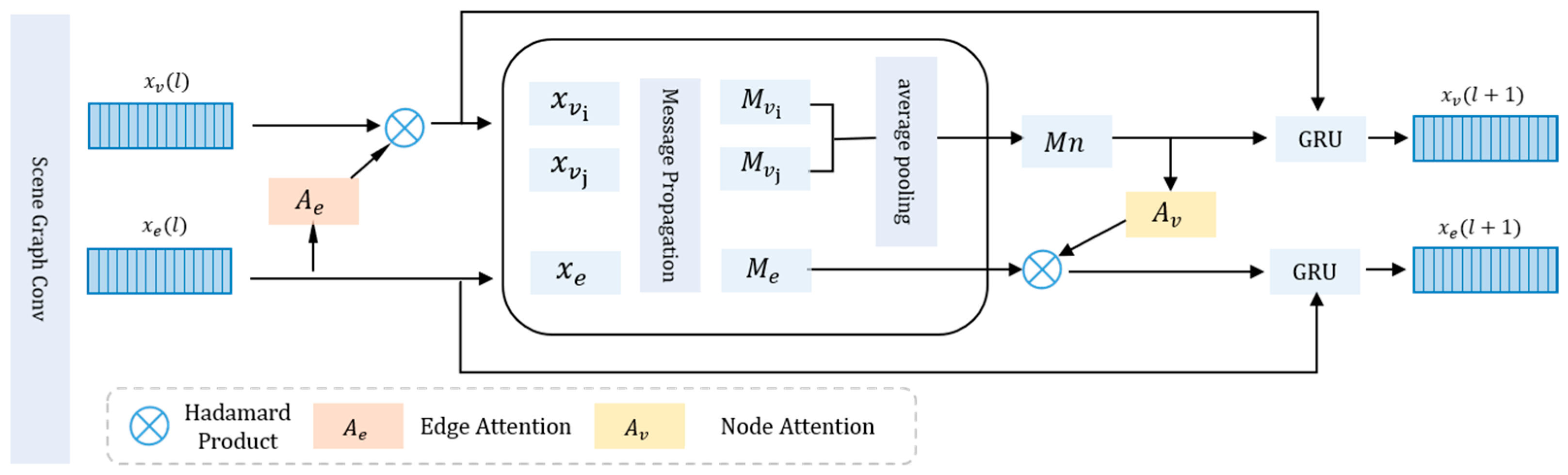

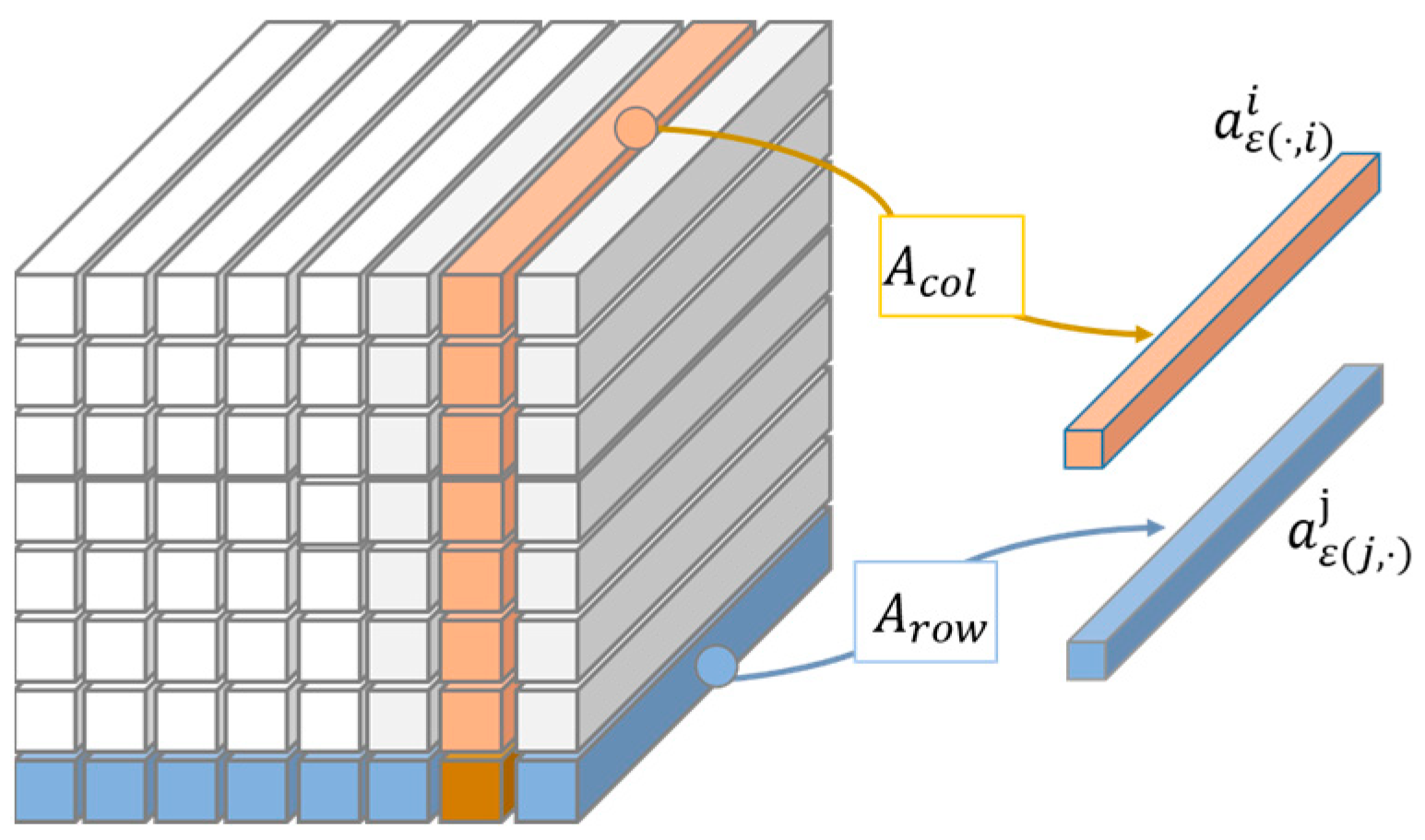

3.2.2. ENA-GNN: Node and Edge Cross-Attention

- (1) Node Feature Fusion Edge Attention.

- (2) Edge Feature Fusion Node Attention.

- (3) Message Propagation.

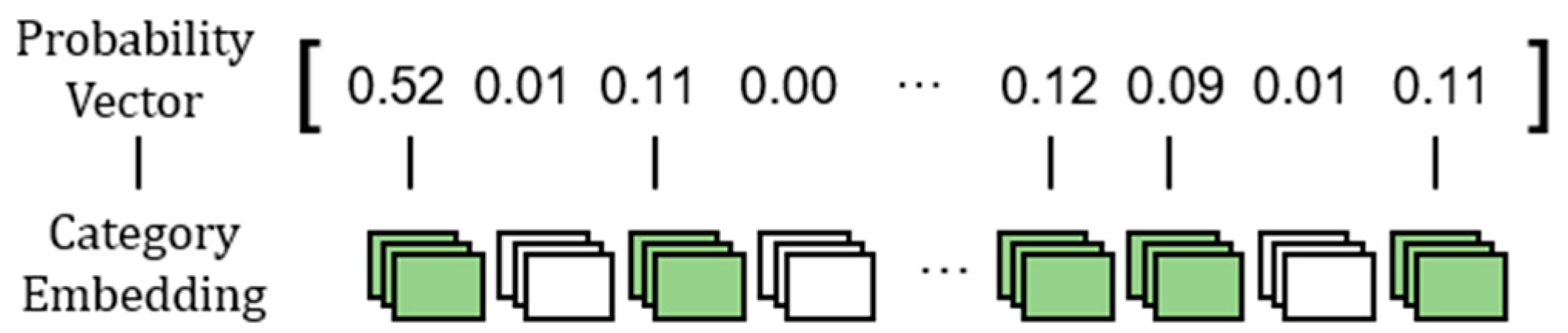

3.3. Unbiased Meta-Embedding

3.4. Scene Graph Relational Reasoning

3.5. Loss Function

4. Experimental Section

4.1. Experiment Preparation

- Obtain the instance IDs in each scene from the dataset and map them to the corresponding points in the point cloud.

- Sample each object instance with the farthest point sampling (FPS) algorithm, taking 1024 coordinate points per instance on average, and use random sampling to pad small target entities so that the training data are aligned.

- Using the ground-truth labels, remove the individual scene splits that contain no relationships or entities, count and sort the frequency of each category, and remap the category labels.

- Save the scene point cloud and label array files to a separate folder for each scene, and generate the training set and validation set files.
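The sampling and label-remapping steps above can be sketched as follows. This is a minimal illustration rather than the authors' preprocessing code: the function names (`farthest_point_sampling`, `sample_instance`, `remap_labels_by_frequency`) are hypothetical, every instance is assumed to contain at least one point, and the remapping is assumed to assign smaller ids to more frequent categories. It uses only the Python standard library to stay self-contained; a real pipeline would most likely use a vectorized array library.

```python
import math
import random
from collections import Counter


def farthest_point_sampling(points, k, seed=0):
    """Greedy FPS: return up to k points that are mutually far apart."""
    rng = random.Random(seed)
    n = len(points)
    k = min(k, n)
    chosen = [rng.randrange(n)]  # start from a random point
    # min_dist[i]: squared distance from point i to its nearest chosen point
    min_dist = [math.inf] * n
    while len(chosen) < k:
        last = points[chosen[-1]]
        for i, p in enumerate(points):
            d = sum((a - b) ** 2 for a, b in zip(p, last))
            if d < min_dist[i]:
                min_dist[i] = d
        # the next sample is the point farthest from everything chosen so far
        chosen.append(max(range(n), key=min_dist.__getitem__))
    return [points[i] for i in chosen]


def sample_instance(points, num_points=1024, seed=0):
    """Return exactly num_points points for one object instance."""
    sampled = farthest_point_sampling(points, num_points, seed)
    rng = random.Random(seed)
    # small instances: pad with randomly re-drawn points (assumed padding rule)
    while len(sampled) < num_points:
        sampled.append(rng.choice(points))
    return sampled


def remap_labels_by_frequency(labels):
    """Map each category to a new id; id 0 is the most frequent category."""
    counts = Counter(labels)
    ordered = sorted(counts, key=lambda c: (-counts[c], c))
    return {category: new_id for new_id, category in enumerate(ordered)}


if __name__ == "__main__":
    rng = random.Random(42)
    large = [(rng.random(), rng.random(), rng.random()) for _ in range(200)]
    small = [(rng.random(), rng.random(), rng.random()) for _ in range(5)]
    print(len(sample_instance(large, num_points=16)))  # 16
    print(len(sample_instance(small, num_points=16)))  # 16
    print(remap_labels_by_frequency(["chair", "wall", "chair", "floor", "wall", "chair"]))
```

In this sketch every instance ends up with the same number of points regardless of its original size, which is what allows instances to be batched together during training.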

4.2. Evaluation Metrics

4.3. Baseline Models

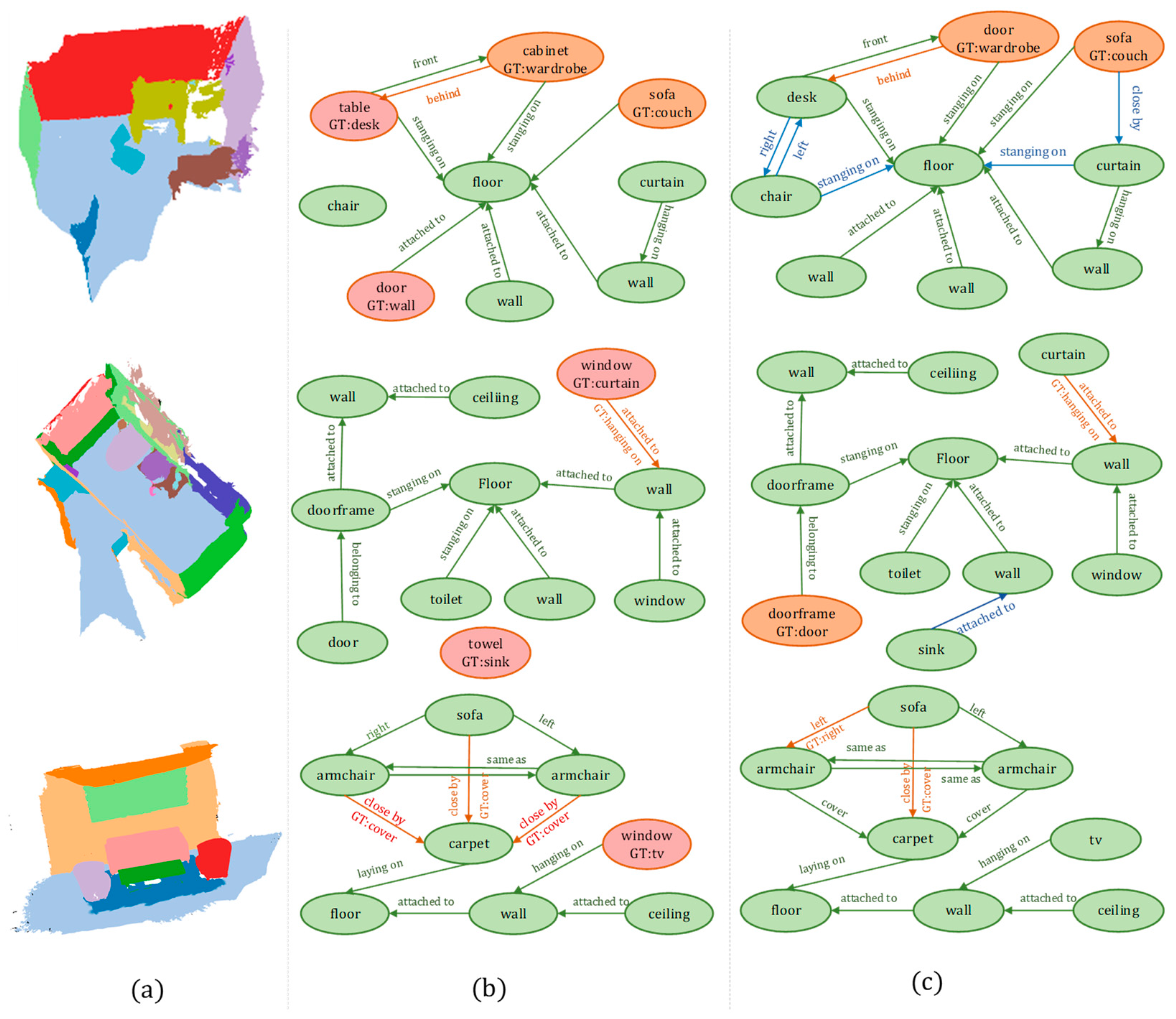

4.4. Experimental Results and Analysis

4.5. Ablation Experiments

4.5.1. Model Design

4.5.2. Point Cloud Data Dimension

4.5.3. Knowledge Integration Iterations

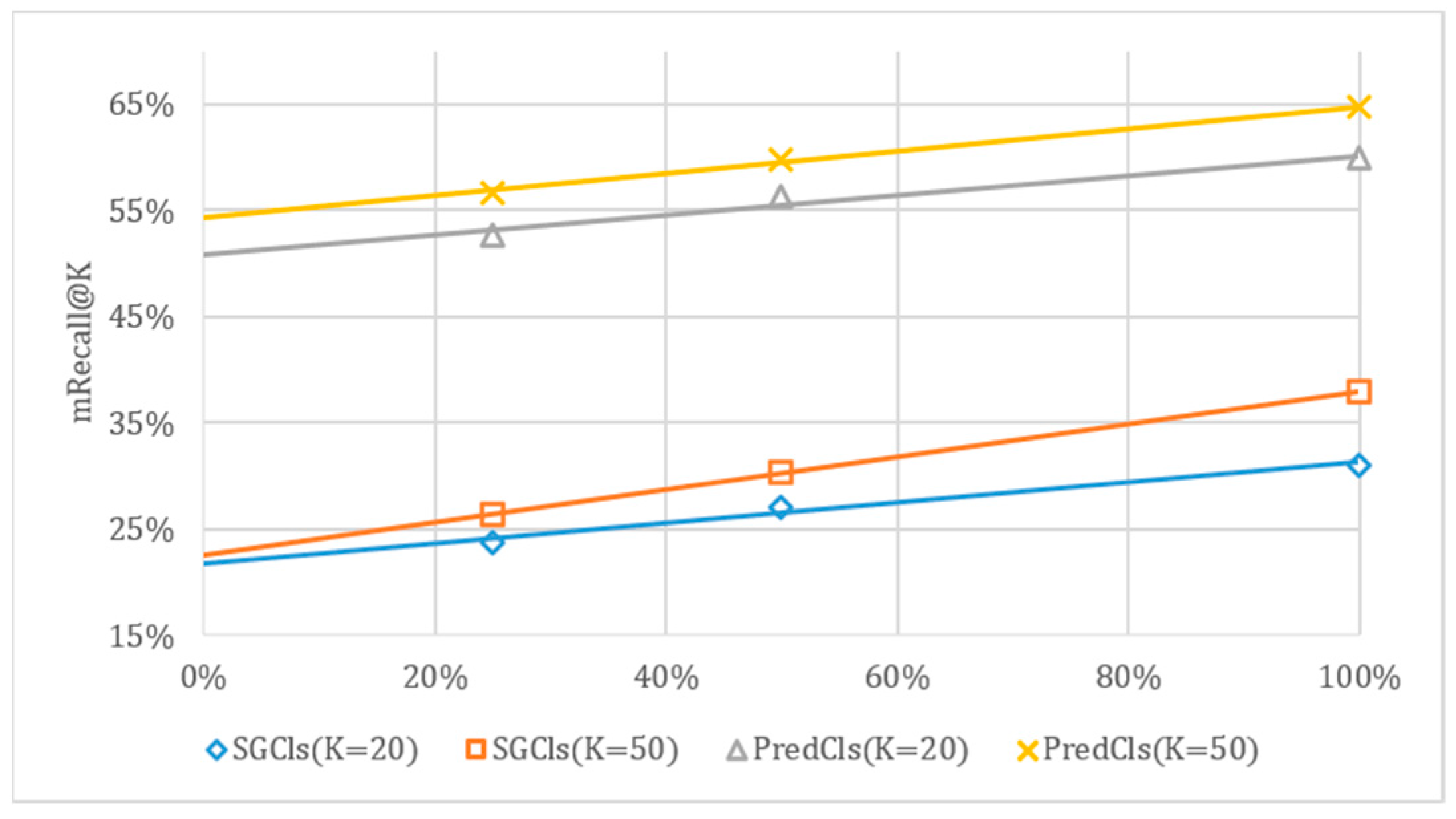

4.5.4. Weakly Supervised Ablation Tests

4.5.5. Impact of Each Long-Tail Distribution Module

5. Conclusions and Discussion

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Fei-Fei, L.; Koch, C.; Iyer, A.; Perona, P. What Do We See When We Glance at a Scene? J. Vis. 2004, 4, 863.

2. Tahara, T.; Seno, T.; Narita, G.; Ishikawa, T. Retargetable AR: Context-Aware Augmented Reality in Indoor Scenes Based on 3D Scene Graph. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Recife, Brazil, 9–13 November 2020; pp. 249–255.

3. Luo, A.; Zhang, Z.; Wu, J.; Tenenbaum, J.B. End-to-End Optimization of Scene Layout. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3754–3763.

4. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: Los Alamitos, CA, USA, 2017; pp. 77–85.

5. Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph. 2019, 38, 146.

6. Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep Learning for 3D Point Clouds: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4338–4364.

7. Johnson, J.; Krishna, R.; Stark, M.; Li, L.-J.; Shamma, D.; Bernstein, M.; Fei-Fei, L. Image Retrieval Using Scene Graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

8. Chen, T.; Yu, W.; Chen, R.; Lin, L. Knowledge-Embedded Routing Network for Scene Graph Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

9. Sharifzadeh, S.; Baharlou, S.M.; Tresp, V. Classification by Attention: Scene Graph Classification with Prior Knowledge. Proc. AAAI Conf. Artif. Intell. 2021, 35, 5025–5033.

10. Chang, X.; Ren, P.; Xu, P.; Li, Z.; Chen, X.; Hauptmann, A. A Comprehensive Survey of Scene Graphs: Generation and Application. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 22359232.

11. Wald, J.; Dhamo, H.; Navab, N.; Tombari, F. Learning 3D Semantic Scene Graphs from 3D Indoor Reconstructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3961–3970.

12. Wald, J.; Avetisyan, A.; Navab, N.; Tombari, F.; Niessner, M. RIO: 3D Object Instance Re-Localization in Changing Indoor Environments. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

13. Zhang, C.; Yu, J.; Song, Y.; Cai, W. Exploiting Edge-Oriented Reasoning for 3D Point-Based Scene Graph Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 9705–9715.

14. Zhang, S.; Hao, A.; Qin, H. Knowledge-Inspired 3D Scene Graph Prediction in Point Cloud. Adv. Neural Inf. Process. Syst. 2021, 34, 18620–18632.

15. Liu, R.; Xing, P.; Deng, Z.; Li, A.; Guan, C.; Yu, H. Federated Graph Neural Networks: Overview, Techniques and Challenges. arXiv 2022, arXiv:2202.07256v2.

16. Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A Comprehensive Survey on Graph Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4–24.

17. Lipton, Z.C.; Berkowitz, J.; Elkan, C. A Critical Review of Recurrent Neural Networks for Sequence Learning. arXiv 2015, arXiv:1506.00019.

18. Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.-J.; Shamma, D.A.; et al. Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations. Int. J. Comput. Vis. 2017, 123, 32–73.

19. Zhang, Y.; Kang, B.; Hooi, B.; Yan, S.; Feng, J. Deep Long-Tailed Learning: A Survey. CoRR 2021.

20. Dong, X.; Gan, T.; Song, X.; Wu, J.; Cheng, Y.; Nie, L. Stacked Hybrid-Attention and Group Collaborative Learning for Unbiased Scene Graph Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 19427–19436.

21. Yan, S.; Shen, C.; Jin, Z.; Huang, J.; Jiang, R.; Chen, Y.; Hua, X.-S. PCPL: Predicate-Correlation Perception Learning for Unbiased Scene Graph Generation. In Proceedings of the 28th ACM International Conference on Multimedia, Virtual, 12–16 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 265–273.

22. Tang, K.; Niu, Y.; Huang, J.; Shi, J.; Zhang, H. Unbiased Scene Graph Generation from Biased Training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3716–3725.

23. Armeni, I.; He, Z.-Y.; Gwak, J.; Zamir, A.R.; Fischer, M.; Malik, J.; Savarese, S. 3D Scene Graph: A Structure for Unified Semantics, 3D Space, and Camera. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

24. Kim, U.-H.; Park, J.-M.; Song, T.; Kim, J.-H. 3-D Scene Graph: A Sparse and Semantic Representation of Physical Environments for Intelligent Agents. IEEE Trans. Cybern. 2020, 50, 4921–4933.

25. Rosinol, A.; Violette, A.; Abate, M.; Hughes, N.; Chang, Y.; Shi, J.; Gupta, A.; Carlone, L. Kimera: From SLAM to Spatial Perception with 3D Dynamic Scene Graphs. Int. J. Robot. Res. 2021, 40, 1510–1546.

26. Rosinol, A.; Gupta, A.; Abate, M.; Shi, J.; Carlone, L. 3D Dynamic Scene Graphs: Actionable Spatial Perception with Places, Objects, and Humans. arXiv 2020, arXiv:2002.06289.

27. Dhamo, H.; Manhardt, F.; Navab, N.; Tombari, F. Graph-to-3D: End-to-End Generation and Manipulation of 3D Scenes Using Scene Graphs. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 16352–16361.

28. Wang, K.; Lin, Y.-A.; Weissmann, B.; Savva, M.; Chang, A.X.; Ritchie, D. PlanIT: Planning and Instantiating Indoor Scenes with Relation Graph and Spatial Prior Networks. ACM Trans. Graph. 2019, 38, 1–15.

29. Jiao, Y.; Chen, S.; Jie, Z.; Chen, J.; Ma, L.; Jiang, Y.-G. MORE: Multi-Order RElation Mining for Dense Captioning in 3D Scenes. arXiv 2022, arXiv:2203.05203v2.

30. Huang, Z.; Yu, Y.; Xu, J.; Ni, F.; Le, X. PF-Net: Point Fractal Network for 3D Point Cloud Completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

31. Yu, L.; Li, X.; Fu, C.-W.; Cohen-Or, D.; Heng, P.-A. PU-Net: Point Cloud Upsampling Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

32. Chen, Y.; Rohrbach, M.; Yan, Z.; Yan, S.; Feng, J.; Kalantidis, Y. Graph-Based Global Reasoning Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

33. Wu, T.; Lu, Y.; Zhu, Y.; Zhang, C.; Wu, M.; Ma, Z.; Guo, G. GINet: Graph Interaction Network for Scene Parsing. In Proceedings of the Computer Vision – ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 34–51.

34. Liang, X.; Hu, Z.; Zhang, H.; Lin, L.; Xing, E.P. Symbolic Graph Reasoning Meets Convolutions. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31.

35. Li, Y.; Gupta, A. Beyond Grids: Learning Graph Representations for Visual Recognition. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31.

36. Gu, J.; Joty, S.; Cai, J.; Zhao, H.; Yang, X.; Wang, G. Unpaired Image Captioning via Scene Graph Alignments. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

37. Cho, K.; van Merrienboer, B.; Gülçehre, Ç.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations Using RNN Encoder-Decoder for Statistical Machine Translation. arXiv 2014, arXiv:1406.1078.

38. Gilmer, J.; Schoenholz, S.S.; Riley, P.F.; Vinyals, O.; Dahl, G.E. Neural Message Passing for Quantum Chemistry. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 11–15 August 2017; Precup, D., Teh, Y.W., Eds.; PMLR: London, UK, 2017; Volume 70, pp. 1263–1272.

39. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer Normalization. arXiv 2016, arXiv:1607.06450.

40. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Representations by Back-Propagating Errors. Nature 1986, 323, 533–536.

41. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

42. Li, B.; Yao, Y.; Tan, J.; Zhang, G.; Yu, F.; Lu, J.; Luo, Y. Equalized Focal Loss for Dense Long-Tailed Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 6990–6999.

43. Lu, C.; Krishna, R.; Bernstein, M.S.; Fei-Fei, L. Visual Relationship Detection with Language Priors; Springer: Berlin/Heidelberg, Germany, 2016.

44. Zellers, R.; Yatskar, M.; Thomson, S.; Choi, Y. Neural Motifs: Scene Graph Parsing with Global Context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

45. Tang, K.; Zhang, H.; Wu, B.; Luo, W.; Liu, W. Learning to Compose Dynamic Tree Structures for Visual Contexts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

| Models | R@20 | R@50 | R@100 | ngR@20 | ngR@50 | ngR@100 | mR@20 | mR@50 | mR@100 |
|---|---|---|---|---|---|---|---|---|---|
| KERN [8] | 20.3 ± 0.7 | 22.4 ± 0.8 | 22.7 ± 0.8 | 20.8 ± 0.7 | 24.7 ± 0.7 | 27.6 ± 0.5 | 9.5 ± 1.1 | 11.5 ± 1.2 | 11.9 ± 0.9 |
| SGPN [11] | 27.0 ± 0.1 | 28.8 ± 0.1 | 29.0 ± 0.1 | 28.2 ± 0.1 | 32.6 ± 0.1 | 35.3 ± 0.1 | 19.7 ± 0.1 | 22.6 ± 0.6 | 23.1 ± 0.5 |
| Schemata [9] | 27.4 ± 0.3 | 29.2 ± 0.4 | 29.4 ± 0.4 | 28.8 ± 0.1 | 33.5 ± 0.3 | 36.3 ± 0.2 | 23.8 ± 1.2 | 27.0 ± 0.2 | 27.2 ± 0.2 |
| KSGPN [14] | 28.5 ± 0.1 | 30.0 ± 0.1 | 30.1 ± 0.1 | 29.8 ± 0.2 | 34.3 ± 0.4 | 37.0 ± 0.2 | 24.4 ± 1.1 | 28.6 ± 0.8 | 28.8 ± 0.7 |
| KSGPN [14] (UME + GW) | 32.3 ± 0.1 | 33.7 ± 0.2 | 33.9 ± 0.1 | 34.1 ± 0.1 | 38.5 ± 0.3 | 41.1 ± 0.3 | 26.9 ± 0.6 | 29.5 ± 0.5 | 30.1 ± 0.3 |
| Ours (* UME) | 34.2 ± 0.2 | 35.6 ± 0.1 | 35.7 ± 0.2 | 36.4 ± 0.5 | 41.1 ± 0.4 | 44.1 ± 0.3 | 26.8 ± 0.5 | 29.3 ± 0.6 | 29.8 ± 0.2 |
| Ours | 35.8 ± 0.1 | 37.1 ± 0.2 | 37.2 ± 0.2 | 38.2 ± 0.2 | 42.6 ± 0.2 | 45.4 ± 0.1 | 31.1 ± 0.3 | 33.8 ± 0.5 | 33.9 ± 0.4 |

| Models | R@20 | R@50 | R@100 | ngR@20 | ngR@50 | ngR@100 | mR@20 | mR@50 | mR@100 |
|---|---|---|---|---|---|---|---|---|---|
| KERN [8] | 46.8 ± 0.4 | 55.7 ± 0.7 | 56.5 ± 0.7 | 48.3 ± 0.3 | 64.8 ± 0.6 | 77.2 ± 1.1 | 18.8 ± 0.7 | 25.6 ± 1.0 | 26.5 ± 0.9 |
| SGPN [11] | 51.9 ± 0.4 | 58.0 ± 0.5 | 58.5 ± 0.4 | 54.5 ± 0.6 | 70.1 ± 0.1 | 82.4 ± 0.2 | 32.1 ± 0.4 | 38.4 ± 0.6 | 38.9 ± 0.6 |
| Schemata [9] | 48.7 ± 0.4 | 58.2 ± 0.7 | 59.1 ± 0.6 | 49.6 ± 0.2 | 67.1 ± 0.3 | 80.2 ± 0.9 | 35.2 ± 0.8 | 42.6 ± 0.5 | 43.3 ± 0.5 |
| KSGPN [14] (* ME) | 52.9 ± 0.4 | 59.2 ± 0.4 | 59.8 ± 0.5 | 54.9 ± 0.4 | 71.6 ± 0.5 | 82.4 ± 0.8 | 35.3 ± 1.1 | 41.0 ± 0.7 | 41.5 ± 1.0 |
| KSGPN [14] | 59.3 ± 0.4 | 65.0 ± 0.4 | 65.3 ± 0.4 | 62.2 ± 0.5 | 78.4 ± 0.4 | 88.3 ± 0.2 | 56.6 ± 1.1 | 63.5 ± 0.1 | 63.8 ± 0.1 |
| KSGPN [14] (UME + GW) | 62.6 ± 0.3 | 65.7 ± 0.2 | 65.8 ± 0.2 | 67.3 ± 0.4 | 80.9 ± 0.1 | 88.8 ± 0.2 | 58.1 ± 0.5 | 64.2 ± 0.2 | 64.4 ± 0.2 |
| Ours (* UME) | 59.2 ± 0.2 | 62.8 ± 0.2 | 62.9 ± 0.3 | 63.8 ± 0.4 | 77.7 ± 0.4 | 88.7 ± 0.1 | 45.7 ± 0.8 | 49.4 ± 0.5 | 49.5 ± 0.4 |
| Ours | 63.8 ± 0.1 | 67.2 ± 0.5 | 67.3 ± 0.2 | 68.6 ± 0.2 | 83.0 ± 0.5 | 91.9 ± 0.2 | 60.9 ± 1.2 | 64.7 ± 0.9 | 65.0 ± 0.5 |

| Classification Tasks | Model | R@1 | R@5 | R@10 | mAcc |
|---|---|---|---|---|---|
| Node/obj | Obj-PointNet [14] | 51.7 | 78.4 | 86.4 | 17.2 |
| Node/obj | MP-DGCNN | 60.1 | 83.6 | 90.2 | 20.1 |

| Classification Tasks | Model | R@1 | R@3 | R@5 | mAcc |
|---|---|---|---|---|---|
| Edge/pred | Pred-PointNet [14] | 38.9 | 68.3 | 85.6 | 23.9 |
| Edge/pred | MP-DGCNN | 42.1 | 69.5 | 86.2 | 29.9 |

| Task | Model variant | R@20 | R@50 | R@100 | ngR@20 | ngR@50 | ngR@100 | mR@20 | mR@50 | mR@100 |
|---|---|---|---|---|---|---|---|---|---|---|
| SGCls | -ENA | 34.6 | 35.7 | 35.9 | 36.3 | 40.8 | 43.7 | 28.3 | 31.1 | 31.4 |
| SGCls | -MP | 32.7 | 34.1 | 34.2 | 34.6 | 39.0 | 41.6 | 27.9 | 30.2 | 30.4 |
| SGCls | -UME | 34.3 | 35.6 | 35.8 | 36.5 | 41.2 | 44.1 | 26.8 | 29.3 | 29.8 |
| SGCls | -GW | 34.8 | 36.6 | 36.7 | 36.7 | 41.0 | 43.8 | 25.5 | 28.7 | 29.3 |
| SGCls | Ours | 35.8 | 37.1 | 37.2 | 38.2 | 42.6 | 45.4 | 31.1 | 33.8 | 33.9 |
| PredCls | -ENA | 63.2 | 66.1 | 66.2 | 68.2 | 83.2 | 91.7 | 59.7 | 63.9 | 64.0 |
| PredCls | -MP | 63.1 | 66.5 | 66.7 | 68.3 | 81.0 | 89.8 | 59.4 | 63.8 | 63.9 |
| PredCls | -UME | 59.2 | 62.8 | 62.9 | 63.8 | 77.7 | 88.7 | 45.7 | 49.4 | 49.5 |
| PredCls | -GW | 65.0 | 68.7 | 68.9 | 69.4 | 83.2 | 91.0 | 56.2 | 60.3 | 60.4 |
| PredCls | Ours | 63.8 | 67.2 | 67.3 | 68.6 | 83.0 | 91.9 | 60.9 | 64.7 | 65.0 |

| Task | Dimension | R@20 | R@50 | R@100 | mR@20 | mR@50 | mR@100 |
|---|---|---|---|---|---|---|---|
| SGCls | xyz + normal | 36.2 | 38.0 | 38.1 | 27.0 | 30.4 | 31.2 |
| SGCls | xyz | 35.8 | 37.1 | 37.2 | 31.1 | 33.8 | 33.9 |
| PredCls | xyz + normal | 63.6 | 66.5 | 66.6 | 59.6 | 63.9 | 64.1 |
| PredCls | xyz | 63.8 | 67.2 | 67.3 | 59.9 | 64.7 | 65.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, C.; Li, H.; Xu, J.; Dong, B.; Wang, Y.; Zhou, X.; Zhao, S. Unbiased 3D Semantic Scene Graph Prediction in Point Cloud Using Deep Learning. Appl. Sci. 2023, 13, 5657. https://doi.org/10.3390/app13095657
