A New Technical Ear Training Game and Its Effect on Critical Listening Skills

Abstract

1. Introduction

1.1. Critical Listening in Various Fields

1.2. General Gain of Critical Listening Training

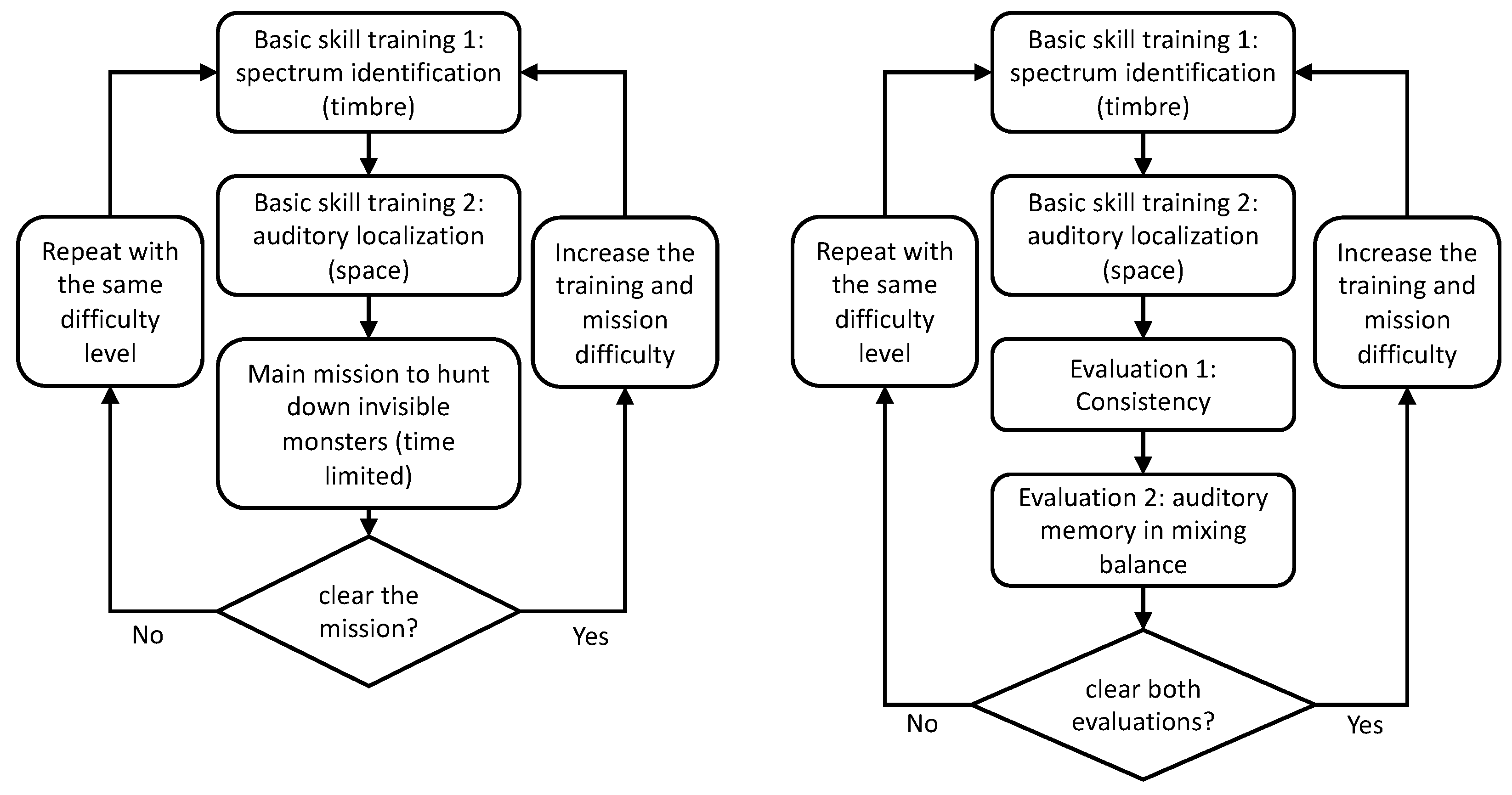

2. Design and Development of a New Technical Ear Training Game

Technical Details of Game Development

3. Details of Four Training Modules

3.1. Spectral Identification

3.2. Auditory Localization

3.3. Consistency

3.4. Memory Game of Mixing

4. Evaluation: Training Benefit in Speech Understanding

4.1. Methods

4.2. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| SiN | Speech understanding in noise |

| SRM | spatial release from masking |

Appendix A

| Level | Steps between Freqs | Min Freq | Max Freq | Boost to Target Freq | Total Questions |
|---|---|---|---|---|---|
| 1 | 1 Octave | 125 Hz | 8000 Hz | +9 dB | 25 |
| 2 | 1 Octave | 125 Hz | 8000 Hz | +9 dB | 25 |
| 3 | 1 Octave | 125 Hz | 8000 Hz | +6 dB | 25 |
| 4 | 1 Octave | 125 Hz | 8000 Hz | +6 dB | 25 |
| 5 | 1 Octave | 125 Hz | 8000 Hz | +3 dB | 25 |
| 6 | 1 Octave | 125 Hz | 8000 Hz | +3 dB | 25 |
| 7 | 1 Octave | 125 Hz | 8000 Hz | +1 dB | 25 |
| 8 | 1 Octave | 125 Hz | 8000 Hz | +1 dB | 25 |
| 9 | 1 Octave | 63 Hz | 16,000 Hz | +9 dB | 25 |
| 10 | 1 Octave | 63 Hz | 16,000 Hz | +6 dB | 25 |
| 11 | 1 Octave | 63 Hz | 16,000 Hz | +6 dB | 25 |
| 12 | 1 Octave | 63 Hz | 16,000 Hz | +3 dB | 25 |
| 13 | 1 Octave | 63 Hz | 16,000 Hz | +3 dB | 25 |
| 14 | 1 Octave | 63 Hz | 16,000 Hz | +1 dB | 25 |
| 15 | 1 Octave | 63 Hz | 16,000 Hz | +1 dB | 25 |
| 16 | 1 Octave | 63 Hz | 16,000 Hz | +1 dB | 25 |
| 17 | 1/3 Octave | 63 Hz | 8000 Hz | +9 dB | 25 |
| 18 | 1/3 Octave | 63 Hz | 8000 Hz | +9 dB | 25 |
| 19 | 1/3 Octave | 63 Hz | 8000 Hz | +6 dB | 25 |
| 20 | 1/3 Octave | 63 Hz | 8000 Hz | +3 dB | 25 |
| 21 | 1/3 Octave | 63 Hz | 16,000 Hz | +9 dB | 25 |
| 22 | 1/3 Octave | 63 Hz | 16,000 Hz | +6 dB | 25 |
| 23 | 1/3 Octave | 63 Hz | 16,000 Hz | +3 dB | 25 |
| 24 | 1/3 Octave | 63 Hz | 16,000 Hz | +3 dB | 50 |
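The level parameters in the table above can be read as a small configuration: a frequency range, a step size, and a boost in dB. The following Python sketch shows one way such parameters might be turned into a list of candidate centre frequencies and a linear gain. It is an illustration only, not the game's implementation. The function names `db_to_gain` and `candidate_frequencies`, the 1 kHz anchor, the exact base-2 spacing, and the 2% tolerance are all assumptions that the table does not state.

```python
def db_to_gain(boost_db):
    """Convert a level change in dB to a linear amplitude factor."""
    return 10 ** (boost_db / 20)


def candidate_frequencies(min_hz, max_hz, steps_per_octave, tolerance=0.02):
    """List band centres spaced 1/steps_per_octave octave apart.

    Assumption: centres are anchored at 1 kHz with exact base-2 spacing,
    so the nominal 63 Hz band comes out as 62.5 Hz. The tolerance widens
    the range slightly so nominal limits still include their exact centres.
    """
    lo = min_hz * (1 - tolerance)
    hi = max_hz * (1 + tolerance)
    centres = []
    # Scan ten octaves either side of 1 kHz, which covers the audio band.
    for k in range(-10 * steps_per_octave, 10 * steps_per_octave + 1):
        f = 1000 * 2 ** (k / steps_per_octave)
        if lo <= f <= hi:
            centres.append(round(f, 1))
    return centres


if __name__ == "__main__":
    # Levels 1-8 in the table: 1-octave steps, 125 Hz to 8000 Hz.
    print(candidate_frequencies(125, 8000, 1))
    # Linear gain for the +6 dB boost used at levels 3 and 4.
    print(round(db_to_gain(6), 3))
```

Under these assumptions, the 1-octave range of levels 1 to 8 yields seven candidate centres (125 Hz to 8000 Hz), and a +6 dB boost corresponds to roughly doubling the amplitude.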

| Level | Number of Noises | Target Gain | Target Delay (Seconds) | Moving Speed | Height Angle | Target Guess Radius | Time Limit (Seconds) | Number of Tries | Goal Distance |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 0 dB | 0 | None | 0° | 1.25 | ∞ | 4 | 3 |
| 2 | 0 | 0 dB | 0 | None | 0° | 1.25 | ∞ | 3 | 3 |
| 3 | 1 | 0 dB | 1 | None | 0° | 1.25 | ∞ | 4 | 3 |
| 4 | 1 | 0 dB | 1 | None | 0° | 1.25 | ∞ | 3 | 3 |
| 5 | 1 | 0 dB | 1 | Base | 0° | 1.25 | 30 | 4 | 3 |
| 6 | 1 | 0 dB | 1 | Base | 0° | 1.25 | 30 | 3 | 3 |
| 7 | 2 | −6 dB | 1 | ×2 | 15° | 1 | ∞ | 4 | 6 |
| 8 | 2 | −6 dB | 1 | ×2 | 15° | 1 | ∞ | 3 | 6 |
| 9 | 2 | −6 dB | 2 | ×2 | 15° | 1 | 30 | 4 | 6 |
| 10 | 2 | −6 dB | 2 | ×2 | 15° | 1 | 30 | 3 | 6 |
| 11 | 2 | −6 dB | 2 | ×2 | 15° | 1 | ∞ | 4 | 6 |
| 12 | 2 | −6 dB | 2 | ×2 | 15° | 1 | ∞ | 3 | 6 |
| 13 | 3 | −12 dB | 3 | ×4 | 30° | 1 | 30 | 4 | 6 |
| 14 | 3 | −12 dB | 3 | ×4 | 30° | 1 | 30 | 3 | 6 |
| 15 | 3 | −12 dB | 3 | ×2 | 15° | 0.75 | ∞ | 4 | 9 |
| 16 | 3 | −12 dB | 3 | ×2 | 15° | 0.75 | ∞ | 3 | 9 |
| 17 | 2 | −12 dB | 2 | ×4 | 30° | 0.75 | 30 | 4 | 9 |
| 18 | 2 | −12 dB | 2 | ×4 | 30° | 0.75 | 30 | 3 | 9 |
| 19 | 3 | −12 dB | 3 | ×4 | 30° | 0.75 | ∞ | 4 | 9 |
| 20 | 3 | −12 dB | 3 | ×4 | 30° | 0.75 | ∞ | 3 | 9 |
| 21 | 3 | −12 dB | 3 | ×4 | 30° | 0.75 | 30 | 4 | 9 |
| 22 | 3 | −12 dB | 3 | ×4 | 30° | 0.75 | 30 | 4 | 9 |
| 23 | 3 | −12 dB | 3 | ×4 | 30° | 0.75 | 30 | 4 | 9 |
| 24 | 3 | −12 dB | 3 | ×4 | 30° | 0.75 | 30 | 3 | 9 |
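The "Target Guess Radius" and "Number of Tries" columns above suggest a simple acceptance rule. The sketch below is a hypothetical reading of that rule, not the game's actual logic. It assumes that a guess counts as a hit when its Euclidean distance to the target is at most the radius, that positions share the table's (unstated) distance unit, and that a round ends after the listed number of tries. The names `guess_is_hit` and `play_round` are illustrative.

```python
import math


def guess_is_hit(guess, target, radius):
    """Return True when the guess lies within `radius` of the target.

    Assumption: positions are coordinate tuples in the same unit as the
    radius, and the hit test is a plain Euclidean distance check.
    """
    return math.dist(guess, target) <= radius


def play_round(guesses, target, radius, max_tries):
    """Return the 1-based try on which the target was found, else None.

    Only the first `max_tries` guesses are considered (assumed rule).
    """
    for attempt, guess in enumerate(guesses[:max_tries], start=1):
        if guess_is_hit(guess, target, radius):
            return attempt
    return None


if __name__ == "__main__":
    # Radius 0.75 and three tries, as listed for level 16.
    target = (2.5, 1.0)
    guesses = [(0.0, 0.0), (2.0, 1.0)]
    print(play_round(guesses, target, radius=0.75, max_tries=3))
```

With these inputs the first guess misses and the second lands 0.5 units from the target, so the round is resolved on the second try. Shrinking the radius from 1.25 to 0.75 across levels tightens this check.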

| Level | Panning Knob Step Size | Volume Slider Step Size | Exposure Time (Seconds) | Total Mixing Time (Seconds) | Number of Sound Sources to Mix |
|---|---|---|---|---|---|
| 1 | 5 | 5 | 60 | ∞ | 1 |
| 2 | 7 | 9 | 60 | ∞ | 1 |
| 3 | 13 | 9 | 60 | ∞ | 1 |
| 4 | 13 | 9 | 45 | ∞ | 1 |
| 5 | 13 | 9 | 30 | ∞ | 1 |
| 6 | 5 | 5 | 60 | ∞ | 2 |
| 7 | 7 | 9 | 60 | ∞ | 2 |
| 8 | 13 | 9 | 60 | ∞ | 2 |
| 9 | 13 | 9 | 45 | ∞ | 2 |
| 10 | 13 | 9 | 60 | 120 | 2 |
| 11 | 5 | 5 | 60 | ∞ | 3 |
| 12 | 5 | 5 | 60 | ∞ | 3 |
| 13 | 7 | 5 | 60 | ∞ | 3 |
| 14 | 5 | 9 | 60 | ∞ | 3 |
| 15 | 7 | 9 | 60 | ∞ | 3 |
| 16 | 7 | 9 | 45 | ∞ | 3 |
| 17 | 7 | 9 | 30 | ∞ | 3 |
| 18 | 7 | 9 | 60 | 120 | 3 |
| 19 | 13 | 9 | 60 | ∞ | 3 |
| 20 | 13 | 9 | 60 | ∞ | 3 |
| 21 | 13 | 9 | 30 | ∞ | 3 |
| 22 | 13 | 9 | 60 | 120 | 3 |
| 23 | 13 | 25 | 60 | ∞ | 2 |
| 24 | 13 | 25 | 60 | ∞ | 2 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, S.; Cozzarin, J. A New Technical Ear Training Game and Its Effect on Critical Listening Skills. Appl. Sci. 2023, 13, 5357. https://doi.org/10.3390/app13095357
