Abstract

The main purpose of this research was to introduce a classification method that combines a rule induction procedure with the Takagi–Sugeno inference model. This proposal is a continuation of our previous research, in which a classification process based on interval type-2 fuzzy rule induction was introduced. The research goal was to verify whether the Mamdani fuzzy inference used in our previous research could be replaced with the first-order Takagi–Sugeno inference system. In both cases, a new concept of a fuzzy information system was defined in order to induce fuzzy rules and to deal with interval type-2 fuzzy sets. Additionally, the introduced rule induction assumes an optimization procedure concerning the footprint of uncertainty of the considered type-2 fuzzy sets. A key point of the proposed concept is the generalization of the fuzzy information system's attribute information to handle the uncertainty that occurs in real data. The classification method was tested on several classification benchmark data sets, and promising results were achieved. For the data sets Breast Cancer Data, Breast Cancer Wisconsin, Data Banknote Authentication, HTRU 2 and Ionosphere, the following F-scores were achieved, respectively: 97.6%, 96%, 100%, 87.8%, and 89.4%. The results show that the Takagi–Sugeno model can be applied in the classification concept. The model parameters were optimized using an evolutionary strategy.

1. Introduction

Classification is one of the most important and challenging machine learning tasks. As the main goal is to investigate similarities between groups of objects, the classification accuracy strongly depends on the initial data sets used for training. Many issues must be handled, such as unbalanced or biased training data, as well as incomplete or vague information, which is often the case for real data. Much research has addressed this problem, especially using fuzzy classification and its extension to type-2 fuzzy sets. For example, in [1], a new fuzzy reasoning method for an interval type-2 fuzzy classification system including cluster-based rules was introduced. The authors propose to combine the introduced reasoning procedure with a new possibility-based fuzzy measure to handle the uncertainty of cluster centers in an interval type-2 fuzzy rule-based classification system. In [2], a robust sparse representation for the classification of medical images is proposed, based on adaptive type-2 fuzzy dictionary learning. Remote-sensing image classification techniques using type-2 fuzzy sets were introduced in [3,4,5]; for example, in [5], a novel robust interval type-2 possibilistic fuzzy clustering model for the classification of complex remote sensing land cover was presented.

There are many fuzzy control applications based on the use of type-2 fuzzy sets. Recently, a type-2 fuzzy logic system for ergonomic control of indoor environments was proposed [6]. The system calculates the effective working time and the time-dependent change in carbon dioxide levels and aims to evaluate ergonomic comfort conditions. The authors reported better system performance when using type-2 fuzzy sets for ergonomic control in fuzzy environments. An important medical application was proposed in [7], where an interval type-2 fuzzy stochastic modeling and control strategy was introduced to account for the uncertainties of the COVID-19 pandemic in order to control the number of infected people. A common strategy for fuzzy model parameter tuning is the use of evolutionary algorithms. In [8], the authors applied a genetic algorithm to tune the parameters of an interval type-2 fuzzy proportional–derivative controller, in order to track the trajectory of a snake robot in the presence of system uncertainties. In [9,10], slime mold and particle swarm optimization algorithms, respectively, were used for parameter tuning of interval type-2 fuzzy controllers. In [11], the design of membership functions for interval type-2 fuzzy tracking controllers was optimized using a metaheuristic algorithm.

In general, type-2 fuzzy sets provide better generalizations [12,13], as the main assumption is the additional fuzzification of the membership values of the type-1 fuzzy sets involved. Therefore, type-2 fuzzy sets can better handle imprecise information [14,15].

Additionally, to deal with information issues, data discovery is used to induce knowledge. Information systems and rough sets, originally introduced by Pawlak [16,17,18], are often used to represent knowledge. Basic mathematical concepts, using rough sets theory to induce fuzzy rules, have been worked on for a long time. In [19], fuzzy learning methods for the automatic generation of membership functions and fuzzy if-then rules from training examples were introduced. Fuzzy decision rules induction was presented in [20] using the tolerance-based rough sets model. Rough sets were used to reduce the dimensionality of complex datasets as preprocessing for fuzzy rule induction [21].

The above guided us to investigate the possible combination of fuzzy reasoning with Pawlak’s information systems. We have already introduced our concept of a fuzzy information system [22]. This research is a continuation of our previous research, by evolving and combining our concept with the Takagi–Sugeno fuzzy model [23]. Fuzzy information systems introduce a relation between attributes and fuzzy sets [24]. Applications of fuzzy information systems can be found in the fields of decision-making [25,26] and rule extraction [26,27]. Recently, new decision-making concepts were introduced in [28] by combining regret theory with three-way decisions in fuzzy incomplete information systems, or in [29], by multi-attribute predictive analysis using fuzzy rough sets.

In this research, a fuzzy information system was applied to induce rules with respect to the classification attribute values. Next, a transformation into fuzzy rules was proposed, and finally, the fuzzy sets used were expanded into type-2 fuzzy sets. To provide classification, the Takagi–Sugeno model was applied in order to simplify our previous concept, which was related to the Mamdani fuzzy model [30]. The Takagi–Sugeno model does not require rule consequents defined as fuzzy sets, but it introduces additional parameters. For that reason, an optimization procedure was applied to determine the best fuzzy classifier for the benchmark data considered. The experiments were conducted for binary classification problems defined with well-known benchmark data.

Therefore, our research goal was to provide a classification method by replacing the Mamdani model used in our previous research with the first-order Takagi–Sugeno fuzzy inference. This simplified the induction of type-2 fuzzy rules in the classification process, as fuzzy sets were not required to be defined as rule consequents. The main contribution of this work is the proposal of a fuzzy information system used to induce type-2 fuzzy rules, combined with the Takagi–Sugeno inference to provide classification.

The rest of the paper is organized as follows: in Section 2, the benchmark data used and some theoretical background are introduced; in Section 3, the introduced classification procedure is explained; in Section 4, the classification results are presented; and finally, Section 5 and Section 6 present the discussion, possible further developments, and conclusions.

2. Material and Methods

2.1. Materials

In this research, classification experiments were conducted on well-known benchmark data [31] for binary classification. These are the same benchmark data as those used in our previous study [22], with details given in Table 1.

Table 1.

The benchmark data used.

2.2. Methods

2.2.1. Type-1 and Type-2 Fuzzy Sets

A type-1 fuzzy set F consists of a non-empty domain X and a function $\mu_F$: X → [0, 1], called a membership function [32]. Considering a continuous membership function, a fuzzy set of type-1 is defined as in Equation (1):

$$F = \int_{x \in X} \mu_F(x) / x \tag{1}$$

The above integral denotes the collection of all points x ∈ X with associated membership grade $\mu_F(x)$ ∈ [0, 1]. The function values define the grade of membership of the elements of X to the fuzzy set F. Membership functions are assumed to describe imprecise or vague information.

The type-1 fuzzy sets concept was expanded by fuzzifying the membership function values themselves and type-2 fuzzy sets were introduced. A type-2 fuzzy set, denoted as , is defined in Equation (2) [5]:

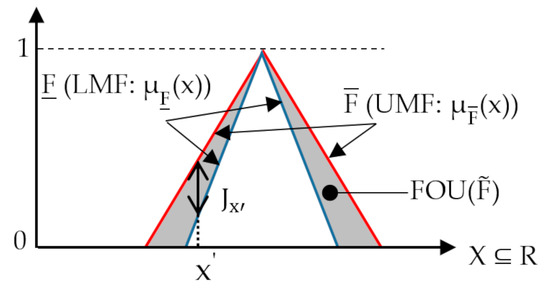

where denotes the union over all admissible x and u. The most widely used special cases of type-2 fuzzy sets, mostly because of their easy interpretation, are the interval type-2 (IT2) fuzzy sets [5]. Uncertainty about is handled by the so-called footprint of uncertainty (FOU) of , as shown in Equation (3):

The area of a FOU is directly related to the uncertainty that is conveyed by an interval type-2 fuzzy set, and what follows, a FOU with more area is more uncertain than the one with less area. The lower membership function (LMF) and upper membership function (UMF) of are two type-1 membership functions and that bound the FOU, which are used to describe Jx, see Equation (4):

which leads to Equation (5):

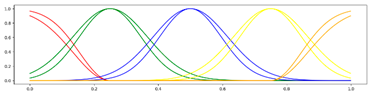

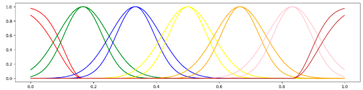

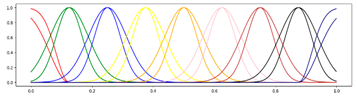

Figure 1 illustrates the graphical interpretation of the above definitions.

Figure 1.

An interval type-2 fuzzy set determined by the membership functions of two type-1 fuzzy sets: the lower membership function (LMF) and the upper membership function (UMF).

2.2.2. Takagi–Sugeno Type-2 Fuzzy Inference

The major difference between the type-1 and type-2 Takagi–Sugeno fuzzy models lies in the incorporation of type-2 fuzzy sets in the inference process. This requires a corresponding fuzzy rule interpretation and a type-reduction mechanism.

A type-1 fuzzy rule in terms of the Takagi–Sugeno model takes the following form:

If (x1 is X1) o (x2 is X2) o … o (xI is XI) Then consequent,

where Xi (i = 1, …, I) are type-1 fuzzy sets defined over the corresponding domains. The operator ‘o’ stands for either ⊗ or ⊕, where ⊗, ⊕ : [0, 1]² → [0, 1] are the t- and s-norms [33], respectively. These binary operators are applied in fuzzy logic as generalizations of the conjunction and disjunction operators of Boolean logic. Zadeh’s t- and s-norms, defined by the min and max operators, respectively, were applied. The rule consequents are multivariable functions, defined as combinations of polynomials. The most popular solution, i.e., the first-order Takagi–Sugeno model, assumes combinations of linear functions. Therefore, any rule consequent takes the form:

Consequent: a combination of linear functions of the inputs xi, where ak and bk are the parameters of the corresponding linear functions, defined with respect to each xi.

Note that in the Takagi–Sugeno model there is no need to define the consequents as fuzzy sets, and therefore no need to define any membership function in the consequents, as is required in, for example, the Mamdani model [30].

The final output of the system is the weighted average over all rule outputs, as defined in Equation (6):

$$y = \frac{\sum_{k=1}^{N} w_k f_k}{\sum_{k=1}^{N} w_k} \tag{6}$$

where N is the number of rules, wk is the rule-firing value derived from the kth rule antecedent and fk is the value of the kth rule consequent.
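The weighted average of rule outputs described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the input vector, the membership grades and the consequent parameters are all hypothetical, and the consequent is assumed here to be a sum of terms a_i * x_i + b_i, which is one possible reading of "combinations of linear functions".

```python
def firing_strength(memberships):
    """Zadeh t-norm (min) over the antecedent membership grades of one rule."""
    return min(memberships)


def linear_consequent(x, a, b):
    """Assumed first-order consequent: sum of a_i * x_i + b_i over all inputs."""
    return sum(ai * xi + bi for ai, xi, bi in zip(a, x, b))


def ts_output(firing, consequents):
    """Weighted average of rule consequents w_k * f_k divided by the sum of w_k."""
    total = sum(firing)
    if total == 0:
        raise ValueError("no rule fired for this input")
    return sum(w * f for w, f in zip(firing, consequents)) / total


# Hypothetical two-rule system evaluated for one input vector.
x = (0.5, 2.0)
w = [firing_strength((0.8, 0.4)), firing_strength((0.2, 0.6))]
f = [
    linear_consequent(x, a=(1.0, 0.0), b=(0.0, 0.0)),
    linear_consequent(x, a=(0.0, 1.0), b=(0.0, 1.0)),
]
y = ts_output(w, f)
```

With these made-up values the first rule fires at 0.4 with consequent 0.5 and the second at 0.2 with consequent 3.0, so the crisp output lies between the two consequents and closer to the first.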

Considering type-2 fuzzy sets, for an input vector x = (x1, …, xI), typical computations of an IT2 fuzzy system consist of the following steps:

- (1) Compute the membership interval of xi for each IT2 fuzzy set $\tilde{X}_i^n$: $[\underline{\mu}_{\tilde{X}_i^n}(x_i), \overline{\mu}_{\tilde{X}_i^n}(x_i)]$, i = 1,…, I; n = 1,…, N (N is the number of rules),

- (2) Compute the firing interval of the nth rule: $[\underline{f}^n, \overline{f}^n]$,

- (3) Use type reduction to combine the firing intervals with the corresponding rule consequents.

The most popular type reducer is the center-of-set (COS) type reducer [34], computed with the Karnik–Mendel algorithm [34,35] or its modifications [36,37].

In order to apply the Karnik–Mendel algorithm, a single type-2 fuzzy set must be derived from the rule outputs. For a Takagi–Sugeno type-2 fuzzy system, the aggregate set is generated using the following procedure:

- Sort the rule outputs from all rules into ascending order,

- For each output, define the UMF value using the maximum firing range of the considered rule,

- For each output, define the LMF value using the minimum firing range of the considered rule.

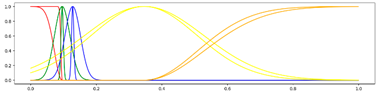

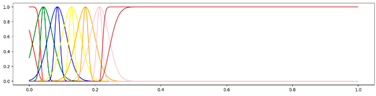

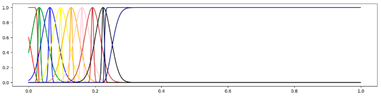

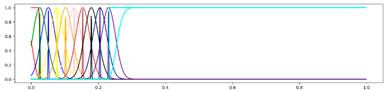

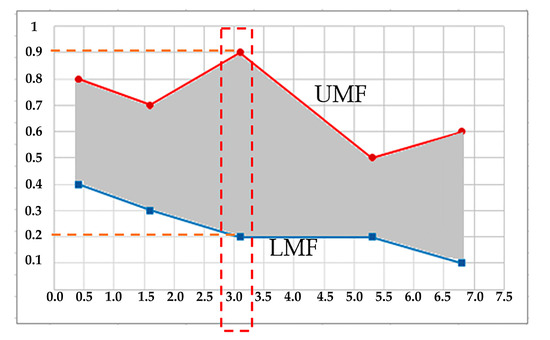

As an example, consider the data shown in Table 2. Let us assume five rules with output values and firing range limits. For these data, the aggregate type-2 fuzzy set shown in Figure 2 will be generated.

Table 2.

Example aggregate set generation.

Figure 2.

Exemplary output type-2 fuzzy set for an input vector. To make it easier to understand the structure of the aggregate set, the values for Rule 5 are marked.

Once the above aggregate type-2 fuzzy output set is defined, the Karnik–Mendel algorithm [34,35] can be applied in order to derive the final system output value. As the complete Takagi–Sugeno type-2 fuzzy inference procedure is well described in the literature, we omit further details.
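To make the aggregate set and the type reduction step more concrete, the following Python sketch builds the sorted aggregate set and computes the type-reduced interval. It is not the Karnik–Mendel iteration itself: as a simpler stand-in, it enumerates every possible switch point, which reaches the same interval end points because the extreme weighted averages are attained at switch-point configurations. The three rules and their firing intervals are hypothetical values and do not reproduce Table 2.

```python
def aggregate_set(outputs, firing_intervals):
    """Sort rule outputs ascending and attach (LMF, UMF) = (min, max) firing values."""
    rows = sorted(zip(outputs, firing_intervals), key=lambda row: row[0])
    return [(y, min(fi), max(fi)) for y, fi in rows]


def weighted_mean(weights, agg):
    """Weighted mean of the sorted outputs, or None if all weights are zero."""
    total = sum(weights)
    if total == 0:
        return None
    return sum(w * y for w, (y, _, _) in zip(weights, agg)) / total


def type_reduce(agg):
    """Return (y_left, y_right) by exhaustive search over all switch points."""
    left, right = [], []
    for k in range(len(agg) + 1):
        # Left end point: upper weights on the k smallest outputs, lower on the rest.
        w_left = [up if i < k else lo for i, (_, lo, up) in enumerate(agg)]
        # Right end point: lower weights on the k smallest outputs, upper on the rest.
        w_right = [lo if i < k else up for i, (_, lo, up) in enumerate(agg)]
        for weights, bucket in ((w_left, left), (w_right, right)):
            value = weighted_mean(weights, agg)
            if value is not None:
                bucket.append(value)
    if not left or not right:
        raise ValueError("no rule fired for this input")
    return min(left), max(right)


# Hypothetical rule outputs and firing intervals (unsorted on purpose).
outputs = [3.0, 1.0, 2.0]
firing = [(0.2, 0.6), (0.5, 0.9), (0.1, 0.4)]
agg = aggregate_set(outputs, firing)
y_left, y_right = type_reduce(agg)
crisp = (y_left + y_right) / 2.0  # defuzzified system output
```

The crisp output is taken as the midpoint of the type-reduced interval, which is the usual defuzzification step after center-of-set type reduction.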

3. Fuzzy Information System with Takagi–Sugeno Reasoning

3.1. Type-1 Fuzzy Information System and Fuzzy Rule Induction

This research is based on our interpretation of a fuzzy information system, introduced in [22]. We assume that the values of the system information function are linguistic variables, which correspond to fuzzy sets. This is an information granulation proposal. A classic information system [16] is defined by the elements (U, A, V, f), where U is a universe, A is a set of attributes, V represents the attribute domains: V =df ⋃a∈A Va, with a nonempty domain Va of the a-th attribute (a ∈ A), and f is the information function f: U × A → V such that ∀u ∈ U, ∀a ∈ A: f(u, a) ∈ Va. The indiscernibility binary relation (IND), defined over U (IND ⊆ U²), plays an important role in the theory. It is an equivalence relation, defined in Equation (7):

IND(B) =df {(ui, uj) ∈ U × U: ∀a ∈ B f(ui, a) = f(uj, a)}, ui, uj ∈ U, B ⊆ A (7)

The above binary relation is used to define the lower and upper approximations of any subset of U. Any such pair is defined as a rough set [17,18].

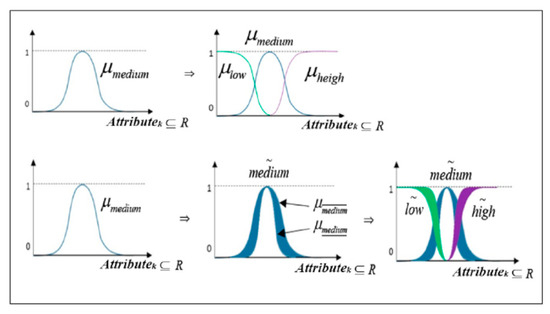

In accordance with our interpretation of a fuzzy information system, three fuzzy subsets are defined over any attribute domain Va: {low, medium, high}. The information function values are replaced with the corresponding fuzzy sets, with respect to the maximum membership value: max{µlow(f(object, attribute)), µmedium(f(object, attribute)), µhigh(f(object, attribute))}. The membership function of the medium fuzzy set assumes a Gaussian distribution of the attribute data for any a ∈ A.

Therefore, the membership functions for the considered fuzzy sets can be easily defined, see Equation (8):

The above attribute fuzzification introduces a very simple initialization of the information function as well as a generalization of its values. For example, let us consider the matrix below (Table 3), which represents a fuzzy information system: U = {object1, object2, object3, object4}, A = {attribute1, attribute2, attribute3}, V = V1 ∪ V2 ∪ V3, and we assume for each set Vi a Gaussian distribution in order to obtain the fuzzy sets {lowi, mediumi, highi}.

Table 3.

Fuzzy information system example.

So, for example, f(object2, attribute2) is introduced as high because the following inequality is satisfied: µhigh(f(object2, attribute2)) ≥ max{µlow(f(object2, attribute2)), µmedium(f(object2, attribute2))}, and f(object1, attribute3) is introduced as low, as µlow(f(object1, attribute3)) ≥ max{µmedium(f(object1, attribute3)), µhigh(f(object1, attribute3))}.
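The labeling by maximum membership can be illustrated with a short Python sketch. The exact membership functions of Equation (8) are not reproduced here, so the shapes below are an assumption made only for illustration: medium is a Gaussian fitted to the attribute data, low is its complement to the left of the mean, and high is its complement to the right of the mean. The attribute values are hypothetical.

```python
import math
from statistics import mean, pstdev


def memberships(x, mu, sigma):
    """Membership grades of x in the assumed {low, medium, high} fuzzy sets."""
    medium = math.exp(-0.5 * ((x - mu) / sigma) ** 2)
    # Assumed shapes: complements of the Gaussian on each side of the mean.
    low = 1.0 - medium if x < mu else 0.0
    high = 1.0 - medium if x > mu else 0.0
    return {"low": low, "medium": medium, "high": high}


def fuzzy_label(x, mu, sigma):
    """Linguistic label with the maximum membership grade."""
    grades = memberships(x, mu, sigma)
    return max(grades, key=grades.get)


# Hypothetical values of a single attribute over seven objects.
values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
mu, sigma = mean(values), pstdev(values)
labels = [fuzzy_label(v, mu, sigma) for v in values]
```

Under these assumptions only the two extreme values are labeled low and high, while values within roughly 1.18 standard deviations of the mean are labeled medium, since that is where the Gaussian grade stays above 0.5.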

The corresponding partition (P) with respect to IND has two equivalence classes:

P/IND{attribute1, attribute2, attribute3} = {{object1, object4}, {object2, object3}}
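The partition induced by IND can be computed by grouping objects with identical attribute labels. In the sketch below, the table is a hypothetical stand-in for Table 3: only f(object2, attribute2) = high, f(object1, attribute3) = low and the resulting two equivalence classes come from the text, while the remaining labels are invented so that the example is complete.

```python
from collections import defaultdict

# Hypothetical fuzzy information system; most labels are assumptions.
table = {
    "object1": {"attribute1": "low", "attribute2": "medium", "attribute3": "low"},
    "object2": {"attribute1": "medium", "attribute2": "high", "attribute3": "medium"},
    "object3": {"attribute1": "medium", "attribute2": "high", "attribute3": "medium"},
    "object4": {"attribute1": "low", "attribute2": "medium", "attribute3": "low"},
}


def ind_partition(table, attributes):
    """Group objects that agree on every attribute in `attributes`."""
    classes = defaultdict(list)
    for obj, row in table.items():
        key = tuple(row[a] for a in attributes)
        classes[key].append(obj)
    return list(classes.values())


partition = ind_partition(table, ["attribute1", "attribute2", "attribute3"])
```

Restricting the attribute list to a subset B of A gives the partition for IND(B), which can only merge classes, never split them.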

Next, if a decision attribute (A*) is added to the set of attributes, A = A ∪ A*, the information system can be represented by a decision table. With this extension, the Skowron and Suraj rule induction method [38,39] can be applied, and the rules induced for each decision are then transformed into fuzzy rules.

For example, for the pairs (object1, attribute1): low, (object2, attribute2): high, and (object3, attribute1): medium, a rule for decision D is induced as follows:

- decision D: (f(object1, attribute1) ∧ f(object2, attribute2)) ∨ f(object3, attribute1),

- the above rule can be transformed into the following fuzzy rule:

- If ((f(object1, attribute1) is low) ⊗ (f(object2, attribute2) is high)) ⊕ (f(object3, attribute1) is medium) Then D.

An explanation of the rule induction procedure and the generation of fuzzy rules directly from a decision table can be found in our previous research in more detail ([22], Sections 2.2.3 and 2.2.4).
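As a small illustration of how such a fuzzy rule premise is evaluated with Zadeh's min and max norms, consider the Python sketch below. The membership grades are hypothetical. The interval variant relies on the fact that min and max are monotone, so the lower and upper bounds of a firing interval can be computed separately from the lower and upper membership grades.

```python
def t_norm(a, b):
    """Zadeh t-norm (conjunction)."""
    return min(a, b)


def s_norm(a, b):
    """Zadeh s-norm (disjunction)."""
    return max(a, b)


def premise(mu_low, mu_high, mu_medium):
    """Firing strength of: (… is low ⊗ … is high) ⊕ (… is medium)."""
    return s_norm(t_norm(mu_low, mu_high), mu_medium)


def premise_interval(low_iv, high_iv, medium_iv):
    """Firing interval for interval-valued grades given as (lower, upper) pairs."""
    lower = premise(low_iv[0], high_iv[0], medium_iv[0])
    upper = premise(low_iv[1], high_iv[1], medium_iv[1])
    return lower, upper


# Hypothetical membership grades.
strength = premise(0.7, 0.4, 0.3)
interval = premise_interval((0.6, 0.8), (0.3, 0.5), (0.1, 0.2))
```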

The most important issues here are: (1) the possibility of attribute value generalization by applying fuzzy sets directly as values in the decision table, so that the information function values are related directly to the membership functions of fuzzy sets; and (2) the rule induction procedure and the transformation of the induced rules into type-1 fuzzy rules.

3.2. Involving Type-2 Fuzzy Sets

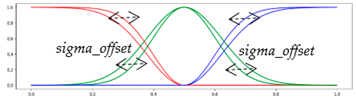

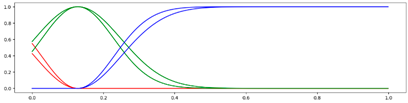

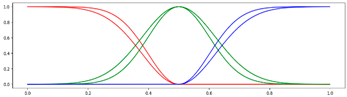

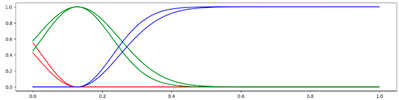

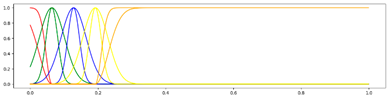

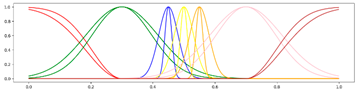

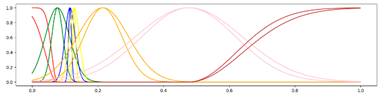

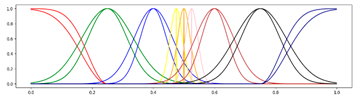

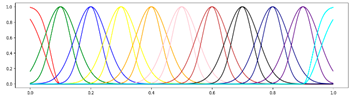

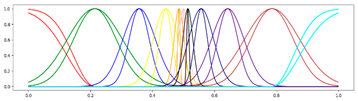

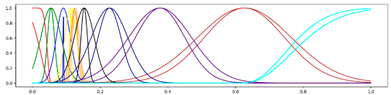

The idea of applying type-2 fuzzy sets is very simple and related to our previous research [22]. It is enough to change the values of the standard deviation of the Gaussian-type membership function of the fuzzy set medium to define the bounds of the FOU for the corresponding type-2 fuzzy set $\widetilde{medium}$. In this way, the assumed fuzzy sets {low, medium, high} can be replaced with the type-2 fuzzy sets {$\widetilde{low}$, $\widetilde{medium}$, $\widetilde{high}$}. Figure 3 clarifies the issue. This transformation is done after rule induction and transformation into type-1 fuzzy rules.

Figure 3.

The type-1 and type-2 fuzzification process.
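One way to picture this construction is a Gaussian with an uncertain standard deviation. The sketch below assumes a symmetric offset, i.e., the lower membership function uses sigma minus an offset and the upper one uses sigma plus the same offset; the source only states that the standard deviation is changed to define the FOU bounds, so the symmetric form and the function names are assumptions.

```python
import math


def gaussian(x, mu, sigma):
    """Type-1 Gaussian membership function."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2)


def it2_medium(x, mu, sigma, sigma_offset):
    """(LMF, UMF) grades of an interval type-2 'medium' set at x.

    Assumes 0 < sigma_offset < sigma and a symmetric change of the
    standard deviation around the type-1 value.
    """
    if not 0 < sigma_offset < sigma:
        raise ValueError("sigma_offset must lie strictly between 0 and sigma")
    lower = gaussian(x, mu, sigma - sigma_offset)  # narrower curve bounds from below
    upper = gaussian(x, mu, sigma + sigma_offset)  # wider curve bounds from above
    return lower, upper


# Hypothetical parameters: the FOU is empty at the mean and widens away from it.
at_mean = it2_medium(0.0, mu=0.0, sigma=1.0, sigma_offset=0.2)
off_mean = it2_medium(1.0, mu=0.0, sigma=1.0, sigma_offset=0.2)
type1 = gaussian(1.0, 0.0, 1.0)
```

A larger offset gives a wider FOU and therefore a more uncertain set, which is why the offset is a natural hyperparameter to tune.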

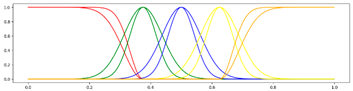

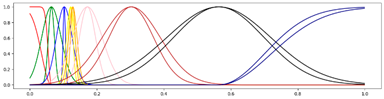

Nevertheless, the assumption of a Gaussian distribution for each attribute is hard to fulfill. For that reason, we experimented with the fuzzy rule premises in terms of the standard deviation values that define the corresponding FOU (a parameter named sigma_offset) and with different configurations of membership functions. That is, we extend the assumption of using only the three type-2 fuzzy sets {$\widetilde{low}$, $\widetilde{medium}$, $\widetilde{high}$}. As in our previous research, the configurations assumed are shown in Table 4 below. They concern the number of Gaussians in the rule premises, the applied sigma_offset, and the expected value for the medium membership function. For more details concerning the fuzzy sets defined in the fuzzy rule premises, see ([22], Section 2.2.6).

Table 4.

The IT2 fuzzy sets used (presentation of the concept with respect to a chosen attribute of the blood transfusion dataset, assuming normalization as well).

3.3. Takagi–Sugeno Reasoning with Optimization

Consequently, for each rule premise, configurations with different sigma_offset values are considered. Additionally, in terms of first-order Takagi–Sugeno systems, two parameters defining the corresponding linear function for each fuzzy set in the rule premises should be calculated. The assumed varieties of type-2 fuzzy sets, along with the sigma_offset value and the linear function parameters, define an optimization problem. A finite set of membership functions is considered, generated with respect to the medium membership function. The assumed numbers of membership functions are {3, 5, 7, 9, 11}. The membership function configuration and the sigma_offset hyperparameters were optimized with a grid search in a k-fold cross-validation manner. The linear function parameters for each attribute were optimized using the Covariance Matrix Adaptation Evolutionary Strategy (CMA-ES) [40]. As each cross-validation step defines a new training set, the induced rules also differ; therefore, CMA-ES was applied at each cross-validation step to optimize the linear function parameters.

Additionally, as possible data pre-processing in the conducted experiments, a dimension reduction procedure using the well-known principal component analysis (PCA) method and Random Oversampling (ROS) [41,42,43] to handle class imbalance were applied. The oversampling was important in terms of rule induction, as the inconsistency elimination step ([22], Section 2.2.3, Algorithm 1) strongly favors classes with a large presence of data.
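Random oversampling itself is simple enough to sketch with the Python standard library. The version below duplicates randomly chosen minority-class samples until every class is as frequent as the largest one; the function name, the fixed seed and the toy data are assumptions for illustration, not the implementation used in the experiments.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate random minority-class samples until all classes are balanced."""
    rng = random.Random(seed)  # fixed seed only to keep the sketch reproducible
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for cls, count in counts.items():
        pool = [s for s, l in zip(samples, labels) if l == cls]
        for _ in range(target - count):
            out_samples.append(rng.choice(pool))
            out_labels.append(cls)
    return out_samples, out_labels


# Hypothetical imbalanced data: five negatives, two positives.
X = [[0.1], [0.2], [0.3], [0.4], [0.5], [0.9], [1.0]]
y = [0, 0, 0, 0, 0, 1, 1]
X_bal, y_bal = random_oversample(X, y)
```

Because the added samples are exact copies, strong imbalance leads to many duplicates in the decision table, which matches the redundancy concern raised for highly imbalanced data.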

The complete set of hyperparameters to be optimized is given in Table 5, while Table 6 presents the hyperparameter values used in the CMA-ES procedure itself. The number of folds for each dataset is given in Table 7.

Table 5.

Tuned hyperparameters of sets of membership functions.

Table 6.

CMA-ES hyperparameters.

Table 7.

Number of folds applied in the cross-validation process.

The experimental procedure pipeline in this research was defined as follows:

- (1) Split a considered dataset into k folds.

- (2) For each set of hyperparameters:

  - (a) For each cross-validation step:

    - (i) Set one fold as held-out for validation, and use the rest for training.

    - (ii) Induce the knowledge base using the training set.

    - (iii) Infer the crisp value for each sample in the training set with the Takagi–Sugeno model.

    - (iv) Classify the sample with the threshold function set at 0. Treat the sample as negative if the crisp value is lower than 0.

    - (v) Calculate the F1 metric.

    - (vi) Optimize the parameters of the linear functions with CMA-ES, defining the F1 metric as the fitness function.

    - (vii) Evaluate the model on the validation set when the fitness function is optimized or the maximum number of evaluations is reached. Otherwise return to (iii).

- (3) Choose the set of hyperparameters that maximizes the mean value of the F1 metric over the validation sets.
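The bookkeeping parts of this pipeline (fold splitting, thresholding at 0, and the F1 metric) can be sketched as follows. Rule induction, Takagi–Sugeno inference and the CMA-ES optimization are deliberately left out; the helper names, the interleaved fold assignment and the crisp values are assumptions made for illustration.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    folds = [list(range(i, n_samples, k)) for i in range(k)]  # interleaved split
    for held_out in range(k):
        train = [i for f in range(k) if f != held_out for i in folds[f]]
        yield train, folds[held_out]


def classify(crisp_value, threshold=0.0):
    """Negative (0) if the crisp output is lower than the threshold, else positive (1)."""
    return 0 if crisp_value < threshold else 1


def f1_score(y_true, y_pred):
    """F1 metric for the positive class; returns 0.0 when it is undefined."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical crisp outputs of an inference step and their true classes.
crisp = [-1.2, 0.3, 0.0, -0.1, 2.0]
y_true = [0, 1, 0, 0, 1]
y_pred = [classify(c) for c in crisp]
score = f1_score(y_true, y_pred)
splits = list(k_fold_indices(6, 3))
```

In a full implementation, a function like `f1_score` evaluated on the training fold would serve as the fitness value handed to the evolutionary optimizer, and its mean over the validation folds would drive the grid search.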

In order to evaluate the model for each dataset, we conducted the following procedure:

- (1) Choose the best hyperparameter set for a dataset.

- (2) Optimize the fitness function on the training set.

- (3) Choose the best parameters of the linear functions.

- (4) Evaluate the model on a test set.

The procedure is repeated n times (n = 10) to reduce the risk of CMA-ES settling in a local extremum, and the best result is chosen.

4. Binary Classification Results

In Table 8 the best binary classification results achieved for the best hyperparameters setup are presented.

Table 8.

Classification results.

In Table 9, we present the best classification results achieved in comparison with our previous research [22] and other classifiers as well.

Table 9.

Comparison with other methods, concerning the F1 score measure.

5. Discussion

This research is a continuation of a previous one, published in [22]. Both papers present our newly introduced definition of a fuzzy information system, which has the following advantages:

- The information function values are interpreted as fuzzy sets, labeled with corresponding linguistic variables. This makes it possible to generalize information: we do not consider numerical values for pairs (object, attribute), but general descriptions such as ‘low’, ‘medium’, and ‘high’.

- The decision table used is generated automatically for a considered data set, as the value ‘medium’ is assumed to follow the Gaussian distribution of the data for each attribute. Then, the sets ‘low’ and ‘high’ are easily defined using the ‘medium’ membership function. Next, a corresponding label (identifying the corresponding fuzzy set) is given for a pair (object, attribute), by using the maximum membership value.

Additionally, we propose an easy transformation in order to change the applied type-1 fuzzy sets into type-2 fuzzy sets.

Therefore, by defining such a decision table, the rule induction procedure described in [38,39] is applied and next, type-2 fuzzy rules are derived. What is more, different fuzzification strategies are possible (see Table 4).

In this research, the goal was to incorporate our fuzzy information system concept with the Takagi–Sugeno model, as it does not require the model outputs to be defined as fuzzy sets. Such an achievement would create new possibilities, using the advantages of the Takagi–Sugeno model. To incorporate the model, we had to optimize the model parameters with respect to the corresponding benchmark data. We have applied the CMA-ES (Covariance Matrix Adaptation Evolutionary Strategy) for this purpose. We conducted experiments with the same binary classification problems and the same fuzzification experiments, as presented in [22], in order to compare the results.

While performing experiments, we have discovered further promising features and potential disadvantages of the method proposed. The classification process is able to fit training data, but sometimes has issues with generalization. Applying PCA allows us to minimize this issue, making the method proposed more robust. Additionally, performing optimization with an evolutionary strategy allows the model to find very well-fitting decision boundaries. Therefore, one might achieve even better performance by applying additional regularization methods.

To solve the problem of imbalanced data, Random Oversampling was used, which was not beneficial in a few cases. If the imbalance is very high, ROS may produce too much redundancy; therefore, the information system may produce biased rules, ignoring important information contained in the data. In such a scenario, other sampling techniques should be applied instead.

Regarding the attribute fuzzification procedure, only Gaussians were assumed as membership functions. We suppose there is room for improvement by adjusting the membership functions to the attributes’ characteristics. Additionally, models other than the first-order Takagi–Sugeno model could be applied.

Despite such constraints, good classification results for several datasets were achieved. We believe that the application of the Takagi–Sugeno model in our approach will support the solving of multi-class classification problems. This is because, in the model used, there is no need to define decision classes, contrary to the Mamdani fuzzy model.

Summarizing, a new classification concept was proposed in this research with the following main advantages:

- Defining a decision table with fuzzy values. The fuzzification is provided in an automatic manner directly from a data set.

- Using the rule induction method based on the information system concept, which has a solid mathematical background. Each rule is related to a corresponding class, regarding the classification problem considered.

- Transformation of the induced rules into type-2 fuzzy rules.

- Application of the Takagi–Sugeno model in the classification process. Therefore, there is no need to define the fuzzy rule consequents as fuzzy sets.

6. Conclusions

In this research, a novel classification method was introduced, which combines our previously introduced concept of a fuzzy information system with the first-order Takagi–Sugeno model. A fuzzification procedure with membership function optimization and a corresponding induction of type-2 fuzzy rules were proposed as well. The research was a continuation of our previous approach. Here, the aim was to replace the fuzzy model previously applied with the Takagi–Sugeno model. The model parameters were optimized with the CMA-ES evolutionary strategy with respect to the classification problem considered. The achieved classification results show that the Takagi–Sugeno-type inference is fully implementable with our type-2 fuzzy rule induction concept. Therefore, there is no need to define the fuzzy rule consequents as fuzzy sets, which simplifies the whole classification method and potentially supports the solving of multi-class classification problems. Nevertheless, some improvements could be applied, such as better feature selection, detection of outliers, or data augmentation, in order to improve the classification results. Limitations of the proposed method might occur if the generalization applied in the decision table is too strong. This means that if there is a low number of fuzzy sets and attributes, the induced rules will not differentiate the decision classes appropriately. Additionally, categorical variables are not appropriate due to the required data fuzzification.

As further research, we consider the possibility of using representation learning in order to generate attribute values for a considered problem and to fuzzify them. Therefore, we intend to involve a deep learning phase in the proposed method. We consider the proposed concept a general approach, which is applicable to real-world classification problems and meant for data containing vague and incomplete information.

Author Contributions

Conceptualization, M.T.; Methodology, M.T.; Software, A.B.C. and A.R.C.; Validation, A.B.C. and A.R.C.; Formal analysis, M.T.; Investigation, M.T., A.B.C. and A.R.C.; Data curation, A.B.C. and A.R.C.; Writing—original draft, M.T.; Writing—review & editing, A.B.C. and A.R.C.; Supervision, M.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the statutory funds of the Department of Artificial Intelligence, Wroclaw University of Science and Technology.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The presented experiments were conducted on publicly available benchmark data.

Acknowledgments

All calculations were performed using computational resources provided by WCSS (Wroclaw Centre for Networking and Supercomputing). Additionally, we wish to acknowledge the help provided by Konrad Karanowski, Aleksy Walczak, Mateusz Grzesiuk, Jan Wielgus, Artur Zawisza and Piotr Majchrowski, for their collaboration in the implementation of a software library dedicated to fuzzy techniques, which we applied in our experiments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rostam Niakan Kalhori, M.; FazelZarandi, M.H. A new interval type-2 fuzzy reasoning method for classification systems based on normal forms of a possibility-based fuzzy measure. Inf. Sci. 2021, 581, 567–586.

- Ghasemi, M.; Kelarestaghi, M.; Eshghi, F.; Sharifi, A. T2-FDL: A robust sparse representation method using adaptive type-2 fuzzy dictionary learning for medical image classification. Expert Syst. Appl. 2020, 158, 113500.

- Xing, H.; He, H.; Hu, D.; Jiang, T.; Yu, X. An interval Type-2 fuzzy sets generation method for remote sensing imagery classification. Comput. Geosci. 2019, 133, 104287.

- Xu, J.; Feng, G.; Zhao, T.; Sun, X.; Zhu, M. Remote sensing image classification based on semi-supervised adaptive interval type-2 fuzzy c-means algorithm. Comput. Geosci. 2019, 131, 132–143.

- Wu, C.; Guo, X. Adaptive enhanced interval type-2 possibilistic fuzzy local information clustering with dual-distance for land cover classification. Eng. Appl. Artif. Intell. 2023, 119, 105806.

- Erozan, İ.; Özel, E.; Erozan, D. A two-stage system proposal based on a type-2 fuzzy logic system for ergonomic control of classrooms and offices. Eng. Appl. Artif. Intell. 2023, 120, 105854.

- Rafiei, H.; Salehi, A.; Baghbani, F.; Parsa, P.; Akbarzadeh-T, M.-R. Interval type-2 Fuzzy control and stochastic modeling of COVID-19 spread based on vaccination and social distancing rates. Comput. Methods Programs Biomed. 2023, 232, 107443.

- Bhandari, G.; Raj, R.; Pathak, P.M.; Yang, J.-M. Robust control of a planar snake robot based on interval type-2 Takagi–Sugeno fuzzy control using genetic algorithm. Eng. Appl. Artif. Intell. 2022, 116, 105437.

- Precup, R.E.; David, R.C.; Roman, R.C.; Szedlak-Stinean, A.I.; Petriu, E.M. Optimal tuning of interval type-2 fuzzy controllers for nonlinear servo systems using Slime Mould Algorithm. Int. J. Syst. Sci. 2021, 1–16.

- Pozna, C.; Precup, R.E.; Horvath, E.; Petriu, E.M. Hybrid Particle filter-particle swarm optimization algorithm and application to fuzzy controlled servo systems. IEEE Trans. Fuzzy Syst. 2022, 30, 4286–4297.

- Cuevas, F.; Castillo, O.; Cortes, P. Optimal Setting of Membership Functions for Interval Type-2 Fuzzy Tracking Controllers Using a Shark Smell Metaheuristic Algorithm. Int. J. Fuzzy Syst. 2022, 24, 799–822.

- Mendel, J.M.; John, R.I. Type-2 fuzzy sets made simple. IEEE Trans. Fuzzy Syst. 2002, 10, 117–127.

- Mendel, J.M. Type-2 Fuzzy Sets and Systems: How to Learn about Them. IEEE SMC eNewsletter 2009, 27, 1–7.

- Hagras, H. Type-2 FLCs: A new generation of fuzzy controllers. IEEE Comput. Intell. Mag. 2007, 2, 30–43.

- Castillo, O.; Melin, P. Type-2 Fuzzy Logic Theory and Applications; Springer: Berlin, Germany, 2008.

- Pawlak, Z. Information systems—Theoretical foundations. Inf. Syst. 1981, 6, 205–218.

- Pawlak, Z. Rough Sets; Basic notions, Report no 431; Institute of Computer Science, Polish Academy of Sciences: Warsaw, Poland, 1981.

- Pawlak, Z. Rough Sets: Theoretical Aspects of Reasoning about Data, System Theory, Knowledge Engineering and Problem Solving; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1991; Volume 9.

- Hong, T.-P.; Chen, J.-B. Building a concise decision table for fuzzy rule induction. In Proceedings of the 1998 IEEE International Conference on Fuzzy Systems Proceedings. IEEE World Congress on Computational Intelligence (Cat. No.98CH36228), Anchorage, AK, USA, 4–9 May 1998; Volume 2, pp. 997–1002.

- Drwal, G.; Sikora, M. Induction of fuzzy decision rules based upon rough sets theory. In Proceedings of the 2004 IEEE International Conference on Fuzzy Systems (IEEE Cat. No.04CH37542), Budapest, Hungary, 25–29 July 2004; Volume 3, pp. 1391–1395.

- Shen, Q.; Chouchoulas, A. Data-driven fuzzy rule induction and its application to systems monitoring. In Proceedings of the FUZZ-IEEE’99. 1999 IEEE International Fuzzy Systems. Conference Proceedings (Cat. No.99CH36315), Seoul, Republic of Korea, 22–25 August 1999; Volume 2, pp. 928–933.

- Tabakov, M.; Chlopowiec, A.; Chlopowiec, A.; Dlubak, A. Classification with Fuzzification Optimization Combining Fuzzy Information Systems and Type-2 Fuzzy Inference. Appl. Sci. 2021, 11, 3484.

- Takagi, T.; Sugeno, M. Fuzzy identification of systems and its applications to modeling and control. IEEE Trans. Syst. Man Cybern. 1985, 15, 116–132.

- Wang, C.; Chen, D.; Hu, Q. Fuzzy information systems and their homomorphisms. Fuzzy Sets Syst. 2014, 249, 128–138.

- Dubois, D. The role of fuzzy sets in decision sciences: Old techniques and new directions. Fuzzy Sets Syst. 2011, 184, 3–28.

- Lee, M.-C.; Chang, T. Rule Extraction Based on Rough Fuzzy Sets in Fuzzy Information Systems. Trans. Comput. Collect. Intell. III 2011, 6560, 115–127.

- Cheruku, R.; Edla, D.R.; Kuppili, V.; Dharavath, R. RST-Bat-Miner: A fuzzy rule miner integrating rough set feature selection and bat optimization for detection of diabetes disease. Appl. Soft Comput. 2018, 67, 764–780.

- Wang, W.; Zhan, J.; Zhang, C.; Herrera-Viedma, E.; Kou, G. A regret-theory-based three-way decision method with a priori probability tolerance dominance relation in fuzzy incomplete information systems. Inf. Fusion 2023, 89, 382–396.

- Kang, Y.; Yu, B.; Cai, M. Multi-attribute predictive analysis based on attribute-oriented fuzzy rough sets in fuzzy information systems. Inf. Sci. 2022, 608, 931–949.

- Mamdani, E.H.; Assilian, S. An Experiment in Linguistic Synthesis with a Fuzzy Logic Controller. Int. J. Man-Mach. Stud. 1975, 7, 1–13.

- Dua, D.; Graff, C. UCI Machine Learning Repository; University of California, School of Information and Computer Science: Irvine, CA, USA, 2019; Available online: http://archive.ics.uci.edu/ml (accessed on 31 December 2020).

- Zadeh, L. Fuzzy sets. Inf. Control 1965, 8, 338–353.

- Bronstein, I.N.; Semendjajew, K.A.; Musiol, G.; Mühlig, H. Taschenbuch der Mathematik; Verlag Harri Deutsch: Frankfurt, Germany, 2001; p. 1258.

- Mendel, J.M. Uncertain Rule-Based Fuzzy Logic Systems: Introduction and New Directions; Prentice-Hall: Upper Saddle River, NJ, USA, 2001.

- Karnik, N.N.; Mendel, J.M.; Liang, Q. Type-2 fuzzy logic systems. IEEE Trans. Fuzzy Syst. 1999, 7, 643–658.

- Wu, D.; Mendel, J.M. Enhanced Karnik–Mendel algorithms. IEEE Trans. Fuzzy Syst. 2009, 17, 923–934.

- Wu, D.; Nie, M. Comparison and practical implementation of type reduction algorithms for type-2 fuzzy sets and systems. In Proceedings of the 2011 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE 2011), Taipei, Taiwan, 27–30 June 2011; pp. 2131–2138.

- Skowron, A.; Suraj, Z. A Rough Set Approach to Real-Time State Identification for Decision Making; Institute of Computer Science Report 18/93; Warsaw University of Technology: Warsaw, Poland, 1993; p. 27.

- Skowron, A.; Suraj, Z. A Rough Set Approach to Real-Time State Identification. Bull. Eur. Assoc. Comput. Sci. 1993, 50, 264.

- Hansen, N.; Ostermeier, A. Completely derandomized self-adaptation in evolution strategies. Evol. Comput. 2001, 9, 159–195.

- Van Hulse, J.; Khoshgoftaar, T.; Napolitano, A. Experimental perspectives on learning from imbalanced data. In Proceedings of the Twenty-Fourth International Conference on Machine Learning (ICML 2007), Corvallis, OR, USA, 20–24 June 2007.

- Shelke, M.; Deshmukh, D.P.R.; Shandilya, V. A review on imbalanced data handling using undersampling and oversampling technique. Comput. Sci. 2017, 3, 444–449.

- Mohammed, R.; Rawashdeh, J.; Abdullah, M. Machine learning with oversampling and undersampling techniques: Overview study and experimental results. In Proceedings of the 2020 11th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 7–9 April 2020.

- Peng, L.; Chen, W.; Zhou, W.; Li, F.; Yang, J.; Zhang, J. An immune-inspired semi-supervised algorithm for breast cancer diagnosis. Comput. Methods Programs Biomed. 2016, 134, 259–265.

- Utomo, C.P.; Kardiana, A.; Yuliwulandari, R. Breast Cancer Diagnosis using Artificial Neural Networks with Extreme Learning Techniques. Int. J. Adv. Res. Artif. Intell. IJARAI 2014, 3, 10–14.

- Akay, M.F. Support vector machines combined with feature selection for breast cancer diagnosis. Expert Syst. Appl. 2009, 36, 3240–3247.

- Kumar, G.R.; Nagamani, K. Banknote authentication system utilizing deep neural network with PCA and LDA machine learning techniques. Int. J. Recent Sci. Res. 2018, 9, 30036–30038.

- Kumar, C.; Dudyala, A.K. Bank note authentication using decision tree rules and machine learning techniques. In Proceedings of the 2015 International Conference on Advances in Computer Engineering and Applications, Ghaziabad, India, 19–20 March 2015; pp. 310–314.

- Jaiswal, R.; Jaiswal, S. Banknote Authentication using Random Forest Classifier. Int. J. Digit. Appl. Contemp. Res. 2019, 7, 1–4.

- Sarma, S.S. Bank Note Authentication: A Genetic Algorithm Supported Neural based Approach. Int. J. Adv. Res. Comput. Sci. 2016, 7, 97–102.

- Lyon, R.J.; Stappers, B.W.; Cooper, S.; Brooke, J.M.; Knowles, J.D. Fifty Years of Pulsar Candidate Selection: From simple filters to a new principled real-time classification approach. Mon. Not. R. Astron. Soc. 2016, 459, 1104–1123.

- Wang, Y.; Pan, Z.; Zheng, J.; Qian, L.; Li, M. A hybrid ensemble method for pulsar candidate classification. Astrophys. Space Sci. 2019, 8, 1–13.

- Sağlam, F.; Yıldırım, E.; Cengiz, M.A. Clustered Bayesian classification for within-class separation. Expert Syst. Appl. 2022, 208, 118152.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).