Multimodal Fusion with Dual-Attention Based on Textual Double-Embedding Networks for Rumor Detection

Abstract

1. Introduction

- Challenge 1: How can the internal dependencies of text and the dependencies between the local and global features of images be used effectively to help construct multimodal rumor features?

- Challenge 2: How can the dense interaction information within and between modalities be fully exploited to obtain a finer-grained understanding and improve rumor detection performance?

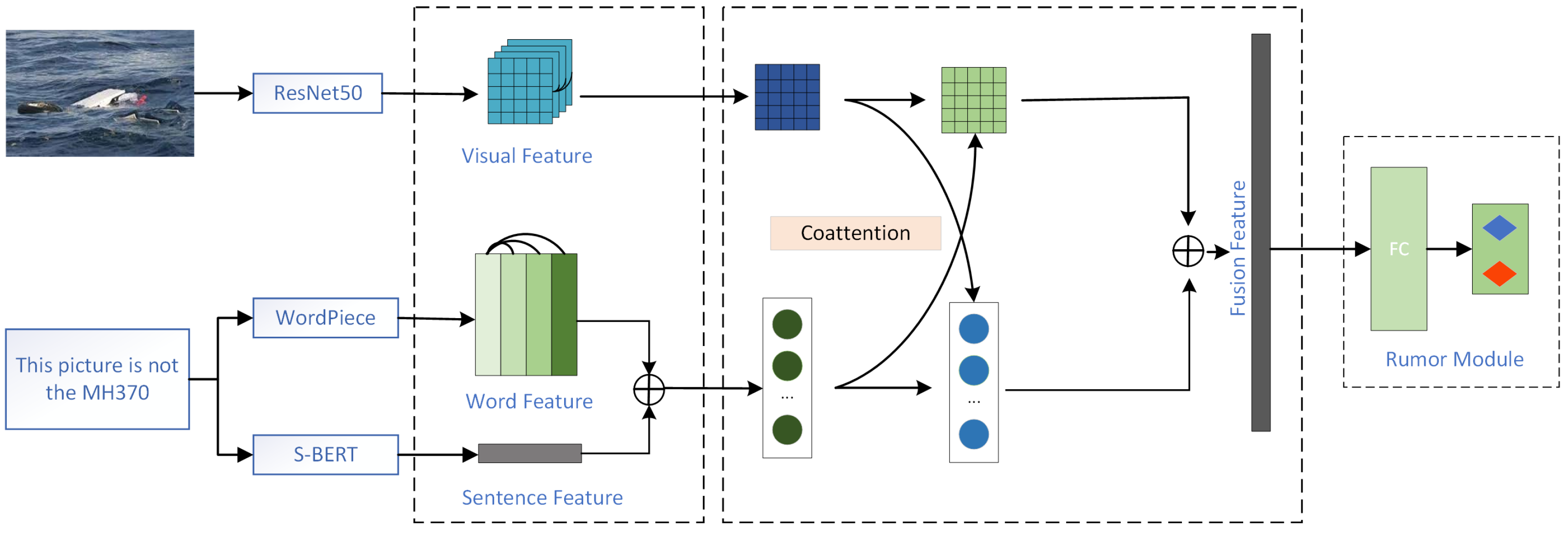

- A multimodal fusion neural network (TDEDA) is proposed for rumor detection. TDEDA explores the internal dependencies between local text features by building dual-embedding representations of words and sentences of text and captures the global feature representations of images through visual self-attention, helping to build and improve the feature representations of the text and image.

- Building on the enhanced text and visual feature representations, a dual coattention mechanism enhances the information exchanged between modalities and discovers the extensive interaction information between words and visual objects.

- Extensive experiments on multimodal rumor detection datasets collected from Weibo and Twitter validate the effectiveness of this method. The results show that TDEDA outperforms the baseline models in multimodal rumor detection.
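The two attention mechanisms named in these contributions can be illustrated with a minimal NumPy sketch. It assumes standard scaled dot-product attention without learned projection matrices; the function names, feature dimensions, and random inputs are hypothetical and are not taken from the TDEDA implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(queries, keys, values):
    """Scaled dot-product attention: every query row mixes the value rows."""
    d = queries.shape[-1]
    weights = softmax(queries @ keys.T / np.sqrt(d), axis=-1)
    return weights @ values, weights

def self_attention(x):
    """Intra-modality attention: a sequence attends to itself."""
    out, _ = attention(x, x, x)
    return out

def dual_coattention(text, image):
    """Inter-modality attention in both directions (assumed form)."""
    text_enh, _ = attention(text, image, image)   # words attend to regions
    image_enh, _ = attention(image, text, text)   # regions attend to words
    return text_enh, image_enh

rng = np.random.default_rng(0)
text = rng.normal(size=(6, 8))    # 6 hypothetical word vectors, dimension 8
image = rng.normal(size=(4, 8))   # 4 hypothetical region vectors, dimension 8

text_enh, image_enh = dual_coattention(self_attention(text), self_attention(image))
print(text_enh.shape, image_enh.shape)
```

Each output keeps the length of its own modality (six word rows, four region rows) while every row becomes a weighted mixture of the other modality's features, which is the sense in which coattention lets words and visual objects inform each other.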

2. Related Work

2.1. Rumor Detection

2.2. Multimodal Feature Fusion

3. Multimodal Fusion Network Based on Attention Mechanism

- A feature extractor extracts latent features from multimodal inputs consisting of text and the attached images;

- A multimodal fusion module conveys information between text and visual objects, enhances the information within each modality and the interaction between modalities, and fuses the features of the two modalities;

- A rumor detector uses the deeply fused features to determine whether a tweet is a rumor or not.
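The data flow through these three components can be sketched as follows. This is only a structural illustration under strong simplifying assumptions: the extractor returns random vectors, the fusion step is plain mean pooling plus concatenation rather than the attention-based fusion of Section 3.2, and the detector is an untrained logistic unit. All function names and sizes are hypothetical.

```python
import numpy as np

def extract_features(tokens, num_regions, dim=8, seed=0):
    """Stand-in extractor: one random vector per token and per image region."""
    rng = np.random.default_rng(seed)
    text = rng.normal(size=(len(tokens), dim))
    visual = rng.normal(size=(num_regions, dim))
    return text, visual

def fuse(text, visual):
    """Stand-in fusion: mean-pool each modality, then concatenate (assumption)."""
    return np.concatenate([text.mean(axis=0), visual.mean(axis=0)])

def detect(fused, weights, bias=0.0):
    """Stand-in detector: logistic score in (0, 1), higher means more rumor-like."""
    logit = float(fused @ weights + bias)
    return 1.0 / (1.0 + np.exp(-logit))

tokens = ["breaking", "news", "example"]          # hypothetical post text
text, visual = extract_features(tokens, num_regions=4)
fused = fuse(text, visual)

weights = np.random.default_rng(1).normal(size=fused.shape[0])  # untrained
score = detect(fused, weights)
label = "rumor" if score >= 0.5 else "nonrumor"
print(fused.shape, label)
```

Keeping the three stages as separate functions mirrors the modular description above: any stage can be swapped for a stronger one without touching the others.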

3.1. Feature Extractor

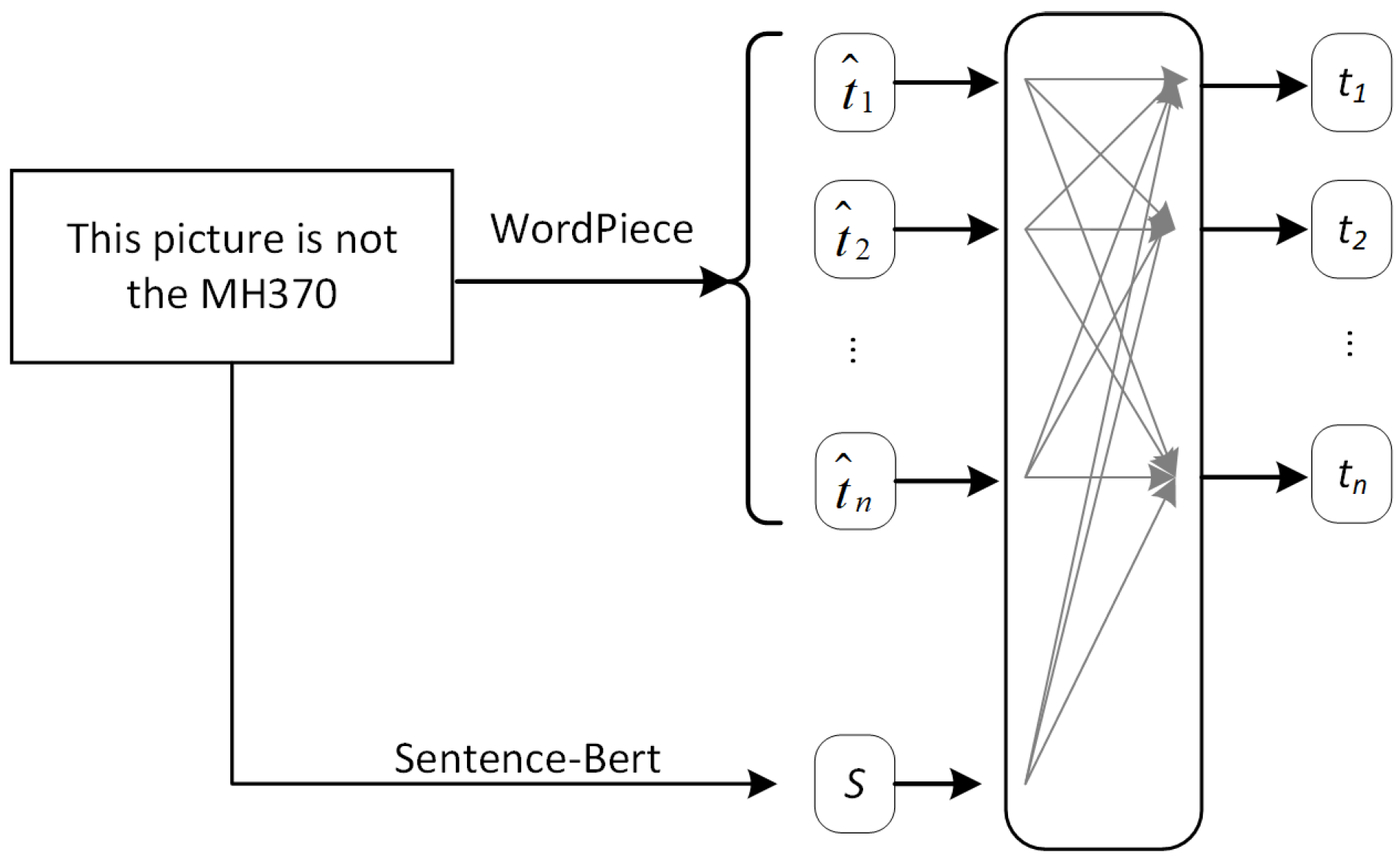

3.1.1. Text Feature Extractor

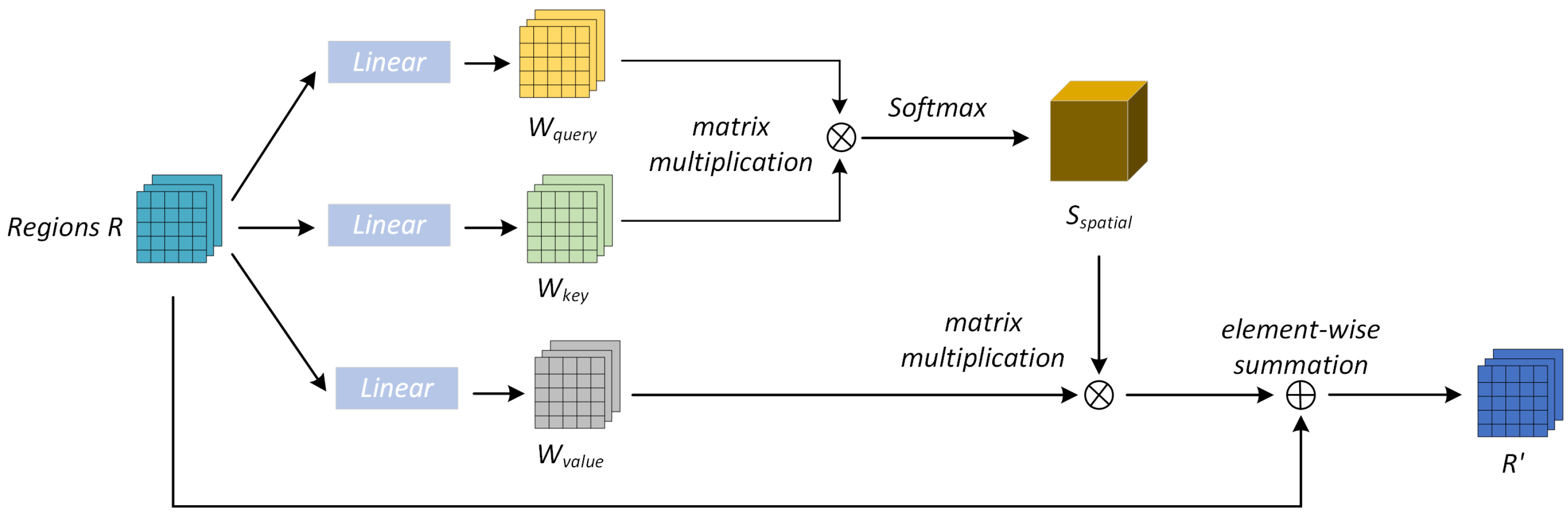

3.1.2. Visual Feature Extractor

3.2. Multimodal Fusion Module

3.2.1. Textual Double-Embedding and Self-Attention Module

3.2.2. Image Self-Attention Module

3.2.3. Feature Fusion Module for Text–Visual Coattention

3.3. Rumor Detector

4. Experiment

4.1. Dataset

4.1.1. Weibo Dataset

4.1.2. Twitter Dataset

4.2. Experimental Model

4.2.1. Single-Modal Models

- SVM-TS [34]: SVM-TS uses heuristic rules and an SVM-based linear classifier for rumor detection.

- GRU [16]: GRU detects rumors with a multilayer GRU network, treating the content of posts as a variable-length sequence.

- CNN [18]: CNN applies fixed-length windows to posts and extracts features with a convolutional neural network.

- TextGCN [35]: The text graph convolutional network (TextGCN) models the entire corpus as a heterogeneous graph and feeds it into a graph convolutional network to obtain the semantic features of the text.

4.2.2. Multimodal Models

- att-RNN [2]: att-RNN uses an LSTM (long short-term memory) model to extract text and social context features and a pretrained VGG-19 model to extract visual features. An attention mechanism captures the association between the text/social context features and the visual features, and the result is used for rumor classification.

- EANN [4]: The text features and image features are extracted with TextCNN and VGG-19, respectively, and the features are concatenated and input to the rumor classifier and the event discriminator. The event discriminator is used to learn event-invariant representations; in this paper, we removed the event discriminator to make a fair comparison.

- MVAE [5]: MVAE uses an encoding–decoding approach to construct a multimodal feature representation. By training a multimodal variational autoencoder, both modalities can be reconstructed from the learned shared representation to find the correlation across modalities.

- SAFE [6]: SAFE is a similarity-aware multimodal rumor detection method, which extracts text features and visual features from posts and explores the common representations between them.

- CARMN [7]: CARMN extracts text feature representations from both the original text and fused text with a multichannel convolutional neural network (MCN) and uses VGG-19 to extract image feature representations for multimodal rumor detection.

- CAFE [8]: CAFE analyzes cross-modal ambiguity learning from the perspective of information theory, adaptively aggregates single-modal and cross-modal correlation features, and performs rumor detection.

4.3. Experimental Settings

5. Experimental Results and Analysis

5.1. Performance Analysis

5.2. Ablation Study

- TSA: TSA uses only the textual double-embedding and self-attention module, without the multimodal feature fusion part.

- VSA: VSA uses only the visual self-attention module, without the multimodal feature fusion part.

- DSA: DSA uses the dual self-attention part of the model but omits the multimodal fusion part; the visual and text features are simply concatenated for the prediction.

- DCA: DCA omits the self-attention mechanisms for text and images and uses only the dual text–visual coattention to fuse features for the prediction.
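The ablation variants can be read as on/off switches over three modules. The sketch below encodes that reading as a small configuration table; the class and field names are hypothetical, and treating the full TDEDA model as having all three modules enabled is an interpretation of the descriptions above rather than a statement from the authors' code.

```python
from dataclasses import dataclass, fields

@dataclass(frozen=True)
class Variant:
    text_self_attention: bool     # textual double-embedding + self-attention
    visual_self_attention: bool   # image self-attention
    coattention_fusion: bool      # dual text-visual coattention fusion

# One entry per model in the ablation study (assumed mapping).
VARIANTS = {
    "TSA":   Variant(True,  False, False),
    "VSA":   Variant(False, True,  False),
    "DSA":   Variant(True,  True,  False),
    "DCA":   Variant(False, False, True),
    "TDEDA": Variant(True,  True,  True),
}

def enabled_modules(name):
    """Return the names of the modules switched on for a given variant."""
    variant = VARIANTS[name]
    return [f.name for f in fields(variant) if getattr(variant, f.name)]

for name in VARIANTS:
    print(f"{name:6s} {enabled_modules(name)}")
```

Laid out this way, DSA and DCA are complementary: together they cover exactly the modules of the full model, so comparing each against TDEDA isolates the contribution of the part it lacks.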

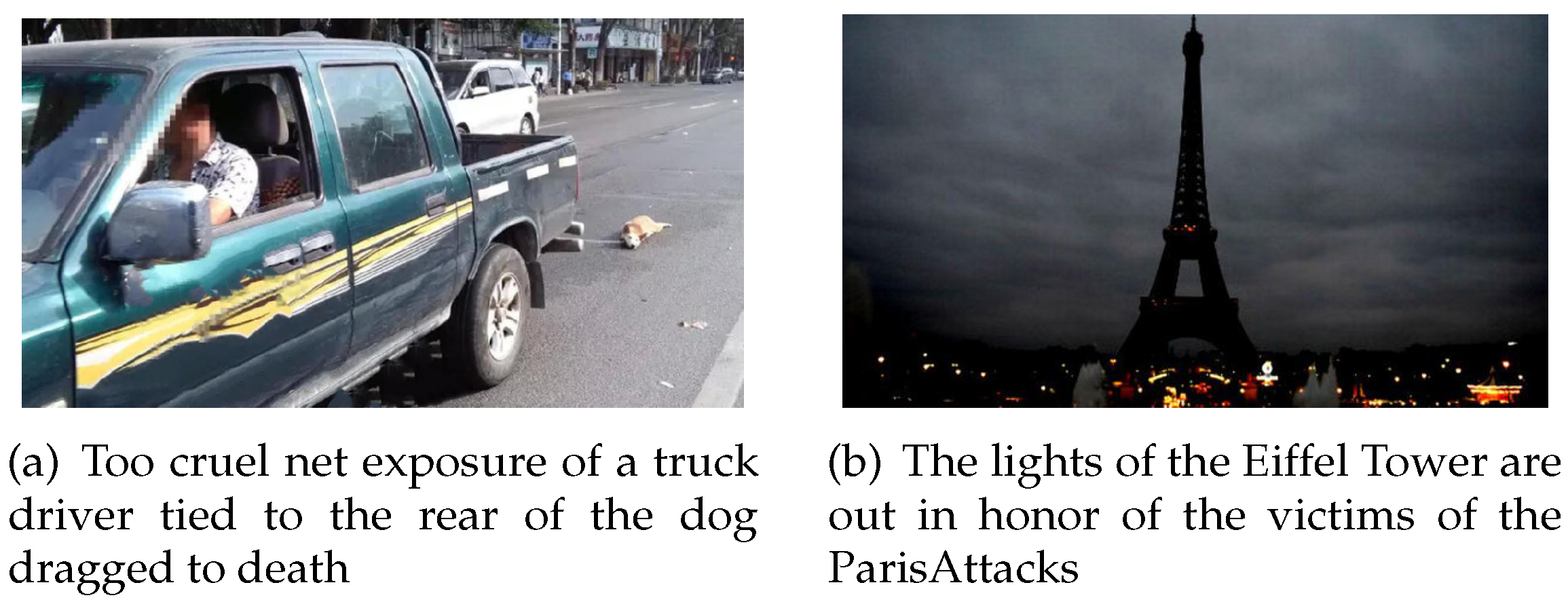

5.3. Case Studies

5.3.1. Case of Correct Identification

5.3.2. Case of Misidentification

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wu, K.; Yang, S.; Zhu, K.Q. False rumors detection on sina weibo by propagation structures. In Proceedings of the 2015 IEEE 31st International Conference on Data Engineering, Seoul, Republic of Korea, 13–17 April 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 651–662.

2. Jin, Z.; Cao, J.; Guo, H.; Zhang, Y.; Luo, J. Multimodal fusion with recurrent neural networks for rumor detection on microblogs. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 795–816.

3. Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent neural network regularization. arXiv 2014, arXiv:1409.2329.

4. Wang, Y.; Ma, F.; Jin, Z.; Yuan, Y.; Xun, G.; Jha, K.; Su, L.; Gao, J. EANN: Event adversarial neural networks for multi-modal fake news detection. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 849–857.

5. Khattar, D.; Goud, J.S.; Gupta, M.; Varma, V. MVAE: Multimodal variational autoencoder for fake news detection. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 2915–2921.

6. Zhou, X.; Wu, J.; Zafarani, R. Similarity-Aware Multi-modal Fake News Detection. In Proceedings of the Advances in Knowledge Discovery and Data Mining: 24th Pacific-Asia Conference, PAKDD 2020, Singapore, 11–14 May 2020; Proceedings, Part II. Springer: Berlin/Heidelberg, Germany, 2020; pp. 354–367.

7. Song, C.; Ning, N.; Zhang, Y.; Wu, B. A multimodal fake news detection model based on crossmodal attention residual and multichannel convolutional neural networks. Inf. Process. Manag. 2021, 58, 102437.

8. Chen, Y.; Li, D.; Zhang, P.; Sui, J.; Lv, Q.; Tun, L.; Shang, L. Cross-modal ambiguity learning for multimodal fake news detection. In Proceedings of the ACM Web Conference 2022, Lyon, France, 25–29 April 2022; pp. 2897–2905.

9. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

10. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

11. Zhu, Y.; Wang, X.; Zhong, E.; Liu, N.; Li, H.; Yang, Q. Discovering spammers in social networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012; Volume 26, pp. 171–177.

12. Rubin, V.L.; Conroy, N.; Chen, Y.; Cornwell, S. Fake news or truth? Using satirical cues to detect potentially misleading news. In Proceedings of the Second Workshop on Computational Approaches to Deception Detection, San Diego, CA, USA, 17 June 2016; pp. 7–17.

13. Castillo, C.; Mendoza, M.; Poblete, B. Information credibility on twitter. In Proceedings of the 20th International Conference on World Wide Web, Hyderabad, India, 28 March–1 April 2011; pp. 675–684.

14. Qazvinian, V.; Rosengren, E.; Radev, D.; Mei, Q. Rumor has it: Identifying misinformation in microblogs. In Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing, Scotland, UK, 27–31 July 2011; pp. 1589–1599.

15. Kwon, S.; Cha, M.; Jung, K.; Chen, W.; Wang, Y. Prominent features of rumor propagation in online social media. In Proceedings of the 2013 IEEE 13th International Conference on Data Mining, Dallas, TX, USA, 7–13 December 2013; pp. 1103–1108.

16. Ma, J.; Gao, W.; Mitra, P.; Kwon, S.; Jansen, B.J.; Wong, K.F.; Cha, M. Detecting rumors from microblogs with recurrent neural networks. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence (IJCAI-16), New York, NY, USA, 9–15 July 2016.

17. Chen, T.; Li, X.; Yin, H.; Zhang, J. Call attention to rumors: Deep attention based recurrent neural networks for early rumor detection. In Proceedings of the Trends and Applications in Knowledge Discovery and Data Mining: PAKDD 2018 Workshops, BDASC, BDM, ML4Cyber, PAISI, DaMEMO, Melbourne, Australia, 3 June 2018; Revised Selected Papers 22. Springer: Cham, Switzerland, 2018; pp. 40–52.

18. Yu, F.; Liu, Q.; Wu, S.; Wang, L.; Tan, T. A Convolutional Approach for Misinformation Identification. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 3901–3907.

19. Ma, J.; Gao, W.; Wong, K.F. Rumor detection on twitter with tree-structured recursive neural networks. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018.

20. Zhang, J.; Dong, B.; Yu, P.S. Deep diffusive neural network based fake news detection from heterogeneous social networks. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1259–1266.

21. Liu, Y.; Wu, Y.F. Early detection of fake news on social media through propagation path classification with recurrent and convolutional networks. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

22. Jin, Z.; Cao, J.; Zhang, Y.; Zhou, J.; Tian, Q. Novel visual and statistical image features for microblogs news verification. IEEE Trans. Multimed. 2016, 19, 598–608.

23. Zadeh, A.; Chen, M.; Poria, S.; Cambria, E.; Morency, L.P. Tensor fusion network for multimodal sentiment analysis. arXiv 2017, arXiv:1707.07250.

24. Hou, M.; Tang, J.; Zhang, J.; Kong, W.; Zhao, Q. Deep multimodal multilinear fusion with high-order polynomial pooling. Adv. Neural Inf. Process. Syst. 2019, 32, 12136–12145.

25. Xu, N.; Mao, W.; Chen, G. Multi-interactive memory network for aspect based multimodal sentiment analysis. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 371–378.

26. Gao, P.; Jiang, Z.; You, H.; Lu, P.; Hoi, S.C.; Wang, X.; Li, H. Dynamic fusion with intra- and inter-modality attention flow for visual question answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6639–6648.

27. Bengio, Y. Learning deep architectures for AI. Found. Trends® Mach. Learn. 2009, 2, 1–127.

28. Yang, Z.; He, X.; Gao, J.; Deng, L.; Smola, A. Stacked attention networks for image question answering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 21–29.

29. Liu, Y.; Zhang, X.; Huang, F.; Li, Z. Adversarial learning of answer-related representation for visual question answering. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 1013–1022.

30. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

31. Ke, G.; He, D.; Liu, T.Y. Rethinking positional encoding in language pre-training. arXiv 2020, arXiv:2006.15595.

32. Lu, J.; Batra, D.; Parikh, D.; Lee, S. Vilbert: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

33. Boididou, C.; Andreadou, K.; Papadopoulos, S.; Dang-Nguyen, D.T.; Boato, G.; Riegler, M.; Kompatsiaris, Y. Verifying multimedia use at mediaeval 2015. MediaEval 2015, 3, 7.

34. Ma, J.; Gao, W.; Wei, Z.; Lu, Y.; Wong, K.F. Detect rumors using time series of social context information on microblogging websites. In Proceedings of the 24th ACM International on Conference on Information and Knowledge Management, Melbourne, Australia, 19–23 October 2015; pp. 1751–1754.

35. Yao, L.; Mao, C.; Luo, Y. Graph convolutional networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 7370–7377.

36. Godbole, S.; Sarawagi, S. Discriminative methods for multi-labeled classification. In Proceedings of the Advances in Knowledge Discovery and Data Mining: 8th Pacific-Asia Conference, PAKDD 2004, Sydney, Australia, 26–28 May 2004; Proceedings 8. Springer: Berlin/Heidelberg, Germany, 2004; pp. 22–30.

| Category | Weibo | Twitter |
|---|---|---|
| Rumor | 4749 | 7898 |
| Nonrumor | 4779 | 6026 |
| Image | 9528 | 514 |

| Dataset | Method | Accuracy | Rumor Precision | Rumor Recall | Rumor F1 | Nonrumor Precision | Nonrumor Recall | Nonrumor F1 |
|---|---|---|---|---|---|---|---|---|
| Weibo | SVM-TS | 0.640 | 0.741 | 0.573 | 0.646 | 0.651 | 0.798 | 0.711 |
| Weibo | GRU | 0.702 | 0.671 | 0.794 | 0.727 | 0.747 | 0.609 | 0.671 |
| Weibo | CNN | 0.740 | 0.736 | 0.756 | 0.744 | 0.747 | 0.723 | 0.735 |
| Weibo | TextGCN | 0.787 | 0.975 | 0.573 | 0.727 | 0.712 | 0.985 | 0.827 |
| Weibo | att-RNN | 0.788 | 0.862 | 0.686 | 0.764 | 0.738 | 0.890 | 0.807 |
| Weibo | EANN | 0.782 | 0.827 | 0.697 | 0.756 | 0.752 | 0.863 | 0.804 |
| Weibo | MVAE | 0.824 | 0.854 | 0.769 | 0.809 | 0.802 | 0.875 | 0.837 |
| Weibo | SAFE | 0.816 | 0.818 | 0.815 | 0.817 | 0.816 | 0.818 | 0.817 |
| Weibo | CARMN | 0.853 | 0.891 | 0.814 | 0.851 | 0.818 | 0.894 | 0.854 |
| Weibo | CAFE | 0.840 | 0.855 | 0.830 | 0.842 | 0.825 | 0.851 | 0.837 |
| Weibo | TDEDA | 0.872 | 0.846 | 0.838 | 0.842 | 0.889 | 0.895 | 0.892 |
| Twitter | SVM-TS | 0.529 | 0.488 | 0.497 | 0.496 | 0.565 | 0.556 | 0.561 |
| Twitter | GRU | 0.634 | 0.581 | 0.812 | 0.677 | 0.758 | 0.502 | 0.604 |
| Twitter | CNN | 0.549 | 0.508 | 0.597 | 0.549 | 0.598 | 0.509 | 0.550 |
| Twitter | TextGCN | 0.703 | 0.808 | 0.365 | 0.503 | 0.680 | 0.939 | 0.779 |
| Twitter | att-RNN | 0.682 | 0.780 | 0.615 | 0.689 | 0.603 | 0.770 | 0.676 |
| Twitter | EANN | 0.719 | 0.642 | 0.474 | 0.545 | 0.771 | 0.870 | 0.817 |
| Twitter | MVAE | 0.737 | 0.801 | 0.719 | 0.758 | 0.689 | 0.777 | 0.730 |
| Twitter | SAFE | 0.766 | 0.777 | 0.795 | 0.786 | 0.752 | 0.731 | 0.742 |
| Twitter | CARMN | 0.741 | 0.854 | 0.619 | 0.718 | 0.670 | 0.880 | 0.760 |
| Twitter | CAFE | 0.806 | 0.807 | 0.799 | 0.803 | 0.805 | 0.813 | 0.809 |
| Twitter | TDEDA | 0.824 | 0.782 | 0.846 | 0.813 | 0.864 | 0.807 | 0.834 |
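
The F1 columns follow from the precision and recall columns as their harmonic mean, F1 = 2PR / (P + R). The short sketch below recomputes F1 for the first TDEDA row above (rumor precision 0.846 and recall 0.838; nonrumor precision 0.889 and recall 0.895) and also shows how accuracy, precision, and recall are obtained from confusion-matrix counts. The counts in the second part are hypothetical and are not taken from the experiments.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

def metrics_from_counts(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 for the positive class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return accuracy, precision, recall, f1_score(precision, recall)

# Recompute F1 from the reported precision and recall of the first TDEDA row.
rumor_f1 = round(f1_score(0.846, 0.838), 3)
nonrumor_f1 = round(f1_score(0.889, 0.895), 3)
print(rumor_f1, nonrumor_f1)  # 0.842 0.892, matching the table

# Hypothetical confusion-matrix counts, for illustration only.
accuracy, precision, recall, f1 = metrics_from_counts(tp=80, fp=20, fn=10, tn=90)
print(accuracy, precision)
```

Reporting the metrics per class, as the table does, matters because a model can trade rumor recall for nonrumor recall while accuracy stays almost unchanged.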

| Dataset | Method | Accuracy | Rumor Precision | Rumor Recall | Rumor F1 | Nonrumor Precision | Nonrumor Recall | Nonrumor F1 |
|---|---|---|---|---|---|---|---|---|
| Weibo | TSA | 0.840 | 0.791 | 0.824 | 0.807 | 0.875 | 0.850 | 0.863 |
| Weibo | VSA | 0.838 | 0.775 | 0.847 | 0.810 | 0.888 | 0.831 | 0.859 |
| Weibo | DSA | 0.854 | 0.797 | 0.861 | 0.828 | 0.899 | 0.849 | 0.873 |
| Weibo | DCA | 0.863 | 0.823 | 0.847 | 0.835 | 0.893 | 0.874 | 0.883 |
| Weibo | TDEDA | 0.872 | 0.846 | 0.838 | 0.842 | 0.889 | 0.895 | 0.892 |
| Twitter | TSA | 0.799 | 0.727 | 0.813 | 0.767 | 0.860 | 0.790 | 0.823 |
| Twitter | VSA | 0.790 | 0.698 | 0.852 | 0.768 | 0.880 | 0.747 | 0.808 |
| Twitter | DSA | 0.805 | 0.735 | 0.815 | 0.773 | 0.862 | 0.798 | 0.829 |
| Twitter | DCA | 0.814 | 0.773 | 0.771 | 0.772 | 0.843 | 0.844 | 0.843 |
| Twitter | TDEDA | 0.824 | 0.782 | 0.846 | 0.813 | 0.864 | 0.807 | 0.834 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Han, H.; Ke, Z.; Nie, X.; Dai, L.; Slamu, W. Multimodal Fusion with Dual-Attention Based on Textual Double-Embedding Networks for Rumor Detection. Appl. Sci. 2023, 13, 4886. https://doi.org/10.3390/app13084886
