The Use of Attentive Knowledge Graph Perceptual Propagation for Improving Recommendations

Abstract

1. Introduction

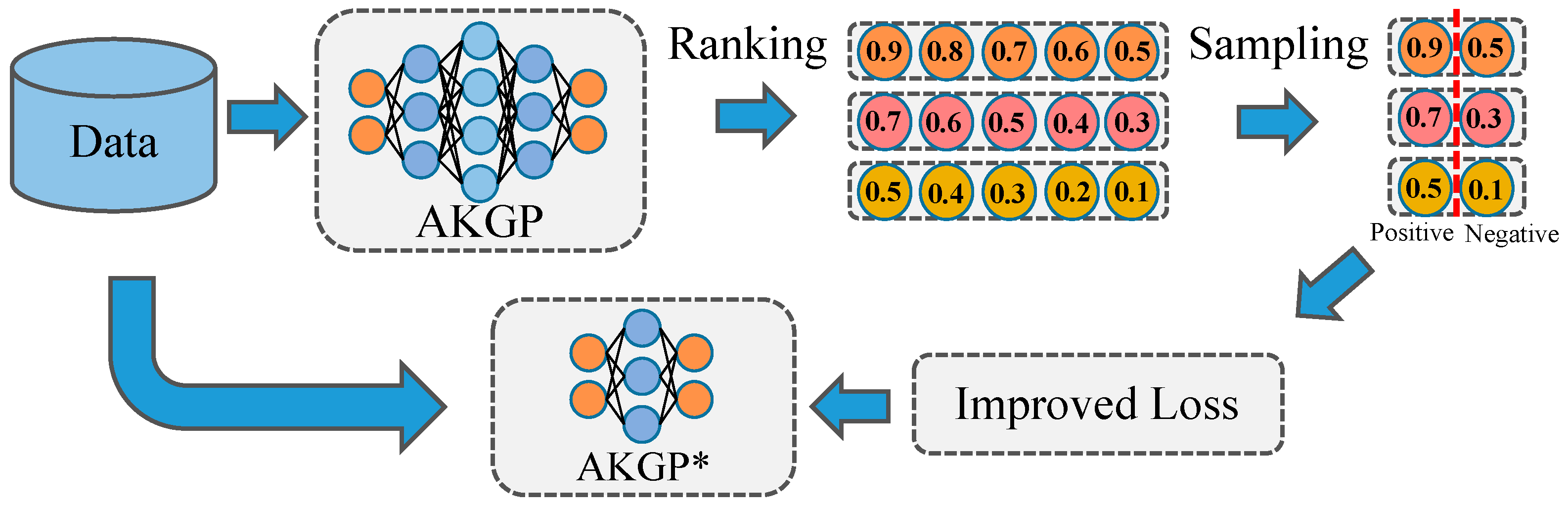

- This article proposes an end-to-end algorithmic framework, AKGP, that uses knowledge graphs as auxiliary information, fusing structured knowledge with contextual information to build fine-grained knowledge models.

- This article proposes an attention network that emphasizes the influence of different relationships between entities and explores users’ higher-order interests through perceptual propagation methods. Moreover, a stratified sampling strategy is used to retain entity features and reduce sample bias.

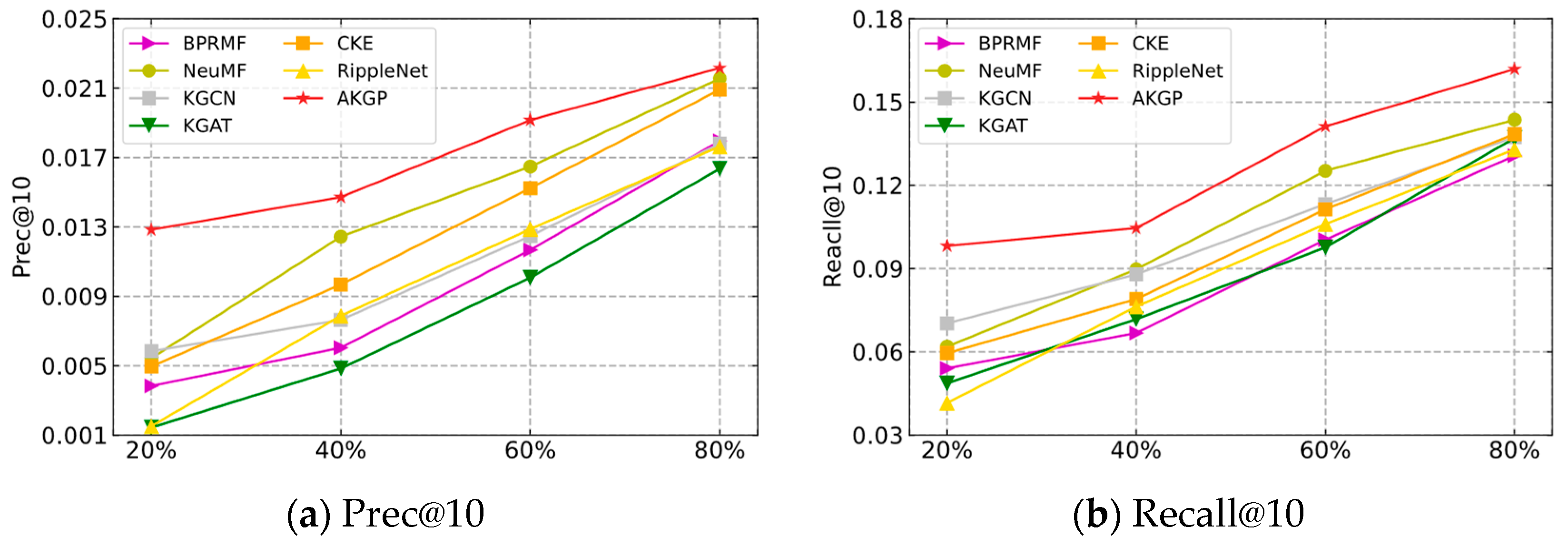

- Extensive experiments are conducted on three datasets (Last.FM, Book-Crossing, and MovieLens-20M), and the results demonstrate the effectiveness of the model in alleviating the sparsity and cold-start problems.
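
To make the stratified sampling idea in the contributions above more concrete, here is a minimal sketch. It assumes that the strata are relation types and that the sampling budget is split across them proportionally; the article's outline does not confirm either choice, and the function name `stratified_sample` and the toy triples are hypothetical.

```python
import random
from collections import defaultdict


def stratified_sample(neighbors, k, seed=0):
    """Pick k (relation, tail) neighbors, keeping relation proportions.

    ASSUMPTION: strata are relation types and the budget is allocated
    proportionally (largest-remainder rounding). This is an illustrative
    choice, not necessarily the strategy used by AKGP.
    """
    if k <= 0:
        return []
    if len(neighbors) <= k:
        return list(neighbors)  # nothing to drop, keep every neighbor

    rng = random.Random(seed)
    strata = defaultdict(list)
    for rel, tail in neighbors:
        strata[rel].append((rel, tail))

    total = len(neighbors)
    # Floored proportional quota for each relation type.
    quotas = {rel: (len(items) * k) // total for rel, items in strata.items()}
    # Give the remaining slots to the strata with the largest remainders.
    leftover = k - sum(quotas.values())
    by_remainder = sorted(
        strata, key=lambda rel: (len(strata[rel]) * k) % total, reverse=True
    )
    for rel in by_remainder[:leftover]:
        quotas[rel] += 1

    sample = []
    for rel, items in strata.items():
        sample.extend(rng.sample(items, quotas[rel]))
    return sample


# Hypothetical neighborhood of one item: 6 genre, 3 director, 1 actor edge.
neighbors = (
    [("genre", f"g{i}") for i in range(6)]
    + [("director", f"d{i}") for i in range(3)]
    + [("actor", "a0")]
)
picked = stratified_sample(neighbors, k=5, seed=42)
print(len(picked))  # 5
```

Uniform sampling over the same neighborhood could return only genre edges; allocating the budget per relation keeps the sampled neighborhood closer to the relation mix of the full one.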

2. Related Work

2.1. Embedded-Based Methods

2.2. Path-Based Methods

2.3. Propagation-Based Methods

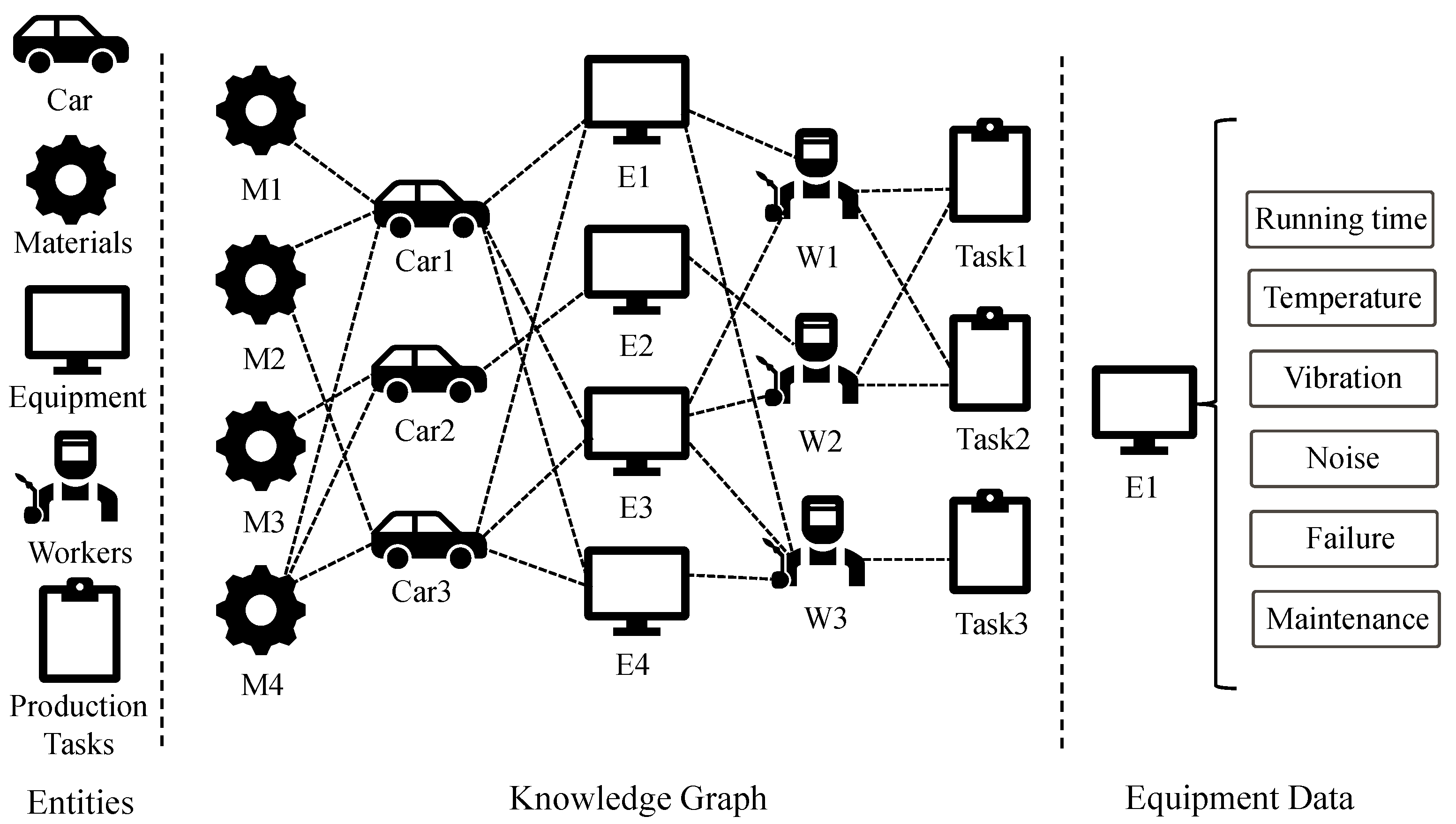

3. Preliminaries

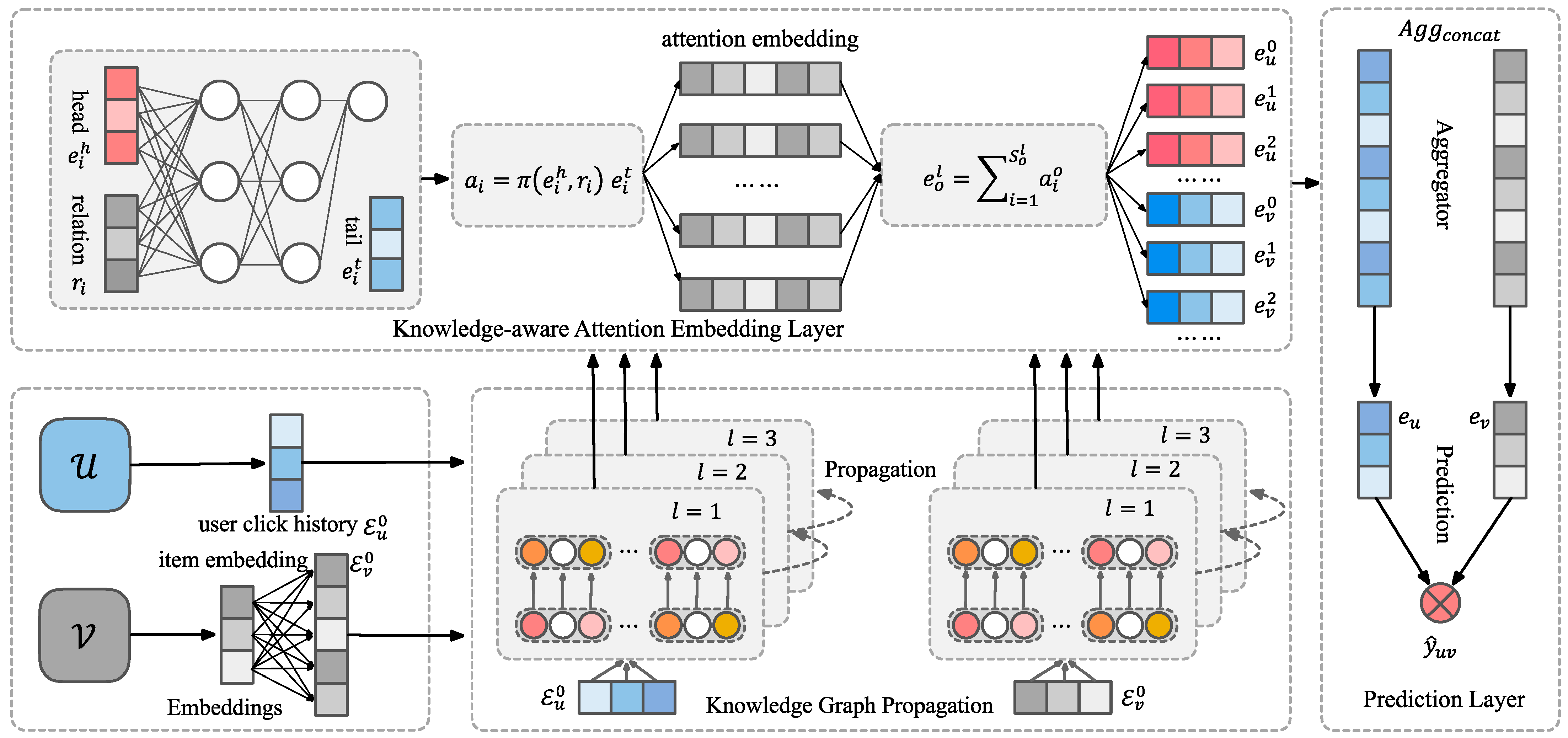

4. Methodology

4.1. Initial Embedding Representation

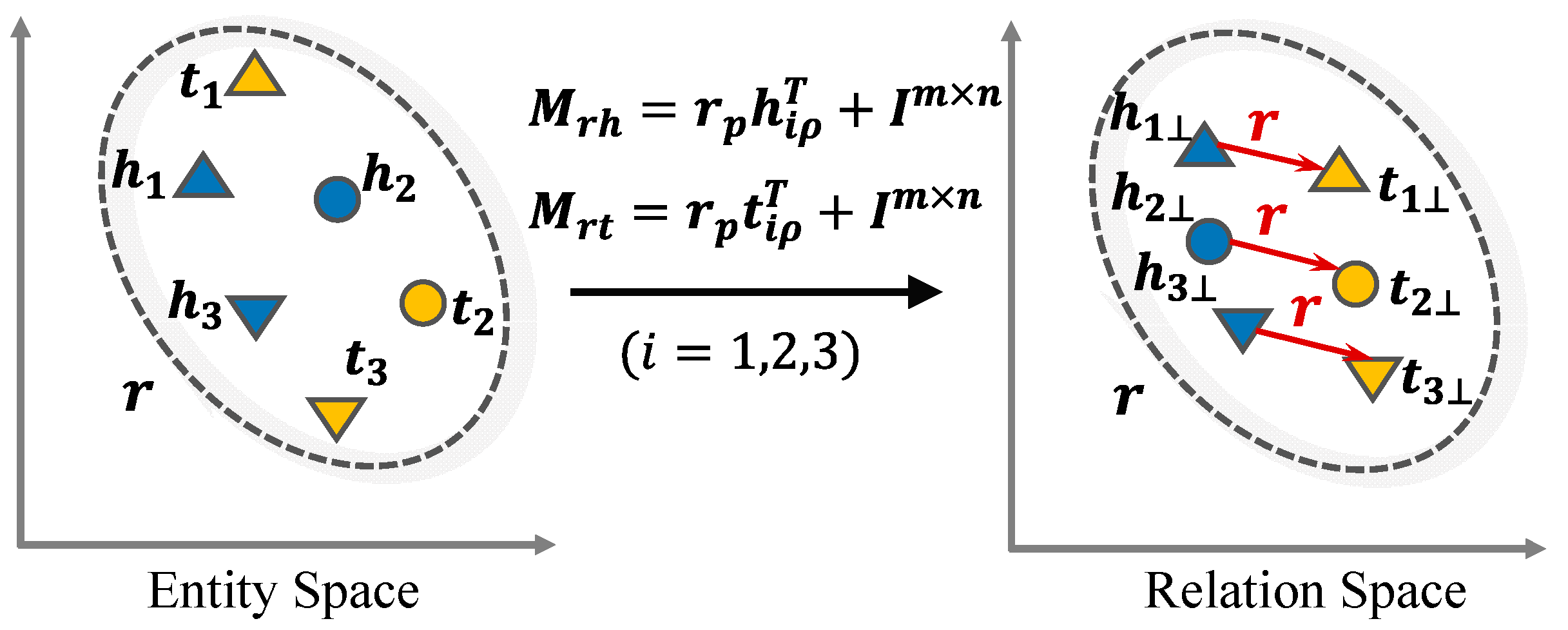

4.1.1. Knowledge Graph Embedding

4.1.2. Fine-Grained Hierarchical Modeling

4.1.3. Initial User–Item Embedding Representation

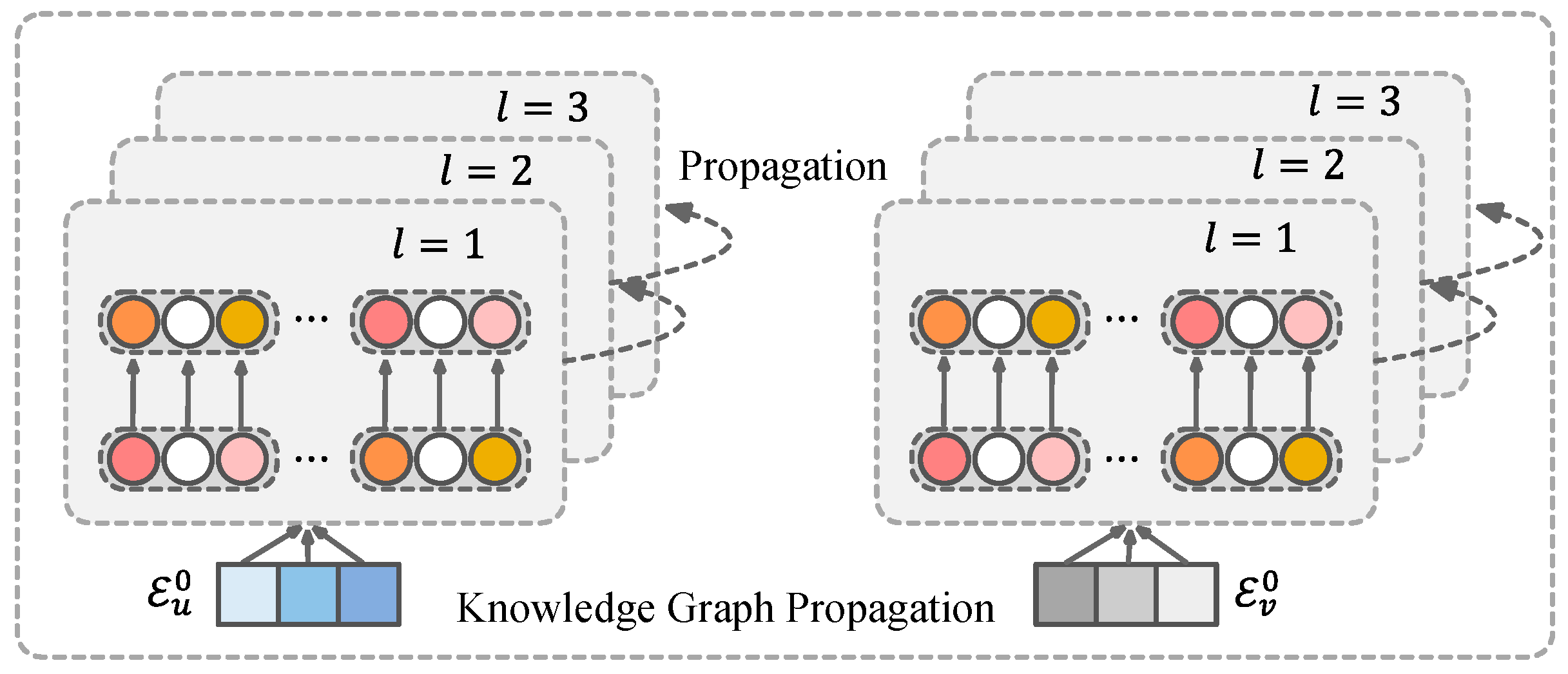

4.2. Multi-Layer Perceptual Propagation and Attention Networks

4.3. Aggregate and Prediction

4.4. Stratified Sampling and Iterations

5. Experiments

5.1. Datasets

5.2. Baselines

5.3. Experimental Settings

5.4. Experimental Results

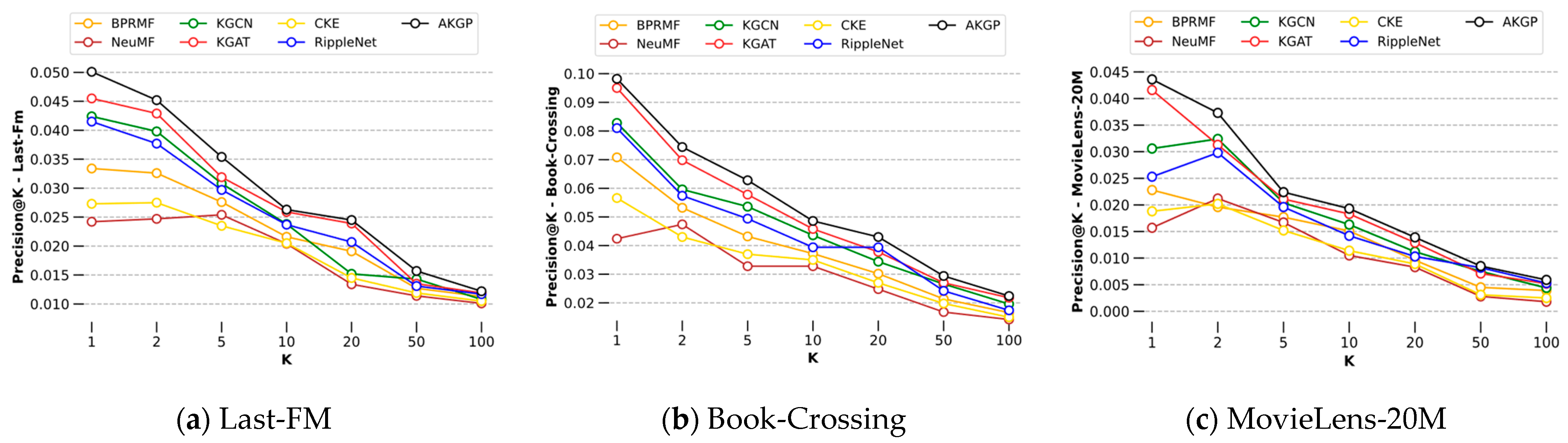

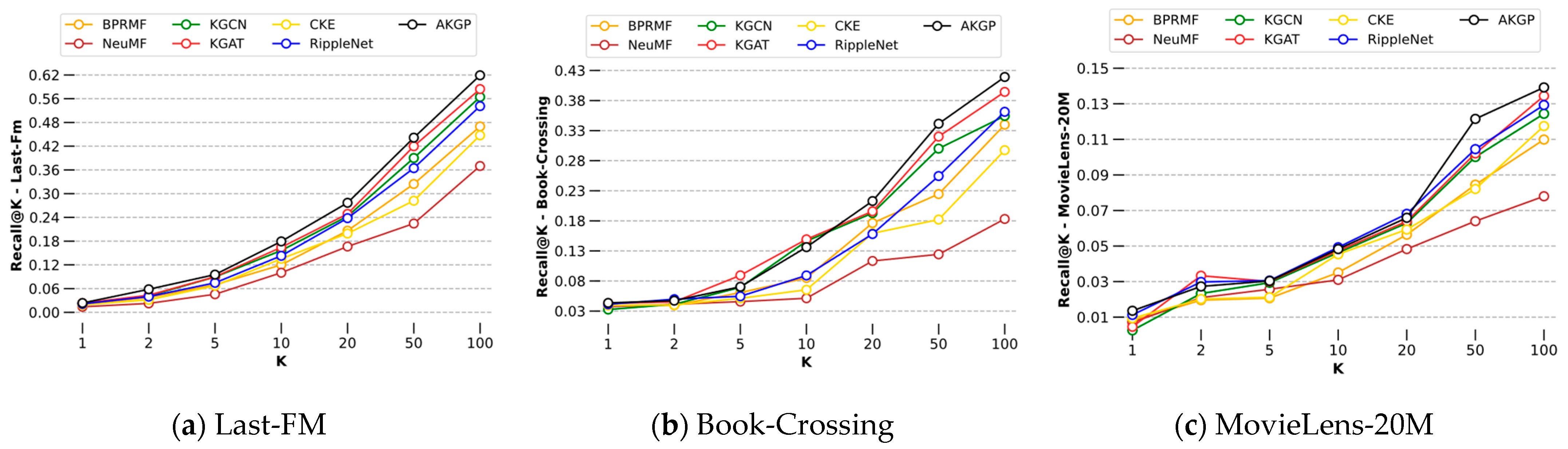

5.4.1. Performance Comparisons with Baselines

5.4.2. Performance Comparisons with Variants

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Data Type | Element | Last.FM | Book-Crossing | MovieLens-20M |
|---|---|---|---|---|
| User–Item Interaction | #Users | 1872 | 19,676 | 138,159 |
| | #Items | 3846 | 20,003 | 16,954 |
| | #Interactions | 42,346 | 172,576 | 13,501,622 |
| Knowledge Graph | #Entities | 9366 | 25,787 | 102,569 |
| | #Relationships | 60 | 18 | 32 |
| | #Triples | 15,518 | 60,787 | 499,474 |
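
The sparsity that motivates the model can be read directly off these statistics. The sketch below computes the interaction density, i.e. the fraction of possible user–item pairs that are actually observed. The counts are copied from the table above; the names `DATASETS` and `interaction_density` are illustrative and not taken from the article.

```python
# (users, items, interactions) copied from the dataset statistics table.
DATASETS = {
    "Last.FM": (1872, 3846, 42346),
    "Book-Crossing": (19676, 20003, 172576),
    "MovieLens-20M": (138159, 16954, 13501622),
}


def interaction_density(n_users, n_items, n_interactions):
    """Fraction of the user-item matrix that holds an observed interaction."""
    return n_interactions / (n_users * n_items)


for name, stats in DATASETS.items():
    print(f"{name}: density = {interaction_density(*stats):.4%}")
```

In all three datasets fewer than 1% of the possible user–item pairs are observed, which is the kind of sparsity that knowledge-graph side information is meant to compensate for.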

| Parameter Name | Parameter Value |
|---|---|
| Optimizer | Adam |
| Learning Rate | 0.001 |
| Embedding Dimension | 64 |
| Batch Size | 1024 |
| L2 Weight | 1 × 10⁻⁴ |

| Model | Last.FM AUC | Last.FM F1 | Book-Crossing AUC | Book-Crossing F1 | MovieLens-20M AUC | MovieLens-20M F1 |
|---|---|---|---|---|---|---|
| NEUMF | 0.725 (−12.5%) | 0.645 (−14.8%) | 0.631 (−14.9%) | 0.603 (−8.5%) | 0.904 (−7.3%) | 0.906 (−2.1%) |
| BPRMF | 0.766 (−7.6%) | 0.692 (−6.6%) | 0.665 (−10.4%) | 0.612 (−7.1%) | 0.903 (−7.4%) | 0.913 (−1.3%) |
| CKE | 0.743 (−10.3%) | 0.675 (−8.9%) | 0.678 (−8.6%) | 0.620 (−5.9%) | 0.912 (−6.5%) | 0.906 (−2.1%) |
| RippleNet | 0.778 (−6.2%) | 0.702 (−5.3%) | 0.720 (−2.9%) | 0.645 (−2.1%) | 0.921 (−5.5%) | 0.920 (−0.5%) |
| KGCN | 0.802 (−3.2%) | 0.722 (−2.6%) | 0.686 (−7.5%) | 0.631 (−4.2%) | 0.974 (−0.2%) | 0.924 (−0.1%) |
| KGAT | 0.825 (−0.4%) | 0.739 (−0.3%) | 0.725 (−2.3%) | 0.654 (−0.7%) | 0.972 (−0.4%) | 0.922 (−0.3%) |
| AKGP | 0.829 | 0.741 | 0.742 | 0.659 | 0.976 | 0.925 |
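
The percentages in parentheses are not defined in the table itself. They appear consistent with the signed difference of each baseline relative to the AKGP score in the same column; this reading is an inference from the numbers, and the helper `relative_gap` below is a hypothetical name used only for illustration.

```python
def relative_gap(baseline, reference):
    """Signed percentage difference of `baseline` relative to `reference`."""
    return (baseline - reference) / reference * 100.0


# AUC on Last.FM, copied from the table: BPRMF (0.766) vs. AKGP (0.829).
gap = relative_gap(0.766, 0.829)
print(f"{gap:.1f}%")  # -7.6%
```

Not every entry is reproduced exactly when recomputed from the rounded scores shown in the table, so the recomputation should be treated as a sanity check rather than as the authors' exact procedure.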

| Model | Last.FM | Book-Crossing | MovieLens-20M |
|---|---|---|---|
| AKGP/E | 0.788 | 0.702 | 0.898 |
| AKGP/A | 0.723 | 0.642 | 0.846 |
| AKGP/S | 0.791 | 0.727 | 0.912 |
| AKGP | 0.829 | 0.742 | 0.976 |

| Model | Last.FM | Book-Crossing | MovieLens-20M |
|---|---|---|---|
| AKGP-TransE | 0.815 | 0.723 | 0.973 |
| AKGP-TransH | 0.824 | 0.732 | 0.964 |
| AKGP-TransR | 0.830 | 0.735 | 0.957 |
| AKGP-TransD | 0.831 | 0.742 | 0.976 |

| Depth | Last.FM | Book-Crossing | MovieLens-20M |
|---|---|---|---|
| 1 | 0.813 | 0.724 | 0.976 |
| 2 | 0.827 | 0.742 | 0.965 |
| 3 | 0.831 | 0.733 | 0.947 |
| 4 | 0.825 | 0.721 | 0.938 |
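
As a reading aid for the depth table, the short sketch below picks the propagation depth with the highest score for each dataset. The scores are copied from the table; the dictionary name `DEPTH_RESULTS` is illustrative, and the metric is left unnamed because the table does not state it.

```python
# Scores per propagation depth, copied from the depth table.
DEPTH_RESULTS = {
    "Last.FM": {1: 0.813, 2: 0.827, 3: 0.831, 4: 0.825},
    "Book-Crossing": {1: 0.724, 2: 0.742, 3: 0.733, 4: 0.721},
    "MovieLens-20M": {1: 0.976, 2: 0.965, 3: 0.947, 4: 0.938},
}

# For each dataset, keep the depth whose score is largest.
best_depth = {
    name: max(scores, key=scores.get) for name, scores in DEPTH_RESULTS.items()
}
print(best_depth)  # {'Last.FM': 3, 'Book-Crossing': 2, 'MovieLens-20M': 1}
```

The best depth differs across datasets, and on MovieLens-20M the score decreases with every additional layer, so depth is best treated as a per-dataset hyperparameter.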


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, C.; Huang, B. The Use of Attentive Knowledge Graph Perceptual Propagation for Improving Recommendations. Appl. Sci. 2023, 13, 4667. https://doi.org/10.3390/app13084667
