Candidate Term Boundary Conflict Reduction Method for Chinese Geological Text Segmentation

Abstract

1. Introduction

2. Related Work

2.1. Technology of CWS in the General Field

2.2. CWS Technology in the Geological Field

2.3. Zero-Sample Text Segmentation

2.4. CWS Technology Based on Information Entropy

3. Candidate Term Boundary Conflict Reduction Method Based on Information Entropy

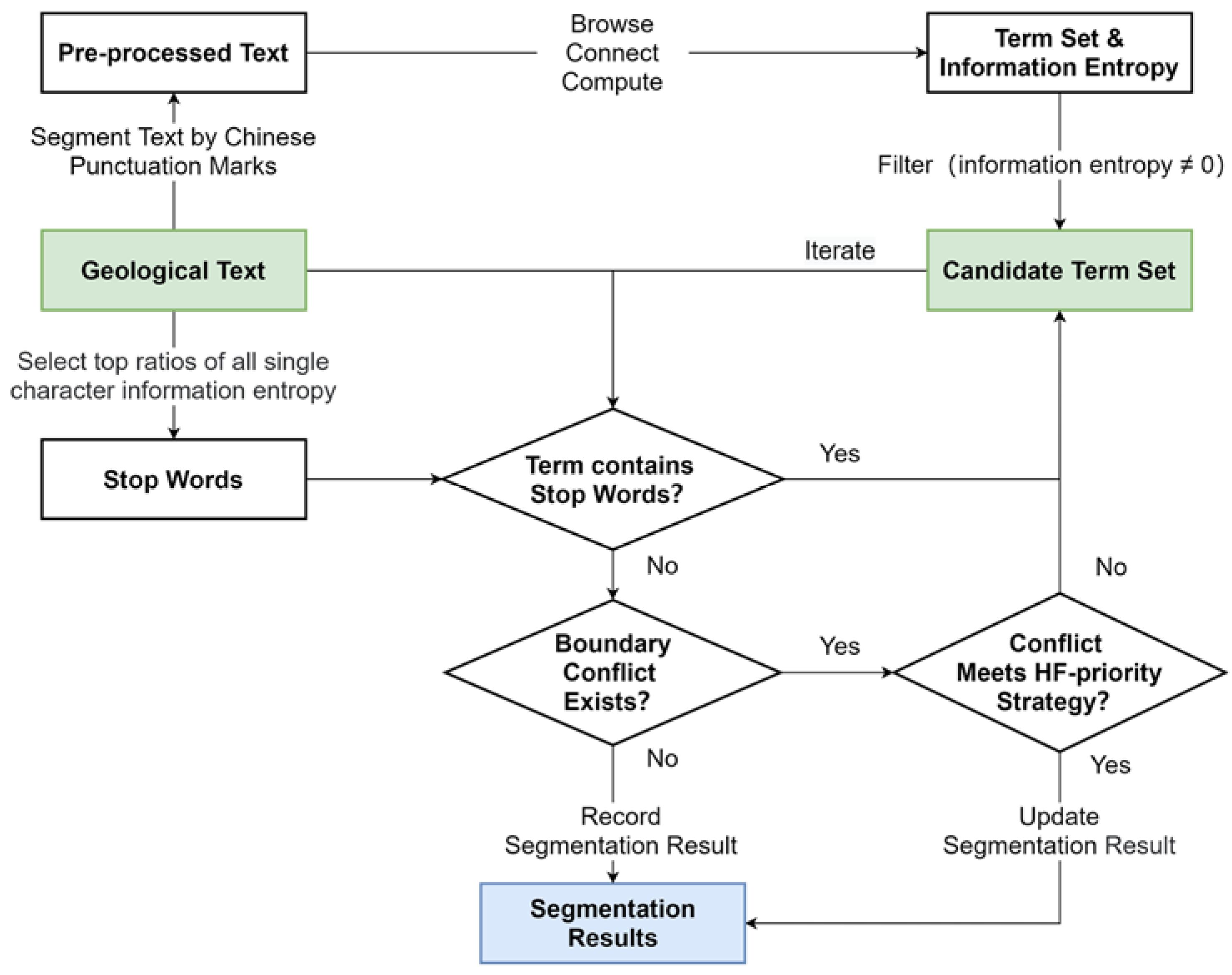

3.1. Technical Route of Word Segmentation Algorithm for Single Geological Text

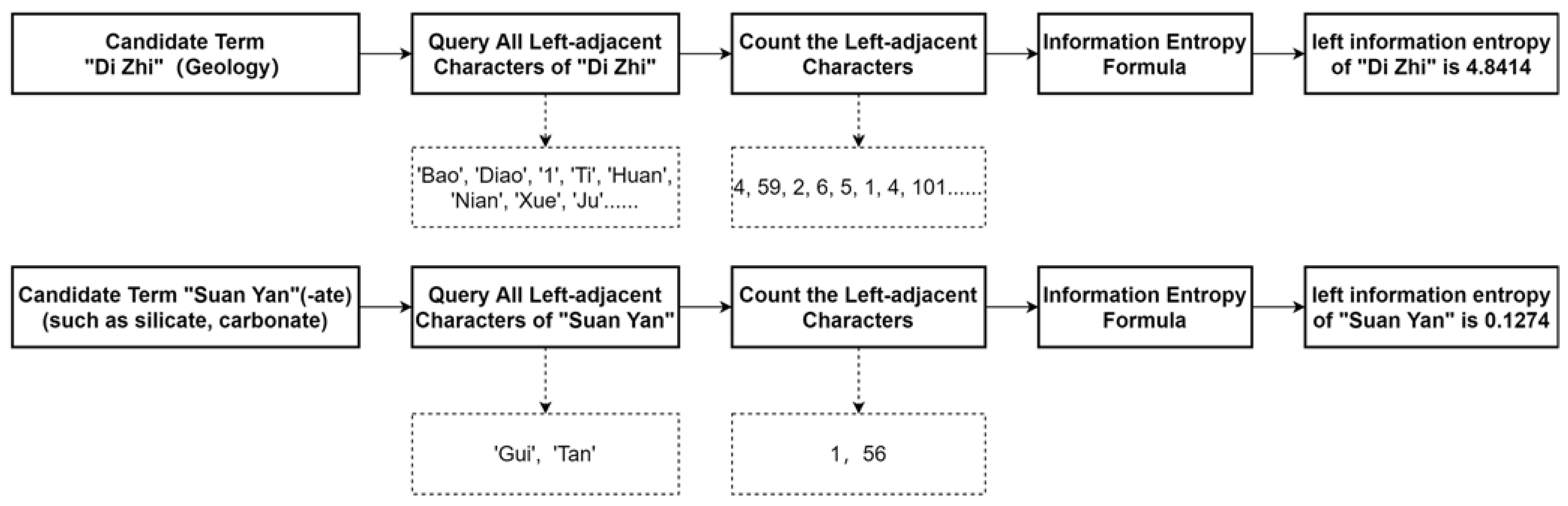

3.2. Information Entropy of Single Geological Text Candidate Terms

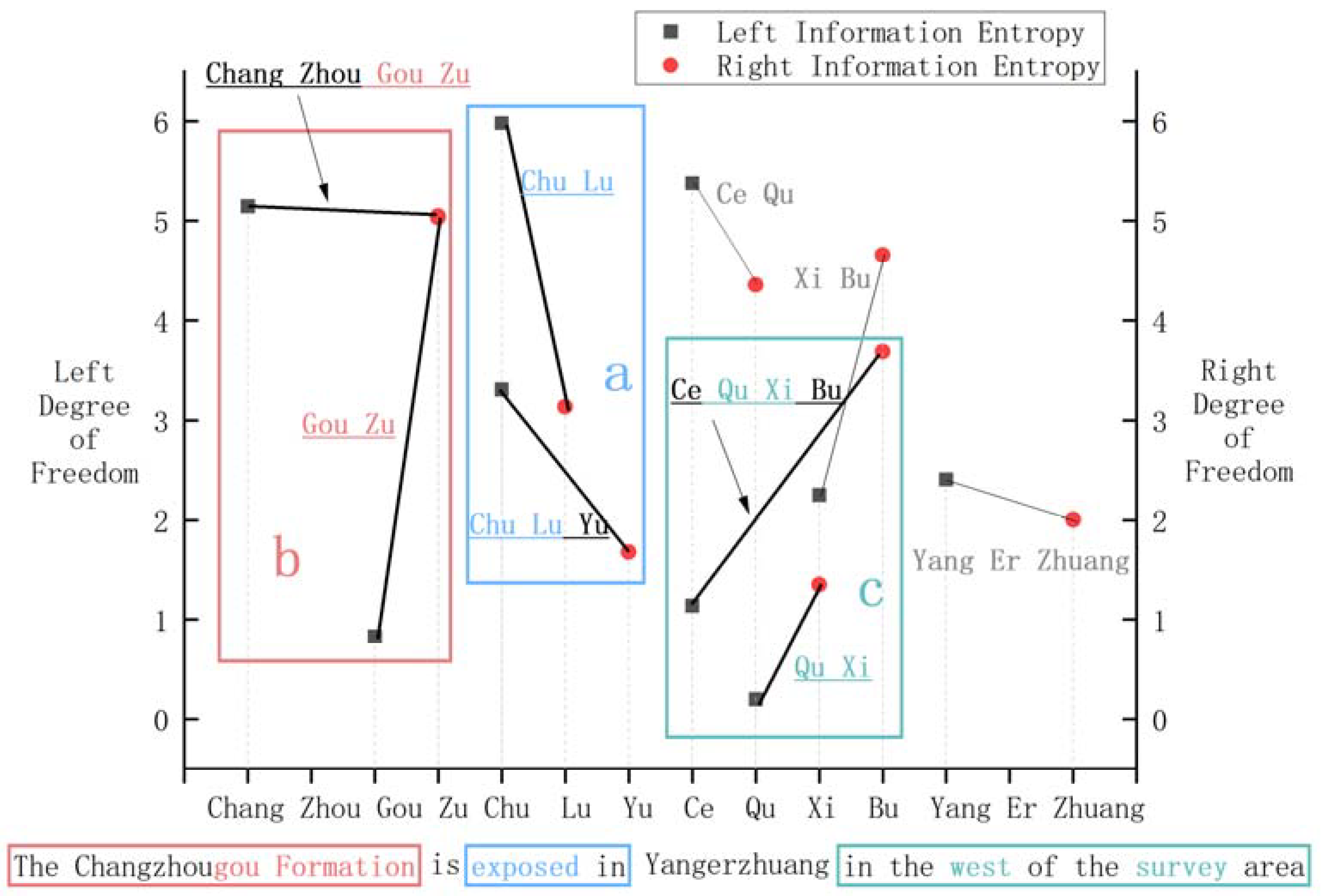

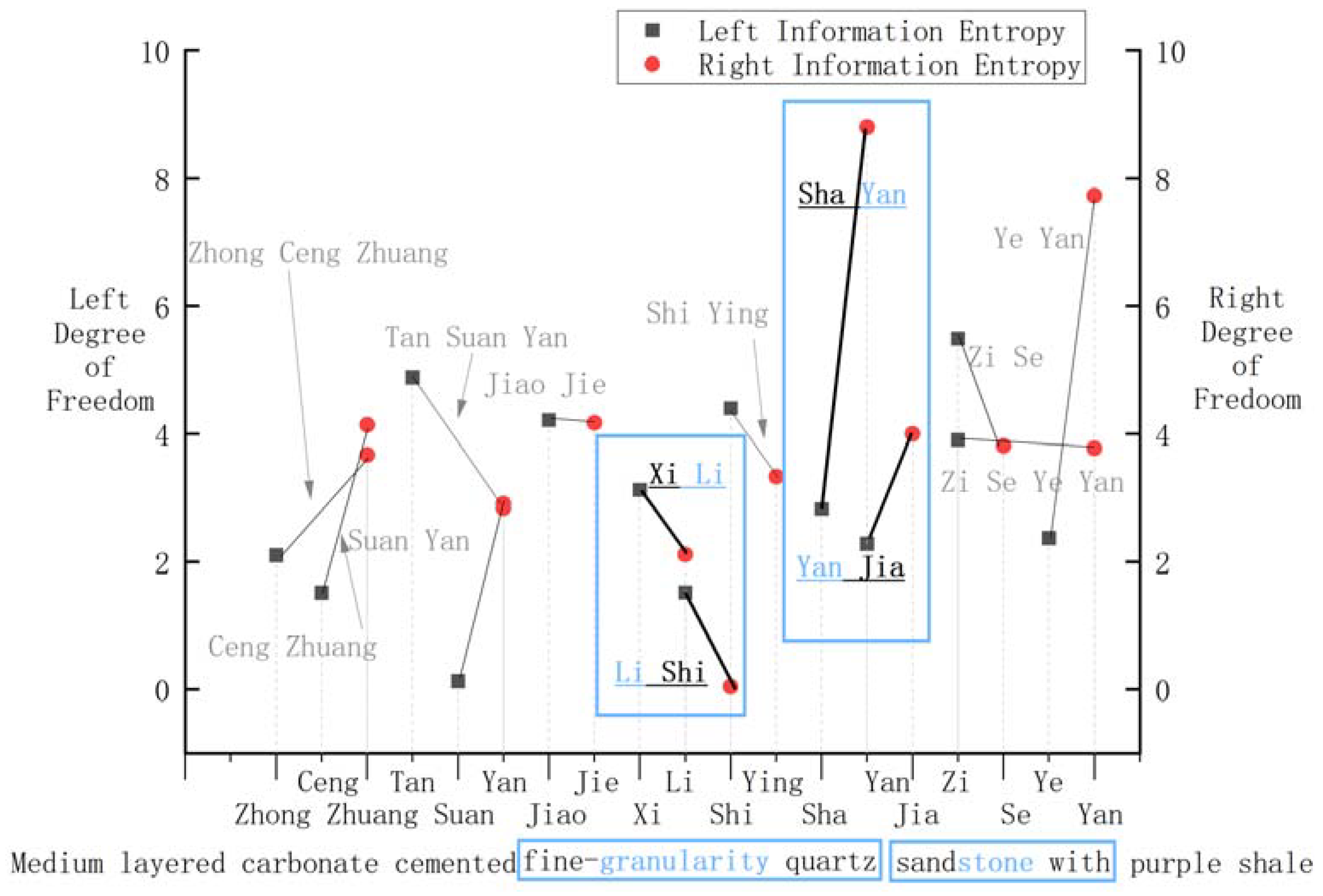

3.3. Boundary Conflict Reduction for Candidate Term

4. Experiments and Discussion

4.1. Data and Evaluation Indicators

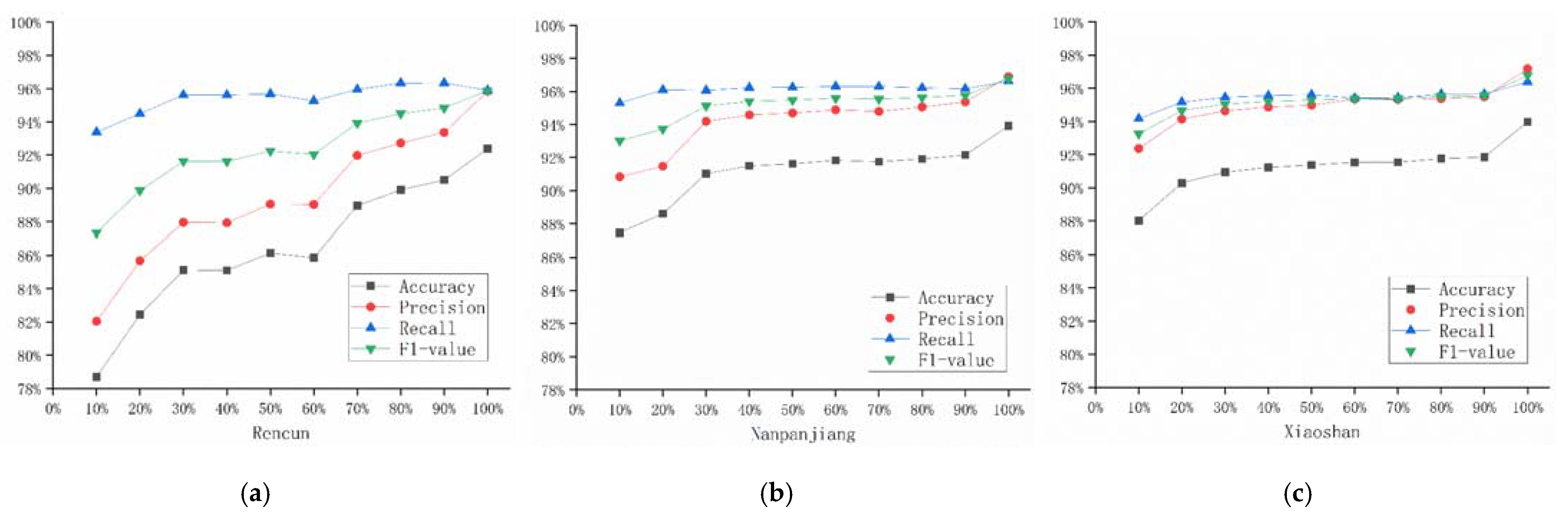

4.2. Experiment and Results

4.3. More Comparisons with SOTA Models

4.4. Discussion and Analysis

- (1)

- (2) Our method addresses ambiguity resolution and out-of-vocabulary (OOV) word recognition without requiring training samples, labels, or high-performance hardware. As shown in Table 2, place names such as “Lin Zhou Shi (Linzhou City)” and “Ren Cun Zhen (Rencun Town)”, rock-unit and mineral names such as “Zan Huang Yan Qun (Zanhuang Group)” and “Xie Chang Shi (plagioclase)”, numerical values, and common words were all segmented correctly.

- (3) Our algorithm resolves boundary conflicts by dynamically comparing the information entropy of competing candidate terms rather than applying a manually chosen fixed threshold, which avoids the arbitrariness that threshold selection introduces into entropy-based segmentation. Because statistical methods are fast and require no manual annotation, large-scale informatization of geological text can be achieved quickly.
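Point (3) can be illustrated with the entropy values listed in the table of conflict types (Type of Conflict, Side, Candidate Term 1, ...) at the end of this article. Section 3.3, which would state the exact reduction rule, has no body text in this version, so the rule in this sketch is an assumption: the candidate with the larger entropy on the conflicting side is kept, and when the table gives a pair of values, the smaller (weaker) of the two is compared. The function names are hypothetical.

```python
from typing import List, Tuple, Union

# A score is either one value (single-side conflicts) or a pair of values
# (two-sided conflicts), as in the entropy columns of the conflict table.
Entropy = Union[float, Tuple[float, float]]


def boundary_strength(entropy: Entropy) -> float:
    """Collapse an entropy value or pair into one comparable score.

    Assumption: for a pair, the weaker boundary (the minimum) decides.
    """
    if isinstance(entropy, tuple):
        return min(entropy)
    return entropy


def resolve(term1: str, e1: Entropy, term2: str, e2: Entropy) -> str:
    """Threshold-free reduction: keep the candidate with the stronger boundary."""
    return term1 if boundary_strength(e1) >= boundary_strength(e2) else term2


def keep_by_threshold(entropy: Entropy, threshold: float) -> bool:
    """Fixed-threshold alternative: judge one candidate in isolation."""
    return boundary_strength(entropy) >= threshold


# (candidate 1, entropy 1, candidate 2, entropy 2), copied from the conflict table.
CONFLICTS: List[Tuple[str, Entropy, str, Entropy]] = [
    ("Zhong Yuan Gu", 0.27, "Zhong Yuan Gu Jie", 3.24),
    ("Zan Huang", 0.42, "Zan Huang Yan Qun", 5.27),
    ("Sha Zhi Ye Yan", 2.86, "Zhi Ye Yan", 1.35),
    ("Li Shi Ceng", 3.65, "Jia Li Shi Ceng", 1.00),
    ("Jia Gou", (0.82, 0.53), "Ma Jia Gou Zu", (4.89, 3.87)),
    ("Shi Ying", (4.40, 3.33), "Yu Shi Ying Xiang Jian", (0.91, 2.25)),
    ("Hui Se", (7.74, 3.30), "Se Zhong", (2.13, 1.40)),
    ("Ju Di", (1.76, 1.36), "Di Qi", (2.16, 1.49)),
]

if __name__ == "__main__":
    for t1, e1, t2, e2 in CONFLICTS:
        print(f"{t1} vs {t2} -> keep {resolve(t1, e1, t2, e2)}")
    # A fixed threshold judges each candidate alone, so it can keep or drop both.
    for threshold in (1.0, 1.5):
        print(threshold,
              keep_by_threshold((1.76, 1.36), threshold),  # Ju Di
              keep_by_threshold((2.16, 1.49), threshold))  # Di Qi
```

Under this assumed rule, each row resolves to the candidate that reads as the complete term (for example, Zan Huang Yan Qun over Zan Huang, and Sha Zhi Ye Yan over Zhi Ye Yan). A fixed threshold behaves differently: for the Ju Di/Di Qi pair, a threshold of 1.0 accepts both and leaves the conflict open, while 1.5 rejects both.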

- (1) As text length increases, most words are segmented correctly and accuracy rises; however, errors caused by different terms sharing the same ending also become more frequent. For example, the suffix “Jia Zhuang (Jiazhuang)” has richer forward (left-side) connections than complete place names such as “Zhao Jia Zhuang (Zhao Jiazhuang)”, “Bai Jia Zhuang (Bai Jiazhuang)”, and “Guo Jia Zhuang (Guo Jiazhuang)”, so these names are incorrectly segmented as “Zhao/Jia Zhuang (Zhao/Jiazhuang)”, “Bai/Jia Zhuang (Bai/Jiazhuang)”, and “Guo/Jia Zhuang (Guo/Jiazhuang)”.

- (2) Texts of any length contain terms that occur only once or always appear with the same neighboring characters (a single, fixed connection), but such terms are relatively more common in short texts, which may explain the lower accuracy on shorter texts. For example, phrases such as “Lue Xian Ya Bian La Chang (slightly flattened and elongated)” and “Bu Yi Qu Xiao Huo Geng Ming (not suitable for cancellation or renaming)” have only one fixed type of connection, so they are easily mistaken for long terms and left incompletely segmented. By contrast, some long compound names, such as “He Nan Sheng/Guo Tu/Zi Yuan/Ting (Henan Provincial Department of Land and Resources)” and “Bei Jing/Da Di/Xi Yuan/Kuang Ye/Guan Li/Ji Shu/You Xian/Gong Si (Beijing Dadi Xiyuan Mining Management Technology Co., Ltd.)”, are segmented correctly.
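Both shortcomings come down to the neighbor (branching) entropy of a candidate term: the Shannon entropy of the characters that occur immediately before (left) or after (right) it. Section 3.2 gives no formula in this version, so this sketch assumes that standard definition, measured in bits, with text edges counted as a boundary symbol. The toy token sequence and function name are hypothetical; each pinyin syllable stands for one Chinese character.

```python
import math
from collections import Counter
from typing import Sequence

# Hypothetical toy text; each pinyin syllable stands for one Chinese character.
CORPUS = "Zhao Jia Zhuang , Bai Jia Zhuang , Guo Jia Zhuang , Lue Xian Ya Bian La Chang .".split()


def neighbor_entropy(tokens: Sequence[str], term: Sequence[str], side: str) -> float:
    """Entropy (bits) of the characters adjacent to every occurrence of term.

    side="left" inspects the character before each occurrence, side="right"
    the one after it; the edges of the text count as a boundary symbol "<B>".
    """
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    n, k = len(tokens), len(term)
    neighbors: Counter = Counter()
    for i in range(n - k + 1):
        if list(tokens[i:i + k]) == list(term):
            if side == "left":
                neighbors[tokens[i - 1] if i > 0 else "<B>"] += 1
            else:
                neighbors[tokens[i + k] if i + k < n else "<B>"] += 1
    total = sum(neighbors.values())
    if total == 0:
        return 0.0  # term does not occur: no evidence either way
    return sum(-(c / total) * math.log2(c / total) for c in neighbors.values())


if __name__ == "__main__":
    for term in ("Jia Zhuang", "Zhao Jia Zhuang", "Bai Jia Zhuang", "Ya Bian"):
        t = term.split()
        print(term, round(neighbor_entropy(CORPUS, t, "left"), 3),
              round(neighbor_entropy(CORPUS, t, "right"), 3))
```

On this toy input, the shared suffix Jia Zhuang has a left entropy of log2 3 ≈ 1.58 bits, whereas each complete name occurs once and scores 0, which is the pattern behind the Zhao/Jia Zhuang errors. Every sub-span of the once-occurring phrase Lue Xian Ya Bian La Chang also scores 0 on both sides, so the statistics offer no internal boundary at which to split it.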

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Type of Conflict | Side | Candidate Term 1 | Information Entropy 1 | Candidate Term 2 | Information Entropy 2 |
|---|---|---|---|---|---|
| same-starting-point inclusive | right | Zhong Yuan Gu (Mesoproterozoic) | 0.27 | Zhong Yuan Gu Jie (Mesoproterozoic Erathem) | 3.24 |
| | | Zan Huang (Zanhuang) | 0.42 | Zan Huang Yan Qun (Zanhuang Group) | 5.27 |
| same-ending-point inclusive | left | Sha Zhi Ye Yan (sandy shale) | 2.86 | Zhi Ye Yan (quality shale) | 1.35 |
| | | Li Shi Ceng (gravel layer) | 3.65 | Jia Li Shi Ceng (with gravel layer) | 1.00 |
| inner inclusive | both | Jia Gou (Jiagou) | (0.82, 0.53) | Ma Jia Gou Zu (Majiagou Formation) | (4.89, 3.87) |
| | | Shi Ying (quartz) | (4.40, 3.33) | Yu Shi Ying Xiang Jian (alternate with quartz) | (0.91, 2.25) |
| intersecting | connected | Hui Se (grey) | (7.74, 3.30) | Se Zhong (color middle) | (2.13, 1.40) |
| | | Ju Di (office No.) | (1.76, 1.36) | Di Qi (seventh) | (2.16, 1.49) |
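The four conflict types in the table above can be told apart purely from the positions of the two candidate terms. This sketch assumes candidates are half-open [start, end) offsets counted in characters (here, pinyin syllables) and returns the conflict type together with the side listed in the Side column; the span representation, the example contexts, and the function names are hypothetical.

```python
from typing import List, Optional, Tuple

Span = Tuple[int, int]  # half-open [start, end) offsets, in characters


def locate(tokens: List[str], term: List[str]) -> Span:
    """Span of the first occurrence of term (a list of syllables) in tokens."""
    k = len(term)
    for i in range(len(tokens) - k + 1):
        if tokens[i:i + k] == term:
            return (i, i + k)
    raise ValueError(f"{term} not found")


def conflict_type(a: Span, b: Span) -> Optional[Tuple[str, str]]:
    """Classify two candidate spans as (type of conflict, side to compare)."""
    (s1, e1), (s2, e2) = a, b
    if a == b or e1 <= s2 or e2 <= s1:
        return None  # identical or disjoint spans do not conflict
    if s1 == s2:
        return ("same-starting-point inclusive", "right")
    if e1 == e2:
        return ("same-ending-point inclusive", "left")
    if (s1 < s2 and e2 < e1) or (s2 < s1 and e1 < e2):
        return ("inner inclusive", "both")
    return ("intersecting", "connected")  # partial overlap


if __name__ == "__main__":
    examples = [
        ("Zhong Yuan Gu Jie", "Zhong Yuan Gu", "Zhong Yuan Gu Jie"),
        ("Sha Zhi Ye Yan", "Sha Zhi Ye Yan", "Zhi Ye Yan"),
        ("Ma Jia Gou Zu", "Jia Gou", "Ma Jia Gou Zu"),
        ("Hui Se Zhong", "Hui Se", "Se Zhong"),
    ]
    for text, c1, c2 in examples:
        tokens = text.split()
        print(c1, "|", c2, "->",
              conflict_type(locate(tokens, c1.split()), locate(tokens, c2.split())))
```

Checking equal starts and equal ends before strict containment matters: a pair such as Zhong Yuan Gu / Zhong Yuan Gu Jie is also a containment, but the table files it under the same-starting-point type and compares only the right side.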

| Description | Text |
|---|---|
| Result in Pinyin | /Lin Zhou Shi/Ren Cun Zhen/Mu Qiu Quan/Zan Huang Yan Qun/Shi Ce/Di Zhi/Pou Mian/: Pou Mian/Qi Dian/Zuo Biao/X……/Hui Hei Se/Shi Bian/Hui Chang Hui Lü Yan/,/Zhu Yao Kuang Wu/Cheng Fen:/Xie Chang Shi/50%,/Tou Hui Shi/44% |
| Result in English | /Geological profile/measured in/Muqiuquan/Zanhuang Rock Group/,/Rencun Town/,/Linzhou City/:The profile/starting coordinates/X……/Gray-black/altered gabbro, main mineral composition:/plagioclase/50%,/diopside/44% |

| Data | Text Length | Tools | Accuracy | Precision | Recall | F1-Value |
|---|---|---|---|---|---|---|
| Rencun | 241k | HFCR | 92.38% | 95.85% | 95.90% | 95.88% |
| | | Baidu LAC | 80.63% | 86.62% | 89.86% | 88.21% |
| | | Pkuseg | 83.35% | 95.55% | 83.91% | 89.35% |
| | | Hanlp | 81.49% | 88.23% | 89.18% | 88.70% |
| | | Jieba | 78.95% | 87.94% | 84.99% | 86.44% |
| Nanpanjiang | 346k | HFCR | 93.91% | 96.89% | 96.62% | 96.76% |
| | | Baidu LAC | 78.13% | 80.12% | 95.77% | 87.25% |
| | | Pkuseg | 86.18% | 97.60% | 86.07% | 91.48% |
| | | Hanlp | 79.31% | 83.00% | 92.90% | 87.69% |
| | | Jieba | 83.99% | 91.46% | 89.27% | 90.35% |
| Xiaoshan | 576k | HFCR | 93.98% | 97.18% | 96.39% | 96.78% |
| | | Baidu LAC | 80.62% | 86.47% | 90.06% | 88.23% |
| | | Pkuseg | 82.72% | 92.55% | 86.04% | 89.18% |
| | | Hanlp | 80.73% | 89.20% | 86.62% | 87.89% |
| | | Jieba | 77.28% | 87.62% | 82.17% | 84.80% |
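The Precision, Recall, and F1-Value columns follow the usual word-level definitions for segmentation: a predicted word counts as correct only if both its start and end positions match a gold-standard word. This sketch assumes gold and predicted results are written in the slash-delimited style of the segmentation-result tables; the function names are hypothetical, and because this version does not define the Accuracy column, it is not reproduced here.

```python
from typing import Set, Tuple

Span = Tuple[int, int]  # half-open [start, end) character offsets


def to_spans(segmented: str, sep: str = "/") -> Set[Span]:
    """Turn a separator-delimited segmentation into a set of word spans.

    Whitespace inside a word is dropped so pinyin renderings such as
    "Jia Zhuang" line up like unspaced Chinese text.
    """
    spans: Set[Span] = set()
    pos = 0
    for piece in segmented.split(sep):
        word = "".join(piece.split())
        if word:  # skip empty pieces from leading or trailing separators
            spans.add((pos, pos + len(word)))
            pos += len(word)
    return spans


def prf1(gold: str, pred: str) -> Tuple[float, float, float]:
    """Word-level precision, recall and F1; a word is correct if its span matches."""
    g, p = to_spans(gold), to_spans(pred)
    if sum(e - s for s, e in g) != sum(e - s for s, e in p):
        raise ValueError("gold and predicted segmentations differ in total length")
    correct = len(g & p)
    precision = correct / len(p) if p else 0.0
    recall = correct / len(g) if g else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    gold = "Zhao Jia Zhuang/,/Bai Jia Zhuang/,/Guo Jia Zhuang"
    pred = "Zhao/Jia Zhuang/,/Bai/Jia Zhuang/,/Guo/Jia Zhuang"
    print(prf1(gold, pred))  # only the 2 commas match: P = 2/8, R = 2/5
```

Applied to the Jiazhuang error from the Discussion, only the two commas survive as correct words, giving precision 0.25 and recall 0.4: over-splitting a name costs precision and recall at once.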

| Description | Text |
|---|---|
| Result in Pinyin | /Zhe Xie/Te Shu/De/She Ji/,/Dui Zhe/Li De/Bing Ren/Lai Shuo/Shi Tie/Xin De/Guan Huai/./Zuo Wei/Ao Si Lu/Da Xue/De Yi/Bu Fen/,/Song Nuo Si/Yi Yuan/Dan Fu Zhe/Shu Xiang/Zhong Yao/De/Can Ji Ren/Kang Fu/Ke Yan/Gong Zuo/,/Bing Shi/Nuo Wei/Yan Jiu/Wai Ke/Yong Yao/He/Kang Fu/Yi Liao/De/Zhu Yao/Yi Yuan/. |
| Result in English | /These/special/designs/,/for/the patients/here/is intimate/care/./As/part/of the/University of/Oslo/,/Sonnos/Hospital/is responsible for/several/important/research/on the rehabilitation of/persons with disabilities/and is/the main/hospital/in Norway/for research/on surgical medicine/and/rehabilitation/. |

| Data | Tools | Accuracy | Precision | Recall | F1-Value |
|---|---|---|---|---|---|
| MSR | HFCR | 79.37% | 86.63% | 87.51% | 87.07% |
| | Baidu LAC | 86.46% | 90.41% | 94.35% | 92.38% |
| | Pkuseg | 87.40% | 94.81% | 90.54% | 92.63% |
| | Hanlp | 85.53% | 90.99% | 92.21% | 91.60% |
| | Jieba | 78.63% | 87.85% | 84.50% | 86.15% |
| PKU | HFCR | 78.93% | 83.20% | 91.86% | 87.32% |
| | Baidu LAC | 82.51% | 84.00% | 97.35% | 90.18% |
| | Pkuseg | 90.25% | 92.41% | 97.18% | 94.73% |
| | Hanlp | 86.43% | 88.20% | 97.32% | 92.54% |
| | Jieba | 77.17% | 83.22% | 88.20% | 85.64% |

| Data | Tools | Accuracy | Precision | Recall | F1-Value |
|---|---|---|---|---|---|
| Rencun | HFCR | 92.38% | 95.85% | 95.90% | 95.88% |
| | WMSEG | 68.34% | 79.27% | 72.68% | 75.84% |
| | GPT-3 | 92.45% * | | | |
| Nanpanjiang | HFCR | 93.91% | 96.89% | 96.62% | 96.76% |
| | WMSEG | 80.03% | 92.83% | 81.33% | 86.70% |
| | GPT-3 | 94.59% * | | | |
| Xiaoshan | HFCR | 93.98% | 97.18% | 96.39% | 96.78% |
| | WMSEG | 76.25% | 86.05% | 82.16% | 84.06% |
| | GPT-3 | 92.24% * | | | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, Y.; Deng, J.; Guo, Z. Candidate Term Boundary Conflict Reduction Method for Chinese Geological Text Segmentation. Appl. Sci. 2023, 13, 4516. https://doi.org/10.3390/app13074516
