A Full-Color Holographic System Based on Taylor Rayleigh–Sommerfeld Diffraction Point Cloud Grid Algorithm

Abstract

1. Introduction

1.1. Background

1.2. Related Research

1.3. Research in This Paper

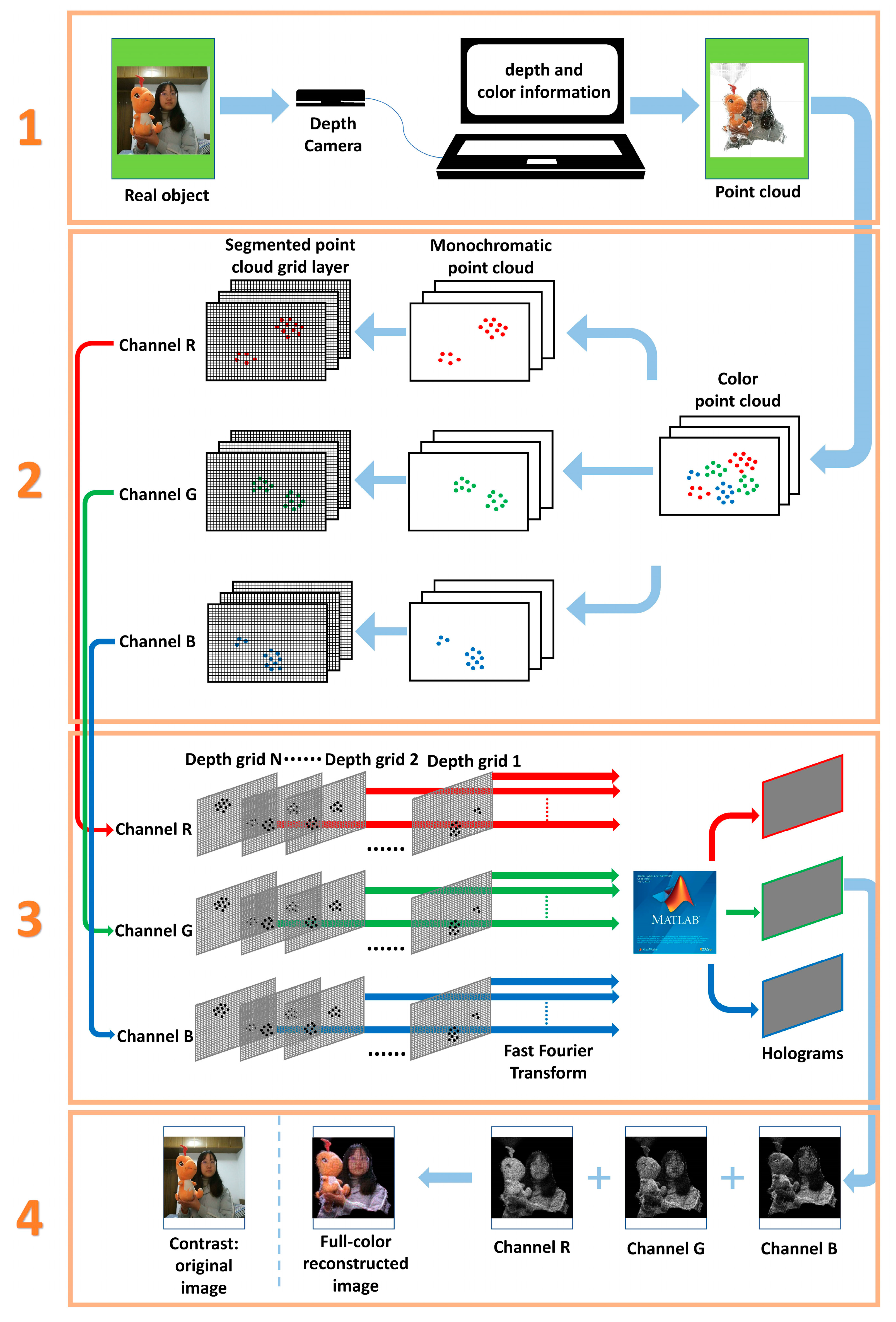

2. Introduction to the Full-Color Holographic System Module

3. Theoretical Derivation of the TR-PCG Algorithm

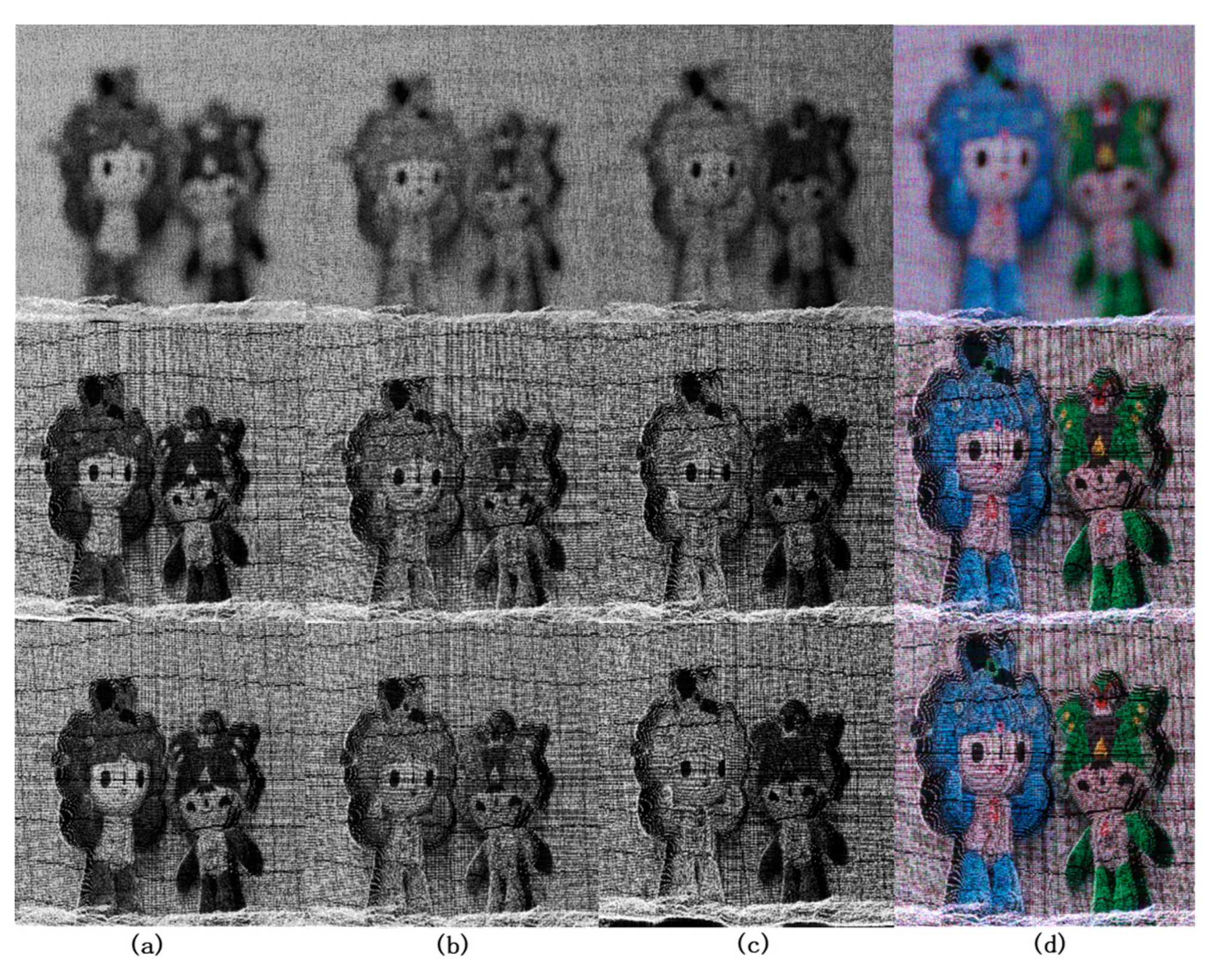

4. Experiments and Results

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


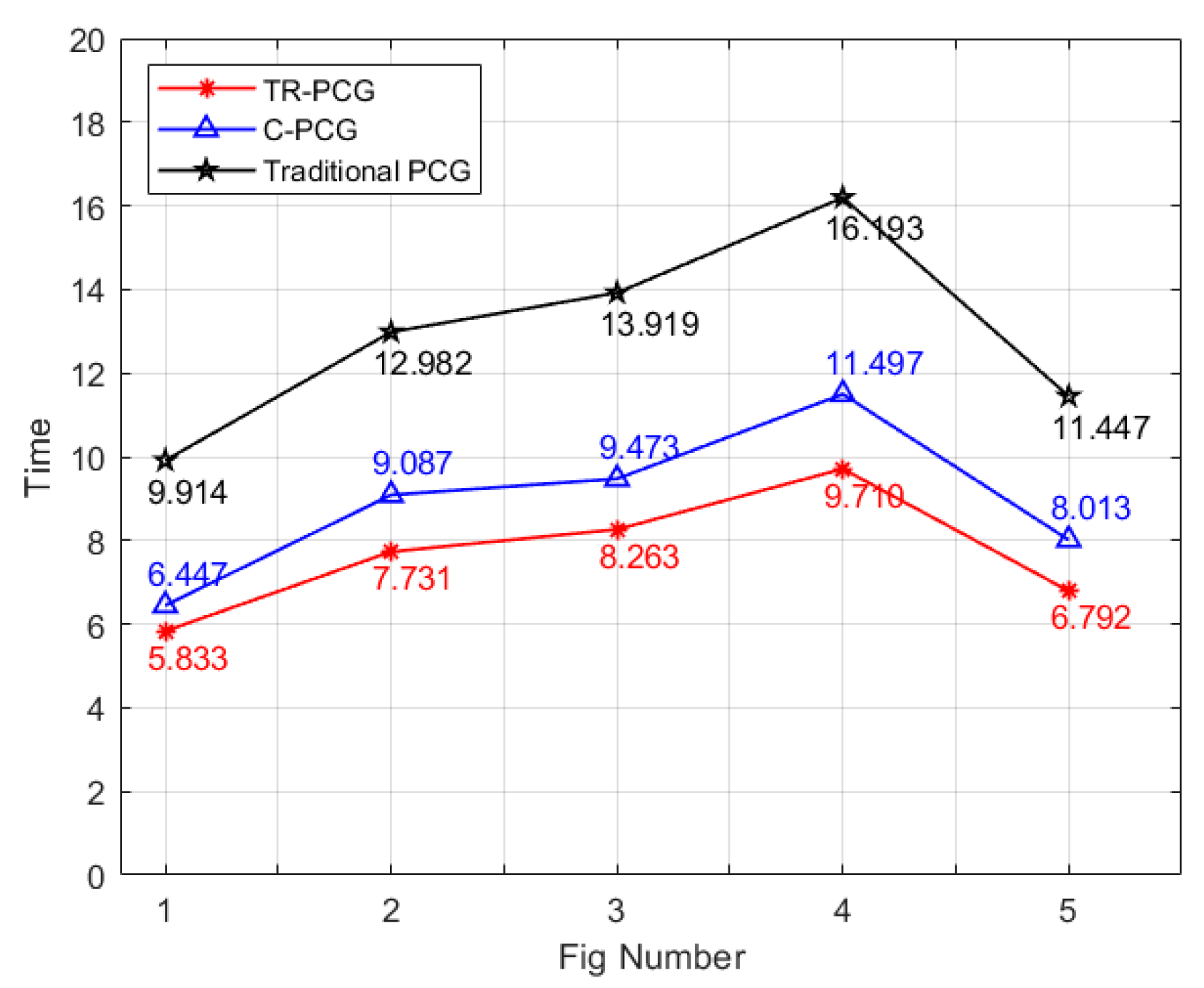

CPU running time

| Object | Number of points | Number of layers | WRP | Traditional PCG | C-PCG | RS-PCG | TR-PCG |
|---|---|---|---|---|---|---|---|
| beibei_nini | 1,273,824 | 350 | 15,125.533 | 9.914 | 6.447 | 7.815 | 5.833 |
| person with beibei | 694,269 | 466 | 9372.064 | 12.982 | 9.087 | 10.080 | 7.731 |
| person with dragon | 671,427 | 500 | 8996.601 | 13.919 | 9.473 | 10.914 | 8.263 |
| dragon | 481,815 | 586 | 8088.917 | 16.193 | 11.497 | 12.784 | 9.710 |
| person with nini | 617,904 | 410 | 8523.862 | 11.447 | 8.013 | 8.963 | 6.792 |
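The CPU table above can be summarized as the share of running time TR-PCG saves relative to each PCG-family baseline. The sketch below is an illustrative calculation only, not code from the original study: it hard-codes the table values (WRP omitted for brevity), keeps the table's unstated time unit, and uses hypothetical names (`CPU`, `reduction_percent`, `tr_pcg_savings`).

```python
# CPU running times copied from the table above (same, unstated, units).
# Tuple order per object: Traditional PCG, C-PCG, RS-PCG, TR-PCG.
BASELINES = ("Traditional PCG", "C-PCG", "RS-PCG")

CPU = {
    "beibei_nini":        (9.914, 6.447, 7.815, 5.833),
    "person with beibei": (12.982, 9.087, 10.080, 7.731),
    "person with dragon": (13.919, 9.473, 10.914, 8.263),
    "dragon":             (16.193, 11.497, 12.784, 9.710),
    "person with nini":   (11.447, 8.013, 8.963, 6.792),
}


def reduction_percent(baseline: float, proposed: float) -> float:
    """Share of the baseline running time saved by the proposed method, in percent."""
    return 100.0 * (baseline - proposed) / baseline


def tr_pcg_savings(times: dict) -> dict:
    """For each object, percent of time TR-PCG saves against each baseline method."""
    result = {}
    for name, (*baselines, tr_pcg) in times.items():
        result[name] = {
            method: reduction_percent(b, tr_pcg)
            for method, b in zip(BASELINES, baselines)
        }
    return result


savings = tr_pcg_savings(CPU)
for name, per_method in savings.items():
    print(name, ", ".join(f"{m}: {v:.1f}%" for m, v in per_method.items()))
```

Expressing the comparison as a relative reduction keeps it independent of the time unit, which the table does not state.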

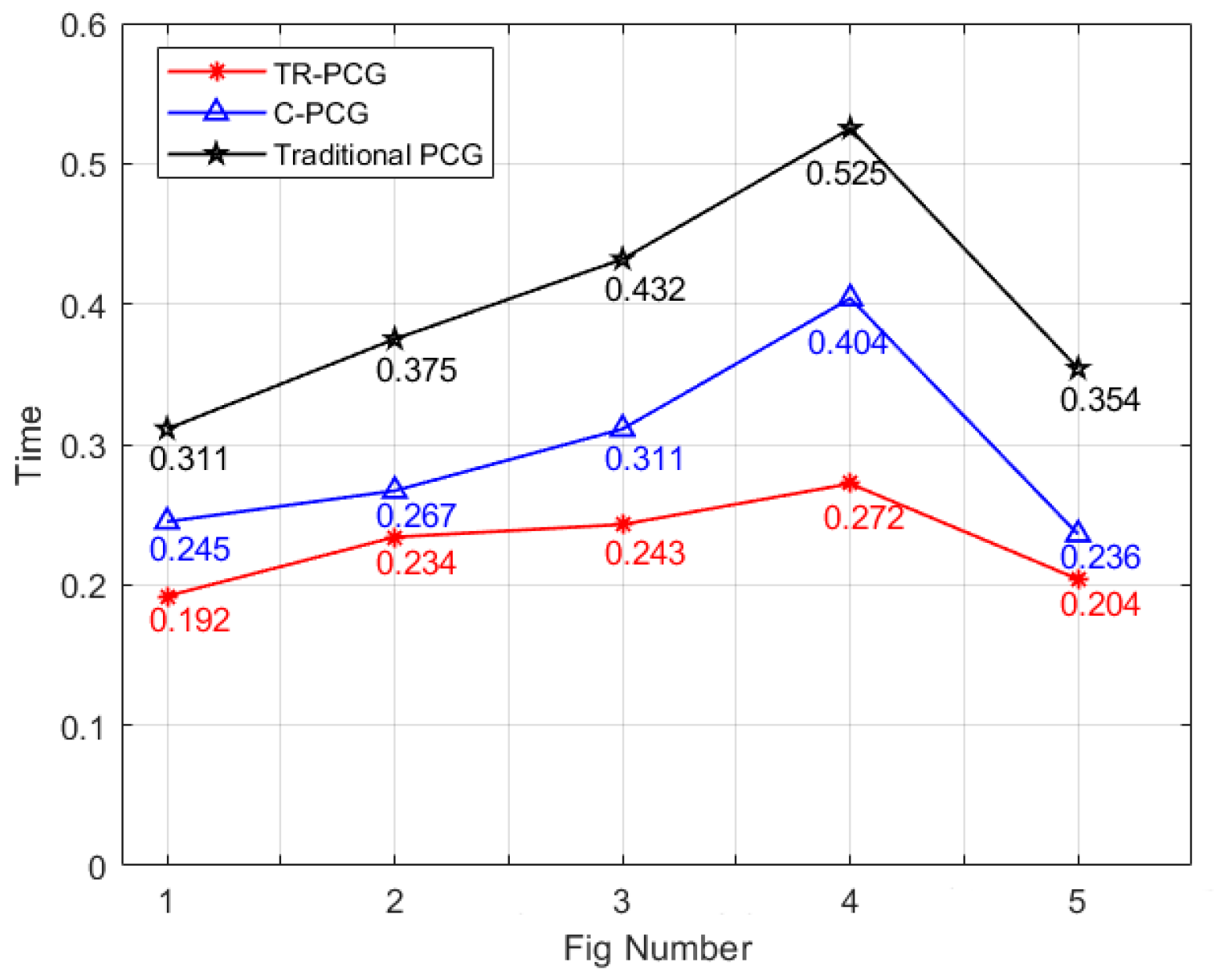

GPU running time

| Object | Number of points | Number of layers | WRP | Traditional PCG | C-PCG | RS-PCG | TR-PCG |
|---|---|---|---|---|---|---|---|
| beibei_nini | 1,273,824 | 350 | 78.162 | 0.311 | 0.245 | 0.253 | 0.192 |
| person with beibei | 694,269 | 466 | 49.338 | 0.375 | 0.267 | 0.295 | 0.234 |
| person with dragon | 671,427 | 500 | 45.791 | 0.432 | 0.311 | 0.336 | 0.243 |
| dragon | 481,815 | 586 | 40.985 | 0.525 | 0.404 | 0.431 | 0.272 |
| person with nini | 617,904 | 410 | 43.272 | 0.354 | 0.236 | 0.262 | 0.204 |
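Since the CPU and GPU tables above cover the same objects and methods, dividing each CPU time by the matching GPU time gives a per-method GPU acceleration factor. This is a hedged illustration rather than part of the original evaluation; the data are copied from the two tables, and `METHODS`, `CPU`, `GPU` and `gpu_speedup` are hypothetical names.

```python
# Running times copied from the CPU and GPU tables above (first object set).
# Tuple order follows the table columns.
METHODS = ("WRP", "Traditional PCG", "C-PCG", "RS-PCG", "TR-PCG")

CPU = {
    "beibei_nini":        (15125.533, 9.914, 6.447, 7.815, 5.833),
    "person with beibei": (9372.064, 12.982, 9.087, 10.080, 7.731),
    "person with dragon": (8996.601, 13.919, 9.473, 10.914, 8.263),
    "dragon":             (8088.917, 16.193, 11.497, 12.784, 9.710),
    "person with nini":   (8523.862, 11.447, 8.013, 8.963, 6.792),
}
GPU = {
    "beibei_nini":        (78.162, 0.311, 0.245, 0.253, 0.192),
    "person with beibei": (49.338, 0.375, 0.267, 0.295, 0.234),
    "person with dragon": (45.791, 0.432, 0.311, 0.336, 0.243),
    "dragon":             (40.985, 0.525, 0.404, 0.431, 0.272),
    "person with nini":   (43.272, 0.354, 0.236, 0.262, 0.204),
}


def gpu_speedup(cpu: dict, gpu: dict) -> dict:
    """Return {object: {method: cpu_time / gpu_time}} for every object in `cpu`."""
    return {
        name: {m: c / g for m, c, g in zip(METHODS, cpu[name], gpu[name])}
        for name in cpu
    }


speedup = gpu_speedup(CPU, GPU)
for name, ratios in speedup.items():
    print(name, ", ".join(f"{m}: {r:.1f}x" for m, r in ratios.items()))
```

A ratio is unit-free as long as both tables report times in the same unit, which is assumed here.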

CPU running time

| Object | Number of points | Number of layers | Traditional PCG | C-PCG | RS-PCG | TR-PCG |
|---|---|---|---|---|---|---|
| beibei_nini | 1,255,023 | 473 | 65.854 | 43.171 | 54.377 | 37.633 |
| person with beibei | 726,075 | 765 | 105.332 | 77.425 | 87.368 | 60.756 |
| person with dragon | 600,759 | 837 | 115.258 | 83.689 | 95.639 | 66.412 |
| dragon | 1,240,452 | 486 | 67.147 | 46.077 | 55.384 | 38.661 |
| person with nini | 643,329 | 691 | 94.962 | 71.343 | 78.135 | 54.530 |

GPU running time

| Object | Number of points | Number of layers | Traditional PCG | C-PCG | RS-PCG | TR-PCG |
|---|---|---|---|---|---|---|
| beibei_nini | 1,255,023 | 473 | 2.126 | 1.808 | 1.816 | 1.143 |
| person with beibei | 726,075 | 763 | 3.464 | 2.571 | 2.971 | 1.972 |
| person with dragon | 600,759 | 836 | 4.038 | 2.942 | 3.385 | 2.303 |
| dragon | 1,240,452 | 486 | 2.327 | 1.648 | 1.917 | 1.152 |
| person with nini | 643,329 | 690 | 2.971 | 2.026 | 2.532 | 1.684 |
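For the second pair of CPU and GPU tables above, the advantage of TR-PCG over traditional PCG can be condensed into one number per platform: the unweighted mean, over the five objects, of the percent running time saved. This is an illustrative sketch only; the averaging choice and the names `CPU`, `GPU` and `mean_reduction_percent` are assumptions, not the authors' metric.

```python
from statistics import mean

# (Traditional PCG, TR-PCG) running times copied from the second CPU/GPU tables above.
CPU = {
    "beibei_nini":        (65.854, 37.633),
    "person with beibei": (105.332, 60.756),
    "person with dragon": (115.258, 66.412),
    "dragon":             (67.147, 38.661),
    "person with nini":   (94.962, 54.530),
}
GPU = {
    "beibei_nini":        (2.126, 1.143),
    "person with beibei": (3.464, 1.972),
    "person with dragon": (4.038, 2.303),
    "dragon":             (2.327, 1.152),
    "person with nini":   (2.971, 1.684),
}


def mean_reduction_percent(times: dict) -> float:
    """Unweighted mean over objects of the percent time TR-PCG saves vs traditional PCG."""
    return mean(100.0 * (pcg - tr) / pcg for pcg, tr in times.values())


mean_cpu = mean_reduction_percent(CPU)
mean_gpu = mean_reduction_percent(GPU)
print(f"CPU: {mean_cpu:.1f}%  GPU: {mean_gpu:.1f}%")
```

An unweighted mean treats every object equally; weighting by point or layer count would be an equally defensible alternative.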

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Yang, Q.; Zhao, Y.; Liu, W.; Bu, J.; Ji, J. A Full-Color Holographic System Based on Taylor Rayleigh–Sommerfeld Diffraction Point Cloud Grid Algorithm. Appl. Sci. 2023, 13, 4466. https://doi.org/10.3390/app13074466


Chicago/Turabian Style: Yang, Qinhui, Yu Zhao, Wei Liu, Jingwen Bu, and Jiahui Ji. 2023. "A Full-Color Holographic System Based on Taylor Rayleigh–Sommerfeld Diffraction Point Cloud Grid Algorithm" Applied Sciences 13, no. 7: 4466. https://doi.org/10.3390/app13074466
