TS-CGANet: A Two-Stage Complex and Real Dual-Path Sub-Band Fusion Network for Full-Band Speech Enhancement

Abstract

1. Introduction

- We introduce the convolution-augmented gated attention unit (CGAU) into full-band speech enhancement and use spectrum splitting to process full-band speech. This design better captures the global and local information of low-frequency speech and provides external knowledge guidance when processing high-frequency speech;

- We improve the CGAU and build a complex and real dual-path speech enhancement network that enables information interaction between the real and imaginary parts and improves the speech enhancement performance;

- On the Voice Bank + DEMAND dataset [21], the proposed TS-CGANet outperforms previous methods, and an ablation experiment verifies our design choices.
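
The spectrum splitting mentioned in the first contribution can be illustrated with a minimal sketch. The sample rate, FFT size, and band edges below are assumptions chosen for illustration only; this section does not state the values the network actually uses, and the helper names are hypothetical.

```python
def band_bin_ranges(sample_rate, n_fft, band_edges_hz):
    """Map band edges in Hz to half-open STFT bin ranges [start, stop)."""
    n_bins = n_fft // 2 + 1              # size of the one-sided spectrum
    hz_per_bin = sample_rate / n_fft     # frequency resolution per bin
    edges = [round(f / hz_per_bin) for f in band_edges_hz]
    edges[-1] = n_bins                   # the last band keeps the Nyquist bin
    return list(zip(edges[:-1], edges[1:]))


def split_spectrum(frame, ranges):
    """Slice one spectral frame (a list of bins) into sub-bands."""
    return [frame[start:stop] for start, stop in ranges]


# Assumed settings (not from the paper): 48 kHz audio, 960-point FFT,
# and three bands with edges at 0, 8, 16, and 24 kHz.
ranges = band_bin_ranges(48000, 960, [0, 8000, 16000, 24000])
frame = list(range(481))                 # stand-in for one frame of 481 bins
low, mid, high = split_spectrum(frame, ranges)
```

Concatenating `low`, `mid`, and `high` reproduces the original frame, so each sub-band can be handled by its own network and the outputs fused back into a full-band spectrum.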

2. Related Works

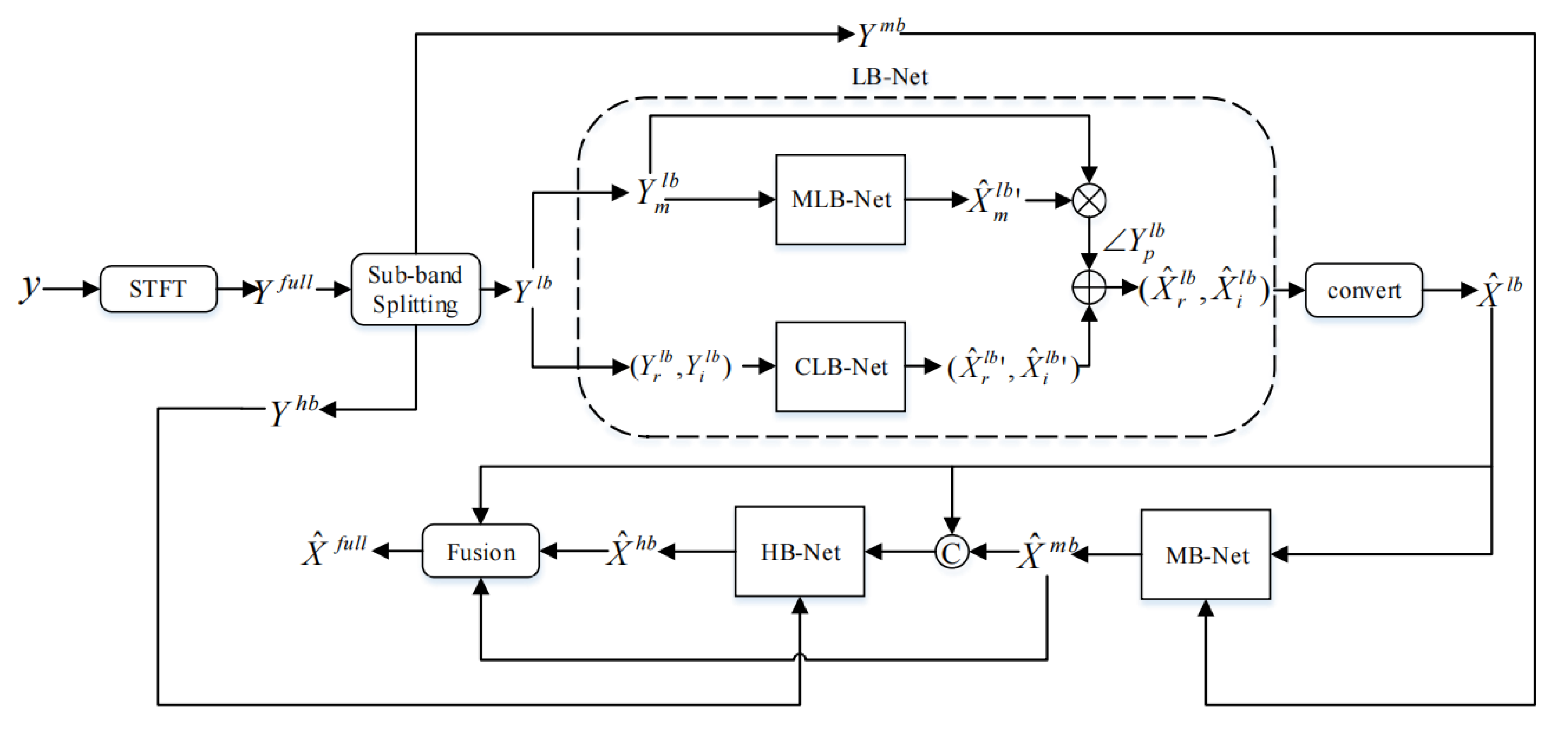

3. Methodology

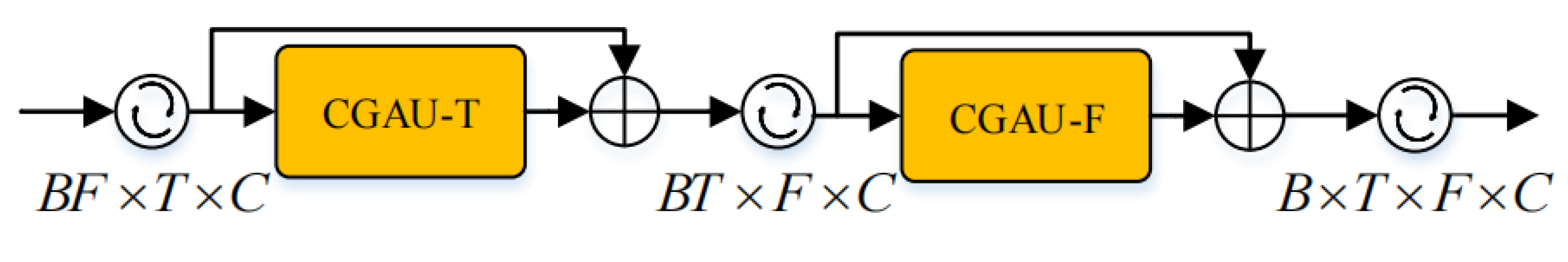

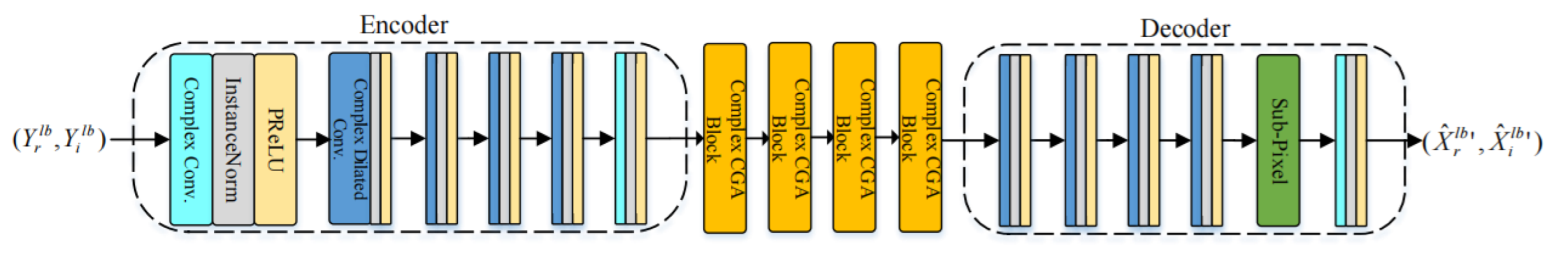

3.1. Dual-Branch Low-Band Speech Enhancement Network LB-Net

3.1.1. Encoder and Decoder

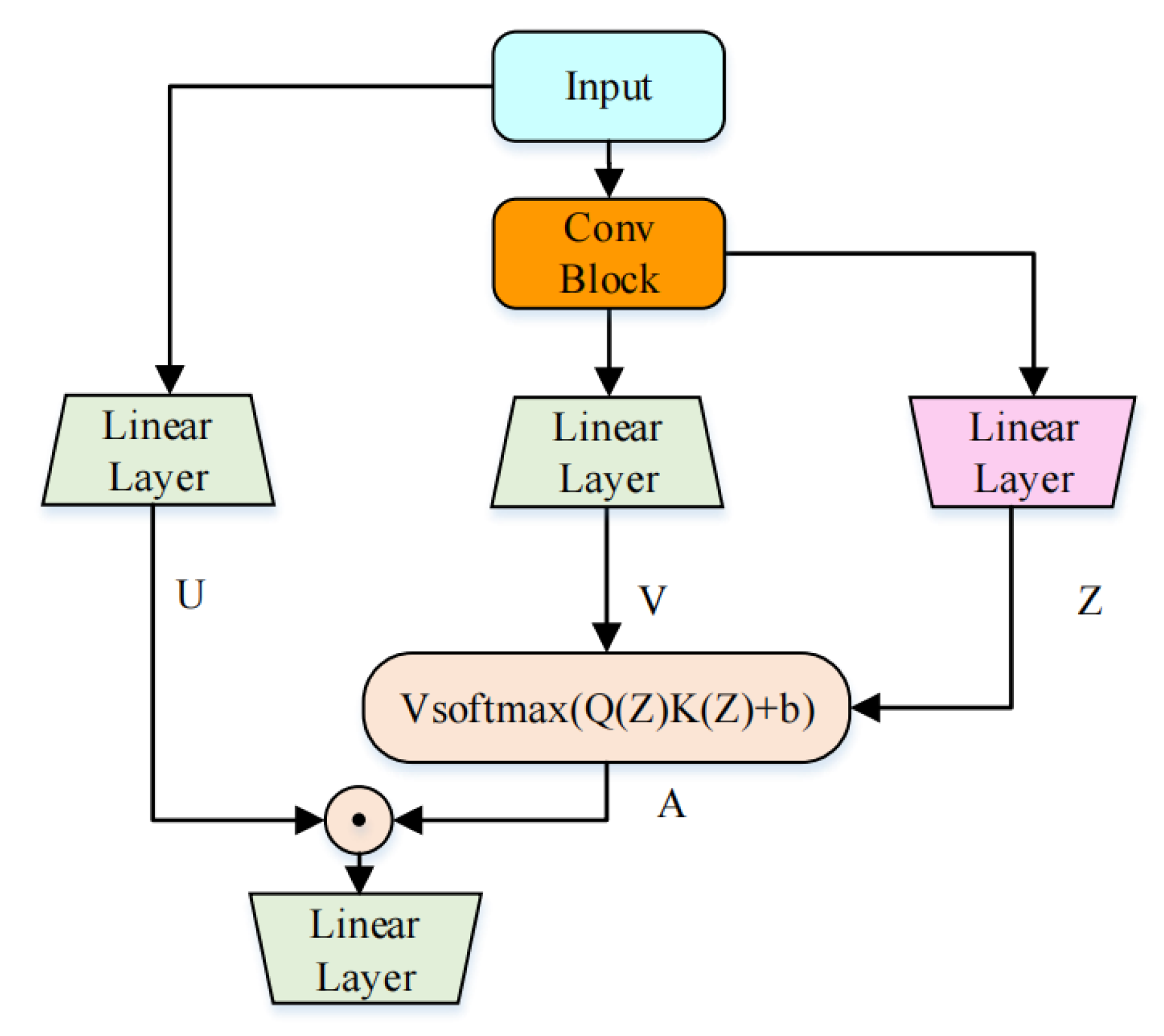

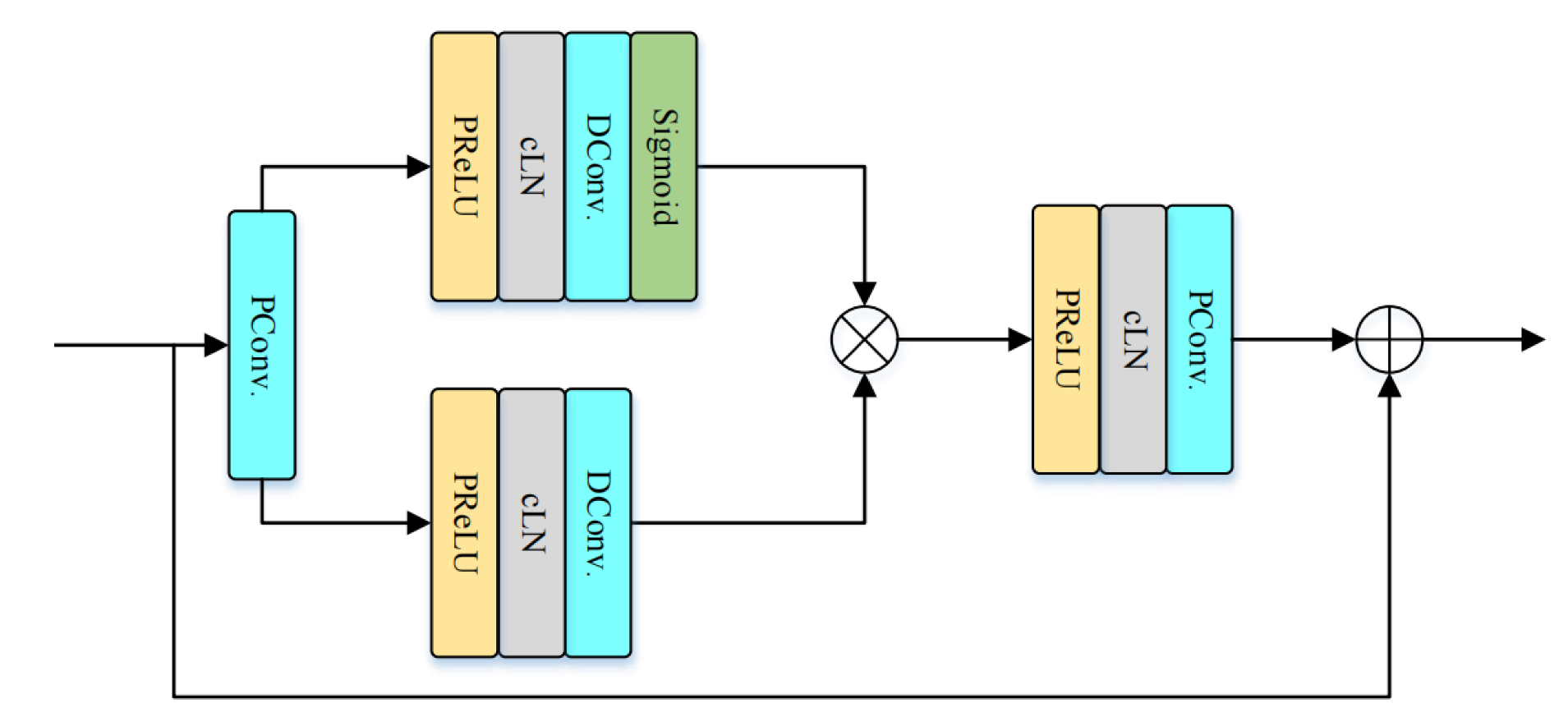

3.1.2. Complex Convolution-Augmented Gated Attention Units

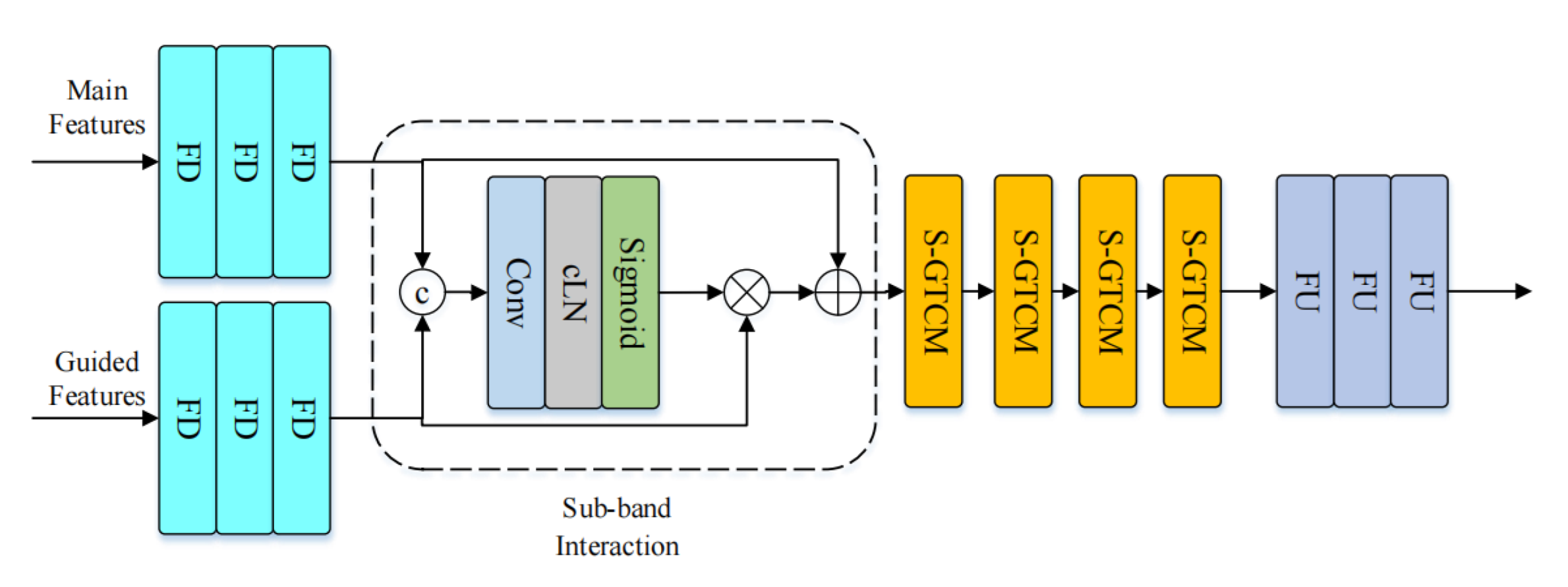

3.2. Medium and High Band Speech Enhancement Network

3.3. Loss Function

4. Experiments

4.1. Datasets and Settings

4.2. Evaluation Indicators

- PESQ [32]: The perceptual evaluation of speech quality, ranging from −0.5 to 4.5;

- CSIG [33]: The mean opinion score (MOS) prediction of the signal distortion, ranging from 1 to 5;

- CBAK [33]: The MOS prediction of the background noise intrusiveness, ranging from 1 to 5;

- COVL [33]: The MOS prediction of the overall effect, ranging from 1 to 5;

- STOI [34]: The short-time objective intelligibility, ranging from 0 to 1.
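
The ranges listed above can serve as a simple sanity check when collecting scores. The following is a minimal sketch; `METRIC_RANGES` and `out_of_range` are hypothetical names introduced here, and the example scores are the TS-CGANet row of the results table.

```python
# Valid score ranges as listed in the evaluation indicators.
METRIC_RANGES = {
    "PESQ": (-0.5, 4.5),
    "CSIG": (1.0, 5.0),
    "CBAK": (1.0, 5.0),
    "COVL": (1.0, 5.0),
    "STOI": (0.0, 1.0),
}


def out_of_range(scores):
    """Return the names of metrics whose score falls outside its range."""
    return [
        name
        for name, value in scores.items()
        if not (METRIC_RANGES[name][0] <= value <= METRIC_RANGES[name][1])
    ]


# Scores reported for TS-CGANet in the results table.
ts_cganet = {"PESQ": 3.30, "CSIG": 4.31, "CBAK": 3.69, "COVL": 3.82, "STOI": 0.95}
invalid = out_of_range(ts_cganet)        # empty list when every score is valid
```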

5. Results and Discussion

5.1. Baselines and Results Analysis

5.2. Ablation Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yuliani, A.R.; Amri, M.F.; Suryawati, E.; Ramdan, A.; Pardede, H.F. Speech Enhancement Using Deep Learning Methods: A Review. J. Elektron. Telekomun. 2021, 21, 19–26. [Google Scholar] [CrossRef]

- Chen, J.; Wang, Z.; Tuo, D.; Wu, Z.; Kang, S.; Meng, H. FullSubNet+: Channel attention fullsubnet with complex spectrograms for speech enhancement. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 7857–7861. [Google Scholar]

- Michelsanti, D.; Tan, Z.-H.; Zhang, S.-X.; Xu, Y.; Yu, M.; Yu, D.; Jensen, J. An overview of deep-learning-based audio-visual speech enhancement and separation. IEEE ACM Trans. Audio Speech Lang. Process. 2021, 29, 1368–1396. [Google Scholar] [CrossRef]

- Tan, K.; Wang, D. Towards model compression for deep learning based speech enhancement. IEEE ACM Trans. Audio Speech Lang. Process. 2021, 29, 1785–1794. [Google Scholar] [CrossRef] [PubMed]

- Schröter, H.; Maier, A.; Escalante-B., A.; Rosenkranz, T. Deepfilternet2: Towards Real-Time Speech Enhancement on Embedded Devices for Full-Band Audio. In Proceedings of the 2022 International Workshop on Acoustic Signal Enhancement (IWAENC), Bamberg, Germany, 5–8 September 2022; pp. 1–5. [Google Scholar]

- Ochieng, P. Deep neural network techniques for monaural speech enhancement: State of the art analysis. arXiv 2022, arXiv:2212.00369. [Google Scholar]

- Hao, X.; Wen, S.; Su, X.; Liu, Y.; Gao, G.; Li, X. Sub-band knowledge distillation framework for speech enhancement. arXiv 2020, arXiv:2005.14435. [Google Scholar]

- Hu, Q.; Hou, Z.; Le, X.; Lu, J. A light-weight full-band speech enhancement model. arXiv 2022, arXiv:2206.14524. [Google Scholar]

- Dehghan Firoozabadi, A.; Irarrazaval, P.; Adasme, P.; Zabala-Blanco, D.; Durney, H.; Sanhueza, M.; Palacios-Játiva, P.; Azurdia-Meza, C. Multiresolution speech enhancement based on proposed circular nested microphone array in combination with sub-band affine projection algorithm. Appl. Sci. 2020, 10, 3955. [Google Scholar] [CrossRef]

- Zhang, Z.; Zhang, L.; Zhuang, X.; Qian, Y.; Li, H.; Wang, M. FB-MSTCN: A full-band single-channel speech enhancement method based on multi-scale temporal convolutional network. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 9276–9280. [Google Scholar]

- Valin, J.-M. A hybrid DSP/deep learning approach to real-time full-band speech enhancement. In Proceedings of the 2018 IEEE 20th International Workshop on Multimedia Signal Processing (MMSP), Vancouver, BC, Canada, 29–31 August 2018; pp. 1–5. [Google Scholar]

- Giri, R.; Venkataramani, S.; Valin, J.-M.; Isik, U.; Krishnaswamy, A. Personalized percepnet: Real-time, low-complexity target voice separation and enhancement. arXiv 2021, arXiv:2106.04129. [Google Scholar]

- Yu, C.; Lu, H.; Hu, N.; Yu, M.; Weng, C.; Xu, K.; Liu, P.; Tuo, D.; Kang, S.; Lei, G. Durian: Duration informed attention network for multimodal synthesis. arXiv 2019, arXiv:1909.01700. [Google Scholar]

- Yang, G.; Yang, S.; Liu, K.; Fang, P.; Chen, W.; Xie, L. Multi-band melgan: Faster waveform generation for high-quality text-to-speech. In Proceedings of the 2021 IEEE Spoken Language Technology Workshop (SLT), Shenzhen, China, 19–22 January 2021; pp. 492–498. [Google Scholar]

- Proakis, J.G. Digital Signal Processing: Principles, Algorithms, and Applications, 4/E.; Pearson Education India: Noida, India, 2007. [Google Scholar]

- Lv, S.; Hu, Y.; Zhang, S.; Xie, L. Dccrn+: Channel-wise subband dccrn with snr estimation for speech enhancement. arXiv 2021, arXiv:2106.08672. [Google Scholar]

- Li, J.; Luo, D.; Liu, Y.; Zhu, Y.; Li, Z.; Cui, G.; Tang, W.; Chen, W. Densely connected multi-stage model with channel wise subband feature for real-time speech enhancement. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Virtual, 6–11 June 2021; pp. 6638–6642. [Google Scholar]

- Yu, G.; Guan, Y.; Meng, W.; Zheng, C.; Wang, H. DMF-Net: A decoupling-style multi-band fusion model for real-time full-band speech enhancement. arXiv 2022, arXiv:2203.00472. [Google Scholar]

- Yu, G.; Li, A.; Liu, W.; Zheng, C.; Wang, Y.; Wang, H. Optimizing Shoulder to Shoulder: A Coordinated Sub-Band Fusion Model for Real-Time Full-Band Speech Enhancement. arXiv 2022, arXiv:2203.16033. [Google Scholar]

- Chen, H.; Zhang, X. CGA-MGAN: Metric GAN based on Convolution-augmented Gated Attention for Speech Enhancement. Preprints 2023, 2023020465. [Google Scholar] [CrossRef]

- Valentini-Botinhao, C.; Wang, X.; Takaki, S.; Yamagishi, J. Investigating RNN-based speech enhancement methods for noise-robust Text-to-Speech. In Proceedings of the SSW, Sunnyvale, CA, USA, 13–15 September 2016; pp. 146–152. [Google Scholar]

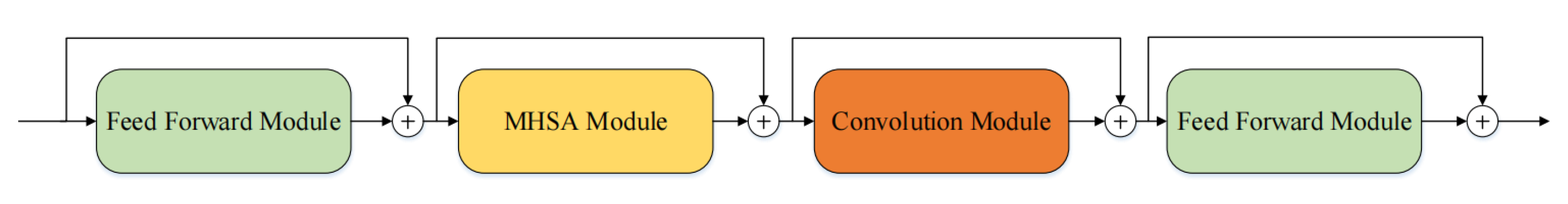

- Gulati, A.; Qin, J.; Chiu, C.-C.; Parmar, N.; Zhang, Y.; Yu, J.; Han, W.; Wang, S.; Zhang, Z.; Wu, Y. Conformer: Convolution-augmented transformer for speech recognition. arXiv 2020, arXiv:2005.08100. [Google Scholar]

- Pandey, A.; Wang, D. Densely connected neural network with dilated convolutions for real-time speech enhancement in the time domain. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6629–6633. [Google Scholar]

- Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Instance normalization: The missing ingredient for fast stylization. arXiv 2016, arXiv:1607.08022. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034. [Google Scholar]

- Fu, Y.; Liu, Y.; Li, J.; Luo, D.; Lv, S.; Jv, Y.; Xie, L. Uformer: A unet based dilated complex & real dual-path conformer network for simultaneous speech enhancement and dereverberation. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 7417–7421. [Google Scholar]

- Su, J.; Lu, Y.; Pan, S.; Murtadha, A.; Wen, B.; Liu, Y. Roformer: Enhanced transformer with rotary position embedding. arXiv 2021, arXiv:2104.09864. [Google Scholar]

- Dauphin, Y.N.; Fan, A.; Auli, M.; Grangier, D. Language modeling with gated convolutional networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 933–941. [Google Scholar]

- Zhang, S.; Wang, Z.; Ju, Y.; Fu, Y.; Na, Y.; Fu, Q.; Xie, L. Personalized acoustic echo cancellation for full-duplex communications. arXiv 2022, arXiv:2205.15195. [Google Scholar]

- Ju, Y.; Zhang, S.; Rao, W.; Wang, Y.; Yu, T.; Xie, L.; Shang, S. TEA-PSE 20: Sub-Band Network for Real-Time Personalized Speech Enhancement. In Proceedings of the 2022 IEEE Spoken Language Technology Workshop (SLT), Doha, Qatar, 9–12 January 2023; pp. 472–479. [Google Scholar]

- Pandey, A.; Wang, D. TCNN: Temporal convolutional neural network for real-time speech enhancement in the time domain. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6875–6879. [Google Scholar]

- Rix, A.W.; Beerends, J.G.; Hollier, M.P.; Hekstra, A.P. Perceptual evaluation of speech quality (PESQ)-a new method for speech quality assessment of telephone networks and codecs. In Proceedings of the 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing, Proceedings (Cat. No. 01CH37221), Salt Lake City, UT, USA, 7–11 May 2001; pp. 749–752. [Google Scholar]

- Hu, Y.; Loizou, P.C. Evaluation of objective quality measures for speech enhancement. IEEE Trans. Audio Speech Lang. Process. 2007, 16, 229–238. [Google Scholar] [CrossRef]

- Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. A short-time objective intelligibility measure for time-frequency weighted noisy speech. In Proceedings of the 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 14–19 March 2010; pp. 4214–4217. [Google Scholar]

- Tan, K.; Wang, D. Learning complex spectral mapping with gated convolutional recurrent networks for monaural speech enhancement. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 28, 380–390. [Google Scholar] [CrossRef] [PubMed]

- Li, A.; Liu, W.; Zheng, C.; Fan, C.; Li, X. Two heads are better than one: A two-stage complex spectral mapping approach for monaural speech enhancement. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 1829–1843. [Google Scholar] [CrossRef]

- Schroter, H.; Escalante-B, A.N.; Rosenkranz, T.; Maier, A. DeepFilterNet: A low complexity speech enhancement framework for full-band audio based on deep filtering. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 7407–7411. [Google Scholar]

- Lv, S.; Fu, Y.; Xing, M.; Sun, J.; Xie, L.; Huang, J.; Wang, Y.; Yu, T. S-dccrn: Super wide band dccrn with learnable complex feature for speech enhancement. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 7767–7771. [Google Scholar]

| Method | Algorithm |
|---|---|
| Feature Compression | RNNoise, PercepNet |
| Finite Impulse Response | Multi-band WaveRNN, Multi-band MelGAN |
| Spectrum Splitting | DMF-Net, SF-Net |

| Method | PESQ | CSIG | CBAK | COVL | STOI |
|---|---|---|---|---|---|
| Noisy | 1.97 | 3.35 | 2.44 | 2.63 | 0.91 |
| GCRN [35] | 2.71 | 4.12 | 3.23 | 3.41 | 0.94 |
| RNNoise [11] | 2.34 | 3.40 | 2.51 | 2.84 | 0.92 |
| PercepNet [12] | 2.73 | - * | - | - | - |
| CTS-Net [36] | 2.92 | 4.22 | 3.43 | 3.62 | 0.94 |
| DeepFilterNet [37] | 2.81 | - | - | - | - |
| S-DCCRN [38] | 2.84 | 4.03 | 2.97 | 3.43 | 0.94 |
| DMF-Net [18] | 2.97 | 4.26 | 3.25 | 3.48 | 0.94 |
| SF-Net [19] | 3.02 | 4.36 | 3.54 | 3.67 | 0.94 |
| TS-CGANet | 3.30 | 4.31 | 3.69 | 3.82 | 0.95 |
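
The comparison table above can be read column by column with a short script. This is a minimal sketch that uses only the rows reporting all five metrics; PercepNet and DeepFilterNet are left out because they report PESQ alone (2.73 and 2.81, both below the best PESQ). The names `RESULTS` and `best_per_metric` are introduced here for illustration.

```python
METRICS = ["PESQ", "CSIG", "CBAK", "COVL", "STOI"]

# Rows with all five metrics, copied from the comparison table.
RESULTS = {
    "Noisy": [1.97, 3.35, 2.44, 2.63, 0.91],
    "GCRN": [2.71, 4.12, 3.23, 3.41, 0.94],
    "RNNoise": [2.34, 3.40, 2.51, 2.84, 0.92],
    "CTS-Net": [2.92, 4.22, 3.43, 3.62, 0.94],
    "S-DCCRN": [2.84, 4.03, 2.97, 3.43, 0.94],
    "DMF-Net": [2.97, 4.26, 3.25, 3.48, 0.94],
    "SF-Net": [3.02, 4.36, 3.54, 3.67, 0.94],
    "TS-CGANet": [3.30, 4.31, 3.69, 3.82, 0.95],
}


def best_per_metric(results):
    """Return, for each metric, the method with the highest score."""
    best = {}
    for i, metric in enumerate(METRICS):
        best[metric] = max(results, key=lambda name: results[name][i])
    return best


best = best_per_metric(RESULTS)
```

With these rows, TS-CGANet has the highest PESQ, CBAK, COVL, and STOI, while SF-Net has the highest CSIG (4.36 versus 4.31).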

| Method | PESQ | CSIG | CBAK | COVL | STOI |
|---|---|---|---|---|---|
| TS-CGANet | 3.30 | 4.31 | 3.69 | 3.82 | 0.95 |
| LB-Net (full) | 3.16 | 4.24 | 3.64 | 3.71 | 0.95 |
| LB-Net (with real CGAU) | 3.11 | 4.22 | 3.61 | 3.68 | 0.95 |
| LB-Net (w/o phase enhancement) | 2.81 | 3.24 | 3.04 | 3.01 | 0.94 |
| TS-CGANet (with real CGAU) | 3.24 | 4.37 | 3.69 | 3.82 | 0.95 |
| TS-CGANet (w/o phase enhancement) | 3.18 | 4.33 | 3.62 | 3.77 | 0.95 |
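
The contribution of each component in the ablation table above can be quantified as a per-metric difference between two rows. This is a minimal sketch; the values are copied from the table, and the helper name `gain_over` is hypothetical.

```python
METRICS = ["PESQ", "CSIG", "CBAK", "COVL", "STOI"]

# Rows copied from the ablation table.
ABLATION = {
    "TS-CGANet": [3.30, 4.31, 3.69, 3.82, 0.95],
    "LB-Net (full)": [3.16, 4.24, 3.64, 3.71, 0.95],
    "LB-Net (with real CGAU)": [3.11, 4.22, 3.61, 3.68, 0.95],
    "LB-Net (w/o phase enhancement)": [2.81, 3.24, 3.04, 3.01, 0.94],
    "TS-CGANet (with real CGAU)": [3.24, 4.37, 3.69, 3.82, 0.95],
    "TS-CGANet (w/o phase enhancement)": [3.18, 4.33, 3.62, 3.77, 0.95],
}


def gain_over(reference, variant):
    """Per-metric score of `reference` minus `variant`, rounded to 2 decimals."""
    return {
        metric: round(ref - var, 2)
        for metric, ref, var in zip(METRICS, ABLATION[reference], ABLATION[variant])
    }


# Gain from adding the second stage on top of the low-band network.
second_stage_gain = gain_over("TS-CGANet", "LB-Net (full)")
# Gain from phase enhancement within the low-band network.
phase_gain = gain_over("LB-Net (full)", "LB-Net (w/o phase enhancement)")
```

Under these numbers, the second stage adds 0.14 PESQ over the full LB-Net, and phase enhancement accounts for 0.35 PESQ within LB-Net.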


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, H.; Zhang, X. TS-CGANet: A Two-Stage Complex and Real Dual-Path Sub-Band Fusion Network for Full-Band Speech Enhancement. Appl. Sci. 2023, 13, 4431. https://doi.org/10.3390/app13074431
