1. Introduction

Database management systems (DBMSs) are widely used worldwide as the core of modern information systems. Like other complicated computer applications, the security and reliability of DBMSs face severe challenges. Malicious attacks on DBMSs, such as remote code execution or denial of service, will seriously harm the information system. Therefore, it is of great significance to efficiently detect DBMS vulnerabilities to improve their robustness and the security of the information system built on them.

Black-box fuzzing, or generation-based fuzzing, has been extensively used in finding DBMS bugs, such as SQLsmith [

1] and SQLancer [

2,

3,

4]. Security researchers have found a considerable number of bugs using this technique. A black-box fuzzer treats the program as a black box and is unaware of internal program structure [

5]. It randomly generates a large number of SQL statements and executes them in the DBMS. The current input is saved for subsequent analysis when unexpected behavior occurs, such as a crash. The disadvantage of black-box fuzzing has been thoroughly discussed by the academic circle, which is inefficiency. Since the generation of SQL statements is entirely random, considering the complexity of the DBMS, most of the inputs generated by the black-box fuzzer will be difficult to trigger the deep program logic, in which bugs often hide. Despite inefficiency, this technique still has a wide range of uses. Since black-box fuzzing does not require the source code of the DBMS, it can test some commercial DBMSs that are not open source.

Researchers have studied grey-box fuzzing actively in recent years. The main difference between grey-box fuzzing and black-box fuzzing is that the former leverages instrumentation to glean information about the program [

5], such as code coverage. With an initial seed queue, the grey-box fuzzer performs a series of mutations on seeds to generate new inputs and saves the inputs that trigger a new state (or crash) of the program for future mutation. Therefore, compared with black-box fuzzer, grey-box fuzzer can explore the deep states of the program gradually. The well-known AFL [

6] collects the code coverage of the program during fuzzing by instrumentation, and DBMS vendors have applied it to DBMS testing. For example, SQLite used AFL as a standard part of the testing strategy until it was superseded by better fuzzers [

7]. However, since fuzzer [

8,

9,

10], like AFL, was not initially designed for DBMS fuzzing, the SQL statements generated by AFL often have syntactic or semantic errors, making it hard to trigger the deep logic of DBMSs (such as the optimizer). Squirrel [

11], a recent work focusing on DBMS fuzzing, has solved this problem to some extent, making it the state-of-art grey-box DBMS fuzzer. It introduces the structure-aware mutator for SQL statements into AFL. After mutation, it fills inputs with new semantics to improve the syntactic and semantic correctness.

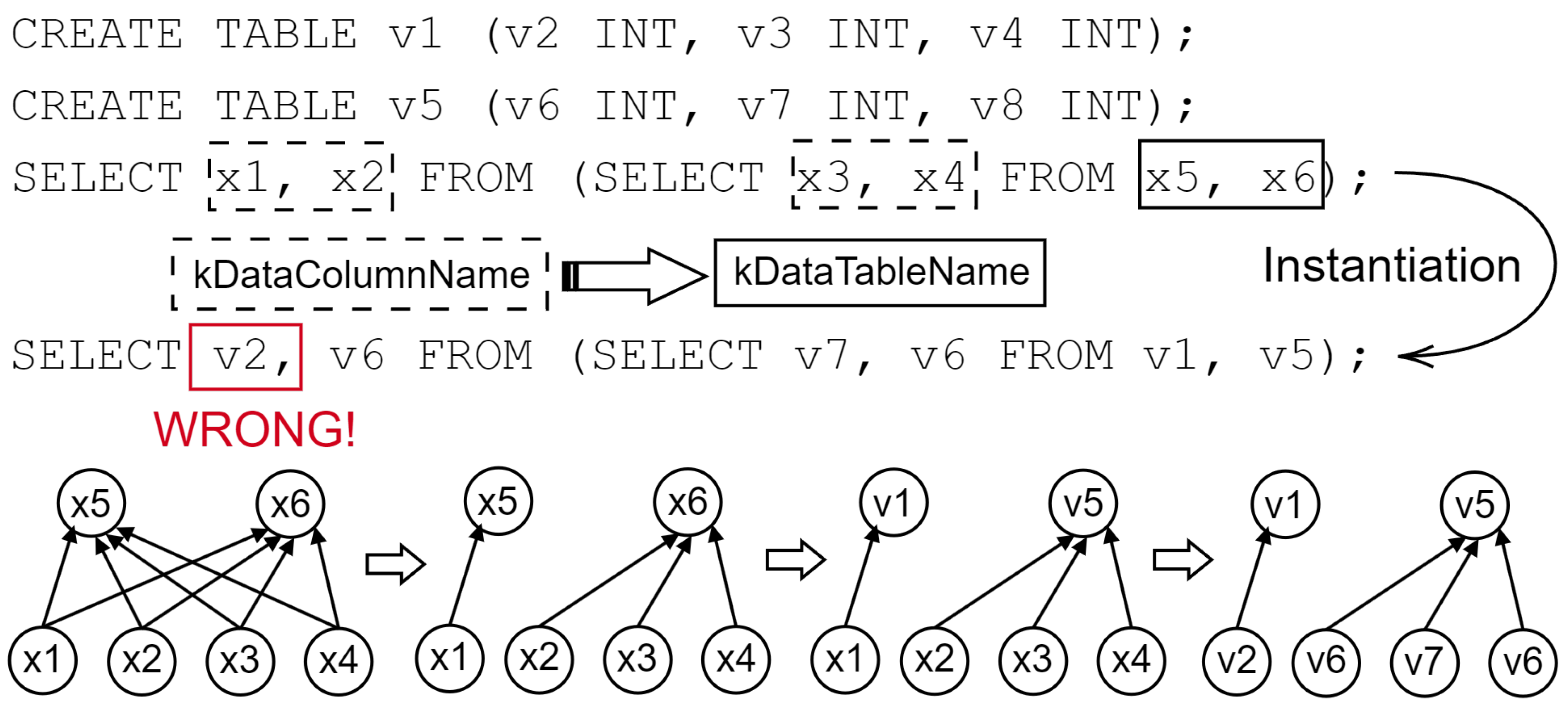

In recent years, many new solutions have been proposed for grey-box fuzzer to improve fuzzing efficiency. An important one is improving the seed scheduling strategy. However, less attention has been paid to the seed scheduling strategy in the DBMS fuzzing area. In DBMS fuzzing, different seeds have different correctness, and seeds with different correctness contribute differently to fuzzing. Hence, scheduling seeds by speed and size, the seed scheduling strategy in the existing grey-box DBMS fuzzer, is inefficient. Another challenge in grey-box DBMS fuzzing is the semantics filling of SQL statements. In order to make the SQL statement generated by mutation pass the semantic check of DBMSs, Squirrel proposes a method called Semantics-Guided Instantiation to fill the SQL skeleton with concrete semantics. However, the instantiation method of Squirrel does not perform well on SQL statements with nested structures due to design issues. A significant reason is that Squirrel cannot distinguish between nodes with the same type but at different levels. The problem of instantiation makes Squirrel hard to generate complex SQL statements, limiting its effectiveness in finding DBMS bugs.

In this paper, we implement a grey-box fuzzer, Squill, to address the challenges faced in current DBMS fuzzing. As the particularity of DBMS fuzzing scenarios, we propose correctness-guided mutation, which utilizes the correctness of SQL statements as feedback to guide fuzzing. We design two heuristic methods to improve the fuzzing efficiency by collecting the correctness (valid, syntax-error, semantics-error) of each seed. First, we prioritize mutating valid seeds because of their effectiveness in generating new paths and crashes. Second, we give some seeds with syntactic or semantic errors more opportunities to participate in mutation as material to activate interesting SQL structures in them more rapidly. In addition, we propose semantics-aware instantiation, which has the ability to guarantee the semantic correctness of the inputs with nested structures. We design a new instantiation stage in which we fill the nodes with semantics according to the predetermined constraints. During instantiation, we traverse each node of the AST in turn and parse according to the node type. While traversing, we collect the context information of each node so that we can distinguish nodes of the same type but at different levels and assign different dependencies to them. For example, with the context information of a node, we can distinguish whether it is at the beginning of a SELECT statement or a subquery in FROM clause and treat it differently.

We implemented Squill based on Squirrel. To understand the effectiveness of Squill, we evaluated it on three popular databases: MySQL [

12], MariaDB [

13], and OceanBase [

14]. Squill successfully found 63 memory error issues, including 30 bugs in MySQL, 27 bugs in MariaDB, and 6 bugs in OceanBase. We have reported all of our findings to the developers of the appropriate DBMS. At the time of paper writing, 19 bugs have been fixed, and 9 CVE numbers have been assigned due to the danger of these vulnerabilities. Our evaluation shows that correctness-guided mutation helps to improve the efficiency of fuzzers in path exploration and bug finding. We also compare our work with the current state-of-the-art tool, Squirrel. After 24 h of testing, Squill found 15, 17, and 2 bugs in each of the three DBMSs, while Squirrel found only 3, 7, and 0 bugs. Furthermore, results show that semantics-aware instantiation outperforms the instantiation of Squirrel in the correct semantic filling of complex SQL statements.

In this paper, we first introduce Squirrel’s mutation and instantiation method. Then we illustrate the necessity of scheduling seeds according to correctness through experiments and illustrate the drawbacks of Squirrel’s instantiation method with examples. In addition, we introduce our solutions Squill to these two problems, including correctness-guided mutation and semantics-aware instantiation. Eventually, we prove the effectiveness of Squill through experiments.

In conclusion, this paper makes the following contributions:

We investigated the drawbacks of the current seed scheduling strategy and the problem of Squirrel’s instantiation method. We conclude that seeds should be scheduled based on correctness, and a new instantiation method that can correctly generate semantics for SQL statements with nested structures is demanded.

We propose correctness-guided mutation, which utilizes the correctness of seed execution as feedback to guide fuzzing and improve efficiency. Moreover, we propose semantics-aware instantiation to address the challenge of correct semantics generation for SQL statements with nested structures. We implement Squill, a coverage-guided DBMS fuzzer that applies the two solutions above.

We evaluated Squill on several real-world DBMSs and found 63 bugs.The results show that Squill outperforms the previous fuzzer in terms of both code coverage and bug discovery. We have released the source code of Squill at

https://github.com/imbawenzi/Squill (accessed on 22 November 2022).

3. Design of Squill

We propose two practical solutions to address the above challenges. First, we provide correctness-guided mutation, which contains two heuristic methods, utilizing the correctness of seeds as feedback to improve the efficiency of fuzzing (

Section 3.1). Second, we introduce semantics-aware instantiation (

Section 3.2). During instantiation, we collect the context information of nodes. So we can know the level of the node according to the context information and build dependencies across levels when traversing to a nested structure.

Figure 4 shows an overview of Squill, where the white components are the original Squirrel, and our design is marked in grey. Squill follows the general flow of grey-box DBMS fuzzing, which mainly includes mutation, instantiation, and fuzzing. First, Squill selects the next seed to mutate from the seed queue. Squill will preferentially select the seeds with syntactic and semantic correctness. Then, the seed is translated into AST. The mutator randomly performs replacement, insertion, and deletion mutations on the AST. Squill adds an interesting material library to participate in the mutation. During the instantiation phase, new inputs generated by mutation will be filled with semantics to maintain semantic correctness. We design a new instantiator to address the challenge of correct semantics generation for SQL statements with nested structures. In the end, Squill will take these test cases as input to the DBMS, detect whether the DBMS has crashed, and add the input that triggers new code coverage to the seed queue.

3.1. Correctness-Guided Mutation

Since seeds with different correctness contribute differently to fuzzing, the fuzzer should not treat them equally. In this section, we propose two correctness-guided heuristic methods to improve the efficiency of fuzzing in path exploration and bug finding.

3.1.1. Correctness-Focused Seed Selection

In DBMS fuzzing, most of the seed increments come from valid seeds. Valid seeds can trigger deeper logic of DBMS than those with syntactic and semantic errors, exploring more paths. In addition, valid seeds have a higher probability of generating valid seeds, producing more crashes. Based on the conclusion above, we propose a correctness-focused seed selection strategy. We mutate valid seeds first, then seeds with semantics-error, and finally seeds with syntax-error. Because mutating valid seeds is more likely to generate valid seeds, leading to more path exploration and bug finding. The process of seed selection is shown in Algorithm 1.

| Algorithm 1: Correctness-focused seed selection. |

- 1:

// Run when fuzzer generates new seed - 2:

functionupdate_bitmap_score() - 3:

for i from 0 to MAP_SIZE do - 4:

// trace_bits is the bitmap of current seed - 5:

if trace_bits[i] is not 0 then - 6:

if top_rated[i] is not 0 then - 7:

if new_seed has better correctness than top_rated[i] then - 8:

top_rated[i] = new_seed; - 9:

end if - 10:

// Compare speed and size with top_rated[i] - 11:

// when they have same correctness - 12:

if new_seed has the same correctness as top_rated[i] then - 13:

if new_seed is faster and smaller than top_rated[i] then - 14:

top_rated[i] = new_seed; - 15:

end if - 16:

end if - 17:

else - 18:

top_rated[i] = new_seed; - 19:

end if - 20:

end if - 21:

end for - 22:

end function

|

We implement correctness-focused seed selection based on AFL’s original seed selection mechanism. AFL updates top_rated whenever it finds a new seed top_rated is an array that has the same size as the bitmap, where each value records the seed with the highest score on the corresponding edge in the bitmap. The faster, smaller seed will have a higher score. Then, AFL uses a greedy algorithm to select a minimum subset of seeds that contain all edges in the bitmap from seeds recorded in top_rated. Seeds in this subset are marked as favored. The favored seeds have a higher probability of mutating. AFL uses this mechanism to reduce the seed queue and improve the efficiency of fuzzing.

In the original seed selection mechanism, AFL prioritizes fuzzing faster and smaller seeds. This mechanism tends to select more syntax-error or semantics-error seeds to participate in fuzzing. The seeds with syntactic or semantic errors usually have a faster execution speed, as they terminate at the syntactic and semantic check phase of DBMS. The subsequent phases of DBMS, such as the optimization and storage phase, are often time-consuming. In our method, we preferentially update seeds with better correctness into top_rated, as shown in Algorithm 1 Line 7–9. We define that valid seeds are better than semantics-error seeds, which are better than syntax-error seeds. We first compare the correctness of seeds and then consider their execution speed and size only if they have the same correctness (Line 12–16). It ensures that valid seeds are mutated preferentially.

3.1.2. Mutation with Interesting Material Library

Valid seeds usually trigger deep program logic. In contrast, seeds with syntactic or semantic errors (in other words, invalid) often terminate at the early phase of DBMS, such as the syntactic and semantic check. It means that the optimizer and executor of DBMS do not actually process these invalid SQL statements. So some SQL structures in the syntax-error or semantics-error seed are unactivated, as subsequent phases of DBMS do not actually process them. That is, although some SQL structures can trigger new DBMS logic, they are not actually executed because they are in an invalid seed. We call these SQL structures interesting structures here. For example, the query in Line 4 of Listing 1 is an invalid input, which uses a not existing window w. The valid one is shown in Line 2. The function row_number() in Line 4 may be an interesting structure. It is not actually executed since it is in a semantics-error SQL statement that does not pass the semantic check of DBMS.

Therefore, we designed a method to filter out these interesting structures and activate them, as shown in Algorithm 2. We maintain an interesting material library, which contains subtrees of all current

favored and invalid seeds (Line 15–17). When the fuzzer needs a material (subtree) from the mutation material library to participate in mutation, it has a certain probability of obtaining the material from the interesting material library (Line 21–30). The variable

probability in Line 25 is an input parameter, which is set to 5 by default.

| Algorithm 2: Mutation with interesting material library. |

- 1:

// Run when top_rated changed - 2:

functioncull_queue() - 3:

temp_bitmap[MAP_SIZE] = 0; - 4:

set_empty(interesting_library); - 5:

for i from 0 to MAP_SIZE do - 6:

if top_rated[i] is not 0 and temp_bitmap[i] is 0 then - 7:

// Favored means mutating first - 8:

top_rated[i] is favored; - 9:

// Record the bitmap of top_rated[i] to temp_bitmap - 10:

for j from 0 to MAP_SIZE do - 11:

if top_rated[i].bitmap[j] is not 0 then - 12:

temp_bitmap[j] = 1; - 13:

end if - 14:

end for - 15:

if top_rated[i] is invalid then - 16:

add_into_interesting_library(top_rated[i]); - 17:

end if - 18:

end if - 19:

end for - 20:

end function - 21:

// Run when insertion or replacement - 22:

functionget_ir_from_library() - 23:

// Get a random number from 1 to 100 - 24:

rand_int = get_rand_int(100); - 25:

if rand_int<probability then - 26:

get_from_interestring_library(type); - 27:

else - 28:

get_from_all_library(type); - 29:

end if - 30:

end function

|

We utilize the favored mechanism in AFL to select seeds that may contain interesting structures. After correctness-focused seed selection, the favored and invalid seed must trigger the program state (edge) that valid seeds have not triggered. For example, some SQL structures in these seeds might trigger a unique logic of the DBMS parser. When these SQL structures are actually executed, it is likely to bring path exploration or bug finding in the optimizer or executor of DBMS. Since it is difficult to generate valid seeds from the mutation of seeds with syntactic and semantic errors, we give the mutation material (subtrees) of these seeds more opportunities to participate in mutation, making interesting structures executed in valid seeds after insertion or replacement.

3.2. Semantics-Aware Instantiation

We design an instantiation algorithm to address the challenge of correct semantics generation for SQL statements with nested structures. In instantiation, while traversing AST nodes in the order of SQL statements, we parse nodes according to the node’s type and context information (such as the type of parent and adjacent nodes). In this way, we can distinguish nodes of the same type but at different levels, as their context information is different. For example, the type of the parent node of a main SELECT statement and a subquery is distinct. With this information, we can construct a series of detailed constraints on nodes based on prior knowledge (the relationship between semantics in SQL statements) and then fill them with semantics correctly according to these constraints. For example, for a table name node in a CREATE statement, we can know whether it comes from a CREATE TABLE statement or a CREATE TRIGGER statement according to the context information when parsing it. For the former, we will fill it with a newly generated unique table name. For the latter, we will randomly assign a table name to it from the currently existing table name (created in the previous SQL statement).

We divide semantics into simple and complicated semantics, depending on the complexity of constraints. When traversing to a node, if we can instantly assign semantics to it without error, we call the semantics that the node has as simple semantics. The dependency constraints of nodes with such semantics are relatively simple, usually across statements. For example, the table name dropped in the DROP statement is from tables created in the previous CREATE statements. When filling a DROP statement with semantics, the previous CREATE statement has been traversed and instantiated. At this point, the existing table names are determined, which can be instantly assigned to the table name node in the DROP statement. When traversing to a node, if we cannot instantly assign semantics to it but need to wait until the entire SQL statement is parsed and fill it with consideration of the semantics of other nodes, we call the semantics that the node has as complicated semantics. For example, the column name in a SELECT clause depends on one of the tables in the FROM clause, which means that the former should be a column of the latter, and we need to instantiate the latter before the former.

3.2.1. Instantiation of Simple Semantics

Simple semantics mainly exist in CREATE, DROP, and ALTER statements, as well as nodes that do not have dependencies, such as function names. In instantiation, Squill maintains a data structure called the information table that stores the current database information, which mainly contains the table name and column name of the created tables. This information table also stores information of indexes, views, and triggers. When instantiating CREATE, DROP, and ALTER statements, we perform creating, deleting, and modifying operations in the information table correspondingly, such as in real DBMS. For the CREATE statement, we generate and assign a unique table name and column name (or index name) to the corresponding node. We record this information in the information table described above. For the ALTER statement, we will randomly choose a table name from the currently existing table name. Whether it is to modify, delete, or add a column name, Squill randomly assigns a column name from the table chosen above and modifies the corresponding information in the information table. Similarly to the DROP statement, we randomly assign a table name and delete it in the information table. For nodes without dependency, including function, integer, and floating point number, Squill will randomly assign a predefined value to them. In addition, the alias node will be assigned a unique name when traversed.

3.2.2. Instantiation of Complicated Semantics

Instantiation of complicated semantics is performed in SQL statements with column-table dependency, including SELECT, INSERT, and UPDATE statements. It is performed within one SQL statement, as the dependency between column and table is not across statements. For example, there are two independent SELECT statements. The column name in the former and the table name in the latter are irrelevant. The instantiation of complicated semantics includes three stages, collecting nodes, building dependency, and filling semantics, as shown in Algorithm 3. Note that since an input of Squill is composed of multiple SQL statements, the instantiation of complicated semantics is often performed multiple times for an input.

| Algorithm 3: Instantiation of complicated semantics. |

- 1:

ColumnList ← Column[]; - 2:

VirtualTableList ← VirtualTable[]; - 3:

DependencyList ← map(Column,VirtualTable); - 4:

functionInstantiation() - 5:

// A. Collecting Nodes - 6:

for each ColumnNode in SelectTarget, Where… do - 7:

// Convert node to Column - 8:

ColumnList.add(ColumnParser(ColumnNode)); - 9:

end for - 10:

for each TableNode in From, InsertTable, UpdateTable do - 11:

// Convert node to VirtualTable - 12:

VirtualTableList.add(TableParser(TableNode)); - 13:

end for - 14:

for each SubQueryNode do - 15:

// Process subquery recursively - 16:

Instantiation(SubQueryNode); - 17:

if SubQueryNode is in From then - 18:

VirtualTableList.add(SubQueryParser(SubQueryNode)); - 19:

end if - 20:

end for - 21:

- 22:

// B. Building Dependency - 23:

for each Column in ColumnList do - 24:

DependencyList[Column]=RandChoose(VirtualTableList); - 25:

end for - 26:

- 27:

// C. Filling Semantics - 28:

for each (Column, VirtualTable) in DependencyList do - 29:

if VirtualTable is not filled then - 30:

FillVirtualTable(VirtualTable); - 31:

end if - 32:

FillColumn(Column); - 33:

end for - 34:

FillVirtualTableNotInDependencyList(); - 35:

end function

|

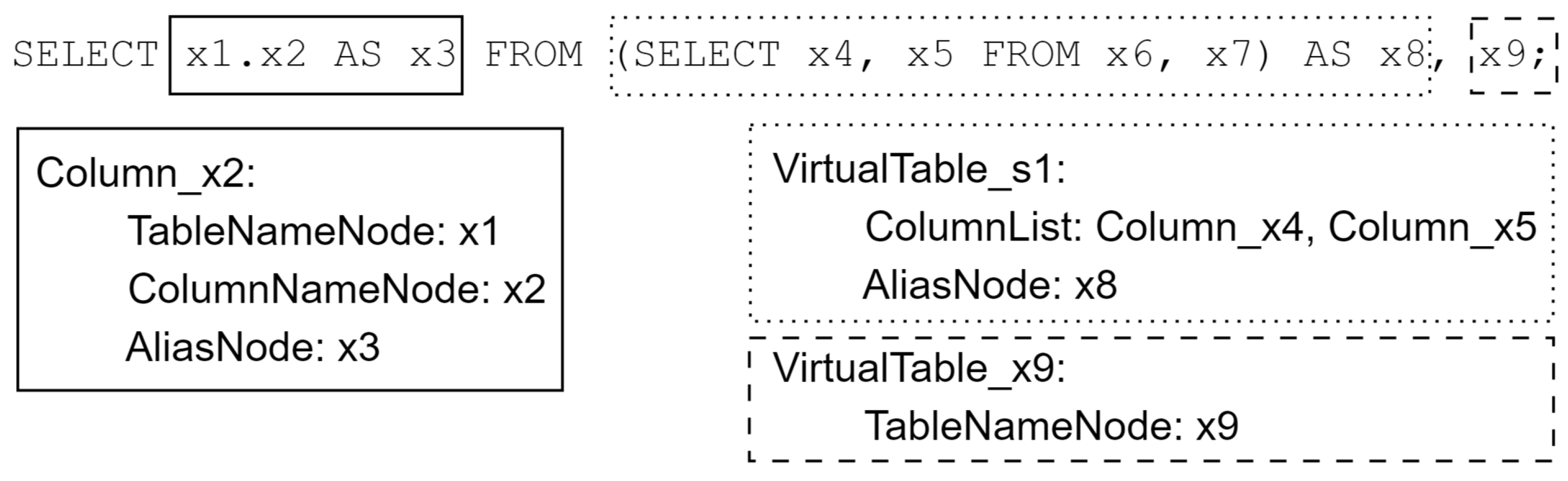

A. Collecting Nodes. While traversing AST, we collect the node with complicated semantics based on the type and context information of the current node and store it in the corresponding data structure (Line 5–20). For the SELECT statement, we collect column name nodes in select target, function parameter, WHERE, GROUP BY, ORDER BY, and WINDOW clauses, storing them in the data structure called Column (Line 6–9). Column stores not only the column name node but also the alias node and the table name node corresponding to the column name node, if they exist. With the help of context information, we can describe a column abstractly. Similarly, we collect the column name node in the insert and update the target clause of the INSERT and UPDATE statement.

For the table name, since we want to treat the result of the subquery as a table, we define a data structure called VirtualTable to represent a table. VirtualTable includes a table name node, an array of Column, and the alias node of the table, which can describe a table or the result of a subquery. For the SELECT statement, we collect the table name node in the FROM clause. For INSERT and UPDATE statements, we collect their target table name nodes (Line 10–13). For subqueries, we process them recursively, instantiating them from inner to outer (Line 14–20). For the subquery in FROM clause of the SELECT statement, we treat the result of it as a table in the subsequent dependency construction. For the subquery in other clauses, such as in the WHERE clause, we instantiate it like a SELECT statement since there is no external dependency within it.

Figure 5 shows the data structure that contains nodes in the main

SELECT statement, where

Column_x4 and

Column_x5 are the data structure

Column which contains nodes

x4 and

x5.

VirtualTable describes a table (

x10) or the result of a subquery (

s1) by filling different fields (

TableNameNode or

ColumnList). When parsing, we recursively processed subqueries, which means that the subquery and the main

SELECT statement will be parsed with the same function, and the subquery will be instantiated before the outer query. Therefore, the corresponding data structure (such as

Column_x4 and

Column_x5) is created while parsing the subquery.

B. Building Dependency. After creating the corresponding data structure, we construct the dependency between Column and VirtualTable (Line 22–25). Obviously, after processing, the dependency is very clear, which is that all Column depends on the VirtualTable in FROM clause. We randomly select a VirtualTable for each Column to depend on and record the dependency.

C. Filling Semantics. For each Column-VirtualTable dependency recorded, we fill nodes in it with semantics (Line 27–34). We fill VirtualTable first, and then the Column which depends on it. If the VirtualTable describes a table, we randomly assign the table name node in it with a table name from currently existing table names. The column name node in the Column which depends on the VirtualTable will be assigned a random column name from the table selected. The table name node in the Column will be filled with the same table name in VirtualTable, if it exists. If the VirtualTable describes the result of a subquery, we do not need to instantiate nodes of the Column in it, as they have been filled with semantics in the instantiation of the subquery (Line 16). The column name node in the Column will be filled with a column name in a random one of the Column in the VirtualTable. The table name node in the Column will be filled with the alias of the VirtualTable. At last, we fill semantics of the VirtualTable that is not depended on by any Column (Line 34).

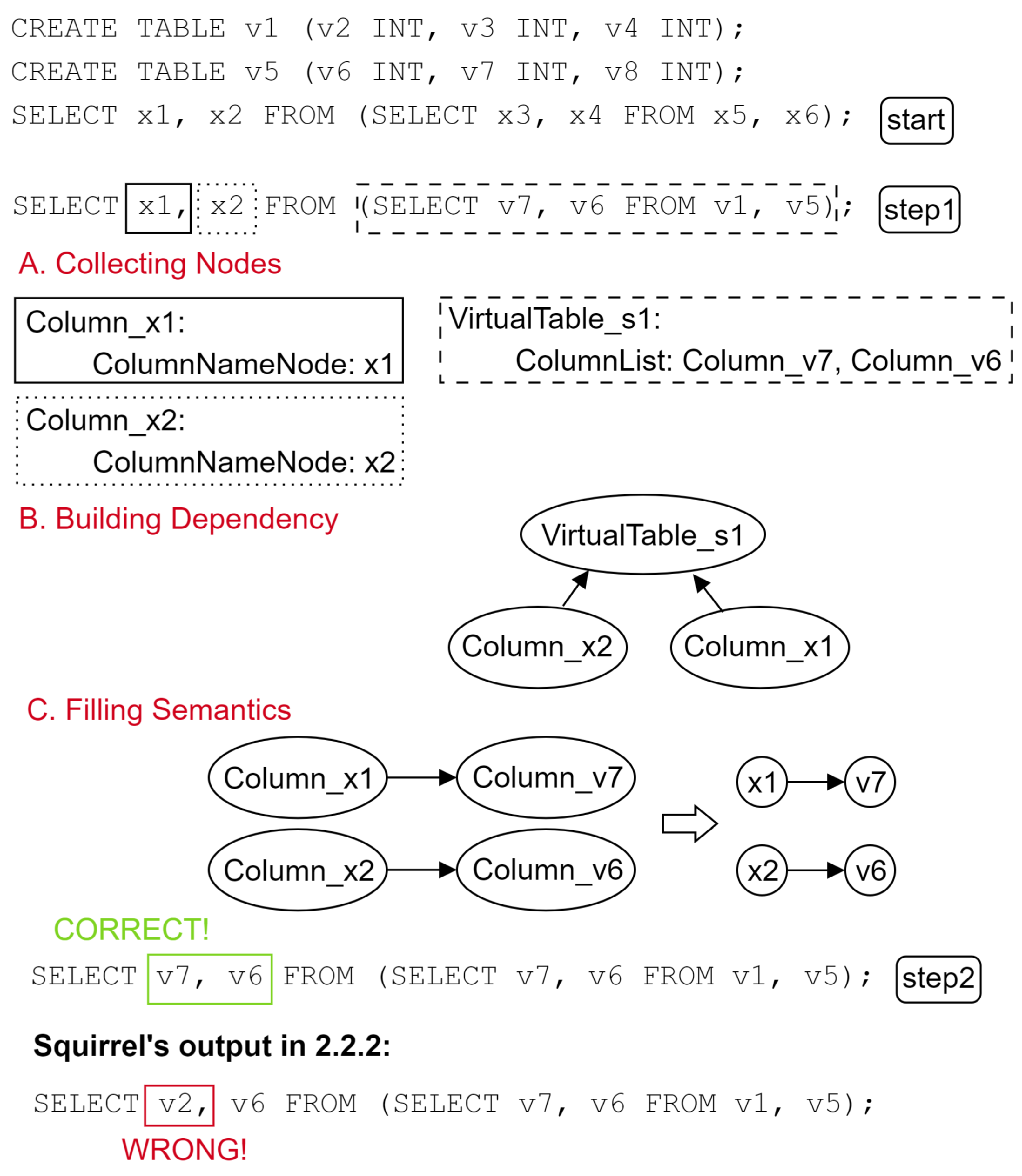

Example. Figure 6 is an example of the instantiation of complicated semantics in which the SQL statement is the same as that in

Section 2.2.2. Suppose that the first two CREATE statements have been instantiated, where the table names and column names have been generated, filled in nodes, and recorded in the information table. For the SELECT statement, there are two instantiation processes, one is the instantiation of the subquery, and the other is the instantiation of the main SELECT statement. Since the process is similar, here we focus on the instantiation of the main SELECT statement. Assume that the instantiation result of the subquery is as in

step1. This SELECT statement contains two

Column and a

VirtualTable, where the

VirtualTable describes the result of a subquery. Obviously, both

Column_x1 and

Column_x2 depend on

VirtualTable_s1. We can construct a dependency graph as shown in the figure. Compared with Squirrel, the dependency graph here is more abstract. The dependency graph in Squirrel is constructed with AST nodes, while the dependency graph in Squill consists of abstract data structures, such as Column and VirtualTable. As

VirtualTable_s1 contains the result of a subquery, assuming that we randomly choose

Column_v7 for

Column_x1, and

Column_v6 for

Column_x2, we can fill nodes in them with semantics based on the dependencies. The result after filling in semantics is shown in

step2. Compared with Squirrel’s method, semantics-aware instantiation can effectively handle the SQL statement with nested structures like subquery.

5. Evaluation

We applied our tool Squill on real-world DBMSs to verify its effectiveness. The evaluation was designed to answer the following questions:

- Q1.

Can Squill detect bugs from well-tested DBMSs? (

Section 5.1).

- Q2.

Can Squill perform better than existing tools? (

Section 5.2).

- Q3.

How does correctness-guided mutation help fuzzing? (

Section 5.3).

- Q4.

What is the contribution of semantics-aware instantiation? (

Section 5.4).

We selected three popular real-world DBMSs for evaluation, including MySQL, MariaDB, and OceanBase. We mainly compared Squill with Squirrel, as Squirrel had been shown to outperform other mutation-based fuzzers, such as AFL, and generation-based fuzzers, such as SQLsmith. We did not compare Squill with SQLRight [

18] and SQLancer, because their target is the logic bug of DBMSs, while Squill, like Squirrel, focuses on the memory error of DBMSs. We perform the experiments on three computers with Ubuntu 18.04 system, Intel(R) Core(TM) i7-10700 (2.90 GHz) CPU, and 32 GB memory. We used the llvm mode of AFL to instrument the DBMS. Because of the large codebase of DBMSs, we set the bitmap size to 256 K and used a 20% ratio instrumentation. The DBMS versions in the experiment are all the latest, including MySQL 8.0.29, MariaDB 10.10.0, and OceanBase 3.1.4. In the experiments, due to resource bottleneck, we ran one DBMS and a fuzzer on each machine for 24 h at a time and repeated three times. Squill and Squirrel used the same seed and initial library in experiments.

5.1. DBMS Bugs

In total, Squill found 63 bugs, including 30 bugs from MySQL, 27 from MariaDB, and 6 from OceanBase. The details of these bugs are shown in

Table 1. We have reported all bugs to the developers of the appropriate DBMS. At the time of paper writing, 19 of all bugs have been fixed, with 9 CVEs assigned. The type of bugs found by Squill are listed in the second column of

Table 1. Specifically, Squill found 10 bugs related to buffer overflows and use-after-free.

Case Study. Squill detected a bug in MariaDB (ID 44 in

Table 1, PoC in Listing 2), which can cause a DBMS crash by a null pointer accessing. This bug happened in INSERT…SELECT statements whose WHERE condition contains an IN/ANY/ALL predicand with a special GROUP clause, which can be eliminated and contains a subquery over a mergeable derived table referencing the updated table. It is caused by the incorrect access to the derived table which has been eliminated. The bug can cause a similar crash when executing a single-table DELETE statement with EXISTS subquery whose WHERE condition is like this. Executing this kind of query will cause a crash of DBMS in the preparation phase. The stability of the DBMS is critical, as it is usually the infrastructure for some information systems which require high availability, such as business systems in banks. Denial-of-service attacks based on such vulnerabilities can make the DBMS crash, resulting in serious consequences.

| Listing 2. A PoC of ID 44 in Table Table 1. |

| CREATE TABLE v0 ( v1 BOOLEAN, v2 INT, v3 INT ); |

| CREATE TABLE v4 ( v5 INT NOT NULL, v6 INT, v7 INT ); |

| INSERT INTO v4 ( v7 ) VALUES ( ( ( TRUE ,v5 ) NOT IN |

| ( SELECT ( - 49 ) AS v8, -128 FROM v0 GROUP BY ( TRUE, v3 ) |

| NOT IN ( SELECT v5, ( SELECT v2 FROM ( WITH v9 AS ( SELECT v7 |

| FROM ( SELECT NOT v5 <= ’x’ , FROM v4 GROUP BY v7 ) AS v10 ) |

| SELECT v7, ( v7 = 67 OR v7 > ’x’ ) FROM v4 ) AS v11 NATURAL JOIN |

| v0 WHERE v7 = v3 ) AS v12 FROM v4 ), v2 ) OR v5 > ’x’ ) ); |

5.2. Comparison with Existing Tools

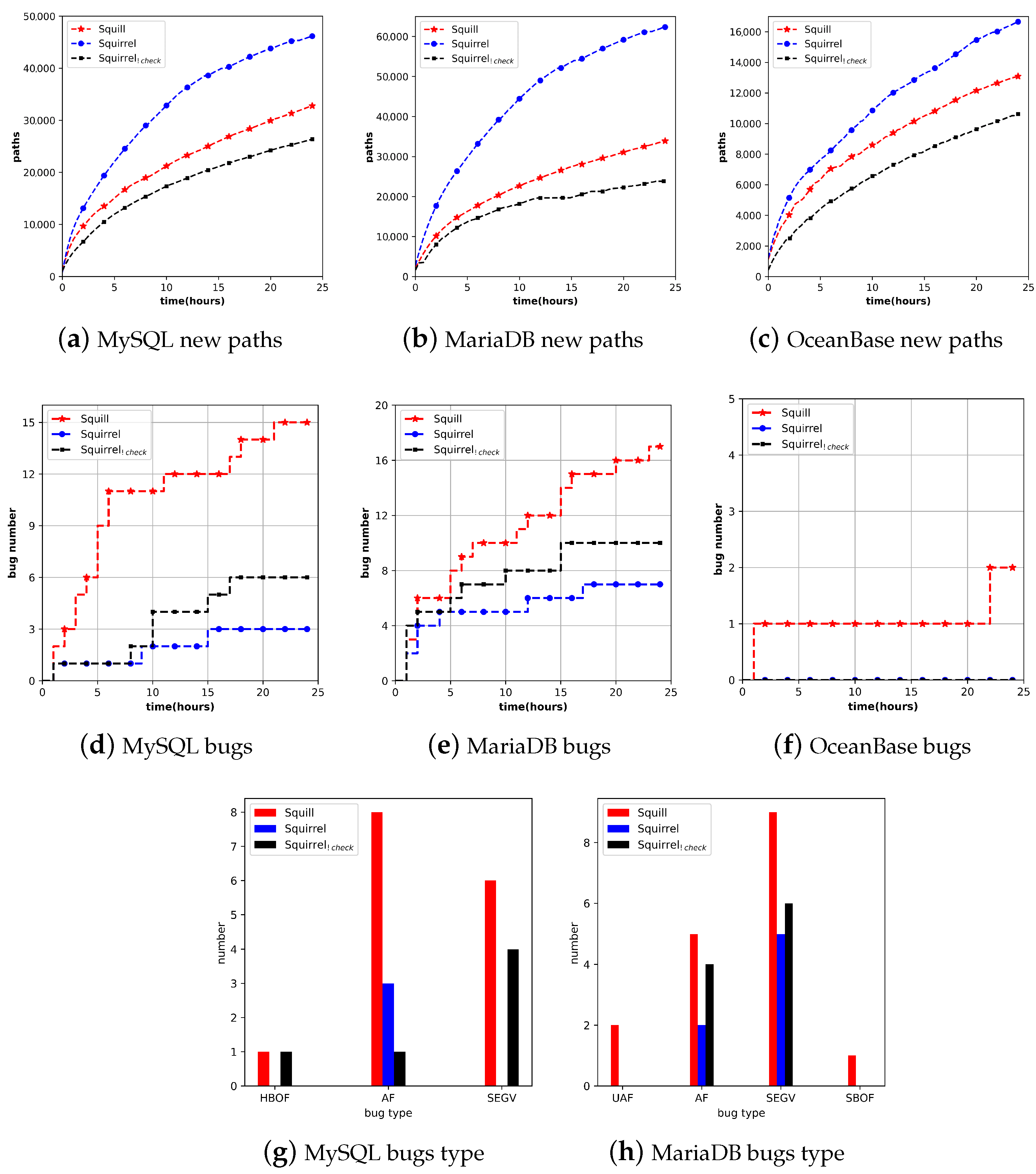

We evaluate Squill and Squirrel on three real-world DBMSs, MySQL, MariaDB, and OceanBase, to help us better understand the performance of Squill. As shown in

Figure 7, we compare the capability of bug finding and path exploration between the two tools. The number details are listed in

Table 2. More program paths explored and more bugs found per unit of time means better fuzzer performance. We also compared the type of bugs they found, which represents how harmful the bug is. Since Squirrel will drop long inputs with multiple subqueries, we disable the length and the subquery check of Squirrel, denoted as Squirrel

.

In statistics, we deduplicate crashes to the corresponding bug since a bug often causes hundreds of crashes and summarize the number of bugs by the hour. Due to the multithreading feature of DBMSs, the unique crash mechanism of AFL is hard to deduplicate DBMS crashes accurately. For MySQL and MariaDB, we deduplicate crashes according to the report output by ASan [

19]. For OceanBase, we use GDB [

20] to debug each crash after fuzzing and deduplicate according to the information, such as the call stack of functions, at the time of the DBMS crash.

Path Exploration. Figure 7a–c show the number of paths explored by Squill, Squirrel, and Squirrel

over time in MySQL, MariaDB, and OceanBase. As we can see, Squirrel explored more paths than Squill and Squirrel

. It is because Squirrel drops long inputs with multiple subqueries for their low semantics-correct rate after instantiation. The input generated by Squirrel is very short and simple and with a fast execution speed. However, with semantics-aware instantiation, we do not need to limit the number of subqueries in inputs generated by Squill. Thus Squill can generate long and complex inputs, which means a slow execution speed. Faster execution usually means more paths. So we tested Squirrel

, which can also generate long and complex inputs, to evaluate Squill more comprehensively. Compared with Squirrel

, Squill explored 24% more paths in MySQL, 40% in MariaDB, and 23% in OceanBase. Moreover, Squill and Squirrel found many more paths on MySQL and MariaDB than OceanBase. We think this may be caused by the feature of OceanBase as a distributed database and the bad grammar compatibility of the fuzzer with OceanBase.

Bug Finding. Figure 7d–f show the number of bugs found by Squill, Squirrel, and Squirrel

over time in MySQL, MariaDB, and OceanBase. In total, Squill found 3.4

x and 2

x more bugs than Squirrel and Squirrel

, which shows the effectiveness of Squill in bug finding. Note that Squill and Squirrel

found more bugs than Squirrel in MySQL and MariaDB. The result proves that there is no fundamental reason that maximizing the number of paths (or seeds) is directly connected to finding bugs [

21].

Figure 7g,h show the type of bugs found by Squill, Squirrel, and Squirrel

. The main types of bugs are assertion fails and SEGV. It shows that Squill found a total of four buffer-related errors, while Squirrel and Squirrel

only found one.

Overall, Squill outperforms Squirrel in finding memory error bugs of real-world DBMSs. Because Squill has the ability to generate valid complex SQL while Squirrel cannot. Moreover, Squill embeds correctness-guided mutation, which can improve the efficiency of fuzzing. Squill can also explore more paths than Squirrel, which shows the effectiveness of Squill.

5.3. Contribution of Correctness-Guided Mutation

To understand the contribution of different factors in correctness-guided mutation, we disable each factor to perform unit tests in MySQL and measure various aspects of the fuzzing process. In addition to the capabilities of bug finding and path exploration, we also compare the correctness of the input.

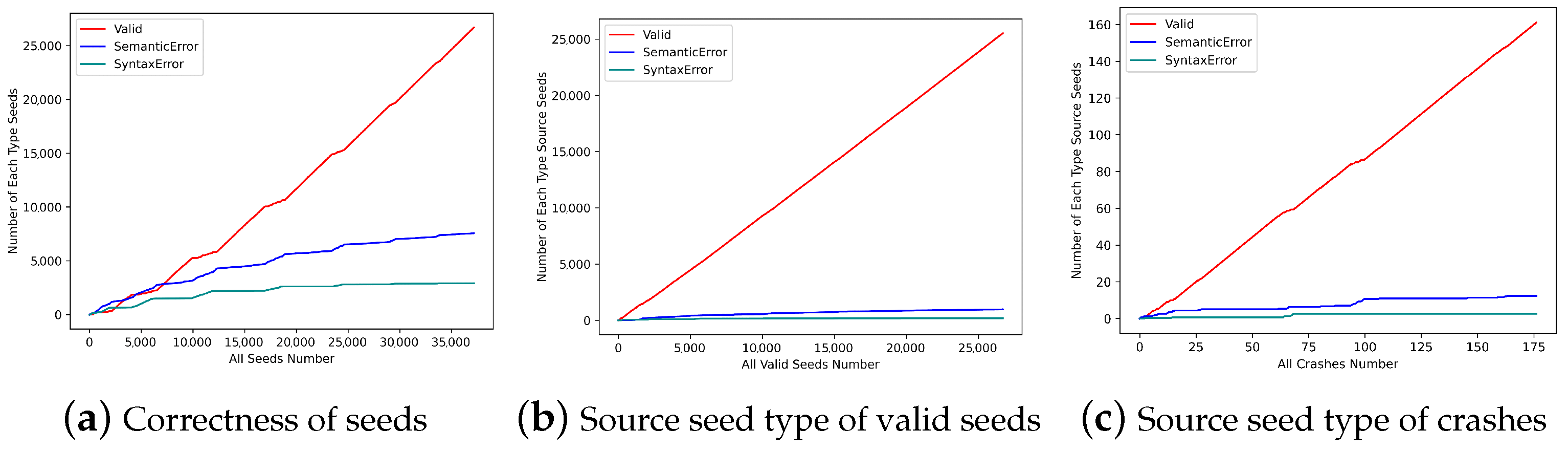

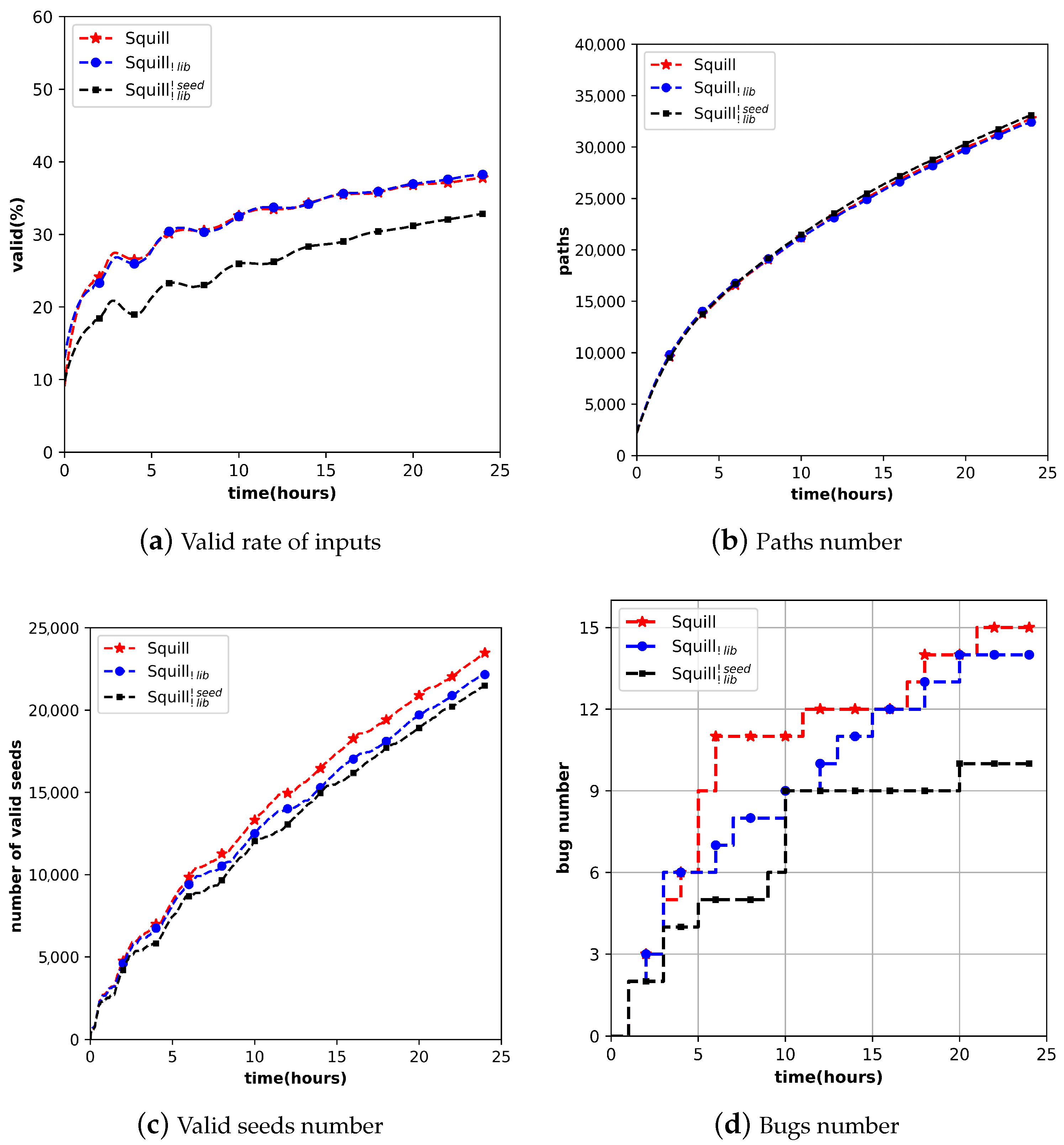

Figure 8 shows the result, where Squill

means we disable both correctness-focused seed selection and mutation with interesting material library, and Squill

means we only disabled interesting material library. Since the implementation of the mutation with interesting material library relies on correctness-focused seed selection, we do not disable the latter and keep the former.

Correctness of Inputs.Figure 8a shows the valid rate of inputs when fuzzing, which means the proportion of valid inputs in all inputs. Higher valid rate of inputs when fuzzing is better, because we want the input to pass the validity check of the DBMS. The result is Squill ≈ Squill

> Squill

, where Squill

is 6% higher than Squill

. The result shows that the correctness-focused seed selection improves the ability of the fuzzer to generate more valid inputs because of its strategy to prioritize mutating seeds which is valid.

Path Exploration.Figure 8b shows the number of paths explored by each fuzzer. Squill, Squill

, and Squill

are almost equal in the number of paths, and Squill

is slightly higher than the other two. Due to that Squirrel

generates more syntactically and semantically incorrect inputs, as shown in

Figure 8a, its inputs are executed faster, leading to more paths. In addition, we count the number of valid seeds generated during fuzzing, as shown in

Figure 8c. A seed represents a path since only if an input triggers a new path will it be saved into the seed queue as a seed. Therefore, the number of valid seeds reflects the capability of exploring the path which passes the syntactic and semantic check of DBMS. The result is Squill > Squill

> Squill

, where Squill is approximately 9% higher than Squill

, and Squill

is about 3% higher than Squill

. The result shows that both two mechanisms can help fuzzing in path exploration.

Bug Finding.Figure 8d shows the number of bugs found by Squill with each setting, where the original Squill achieves the best results. Squill and Squill

found 15 and 14 bugs in MySQL, while Squill

only found 10. The results show that the correctness-guided mutation plays an important role in bug finding.

Overall, both mechanisms of correctness-guided mutation improve the effectiveness of Squill in path exploration and bug finding, where correctness-focused seed selection improves the ability of Squill to generate more valid inputs and mutation with interesting material library helps Squill explore more DBMS states after the syntactic and semantic check.

5.4. Contribution of Semantics-Aware Instantiation

In this section, we evaluate semantics-aware instantiation introduced in

Section 3.2. We perform instantiation method of Squill and Squirrel to instantiate SQL statements of the same dataset and input the SQL statements with semantics to DBMS. We evaluate the instantiation of Squill by comparing the correctness of these inputs. The higher valid rate of input after instantiation is, the better instantiation method is. In the end, we illustrate the advantages of Squill instantiation through a practical example.

The dataset contains all valid seeds in one MySQL fuzzing of Squill because we want to compare the two methods’ capability to instantiate some critical inputs and ensure that these inputs can be correctly instantiated. We normalize the seeds before adding them to the dataset, that is, removing the semantics in them. Due to the design of translating AST to string, the input generated by Squill has a very tiny probability that it cannot be parsed by itself (same with Squirrel). Moreover, there are differences between the grammar of Squill and Squirrel, and Squirrel cannot parse some inputs of Squill. So we remove the seeds that both Squill and Squirrel cannot parse. The evaluation results are shown in

Table 3.

The results show that the instantiation of Squill (92.5% valid rate) outperforms Squirrel’s (58.24% valid rate). In addition, we make separate statistics according to the file size of the seeds. The file size of seeds corresponds to the length of the SQL statement, which we think is positively related to the complexity of the SQL statement. Long SQL statement usually means more complicated dependencies between nodes and more nested structures, such as subquery. With the increased complexity of SQL statements (file size), the valid rate of Squirrel’s instantiation is significantly reduced, while the correct rate of Squill’s instantiation changes less. This shows the advantage of Squill’s instantiation in processing complex SQL statements. Because of the randomness in semantics filling, the valid rate of Squill’s instantiation is not 100%, though the input was instantiated correctly before.

Listing 3 is a PoC of ID 23 in

Table 1. It can be seen that there is a nested structure containing subqueries in the SELECT statement in Line 3. This kind of nested structure is pervasive in SQL statements generated by mutation, which may be closely related to overflow vulnerabilities. It is difficult for Squirrel to instantiate such type of structure since Squirrel is hard to build correct dependencies between subqueries, such as the semantics of the first two

v4 positions in Line 3.

| Listing 3. A PoC of ID 23 in Table 1. |

| CREATE TABLE v0 ( v1 NUMERIC UNIQUE, v2 BIGINT ) ; |

| CREATE TABLE v3 ( v4 INT, v5 INT ) ; |

| SELECT 1 FROM v3 GROUP BY ( SELECT v4 FROM |

| ( SELECT v4 FROM ( SELECT v4 FROM v3 UNION SELECT v1 FROM v0 ) |

| AS v7 WHERE (v4 = 0 AND v4 = -1 AND v4 = 67 ) ) AS v9); |

6. Discussion

In this section, we discuss several limitations of our current implementation and possible future directions.

Universality of Fuzzer. In this paper, the instantiation of Squill is based on the grammar of MySQL, which has low universality. So we chose MariaDB and OceanBase, which are compatible with MySQL grammar, for evaluation. The cost of migrating this approach to other DBMSs is slightly higher than Squirrel. Moreover, the universality of the method is also very important [

22,

23]. In the future, we plan to achieve the universality of the fuzzer by implementing an instantiation method that satisfies the intersection of most SQL grammars and then writing extensions for each DBMS based on this universal method.

Mode of Input. Both Squill and Squirrel start with an empty database, and the input is a combination of CREATE, INSERT, and SELECT statements. We observed that most of the seeds that triggered new code coverage were mutated in SELECT statements. Changing the data inserted and the table structure created usually does not bring new paths. We think there is room for optimization. For example, we can construct a series of tables with complex structure and data as the initial database and only input SELECT statements in fuzzing. This can save the overhead of table creation and data insertion of each input.

Mutation Operator. Squill and Squirrel use the same mutation operators, including insertion, deletion, and replacement of AST nodes. We think there are other mutation operators suitable for DBMS fuzzing scenarios. For example, the random recursive mutation operator mentioned in Nautilus [

24] randomly selects a recursive tree and repeats the recursion

times. Such mutation operators may help trigger buffer overflow vulnerabilities of DBMSs.

Fuzzing Partial. Most of the vulnerabilities detected by Squill and Squirrel are located in the parser and optimizer components of the DBMS. It means the main target of the current DBMS fuzzer is the parser and optimizer rather than the executor of the DBMS. However, the storage process in the executor is time-consuming, as it involves the disk IO. So one optimization idea is to separate the parser and optimizer by analyzing the source code of the DBMS. Fuzzing these separated-out functions can significantly reduce the overhead during the execution phase of the DBMS, improving the efficiency of fuzzing.

7. Related Work

In this section, we discuss the recent DBMS testing technologies related to Squill.

Black-box DBMS Fuzzing. Black-box fuzzing, or generation-based fuzzing, has been widely used to detect DBMS bugs. With a specific predefined schema, continuously generating a large number of SQL statements into the DBMS to trigger abnormal behaviors (usually crashes) of the DBMS is one method of black-box DBMS fuzzing. Sqlsmith [

1] is a representative of this kind of black-box DBMS fuzzer. Based on AST, it randomly generates SQL query statements for the initial database through a series of highly customized rules. In addition, differential testing is another standard method used to detect DBMS vulnerabilities in black-box DBMS fuzzing. Rags [

25] and Sparkfuzz [

26] send the same SQL query to different DBMSs and detect correctness bugs by comparing the differences in the results. Sqlancer [

2,

3,

4] constructs different SQL statements of functionally equivalent through several different patterns and inputs them into the same DBMS. If the results are different, the DBMS might have a logical bug. Similarly, AMOEBA [

27] constructs query pairs that are semantically equivalent to each other and then compares their response time on the same database system to detect performance bugs. The main difference between Squill and the works above is that Squill is a grey-box fuzzer with feedback like code coverage. Compared with blind fuzzing, fuzzing with feedback can comprehensively explore program states and trigger the deep logic of DBMSs.

Grey-box DBMS Fuzzing. In recent years, grey-box or mutation-based fuzzing has shown its effectiveness in memory error bug detection [

28,

29,

30,

31,

32,

33,

34,

35,

36,

37]. AFL [

6], which is an important milestone in the area of software security testing [

38], has been applied to DBMS fuzzing. However, the fuzzer, like AFL, performs poorly in generating structural inputs, such as SQL statements. Though there are many works trying to address this challenge, such as Zest [

39], GRIMOIRE [

40], and Nautilus [

24]. Their ability to generate syntactically and semantically correct SQL queries is still not good enough due to the strict syntactic and semantic requirements of the DBMS. The recent work Squirrel [

11] focuses on the DBMS fuzzing scenarios. Through a customized parser based on Bison [

41] and Flex [

42], Squirrel translates SQL statements into AST and mutates based on the AST to guarantee the syntax correctness of the inputs. After mutation, Squirrel fills the newly generated inputs with semantics to increase their semantic correctness. There are many works based on Squirrel. With its industry-oriented design, Ratel [

43] improves the feedback precision in DBMS fuzzing and enhances the robustness of input generation. SQLRight [

18] combines differential testing and mutation-based fuzzing to detect logic bugs of the DBMS. Squill is also based on Squirrel, using the correctness of seeds as feedback to guide fuzzing. Moreover, Squill introduces an instantiation method that can generate correct semantics for SQL statements with nested structures.