1. Introduction

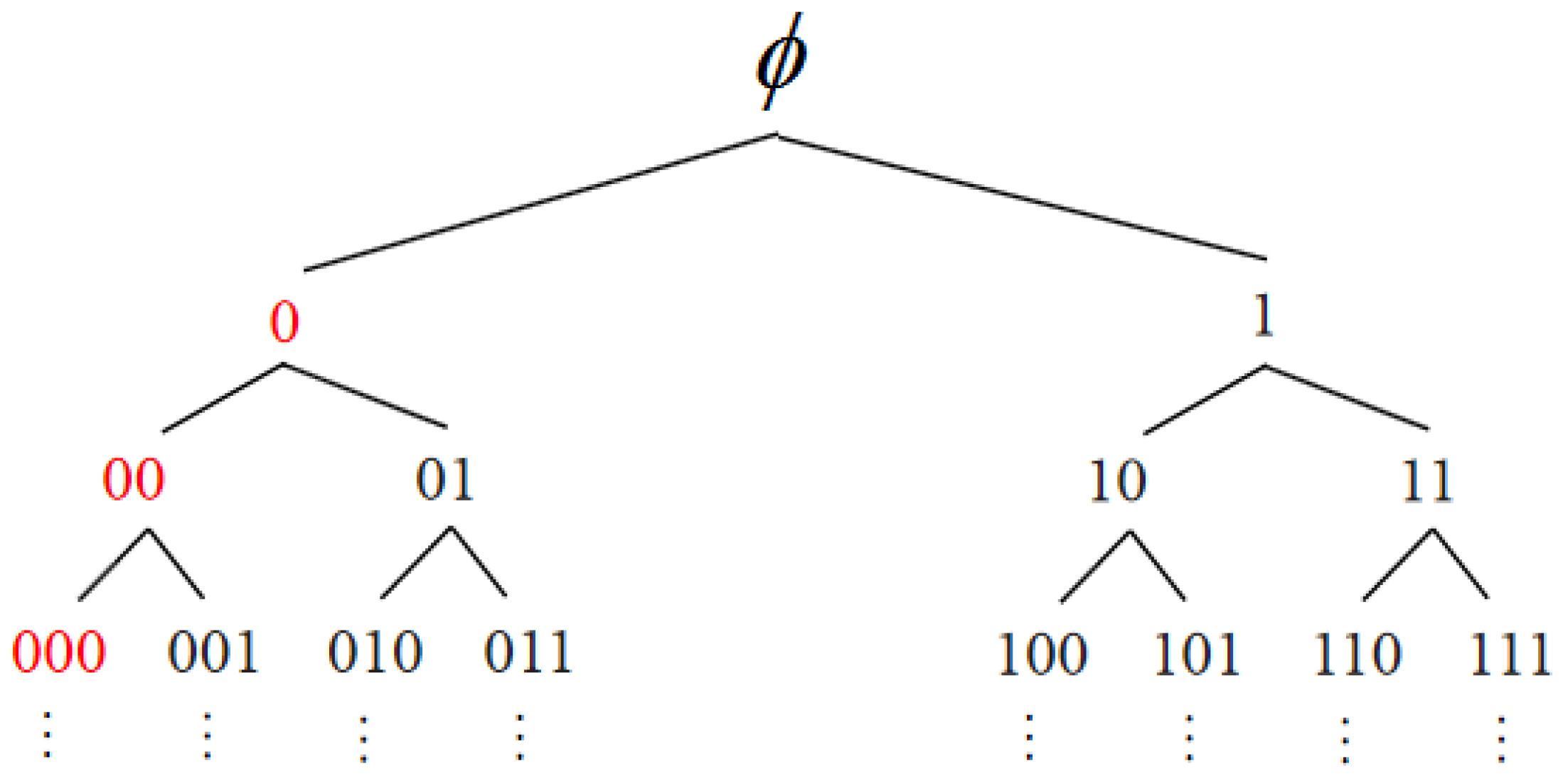

Data hiding (DH) in plaintext images embeds secret information by slightly modifying pixel values, in a way that is imperceptible to the human eye [1]. To protect cover images, reversible data hiding (RDH) [2] was proposed, which can perfectly restore the cover image after the data are extracted. Nowadays, RDH is widely used in fields such as military communication and medical image management.

There are several successful categories of RDH approaches. The first type is histogram shifting [3,4,5,6], which first analyses the histogram of the image and then creates an available embedding bin by shifting the bins on one side outward. The second is difference expansion [7,8,9], which doubles the difference between a pair of pixels to vacate room for embedding. The third is based on lossless compression [10,11,12], which vacates spare room for data embedding by compressing the cover image. The last is prediction error expansion [13,14], which exploits the spatial correlation between pixel values to vacate available space. Some subsequent RDH methods improve or combine the above approaches to enlarge the data payload [15].

Puech [16] proposed the first work on reversible data hiding in encrypted images (RDHEI). Later, improved versions were proposed by combining various image encryption techniques and data-hiding methods [17,18,19,20]. In recent years, RDHEI schemes have commonly been classified into two approaches, namely vacating room after encryption (VRAE) [21] and reserving room before encryption (RRBE) [22,23,24,25]. In the VRAE framework, RDH is performed directly on the encrypted image. Because encryption disrupts the correlation between neighboring pixel values, relatively little redundant space is available for embedding.

RRBE leverages the correlation between neighboring pixel values to compress the cover image before encryption, thus creating more spare room for data embedding. Ma et al. [22] divided the image pixels into two regions, A and B, according to a smoothness function. The least significant bits (LSBs) of region A were hidden in the LSBs of region B, and the secret message was then embedded into the LSBs of region A. Puteaux et al. [23] predicted the most significant bit (MSB) of each pixel from the correlation between the pixel and its neighbors, which improved the image quality and embedding capacity. Wang et al. [25] calculated the prediction errors between adjacent pixels and exploited the correlation between adjacent pixels to further shorten the code length.

Yi et al. [26] proposed a separable RDHEI scheme, which allows data extraction and image recovery to be performed independently. The cover image is first divided into blocks, and one pixel in each block is assigned as the reference pixel. The values of the remaining pixels are predicted from the reference pixels, and these pixels are classified into embeddable and non-embeddable groups. By efficiently labeling the embeddable pixels, spare room is vacated for data hiding. Wu et al. [27] proposed an improved parametric binary tree labeling (IPBTL) RDHEI scheme. Instead of using sampled pixels as the reference set, they defined the first row and the first column of the cover image as the reference set. As a result, the number of embeddable pixels is greatly increased and the data payload is expanded.

The main features of the proposed extended parametric binary tree labeling (EPBTL) scheme are as follows:

- (a) Instead of directly recording the replaced pixel bits, we make non-embeddable pixels self-recordable whenever their prediction errors allow it.

- (b) The reduction of auxiliary information significantly increases the total data payload.

- (c) Experimental results demonstrate that the proposed scheme outperforms state-of-the-art methods in embedding rate.

The rest of this paper is organized as follows. Section 2 introduces the related work. Section 3 specifies the proposed EPBTL scheme. Section 4 presents the experimental results. Section 5 concludes this paper and discusses future work.

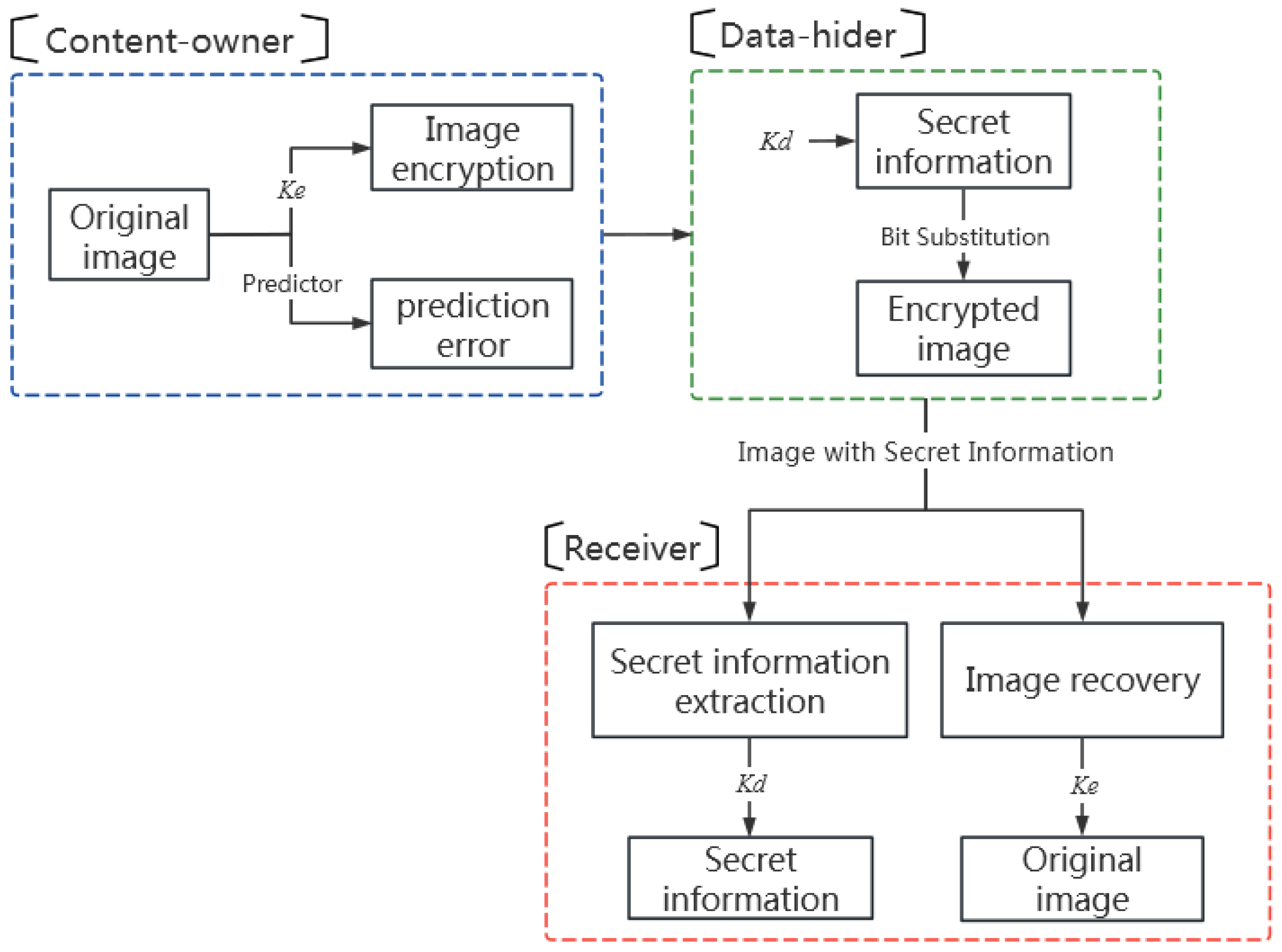

3. Proposed EPBTL-RDHEI

The proposed reversible data hiding in encrypted images with extended parametric binary tree labeling (EPBTL-RDHEI) consists of three phases: (1) image encryption and pixel grouping, (2) pixel labeling and data hiding, and (3) data extraction and image recovery. As shown in Figure 4, in the first phase, the content owner uses a predictor to calculate the prediction errors of the cover image. Then, the image is encrypted with the encryption key, and the pixels are grouped according to the prediction errors. In the second phase, the data hider encrypts the secret information with the data-hiding key and embeds it into the encrypted image by bit substitution. In the third phase, the receiver can use the data-hiding key alone to correctly extract the secret information from the encrypted image, or use the encryption key alone to recover the original image based on the prediction errors. A receiver holding both keys can do both.

3.1. Image Encryption and Pixel Grouping

There are three steps in this phase, namely the prediction error calculation, the image encryption, and the pixel grouping. Details are as follows.

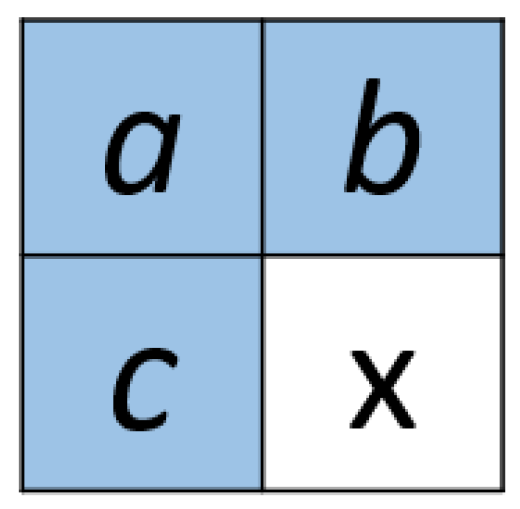

3.1.1. Prediction Error Calculation

Our approach shares the same framework as IPBTL [27]. The top row and the left column of the original image are selected as the reference pixels, and the values of the remaining pixels are predicted from them. The median edge detector (MED) [12] is applied to predict the pixel values in raster-scan order, as illustrated in Figure 5. The pixel marked ‘x’ is predicted using its neighboring pixels ‘a’, ‘b’, and ‘c’ (the left, upper, and upper-left neighbors in the standard MED layout). The predicted value $\hat{x}$ is given by

$$
\hat{x} =
\begin{cases}
\max(a, b), & \text{if } c \le \min(a, b),\\
\min(a, b), & \text{if } c \ge \max(a, b),\\
a + b - c, & \text{otherwise}.
\end{cases}
$$

The prediction error is therefore given by

$$
e = x - \hat{x}.
$$
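The prediction step can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation. It assumes an 8-bit grayscale image stored as a list of lists, the standard MED neighbor layout (left, upper, upper-left), and the hypothetical helper names `med` and `prediction_errors`.

```python
from typing import List, Optional

def med(a: int, b: int, c: int) -> int:
    """Median edge detector: a = left, b = upper, c = upper-left (assumed layout)."""
    if c <= min(a, b):
        return max(a, b)
    if c >= max(a, b):
        return min(a, b)
    return a + b - c

def prediction_errors(img: List[List[int]]) -> List[List[Optional[int]]]:
    """Hypothetical helper: e = x - x_hat for every non-reference pixel.
    None marks reference pixels (top row and left column), which are not predicted."""
    h, w = len(img), len(img[0])
    err: List[List[Optional[int]]] = [[None] * w for _ in range(h)]
    for i in range(1, h):          # raster-scan order
        for j in range(1, w):
            x_hat = med(img[i][j - 1], img[i - 1][j], img[i - 1][j - 1])
            err[i][j] = img[i][j] - x_hat
    return err

img = [[100, 102, 104],
       [101, 103, 105],
       [99, 100, 110]]
print(prediction_errors(img))
# [[None, None, None], [None, 1, 1], [None, -1, 8]]
```

Because MED only looks at the left, upper, and upper-left neighbors, the receiver can repeat the same predictions once those neighbors have been restored.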

Figure 6 shows an example of calculating the prediction errors of an image I. The detailed operations demonstrated in the rest of Section 3 are based on this original image I.

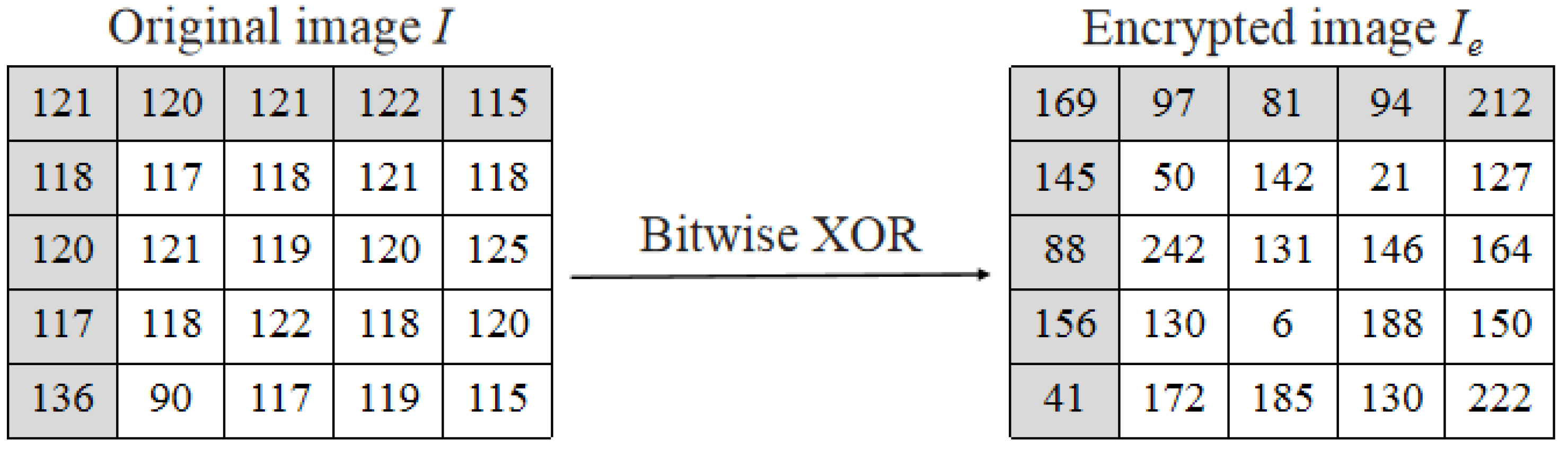

3.1.2. Image Encryption

A stream cipher is adopted as the image encryption scheme. A pseudo-random matrix R is generated with the encryption key, and the bitwise exclusive-or (XOR) operation is applied as

$$
I_e(i, j, k) = I(i, j, k) \oplus R(i, j, k),
$$

where ⊕ is the XOR operation, i and j are the row and column indices of the image, and k denotes the bit index of a pixel. The resulting pixel array $I_e$ is the encrypted image, as shown in Figure 7.
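Below is a minimal sketch of the bitwise XOR encryption. The text does not specify how R is generated from the key. Purely for illustration, the sketch derives the keystream by hashing the key with a counter (SHA-256 in counter mode, via Python's `hashlib`). This generator is an assumed stand-in, not the authors' generator, and the function names are hypothetical. XOR-ing a whole byte with R applies the per-bit rule to all bit indices k = 0..7 at once, so running the same operation again decrypts.

```python
import hashlib
from typing import List

def keystream(key: bytes, n: int) -> bytes:
    """Assumed pseudo-random generator (not from the paper): SHA-256(key || counter) blocks."""
    out = bytearray()
    counter = 0
    while len(out) < n:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:n])

def xor_image(img: List[List[int]], key: bytes) -> List[List[int]]:
    """I_e(i, j, k) = I(i, j, k) XOR R(i, j, k) for all eight bits k of each pixel."""
    h, w = len(img), len(img[0])
    r = keystream(key, h * w)          # one pseudo-random byte per pixel
    return [[img[i][j] ^ r[i * w + j] for j in range(w)] for i in range(h)]

img = [[100, 102], [101, 103]]
enc = xor_image(img, b"encryption-key")           # hypothetical key
assert xor_image(enc, b"encryption-key") == img   # XOR is its own inverse
```

A production system would use a vetted stream cipher rather than this hash-based keystream.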

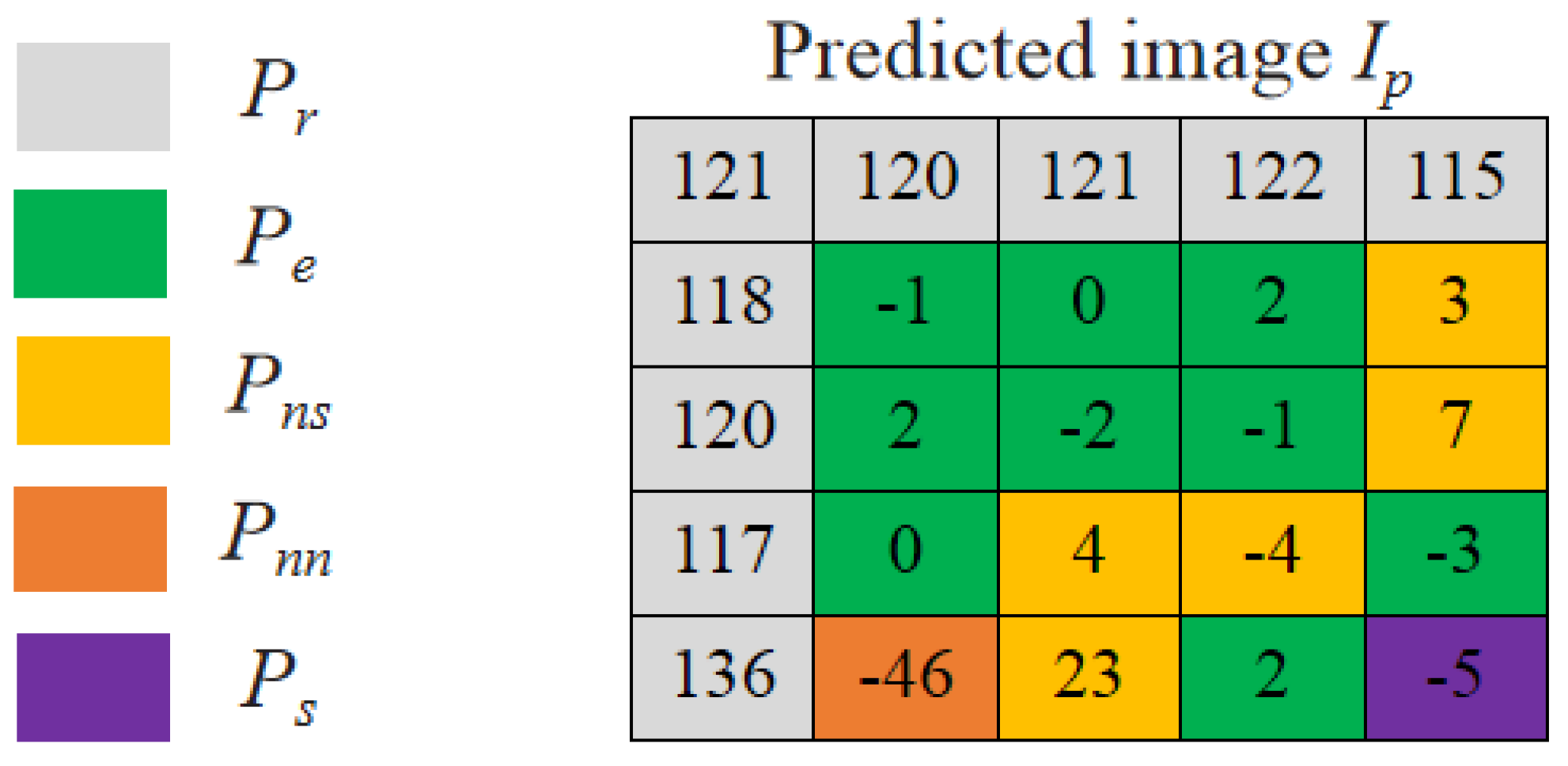

3.1.3. Pixel Grouping

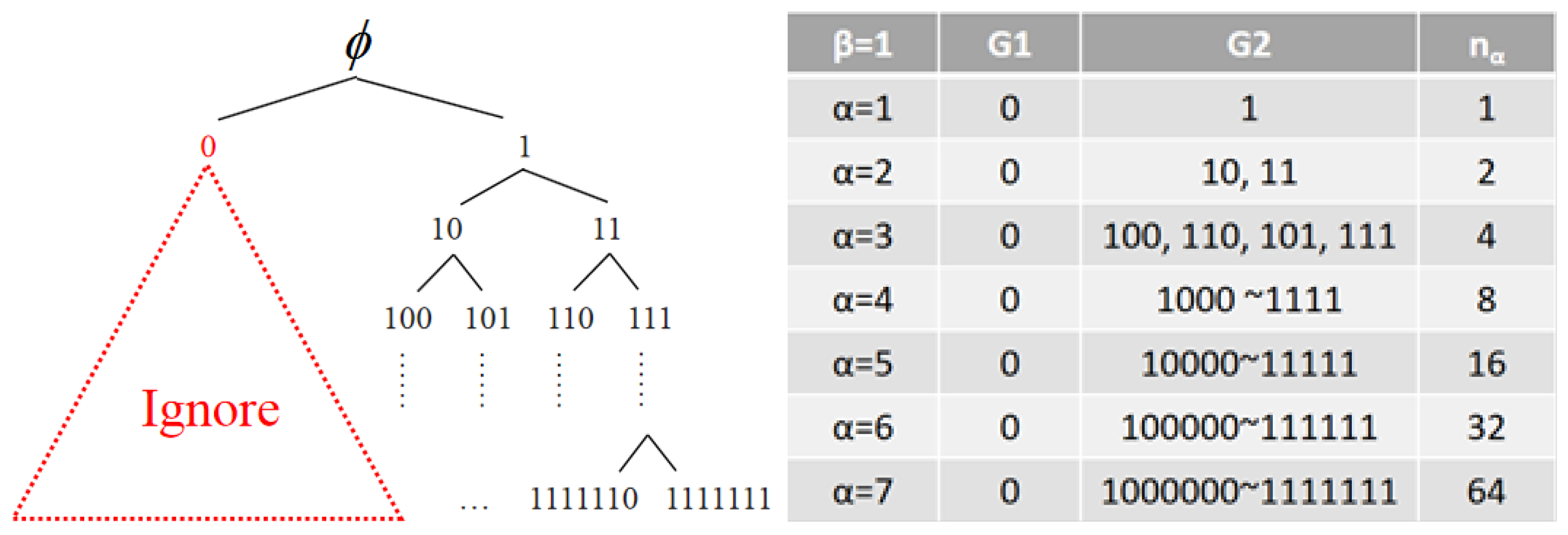

The pixels in the encrypted image are divided into four groups, namely the reference pixel group, the special pixel group, the embeddable pixel group, and the non-embeddable pixel group. The non-embeddable pixel group is further divided into the self-recordable subgroup and the non-recordable subgroup according to the prediction errors. The reference pixel group is composed of the pixels in the top row and the left column of the image. The pixel at the bottom-right corner of the image is chosen as the only pixel in the special pixel group and is used to record the two parameter values.

The remaining pixels are classified into the embeddable group, the self-recordable subgroup, and the non-recordable subgroup based on their prediction errors. The embeddable pixel group corresponds to the group G2 in PBTL. Recall that the number of different codes in G2 is defined in Equation (1). Thus, we exploit these G2 codes to label the embeddable pixels, whose prediction errors fall within a range that contains one prediction error value per G2 code.

For a non-embeddable pixel, if its prediction error can be encoded in the bits left over after its group label, it is classified as a self-recordable pixel. Otherwise, it is classified as a non-recordable pixel. The precise range of prediction errors for the self-recordable subgroup is given in Equation (6).

Figure 8 gives an illustrative example of pixel grouping for the example parameter setting used in this section.
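The code count and the embeddable range can be illustrated with a short sketch. The sketch makes two assumptions about the labels. Non-embeddable pixels carry an all-zero label of `a` bits. Embeddable pixels carry `b`-bit labels that must not start with that all-zero prefix. This is the usual prefix-free binary-tree count, which we assume corresponds to Equation (1). With a = 2 and b = 3 it yields the six G2 codes of the running example. The function names are hypothetical, and so is the way the range is placed around zero.

```python
from typing import Tuple

def g2_code_count(a: int, b: int) -> int:
    """Number of b-bit labels that are prefix-free w.r.t. the a-bit all-zero label (assumed Eq. (1))."""
    if b > a:
        return (2 ** a - 1) * 2 ** (b - a)   # any a-bit prefix except '0...0'
    return 2 ** b - 1                         # any b-bit code except all zeros

def embeddable_range(n_codes: int, version: int) -> Tuple[int, int]:
    """Hypothetical placement: one prediction error per code, version 0 leans negative."""
    low, high = -(n_codes // 2), n_codes - 1 - n_codes // 2
    return (low, high) if version == 0 else (-high, -low)

def group_of(e: int, low: int, high: int) -> str:
    """First split only; self-/non-recordable is decided by Equation (6)."""
    return "embeddable" if low <= e <= high else "non-embeddable"

n = g2_code_count(2, 3)
print(n, embeddable_range(n, 0), embeddable_range(n, 1))  # 6 (-3, 2) (-2, 3)
```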

3.2. Pixel Labeling and Data Hiding

After the pixels are classified into groups, the encrypted image is ready for labeling. This subsection presents the details of pixel labeling and data hiding.

3.2.1. Recording Parameters

The two parameter values are converted into 4-bit and 3-bit binary codes, respectively, and the resulting 7 bits are recorded in the special pixel. The remaining bit is reserved for the version code, which will be explained later. The eight bits of the encrypted special pixel that are replaced are treated as auxiliary information and placed at the front of the secret data.
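Packing the special pixel can be sketched as below. The bit order is an assumption: the 4-bit field, then the 3-bit field, then the version bit, from most to least significant. This order matches the order in which the example of Section 3.2.2 lists ‘0011’, ‘010’, and ‘0’. The function names are hypothetical.

```python
from typing import Tuple

def pack_special(p4: int, p3: int, version: int) -> int:
    """Hypothetical layout (assumed order): 4-bit parameter | 3-bit parameter | version bit."""
    if not (0 <= p4 < 16 and 0 <= p3 < 8 and version in (0, 1)):
        raise ValueError("field out of range")
    return (p4 << 4) | (p3 << 1) | version

def unpack_special(value: int) -> Tuple[int, int, int]:
    """Inverse of pack_special."""
    return (value >> 4) & 0xF, (value >> 1) & 0x7, value & 0x1

# The encrypted special pixel's original 8 bits would be saved as auxiliary information
# before it is overwritten with this value.
special = pack_special(0b0011, 0b010, 0)
print(format(special, "08b"))  # 00110100
```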

3.2.2. Labeling of Embeddable Group

The labeling of embeddable pixels follows PBTL. Table 1 gives the mapping of prediction errors to label codes for the example parameter setting, and Figure 9 illustrates an example of pixel labeling.

According to Table 1, the pixels with prediction errors ranging from −3 to 2 are embeddable. The lowest three bits of an embeddable pixel are used to record its label code, and all label codes are recorded backward, i.e., in reversed bit order. For example, the prediction error −1 maps to ‘100’, so ‘001’ is recorded in the lowest three bits of the pixel.
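The backward recording of labels can be sketched as follows. The helper names are hypothetical. Only the example mapping −1 → ‘100’ from Table 1 is used, because the rest of the table is not reproduced in the text.

```python
def write_label(pixel: int, label: str) -> int:
    """Hypothetical helper: overwrite the lowest len(label) bits of an 8-bit pixel with the label reversed."""
    n = len(label)
    low_bits = int(label[::-1], 2)                 # '100' is stored as '001'
    return (pixel & (0xFF ^ ((1 << n) - 1))) | low_bits

def read_label(pixel: int, n: int) -> str:
    """Read the lowest n bits and undo the reversal."""
    return format(pixel & ((1 << n) - 1), f"0{n}b")[::-1]

labeled = write_label(0b10110110, "100")   # prediction error -1 in Table 1
print(format(labeled, "08b"))             # 10110001
```

One consequence of the reversal is that the first label bit sits in the least significant position. A decoder can therefore read label bits upward from bit 0 and stop as soon as the prefix identifies a group.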

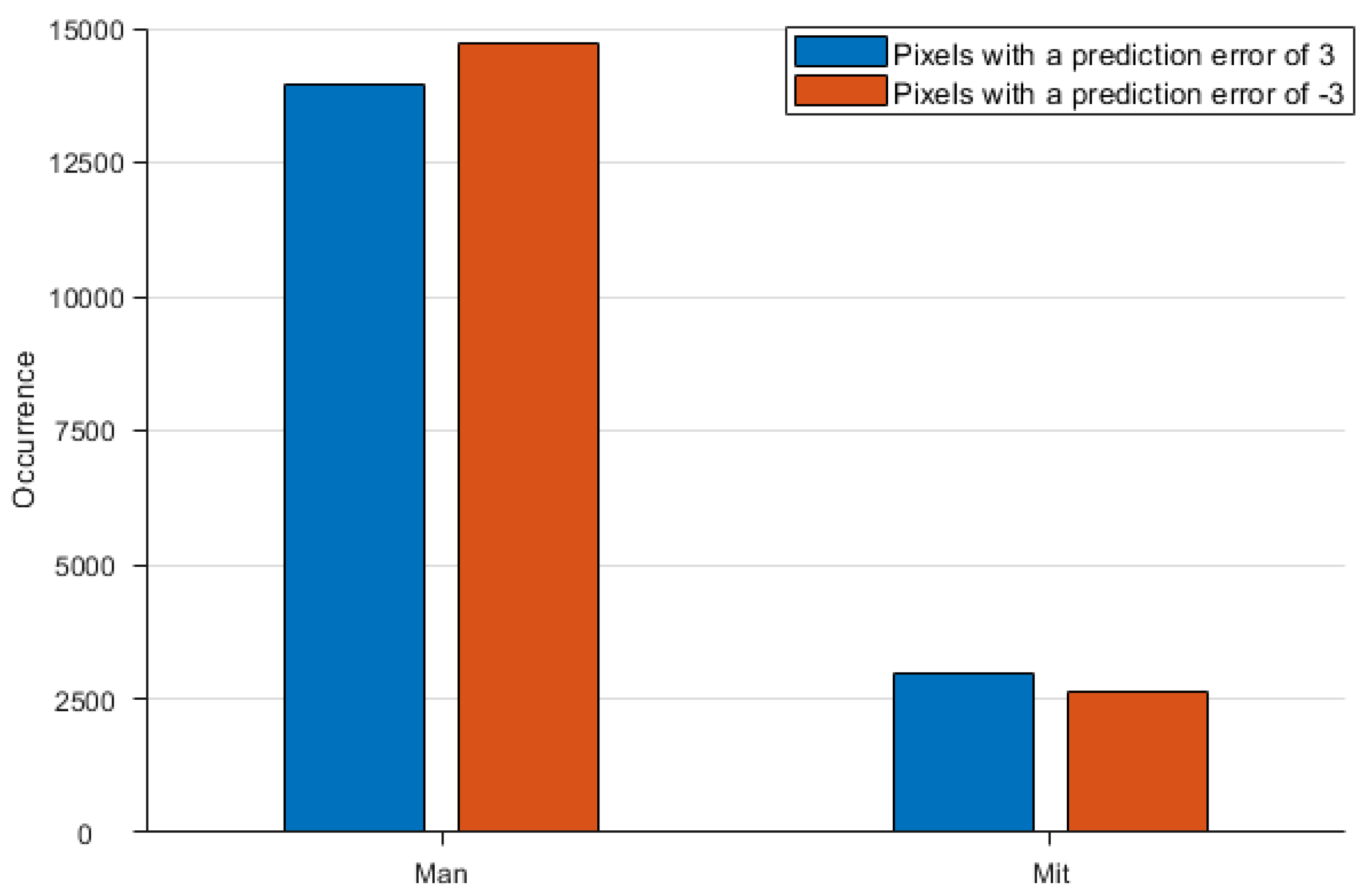

The mapping in Table 1 is not fixed. In general, the histogram of prediction errors decreases as the absolute error increases. For the example parameter setting, there are six G2 codes, so two possible ranges of prediction error can be selected, i.e., −3 to 2 or −2 to 3. The better version can be selected according to the error distribution of the given image.

Figure 10 shows two test images, and Figure 11 shows the histogram values at prediction errors −3 and 3 for these two images. For image ‘Man’, the number of pixels with a prediction error of −3 is greater than the number of pixels with an error of 3, whereas image ‘Mit’ behaves the opposite way. Thus, we select the version of −3 to 2 for image ‘Man’ and the version of −2 to 3 for image ‘Mit’. The version code bit indicates which version is actually applied. For the example given in Figure 9, the 4-bit and 3-bit parameter fields are recorded as ‘0011’ and ‘010’, respectively, and the version code is ‘0’ for the version of −3 to 2.
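With six G2 codes, the version decision amounts to comparing two histogram bins. Below is a minimal sketch. The function name is hypothetical, and ties are resolved in favor of version ‘0’, a choice the text does not specify.

```python
from collections import Counter
from typing import Iterable

def choose_version(errors: Iterable[int]) -> int:
    """Hypothetical helper: version bit 0 (range -3..2) or 1 (range -2..3); ties -> 0 (assumed)."""
    hist = Counter(errors)
    return 0 if hist[-3] >= hist[3] else 1

print(choose_version([-3, -3, 3, 0, 1]))  # 0, like image 'Man'
print(choose_version([3, 3, -3, 0]))      # 1, like image 'Mit'
```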

3.2.3. Labeling of Non-Embeddable Group

Our labeling of the non-embeddable pixels is completely different from that of PBTL or IPBTL. By extending the representation range of prediction errors, we further divide the non-embeddable group into the self-recordable subgroup and the non-recordable subgroup. When the prediction error of a non-embeddable pixel satisfies Equation (6), the error can be represented with the bits that remain after the group label and recorded in the pixel's current position. Such a pixel is classified as self-recordable.

Suppose the parameters are set as in the example above, so that the embeddable range of prediction error is −3 to 2 and the group label of a non-embeddable pixel is ‘00’. Then, the remaining six bits are used to represent the prediction error of a self-recordable pixel. The leading bit is used as the sign bit, and the remaining five bits encode the absolute error, with separate expressions for positive and negative errors. For example, the code ‘10000100’ represents a non-embeddable pixel with a positive prediction error. The leading ‘1’ indicates a positive error, ‘00001’ represents the absolute error, and ‘00’ is the group label. Thus, no auxiliary information is required to record a self-recordable pixel.

For a non-recordable pixel, the group label is ‘00’ and the remaining six bits are set to ‘000000’. The original eight bits of the pixel are recorded as part of the auxiliary information.
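The bit layout of a non-embeddable pixel can be sketched as below. The mapping from a prediction error to the 5-bit magnitude code depends on Equation (6), which is not reproduced here. The sketch therefore takes the sign bit and the magnitude code as inputs. The function names are hypothetical. Because ‘000000’ marks a non-recordable pixel, the sketch refuses to produce that payload for a self-recordable pixel.

```python
from typing import Optional, Tuple

def pack_nonembeddable(sign: int, magnitude_code: Optional[int]) -> int:
    """Hypothetical helper. Layout: sign bit | 5-bit magnitude code | '00' group label.
    magnitude_code=None encodes a non-recordable pixel (all six bits zero)."""
    if magnitude_code is None:
        return 0b00000000
    if sign not in (0, 1) or not 0 <= magnitude_code < 32:
        raise ValueError("does not fit in six bits: pixel is non-recordable")
    payload = (sign << 5) | magnitude_code
    if payload == 0:
        raise ValueError("'000000' is reserved for non-recordable pixels")
    return payload << 2                      # lowest two bits '00' = group label

def unpack_nonembeddable(pixel: int) -> Optional[Tuple[int, int]]:
    """Return (sign, magnitude_code), or None for a non-recordable pixel."""
    if pixel & 0b11:
        raise ValueError("not a non-embeddable pixel")
    payload = pixel >> 2
    return None if payload == 0 else (payload >> 5, payload & 0b11111)

print(format(pack_nonembeddable(1, 0b00001), "08b"))  # 10000100
```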

3.2.4. Data Hiding

The secret data are encrypted with the data-hiding key. After the auxiliary information (AI) is placed in front of the encrypted secret data, the whole data stream is embedded into the vacated space of the embeddable pixels, as illustrated in Figure 12. The marked encrypted image is thus obtained.
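Filling the vacated bits can be sketched as follows. The sketch makes three assumptions. It follows the running example, with 3-bit labels, so the upper five bits of each embeddable pixel are free. The stream is written most significant bit first. Unused trailing bits are padded with zeros. The stream is the AI followed by the encrypted secret data, and the function name is hypothetical.

```python
from typing import List

def embed_stream(pixels: List[int], ai_bits: str, secret_bits: str,
                 label_len: int = 3) -> List[int]:
    """Hypothetical helper: write AI || encrypted secret into the bits above each pixel's label."""
    stream = ai_bits + secret_bits            # AI goes to the front of the stream
    free = 8 - label_len                      # vacated bits per embeddable pixel
    if len(stream) > free * len(pixels):
        raise ValueError("payload exceeds the vacated space")
    out = []
    for idx, p in enumerate(pixels):
        chunk = stream[idx * free:(idx + 1) * free].ljust(free, "0")  # zero padding (assumed)
        out.append((int(chunk, 2) << label_len) | (p & ((1 << label_len) - 1)))
    return out

marked = embed_stream([0b10110001, 0b00000001], "10101", "01010")
print([format(p, "08b") for p in marked])  # ['10101001', '01010001']
```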

3.3. Data Extraction and Image Recovery

To extract the secret data, the pixels are first classified into groups according to their labels, and the embedded data are extracted from the embeddable group. Next, the number of non-recordable pixels is counted. The special pixel and each non-recordable pixel contribute eight bits of auxiliary information, and the corresponding bits are removed from the head of the data stream. Finally, the secret data are decrypted with the data-hiding key.
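The bookkeeping of the extraction step can be sketched as below. The sketch follows the running example, with a 2-bit all-zero label for non-embeddable pixels and 3-bit labels for embeddable ones. It also assumes that the reference and special pixels are excluded from the input. The function name is hypothetical. Labels are stored backward, so a pixel whose two lowest bits are ‘00’ is non-embeddable. Among those, an all-zero pixel is non-recordable.

```python
from typing import List, Tuple

def extract(pixels: List[int], zero_len: int = 2, label_len: int = 3) -> Tuple[str, str]:
    """Hypothetical helper: return (auxiliary information, encrypted secret bits)."""
    chunks, n_nonrecordable = [], 0
    for p in pixels:
        if p & ((1 << zero_len) - 1) == 0:          # backward label starts with '00'
            if p >> zero_len == 0:                   # remaining six bits '000000'
                n_nonrecordable += 1
        else:                                        # embeddable: read bits above label
            chunks.append(format(p >> label_len, f"0{8 - label_len}b"))
    stream = "".join(chunks)
    ai_len = 8 * (n_nonrecordable + 1)               # special pixel + non-recordable pixels
    # Any zero padding added at embedding time remains at the end of the secret bits.
    return stream[:ai_len], stream[ai_len:]

pixels = [0b11111001, 0b11100001, 0b00000001, 0b01011001, 0b00000000, 0b10000100]
ai, secret = extract(pixels)
print(ai, secret)  # 1111111100000000 1011
```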

To recover the image, the parameters and the version code are first decoded from the special pixel. Then, the pixels are classified into groups according to their labels. The embedded data are extracted from the embeddable group, and the auxiliary information is retrieved to recover the special pixel and the non-recordable pixels. The reference pixels are recovered with the image encryption key. Finally, the embeddable pixels and the self-recordable pixels are recovered from their recorded prediction errors. This is done in raster-scan order, because each MED prediction uses neighbors that have already been recovered. Thus, the original image can be completely restored.
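The last step, rebuilding pixel values from prediction errors, can be sketched as follows. The sketch makes three assumptions. The reference pixels have already been decrypted. The prediction errors of the embeddable and self-recordable pixels have been decoded. The non-recordable pixels, restored from the auxiliary information, are supplied as known values. The function names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

def med(a: int, b: int, c: int) -> int:
    """Median edge detector: a = left, b = upper, c = upper-left (assumed layout)."""
    if c <= min(a, b):
        return max(a, b)
    if c >= max(a, b):
        return min(a, b)
    return a + b - c

def recover(top_row: List[int], left_col: List[int],
            errors: List[List[Optional[int]]],
            known: Dict[Tuple[int, int], int]) -> List[List[int]]:
    """Hypothetical helper: x = x_hat + e in raster order, so MED neighbors are already restored."""
    h, w = len(left_col), len(top_row)
    img = [[0] * w for _ in range(h)]
    img[0] = list(top_row)
    for i in range(h):
        img[i][0] = left_col[i]
    for i in range(1, h):
        for j in range(1, w):
            if (i, j) in known:                      # non-recordable pixel restored from AI
                img[i][j] = known[(i, j)]
            else:
                x_hat = med(img[i][j - 1], img[i - 1][j], img[i - 1][j - 1])
                img[i][j] = x_hat + errors[i][j]
    return img

errors = [[None, None, None], [None, 1, 1], [None, -1, None]]
restored = recover([100, 102, 104], [100, 101, 99], errors, {(2, 2): 110})
print(restored)  # [[100, 102, 104], [101, 103, 105], [99, 100, 110]]
```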

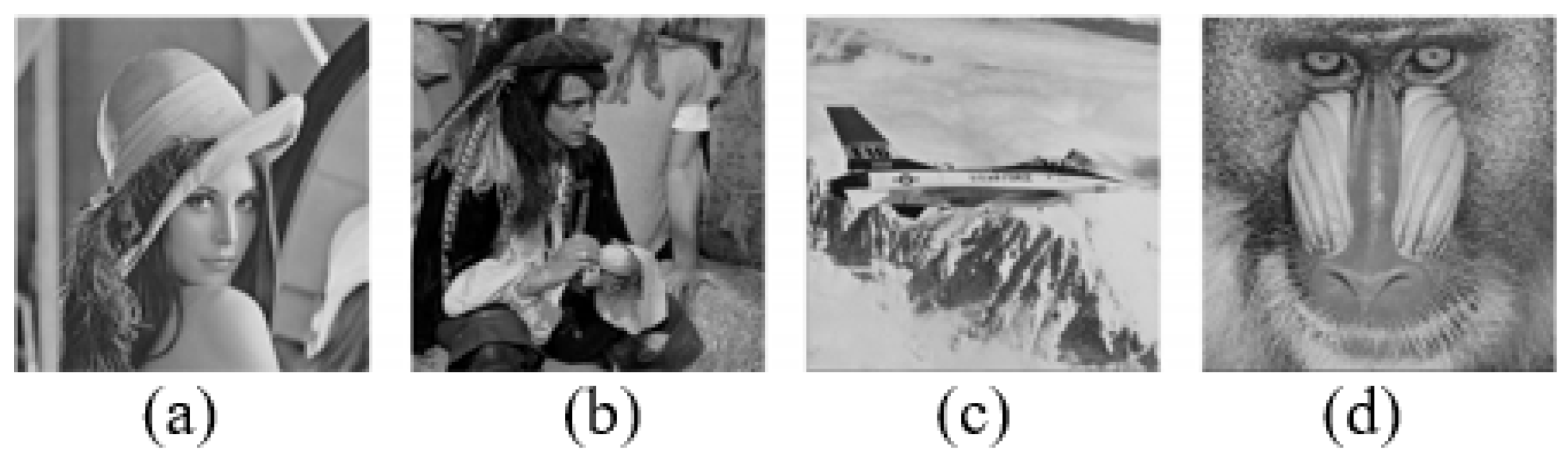

4. Experimental Results

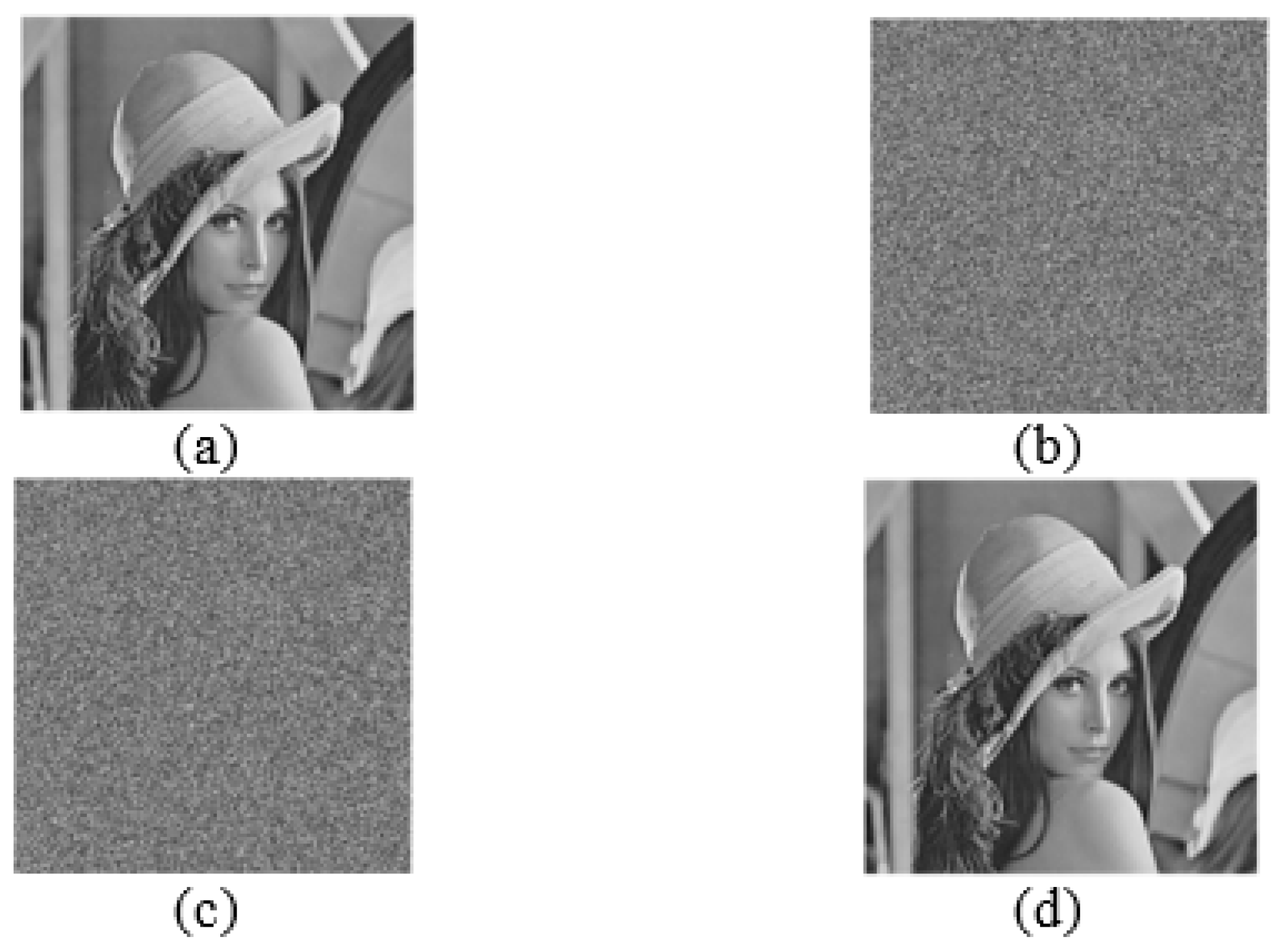

To evaluate the performance of the proposed scheme, we conducted experiments on security, image quality, and embedding capacity, and compared the scheme with state-of-the-art schemes. As shown in Figure 13, four standard grayscale images were chosen as the test images. In addition, the datasets UCID [28], BOSSbase [29], and BOWS-2 [30] were used to verify whether the scheme can be applied to most images.

4.1. Security Analysis

Security analysis is an important step in testing the ability of a scheme to resist attacks. We test the security of the scheme in two parts: the image histogram and the information entropy.

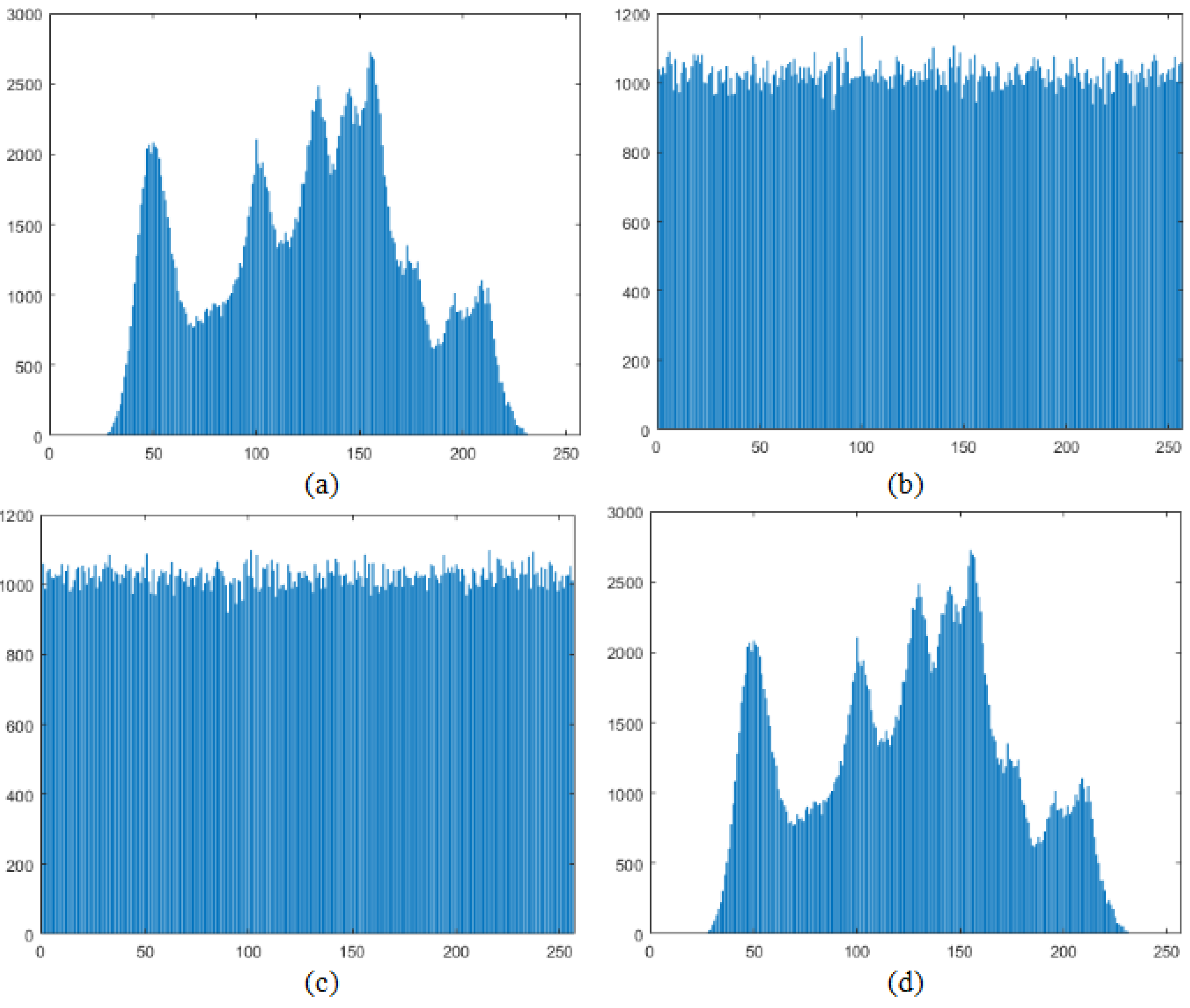

4.1.1. Histogram of Images

To examine the pixel distributions of the images generated in different phases, we take Lena as an example and show the corresponding images and histograms in Figure 14 and Figure 15.

As can be seen in Figure 15, the original image (a) and the recovered image (d) in Figure 14 are identical, indicating that the proposed scheme recovers the original image losslessly. In addition, the pixel distributions of the encrypted image (b) and the embedded encrypted image (c) are nearly uniform and differ from that of the original image. It can therefore be concluded that it is almost impossible to obtain information about the original image from the encrypted image.

4.1.2. Entropy of Image

The advantage of encrypted images is that no information about the pixel patterns can be inferred from them. Therefore, to further verify the security of the proposed scheme, we use the information entropy to measure the randomness of the pixels at different phases. The information entropy is obtained from

$$
H = -\sum_{s=0}^{L-1} p(s)\log_2 p(s), \tag{7}
$$

where s represents the gray level of an image pixel, p(s) represents the relative probability of gray level s in the image, and L represents the total number of gray levels (L = 256 for 8-bit grayscale images).
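Equation (7) can be evaluated directly from a pixel histogram. Below is a minimal sketch with a hypothetical function name. Gray levels that never occur contribute nothing to the sum, so only the observed levels are iterated.

```python
import math
from collections import Counter
from typing import Iterable

def entropy(pixels: Iterable[int]) -> float:
    """H = -sum p(s) log2 p(s) over the gray levels that occur (hypothetical helper)."""
    values = list(pixels)
    n = len(values)
    counts = Counter(values)                  # histogram of gray levels
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())

print(entropy(range(256)))   # 8.0: every gray level equally likely
print(entropy([128] * 100))  # 0.0: a single gray level
```

The first case shows why 8 is the ceiling for 8-bit images: with L = 256 equiprobable levels, each term contributes (1/256)·8.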

We conducted experiments using the test images in Figure 13, namely Lena, Man, Jetplane, and Baboon. Table 2 shows the information entropy of the test images at different stages. The entropies of both the encrypted image and the embedded encrypted image are very close to 8. For 256 gray levels, the theoretical maximum of the information entropy is 8, and the closer the entropy is to 8, the more random the image is. This shows that the security of the proposed scheme is reliable.

4.2. Image Quality Assessment

It is important to compare both the recovered image and the encrypted image with the original image. If the recovered image differs from the original cover image, full reversibility is not ensured. If the encrypted image has high quality, i.e., it is similar to the original image, the encryption is weak and there is a risk of information leakage.

The PSNR and the SSIM are often used to evaluate the quality of a processed image compared with the original image. The PSNR is the peak signal-to-noise ratio, i.e., the ratio between the maximum possible power of a signal and the power of the corrupting noise that affects the fidelity of its representation. It is measured in decibels (dB), and the higher the PSNR, the closer the image is to the original. The SSIM is the structural similarity index, a measure of image quality based on visual perception. Its value is at most 1, is close to 0 for unrelated images, and equals 1 when the two images are identical.
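For 8-bit images, the PSNR is commonly computed as PSNR = 10·log10(255²/MSE), where MSE is the mean squared error between the two images. Identical images give MSE = 0 and hence an infinite PSNR. Below is a minimal sketch with a hypothetical function name. SSIM is omitted because it relies on local windowed statistics.

```python
import math
from typing import List

def psnr(a: List[List[int]], b: List[List[int]], peak: int = 255) -> float:
    """PSNR in dB between two equally sized 8-bit images (hypothetical helper)."""
    diffs = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return math.inf               # identical images, e.g. original vs. recovered
    return 10 * math.log10(peak ** 2 / mse)

print(psnr([[10, 20]], [[10, 20]]))   # inf
print(psnr([[0, 0]], [[255, 255]]))   # 0.0
```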

The four test images in Figure 13 were used in this experiment. Table 3 shows the PSNR and SSIM between the original and recovered images. The PSNR is ∞ and the SSIM is 1, indicating that the proposed scheme is fully reversible.

Table 4 compares the original image with the embedded encrypted image. The PSNR does not exceed 10 dB and the SSIM is close to 0. The two images are thus so different that the original image cannot be inferred from the embedded encrypted image.

4.3. Embedding Capacity

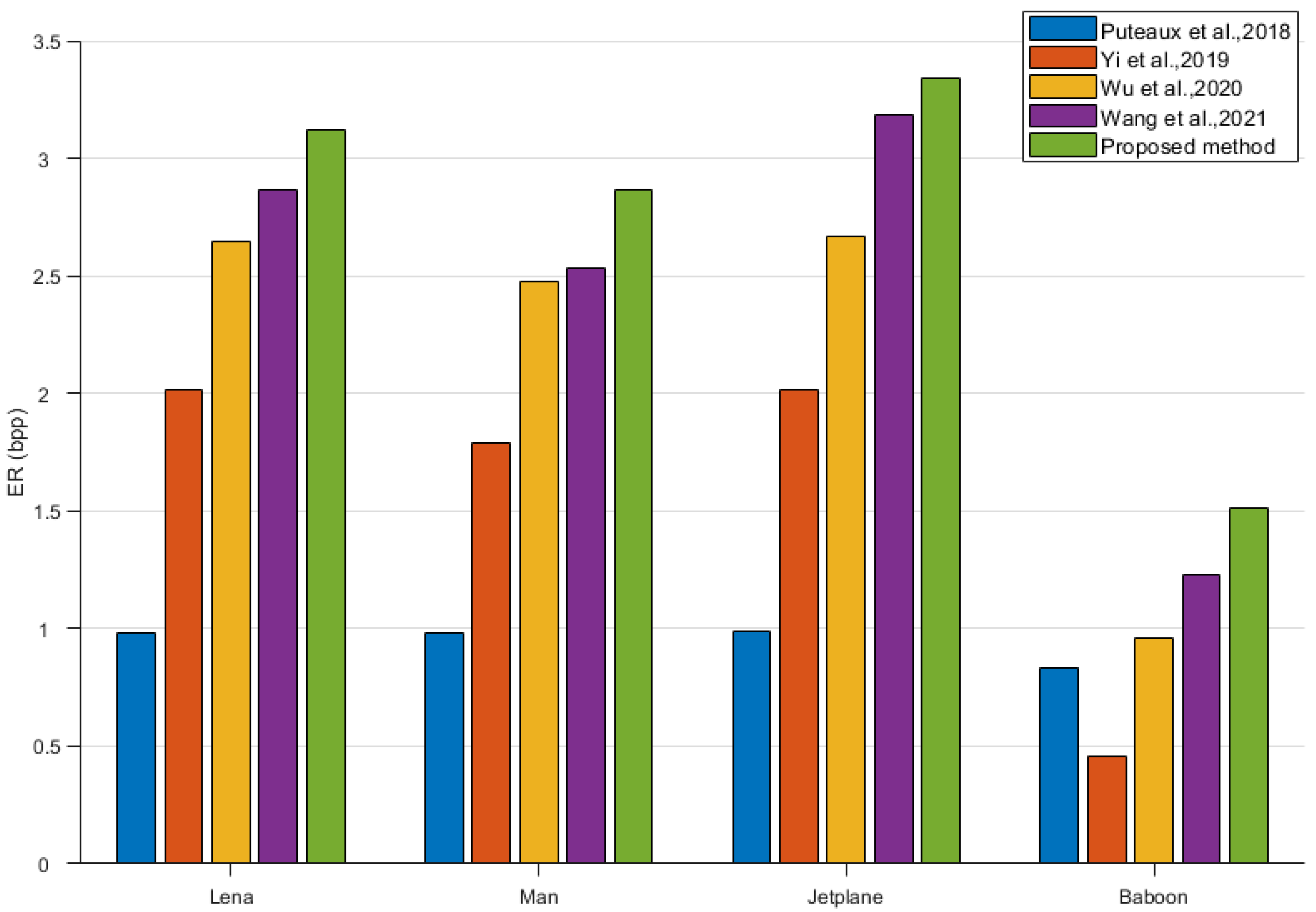

To evaluate the embedding capacity of the proposed scheme, we conducted experiments on the test images and datasets and compared the results with those of state-of-the-art schemes.

The embedding capacity is the total number of bits that can be embedded in an image. To compare images of different sizes, the embedding rate is used instead. It is the number of bits that can be embedded per pixel, measured in bits per pixel (bpp). A larger embedding rate means a greater embedding ability.

To obtain the highest embedding rates, every scheme used its best-performing parameter settings in the experiments.

Figure 16 compares the embedding rate of the proposed EPBTL scheme with that of four state-of-the-art (SOTA) schemes, namely Puteaux et al. [23], Wang et al. [25], Yi et al. [26], and Wu et al. [27]. The comparison uses the four test images Lena, Man, Jetplane, and Baboon. On all four test images, the proposed scheme improves the embedding rate over the other four SOTA schemes to varying degrees. The images ‘Jetplane’ and ‘Lena’ both reach embedding rates of over 3.0 bpp, which is a significant improvement. The image ‘Jetplane’ shows a smaller increase than the other three images. This is because fewer of its non-embeddable pixels have prediction errors that fall into the self-recordable subgroup, which increases the amount of data that must be recorded as auxiliary information.

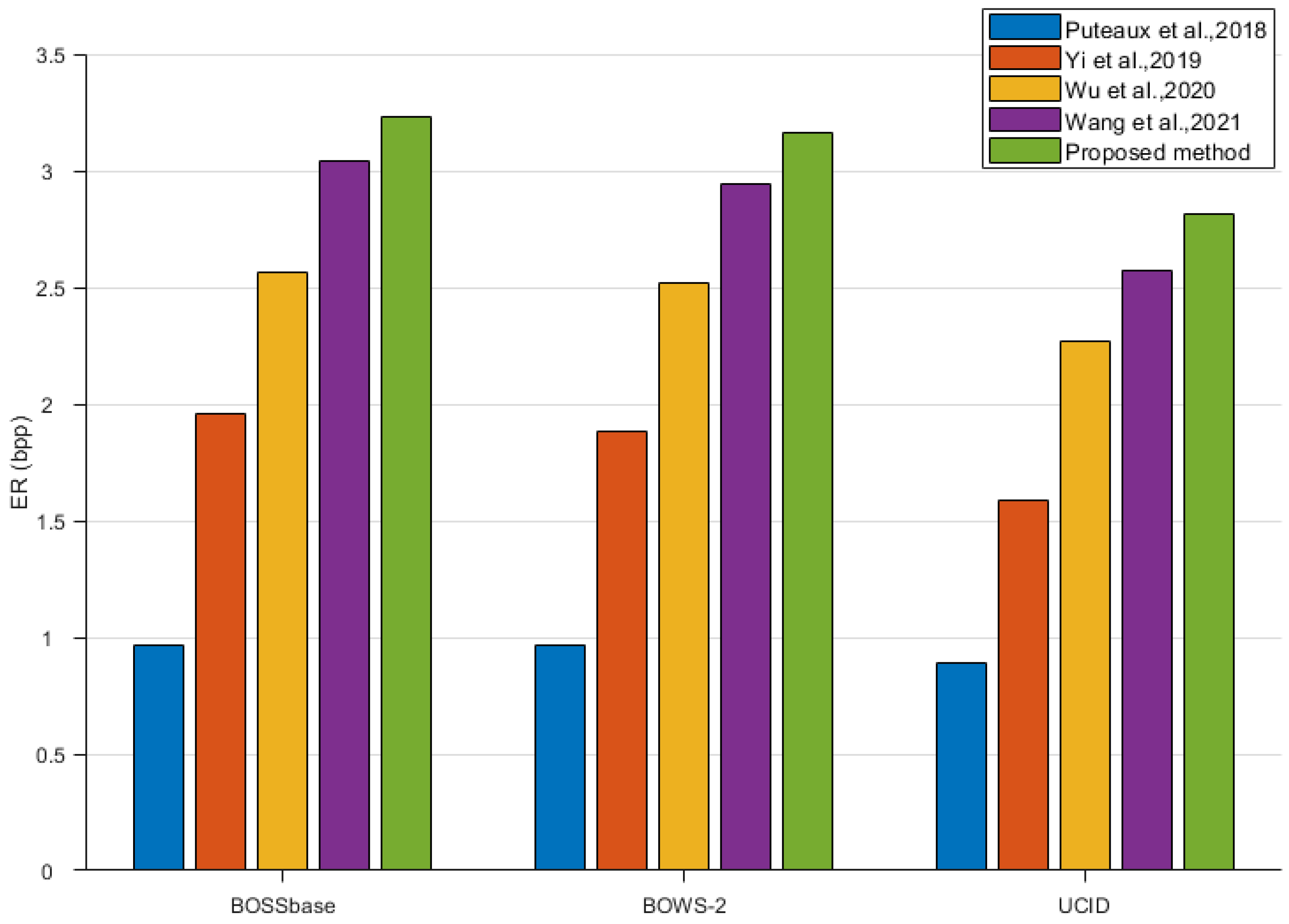

To verify the generality of the scheme, we compare the average embedding rates of these schemes on three datasets: UCID [28], BOSSbase [29], and BOWS-2 [30]. As can be seen in Figure 17, the proposed scheme also achieves an embedding rate of more than 3.0 bpp on BOSSbase and BOWS-2, and its embedding rate also improves on UCID. This indicates that the proposed scheme achieves a higher embedding rate than the other compared schemes across a wide variety of images.

Table 5, Table 6, Table 7 and Table 8 show the embedding rates of the IPBTL-RDHEI scheme and the proposed EPBTL scheme under the parameter settings listed in each table. Only the optimal value for each image over all settings needs to be considered. These settings were chosen because they yielded the best embedding rates in our experiments.

In Table 5, Table 6, Table 7 and Table 8, “/” indicates that no information can be embedded in that case, and the bold values are the best embedding rates for each image. In the proposed EPBTL scheme, the best embedding rates of the four images (Lena, Man, Jetplane, and Baboon) reach 3.1249, 2.8687, 3.3413, and 1.5125 bpp, respectively. This is an increase of between about 0.26 and 0.34 bpp over the IPBTL-RDHEI scheme, whose results are 2.7872, 2.5517, 3.0589, and 1.2502 bpp, respectively.

The increase in data payload results from the reduced size of the auxiliary information. The auxiliary information is smaller because the remaining bits of each pixel in the self-recordable subgroup are used to record that pixel's prediction error. Since the prediction error suffices to recover the pixel value, the auxiliary information does not need to store the original value of that pixel. Because many pixels belong to the self-recordable subgroup, the proposed EPBTL scheme achieves a significant improvement in embedding rate.
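A simplified accounting sketch makes this effect concrete. It follows the running example, with five free bits per embeddable pixel. It counts only the auxiliary information described in Section 3.2: eight bits for the special pixel, eight bits per non-recordable pixel, and nothing for self-recordable pixels. Any other overhead of the actual scheme is ignored. The pixel counts in the usage line are made up for illustration, and the function name is hypothetical.

```python
def net_embedding_rate(height: int, width: int, n_embeddable: int,
                       n_nonrecordable: int, label_len: int = 3) -> float:
    """Hypothetical, simplified EPBTL bookkeeping: (vacated bits - AI bits) per pixel, in bpp."""
    vacated = (8 - label_len) * n_embeddable          # bits above each embeddable label
    aux = 8 * (n_nonrecordable + 1)                   # special pixel + non-recordable pixels
    return (vacated - aux) / (height * width)         # self-recordable pixels cost nothing

print(net_embedding_rate(4, 4, n_embeddable=10, n_nonrecordable=1))  # 2.125
```

Under this accounting, moving a pixel from the non-recordable to the self-recordable subgroup saves eight bits of auxiliary information.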