The Concurrent Validity and Test-Retest Reliability of Possible Remote Assessments for Measuring Countermovement Jump: My Jump 2, HomeCourt & Takei Vertical Jump Meter

Abstract

Featured Application


1. Introduction

2. Materials & Methods

2.1. Participants

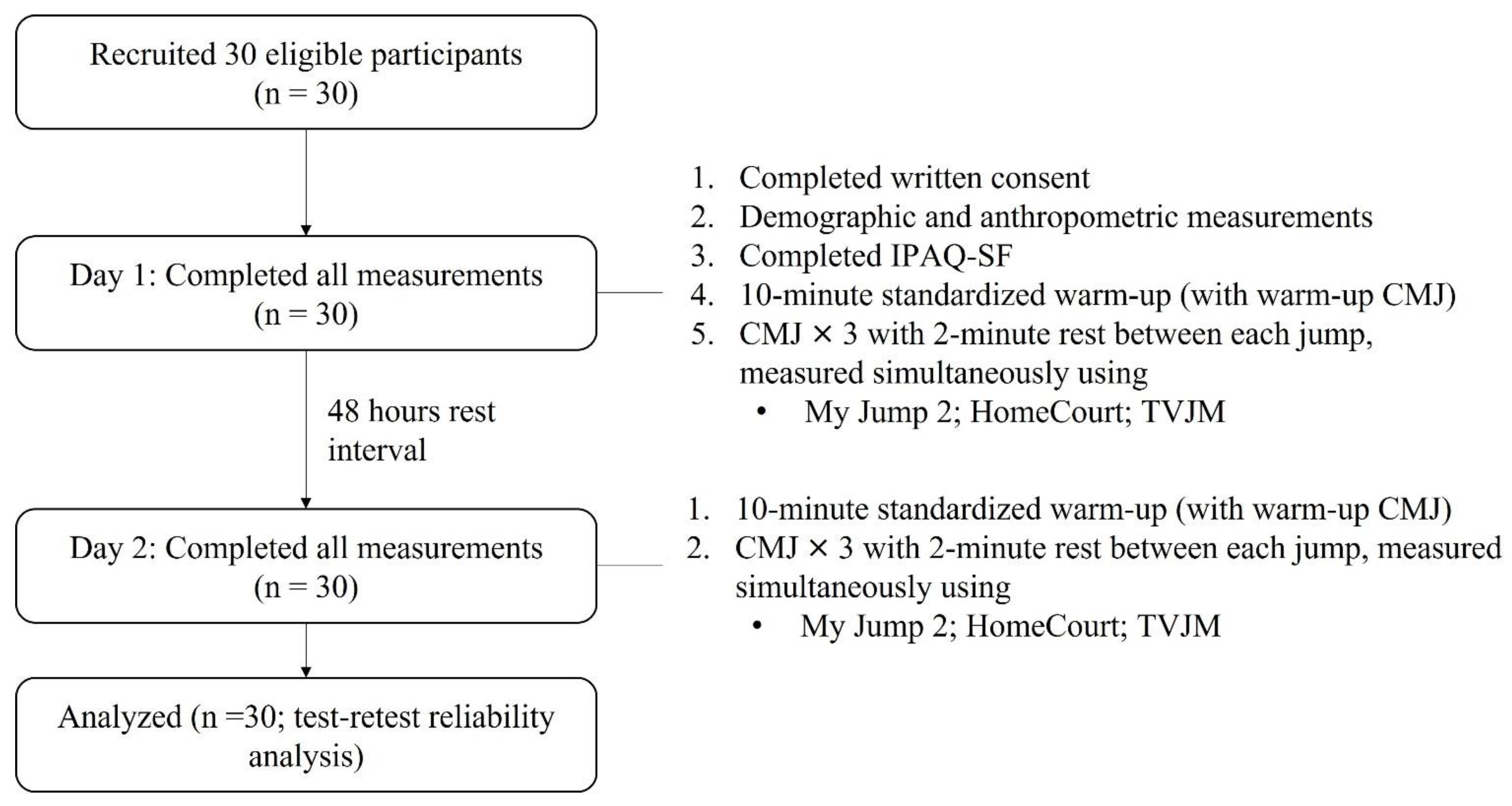

2.2. Procedures

2.3. CMJ

2.4. Measurements

2.5. Statistical Analyses

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


CMJ height (cm), mean ± SD

| | My Jump 2 (I) | HomeCourt (J) | TVJM (K) |
|---|---|---|---|
| Overall | 40.85 ± 7.86 | 46.10 ± 7.57 | 42.02 ± 8.11 |
| Day 1 | 40.91 ± 7.75 | 45.58 ± 7.50 | 41.80 ± 8.02 |
| Day 2 | 40.79 ± 7.44 | 46.62 ± 6.83 | 42.23 ± 8.05 |
| Overall mean difference (J–I), cm (95% CI); ES (d) | 5.25 (4.49, 6.01) *; d = 0.68 | | |
| Overall mean difference (K–I), cm (95% CI); ES (d) | 1.17 (0.63, 1.70) *; d = 0.15 | | |
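The effect sizes in the last two rows can be checked against the Overall row. A minimal sketch, assuming Cohen's d is the mean difference divided by a pooled SD computed as the root mean square of the two device SDs (the equal-sample-size form; the exact formula is not stated in this section), reproduces the reported d = 0.68 and d = 0.15. The helper `cohens_d` is hypothetical.

```python
import math


def cohens_d(mean_a: float, sd_a: float, mean_b: float, sd_b: float) -> float:
    """Cohen's d for two measures on the same participants: (mean_b - mean_a) / pooled SD.

    Assumption: pooled SD = sqrt((sd_a**2 + sd_b**2) / 2), i.e. equal sample sizes.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_b - mean_a) / pooled_sd


# Overall row of the table: (mean, SD) in cm
my_jump_2 = (40.85, 7.86)  # I
homecourt = (46.10, 7.57)  # J
tvjm = (42.02, 8.11)       # K

for label, other in (("J-I", homecourt), ("K-I", tvjm)):
    diff = other[0] - my_jump_2[0]
    d = cohens_d(*my_jump_2, *other)
    print(f"{label}: mean difference = {diff:.2f} cm, d = {d:.2f}")
```

The 95% CIs in those rows cannot be recovered this way, because they depend on the SD of the paired differences, which the table does not report.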

| | My Jump 2 | HomeCourt | TVJM |
|---|---|---|---|
| My Jump 2 | 1 | 0.85 * | 0.93 * |
| HomeCourt | | 1 | 0.85 * |
| TVJM | | | 1 |
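Each off-diagonal entry in the matrix above summarizes how closely two devices rank the same jumpers. Assuming the coefficients are Pearson product-moment correlations (the section does not say which coefficient was used), an entry could be computed from paired jump heights as in this minimal sketch; `pearson_r` is a hypothetical helper and the inputs in the usage line are toy values, not study data.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long sequences of paired scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares about the means
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Toy example: the second device reads a constant 5 cm higher,
# yet r = 1 because the ranking of jumpers is identical.
print(pearson_r([30.0, 35.0, 40.0, 45.0], [35.0, 40.0, 45.0, 50.0]))
```

The toy example also shows why a high correlation does not rule out systematic bias: HomeCourt correlates at 0.85 with My Jump 2 while reading about 5.25 cm higher on average in the mean-difference rows.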

| | Day 1 ICC(3,1) (95% CI) | Day 1 CV% | Day 1 SEM (cm) | Day 1 MDC (cm) | Day 2 ICC(3,1) (95% CI) | Day 2 CV% | Day 2 SEM (cm) | Day 2 MDC (cm) |
|---|---|---|---|---|---|---|---|---|
| My Jump 2 | 0.86 (0.72–0.93) | 6.45 | 3.07 | 8.51 | 0.88 (0.79–0.94) | 5.92 | 2.72 | 7.55 |
| HomeCourt | 0.83 (0.71–0.91) | 6.14 | 3.34 | 9.26 | 0.82 (0.68–0.90) | 5.52 | 3.14 | 8.71 |
| TVJM | 0.92 (0.85–0.96) | 4.70 | 2.32 | 6.43 | 0.95 (0.91–0.97) | 3.82 | 1.82 | 5.05 |
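The MDC column above is consistent with the common 95% minimal detectable change, MDC = SEM × 1.96 × √2, where the √2 term accounts for measurement error on both of the occasions being compared. The sketch below recomputes MDC from the tabulated SEM values; because SEM is printed to two decimals, agreement is within about 0.01 cm rather than exact. The function name `mdc95` is hypothetical.

```python
import math


def mdc95(sem: float) -> float:
    """95% minimal detectable change from the standard error of measurement (same units)."""
    return sem * 1.96 * math.sqrt(2)


# (SEM, reported MDC) in cm for each device and day, copied from the table above
reported = {
    ("My Jump 2", "Day 1"): (3.07, 8.51),
    ("My Jump 2", "Day 2"): (2.72, 7.55),
    ("HomeCourt", "Day 1"): (3.34, 9.26),
    ("HomeCourt", "Day 2"): (3.14, 8.71),
    ("TVJM", "Day 1"): (2.32, 6.43),
    ("TVJM", "Day 2"): (1.82, 5.05),
}

for (device, day), (sem, mdc_reported) in reported.items():
    print(f"{device:10s} {day}: MDC = {mdc95(sem):.2f} cm (reported {mdc_reported:.2f})")
```

Read the other way, a change in one athlete's CMJ height smaller than the MDC (for example, under 8.51 cm with My Jump 2 on Day 1) cannot be distinguished from measurement error at the 95% level.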

| | CMJ Day 1 (cm), mean ± SD | CMJ Day 2 (cm), mean ± SD | ICC(3,1) (95% CI) | CV% | SEM (cm) | MDC (cm) |
|---|---|---|---|---|---|---|
| My Jump 2 | 40.91 ± 7.75 | 40.79 ± 7.44 | 0.93 (0.87–0.97) | 3.68 | 1.95 | 5.41 |
| HomeCourt | 45.58 ± 7.50 | 46.62 ± 6.83 | 0.89 (0.77–0.94) | 4.31 | 2.42 | 6.71 |
| TVJM | 41.80 ± 8.02 | 42.23 ± 8.05 | 0.97 (0.93–0.98) | 2.58 | 1.46 | 4.05 |
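The between-day ICC(3,1) above is the two-way mixed-effects, consistency, single-measurement form, ICC(3,1) = (MS_R − MS_E) / (MS_R + (k − 1) MS_E), where MS_R is the between-participant mean square, MS_E the residual mean square, and k the number of sessions. A minimal sketch computing it from a participants × sessions matrix is shown below; it is a generic implementation of that textbook formula, not the authors' analysis code, and `icc_3_1` and the toy data are hypothetical.

```python
from typing import Sequence


def icc_3_1(scores: Sequence[Sequence[float]]) -> float:
    """ICC(3,1): two-way mixed effects, consistency, single measurement.

    `scores` is an n x k matrix: one row per participant, one column per session.
    """
    n = len(scores)
    k = len(scores[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular matrix with at least 2 rows and 2 columns")

    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between sessions
    ss_error = ss_total - ss_rows - ss_cols                 # residual

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)


# Toy data (not from the study): Day 2 = Day 1 + 1 cm for every participant.
# The consistency form ignores a constant shift, so the ICC is 1 up to rounding.
print(icc_3_1([[38.0, 39.0], [42.0, 43.0], [47.0, 48.0]]))
```

Because ICC(3,1) measures consistency, it ignores a uniform offset between sessions; that is why a device can show a high ICC while still differing from another device by a fixed amount. The 95% CIs in the table require F-distribution quantiles and are not computed in this sketch.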

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Chow, G.C.-C.; Kong, Y.-H.; Pun, W.-Y. The Concurrent Validity and Test-Retest Reliability of Possible Remote Assessments for Measuring Countermovement Jump: My Jump 2, HomeCourt & Takei Vertical Jump Meter. Appl. Sci. 2023, 13, 2142. https://doi.org/10.3390/app13042142

AMA Style: Chow GC-C, Kong Y-H, Pun W-Y. The Concurrent Validity and Test-Retest Reliability of Possible Remote Assessments for Measuring Countermovement Jump: My Jump 2, HomeCourt & Takei Vertical Jump Meter. Applied Sciences. 2023; 13(4):2142. https://doi.org/10.3390/app13042142

Chicago/Turabian Style: Chow, Gary Chi-Ching, Yu-Hin Kong, and Wai-Yan Pun. 2023. "The Concurrent Validity and Test-Retest Reliability of Possible Remote Assessments for Measuring Countermovement Jump: My Jump 2, HomeCourt & Takei Vertical Jump Meter" Applied Sciences 13, no. 4: 2142. https://doi.org/10.3390/app13042142

APA Style: Chow, G. C.-C., Kong, Y.-H., & Pun, W.-Y. (2023). The Concurrent Validity and Test-Retest Reliability of Possible Remote Assessments for Measuring Countermovement Jump: My Jump 2, HomeCourt & Takei Vertical Jump Meter. Applied Sciences, 13(4), 2142. https://doi.org/10.3390/app13042142