Abstract

Urine cytology, which is based on the examination of cellular images obtained from urine, is widely used for the diagnosis of bladder cancer. However, the diagnosis is sometimes difficult in highly heterogeneous carcinomas exhibiting weak cellular atypia. In this study, we propose a new deep learning method that utilizes image information from another organ for the automated classification of urinary cells. We first extracted 3137 images from 291 lung cytology specimens obtained from lung biopsies and trained VGG-16, a convolutional neural network (CNN), to classify benign and malignant cells. Subsequently, 1380 images were extracted from 123 urine cytology specimens and used to fine-tune the CNN that was pre-trained with lung cells. To confirm the effectiveness of the proposed method, we compared the classification performance of three different CNN training methods. The CNN fine-tuned using the proposed method achieved a sensitivity of 98.8% and a specificity of 98.2% for malignant cells, both higher than those of the CNN trained with only lung cells or only urinary cells. These results show that urinary cells can be classified automatically with high accuracy and suggest the possibility of building a versatile deep-learning model using cells from different organs.

1. Introduction

Bladder cancer is the 11th most prevalent cancer, and its global incidence is gradually increasing [1]. Most bladder cancers are urothelial carcinomas, occurring in the urothelium lining of the bladder. Cystoscopy, urine cytology, and ultrasonography are the gold standard for diagnosing bladder cancer. Urine cytology examines the presence or absence of cancer cells in the excreted urine, thereby making it a non-invasive technique that can be performed repeatedly. The detection rate of this technique for advanced stages of cancer is higher than that of cystoscopy, making it extremely useful in clinical diagnosis. However, the detection sensitivity is low for early stages of cancer, and the weak cellular atypia in highly differentiated cancers makes the identification of malignant cells challenging. Therefore, in clinical practice, there is a need to develop diagnostic support technologies that can test urinary cells with high accuracy. This study aimed to develop a computer-aided diagnosis (CAD) technique [2,3] for this purpose.

This study focuses on deep learning [4,5], an artificial intelligence technology, to provide diagnostic assistance in urine cytology. Deep learning is based on multi-layer neural network technology. In particular, convolutional neural networks (CNNs), which mimic animal vision, have been widely used for image classification, object detection, and the prediction of future events.

Deep learning has been applied widely in medical imaging, with many reports on computed tomography, magnetic resonance imaging [6,7,8], and pathological images [9,10,11]. For pathological images, we have developed diagnostic support technology for lung cytology in which a CNN is used to differentiate between benign and malignant cells [12,13,14]. We first proposed a method in which finely cut patch images are input into a CNN to classify benign and malignant cells and to create a malignancy map for the entire microscopic field of view [12]. The detection sensitivity and specificity for malignant cells aggregated per patch image were 94.6% and 63.4%, respectively, and those aggregated per case were 89.3% and 83.3%, respectively. During the classification of cytology images, it is important to include a wide variety of images in the training data to improve the detection efficiency. Therefore, we proposed a method that generates benign and malignant cell images using a generative adversarial network (GAN) and uses these synthesized images as part of the training data for the CNN [13]. We also proposed a weakly supervised learning method that uses the attention mechanism to classify benign and malignant cell images on a case-by-case basis without providing correct labels for individual patch images and showed that its performance is comparable to that of supervised learning [14].

Vaickus et al. attempted to automate the Paris System for reporting urinary cytology by using whole slide images in combination with cell shape analysis and deep learning, and they obtained an area under the curve (AUC) value of 0.92 for the classification of high- and low-risk groups [15]. Further, Awan et al. proposed a method for risk stratification of urinary cytology using a CNN after recognizing urinary cells using image processing [16].

In these studies, the CNN model was trained by collecting the cellular images of interest. Good classification performance can be achieved when the disease has a high prevalence and sufficient data are available. However, rare diseases or unbalanced data sets such as the presence of very few malignant cells compared to benign cells can result in poor classification performance. In such cases, it would be ideal to train the CNN using cell images from different sites to enable variation learning, thereby generating a CNN model with high performance.

Transfer learning and fine-tuning are techniques for adapting CNNs to other applications. For instance, a CNN with excellent classification performance, such as GoogLeNet [17] or VGGNet [18], that has been trained on a large number of natural images from the Internet can be partially modified and then fine-tuned with medical images to obtain high performance on a medical image classification task [12,13,19]. In this case, an acceptable performance may be obtained even if the number of medical images is much smaller than the number of natural images used to train the original deep learning model. Because lung and urine cytology images share common cell features, a deep learning model that is first trained using lung cells and then applied to the classification of urinary cells may perform better than a model trained using urinary cells alone. Therefore, in this study, we propose an automated classification method for urinary cell images using a CNN pre-trained on lung cell images.

2. Materials and Methods

2.1. Outline of the Proposed Scheme

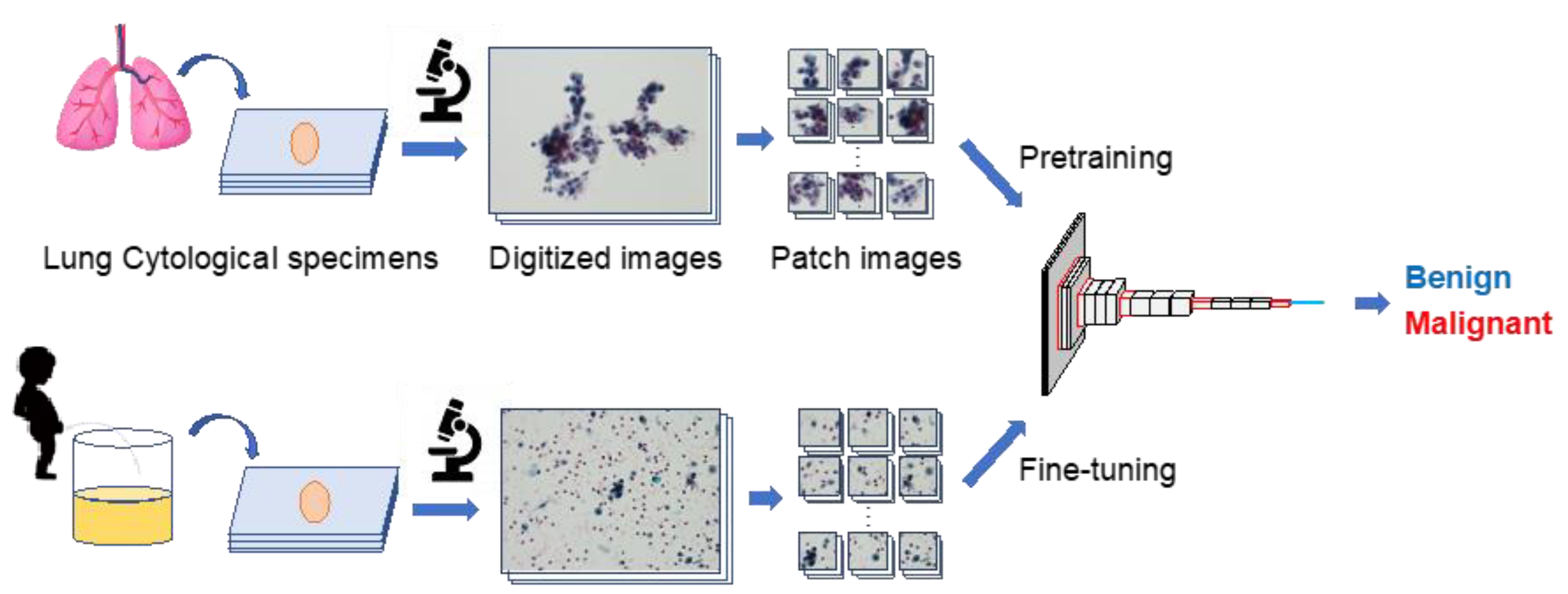

An outline of the proposed method is shown in Figure 1. First, patch images were created from lung and urine cytology specimens. Subsequently, a CNN was trained to classify the lung cell patch images as either benign or malignant. The CNN was then fine-tuned using patch images of urinary cells, and the accuracy of urinary cell classification was evaluated.

Figure 1.

Outline of the proposed scheme.

2.2. Image Dataset

2.2.1. Lung Cells

Liquid-based cytology specimens (BD SurePath Liquid-based Pap Test; Becton Dickinson, Durham, NC, USA) prepared using lung cells collected by forceps biopsy and subsequently stained using the Papanicolaou method were obtained from the Fujita Health University Hospital, Japan. There were 116 benign and 175 malignant cases. Malignant cases included 122 adenocarcinomas and 53 squamous cell carcinomas.

2.2.2. Urinary Cells

Urothelial cells collected from voided urine samples were processed using the liquid-based cytology technique, as described above. Initially diagnosed cases were chosen because recurrent cases or cases treated with chemotherapy may show irregular morphology. Two categories from The 2015 Japan Reporting System for Urinary Cytology, “Negative for malignancy” and “Malignant”, were selected for this analysis; the latter consisted of high-grade urothelial carcinoma and urothelial carcinoma in situ (HGUC/CIS). Intermediate categories such as “Atypical cells” and “Suspicious for malignancy”, possibly from low-grade urothelial carcinomas (LGUC), were excluded to avoid uncertain diagnosis. All of the cases were validated by two cytotechnologists and a certified cytopathologist at the time of diagnosis. The samples included 64 benign and 59 malignant cases. A subsequent histopathological analysis diagnosed the malignant cases as urothelial carcinoma. In all cases, the final diagnosis was made based on histopathological or immunohistochemical findings.

Images were acquired using a microscope (BX53, Olympus, Tokyo, Japan) fitted with a digital camera (DP74, Olympus) and a 40× objective lens. This produced 1172 images of benign and 842 images of malignant lung cells and 706 images of benign and 664 images of malignant urinary cells, which were digitized at 1280 × 960 pixels. Subsequently, they were resized to patch images of 296 × 296 pixels. Finally, 2249 benign and 888 malignant digital images of lung cells, and 716 benign and 664 malignant digital images of urinary cells, were obtained. A maximum of five pictures were taken per case. Each cell was evaluated again by one cytotechnologist and one certified cytopathologist. Cells judged to be malignant by both evaluators were included.
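As a quick consistency check, the patch counts above can be tallied against the totals quoted in the abstract (3137 lung and 1380 urinary images). The short Python sketch below uses only the numbers reported in this section; the dictionary layout and the `summarize` helper are illustrative names and are not part of the software used in the study.

```python
# Patch counts as reported in Section 2.2 (benign, malignant).
PATCH_COUNTS = {
    "lung": {"benign": 2249, "malignant": 888},
    "urine": {"benign": 716, "malignant": 664},
}


def summarize(counts):
    """Return the total and the malignant fraction for each organ."""
    summary = {}
    for organ, per_class in counts.items():
        total = per_class["benign"] + per_class["malignant"]
        summary[organ] = {
            "total": total,
            "malignant_fraction": per_class["malignant"] / total,
        }
    return summary


for organ, stats in summarize(PATCH_COUNTS).items():
    print(f"{organ}: {stats['total']} patches, "
          f"{stats['malignant_fraction']:.1%} malignant")
```

The tally suggests that the lung set is noticeably imbalanced toward benign patches, whereas the urinary set is close to balanced.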

3. Network Architectures

We adopted VGG-16 [18], proposed by the Visual Geometry Group at the University of Oxford in 2014, as the CNN architecture for image classification. Our previous studies [12,13], in which VGG-16 was used for benign and malignant classification of lung cells, showed that VGG-16 had better classification performance than the other state-of-the-art models. Therefore, in this study, we developed a classification model for urinary cells by fine-tuning a VGG-16 network that was pre-trained with lung cells.

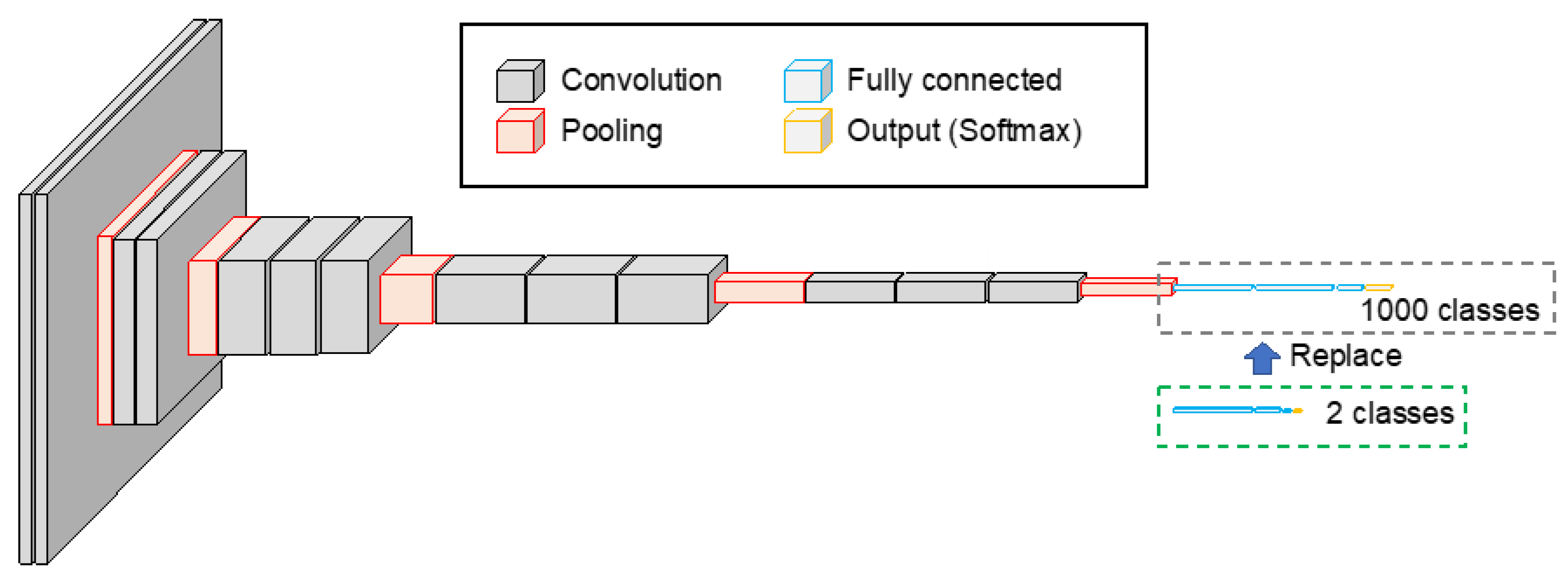

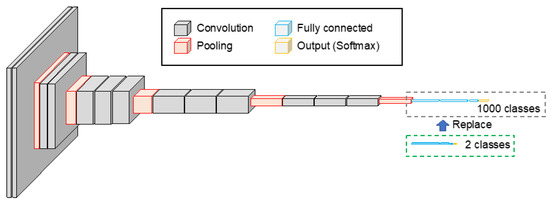

The original VGG-16 is a CNN with a simple structure, consisting of 13 convolution layers, 5 pooling layers, and 3 fully connected layers, as shown in Figure 2. The fully connected layers of the VGG-16 pre-trained using 1.2 million images in ImageNet, a database of natural images, were replaced with fully connected layers of 1024, 256, and 2 units to enable the classification of benign and malignant lung cells. ReLU was used as the activation function for the fully connected layers, and the softmax function was used to normalize the categorical output. The network was then fine-tuned using 2249 and 888 patch images of benign and malignant cases, respectively, as described above. The number of training epochs was 50, and the learning coefficient was set to 10⁻⁵, using Adam as the optimization algorithm. Further, 20% of the training data were used as validation data.

Figure 2.

CNN architecture introduced to the proposed scheme. Two-class classification model was built by replacing the fully connected layer of the VGG-16 model.
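The layer bookkeeping behind Figure 2 can be sketched in plain Python. The snippet below counts the layers of the standard VGG-16 convolutional stack and the parameters of the replacement head (1024, 256, and 2 units). The 224 × 224 network input and the flattening of the last feature map before the first fully connected layer are assumptions borrowed from the original VGG-16 design; the text does not state how the 296 × 296 patches are fed to the network.

```python
# Standard VGG-16 convolutional stack: (number of conv layers, output channels)
# for each of the five blocks; every block ends with one 2x2 max-pooling layer.
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]
HEAD_UNITS = [1024, 256, 2]  # replacement fully connected layers from the text


def conv_stack_summary(input_size=224, in_channels=3):
    """Count layers and parameters and track the feature-map size.

    Assumes 3x3 convolutions with 'same' padding, as in the original VGG-16.
    The input size of 224 is an assumption, not a value from the paper.
    """
    n_conv = n_pool = n_params = 0
    size, channels = input_size, in_channels
    for n_layers, out_channels in VGG16_BLOCKS:
        for _ in range(n_layers):
            n_params += 3 * 3 * channels * out_channels + out_channels
            channels = out_channels
            n_conv += 1
        size //= 2  # 2x2 max pooling halves height and width
        n_pool += 1
    return n_conv, n_pool, n_params, (size, size, channels)


def head_params(n_inputs, units=HEAD_UNITS):
    """Parameters (weights + biases) of the stacked fully connected layers."""
    total = 0
    for n_units in units:
        total += n_inputs * n_units + n_units
        n_inputs = n_units
    return total


n_conv, n_pool, conv_params, (h, w, c) = conv_stack_summary()
print(n_conv, n_pool, conv_params, (h, w, c))
print(head_params(h * w * c))
```

Under these assumptions, the replacement head would hold more trainable parameters than the whole convolutional stack, which is one reason a small learning coefficient is a sensible choice when fine-tuning on a few thousand patches.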

Next, the VGG-16 pre-trained on lung cells was fine-tuned using urine cytology images (716 benign and 664 malignant). The number of training epochs was 50, and the learning coefficient was set to 10⁻⁶, using Adam as the optimization algorithm.
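To make the effect of the two learning coefficients concrete, the sketch below implements a single Adam update for one scalar parameter. It uses the commonly cited default Adam hyperparameters (β1 = 0.9, β2 = 0.999, ε = 1e-8), which are an assumption here; the text specifies only the optimizer and the learning coefficient. The starting value and gradient are arbitrary illustrative numbers.

```python
import math


def adam_step(param, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; returns (param, m, v)."""
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# First update from the same starting point with the two learning coefficients
# (1e-5 for the lung stage, 1e-6 for the urinary fine-tuning stage).
for lr in (1e-5, 1e-6):
    new_param, _, _ = adam_step(param=0.5, grad=3.0, m=0.0, v=0.0, t=1, lr=lr)
    print(f"lr={lr:g}: parameter moved by {0.5 - new_param:.2e}")
```

On the first step, the size of an Adam update is close to the learning coefficient regardless of the gradient scale, so the urinary fine-tuning stage changes each weight roughly ten times more gently than the lung training stage.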

4. Evaluation Metrics

In this study, we developed a novel method by fine-tuning the CNN model trained on lung cells with urinary cells, to obtain good classification accuracy with a small number of urinary cells. To confirm the effectiveness of the proposed method, we evaluated the classification accuracy of the urinary cells using three different methods:

Method 1: The CNN trained using lung cell images was applied directly to the urinary cell image classification process. In other words, images of urinary cells were not used in training the CNN.

Method 2: The CNN was trained and evaluated using only the images of urinary cells. In other words, the images of the lung cells were not used in training the CNN.

Method 3: The CNN pre-trained on lung cell images was fine-tuned using urinary cell images, and the classification accuracy of the urinary cell images was evaluated. This is the method proposed in this study.
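The three methods differ only in which training stages are applied before evaluation on urinary cells. A minimal sketch of that structure is given below; the `Stage` class, the `METHODS` table, and `describe` are hypothetical names introduced for illustration. The lung and urine settings are the ones reported in the Network Architectures section, while starting Method 2 from ImageNet weights and reusing the urinary settings for it are assumptions, since the text does not state them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    dataset: str          # which cell images are used for training
    learning_rate: float  # Adam learning coefficient
    epochs: int


LUNG_STAGE = Stage("lung", 1e-5, 50)    # settings reported for lung training
URINE_STAGE = Stage("urine", 1e-6, 50)  # settings reported for urinary fine-tuning

# Assumption: Method 2 reuses the urinary settings and, like the other methods,
# starts from ImageNet-pretrained VGG-16 weights.
METHODS = {
    "Method 1": [LUNG_STAGE],
    "Method 2": [URINE_STAGE],
    "Method 3": [LUNG_STAGE, URINE_STAGE],
}


def describe(name):
    """Return a one-line summary of the training pipeline for a method."""
    stages = " -> ".join(
        f"{s.dataset} (lr={s.learning_rate:g}, {s.epochs} epochs)"
        for s in METHODS[name]
    )
    return f"{name}: ImageNet weights -> {stages} -> evaluate on urine"


for name in METHODS:
    print(describe(name))
```

Written this way, Method 3 is simply the stage of Method 1 followed by the stage of Method 2, which makes the comparison between the three results easier to interpret.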

The classification methods described above produce different results. However, it is difficult to examine the basis of the classification from the VGG-16 results because it is not possible to understand the parts of the image that were focused on for the classification. Therefore, to visualize the basis for classification, we introduced gradient-weighted class activation mapping (Grad-CAM) [20], which can output the areas that contribute to the CNN classification in the form of a heat map (activation map).
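The core Grad-CAM computation is short enough to sketch without a deep learning framework. In the example below, `activations` and `gradients` stand for the feature maps of one convolutional layer and the gradients of the class score with respect to them; in practice both would come from the network through automatic differentiation, whereas here they are small hand-written lists used only for illustration. Upsampling the map to the input image size is omitted.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heat map from one convolutional layer.

    activations[k][i][j]: feature map k at spatial position (i, j)
    gradients[k][i][j]:   d(class score) / d(activations[k][i][j])
    """
    n_rows, n_cols = len(activations[0]), len(activations[0][0])
    # Channel weights: global average of the gradients over each feature map.
    weights = [sum(map(sum, g)) / (n_rows * n_cols) for g in gradients]
    # Weighted sum of the feature maps followed by ReLU.
    cam = [
        [max(0.0, sum(w * a[i][j] for w, a in zip(weights, activations)))
         for j in range(n_cols)]
        for i in range(n_rows)
    ]
    # Scale to [0, 1] so the map can be displayed as a heat map.
    peak = max(max(row) for row in cam)
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


# Toy example: two 2x2 feature maps, the second one with negative influence.
toy_activations = [[[1.0, 0.0], [0.0, 2.0]], [[0.0, 3.0], [1.0, 0.0]]]
toy_gradients = [[[0.5, 0.5], [0.5, 0.5]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(toy_activations, toy_gradients))
```

Positions where only the negatively weighted feature map is active are clipped to zero by the ReLU, so the heat map highlights only regions that push the score of the chosen class upward.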

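In addition, as an index for evaluating classification accuracy, we counted the true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) and created a confusion matrix. Subsequently, the detection sensitivity (recall), specificity, precision, accuracy, and F1 score were calculated based on the following equations:

$$\text{Sensitivity (Recall)} = \frac{TP}{TP + FN}$$

$$\text{Specificity} = \frac{TN}{TN + FP}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$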
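The five indices follow directly from the four confusion-matrix counts. The sketch below uses the standard definitions and treats malignant as the positive class; the function name and the example counts are illustrative and are not the study's results. Division by zero (for example, a fold with no positive predictions) is not handled.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute the evaluation indices from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # recall for the malignant class
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
    }


# Hypothetical counts, for illustration only.
example = classification_metrics(tp=90, tn=80, fp=20, fn=10)
for name, value in example.items():
    print(f"{name}: {value:.3f}")
```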

To obtain the above results for all the data, the CNN was trained and evaluated using cross-validation for Methods 2 and 3 because the urinary data were used for both training and evaluation. Cross-validation divides the data into N subsets, trains the model on N − 1 subsets, and evaluates the classification accuracy on the remaining subset. By rotating the subset held out for evaluation and resetting the parameters before each training run, classification results were obtained for all the data. In this study, N = 5 was used; that is, 80% of the data were used for training and 20% for testing in each run. Images of the same case were assigned so that they were never split between the training data and the evaluation data. For Method 1, in contrast, a single evaluation was performed because the training (lung) and evaluation (urine) data were different.
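The requirement that images of one case never appear in both the training and evaluation data can be met by assigning whole cases, rather than individual images, to folds. The following is a minimal sketch of such a case-level five-fold split in plain Python; the function names, the round-robin assignment, and the fixed random seed are assumptions, and the text does not say whether the folds were also balanced by class.

```python
import random
from collections import defaultdict


def case_level_folds(case_ids, n_folds=5, seed=0):
    """Assign image indices to folds so that all images of a case share one fold."""
    by_case = defaultdict(list)
    for index, case_id in enumerate(case_ids):
        by_case[case_id].append(index)
    cases = sorted(by_case)
    random.Random(seed).shuffle(cases)
    folds = [[] for _ in range(n_folds)]
    for position, case_id in enumerate(cases):
        folds[position % n_folds].extend(by_case[case_id])
    return folds


def train_test_splits(case_ids, n_folds=5, seed=0):
    """Yield (train_indices, test_indices) once for each held-out fold."""
    folds = case_level_folds(case_ids, n_folds, seed)
    for k, test in enumerate(folds):
        train = [i for j, fold in enumerate(folds) if j != k for i in fold]
        yield train, test


# Toy data: 10 hypothetical cases with 3 images each.
toy_case_ids = [f"case{i // 3}" for i in range(30)]
for train, test in train_test_splits(toy_case_ids):
    print(len(train), len(test))
```

Because cases contribute different numbers of images, the 80%/20% proportion holds only approximately at the image level. Libraries such as scikit-learn provide a comparable grouped splitter (`GroupKFold`), which may be preferable in practice.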

The CNN calculations were performed using the software we developed in the Python programming language with an AMD Ryzen 9 3950X processor (16 CPU cores, 4.7 GHz) with 128 GB of DDR4 memory. The training processes of the CNNs were accelerated using an NVIDIA Quadro RTX 8000 GPU (48 GB memory).

5. Results

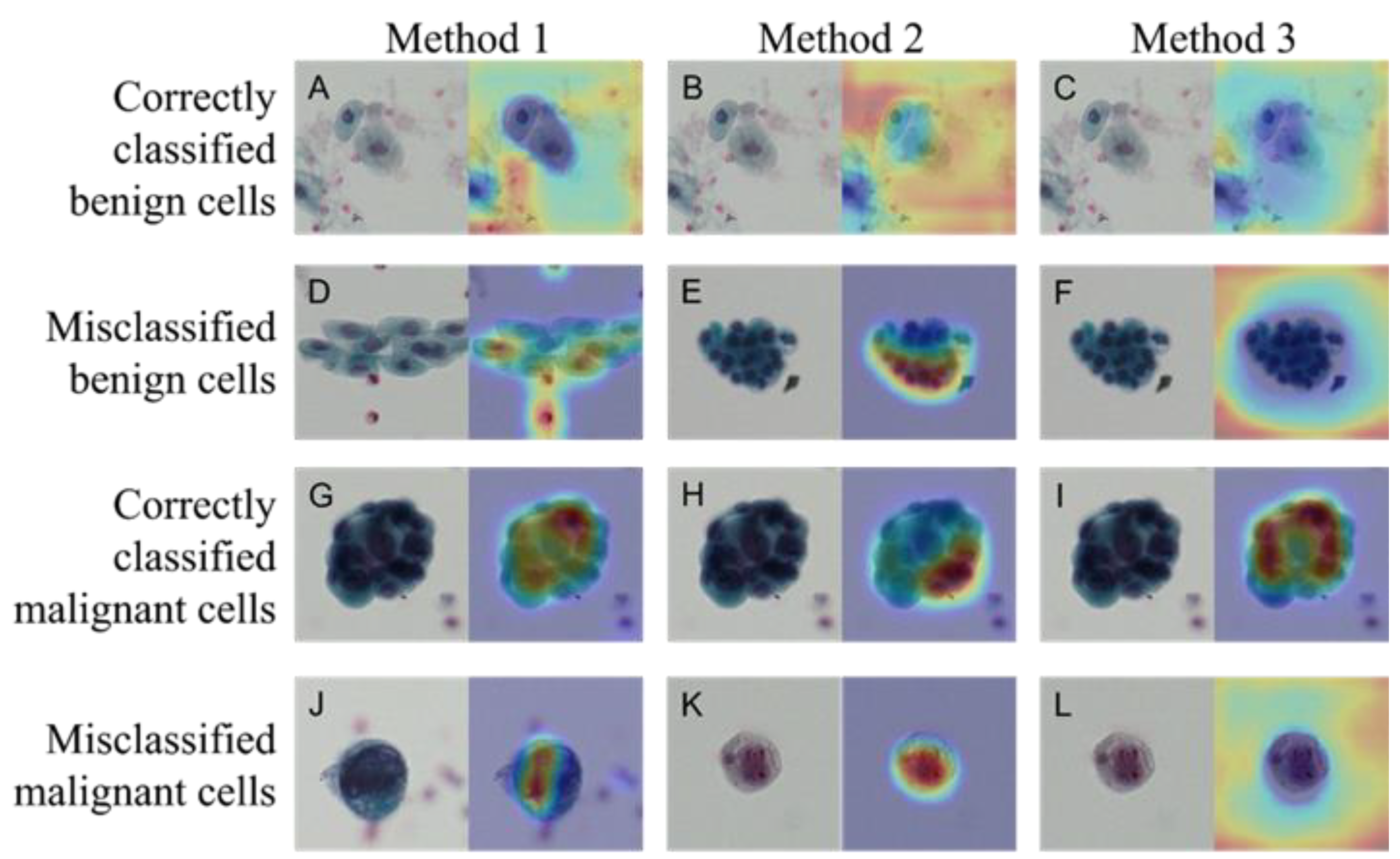

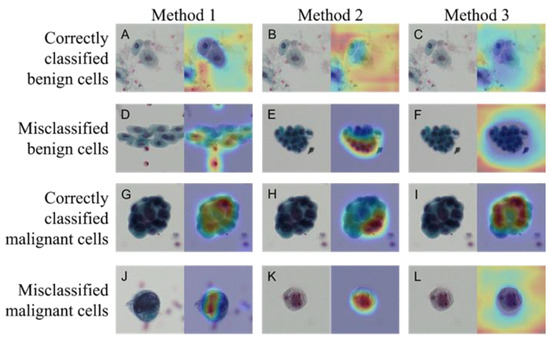

Figure 3 shows the images that were correctly and incorrectly classified using Methods 1–3. The activation map obtained by Grad-CAM is also shown for each image. The CNN used in this study tended to classify cells as benign when there were no malignant cells or when the densest chromatin was noted. Table 1 shows the confusion matrices of the classification results obtained using the three methods. For Methods 2 and 3, the numbers shown are the sums over the five analyses of the five-fold cross-validation, whereas for Method 1 they come from a single analysis. Table 2 shows the detection sensitivity, specificity, precision, accuracy, and F1 score calculated from the confusion matrices. For Methods 2 and 3, averages and standard deviations (Ave ± SD) were obtained from the five analyses of the five-fold cross-validation, whereas for Method 1 the values come from a single analysis and therefore have no SD. Method 3 was the most accurate among the three methods.
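The two kinds of aggregation used for Tables 1 and 2 can be sketched as follows: confusion counts are summed across the five analyses, whereas each index is averaged across them and reported with its standard deviation. The per-fold counts below are hypothetical placeholders, not the study's results, and the use of the sample standard deviation is an assumption because the text does not say which estimator was used.

```python
from statistics import mean, stdev

# Hypothetical per-fold confusion counts, for illustration only.
FOLDS = [
    {"tp": 9, "tn": 8, "fp": 2, "fn": 1},
    {"tp": 8, "tn": 9, "fp": 1, "fn": 2},
    {"tp": 10, "tn": 10, "fp": 0, "fn": 0},
    {"tp": 9, "tn": 9, "fp": 1, "fn": 1},
    {"tp": 9, "tn": 10, "fp": 0, "fn": 1},
]


def sum_confusion(folds):
    """Element-wise sum of the per-fold confusion counts (Table 1 style)."""
    return {key: sum(fold[key] for fold in folds)
            for key in ("tp", "tn", "fp", "fn")}


def sensitivity(fold):
    """Sensitivity of one fold, with malignant as the positive class."""
    return fold["tp"] / (fold["tp"] + fold["fn"])


def mean_sd(values):
    """Average and sample standard deviation across folds (Table 2 style)."""
    return mean(values), stdev(values)


total = sum_confusion(FOLDS)
ave, sd = mean_sd([sensitivity(fold) for fold in FOLDS])
print(total)
print(f"sensitivity: {ave:.3f} ± {sd:.3f}")
```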

Figure 3.

Correctly classified and misclassified images and their activation maps. Benign cell images that were correctly classified (A–C) and misclassified (D–F) by Methods 1–3, respectively. Likewise for malignant cell images: correctly classified (G–I) and misclassified (J–L). Each photo shows Papanicolaou staining (left panel) and the activation map obtained by Grad-CAM (right panel).

Table 1.

Confusion matrices of the three classification methods.

Table 2.

Summary of evaluation index for image classification.

6. Discussion

This study revealed that the method of training the CNN model with lung cell images and then fine-tuning with urinary cell images was more accurate than training with urinary cell images alone. In clinical practice, urinary cytology is determined based on cellular characteristics such as increased nuclear chromatin, increased nuclear-cytoplasmic ratio (N/C ratio), and nuclear enlargement [21], which are often applied in lung cytology as well. The malignant-lung-cell images used in this study contained two histologically different tumor cells: squamous cell carcinoma and adenocarcinoma. The common characteristics of these cells are increased nuclear chromatin, increased N/C ratio, and nuclear enlargement. Other features include nucleolus clarity, flow-like arrangement, and the presence of bright cells in squamous cell carcinoma; and nucleolus clarity, enlargement, nuclear maldistribution, and mucus production in adenocarcinoma [21].

In this study, liquid-based cytology specimens stained using the Papanicolaou method were prepared from urinary cells collected from voided urine. In this process, detached urothelial cells in the urine are prone to degeneration, forming degenerated normal urothelial cells called reactive urothelial cells. The degeneration is caused by a variety of factors, including a high degree of inflammation, the presence of stones, drug and radiation therapy, early morning urine, and mechanical manipulation with catheterized urine. Degenerated urinary cells are characterized by nuclear enlargement and clear nucleoli compared to those of normal cells, and it is difficult to distinguish them from malignant cells. In actual clinical practice, the distinction is made based on the presence or absence of an irregular nuclear shape and increased nuclear chromatin, which requires advanced skills. To address this problem, this study proposed an automated method for the more accurate classification of urinary cytology. In the future, the judgement of individual cells should evolve into a case-based diagnosis that uses all of the cells in a specimen, which would fit more closely with the actual diagnostic process.

The results of the evaluation showed that Method 3 was the most accurate among all methods. Grad-CAM visualizes important pixels by weighting the feature maps with the gradients of the predicted class score. The visualization of the region of interest using Grad-CAM revealed the characteristics of this region for each method. The Grad-CAM results for Methods 1 through 3 reveal three limitations of the model. First, normal urothelial cells (Figure 3A–C) were judged to be benign by looking at areas other than the urinary cells. In other words, the benign label appears to be inferred from the absence of malignant cells rather than from the urinary cells themselves. In the light-colored stained specimens (Figure 3K,L), the cytoplasm and nucleus appear paler than those of other malignant cells, and it can be inferred that the cells themselves could not be recognized. This suggests that it will be essential to construct a system capable of color correction in the future, especially when using specimens with reduced stainability. Second, when recognizing cell clusters, Methods 1 and 3 focused on cells with the highest chromatin volume, whereas Method 2 focused on the edges of the clusters. These points of view seemed similar to those used in manual cytodiagnosis. Third, some degenerated urothelial cells (Figure 3E,F) tended to be misclassified as malignant cells, suggesting the need for further training with more image data.

Previous studies on cytological diagnosis either used only cell images that were to be processed or used cell images to fine-tune a model that was pre-trained with natural images. This study attempted to classify urinary cells with different image characteristics using a deep learning model that was pre-trained with natural images and lung cytology images and evidently obtained satisfactory results. This suggests that rare diseases, for which only a limited number of images are available, may be processed in combination with samples of highly prevalent diseases. This might solve one of the problems of deep learning, which is the requirement of a large number of training data.

A limitation of this study is that the samples were prepared at a single facility and images were collected using a single microscope. In the future, the validity of this method should be confirmed using specimens and images collected from multiple institutions. In addition, the malignant cases in this study comprised only high-grade carcinomas. Future studies should include low-grade and atypical cell cases, which are more difficult to diagnose. Although the effect of fine-tuning was confirmed using a limited number of urinary cells in this study, there is a close relationship between data size and fine-tuning performance. In the future, it will be necessary to prepare data from a larger number of urinary cells to clarify the relationship between the amount of training data and classification performance, as well as to further investigate fine-tuning methods.

7. Conclusions

In this study, we proposed a new deep learning method for the automated classification of urinary cells. We observed that pre-training the model with lung cells and fine-tuning with urinary cells produced more accurate results than training with urinary cells alone. Using cells from different organs has the potential to yield versatile deep-learning models. These results are important for establishing a more accurate and automated method for the cytological diagnosis of rare diseases.

Author Contributions

Conceptualization, A.T. and T.T.; data curation, A.M., Y.K., E.S. and R.S.; investigation, A.T., A.M. and T.T.; methodology, A.T.; project administration, T.T.; software, A.T.; validation, A.M., A.T., Y.K. and E.S.; visualization: A.T. and A.M.; writing—original draft preparation, A.T. and A.M.; and writing—review and editing, Y.K. and T.T. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by a grant-in-aid for Scientific Research from Fujita Health University, 2022 to our Department. The authors received no external funding for this research.

Institutional Review Board Statement

All of the procedures performed in this study involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. This study was approved by an institutional review board, Fujita Health University, and patient informed consents were obtained under the condition that all data were anonymized (No. HM21-107).

Informed Consent Statement

Informed consent was obtained from all of the subjects for research use, while they retained the option to opt out of this study.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors have no conflict of interest to declare (No. CI22-310).

References

1. Sung, H.; Ferlay, J.; Siegel, R.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2021, 71, 209–249.

2. Doi, K. Computer-aided diagnosis in medical imaging: Historical review, current status and future potential. Comput. Med. Imaging Graph. 2007, 31, 198–211.

3. Fujita, H. AI-based computer-aided diagnosis (AI-CAD): The latest review to read first. Radiol. Phys. Technol. 2020, 13, 6–19.

4. Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

5. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

6. Zhou, X.; Takayama, R.; Wang, S.; Hara, T.; Fujita, H. Deep learning of the sectional appearances of 3D CT images for anatomical structure segmentation based on an FCN voting method. Med. Phys. 2017, 44, 5221–5233.

7. Yan, K.; Wang, X.; Lu, L.; Summers, R. DeepLesion: Automated mining of large-scale lesion annotations and universal lesion detection with deep learning. J. Med. Imaging 2018, 5, 036501.

8. Teramoto, A.; Fujita, H.; Yamamuro, O.; Tamaki, T. Automated detection of pulmonary nodules in PET/CT images: Ensemble false-positive reduction using a convolutional neural network technique. Med. Phys. 2016, 43, 2821–2827.

9. Sirinukunwattana, K.; Raza, S.A.; Yee-Wah, T.; Snead, D.; Cree, I.; Rajpoot, N. Locality sensitive deep learning for detection and classification of nuclei in routine colon cancer histology images. IEEE Trans. Med. Imaging 2016, 35, 1196–1206.

10. Iizuka, O.; Kanavati, F.; Kato, K.; Rambeau, M.; Arihiro, K.; Tsuneki, M. Deep learning models for histopathological classification of gastric and colonic epithelial tumours. Sci. Rep. 2020, 10, 1504.

11. Hashimoto, N.; Fukushima, D.; Koga, R.; Takagi, Y.; Ko, K.; Kohno, K.; Nakaguro, M.; Nakamura, S.; Hontani, H.; Takeuchi, I. Multi-scale domain-adversarial multiple-instance CNN for cancer subtype classification with unannotated histopathological images. arXiv 2020, arXiv:2001.01599v2.

12. Teramoto, A.; Yamada, A.; Kiriyama, Y.; Tsukamoto, T.; Yan, K.; Zhang, L.; Imaizumi, K.; Saito, K.; Fujita, H. Automated classification of benign and malignant cells from lung cytological images using deep convolutional neural network. Inform. Med. Unlocked 2019, 16, 100205.

13. Teramoto, A.; Tsukamoto, T.; Yamada, A.; Kiriyama, Y.; Imaizumi, K.; Saito, K.; Fujita, H. Deep learning approach to classification of lung cytological images: Two-step training using actual and synthesized images by progressive growing of generative adversarial networks. PLoS ONE 2020, 15, e0229951.

14. Teramoto, A.; Kiriyama, Y.; Tsukamoto, T.; Sakurai, E.; Michiba, A.; Imaizumi, K.; Saito, K.; Fujita, H. Weakly supervised learning for classification of lung cytological images using attention-based multiple instance learning. Sci. Rep. 2021, 11, 20317.

15. Vaickus, L.J.; Suriawinata, A.; Wei, J.; Liu, X. Automating the Paris System for urine cytopathology-a hybrid deep-learning and morphometric approach. Cancer Cytopathol. 2019, 127, 98–115.

16. Awan, R.; Benes, K.; Azam, A.; Song, T.; Shaban, M.; Verrill, C.; Tsang, Y.; Snead, D.; Minhas, F.; Rajpoot, N. Deep learning based digital cell profiles for risk stratification of urine cytology images. Cytom. Part A 2021, 99, 732–742.

17. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. arXiv 2014, arXiv:1409.4842v.

18. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2015, arXiv:1409.1556.

19. Tsukamoto, T.; Teramoto, A.; Yamada, A.; Kiriyama, Y.; Sakurai, E.; Michiba, A.; Imaizumi, K.; Fujita, H. Comparison of fine-tuned deep convolutional neural networks for the automated classification of lung cancer cytology images with integration of additional classifiers. Asian Pac. J. Cancer Prev. 2022, 23, 1315–1324.

20. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. arXiv 2016, arXiv:1610.02391v4.

21. Koss, L.G. Fundamental concepts of neoplasia: Benign tumors and cancer. In Koss’ Diagnostic Cytology and its Histopathologic Bases, 5th ed.; Koss, L.G., Melamed, M.R., Eds.; Lippincott Williams & Wilkins: Philadelphia, PA, USA, 2006; pp. 143–179.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).