Automatic Measurement of Inclination Angle of Utility Poles Using 2D Image and 3D Point Cloud

Abstract

1. Introduction

- Without large labeled reference pole data, locating the pole in the 3D point cloud is time consuming; additionally, the resolution of the 3D point cloud is relatively low, and thus, the pole segmentation result from the point cloud in a complex background with a relatively large search space is usually not ideal [17,18,19,20,21,22,23].

- The accuracy of pole skeleton segmentation is improved by expanding the bounding box of Mask R-CNN to different values and adding attention mechanism to the head network of Mask R-CNN.

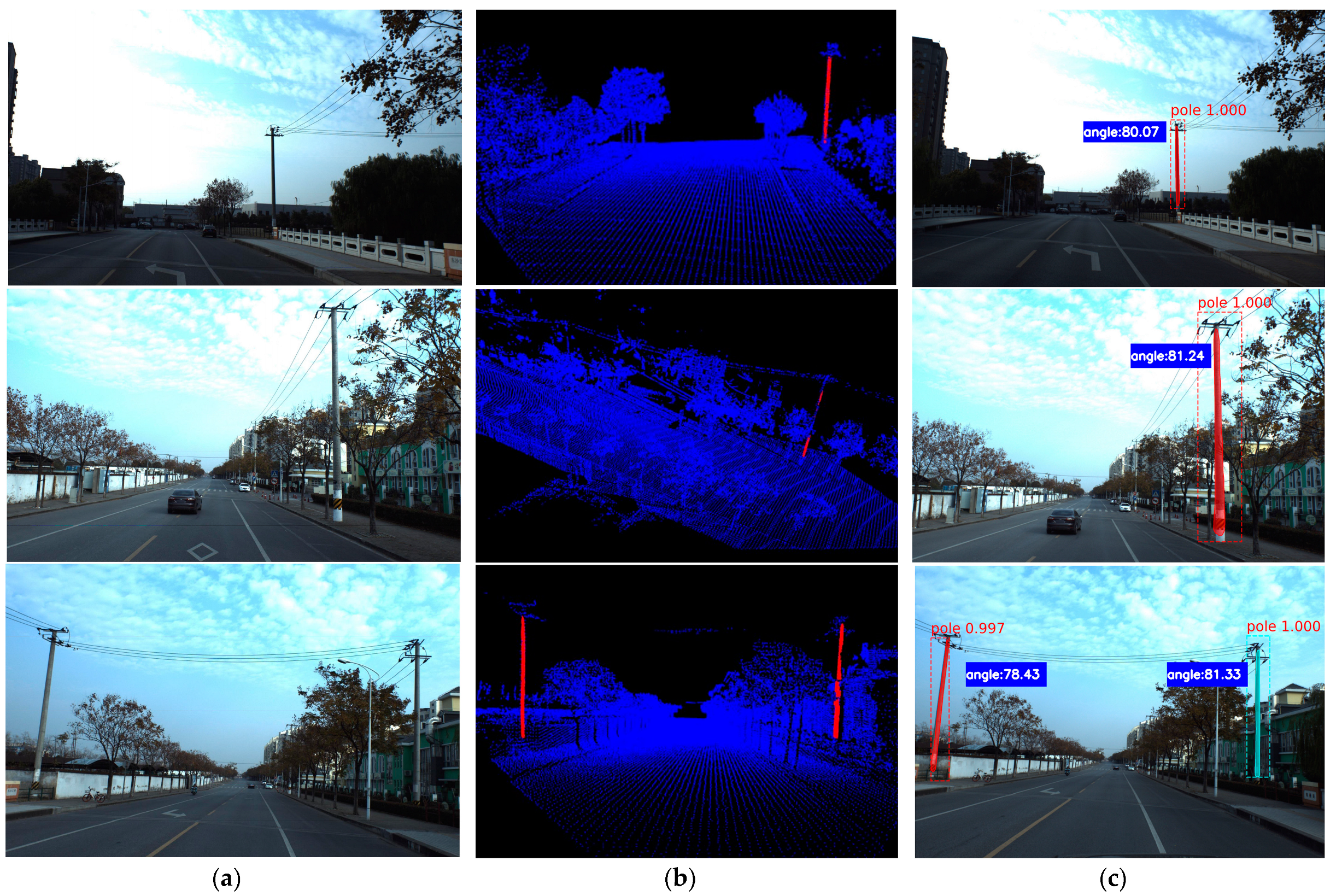

- The method of piecewise fitting is used to realize the fitting of the central axis of cylinder-like objects, such as utility poles, and calculate the inclination angle, which increases the accuracy of angle calculation in the case of interference points.

- The fusion of 2D image and 3D point cloud makes full use of their complementary features, which can not only realize the measurement of pole inclination at once, but also meet the requirements of automatic inspection of poles.

2. The Proposed Method

2.1. The Framework of the Proposed Method

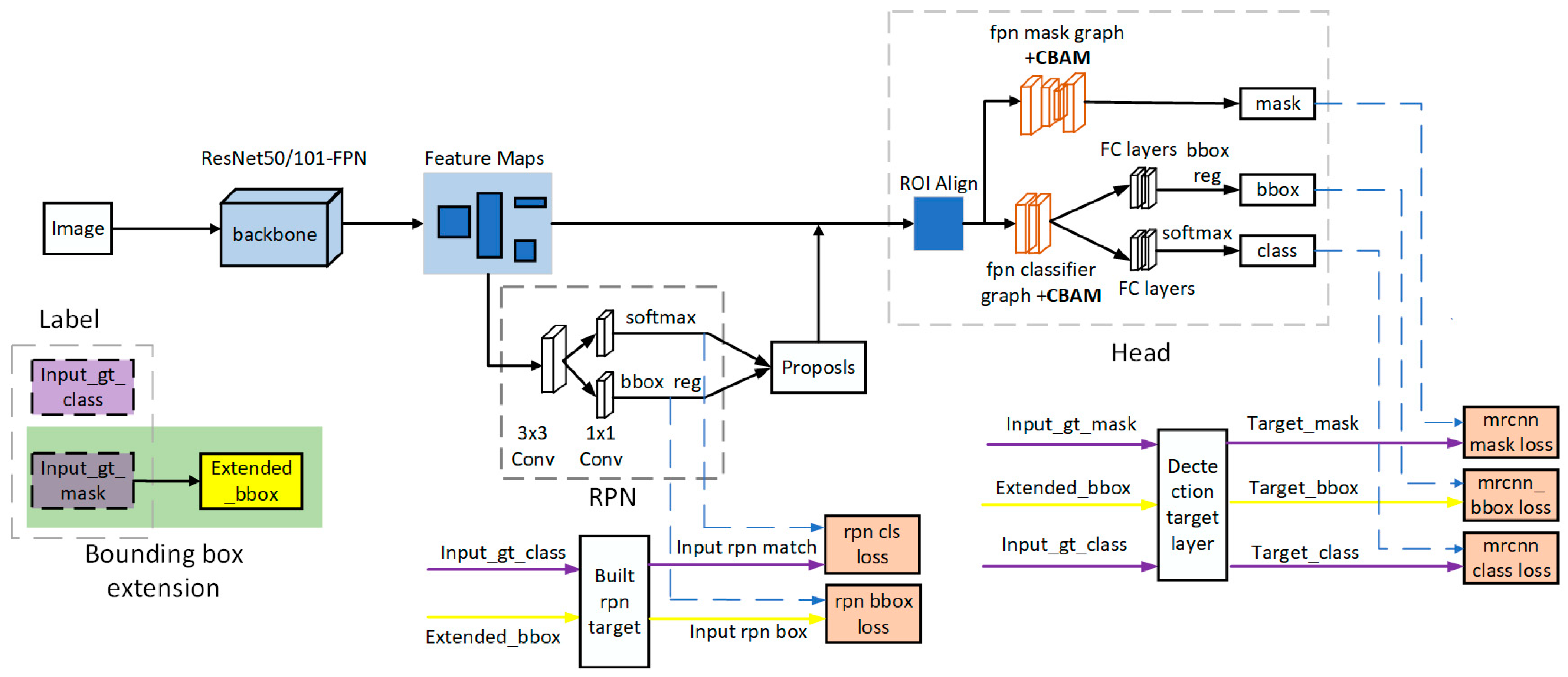

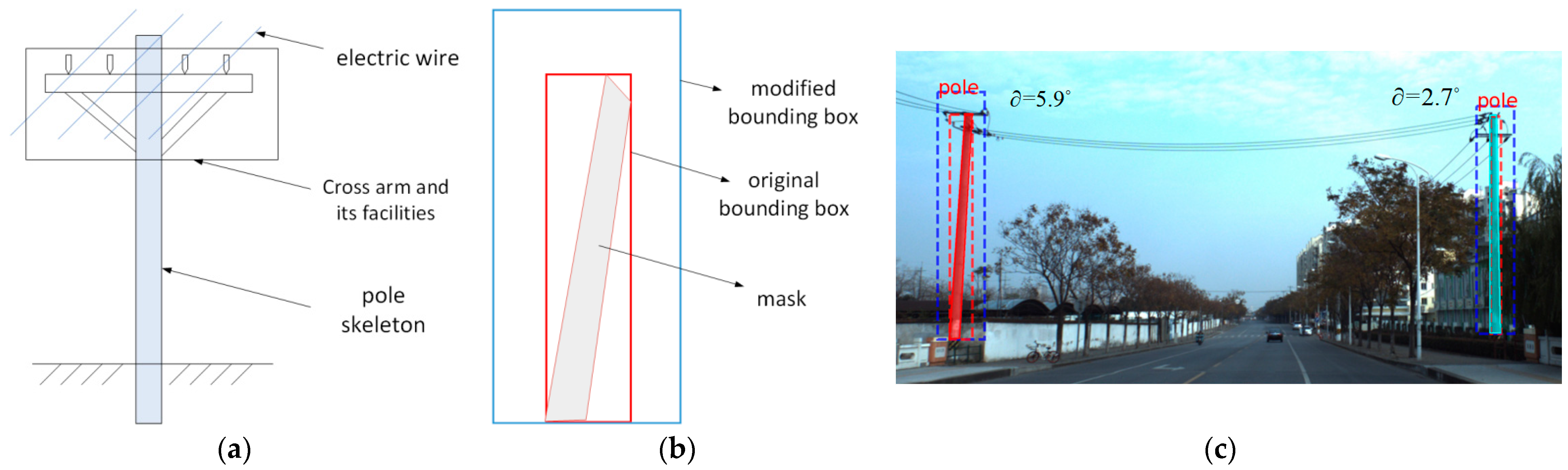

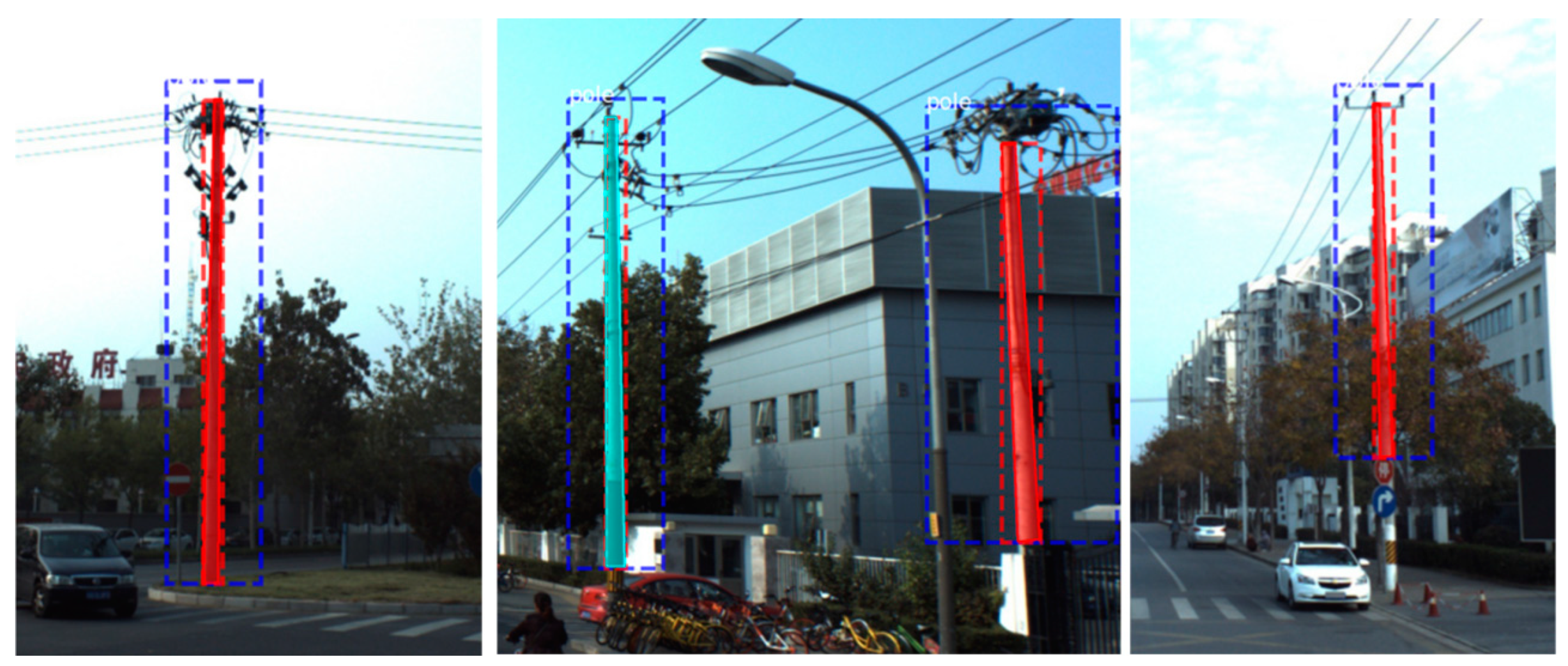

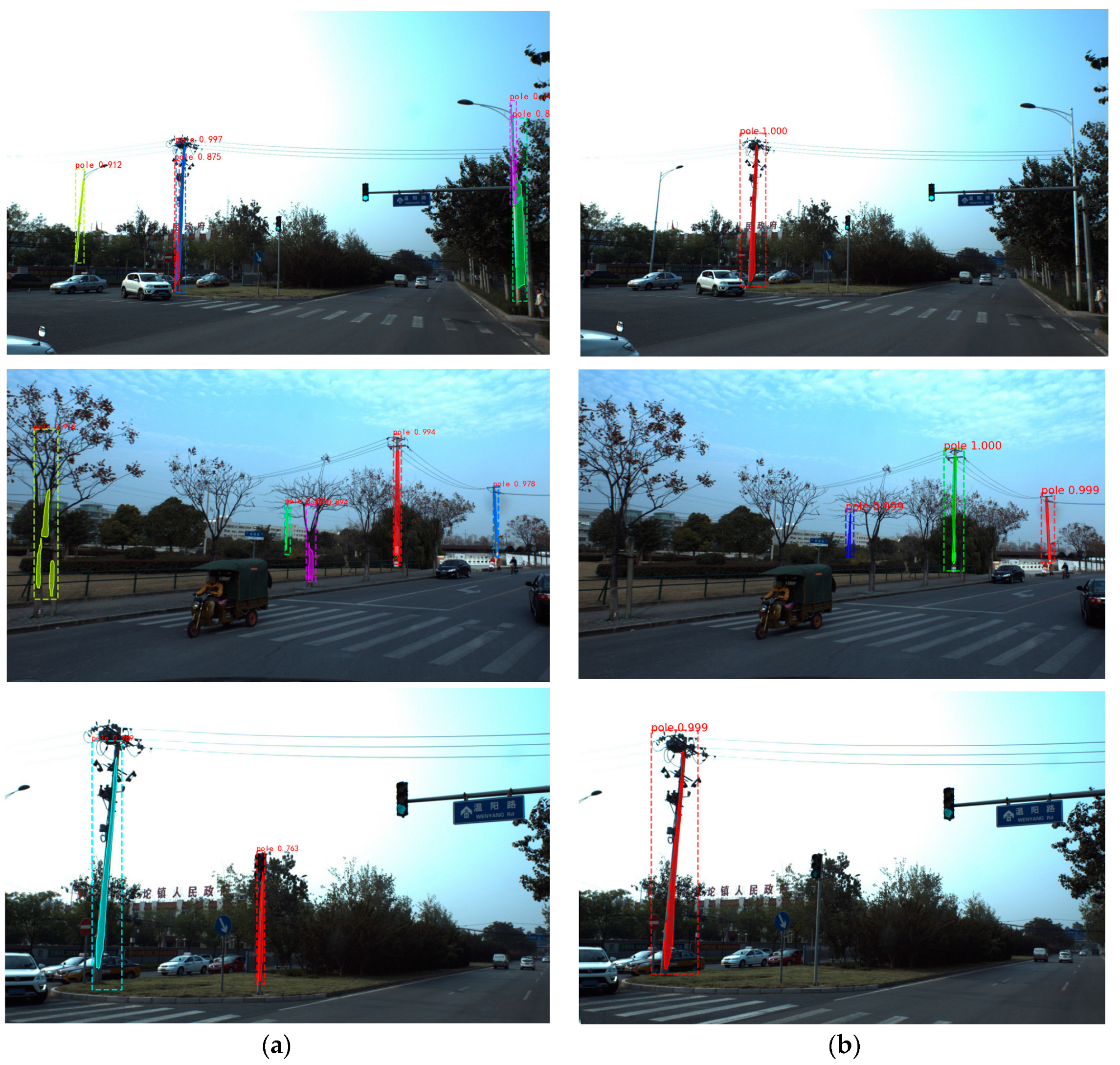

2.2. Pole Segmentation Based on Improved Mask R-CNN

2.2.1. Mask R-CNN

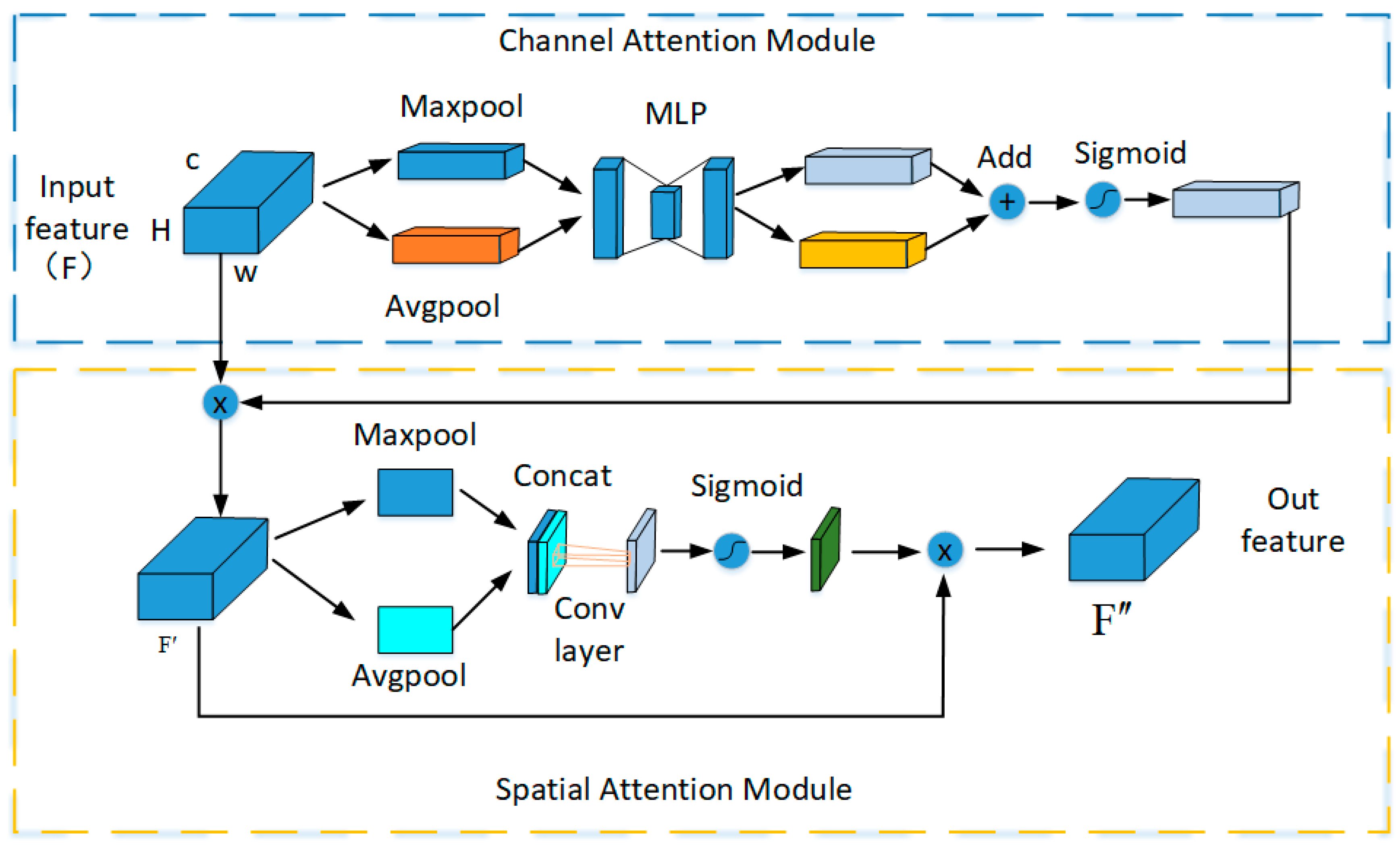

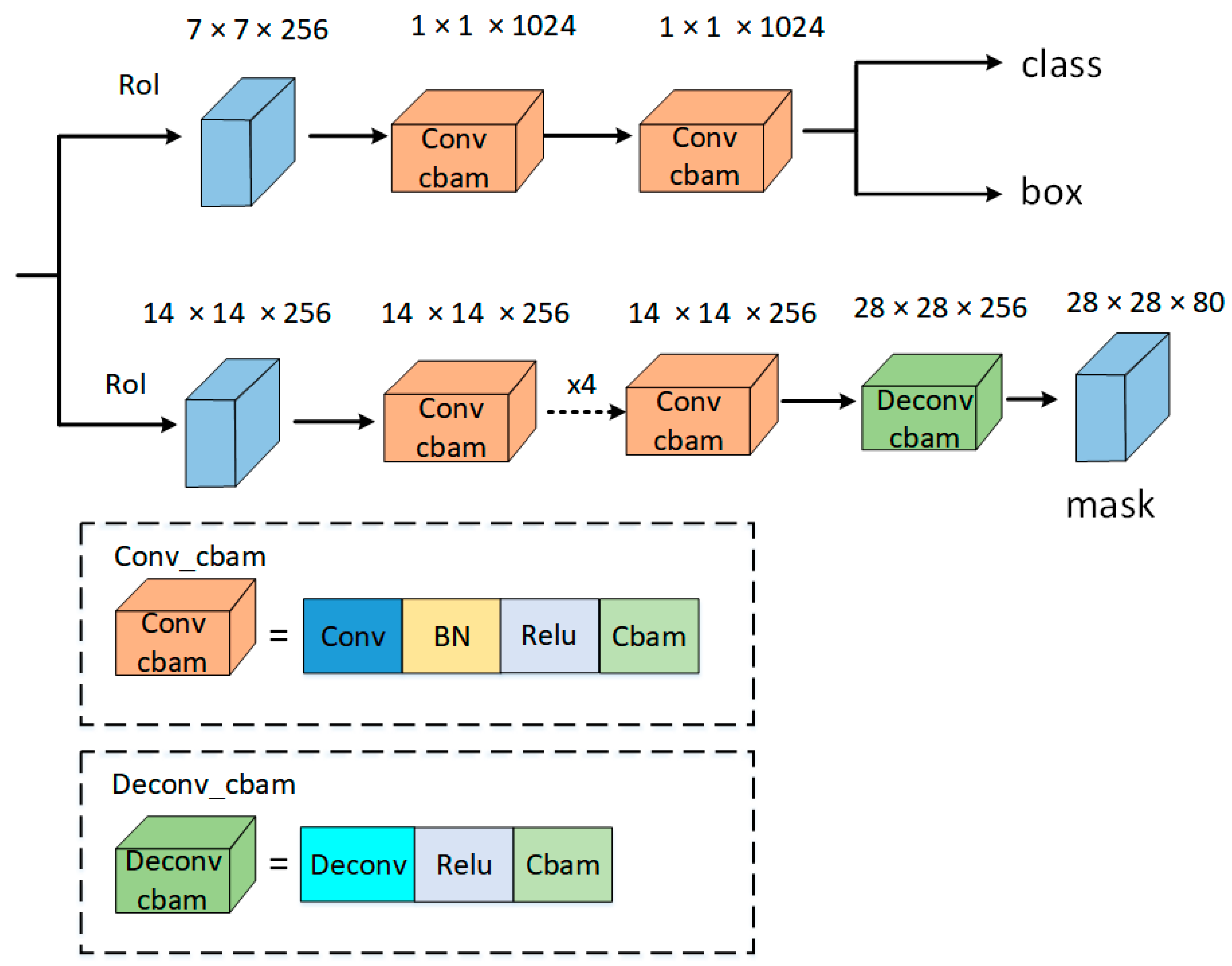

2.2.2. Improvement of Mask R-CNN

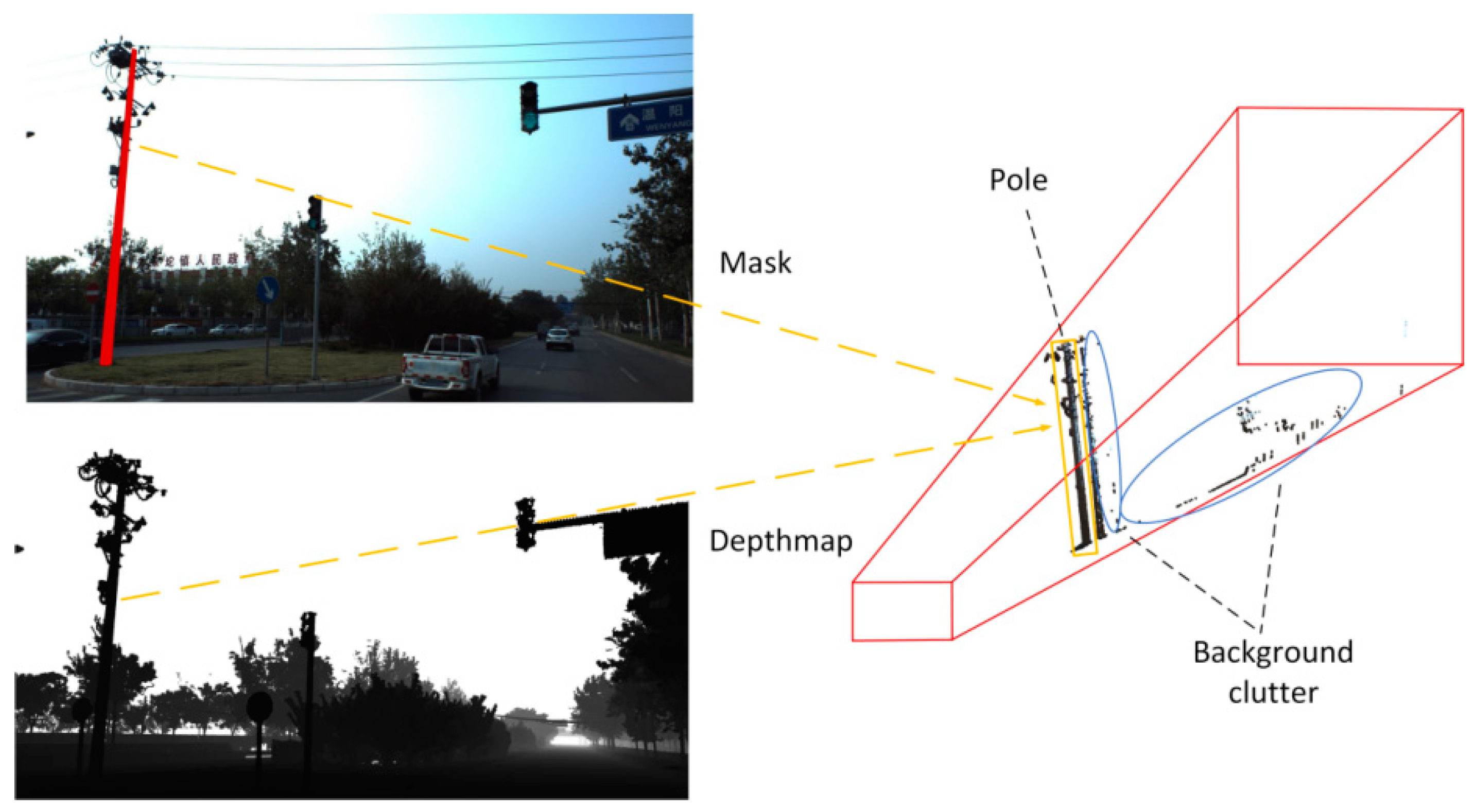

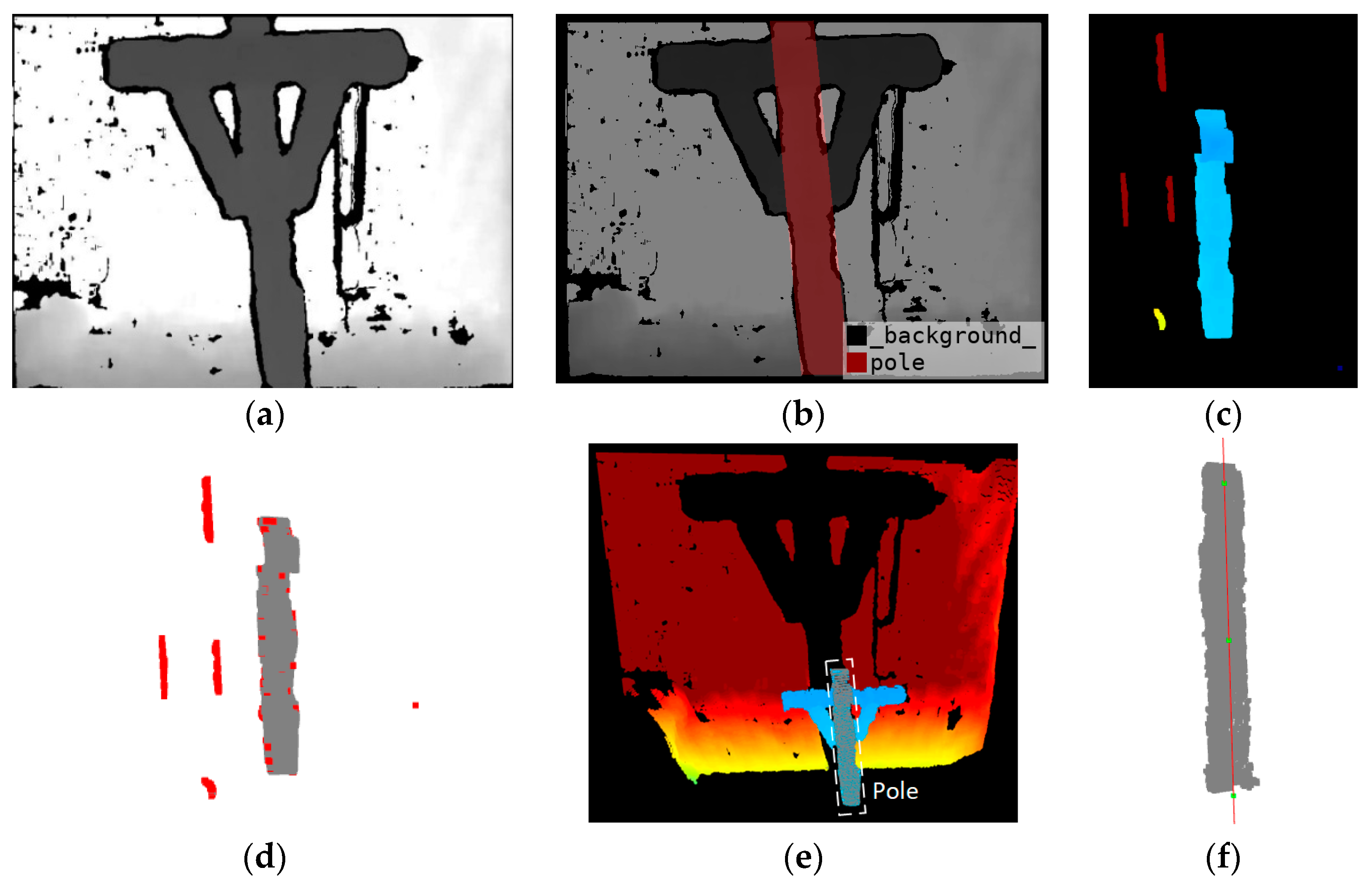

2.3. Pole Mask and Depth Map Fusion

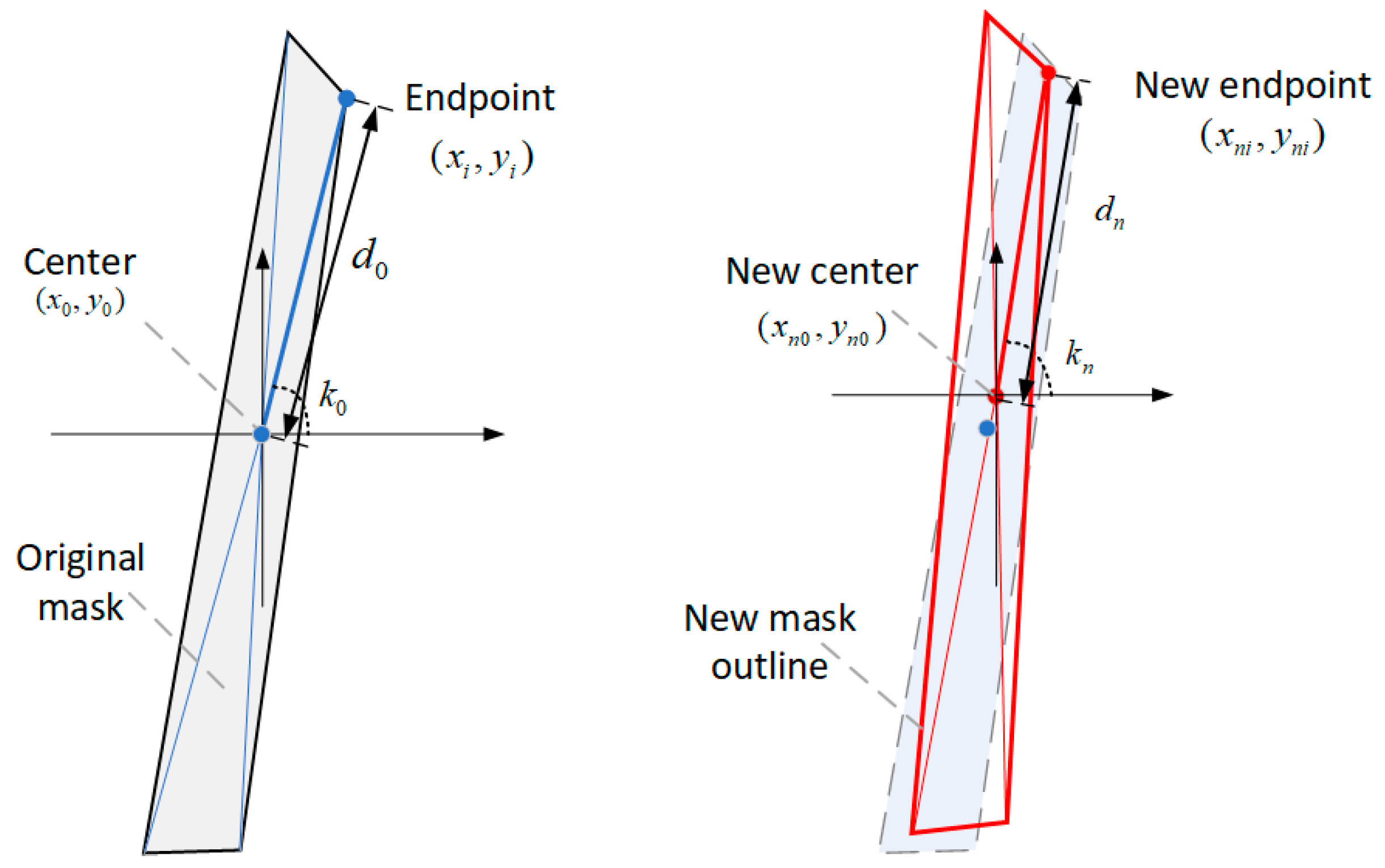

2.3.1. Labeling Mask Random Transform

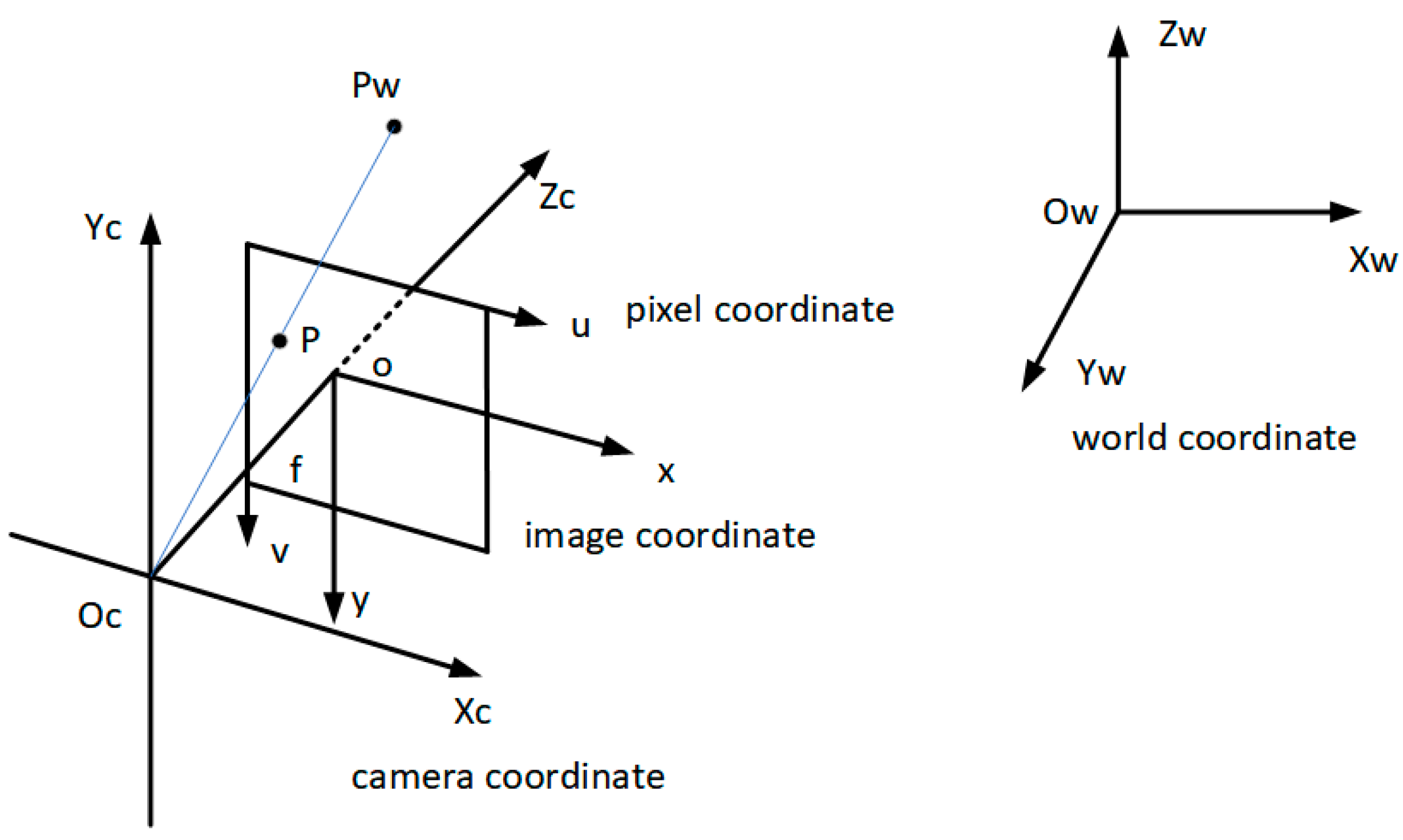

2.3.2. 3D Reconstruction of Utility Poles

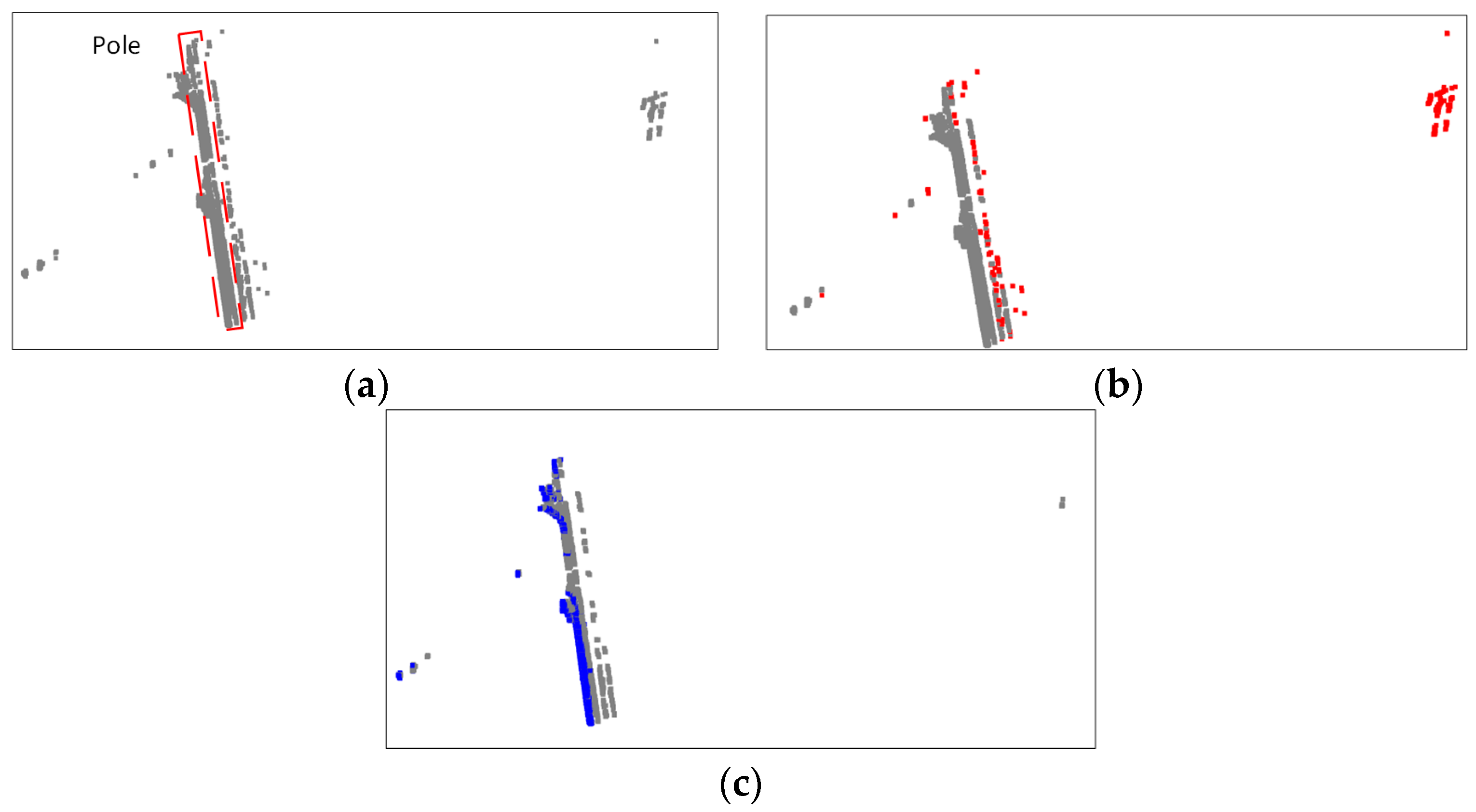

2.4. Utility Pole Point Cloud Segmentation

2.4.1. Preprocessing

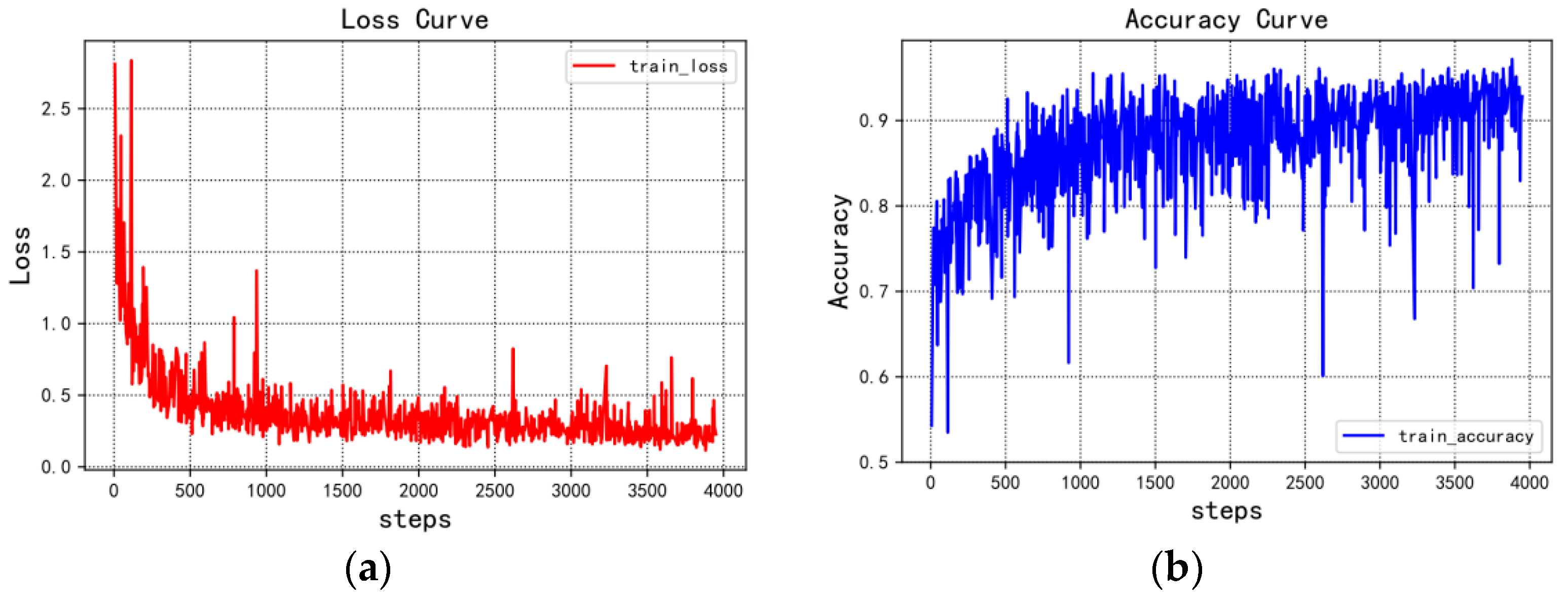

2.4.2. PointNet Point Cloud Segmentation

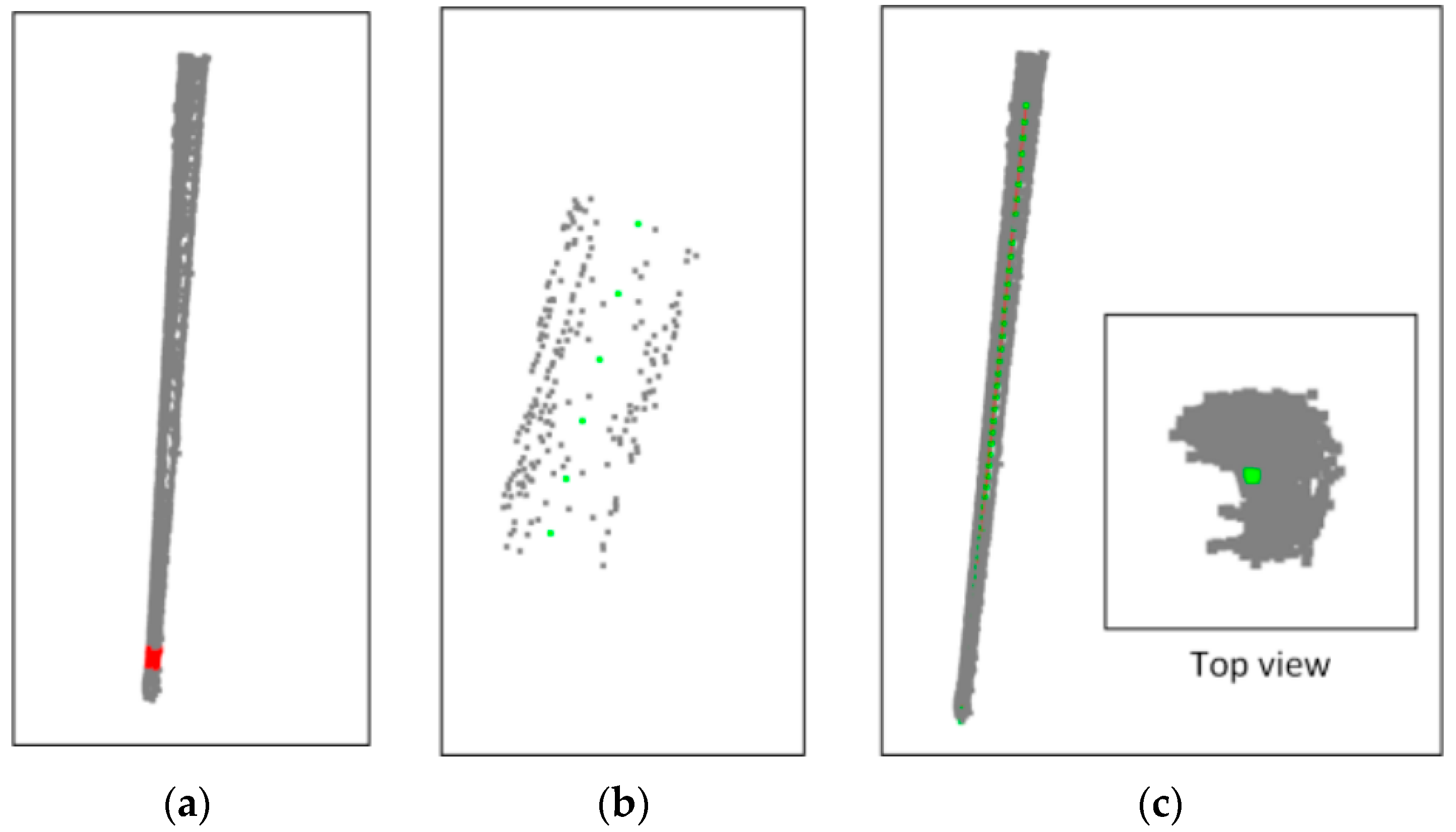

2.5. Pole Central Axis Fitting and Angle Calculation

3. Experiment and Analysis

3.1. Datasets

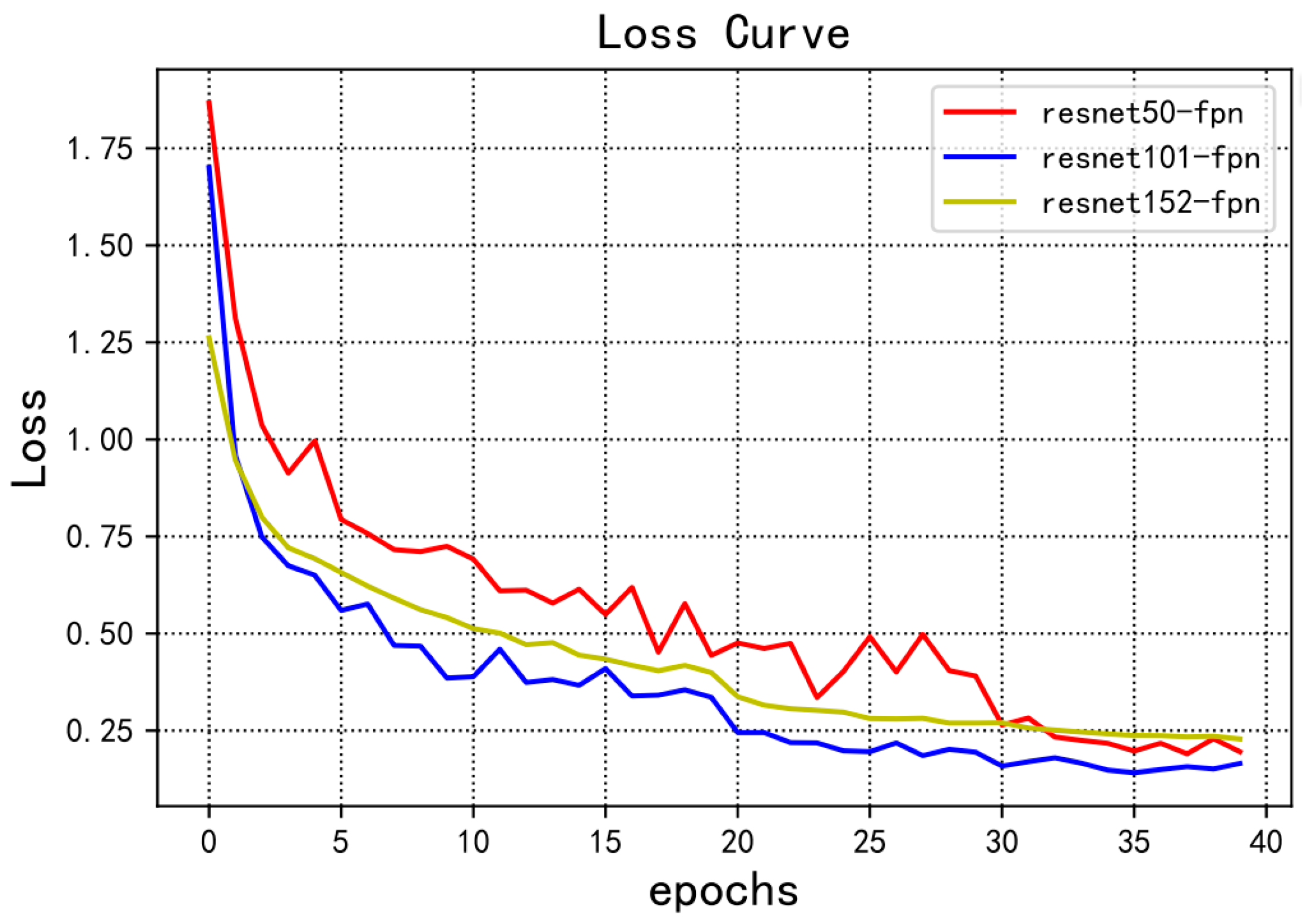

3.2. Model Training

3.3. Model Evaluation and Result Analysis

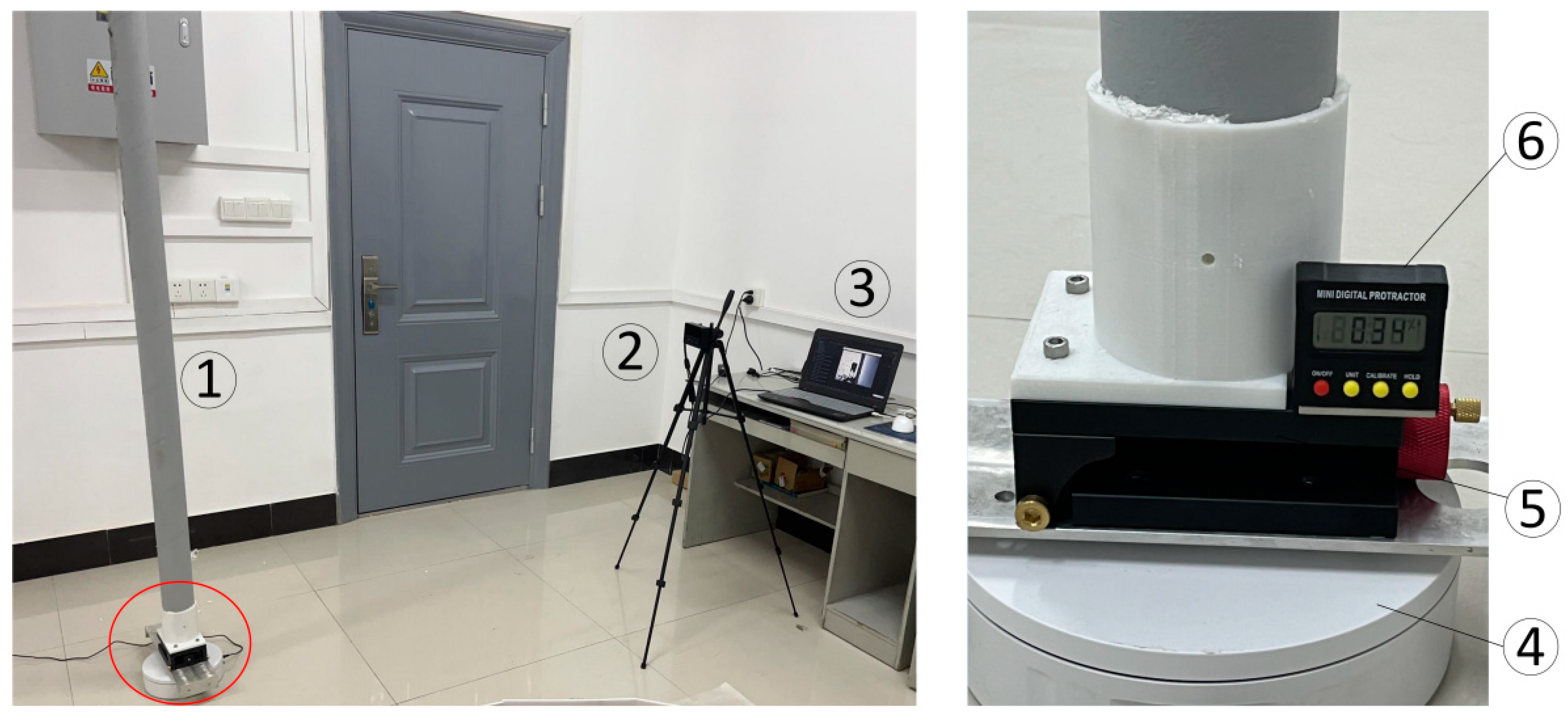

3.4. Angle Verification and Analysis

3.4.1. Experimental Environment

3.4.2. Processing

3.4.3. Analysis of Experimental Results

4. Conclusions

- The expanded bounding box and the attention mechanism are the main influencing factors for improving Mask R-CNN in pole detection. It effectively executes to locate the pole by reducing the searching space in the 3D point cloud.

- It can segment high-quality pole data from the raw point cloud by introducing the pole mask feature and depth map fusion.

- It can estimate the inclination angle of the pole point cloud by fitting the central axis of the cylinder-like objects, even in scenarios of noise or missing point cloud.

- As the quality of the pole visible light (RGB) image could be insufficient in variable light, such as strong sunlight or rain, the improved Mask R-CNN might not accurately detect the pole. The preprocessing step of enhancing the RGB image is necessary for the automatic detection method.

- As the unmatched 2D image is captured from a visible light camera and the 3D point cloud is captured from LiDAR or a depth camera, the reduced point cloud searching space in the new method might not able to locate the pole.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Meng, W.; Dai, Z.; Chen, Y.; Huang, Y.; Li, X. Research on active safety protection technology of distribution network poles. Ind. Saf. Environ. Prot. 2022, 48, 74–76 + 106. [Google Scholar]

- Luo, J.; Yu, C.; Xie, Y.; Chen, B.; Huang, W.; Cheng, S.; Wu, Y. Review of power system security and stability defense methods under natural disasters. Power Syst. Prot. Control 2018, 46, 158–170. [Google Scholar]

- Zhang, X.; Wang, N.; Wang, W.; Feng, J.; Liu, X.; Hou, K. Resilience assessment of power systems considering typhoon weather. Proc. CSU-EPSA 2019, 31, 21–26. [Google Scholar]

- Wang, Y.; Yin, Z.; Li, L.; Wang, X.; Zhang, G.; Mu, Y. Risk assessment method for distribution network considering operation status under typhoon disaster scenario. Proc. CSU-EPSA 2018, 30, 60–65. [Google Scholar]

- Liang, Q.; Liang, S.; Peng, J.; Bian, M. Wind-Resistant monitoring technology of pole-line structure in transmission lines. J. Electr. Power Sci. Technol. 2020, 35, 181–186. [Google Scholar]

- Kim, J.; Kamari, M.; Lee, S.; Ham, Y. Large-scale visual data–driven probabilistic risk assessment of utility poles regarding the vulnerability of power distribution infrastructure systems. J. Constr. Eng. Manag. 2021, 147. [Google Scholar] [CrossRef]

- Li, Y.; Du, Y.; Shen, X.; Wang, R. Comparison of several transmission line tower inclination measurement methods. Hubei Electr. Power 2014, 38, 55–57 + 74. [Google Scholar]

- Wang, S.; Du, Y.; Sun, J.; Fang, Q.; Weng, Y.; Ma, L.; Zhang, X.; Wu, J.; Qin, Q.; Shi, Q. Transmission tower deformation and tilt detection research status. Telecom Power Technol. 2018, 35, 91–92. [Google Scholar] [CrossRef]

- Tragulnuch, P.; Kasetkasem, T.; Isshiki, T.; Chanvimaluang, T.; Ingprasert, S. High voltage transmission tower detection and tracking in aerial video sequence using object-based image classification. In Proceedings of the International Conference on Embedded Systems and Intelligent Technology (ICESIT)/International Conference on Information and Communication Technology for Embedded Systems (ICICTES), Khon Kaen, Thailand, 7–9 May 2018. [Google Scholar]

- Yang, Y.; Chen, Y.; Chen, Y.; Xiao, F.; He, W. A new method of retrieving the inclination direction of power transmission tower by geocoding. In Proceedings of the 38th IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018. [Google Scholar]

- Li, L.; Gao, X.; Liu, W. On-line monitoring method of transmission tower tilt based on remote sensing satellite optical image and neural network. In Proceedings of the 11th International Conference on Power and Energy Systems (ICPES), Shanghai, China, 18–20 December 2021. [Google Scholar]

- Alam, M.M.; Zhu, Z.; Tokgoz, B.E.; Zhang, J.; Hwang, S. Automatic assessment and prediction of the resilience of utility poles using unmanned aerial vehicles and computer vision techniques. Int. J. Disaster Risk Sci. 2020, 11, 119–132. [Google Scholar] [CrossRef]

- Mo, Y.F.; Xie, R.; Pan, Q.; Zhang, B. Automatic power transmission towers detection based on the deep learning algorithm. In Proceedings of the 2nd International Conference on Computer Engineering and Intelligent Control (ICCEIC), Chongqing, China, 12–14 November 2021. [Google Scholar]

- Zhang, W.; Witharana, C.; Li, W.; Zhang, C.; Li, X.; Parent, J. Using deep learning to identify utility poles with crossarms and estimate their locations from google street view images. Sensors 2018, 18, 2484. [Google Scholar] [CrossRef]

- Gomes, M.; Silva, J.; Goncalves, D.; Zamboni, P.; Perez, J.; Batista, E.; Ramos, A.; Osco, L.; Matsubara, E.; Li, J.; et al. Mapping utility poles in aerial orthoimages using ATSS deep learning method. Sensors 2020, 20, 6070. [Google Scholar] [CrossRef] [PubMed]

- Hosseini, M.; Umunnakwe, A.; Parvania, M.; Tasdizen, T. Intelligent damage classification and estimation in power distribution poles using unmanned aerial vehicles and convolutional neural networks. IEEE Trans. Smart Grid 2020, 11, 3325–3333. [Google Scholar] [CrossRef]

- Lu, Z.; Gong, H.; Jin, Q.; Hu, Q.; Wang, S. A transmission tower tilt state assessment approach based on dense point cloud from UAV-Based LiDAR. Remote Sens. 2022, 14, 408. [Google Scholar] [CrossRef]

- Chen, M.; Chan, T.; Wang, X.; Luo, M.; Lin, Y.; Huang, H.; Sun, Y.; Cui, G.; Huang, Y. A risk analysis framework for transmission towers under potential pluvial flood—LiDAR survey and geometric modelling. Int. J. Disaster Risk Reduct. 2020, 50, 14. [Google Scholar] [CrossRef]

- Wang, Y.; Han, J.; Zhao, Q.; Wang, Y. The method of power transmission tower inclination detection based on UAV image. Comput. Simul. 2017, 34, 426–431. [Google Scholar]

- Shi, Z.; Kang, Z.; Lin, Y.; Liu, Y.; Chen, W. Automatic recognition of Pole-Like objects from mobile laser scanning point clouds. Remote Sens. 2018, 10, 1891. [Google Scholar] [CrossRef]

- Kang, Z.; Yang, J.; Zhong, R.; Wu, Y.; Shi, Z.; Lindenbergh, R. Voxel-Based extraction and classification of 3-D Pole-Like objects from mobile LiDAR point cloud data. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 4287–4298. [Google Scholar] [CrossRef]

- Teo, T.A.; Chiu, C.M. Pole-Like road object detection from mobile lidar system using a Coarse-to-Fine approach. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2015, 8, 4805–4818. [Google Scholar] [CrossRef]

- Ha, T.; Chaisomphob, T. Automated localization and classification of expressway Pole-Like road facilities from mobile laser scanning data. Adv. Civ. Eng. 2020, 2020, 18. [Google Scholar]

- Tian, X.; Wang, L.; Ding, Q. A review of image semantic segmentation methods based on deep learning. J. Softw. 2019, 30, 440–468. [Google Scholar]

- Su, L.; Sun, Y.; Yuan, S. A review of case segmentation based on deep learning. CAAI Trans. Intell. Syst. 2022, 17, 16–31. [Google Scholar]

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask r-cnn. In Proceedings of the 16th IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Zhang, J.; Zhao, X.; Chen, Z. Review of semantic segmentation of point cloud based on deep learning. Laser Optoelectron. Prog. 2020, 57, 28–46. [Google Scholar] [CrossRef]

- Ma, C.; Huang, M. A unified cone, cone, cylinder fitting method. In Proceedings of the Ninth Beijing-Hong Kong-Macao Surveying and Mapping Geographic Information Technology Exchange Conference, Beijing, China, 6–7 November 2015. [Google Scholar]

- Ghiasi, G.; Lin, T.; Le, Q.V.; Soc, I.C. Nas-fpn: Learning scalable feature pyramid architecture for object detection. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Woo, S.H.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Qi, C.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3D object detection from RGB-D data. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Qi, C.; Su, H.; Mo, K.C.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Brachmann, E.; Rother, C. Neural-Guided RANSAC: Learning where to sample model hypotheses. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Cui, L.; Yang, R.; Qian, J.; Zhang, H.; Chen, K. Spatial linear fitting algorithm based on total least squares. J. Chengdu Univ. 2019, 38, 102–105. [Google Scholar]

- Huang, X.; Wang, P.; Cheng, X.; Zhou, D.; Geng, Q.; Yang, R. The apolloscape open dataset for autonomous driving and its application. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2702–2719. [Google Scholar] [CrossRef] [PubMed]

- Peng, Y.; Zheng, W.; Zhang, J. Summarization of 3D object detection methods based on deep learning. Automob. Technol. 2020, 540, 1–7. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 740–755. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Song, S.; Lichtenberg, S.P.; Xiao, J. Sun rgb-d: A rgb-d scene understanding benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 567–576. [Google Scholar]

| Method | P | R | AP50 | AP75 |

|---|---|---|---|---|

| Mask R-CNN | 63.3% | 99.25% | 90.55% | 6.58% |

| Mask R-CNN + box expanded | 93.16% | 99.5% | 90.11% | 57.05% |

| Mask R-CNN + CBAM | 67.23% | 95.3% | 90.62% | 8.03% |

| Mask R-CNN + box expanded + CBAM | 95.03% | 99.7% | 91.13% | 58.15% |

| Inclination Angle | Rotation Angle | Average Error | Variance | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 45 | 90 | 135 | 180 | 225 | 270 | 315 | |||

| 0 | 0.91 | 0.35 | 0.82 | 0.45 | 0.65 | 1.15 | 0.32 | 1.14 | 0.72 | 0.11 |

| 2 | 2.35 | 2.34 | 2.65 | 2.42 | 2.85 | 2.32 | 3.35 | 2.92 | 0.65 | 0.14 |

| 4 | 4.33 | 4.27 | 4.53 | 5.15 | 4.71 | 4.53 | 5.12 | 5.07 | 0.71 | 0.13 |

| 6 | 6.56 | 6.69 | 6.05 | 6.74 | 7.32 | 6.15 | 7.13 | 6.32 | 0.62 | 0.20 |

| 8 | 8.35 | 8.86 | 8.44 | 9.13 | 8.62 | 8.81 | 8.06 | 8.78 | 0.63 | 0.11 |

| 10 | 10.53 | 10.36 | 10.94 | 10.43 | 10.52 | 11.11 | 10.84 | 10.13 | 0.61 | 0.11 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, L.; Chang, J.; Xu, J.; Yang, Z. Automatic Measurement of Inclination Angle of Utility Poles Using 2D Image and 3D Point Cloud. Appl. Sci. 2023, 13, 1688. https://doi.org/10.3390/app13031688

Chen L, Chang J, Xu J, Yang Z. Automatic Measurement of Inclination Angle of Utility Poles Using 2D Image and 3D Point Cloud. Applied Sciences. 2023; 13(3):1688. https://doi.org/10.3390/app13031688

Chicago/Turabian StyleChen, Lei, Jiazhen Chang, Jinli Xu, and Zuowei Yang. 2023. "Automatic Measurement of Inclination Angle of Utility Poles Using 2D Image and 3D Point Cloud" Applied Sciences 13, no. 3: 1688. https://doi.org/10.3390/app13031688

APA StyleChen, L., Chang, J., Xu, J., & Yang, Z. (2023). Automatic Measurement of Inclination Angle of Utility Poles Using 2D Image and 3D Point Cloud. Applied Sciences, 13(3), 1688. https://doi.org/10.3390/app13031688