Augmented Reality in Surgical Navigation: A Review of Evaluation and Validation Metrics

Abstract

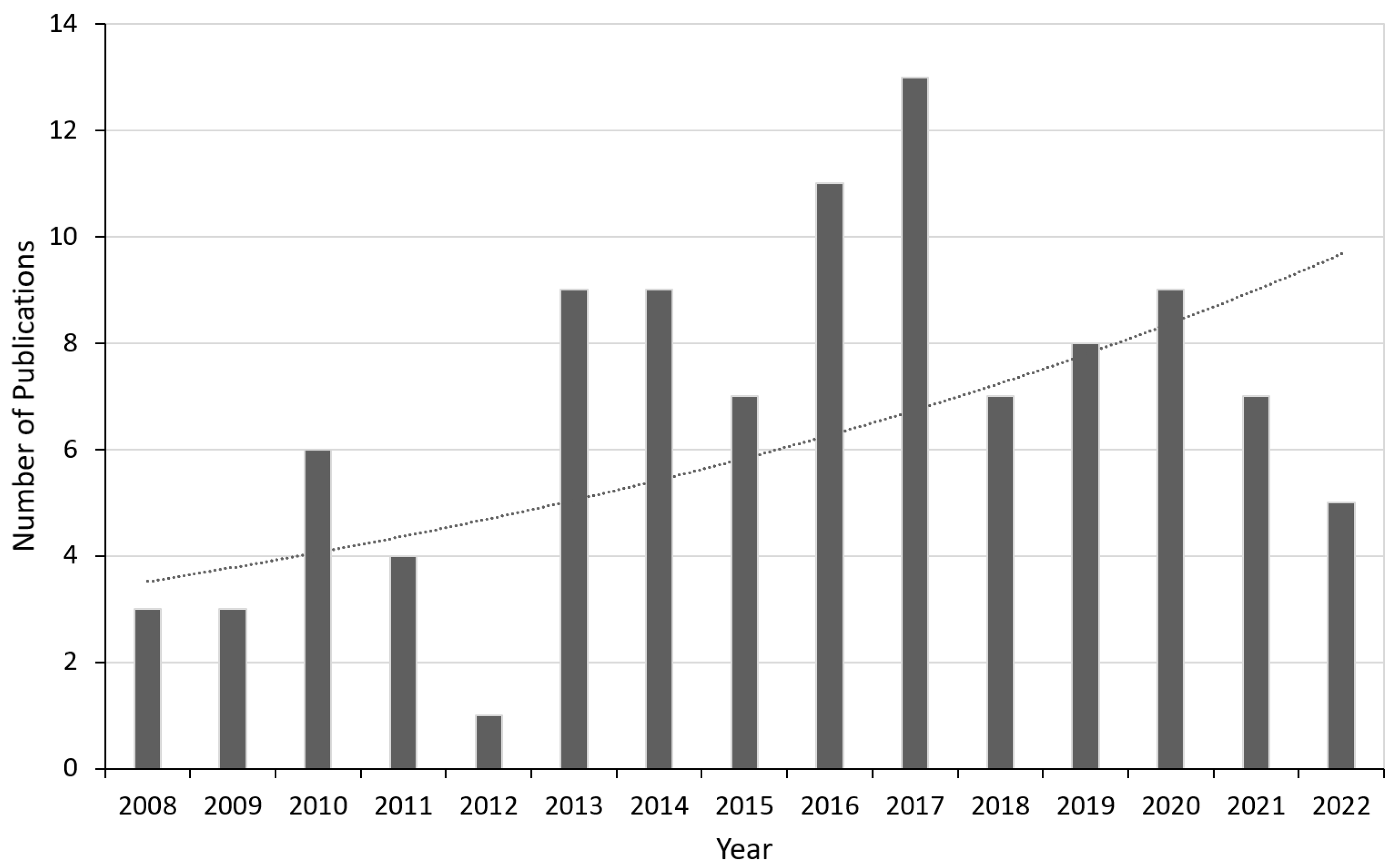

1. Introduction

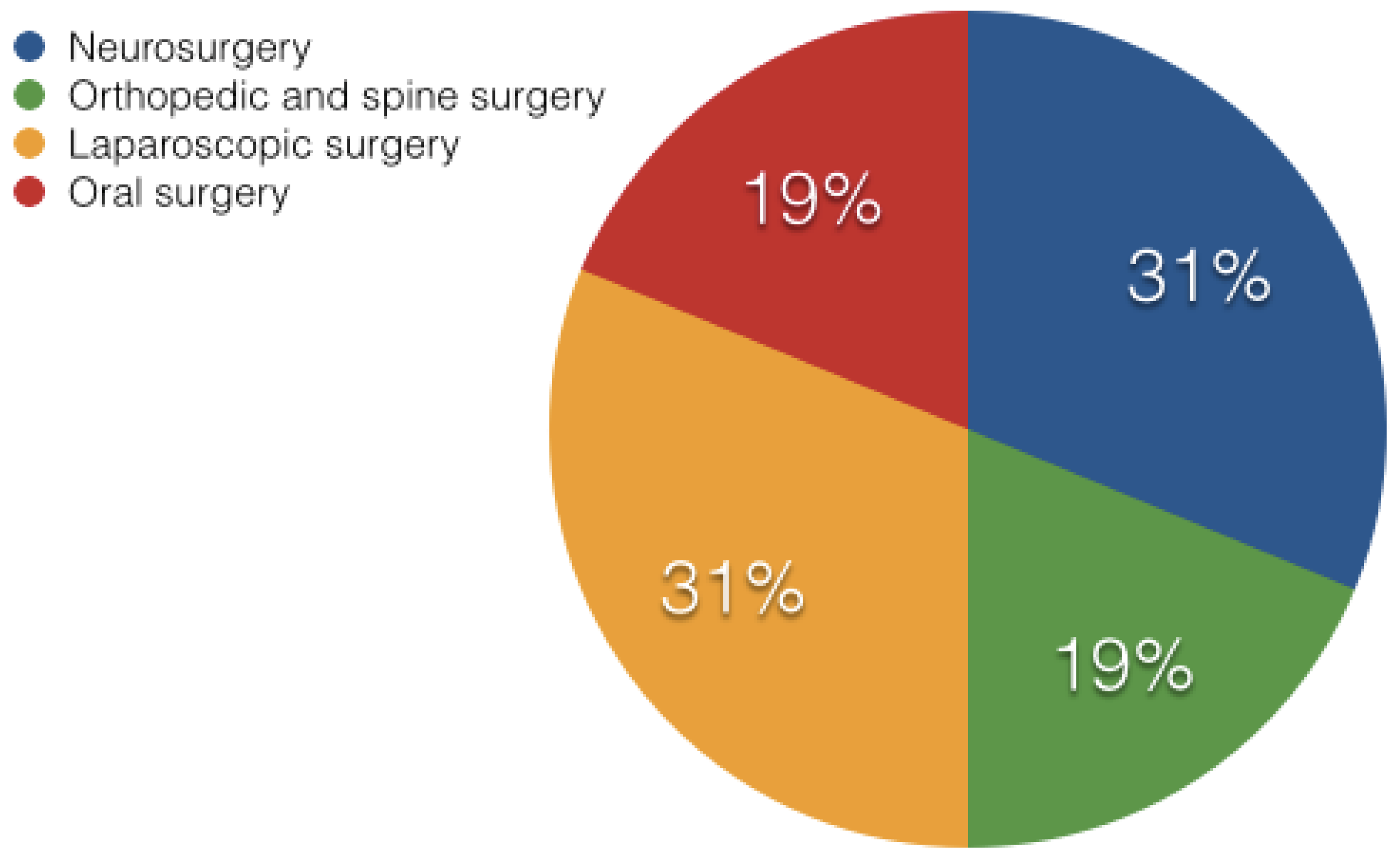

2. State-of-the-Art AR-Based Surgical Systems

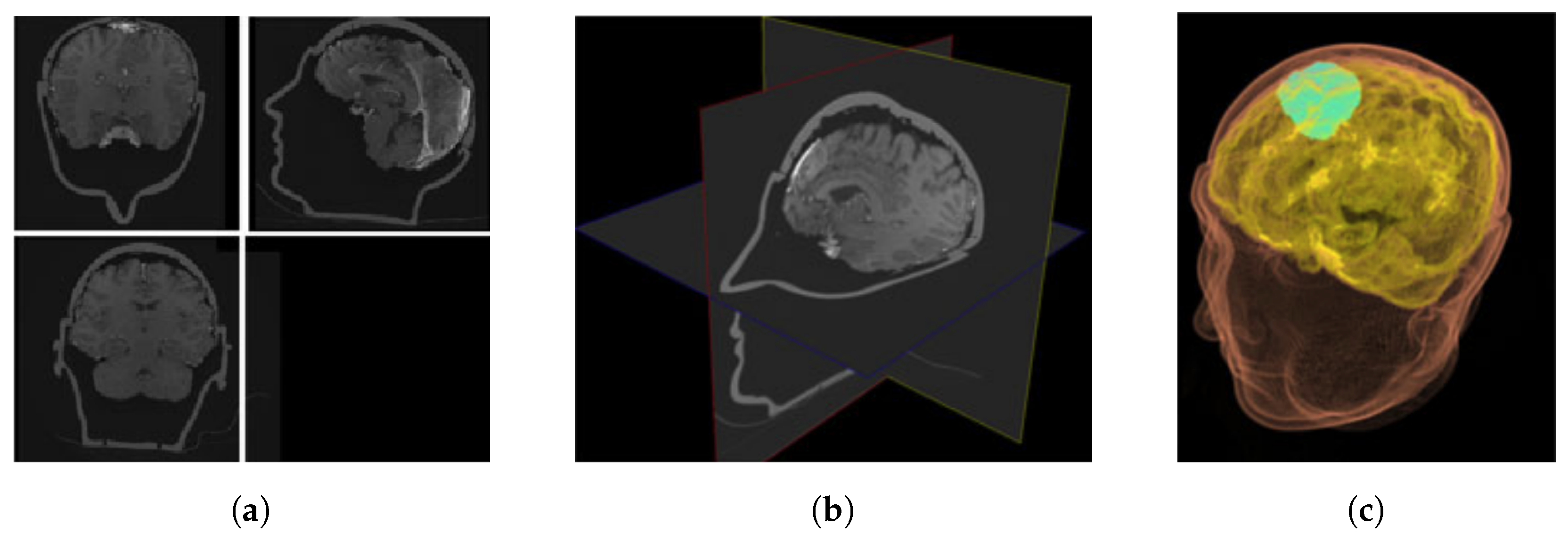

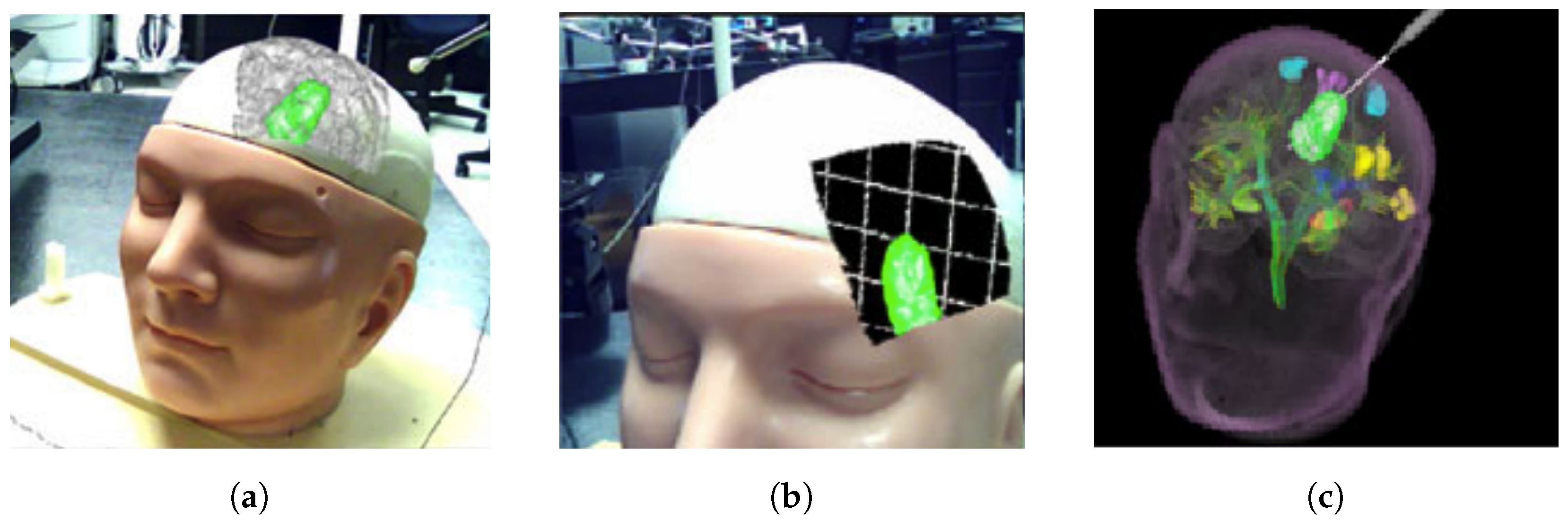

2.1. Neurosurgery

2.2. Orthopedic and Spine Surgery

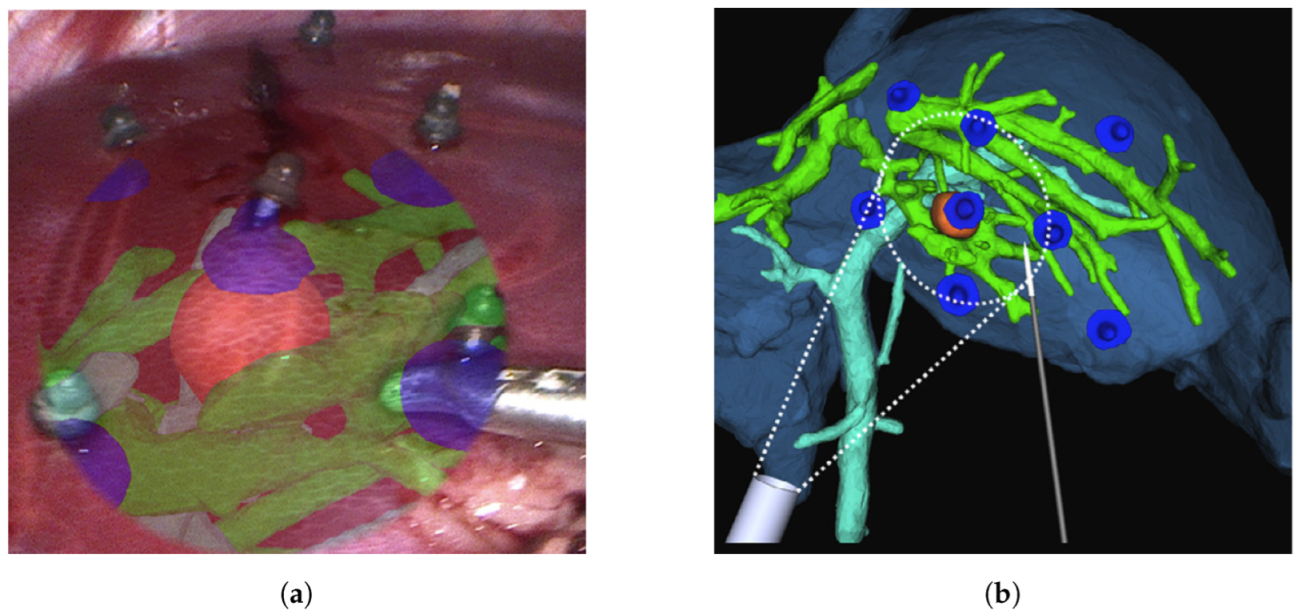

2.3. Laparoscopic Surgery

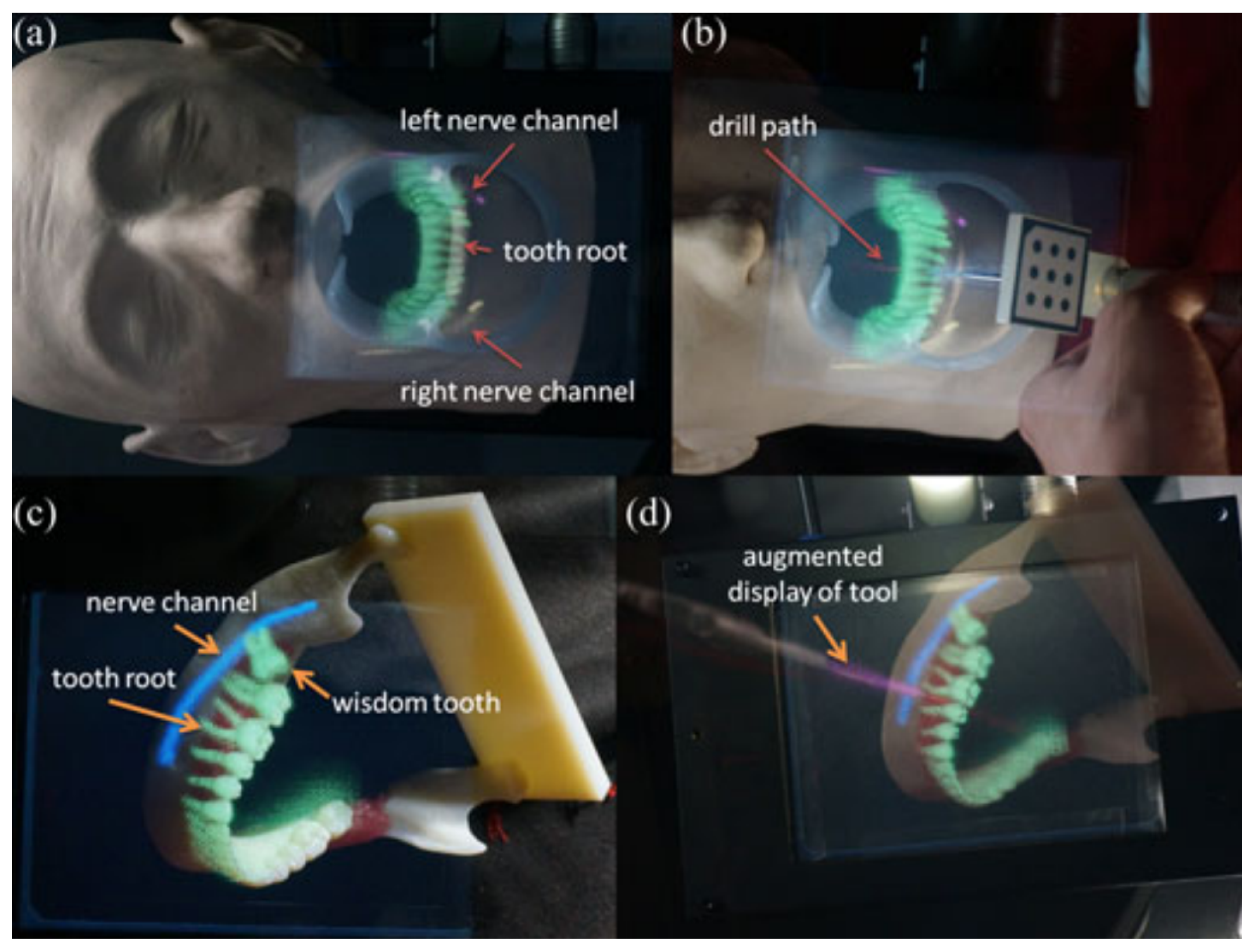

2.4. Oral Surgery

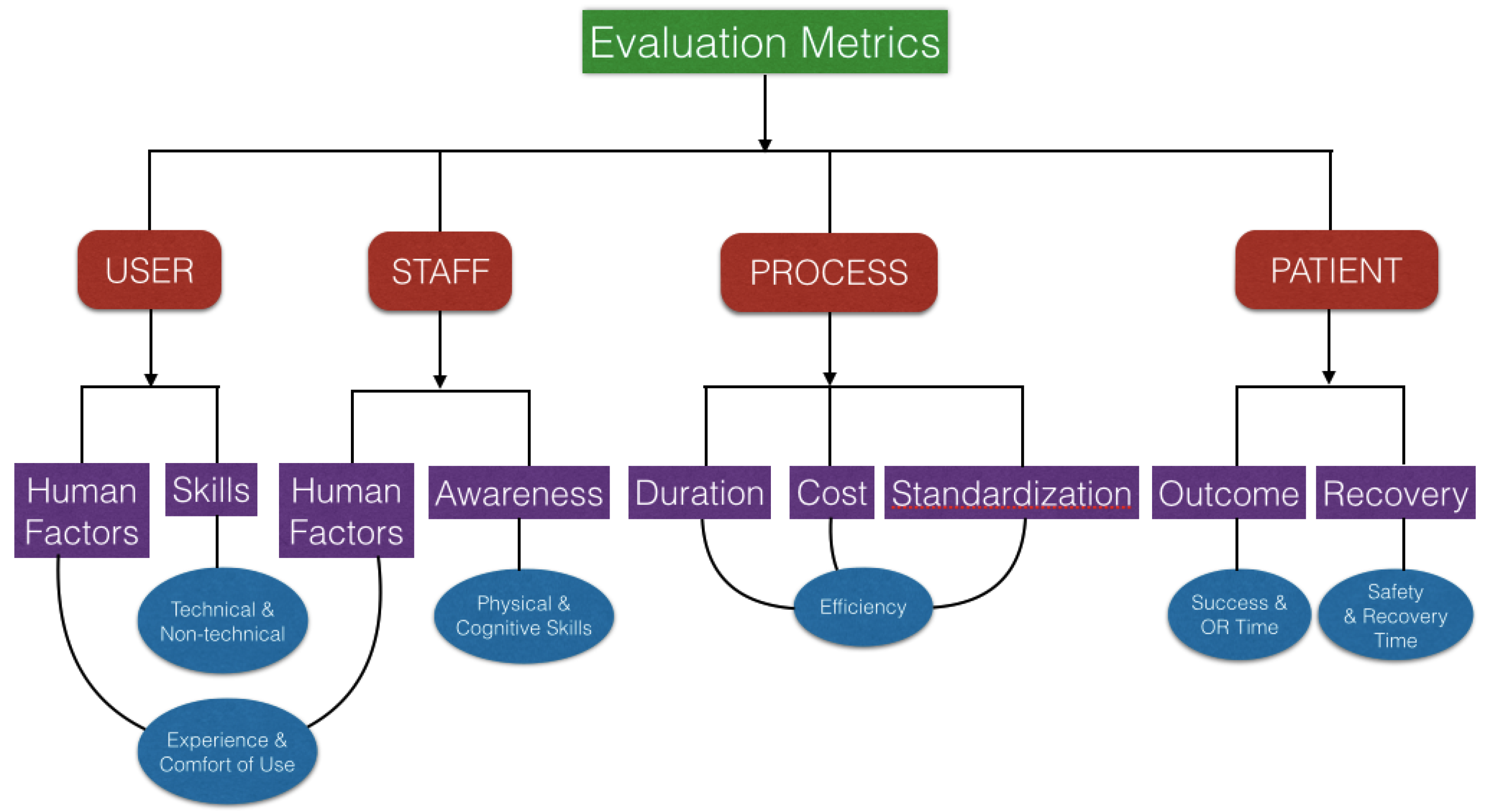

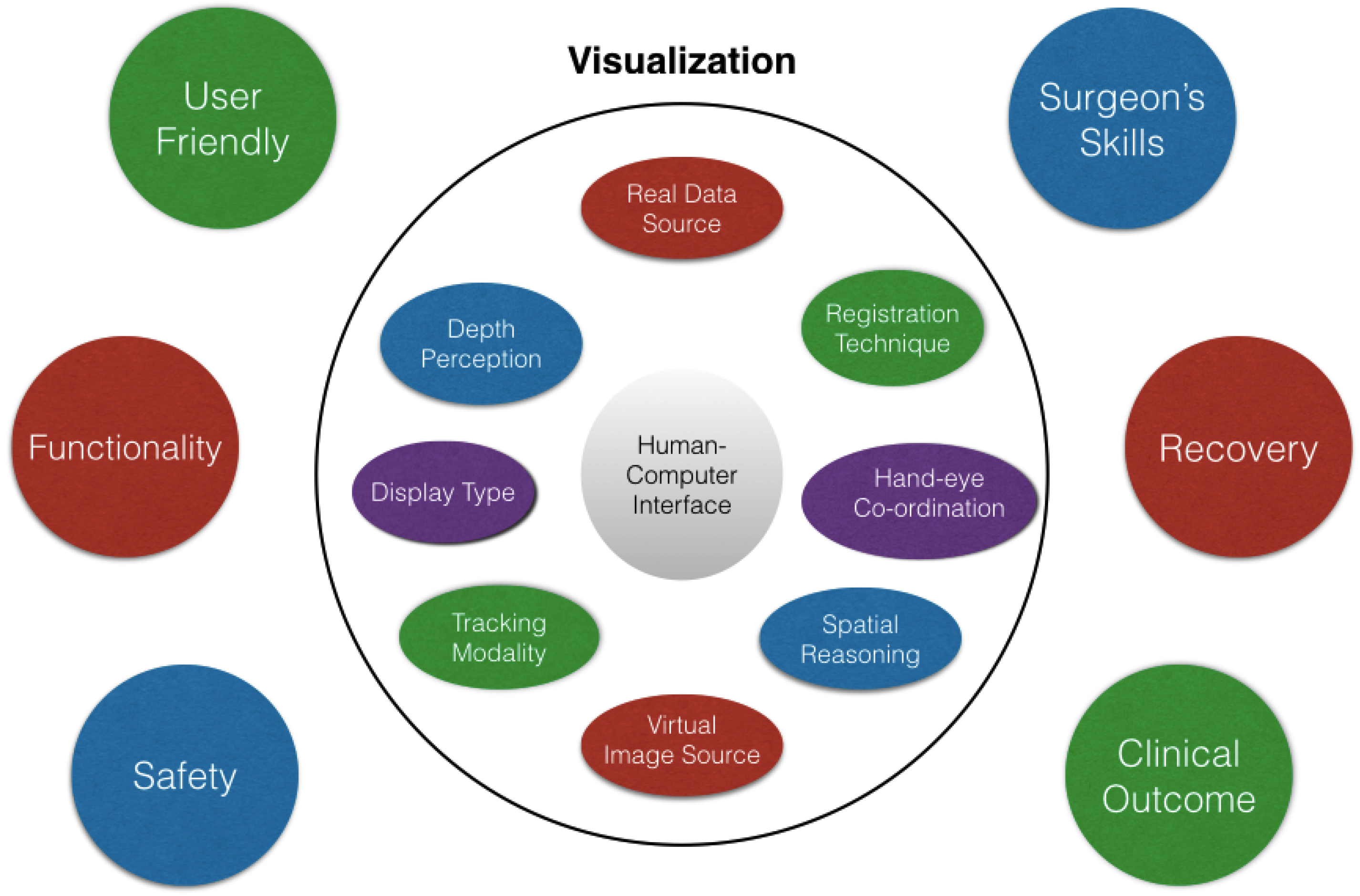

3. Evaluation and Validation of AR Surgical Technology

4. Discussion

4.1. Limitations

4.2. Future Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Cleary, K.; Peters, T.M. Image-guided interventions: Technology review and clinical applications. Annu. Rev. Biomed. Eng. 2010, 12, 119–142.

2. Mezger, U.; Jendrewski, C.; Bartels, M. Navigation in surgery. Langenbeck’s Arch. Surg. 2013, 398, 501–514.

3. Azuma, R.T. A survey of augmented reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385.

4. Sielhorst, T.; Feuerstein, M.; Navab, N. Advanced medical displays: A literature review of augmented reality. J. Disp. Technol. 2008, 4, 451–467.

5. Kalkofen, D.; Mendez, E.; Schmalstieg, D. Comprehensible visualization for augmented reality. IEEE Trans. Vis. Comput. Graph. 2008, 15, 193–204.

6. Shuhaiber, J.H. Augmented reality in surgery. Arch. Surg. 2004, 139, 170–174.

7. Marescaux, J.; Clément, J.M.; Tassetti, V.; Koehl, C.; Cotin, S.; Russier, Y.; Mutter, D.; Delingette, H.; Ayache, N. Virtual reality applied to hepatic surgery simulation: The next revolution. Ann. Surg. 1998, 228, 627.

8. Wang, J.; Suenaga, H.; Liao, H.; Hoshi, K.; Yang, L.; Kobayashi, E.; Sakuma, I. Real-time computer-generated integral imaging and 3D image calibration for augmented reality surgical navigation. Comput. Med. Imaging Graph. 2015, 40, 147–159.

9. Meola, A.; Cutolo, F.; Carbone, M.; Cagnazzo, F.; Ferrari, M.; Ferrari, V. Augmented reality in neurosurgery: A systematic review. Neurosurg. Rev. 2017, 40, 537–548.

10. Tagaytayan, R.; Kelemen, A.; Sik-Lanyi, C. Augmented reality in neurosurgery. Arch. Med. Sci. AMS 2018, 14, 572.

11. Si, W.; Liao, X.; Wang, Q.; Heng, P.A. Augmented reality-based personalized virtual operative anatomy for neurosurgical guidance and training. In Proceedings of the 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Tuebingen/Reutlingen, Germany, 18–22 March 2018; pp. 683–684.

12. Lee, J.D.; Wu, H.K.; Wu, C.T. A projection-based AR system to display brain angiography via stereo vision. In Proceedings of the 2018 IEEE 7th Global Conference on Consumer Electronics (GCCE), Nara, Japan, 9–12 October 2018; pp. 130–131.

13. Shirai, R.; Chen, X.; Sase, K.; Komizunai, S.; Tsujita, T.; Konno, A. AR brain-shift display for computer-assisted neurosurgery. In Proceedings of the 2019 58th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Hiroshima, Japan, 10–13 September 2019; pp. 1113–1118.

14. Fallavollita, P.; Wang, L.; Weidert, S.; Navab, N. Augmented reality in orthopaedic interventions and education. In Computational Radiology for Orthopaedic Interventions; Springer: Cham, Switzerland, 2016; pp. 251–269.

15. Zhang, X.; Fan, Z.; Wang, J.; Liao, H. 3D augmented reality based orthopaedic interventions. In Computational Radiology for Orthopaedic Interventions; Springer: Cham, Switzerland, 2016; pp. 71–90.

16. Härtl, R.; Lam, K.S.; Wang, J.; Korge, A.; Kandziora, F.; Audigé, L. Worldwide survey on the use of navigation in spine surgery. World Neurosurg. 2013, 79, 162–172.

17. Nicolau, S.; Soler, L.; Mutter, D.; Marescaux, J. Augmented reality in laparoscopic surgical oncology. Surg. Oncol. 2011, 20, 189–201.

18. Bernhardt, S.; Nicolau, S.A.; Soler, L.; Doignon, C. The status of augmented reality in laparoscopic surgery as of 2016. Med. Image Anal. 2017, 37, 66–90.

19. Tsutsumi, N.; Tomikawa, M.; Uemura, M.; Akahoshi, T.; Nagao, Y.; Konishi, K.; Ieiri, S.; Hong, J.; Maehara, Y.; Hashizume, M. Image-guided laparoscopic surgery in an open MRI operating theater. Surg. Endosc. 2013, 27, 2178–2184.

20. Hallet, J.; Soler, L.; Diana, M.; Mutter, D.; Baumert, T.F.; Habersetzer, F.; Marescaux, J.; Pessaux, P. Trans-thoracic minimally invasive liver resection guided by augmented reality. J. Am. Coll. Surg. 2015, 220, e55–e60.

21. Tang, R.; Ma, L.F.; Rong, Z.X.; Li, M.D.; Zeng, J.P.; Wang, X.D.; Liao, H.E.; Dong, J.H. Augmented reality technology for preoperative planning and intraoperative navigation during hepatobiliary surgery: A review of current methods. Hepatobiliary Pancreat. Dis. Int. 2018, 17, 101–112.

22. Zhang, F.; Zhang, S.; Zhong, K.; Yu, L.; Sun, L.N. Design of navigation system for liver surgery guided by augmented reality. IEEE Access 2020, 8, 126687–126699.

23. Gavriilidis, P.; Edwin, B.; Pelanis, E.; Hidalgo, E.; de’Angelis, N.; Memeo, R.; Aldrighetti, L.; Sutcliffe, R.P. Navigated liver surgery: State of the art and future perspectives. Hepatobiliary Pancreat. Dis. Int. 2022, 21, 226–233.

24. Okamoto, T.; Onda, S.; Matsumoto, M.; Gocho, T.; Futagawa, Y.; Fujioka, S.; Yanaga, K.; Suzuki, N.; Hattori, A. Utility of augmented reality system in hepatobiliary surgery. J. Hepato-Biliary-Pancreat. Sci. 2013, 20, 249–253.

25. Wang, J.; Suenaga, H.; Yang, L.; Kobayashi, E.; Sakuma, I. Video see-through augmented reality for oral and maxillofacial surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2017, 13, e1754.

26. Jiang, J.; Huang, Z.; Qian, W.; Zhang, Y.; Liu, Y. Registration technology of augmented reality in oral medicine: A review. IEEE Access 2019, 7, 53566–53584.

27. Okamoto, T.; Onda, S.; Yanaga, K.; Suzuki, N.; Hattori, A. Clinical application of navigation surgery using augmented reality in the abdominal field. Surg. Today 2015, 45, 397–406.

28. Navab, N.; Heining, S.M.; Traub, J. Camera augmented mobile C-arm (CAMC): Calibration, accuracy study, and clinical applications. IEEE Trans. Med. Imaging 2009, 29, 1412–1423.

29. von der Heide, A.M.; Fallavollita, P.; Wang, L.; Sandner, P.; Navab, N.; Weidert, S.; Euler, E. Camera-augmented mobile C-arm (CamC): A feasibility study of augmented reality imaging in the operating room. Int. J. Med. Robot. Comput. Assist. Surg. 2018, 14, e1885.

30. Cartucho, J.; Shapira, D.; Ashrafian, H.; Giannarou, S. Multimodal mixed reality visualisation for intraoperative surgical guidance. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 819–826.

31. Kersten-Oertel, M.; Jannin, P.; Collins, D.L. The state of the art of visualization in mixed reality image guided surgery. Comput. Med. Imaging Graph. 2013, 37, 98–112.

32. Chou, B.; Handa, V.L. Simulators and virtual reality in surgical education. Obstet. Gynecol. Clin. 2006, 33, 283–296.

33. Shamir, R.R.; Horn, M.; Blum, T.; Mehrkens, J.; Shoshan, Y.; Joskowicz, L.; Navab, N. Trajectory planning with augmented reality for improved risk assessment in image-guided keyhole neurosurgery. In Proceedings of the 2011 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Chicago, IL, USA, 30 March–2 April 2011; pp. 1873–1876.

34. Thomas, R.G.; William John, N.; Delieu, J.M. Augmented reality for anatomical education. J. Vis. Commun. Med. 2010, 33, 6–15.

35. Fang, T.Y.; Wang, P.C.; Liu, C.H.; Su, M.C.; Yeh, S.C. Evaluation of a haptics-based virtual reality temporal bone simulator for anatomy and surgery training. Comput. Methods Programs Biomed. 2014, 113, 674–681.

36. Stefan, P.; Wucherer, P.; Oyamada, Y.; Ma, M.; Schoch, A.; Kanegae, M.; Shimizu, N.; Kodera, T.; Cahier, S.; Weigl, M.; et al. An AR edutainment system supporting bone anatomy learning. In Proceedings of the 2014 IEEE Virtual Reality (VR), Minneapolis, MN, USA, 29 March–2 April 2014; pp. 113–114.

37. Ma, M.; Fallavollita, P.; Seelbach, I.; von der Heide, A.M.; Euler, E.; Waschke, J.; Navab, N. Personalized augmented reality for anatomy education. Clin. Anat. 2016, 29, 446–453.

38. Krueger, E.; Messier, E.; Linte, C.A.; Diaz, G. An interactive, stereoscopic virtual environment for medical imaging visualization, simulation and training. In Medical Imaging 2017: Image Perception, Observer Performance, and Technology Assessment; SPIE: Bellingham, WA, USA, 2017; Volume 10136, pp. 399–405.

39. Huang, H.M.; Liaw, S.S.; Lai, C.M. Exploring learner acceptance of the use of virtual reality in medical education: A case study of desktop and projection-based display systems. Interact. Learn. Environ. 2016, 24, 3–19.

40. Küçük, S.; Kapakin, S.; Göktaş, Y. Learning anatomy via mobile augmented reality: Effects on achievement and cognitive load. Anat. Sci. Educ. 2016, 9, 411–421.

41. Moro, C.; Štromberga, Z.; Raikos, A.; Stirling, A. The effectiveness of virtual and augmented reality in health sciences and medical anatomy. Anat. Sci. Educ. 2017, 10, 549–559.

42. Peterson, D.C.; Mlynarczyk, G.S. Analysis of traditional versus three-dimensional augmented curriculum on anatomical learning outcome measures. Anat. Sci. Educ. 2016, 9, 529–536.

43. Cabrilo, I.; Bijlenga, P.; Schaller, K. Augmented reality in the surgery of cerebral arteriovenous malformations: Technique assessment and considerations. Acta Neurochir. 2014, 156, 1769–1774.

44. Tabrizi, L.B.; Mahvash, M. Augmented reality–guided neurosurgery: Accuracy and intraoperative application of an image projection technique. J. Neurosurg. 2015, 123, 206–211.

45. Kersten-Oertel, M.; Gerard, I.; Drouin, S.; Mok, K.; Sirhan, D.; Sinclair, D.S.; Collins, D.L. Augmented reality in neurovascular surgery: Feasibility and first uses in the operating room. Int. J. Comput. Assist. Radiol. Surg. 2015, 10, 1823–1836.

46. Abhari, K.; Baxter, J.S.; Chen, E.C.; Khan, A.R.; Peters, T.M.; De Ribaupierre, S.; Eagleson, R. Training for planning tumour resection: Augmented reality and human factors. IEEE Trans. Biomed. Eng. 2014, 62, 1466–1477.

47. Fick, T.; van Doormaal, J.A.; Hoving, E.W.; Willems, P.W.; van Doormaal, T.P. Current accuracy of augmented reality neuronavigation systems: Systematic review and meta-analysis. World Neurosurg. 2021, 146, 179–188.

48. López, W.O.C.; Navarro, P.A.; Crispin, S. Intraoperative clinical application of augmented reality in neurosurgery: A systematic review. Clin. Neurol. Neurosurg. 2019, 177, 6–11.

49. Rennert, R.C.; Santiago-Dieppa, D.R.; Figueroa, J.; Sanai, N.; Carter, B.S. Future directions of operative neuro-oncology. J. Neuro-Oncol. 2016, 130, 377–382.

50. Jud, L.; Fotouhi, J.; Andronic, O.; Aichmair, A.; Osgood, G.; Navab, N.; Farshad, M. Applicability of augmented reality in orthopedic surgery–a systematic review. BMC Musculoskelet. Disord. 2020, 21, 1–13.

51. Phillips, F.M.; Cheng, I.; Rampersaud, Y.R.; Akbarnia, B.A.; Pimenta, L.; Rodgers, W.B.; Uribe, J.S.; Khanna, N.; Smith, W.D.; Youssef, J.A.; et al. Breaking through the “glass ceiling” of minimally invasive spine surgery. Spine 2016, 41, S39–S43.

52. Manbachi, A.; Cobbold, R.S.; Ginsberg, H.J. Guided pedicle screw insertion: Techniques and training. Spine J. 2014, 14, 165–179.

53. Mason, A.; Paulsen, R.; Babuska, J.M.; Rajpal, S.; Burneikiene, S.; Nelson, E.L.; Villavicencio, A.T. The accuracy of pedicle screw placement using intraoperative image guidance systems: A systematic review. J. Neurosurg. Spine 2014, 20, 196–203.

54. Ma, L.; Zhao, Z.; Chen, F.; Zhang, B.; Fu, L.; Liao, H. Augmented reality surgical navigation with ultrasound-assisted registration for pedicle screw placement: A pilot study. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 2205–2215.

55. Bonjer, H.J.; Deijen, C.L.; Abis, G.A.; Cuesta, M.A.; van der Pas, M.H.; De Lange-De Klerk, E.S.; Lacy, A.M.; Bemelman, W.A.; Andersson, J.; Angenete, E.; et al. A randomized trial of laparoscopic versus open surgery for rectal cancer. N. Engl. J. Med. 2015, 372, 1324–1332.

56. Lamata, P.; Ali, W.; Cano, A.; Cornella, J.; Declerck, J.; Elle, O.J.; Freudenthal, A.; Furtado, H.; Kalkofen, D.; Naerum, E.; et al. Augmented reality for minimally invasive surgery: Overview and some recent advances. In Augmented Reality; IntechOpen: London, UK, 2010; p. 73.

57. Wild, C.; Lang, F.; Gerhäuser, A.; Schmidt, M.; Kowalewski, K.; Petersen, J.; Kenngott, H.; Müller-Stich, B.; Nickel, F. Telestration with augmented reality for visual presentation of intraoperative target structures in minimally invasive surgery: A randomized controlled study. Surg. Endosc. 2022, 36, 7453–7461.

58. Fuchs, H.; Livingston, M.A.; Raskar, R.; Colucci, D.; Keller, K.; State, A.; Crawford, J.R.; Rademacher, P.; Drake, S.H.; Meyer, A.A. Augmented reality visualization for laparoscopic surgery. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 1998; pp. 934–943.

59. Modrzejewski, R.; Collins, T.; Seeliger, B.; Bartoli, A.; Hostettler, A.; Marescaux, J. An in vivo porcine dataset and evaluation methodology to measure soft-body laparoscopic liver registration accuracy with an extended algorithm that handles collisions. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1237–1245.

60. Pelanis, E.; Teatini, A.; Eigl, B.; Regensburger, A.; Alzaga, A.; Kumar, R.P.; Rudolph, T.; Aghayan, D.L.; Riediger, C.; Kvarnström, N.; et al. Evaluation of a novel navigation platform for laparoscopic liver surgery with organ deformation compensation using injected fiducials. Med. Image Anal. 2021, 69, 101946.

61. Zhang, W.; Zhu, W.; Yang, J.; Xiang, N.; Zeng, N.; Hu, H.; Jia, F.; Fang, C. Augmented reality navigation for stereoscopic laparoscopic anatomical hepatectomy of primary liver cancer: Preliminary experience. Front. Oncol. 2021, 11, 996.

62. Teatini, A.; Pelanis, E.; Aghayan, D.L.; Kumar, R.P.; Palomar, R.; Fretland, Å.A.; Edwin, B.; Elle, O.J. The effect of intraoperative imaging on surgical navigation for laparoscopic liver resection surgery. Sci. Rep. 2019, 9, 18687.

63. Liu, X.; Plishker, W.; Kane, T.D.; Geller, D.A.; Lau, L.W.; Tashiro, J.; Sharma, K.; Shekhar, R. Preclinical evaluation of ultrasound-augmented needle navigation for laparoscopic liver ablation. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 803–810.

64. Shekhar, R.; Dandekar, O.; Bhat, V.; Philip, M.; Lei, P.; Godinez, C.; Sutton, E.; George, I.; Kavic, S.; Mezrich, R.; et al. Live augmented reality: A new visualization method for laparoscopic surgery using continuous volumetric computed tomography. Surg. Endosc. 2010, 24, 1976–1985.

65. Luo, H.; Yin, D.; Zhang, S.; Xiao, D.; He, B.; Meng, F.; Zhang, Y.; Cai, W.; He, S.; Zhang, W.; et al. Augmented reality navigation for liver resection with a stereoscopic laparoscope. Comput. Methods Programs Biomed. 2020, 187, 105099.

66. Schneider, C.; Allam, M.; Stoyanov, D.; Hawkes, D.; Gurusamy, K.; Davidson, B. Performance of image guided navigation in laparoscopic liver surgery–A systematic review. Surg. Oncol. 2021, 38, 101637.

67. Bernhardt, S.; Nicolau, S.A.; Agnus, V.; Soler, L.; Doignon, C.; Marescaux, J. Automatic localization of endoscope in intraoperative CT image: A simple approach to augmented reality guidance in laparoscopic surgery. Med. Image Anal. 2016, 30, 130–143.

68. Jayarathne, U.L.; Moore, J.; Chen, E.; Pautler, S.E.; Peters, T.M. Real-time 3D ultrasound reconstruction and visualization in the context of laparoscopy. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2017; pp. 602–609.

69. Casap, N.; Nadel, S.; Tarazi, E.; Weiss, E.I. Evaluation of a navigation system for dental implantation as a tool to train novice dental practitioners. J. Oral Maxillofac. Surg. 2011, 69, 2548–2556.

70. Yamaguchi, S.; Ohtani, T.; Yatani, H.; Sohmura, T. Augmented reality system for dental implant surgery. In International Conference on Virtual and Mixed Reality; Springer: Berlin/Heidelberg, Germany, 2009; pp. 633–638.

71. Tran, H.H.; Suenaga, H.; Kuwana, K.; Masamune, K.; Dohi, T.; Nakajima, S.; Liao, H. Augmented reality system for oral surgery using 3D auto stereoscopic visualization. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2011; pp. 81–88.

72. Wang, J.; Suenaga, H.; Hoshi, K.; Yang, L.; Kobayashi, E.; Sakuma, I.; Liao, H. Augmented reality navigation with automatic marker-free image registration using 3-D image overlay for dental surgery. IEEE Trans. Biomed. Eng. 2014, 61, 1295–1304.

73. Katić, D.; Sudra, G.; Speidel, S.; Castrillon-Oberndorfer, G.; Eggers, G.; Dillmann, R. Knowledge-based situation interpretation for context-aware augmented reality in dental implant surgery. In International Workshop on Medical Imaging and Virtual Reality; Springer: Berlin/Heidelberg, Germany, 2010; pp. 531–540.

74. Morineau, T.; Morandi, X.; Le Moëllic, N.; Jannin, P. A cognitive engineering framework for the specification of information requirements in medical imaging: Application in image-guided neurosurgery. Int. J. Comput. Assist. Radiol. Surg. 2013, 8, 291–300.

75. Mikhail, M.; Mithani, K.; Ibrahim, G.M. Presurgical and intraoperative augmented reality in neuro-oncologic surgery: Clinical experiences and limitations. World Neurosurg. 2019, 128, 268–276.

76. Andress, S.; Johnson, A.; Unberath, M.; Winkler, A.F.; Yu, K.; Fotouhi, J.; Weidert, S.; Osgood, G.M.; Navab, N. On-the-fly augmented reality for orthopedic surgery using a multimodal fiducial. J. Med. Imaging 2018, 5, 021209.

77. Wang, J.; Suenaga, H.; Yang, L.; Liao, H.; Kobayashi, E.; Takato, T.; Sakuma, I. Real-time marker-free patient registration and image-based navigation using stereovision for dental surgery. In Augmented Reality Environments for Medical Imaging and Computer-Assisted Interventions; Springer: Berlin/Heidelberg, Germany, 2013; pp. 9–18.

78. Gribaudo, M.; Piazzolla, P.; Porpiglia, F.; Vezzetti, E.; Violante, M.G. 3D augmentation of the surgical video stream: Toward a modular approach. Comput. Methods Programs Biomed. 2020, 191, 105505.

79. Linte, C.A.; White, J.; Eagleson, R.; Guiraudon, G.M.; Peters, T.M. Virtual and augmented medical imaging environments: Enabling technology for minimally invasive cardiac interventional guidance. IEEE Rev. Biomed. Eng. 2010, 3, 25–47.

80. Baum, Z.; Ungi, T.; Lasso, A.; Fichtinger, G. Usability of a real-time tracked augmented reality display system in musculoskeletal injections. In Medical Imaging 2017: Image-Guided Procedures, Robotic Interventions, and Modeling; SPIE: Bellingham, WA, USA, 2017; Volume 10135, pp. 727–734.

81. Cercenelli, L.; Carbone, M.; Condino, S.; Cutolo, F.; Marcelli, E.; Tarsitano, A.; Marchetti, C.; Ferrari, V.; Badiali, G. The wearable VOSTARS system for augmented reality-guided surgery: Preclinical phantom evaluation for high-precision maxillofacial tasks. J. Clin. Med. 2020, 9, 3562.

82. Maisto, M.; Pacchierotti, C.; Chinello, F.; Salvietti, G.; De Luca, A.; Prattichizzo, D. Evaluation of wearable haptic systems for the fingers in augmented reality applications. IEEE Trans. Haptics 2017, 10, 511–522.

83. Cutolo, F.; Fida, B.; Cattari, N.; Ferrari, V. Software framework for customized augmented reality headsets in medicine. IEEE Access 2019, 8, 706–720.

84. Gavaghan, K.; Oliveira-Santos, T.; Peterhans, M.; Reyes, M.; Kim, H.; Anderegg, S.; Weber, S. Evaluation of a portable image overlay projector for the visualisation of surgical navigation data: Phantom studies. Int. J. Comput. Assist. Radiol. Surg. 2012, 7, 547–556.

85. Rosenthal, M.; State, A.; Lee, J.; Hirota, G.; Ackerman, J.; Keller, K.; Pisano, E.D.; Jiroutek, M.; Muller, K.; Fuchs, H. Augmented reality guidance for needle biopsies: An initial randomized, controlled trial in phantoms. Med. Image Anal. 2002, 6, 313–320.

86. Jiang, Y.; Wang, H.R.; Wang, P.F.; Xu, S.G. The Surgical Approach Visualization and Navigation (SAVN) System reduces radiation dosage and surgical trauma due to accurate intraoperative guidance. Injury 2019, 50, 859–863.

87. Wen, R.; Tay, W.L.; Nguyen, B.P.; Chng, C.B.; Chui, C.K. Hand gesture guided robot-assisted surgery based on a direct augmented reality interface. Comput. Methods Programs Biomed. 2014, 116, 68–80.

88. Wen, R.; Chng, C.B.; Chui, C.K. Augmented reality guidance with multimodality imaging data and depth-perceived interaction for robot-assisted surgery. Robotics 2017, 6, 13.

89. Giannone, F.; Felli, E.; Cherkaoui, Z.; Mascagni, P.; Pessaux, P. Augmented Reality and Image-Guided Robotic Liver Surgery. Cancers 2021, 13, 6268.

90. Maier-Hein, L.; Mountney, P.; Bartoli, A.; Elhawary, H.; Elson, D.; Groch, A.; Kolb, A.; Rodrigues, M.; Sorger, J.; Speidel, S.; et al. Optical techniques for 3D surface reconstruction in computer-assisted laparoscopic surgery. Med. Image Anal. 2013, 17, 974–996.

91. Peters, T.M.; Linte, C.A.; Yaniv, Z.; Williams, J. Mixed and Augmented Reality in Medicine; CRC Press: Boca Raton, FL, USA, 2018.

92. Jannin, P.; Korb, W. Assessment of image-guided interventions. In Image-Guided Interventions; Springer: Boston, MA, USA, 2008; pp. 531–549.

93. Benckert, C.; Bruns, C. The surgeon’s contribution to image-guided oncology. Visc. Med. 2014, 30, 232–236.

94. Marcus, H.J.; Pratt, P.; Hughes-Hallett, A.; Cundy, T.P.; Marcus, A.P.; Yang, G.Z.; Darzi, A.; Nandi, D. Comparative effectiveness and safety of image guidance systems in surgery: A preclinical randomised study. Lancet 2015, 385, S64.

95. Khor, W.S.; Baker, B.; Amin, K.; Chan, A.; Patel, K.; Wong, J. Augmented and virtual reality in surgery-the digital surgical environment: Applications, limitations and legal pitfalls. Ann. Transl. Med. 2016, 4, 454.

96. Chen, F.; Cui, X.; Liu, J.; Han, B.; Zhang, X.; Zhang, D.; Liao, H. Tissue structure updating for In situ augmented reality navigation using calibrated ultrasound and two-level surface warping. IEEE Trans. Biomed. Eng. 2020, 67, 3211–3222.

97. Nicolau, S.; Pennec, X.; Soler, L.; Buy, X.; Gangi, A.; Ayache, N.; Marescaux, J. An augmented reality system for liver thermal ablation: Design and evaluation on clinical cases. Med. Image Anal. 2009, 13, 494–506.

98. Chen, E.C.; Morgan, I.; Jayarathne, U.; Ma, B.; Peters, T.M. Hand-eye calibration using a target registration error model. Healthc. Technol. Lett. 2017, 4, 157–162.

99. Zhang, Z.; Zhang, L.; Yang, G.Z. A computationally efficient method for hand-eye calibration. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1775–1787.

100. Chen, E.; Ma, B.; Peters, T.M. Contact-less stylus for surgical navigation: Registration without digitization. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1231–1241.

101. Schneider, C.; Thompson, S.; Totz, J.; Song, Y.; Allam, M.; Sodergren, M.; Desjardins, A.; Barratt, D.; Ourselin, S.; Gurusamy, K.; et al. Comparison of manual and semi-automatic registration in augmented reality image-guided liver surgery: A clinical feasibility study. Surg. Endosc. 2020, 34, 4702–4711.

102. Golse, N.; Petit, A.; Lewin, M.; Vibert, E.; Cotin, S. Augmented reality during open liver surgery using a markerless non-rigid registration system. J. Gastrointest. Surg. 2021, 25, 662–671.

103. Ansari, M.Y.; Abdalla, A.; Ansari, M.Y.; Ansari, M.I.; Malluhi, B.; Mohanty, S.; Mishra, S.; Singh, S.S.; Abinahed, J.; Al-Ansari, A.; et al. Practical utility of liver segmentation methods in clinical surgeries and interventions. BMC Med. Imaging 2022, 22, 1–17.

104. Reinke, A.; Eisenmann, M.; Tizabi, M.D.; Sudre, C.H.; Rädsch, T.; Antonelli, M.; Arbel, T.; Bakas, S.; Cardoso, M.J.; Cheplygina, V.; et al. Common limitations of image processing metrics: A picture story. arXiv 2021, arXiv:2104.05642.

105. Dixon, B.J.; Daly, M.J.; Chan, H.; Vescan, A.D.; Witterick, I.J.; Irish, J.C. Surgeons blinded by enhanced navigation: The effect of augmented reality on attention. Surg. Endosc. 2013, 27, 454–461.

| Surgical Field | Reference | Evaluation | Objective | Validation |
|---|---|---|---|---|
| Neurosurgery | Meola et al., 2017 [9] | ✓ | Clinical applications | × |
| | Si et al., 2018 [11] | ✓ | Training & guidance | × |
| | Lee et al., 2018 [12] | ✓ | Medical imaging | × |
| | Shamir et al., 2011 [33] | ✓ | Pre-operative planning | × |
| | Cabrilo et al., 2014 [43] | ✓ | Surgery on patients | × |
| | Tabrizi et al., 2015 [44] | ✓ | Surgery on patients | × |
| | Kersten-Oertel et al., 2015 [45] | ✓ | Surgery on patients | × |
| | Abhari et al., 2014 [46] | ✓ | Pre-operative planning | × |
| | Fick et al., 2021 [47] | ✓ | Clinical applications | × |
| | López et al., 2019 [48] | ✓ | Clinical applications | × |
| | Morineau et al., 2013 [74] | ✓ | Medical imaging | × |
| | Mikhail et al., 2019 [75] | ✓ | Clinical applications | × |
| Orthopedic & Spine Surgery | Zhang et al., 2016 [15] | ✓ | Medical imaging | × |
| | Härtl et al., 2013 [16] | ✓ | Surgical navigation | × |
| | Jud et al., 2020 [50] | ✓ | Clinical applications | × |
| | Ma et al., 2017 [54] | ✓ | Pre-clinical trials | × |
| | Andress et al., 2018 [76] | ✓ | Training & guidance | × |
| Laparoscopic Surgery | Nicolau et al., 2011 [17] | ✓ | Clinical testing | × |
| | Bernhardt et al., 2017 [18] | ✓ | Clinical testing | × |
| | Tsutsumi et al., 2013 [19] | ✓ | Surgery on patients | × |
| | Modrzejewski et al., 2019 [59] | ✓ | Registration testing | × |
| | Pelanis et al., 2021 [60] | ✓ | Surgical navigation | × |
| | Zhang et al., 2021 [61] | ✓ | Surgery on patients | × |
| | Teatini et al., 2019 [62] | ✓ | Pre-clinical trials | × |
| | Liu et al., 2020 [63] | ✓ | Pre-clinical trials | × |
| | Shekhar et al., 2010 [64] | ✓ | Pre-clinical trials | × |
| | Luo et al., 2020 [65] | ✓ | Pre-clinical trials | ✓ |
| | Schneider et al., 2021 [66] | ✓ | Clinical applications | × |
| | Bernhardt et al., 2016 [67] | ✓ | Pre-clinical trials | × |
| | Jayarathne et al., 2017 [68] | ✓ | Pre-clinical trials | × |
| Oral Surgery | Wang et al., 2017 [25] | ✓ | Clinical applications | ✓ |
| | Jiang et al., 2019 [26] | ✓ | Medical imaging | × |
| | Casap et al., 2011 [69] | ✓ | Training & guidance | × |
| | Yamaguchi et al., 2009 [70] | ✓ | Medical imaging | × |
| | Tran et al., 2011 [71] | ✓ | Medical imaging | × |
| | Wang et al., 2014 [72] | ✓ | Medical imaging | × |
| | Katić et al., 2010 [73] | ✓ | Medical imaging | × |
| | Wang et al., 2013 [77] | ✓ | Medical imaging | × |

| Operator | Location | Medical Process | Patient |
|---|---|---|---|
| Engineer | Laboratory | Technical Scenario | Simulations |
| Medical Student | Simulated OR | Simulated Procedure | Physical Phantoms |
| Surgeon | Operating Room | Real Procedure | Clinical Dataset |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Malhotra, S.; Halabi, O.; Dakua, S.P.; Padhan, J.; Paul, S.; Palliyali, W. Augmented Reality in Surgical Navigation: A Review of Evaluation and Validation Metrics. Appl. Sci. 2023, 13, 1629. https://doi.org/10.3390/app13031629
