Intelligent Data-Enabled Task Offloading for Vehicular Fog Computing

Abstract

1. Introduction

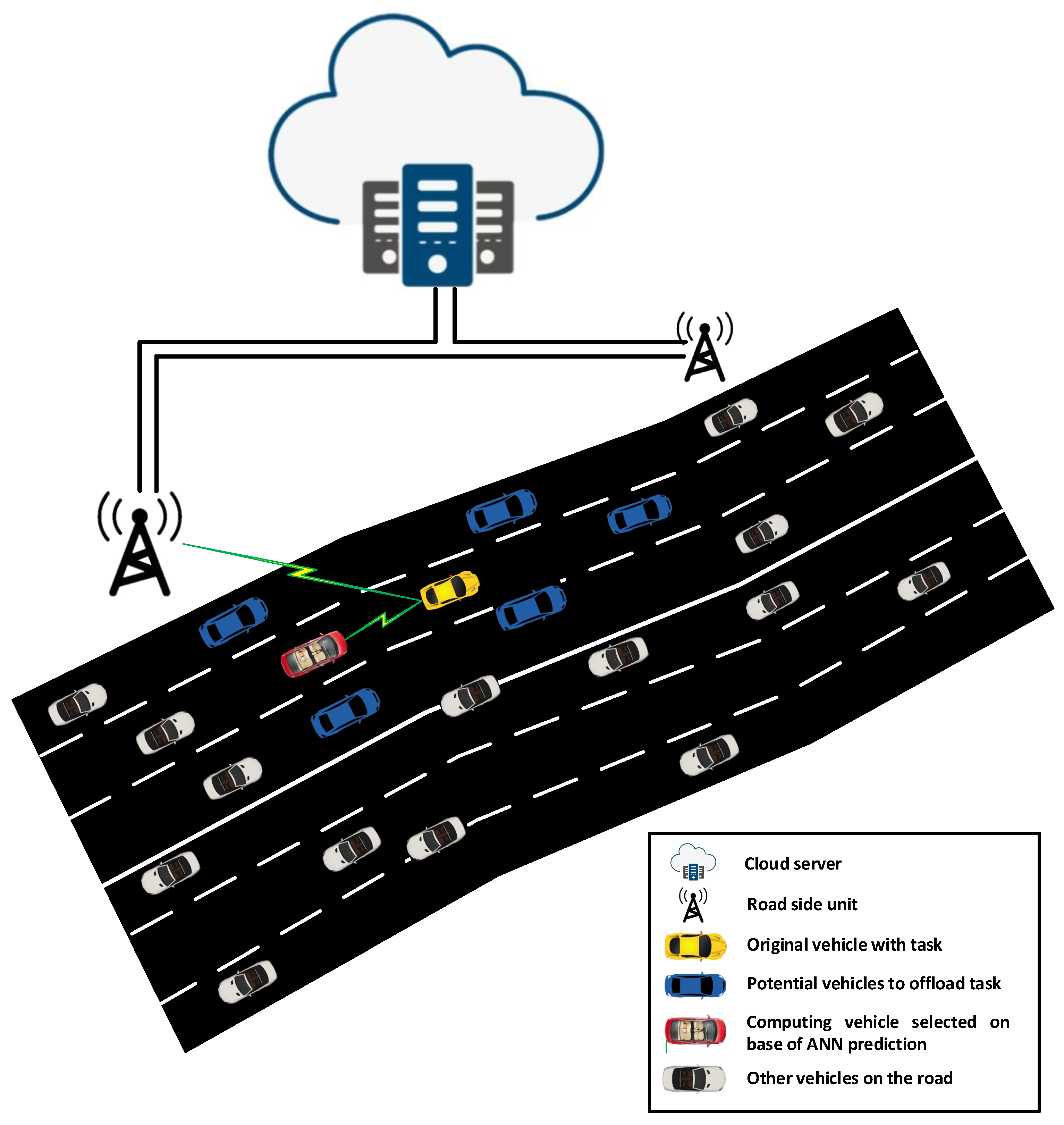

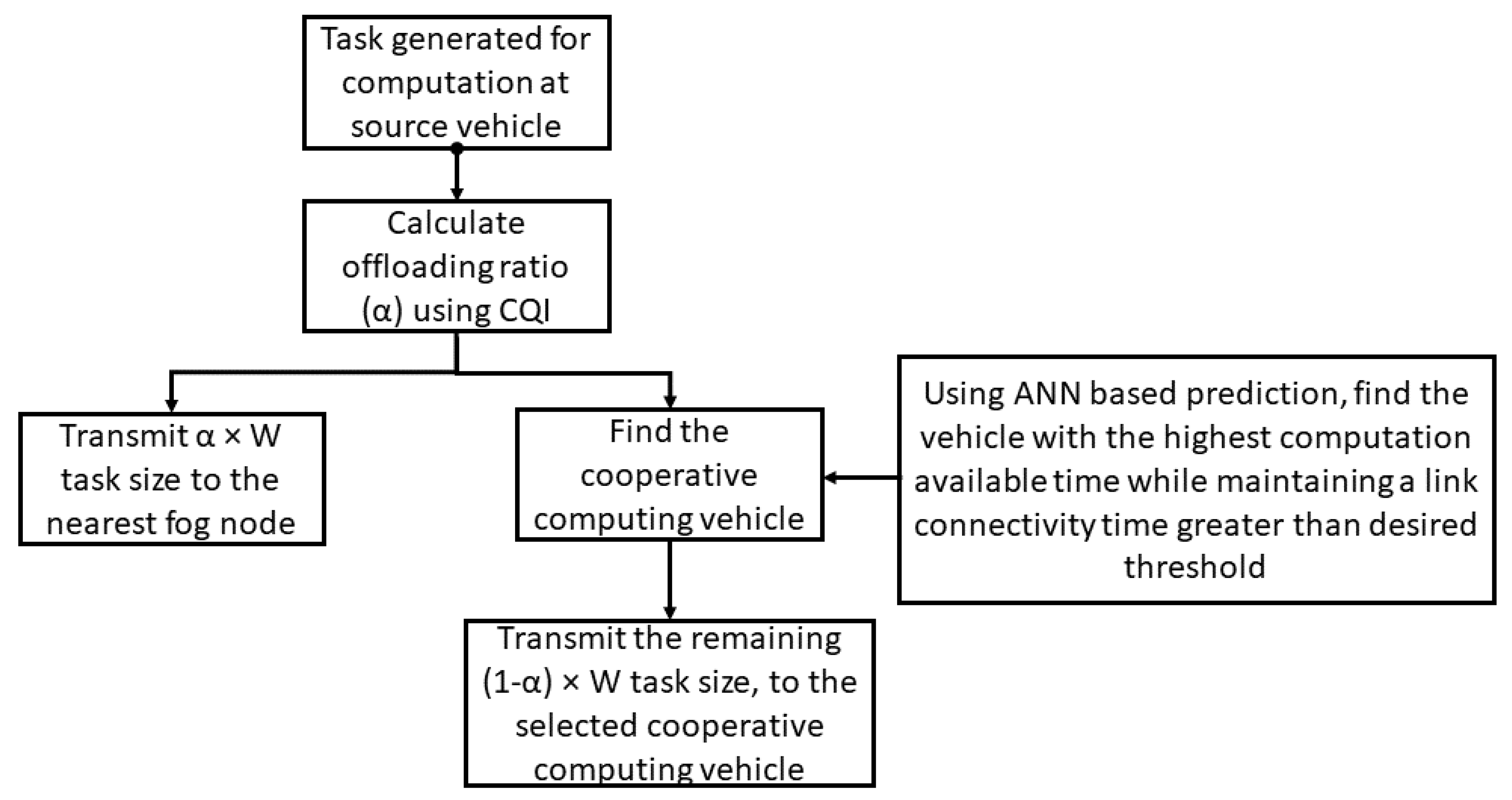

- We consider a scenario in which vehicles use fog roadside units (RSUs) and other nearby vehicles to compute a task. A vehicle with a task to compute offloads a portion of the task to a fog RSU. The size of that portion is determined by the channel quality indicator (CQI) of its link to the fog RSU. The remaining portion is offloaded to a cooperative computing vehicle in its neighborhood.

- We propose a cooperative computing vehicle selection algorithm, based on the metrics of link connectivity time and computing availability time. These metrics consider factors such as source vehicle speed, potential computing vehicles’ speed, distance between vehicles, the computing loads of vehicles and channel quality between vehicles.

- We propose an ANN-based prediction model that predicts the link connectivity time and computing availability time of potential cooperative computing vehicles. These predictions estimate two things:
  - the computing load at a potential cooperative computing vehicle once the task has been transmitted to it;
  - the link connectivity time remaining when the task result is transmitted back from that vehicle to the source vehicle.

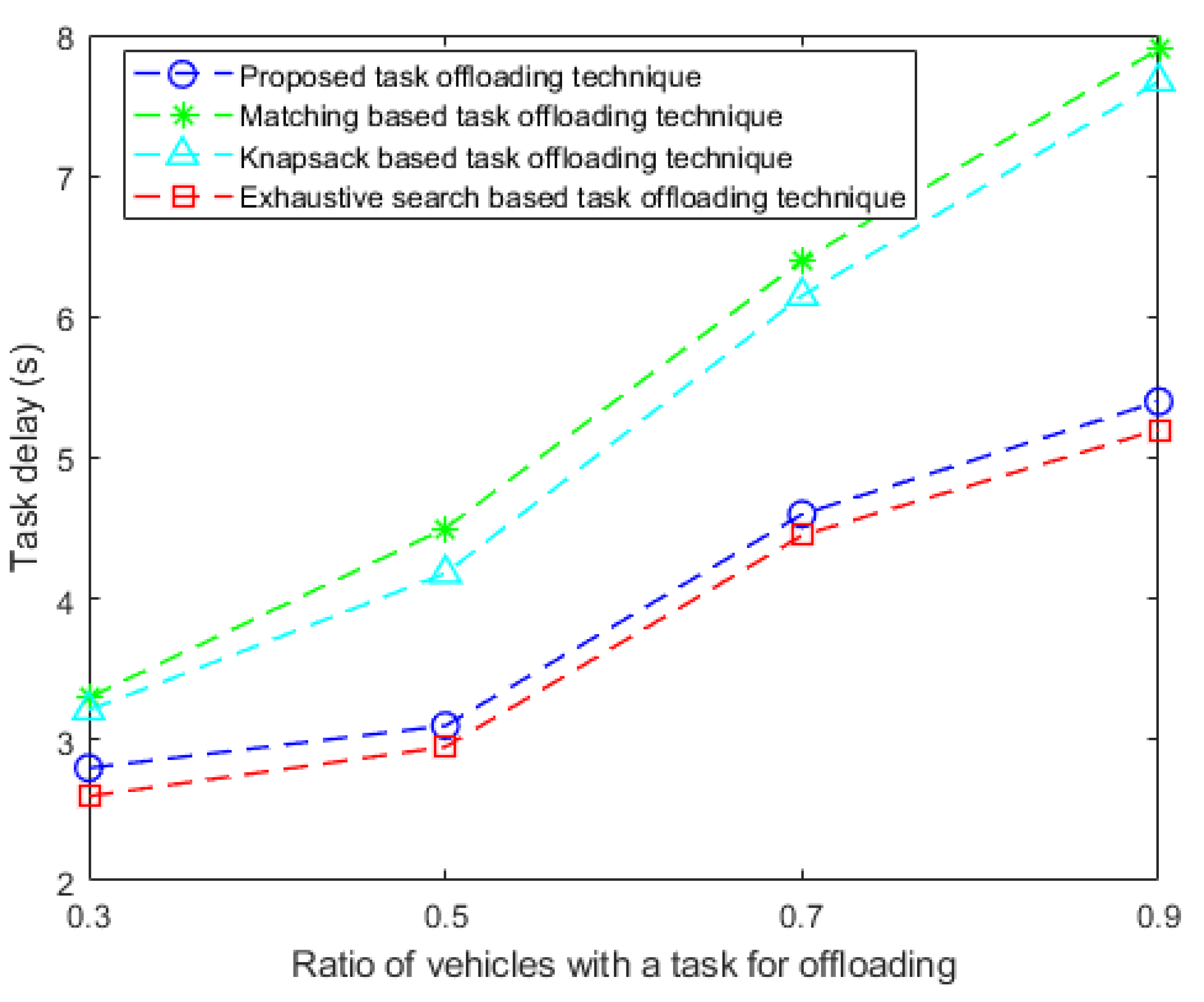

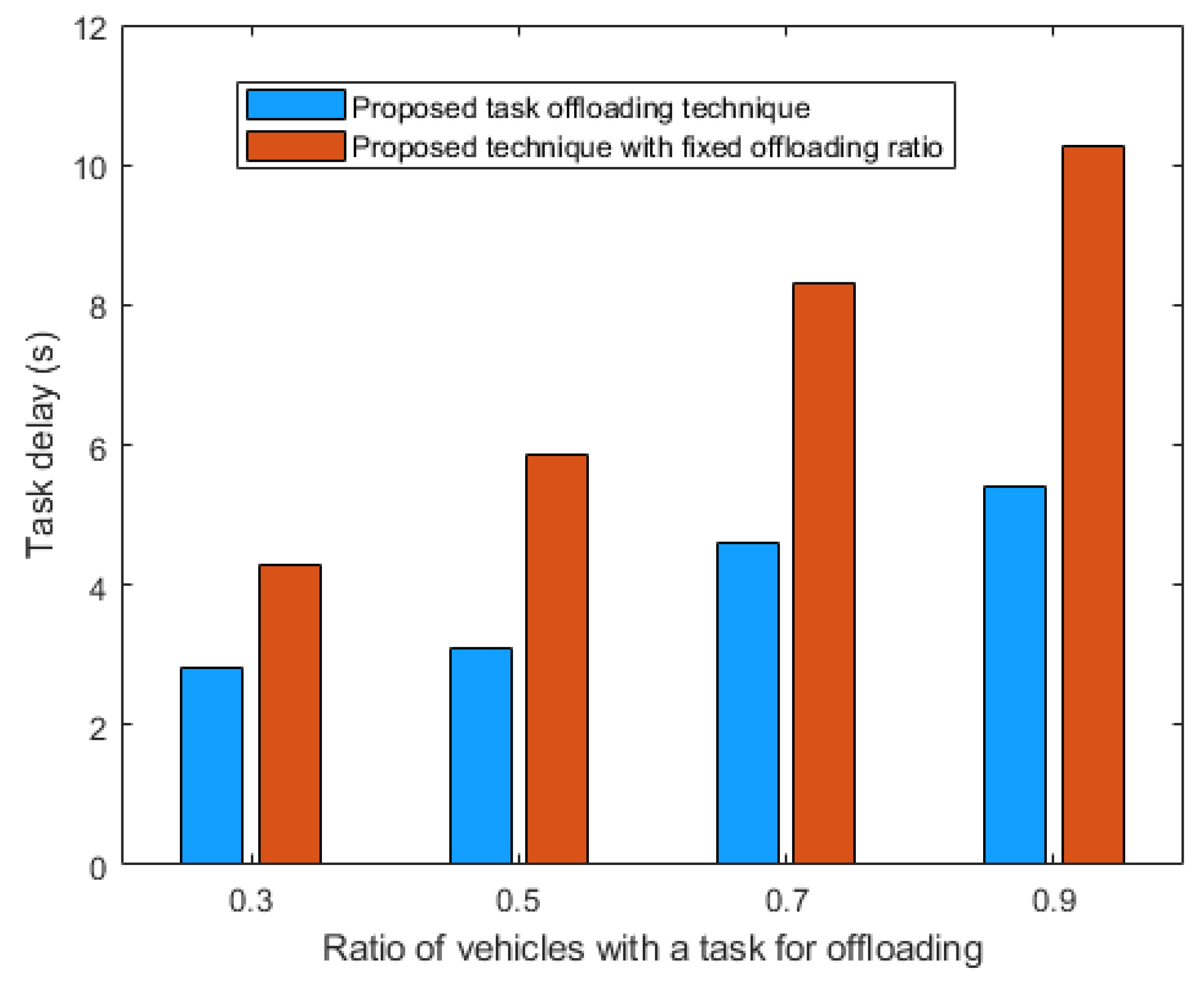

- We present detailed simulation results showing the high accuracy of the proposed prediction model. We also implement the complete task offloading process and show that the proposed technique significantly reduces task delay compared with matching-based task offloading techniques.
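The CQI-based split in the first contribution can be illustrated with a small sketch. Three parts of it are assumptions and not the authors' rule:

- the 1..15 CQI scale;
- the linear mapping from CQI to the fog share;
- the premise that the two portions are processed in parallel.

The function names are hypothetical.

```python
# Hypothetical sketch: split a task between a fog RSU and a cooperative vehicle by CQI.
MAX_CQI = 15  # assumed CQI scale 1..15, as in LTE/NR CQI tables


def fog_fraction(cqi: int, max_cqi: int = MAX_CQI) -> float:
    """Share of the task sent to the fog RSU; a better channel gets a larger share (assumed linear)."""
    if not 1 <= cqi <= max_cqi:
        raise ValueError(f"CQI must be in 1..{max_cqi}, got {cqi}")
    return cqi / max_cqi


def split_task(task_bits: float, cqi: int) -> tuple[float, float]:
    """Return (bits offloaded to the fog RSU, bits offloaded to a cooperative vehicle)."""
    fog_bits = fog_fraction(cqi) * task_bits
    return fog_bits, task_bits - fog_bits


def completion_time(fog_delay_s: float, vehicle_delay_s: float) -> float:
    """Assuming both portions run in parallel, the task ends when the slower branch ends."""
    return max(fog_delay_s, vehicle_delay_s)
```

For example, `split_task(8e6, 12)` sends 80% of an 8-Mbit task to the fog RSU and the remaining 1.6 Mbit to the cooperative vehicle.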

2. Literature Review

3. System Model

3.1. Mobility Modeling

3.2. Data Sharing Model

3.3. Computational Model

4. Proposed Technique

4.1. CQI-Based Offloading to Fog Nodes

4.2. Cooperative Computing Vehicle Selection and Task Offloading

4.3. ANN-Based Prediction for Cooperative Computing Vehicle Selection

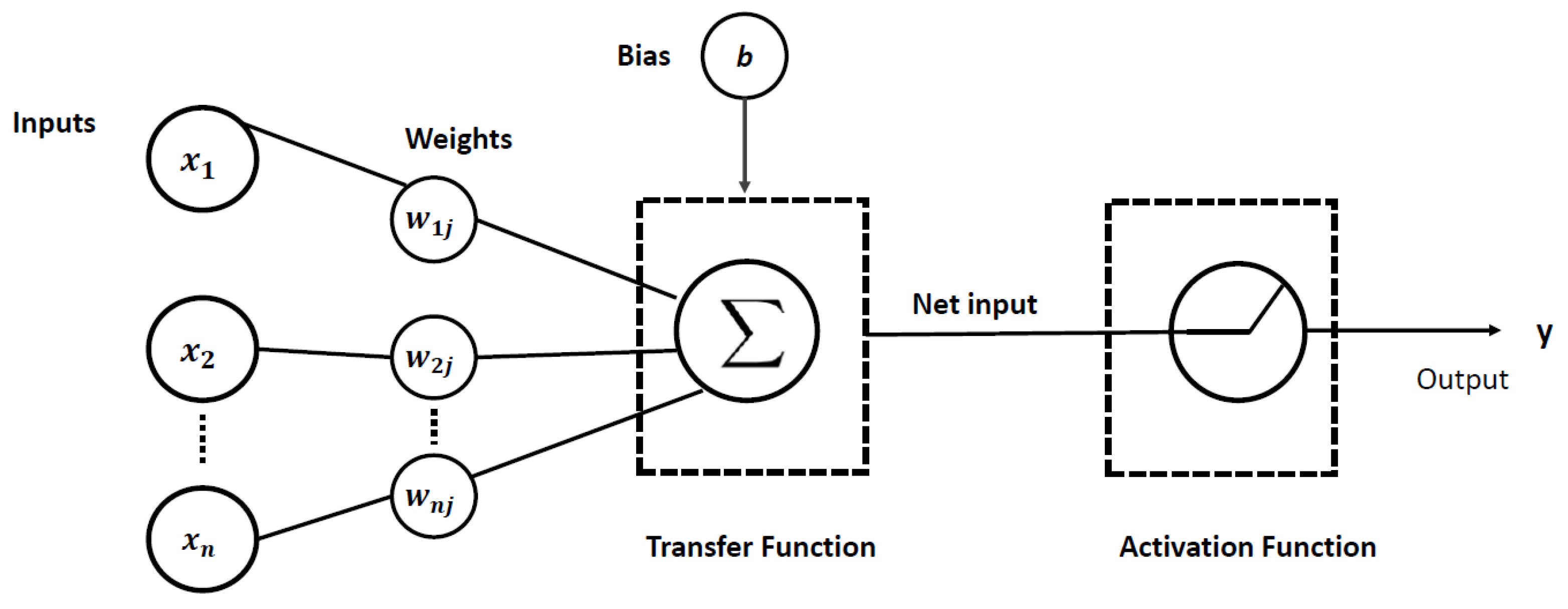

4.3.1. Structure of ANN

4.3.2. Activation Function

4.3.3. Loss Function

4.3.4. Backpropagation Algorithm

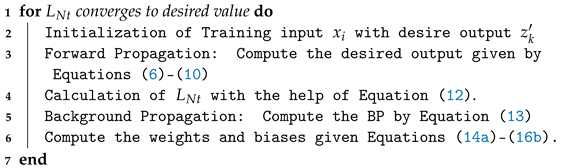

Algorithm 1: Backpropagation algorithm.
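Backpropagation for a regression ANN with one hidden layer can be sketched in plain Python. This is a generic illustration, not a reproduction of Algorithm 1. The sigmoid hidden activation, linear output and squared-error loss are assumptions, and all function names are hypothetical. The returned gradients are what an optimizer such as Adam consumes.

```python
import math
import random

Params = dict  # keys: "W1" (hidden x input), "b1" (hidden), "w2" (hidden), "b2" (scalar)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in: int, n_hidden: int, seed: int = 0) -> Params:
    """Small random weights and zero biases."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p: Params, x: list[float]) -> tuple[list[float], float]:
    """Hidden activations h and the linear output y_hat."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["W1"], p["b1"])]
    y_hat = sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"]
    return h, y_hat


def backprop(p: Params, x: list[float], y: float) -> tuple[float, Params]:
    """Loss 0.5 * (y_hat - y)^2 for one sample and its gradient for every parameter."""
    h, y_hat = forward(p, x)
    err = y_hat - y                                   # dL/dy_hat
    grad_w2 = [err * hj for hj in h]                  # output-layer weight gradients
    grad_b2 = err
    # Error signal at each hidden unit: back through w2, then the sigmoid derivative h(1 - h).
    delta = [err * w * hj * (1.0 - hj) for w, hj in zip(p["w2"], h)]
    grad_W1 = [[d * xi for xi in x] for d in delta]
    grads = {"W1": grad_W1, "b1": delta, "w2": grad_w2, "b2": grad_b2}
    return 0.5 * err * err, grads
```

A central finite-difference check on any single weight is a quick way to confirm that the analytic gradients are correct.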

4.3.5. Adam Optimization

4.3.6. Configuration Parameters for the Adam Optimization Algorithm

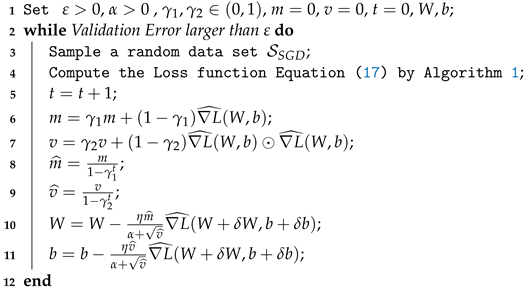

- α represents the learning rate or the step size;

- β₁ and β₂ are the exponential decay rates of the moment estimates. They are initialized close to 1 (such as 0.9 and 0.999, respectively);

- ε is a tolerance factor that prevents division by zero (usually a very small value such as 10⁻⁸);

- m and v represent the moving averages of the first and second moments of the gradient, and m̂ and v̂ are their bias-corrected versions;

- At the end of the algorithm, W and b are the updated adaptive weights and biases.
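The update rule that these parameters configure can be sketched in plain Python. This is a minimal, generic version of Adam as published by Kingma and Ba, operating on a flat list of parameters. The class name and interface are hypothetical. The default α = 0.01 follows the learning rate reported for training, and the remaining defaults are the commonly recommended values.

```python
import math


class Adam:
    """Minimal Adam optimizer for a flat list of parameters."""

    def __init__(self, n_params: int, alpha: float = 0.01, beta1: float = 0.9,
                 beta2: float = 0.999, eps: float = 1e-8):
        self.alpha, self.beta1, self.beta2, self.eps = alpha, beta1, beta2, eps
        self.m = [0.0] * n_params  # moving average of the gradient (first moment)
        self.v = [0.0] * n_params  # moving average of the squared gradient (second moment)
        self.t = 0                 # time step, used for bias correction

    def step(self, params: list[float], grads: list[float]) -> list[float]:
        self.t += 1
        updated = []
        for i, (theta, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1.0 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1.0 - self.beta2) * g * g
            m_hat = self.m[i] / (1.0 - self.beta1 ** self.t)  # bias-corrected first moment
            v_hat = self.v[i] / (1.0 - self.beta2 ** self.t)  # bias-corrected second moment
            updated.append(theta - self.alpha * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated
```

As a usage example, minimizing (w − 3)² + (b + 1)² from w = b = 0 with gradients [2(w − 3), 2(b + 1)] drives the parameters toward (3, −1). On the first step, each parameter moves by roughly α against the sign of its gradient, because m̂/√v̂ ≈ ±1.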

Algorithm 2: Adam optimization algorithm for ANN model training.

4.3.7. Proposed Prediction Model

- Link connectivity time prediction: The first part of the model predicts the link connectivity time between the source vehicle and each potential computing vehicle. It uses four inputs: (i) the speed of the source vehicle, (ii) the speed of the potential computing vehicle, (iii) the distance between the source and potential computing vehicles and (iv) the channel gain between the vehicles.

- Computation availability time prediction: The second part of the model predicts the computation availability time of each potential computing vehicle. It uses two inputs: (i) the number of tasks already at the potential computing vehicle and (ii) the size of those tasks.
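One possible way the two predictions could feed the vehicle selection step is sketched below. The selection rule itself is an assumption, not the authors' algorithm: choose the candidate that finishes earliest while its predicted link connectivity time still covers the return of the result. The sketch also ignores transmission times. The two predictors are any callables, such as trained ANNs, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

Predictor = Callable[[Sequence[float]], float]


@dataclass
class Candidate:
    vehicle_id: str
    speed_kmh: float       # speed of the potential computing vehicle
    distance_m: float      # distance to the source vehicle
    channel_gain: float    # V2V channel gain
    queued_tasks: int      # number of tasks already at the vehicle
    queued_size_mb: float  # total size of those tasks


def select_computing_vehicle(
    source_speed_kmh: float,
    candidates: Sequence[Candidate],
    predict_link: Predictor,   # first model: four inputs -> link connectivity time (s)
    predict_avail: Predictor,  # second model: two inputs -> computation availability time (s)
    compute_time_s: float,     # time to compute the offloaded portion (assumed known)
) -> Optional[str]:
    """Return the id of the earliest-finishing candidate whose link is predicted to last."""
    best_id: Optional[str] = None
    best_finish = float("inf")
    for c in candidates:
        t_link = predict_link([source_speed_kmh, c.speed_kmh, c.distance_m, c.channel_gain])
        t_avail = predict_avail([c.queued_tasks, c.queued_size_mb])
        finish = t_avail + compute_time_s  # transmission times ignored in this sketch
        if finish <= t_link and finish < best_finish:
            best_id, best_finish = c.vehicle_id, finish
    return best_id
```

If no candidate's link is predicted to outlast its completion time, the function returns `None`. In that case the remaining portion would have to be handled some other way, for example by the fog RSU.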

5. Performance Evaluation

5.1. Dataset Generation for ANN Training

- Link connectivity time model: The link connectivity time model uses three types of input feature. Table 2 lists the value ranges used to generate the dataset.
  - Vehicle speed is drawn from a Gaussian random distribution within the range 30–120 km/h. It is used for both the source vehicle and the potential computing vehicle.
  - The distance between the vehicles depends on the speeds of the source and potential computing vehicles and ranges from 5 to 100 m.
  - The channel gain reflects the condition of the vehicle-to-vehicle channel. It is generated from zero-mean complex Gaussian random variables, so its magnitude is Rayleigh distributed, modeling a Rayleigh fading channel.

- Computation availability model: The computation availability model has two inputs.
  - The number of tasks (offloading requests) at a particular time slot t is generated from a Poisson distribution with average rate λ.
  - The task size lies in the range 0.5–16 MB.

  Using both inputs models computation availability realistically, because a time slot may contain fewer but larger tasks, or more but smaller tasks.
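The dataset generation described above can be sketched with Python's standard `random` module. The stated ranges come from the text. Several values are placeholders, not values from the paper:

- the Gaussian mean and standard deviation for speed;
- the uniform draws for distance and task size;
- the Poisson rate λ = 5.

The sketch draws distance independently of speed, which simplifies the dependence mentioned in the text.

```python
import math
import random

SPEED_RANGE_KMH = (30.0, 120.0)             # from the text
DIST_RANGE_M = (5.0, 100.0)                 # from the text
TASK_SIZE_RANGE_MB = (0.5, 16.0)            # from the text
SPEED_MEAN_KMH, SPEED_STD_KMH = 75.0, 20.0  # assumed Gaussian parameters
POISSON_RATE = 5.0                          # assumed value of the average rate λ


def truncated_gauss(rng: random.Random, mean: float, std: float, lo: float, hi: float) -> float:
    """Gaussian draw restricted to [lo, hi] by rejection sampling."""
    while True:
        x = rng.gauss(mean, std)
        if lo <= x <= hi:
            return x


def rayleigh_gain(rng: random.Random) -> float:
    """|h| for h ~ CN(0, 1); the magnitude of a zero-mean complex Gaussian is Rayleigh."""
    h = complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)
    return abs(h)


def poisson(rng: random.Random, lam: float) -> int:
    """Knuth's multiplication method (adequate for small rates)."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def link_features(rng: random.Random) -> list[float]:
    """[source speed, computing-vehicle speed, distance, channel gain]."""
    return [
        truncated_gauss(rng, SPEED_MEAN_KMH, SPEED_STD_KMH, *SPEED_RANGE_KMH),
        truncated_gauss(rng, SPEED_MEAN_KMH, SPEED_STD_KMH, *SPEED_RANGE_KMH),
        rng.uniform(*DIST_RANGE_M),  # assumed uniform and independent of the speeds
        rayleigh_gain(rng),
    ]


def availability_features(rng: random.Random, lam: float = POISSON_RATE) -> list[float]:
    """[number of queued tasks, total size of those tasks in MB]."""
    n = poisson(rng, lam)
    sizes = [rng.uniform(*TASK_SIZE_RANGE_MB) for _ in range(n)]  # assumed uniform sizes
    return [float(n), sum(sizes)]
```

Seeding a `random.Random` instance, for example `random.Random(42)`, makes the generated dataset reproducible.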

5.2. Simulation Parameters

5.3. Performance Metrics

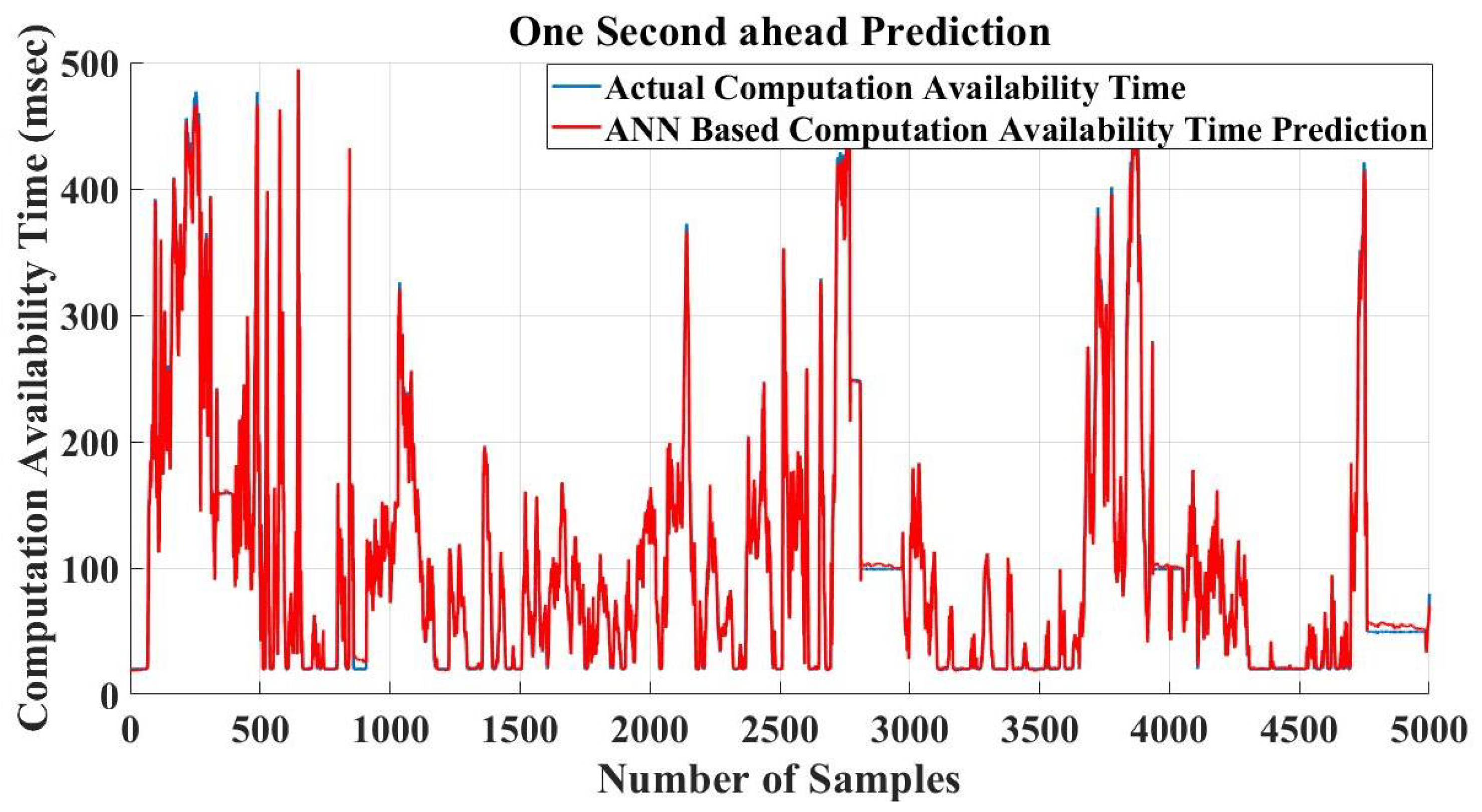

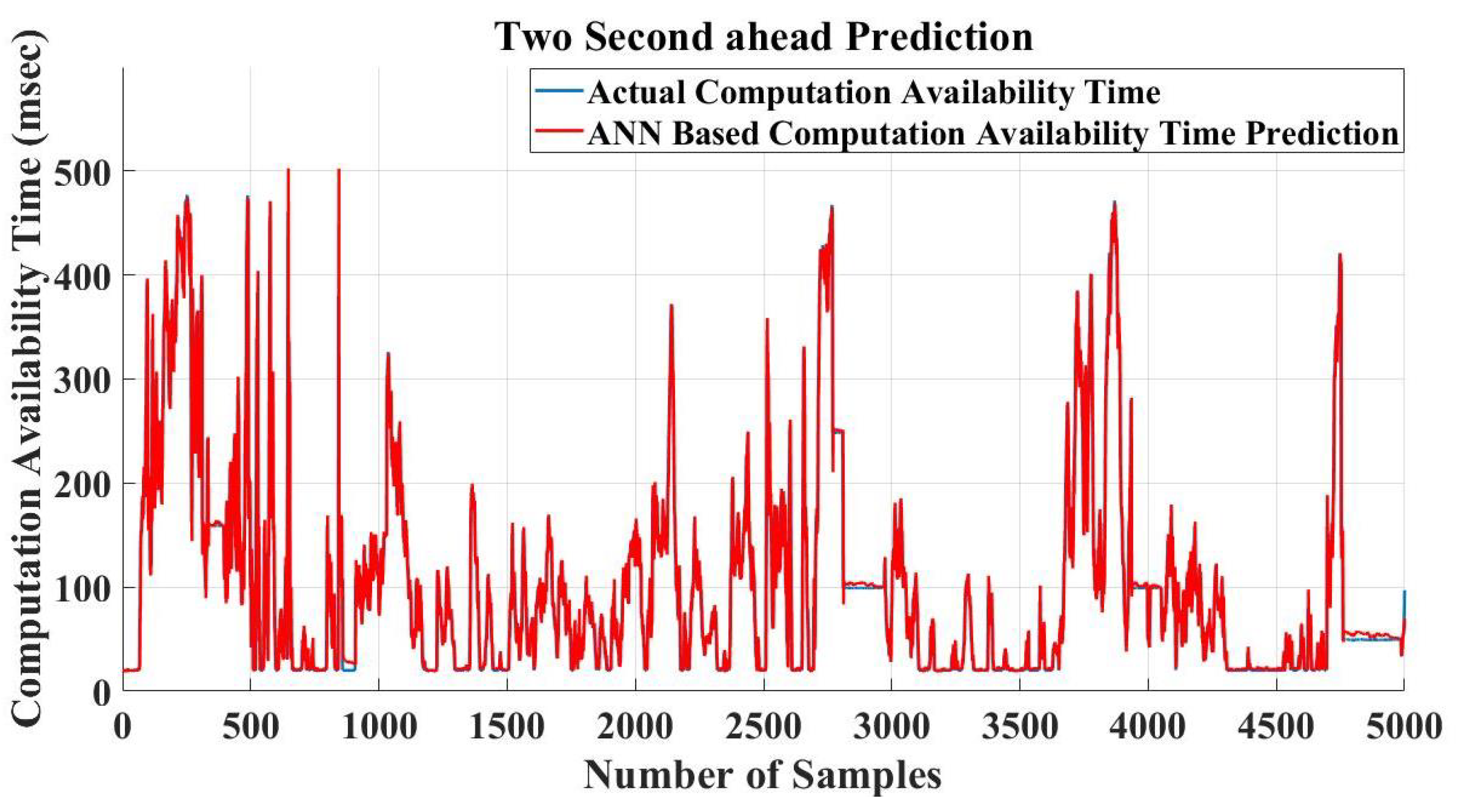

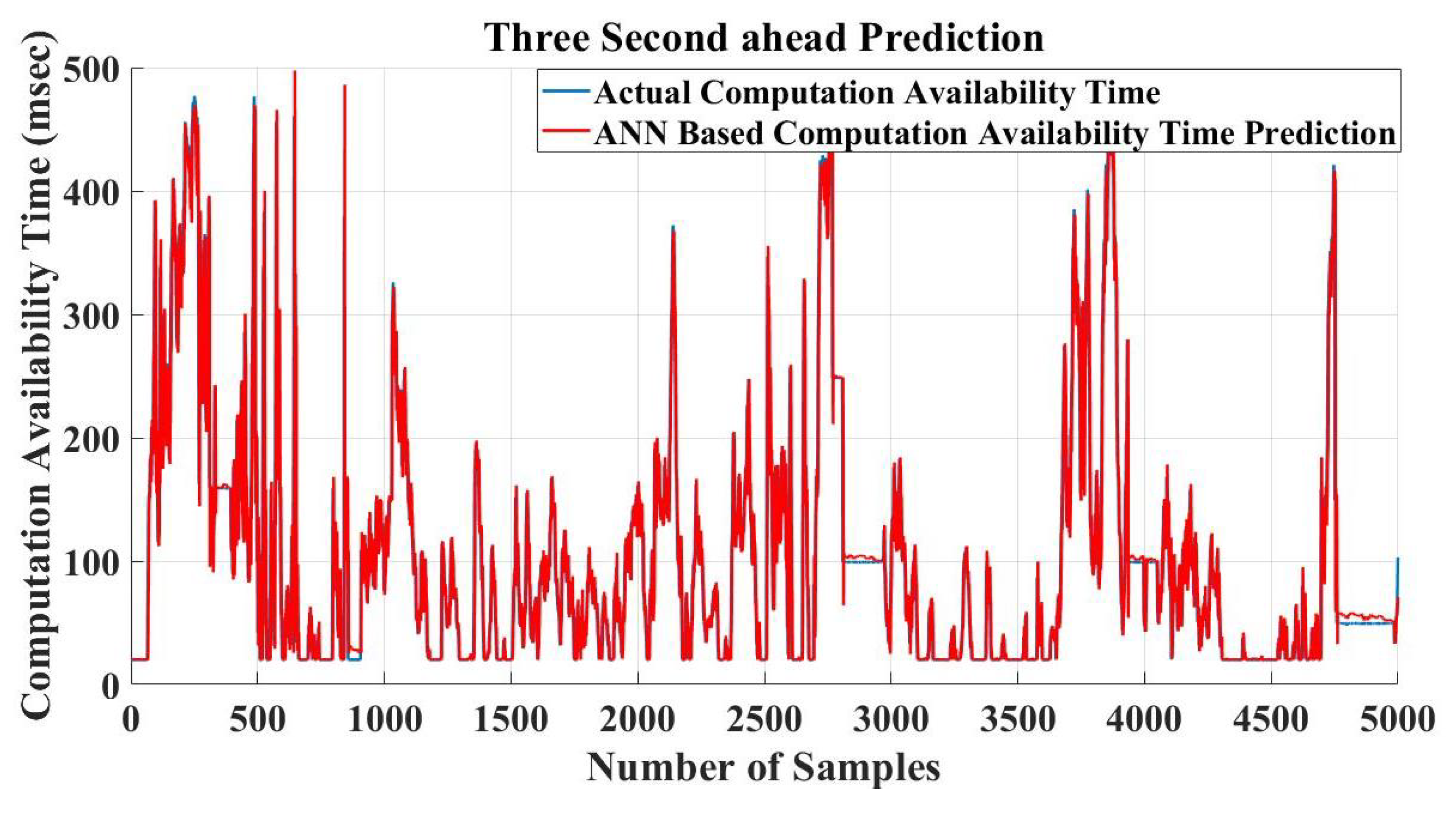

5.4. ANN Prediction Model Results

- Training Phase: In the training phase of the ANN model for link connectivity time, the learning rate is set to 0.01. The Adam optimization algorithm adapts the effective step size of each weight during training, and its momentum (first-moment decay) parameter is set to 0.9, which gives the best results. The number of training samples is 100,000. The number of neurons in the hidden layer is chosen as the value that provides the best results in terms of all four evaluation error scores (RMSE, MABE, R² and MAPE). The computation availability time ANN model uses the same parameters, except that its number of hidden-layer neurons is tuned separately to give the best results. The same four error scores are calculated for this model.
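The four error scores can be computed as in the sketch below. Three of the definitions are assumptions that follow common usage:

- MABE is taken as the mean absolute bias error, mean |ŷ − y|.
- R² is taken as the coefficient of determination; some works instead report the squared Pearson correlation.
- MAPE is expressed in percent.

```python
import math
from typing import Sequence


def error_scores(y_true: Sequence[float], y_pred: Sequence[float]) -> dict:
    """RMSE, MABE, R^2 and MAPE (%) for paired true and predicted values."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("need two non-empty sequences of equal length")
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a true value is zero")
    errors = [p - t for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return {
        "RMSE": math.sqrt(ss_res / n),
        "MABE": sum(abs(e) for e in errors) / n,  # mean absolute bias error (assumed definition)
        "R2": 1.0 - ss_res / ss_tot,              # coefficient of determination
        "MAPE": 100.0 / n * sum(abs(e / t) for t, e in zip(y_true, errors)),
    }
```

For example, true values [2, 4, 6, 8] and predictions [3, 4, 5, 8] give RMSE ≈ 0.707, MABE = 0.5, R² = 0.9 and MAPE ≈ 16.67%.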

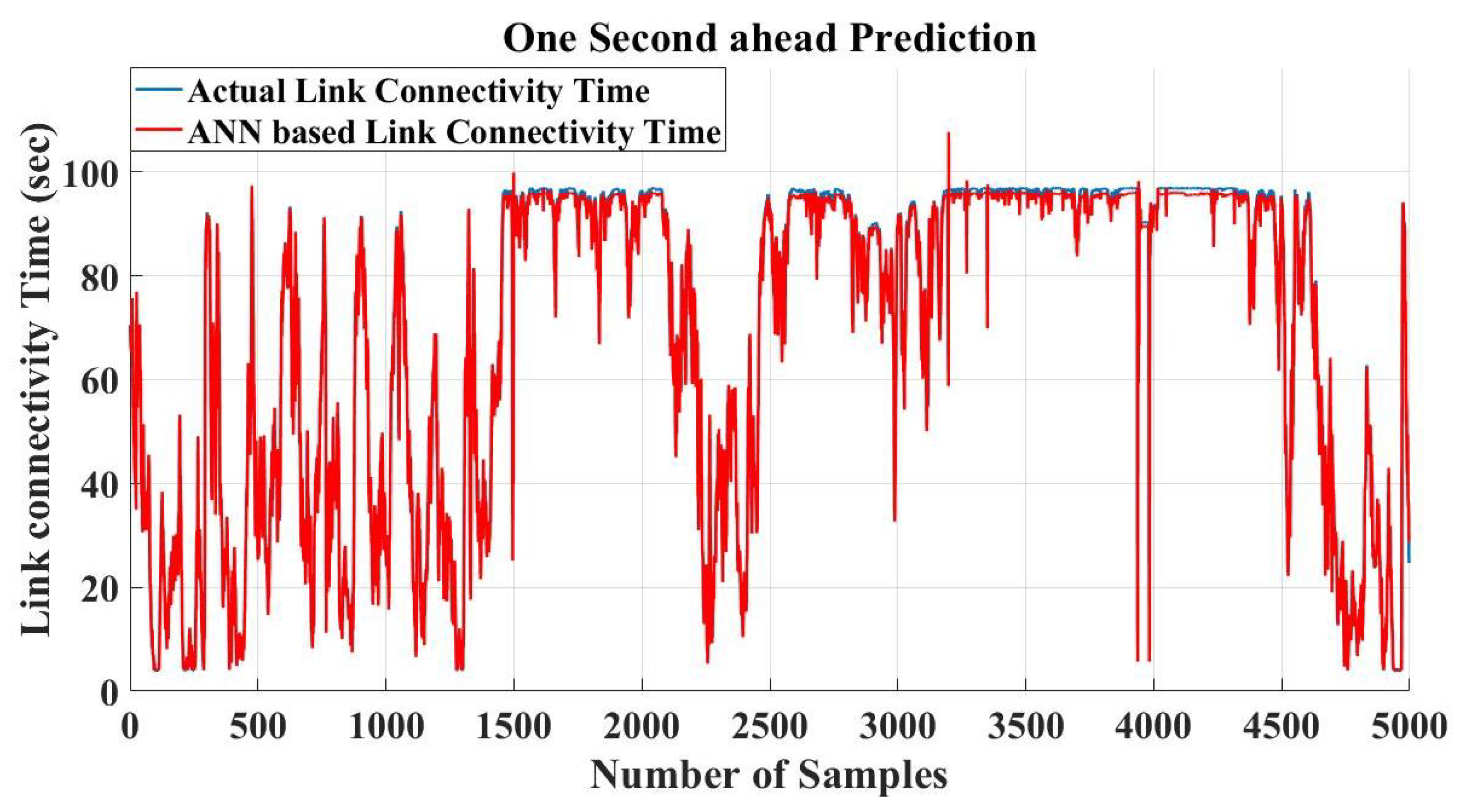

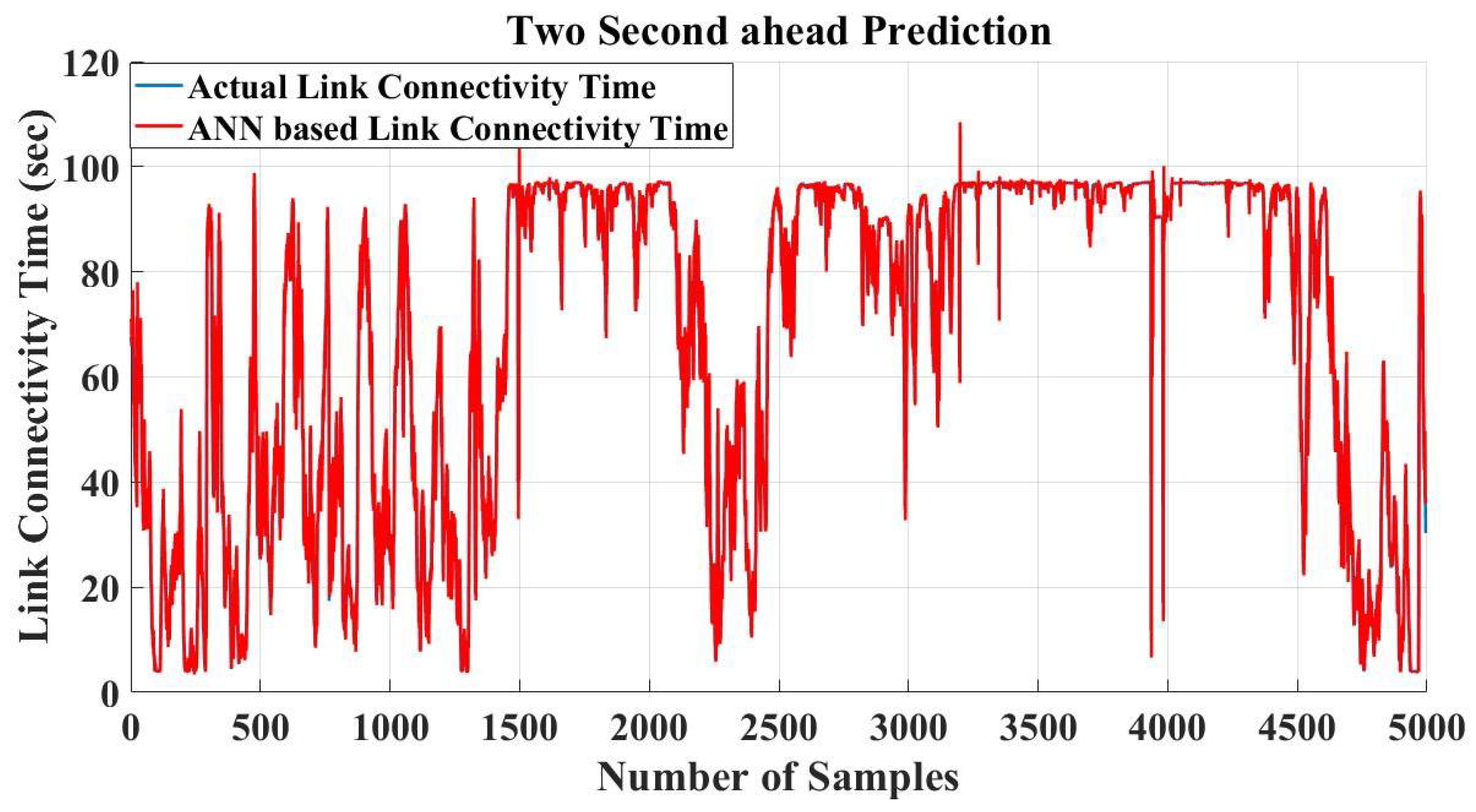

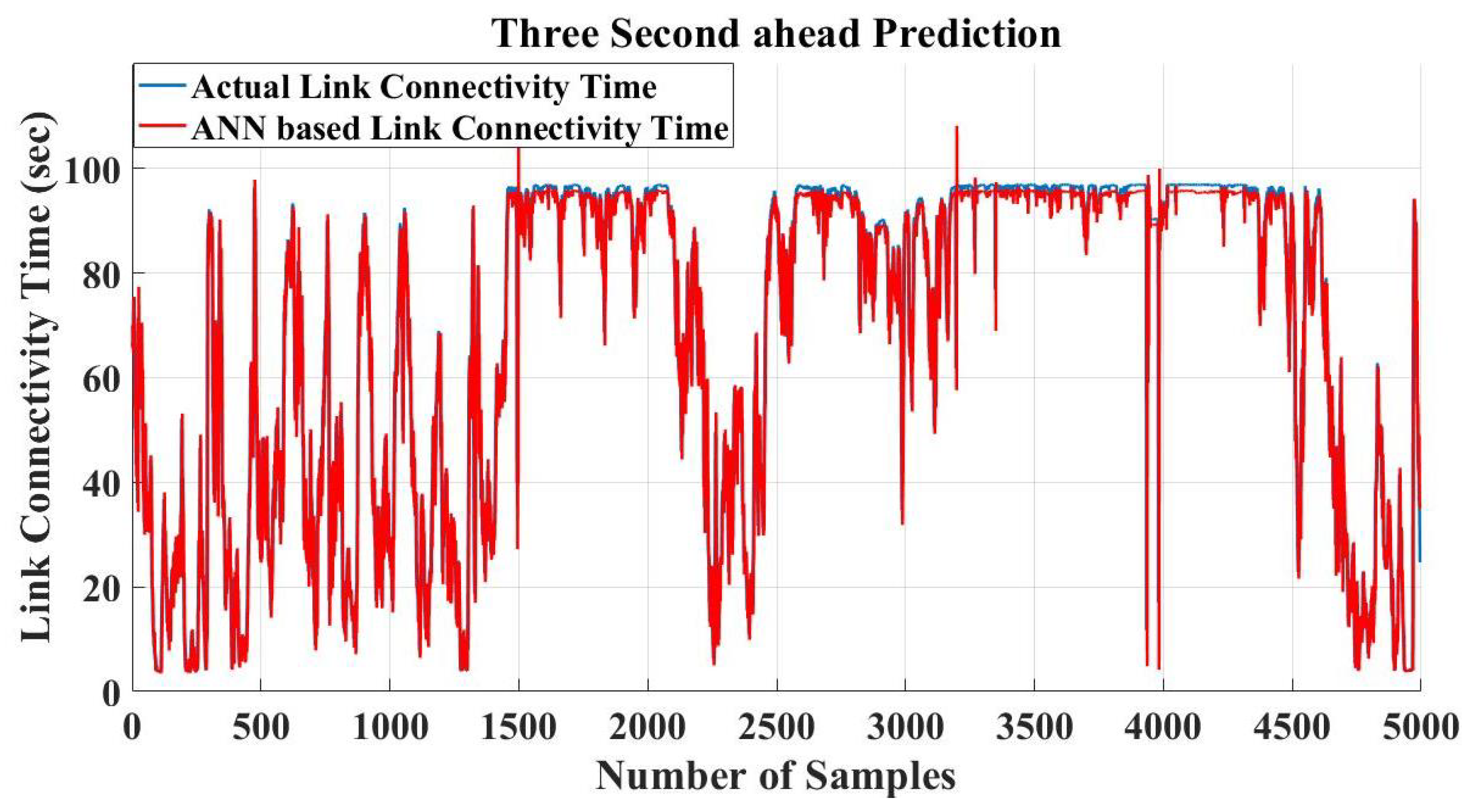

- Testing Phase: After training, the models are evaluated on test data samples. Predictions are made with both models for prediction horizons of 1, 2 and 3 s. Generally, the horizon associated with link connectivity time is longer than the one associated with computation availability time. This is because the link must stay connected until the task has been computed and its result returned to the source vehicle.
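One way to build training and test pairs for a given prediction horizon is to pair the features observed at time t with the target observed a horizon later. The sketch below assumes a time series sampled once per second. The function name is hypothetical, and this is an illustration of h-step-ahead supervision, not the authors' data pipeline.

```python
from typing import Sequence, TypeVar

F = TypeVar("F")


def make_horizon_pairs(features: Sequence[F], targets: Sequence[float],
                       horizon_s: float, sample_period_s: float = 1.0) -> list[tuple[F, float]]:
    """Pair the features at step t with the target observed horizon_s seconds later."""
    steps = round(horizon_s / sample_period_s)
    if steps < 1:
        raise ValueError("horizon must be at least one sample period")
    if len(features) != len(targets):
        raise ValueError("features and targets must be aligned in time")
    return [(features[t], targets[t + steps]) for t in range(len(targets) - steps)]
```

With 1 s sampling, horizons of 1, 2 and 3 s shift the targets by 1, 2 and 3 samples. Each longer horizon therefore yields one fewer pair from the same series.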

5.5. Task Offloading Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, F.Y.; Lin, Y.; Ioannou, P.A.; Vlacic, L.; Liu, X.; Eskandarian, A.; Lv, Y.; Na, X.; Cebon, D.; Ma, J.; et al. Transportation 5.0: The DAO to Safe, Secure, and Sustainable Intelligent Transportation Systems. IEEE Trans. Intell. Transp. Syst. 2023, 24, 10262–10278.

- Chen, J.; Zhang, Y.; Teng, S.; Chen, Y.; Zhang, H.; Wang, F.Y. ACP-Based Energy-Efficient Schemes for Sustainable Intelligent Transportation Systems. IEEE Trans. Intell. Veh. 2023, 8, 3224–3227.

- Sun, Y.; Hu, Y.; Zhang, H.; Chen, H.; Wang, F.Y. A Parallel Emission Regulatory Framework for Intelligent Transportation Systems and Smart Cities. IEEE Trans. Intell. Veh. 2023, 8, 1017–1020.

- Gong, T.; Zhu, L.; Yu, F.R.; Tang, T. Edge Intelligence in Intelligent Transportation Systems: A Survey. IEEE Trans. Intell. Transp. Syst. 2023, 24, 8919–8944.

- Moso, J.C.; Cormier, S.; de Runz, C.; Fouchal, H.; Wandeto, J.M. Streaming-Based Anomaly Detection in ITS Messages. Appl. Sci. 2023, 13, 7313.

- Javed, M.A.; Zeadally, S.; Hamida, E.B. Data analytics for Cooperative Intelligent Transport Systems. Veh. Commun. 2019, 15, 63–72.

- Falahatraftar, F.; Pierre, S.; Chamberland, S. An Intelligent Congestion Avoidance Mechanism Based on Generalized Regression Neural Network for Heterogeneous Vehicular Networks. IEEE Trans. Intell. Veh. 2023, 8, 3106–3118.

- Hosseini, M.; Ghazizadeh, R. Stackelberg Game-Based Deployment Design and Radio Resource Allocation in Coordinated UAVs-Assisted Vehicular Communication Networks. IEEE Trans. Veh. Technol. 2023, 72, 1196–1210.

- Al-Essa, R.I.; Al-Suhail, G.A. AFB-GPSR: Adaptive Beaconing Strategy Based on Fuzzy Logic Scheme for Geographical Routing in a Mobile Ad Hoc Network (MANET). Computation 2023, 11, 174.

- Hamdi, A.M.A.; Hussain, F.K.; Hussain, O.K. Task offloading in vehicular fog computing: State-of-the-art and open issues. Future Gener. Comput. Syst. 2022, 133, 201–212.

- Li, L.; Fan, P. Latency and Task Loss Probability for NOMA Assisted MEC in Mobility-Aware Vehicular Networks. IEEE Trans. Veh. Technol. 2023, 72, 6891–6895.

- Hui, Y.; Huang, Y.; Li, C.; Cheng, N.; Zhao, P.; Chen, R.; Luan, T.H. On-Demand Self-Media Data Trading in Heterogeneous Vehicular Networks. IEEE Trans. Veh. Technol. 2023, 72, 11787–11799.

- Ren, Q.; Liu, K.; Zhang, L. Multi-objective optimization for task offloading based on network calculus in fog environments. Digit. Commun. Netw. 2022, 8, 825–833.

- Hamdi, A.; Hussain, F.K.; Hussain, O.K. iVFC: An Intelligent Framework for Task Offloading in Vehicular Fog Computing. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4465948 (accessed on 30 November 2023).

- Geng, N.; Bai, Q.; Liu, C.; Lan, T.; Aggarwal, V.; Yang, Y.; Xu, M. A Reinforcement Learning Framework for Vehicular Network Routing Under Peak and Average Constraints. IEEE Trans. Veh. Technol. 2023, 72, 6753–6764.

- Wei, Z.; Li, B.; Zhang, R.; Cheng, X.; Yang, L. Dynamic Many-to-Many Task Offloading in Vehicular Fog Computing: A Multi-Agent DRL Approach. In Proceedings of the GLOBECOM 2022—2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 6301–6306.

- Gao, Z.; Yang, L.; Dai, Y. Fast Adaptive Task Offloading and Resource Allocation via Multiagent Reinforcement Learning in Heterogeneous Vehicular Fog Computing. IEEE Internet Things J. 2023, 10, 6818–6835.

- Giordani, M.; Polese, M.; Mezzavilla, M.; Rangan, S.; Zorzi, M. Toward 6G Networks: Use Cases and Technologies. IEEE Commun. Mag. 2020, 58, 55–61.

- Saad, W.; Bennis, M.; Chen, M. A Vision of 6G Wireless Systems: Applications, Trends, Technologies, and Open Research Problems. IEEE Netw. 2020, 34, 134–142.

- Singh, J.; Singh, P.; Hedabou, M.; Kumar, N. An Efficient Machine Learning-Based Resource Allocation Scheme for SDN-Enabled Fog Computing Environment. IEEE Trans. Veh. Technol. 2023, 72, 8004–8017.

- Tong, S.; Liu, Y.; Chang, X.; Mišić, J.; Zhang, Z. Joint Task Offloading and Resource Allocation: A Historical Cumulative Contribution Based Collaborative Fog Computing Model. IEEE Trans. Veh. Technol. 2023, 72, 2202–2215.

- Ning, Z.; Huang, J.; Wang, X. Vehicular Fog Computing: Enabling Real-Time Traffic Management for Smart Cities. IEEE Wirel. Commun. 2019, 26, 87–93.

- Wang, K.; Tan, Y.; Shao, Z.; Ci, S.; Yang, Y. Learning-Based Task Offloading for Delay-Sensitive Applications in Dynamic Fog Networks. IEEE Trans. Veh. Technol. 2019, 68, 11399–11403.

- Zhou, Z.; Liao, H.; Zhao, X.; Ai, B.; Guizani, M. Reliable Task Offloading for Vehicular Fog Computing Under Information Asymmetry and Information Uncertainty. IEEE Trans. Veh. Technol. 2019, 68, 8322–8335.

- Zhou, Z.; Liu, P.; Feng, J.; Zhang, Y.; Mumtaz, S.; Rodriguez, J. Computation Resource Allocation and Task Assignment Optimization in Vehicular Fog Computing: A Contract-Matching Approach. IEEE Trans. Veh. Technol. 2019, 68, 3113–3125.

- Zhou, Z.; Liao, H.; Wang, X.; Mumtaz, S.; Rodriguez, J. When Vehicular Fog Computing Meets Autonomous Driving: Computational Resource Management and Task Offloading. IEEE Netw. 2020, 34, 70–76.

- Zhao, J.; Kong, M.; Li, Q.; Sun, X. Contract-Based Computing Resource Management via Deep Reinforcement Learning in Vehicular Fog Computing. IEEE Access 2020, 8, 3319–3329.

- Li, H.; Li, X.; Wang, W. Joint optimization of computation cost and delay for task offloading in vehicular fog networks. Trans. Emerg. Telecommun. Technol. 2020, 31, e3818.

- Wu, Q.; Ge, H.; Liu, H.; Fan, Q.; Li, Z.; Wang, Z. A Task Offloading Scheme in Vehicular Fog and Cloud Computing System. IEEE Access 2020, 8, 1173–1184.

- Tian, S.; Deng, X.; Chen, P.; Pei, T.; Oh, S.; Xue, W. A dynamic task offloading algorithm based on greedy matching in vehicle network. Ad Hoc Netw. 2021, 123, 102639.

- Elhoseny, M.; El-Hasnony, I.M.; Tarek, Z. Intelligent energy aware optimization protocol for vehicular adhoc networks. Sci. Rep. 2023, 13, 9019.

- Naeem, A.; Rizwan, M.; Alsubai, S.; Almadhor, A.; Akhtaruzzaman, M.; Islam, S.; Rahman, H. Enhanced clustering based routing protocol in vehicular ad-hoc networks. IET Electr. Syst. Transp. 2023, 13, e12069.

- Karabulut, M.A.; Shah, A.F.M.S.; Ilhan, H.; Pathan, A.S.K.; Atiquzzaman, M. Inspecting VANET with Various Critical Aspects—A Systematic Review. Ad Hoc Netw. 2023, 150, 103281.

- Kumar, S.; Raw, R.S.; Bansal, A.; Singh, P. UF-GPSR: Modified geographical routing protocol for flying ad-hoc networks. Trans. Emerg. Telecommun. Technol. 2023, 34, e4813.

- Arafat, M.Y.; Moh, S. A Q-Learning-Based Topology-Aware Routing Protocol for Flying Ad Hoc Networks. IEEE Internet Things J. 2022, 9, 1985–2000.

- Zhang, J.; Dai, L.; He, Z.; Jin, S.; Li, X. Performance Analysis of Mixed-ADC Massive MIMO Systems Over Rician Fading Channels. IEEE J. Sel. Areas Commun. 2017, 35, 1327–1338.

- Zhang, Z.; Xu, K.; Gan, C. The Vehicle-to-Vehicle Link Duration Scheme Using Platoon-Optimized Clustering Algorithm. IEEE Access 2019, 7, 78584–78596.

- Mohamed, Z.E. Using the artificial neural networks for prediction and validating solar radiation. J. Egypt. Math. Soc. 2019, 27, 47.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Zappone, A.; Di Renzo, M.; Debbah, M. Wireless networks design in the era of deep learning: Model-based, AI-based, or both? IEEE Trans. Commun. 2019, 67, 7331–7376.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015.

- Alvi, A.N.; Javed, M.A.; Hasanat, M.H.A.; Khan, M.B.; Saudagar, A.K.J.; Alkhathami, M.; Farooq, U. Intelligent Task Offloading in Fog Computing Based Vehicular Networks. Appl. Sci. 2022, 12, 4521.

| Ref. | Scenario | Proposed Technique | Design Objective |
|---|---|---|---|
| [23] | Vehicular fog computing | Online learning-based offloading | 1. Resources are shared between fog nodes while keeping computation costs low 2. Task allocation decisions and spectrum scheduling are used to minimize offloading delay |
| [24] | Vehicular fog computing | Convex–concave optimization and price-matching task offloading | 1. Servers are assigned efficiently via the convex–concave optimization approach while maximizing the anticipated utility of the operator 2. The total delay of the network is minimized using a price-matching solution |
| [25] | Vehicular fog computing | Contract-theoretic modeling and stable matching algorithm | 1. Based on contract-theoretic modeling, a mechanism is proposed to maximize the projected utility of the base station (BS) 2. A stable matching algorithm is used for task assignment between user equipment and vehicles |
| [26] | Vehicular fog computing | Contract theory and learning-based matching | 1. A contract-theoretic computing resource management mechanism is proposed 2. A learning-based matching task offloading method is also proposed |
| [27] | Multi-vehicular fog computing | Deep reinforcement learning | 1. Resources are allocated while reducing the complexity of the system 2. A queuing model is proposed to solve the collision problem caused by simultaneous offloading |
| [28] | Multi-vehicular fog computing | Lagrange dual approach | 1. A low-complexity algorithm is proposed to optimize the offloading ratios, the selection of vehicles and the consumption of the system |
| [29] | Vehicular fog and cloud computing | Semi-Markov decision process | 1. The task offloading problem is formulated as a semi-Markov decision process 2. A value iteration algorithm is proposed to maximize the total long-term reward of the system |
| [30] | Vehicular fog computing | Stable matching | 1. Vehicle mobility is considered during offloading 2. The Kuhn–Munkres algorithm is used to find a stable match |

| ANN Model | Features Used | Data Range |
|---|---|---|
| Link Connectivity Time | Vehicle speed | 30–120 km/h |
| | Distance between source and computing vehicles | 5–100 m |
| | Channel gain | Rayleigh distribution |
| Computation Availability Time | Number of tasks | Poisson distribution |
| | Task size | 0.5–16 MB |

| Parameter | Value |
|---|---|
| Task size | 0.5–16 MB |
| Processing density of vehicles’ CPUs | 500 cycles/bit |
| Processing density of fog node’s CPU | 2000 cycles/bit |
| Frequency C of vehicles’ CPUs | 2 × 10⁹ cycles/s |
| Frequency C of fog node’s CPU | 8 × 10⁹ cycles/s |
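As a worked example of what the CPU parameters above imply, assume the common computing-delay model in which processing D bits at ρ cycles per bit on a CPU of frequency C takes T = Dρ/C. The symbol ρ and the conversion 1 MB = 8 × 10⁶ bits are assumptions. A 1 MB task would then take:

$$
T_{\text{veh}} = \frac{D\,\rho_{\text{veh}}}{C_{\text{veh}}} = \frac{(8\times10^{6})(500)}{2\times10^{9}} = 2\ \text{s},
\qquad
T_{\text{fog}} = \frac{D\,\rho_{\text{fog}}}{C_{\text{fog}}} = \frac{(8\times10^{6})(2000)}{8\times10^{9}} = 2\ \text{s}.
$$

Because this model is linear in D, the largest 16 MB task would take 32 s on either CPU under these assumptions, before any queuing or transmission delay.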

| Error | One-Second-Ahead Prediction | Two-Seconds-Ahead Prediction | Three-Seconds-Ahead Prediction |
|---|---|---|---|
| RMSE | 3.9839 | 6.0147 | 7.4742 |
| MABE | 2.3066 | 3.2745 | 4.4182 |
| R² | 0.9838 | 0.9631 | 0.9430 |
| MAPE | 5.7767 | 8.8480 | 11.4301 |

| Error | One-Second-Ahead Prediction | Two-Seconds-Ahead Prediction | Three-Seconds-Ahead Prediction |
|---|---|---|---|
| RMSE | 1.5859 | 2.5756 | 3.2739 |
| MABE | 0.7570 | 1.2707 | 1.6789 |
| R² | 0.9746 | 0.9331 | 0.8919 |
| MAPE | 8.8964 | 14.8086 | 18.3649 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alfakeeh, A.S.; Javed, M.A. Intelligent Data-Enabled Task Offloading for Vehicular Fog Computing. Appl. Sci. 2023, 13, 13034. https://doi.org/10.3390/app132413034
