Abstract

With the application of graph neural networks (GNNs) to the communication physical layer, GNN-based channel decoding algorithms have become a research hotspot. Compared with traditional decoding algorithms, GNN-based channel decoding algorithms achieve better performance. GNNs are stable and can handle large-scale problems; they also generalize well to different network settings. Compared with deep learning-based channel decoding algorithms, GNN-based channel decoding algorithms avoid a large number of multiplications between learned weights and messages. However, aggregating over the edges and nodes of a GNN requires many parameters, which in turn requires a large amount of memory. In this work, we propose GNN-based channel decoding algorithms with shared parameters, called the shared graph neural network (SGNN). For BCH codes and LDPC codes, the SGNN decoding algorithm needs only a quarter or half of the parameters, at the cost of a slightly degraded bit error ratio (BER) performance.

1. Introduction

Deep learning (DL) technology has become increasingly crucial in many disciplines, especially in image processing [1,2,3], wireless communication [4,5,6], speech recognition [7], and other fields. In particular, DL has been applied to multiple-input multiple-output (MIMO) channel state information (CSI) feedback [8], intelligent reflecting surfaces [9], and channel decoding algorithms [10,11,12,13,14]. Although the bit error ratio (BER) performance is improved, DL brings higher storage complexity and computational complexity compared with traditional decoding algorithms.

Tanner graphs and factor graphs are commonly used in decoding algorithms for low-density parity check (LDPC), Hamming, and BCH codes. Graph theory is a very broad branch of mathematics that is applicable to real-world problems. In contrast to DL-based decoding algorithms, which suffer from the curse of dimensionality [15] as the block code length increases, the GNN [16,17,18] is an extension of existing neural network methods that passes messages over a graph and adds aggregation networks. A GNN can find a suitable model by training on graph-structured data, including edge data and node data. The model complexity is independent of the graph size, and the trained model is easily applicable to arbitrarily large graphs. GNNs offer effectiveness, stability, and strong generalization ability.

A special heterogeneous graph with different node types and edge types is proposed in ref. [19]. A GNN-based scalable polar code construction is proposed in ref. [20]: the polar code is mapped to a unique heterogeneous graph called the polar-code-construction message-passing (PCCMP) graph, which represents the successive cancellation list decoding algorithm, and an iterative message passing algorithm on this graph searches for the graph with the minimum frame error ratio (FER) or BER for a suitable polar code. A GNN-based channel decoding algorithm that adds weights to Tanner graph edges for short block codes is proposed in ref. [21]; this algorithm is a graph-theoretic extension of the neural belief propagation (NBP) algorithm. A GNN structure for BCH codes and LDPC codes is proposed in ref. [22]. This network structure is suitable for codewords of any length, and the training is not limited by the curse of dimensionality. The BER performance of the network with eight iterations exceeds that of weighted BP with 20 iterations. However, it uses a large number of multilayer perceptron (MLP) networks to aggregate messages in each iteration, which requires considerable computation and storage resources. Such complexity is very challenging to implement on resource-constrained communication hardware, which is the problem this paper attempts to solve.

In this work, model compression techniques are applied to GNN-based channel decoding algorithms. We propose methods to reduce the number of parameters (correction factors) of the GNN-based decoder: replacing directed edges with undirected edges, and sharing weights among the updates of factor nodes, variable nodes, factor-to-variable node messages, and variable-to-factor node messages. The methods are applied to BCH and LDPC decoders. Our main contribution is a low-complexity GNN-based channel decoding algorithm. The novelty of this paper can be summarized as follows:

- We share the same weights in the GNN-based channel decoding algorithm, specifically in the updates of factor nodes, variable nodes, factor-to-variable node messages, and variable-to-factor node messages.

- Based on different sharing schemes, we propose two shared GNN (SGNN)-based channel decoding algorithms to balance BER performance and storage complexity.

- Furthermore, we apply the SGNN-based channel decoding algorithms to BCH and LDPC decoders, which reduces the storage resources required by GNN-based channel decoders at the cost of a slight decrease in BER performance.

The rest of this paper is organized as follows: GNN-based channel decoding is described briefly in Section 2. In Section 3, the SGNN-based channel decoding algorithms are proposed. Section 4 presents the performance evaluation and complexity analysis, which systematically compares the SGNN- and GNN-based channel decoding algorithms. Section 5 discusses open issues and future research directions. Section 6 concludes the paper.

2. Preliminary

LDPC, BCH, and Hamming codes are all linear block codes, and the Tanner graphs of these codes are bipartite graphs. We denote these codes by $(N, K)$, where $N$ denotes the length of the codewords and $K$ denotes the number of information bits.

Suppose that, in a communication system, binary phase shift keying (BPSK) or quadrature phase shift keying (QPSK) modulation is applied after the information bits are encoded. Assume that the received message is $\mathbf{y} = \mathbf{x} + \mathbf{n}$ over the additive white Gaussian noise (AWGN) channel, where $\mathbf{x}$ is the vector of transmitted complex symbols and $\mathbf{n} \sim \mathcal{CN}(\mathbf{0}, \sigma^2 \mathbf{I})$ is the complex AWGN noise. $M$ represents the number of transmitted symbols and $B$ the number of bits per symbol: in BPSK modulation, $B = 1$ and $M$ equals the length of the transmitted codeword, whereas in QPSK modulation, $B = 2$ and $M$ is half that length. $\mathbf{I}$ is the identity matrix of size $M$-by-$M$, $\sigma^2$ is the variance of the channel noise, and $N_0$ is the noise spectral density. The log-likelihood ratio (LLR) of the $v$-th bit is computed as in ref. [23] from the received symbol, the set $\mathcal{X}$ of constellation points with unit energy, and the subsets of $\mathcal{X}$ whose $i$-th bit equals 0 or 1. For BPSK modulation, the LLR in [23] reduces to a scaled version of the received value.

We use $E_b/N_0$ as the signal-to-noise ratio, where $E_b$ is the energy per information bit and $y_v$ is the $v$-th value of the received message.
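For reference, a standard form of the bit-wise LLR over the AWGN channel is sketched below. The sign convention (bit 0 in the numerator), the BPSK mapping $0 \mapsto +1$, $1 \mapsto -1$, and the symbols $\mathcal{X}_i^{b}$ and $R$ are assumptions made here for illustration; ref. [23] may use a different convention.

```latex
% Bit-wise LLR for a unit-energy constellation \mathcal{X}, where
% \mathcal{X}_i^{b} is the subset of points whose i-th bit equals b:
\ell_i = \ln
  \frac{\sum_{x \in \mathcal{X}_i^{0}} \exp\!\left(-\lvert y - x \rvert^{2} / N_0\right)}
       {\sum_{x \in \mathcal{X}_i^{1}} \exp\!\left(-\lvert y - x \rvert^{2} / N_0\right)}

% BPSK special case (0 -> +1, 1 -> -1), using unit symbol energy and
% code rate R = K/N, so that 1/N_0 = R \, E_b/N_0:
\ell_v = \frac{4\, y_v}{N_0} = 4 R \, \frac{E_b}{N_0} \, y_v
```

Under these assumptions the BPSK LLR is simply the received value scaled by a factor that grows with the code rate and with $E_b/N_0$.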

GNN-Based Channel Decoding

Similar to the Tanner graph, the GNN-based channel decoding of ref. [22] has two types of nodes, namely, variable nodes (VNs) and factor nodes (FNs). Each VN corresponds to a column of the parity-check matrix $\mathbf{H}$, and each FN corresponds to a row of $\mathbf{H}$, playing the role of the check node in the BP algorithm. There are $N$ VNs, denoted by $\mathcal{V}$, and $N-K$ FNs, denoted by $\mathcal{F}$. $\mathcal{E}$ denotes the set of all edges in the Tanner graph, i.e., all the 1s in $\mathbf{H}$. According to graph theory, we assign a value and an attribute to each node and each edge in the graph, similar to the training parameters in the weighted BP and min-sum algorithms [12,24]. We use $\mathbf{h}_{v_i}$ and $\mathbf{h}_{f_j}$ to denote the node values of VN $v_i$ and FN $f_j$, respectively, which are $N_V$-dimensional vectors. $\mathbf{m}_{v_i \to f_j}$ and $\mathbf{m}_{f_j \to v_i}$ denote the messages assigned to each directed edge, so the two directions of an edge carry different message values. In order to improve the BER performance, trainable attributes are added to each directed edge and each node, similar to model-driven training weights. We use $\mathbf{g}_{v_i}$ and $\mathbf{g}_{f_j}$ to denote the node attributes of the VNs and FNs, respectively, one per node. $\mathbf{g}_{v_i \to f_j}$ and $\mathbf{g}_{f_j \to v_i}$ denote the directed-edge attributes, one per direction of each edge, for a total of $2|\mathcal{E}|$ edge attributes.
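The correspondence between the parity-check matrix and the graph can be sketched in a few lines. The matrix below is a common (7,4) Hamming parity-check matrix chosen for illustration; it is an assumption and need not coincide with the matrix used in the paper's figures. The helper name `tanner_edges` is likewise hypothetical.

```python
import numpy as np

# Assumed (7,4) Hamming parity-check matrix (illustrative only).
H = np.array([
    [1, 1, 0, 1, 1, 0, 0],
    [1, 0, 1, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 1],
], dtype=np.int64)


def tanner_edges(H):
    """Return (fn_idx, vn_idx): one index pair per 1 in H, i.e., per edge."""
    fn_idx, vn_idx = np.nonzero(H)
    return fn_idx, vn_idx


fn_idx, vn_idx = tanner_edges(H)
num_fn, num_vn = H.shape          # rows are FNs, columns are VNs
num_edges = fn_idx.size           # number of 1s in H

# VNs attached to the first factor node
neighbors_of_fn0 = vn_idx[fn_idx == 0].tolist()

print(num_vn, num_fn, num_edges)  # 7 3 12
print(neighbors_of_fn0)           # [0, 1, 3, 4]
```

Each undirected edge gives rise to two directed messages, one per direction, which is why the directed-edge attributes are twice as numerous as the edges.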

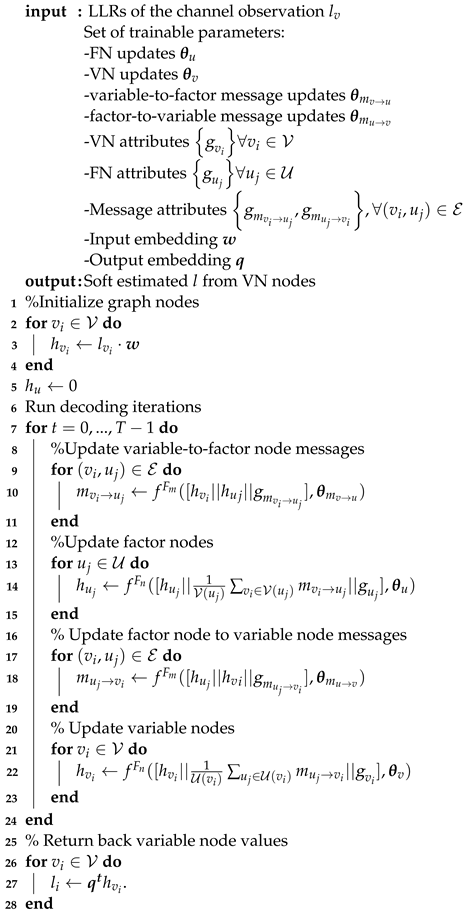

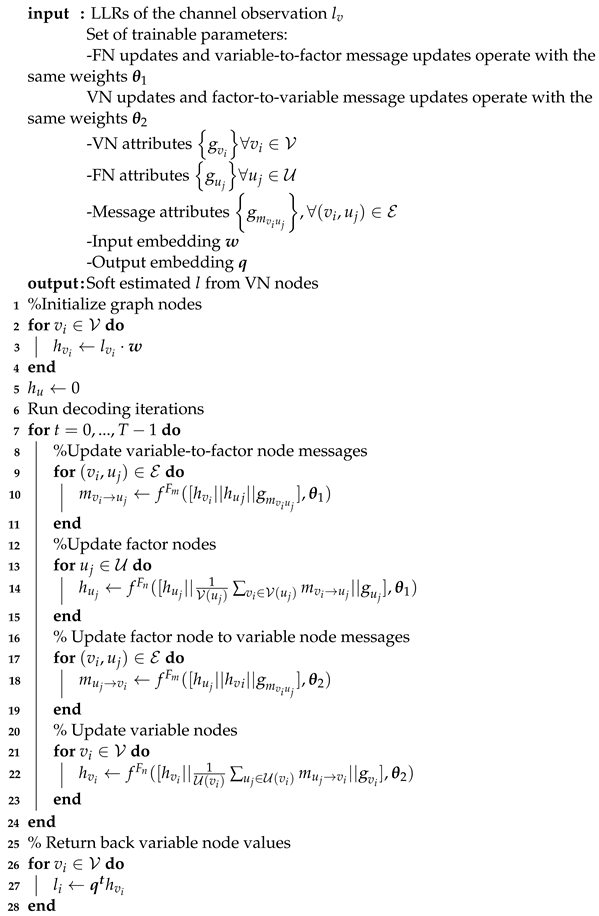

As shown in Algorithm 1, we first initialize the FN and VN values and then run the decoding iterations until the maximum number of iterations $T$ is reached. The update function for the directed-edge messages and the update functions for the FN and VN values are trained in the GNN-based channel decoding algorithm. Each iteration of the GNN-based channel decoding algorithm consists of the following four steps:

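- Step 1:

- From the VN to FN, the message is passed in the graph as $\mathbf{m}_{v_i \to f_j} = f^{\theta_{V \to F}}\left(\left[\mathbf{h}_{v_i} \,\|\, \mathbf{h}_{f_j} \,\|\, \mathbf{g}_{v_i \to f_j}\right]\right)$, where $\theta_{V \to F}$ denotes the trainable parameters of the VN-to-FN message map, and the symbol $\|$ denotes the concatenation of tensors. The function $f^{\theta}$ maps its input to high-dimensional features by multiplying it with weights. Simple multilayer perceptrons (MLPs) are used to expand the dimension.

- Step 2:

- Update the FN value as $\mathbf{h}'_{f_j} = f^{\theta_{F}}\left(\left[\mathbf{h}_{f_j} \,\|\, \bigoplus_{v_i \in \mathcal{N}(f_j)} \mathbf{m}_{v_i \to f_j} \,\|\, \mathbf{g}_{f_j}\right]\right)$, where $\theta_{F}$ denotes the parameters of the FN update, $\mathcal{N}(f_j)$ is the set of VNs connected to $f_j$, and $\bigoplus$ is an aggregation over the incoming messages, similar to that of the min-sum algorithm [24]. Other aggregation operations may also be used. The updated FN value is given by $\mathbf{h}'_{f_j}$.

- Step 3:

- From the FN to VN, the message is passed in the graph as $\mathbf{m}_{f_j \to v_i} = f^{\theta_{F \to V}}\left(\left[\mathbf{h}_{f_j} \,\|\, \mathbf{h}_{v_i} \,\|\, \mathbf{g}_{f_j \to v_i}\right]\right)$, where $\theta_{F \to V}$ denotes the trainable parameters of the edge in the other direction.

- Step 4:

- Update the VN value as $\mathbf{h}'_{v_i} = f^{\theta_{V}}\left(\left[\mathbf{h}_{v_i} \,\|\, \bigoplus_{f_j \in \mathcal{N}(v_i)} \mathbf{m}_{f_j \to v_i} \,\|\, \mathbf{g}_{v_i}\right]\right)$, where $\theta_{V}$ denotes the parameters of the VN update and $\mathcal{N}(v_i)$ is the set of FNs connected to $v_i$. The decoding result is also output from this step. Other aggregation operations may also be used, and the updated VN value is given by $\mathbf{h}'_{v_i}$.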

Algorithm 1: GNN-based channel decoding algorithm in ref. [22].

Repeat Steps 1, 2, 3, and 4 until the maximum number of iterations is reached. The recovered binary vector is then obtained by applying a hard threshold to the soft output read out from the final VN values.

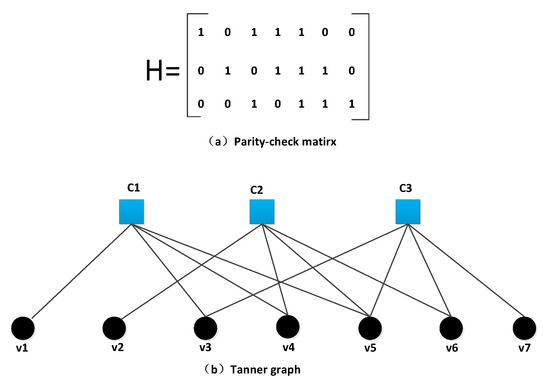

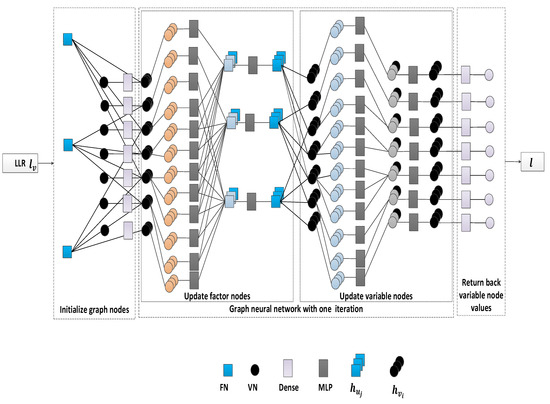

Next, we use an example to illustrate the working principle of the GNN-based channel decoding algorithm. A parity-check matrix $\mathbf{H}$ of the (7,4) code is shown in Figure 1a, and the Tanner graph corresponding to this matrix is shown in Figure 1b, similar to that in ref. [25]. There are three FNs and seven VNs. Figure 2 shows the detailed process of converting the Tanner graph into the GNN-based channel decoding algorithm. After BPSK modulation and transmission over the AWGN channel, we receive the LLR, whose dimension is $N_\text{batch} \times 7$, where $N_\text{batch}$ denotes the batch size. First, we initialize the parameters of the GNN-based channel decoding algorithm and then initialize the graph node values: the FN values, whose dimension is $N_\text{batch} \times 3 \times 20$, and the VN values, which are obtained from the LLR through an input embedding implemented as a dense network and whose dimension is $N_\text{batch} \times 7 \times 20$. The number of dimensions of the vertex embeddings is 20.

Figure 1.

The Tanner graph corresponding to the parity-check matrix: (a) parity-check matrix; (b) Tanner graph.

Figure 2.

GNN-based channel decoding algorithm for the (7,4) code with one iteration.

The iterative part of the GNN-based channel decoding algorithm is shown in Figure 2; it consists of the update of the FN values followed by the update of the VN values. The values of the FNs and VNs are first gathered into edge features; this code has 12 edges. After appending the message attribute, we compute the messages for all edges using MLPs. For this code, each MLP is implemented as a two-layer dense network whose layers contain 40 and 20 neurons, respectively. The messages are then reduced per receiving vertex by message aggregation. After aggregation, the messages have dimension 3 × 20 per batch element, and a node attribute is appended. After concatenating this information, a new embedding is calculated by a two-layer dense MLP, which gives the updated FN values. The next step is to update the VN values. The update process of the VN values is similar to that of the FN values; the only difference is that the feature information after aggregation has dimension 7 × 20 per batch element. The updated VN values carry the information recovered after one iteration. The iteration is repeated until the maximum number of iterations is reached. The recovered binary vector is computed by applying a hard threshold to the recovered soft output $\mathbf{l}$, which is obtained from the final VN values through an output embedding implemented as a dense network with one neuron.
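The data flow of this example can be sketched in NumPy as follows. This is a minimal sketch with random, untrained weights, not the reference implementation: the parity-check matrix, the tanh activation, the mean aggregation, the zero initialization of the FN values, the absence of bias terms, and the sign convention of the hard threshold are all assumptions, and node and edge attributes are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 20                                    # embedding dimension of the example

# Assumed (7,4) parity-check matrix with 12 ones (illustrative only).
H = np.array([[1, 1, 0, 1, 1, 0, 0],
              [1, 0, 1, 1, 0, 1, 0],
              [0, 1, 1, 1, 0, 0, 1]])
fn_idx, vn_idx = np.nonzero(H)            # one (FN, VN) index pair per edge
num_fn, num_vn = H.shape


def mean_matrix(idx, num_nodes):
    """Row-normalized incidence matrix: averages edge messages per receiver."""
    A = np.zeros((num_nodes, idx.size))
    A[idx, np.arange(idx.size)] = 1.0
    return A / A.sum(axis=1, keepdims=True)


A_fn = mean_matrix(fn_idx, num_fn)        # (3, 12)
A_vn = mean_matrix(vn_idx, num_vn)        # (7, 12)


def make_mlp(d_in):
    """Two dense layers with 40 and 20 neurons; untrained weights, no bias."""
    return rng.normal(0, 0.1, (d_in, 40)), rng.normal(0, 0.1, (40, D))


def mlp(params, x):
    w1, w2 = params
    return np.tanh(x @ w1) @ w2           # tanh is an assumed activation


theta = {name: make_mlp(2 * D) for name in ("v2f", "f", "f2v", "v")}
w_in = rng.normal(0, 0.1, (1, D))         # input embedding, 1 -> 20
w_out = rng.normal(0, 0.1, (D, 1))        # output embedding, 20 -> 1


def cat(a, b):
    return np.concatenate([a, b], axis=-1)


def gnn_iteration(h_v, h_f):
    m_v2f = mlp(theta["v2f"], cat(h_v[:, vn_idx], h_f[:, fn_idx]))  # Step 1
    h_f = mlp(theta["f"], cat(h_f, A_fn @ m_v2f))                   # Step 2
    m_f2v = mlp(theta["f2v"], cat(h_f[:, fn_idx], h_v[:, vn_idx]))  # Step 3
    h_v = mlp(theta["v"], cat(h_v, A_vn @ m_f2v))                   # Step 4
    return h_v, h_f


batch = 4
llr = rng.normal(0, 1, (batch, num_vn))   # stand-in for channel LLRs
h_v = llr[..., None] @ w_in               # (batch, 7, 20)
h_f = np.zeros((batch, num_fn, D))        # (batch, 3, 20), assumed zero init
h_v, h_f = gnn_iteration(h_v, h_f)
soft = (h_v @ w_out)[..., 0]              # (batch, 7) soft output
c_hat = (soft > 0).astype(int)            # hard threshold, assumed convention

print(h_v.shape, h_f.shape, c_hat.shape)
```

Because every map takes a 40-dimensional input and returns a 20-dimensional output when attributes are unused, all four MLPs have the same shape, which is what makes weight sharing between them possible.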

3. SGNN-Based Channel Decoding Algorithm

In order to reduce the computational complexity and the required storage resources of the GNN-based channel decoding algorithm, the number of model parameters and multiplications needs to be reduced. The parameters of the GNN-based channel decoding algorithm include the node attributes, the edge attributes, and the weights of the mappings used in each iteration. Each node has its own attribute, and each directed edge also has its own attribute. In order to halve the number of edge attributes, we replace the attributes of the two directions of an edge with a single undirected attribute, that is, each edge has only one attribute. Four mappings are used per iteration: the variable-to-factor node message update, the factor node update, the factor-to-variable node message update, and the variable node update. In order to reduce the parameters of the mappings, we share the weights among them. The choice of which parameters to share directly affects the performance. We propose two different sharing methods, which are introduced below.

3.1. SGNN-Based Channel Decoding Algorithm 1

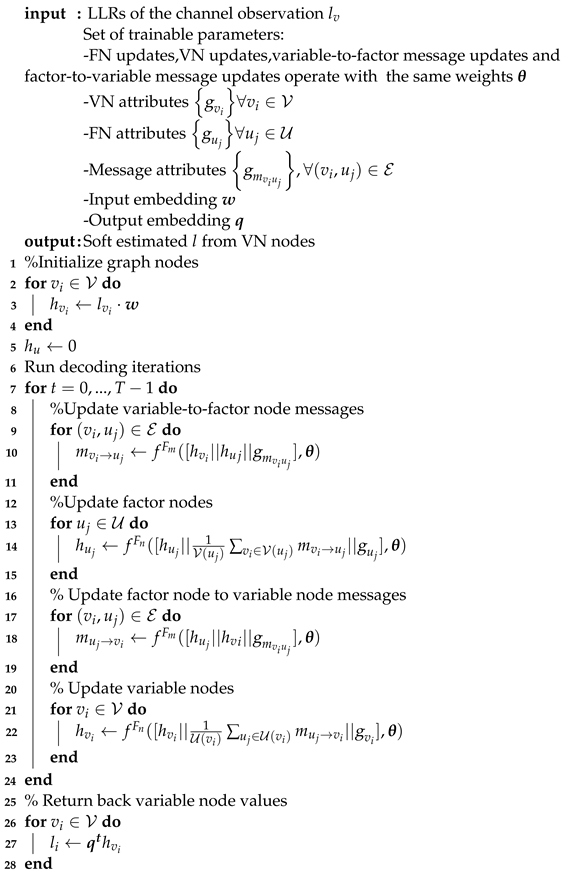

In order to minimize the number of parameters of the GNN-based channel decoding algorithm, we use a single set of weights for all four mappings. At the same time, we change the directed graph to an undirected graph, so that the two directions of an edge share the same assigned edge attribute. This sharing method is called SGNN1 and is given in Algorithm 2.

Algorithm 2: The proposed shared GNN-based channel decoding algorithm 1.

3.2. SGNN-Based Channel Decoding Algorithm 2

In order to balance the number of parameters and the BER performance of the GNN-based channel decoding algorithm, we use one set of weights for the FN update map and the variable-to-factor message update map, and another set of weights for the VN update map and the factor-to-variable message update map. At the same time, we change the directed graph to an undirected graph, so that the two directions of an edge share the same assigned edge attribute. This sharing method is called SGNN2 and is given in Algorithm 3.

Algorithm 3: The proposed shared GNN-based channel decoding algorithm 2.
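The effect of the two sharing schemes on the parameter count can be checked with a short calculation. The sketch below assumes 20-dimensional embeddings, two-layer MLPs with 40 and 20 neurons, no bias terms, and no node or edge attributes; the no-bias choice is an assumption made here because it reproduces the totals of 9640, 4840, and 2440 reported in Section 4.1. The helper name `mlp_params` is hypothetical.

```python
def mlp_params(d_in, hidden=(40, 20), use_bias=False):
    """Number of trainable weights in a dense MLP with the given layer widths."""
    total, prev = 0, d_in
    for width in hidden:
        total += prev * width + (width if use_bias else 0)
        prev = width
    return total


D = 20                                 # embedding dimension
per_mlp = mlp_params(2 * D)            # every map sees a 40-dimensional input
embed = 1 * D + D * 1                  # input (1 -> 20) and output (20 -> 1) embeddings

# Number of distinct MLPs kept by each scheme:
#   GNN   : one per map (V->F message, FN update, F->V message, VN update)
#   SGNN2 : one shared by {V->F, FN}, one shared by {F->V, VN}
#   SGNN1 : one shared by all four maps
distinct_mlps = {"GNN": 4, "SGNN2": 2, "SGNN1": 1}
counts = {name: k * per_mlp + embed for name, k in distinct_mlps.items()}

print(per_mlp)   # 2400
print(counts)    # {'GNN': 9640, 'SGNN2': 4840, 'SGNN1': 2440}
```

Under these assumptions the count depends only on the embedding and MLP sizes, not on the code, which is consistent with the same totals being reported for codes of different lengths.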

4. Simulation Results and Discussions

We investigated the performance of the SGNN1- and SGNN2-based channel decoding algorithms for BCH codes and LDPC codes. The hyperparameters of the simulation are shown in Table 1. As in ref. [22], node and edge attributes are not used. For each element of the training-parameter list, we run SGD updates for a pre-defined number of training iterations.

Table 1.

Parameters of the simulation.

4.1. BCH Codes

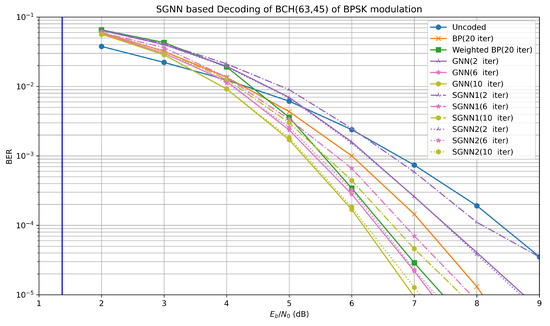

First, the performance of the proposed SGNN1- and SGNN2-based channel decoding algorithms is compared with that of the GNN-based algorithm [22]; eight iterations are used, and the other hyperparameters are provided in Table 1. Figure 3 shows the BER performances of the Uncoded, BP, Weighted BP, GNN, SGNN1, and SGNN2-based channel decoding algorithms for the BCH (63,45) code. The blue vertical line is the Shannon limit for the code rate of the BCH code. Although a fixed number of iterations is used in training, the resulting model parameters can be tested with a variable number of iterations, which is a major advantage of the GNN, similar to hypernetwork technology [26]. A GNN-based channel decoder with only three iterations achieves almost the same performance as the traditional BP decoder with twenty iterations. The SGNN1-based channel decoding algorithm with only four iterations achieves an equivalent performance, as does the SGNN2-based channel decoding algorithm with only four iterations. Overall, the BER performance of the SGNN1 algorithm degrades by 0.75 dB compared to the GNN-based channel decoding algorithm with the same number of iterations, and the BER performance of the SGNN2-based channel decoding algorithm degrades by 0.1 dB. However, SGNN1 and SGNN2 use only 2440 and 4840 parameters, respectively, compared with 9640 for the GNN; that is, the number of parameters is reduced to about a quarter and a half of that of the GNN, as listed in Table 2. As the number of iterations increases, the BER performance improves at first, but beyond a certain number of iterations it degrades, so an early stopping mechanism is necessary. The number of trainable parameters in ref. [12] is 2–3 orders of magnitude larger than that of our decoder. However, the number of multiplications in our decoder is much larger than that in traditional decoding algorithms.
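One common way to realize an early stopping mechanism is to stop as soon as the hard decision satisfies all parity checks. The sketch below illustrates only this stopping rule; it is not the authors' mechanism. A simple bit-flipping step stands in for a decoder iteration, and the parity-check matrix and the function names are assumptions introduced for illustration.

```python
import numpy as np

# Assumed (7,4) Hamming parity-check matrix (illustrative only).
H = np.array([[1, 1, 0, 1, 1, 0, 0],
              [1, 0, 1, 1, 0, 1, 0],
              [0, 1, 1, 1, 0, 0, 1]], dtype=np.int64)


def bit_flip_step(c, H):
    """Stand-in for one decoder iteration: flip the bit that takes part
    in the largest number of unsatisfied parity checks."""
    syndrome = (H @ c) % 2
    votes = syndrome @ H
    c = c.copy()
    c[np.argmax(votes)] ^= 1
    return c


def decode_with_early_stop(c_init, H, step, max_iters=20):
    """Iterate until the syndrome is zero or max_iters is reached."""
    c = c_init.copy()
    iters = 0
    while iters < max_iters and np.any((H @ c) % 2):
        c = step(c, H)
        iters += 1
    return c, iters


# All-zero codeword with a single error in position 3.
received = np.zeros(7, dtype=np.int64)
received[3] = 1

decoded, used = decode_with_early_stop(received, H, bit_flip_step)
print(decoded.tolist(), used)  # [0, 0, 0, 0, 0, 0, 0] 1
```

In a GNN-based decoder the same check would be applied to the hard decision produced after each iteration, so that decoding stops before additional iterations can degrade the result.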

Figure 3.

BER performance of GNN [22] and SGNN for BCH (63,45) code of BPSK modulation.

Table 2.

Comparison of parameters in GNN [22] and SGNN-based channel decoding algorithms.

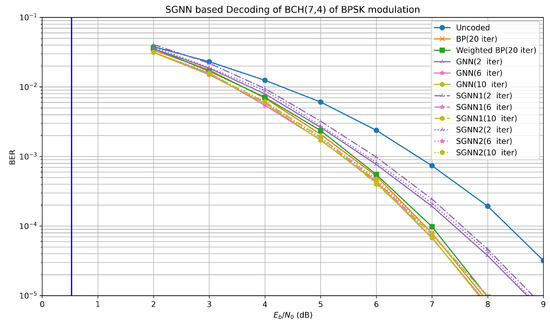

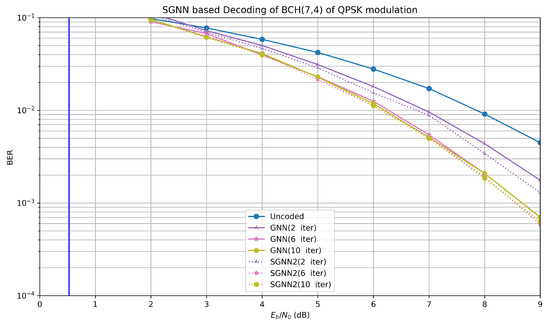

Figure 4 and Figure 5 show the BER performances of the Uncoded, BP, Weighted BP, GNN, SGNN1, and SGNN2-based channel decoding algorithms for the BCH (7,4) code with BPSK modulation and QPSK modulation, respectively. The GNN-based channel decoding algorithm with only four iterations achieves almost the same BER performance as the traditional BP decoder with twenty iterations. Overall, the BER performance of the SGNN1-based channel decoding algorithm degrades by 0.15 dB compared to the GNN-based channel decoding algorithm with the same number of iterations, and the BER performance of the SGNN2-based channel decoding algorithm degrades by 0.05 dB. However, SGNN1 and SGNN2 use only 2440 and 4840 parameters, respectively, compared with 9640 for the GNN. BCH (7,4) has the same number of parameters as BCH (63,45), and the parameters are reduced to about a quarter and a half, respectively, as listed in Table 2. In our experience, the weights trained for BCH (63,45) do not achieve the desired BER performance when used for BCH (7,4).

Figure 4.

BER performance of GNN [22] and SGNN for BCH (7,4) code of BPSK modulation.

Figure 5.

BER performance of GNN [22] and SGNN for BCH (7,4) code of QPSK modulation.

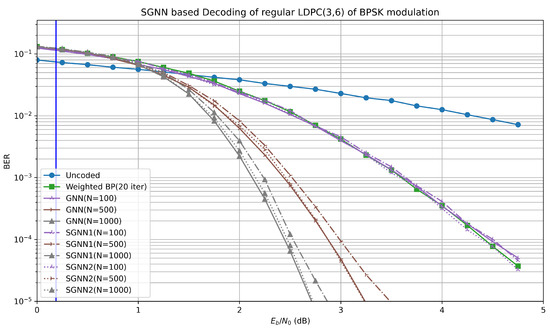

4.2. Regular LDPC Code

In order to demonstrate the wider applicability of the GNN, we compare the GNN- and SGNN-based channel decoding algorithms for the regular LDPC code (v = 3, c = 6), with the training parameter T set to 10 and the codeword length N set to 100. In order to achieve fast convergence of training, additional neural network layers are added to the MLPs; the result is shown in Figure 6. The blue vertical line is the Shannon limit for the code rate of the regular LDPC code. The SGNN1-based channel decoding algorithm performs slightly better than the weighted BP algorithm with twenty iterations. In addition, when , the BER performances of the SGNN1- and SGNN2-based channel decoding algorithms are basically the same as that of the GNN-based channel decoding algorithm. As the length of the code increases, the BER performance gap between the SGNN algorithm and the GNN-based channel decoding algorithm gradually becomes larger, with a difference of about 0.2 dB, while the BER performance gain of the GNN-based channel decoding algorithm over the BP algorithms tends to diminish with increasing N. The number of weights, however, is reduced from 28,700 to 32,016,352, as listed in Table 2.

Figure 6.

BER performance of GNN [22] and SGNN for regular LDPC (3,6) code of BPSK modulation.

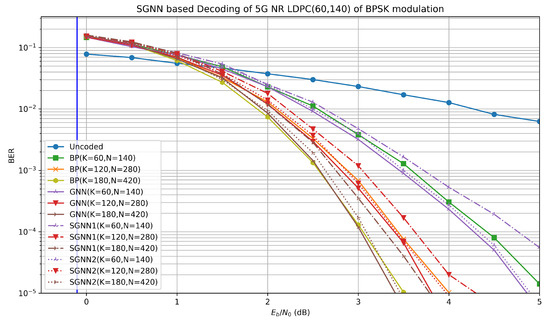

4.3. 5G NR LDPC Code

In order to further demonstrate the applicability of the GNN, we compare the GNN with the SGNN for the 5G NR LDPC code [27], with the training parameter T set to 10 and the codeword length , . The blue vertical line is the Shannon limit for the code rate of the 5G NR LDPC codes. As the 5G NR LDPC code requires rate-matching, the codewords tested all have the same code rate, such as , , , . As shown in Figure 7, the same weights can be reused for longer codewords; this flexibility is one of the advantages of the GNN, since the decoder can be trained on short LDPC codewords and tested on different codewords. Note, however, that when the code rates used in training and testing differ, the weights need to be adjusted accordingly. Both algorithms use schedules; the updates of the VNs and FNs, as well as the required number of iterations, change with the schedule. The difference between the BER performance of the SGNN1- and GNN-based channel decoding algorithms when testing the same code of , is about 0.5 dB, and the difference between the BER performance of the SGNN2- and GNN-based channel decoding algorithms is about 0.1 dB when testing different codes of , . A small performance difference can thus be observed between the SGNN- and GNN-based channel decoding algorithms. There is little room for improvement in the GNN-based channel decoding algorithm and the BP algorithm under optimal settings for 5G LDPC codes, but there is room for further improvement in the SGNN algorithm.

Figure 7.

BER performance of GNN [22] and SGNN for 5G LDPC (60,140) code of BPSK modulation.

4.4. Complexity Analysis

The numbers of weights in the SGNN1- and SGNN2-based channel decoding algorithms are greatly reduced, while the BER performance is nearly the same or slightly reduced, as listed in Table 2. We find that the SGNN1- and SGNN2-based channel decoding algorithms have lower complexity, in terms of the number of parameters, than the GNN-based channel decoding algorithm. A dense network is used to initialize the graph nodes and to read out the variable node values; the number of multiplications in this process is small and depends on the output size of the dense network. In each decoding iteration, MLPs are used to update the factor nodes and the variable nodes, so the number of layers of the MLPs and the output size of each dense layer determine the number of multiplications. As shown in Figure 2, a total of four MLPs are 208w in each iteration. The reduction in the number of parameters means that fewer memory storage resources are required, which benefits implementation on resource-constrained communication hardware. At the same time, investigating how to reduce the number of multiplications is a potential direction for future research.
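The distinction between stored weights and multiplications can be made concrete with a rough count for the (7,4) example. The sketch below assumes that each message MLP is evaluated once per edge and each node MLP once per node, that the MLPs have a 40-dimensional input with 40 and 20 neurons and no bias, and that only the multiplications inside the MLPs are counted; this accounting is an assumption made here and is not taken from the paper.

```python
D, HIDDEN = 20, 40
num_vn, num_fn, num_edges = 7, 3, 12       # (7,4) example: 7 VNs, 3 FNs, 12 edges

# Multiplications for one evaluation of a 40 -> 40 -> 20 MLP without bias
mults_per_call = (2 * D) * HIDDEN + HIDDEN * D

# Evaluations per iteration: V->F messages, FN updates, F->V messages, VN updates
calls_per_iter = num_edges + num_fn + num_edges + num_vn
mults_per_iter = calls_per_iter * mults_per_call

# Sharing changes how many distinct weight sets are stored,
# not how often an MLP is evaluated.
distinct_mlps = {"GNN": 4, "SGNN2": 2, "SGNN1": 1}
stored_weights = {name: k * mults_per_call for name, k in distinct_mlps.items()}
mults = {name: mults_per_iter for name in distinct_mlps}

print(mults_per_call, calls_per_iter, mults_per_iter)  # 2400 34 81600
print(stored_weights)  # {'GNN': 9600, 'SGNN2': 4800, 'SGNN1': 2400}
print(mults)           # {'GNN': 81600, 'SGNN2': 81600, 'SGNN1': 81600}
```

Under this accounting, weight sharing lowers the memory footprint by up to a factor of four while leaving the per-iteration multiplication count unchanged, which is why the multiplications remain the open issue.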

5. Open Issues

As mentioned above, the complexity of the GNN is much higher than that of the NBP algorithm due to the repeated use of MLPs. Although the SGNN-based channel decoding algorithm reduces the number of parameters to even fewer than that of the NBP algorithm, the number of multiplications has not been reduced. Such a large amount of computation limits its implementation in hardware such as FPGAs, let alone its use as a replacement for traditional decoding algorithms in communication chips. In addition, the weights trained for one code cannot deliver the same BER performance on other codes. Therefore, possible future research directions are as follows:

- A possible way to implement a universal GNN-based channel decoder is to train the GNN-based channel decoder weights for multiple forward error correction (FEC) codes in parallel.

- The decoding complexity of the proposed SGNN is higher than that of BP; other complexity reduction methods, such as pruning and quantization, can be combined with it to further reduce the complexity.

- Extensions to non-AWGN channels and other modulation methods are possible.

6. Conclusions

This paper proposes two weight-sharing methods, SGNN1 and SGNN2, to reduce the complexity of GNN-based channel decoders. Both achieve almost the same or a slightly reduced BER performance while using fewer parameters than the GNN-based channel decoding algorithm. We have also provided a detailed algorithm flow of the SGNN1 and SGNN2 algorithms. We conducted experiments on the proposed SGNN1- and SGNN2-based BCH decoders, regular LDPC decoders, and 5G NR LDPC decoders. Numerical results show that, compared with the original decoder, the decoders based on SGNN1 and SGNN2 have a comparable performance while requiring fewer memory storage resources. The proposed low-complexity decoding algorithms have advantages when applied to resource-constrained devices, such as those used in internet of things communications, but the performance requirements need to be considered.

Author Contributions

Conceptualization, Y.L. and Q.W.; Methodology, Y.L.; Validation, Q.W. and X.C.; Formal analysis, Q.W.; Investigation, Q.W.; Resources, Q.W. and Y.L.; Data curation, Q.W. and X.C.; Writing—original draft, Q.W. and Y.L.; Writing—review and editing, B.K.N., C.-T.L. and Y.M.; Visualization, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the research funding of the Macao Polytechnic University, Macao SAR, China (Project no. RP/FCA-01/2023).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

For privacy reasons, the data source of this article has not been made public.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Pak, M.; Kim, S. A review of deep learning in image recognition. In Proceedings of the 2017 4th International Conference on Computer Applications and Information Processing Technology (CAIPT), Kuta Bali, Indonesia, 8–10 August 2017; pp. 1–3.

2. Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 1–48.

3. Minaee, S.; Boykov, Y.; Porikli, F.; Plaza, A.; Kehtarnavaz, N.; Terzopoulos, D. Image segmentation using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3523–3542.

4. Jiang, W. Graph-based deep learning for communication networks: A survey. Comput. Commun. 2022, 185, 40–54.

5. Zhang, C.; Patras, P.; Haddadi, H. Deep learning in mobile and wireless networking: A survey. IEEE Commun. Surv. Tutor. 2019, 21, 2224–2287.

6. Liang, Y.; Lam, C.; Ng, B. Joint-Way Compression for LDPC Neural Decoding Algorithm with Tensor-Ring Decomposition. IEEE Access 2023, 11, 22871–22879.

7. Deng, L.; Hinton, G.; Kingsbury, B. New types of deep neural network learning for speech recognition and related applications: An overview. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 8599–8603.

8. Wen, C.K.; Shih, W.T.; Jin, S. Deep learning for massive MIMO CSI feedback. IEEE Wirel. Commun. Lett. 2018, 7, 748–751.

9. Taha, A.; Alrabeiah, M.; Alkhateeb, A. Enabling large intelligent surfaces with compressive sensing and deep learning. IEEE Access 2021, 9, 44304–44321.

10. Nachmani, E.; Be’ery, Y.; Burshtein, D. Learning to decode linear codes using deep learning. In Proceedings of the 2016 54th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 27–30 September 2016; pp. 341–346.

11. Nachmani, E.; Marciano, E.; Burshtein, D.; Be’ery, Y. RNN decoding of linear block codes. arXiv 2017, arXiv:1702.07560.

12. Nachmani, E.; Marciano, E.; Lugosch, L.; Gross, W.J.; Burshtein, D.; Be’ery, Y. Deep learning methods for improved decoding of linear codes. IEEE J. Sel. Top. Signal Process. 2018, 12, 119–131.

13. Wang, M.; Li, Y.; Liu, J.; Guo, T.; Wu, H.; Brazi, F. Neural layered min-sum decoders for cyclic codes. Phys. Commun. 2023, 61, 102194.

14. Lei, Y.; He, M.; Song, H.; Teng, X.; Hu, Z.; Pan, P.; Wang, H. A Deep-Neural-Network-Based Decoding Scheme in Wireless Communication Systems. Electronics 2023, 12, 2973.

15. Gruber, T.; Cammerer, S.; Hoydis, J.; Ten Brink, S. On deep learning-based channel decoding. In Proceedings of the 2017 51st Annual Conference on Information Sciences and Systems (CISS), Baltimore, MD, USA, 22–24 March 2017; pp. 1–6.

16. Scarselli, F.; Gori, M.; Tsoi, A.C.; Hagenbuchner, M.; Monfardini, G. The graph neural network model. IEEE Trans. Neural Netw. 2008, 20, 61–80.

17. Zhou, J.; Cui, G.; Hu, S.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph neural networks: A review of methods and applications. AI Open 2020, 1, 57–81.

18. Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4–24.

19. Zhang, C.; Song, D.; Huang, C.; Swami, A.; Chawla, N.V. Heterogeneous graph neural network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 793–803.

20. Liao, Y.; Hashemi, S.A.; Yang, H.; Cioffi, J.M. Scalable polar code construction for successive cancellation list decoding: A graph neural network-based approach. arXiv 2022, arXiv:2207.01105.

21. Tian, K.; Yue, C.; She, C.; Li, Y.; Vucetic, B. A scalable graph neural network decoder for short block codes. arXiv 2022, arXiv:2211.06962.

22. Cammerer, S.; Hoydis, J.; Aoudia, F.A.; Keller, A. Graph neural networks for channel decoding. In Proceedings of the 2022 IEEE Globecom Workshops (GC Wkshps), Rio de Janeiro, Brazil, 4–8 December 2022; pp. 486–491.

23. Liang, Y.; Lam, C.-T.; Ng, B.K. A low-complexity neural normalized min-sum LDPC decoding algorithm using tensor-train decomposition. IEEE Commun. Lett. 2022, 26, 2914–2918.

24. Lugosch, L.; Gross, W.J. Neural offset min-sum decoding. In Proceedings of the 2017 IEEE International Symposium on Information Theory (ISIT), Aachen, Germany, 25–30 June 2017; pp. 1361–1365.

25. Chen, X.; Ye, M. Cyclically equivariant neural decoders for cyclic codes. arXiv 2021, arXiv:2105.05540.

26. Nachmani, E.; Wolf, L. Hyper-graph-network decoders for block codes. Adv. Neural Inf. Process. Syst. 2019, 32, 2326–2336.

27. ETSI. ETSI TS 138 212 v16.2.0: Multiplexing and Channel Coding; Technical Report; ETSI: Sophia Antipolis, France, 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).