Abstract

This study investigated the effects of wearing AR devices on users’ performance and comfort ratings while performing order-picking (OP) tasks. In addition to a picking-by-paper list, two AR devices combined with three order information display designs were examined. Thirty adult participants joined. They searched for and found the boxes in the order list, picked them up, and returned to the origin. The time to complete the task and the number of incorrect boxes picked up were analyzed to assess the performance of the tasks. The subjective ratings of the participants on the comfort rating scale (CRS) and the simulator sickness questionnaire (SSQ) were collected to assess the designs of both the AR devices and the order information displays. It was found that the participants could complete the OP tasks faster when adopting the order map (4.97 ± 1.57 min) or the 3D graph display (4.87 ± 1.50 min) using either one of the AR devices than when using a paper list (6.03 ± 1.28 min). However, they needed more time to complete the OP tasks when wearing both types of AR glasses when the Quick Response (QR) code option was adopted (10.16 ± 4.30 min) than when using a paper list. The QR code scanning and display design using either one of the AR devices guaranteed 100% accuracy but sacrificed efficiency in task completion. The AR device with a binocular display and hand gesture recognition functions had a significantly lower CRS score in the dimensions of attachment and movement (5.6 and 6.3, respectively) than the corresponding dimensions (8.0 and 8.3, respectively) of the other device with a monocular and hand touch input design. There were complaints of eye strain after using both AR devices examined in this study. This implies that these AR devices may not be suitable to wear for extended periods of time. Users should take off the AR device whenever they do not need to view the virtual image to avoid eye strain and other discomfort symptoms.

1. Introduction

Augmented reality (AR) is a technology that enriches our physical environment by superimposing computer-generated information onto a real background [1]. It offers the ability to annotate objects and surroundings and possesses distinctive features that facilitate collaborative/remote maintenance [2,3,4,5]. The usage of AR devices is increasingly prevalent in both professional and everyday contexts. These devices enable the augmentation of real-world environments with visualized entities, either directly or indirectly [6,7]. Moreover, AR devices not only present 2D or 3D virtual elements in the real world but also allow real-time interactions between humans and the rendered objects [8,9,10]. AR can be implemented using either stationary or mobile devices, with the latter being notably more convenient. While mobile devices such as tablets and smartphones also offer AR functionalities, head-mounted displays (HMDs) and AR glasses provide a hands-free experience and deliver superior user satisfaction compared to handheld devices [11,12,13].

Order picking (OP) is typical in logistics and service industries and is commonly performed in warehousing and retail operations. It is a labor-intensive job that requires operators to go to storage areas to find and collect designated items and is the most expensive operation in warehouses [14,15]. The traditional method of OP involves operators finding listed items on paper or on a tablet and picking them up [16]. Using AR glasses is one alternative for performing such tasks. AR glasses have been adopted in OP tasks by providing visual guidance information when searching for items in a storage area [17,18]. They allow workers to access logistic information in a hands-free manner [6,14,19,20,21,22,23,24] and, thus, could improve task efficiency [16,25]. The benefits of AR devices in facilitating OP tasks, however, depend on the hardware and software designs of the device. Verifications are required to confirm the advantage of different AR designs [15].

The pick-by-vision system for an AR device, which uses arrows and attention tunnels to guide workers to find the locations of items, has been proposed [20,21,25,26]. However, such a system resulted in only a small percentage increase in picking speed compared to using a paper list and did not significantly improve picking accuracy. Baumann et al. [27] coordinated colors and symbols on picking charts and part bins in the display of an AR device and found that using such a system significantly improved their OP tasks. However, using colors to help workers to find ordered items in storage creates problems if workers have color deficiency problems. The design of guidance information presented on AR glasses in the literature varies. It is apparent that many more guidance information designs for AR glasses are required to meet the needs of specific warehousing environments. More studies are required to fulfill these requirements.

There are many options for presenting order information in an OP task using an AR device. One option is to provide a list in the image of an AR device, similar to a paper list. However, this provides few extra benefits in addition to hands-free advantages. Another option is to use the Quick Response (QR) code scanner on an AR device to view order information. QR codes have been used in warehousing and inventory management systems [19,28]. A QR code, attached to shelves or the stored item, may be used to store the item and logistic information of a product. When the user scans the QR code using the QR code scanner on the AR device, the content of the QR code appears in the display of the device. The content provides the logistic information and location of the item and may help workers to find the current and following ordered items. Alternatively, displaying highlighted ordered items on a 2D order map or a 3D graph, indicating the locations of the items to be picked, can also be considered. Scientific research is required to determine whether text-based QR codes and highlighted ordered item information displayed in an AR device are helpful in improving the efficiency of OP tasks. Theoretically, highlighted ordered-item hints provide better OP information than QR code information because the former is more intuitive and conspicuous, while the latter requires the matching of the code information and the shelf arrangement. However, this may also depend on the design of the AR device. Such issues have not been reported in the literature. This study was designed to fill this research gap.

Virtual components displayed in AR glasses enrich our visual information. However, safety and health concerns for people wearing AR glasses at work have been noticed [1,29,30,31,32]. Brusie et al. [33] indicated that the unbalanced weight distribution of AR glasses leads to user discomfort. Visual fatigue and eye strain have also been reported when wearing AR glasses [34,35,36,37,38]. Additionally, increased burden on the neck, resulting in poor head and neck posture, hindered mobility, motion sickness, headaches, and performance deterioration, when using AR glasses has been addressed [39,40,41,42]. Comfort/discomfort issues related to the usage of AR glasses in performing OP tasks were also our concerns when proposing the current study.

The findings in the literature [15,16,43,44] concerning the benefits of using AR devices are inconsistent. More studies are needed to confirm these benefits. HMD AR glasses predominantly comprise a unit of display and an input component. The visual display may be monocular or binocular. The input component may be a physical input device, such as a touch pad and buttons, or a more advanced hand gesture recognition device. The visual display and input design may affect the performance and comfort/discomfort of the user. It was our anticipation that the AR glasses with a binocular display would provide a better visual experience than the monocular ones, and that the AR glasses with a hand gesture recognition input design would allow faster hand input operations in performing OP tasks than those with a touch pad and buttons design.

Based on our review of the literature, the following hypotheses were proposed. The first hypothesis was that the efficiency and success of OP tasks using a pair of AR glasses are better than those using a paper list. The second hypothesis was that highlighted graphical order presentations in an AR device are more beneficial than QR code order information in terms of the efficiency and success of the OP tasks. The third hypothesis was that AR glasses with a binocular visual display and hand gesture recognition input design provide better efficiency and success in performing OP tasks than those with a monocular display and hand touch input design. The last hypothesis was that the subjective ratings of perceived comfort/discomfort are dependent on the design of both the AR devices and the order information display. The objectives of this study were to test these hypotheses.

2. Methodology

In order to test our hypotheses, a simulated OP experiment was conducted in a laboratory. The participants picked up 20 boxes in a storage area following different order information presentation conditions.

2.1. Participants

A total of 30 healthy adults (15 females and 15 males) were recruited in this study. Their mean (±SD) age and the median (range) of the corrected decimal visual acuity (both eyes) were 20.23 (±1.23) years and 1.0 (0.7–1.5), respectively. None of the participants had a color deficiency problem. The mean (±SD) statures of the males and females were 174.6 (±6.4) cm and 161.3 (±4.3) cm, respectively. The mean (±SD) head circumferences of the males and females were 54.1 (±0.7) cm and 53.5 (±1.4) cm, respectively. Over 50% of the participants wore framed glasses. All the participants read and signed an informed consent form before joining the experiment.

2.2. Order-Picking Devices

In addition to a paper list, two AR devices were adopted. They were the M400 (Vuzix®, Rochester, NY, USA) and the HoloLens 2 (Microsoft®, Redmond, WA, USA) (see Figure 1). The M400 can be mounted on a glasses frame and has a fixed-sized monocular visual display on the right. Users give commands by touching the touch pad or pushing the buttons on the frame. The HoloLens 2 is head-mounted and provides a binocular visual display on the glasses. The size of the binocular visual image may be adjusted and may be located anywhere in the visual field of the user. Users give commands by using hand gestures. The weights of the M400 and HoloLens 2 are 190 g and 566 g, respectively.

Figure 1.

AR devices tested in the order-picking tasks.

2.3. Performance and Subjective Ratings

The dependent variables included performance measures and subjective ratings. The performance of the OP tasks was measured using the time to accomplish the tasks and the number of incorrect boxes picked up. The subjective ratings included the comfort rating scale (CRS) and the simulator sickness questionnaire (SSQ). The CRS was utilized to assess the comfort/discomfort of users when wearing wearable devices. A total of five dimensions and 13 questions with a 20-point Likert scale (1 = extremely low to 20 = extremely high) were adopted (see Table 1) [38].

Table 1.

Dimensions and questions of the comfort rating scales.

The SSQ was used to assess the level of sickness symptoms [45,46,47]. There are 16 symptoms in the original SSQ. Four of them were excluded because our participants did not experience any of these symptoms in a pilot study. The remaining 12 symptoms (general discomfort, fatigue, headache, eye strain, difficulty focusing, increased salivation, sweating, nausea, difficulty concentrating, blurred vision, dizziness with eyes open, and dizziness with eyes closed) were included to evaluate the sickness symptoms after using an AR device. A 4-point scale (0 = none, 1 = slight, 2 = moderate, and 3 = severe) was adopted for each of these ratings [46]. The abbreviations for the 12 symptoms in the SSQ are shown in Appendix A.

2.4. Shelf Arrangement and OP Tasks

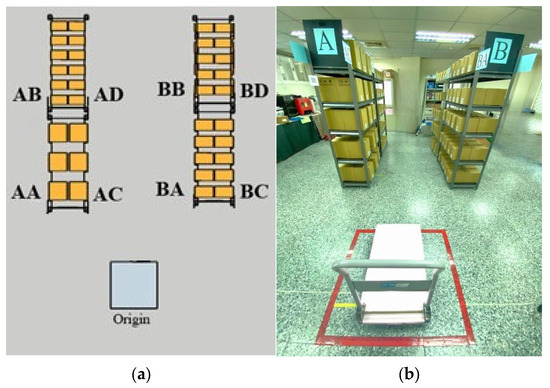

The laboratory setup for the OP tasks included the installation of eight shelves measuring 1.85 × 1.22 × 0.5 m, with five levels on each shelf (see Figure 2). The eight shelves comprised a total of eight stocking areas: AA, AB, AC, AD, BA, BB, BC, and BD. The first four constituted zone A and the other four constituted zone B. The AA and BA areas were positioned near the origin, approximately 2 m from the edge of the shelves. The distance between the AC-AD and BA-BB shelves was approximately 1 m. A total of 199 boxes, varying in size, were allocated to these storage areas. In the AA and AC areas, 15 large boxes measuring 25 cm × 25 cm × 25 cm were stored. The AB and AD areas housed 36 small boxes measuring 12.5 cm × 23 cm × 15.5 cm, while the BA and BC areas contained 25 medium-sized boxes measuring 15 cm × 26 cm × 18 cm. The BB and BD areas accommodated boxes of all three dimensions. The boxes to be picked by each participant were randomly arranged on the eight shelves.

Figure 2.

The layout of the experiment: (a) top view; (b) view from the origin.

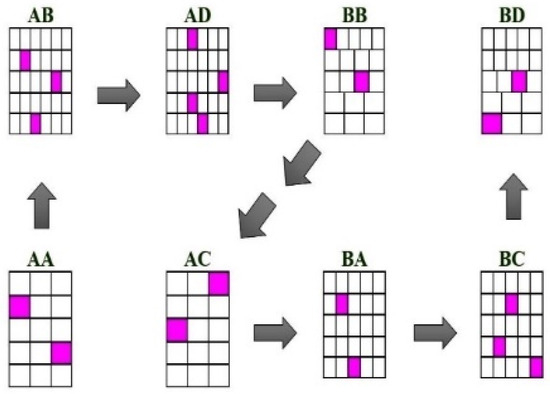

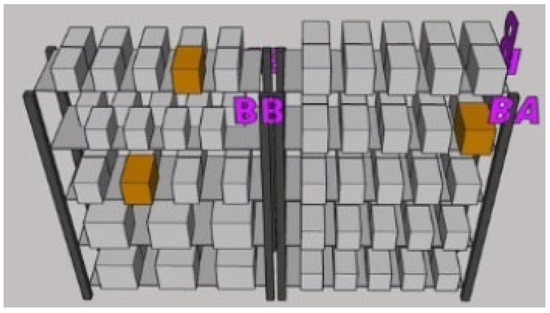

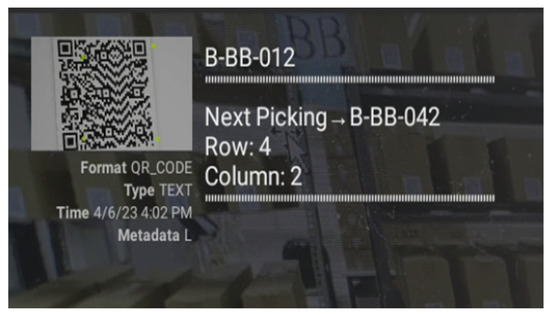

QR codes were designed for each box on the shelves. The QR code and text-based code number were attached to the bottom of each box (see Figure 3). When the participant scanned the code, the code information of the current box and the code and location information of the next box to be picked appeared. For example, when the participant scanned the QR code in Figure 3, the code and location information of the next item to be picked (pick => A-AA-041; row: 4; column: 1) appeared in addition to the current code number (A-AA-023). An error message occurred if the participant scanned the incorrect current box. For the order map and 3D graph picking, an activation QR code was attached to the side of shelf AA near the origin. Participants could scan this code to activate the order map (see Figure 4) and the 3D graph (see Figure 5) and access the box location information for picking. Arrows were provided only for the order map picking to show the sequence of the item-picking tasks. Participants were instructed to accomplish the picking task following the task instructions on the AR device. All the QR codes, order maps, and 3D graphs were designed and pre-arranged by the second author (SK).
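The scan-and-confirm behavior described above can be illustrated with a minimal sketch. It is not the software used in the experiment: the `BoxCode`, `parse_code`, and `PickSession` names are hypothetical, and the rule that the last two digits of a code give the row and column is an assumption inferred only from the single example (A-AA-041; row: 4; column: 1). The activation scan that reveals the first box is omitted for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxCode:
    zone: str    # e.g. "A"
    shelf: str   # e.g. "AA"
    row: int
    column: int


def parse_code(code: str) -> BoxCode:
    """Split a code such as 'A-AA-041' into zone, shelf, row, and column.

    ASSUMPTION: the last two digits encode row and column. This is inferred
    from the one example in the text and may not match the real coding scheme.
    """
    zone, shelf, digits = code.split("-")
    return BoxCode(zone, shelf, int(digits[-2]), int(digits[-1]))


class PickSession:
    """Hypothetical helper that tracks progress through an ordered pick list."""

    def __init__(self, order):
        self.order = list(order)
        self.position = 0  # index of the box expected next

    def scan(self, code: str) -> str:
        """Return the message shown after scanning the QR code on a box."""
        if self.position >= len(self.order):
            return "picking task has completed"
        if code != self.order[self.position]:
            # Wrong box: report an error and do not reveal the next item.
            return f"error: {code} is not the current box"
        self.position += 1
        if self.position == len(self.order):
            return "picking task has completed"
        next_code = self.order[self.position]
        loc = parse_code(next_code)
        return (f"current: {code}; pick => {next_code}; "
                f"row: {loc.row}; column: {loc.column}")
```

Because a wrong scan never advances `position`, the next item stays hidden until the correct box is confirmed, which is the property that ties accuracy to the extra confirmation step.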

Figure 3.

A QR code attached to a box.

Figure 4.

An example of an order map showing the locations of the boxes to be picked and picking sequence.

Figure 5.

An example of a 3D graph showing the locations of the boxes to be picked.

2.5. Procedure

Each participant completed trials for both picking by paper and picking using each of the two AR devices. A total of 20 boxes were picked up in each trial, with or without an AR device. This included 3 large, 7 medium-sized, and 10 small boxes. For the picking-by-paper trials, the participant received a paper list (see Table 2) and a trolley at the origin. They read the list and proceeded to the storage areas. The participant confirmed the text-based code numbers on the boxes, picked them up, and placed them on the trolley. They then continued to find the next box. Once all the listed boxes were collected and were on the trolley, the participant pulled the trolley back to the origin. An experimenter checked the boxes on the trolley and recorded the number of incorrect boxes collected. The time to accomplish the task, from leaving the origin with the empty trolley to returning to the origin with the fully loaded trolley, was measured using a stopwatch.

Table 2.

An example of a paper list.

For order picking using an AR device, all the participants received brief training on the use of each of the AR glasses before their first trials using an AR device. The participants learned to read virtual images on the glasses and to activate a QR code reader for all the AR glasses.

For the QR code picking, the participant wore a set of AR glasses and stood at the origin with an empty trolley. They scanned the activation QR code attached to the side of shelf AA to read the information of the first box to be picked (see Figure 6). The box location information, including the zone, shelf, row, column, and codes, similar to the code information in Table 2, was displayed in the AR glasses. The participant walked to the first shelf to find this box and scanned the QR code on the box to confirm the code of this box and to view the code of the next box to collect (see Figure 7). The participant picked up the current box and put it on the trolley and then walked to find the next box. Upon picking up the last box, a “picking task has completed” message appeared in the AR display. The participant then pulled the trolley and returned to the origin. An experimenter checked the boxes on the trolley and recorded the number of incorrect boxes picked up. Each participant performed the QR code picking task using each of the AR glasses. The boxes to be picked for the two AR glasses were different and their locations on the shelves were randomly arranged.

Figure 6.

A participant scanned the starting QR code using the HoloLens 2.

Table 3.

Number of incorrect boxes picked up.

Figure 7.

QR code information displayed in the M400 device.

For the order map and 3D graph order-picking tasks, the participants wore a set of AR glasses and stood at the origin with an empty trolley. They walked to shelf AA and activated the order map or the 3D graph by scanning the initial QR code. Once the order map or the 3D graph was activated and displayed on the AR glasses (see Figure 4 and Figure 5), the participants could see the highlighted boxes and then walked to the shelves to find them. They could manipulate the order map and rotate the 3D graph by touching the touchpad on the M400 device or using hand gestures when wearing the HoloLens 2, in order to have the best view in different areas. They picked up the boxes one by one following the appearance of the highlighted boxes and then pulled the trolley back to the origin after collecting all the ordered boxes. Both AR glasses were tested. The boxes to be picked up differed for the two AR glasses and the three order information displays, and their locations on the shelves were randomly arranged. The time to accomplish the task, starting when the participant left the origin and ending when they returned to the origin, was measured. The participants also reported their subjective ratings for the CRS and SSQ upon completing each trial.

2.6. Experimental Design and Statistical Analysis

Each participant performed seven tasks, including a paper list picking task and six AR device picking tasks (2 devices × 3 order information displays). The sequence of the trials was randomly arranged for each participant so that the effects of learning and fatigue could be evenly distributed across the experimental conditions. The experiment therefore followed a randomized complete block design, with each participant regarded as a block. Means and standard deviations were calculated for all the dependent variables. The time to accomplish the task and the number of incorrect boxes picked were used as indicators of task performance. An analysis of variance (ANOVA) was performed to analyze the effects of gender, the device adopted, and the order information display on the time to accomplish the task. The dependent variable may be described using the model in Equation (1) if the effects of the participant were ignored:

$$y_{ijk} = \mu + \alpha_i + \beta_j + \gamma_k + (\alpha\beta)_{ij} + (\alpha\gamma)_{ik} + (\beta\gamma)_{jk} + (\alpha\beta\gamma)_{ijk} + \varepsilon_{ijk} \qquad (1)$$

where $y_{ijk}$ and $\varepsilon_{ijk}$ are the dependent variable and random error in the $i$th gender, $j$th AR device, and $k$th order information display conditions, respectively, and $\varepsilon_{ijk}$ is normally distributed with a mean value of 0 and a standard deviation of $\sigma_{\varepsilon}$; $\mu$ is the overall mean; $\alpha_i$, $\beta_j$, and $\gamma_k$ are the effects of the $i$th gender, $j$th device, and $k$th order information display, respectively; $(\alpha\beta)_{ij}$, $(\alpha\gamma)_{ik}$, and $(\beta\gamma)_{jk}$ are the two-way interaction effects of gender × device, gender × order information display, and device × order information display; and $(\alpha\beta\gamma)_{ijk}$ is the three-way interaction effect of gender × device × order information display.

Student–Newman–Keuls (SNK) tests were performed for post hoc comparisons if the main effect of a factor was significant. Non-parametric statistical methods, including the Wilcoxon rank-sum test and Kruskal–Wallis test, were adopted to compare the number of incorrect boxes picked and the ratings of the SSQ and CRS. Paper and paper list were each included as a level when analyzing the factors of device adopted and order information display, respectively. The statistical analyses were performed using the SPSS 20.0 software (IBM®, Armonk, NY, USA).
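The seven conditions per participant and the randomized trial sequence within each block can be sketched as follows. This is only an illustration of the design described in this section; the function names and the seeding scheme are hypothetical and are not taken from the study.

```python
import itertools
import random

DEVICES = ("M400", "HoloLens 2")
DISPLAYS = ("QR code", "order map", "3D graph")


def conditions():
    """Return the seven conditions: paper list plus 2 devices x 3 displays."""
    ar_conditions = list(itertools.product(DEVICES, DISPLAYS))
    return [("paper", "paper list")] + ar_conditions


def trial_sequence(participant_id: int, seed: int = 0):
    """Shuffle the seven conditions for one participant (one block).

    ASSUMPTION: the seed derivation is hypothetical and only makes the
    sketch reproducible; the study does not describe how orders were drawn.
    """
    rng = random.Random(seed * 1000 + participant_id)
    sequence = conditions()
    rng.shuffle(sequence)
    return sequence


# One randomized sequence per participant for the 30 participants.
schedule = {pid: trial_sequence(pid) for pid in range(1, 31)}
```

Every participant sees all seven conditions exactly once, so each participant forms a complete block and only the order varies between blocks.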

3. Results

3.1. Time to Accomplish the Tasks

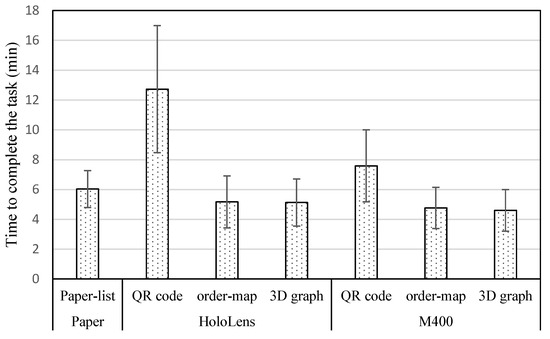

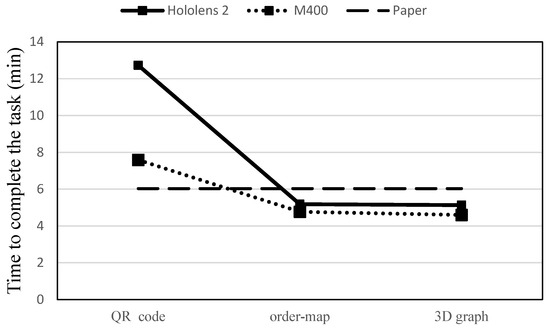

Figure 8 shows the descriptive statistics for the time to accomplish the task. The ANOVA results testing the effects of device, order information display, and gender on the time to accomplish the task showed that the effects of device (F = 19.19, p < 0.0001) and order information display (F = 108.16, p < 0.0001) were both significant, while the effects of gender were insignificant (F = 1.35, p = 0.246). The interaction effects of the device and order information display were significant (F = 21.43, p < 0.0001) (see Figure 9). All other interaction effects were not statistically significant. The SNK test results showed that the time to accomplish the task for the HoloLens 2 (7.68 ± 2.53 min) condition was statistically significantly (p < 0.05) higher than that of paper (6.03 ± 1.24 min) and M400 (5.65 ± 1.73 min) conditions. However, the difference in the time to accomplish the task between the paper list and M400 AR glasses was not statistically significant.

Figure 8.

Time to accomplish the tasks under experimental conditions.

Figure 9.

Interaction effects of AR device and order information display.

Considering the order information display, the SNK test results showed that the time to accomplish the task using the QR code information (10.16 ± 4.30 min) was significantly (p < 0.05) higher than that of the paper list (6.03 ± 1.28 min), order map (4.97 ± 1.57 min), and 3D graph (4.87 ± 1.50 min) conditions. Additionally, the time to accomplish the task for the paper list condition was significantly (p < 0.05) higher than both the order map and 3D graph conditions. The difference between the latter two conditions was insignificant.
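To put the reported means in relative terms, the short calculation below expresses each display's mean completion time as a percentage change from the paper list. It uses only the mean values stated above. It is a descriptive convenience, not an additional statistical test, and the `relative_change` helper is a hypothetical name.

```python
# Mean completion times in minutes, as reported in the text.
MEAN_TIME_MIN = {
    "paper list": 6.03,
    "QR code": 10.16,
    "order map": 4.97,
    "3D graph": 4.87,
}


def relative_change(condition: str, baseline: str = "paper list") -> float:
    """Percentage change in mean time versus the baseline (negative = faster)."""
    base = MEAN_TIME_MIN[baseline]
    return 100.0 * (MEAN_TIME_MIN[condition] - base) / base


for name in ("order map", "3D graph", "QR code"):
    print(f"{name}: {relative_change(name):+.1f}% vs paper list")
```

On these means, the order map and 3D graph are roughly 18% and 19% faster than the paper list, while the QR code display is roughly 68% slower.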

3.2. The Accuracy of Order Picking

Table 3 presents the means, standard deviations, and range for the number of incorrect boxes picked up for all the participants. There was no incorrect picking when the participants were wearing the HoloLens 2. The Wilcoxon rank-sum test results showed that the difference in the rank of the number of incorrect boxes picked between the two genders was insignificant. The Kruskal–Wallis test results showed that the device used had a significant effect (χ2 = 12.81, p = 0.0017) on the rank of the number of incorrect boxes picked. The Wilcoxon rank-sum test results showed that the ranks of the number of incorrect boxes picked for the paper list condition were significantly higher than those of the HoloLens 2 condition (χ2 = 15.5, p < 0.0001). The difference between the paper list and M400 conditions was insignificant.

The Kruskal–Wallis test results showed that the order information display had a significant effect (χ2 = 10.9, p = 0.0012) on the rank of the number of incorrect boxes picked. There was no incorrect picking when a QR code display was adopted in using either of the AR devices tested. The ranks of the number of incorrect boxes picked using the QR code display were significantly lower than those of the paper list (χ2 = 10.5, p = 0.0012) and order map (χ2 = 7.4, p = 0.0066) but were not significantly different from those of the 3D graph display (χ2 = 3.05, p = 0.081). The ranks of the number of incorrect boxes picked using the paper list were not significantly different from those of the 3D graph (χ2 = 3.28, p = 0.069) and the order map display (χ2 = 0.16, p = 0.690). The difference between the ranks of the number of incorrect boxes picked up using the order map and 3D graph displays was also insignificant.
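For readers unfamiliar with the rank-based comparison used here, the following is a minimal pure-Python sketch of the tie-corrected Kruskal–Wallis H statistic. It is not the SPSS procedure used in the study, the function names are hypothetical, and the error counts in the usage example are invented solely for illustration. Tie correction matters for count data such as incorrect picks, where many observations are zero.

```python
from collections import Counter


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of sorted positions i..j, 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H for a list of non-empty samples."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum * rank_sum / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3.0 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1.0 - ties / (n ** 3 - n)
    # If every observation is identical, H is undefined; this sketch returns 0.0.
    return h / correction if correction > 0 else 0.0


# Hypothetical error counts per condition (illustrative only, not study data).
example = [[0, 0, 1, 2, 0], [0, 0, 0, 0, 0], [0, 1, 0, 0, 1]]
print(round(kruskal_wallis_h(example), 3))
```

The resulting H is compared against a chi-square distribution with (number of groups − 1) degrees of freedom, which is why the test results are reported as χ2 values.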

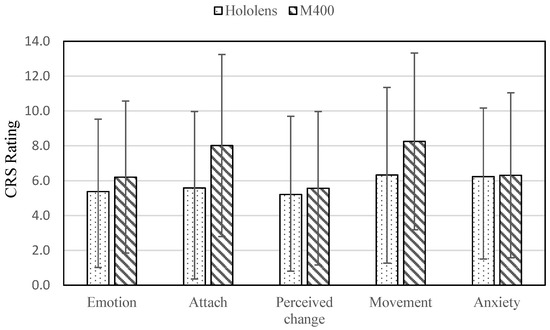

3.3. Results of the Comfort Rating Scale

Figure 10 displays the means and standard deviations of the ratings for the five dimensions of the CRS. The Wilcoxon rank-sum test results showed that gender was a significant factor affecting the ranks of all five dimensions of the CRS: emotion (χ2 = 6.74, p = 0.0094), attachment (χ2 = 5.87, p = 0.015), perceived change (χ2 = 7.73, p = 0.0054), movement (χ2 = 10.99, p = 0.0009), and anxiety (χ2 = 9.57, p = 0.002). The mean scores of all these dimensions for females were significantly higher than those for males. Females exhibited ratings that were 16.6%, 13.4%, 14.7%, 19.8%, and 28.3% higher than their male counterparts in the dimensions of emotion, attachment, perceived change, movement, and anxiety, respectively.

Figure 10.

CRS measurement of the two AR glasses.

The results of the Kruskal–Wallis test showed that the AR device also had a significant impact on the ranks of the ratings of attachment (χ2 = 10.02, p = 0.0016) and movement (χ2 = 7.35, p = 0.0067). The significance of the AR device on the ranks of the ratings of anxiety was marginal (χ2 = 3.58, p = 0.058). The effects of the AR device on the ranks of the other two dimensions were insignificant. The mean rating for the HoloLens 2 was lower than that of the M400 AR glasses in the dimensions of attachment and movement. Specifically, the HoloLens 2 had ratings 12.2% lower in attachment and 8.8% lower in movement compared to the M400 device. The effects of the order information display on any of the CRS dimensions were insignificant.
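The relationship between the reported χ2 values and their p-values can be checked with a short calculation. The sketch assumes one degree of freedom, which is what a comparison between two devices implies; that assumption is ours and is not stated in the text. For one degree of freedom, the upper-tail probability has the closed form erfc(√(x/2)), so only the standard library is needed.

```python
import math


def chi2_sf_1df(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 degree of freedom.

    Uses P(chi2_1 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(x / 2.0))


# Chi-square values reported for the device effect on three CRS dimensions.
for label, chi2 in (("attachment", 10.02), ("movement", 7.35), ("anxiety", 3.58)):
    print(f"{label}: chi2 = {chi2}, p = {chi2_sf_1df(chi2):.4f}")
```

Under this assumption, the computed values agree closely with the reported p-values for attachment, movement, and anxiety, apart from small rounding differences.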

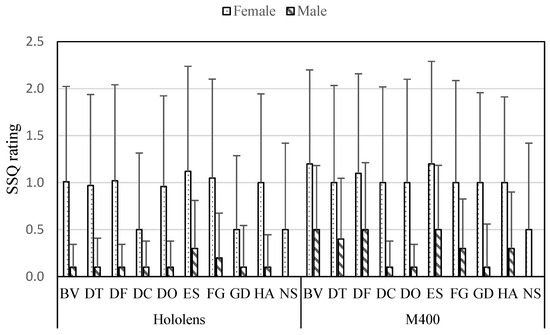

3.4. Results of the Simulator Sickness Questionnaire

The Wilcoxon rank-sum test results showed that gender had significant effects on all 12 SSQ ratings (see Figure 11). Female participants reported significantly (p < 0.0001) higher ratings in all 12 symptoms compared to their male counterparts. The χ2 of these effects ranged from 10.89 (increased salivation) to 32.60 (blurred vision). The Kruskal–Wallis test results revealed that the effects of the AR device on the symptoms of blurred vision (χ2 = 9.16, p = 0.0025), difficulty concentrating (χ2 = 4.23, p = 0.039), and difficulty focusing (χ2 = 8.52, p = 0.035) were significant and those on all other symptoms were insignificant. The ratings of these three significant symptoms for the HoloLens 2 were significantly lower than those for the M400.

Figure 11.

Rating of the simulator sickness questionnaire for the two AR devices.

When wearing the HoloLens 2, eye strain was the highest rated sickness symptom for both females (1.1 ± 1.1) and males (0.3 ± 0.3), followed by fatigue, which was the second highest rated symptom for females (1.05 ± 1.1) and males (0.2 ± 0.4). For the M400 device, eye strain and blurred vision were both the highest rated sickness symptoms for females (1.2 ± 1.1), with difficulty focusing as the second highest rated symptom (1.1 ± 1.0). For males, eye strain, blurred vision, and difficulty focusing were the highest rated symptoms (0.5 ± 0.7), with difficulty concentrating as the next highest rated symptom (0.4 ± 0.6).

The Kruskal–Wallis test results revealed that the effects of the order information display on all the sickness symptoms were not statistically significant.

4. Discussions

4.1. Efficiency of the OP Tasks

The performance of the tasks was assessed using the time to complete the task and the number of incorrect boxes collected. Our first hypothesis was that using an AR device would lead to better performance than using a paper list. This hypothesis was partially supported considering the time to complete the task. Figure 9 shows that the time to accomplish the task using both the HoloLens 2 and the M400 glasses was shorter than using a paper list when an order map and 3D graph were used in the AR glasses. For both AR glasses, using them was more time-consuming than using a paper list when the QR code option was used. Our results were partially consistent with the findings in Lin et al.’s study [16], where they found that only one of the three types of AR devices tested showed superior performance to the standard paper list picking in terms of the picking time. Reading order information on a paper list requires hand movement and hand–eye coordination when reading and checking the order information on the paper. The participants needed to read the item code on the paper and then visually search for the item on the shelf. They potentially had to repeat the read-and-search process several times before they could find an ordered item, even though the number of read-and-search motions that occurred in finding each ordered item was not counted. Hand movements were also required when using the AR devices to read the QR code for every item picked when activating the QR code reader, but they were required less frequently when using the order map and 3D graph.

In addition to the hand movement issues, the participants spent a significantly longer time to accomplish the task when they adopted the QR code option using an AR device than the other two visual display designs because they needed to check the code on the boxes to confirm the item was the correct one. The succeeding item to pick did not appear if the participant had picked the wrong item. This order confirmation step was time-consuming and was not required in the order map and 3D graph designs. This was the other reason that the QR code option was less efficient than the other two order information designs. The participants could accomplish the task significantly (p < 0.05) faster when using the order map and 3D graph designs than when using a paper list and the QR code design in an AR device. The implication of this finding is that both the order map and 3D graph with highlighted order information designs were advantageous considering the efficiency of the task and the QR code-scanning design was less advantageous. The efficiency of using an AR device in performing the OP tasks was, therefore, dependent upon the order information display adopted in the device. Our second hypothesis, that highlighted graphical order presentations in an AR device are more beneficial than QR code order information, was supported considering the efficiency of the OP tasks.

4.2. Accuracy of the OP Tasks

The results of the incorrect boxes picked indicated that the participants made significantly more mistakes in picking the orders when using a paper list than when wearing a HoloLens 2. This was consistent with the findings in Dorloh et al.’s study [48], where they found that reading a paper menu involved significantly more mistakes in assembling a computer than when reading job instructions presented via a set of AR glasses. Lin et al. [16] also found that the Microsoft HoloLens performed significantly better than a paper list in terms of accuracy in their OP tasks. For the M400, the number of incorrect boxes picked depended on the order information displayed. The 3D graph was associated with fewer incorrect boxes than the order map presentations and the paper list.

The trade-off between the efficiency and the success of the task was apparent. As previously mentioned, the check-and-confirmation process when using the QR code option was time-consuming but guaranteed the accuracy of the order picking. The order map and 3D graph designs allowed for better efficiency in the OP tasks at the cost of possible incorrect picking. It should be noted that using a paper list to pick up ordered boxes also involved checking and confirming the QR codes on the boxes. However, the checking and confirmation associated with paper list picking did not guarantee the accuracy of the picking, because the participants could proceed to search for the next item even when a wrong item was collected. This was why incorrect boxes were picked in the paper list picking trials. There was also a difference in the checking and confirmation between using a paper list and using the QR code reader on an AR device. The former relied on the participants’ working memory, while the latter did not because the QR code was always presented in their field of vision. This is one of the advantages of using an AR device compared to using a paper list. There was no incorrect order picking when the participants used the HoloLens 2, even when the order map and 3D graph were adopted. This is one of the advantages of the HoloLens 2 over the M400.

Our second hypothesis, which stated that graphical order presentations in an AR device are more beneficial than QR code order information, was not supported considering the success of the OP tasks performed. The third hypothesis, that AR glasses with a binocular visual display and hand gesture recognition input design provide better efficiency and success in performing OP tasks than those with a monocular display and hand touch input design, was partially supported. The HoloLens 2 did not provide better efficiency than the M400. It was, however, associated with significantly fewer incorrect boxes picked than the M400.
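
As a rough illustration of the efficiency side of this trade-off, the mean completion times reported in the abstract can be expressed as relative differences against the paper list. The short Python sketch below is not part of the study's analysis: the function name `relative_change` and the dictionary layout are hypothetical, the AR values are the means pooled across both devices, and the calculation ignores the reported standard deviations.

```python
# Mean task completion times (min) as reported in the abstract.
# The AR-based values are pooled across both AR devices.
MEAN_TIME_MIN = {
    "paper list": 6.03,
    "order map": 4.97,
    "3D graph": 4.87,
    "QR code": 10.16,
}


def relative_change(time_min: float, baseline_min: float) -> float:
    """Return the change relative to the baseline, in percent.

    Negative values mean the design was faster than the baseline.
    """
    return (time_min - baseline_min) / baseline_min * 100.0


baseline = MEAN_TIME_MIN["paper list"]

# Relative change of each AR display design against the paper list,
# rounded to one decimal place (hypothetical summary, means only).
changes = {
    design: round(relative_change(t, baseline), 1)
    for design, t in MEAN_TIME_MIN.items()
    if design != "paper list"
}

for design, pct in changes.items():
    print(f"{design}: {pct:+.1f}% vs. paper list")
```

On these means alone, the order map and 3D graph displays come out roughly 18% and 19% faster than the paper list, whereas the QR code option comes out roughly 68% slower, which is the price paid for its guaranteed accuracy.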

4.3. Comfort/Discomfort and Sickness Ratings

The CRS results indicated that female participants gave significantly higher ratings in all dimensions of the CRS than their male counterparts. This could be due to multiple reasons. For example, female participants may have cared more about their appearance when wearing AR glasses, as the glasses could affect long hair and hairstyles, which may have influenced their emotional, attachment, perceived change, and anxiety responses. Regarding the AR device, the average CRS rating for the M400 (6.87) was significantly higher than that for the HoloLens 2 (5.75). The M400 had issues with unbalanced weight and the monocular visual display. The glasses could slip off due to the unbalanced weight, which was disturbing. Although the HoloLens 2 was heavier than the M400, its head-mounted design made it more secure on the head. Accordingly, the ratings for the attachment and movement dimensions of the HoloLens 2 were both lower than those of the M400 glasses.

The SSQ results indicated that the HoloLens 2 led to significantly lower ratings for both the blurred vision and difficulty focusing symptoms than the M400. This might be attributed to the binocular display and relatively large image design of this device. Eye strain was the leading undesirable symptom for both AR devices tested, which is consistent with findings in the literature [34,35,36]. In addition to eye strain, blurred vision was also a leading symptom for the M400 device. The images on the monocular display of the M400 were tiny. Participants had to hold the frame and focus their right eye on the order information in the tiny display, which could lead to discomfort. Our last hypothesis, that the subjective ratings of perceived comfort/discomfort are dependent on the design of the AR devices, was supported. However, the claim that subjective ratings of perceived comfort/discomfort are dependent on the order information display design was not supported.

4.4. Implications

An implication of this study is that wearing the AR devices tested in the current study for long periods at work may not be desirable. It is suggested that workers using an AR device to perform OP tasks should take the device off whenever it is not needed in order to avoid eye strain and other discomfort symptoms. Users of the HoloLens 2 may flip the glasses up when they do not need to view the virtual image. The M400 glasses, on the other hand, do not allow for such temporary removal and are not easy to take off. These shortcomings should be addressed in future redesigns of these devices.

4.5. Limitations

There are limitations to the current study. First, our storage areas were much smaller than real warehouses in industry, and OP tasks in industry are much more complicated than those in our study. Therefore, the results of our study may not be generalizable to real warehousing environments. Second, our participants were young individuals (18–22 years old). Job performance and comfort/discomfort perception when wearing an AR device may differ among people in different age groups. Future research may investigate the effects of age on performance and on the rating of comfort/discomfort when wearing AR glasses. Finally, only three order information display designs and two AR devices were tested in this study. Other designs may be explored and tested in the future to improve the performance of OP tasks with minimal worker discomfort.

5. Conclusions

Whether an AR device leads to better performance in OP tasks compared to using a paper list depends on the design of the order information display. The ideas of QR code, order map, and 3D graph picking in the current study are novel. The 3D graph display was the best-performing design, resulting in the shortest time to accomplish the OP tasks with fewer incorrect picks than order map and paper list picking. On the other hand, the QR code scanning and display design guaranteed 100% accuracy in order picking but sacrificed the efficiency of task completion. The results of this study help in understanding the pros and cons of the designs of both the order information displays and the AR devices tested. Shop engineers or job designers may decide which type of order-picking design using an AR device to adopt according to whether they prioritize efficiency or accuracy in the tasks being performed. This is one of the major contributions of this study. The results of the CRS and SSQ ratings indicate that the tested binocular HMD (HoloLens 2) led to significantly lower discomfort ratings than the tested monocular AR device (M400). There were complaints of eye strain for both AR devices tested. This implies that both AR devices examined in the current study may not be suitable to wear for an extended period of time. Users should remove the AR device whenever they do not need to view the virtual image to avoid eye strain and other discomfort symptoms.

Author Contributions

Conceptualization, K.W.L.; methodology, S.K.; software, S.K.; validation, L.P.; formal analysis, K.W.L. and S.K.; investigation, K.W.L. and L.P.; data curation, K.W.L. and S.K.; writing—original draft, K.W.L.; writing—review and editing, L.P.; visualization, S.K.; supervision, K.W.L.; funding acquisition, K.W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Council of Science and Technology of Taiwan (grant number NSTC 112-2221-E-216-002), and the 2nd Batch of 2022 MOE of PRC Industry-University Collaborative Education Program (Program No. 220705329202903, Kingfar-CES “Human Factors and Ergonomics” program).

Institutional Review Board Statement

This study was conducted according to the guidelines of the Declaration of Helsinki and was approved by an external committee (Central Regional Research Ethics Committee of the China Medical University, Taichung, Taiwan, CRREC 112-022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Abbreviations of Sickness and Discomfort Symptoms

| Sickness and Discomfort Symptoms | Abbreviation |
| --- | --- |
| Blurred vision | BV |
| Difficulty concentrating | DT |
| Difficulty focusing | DF |
| Dizziness with eyes closed | DC |
| Dizziness with eyes open | DO |
| Eye strain | ES |
| Fatigue | FG |
| General discomfort | GD |
| Headache | HA |
| Nausea | NS |
| Salivation increasing | SI |
| Sweating | ST |

References

1. Kim, S.; Nussbaum, M.A.; Gabbard, J.L. Influences of augmented reality head-worn display type and user interface design on performance and usability in simulated warehouse order picking. Appl. Ergon. 2019, 74, 186–193.
2. Shen, Y.; Ong, S.K.; Nee, A.Y. Augmented reality for collaborative product design and development. Des. Stud. 2010, 31, 118–145.
3. Webel, S.; Bockholt, U.; Engelke, T.; Gavish, N.; Olbrich, M.; Preusche, C. An augmented reality training platform for assembly and maintenance skills. Rob. Auton. Syst. 2013, 61, 398–403.
4. Wang, S.; Zargar, S.A.; Yuan, F.G. Augmented reality for enhanced visual inspection through knowledge-based deep learning. Struct. Health Monit. 2021, 20, 426–442.
5. Wang, J.; Feng, Y.; Zeng, C.; Li, S. An augmented reality based system for remote collaborative maintenance instruction of complex products. In Proceedings of the 2014 IEEE International Conference on Automation Science and Engineering (CASE), New Taipei, Taiwan, 18–22 August 2014; pp. 309–314.
6. Cirulis, A.; Ginters, E. Augmented reality in logistics. Procedia Comput. Sci. 2013, 26, 14–20.
7. Mendoza-Ramírez, C.E.; Tudon-Martinez, J.C.; Félix-Herrán, L.C.; Lozoya-Santos, J.d.J.; Vargas-Martínez, A. Augmented Reality: Survey. Appl. Sci. 2023, 13, 10491.
8. Bright, A.G.; Ponis, S.T. Introducing gamification in the AR-enhanced order picking process: A proposed approach. Logistics 2021, 5, 14.
9. Zhao, C.; Li, K.W.; Peng, L. Movement time for pointing tasks in real and augmented reality environments. Appl. Sci. 2023, 13, 788.
10. Li, K.W.; Nguyen, T.L.A. Movement time and subjective rating of difficulty in real and virtual pipe transferring tasks. Appl. Sci. 2023, 13, 10043.
11. Deng, W.; Li, F.; Wang, M.; Song, Z. Easy-to-use augmented reality neuronavigation using a wireless tablet PC. Stereotact. Funct. Neurosurg. 2014, 1, 17–24.
12. Watanabe, E.; Satoh, M.; Konno, T.; Hirai, M.; Yamaguchi, T. The trans-visible navigator: A see-through neuronavigation system using augmented reality. World Neurosurg. 2016, 87, 399–405.
13. Li, M.; Seifabadi, R.; Long, D.; De Ruiter, Q.; Varble, N.; Hecht, R.; Negussie, A.H.; Krishnasamy, V.; Xu, S.; Wood, B.J. Smartphone-versus smart glasses-based augmented reality (AR) for percutaneous needle interventions: System accuracy and feasibility study. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1921–1930.
14. Demir, S.; Yilmaz, I.; Paksoy, T. Augmented reality in supply chain management. In Logistics 4.0; Paksoy, T., Kochan, C.G., Ali, S.S., Eds.; CRC Press: Boca Raton, FL, USA, 2020; pp. 136–145.
15. Jumahat, S.; Sidhu, M.S.; Shah, S.M. Pick-by-vision of augmented reality in warehouse picking process optimization—A review. In Proceedings of the 2022 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 13–15 September 2022; pp. 1–6.
16. Lin, G.; Panigrahi, T.; Womack, J.; Ponda, D.J.; Kotipalli, P.; Starner, T. Comparing order picking guidance with Microsoft Hololens, Magic Leap, Google Glass XE and paper. In Proceedings of the 22nd International Workshop on Mobile Computing Systems and Applications, Virtual, 24–26 February 2021; pp. 133–139.
17. Murauer, C.S. Full Shift Usage of Smart Glasses in Order Picking Processes Considering a Methodical Approach of Continuous User Involvement. Ph.D. Thesis, Technische Universität, Berlin, Germany, 2019.
18. Rejeb, A.; Keogh, J.G.; Leong, G.K.; Treiblmaier, H.; Klinker, G.J.; Hamacher, D.; Schega, L.; Böckelmann, I.; Doil, F.; Tümler, J. Potentials and challenges of augmented reality smart glasses in logistics and supply chain management: A systematic literature review. Int. J. Prod. Res. 2021, 59, 3747–3776.
19. de Koster, R.; Le-Duc, T.; Roodbergen, K.J. Design and control of warehouse order picking: A literature review. Eur. J. Oper. Res. 2007, 182, 481–501.
20. Schwerdtfeger, B.; Reif, R.; Günthner, W.A.; Klinker, G.; Hamacher, D.; Schega, L.; Bockelmann, I.; Doil, F.; Tumler, J. Pick-by-vision: A first stress test. In Proceedings of the 2009 8th IEEE International Symposium on Mixed and Augmented Reality, Orlando, FL, USA, 19–22 October 2009; pp. 115–124.
21. Reif, R.; Günthner, W.A.; Schwerdtfeger, B.; Klinker, G. Evaluation of an augmented reality supported picking system under practical conditions. Comput. Graph. Forum 2010, 29, 2–12.
22. Reif, R.; Günthner, W.A. Pick-by-vision: Augmented reality supported order picking. Vis. Comput. 2009, 25, 461–467.
23. Smith, E.; Strawderman, L.; Chander, H.; Smith, B.K. A comfort analysis of using smart glasses during “picking” and “putting” tasks. Int. J. Ind. Ergon. 2021, 83, 103133.
24. Pereira, A.C.; Alves, A.C.; Arezes, P. Augmented Reality in a Lean Workplace at Smart Factories: A Case Study. Appl. Sci. 2023, 13, 9120.
25. Schwerdtfeger, B.; Reif, R.; Günthner, W.A.; Klinker, G.J. Pick-by-vision: There is something to pick at the end of the augmented tunnel. Virtual Real. 2011, 15, 213–223.
26. Weaver, K.; Baumann, H.; Starner, T.; Iben, H.; Lawo, M. An empirical task analysis of warehouse order picking using head-mounted displays. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 10–15 April 2010; pp. 1695–1704.
27. Baumann, H.; Starner, T.; Iben, H.; Lewandowski, A.; Zschaler, P. Evaluation of graphical user-interfaces for order picking using head-mounted displays. In Proceedings of the 13th International Conference on Multimodal Interfaces, Alicante, Spain, 14–18 November 2011; pp. 377–384.
28. Chen, S.K.; Ti, Y.W. A design of multi-purpose image-based QR code. Symmetry 2021, 13, 2446.
29. Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two theoretical models. Manag. Sci. 1989, 35, 982–1003.
30. Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.
31. Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Q. 2012, 36, 157–178.
32. Rauschnabel, P.A.; He, J.; Ro, Y.K. Antecedents to the adoption of augmented reality smart glasses: A closer look at privacy risks. J. Bus. Res. 2018, 92, 374–384.
33. Brusie, T.; Fijal, T.; Keller, A.; Lauff, C.; Barker, K.; Schwinck, J.; Calland, J.F.; Guerlain, S. Usability evaluation of two smart glass systems. In Proceedings of the 2015 Systems and Information Engineering Design Symposium, Charlottesville, VA, USA, 24 April 2015.
34. Han, J.; Bae, S.H.; Suk, H.-J. Comparison of visual discomfort and visual fatigue between head-mounted display and smartphone. IS&T Int. Symp. Electron. Imaging Sci. Technol. 2017, 29, 212–217.
35. Vujica Herzog, N.; Buchmeister, B.; Beharic, A.; Gajsek, B. Visual and optometric issues with smart glasses in Industry 4.0 working environment. Adv. Prod. Eng. Manag. 2018, 13, 417–428.
36. Wang, C.H.; Tsai, N.H.; Lu, J.M.; Wang, M.J.J. Usability evaluation of an instructional application based on Google Glass for mobile phone disassembly tasks. Appl. Ergon. 2019, 77, 58–69.
37. Kim, Y.M.; Bahn, S.; Yun, M.H. Wearing comfort and perceived heaviness of smart glasses. Hum. Factors Ergon. Manuf. 2021, 31, 484–495.
38. Knight, J.; Baber, C.; Schwirtz, A.; Bristow, H.W. The comfort assessment of wearable computers. Proc.-Int. Symp. Wearable Comput. 2022, 2, 65–72.
39. Knight, J.F.; Baber, C. Neck muscle activity and perceived pain and discomfort due to variations of head load and posture. Aviat. Space Environ. Med. 2004, 75, 123–131.
40. Vovk, A.; Wild, F.; Guest, W.; Kuula, T. Simulator sickness in augmented reality training using the Microsoft HoloLens. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–9.
41. Stockinger, C.; Steinebach, T.; Petrat, D.; Bruns, R.; Zöller, I. The effect of pick-by-light-systems on situation awareness in order picking activities. Procedia Manuf. 2020, 45, 96–101.
42. Li, W.-C.; Zhang, J.; Court, S.; Kearney, P.; Braithwaite, G. The influence of augmented reality interaction design on Pilot’s perceived workload and situation awareness. Int. J. Ind. Ergon. 2022, 92, 103382.
43. Knight, J.F.; Baber, C. Effect of head-mounted displays on posture. Hum. Factors 2007, 49, 797–807.
44. Lee, L.H.; Hui, P. Interaction methods for smart glasses: A survey. IEEE Access 2018, 6, 28712–28732.
45. Gerdenitsch, C.; Sackl, A.; Hold, P. Augmented reality assisted assembly: An action regulation theory perspective on performance and user experience. Int. J. Ind. Ergon. 2022, 92, 103384.
46. Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.
47. Vlad, R.; Nahorna, O.; Ladret, P.; Guérin, A. The influence of the visualization task on the Simulator Sickness symptoms-a comparative SSQ study on 3DTV and 3D immersive glasses. In Proceedings of the 3DTV Vision Beyond Depth (3DTV-CON), Aberdeen, UK, 7–8 October 2013; pp. 1–4.
48. Dorloh, H.; Li, K.W.; Khaday, S. Presenting job instructions using an augmented reality device, a printed manual, and a video display for assembly and disassembly tasks: What are the differences? Appl. Sci. 2023, 13, 2186.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).