Abstract

Aerial manipulator systems possess active operational capability, and incorporating various sensors further enhances their autonomy. In this paper, we address the challenge of accurate positioning between an aerial manipulator and its operational targets during tasks such as grasping and delivery in indoor environments without a motion capture system. We propose a vision-guided aerial manipulator system comprising a quad-rotor UAV and a single-degree-of-freedom manipulator. First, the overall structure of the aerial manipulator is designed, and a hierarchical control system is established. We fuse LiDAR-based SLAM (simultaneous localization and mapping) with IMU (inertial measurement unit) data to enhance the positioning accuracy of the aerial manipulator. Real-time target detection and recognition are achieved by combining a depth camera with a laser sensor for distance measurement, enabling adjustment of the grasping pose of the aerial manipulator. Finally, we employ a segmented grasping strategy to position and grasp the target object precisely. Experimental results demonstrate that the designed aerial manipulator system keeps its orientation stable within ±5° during operation, and its position movement is independent of orientation changes. The successful autonomous grasping of lightweight cylindrical objects in real-world scenarios verifies the effectiveness of the proposed system and indicates high operational efficiency and robust disturbance resistance.

1. Introduction

With the development of indoor positioning and navigation systems and intelligent control technologies, rotary-wing unmanned aerial vehicles (UAVs) have gained increasing attention in various fields. Their simple structure, agile movement, and ability to hover in place make them widely applicable in indoor search, disaster site patrols, and fire extinguishing [1,2,3]. In these applications, the rotary-wing UAV is typically used as an environmental perception and motion platform equipped with sensors. During its flight, it does not contact the external environment, lacking the ability to interact with it actively [4]. By equipping UAVs with an aerial manipulator, effectively forming an aerial manipulator system, their active operational capabilities can be significantly enhanced, enabling them to perform tasks such as sample collection in extreme environments and transfer and delivery of hazardous materials, increasing the interaction capability between rotary-wing UAVs and the environment [5].

Given that these aerial manipulator systems can perform operations in the air with high precision, they have emerged as a new research focus in robotics [6]. Typically, the aerial manipulator comprises a rotary-wing UAV and a multi-degree-of-freedom manipulator. The rotary-wing UAV possesses high dimensionality, underactuation, and nonlinearity. When operating with a multi-degree-of-freedom manipulator, the overall dimensionality of the aerial manipulator system increases significantly, greatly expanding the application range of rotary-wing UAVs and providing broad prospects and societal implications [7].

However, the accuracy of UAV positioning and target grasping in aerial manipulators still requires improvement. To address this challenge, prior research has achieved fast grasping with an aerial manipulator by using a motion capture system (Vicon, Hauppauge, NY, USA) for UAV localization [8]. Autonomous grasping that relies solely on the UAV’s onboard perception, however, has yet to be fully realized. In practical applications especially, the manipulator is prone to losing target information during aerial operation, making it challenging to reconstruct three-dimensional spatial information and estimate depth in real time. Therefore, this paper analyzes the latest research and development trends in aerial manipulators and robot grasping, and focuses on the key technologies involved in grasping with an aerial manipulator in indoor environments without a motion capture system (Vicon). We propose a vision-guided autonomous positioning and control system for the aerial manipulator. We design a hierarchical control system for the aerial manipulator’s pose and use visual guidance for feature extraction and grasping pose estimation. Considering the range of motion during target grasping and the requirement to maintain a safe distance from the ground, a laser sensor is introduced to measure the distance between the aerial robot and the target object. An iterative algorithm is applied for target searching. Finally, autonomous grasping of lightweight cylindrical objects is achieved.

The main contributions of our study are as follows:

- (1) We propose an integrated platform for a single-degree-of-freedom aerial manipulator that is low-cost, structurally compact, and easy to implement in hardware.

- (2) We design a hierarchical control system for the aerial manipulator in which UAV attitude and position control are handled by the Pixhawk 4 flight controller through inner- and outer-loop PID algorithms, while the manipulator and its end-effector are controlled by the Jetson Xavier NX.

- (3) We propose a visual guidance algorithm for the real-time perception of target object position information by fusing a depth camera with laser-ranging data. Ultimately, a segmented grasping strategy is employed to achieve autonomous object retrieval. This system can effectively address target loss, 3D spatial information reconstruction, and real-time depth estimation during aerial operations.

- (4) We propose a SLAM positioning method that integrates LiDAR and IMU, effectively addressing the low positioning accuracy of existing SLAM algorithms in the three-dimensional motion of UAVs, reducing cumulative errors, and enhancing map-building results.

This paper has six sections. Section 1 introduces the background and significance of the aerial manipulator and summarizes relevant research. Section 2 discusses the related work in the field of aerial manipulators. Section 3 presents the hardware system of the aerial manipulator. The rotary-wing UAV and manipulator each have a control system with coupled interactions to achieve stable flight and precise positioning of the manipulator. Section 4 provides a detailed analysis of the implementation approach for autonomous localization and grasping of the aerial manipulator. Section 5 presents experimental analysis through simulations and physical demonstrations of target grasping tasks. Section 6 presents the conclusions of our work.

2. Related Works

Research on aerial manipulator systems focuses primarily on the system’s structure, control system approach, and visual navigation strategy. For example, in the field of aerial manipulator system structure, Meng et al. [9] used a 5-DOF aerial manipulator system to achieve the grasping of cylindrical objects. Suarez et al. [10] designed a humanoid dual-arm aerial manipulator for grasping operations. Meanwhile, considering the high cost and risk involved in large infrastructure inspections, Hamaza et al. [11] utilized a rotary-wing UAV equipped with a compact single-DOF manipulator to perform facility inspections and sensor installation. Yamada et al. [12] researched a three-arm aerial manipulator using a flying robot for rope fixation and removal tasks and developed a manipulator structure suitable for complex operations. Peng et al. [13] presented a novel aerial manipulator system with a self-locking universal joint mechanism. They also designed a dynamic gravity compensation mechanism and optimized the battery placement and gear ratio to minimize the weight imbalance during arm movements. Additionally, Zhang et al. [14] designed a novel aerial manipulator structure with a 5-DOF manipulator and batteries placed at the front and rear of a quad-rotor UAV, respectively. This design expanded the workspace and minimized the center of mass offset. Experimental results demonstrated the successful capture of targets on a moving platform.

Regarding the aerial manipulator control system, Chaikalis et al. [15] proposed an aerial manipulator system with a low-dimensional simplified mode. The system was validated through simulated aerial flights in various target-tracking scenarios. Furthermore, Chaikalis et al. [16] addressed the issue related to team coordination for transporting payloads with aerial manipulator systems. A leader–follower setup was implemented to increase the system’s autonomy, where each manipulator’s gripper was equipped with force/torque sensors to enhance the UAV’s flight stability. The proposed control method was validated through simulation in the Gazebo environment. Meanwhile, Nguyen et al. [17] presented the system design, modeling, and control of a chain-based aerial manipulator. This system provided a hybrid modeling framework for modeling the system in free-flight and aerial manipulation modes. Moreover, Nekoo et al. [18] proposed a constraint-oriented design optimization method for dual-arm aerial robots to increase the robot’s workspace while reducing weight. In this configuration, the arms are separated from the aerial platform through long-distance linkages similar to pendulums, improving safety in human interaction scenarios.

Due to the development of machine vision and the decreasing cost of visual sensors, research on using vision sensors to obtain target object position information and estimate grasping poses in aerial manipulators has gained widespread attention. Luo et al. [19] proposed a machine vision servoing method based on natural features to control an aerial manipulator for autonomous aerial grasping of target objects. Thomas et al. [20] used an image-based visual servoing (IBVS) controller to control a simple gripper for autonomously grasping stationary objects. However, the depth estimation in the IBVS controller may be less accurate. Accordingly, Wu et al. [21] adopted a UAV vision-based object detection method based on the YOLOv3 framework to identify target objects in complex environments using a gimbal camera. Meanwhile, Zhou et al. [22] designed a three-dimensional point cloud extraction method that combines object detection and background subtraction to improve the grasp evaluation strategy. The proposed method was experimentally validated to estimate effective grasping poses for aerial manipulators. Chen et al. [23] presented an IBVS control strategy for tracking and grasping moving targets in UAV systems. The method was validated through simulation and demonstrated effective tracking and grasping of moving targets. Hu et al. [24] proposed an image-based underactuated aerial manipulator control scheme with an image space visual servoing controller on a dynamic horizontal plane. Moreover, it decoupled the attitude tracking task from visual impedance control to address the underactuated nature of the system. Ramon-Soria et al. [25] described a system with dual manipulator arms and an RGB-D camera. The position information of the target object was obtained using artificial neural networks, and the grasping position and pose of the aerial manipulator were estimated by establishing a 3D model of the target object. Kim et al. [26] proposed a guidance method based on IBVS comprising a passive adaptive controller based on position and velocity control to achieve autonomous grasping and transportation of target objects. Seo et al. [27] introduced a vision-guided control method utilizing an image-based cylinder detection algorithm to identify elliptical surfaces and perform aerial operations. Kanellakis et al. [28] used stereo-depth perception to extract the target’s position in the surrounding scene, obtaining the relative pose of the aerial manipulator and target object.

While this research indicates that the aerial manipulator system has become a research hotspot in robotics, existing results remain narrow in scope. Indeed, the control, positioning, and navigation of aerial manipulator systems continue to face challenges when interacting with the environment. Regarding the structure of the aerial manipulator, numerous platform designs have been proposed for specific application scenarios [9,10,11,12,13,14]. However, these are limited by complex structures, low control accuracy, and insufficient robustness. Therefore, developing a flexible and highly expandable aerial manipulator structure is the main focus of this study.

Moreover, the aerial manipulator control algorithms proposed in previous studies require relatively complex kinematic and dynamic modeling. Most remain at the simulation stage and are challenging to apply in actual systems, so flight tests on a physical platform have yet to be achieved [12,14,15,19,21,23]. Therefore, this paper focuses on developing an experimental platform for a UAV with a manipulator that covers lower-level control, positioning and navigation, and top-level application algorithms, ultimately achieving target object grasping in real flight.

In addition, regarding the navigation and positioning strategy of the aerial manipulator, most studies rely on a motion capture system (Vicon) [26,27,28]. Although the Vicon system can significantly improve the positioning accuracy of flying robots, its high price limits the aerial manipulator’s application.

Accordingly, this paper proposes an effective control method and a positioning and navigation method for an aerial manipulator in an indoor environment without a Vicon system. A vision-guided algorithm is proposed to perceive the target’s position information in real time and adjust the attitude of the aerial manipulator. This study is intended to guide other researchers in constructing experimental platforms and in the autonomous control of aerial manipulators.

3. Hardware Structure and Control Method of Aerial Manipulator System

3.1. Hardware Structure of Aerial Manipulator System

The aerial manipulator system combines the flexible operation of the manipulator with the maneuverable flight and precise hovering capabilities of the quad-rotor UAV. This system can actively influence the surrounding environment and perform tasks, including approaching, grasping, and delivering target objects [29].

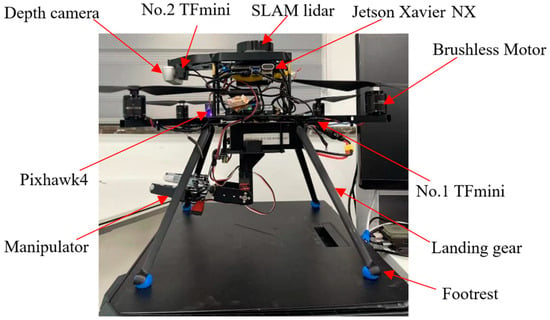

Considering the payload, stability, and system complexity, the hardware platform of the aerial manipulator system is shown in Figure 1. It comprises two main components: the quad-rotor UAV body and a single-degree-of-freedom manipulator. The structure is constructed using lightweight materials, and the mechanical design is compact, light, and flexible. The system’s overall weight is approximately 2016 g (including the battery), and it can achieve a flight endurance of approximately 18 min when equipped with a 5300 mAh 4S battery. The landing gear designed for this platform seamlessly integrates with the main body of the UAV. The footrests utilize sponge balls with shock-absorbing capabilities, enabling safe landing and take-off even in complex and dynamic terrain conditions. In the event of a crash, only the landing gear will become damaged, preventing the destruction of other structural components of the aircraft.

Figure 1. Hardware structure of the aerial manipulator.

In this system, the quad-rotor UAV utilizes an F450 carbon fiber frame with a wheelbase length of 450 mm. It is equipped with 920 KV brushless motors and Hobbywing 20A ESCs and is fitted with 10-inch nylon propellers. The flight control system is the Pixhawk 4, an open-source flight controller based on the NuttX operating system and developed by the Pixhawk team (http://docs.px4.io/main/en/flight_controller/pixhawk4.html, accessed on 5 March 2023). Pixhawk 4 has an integrated 3-axis 16-bit accelerometer/3-axis gyroscope ICM-20689. Furthermore, the UAV is equipped with the NVIDIA Jetson Xavier NX processor (https://www.nvidia.cn/autonomous-machines/embedded-systems/jetson-xavier-nx/, accessed on 8 March 2023), possessing powerful artificial intelligence computing capabilities. It also incorporates the Slamtec RPLIDAR S2, an omnidirectional LiDAR, for SLAM map construction and autonomous navigation (https://www.slamtec.com/cn/S2?wt.mc_id=Slbd_qg_ld_100142pc&sdclkid=AL2G15o615F_152R&bd_vid=6769858087028764337, accessed on 10 April 2023). The installation of the LiDAR on the UAV should meet the following requirements: horizontal angle between 0° and +2° (tilted upwards); opening angle of ≥270°, preferably at the front and rear of the UAV; angle of coverage of ≥220°. The sensor should avoid a scanning blind spot of 15°. In addition, a TFmini laser sensor (https://www.benewake.com/TFminiS/index.html?proid=325, accessed on 12 April 2023) is installed on the manipulator of the UAV for altitude control. In Figure 1, the TFmini labeled “1” represents the laser sensor mounted on the manipulator.

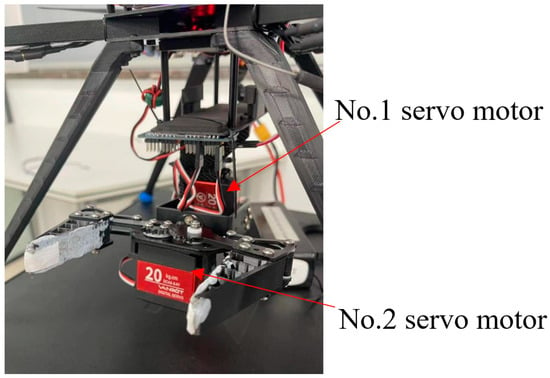

Considering that a heavy hardware platform increases the overall payload of the system, and that too many degrees of freedom in the manipulator reduce system stability and robustness, this paper adopts a single-degree-of-freedom manipulator. Specifically, it has one pitch degree of freedom. The manipulator is depicted in Figure 2. The mechanism comprises the manipulator and the gripper. The manipulator’s joints drive the motion of the gripper, which extends forward from the central position of the quad-rotor UAV to align the arm and the UAV’s center of gravity in a vertical direction. The arm joints utilize servo motors for motion control, communicating via half-duplex asynchronous serial communication with a maximum speed of 1 Mbps. The PCA9685 16-channel servo driver board (https://singtown.com/learn/49611, accessed on 6 May 2023) is used for motor control. Two HWZ020 servo motors are employed: the joint-one servo motor operates the opening and closing of the gripper (No. 1 servo motor in Figure 2), while the other controls the grasping action of the gripper (No. 2 servo motor). The maximum angle of rotation of the servo motors is 180°.

Figure 2. Manipulator and gripper.

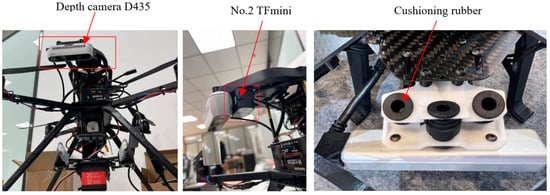

To accomplish precise positioning and grasping of target objects in the aerial manipulator system, a D435 depth camera and a TFmini laser sensor (No. 2 TFmini in Figure 3) are both installed at the front of the quad-rotor UAV. The D435 depth camera is positioned directly in front of the center of the gripper. Compared to a regular camera, the D435 obtains a clearer RGB image, enabling precise identification of target objects from a clear global exposure and a panoramic viewpoint. Additionally, cushioning rubber is installed between the depth camera and the drone to reduce the misidentification caused by the minor motion and high-frequency oscillation generated by the UAV during flight. The No. 2 TFmini sensor is installed behind the D435 camera to determine whether the gripper can grasp the target object. Importantly, this sensor must align with the hollow space in the center of the gripper, and the ranging laser should pass through the space between the gripper fingers without touching them. When a target object is in the gripper, the measured distance should be between 28 and 30 cm. By default, the manipulator is in a retracted position. After the quad-rotor takes off, autonomous positioning is achieved through LiDAR-based SLAM map construction. The UAV then searches for the target object, and the D435 collects the target’s characteristic information, which is used to generate control signals that adjust the swinging angle of the manipulator.

Figure 3. The depth camera D435 and the laser sensor No. 2 TFmini.

In summary, the designed vision-guided aerial manipulator system has a compact structure with a moderate weight, ensuring stable flight performance. It can grasp, carry, and deliver target objects with a maximum payload capacity of around 400 g. The primary physical structural parameters of the system are listed in Table 1.

Table 1. Parameters of the aerial manipulator system.

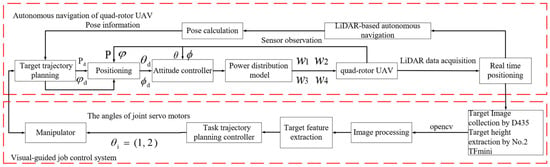

3.2. Implementation of the Aerial Manipulator System

The control system proposed for the aerial manipulator adopts a hierarchical control approach comprising the autonomous navigation system for quad-rotor UAVs and the vision-guided manipulator operation control system. The autonomous navigation system for quad-rotor UAVs includes real-time positioning and attitude control via LiDAR. The vision-guided manipulator operation control system extracts target object feature information and controls the arm for grasping tasks. The entire control system for the aerial manipulator is shown in Figure 4.

Figure 4. Control system of the aerial manipulator.

The implementation process is as follows:

First, the UAV takes off and uses LiDAR-based SLAM to build a map of its surroundings and generate a target trajectory. The trajectory is then processed by the attitude solution module to obtain the desired position $P_d$ and the desired attitude angle, which are output to the position controller. The actual position of the UAV, obtained from the sensors, is denoted as $P$. Using the PID control algorithm, a linear relationship is established for the error between the desired and actual positions, $e_p = P_d - P$, which is represented as follows:

$$\ddot{e}_p + K_d\,\dot{e}_p + K_p\,e_p + K_i \int e_p\,\mathrm{d}t = 0 \tag{1}$$

where $K_p$, $K_i$, and $K_d$ represent the proportional, integral, and derivative coefficients, respectively; $\dot{e}_p$ represents the first-order derivative of $e_p$; and $\ddot{e}_p$ represents the second-order derivative of $e_p$.

Second, based on Equation (1), the position controller calculates the UAV’s total lift, desired roll angle $\phi_d$, and desired pitch angle $\theta_d$ through the dynamic equations [30]. The actual roll angle $\phi$ and pitch angle $\theta$, obtained from the sensors, are input into the attitude controller module. Using PD control and passing the result through a dynamic allocation model, the rotation speed of each motor is resolved.

Third, when the UAV enters the operational range, it uses the D435 depth camera to search for the target object. The No. 2 TFmini laser sensor measures the height of the UAV above the target object, enabling the extraction of three-dimensional feature information of the target object. Finally, the control system outputs the angles of the joint servo motors to the servo motor controller, which sets the grasping pose and drives the manipulator to complete the grasping task.
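The outer-loop position control described above can be illustrated with a minimal single-axis sketch. It is not the PX4 implementation running on the Pixhawk 4: the class name `AxisPID`, the gains, and the unit-mass double-integrator plant are assumptions chosen only to show how a position error is turned into a commanded acceleration.

```python
from dataclasses import dataclass


@dataclass
class AxisPID:
    """PID law for one position axis (illustrative gains, not tuned values)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0
    initialized: bool = False

    def update(self, desired: float, actual: float, dt: float) -> float:
        """Return a commanded acceleration computed from the position error."""
        error = desired - actual
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a spurious kick.
        derivative = (error - self.prev_error) / dt if self.initialized else 0.0
        self.prev_error = error
        self.initialized = True
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_step(pid: AxisPID, target: float,
                  steps: int = 4000, dt: float = 0.005) -> float:
    """Drive a unit-mass double integrator (assumed plant) toward `target`."""
    position, velocity = 0.0, 0.0
    for _ in range(steps):
        accel = pid.update(target, position, dt)
        velocity += accel * dt
        position += velocity * dt
    return position


def demo() -> None:
    final = simulate_step(AxisPID(kp=4.0, ki=0.5, kd=3.0), target=1.0)
    print(f"final position after 20 s: {final:.3f} m")
```

Calling `demo()` runs a 20 s simulated step toward 1 m. On the real vehicle the commanded acceleration would be converted into total lift and desired roll and pitch angles before reaching the attitude loop; that conversion is omitted here.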

4. Autonomous Positioning and Grasping

The designed aerial manipulator system achieves autonomous positioning and navigation through LiDAR-based SLAM map construction after the UAV takes off. It performs target search, gathers target object features using the depth camera D435 and laser sensor TFmini, extracts target feature information, obtains the target’s coordinate information, and transmits control signals to adjust the swinging angle of the manipulator to achieve accurate target grasping. Below, we discuss the SLAM-based autonomous positioning, visual navigation algorithm for grasping targets, and control method for aerial manipulator operations guided by vision.

4.1. SLAM-Based Autonomous Positioning and Navigation System

The autonomous positioning of quad-rotor UAVs is a requisite for indoor obstacle avoidance, path planning, and grasping. Localization is the primary challenge; currently, quad-rotor UAV localization primarily falls into two categories [31,32]: obtaining position information through the UAV’s perception or relying on external devices, such as the motion capture system (Vicon). However, the Vicon systems are expensive and require specialized environments, restricting the application range of aerial manipulators.

Therefore, we integrated data from low-cost sensors, namely the LiDAR and IMU, with a laser altimeter module. SLAM [33,34] was used to achieve high-precision pose estimation and environment mapping and to realize indoor autonomous navigation for aerial manipulators.

4.1.1. System Framework of the LiDAR SLAM-Based Positioning Method

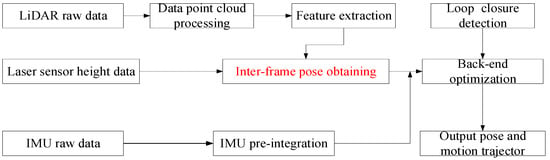

To meet the high-precision autonomous positioning requirements of the aerial manipulator, the SLAM-based positioning method comprises three parts: LiDAR odometry [35], the IMU module, and back-end optimization. The overall framework is illustrated in Figure 5.

Figure 5. Framework of the SLAM-based positioning method.

4.1.2. LiDAR Odometry

First, the raw point cloud collected by the LiDAR sensor is preprocessed. During the UAV flight, laser beams may hit screens, sky, or other non-reflective surfaces, causing laser degradation and refraction and resulting in invalid returns. Such laser points must be removed using a point cloud library tool. Subsequently, a breadth-first traversal method is employed to downsample the point cloud, reducing the number of laser points and eliminating uncertain objects in the environment. Equation (2) computes the curvature of laser points [36]:

$$c = \frac{1}{|S| \cdot \left\| X_{(k,i)}^{L} \right\|} \left\| \sum_{j \in S,\, j \neq i} \left( X_{(k,i)}^{L} - X_{(k,j)}^{L} \right) \right\| \tag{2}$$

where $X_{(k,i)}^{L}$ represents the $i$-th point of the current frame $k$ under the coordinate system of the LiDAR, $S$ denotes the set of neighboring points of $X_{(k,i)}^{L}$, and $c$ represents the curvature of the point. The laser points are arranged in descending order based on their curvature values. Larger curvatures indicate lower smoothness and correspond to corner points, while smaller curvatures indicate higher smoothness and correspond to line points. Here, two curvature thresholds, $c_{\max}$ and $c_{\min}$, are set in descending order. Neutral points, whose curvature lies between the two thresholds, are eliminated based on Equation (3), resulting in the current frame’s point cloud $P_k$, as follows:

$$P_k = \left\{ X_{(k,i)}^{L} \;\middle|\; c > c_{\max} \ \text{or} \ c < c_{\min} \right\} \tag{3}$$

Second, we perform inter-frame matching, a crucial step for constraining the pose relationship between frames. We use a point-line registration approach [37] to improve computational efficiency and ensure real-time performance. Corner points and line points extracted from the current frame point cloud $P_k$ are labeled as $P_k^{c}$ and $P_k^{l}$, respectively. The corner points and line points extracted from the previous frame are denoted as $P_{k-1}^{c}$ and $P_{k-1}^{l}$, respectively. Since the UAV is in motion from time $t_{k-1}$ to $t_k$, the pose associated with each point is adjusted to the corresponding time. The laser points of the current frame are projected onto the initial time of the frame, denoted as $\tilde{P}_k^{c}$ and $\tilde{P}_k^{l}$. Corner point matching involves selecting a point in $\tilde{P}_k^{c}$ and identifying the two closest points in $P_{k-1}^{c}$ to form a distance constraint between a point and a line. Line point matching involves selecting a point in $\tilde{P}_k^{l}$ and identifying the three closest points in $P_{k-1}^{l}$ to form a distance constraint between a point and a plane. We use the Levenberg-Marquardt optimization method to identify the rotation and translation matrices that minimize these two distance constraints. This yields the pose transformation obtained through inter-frame matching.
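The curvature-based feature selection described in this subsection can be sketched as follows. This is a minimal illustration in the style of LOAM-type odometry, not the authors’ implementation: the function names, the neighbourhood half-width `half_window`, and the threshold values used in the usage note are assumptions, and the input is taken to be one scan line ordered by scan angle.

```python
import numpy as np


def scan_curvature(points: np.ndarray, half_window: int = 5) -> np.ndarray:
    """Curvature of each point in one ordered scan line.

    points: (N, 3) array in the LiDAR frame, ordered by scan angle.
    Points without a full neighbourhood on both sides are left as NaN.
    """
    points = np.asarray(points, dtype=float)
    n = len(points)
    curvature = np.full(n, np.nan)
    for i in range(half_window, n - half_window):
        neighbours = np.vstack((points[i - half_window:i],
                                points[i + 1:i + 1 + half_window]))
        # Sum of difference vectors between the point and its neighbours.
        diff_sum = np.sum(points[i] - neighbours, axis=0)
        norm_i = np.linalg.norm(points[i])
        if norm_i > 0.0:
            curvature[i] = np.linalg.norm(diff_sum) / (len(neighbours) * norm_i)
    return curvature


def split_features(curvature: np.ndarray, c_max: float, c_min: float):
    """Return indices of corner points (c > c_max) and line points (c < c_min).

    Points between the two thresholds are treated as neutral and dropped.
    """
    valid = ~np.isnan(curvature)
    filled = np.where(valid, curvature, c_min)  # NaN entries match neither test
    corners = np.flatnonzero(valid & (filled > c_max))
    lines = np.flatnonzero(valid & (filled < c_min))
    return corners, lines
```

For an L-shaped wall sampled every 0.1 m, the point at the bend receives the largest curvature and points on the straight segments receive values near zero, so thresholds such as `c_max=0.1` and `c_min=0.01` (hypothetical values) separate the two classes.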

4.1.3. IMU Module

A LiDAR sends out all data from the same frame simultaneously. However, the UAV is constantly in motion, so the position of the LiDAR’s coordinate system changes while a frame is being collected, which distorts the collected LiDAR data. Assuming the UAV undergoes uniform motion, the IMU module performs distortion correction on the LiDAR data. The IMU provides angular velocity and linear acceleration measurements. By considering the installation positions of the IMU and LiDAR, the transformation relationship between the IMU and LiDAR coordinate systems can be determined. The point cloud is then transformed to the IMU coordinate system. The pose transformation between two consecutive IMU frames is represented by Equation (4):

The transformation of the LiDAR pose at time $k+1$ relative to the LiDAR pose at time $k$ is given by Equation (5):
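The relationship between IMU motion and LiDAR motion described above can be sketched with homogeneous transforms. This is one common way to express it, not necessarily the exact form of Equations (4) and (5): the helper names are hypothetical, `T_imu_lidar` is assumed to be the fixed pose of the LiDAR expressed in the IMU frame, and the IMU poses are assumed to be already available from integrating its measurements.

```python
import numpy as np


def make_transform(rotation: np.ndarray, translation) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def rot_z(angle_rad: float) -> np.ndarray:
    """Rotation matrix for a rotation of `angle_rad` about the z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def relative_pose(T_prev: np.ndarray, T_curr: np.ndarray) -> np.ndarray:
    """Motion between two consecutive poses, expressed in the earlier frame."""
    return np.linalg.inv(T_prev) @ T_curr


def lidar_motion_from_imu(T_imu_rel: np.ndarray,
                          T_imu_lidar: np.ndarray) -> np.ndarray:
    """Convert a relative IMU motion into the corresponding LiDAR motion.

    Follows from T_world_lidar = T_world_imu @ T_imu_lidar at both times.
    """
    return np.linalg.inv(T_imu_lidar) @ T_imu_rel @ T_imu_lidar
```

As a sanity check, if the IMU yaws by 90° in place and the LiDAR sits 0.1 m ahead of it, the LiDAR undergoes the same rotation plus a translation of (-0.1, 0.1, 0) m in its initial frame.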

4.1.4. Back-End Optimization

The loop closure detection module [36] determines whether a loop closure has occurred in the system by calculating the Euclidean distance between the current frame and previous keyframes. The keyframes that meet the criteria are stored in a subset (i.e., subkeyframes). For each point in the subkeyframes, a corresponding point is searched in the current frame to form a distance constraint. We then use the ICP (iterative closest point) algorithm [38] to compute the optimal rotation and translation matrices that satisfy the constraints. The transformation parameters are solved by constructing an error function using the matched points, aiming to minimize the error, as shown in Equation (6):

$$E(R, T) = \frac{1}{n} \sum_{i=1}^{n} \left\| q_i - \left( R\,p_i + T \right) \right\|^{2} \tag{6}$$

where $p_i$ is a point in the original point cloud $P$; $q_i$ is the point in the historical point cloud $Q$ that has the minimum Euclidean distance to $p_i$; and $n$ represents the number of points in the subset of keyframes.

By using the obtained transformation matrices $R$ and $T$, we update the point cloud in the subset of keyframes. We then continue to construct the error function until the result is smaller than the set threshold or the iteration count reaches the specified limit.
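The iteration described above can be sketched as a basic point-to-point ICP. This is a textbook variant given for illustration, under several assumptions: correspondences are found by brute-force nearest-neighbour search, each alignment step is solved in closed form with an SVD, and the function names, tolerance, and iteration cap are hypothetical.

```python
import numpy as np


def best_fit_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares rigid transform (R, t) mapping paired src points onto dst."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:  # guard against a reflection solution
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t


def nearest(src: np.ndarray, dst: np.ndarray):
    """Index of the closest dst point for each src point, and the mean squared distance."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
    idx = d2.argmin(axis=1)
    return idx, d2[np.arange(len(src)), idx].mean()


def icp(src: np.ndarray, dst: np.ndarray, max_iter: int = 30, tol: float = 1e-12):
    """Iterate matching and alignment until the error is below tol or max_iter is hit."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = src.copy()
    for _ in range(max_iter):
        idx, err = nearest(current, dst)
        if err < tol:
            break
        R, t = best_fit_transform(current, dst[idx])
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
    _, err = nearest(current, dst)
    return R_total, t_total, err
```

The brute-force search costs O(nm) per iteration, which is acceptable for a small subkeyframe set; a k-d tree would be the usual replacement for larger clouds.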

4.2. Visual Navigation Algorithms for Grasping Targets

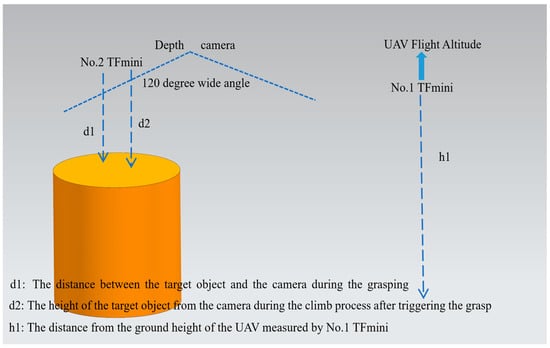

4.2.1. Vision-Guided Laser Range Measurement

The vision-guided laser range measurement used in this paper is shown in Figure 6. First, the two-dimensional image of the target object is captured with the D435 depth camera, whose 120° wide field of view facilitates rapid target identification. Next, an edge detection algorithm implemented with OpenCV extracts the contour of the target object. The No. 2 TFmini sensor then measures, in real time, the height of the UAV relative to the target object. Finally, by transforming coordinates between the target object and the camera coordinate system, the positional relationship between the UAV and the target object is established, which allows the manipulator’s joint servo angles to be adjusted for precise grasping.

Figure 6. Principle of laser ranging based on visual guidance.
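How a detected pixel and a laser range combine into a three-dimensional position can be illustrated with the standard pinhole camera model. This is a sketch under stated assumptions rather than the authors’ procedure: the laser reading is taken as the depth along the camera’s optical axis, and the intrinsic parameters `fx`, `fy`, `cx`, and `cy` are placeholders that would come from camera calibration.

```python
def pixel_to_camera(u: float, v: float, depth_m: float,
                    fx: float, fy: float, cx: float, cy: float):
    """Back-project pixel (u, v) at a known depth into camera coordinates.

    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Returns (x, y, z) in metres in the camera frame.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)
```

For example, with the hypothetical intrinsics `fx = fy = 600`, `cx = 320`, `cy = 240`, a target centred 60 pixels to the right of the principal point at a range of 1.2 m lies about 0.12 m to the right of the optical axis.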

4.2.2. Target Grasping Analysis

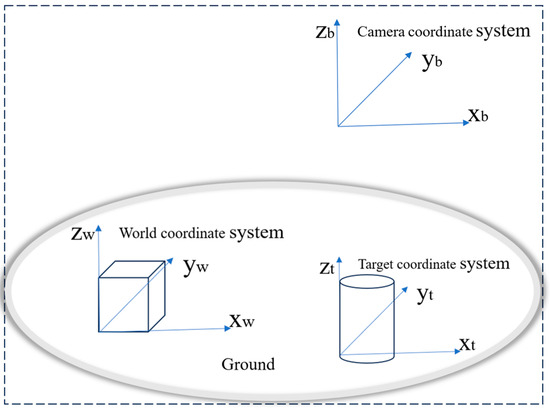

By integrating the depth camera and laser ranging data, the relative coordinates of the UAV and the target object are obtained. Combined with the UAV’s own pose data, the current three-dimensional spatial position of the target object in the world coordinate system is obtained. Figure 7 presents the target object grasping scenario in the aerial manipulator system, including the world coordinate system W, the camera coordinate system C, and the target coordinate system. Hereafter, we describe the process of transforming the target’s coordinates from the camera coordinate system to the world coordinate system.

Figure 7. Coordinate system of the target grasping scene.

First, the camera’s attitude can be obtained from the UAV’s onboard inertial navigation system. The obtained camera attitude quaternion is denoted as $q = [q_0, q_1, q_2, q_3]^{T}$, and its corresponding rotation matrix $R$ can be represented as [39]:

$$R = \begin{bmatrix} 1 - 2(q_2^2 + q_3^2) & 2(q_1 q_2 - q_0 q_3) & 2(q_1 q_3 + q_0 q_2) \\ 2(q_1 q_2 + q_0 q_3) & 1 - 2(q_1^2 + q_3^2) & 2(q_2 q_3 - q_0 q_1) \\ 2(q_1 q_3 - q_0 q_2) & 2(q_2 q_3 + q_0 q_1) & 1 - 2(q_1^2 + q_2^2) \end{bmatrix} \tag{7}$$

Assume that the coordinates of the target object in the world coordinate system and camera coordinate system are represented as $P_w$ and $P_c$, respectively. Let $T$ denote the translation matrix between the world coordinate system and the camera coordinate system, obtained using the camera coordinates provided by the UAV’s inertial navigation system. Therefore, our algorithm ultimately calculates the coordinates of the target object in the world coordinate system as follows:

$$P_w = R\,P_c + T \tag{8}$$
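The camera-to-world transformation described above can be written as a short numerical sketch. It assumes a unit quaternion in scalar-first order (w, x, y, z) that rotates camera-frame vectors into the world frame; the function names are illustrative only.

```python
import numpy as np


def quat_to_rot(q) -> np.ndarray:
    """Rotation matrix of a scalar-first quaternion (normalized internally)."""
    q = np.asarray(q, dtype=float)
    q0, q1, q2, q3 = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (q2**2 + q3**2), 2 * (q1*q2 - q0*q3),     2 * (q1*q3 + q0*q2)],
        [2 * (q1*q2 + q0*q3),     1 - 2 * (q1**2 + q3**2), 2 * (q2*q3 - q0*q1)],
        [2 * (q1*q3 - q0*q2),     2 * (q2*q3 + q0*q1),     1 - 2 * (q1**2 + q2**2)],
    ])


def camera_to_world(p_c, q, t) -> np.ndarray:
    """Map a point from camera coordinates to world coordinates."""
    return quat_to_rot(q) @ np.asarray(p_c, dtype=float) + np.asarray(t, dtype=float)
```

For instance, a camera yawed 90° about the world z axis and located 1 m above the origin maps the camera-frame point (1, 0, 0) to the world point (0, 1, 1).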

4.2.3. Visual Guidance Algorithm

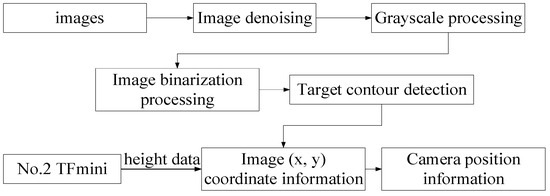

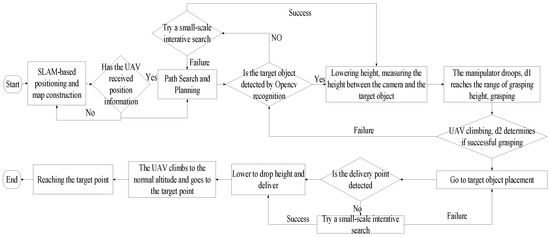

We propose a novel grasping method for aerial manipulators based on a visual guidance algorithm. This model utilizes a front-mounted depth camera D435 on a rotary-wing UAV to detect and recognize the positional information of the target object, enabling the adjustment of the aerial manipulator’s pose angles. The implementation process of the visual guidance algorithm is depicted in Figure 8, and the specific steps are as follows:

Figure 8. Visual guidance algorithm process.

Step 1: Utilize the VideoCapture class in OpenCV to capture the video stream from the camera and read the images.

Step 2: Apply Kalman filtering to denoise the captured image, reducing image distortion caused by UAV vibrations.

Step 3: Process the denoised image by converting it to grayscale and performing binary thresholding. Utilize the Canny algorithm to obtain the edge information of the image.

Step 4: Obtain the two-dimensional coordinates of the target object in the image and integrate the height data acquired from the No. 2 TFmini to obtain the camera position information. Subsequently, perform the coordinate transformation to obtain the target object’s coordinate information, enabling real-time adjustment of the aerial manipulator’s attitude.
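Step 4 can be illustrated with a small sketch of how a two-dimensional detection and a range measurement might be combined into camera-frame coordinates. The sketch assumes an ideal pinhole camera and treats the TFmini range as the depth along the optical axis; the intrinsic parameters (`fx`, `fy`, `cx`, `cy`), the helper names, and the sample values are hypothetical and are not taken from the D435 calibration used in this work.

```python
def mask_centroid(mask):
    """Return the (u, v) centroid of the nonzero pixels of a binary mask, or None if empty."""
    total_u = total_v = count = 0
    for v, row in enumerate(mask):
        for u, value in enumerate(row):
            if value:
                total_u += u
                total_v += v
                count += 1
    if count == 0:
        return None  # no target pixels detected
    return (total_u / count, total_v / count)

def pixel_to_camera(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) at the given depth into camera-frame coordinates (metres)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

# Hypothetical 3x4 binary mask after thresholding, with a 2x2 target blob.
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
print(mask_centroid(mask))
# Hypothetical intrinsics and range reading.
print(pixel_to_camera(420.0, 240.0, 1.5, 600.0, 600.0, 320.0, 240.0))
```

The resulting camera-frame point is what the coordinate transformation of Section 4.2 would then map into the world coordinate system.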

4.3. Control Method of Visual Guidance Based on the Aerial Manipulator

The complete control process for the vision-guided aerial manipulator is shown in Figure 9 and proceeds as follows.

Figure 9.

Process of aerial manipulator’s control method.

Step 1: The quad-rotor UAV takes off and uses LiDAR for SLAM positioning and map construction. It performs path searching and planning for the target object while the aerial manipulator remains in the initial folded posture.

Step 2: The UAV searches for the target object within the working range of the manipulator using a depth camera. It utilizes OpenCV to recognize and detect the two-dimensional coordinate information of the target object. The UAV performs a small-scale iterative search if the target object is undetected. If the target object is detected, the UAV lowers its height and utilizes the No. 2 TFmini for real-time detection of the vertical distance from the depth camera to the target object. Based on the coordinate transformation, the three-dimensional position information of the target object is obtained.

Step 3: The target object’s positional information is continuously updated in real time until it reaches the operational grasping range. The manipulator’s pose is adjusted, with the arm hanging down to enter the pre-grasping pose. Considering the relative position of the UAV to the target object, as well as the shape and size of the object to be grasped, the grasping range of the manipulator is set. The aerial manipulator enters the grasping range, and the trajectory planning and control system outputs the desired motion angles for each servo joint involved in the grasping process to the manipulator’s servo controller. Finally, the aerial manipulator completes the grasping of the target object.

Step 4: After the UAV completes the grasping process, it continues to climb. During its ascent, the real-time height reading from the No. 2 TFmini is collected. If this reading fluctuates within a range of 3 cm above or below a reference value, the target object is within the grasp range of the mechanical claw, and the grasping is successful. In such a case, the UAV flies towards the designated target delivery point. However, if the reading is significantly larger than the reference value, the grasping has failed, and the UAV returns to searching for the target object.

Step 5: The UAV proceeds toward the designated target delivery point for searching. If the search is successful, the UAV descends to the predetermined placement height to deliver the target object. If the search is unsuccessful, the UAV continues with a small-scale iterative search. By maintaining a constant UAV altitude and implementing a delayed hovering approach, a certain buffer time is provided to the manipulator control system to make angular adjustments, ensuring synchronization between the manipulator control system and the flight control system.

Step 6: After the aerial manipulator successfully delivers the target object, the manipulator returns to its initial state and the UAV proceeds to the landing point.
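The six steps above can be read as a small state machine. The following sketch is one possible way to express that logic and is not the flight code used in this work: the phase names, the `next_phase` function, and its keyword flags are hypothetical, and the grasp check is simplified so that any TFmini reading outside the ±3 cm band around the reference counts as a failed grasp.

```python
from enum import Enum, auto

class Phase(Enum):
    NAVIGATE = auto()  # Step 1: take off, SLAM positioning, path planning
    SEARCH = auto()    # Step 2: look for the target with the depth camera
    GRASP = auto()     # Step 3: pre-grasping pose, then close the gripper
    VERIFY = auto()    # Step 4: climb and check the TFmini height reading
    DELIVER = auto()   # Step 5: fly to the delivery point and place the object
    LAND = auto()      # Step 6: fold the manipulator and land

def grasp_succeeded(heights_m, reference_m, tolerance_m=0.03):
    """True if every height reading during the climb stays within the tolerance band."""
    return all(abs(h - reference_m) <= tolerance_m for h in heights_m)

def next_phase(phase, *, target_detected=False, in_grasp_range=False,
               grasp_ok=False, delivered=False):
    """Return the phase that follows `phase` given the current sensor flags."""
    if phase is Phase.NAVIGATE:
        return Phase.SEARCH
    if phase is Phase.SEARCH:
        # Keep iterating the small-scale search until the target is reachable.
        return Phase.GRASP if (target_detected and in_grasp_range) else Phase.SEARCH
    if phase is Phase.GRASP:
        return Phase.VERIFY
    if phase is Phase.VERIFY:
        # A failed grasp sends the UAV back to searching for the target.
        return Phase.DELIVER if grasp_ok else Phase.SEARCH
    if phase is Phase.DELIVER:
        return Phase.LAND if delivered else Phase.DELIVER
    return Phase.LAND

# Hypothetical readings (metres) collected during the climb after grasping.
ok = grasp_succeeded([0.29, 0.31, 0.30], reference_m=0.30)
print(next_phase(Phase.VERIFY, grasp_ok=ok))
```

Keeping the transitions in one function makes the retry paths of Steps 2, 4, and 5 explicit: each of them simply returns to, or remains in, a searching phase until its condition is met.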

5. Experimental Results

Experimental analysis and validation were conducted on the visual-guided hierarchical control and autonomous positioning system for the aerial manipulator based on simulation and physical real-world experiments.

5.1. Simulation Experiment for LiDAR SLAM-Based Autonomous Navigation of UAVs

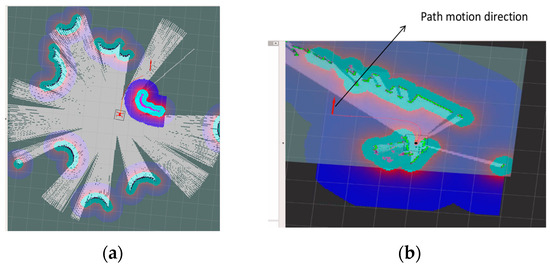

In this study, an autonomous navigation and path planning experiment for a LiDAR-based SLAM system was built on the Robot Operating System (ROS) platform. The operating system used was Ubuntu 18.04 (Linux), and the experiment employed the physics simulation platform Gazebo and the data visualization tool rviz for real-time monitoring. The testing environment was set up and obstacles were added to simulate the autonomous navigation system of the aerial manipulator.

First, in the simulator, the origin of the world coordinate system was taken as the initial position, along with a specified take-off height. At the beginning of the simulation, the UAV slowly ascended to the specified height and performed LiDAR-based localization and map construction in the world coordinate system. The map data topic was visualized in rviz. The target object position was specified on the map and published as a topic. The UAV system in Gazebo then subscribed to this topic, used the path planning algorithm to compute the shortest path, and tracked it to the position above the target object. Figure 10 shows the result of SLAM-based autonomous navigation and map building as the UAV flew from takeoff to near the target object in the Gazebo simulation environment. Figure 10a shows the LiDAR scan, where green and purple indicate obstacles. Figure 10b shows the UAV automatically planning its navigation around the obstacles, with the planned flight path depicted as the red route. The flight trajectory shows that the UAV avoided the obstacles, with no obvious swaying and a relatively smooth overall flight path.

Figure 10.

LiDAR-based SLAM autonomous navigation: (a) LiDAR scanning; (b) Autonomous navigation.
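To make the shortest-path step concrete, the sketch below searches a small occupancy grid for a shortest obstacle-free route. The planner used in the simulation is not named in this section, so breadth-first search on a 4-connected grid is used here purely as a stand-in; the grid, the cell coordinates, and the function name `shortest_path` are hypothetical.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; cells equal to 1 are obstacles.

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start to recover the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and (nr, nc) not in parent):
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None

# Hypothetical map: a wall in the middle row forces a detour on the right.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(shortest_path(grid, (0, 0), (2, 0)))
```

On a uniform-cost grid, breadth-first search returns a path with the fewest cells; a practical planner would typically add cell costs and a heuristic, which this sketch deliberately omits.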

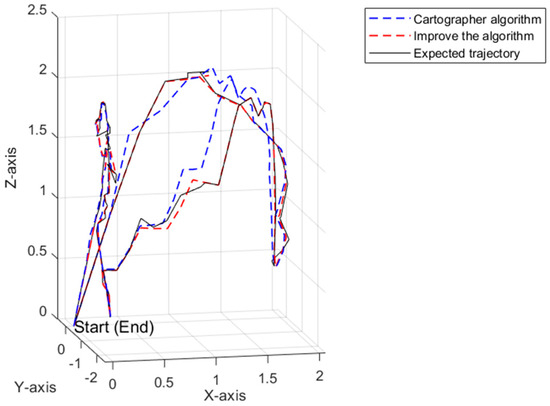

Based on the IMU-fused laser SLAM algorithm proposed in this paper, the trajectory map of the UAV was constructed. This algorithm was compared with the classical laser SLAM algorithm Cartographer [39]. The trajectory obtained with Cartographer exhibited a relatively large cumulative drift error with respect to the expected trajectory, while the trajectory obtained by the proposed algorithm had a smaller cumulative drift error (Figure 11).

Figure 11.

Comparison of algorithm trajectory results.

5.2. Motion Test of the Aerial Manipulator

Before performing tasks, the vision-guided aerial manipulator required a series of debugging operations, including flight controller calibration, ESC initialization, PID parameter configuration, camera activation, and setting the initial position of the manipulator. At the system’s initial takeoff, the uneven terrain may have introduced uncertainty and error into the subsequent task execution. To further enhance the success rate of the mission, in our practical testing, we selected a flight scenario with dimensions of 4 m × 5 m × 4 m and a flight speed of 0.6 m/s.

Additionally, testing and experimental analysis of the motion process were conducted for the aerial manipulator system to ensure that the quad-rotor UAV maintained stable aerial operational capability and flight performance after mounting the manipulator.

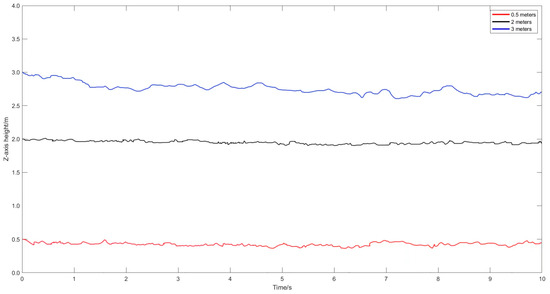

First, the flight stability during hovering was tested. Figure 12 provides height variation curves for the manipulator in the z-direction at hover heights of 0.5 m, 2 m, and 3 m. At a hover height of approximately 0.5 m, a slight fluctuation occurred in the UAV’s attitude due to ground effects. However, when flying at ~2 m, the flight was stable. Meanwhile, at a hover height of ~3 m, significant attitude variation occurred. Therefore, the selected hover height for this study was 2 m.

Figure 12.

The z-direction height variation of the manipulator.

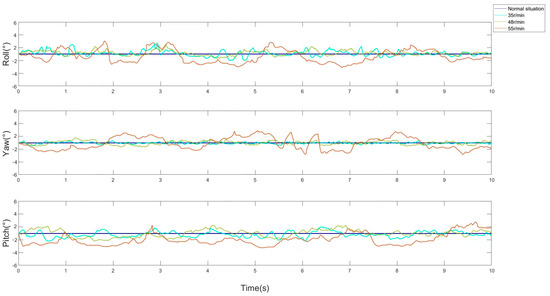

Second, to minimize the impact of manipulator motion on the stability of the flying platform, tests were conducted on the servo motor speeds. In the test scenario, the servo motor speed of the manipulator was controlled through the upper computer while the UAV was in stationary hover. Figure 13 provides the attitude angle variation curves at different servo motor speeds (35, 48, and 55 rpm). At 48 rpm, the manipulator moved slowly enough that the impact of its motion on flight stability was minimized, while its operational efficiency was still ensured.

Figure 13.

Attitude variation curves of the servo motor at different speeds.

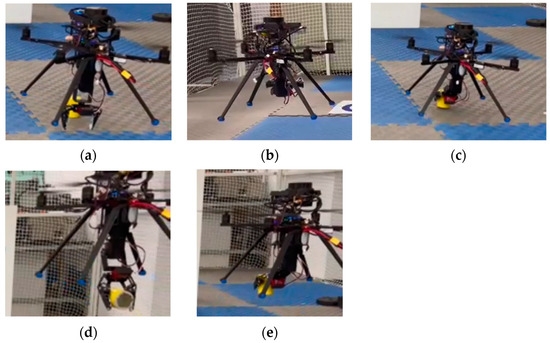

As shown in Figure 14, for the grasping task of the aerial manipulator, a segmented grasping strategy was applied. Specifically, five poses were designed, including the initial pose, pre-grasping pose, grasping pose, flight pose in operation, and delivery pose. The specific process was as follows:

Figure 14.

Main steps of aerial manipulator motion: (a) initial pose, (b) pre-grasping pose, (c) grasping pose, (d) flight pose in operation, and (e) delivery pose.

Initial flight pose (Figure 14a): The UAV took off and approached the target object, while the manipulator remained in the initial retracted state. During the target search process, the SLAM mapping and scene reconstruction were performed with LiDAR, followed by autonomous positioning and target object search.

Pre-grasping pose (Figure 14b): The UAV navigated to the target object’s position using LiDAR-based SLAM for localization. The visual guidance system was activated to identify the target object. The UAV hovered and descended in altitude while the manipulator adjusted its position in advance. It vertically descended to approach the target object in the optimal pose for grasping. The manipulator reached the pre-grasping pose.

Grasping pose (Figure 14c): The aerial manipulator iteratively searched the target object, while the No. 2 TFmini continuously detected the height information between the UAV and target object. Once the grasping height of the aerial manipulator fell within the threshold range, the mechanical gripper quickly closed to grasp the target object.

Flight pose (Figure 14d): After the aerial manipulator grasped the target object, it flew away from the operating point and proceeded to the target delivery location.

Delivery pose (Figure 14e): The aerial manipulator reached the target position for delivery, and then took off to land at the original point.

During the entire motion testing experiment of the aerial manipulator, to ensure the system’s safety during the grasping operation, the angles of the two servos in the manipulator and gripper were repeatedly tested and optimized. The angle values of the two servos were defined as θ1 and θ2, where θ1 represents the pitching action of the manipulator and θ2 represents the grasping action of the gripper. The corresponding output values of θ1 and θ2 for the five poses during the operation of the aerial manipulator are shown in Table 2.

Table 2.

The angle values of the two servos.

From the analysis of the operational motion experiment, it was concluded that the aerial manipulator system was capable of autonomously positioning and navigating throughout the flight. It also performed target object grasping through visual guidance. Moreover, it accurately controlled the UAV’s attitude angles while maintaining a constant spatial position, possessing excellent spatial operational capabilities.

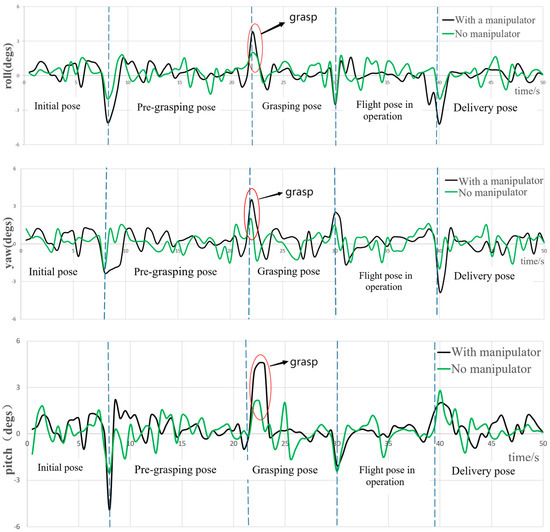

5.3. Attitude Analysis of the Aerial Manipulator

Rotary-wing UAVs are complex, nonlinear, and underactuated systems. When equipped with a manipulator to perform tasks, the system’s dynamics are influenced by factors such as motion velocity, angles, and payload mass. This can cause deviations in the attitude and positioning of the UAV, leading to mission failures, accidents such as collisions with the ground, and even crashes. Therefore, in this study, flight data were recorded while the system executed the five operational motion poses. The attitude information of the UAV was analyzed and compared before and after mounting the operational aerial manipulator. This analysis aimed to validate the operational stability of the proposed aerial manipulator system.

As shown in Figure 15, the aerial manipulator took off from 0 s to 8 s and moved along an autonomous navigation path in search of a target object. During the motion, the UAV’s attitude angles exhibited relatively small fluctuations before and after mounting the manipulator, which fell within the normal range of attitude angle variations for indoor flights. Between 9 s and 21 s, the UAV reached the target object and visually guided itself toward it. The manipulator descended vertically into the pre-grasping pose. Due to the disturbances caused by the manipulator, the center of gravity of the entire flight system can change, resulting in dynamic instability and slightly larger fluctuations in the attitude angles. However, the roll and yaw angles had relatively smaller fluctuations. With the manipulator, the range of fluctuation for the roll angle was within ±4°, and the range for the yaw angle was within ±2.5°. However, the pitch angle exhibited larger fluctuation within ±5°. This was due to the movement of the manipulator in the XOZ plane from the initial pose to the pre-grasping pose, significantly affecting the pitch angle stability of the aerial manipulator system.

Figure 15.

Comparison of the UAV attitude changes with and without manipulators.

Between 22 s and 30 s, the aerial manipulator entered the state of grasping the target object. Compared to the state without the manipulator, the presence of the manipulator resulted in larger variations in the attitude angles due to the weight of the grasped object. In particular, the pitch angle fluctuated but generally remained within ±5°. The mechanical gripper completed the grasping action at around 22 s (as indicated by the red circle in Figure 15). Subsequently, the aerial manipulator ascended to the operational flight pose, reached the target location for delivery, and landed at the destination. Throughout the flight process, the variations in the attitude angles were kept within ±5°, which falls within the normal fluctuation range. This also demonstrates the good stability of the designed operational aerial manipulator system.
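A check of this kind can be expressed compactly. The sketch below computes the peak deviation of each logged attitude angle and tests it against a ±5° band; the function names and the sample log are hypothetical and do not reproduce the flight data shown in Figure 15.

```python
def peak_deviation(angles_deg, reference_deg=0.0):
    """Largest absolute deviation of the samples from the reference angle, in degrees."""
    return max(abs(a - reference_deg) for a in angles_deg)

def within_band(log, limit_deg=5.0):
    """Map each axis name to True if all of its samples stay within +/- limit_deg."""
    return {axis: peak_deviation(samples) <= limit_deg for axis, samples in log.items()}

# Hypothetical attitude samples in degrees (not measured data).
log = {
    "roll": [0.5, -3.2, 2.8, -1.0],
    "pitch": [1.0, -4.6, 4.9, 0.3],
    "yaw": [0.2, -2.1, 1.7, 0.0],
}
print(within_band(log))
```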

In summary, the aerial manipulator system designed in this study can generally ensure the global stability of the system during flight operations in the five poses. It can respond to external forces caused by grasping the target object, allowing the UAV to maintain stability. Additionally, it does not produce significant position drift and exhibits robustness in underactuated conditions.

5.4. Position Analysis of the Aerial Manipulator

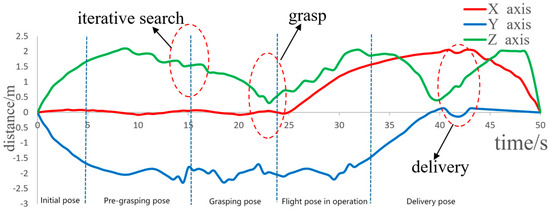

To further validate the effectiveness of the proposed method, we recorded the position data of the operational aerial manipulator system, including the initial, grasping, and delivery states.

As shown in Figure 16, the aerial manipulator took off and entered the target search state at approximately 2 m. During the iterative search for the target object, the positional information along the x, y, and z axes of the aerial manipulator fluctuated. At approximately 22 s, when the target object grasping was completed, considerable fluctuation occurred in the positional information along the x, y, and z axes. The height information along the z-axis was 35 cm, while the physical height of the aerial manipulator was 30 cm. Hence, the grasping height was within an acceptable operational range, confirming that the proposed method exhibits good control effectiveness.

Figure 16.

X, Y, Z direction positional tracking of the aerial manipulator system.

5.5. Control System Performance Analysis of the Aerial Manipulator

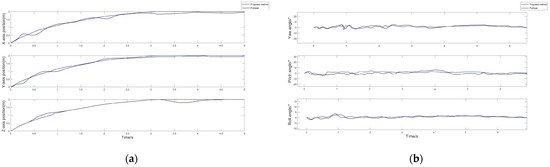

The performance of the aerial manipulator control system was validated, with a primary focus on stability and disturbance resistance. Moreover, the system was compared to the quaternion-based PID algorithm in the open-source Pixhawk flight controller (https://docs.px4.io/main/zh/config_mc/pid_tuning_guide_multicopter_basic.html, accessed on 8 June 2023). The motion of the manipulator during tasks, such as target object grasping, transportation, and delivery, generates reactive forces that introduce disturbances. Additionally, the state estimation process of the aerial manipulator introduces measurement noise. Therefore, this study performed trajectory tracking experiments in which the manipulator swung periodically during straight-line UAV flight, acting as a dynamic disturbance. The two joint servos of the aerial manipulator swung periodically between θ1 = 0°, θ2 = 70° and θ1 = 115°, θ2 = 45° (Figure 17).

Figure 17.

Position and attitude analysis of aerial manipulator. (a) Position analysis, and (b) attitude analysis.
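The periodic swinging disturbance can be reproduced with a simple reference generator for the two servo angles. The two end configurations below are taken from the text; the cosine blending and the 4 s period are assumptions made for illustration, as is the function name `swing_reference`.

```python
import math

POSE_A = (0.0, 70.0)    # (theta1, theta2) in degrees, first configuration from the text
POSE_B = (115.0, 45.0)  # (theta1, theta2) in degrees, second configuration from the text

def swing_reference(t, period_s=4.0):
    """Servo angle setpoints at time t (seconds) for a smooth swing A -> B -> A.

    The blend factor s is 0 at POSE_A (t = 0) and 1 at POSE_B (t = period_s / 2).
    """
    s = 0.5 * (1.0 - math.cos(2.0 * math.pi * t / period_s))
    return tuple(a + s * (b - a) for a, b in zip(POSE_A, POSE_B))

# Sample the reference over one assumed period.
for t in (0.0, 1.0, 2.0, 3.0, 4.0):
    theta1, theta2 = swing_reference(t)
    print(f"t={t:.1f} s  theta1={theta1:6.1f} deg  theta2={theta2:5.1f} deg")
```

A cosine blend is chosen here because it starts and ends each half-swing with zero angular velocity, which avoids step changes in the servo command; other profiles would serve equally well for generating the disturbance.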

Compared to the quaternion-based cascaded PID algorithm used by Pixhawk, the aerial manipulator platform exhibited significant improvement in positional error in all three coordinate axes. When the manipulator underwent periodic swinging during UAV flight, the UAV’s positional and attitude errors using the proposed method were noticeably lower than those of the Pixhawk algorithm. These experiments demonstrate the effectiveness and robustness of the control method proposed in this paper.

6. Conclusions

This paper describes the design of a lightweight, single-degree-of-freedom aerial manipulator platform with a simple structure, implements a hierarchical control approach, and proposes a laser SLAM localization method integrated with IMU. By employing a segmented grasping strategy using a visual guidance algorithm, the successful grasping of target objects was achieved. The proposed SLAM-based autonomous navigation algorithm offered higher localization accuracy and greater reliability for UAVs with manipulator disturbances. Finally, the platform exhibited excellent flight stability and operational capabilities. This study provides valuable insights into the practical applications of future aerial manipulators and expands the scope of drone operations.

Our future work will focus primarily on the following research areas:

- (1) The proposed hierarchical control method does not effectively address the coupling interaction between the manipulator and the UAV. Therefore, a coupling control method with good control effectiveness will be implemented on the actual hardware platform.

- (2) The designed manipulator has a single degree of freedom and only grasps lightweight cylindrical objects. In the future, the structure of the manipulator and its end effector can be improved to achieve the grasping of objects in arbitrary poses.

- (3) The onboard computer in our system, a Jetson Xavier NX, is tasked with multiple functions; however, its computational capabilities are limited. To conserve computational resources and improve the accuracy of target object detection, we will incorporate deep learning and lightweight neural network algorithms [40].

- (4) Considering that the motion of the robotic arm can alter the system’s center of gravity, affecting stability, the combination of the manipulator and a tilt-rotor UAV [41] can be considered to provide real-time compensation for the system’s offset center of gravity. Additionally, the position of the target object is currently known in advance; however, it could be obtained autonomously by installing a camera on the manipulator and providing real-time feedback [42], facilitating the dynamic tracking and capturing of moving targets. This will allow for the adjustment of the length and position of the manipulator, improving the grasping success rate.

- (5) Although we have focused on the control system of the aerial manipulator and the autonomous positioning and navigation system, improvements are required to address complex scenarios for various applications. In the future, consideration will be given to optimizing the motor torque of the robotic arm and the structure of the end effector to meet the practical application requirements of the aerial manipulator.

Author Contributions

Conceptualization, X.Y. and H.C.; methodology, L.W.; software (Ubuntu20.04 Python3.11), H.C., S.X. and H.N.; writing—original draft preparation, L.W. and X.Y.; writing—review and editing, L.W. and X.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work is sponsored by the National College Student Innovation and Entrepreneurship Training Program (202310346043); General Projects of Zhejiang Provincial Department of Education (Y202353307).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, G.; He, Y.; Dai, B.; Gu, F.; Yang, L.; Han, J.; Liu, G. Aerial Grasping of an Object in the Strong Wind: Robust Control of an Aerial Manipulator. Appl. Sci. 2019, 9, 2230.

- Verma, V.; Gupta, D.; Gupta, S.; Uppal, M.; Anand, D.; Ortega-Mansilla, A.; Alharithi, F.S.; Almotiri, J.; Goyal, N. A Deep Learning-Based Intelligent Garbage Detection System Using an Unmanned Aerial Vehicle. Symmetry 2022, 14, 960.

- Sharma, D.; Sharma, B.; Mantri, A.; Goyal, N.; Singla, N. Dhwani Fire: Aerial System for Extinguishing Fire. ECS Trans. 2022, 107, 10295–10301.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

- Loor, S.J.; Bejarano, A.R.; Silva, F.M.; Andaluz, V.H. Construction and Control Aerial Manipulator Robot. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Kitakyushu, Japan, 22–25 September 2020; pp. 116–123.

- Hamaza, S.; Georgilas, I.; Fernandez, M.; Sanchez, P.; Richardson, T.; Heredia, G.; Ollero, A. Sensor installation and retrieval operations using an unmanned aerial manipulator. IEEE Robot. Autom. Lett. 2019, 4, 2793–2800.

- Kroemer, O.; Niekum, S.; Konidaris, G. A Review of Robot Learning for Manipulation: Challenges, Representations, and Algorithms. J. Mach. Learn. Res. 2021, 22, 30–31.

- Sajjadi, S.; Bittick, J.; Janabi-Sharifi, F.; Mantegh, I. A Robust and Adaptive Sensor Fusion Approach for Indoor UAV Localization. In Proceedings of the 2023 International Conference on Unmanned Aircraft Systems (ICUAS), Warsaw, Poland, 6–9 June 2023; pp. 441–447.

- Meng, X.; He, Y.; Gu, F.; Yang, L.; Dai, B.; Liu, Z.; Han, J. Design and implementation of rotor aerial manipulator system. In Proceedings of the 2016 IEEE International Conference on Robotics and Biomimetics (ROBIO), Qingdao, China, 3–7 December 2016; pp. 673–678.

- Suarez, A.; Soria, P.R.; Heredia, G.; Arrue, B.C.; Ollero, A. Anthropomorphic, compliant and lightweight dual arm system for aerial manipulation. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 992–997.

- Hamaza, S.; Georgilas, I.; Heredia, G.; Ollero, A.; Richardson, T. Design, modeling, and control of an aerial manipulator for placement and retrieval of sensors in the environment. J. Field Robot. 2020, 37, 1224–1245.

- Yamada, T.; Tsuji, T.; Paul, H.; Martinez, R.R.; Ladig, R.; Shimonomura, K. Exploration of UAV Rope Handling and Flight in Narrow Space Strategies, using a Three-arm Aerial Manipulator System. In Proceedings of the 2022 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Sapporo, Japan, 11–15 July 2022; pp. 677–682.

- Peng, R.; Chen, X.; Lu, P. A Motion decoupled Aerial Robotic Manipulator for Better Inspection. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 4207–4213.

- Zhang, W.; Liu, Q.; Wang, M.; Jia, J.; Lyu, S.; Guo, K.; Yu, X.; Guo, L. Design of an Aerial Manipulator System Applied to Capture Missions. In Proceedings of the 2021 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 15–18 June 2021; pp. 1063–1069.

- Chaikalis, D.; Khorrami, F.; Tzes, A. Adaptive Control Approaches for an Unmanned Aerial Manipulation System. In Proceedings of the 2020 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 1–4 September 2020; pp. 498–503.

- Chaikalis, D.; Evangeliou, N.; Tzes, A.; Khorrami, F. Decentralized Leader-Follower Visual Cooperative Package Transportation using Unmanned Aerial Manipulators. In Proceedings of the 2023 European Control Conference (ECC), Bucharest, Romania, 13–16 June 2023; pp. 1–5.

- Nguyen, H.; Alexis, K. Forceful Aerial Manipulation Based on an Aerial Robotic Chain: Hybrid Modeling and Control. IEEE Robot. Autom. Lett. 2021, 6, 3711–3719.

- Nekoo, S.R.; Suarez, A.; Acosta, J.; Heredia, G.; Ollero, A. Constrained Design Optimization of a Long-Reach Dual-Arm Aerial Manipulator for Maintenance Tasks. In Proceedings of the 2023 International Conference on Unmanned Aircraft Systems (ICUAS), Warsaw, Poland, 6–9 June 2023; pp. 281–288.

- Luo, B.; Chen, H.; Quan, F.; Zhang, S.; Liu, Y. Natural Feature-based Visual Servoing for Grasping Target with an Aerial Manipulator. J. Bionic Eng. 2020, 17, 215–228.

- Thomas, J.; Loianno, G.; Sreenath, K.; Kumar, V. Toward image-based visual servoing for aerial grasping and perching. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014.

- Wu, H.; Jiang, L.; Liu, X.; Li, J.; Yang, Y.; Zhang, S. Intelligent Explosive Ordnance Disposal UAV System Based on Manipulator and Real-Time Object Detection. In Proceedings of the 2021 4th International Conference on Intelligent Robotics and Control Engineering (IRCE), Lanzhou, China, 18–20 September 2021; pp. 61–65.

- Zhiyang, Z.; Hongyu, C.; Xiaoqiang, Z.; Yanhua, S.; Zhiyuan, C.; Yin, L. Grasp Pose Estimation for Aerial Manipulator Based on Improved 6-DOF GraspNet. In Proceedings of the 2022 5th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Chengdu, China, 23–25 September 2022; pp. 550–555.

- Chen, Y.; Wu, Y.; Zhang, Z.; Miao, Z.; Zhong, H.; Zhang, H.; Wang, Y. Image-Based Visual Servoing of Unmanned Aerial Manipulators for Tracking and Grasping a Moving Target. IEEE Trans. Ind. Inform. 2023, 19, 8889–8899.

- Hu, A.; Xu, M.; Wang, H.; Liang, X.; Castaneda, H. Vision-Based Hierarchical Impedance Control of an Aerial Manipulator. IEEE Trans. Ind. Electron. 2023, 70, 7014–7022.

- Ramon-Soria, P.; Arrue, B.C.; Ollero, A. Grasp Planning and Visual Servoing for an Outdoors Aerial Dual Manipulator. Engineering 2020, 6, 77–88.

- Kim, S.; Seo, H.; Kim, H.J. Operating an unknown drawer using an aerial manipulator. In Proceedings of the IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 5503–5508.

- Seo, H.; Kim, S.; Kim, H.J. Aerial grasping of cylindrical object using visual servoing based on stochastic model predictive control. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 6362–6368.

- Kanellakis, C.; Nikolakopoulos, G. Guidance for Autonomous Aerial Manipulator Using Stereo Vision. J. Intell. Robot. Syst. 2020, 100, 1545–1557.

- Meng, X.; He, Y.; Zhang, H.; Yang, L.; Gu, F.; Han, J. Contact force control of aerial manipulator systems. Control. Theory Appl. 2020, 37, 59–68. (In Chinese)

- Zhong, H.; Wang, Y.; Li, L.; Liu, H.; Li, L. Modeling and Dynamic Center of Gravity Compensation Control of a Rotorcraft Flight Robot Arm. Control. Theory Appl. 2016, 33, 311–320. (In Chinese)

- Thomas, J.; Loianno, G.; Daniilidis, K.; Kumar, V. The role of vision in perching and grasping for MAVs. In Proceedings of the Micro- and Nanotechnology Sensors, Systems, and Applications VIII, Bellingham, WA, USA, 17–21 April 2016; p. 98361S.

- Mandal, A.K.; Seo, J.-B.; De, S.; Poddar, A.K.; Rohde, U. A Novel Statistically-Aided Learning Framework for Precise Localization of UAVs. In Proceedings of the 2023 IEEE 97th Vehicular Technology Conference (VTC2023-Spring), Florence, Italy, 20–23 June 2023; pp. 1–5.

- Dissanayake, M.; Newman, P.; Clark, S.; Durrant-Whyte, H.; Csorba, M. A solution to the simultaneous localization and map building (SLAM) problem. IEEE Trans. Robot. Autom. 2001, 17, 229–241.

- Rauf, A.; Muhamamd, W.; Mehmood, Z.; Irshad, M.J. Simultaneous Localization and Mapping of UAV For Precision Agricultural Application. In Proceedings of the 2023 International Conference on Energy, Power, Environment, Control, and Computing (ICEPECC), Gujrat, Pakistan, 8–9 March 2023; pp. 1–6.

- Lin, J.; Zhang, F. Loam livox: A fast, robust, high-precision LiDAR odometry and mapping package for LiDARs of small FoV. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3126–3131.

- Zhang, J.; Singh, S. Low-drift and real-time lidar odometry and mapping. Auton. Robot. 2017, 41, 401–416.

- Pomerleau, F.; Colas, F.; Siegwart, R.; Magnenat, S. Comparing ICP variants on real-world datasets. Auton. Robot. 2013, 34, 133–148.

- Vanli, O.A.; Taylor, C.N. Covariance Estimation for Factor Graph Based Bayesian Estimation. In Proceedings of the IEEE 23rd International Conference on Information Fusion (FUSION), Rustenburg, South Africa, 6–9 July 2020.

- Nekoo, S.R.; Acosta, J.; Ollero, A. Quaternion-based state-dependent differential Riccati equation for quadrotor drones: Regulation control problem in aerobatic flight. Robotica 2022, 40, 3120–3135.

- Wang, L.; Zhang, Y.; Hu, K. FEUI: Fusion Embedding for User Identification across social networks. Appl. Intell. 2021, 52, 8209–8225.

- Elfeky, M.; Elshafei, M.; Saif, A.-W.A.; Al-Malki, M.F. Modeling and simulation of quadrotor UAV with tilting rotors. Int. J. Control. Autom. Syst. 2016, 14, 1047–1055.

- Ge, D.; Yao, X.; Xiang, W.; Liu, E.; Wu, Y. Calibration on Camera’s Intrinsic Parameters Based on Orthogonal Learning Neural Network and Vanishing Points. IEEE Sens. J. 2020, 20, 11856–11863.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).