Partial Correlation Analysis and Neural-Network-Based Prediction Model for Biochemical Recurrence of Prostate Cancer after Radical Prostatectomy

Abstract

Featured Application

1. Introduction

2. Materials and Methods

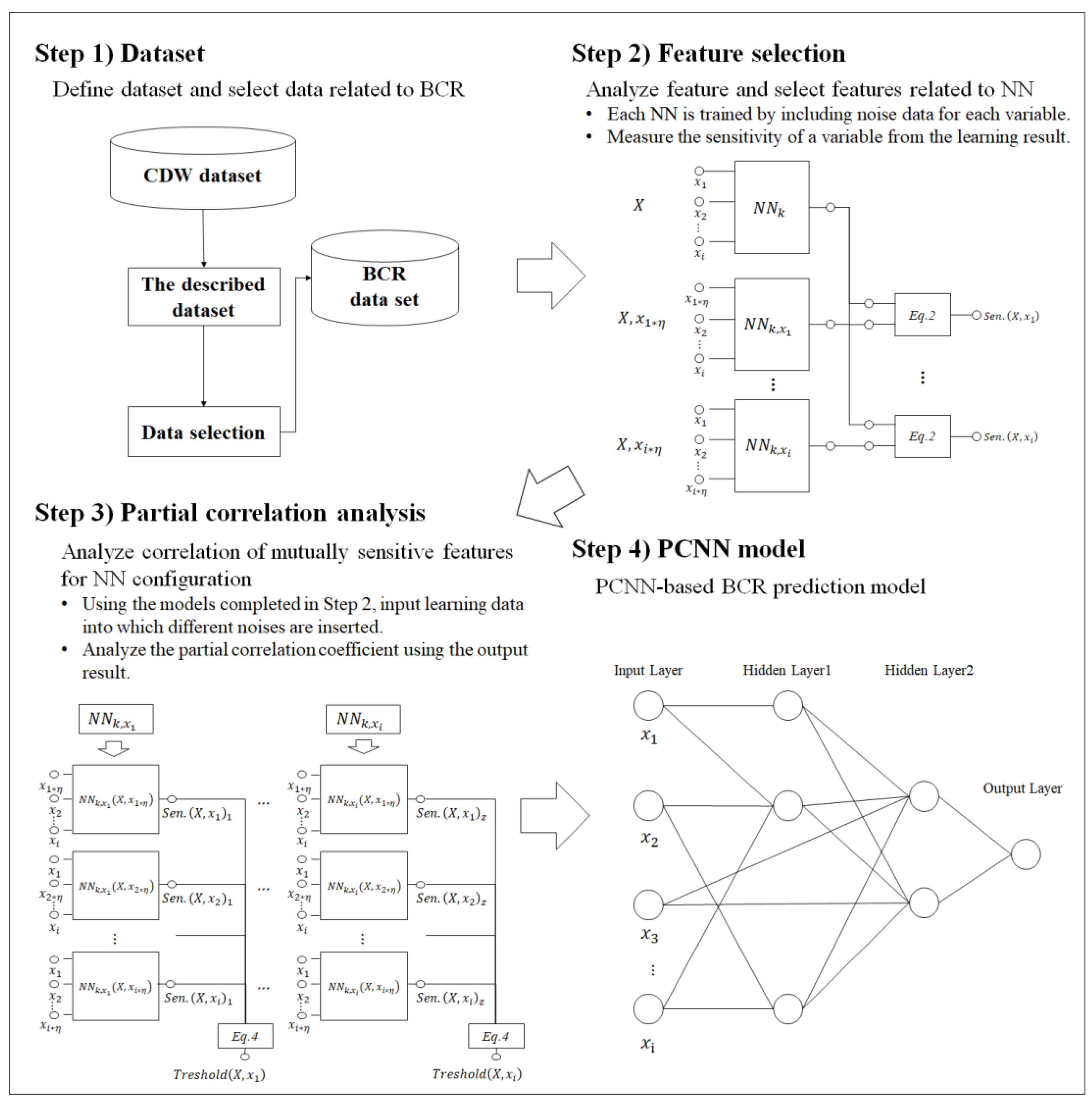

2.1. Study Design

2.2. Dataset

2.3. Feature-Sensitive Analysis

2.4. Partial Correlation Analysis

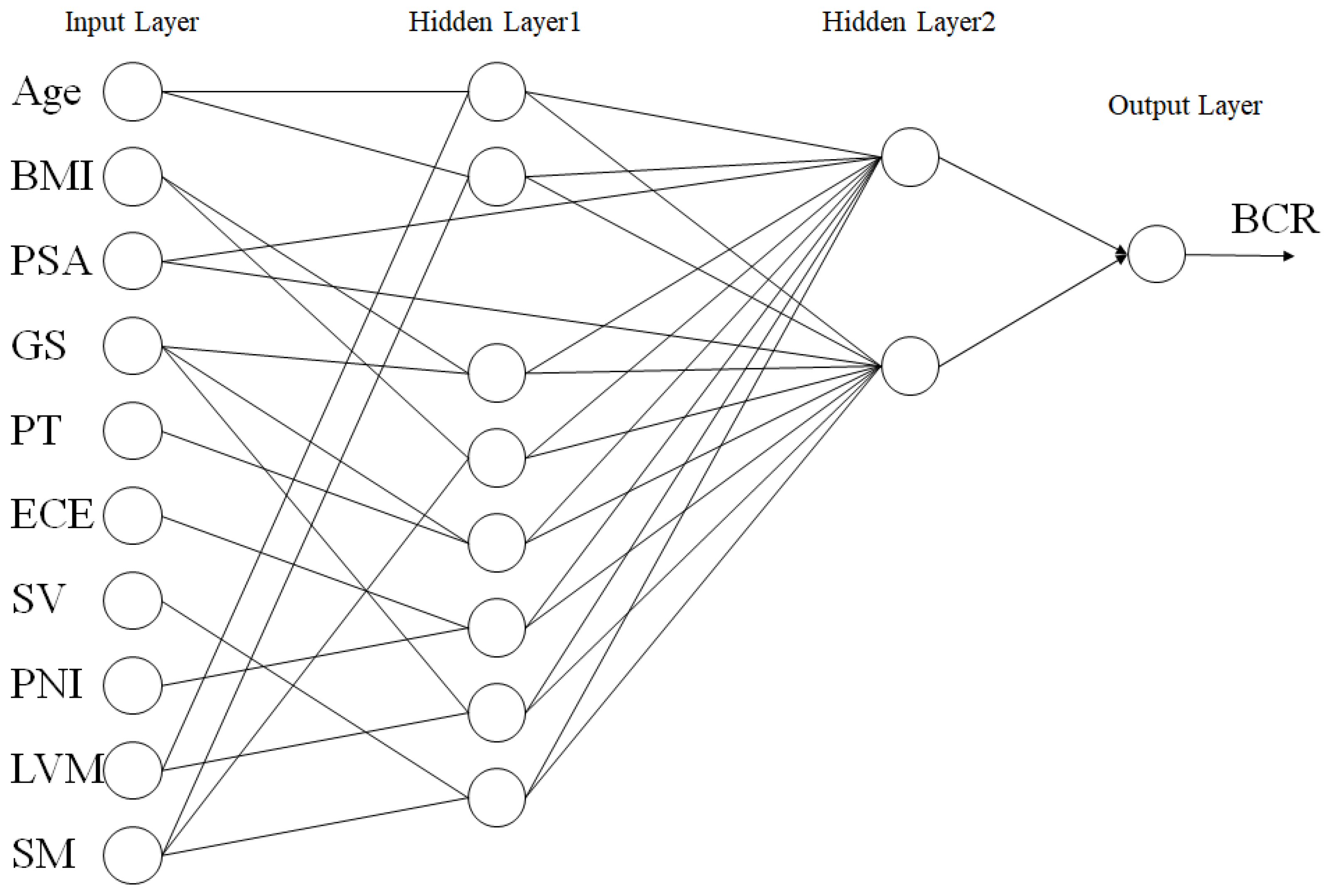

2.5. Partial Correlation Neural Network

3. Results

3.1. Characteristics

3.2. PCNN

3.3. Performance Measures

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Variable | BCR | Non-BCR | Pearson correlation |
|---|---|---|---|
| Number of patients | 283 | 738 | |
| Age (years) | 67.47 | 66.747 | −0.05 |
| BMI (kg/m²) | 23.416 | 23.253 | −0.025 |
| PSA (ng/mL) | 23.576 | 4.765 | −0.144 |
| Gleason score (sum) | | | −0.385 |
| 5 | - | 8 | |
| 6 | 4 | 115 | |
| 7 | 154 | 536 | |
| 8 | 64 | 45 | |
| 9 | 57 | 33 | |
| 10 | 4 | 1 | |
| Pathology T stage | | | −0.385 |
| T1 | 1 | 4 | |
| T2a | 7 | 87 | |
| T2b | 8 | 29 | |
| T2c | 67 | 445 | |
| T3a | 96 | 112 | |
| T3b | 95 | 56 | |
| T4 | 9 | 5 | |
| ECE (extracapsular extension) | | | −0.421 |
| Present | 94 | 575 | |
| Absent | 189 | 163 | |
| SV (seminal vesicle invasion) | | | −0.361 |
| Present | 174 | 676 | |
| Absent | 109 | 62 | |
| PNI (perineural invasion) | | | −0.236 |
| Present | 33 | 264 | |
| Absent | 250 | 477 | |
| LNM (lymph node metastasis) | | | −0.295 |
| Present | 174 | 647 | |
| Absent | 109 | 91 | |
| SM (surgical margin) | | | −0.291 |
| Present | 119 | 540 | |
| Absent | 164 | 198 | |
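
For the binary variables in the table above, the Pearson correlation with BCR status is the phi coefficient of the corresponding 2×2 table. The sketch below is a consistency check under one assumption: BCR and "Present" are each coded as 1 (flipping both codings gives the same value). With that coding it reproduces the reported ECE, SV, LNM and SM coefficients from the counts alone. The helper names (`phi_coefficient`, `pearson`, `expand`) are illustrative and are not taken from the paper.

```python
import math


def phi_coefficient(a: int, b: int, c: int, d: int) -> float:
    """Phi coefficient of a 2x2 table, i.e. Pearson r between two 0/1 variables.

    a = BCR & present, b = BCR & absent,
    c = non-BCR & present, d = non-BCR & absent.
    """
    numerator = a * d - b * c
    denominator = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    return numerator / denominator


def pearson(x: list[int], y: list[int]) -> float:
    """Plain Pearson correlation, used to cross-check the closed form above."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sx = math.sqrt(sum((xi - mx) ** 2 for xi in x))
    sy = math.sqrt(sum((yi - my) ** 2 for yi in y))
    return cov / (sx * sy)


def expand(a: int, b: int, c: int, d: int) -> tuple[list[int], list[int]]:
    """Expand 2x2 counts into per-patient (bcr, feature) 0/1 vectors."""
    bcr = [1] * (a + b) + [0] * (c + d)
    feature = [1] * a + [0] * b + [1] * c + [0] * d
    return bcr, feature


# Counts from the characteristics table:
# (BCR present, BCR absent, non-BCR present, non-BCR absent)
COUNTS = {
    "ECE": (94, 189, 575, 163),
    "SV": (174, 109, 676, 62),
    "LNM": (174, 109, 647, 91),
    "SM": (119, 164, 540, 198),
}

if __name__ == "__main__":
    for name, cells in COUNTS.items():
        print(f"{name}: {phi_coefficient(*cells):.3f}")
```

Run as a script, it prints ECE: -0.421, SV: -0.361, LNM: -0.295 and SM: -0.291, which match the Pearson correlation column.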

| | Sensitivity | Rank |
|---|---|---|
| NN_k(X, Age·η) | 0 | 10 |
| NN_k(X, BMI·η) | 0.46 | 9 |
| NN_k(X, PSA·η) | 1.89 | 7 |
| NN_k(X, GS·η) | 0.47 | 8 |
| NN_k(X, pT·η) | 2.83 | 5 |
| NN_k(X, ECE·η) | 2.83 | 5 |
| NN_k(X, SV·η) | 9.01 | 1 |
| NN_k(X, PNI·η) | 3.79 | 3 |
| NN_k(X, LNM·η) | 3.32 | 4 |
| NN_k(X, SM·η) | 4.75 | 2 |
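
Each row above re-evaluates the network NN_k with a single input scaled by a factor η. Because the sensitivity formula itself is not spelled out alongside the table, the sketch below assumes one common perturbation definition: the percentage of samples whose predicted class changes when feature j is multiplied by η. That definition, the toy predictor and the function names are hypothetical. The ranking helper, by contrast, reproduces the Rank column exactly: tied scores share the better rank and the following rank is skipped, which is why pT and ECE are both ranked 5 and no feature is ranked 6.

```python
from typing import Callable, Sequence

Predictor = Callable[[Sequence[float]], int]


def perturbation_sensitivity(
    predict: Predictor, X: Sequence[Sequence[float]], j: int, eta: float
) -> float:
    """Assumed definition: % of samples whose predicted label changes
    when feature j is scaled by eta (cf. NN_k(X, feature·η))."""
    changed = 0
    for x in X:
        perturbed = list(x)
        perturbed[j] *= eta  # scale only feature j; all other inputs unchanged
        if predict(perturbed) != predict(x):
            changed += 1
    return 100.0 * changed / len(X)


def competition_rank(scores: dict[str, float]) -> dict[str, int]:
    """Rank 1 = most sensitive; tied scores share a rank and the next rank is skipped."""
    return {
        name: 1 + sum(other > s for other in scores.values())
        for name, s in scores.items()
    }


# Sensitivity values as reported in the table above.
REPORTED = {
    "Age": 0, "BMI": 0.46, "PSA": 1.89, "GS": 0.47, "pT": 2.83,
    "ECE": 2.83, "SV": 9.01, "PNI": 3.79, "LNM": 3.32, "SM": 4.75,
}


def toy_predict(x: Sequence[float]) -> int:
    """Hypothetical stand-in classifier: 1 when the two features sum above 1.0."""
    return int(x[0] + x[1] > 1.0)


if __name__ == "__main__":
    X = [[0.6, 0.6], [0.2, 0.2], [0.9, 0.0], [0.5, 0.4]]
    print(perturbation_sensitivity(toy_predict, X, j=0, eta=0.5))  # 25.0
    print(competition_rank(REPORTED))
```

In the toy run only the first sample flips class when its first feature is halved, so one of four samples (25%) is affected.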

| | Age | BMI | PSA | GS | pT | ECE | SV | PNI | LNM | SM |
|---|---|---|---|---|---|---|---|---|---|---|
| Age | 0 | 1.42 | 0 | 7.63 | 0.94 | 0.47 | 9.95 | 0.94 | 9.47 | 8.06 |
| BMI | 1.42 | 0 | 1.42 | 11.37 | 0.94 | 0.95 | 8.05 | 2.84 | 6.63 | 9.95 |
| PSA | 0.01 | 2.85 | 0 | 5.21 | 0 | 0.48 | 6.16 | 0.48 | 1.89 | 8.06 |
| GS | 9.94 | 6.64 | 2.37 | 0 | 4.27 | 1.43 | 9.47 | 3.78 | 13.26 | 15.17 |
| pT | 3.78 | 3.8 | 1.42 | 11.84 | 0 | 2.37 | 8.53 | 0.94 | 5.68 | 2.84 |
| ECE | 1.89 | 2.37 | 1.89 | 5.21 | 0.48 | 0 | 8.05 | 1.89 | 2.36 | 5.21 |
| SV | 4.26 | 9.48 | 4.26 | 17.06 | 4.27 | 3.8 | 0 | 3.78 | 4.26 | 8.06 |
| PNI | 4.26 | 1.9 | 1.89 | 8.05 | 2.38 | 3.8 | 5.21 | 0 | 4.74 | 8.53 |
| LNM | 4.73 | 2.37 | 4.26 | 14.21 | 1.9 | 0 | 11.37 | 0.47 | 0 | 2.84 |
| SM | 8.05 | 5.22 | 4.74 | 9.47 | 0 | 1.9 | 18.95 | 1.41 | 7.1 | 0 |
| Threshold | 4.26 | 4.00556 | 2.47222 | 10.0056 | 1.68667 | 1.68889 | 9.52667 | 1.83667 | 6.15444 | 7.63556 |
| Candidate | LNM, SM | GS, SM | SM | BMI, pT, SV, LNM | GS | PNI | PSA, SM | ECE | Age, GS | Age, BMI, SV |
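
The Threshold row of the pairwise matrix above is numerically consistent with the mean of the nine off-diagonal entries in each column. For the Age column, (1.42 + 0.01 + 9.94 + 3.78 + 1.89 + 4.26 + 4.26 + 4.73 + 8.05) / 9 = 38.34 / 9 = 4.26. The sketch below, a minimal reading of that arithmetic with illustrative names, reproduces all ten thresholds; it covers the thresholds only and does not attempt to derive the Candidate row.

```python
FEATURES = ["Age", "BMI", "PSA", "GS", "pT", "ECE", "SV", "PNI", "LNM", "SM"]

# Pairwise matrix as reported above (row i, column j); the diagonal is 0.
MATRIX = [
    [0, 1.42, 0, 7.63, 0.94, 0.47, 9.95, 0.94, 9.47, 8.06],
    [1.42, 0, 1.42, 11.37, 0.94, 0.95, 8.05, 2.84, 6.63, 9.95],
    [0.01, 2.85, 0, 5.21, 0, 0.48, 6.16, 0.48, 1.89, 8.06],
    [9.94, 6.64, 2.37, 0, 4.27, 1.43, 9.47, 3.78, 13.26, 15.17],
    [3.78, 3.8, 1.42, 11.84, 0, 2.37, 8.53, 0.94, 5.68, 2.84],
    [1.89, 2.37, 1.89, 5.21, 0.48, 0, 8.05, 1.89, 2.36, 5.21],
    [4.26, 9.48, 4.26, 17.06, 4.27, 3.8, 0, 3.78, 4.26, 8.06],
    [4.26, 1.9, 1.89, 8.05, 2.38, 3.8, 5.21, 0, 4.74, 8.53],
    [4.73, 2.37, 4.26, 14.21, 1.9, 0, 11.37, 0.47, 0, 2.84],
    [8.05, 5.22, 4.74, 9.47, 0, 1.9, 18.95, 1.41, 7.1, 0],
]


def column_thresholds(matrix: list[list[float]]) -> list[float]:
    """Mean of the off-diagonal entries of each column.

    Off-diagonal zeros still count, so every column is divided by n - 1.
    """
    n = len(matrix)
    return [
        sum(matrix[i][j] for i in range(n) if i != j) / (n - 1)
        for j in range(n)
    ]


if __name__ == "__main__":
    for name, t in zip(FEATURES, column_thresholds(MATRIX)):
        print(f"{name}: {t:.5f}")
```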

| Model | Training accuracy (%) | Training sensitivity (%) | Training specificity (%) | Testing accuracy (%) | Testing sensitivity (%) | Testing specificity (%) |
|---|---|---|---|---|---|---|
| SVM | 89.16 | 94.34 | 86.26 | 71.12 | 74.56 | 68.20 |
| BN | 88.86 | 92.64 | 88.80 | 70.96 | 73.77 | 70.47 |
| RF | 94.60 | 100 | 92.28 | 72.05 | 76.25 | 70.36 |
| XGBoost (LR) | 94.24 | 96.40 | 91.88 | 71.63 | 73.67 | 70.57 |
| XGBoost (Tree) | 94.60 | 100 | 92.28 | 72.14 | 76.23 | 70.85 |
| NN | 87.84 | 92.64 | 86.26 | 69.79 | 73.54 | 68.85 |
| RNN | 86.64 | 92.22 | 84.42 | 67.73 | 72.26 | 67.78 |
| LSTM | 88.86 | 96.40 | 86.26 | 71.12 | 72.26 | 68.85 |
| PCNN | 93.60 | 97.60 | 92.28 | 74.70 | 77.57 | 72.15 |
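
The three metrics above follow the standard confusion-matrix definitions: accuracy = (TP + TN) / (TP + TN + FP + FN), sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP). A minimal sketch, assuming BCR is the positive class (label 1); the counts in the usage line are illustrative only, since the underlying confusion matrices are not reported in the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    tp: int  # BCR predicted as BCR
    fn: int  # BCR predicted as non-BCR
    tn: int  # non-BCR predicted as non-BCR
    fp: int  # non-BCR predicted as BCR


def confusion_from_labels(y_true: list[int], y_pred: list[int]) -> Confusion:
    """Count outcomes, treating label 1 (BCR) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    return Confusion(
        tp=sum(t == 1 and p == 1 for t, p in pairs),
        fn=sum(t == 1 and p == 0 for t, p in pairs),
        tn=sum(t == 0 and p == 0 for t, p in pairs),
        fp=sum(t == 0 and p == 1 for t, p in pairs),
    )


def metrics(c: Confusion) -> dict[str, float]:
    """Accuracy, sensitivity and specificity, in percent."""
    total = c.tp + c.fn + c.tn + c.fp
    return {
        "accuracy": 100.0 * (c.tp + c.tn) / total,
        "sensitivity": 100.0 * c.tp / (c.tp + c.fn),
        "specificity": 100.0 * c.tn / (c.tn + c.fp),
    }


if __name__ == "__main__":
    # Illustrative counts, not study data.
    print(metrics(Confusion(tp=35, fn=5, tn=100, fp=60)))
```

With these counts the output is 67.5% accuracy, 87.5% sensitivity and 62.5% specificity, showing how a model can score well on one metric while trailing on another.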

| Model | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|
| SVM | 83.29 | 87.82 | 81.00 |
| BN | 83.52 | 86.57 | 82.88 |
| RF | 86.66 | 91.89 | 84.24 |
| XGBoost (LR) | 86.16 | 88.42 | 84.85 |
| XGBoost (Tree) | 86.66 | 91.43 | 84.94 |
| NN | 82.18 | 86.77 | 80.59 |
| RNN | 81.68 | 84.64 | 80.44 |
| LSTM | 84.78 | 88.42 | 83.34 |
| PCNN | 87.16 | 90.80 | 85.62 |
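
Read column by column, the table above shows PCNN with the highest accuracy (87.16%, 0.50 points above RF and XGBoost (Tree)) and the highest specificity (85.62%, 0.68 points above XGBoost (Tree)), while RF has the highest sensitivity (91.89%, 0.46 points above XGBoost (Tree)). The short sketch below re-derives these leaders and margins from the reported values only; the function name is illustrative.

```python
# Values from the table above: (accuracy, sensitivity, specificity) in percent.
RESULTS = {
    "SVM": (83.29, 87.82, 81.00),
    "BN": (83.52, 86.57, 82.88),
    "RF": (86.66, 91.89, 84.24),
    "XGBoost (LR)": (86.16, 88.42, 84.85),
    "XGBoost (Tree)": (86.66, 91.43, 84.94),
    "NN": (82.18, 86.77, 80.59),
    "RNN": (81.68, 84.64, 80.44),
    "LSTM": (84.78, 88.42, 83.34),
    "PCNN": (87.16, 90.80, 85.62),
}
METRICS = ("accuracy", "sensitivity", "specificity")


def leaders(results: dict[str, tuple[float, float, float]]) -> dict[str, tuple[str, float, float]]:
    """For each metric: (best model, its value, margin over the runner-up)."""
    out = {}
    for k, metric in enumerate(METRICS):
        # sorted() is stable, so tied models keep their table order.
        ranked = sorted(results, key=lambda model: results[model][k], reverse=True)
        best, second = ranked[0], ranked[1]
        margin = round(results[best][k] - results[second][k], 2)
        out[metric] = (best, results[best][k], margin)
    return out


if __name__ == "__main__":
    for metric, (model, value, margin) in leaders(RESULTS).items():
        print(f"{metric}: {model} {value:.2f} (+{margin:.2f})")
```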

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, J.-K.; Hong, S.-H.; Choi, I.-Y. Partial Correlation Analysis and Neural-Network-Based Prediction Model for Biochemical Recurrence of Prostate Cancer after Radical Prostatectomy. Appl. Sci. 2023, 13, 891. https://doi.org/10.3390/app13020891
