Empirical Comparison of Higher-Order Mutation Testing and Data-Flow Testing of C# with the Aid of Genetic Algorithm

Abstract

1. Introduction

2. Data Flow and Mutation Testing

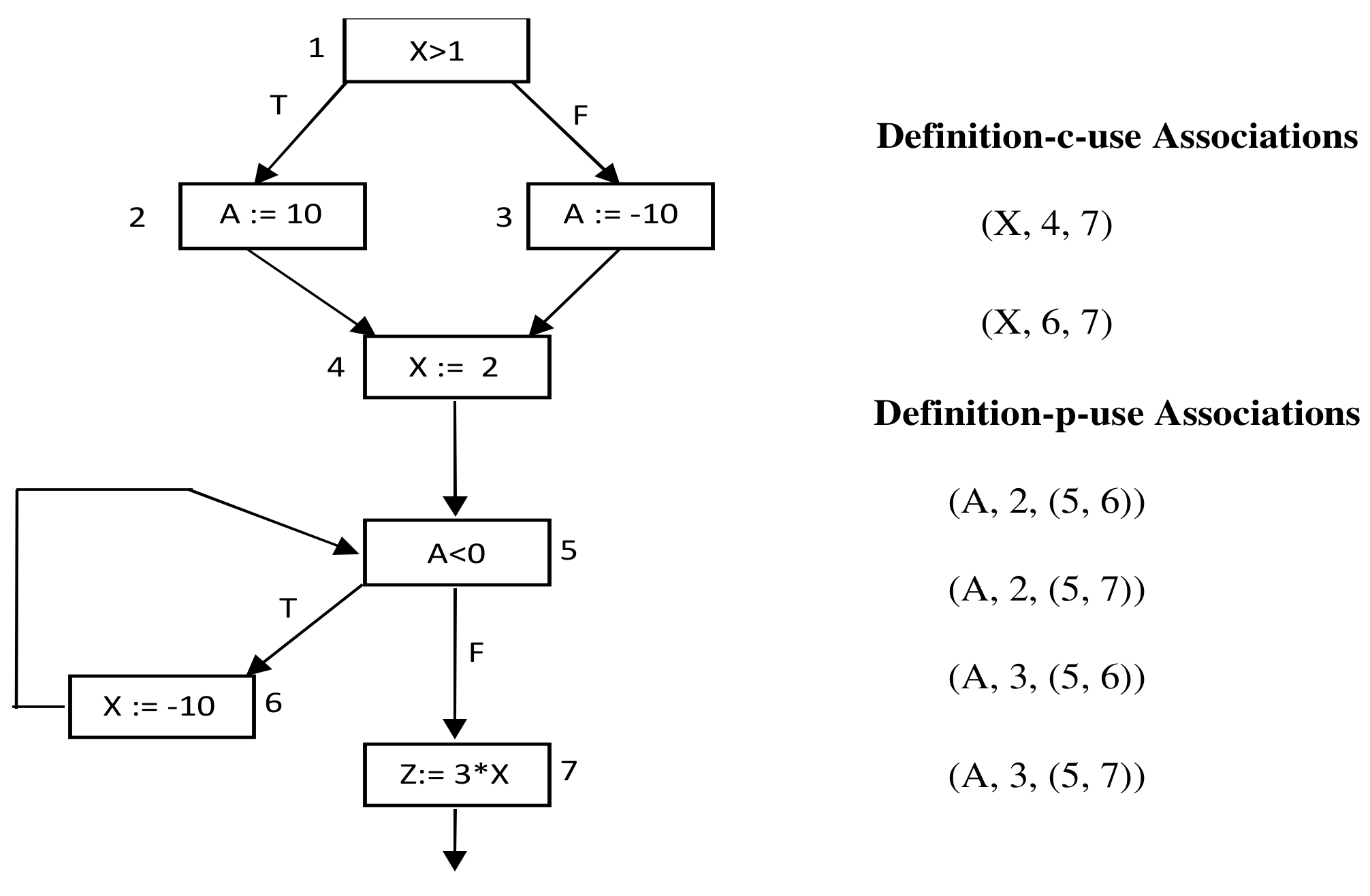

2.1. Data Flow Testing

2.2. Higher-Order Mutation

2.3. Relationship between Testing Criteria

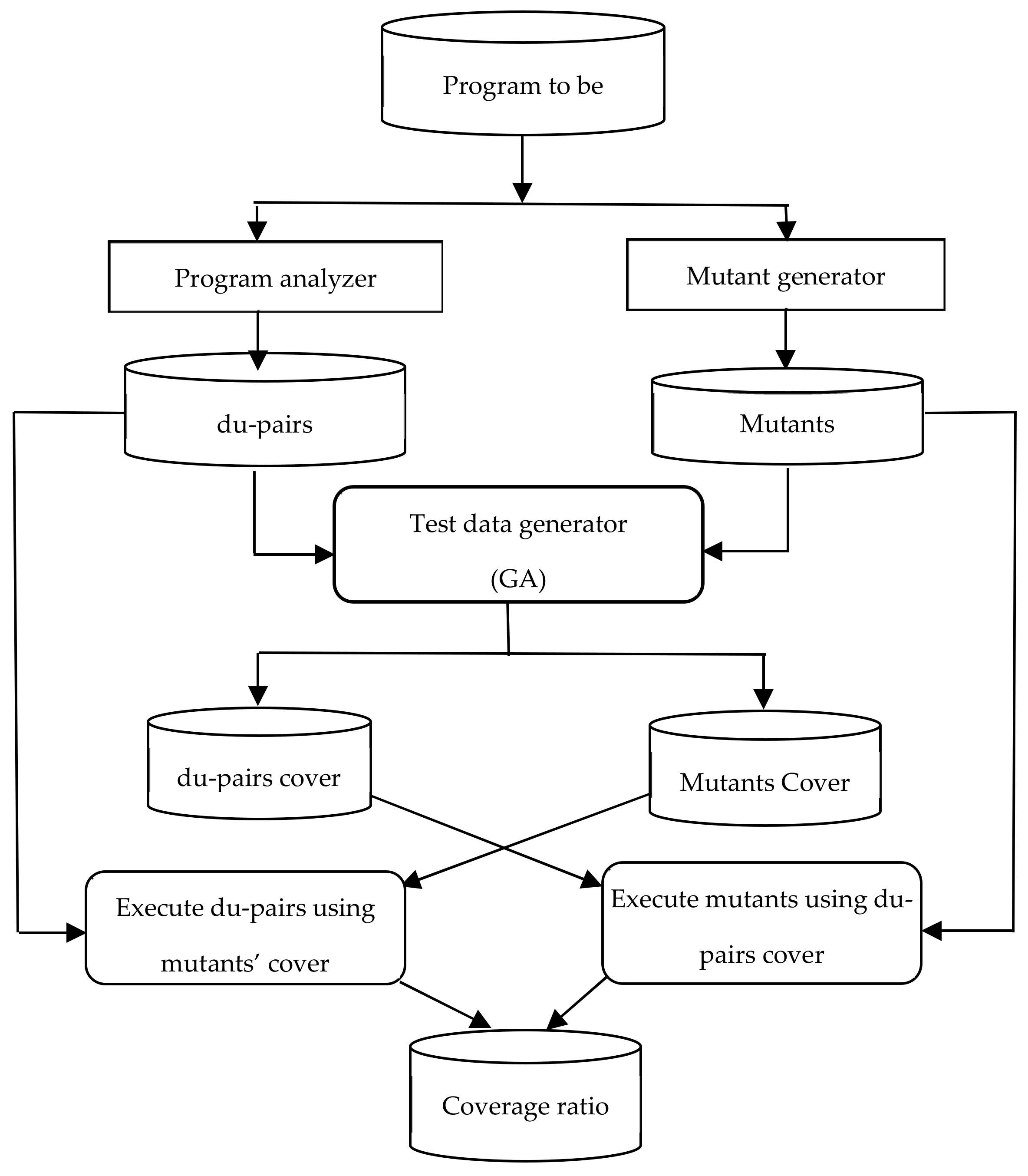

3. The Proposed Approach

- Program analyzer.

- Mutant generator.

- Test data generator.

3.1. Program Analyzer Module

- An instrumented version (P′) of the given original program. The system instruments the program with software probes. During execution, these probes record the number of each traversed line, which keeps track of the path taken.

- The static analysis reports, which contain information about the components of each program: classes, objects, statements, variables, and functions.

- The control flow graph for the tested program.

- The list of def-use pairs of the variables in the tested program.
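
As a rough illustration of the probe idea, the sketch below uses Python's `sys.settrace` hook in place of source-level instrumentation of a C# program. The helper `run_with_probes` and the sample function `middle` are hypothetical names chosen for this example, not part of the described system; the hook simply records which lines of the function run, in order.

```python
import sys


def run_with_probes(func, *args):
    """Run func(*args) and record the line offsets it executes, in order."""
    first_line = func.__code__.co_firstlineno
    path = []

    def tracer(frame, event, arg):
        # Follow only the function under test, not anything it calls.
        if frame.f_code is not func.__code__:
            return None
        if event == "line":
            path.append(frame.f_lineno - first_line)  # offset from the def line
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(previous)  # always restore the earlier trace hook
    return result, path


def middle(a, b, c):          # offset 0
    if a < b:                 # offset 1
        if b < c:             # offset 2
            return b          # offset 3
        return max(a, c)      # offset 4
    if a < c:                 # offset 5
        return a              # offset 6
    return max(b, c)          # offset 7


result, path = run_with_probes(middle, 1, 2, 3)
print(result, path)
```

The recorded offsets can then be matched against the line numbers of a def-use pair to decide whether a given run covered it.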

3.2. Mutant Generator Module

3.3. Test Data Generator Module

- Consider the population to be sets of test data (test suite). Each set of test data is represented by a binary string called a chromosome.

- Find the sets of test data that form the initial population. These sets can be generated randomly according to the format and type of data used by the program under test, or they can be supplied as input to the GA.

- Determine the fitness of each individual in the population, which is based on a fitness function that is problem dependent.

- Select two individuals that will be combined in some way to contribute to the next generation.

- Apply the crossover and mutation processes.

- The input to this phase includes:

- Instrumented version of the program to be tested.

- List of def-use paths to be covered.

- Number of program input variables.

- Domain and precision of input data.

- Population size.

- Maximum number of generations.

- Probabilities of crossover and mutation.

- A dynamic analysis report that shows the traversed path(s) and list of covered and uncovered def-use pairs after executing the given program with the test cases generated by the GA.

- Set of test cases (du pairs cover) that cover the def-use paths of the given program, if possible. The GA may fail to find test cases to cover some of the specified def-use paths when they are infeasible (i.e., no test data can be found by the GA to cover them).

- Set of test cases (mutants cover) that cover the mutant.
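
The steps and parameters listed above can be sketched as a small genetic algorithm. This is a minimal illustration under stated assumptions, not the authors' implementation: the toy program `covered_targets`, the target names, the integer decoding, and the fitness rule (number of still-uncovered targets an individual reaches) are all hypothetical stand-ins for the instrumented program and its def-use paths.

```python
import random


def decode(chromosome, n_vars, low, high, bits):
    """Split a bit string into n_vars integers scaled into [low, high]."""
    values = []
    for i in range(n_vars):
        raw = int(chromosome[i * bits:(i + 1) * bits], 2)
        values.append(low + raw * (high - low) // (2 ** bits - 1))
    return values


def covered_targets(a, b, c):
    """Toy program under test: returns the IDs of the targets one run reaches."""
    if a + b <= c or a + c <= b or b + c <= a:
        return {"not_triangle"}
    if a == b == c:
        return {"equilateral"}
    if a == b or b == c or a == c:
        return {"isosceles"}
    return {"scalene"}


def generate_tests(targets, n_vars=3, low=1, high=16, bits=4,
                   pop_size=20, max_gen=50, p_cross=0.8, p_mut=0.05, seed=0):
    rng = random.Random(seed)
    length = n_vars * bits
    pop = ["".join(rng.choice("01") for _ in range(length))
           for _ in range(pop_size)]
    remaining, suite = set(targets), []
    for _ in range(max_gen):
        if not remaining:
            break
        weights = []
        for chrom in pop:
            inputs = decode(chrom, n_vars, low, high, bits)
            new = covered_targets(*inputs) & remaining
            if new:                       # keep inputs that cover something new
                suite.append(inputs)
                remaining -= new
            weights.append(len(new) + 1)  # +1 keeps every weight positive
        next_pop = []
        while len(next_pop) < pop_size:
            p1, p2 = rng.choices(pop, weights=weights, k=2)  # roulette selection
            if rng.random() < p_cross:                       # one-point crossover
                cut = rng.randrange(1, length)
                p1, p2 = p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]
            for child in (p1, p2):                           # bit-flip mutation
                next_pop.append("".join(
                    b if rng.random() >= p_mut else "10"[int(b)] for b in child))
        pop = next_pop[:pop_size]
    return suite, remaining


targets = {"not_triangle", "equilateral", "isosceles", "scalene"}
suite, remaining = generate_tests(targets)
print(len(suite), "test cases kept;", "uncovered:", sorted(remaining))
```

Any targets still in `remaining` when the generation budget runs out play the role of the def-use paths the GA failed to cover.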

4. The Experiments and Results

4.1. Subject Programs

4.2. Mutant Generator

4.3. GA Parameters Setup

- The programs’ titles (in column 1),

- The number of first-order mutants (in column 2),

- The number of killable first-order mutants (in column 3),

- The number of second-order mutants (in column 4),

- The number of killable second-order mutants (in column 5),

- The number of killable second-order mutants that affect du-pairs (in column 6), and

- The number of du-pairs for each subject program (in column 7).

- Apply the CREAM tool to generate first-order mutants.

- Generate the second-order mutants for each program by applying the CREAM tool on all first-order mutants.

- Eliminate the second-order mutants that are stillborn, equivalent, or do not contain a def or use for any du-pairs.

- Generate the def-use pairs for each program.

- Eliminate the stillborn and the equivalent mutants from the first and second-order mutants.

- Find the killable second-order mutants that affect the du-pairs.
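
The idea of producing second-order mutants by re-applying a first-order generator to every first-order mutant can be sketched as follows. This does not use CREAM or C#; it is a hypothetical Python analogue built on the standard `ast` module, with only two assumed operators (`*` replaced by `+`, `/` replaced by `-`). Because the replacement operators are not themselves mutable here, a mutated site cannot be mutated a second time.

```python
import ast

# Assumed operator replacements for this sketch only.
REPLACEMENTS = {ast.Mult: ast.Add, ast.Div: ast.Sub}


def _sites(tree):
    """All binary operations whose operator has a replacement."""
    return [n for n in ast.walk(tree)
            if isinstance(n, ast.BinOp) and type(n.op) in REPLACEMENTS]


def first_order_mutants(source):
    """One mutant per mutable operator, each with exactly one change."""
    mutants = []
    for index in range(len(_sites(ast.parse(source)))):
        tree = ast.parse(source)          # fresh copy for every mutant
        node = _sites(tree)[index]
        node.op = REPLACEMENTS[type(node.op)]()
        mutants.append(ast.unparse(tree))
    return mutants


def second_order_mutants(source):
    """Apply the first-order generator to each first-order mutant."""
    second = set()                        # the same pair arises in both orders
    for mutant in first_order_mutants(source):
        second.update(first_order_mutants(mutant))
    return sorted(second)


program = "d = n * m\ne = n / m"
print(first_order_mutants(program))
print(second_order_mutants(program))
```

A filtering pass (for stillborn or equivalent mutants, or for mutants that touch no def or use) would follow this generation step; it is omitted here.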

| Tested Program | All 1st Order | Killable 1st Order | All 2nd Order | Killable 2nd Order | Du-Based Killable 2nd Order | Du-Pairs |
|---|---|---|---|---|---|---|
| Triangle | 163 | 146 | 30,799 | 23,799 | 2459 | 102 |
| Sort | 120 | 104 | 15,960 | 11,547 | 2333 | 95 |
| Stack | 70 | 57 | 5146 | 3507 | 354 | 68 |
| Reversed | 98 | 78 | 10,444 | 6620 | 956 | 77 |
| Clock | 78 | 74 | 7638 | 7036 | 109 | 64 |
| Quotient | 156 | 140 | 27,184 | 21,338 | 2597 | 89 |
| Product | 117 | 101 | 17,018 | 12,321 | 759 | 71 |
| Area | 112 | 88 | 15,555 | 8656 | 405 | 64 |
| Dist | 83 | 73 | 9207 | 6774 | 274 | 64 |
| Middle | 74 | 70 | 6876 | 5458 | 319 | 70 |
| Total | 1071 | 931 | 145,827 | 107,056 | 10,565 | 764 |
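
The totals in the last row can be re-derived from the per-program rows, and the filtering steps can be summarized as ratios. The short check below copies the figures from the table; the ratio names are labels chosen for this sketch and do not appear in the original.

```python
# Columns: all 1st, killable 1st, all 2nd, killable 2nd, du-based killable 2nd, du-pairs
rows = {
    "Triangle": (163, 146, 30799, 23799, 2459, 102),
    "Sort":     (120, 104, 15960, 11547, 2333, 95),
    "Stack":    (70, 57, 5146, 3507, 354, 68),
    "Reversed": (98, 78, 10444, 6620, 956, 77),
    "Clock":    (78, 74, 7638, 7036, 109, 64),
    "Quotient": (156, 140, 27184, 21338, 2597, 89),
    "Product":  (117, 101, 17018, 12321, 759, 71),
    "Area":     (112, 88, 15555, 8656, 405, 64),
    "Dist":     (83, 73, 9207, 6774, 274, 64),
    "Middle":   (74, 70, 6876, 5458, 319, 70),
}

# Column-wise sums reproduce the "Total" row.
totals = [sum(column) for column in zip(*rows.values())]
all_1st, kill_1st, all_2nd, kill_2nd, du_2nd, du_pairs = totals

killable_first_ratio = kill_1st / all_1st     # share of 1st-order mutants kept
killable_second_ratio = kill_2nd / all_2nd    # share of 2nd-order mutants kept
du_based_ratio = du_2nd / kill_2nd            # share of those that affect du-pairs

print(totals)
print(f"{killable_first_ratio:.1%} {killable_second_ratio:.1%} {du_based_ratio:.1%}")
```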

4.4. Comparison Hypotheses

4.5. Experimental Results

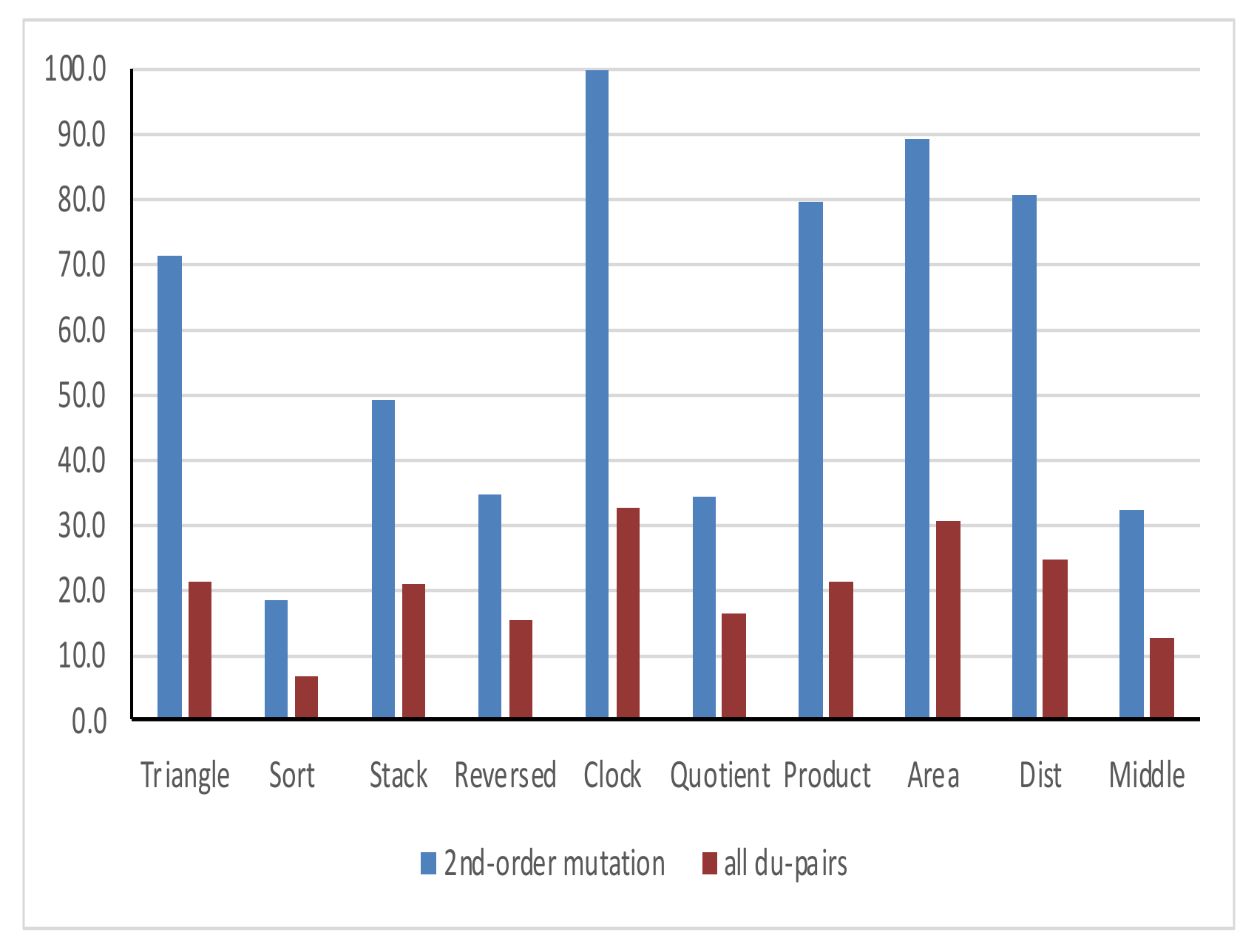

4.5.1. Coverage Cost

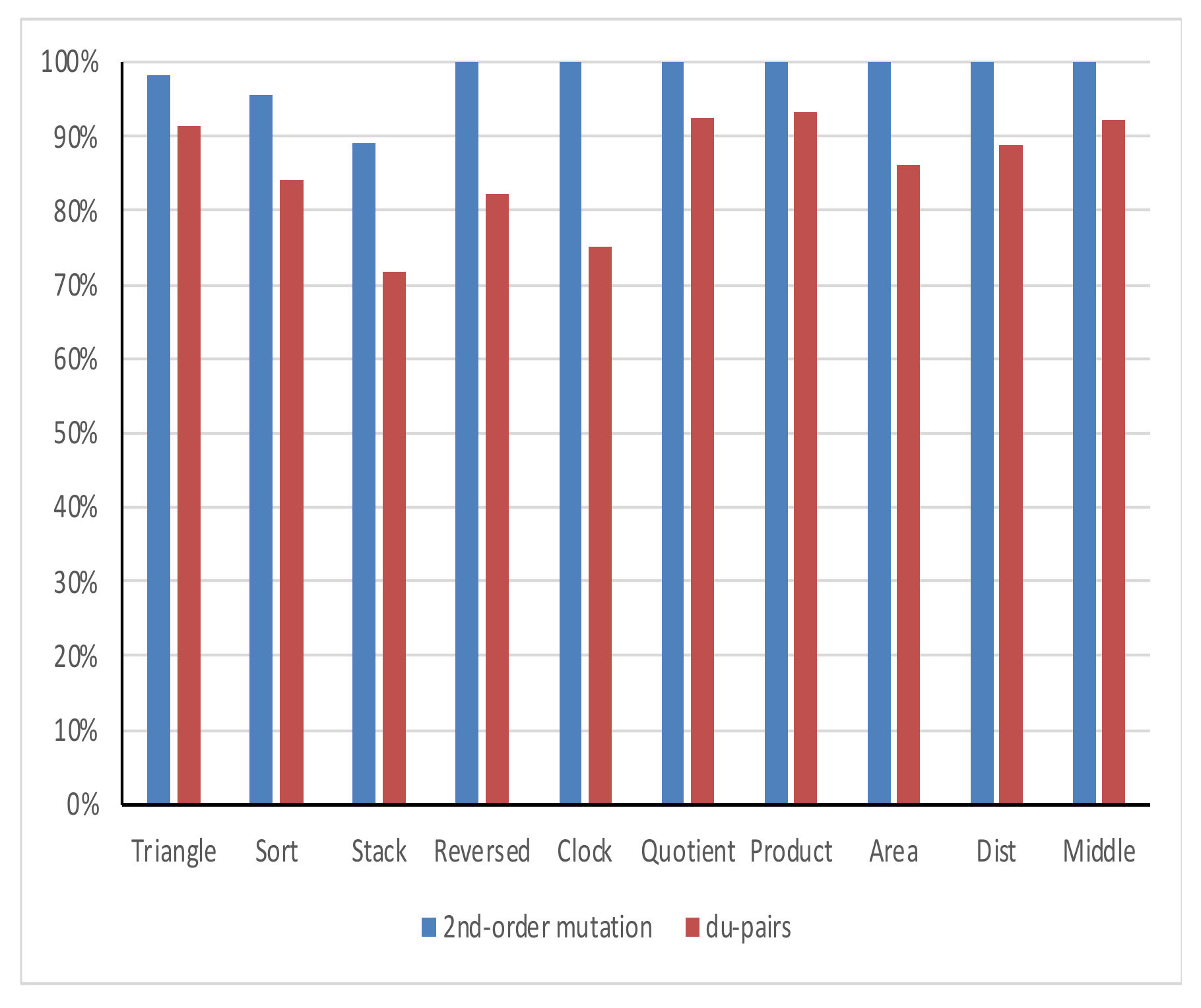

4.5.2. Coverage Adequacy

4.5.3. Failure Detection Efficiency

4.5.4. Threats to Validity

- (1) External validity

- (2) Internal validity

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Original Code | First-Order Mutant | Second-Order Mutant (Higher-Order) | Third-Order Mutant (Higher-Order) |
|---|---|---|---|
| double n, m<br>double d = n × m<br>double d = n/m | double n, m<br>double d = n + m<br>double d = n/m | double n, m<br>double d = n + m<br>double d = n − m | double n, m<br>double d = n + m<br>double d = n − m++ |
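
To make the notion of killing a mutant concrete, the sketch below re-expresses the original code and its first- and second-order mutants from the table as Python functions. Returning both computed values as a tuple, and the helper name `kills`, are assumptions made for this illustration; a test input kills a mutant when the mutant's output differs from the original's.

```python
def original(n, m):
    return (n * m, n / m)


def first_order(n, m):
    return (n + m, n / m)      # one change: n * m replaced by n + m


def second_order(n, m):
    return (n + m, n - m)      # second change: n / m replaced by n - m


def kills(test_input, mutant):
    """A test input kills a mutant if the outputs differ from the original."""
    return original(*test_input) != mutant(*test_input)


for test_input in [(2.0, 2.0), (3.0, 2.0)]:
    print(test_input,
          "first-order killed:", kills(test_input, first_order),
          "second-order killed:", kills(test_input, second_order))
```

The input (2.0, 2.0) leaves the first-order mutant alive because 2 × 2 equals 2 + 2, yet it kills the second-order mutant, which shows why the choice of test data matters for each mutant order.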

| Title | Description | Size (LOC, Lines of Code) |
|---|---|---|
| Triangle | Finds the type of a triangle from the lengths of its sides. | 52 |
| Sort | Sorts a group of items. | 40 |
| Stack | Uses the push method to add a group of elements and the pop method to remove an element from the stack. | 51 |
| Reversed | Returns the array with its elements reversed. | 44 |
| Clock | Returns the time in hours, minutes, and seconds. | 63 |
| Quotient | Returns the quotient and the remainder of the division of two numbers. | 43 |
| Product | Finds the sum, product, and difference of three numbers. | 54 |
| Area | Finds the area of a circle, triangle, or rectangle. | 59 |
| Dist | Calls variables through more than one object and prints their dependent values with each call. | 52 |
| Middle | Finds the middle value of three numbers. | 42 |

| Tested Program | Cover Size of 2nd Order Mutants | Cover Size of All Du-Pairs |
|---|---|---|
| Triangle | 71.3 | 21.3 |
| Sort | 18.3 | 6.7 |
| Stack | 49.3 | 21.0 |
| Reversed | 34.7 | 15.3 |
| Clock | 101.3 | 32.7 |
| Quotient | 34.3 | 16.3 |
| Product | 79.7 | 21.3 |
| Area | 89.3 | 30.7 |
| Dist | 80.7 | 24.7 |
| Middle | 32.3 | 12.7 |
| Average | 59.1 | 20.3 |

| Statistic | 2nd-Order Mutant | Du-Pairs |
|---|---|---|
| Mean | 59.13 | 20.27 |
| Variance | 824.4 | 62.96 |
| P(T ≤ t) two-tail | 2.77 × 10⁻⁴ | |
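
The mean and variance rows can be approximately reproduced from the rounded cover sizes reported per program. The table does not state which t-test variant was used, so the sketch below assumes a paired test over the ten programs; that choice, and the function name `paired_t`, are assumptions. It computes only the t statistic and the degrees of freedom, since a p-value would need a t-distribution routine outside the standard library.

```python
from math import sqrt
from statistics import mean, variance

# Cover sizes per program, copied from the cover-size table (rounded values).
mutant_cover = [71.3, 18.3, 49.3, 34.7, 101.3, 34.3, 79.7, 89.3, 80.7, 32.3]
du_cover = [21.3, 6.7, 21.0, 15.3, 32.7, 16.3, 21.3, 30.7, 24.7, 12.7]


def paired_t(xs, ys):
    """Paired t statistic and degrees of freedom for two matched samples."""
    diffs = [x - y for x, y in zip(xs, ys)]
    n = len(diffs)
    t = mean(diffs) / sqrt(variance(diffs) / n)   # variance() is the sample variance
    return t, n - 1


t_stat, dof = paired_t(mutant_cover, du_cover)
print(f"means: {mean(mutant_cover):.2f} vs {mean(du_cover):.2f}")
print(f"variances: {variance(mutant_cover):.1f} vs {variance(du_cover):.2f}")
print(f"t = {t_stat:.2f} with {dof} degrees of freedom")
```

Small differences from the tabulated mean and variance are expected because the inputs here are already rounded to one decimal place.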

| Tested Program | Suite1 | Suite2 | Suite3 | Average |
|---|---|---|---|---|
| Triangle | 98% | 100% | 98% | 98.7% |
| Sort | 96% | 96% | 96% | 95.6% |
| Stack | 89% | 100% | 89% | 92.6% |
| Reversed | 100% | 100% | 100% | 100% |
| Clock | 100% | 100% | 100% | 100% |
| Quotient | 100% | 100% | 100% | 100% |
| Product | 100% | 100% | 100% | 100% |
| Area | 100% | 100% | 100% | 100% |
| Dist | 100% | 100% | 100% | 100% |
| Middle | 100% | 100% | 100% | 100% |
| Average | 98.3% | 99.6% | 98.3% | 98.7% |

| Tested Program | Suite1 | Suite2 | Suite3 | Average |
|---|---|---|---|---|
| Triangle | 80% | 82% | 80% | 81% |
| Sort | 85% | 85% | 85% | 85% |
| Stack | 89% | 85% | 85% | 86% |
| Reversed | 75% | 80% | 75% | 77% |
| Clock | 80% | 80% | 80% | 80% |
| Quotient | 70% | 73% | 70% | 71% |
| Product | 78% | 80% | 75% | 78% |
| Area | 80% | 83% | 88% | 84% |
| Dist | 79% | 90% | 79% | 83% |
| Middle | 85% | 85% | 85% | 85% |
| Average | 80.1% | 82.3% | 80.2% | 80.9% |

| Statistic | Second-Order Mutant | Du-Pairs |
|---|---|---|
| Mean | 98.7% | 80.9% |
| Variance | 0.1% | 0.2% |
| P(T ≤ t) two-tail | 1.11 × 10⁻⁵ | |

| Tested Program | No. of Faults | Failure Detection Efficiency (2nd-Order Mutation) | Failure Detection Efficiency (Du-Pairs) |
|---|---|---|---|
| Triangle | 2459 | 98% | 91% |
| Sort | 2333 | 96% | 84% |
| Stack | 354 | 89% | 72% |
| Reversed | 956 | 100% | 82% |
| Clock | 109 | 100% | 75% |
| Quotient | 2597 | 100% | 92% |
| Product | 759 | 100% | 93% |
| Area | 405 | 100% | 86% |
| Dist | 274 | 100% | 89% |
| Middle | 319 | 100% | 92% |
| | Total = 10,565 | Average = 98% | Average = 86% |

| Statistic | 2nd-Order Mutant | Du-Pairs |
|---|---|---|
| Mean | 98.3% | 85.7% |
| Variance | 0.1% | 0.6% |
| P(T ≤ t) two-tail | 9.195 × 10⁻⁵ | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abd-Elkawy, E.H.; Ahmed, R. Empirical Comparison of Higher-Order Mutation Testing and Data-Flow Testing of C# with the Aid of Genetic Algorithm. Appl. Sci. 2023, 13, 9170. https://doi.org/10.3390/app13169170