An Improved AoT-DCGAN and T-CNN Hybrid Deep Learning Model for Intelligent Diagnosis of PTCs Quality under Small Sample Space

Abstract

1. Introduction

- (1)

- We design a hybrid deep learning model for the automatic classification of PTCs curves. The model achieves good classification results under small samples, enabling the fast and quantitative evaluation of the connection quality of PTCs.

- (2)

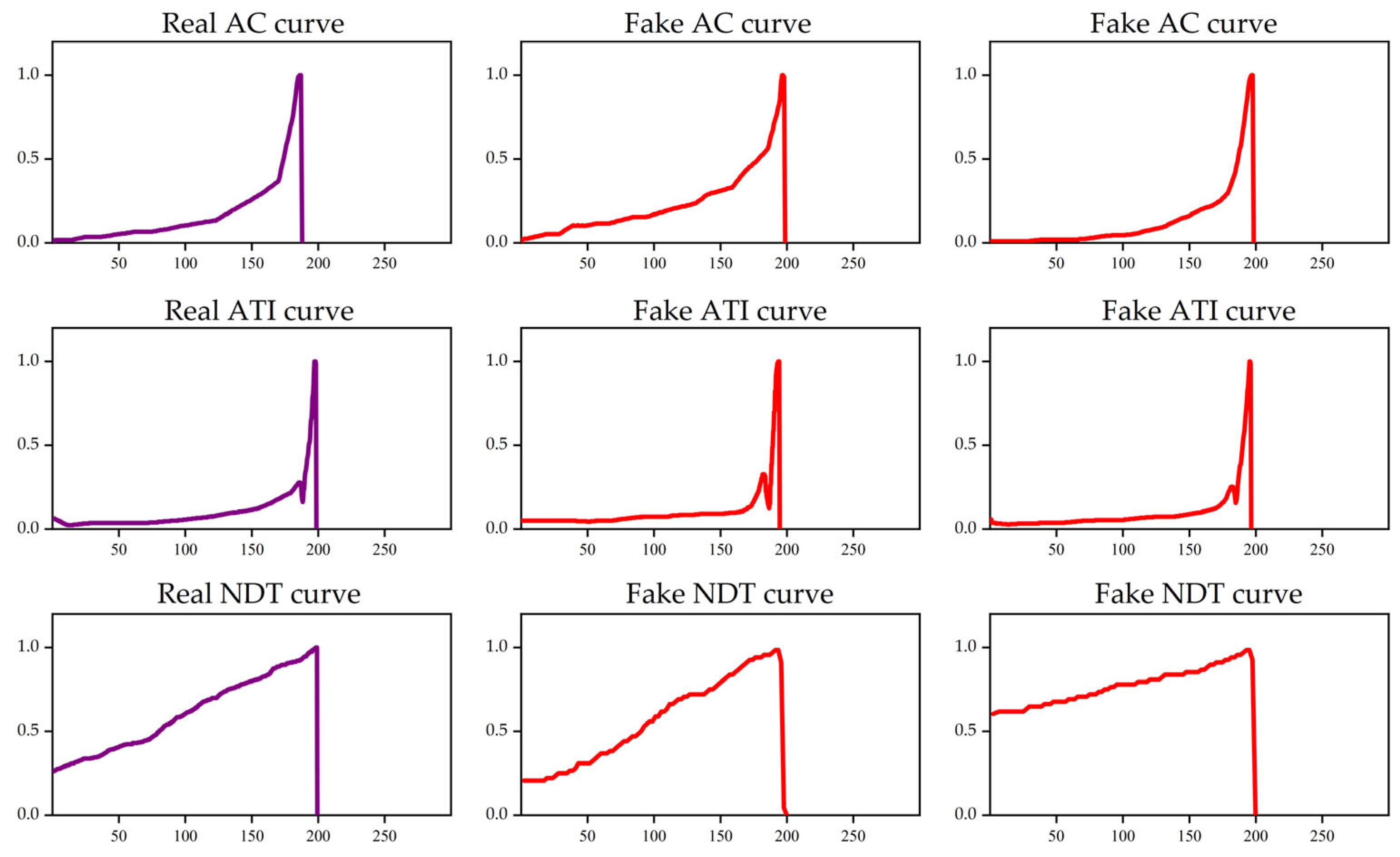

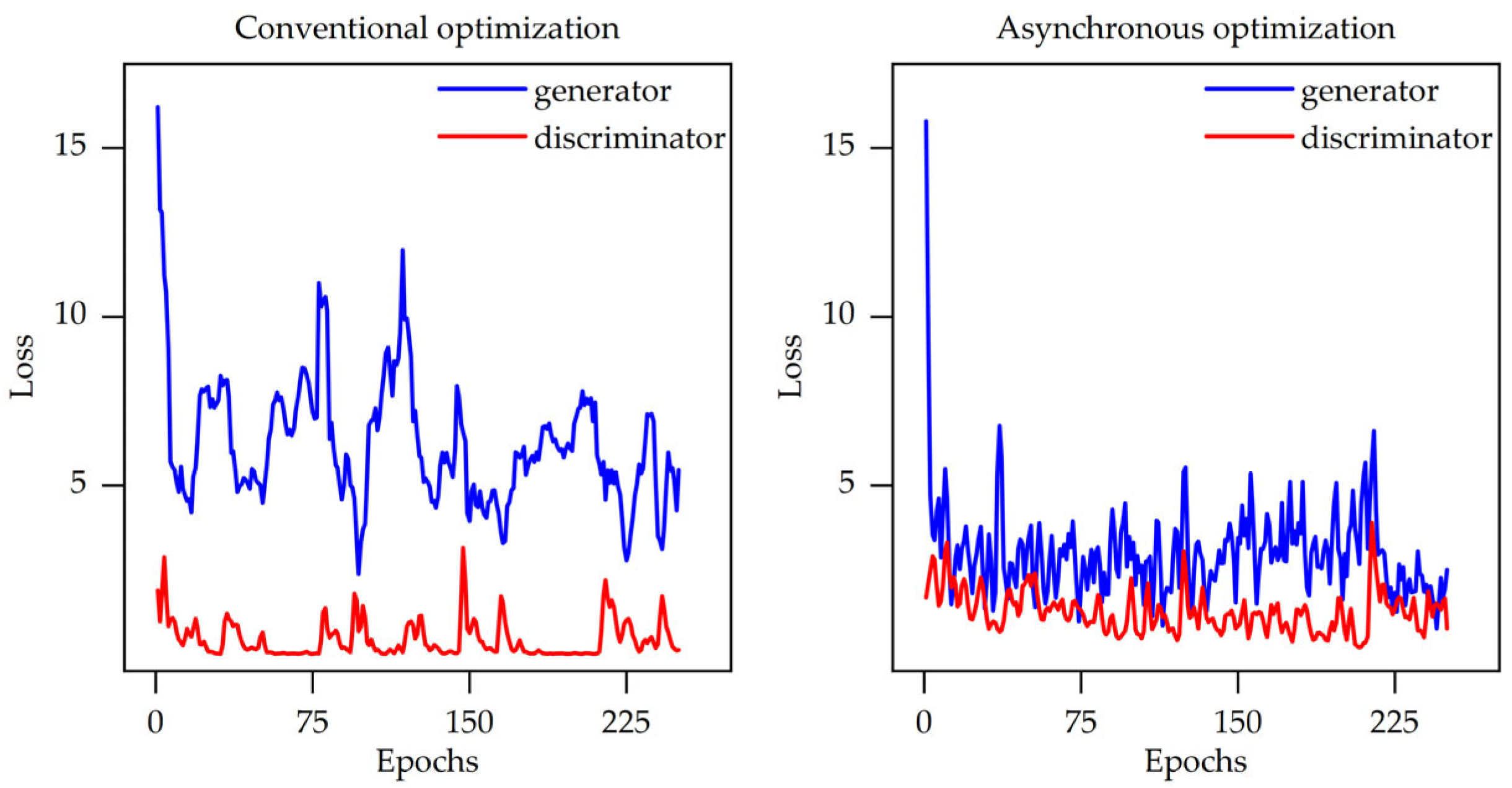

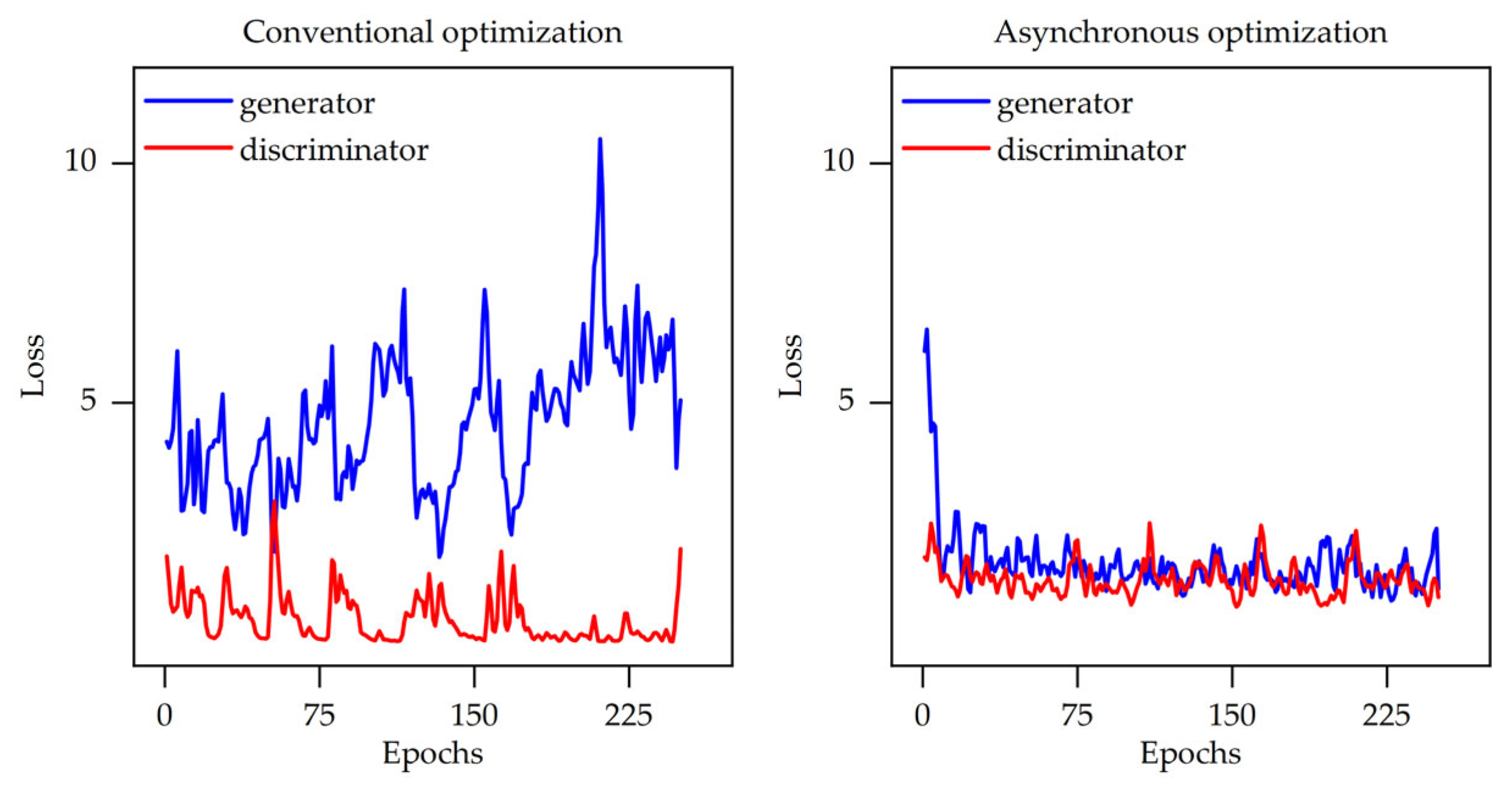

- We propose an AoT-DCGAN model capable of generating samples that closely resemble real PTCs curves, exhibiting high authenticity and accuracy. Furthermore, the model training process is more stable, effectively addressing the issue of gradient vanishing.

- (3)

- We introduce a T-CNN model for classifying PTCs curves which achieves superior classification performance compared to traditional machine learning and deep learning models.
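For context, DCGAN-style models such as the AoT-DCGAN in contribution (2) build on the standard GAN minimax objective (Goodfellow et al., 2014). The notation below (generator $G$, discriminator $D$, real-curve distribution $p_{\text{data}}$, noise prior $p_{\mathbf{z}}$) is generic and assumed here; the AoT-specific modifications are not reproduced.

$$
\min_{G}\max_{D}\; V(D,G) =
\mathbb{E}_{\mathbf{x}\sim p_{\text{data}}}\big[\log D(\mathbf{x})\big]
+ \mathbb{E}_{\mathbf{z}\sim p_{\mathbf{z}}}\big[\log\big(1 - D(G(\mathbf{z}))\big)\big]
$$

Writing $D = \sigma(a)$ for a discriminator logit $a$, the generator's term has gradient $\partial \log(1-\sigma(a))/\partial a = -\sigma(a)$, which tends to zero when $D$ confidently rejects generated curves early in training; this is the vanishing-gradient issue referred to above. A common remedy, not necessarily the one used in AoT-DCGAN, is the non-saturating generator loss, whose gradient $\partial\big(-\log\sigma(a)\big)/\partial a = -\big(1-\sigma(a)\big)$ stays close to $-1$ in that regime:

$$
\mathcal{L}_G^{\text{NS}} = -\,\mathbb{E}_{\mathbf{z}\sim p_{\mathbf{z}}}\big[\log D(G(\mathbf{z}))\big]
$$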

2. Materials and Methods

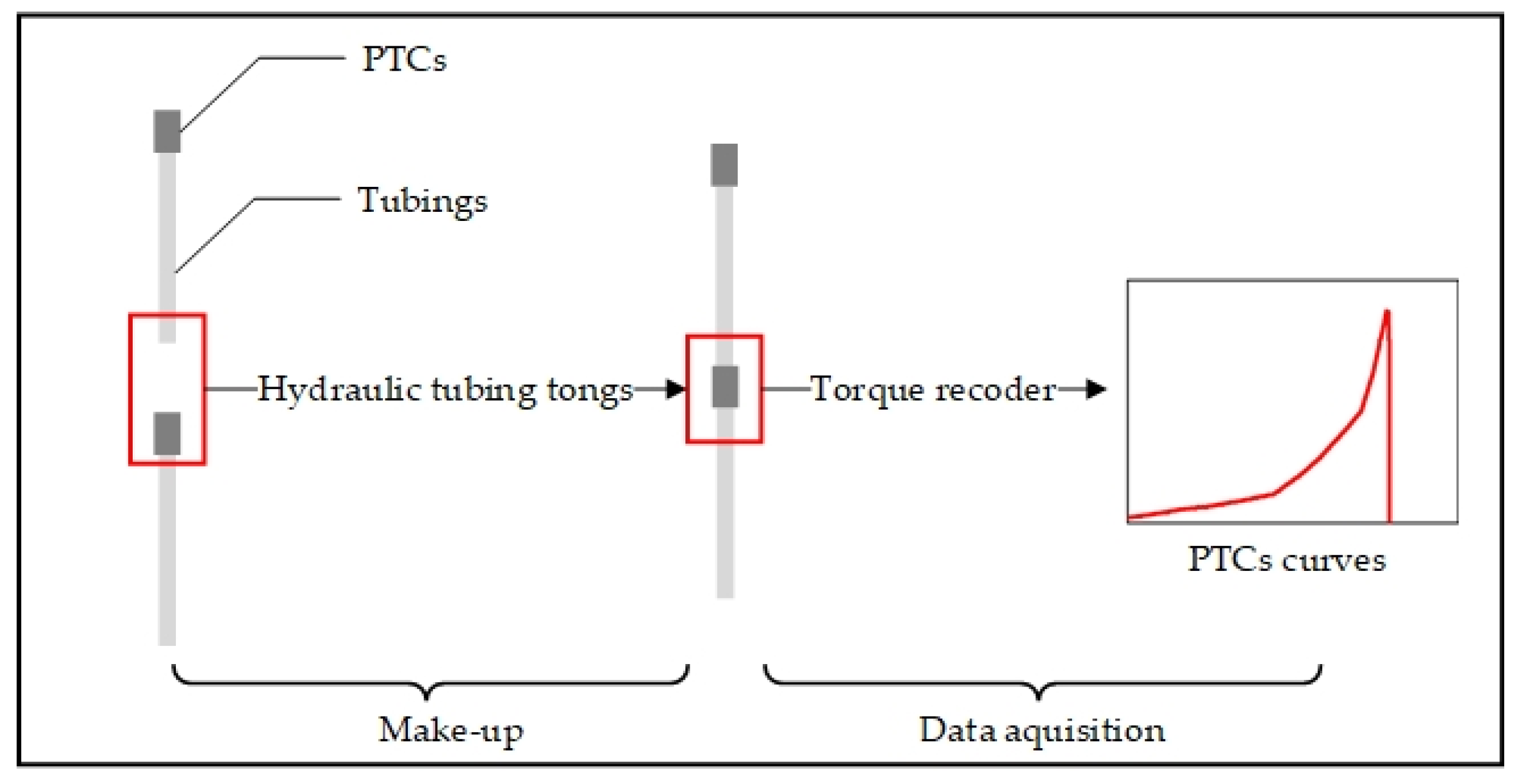

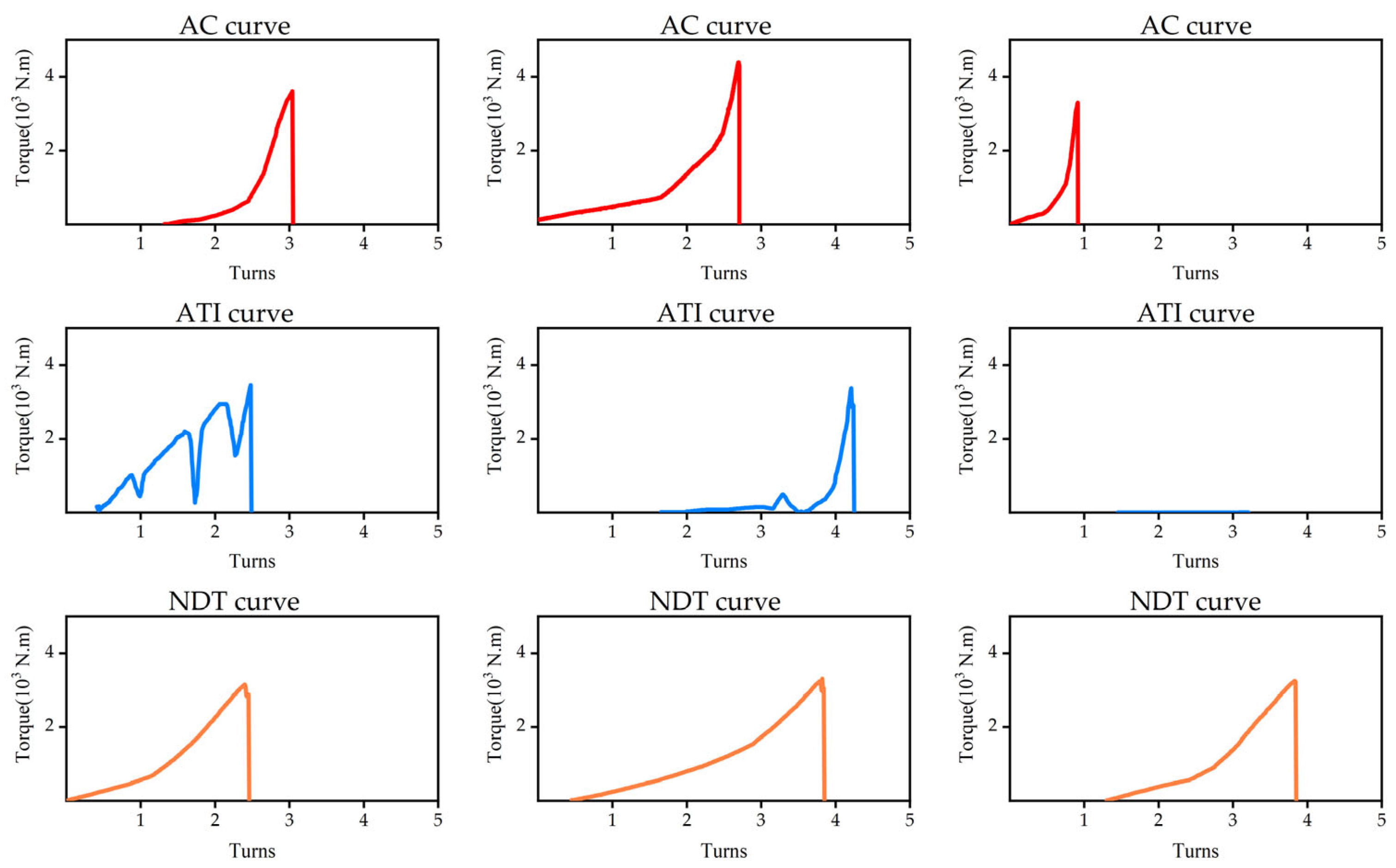

2.1. PTCs Curve

2.2. Proposed Method

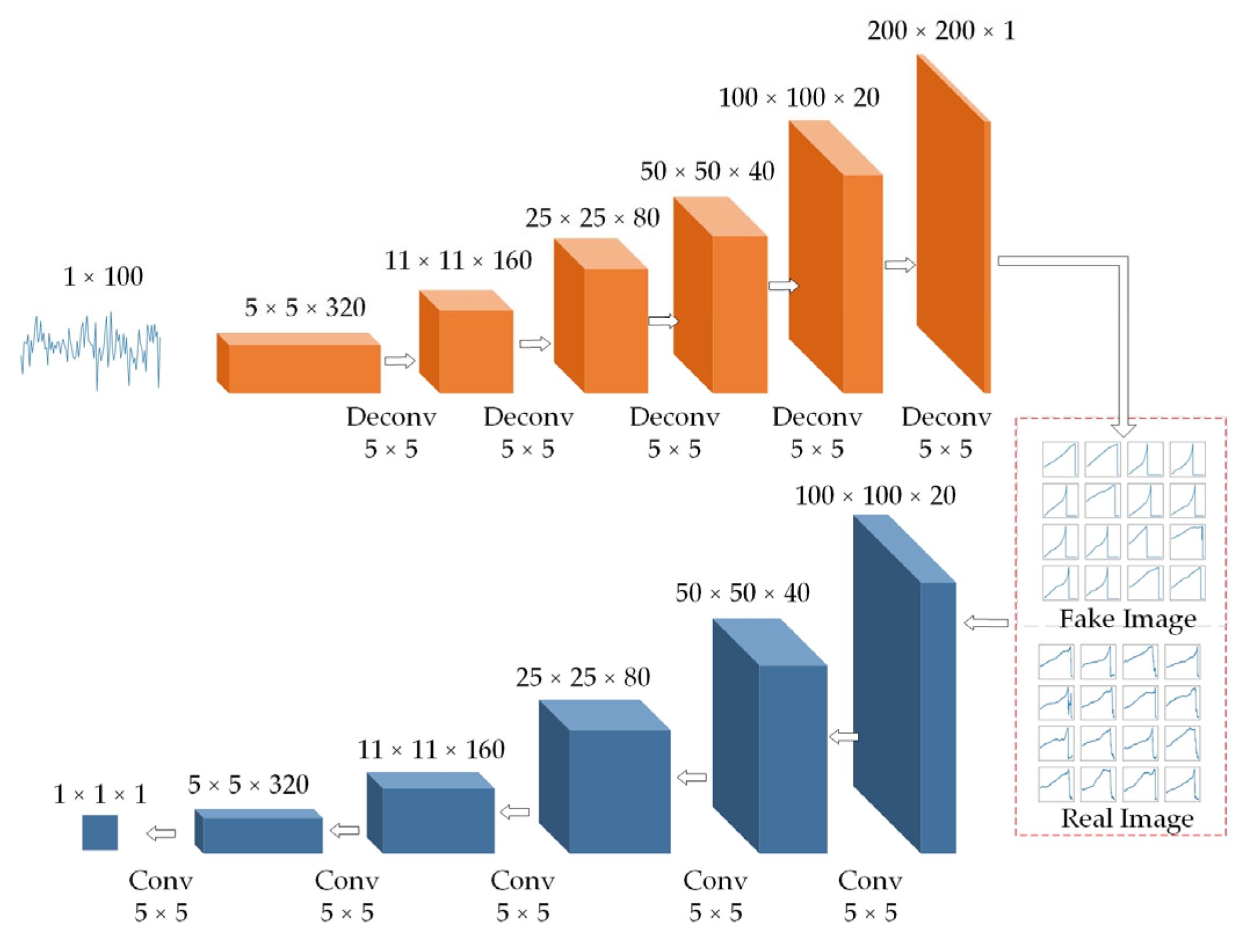

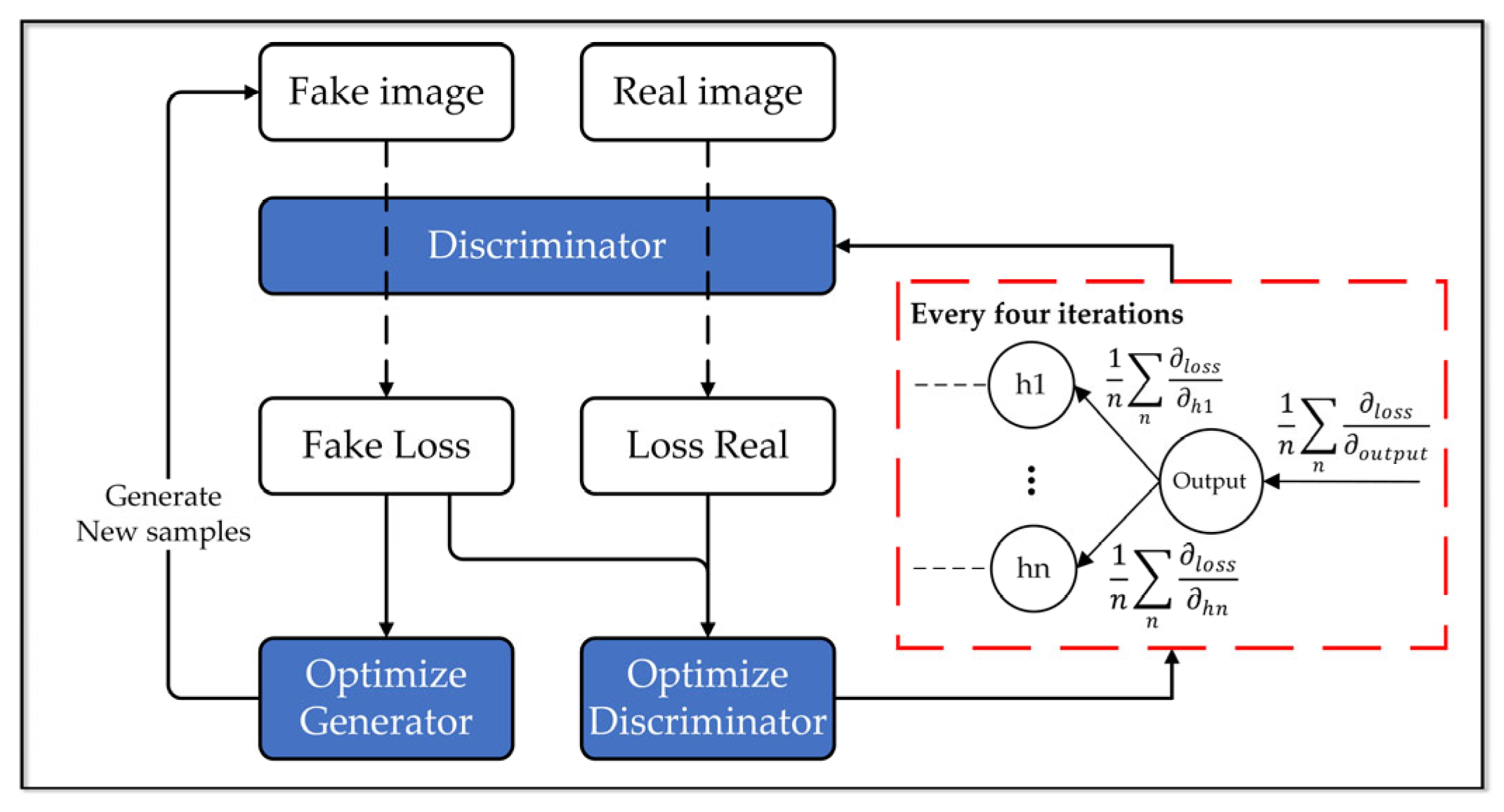

2.2.1. The Structure of the AoT-DCGAN Model

2.2.2. The Structure of T-CNN

3. Results

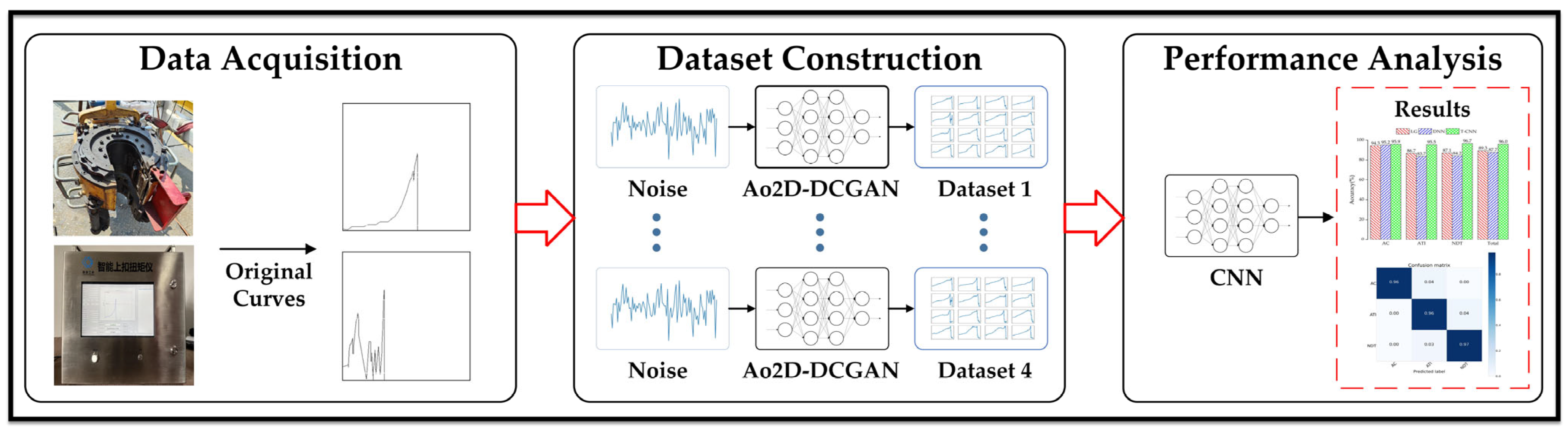

- (1)

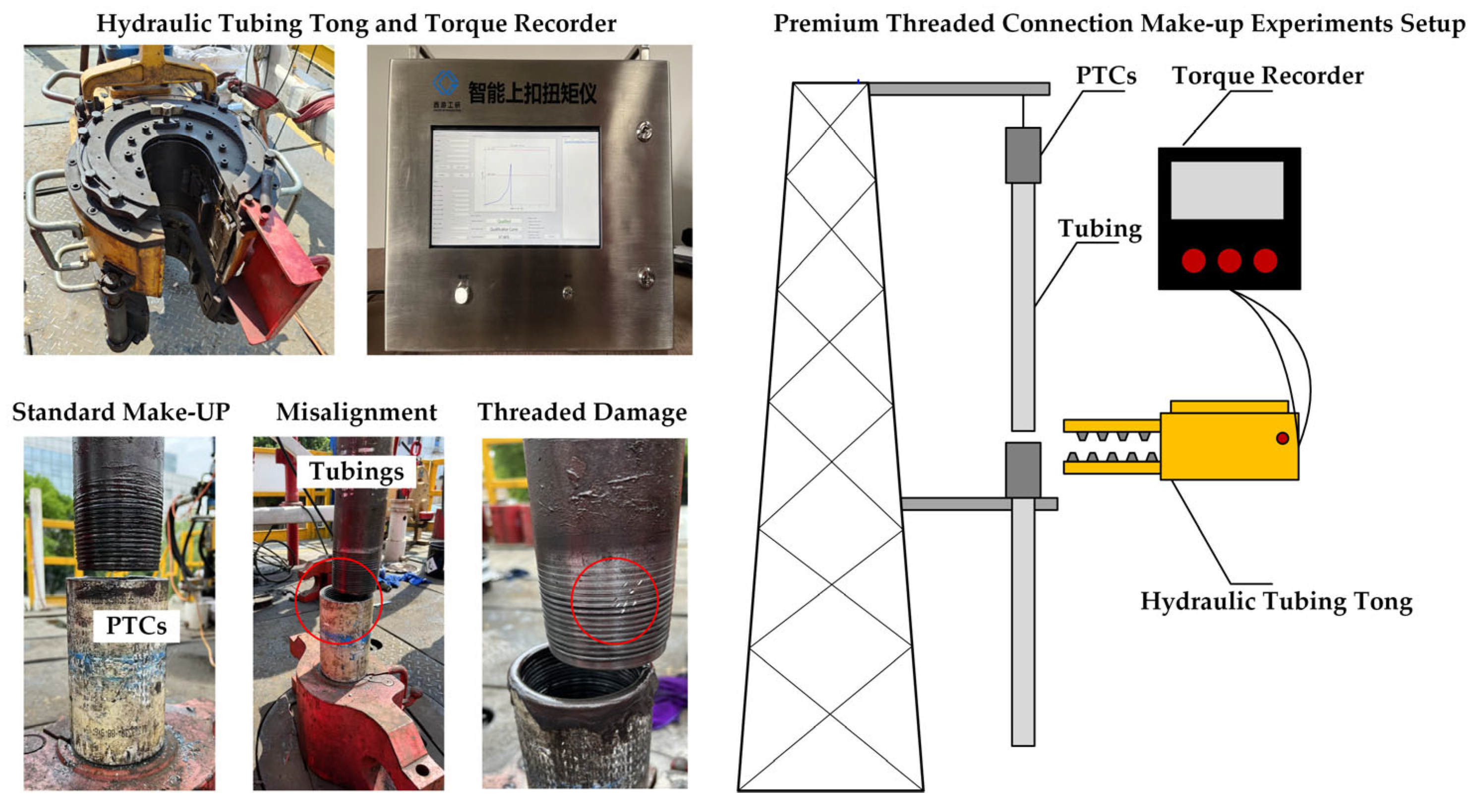

- Data acquisition: Original PTCs curves were generated via premium threaded connection make-up experiments.

- (2)

- Dataset construction: The original dataset was expanded using AoT-DCGAN to generate four sets of datasets of varying quantities, based on the experimental requirements.

- (3)

- Performance analysis: The performance of the proposed deep learning model was compared under different data augmentation ratios and classification models. Additionally, the reliability of the weight optimization strategy proposed in this study was validated.
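The dataset sizes reported in the results tables (600 original curves, then 900, 1050 and 1200) imply how many synthetic curves were added at each step. A minimal sketch, assuming "augmentation ratio" means synthetic-to-original count and that every original curve is kept (the paper's exact definition is not given in this section; the function names are hypothetical):

```python
ORIGINAL_SIZE = 600  # number of real PTCs curves, per the results tables


def synthetic_count(total_size: int, original_size: int = ORIGINAL_SIZE) -> int:
    """Number of generated curves needed to reach total_size (assumes all originals are kept)."""
    if total_size < original_size:
        raise ValueError("augmented dataset cannot be smaller than the original")
    return total_size - original_size


def augmentation_ratio(total_size: int, original_size: int = ORIGINAL_SIZE) -> float:
    """Assumed definition: synthetic samples per real sample."""
    return synthetic_count(total_size, original_size) / original_size


for size in (600, 900, 1050, 1200):
    print(size, synthetic_count(size), augmentation_ratio(size))
```

Under this reading, the three augmented datasets correspond to ratios of 0.5, 0.75 and 1.0; if the authors define the ratio against the total dataset instead, the denominators change accordingly.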

3.1. Data Acquisition

3.2. Dataset Construction

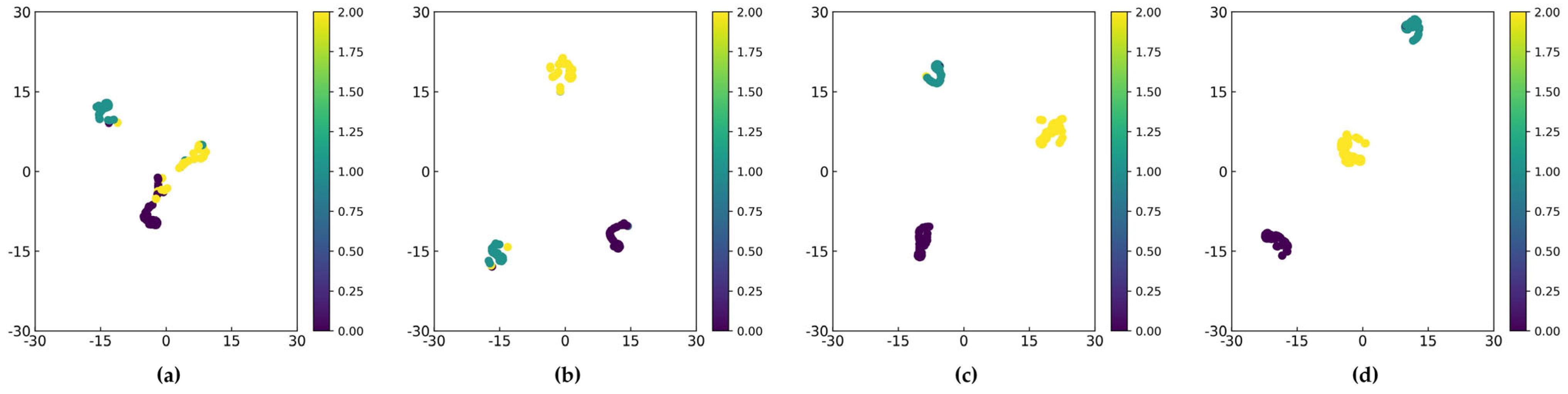

3.3. Performance Analysis

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Make-Up Conditions | Curve Description |
|---|---|
| Standard | Acceptable (AC) |
| Thread Damage | Abnormal Thread Interference (ATI) |
| Misalignment | None Defined Torque (NDT) |

| Parameter | Value |
|---|---|
| Epochs | 250 |
| Batch size | 16 |
| Learning rate | 0.002 |
| Optimizer | Adam |
| Shuffle | Every epoch |
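The "Shuffle: Every epoch" setting means the training order is redrawn before each epoch and then cut into minibatches of 16. A minimal sketch of that loop in plain Python, assuming the final partial batch is kept rather than dropped; the training-set size of 1050 is purely hypothetical, since the train/test split is not given in this section. In a framework such as PyTorch, `learning_rate` would typically be passed as `torch.optim.Adam(params, lr=cfg.learning_rate)`.

```python
import math
import random
from dataclasses import dataclass
from typing import Iterator, List


@dataclass(frozen=True)
class TrainConfig:
    """Training hyperparameters, copied from the parameter table."""
    epochs: int = 250
    batch_size: int = 16
    learning_rate: float = 0.002  # would be handed to an Adam optimizer
    optimizer: str = "Adam"
    shuffle_every_epoch: bool = True


def epoch_batches(n_samples: int, cfg: TrainConfig,
                  rng: random.Random) -> Iterator[List[int]]:
    """Yield index minibatches for one epoch, reshuffling first if configured."""
    order = list(range(n_samples))
    if cfg.shuffle_every_epoch:
        rng.shuffle(order)
    for start in range(0, n_samples, cfg.batch_size):
        # The last batch may be smaller; it is kept rather than dropped (assumption)
        yield order[start:start + cfg.batch_size]


def steps_per_epoch(n_samples: int, cfg: TrainConfig) -> int:
    """Optimizer steps per epoch when the partial last batch is kept."""
    return math.ceil(n_samples / cfg.batch_size)


cfg = TrainConfig()
rng = random.Random(0)
n_train = 1050  # hypothetical training-set size, for illustration only
batches = list(epoch_batches(n_train, cfg, rng))
print(len(batches), len(batches[-1]), cfg.epochs * steps_per_epoch(n_train, cfg))
# prints: 66 10 16500
```

Reshuffling every epoch keeps the model from seeing real and generated curves in a fixed order, which matters when a large share of the training set is synthetic.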

| Dataset Size | Recall (%) | Specificity (%) | F1-Score (%) | Precision (%) |
|---|---|---|---|---|
| 600 (original) | 92.4 | 85.9 | 85.5 | 79.5 |
| 900 | 93.6 | 94.3 | 92.1 | 90.7 |
| 1050 | 95.9 | 98.4 | 95.9 | 95.9 |
| 1200 | 95.9 | 99.8 | 97.9 | 99.9 |
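Specificity is a binary notion, so in a three-class setting these columns are most likely one-vs-rest metrics for a single curve class. Assuming that, the sketch below shows how recall, specificity, F1-score and precision follow from a confusion matrix. The class labels come from the make-up condition table, but the counts are invented for illustration and are not results from the paper; which class each metric table refers to is also not stated in this section.

```python
from typing import Dict, List

LABELS = ["AC", "ATI", "NDT"]  # curve classes from the make-up condition table


def _safe_div(num: float, den: float) -> float:
    return num / den if den else 0.0


def one_vs_rest_metrics(cm: List[List[int]], k: int) -> Dict[str, float]:
    """Recall, specificity, F1 and precision (in percent) for class k,
    treating class k as positive and all other classes as negative.
    cm[i][j] counts curves of true class i predicted as class j."""
    n = len(cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                        # class-k curves predicted as another class
    fp = sum(cm[i][k] for i in range(n)) - tp   # other classes predicted as class k
    tn = sum(sum(row) for row in cm) - tp - fn - fp
    recall = _safe_div(tp, tp + fn)
    specificity = _safe_div(tn, tn + fp)
    precision = _safe_div(tp, tp + fp)
    f1 = _safe_div(2 * precision * recall, precision + recall)
    return {"recall": 100 * recall, "specificity": 100 * specificity,
            "f1": 100 * f1, "precision": 100 * precision}


# Hypothetical counts for illustration only; not data from the paper
cm = [
    [45, 3, 2],   # true AC
    [4, 40, 6],   # true ATI
    [1, 5, 44],   # true NDT
]
for k, label in enumerate(LABELS):
    metrics = one_vs_rest_metrics(cm, k)
    print(label, {name: round(v, 1) for name, v in metrics.items()})
```

Recall and precision both depend on the positive class, while specificity also counts every correctly rejected curve of the other classes, which is why it can stay high even when recall is modest.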

| Dataset Size | Recall (%) | Specificity (%) | F1-Score (%) | Precision (%) |
|---|---|---|---|---|
| 600 (original) | 76.3 | 95.7 | 81.7 | 87.9 |
| 900 | 85.5 | 95.3 | 86.8 | 88.1 |
| 1050 | 92.5 | 93.8 | 91.1 | 89.8 |
| 1200 | 95.5 | 96.4 | 94.8 | 94.1 |

| Dataset Size | Recall (%) | Specificity (%) | F1-Score (%) | Precision (%) |
|---|---|---|---|---|
| 600 (original) | 73.0 | 89.4 | 75.5 | 78.1 |
| 900 | 84.1 | 92.3 | 84.5 | 85.0 |
| 1050 | 90.6 | 96.5 | 92.0 | 93.5 |
| 1200 | 96.8 | 97.4 | 96.1 | 95.4 |
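In each of the three metric tables, the F1-Score is the harmonic mean of the Precision and Recall in the same row, $F_1 = 2PR/(P+R)$. The sketch below recomputes it for every row as a consistency check; because precision, recall and F1 are each rounded to one decimal, it allows a tolerance of 0.1 percentage points (a choice made here, not taken from the paper).

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, in the same units as the inputs."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Rows copied from the three metric tables, in order of appearance:
# (dataset size, recall, reported F1, precision), all in percent
TABLES = [
    [(600, 92.4, 85.5, 79.5), (900, 93.6, 92.1, 90.7),
     (1050, 95.9, 95.9, 95.9), (1200, 95.9, 97.9, 99.9)],
    [(600, 76.3, 81.7, 87.9), (900, 85.5, 86.8, 88.1),
     (1050, 92.5, 91.1, 89.8), (1200, 95.5, 94.8, 94.1)],
    [(600, 73.0, 75.5, 78.1), (900, 84.1, 84.5, 85.0),
     (1050, 90.6, 92.0, 93.5), (1200, 96.8, 96.1, 95.4)],
]

# Inputs and reported F1 are all rounded to one decimal place
TOLERANCE = 0.1


def inconsistent_rows(tables=TABLES, tol=TOLERANCE):
    """Return (table index, size, computed F1, reported F1) for rows failing the check."""
    bad = []
    for t, rows in enumerate(tables):
        for size, recall, f1_reported, precision in rows:
            f1 = f1_score(precision, recall)
            if abs(f1 - f1_reported) > tol:
                bad.append((t, size, round(f1, 2), f1_reported))
    return bad


print(inconsistent_rows())
# prints: []
```

All twelve rows pass, so the F1 column agrees with the precision and recall columns as printed.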


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ma, Z.; Chen, Y.; Fan, Y.; He, X.; Luo, W.; Shu, J. An Improved AoT-DCGAN and T-CNN Hybrid Deep Learning Model for Intelligent Diagnosis of PTCs Quality under Small Sample Space. Appl. Sci. 2023, 13, 8699. https://doi.org/10.3390/app13158699