An Investigation of Transfer Learning Approaches to Overcome Limited Labeled Data in Medical Image Analysis

Abstract

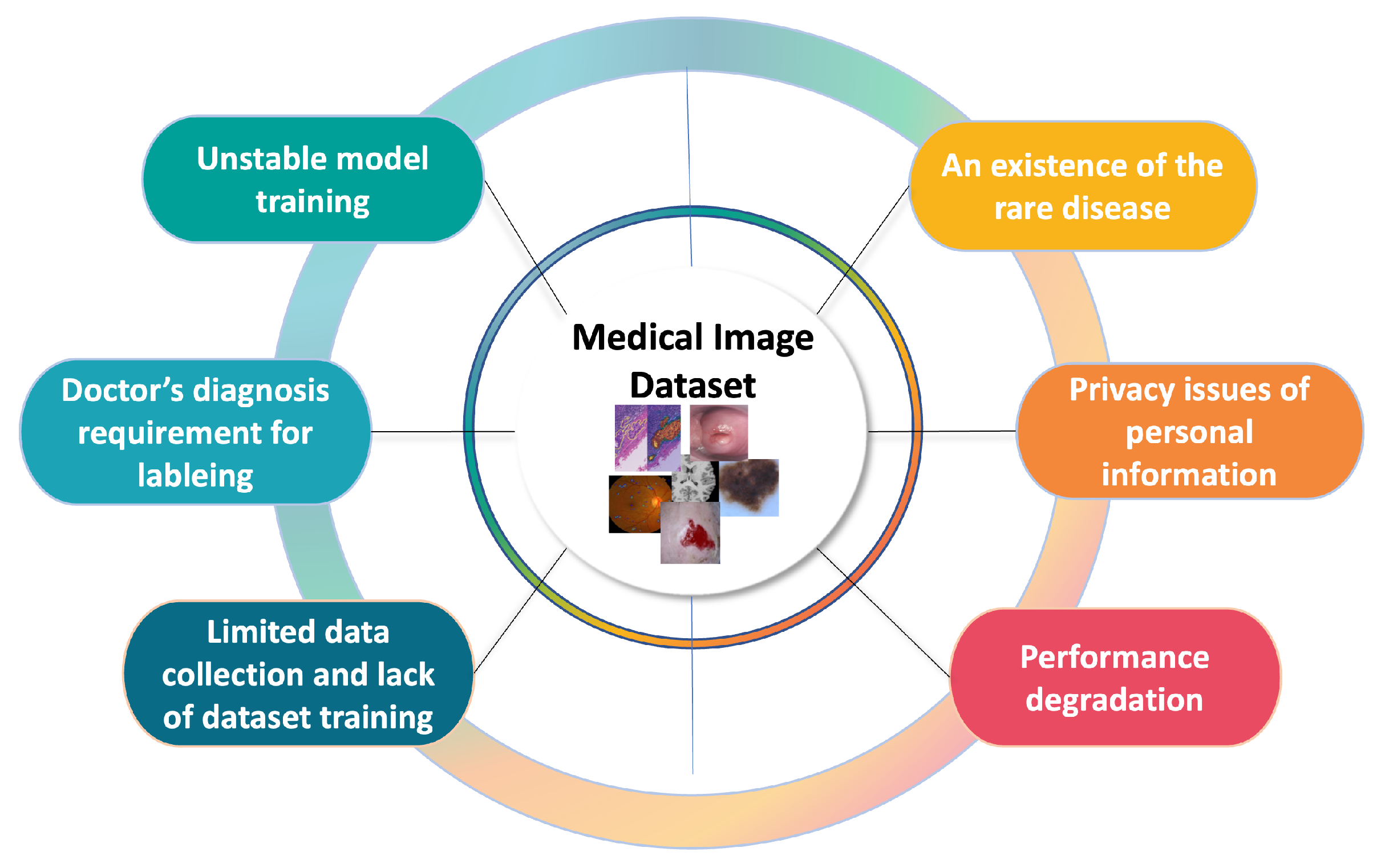

1. Introduction

- To address the scarcity of labeled medical image data, we propose three transfer learning approaches: (1) transfer learning with similar images, (2) a new approach that combines transfer learning with self-supervised learning, and (3) transfer learning with regions of interest (RoIs). We apply these approaches to image classification, object detection, and segmentation tasks, respectively, on representative medical images and demonstrate their performance.

- In addition, we propose a multi-task learning and ensemble approach built on the transfer learning method best suited to the given medical images.

- We applied these approaches to cervical cancer classification, skin lesion classification, and pressure ulcer segmentation and compared the results with those of state-of-the-art approaches. For cervical cancer classification, sensitivity improved by 5.4%. For skin lesion classification, accuracy improved by 8.7%, precision by 28.3%, and sensitivity by 39.7%. For pressure ulcer segmentation, accuracy improved by 1.2%, intersection over union (IoU) by 16.9%, and the Dice similarity coefficient (DSC) by 3.5%.
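The ensemble mentioned above is not specified further here. As a minimal sketch, assuming soft voting (averaging each model's class probabilities and then taking the most probable class), a common combination rule that is not necessarily the one the authors used, the idea looks like this; `soft_vote` is a hypothetical helper name:

```python
from typing import List, Sequence


def soft_vote(model_probs: Sequence[Sequence[Sequence[float]]]) -> List[int]:
    """Soft-voting ensemble (an assumed combination rule, not taken from the paper).

    model_probs[m][i][c] is model m's predicted probability that sample i
    belongs to class c. Returns, for each sample, the class with the highest
    probability averaged over all models.
    """
    n_models = len(model_probs)
    predictions = []
    for i in range(len(model_probs[0])):
        n_classes = len(model_probs[0][i])
        # Mean probability of each class across the ensemble members.
        mean_probs = [
            sum(model_probs[m][i][c] for m in range(n_models)) / n_models
            for c in range(n_classes)
        ]
        predictions.append(max(range(n_classes), key=lambda c: mean_probs[c]))
    return predictions


# Three hypothetical models, two samples, two classes.
probs = [
    [[0.9, 0.1], [0.4, 0.6]],
    [[0.6, 0.4], [0.3, 0.7]],
    [[0.2, 0.8], [0.55, 0.45]],
]
print(soft_vote(probs))  # [0, 1]
```

Hard majority voting over each model's argmax is the usual alternative; averaging probabilities keeps information about how confident each member model is.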

2. Related Work

2.1. Classification

2.2. Object Detection

2.3. Segmentation

| Reference | Application/Task | Approach | Data | Metric | TL* |
|---|---|---|---|---|---|
| Xu et al., 2017 [12] | Cervical cancer classification | CaffeNet pre-trained with ImageNet, machine learning classifier, three pyramid features: PLAB, PHOG, PLBP | 345 positive/345 negative images by NCI | Accuracy (Acc) | √ |
| Vasudha et al., 2018 [13] | Cervical cancer classification | CNN features, LeNet model | 345 positive/345 negative images randomly selected by NCI | Acc | ✗ |
| Hu et al., 2019 [14] | Cervical cancer classification | Faster R-CNN, CNN pre-trained with the ImageNet dataset and data augmentation | Cervical images by NCI | AUC, sensitivity, specificity | √ |
| Alyafeai et al., 2020 [16] | Cervical cancer object detection and classification | YOLO algorithm, GoogLeNet model, data augmentation | Intel & MobileODT dataset, 174 positive/174 negative images by NCI | Acc, specificity, sensitivity | ✗ |
| Xue et al., 2020 [18] | Cervical histopathology classification | Inception-V3, Xception, VGG-16, ResNet-50, and ensemble transfer learning | Histopathology images from China Medical University | Acc, precision, recall, F1-score | √ |
| Zhang et al., 2021 [17] | Cervical cancer classification | CNN with patch weights learning and spatial regulator | 978 records by NCI | Acc, AUC, equal error rate, F1-score, precision, recall | ✗ |
| Dhawan et al., 2021 [19] | Cervical cancer classification | Inception V3, ResNet50, VGG19 | Intel & MobileODT dataset | Precision, recall, F1-score, support, confusion matrix | √ |
| Zhao et al., 2022 [20] | Cervical cancer classification | Taming transformer with pre-trained T2T-ViT | Pap smear dataset of 963 LBC images in four classes; SIPaKMeD, 4049 images of isolated cells in five classes; Herlev, 917 isolated single-cell images in seven classes | Acc, F1-score, H-mean, sensitivity, specificity | √ |
| Ghantasala et al., 2023 [23] | Cervical cancer classification | CNN, VGG16 | Melanoma histopathology images | Acc | √ |
| Deo et al., 2023 [25] | Cervical cancer classification | CerviFormer, cross-attention | Pap smear datasets: SIPaKMeD and Herlev | Acc, precision, recall, F1-score | ✗ |
| Kalbhor and Shinde 2023 [24] | Cervical cancer classification | Pre-trained feature extractor, ResNet50, GoogLeNet | Herlev dataset with 917 images | Acc | √ |
| Zhou et al., 2017 [26] | Colonoscopy frame classification, polyp detection, pulmonary embolism detection | Pre-trained AlexNet model with active incremental fine-tuning method | 4000 colonoscopy frames selected from 6 complete colonoscopy videos, 38 short colonoscopy videos from 38 different patients, 121 CT pulmonary angiography datasets with a total of 326 pulmonary embolisms | AUC, diversity, entropy | √ |
| Tajbakhsh et al., 2016 [27] | Colonoscopy frame classification, polyp detection, pulmonary embolism detection, intima-media boundary segmentation | Pre-trained AlexNet model with layer-wise fine-tuning method | 4000 colonoscopy frames selected from 6 complete colonoscopy videos, 40 short colonoscopy videos from 38 different patients, 121 CT pulmonary angiography datasets with a total of 326 pulmonary embolisms, 92 carotid intima-media thickness videos | AUC, free-response operating characteristic, ROC curve, segmentation error | √ |
| Kermany et al., 2018 [28] | Age-related macular degeneration and diabetic macular edema classification, pneumonia detection | Inception V3 model pre-trained on the ImageNet dataset | 207,130 OCT images; 5232 chest X-ray images from children, including 3883 characterized as depicting pneumonia (2538 bacterial and 1345 viral) and 1349 normal, from a total of 5856 patients | Acc, cross-entropy loss, sensitivity, specificity, weighted error, ROC curve | √ |
| Xia et al., 2021 [31] | Pancreatic cancer classification | Anatomy-aware transformer with localization U-Net | Pancreatic ductal adenocarcinoma CT scan dataset of 1627 patients | AUC, sensitivity, specificity | ✗ |
| Gheflati and Rivaz 2021 [32] | Breast cancer classification | ViT and pre-trained VGG16, ResNet50, InceptionV3, and NASNetLarge models applied to breast US datasets | BUSI dataset with 780 breast US images (133 normal images, 437 benign tumors, and 210 malignant masses); Dataset B with 163 images (110 benign masses and 53 cancerous masses) | Acc, AUC | √ |
| Rezaeijo et al., 2021 [30] | Breast cancer classification | DenseNet201 and ResNet152V2 | QIN-Breast dataset from TCIA | Acc, precision, recall, F1-score | √ |
| Yang et al., 2021 [33] | Fundus disease classification | Transformer, CNN | OIA dataset | Acc | ✗ |
| Ikromjanov et al., 2022 [34] | Prostate cancer classification | ViT | Kaggle PANDA challenge dataset with 10,616 images | F1-score, precision, recall | √ |
| Samala et al., 2017 [29] | Classification of malignant and benign breast masses | Deep CNNs with stochastic gradient descent | 1655 digitized screen-film mammography views and 310 digital mammography views with 2454 masses (1057 malignant, 1397 benign) | AUC, ROC curves | √ |
| Masood et al., 2015 [47] | Skin lesion classification | Ensemble, deep belief network, self-advising approach for SVM (RBF kernel, polynomial kernel) | 290 images (170 benign, 120 melanoma) from the Sydney Melanoma Diagnostic Centre, Royal Prince Alfred Hospital | Acc | ✗ |
| Pal et al., 2018 [48] | Skin lesion classification | Various pre-trained CNN models (ResNet50, DenseNet121, MobileNet) | 10,015 images from the HAM10000 dataset | Acc | √ |
| Liao and Luo 2017 [50] | Skin lesion classification | ResNet-50 with multi-task learning | DermQuest images with 25 lesion types; 21,657 images with both skin lesion and body location labels | Acc, average precision | √ |
| Carcagnì et al., 2019 [49] | Skin lesion classification | Pre-trained DenseNet121, SVM | 10,015 images from the HAM10000 dataset | Precision, recall | √ |
| Mahbod et al., 2019 [46] | Skin lesion classification | Features from pre-trained models (AlexNet, VGG16, ResNet18), SVM | 2037 images from the ISIC 2016 and 2017 datasets with 411 malignant melanomas, 254 seborrheic keratoses, 1372 benign nevi | AUC | √ |
| Albahar 2019 [43] | Skin lesion classification | CNN model with an embedded regularizer | 23,906 ISIC 2018 images of benign and malignant skin lesions | Acc, AUC, sensitivity, specificity | ✗ |
| Nahata and Singh 2020 [44] | Skin cancer classification | Pre-trained CNN and data augmentation | ISIC 2018 and 2019 datasets | Acc, F1-score, precision, recall | √ |
| Sirotkin et al., 2021 [45] | Skin lesion classification | ResNet50 model with curriculum orderings and sequential self-supervised pre-training | ISIC 2019 dataset | AUC, balanced Acc | √ |
| Ahmad et al., 2023 [51] | Skin lesion classification | Xception, ShuffleNet, data augmentation, butterfly optimization algorithm | ISIC 2018 dataset, HAM10000 dataset | Acc, precision, recall, F1-score, FNR | √ |
| Alsahafi et al., 2023 [52] | Skin lesion classification | Skin-Net, cross-channel correlation | ISIC 2019 dataset, ISIC 2020 dataset | Acc, precision, sensitivity, specificity, F1-score | ✗ |
| Yu et al., 2021 [35] | Melanoma (skin) classification | Pre-trained MobileNetV2 to extract patch-wise embeddings and scale-aware transformer with soft labeling | ISIC 2020 dataset | Acc, AUC, F1-score, sensitivity, specificity | √ |
| Wu et al., 2021 [36] | Melanocytic lesion (skin) classification | Encoded multi-scale features with transformer | MPATH-Dx dataset | Acc, AUC, F1-score, sensitivity, specificity | ✗ |
| Wang et al., 2015 [62] | Wound segmentation, infection detection, healing prediction | ConvNet encoder-decoder, SVM, Gaussian process | NYU database | Pixel Acc, mean IoU | ✗ |
| Goyal et al., 2017 [64] | Wound segmentation | FCN for diabetic foot ulcer segmentation, two-tier transfer learning with the ImageNet and Pascal VOC datasets | Medetec medical image database, a few hundred wound images | IoU, precision, recall | √ |
| Pholberdee et al., 2018 [63] | Foot ulcer segmentation | CNN, color data augmentation | Lancashire Teaching Hospitals | DSC, Matthews correlation coefficient, specificity, sensitivity | ✗ |
| Garcia-Zapirain et al., 2018 [65] | Pressure ulcer segmentation, classification | 3D CNN, RoI, preselected Gaussian kernel, 3D hue-saturation-intensity color space images | 193 test color pressure ulcer images; granulation, necrotic eschar, and slough tissue images | AUC, DSC, percentage area distance | ✗ |
| Khalil et al., 2019 [66] | Wound segmentation, classification, healing assessment | Various color and texture feature spaces, scale-invariant feature transform features, non-negative matrix factorization-based feature reduction, various classifiers (naïve Bayes, generalized linear model, random forest) | Medetec wound database with 341 RGB pressure ulcer images; 36 pressure ulcer images from the National Pressure Ulcer Advisory Panel website | Acc, precision, sensitivity | ✗ |
| Ohura et al., 2019 [67] | Wound segmentation | SegNet, LinkNet, U-Net, and U-Net with a VGG16 encoder pre-trained on ImageNet | Dataset of pressure ulcer, diabetic foot ulcer, and venous leg ulcer images | Acc, AUC, sensitivity, specificity | √ |
| Oota et al., 2023 [68] | Wound segmentation | WSNet with global-local architecture | WoundSeg dataset with 8 wound types (diabetic, pressure, trauma, venous, surgical, arterial, cellulitis, and others) | IoU, DSC | √ |
| Zhang et al., 2023 [69] | Wound segmentation | FANet with an edge feature augment module and a spatial relationship feature augment module, IFANet | MISC dataset with 5523 skin wound images and FUSeg dataset with 1010 images | IoU, sensitivity, specificity | ✗ |
| Zahia et al., 2020 [57] | Pressure ulcer segmentation | Mask R-CNN with mesh rasterization, matching block, and measurement block | 210 photographs of pressure injuries, including 100 images from Medetec Medical Images | DSC, RMSE, mean absolute error | √ |
| Swerdlow et al., 2023 [70] | Pressure ulcer segmentation | Mask R-CNN | 969 pressure injury images from eKare Inc. | Acc, precision, recall, F1-score, DSC | √ |
| Aldughayfiq et al., 2023 [71] | Pressure ulcer detection and classification | YOLOv5 | Medetec image database and online source images | Mean average precision, recall, F1-score | ✗ |
| Moeskops et al., 2016 [72] | Anatomical structure segmentation | CNN with exponential linear units, batch normalization, and dropout | Brain MRI, breast MRI, cardiac CTA | DSC | √ |
| Dong et al., 2018 [73] | Chest organ segmentation | ResNet18 as discriminator and FCN as segmentor, with unsupervised and semi-supervised learning | JSRT dataset for lung and heart segmentation, with 257 grayscale chest X-rays; Wingspan dataset with 221 grayscale chest X-rays of adult patients, annotated with key points for calculating the cardiothoracic ratio | Average percentage error, IoU, mean absolute error, root mean squared error (RMSE) | √ |
| Javanmardi et al., 2018 [74] | Eye vasculature segmentation, neuron membrane detection | FCN for segmentation and CNN for classification of the segmentor's outputs, connected through a gradient reversal layer | DRIVE dataset of 40 eye fundus images with pixel-labeled ground truth images; STARE dataset with 20 annotated eye fundus images; 100 electron microscopy images of size 1024 × 1024 and 125 electron microscopy images of size 1250 × 1250 | F1-score | √ |
| Li et al., 2018 [77] | Liver tumor segmentation | H-DenseUNet, consisting of a 2D U-Net and its 3D counterpart, with the hybrid features jointly optimized in a hybrid feature fusion layer | LiTS dataset with 131 and 70 contrast-enhanced 3D abdominal CT scans; 3DIRCADb dataset with 20 venous phase enhanced CT scans | Average symmetric surface distance, Dice, relative volume difference, RMSE, root mean symmetric surface distance, volumetric overlap error | ✗ |
| You et al., 2022 [76] | Liver tumor segmentation | Class-aware adversarial transformer | Synapse1, LiTS, and MP-MRI | Average symmetric surface distance, DSC, Jaccard index, Hausdorff distance | √ |
| Özcan et al., 2023 [82] | Liver tumor segmentation | AIM-UNet | HAOS, LiTS, and 3DIRCADb datasets | Acc, DSC, IoU | ✗ |
| Rongrong et al., 2023 [83] | Liver tumor segmentation | CCLnet with cascaded context module and Ladder Atrous Spatial Pyramid Pooling, ResNet-34 | LiTS 2017 dataset, 3DIRCADb dataset | Asymmetric metric, DSC, Euclidean distance, volumetric overlap | ✗ |
| Huang et al., 2020 [78] | Liver and spleen segmentation | UNet3+ with full-scale skip connections and full-scale deep supervision | ISBI LiTS 2017 dataset with 131 contrast-enhanced 3D abdominal CT scans; hospital spleen dataset with 40 and 9 CT volumes | Dice | ✗ |
| Arias-Londoño et al., 2020 [59] | Lung segmentation, classification | Deep CNN based on COVID-Net, U-Net, Grad-CAM | 49,983 control X-ray images, 24,114 pneumonia X-ray images, 8573 COVID-19 X-ray images | Acc, F1-score, geometric mean recall, positive predictive value, recall | ✗ |
| Gao et al., 2022 [75] | Cardiac segmentation | U-shaped hybrid Transformer network (a hybrid hierarchical architecture with bidirectional attention) | Large Cardiac MRI dataset, ACDC, M&Ms, M&Ms-2, and UK Biobank | DSC, Hausdorff distance | ✗ |
| Hatamizadeh et al., 2022 [80] | Brain tumor segmentation | Swin U-Net transformer | BraTS 2021 dataset | DSC, Hausdorff distance | ✗ |
| Aggarwal et al., 2023 [84] | Brain tumor detection and segmentation | ResNet | BraTS 2020 dataset with 369 MR images | DSC, IoU, MSE, peak signal-to-noise ratio, sensitivity, specificity | ✗ |
| Montaha et al., 2023 [85] | Brain tumor segmentation | U-Net | BraTS 2020 dataset | DSC | ✗ |
| Fatan et al., 2022 [81] | Head and neck tumor segmentation | 3D-UNet and SegResNet, combined with advanced fusion techniques | PET and CT images from the HECKTOR Challenge | Dice score | ✗ |
| Isensee et al., 2021 [79] | Various segmentation tasks | nnU-Net with image normalization and voxel spacing preprocessing | Automatic Cardiac Segmentation Challenge, PROMISE12 dataset, LiTS, Multi-Atlas Labeling Beyond the Cranial Vault challenge | Average foreground Dice score | ✗ |
| Saiz et al., 2020 [58] | Lung detection | Single Shot MultiBox Detector, VGG16 pre-trained with ImageNet, Fast R-CNN, contrast-limited adaptive histogram equalization | 887 normal X-ray images and 100 COVID-19 X-ray images | Acc, sensitivity, specificity | √ |
| Brunese et al., 2020 [60] | COVID-19 classification | VGG16 variant pre-trained with ImageNet, Grad-CAM | 250 COVID-19 X-ray images, 2753 X-ray images of pulmonary disease, and 3520 healthy X-ray images | Acc, F-measure, sensitivity, specificity | √ |
| Rezaeijo et al., 2021 [37] | COVID-19 classification | DenseNet201, ResNet50, Xception, VGG16, random forest, SVM, decision tree, logistic regression | 5480 CT images of confirmed COVID-19 and suspected cases | Acc, precision, recall, F1-score | √ |
| Costa et al., 2021 [38] | COVID-19 classification | ViT with Performer | COVIDx dataset | Acc, F1-score, precision, recall | ✗ |
| Liang 2021 [39] | COVID-19 classification | Feature aggregation by transformer, CNN features, data resampling | COV19-CT-DB | Macro F1-score | ✗ |
| Sufian et al., 2023 [41] | COVID-19 classification | Histogram equalization and contrast-limited adaptive histogram equalization, InceptionV3, MobileNet, ResNet50, VGG16, ViT-B16, ViT-B32 | 2481 CT scans from the SARS-CoV-2 CT-Scan dataset | Acc, precision, recall, F1-score | √ |
| Constantinou et al., 2023 [42] | COVID-19 classification | ResNet50, ResNet101, DenseNet121, DenseNet169, InceptionV3 | COVID-QU dataset with 33,920 chest X-ray images | Acc, precision, recall, F1-score | √ |

*TL: transfer learning (√ = used, ✗ = not used).

3. Method

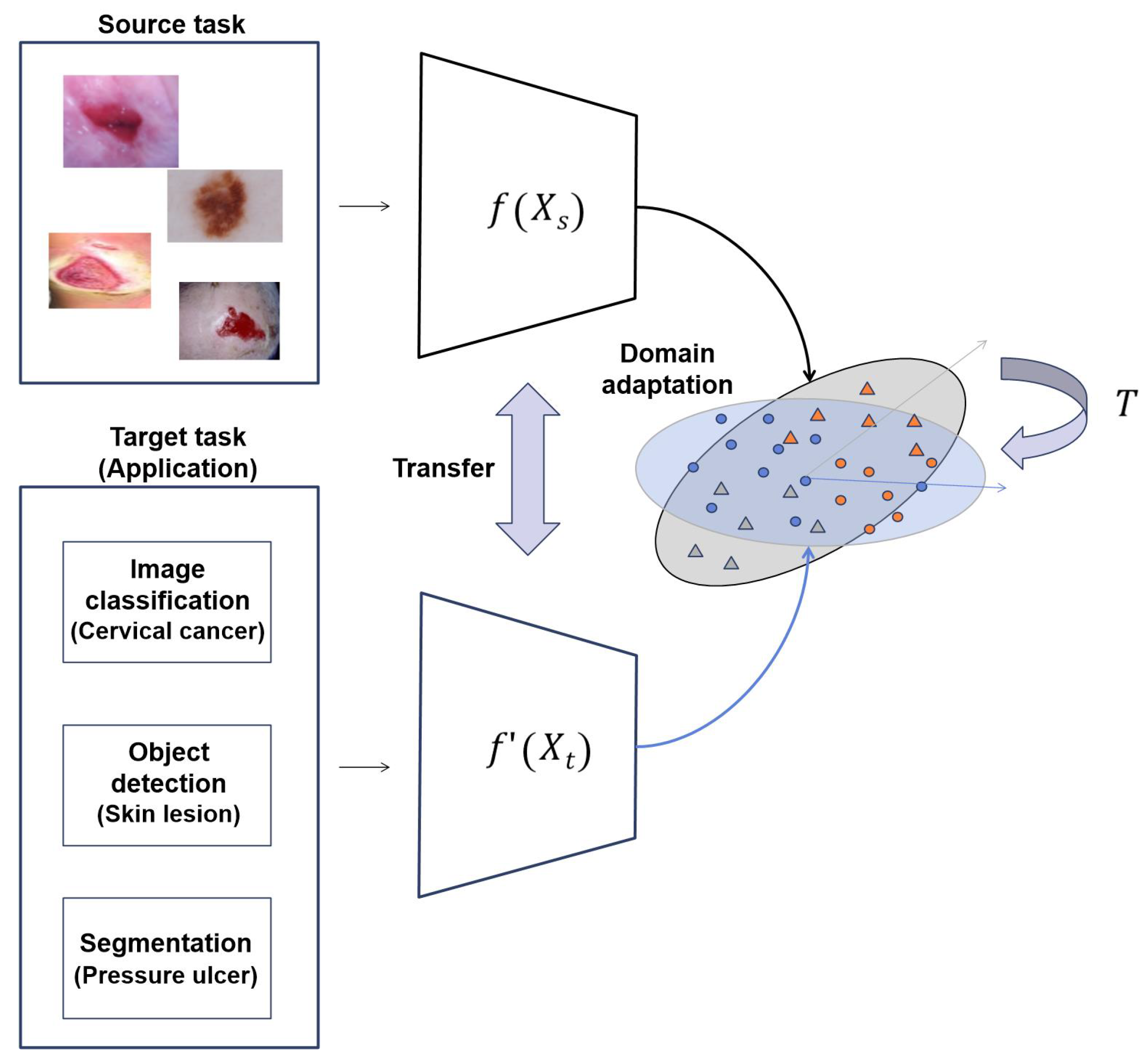

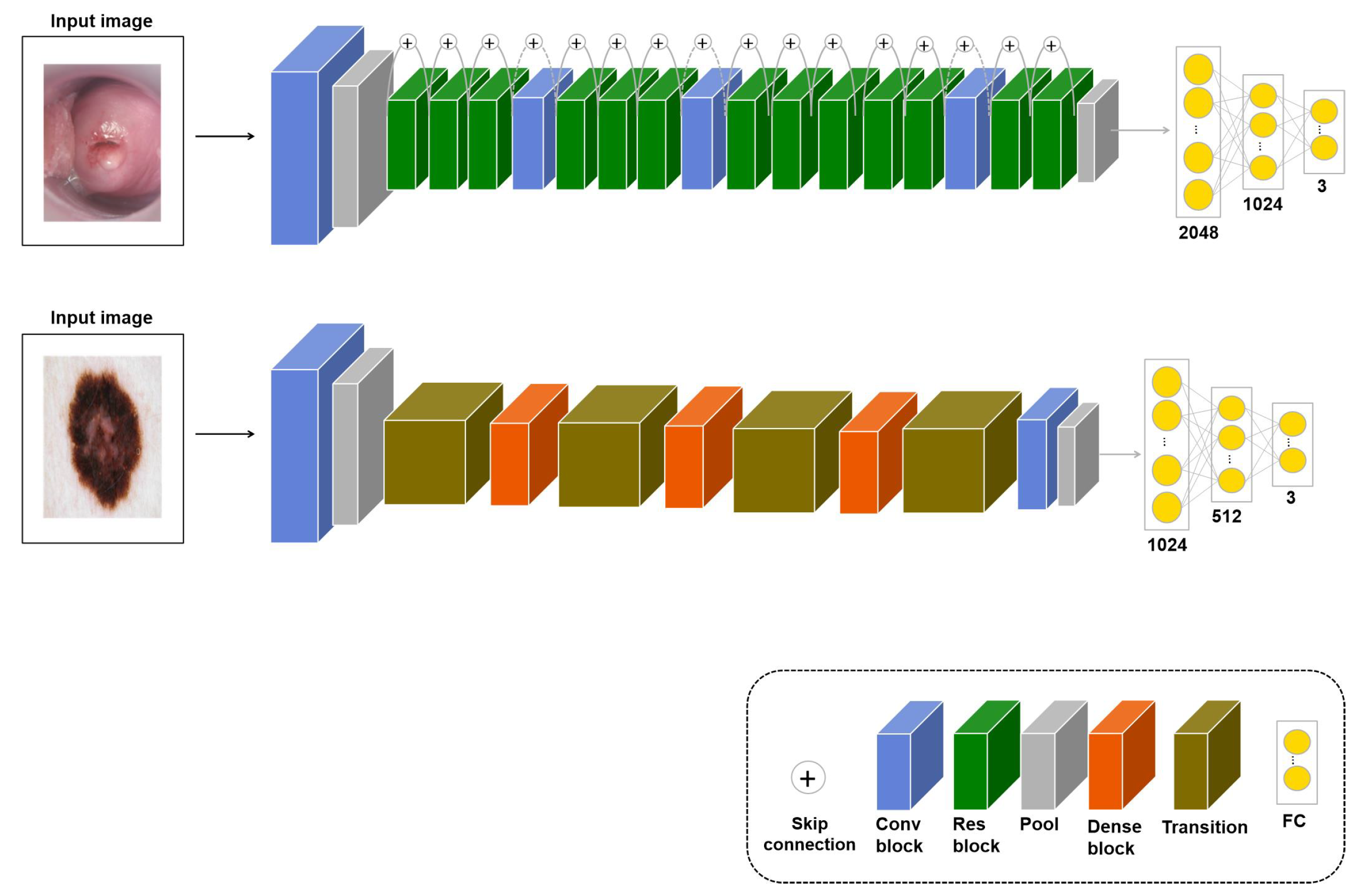

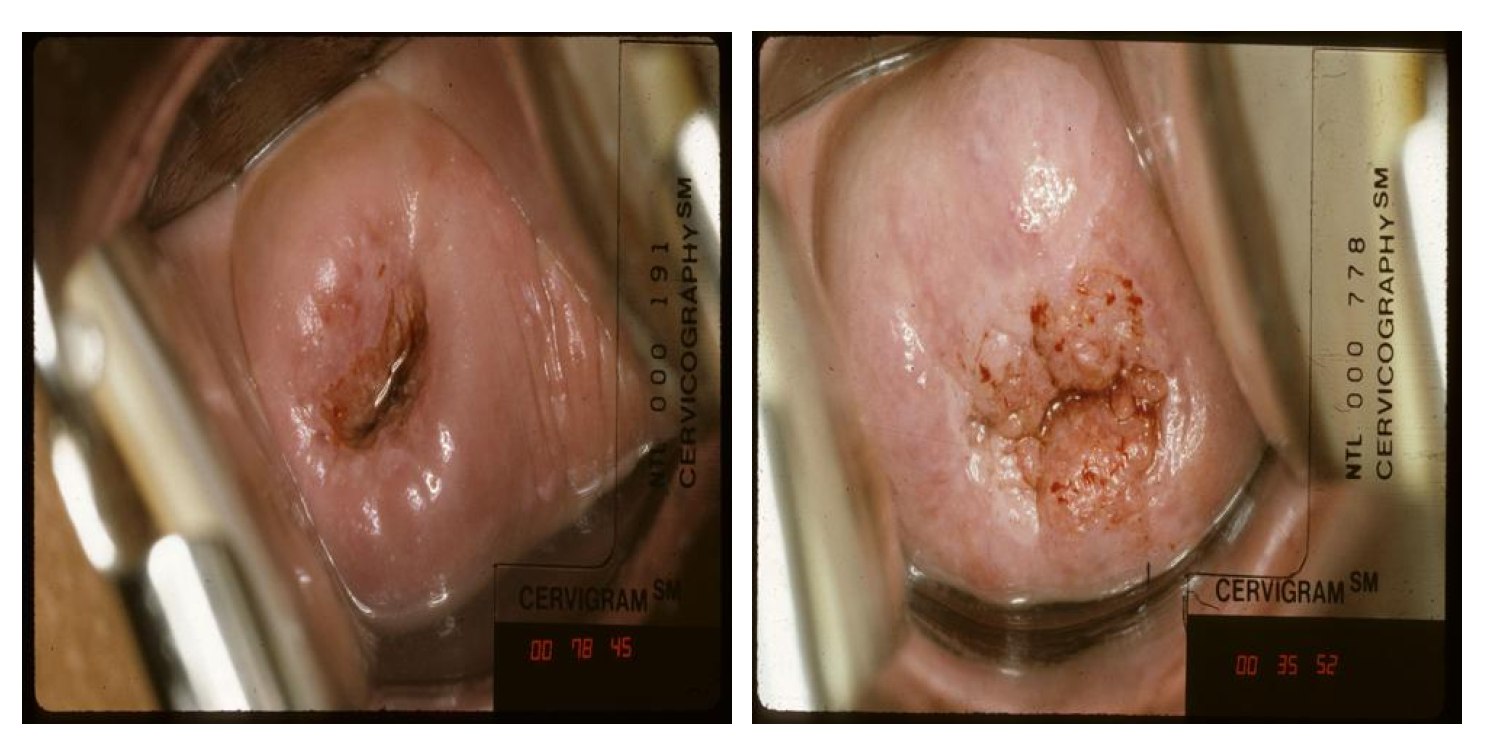

3.1. Transfer Learning with Similar Images

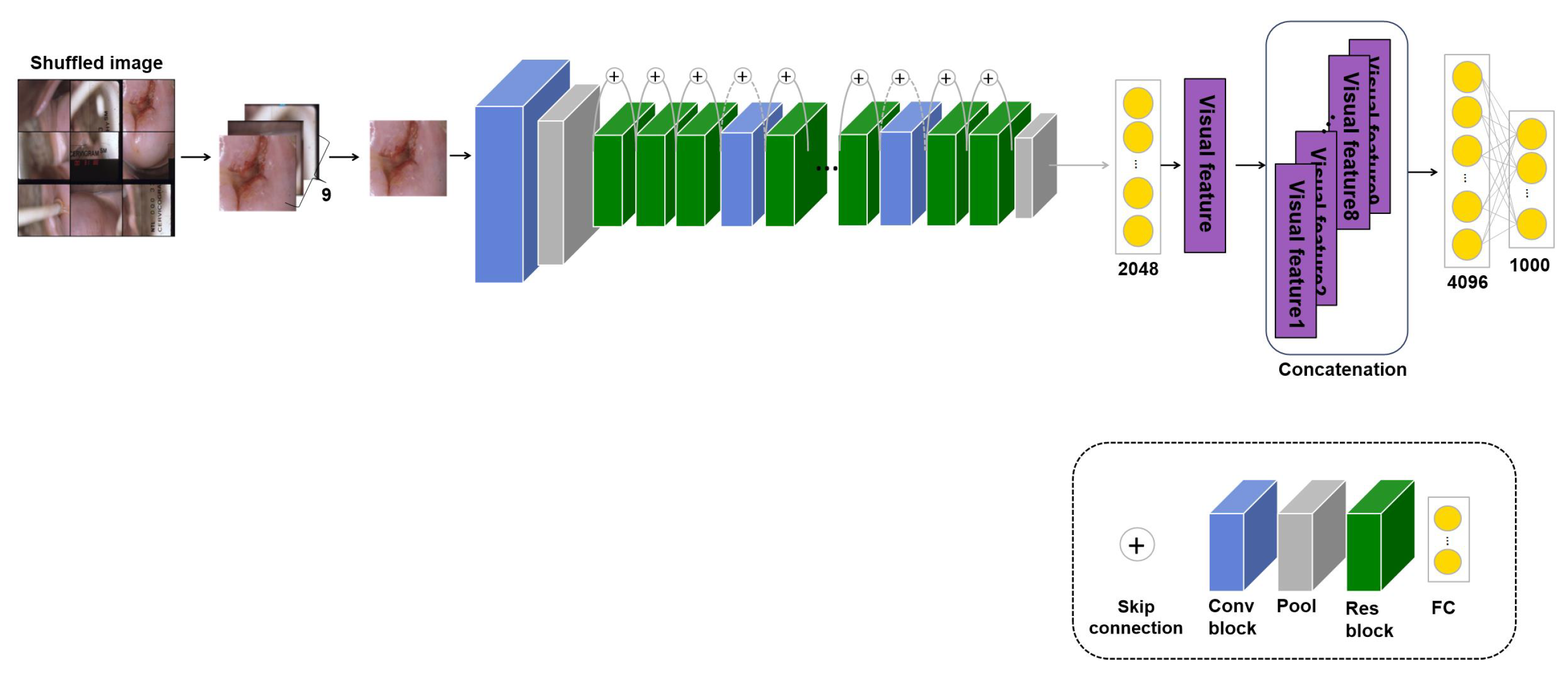
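The idea named in this heading, pre-training on a larger labeled set of images that resemble the target domain and then fine-tuning on the scarce target data, can be illustrated with a toy two-stage sketch. It rests on loudly stated assumptions: a logistic regression on synthetic feature vectors stands in for a CNN, the "similar" source domain and the target domain are simulated by two related linear decision rules, and `train_logreg` and `accuracy` are hypothetical helpers rather than the authors' code.

```python
import numpy as np


# Hypothetical helpers: logistic regression stands in for a CNN in this sketch.
def train_logreg(X, y, w=None, lr=0.1, epochs=200):
    """Binary logistic regression trained by full-batch gradient descent.

    If `w` is given, training starts from those weights (fine-tuning);
    otherwise it starts from zeros (training from scratch).
    """
    Xb = np.hstack([X, np.ones((len(X), 1))])  # append a bias column
    w = np.zeros(Xb.shape[1]) if w is None else w.copy()
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(Xb @ w)))     # predicted probabilities
        w -= lr * Xb.T @ (p - y) / len(y)       # gradient step on mean log-loss
    return w


def accuracy(X, y, w):
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return float(np.mean((Xb @ w > 0) == (y == 1)))


rng = np.random.default_rng(0)
w_src_true = np.array([1.5, -2.0, 1.0, 0.5, 0.0])
w_tgt_true = w_src_true + np.array([0.3, 0.0, -0.2, 0.0, 0.4])  # related, shifted rule

# Synthetic "similar image" source domain with plenty of labels.
X_src = rng.normal(size=(1000, 5))
y_src = (X_src @ w_src_true > 0).astype(float)
# Synthetic target domain: only 20 labeled samples, plus a held-out test set.
X_tgt = rng.normal(size=(20, 5))
y_tgt = (X_tgt @ w_tgt_true > 0).astype(float)
X_test = rng.normal(size=(2000, 5))
y_test = (X_test @ w_tgt_true > 0).astype(float)

w_pre = train_logreg(X_src, y_src)                     # stage 1: pre-train on similar data
w_ft = train_logreg(X_tgt, y_tgt, w=w_pre, epochs=50)  # stage 2: fine-tune on target data
print(f"target test accuracy after fine-tuning: {accuracy(X_test, y_test, w_ft):.3f}")
```

The design point is only the initialization: stage 2 starts from the weights learned on the similar domain instead of from zeros, which is what lets 20 target labels suffice in this toy setting.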

3.2. Transfer Learning with Self-Supervised Learning

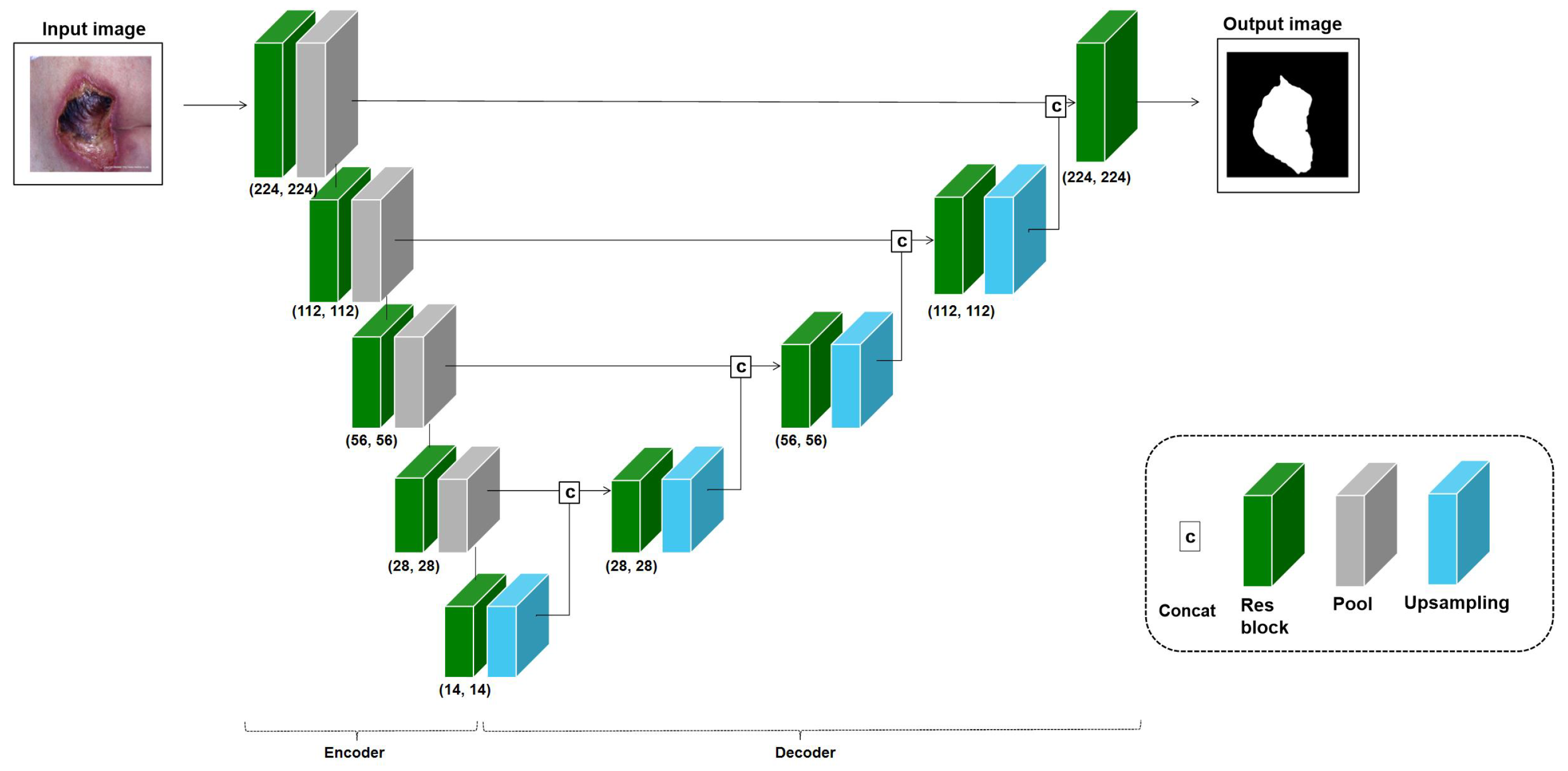
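Self-supervised learning derives training labels from the unlabeled images themselves, so a network can be pre-trained before the small labeled set is used for fine-tuning. The pretext task used by the authors is not specified in this section; the sketch below swaps in rotation prediction, a widely used pretext task, purely as an illustration. It assumes square images stored as NumPy arrays of shape (H, H, C), and `make_rotation_pretext` is a hypothetical helper.

```python
import numpy as np


def make_rotation_pretext(images):
    """Turn unlabeled square images into a 4-way rotation-prediction dataset.

    Rotation prediction is an illustrative pretext task assumed here, not
    necessarily the one used in the paper. Each image of shape (H, H, C)
    yields four samples rotated counter-clockwise by k * 90 degrees, and the
    pretext label is k in {0, 1, 2, 3}.
    """
    xs, ys = [], []
    for img in images:
        for k in range(4):
            xs.append(np.rot90(img, k=k, axes=(0, 1)))
            ys.append(k)
    return np.stack(xs), np.array(ys)


# Two hypothetical 4x4 RGB arrays standing in for unlabeled medical images.
unlabeled = np.arange(2 * 4 * 4 * 3).reshape(2, 4, 4, 3)
X_pretext, y_pretext = make_rotation_pretext(unlabeled)
print(X_pretext.shape, y_pretext.tolist())
```

A network trained to predict `y_pretext` from `X_pretext` learns image features without any manual annotation; its weights would then initialize the downstream model that is fine-tuned on the labeled medical images.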

3.3. Transfer Learning with RoI
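A common motivation for RoI-based transfer learning is to restrict the network's input to the region containing the lesion, so that less irrelevant background reaches the pre-trained model. How the RoIs are obtained is not stated here; the sketch below assumes an axis-aligned box (from annotation or a detector) in pixel coordinates and shows only the cropping step. `crop_roi` and its `margin` parameter, which keeps some surrounding context, are hypothetical.

```python
import numpy as np


def crop_roi(image, box, margin=0.1):
    """Crop a region of interest from an (H, W, ...) image array.

    `box` is (x0, y0, x1, y1) in pixel coordinates with x1 and y1 exclusive
    (an assumed convention). The box is enlarged by `margin` times its width
    and height on each side, then clamped to the image bounds.
    """
    h, w = image.shape[:2]
    x0, y0, x1, y1 = box
    dx = int(round((x1 - x0) * margin))
    dy = int(round((y1 - y0) * margin))
    x0, y0 = max(0, x0 - dx), max(0, y0 - dy)
    x1, y1 = min(w, x1 + dx), min(h, y1 + dy)
    return image[y0:y1, x0:x1]


image = np.zeros((100, 200, 3))           # placeholder H x W x C image
roi = crop_roi(image, (50, 20, 150, 60))  # 100 x 40 box plus a 10% margin
print(roi.shape)
```

The cropped patch would then be resized to the input size of the pre-trained network before fine-tuning.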

3.4. Transfer Learning with Multi-Task Learning
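Multi-task learning trains one shared network on several related objectives at once, for example segmentation and classification as in Zhou et al. [11]. The exact losses and task weights used in this paper are not given here; the sketch assumes a weighted sum of a classification cross-entropy and a soft Dice segmentation loss, computed with NumPy for illustration rather than inside a training framework. `multitask_loss`, `w_cls`, and `w_seg` are hypothetical names.

```python
import numpy as np


def cross_entropy(class_probs, label, eps=1e-12):
    """Negative log-probability assigned to the true class."""
    return float(-np.log(np.asarray(class_probs)[label] + eps))


def soft_dice_loss(seg_probs, seg_target, eps=1e-6):
    """1 minus the soft Dice overlap between foreground probabilities and a binary mask."""
    seg_probs = np.asarray(seg_probs, dtype=float)
    seg_target = np.asarray(seg_target, dtype=float)
    intersection = np.sum(seg_probs * seg_target)
    denom = np.sum(seg_probs) + np.sum(seg_target)
    return float(1.0 - (2.0 * intersection + eps) / (denom + eps))


def multitask_loss(class_probs, label, seg_probs, seg_target, w_cls=1.0, w_seg=1.0):
    """Weighted sum of the two task losses (assumed form; weights are hypothetical)."""
    return w_cls * cross_entropy(class_probs, label) + w_seg * soft_dice_loss(seg_probs, seg_target)


mask = np.array([[0, 1], [1, 1]])
perfect = multitask_loss([0.0, 1.0], 1, mask, mask)               # both tasks correct
uncertain = multitask_loss([0.5, 0.5], 0, np.zeros((2, 2)), mask)  # 50/50 class, empty mask
print(round(perfect, 6), round(uncertain, 4))
```

Because both terms are minimized through the same shared encoder, the segmentation signal can regularize the classifier when labeled data are scarce; the task weights are typically tuned on validation data.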

4. Experiments

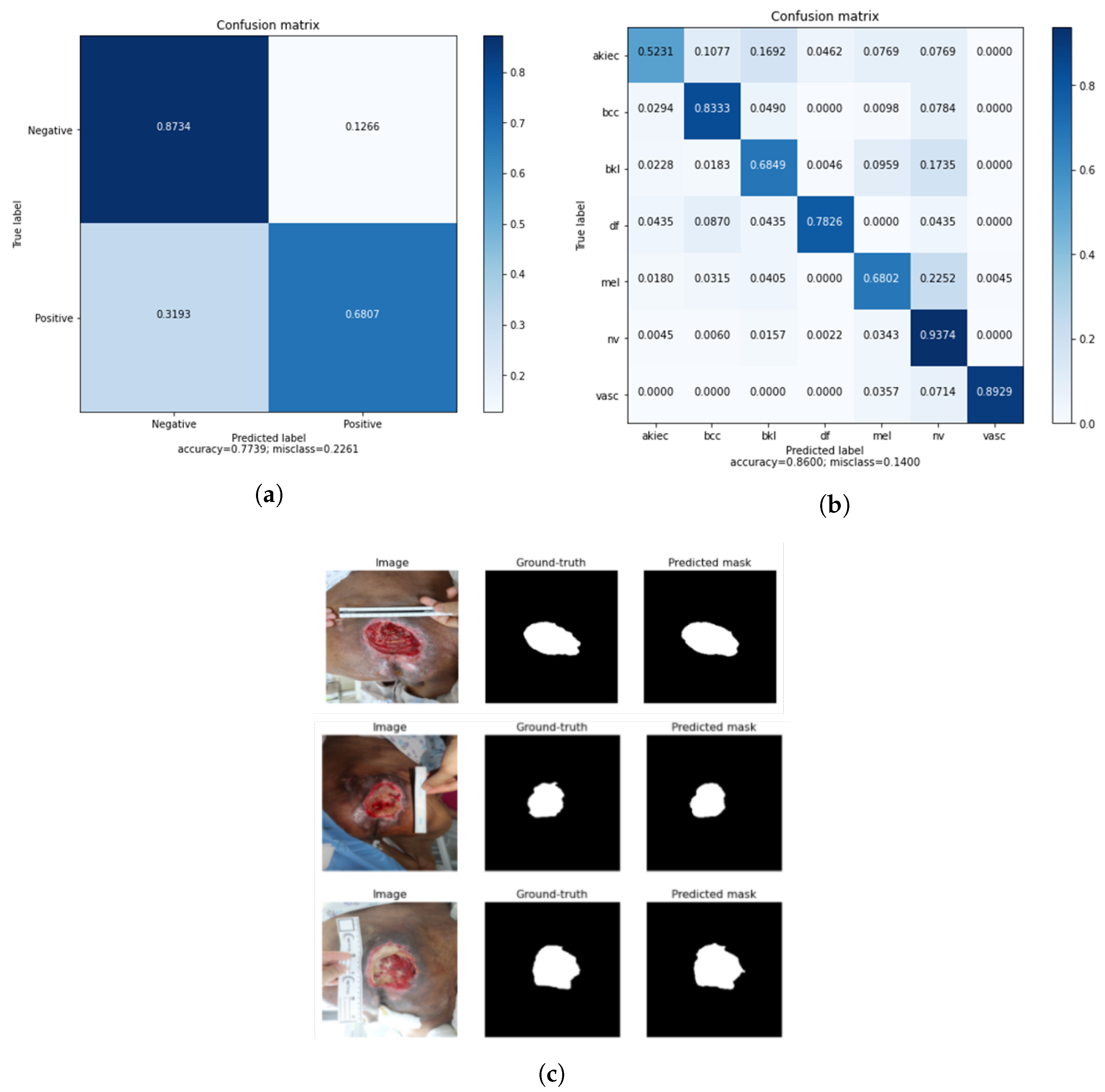

4.1. Cervical Cancer Classification

4.1.1. Datasets

4.1.2. Metrics
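The introduction reports sensitivity for cervical cancer classification. Assuming the standard confusion-matrix definitions, with the abnormal (positive) class encoded as 1, sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP). The sketch below computes these together with accuracy and precision; `binary_metrics` is a hypothetical helper, and returning 0.0 for an empty denominator is an assumed convention.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Confusion-matrix metrics for a binary classifier.

    `positive` marks the abnormal class; ratios with an empty denominator
    are reported as 0.0 (an assumed convention).
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)

    def ratio(num, den):
        return num / den if den else 0.0

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "sensitivity": ratio(tp, tp + fn),  # recall of the positive class
        "specificity": ratio(tn, tn + fp),  # recall of the negative class
        "precision": ratio(tp, tp + fp),
    }


y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 0, 0, 1]
print(binary_metrics(y_true, y_pred))
```

Here TP = 2, FN = 2, TN = 3, and FP = 1, so sensitivity is 0.5 while specificity is 0.75: accuracy alone (0.625) would hide that half of the positive cases are missed, which is why sensitivity matters for screening.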

4.1.3. Results

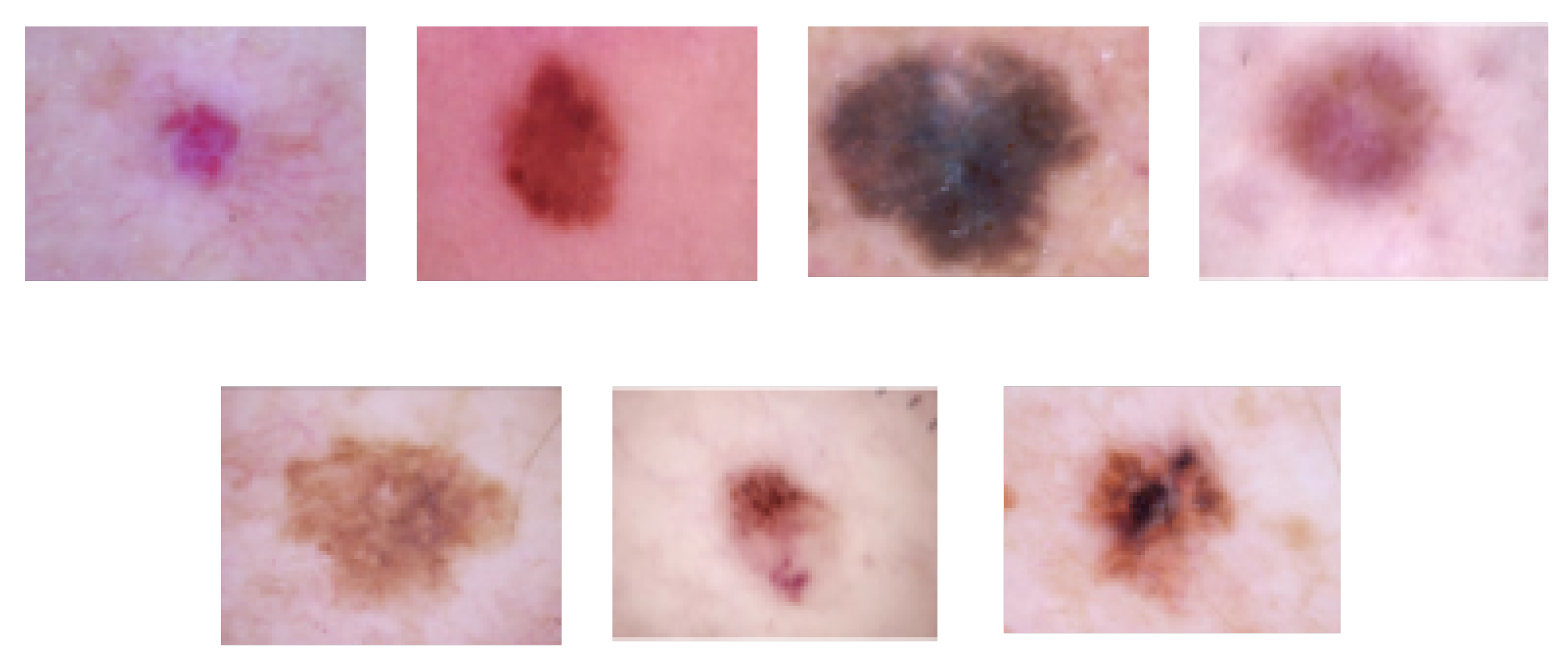

4.2. Skin Lesion Classification

4.2.1. Datasets

4.2.2. Metrics
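Skin lesion classification is usually multi-class (HAM10000, used by several works in the related-work table, has seven diagnostic categories), so precision and sensitivity have to be averaged over classes. The averaging scheme is not specified here; the sketch assumes macro averaging, the unweighted mean of one-vs-rest scores per class, and `macro_precision_recall` is a hypothetical helper.

```python
def macro_precision_recall(y_true, y_pred, classes):
    """Macro-averaged precision and recall (sensitivity) over `classes`.

    Each class is scored one-vs-rest and the per-class scores are averaged
    without weighting; an empty denominator counts as 0.0 (assumed convention).
    """
    precisions, recalls = [], []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    return sum(precisions) / len(classes), sum(recalls) / len(classes)


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
precision, recall = macro_precision_recall(y_true, y_pred, classes=[0, 1, 2])
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(round(accuracy, 4), round(precision, 4), round(recall, 4))
```

Macro averaging gives rare lesion types the same weight as common ones, so it can differ substantially from accuracy on imbalanced data; a support-weighted average is the usual alternative.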

4.2.3. Results

4.3. Pressure Ulcer Segmentation

4.3.1. Datasets

4.3.2. Metrics
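For segmentation, IoU and DSC compare a predicted binary mask P with the ground-truth mask G: IoU = |P ∩ G| / |P ∪ G| and DSC = 2|P ∩ G| / (|P| + |G|). The sketch below assumes both masks are same-shaped arrays; scoring two empty masks as 1.0 is an assumed convention, and `iou_and_dice` is a hypothetical helper.

```python
import numpy as np


def iou_and_dice(pred, target):
    """IoU and Dice similarity coefficient for two binary masks of equal shape.

    Both metrics are defined as 1.0 when both masks are empty (assumed convention).
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    total = pred.sum() + target.sum()
    iou = intersection / union if union else 1.0
    dice = 2.0 * intersection / total if total else 1.0
    return float(iou), float(dice)


pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
iou, dice = iou_and_dice(pred, target)
print(iou, dice)  # 0.5 0.6666666666666666
```

For a single mask pair, DSC = 2·IoU / (1 + IoU), so the two metrics rank predictions identically, but IoU is always the smaller value and reacts more strongly to the same change in overlap.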

4.3.3. Results

4.4. Comparison with State-of-the-Art Approaches

5. Discussion

6. Conclusions and Future work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- LeCun, Y.; Haffner, P.; Bottou, L.; Bengio, Y. Object recognition with gradient-based learning. In Shape, Contour and Grouping in Computer Vision. Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 1999; Volume 1681, pp. 319–345.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 11966–11976.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Chae, J.; Zimmermann, R.; Kim, D.; Kim, J. Attentive Transfer Learning via Self-Supervised Learning for Cervical Dysplasia Diagnosis. J. Inf. Process. Syst. 2021, 17, 453–461.

- Hwang, S.; Kim, H. Self-transfer learning for fully weakly supervised object localization. arXiv 2016, arXiv:1602.01625.

- Chae, J.; Kim, H.; Yang, H. A Dual Attention Network for Skin Lesion Classification. Korea Software Congress 2020, 47, 460–462.

- Hesamian, M.H.; Jia, W.; He, X.; Kennedy, P. Deep learning techniques for medical image segmentation: Achievements and challenges. J. Digit. Imaging 2019, 32, 582–596.

- Zhou, Y.; Chen, H.; Li, Y.; Liu, Q.; Xu, X.; Wang, S.; Yap, P.T.; Shen, D. Multi-task learning for segmentation and classification of tumors in 3D automated breast ultrasound images. Med. Image Anal. 2021, 70, 101918.

- Xu, T.; Zhang, H.; Xin, C.; Kim, E.; Long, L.R.; Xue, Z.; Antani, S.; Huang, X. Multi-feature based benchmark for cervical dysplasia classification evaluation. Pattern Recognit. 2017, 63, 468–475.

- Vasudha, A.M.; Juneja, M. Cervix cancer classification using colposcopy images by deep learning method. Int. J. Eng. Technol. Sci. Res. (IJETSR) 2018, 5, 426–432.

- Hu, L.; Bell, D.; Antani, S.; Xue, Z.; Yu, K.; Horning, M.P.; Gachuhi, N.; Wilson, B.; Jaiswal, M.S.; Befano, B.; et al. An Observational Study of Deep Learning and Automated Evaluation of Cervical Images for Cancer Screening. JNCI J. Natl. Cancer Inst. 2019, 111, 923–932.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Alyafeai, Z.; Ghouti, L. A fully automated deep learning pipeline for cervical cancer classification. Expert Syst. Appl. 2020, 141, 112951.

- Zhang, Y.; Yin, Y.; Liu, Z.; Zimmermann, R. A Spatial Regulated Patch-Wise Approach for Cervical Dysplasia Diagnosis. Proc. AAAI Conf. Artif. Intell. 2021, 35, 733–740.

- Xue, D.; Zhou, X.; Li, C.; Yao, Y.; Rahaman, M.M.; Zhang, J.; Chen, H.; Zhang, J.; Qi, S.; Sun, H. An Application of Transfer Learning and Ensemble Learning Techniques for Cervical Histopathology Image Classification. IEEE Access 2020, 8, 104603–104618.

- Dhawan, S.; Singh, K.; Arora, M. Cervix Image Classification for Prognosis of Cervical Cancer using Deep Neural Network with Transfer Learning. EAI Endorsed Trans. Pervasive Health Technol. 2021, 7, e5.

- Zhao, C.; Shuai, R.; Ma, L.; Liu, W.; Wu, M. Improving cervical cancer classification with imbalanced datasets combining taming transformers with t2t-vit. Multimed. Tools Appl. 2022, 81, 24265–24300.

- Esser, P.; Rombach, R.; Ommer, B. Taming Transformers for High-Resolution Image Synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12868–12878.

- Yuan, L.; Chen, Y.; Wang, T.; Yu, W.; Shi, Y.; Jiang, Z.; Tay, F.H.; Feng, J.; Yan, S. Tokens-to-Token ViT: Training Vision Transformers From Scratch on ImageNet. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 558–567.

- Ghantasala, G.S.P.; Hung, B.T.; Chakrabarti, P. An Approach For Cervical and Breast Cancer Classification Using Deep Learning: A Comprehensive Survey. In Proceedings of the 2023 International Conference on Computer Communication and Informatics (ICCCI), Budapest, Hungary, 27–29 September 2023; pp. 1–6.

- Kalbhor, M.M.; Shinde, S.V. Cervical cancer diagnosis using convolution neural network: Feature learning and transfer learning approaches. In Proceedings of the Soft Comput, Chongqing, China, 5–7 January 2023.

- Deo, B.S.; Pal, M.; Panigrahi, P.K.; Pradhan, A. CerviFormer: A Pap-smear based cervical cancer classification method using cross attention and latent transformer. arXiv 2023, arXiv:2303.10222.

- Zhou, Z.; Shin, J.; Zhang, L.; Gurudu, S.; Gotway, M.; Liang, J. Fine-tuning convolutional neural networks for biomedical image analysis: Actively and incrementally. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4761–4772.

- Tajbakhsh, N.; Shin, J.Y.; Gurudu, S.R.; Hurst, R.T.; Kendall, C.B.; Gotway, M.B.; Liang, J. Convolutional neural networks for medical image analysis: Full training or fine tuning? IEEE Trans. Med. Imaging 2016, 35, 1299–1312.

- Kermany, D.S.; Goldbaum, M.; Cai, W.; Valentim, C.C.; Liang, H.; Baxter, S.L.; McKeown, A.; Yang, G.; Wu, X.; Yan, F.; et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 2018, 172, 1122–1131.e9.

- Samala, R.K.; Chan, H.P.; Hadjiiski, L.M.; Helvie, M.A.; Cha, K.H.; Richter, C.D. Multi-task transfer learning deep convolutional neural network: Application to computer-aided diagnosis of breast cancer on mammograms. Phys. Med. Biol. 2017, 62, 8894.

- Rezaeijo, S.M.; Ghorvei, M.; Mofid, B. Predicting Breast Cancer Response to Neoadjuvant Chemotherapy Using Ensemble Deep Transfer Learning Based on CT Images. J. X-ray Sci. Technol. 2021, 29, 835–850.

- Xia, Y.; Yao, J.; Lu, L.; Huang, L.; Xie, G.; Xiao, J.; Yuille, A.; Cao, K.; Zhang, L. Effective Pancreatic Cancer Screening on Non-contrast CT Scans via Anatomy-Aware Transformers. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2021; de Bruijne, M., Cattin, P.C., Cotin, S., Padoy, N., Speidel, S., Zheng, Y., Essert, C., Eds.; Springer: Cham, Switzerland, 2021; pp. 259–269.

- Gheflati, B.; Rivaz, H. Vision transformer for classification of breast ultrasound images. arXiv 2021, arXiv:2110.14731.

- Yang, H.; Chen, J.; Xu, M. Fundus disease image classification based on improved transformer. In Proceedings of the International Conference on Neuromorphic Computing (ICNC), Wuhan, China, 11–14 October 2021; pp. 207–214.

- Ikromjanov, K.; Bhattacharjee, S.; Hwang, Y.B.; Sumon, R.I.; Kim, H.C.; Choi, H.K. Whole Slide Image Analysis and Detection of Prostate Cancer using Vision Transformers. In Proceedings of the 2022 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Republic of Korea, 21–24 February 2022; pp. 399–402.

- Yu, Z.; Mar, V.; Eriksson, A.; Chandra, S.; Bonnington, P.; Zhang, L.; Ge, Z. End-to-End Ugly Duckling Sign Detection for Melanoma Identification with Transformers. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2021; de Bruijne, M., Cattin, P.C., Cotin, S., Padoy, N., Speidel, S., Zheng, Y., Essert, C., Eds.; Springer: Cham, Switzerland, 2021; pp. 176–184.

- Wu, W.; Mehta, S.; Nofallah, S.; Knezevich, S.; May, C.; Chang, O.; Elmore, J.; Shapiro, L. Scale-aware transformers for diagnosing melanocytic lesions. IEEE Access 2021, 9, 163526–163541.

- Rezaeijo, S.M.; Ghorvei, M.; Abedi-Firouzjah, R.; Mojtahedi, H.; Zarch, H.E. Detecting COVID-19 in chest images based on deep transfer learning and machine learning algorithms. Egypt. J. Radiol. Nucl. Med. 2021, 52, 145.

- Costa, G.; Paiva, A.; Júnior, G.B.; Ferreira, M. COVID-19 automatic diagnosis with CT images using the novel Transformer architecture. In Proceedings of the Anais do XXI Simpósio Brasileiro de Computação Aplicada à Saúde, SBC, Online, 15–18 June 2021; pp. 293–301.

- Liang, S. A hybrid deep learning framework for covid-19 detection via 3d chest ct images. arXiv 2021, arXiv:2107.03904.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021.

- Sufian, M.M.; Moung, E.G.; Hijazi, M.H.A.; Yahya, F.; Dargham, J.A.; Farzamnia, A.; Sia, F.; Mohd Naim, N.F. COVID-19 Classification through Deep Learning Models with Three-Channel Grayscale CT Images. Big Data Cogn. Comput. 2023, 7, 36.

- Constantinou, M.; Exarchos, T.; Vrahatis, A.G.; Vlamos, P. COVID-19 Classification on Chest X-ray Images Using Deep Learning Methods. Int. J. Environ. Res. Public Health 2023, 20, 2035.

- Albahar, M. Skin lesion classification using cnn with novel regularizer. IEEE Access 2019, 7, 38306–38313.

- Nahata, H.; Singh, S. Deep Learning Solutions for Skin Cancer Detection and Diagnosis. In Machine Learning with Health Care Perspective: Machine Learning and Healthcare; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; Volume 13, pp. 159–182.

- Sirotkin, K.; Escudero-Vinolo, M.; Carballeira, P.; SanMiguel, J. Improved skin lesion recognition by a Self-Supervised Curricular Deep Learning approach. arXiv 2021, arXiv:2112.12086.

- Mahbod, A.; Schaefer, G.; Wang, C.; Ecker, R.; Ellinger, I. Skin lesion classification using hybrid deep neural networks. In Proceedings of the ICASSP 2019 – 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 1229–1233.

- Masood, A.; Al-Jumaily, A.; Anam, K. Self-supervised learning model for skin cancer diagnosis. In Proceedings of the International IEEE/EMBS Conference on Neural Engineering (NER), Montpellier, France, 22–24 April 2015; pp. 1012–1015.

- Pal, A.; Ray, S.; Garain, U. Skin disease identification from dermoscopy images using deep convolutional neural network. arXiv 2018, arXiv:1807.09163.

- Carcagnì, P.; Leo, M.; Cuna, A.; Mazzeo, P.L.; Spagnolo, P.; Celeste, G.; Distante, C. Classification of Skin Lesions by Combining Multilevel Learnings in a DenseNet Architecture. In Image Analysis and Processing – ICIAP 2019; Springer: Cham, Switzerland, 2019; pp. 335–344.

- Liao, H.; Luo, J. A deep multi-task learning approach to skin lesion classification. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence Workshops, San Francisco, CA, USA, 4–9 February 2017.

- Ahmad, N.; Shah, J.H.; Khan, M.A.; Baili, J.; Ansari, G.J.; Tariq, U.; Kim, Y.J.; Cha, J.H. A novel framework of multiclass skin lesion recognition from dermoscopic images using deep learning and explainable AI. Front. Oncol. 2023, 13, 1151257.

- Alsahafi, Y.S.; Kassem, M.A.; Hosny, K.M. Skin-Net: A novel deep residual network for skin lesions classification using multilevel feature extraction and cross-channel correlation with detection of outlier. J. Big Data 2023, 10, 105.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Zahia, S.; Garcia-Zapirain, B.; Elmaghraby, A. Integrating 3D Model Representation for an Accurate Non-Invasive Assessment of Pressure Injuries with Deep Learning. Sensors 2020, 20, 2933.

- Saiz, F.; Barandiaran, I. COVID-19 detection in chest X-ray images using a deep learning approach. Int. J. Interact. Multimed. Artif. Intell. 2020, 1, 11–14.

- Arias-Londoño, J.; Gómez-García, J.; Moro-Velázquez, L.; Godino-Llorente, J. Artificial intelligence applied to chest X-ray images for the automatic detection of COVID-19: A thoughtful evaluation approach. IEEE Access 2020, 8, 226811–226827.

- Brunese, L.; Mercaldo, F.; Reginelli, A.; Santone, A. Explainable deep learning for pulmonary disease and coronavirus COVID-19 detection from X-rays. Comput. Methods Programs Biomed. 2020, 196, 105608.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Wang, C.; Yan, X.; Smith, M.; Kochhar, K.; Rubin, M.; Warren, S.M.; Wrobel, J.; Lee, H. A unified framework for automatic wound segmentation and analysis with deep convolutional neural networks. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 2415–2418.

- Pholberdee, N.; Pathompatai, C.; Taeprasartsit, P. Study of chronic wound image segmentation: Impact of tissue type and color data augmentation. In Proceedings of the 15th International Joint Conference on Computer Science and Software Engineering (JCSSE), Nakhonpathom, Thailand, 11–13 July 2018; pp. 1–6.

- Goyal, M.; Yap, M.H.; Reeves, N.D.; Rajbhandari, S.; Spragg, J. Fully convolutional networks for diabetic foot ulcer segmentation. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 618–623.

- Garcia-Zapirain, B.; Elmogy, M.; El-Baz, S.; Elmaghraby, A. Classification of pressure ulcer tissues with 3D convolutional neural network. Med. Biol. Eng. Comput. 2018, 56, 2245–2258. [Google Scholar] [CrossRef]

- Khalil, A.; Elmogy, M.; Ghazal, M.; Burns, C.; El-Baz, A. Chronic wound healing assessment system based on different features modalities and non-negative matrix factorization (NMF) feature reduction. IEEE Access 2019, 7, 80110–80121. [Google Scholar] [CrossRef]

- Ohura, N.; Mitsuno, R.; Sakisaka, M.; Terabe, Y.; Morishige, Y.; Uchiyama, A.; Okoshi, T.; Shinji, I.; Takushima, A. Convolutional neural networks for wound detection: The role of artificial intelligence in wound care. J. Wound Care 2019, 28, S13–S24. [Google Scholar]

- Oota, S.R.; Rowtula, V.; Mohammed, S.; Liu, M.; Gupta, M. WSNet: Towards an Effective Method for Wound Image Segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 3234–3243. [Google Scholar]

- Zhang, P.; Chen, X.; Yin, Z.; Zhou, X.; Jiang, Q.; Zhu, W.; Xiang, D.; Tang, Y.; Shi, F. Interactive Skin Wound Segmentation Based on Feature Augment Networks. IEEE J. Biomed. Health Inform. 2023, 27, 3467–3477. [Google Scholar] [CrossRef]

- Swerdlow, M.; Guler, O.; Yaakov, R.; Armstrong, D.G. Simultaneous Segmentation and Classification of Pressure Injury Image Data Using Mask-R-CNN. Comput. Math. Methods Med. 2023, 2023, 3858997.

- Aldughayfiq, B.; Ashfaq, F.; Jhanjhi, N.Z.; Humayun, M. YOLO-Based Deep Learning Model for Pressure Ulcer Detection and Classification. Healthcare 2023, 11, 1222.

- Moeskops, P.; Wolterink, J.M.; van der Velden, B.H.M.; Gilhuijs, K.G.A.; Leiner, T.; Viergever, M.A.; Išgum, I. Deep Learning for Multi-task Medical Image Segmentation in Multiple Modalities. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2016; Ourselin, S., Joskowicz, L., Sabuncu, M.R., Unal, G., Wells, W., Eds.; Springer: Cham, Switzerland, 2016; pp. 478–486.

- Dong, N.; Kampffmeyer, M.; Liang, X.; Wang, Z.; Dai, W.; Xing, E. Unsupervised Domain Adaptation for Automatic Estimation of Cardiothoracic Ratio. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2018; Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G., Eds.; Springer: Cham, Switzerland, 2018; pp. 544–552.

- Javanmardi, M.; Tasdizen, T. Domain adaptation for biomedical image segmentation using adversarial training. In Proceedings of the IEEE 15th International Symposium on Biomedical Imaging (ISBI), Washington, DC, USA, 4–7 April 2018; Volume 2018, pp. 554–558.

- Gao, Y.; Zhou, M.; Liu, D.; Metaxas, D. A multi-scale transformer for medical image segmentation: Architectures, model efficiency, and benchmarks. arXiv 2022, arXiv:2203.00131.

- You, C.; Zhao, R.; Liu, F.; Chinchali, S.P.; Topcu, U.; Staib, L.H.; Duncan, J.S. Class-Aware Generative Adversarial Transformers for Medical Image Segmentation. arXiv 2022, arXiv:2201.10737.

- Li, X.; Chen, H.; Qi, X.; Dou, Q.; Fu, C.W.; Heng, P.A. H-DenseUNet: Hybrid Densely Connected UNet for Liver and Tumor Segmentation From CT Volumes. IEEE Trans. Med. Imaging 2018, 37, 2663–2674.

- Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.H.; Chen, Y.W.; Wu, J. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1055–1059.

- Isensee, F.; Jaeger, P.F.; Kohl, S.A.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211.

- Hatamizadeh, A.; Nath, V.; Tang, Y.; Yang, D.; Roth, H.R.; Xu, D. Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries; Crimi, A., Bakas, S., Eds.; Springer: Cham, Switzerland, 2022; pp. 272–284.

- Fatan, M.; Hosseinzadeh, M.; Askari, D.; Sheikhi, H.; Rezaeijo, S.M.; Salmanpour, M.R. Fusion-Based Head and Neck Tumor Segmentation and Survival Prediction Using Robust Deep Learning Techniques and Advanced Hybrid Machine Learning Systems. In Head and Neck Tumor Segmentation and Outcome Prediction; Andrearczyk, V., Oreiller, V., Hatt, M., Depeursinge, A., Eds.; Springer: Cham, Switzerland, 2022; pp. 211–223.

- Özcan, F.; Uçan, O.N.; Karaçam, S.; Tunçman, D. Fully Automatic Liver and Tumor Segmentation from CT Image Using an AIM-Unet. Bioengineering 2023, 10, 215.

- Bi, R.; Guo, L.; Yang, B.; Wang, J.; Shi, C. 2.5D cascaded context-based network for liver and tumor segmentation from CT images. Electron. Res. Arch. 2023, 31, 4324–4345.

- Aggarwal, M.; Tiwari, A.K.; Sarathi, M.P.; Bijalwan, A. An early detection and segmentation of Brain Tumor using Deep Neural Network. BMC Med. Inform. Decis. Mak. 2023, 23, 78.

- Montaha, S.; Azam, S.; Rafid, A.K.M.R.H.; Hasan, M.Z.; Karim, A. Brain Tumor Segmentation from 3D MRI Scans Using U-Net. SN Comput. Sci. 2023, 4, 386.

- National Library of Medicine (U.S.)—The Cleveland Clinic (n.d.). An Innovative Treatment for Cervical Precancer (UH3). 2017. ClinicalTrials.gov Identifier: NCT03084081. Available online: https://classic.clinicaltrials.gov/ct2/show/study/NCT03084081 (accessed on 5 January 2023).

- Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161.

- Yang, X.; Zeng, Z.; Yeo, S.; Tan, C.; Tey, H.L.; Su, Y. A novel multi-task deep learning model for skin lesion segmentation and classification. arXiv 2017, arXiv:1703.01025.

- Chae, J.; Zhang, Y.; Zimmermann, R.; Kim, D.; Kim, J. An Attention-Based Deep Learning Model with Interpretable Patch-Weight Sharing for Diagnosing Cervical Dysplasia. In Intelligent Systems and Applications; Springer: Cham, Switzerland, 2022; pp. 634–642.

- Kendall, A.; Gal, Y.; Cipolla, R. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7482–7491.

- Herrero, R.; Schiffman, M.; Bratti, C.; Hildesheim, A.; Balmaceda, I.; Sherman, M.; Greenberg, M.; Cárdenas, F.; Gómez, V.; Helgesen, K.; et al. Design and methods of a population-based natural history study of cervical neoplasia in a rural province of Costa Rica: The Guanacaste Project. Rev. Panam. Salud Publica 1997, 1, 362–375.

- Intel & MobileODT Cervical Cancer Screening Competition. 2017. Available online: https://www.kaggle.com/c/intel-mobileodt-cervical-cancer-screening (accessed on 5 April 2023).

- Codella, N.C.F.; Gutman, D.; Celebi, M.E.; Helba, B.; Marchetti, M.A.; Dusza, S.W.; Kalloo, A.; Liopyris, K.; Mishra, N.; Kittler, H.; et al. Skin lesion analysis toward melanoma detection: A challenge at the 2017 International symposium on biomedical imaging (ISBI), hosted by the international skin imaging collaboration (ISIC). In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 168–172.

- Crawshaw, M. Multi-task learning with deep neural networks: A Survey. arXiv 2020, arXiv:2009.09796.

- Chae, J.; Hong, K.; Kim, J. A pressure ulcer care system for remote medical assistance: Residual U-Net with an attention model based for wound area segmentation. arXiv 2021, arXiv:2101.09433.

- Thomas, S. Medetec Wound Database. Available online: http://www.medetec.co.uk/files/medetec-image-databases.html (accessed on 5 April 2023).

- Wang, C.; Anisuzzaman, D.M.; Williamson, V.; Dhar, M.; Rostami, B.; Niezgoda, J.; Gopalakrishnan, S.; Yu, Z. Fully automatic wound segmentation with deep convolutional neural networks. Sci. Rep. 2020, 10, 21897.

| Application | Task | Image Modality | Dataset Size | Remarks | Anatomical Site |
|---|---|---|---|---|---|
| Cervical cancer classification | Image classification | Colposcopy | 45,009 images from the NCI dataset | Labeled images: 978; unlabeled images: 44,031 | Organ |
| Skin lesion classification | Lesion classification | Dermoscopy | 10,015 images from the HAM10000 dataset | Labeled images | Skin |
| Pressure ulcer segmentation | Segmentation | Digital camera | 138 images from Dongguk University Ilsan Hospital | Labeled images | Skin |
| Chest X-ray | Object detection and classification | X-ray | 987 images from a public dataset | Labeled images | Organ |

| Experiment | Acc (%) | Specificity (%) | Sensitivity (%) |
|---|---|---|---|
| Baseline | 67.2 | 74.3 | 57.3 |
| | 74.4 | 77.3 | 69.7 |
| | 69.2 | 58.8 | 85.5 |
| | 77.9 | 71.4 | 88.2 |
| | 74.9 | 85.7 | 57.9 |
| Ensemble | 77.4 | 68.9 | 90.8 |
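
The three metrics in the table above follow the usual confusion-matrix definitions, with the cancer-positive class treated as positive. The sketch below is a minimal illustration under that assumption, not the authors' evaluation code; `BinaryCounts`, `count_outcomes`, and `binary_metrics` are hypothetical names, and the labels in the example are toy values rather than data from the study.

```python
from dataclasses import dataclass


@dataclass
class BinaryCounts:
    """Confusion-matrix counts for a binary task (1 = positive case, 0 = negative)."""
    tp: int  # positives predicted positive
    tn: int  # negatives predicted negative
    fp: int  # negatives wrongly predicted positive
    fn: int  # positives wrongly predicted negative


def count_outcomes(y_true, y_pred):
    """Tally TP/TN/FP/FN from two parallel lists of 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    return BinaryCounts(
        tp=sum(1 for t, p in pairs if t == 1 and p == 1),
        tn=sum(1 for t, p in pairs if t == 0 and p == 0),
        fp=sum(1 for t, p in pairs if t == 0 and p == 1),
        fn=sum(1 for t, p in pairs if t == 1 and p == 0),
    )


def binary_metrics(c):
    """Return (accuracy, specificity, sensitivity) as percentages."""
    total = c.tp + c.tn + c.fp + c.fn
    accuracy = 100.0 * (c.tp + c.tn) / total
    specificity = 100.0 * c.tn / (c.tn + c.fp)  # true-negative rate
    sensitivity = 100.0 * c.tp / (c.tp + c.fn)  # true-positive rate (recall)
    return accuracy, specificity, sensitivity


if __name__ == "__main__":
    # Toy labels, not data from the paper.
    y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
    print(binary_metrics(count_outcomes(y_true, y_pred)))
```

On the toy labels this gives 70.0% accuracy, about 66.7% specificity, and 75.0% sensitivity. Because specificity uses only the negative cases and sensitivity only the positive ones, a model can gain sensitivity at the cost of specificity, as several rows of the table do.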

| Dataset | Melanoma | Seborrheic Keratosis | Nevus | Total |
|---|---|---|---|---|
| Training set | 374 | 254 | 1372 | 2000 |
| Validation set | 30 | 42 | 78 | 150 |
| Test set | 117 | 90 | 393 | 600 |
| Total | 521 | 386 | 1843 | 2750 |
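
The split above is strongly skewed toward nevus. A short sketch that recomputes the Total row and each class's share of a split from the table's counts makes the imbalance explicit; `SPLITS`, `class_shares`, and `column_totals` are illustrative names, and only the counts come from the table.

```python
# Image counts copied from the table: (melanoma, seborrheic keratosis, nevus).
SPLITS = {
    "train": (374, 254, 1372),
    "validation": (30, 42, 78),
    "test": (117, 90, 393),
}
CLASSES = ("melanoma", "seborrheic keratosis", "nevus")


def class_shares(counts):
    """Return each class's share of one split as a percentage."""
    total = sum(counts)
    return {name: 100.0 * n / total for name, n in zip(CLASSES, counts)}


def column_totals(splits):
    """Sum each class over all splits (the table's Total row)."""
    return tuple(sum(col) for col in zip(*splits.values()))


if __name__ == "__main__":
    for split, counts in SPLITS.items():
        shares = {k: round(v, 1) for k, v in class_shares(counts).items()}
        print(split, sum(counts), shares)
    totals = column_totals(SPLITS)
    print("total", totals, sum(totals))
```

The training split is roughly 18.7% melanoma, 12.7% seborrheic keratosis, and 68.6% nevus, and nevus makes up 65.5% of the test split, so a classifier that always predicted nevus would already reach 65.5% test accuracy. This is a common motivation for reporting precision and sensitivity alongside accuracy.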

| Experiment | Acc (%) | Precision (%) | Sensitivity (%) |
|---|---|---|---|
| Baseline | 75.9 | 52.9 | 58.6 |
| | 74.2 | 56.0 | 61.6 |
| | 73.4 | 55.1 | 43.2 |
| | 77.7 | 58.7 | 55.9 |
| | 20.4 | 12.0 | 14.4 |
| Ensemble | 86.0 | 77.3 | 75.7 |
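
For the three-class skin lesion task, precision and sensitivity have to be aggregated over classes, and the tables do not state the averaging scheme. The sketch below assumes macro averaging (the unweighted mean of per-class one-vs-rest scores), which is one common choice; the function name and the toy labels are hypothetical.

```python
from collections import Counter


def macro_precision_recall(y_true, y_pred, classes):
    """Macro-averaged precision and sensitivity (recall), in percent.

    Each class is scored one-vs-rest and the per-class scores are averaged with
    equal weight, so a rare class such as melanoma counts as much as nevus.
    """
    pairs = Counter(zip(y_true, y_pred))  # (true label, predicted label) -> count
    precisions, recalls = [], []
    for c in classes:
        tp = pairs[(c, c)]
        predicted_as_c = sum(n for (_, pred), n in pairs.items() if pred == c)
        truly_c = sum(n for (true, _), n in pairs.items() if true == c)
        # Assumed convention: a score is 0 when its denominator is empty.
        precisions.append(tp / predicted_as_c if predicted_as_c else 0.0)
        recalls.append(tp / truly_c if truly_c else 0.0)
    k = len(classes)
    return 100.0 * sum(precisions) / k, 100.0 * sum(recalls) / k


if __name__ == "__main__":
    # Toy labels only: mel = melanoma, sk = seborrheic keratosis, nev = nevus.
    classes = ["mel", "sk", "nev"]
    y_true = ["mel", "mel", "sk", "sk", "nev", "nev", "nev", "nev"]
    y_pred = ["mel", "nev", "sk", "nev", "nev", "nev", "nev", "mel"]
    print(macro_precision_recall(y_true, y_pred, classes))
```

On these toy labels the sketch gives a macro precision of 70.0% and a macro sensitivity of about 58.3%, while plain accuracy is 62.5%. A weighted average (per-class scores weighted by class frequency) would instead be pulled toward the nevus scores.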

| Experiment | Acc (%) | IoU (%) | DSC (%) |
|---|---|---|---|
| Baseline | 98.5 | 99.9 | 62.0 |
| | 98.9 | 99.9 | 93.3 |
| | 87.2 | 99.8 | 63.0 |
| | 91.4 | 91.2 | 91.2 |
| | 85.4 | 87.2 | 21.0 |
| Ensemble | 99.0 | 99.9 | 93.4 |
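
Pixel accuracy, IoU, and DSC for a predicted wound mask can be computed from the overlap between prediction and ground truth. For a single binary foreground mask, DSC = 2·IoU / (1 + IoU), so DSC is never below IoU; scores averaged in another way (for example, mean IoU over foreground and background) need not obey this relation. The sketch below uses NumPy on boolean masks; the function name and the convention of scoring two empty masks as 100% are assumptions.

```python
import numpy as np


def segmentation_metrics(pred, target):
    """Pixel accuracy, IoU and DSC (percent) for one binary wound mask.

    pred, target: arrays of the same shape; nonzero/True marks a wound pixel.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    accuracy = 100.0 * (pred == target).mean()  # counts background pixels too
    if union == 0:
        # Assumed convention: both masks empty counts as a perfect match.
        return accuracy, 100.0, 100.0
    iou = 100.0 * intersection / union
    dsc = 100.0 * 2 * intersection / (pred.sum() + target.sum())
    return accuracy, iou, dsc


if __name__ == "__main__":
    # Toy 4x4 masks: 4 wound pixels each, 2 of them overlapping.
    target = np.zeros((4, 4), dtype=bool)
    target[1:3, 1:3] = True
    pred = np.zeros((4, 4), dtype=bool)
    pred[1:3, 2:4] = True
    print(segmentation_metrics(pred, target))
```

On the toy masks this gives 75.0% pixel accuracy, about 33.3% IoU, and 50.0% DSC. Accuracy stays comparatively high because correctly classified background pixels count toward it, and the effect grows when the wound covers only a small part of the image.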

(a) The performance results on cervical cancer classification

| Application (Task) | Approach | TL | Acc (%) | Specificity (%) | Sensitivity (%) |
|---|---|---|---|---|---|
| Cervical cancer classification (Image classification) | Alyafeai et al. [16] * | ✗ | 72.8 | 76.2 | 66.7 |
| | Zhang et al. [17] | ✗ | 80.8 | - | 85.4 |
| | Xu et al. [12] * | √ | 73.9 | 85.4 | 62.6 |
| | Hu et al. [14] | √ | 57.6 | - | 81.5 |
| | | √ | 74.4 | 77.4 | 69.7 |
| | | √ | 69.2 | 58.8 | 85.5 |
| | | √ | 77.9 | 71.4 | 88.2 |
| | | √ | 74.9 | 85.7 | 57.9 |
| | Ensemble** | √ | 77.4 | 68.9 | 90.8 |
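
The Ensemble rows combine several transfer learning models, but these tables do not specify how the member outputs are merged. One common option is soft voting: average each model's predicted class probabilities and pick the most probable class. The sketch below assumes that scheme; `soft_vote` and its optional `weights` argument are hypothetical, and the example probabilities are toy values.

```python
import numpy as np


def soft_vote(prob_list, weights=None):
    """Soft-voting ensemble over several classifiers.

    prob_list: list of arrays, each (n_samples, n_classes) with rows summing to 1.
    weights: optional per-model weights (hypothetical); uniform if omitted.
    Returns (predicted class index per sample, averaged probabilities).
    """
    probs = np.stack([np.asarray(p, dtype=float) for p in prob_list])  # (models, samples, classes)
    if weights is None:
        weights = np.ones(len(prob_list))
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # normalise so the average is still a distribution
    avg = np.tensordot(weights, probs, axes=1)  # weighted sum over the model axis
    return avg.argmax(axis=1), avg


if __name__ == "__main__":
    # Three toy models, two samples, classes (negative, positive).
    m1 = [[0.6, 0.4], [0.3, 0.7]]
    m2 = [[0.4, 0.6], [0.2, 0.8]]
    m3 = [[0.7, 0.3], [0.6, 0.4]]
    labels, avg = soft_vote([m1, m2, m3])
    print(labels, avg)
```

With uniform weights the first toy sample is classified negative (average positive probability about 0.43) and the second positive (about 0.63), even though the third model alone predicts negative for both. Hard majority voting over predicted labels is a simpler alternative that ignores the models' confidence.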

(b) The performance results on skin lesion classification

| Application (Task) | Approach | TL | Acc (%) | Precision (%) | Sensitivity (%) |
|---|---|---|---|---|---|
| Skin lesion classification (Object detection and classification) | Sirotkin et al. [45] | ✗ | 49.3 | - | - |
| | Pal et al. [48] | √ | 77.3 | - | - |
| | Carcagnì et al. [49] | √ | - | 49.0 | 36.0 |
| | Sirotkin et al. [45] | √ | 75.4 | - | - |
| | | √ | 74.2 | 56.0 | 61.6 |
| | | √ | 73.4 | 55.1 | 43.2 |
| | | √ | 77.7 | 55.9 | 58.7 |
| | | √ | 20.4 | 12.0 | 14.4 |
| | Ensemble** | √ | 86.0 | 77.3 | 75.7 |

(c) The performance results on pressure ulcer segmentation

| Application (Task) | Approach | TL | Acc (%) | IoU (%) | DSC (%) |
|---|---|---|---|---|---|
| Pressure ulcer segmentation (Segmentation) | Wang et al. [62] | ✗ | 95.0 | 47.3 | - |
| | Khalil et al. [66] | ✗ | 96.0 | - | - |
| | Zahia et al. [57] | ✗ | - | 83.0 | 87.0 |
| | Goyal et al. [64] | √ | - | - | 89.9 |
| | Ohura et al. [67] | √ | 97.8 | - | 85.0 |
| | | √ | 98.9 | 99.9 | 93.3 |
| | | √ | 87.2 | 99.8 | 63.0 |
| | | √ | 91.4 | 91.2 | 91.2 |
| | | √ | 85.4 | 87.2 | 21.0 |
| | Ensemble** | √ | 99.0 | 99.9 | 93.4 |
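
The improvements quoted in the Introduction correspond to absolute differences, in percentage points, between the Ensemble row and the best value reported by the published methods in the same column of tables (a) to (c). The sketch below reproduces that arithmetic for the quoted metrics only; the dictionary layout and the helper name are illustrative, and the numbers are copied from the tables.

```python
# Published-method values from tables (a) to (c), in table order; None marks "-".
# The paper's own unnamed rows are excluded.
PRIOR = {
    ("cervical", "sensitivity"): [66.7, 85.4, 62.6, 81.5],
    ("skin", "accuracy"): [49.3, 77.3, None, 75.4],
    ("skin", "precision"): [None, None, 49.0, None],
    ("skin", "sensitivity"): [None, None, 36.0, None],
    ("ulcer", "accuracy"): [95.0, 96.0, None, None, 97.8],
    ("ulcer", "iou"): [47.3, None, 83.0, None, None],
    ("ulcer", "dsc"): [None, None, 87.0, 89.9, 85.0],
}
# Ensemble** rows from the same tables.
ENSEMBLE = {
    ("cervical", "sensitivity"): 90.8,
    ("skin", "accuracy"): 86.0,
    ("skin", "precision"): 77.3,
    ("skin", "sensitivity"): 75.7,
    ("ulcer", "accuracy"): 99.0,
    ("ulcer", "iou"): 99.9,
    ("ulcer", "dsc"): 93.4,
}


def gain_over_best_prior(prior_values, ensemble_value):
    """Absolute gain in percentage points over the best reported prior value."""
    reported = [v for v in prior_values if v is not None]
    return round(ensemble_value - max(reported), 1)


if __name__ == "__main__":
    for key in PRIOR:
        print(key, gain_over_best_prior(PRIOR[key], ENSEMBLE[key]))
```

This yields 5.4 for cervical sensitivity; 8.7, 28.3, and 39.7 for skin lesion accuracy, precision, and sensitivity; and 1.2, 16.9, and 3.5 for pressure ulcer accuracy, IoU, and DSC. A relative improvement (for example, 5.4 / 85.4, about 6.3%) would be a different quantity.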

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chae, J.; Kim, J. An Investigation of Transfer Learning Approaches to Overcome Limited Labeled Data in Medical Image Analysis. Appl. Sci. 2023, 13, 8671. https://doi.org/10.3390/app13158671
