Forecasting and Inventory Planning: An Empirical Investigation of Classical and Machine Learning Approaches for Svanehøj’s Future Software Consolidation

Abstract

1. Introduction

Literature Review

2. Methods

2.1. Company Background

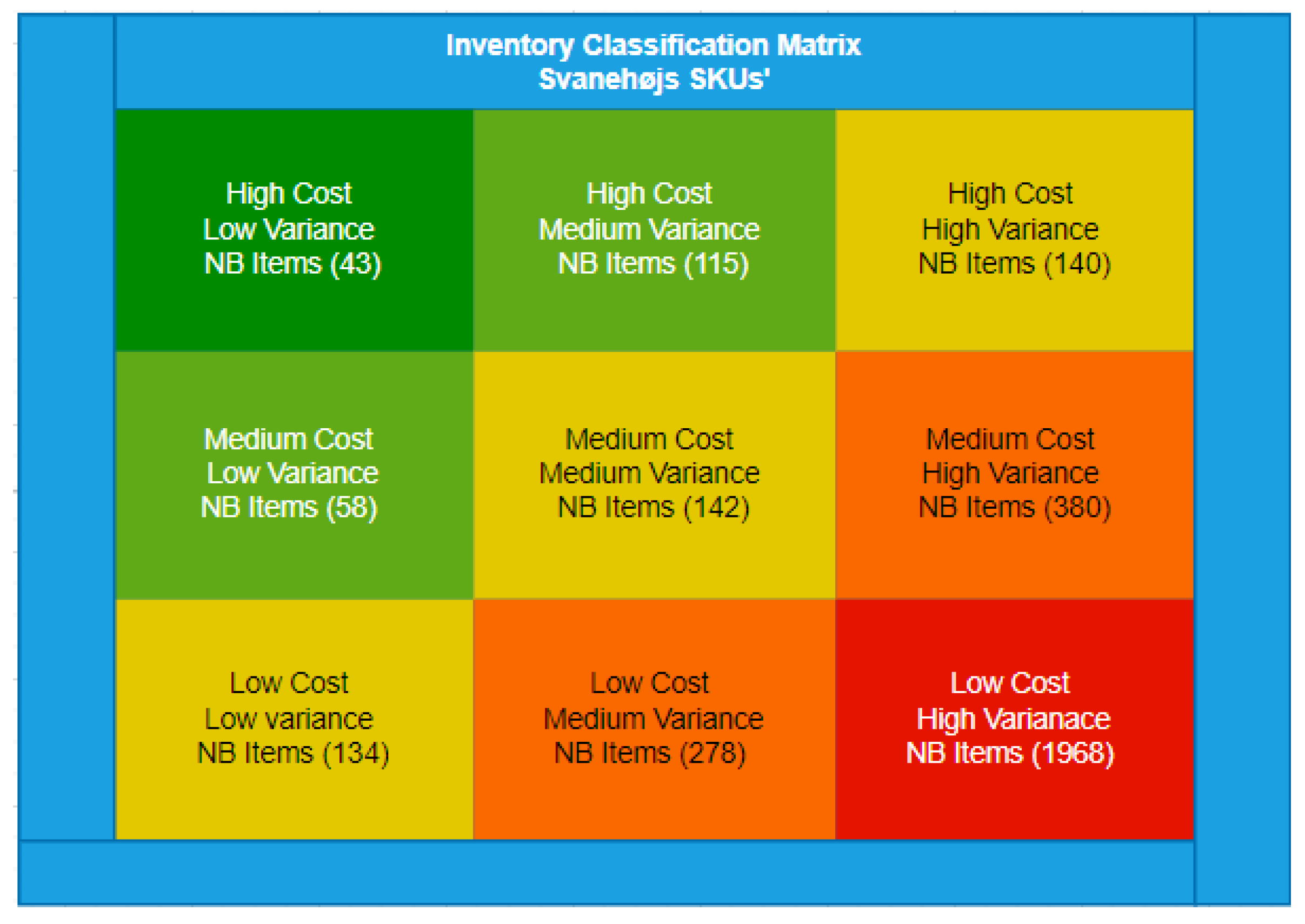

2.2. Data Used

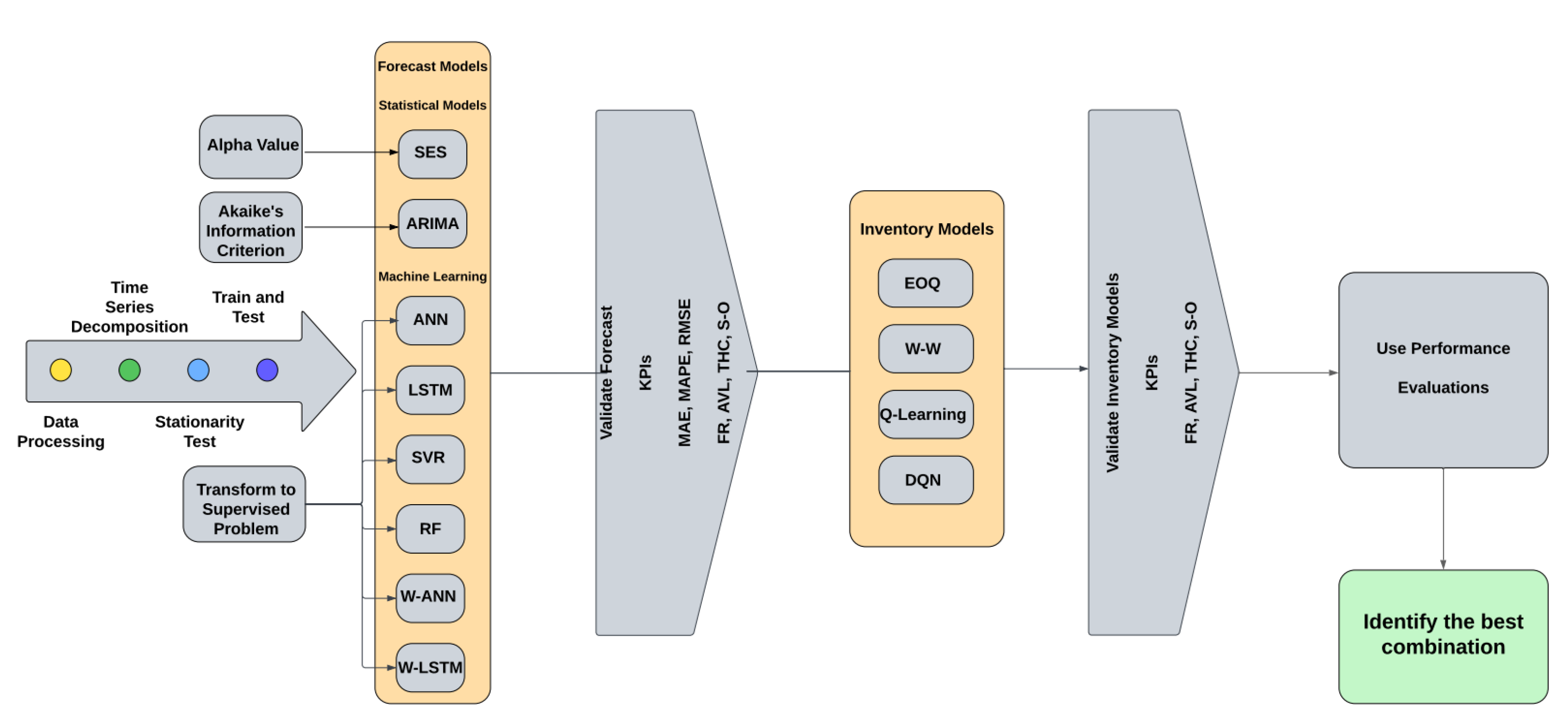

2.3. Methodology

2.4. Technical Details

2.4.1. Methods for Forecasting

2.4.2. Performance Measures for Evaluating Forecasting Methods

2.4.3. Methods for Inventory

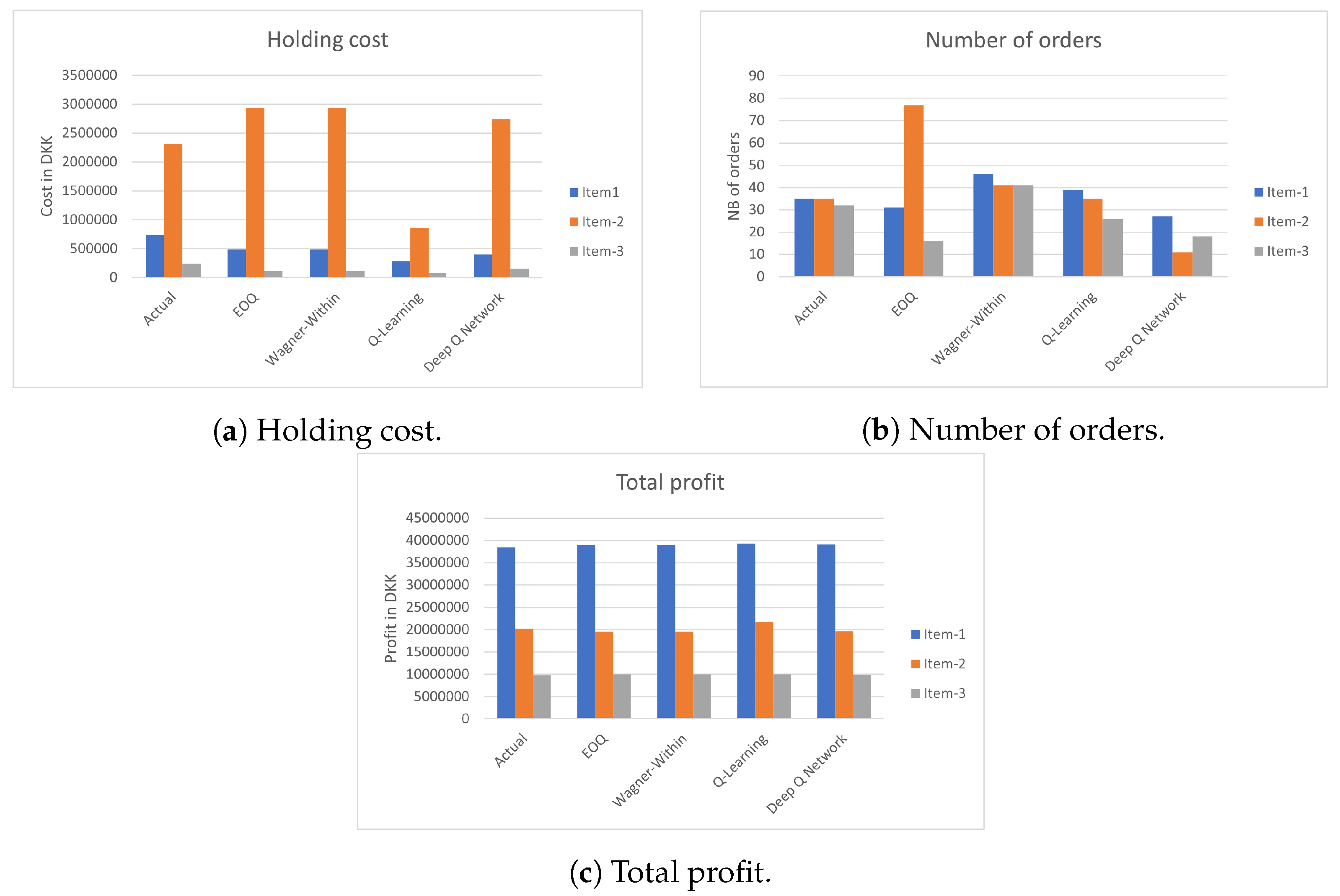

3. Results

4. Discussion

4.1. Time Series Forecast Implications

4.2. Inventory Management Implications

4.3. Time-Varying Demand

4.4. Software Consolidation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. List of Notations

| Symbol | Description |
|---|---|
| p | Retail price |
| h | Per unit holding cost |
| k | Fixed cost per order |
| | Per unit purchase cost |
| | Deterioration rate |
| | Instantaneous inventory level at time t |
| Q | Order quantity of product at each cycle |
| D | Demand |
| | Length of each replenishment cycle |

Appendix B. Optimization Model for Deteriorating Products

Appendix C. Description of Machine Learning Algorithm for Inventory Replenishment Decision

Algorithm A1. Q-learning (off-policy): learn the action-value function Q.
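A minimal tabular Q-learning sketch for a single-item replenishment decision is given below. It is an illustration under stated assumptions, not the authors' implementation: the state is the on-hand stock, the action is the order quantity, the reward is the period profit, and every name and parameter value (`train_q_learning`, `max_stock`, `price`, `eps_decay`, and so on) is hypothetical.

```python
import random


def train_q_learning(demand, max_stock=20, max_order=10, episodes=2000,
                     alpha=0.1, gamma=0.95, epsilon=1.0, eps_decay=0.995,
                     price=10.0, unit_cost=4.0, holding=1.0, order_cost=5.0,
                     seed=0):
    """Learn Q[stock][order] for a toy single-item inventory problem.

    All cost and learning parameters are illustrative assumptions.
    """
    rng = random.Random(seed)
    # Q[s][a]: estimated return of ordering a units with s units on hand.
    q = [[0.0] * (max_order + 1) for _ in range(max_stock + 1)]
    for _ in range(episodes):
        stock = 0
        for d in demand:
            # Epsilon-greedy action selection.
            if rng.random() < epsilon:
                action = rng.randint(0, max_order)
            else:
                action = max(range(max_order + 1), key=lambda a: q[stock][a])
            # Units above the storage cap are paid for but not stored.
            available = min(stock + action, max_stock)
            sold = min(available, d)
            next_stock = available - sold
            reward = (price * sold - unit_cost * action
                      - holding * next_stock
                      - (order_cost if action > 0 else 0.0))
            # Off-policy update: bootstrap from the best next action.
            best_next = max(q[next_stock])
            q[stock][action] += alpha * (reward + gamma * best_next
                                         - q[stock][action])
            stock = next_stock
        # Decay exploration after each episode, with a small floor.
        epsilon = max(0.01, epsilon * eps_decay)
    return q


def greedy_policy(q):
    """Order quantity with the highest Q-value for each stock level."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in q]
```

Calling `greedy_policy(train_q_learning([3, 5, 2, 4] * 3))` returns one order quantity per stock level. The state here ignores the time period, so the learned policy is stationary; a richer state would be needed to track time-varying demand.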

Appendix C.1. Forecasting and Inventory Model Parameters

Algorithm A2. DQN algorithm.

Forecasting Parameters

| Method | Item-1 | Item-2 | Item-3 |
|---|---|---|---|
| SES | Smoothing constant: 0.2 | Smoothing constant: 0.2 | Smoothing constant: 0.2 |
| ARIMA | (p, d, q) = (2, 1, 0) | (p, d, q) = (1, 0, 1) | (p, d, q) = (1, 0, 1) |
| ANN | Epochs: 200; Neurons: 80, 60; Hidden layer(s): 2 | Epochs: 100; Neurons: 80, 60; Hidden layer(s): 2 | Epochs: 200; Neurons: 100, 60; Hidden layer(s): 2 |
| LSTM | Epochs: 200; Neurons: 100, 60; Hidden layer(s): 2 | Epochs: 200; Neurons: 120, 80; Hidden layer(s): 2 | Epochs: 200; Neurons: 64, 32; Hidden layer(s): 2 |
| SVR | Epochs: 200; Neurons: 120, 60; Hidden layer(s): 2 | Epochs: 200; Neurons: 80, 60; Hidden layer(s): 2 | Epochs: 100; Neurons: 80, 20; Hidden layer(s): 2 |
| RF | Ntree: 961; Mtry: 1; Max_depth: default (None) | Ntree: 1500; Mtry: 1; Max_depth: default (None) | Ntree: 1449; Mtry: 1; Max_depth: default (None) |
| W-ANN | Epochs: 200; Neurons: 80, 20; Hidden layer(s): 2 | Epochs: 200; Neurons: 24, 8; Hidden layer(s): 2 | Epochs: 100; Neurons: 64, 32; Hidden layer(s): 2 |
| W-LSTM | Epochs: 100; Neurons: 80, 40; Hidden layer(s): 2 | Epochs: 200; Neurons: 60, 24; Hidden layer(s): 2 | Epochs: 200; Neurons: 80, 40; Hidden layer(s): 2 |
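To make the SES setting concrete, the following is a minimal sketch of simple exponential smoothing with a smoothing constant of 0.2. The function name `ses_forecast`, the parameter name `alpha`, and the choice to initialise the level with the first observation are assumptions made for illustration; the source does not state how the level was initialised.

```python
def ses_forecast(series, alpha=0.2):
    """Simple exponential smoothing.

    Returns (one_step, next_forecast), where one_step[t] is the forecast
    of series[t] made before observing it and next_forecast is the
    forecast for the first period after the series ends.
    """
    if not series:
        raise ValueError("series must contain at least one observation")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    # Assumption: the initial level equals the first observation.
    level = float(series[0])
    one_step = []
    for y in series:
        one_step.append(level)
        # New level = weighted average of latest demand and old level.
        level = alpha * y + (1.0 - alpha) * level
    return one_step, level
```

With a small constant such as 0.2, each new observation moves the forecast by only a fifth of the latest error, so the forecast reacts slowly to sudden demand changes.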

Inventory Management Parameters

| Method | Item-1 | Item-2 | Item-3 |
|---|---|---|---|
| EOQ | Null | Null | Null |
| Q-Learning | : 0.01; : 0.99; : 1.0; # states: 500; # actions: 500; # episodes: 5000 | : 0.0001; : 0.99; : 1.0; # states: 200; # actions: 200; # episodes: 5000 | : 0.4; : 0.2; : 1.0; # states: 40,000; # actions: 40,000; # episodes: 500 |
| DQN | : 0.0001; : 0.99; : 1.0; TAU: 0.0001; buffer size: 50,000; batch size: 128; action size: 150; # episodes: 500 | : 0.00001; : 0.99; : 1.0; TAU: 0.0001; buffer size: 50,000; batch size: 128; action size: 120; # episodes: 500 | : 0.1; : 0.99; : 1.0; TAU: 0.0001; buffer size: 50,000; batch size: 128; action size: 40,000; # episodes: 50 |
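The DQN row lists a buffer size, a batch size, and TAU. A common way these three settings are used is sketched below: an experience replay buffer with uniform sampling and a soft (Polyak) update of the target network. Reading TAU as the soft-update coefficient is an assumption, as are the names `ReplayBuffer` and `soft_update`; network weights are represented as flat lists of floats to keep the sketch free of external libraries.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of transitions with uniform random sampling."""

    def __init__(self, capacity=50_000, seed=0):
        # The oldest transitions are dropped once capacity is reached.
        self.memory = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, batch_size=128):
        # Sample without replacement; needs len(self) >= batch_size.
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def soft_update(target_weights, online_weights, tau=0.0001):
    """Move each target weight a fraction tau towards the online weight."""
    return [tau * w + (1.0 - tau) * t
            for t, w in zip(target_weights, online_weights)]
```

With a coefficient as small as 0.0001, the target weights trail the online weights very slowly, which is usually intended to stabilise the bootstrapped targets.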

References

1. Foli, S.; Durst, S.; Davies, L.; Temel, S. Supply chain risk management in young and mature SMEs. J. Risk Financ. Manag. 2022, 15, 328.
2. Setyaningsih, S.; Kelle, P.; Maretan, A.S. Driver and Barrier Factors of Supply Chain Management for Small and Medium-Sized Enterprises: An Overview. In Economic and Social Development: Book of Proceedings of the 58th International Scientific Conference on Economic and Social Development, Budapest, Hungary, 4–5 September 2020; Varazdin Development and Entrepreneurship Agency: Varazdin, Croatia, 2020; pp. 238–249.
3. Jacobs, R.F.; Weston, T. Enterprise resource planning (ERP): A brief history. J. Oper. Manag. 2007, 25, 357–363.
4. Christofi, M.; Nunes, M.; Chao Peng, G.; Lin, A. Towards ERP success in SMEs through business process review prior to implementation. J. Syst. Inf. Technol. 2013, 15, 304–323.
5. Ahmad, M.M.; Cuenca, R.P. Critical success factors for ERP implementation in SMEs. Robot. Comput. Integr. Manuf. 2013, 29, 104–111.
6. Kale, P.T.; Banwait, S.S.; Laroiya, S.C. Performance evaluation of ERP implementation in Indian SMEs. J. Manuf. Technol. Manag. 2010, 21, 758–780.
7. Haddara, M.; Zach, O. ERP systems in SMEs: A literature review. In Proceedings of the 2011 44th Hawaii International Conference on System Sciences, IEEE, Kauai, HI, USA, 4–7 January 2011; pp. 1–10.
8. Olson, D.L.; Johansson, B.; De Carvalho, R.A. Open source ERP business model framework. Robot. Comput. Integr. Manuf. 2018, 50, 30–36.
9. Syntetos, A.A.; Boylan, J.E.; Disney, S.M. Forecasting for inventory planning: A 50-year review. J. Oper. Res. Soc. 2009, 60, S149–S160.
10. Pournader, M.; Ghaderi, H.; Hassanzadegan, A.; Fahimnia, B. Artificial intelligence applications in supply chain management. Int. J. Prod. Econ. 2021, 241, 108250.
11. Zhu, X.; Ninh, A.; Zhao, H.; Liu, Z. Demand forecasting with supply-chain information and machine learning: Evidence in the pharmaceutical industry. Prod. Oper. Manag. 2021, 30, 3231–3252.
12. Heuts, R.M.J.; Strijbosch, L.W.G.; van der Schoot, E.H.M. A Combined Forecast-Inventory Control Procedure for Spare Parts. Ph.D. Thesis, Faculty of Economics and Business Administration, Tilburg University, Tilburg, The Netherlands, 1999.
13. Chan, S.W.; Tasmin, R.; Aziati, A.N.; Rasi, R.Z.; Ismail, F.B.; Yaw, L.P. Factors influencing the effectiveness of inventory management in manufacturing SMEs. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2017; Volume 226, p. 012024.
14. Dey, K.; Roy, S.; Saha, S. The impact of strategic inventory and procurement strategies on green product design in a two-period supply chain. Int. J. Prod. Res. 2019, 57, 1915–1948.
15. Jonsson, P.; Mattsson, S.A. Inventory management practices and their implications on perceived planning performance. Int. J. Prod. Res. 2008, 46, 1787–1812.
16. Saha, S.; Goyal, S.K. Supply chain coordination contracts with inventory level and retail price dependent demand. Int. J. Prod. Econ. 2015, 161, 140–152.
17. Perona, M.; Saccani, N.; Zanoni, S. Combining make-to-order and make-to-stock inventory policies: An empirical application to a manufacturing SME. Prod. Plan. Control 2009, 20, 559–575.
18. Kara, A.; Dogan, I. Reinforcement learning approaches for specifying ordering policies of perishable inventory systems. Expert Syst. Appl. 2018, 91, 150–158.
19. Kemmer, L.; von Kleist, H.; de Rochebouët, D.; Tziortziotis, N.; Read, J. Reinforcement learning for supply chain optimization. In Proceedings of the European Workshop on Reinforcement Learning, Lille, France, 1–3 October 2018; Volume 14.
20. Peng, Z.; Zhang, Y.; Feng, Y.; Zhang, T.; Wu, Z.; Su, H. Deep reinforcement learning approach for capacitated supply chain optimization under demand uncertainty. In Proceedings of the 2019 Chinese Automation Congress (CAC), IEEE, Hangzhou, China, 22–24 November 2019; pp. 3512–3517.
21. Sultana, N.N.; Meisheri, H.; Baniwal, V.; Nath, S.; Ravindran, B.; Khadilkar, H. Reinforcement learning for multi-product multi-node inventory management in supply chains. arXiv 2020, arXiv:2006.04037.
22. De Moor, B.J.; Gijsbrechts, J.; Boute, R.N. Reward shaping to improve the performance of deep reinforcement learning in perishable inventory management. Eur. J. Oper. Res. 2022, 301, 535–545.
23. Wang, Q.; Peng, Y.; Yang, Y. Solving Inventory Management Problems through Deep Reinforcement Learning. J. Syst. Sci. Syst. Eng. 2022, 31, 677–689.
24. Oroojlooyjadid, A.; Nazari, M.; Snyder, L.V.; Takáč, M. A deep q-network for the beer game: Deep reinforcement learning for inventory optimization. Manuf. Serv. Oper. Manag. 2017, 24, 285–304.
25. Gijsbrechts, J.; Boute, R.N.; Van Mieghem, J.A.; Zhang, D.J. Can deep reinforcement learning improve inventory management? performance on lost sales, dual-sourcing, and multi-echelon problems. Manuf. Serv. Oper. Manag. 2022, 24, 1349–1368.
26. Esteso, A.; Peidro, D.; Mula, J.; Díaz-Madroñero, M. Reinforcement learning applied to production planning and control. Int. J. Prod. Res. 2022, 61, 2104180.
27. Cheng, C.; Sa-Ngasoongsong, A.; Beyca, O.; Le, T.; Yang, H.; Kong, Z.; Bukkapatnam, S.T. Time series forecasting for nonlinear and non-stationary processes: A review and comparative study. IIE Trans. 2015, 47, 1053–1071.
28. Hanifi, S.; Liu, X.; Lin, Z.; Lotfian, S. A critical review of wind power forecasting methods: Past, present and future. Energies 2020, 13, 3764.
29. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for Large-Scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.
30. Box, G.; Jenkins, G.; Reinsel, G. Time Series Analysis: Forecasting and Control, 4th ed.; John Wiley & Sons Inc.: Hoboken, NJ, USA, 2008.
31. Khashei, M.; Bijari, M.; Ardali, G.A.R. Improvement of auto-regressive integrated moving average models using fuzzy logic and artificial neural networks (ANNs). Neurocomputing 2009, 72, 956–967.
32. Pai, P.F.; Lin, K.P.; Lin, C.S.; Chang, P.T. Time series forecasting by a seasonal support vector regression model. Expert Syst. Appl. 2010, 37, 4261–4265.
33. Yang, H.; Huang, K.; King, I.; Lyu, M.R. Localized support vector regression for time series prediction. Neurocomputing 2009, 72, 2659–2669.
34. He, W.; Wang, Z.; Jiang, H. Model optimizing and feature selecting for support vector regression in time series forecasting. Neurocomputing 2008, 72, 600–611.
35. Montesinos López, O.A.; Montesinos López, A.; Crossa, J. Multivariate Statistical Machine Learning Methods for Genomic Prediction; Springer Nature: Berlin, Germany, 2022; p. 691.
36. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.
37. Biau, G.; Scornet, E. A random forest guided tour. Test 2016, 25, 197–227.
38. Tyralis, H.; Papacharalampous, G. Variable selection in time series forecasting using random forests. Algorithms 2017, 10, 114.
39. Shanmuganathan, S.; Samarasinghe, S. Artificial Neural Network Modelling; Springer International Publishing: Cham, Switzerland, 2016; Volume 628.
40. Zhang, G.; Patuwo, B.E.; Hu, M.Y. Forecasting with artificial neural networks: The state of the art. Int. J. Forecast. 1998, 14, 35–62.
41. Deka, P.C.; Prahlada, R. Discrete wavelet neural network approach in significant wave height forecasting for multistep lead time. Ocean Eng. 2012, 43, 32–42.
42. Nury, A.H.; Hasan, K.; Alam, M.J.B. Comparative study of wavelet-ARIMA and wavelet-ANN models for temperature time series data in northeastern Bangladesh. J. King Saud Univ. Sci. 2017, 29, 47–61.
43. Sharma, N.; Zakaullah, M.; Tiwari, H.; Kumar, D. Runoff and sediment yield modeling using ANN and support vector machines: A case study from Nepal watershed. Model. Earth Syst. Environ. 2015, 1, 23.
44. Abbasimehr, H.; Shabani, M.; Yousefi, M. An optimized model using LSTM network for demand forecasting. Comput. Ind. Eng. 2020, 143, 106435.
45. Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A search space odyssey. IEEE Trans. Neural Networks Learn. Syst. 2016, 28, 2222–2232.
46. Wei, Y.; Bai, L.; Yang, K.; Wei, G. Are industry-level indicators more helpful to forecast industrial stock volatility? Evidence from Chinese manufacturing purchasing managers index. J. Forecast. 2021, 40, 17–39.
47. Sun, C.; Shrivastava, A.; Singh, S.; Gupta, A. Revisiting Unreasonable Effectiveness of Data in Deep Learning Era. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.
48. Stevenson, W.J. Operations Management; McGraw-Hill Irwin: Boston, MA, USA, 2018.
49. Cuartas, C.; Aguilar, J. Hybrid algorithm based on reinforcement learning for smart inventory management. J. Intell. Manuf. 2023, 34, 123–149.
50. Van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.
51. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.
52. Selukar, M.; Jain, P.; Kumar, T. Inventory control of multiple perishable goods using deep reinforcement learning for sustainable environment. Sustain. Energy Technol. Assess. 2022, 52, 102038.
53. Boute, R.N.; Gijsbrechts, J.; Van Jaarsveld, W.; Vanvuchelen, N. Deep reinforcement learning for inventory control: A roadmap. Eur. J. Oper. Res. 2022, 298, 401–412.
54. Mishra, P.; Rao, U.S. Concentration vs. Inequality Measures of Market Structure: An Exploration of Indian Manufacturing. Econ. Political Wkly. 2014, 59, 59–65.
55. Omri, N.; Al Masry, Z.; Mairot, N.; Giampiccolo, S.; Zerhouni, N. Industrial data management strategy towards an SME-oriented PHM. J. Manuf. Syst. 2020, 56, 23–36.
56. Gamboa, J.C.B. Deep learning for time-series analysis. arXiv 2017, arXiv:1701.01887.
57. Chhajer, P.; Shah, M.; Kshirsagar, A. The applications of artificial neural networks, support vector machines, and long-short term memory for stock market prediction. Decis. Anal. J. 2022, 2, 100015.
58. Ghobbar, A.A.; Friend, C.H. Evaluation of forecasting methods for intermittent parts demand in the field of aviation: A predictive model. Comput. Oper. Res. 2003, 30, 2097–2114.
59. Praveen, U.; Farnaz, G.; Hatim, G. Inventory management and cost reduction of supply chain processes using AI based time-series forecasting and ANN modeling. Procedia Manuf. 2019, 38, 256–263.
60. Osband, I.; Blundell, C.; Pritzel, A.; Van Roy, B. Deep exploration via bootstrapped DQN. In Proceedings of the 30th Conference on Neural Information Processing Systems: Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29.
61. Kolková, A.; Ključnikov, A. Demand forecasting: AI-based, statistical and hybrid models vs practice-based models: The case of SMEs and large enterprises. Econ. Sociol. 2022, 15, 39–62.
62. Panda, S.; Saha, S.; Basu, M. An EOQ model with generalized ramp-type demand and Weibull distribution deterioration. Asia-Pac. J. Oper. Res. 2007, 24, 93–109.
63. Hansen, S. Using deep q-learning to control optimization hyperparameters. arXiv 2016, arXiv:1602.04062.

| Paper | Supply Chain Environment Settings | Approach | Findings | Practical Implications |
|---|---|---|---|---|
| [18] | An ordering replenishment problem in a retailer setting with the objective of minimizing cost. The authors assume a fixed lead time from the supplier. | The authors propose Q-learning and SARSA algorithms to find near-optimal replenishment policies for perishable products and compare the outcomes of six situations. | The numerical results show that the discount factor, learning rate, and exploration rate influence the model's learning performance. | The results show that an ordering policy that incorporates age information and inventory quantity yields better outcomes than quantity-dependent policies. The paper also provides a cost analysis of the policies with respect to demand variability, lead time, cost ratio, and product lifetime. |
| [19] | Multi-echelon supply chain setting with a factory and multiple warehouses under stochastic, seasonal demand. | Considers the RL algorithms SARSA and REINFORCE alongside an agent that acts based on the (s,Q) policy, under a simple and a complex scenario. | Both the SARSA and REINFORCE approaches yield higher performance than the (s,Q) policy. | The authors show that agents can be programmed to cope with demand trends, production levels, and stock allocation. The findings show that agents can cope, operate, and take action under simple market conditions. |
| [20] | A multi-echelon supply chain system with plant, warehouse, retailer, and customers under a simple, a complex, and a special case, each with different settings. | The authors consider a vanilla policy gradient algorithm to solve the supply chain problem through profit maximization. The production targets and quantity targets are either discrete or continuous variables. The results are then compared with the outcome of an (r,Q) policy. | In all three cases, the DRL agent outperforms the (r,Q) policy. | The results show that the two methods, (1) action clipping and (2) output activation function, both yield better results than the (r,Q) policy. The model-free DRL models do not consider transition probabilities and are thereby able to make decisions without knowing the demand probability. |
| [21] | (1) Multi-echelon systems. (2) Supplier–Warehouse–Transportation–Store. (3) Heuristic algorithms frequently result in high operating cost. | The authors consider the following six algorithms: (1) Multi-Agent Reinforcement Learning (MARL); (2) Mixed Integer Linear Programming (MILP); (3) Adaptive Control (AC); (4) Model-Predictive Control (MPC); (5) Imitation Learning (IL); (6) Approximate Dynamic. | The numerical results demonstrate that the RL model can be used for parallel decision making if the model is correct, and that RL models can further help minimize the waste of perishable products. | This paper highlights that heuristic models such as (s,S) or (R,Q) models are not feasible. The paper seeks to explore algorithms that can handle multi-objective reward systems. The findings, which are based on actual data, demonstrate the potential strengths of an RL system that can handle complex environment settings with multiple objectives. |
| [22] | Considers a classical single-item perishable inventory problem with stochastic demand in a single-echelon setting with periodic review and a fixed lead time. | Uses reward shaping to combat DRL drawbacks, based on two heuristic teachers that modify the reward as the DRL agent transitions to a new state, with the objective of improving the inventory policy. | Reward shaping improves the DRL learning process and reduces cost and variability in comparison with an unshaped DRL model. | The numerical results show that reward shaping is a relevant approach, since the method reduces computational requirements. Additionally, reward-shaped DQN yields a higher learning rate and a better policy than an unshaped DQN. Reward shaping that uses known inventory policies as teachers can serve as an enabling argument for the integration of DRL models in companies. |
| [23] | The authors consider a multi-echelon inventory system with lost sales and a complex cost structure. | DQN and DDQN frameworks are used to solve the stated inventory problem, and the results are compared with heuristic algorithms. | The paper's findings showcase the DRL models' ability to adapt to inventory problems with different complex cost structures, which rule-based heuristics have issues coping with. | Incorporating two or more historical inventory records improves the DDLS model's performance. Sharing parameter settings within supply chains can enhance the performance of the DRL model, decreasing volatility during the training phase. |
| [24] | A multi-echelon supply chain system based on the beer game. | Reconfigures the DQN algorithm to operate in a cooperative environment. | The results indicate that DQN models obtain near-optimal policies when compared with agents that follow a base stock policy. | Transfer learning makes the DQN agents flexible enough to cope with different cost structures and settings without the need for extensive training. DQN shows promising numerical results in a supply chain setting with real-time information sharing between the chain entities. |
| [25] | Investigates DRL in three different inventory problems: (1) lost sales; (2) dual-sourcing inventory; (3) multi-echelon inventory management. | Formulates the inventory problems as MDPs and uses the Asynchronous Advantage Actor–Critic (A3C) algorithm. | The paper provides a proof of concept of deep reinforcement learning's ability to solve classic inventory problems. Additionally, the paper underlines that the A3C algorithm adapts well to the stochastic environment. | The authors conduct a sensitivity analysis with the objective of finding the optimality gaps between state-of-the-art heuristic policies and the A3C algorithm. The findings show that the A3C algorithm performs well for long lead times and has a higher overall performance than the heuristic algorithms. |

| Item ID | Holding Cost (HC) | Ordering Cost (OC) | Variable Order Cost (VOC) | Selling Price (SP) |
|---|---|---|---|---|
| Item-1 | 119 | 500 | 1193 | 10,896 |
| Item-2 | 3443 | 500 | 8010 | 34,443 |
| Item-3 | 0.315 | 500 | 3.15 | 31 |
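As a worked illustration of how such cost parameters enter the classical EOQ formula, Q* = sqrt(2kD/h), the sketch below computes the order quantity and the resulting cost split. It uses the appendix notation (k fixed cost per order, h per unit holding cost, D demand). The function names are hypothetical, and pairing the Item-1 holding cost (119) and ordering cost (500) with the Item-1 total demand of 4077 is an assumption about units and horizon, so the output is not expected to match the reported EOQ results.

```python
import math


def eoq(demand, order_cost, holding_cost):
    """Classical economic order quantity: sqrt(2 * k * D / h)."""
    if demand <= 0 or order_cost <= 0 or holding_cost <= 0:
        raise ValueError("all inputs must be positive")
    return math.sqrt(2.0 * order_cost * demand / holding_cost)


def eoq_costs(demand, order_cost, holding_cost):
    """Order quantity, number of orders, and cost split at the EOQ."""
    q = eoq(demand, order_cost, holding_cost)
    orders = demand / q
    return {
        "order_quantity": q,
        "orders": orders,
        "ordering_cost": orders * order_cost,  # k * D / Q
        "holding_cost": holding_cost * q / 2,  # h * Q / 2
    }


# Assumed inputs: D = 4077, k = 500, h = 119 (Item-1 figures).
item1 = eoq_costs(demand=4077, order_cost=500, holding_cost=119)
```

At the EOQ the ordering and holding cost components are equal, which is a quick sanity check on any implementation of the formula.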

| Methods | Holding Cost | Ordering Cost | Variable Order Cost | Total Profit | Total Demand | Total Procured | Number of Orders |
|---|---|---|---|---|---|---|---|
| Item-1 | | | | | | | |
| Actual | 743,752 | 19,000 | 4,823,299 | 38,466,476 | 4077 | 4043 | 35 |
| EOQ | 486,386 | 15,500 | 4,863,861 | 39,057,150 | 4077 | 4077 | 31 |
| Q-Learning | 288,944 | 19,500 | 4,863,861 | 39,250,686 | 4077 | 4077 | 39 |
| Deep Q Network | 395,598 | 13,500 | 4,896,072 | 39,117,821 | 4077 | 4104 | 27 |
| Item-2 | | | | | | | |
| Actual | 2,313,696 | 17,500 | 6,840,540 | 20,199,613 | 853 | 854 | 35 |
| EOQ | 2,937,364 | 38,500 | 6,832,530 | 19,571,740 | 853 | 853 | 77 |
| Q-Learning | 860,750 | 17,500 | 6,832,530 | 21,660,569 | 853 | 853 | 35 |
| Deep Q Network | 2,741,836 | 5500 | 6,960,690 | 19,672,109 | 853 | 869 | 11 |
| Item-3 | | | | | | | |
| Actual | 238,537 | 16,000 | 1,142,766 | 9,848,969 | 363,233 | 362,783 | 32 |
| EOQ | 114,418 | 8000 | 1,144,183 | 9,997,689 | 363,233 | 363,233 | 16 |
| Q-Learning | 83,026 | 13,000 | 1,144,183 | 10,020,013 | 363,233 | 363,233 | 26 |
| Deep Q Network | 155,494 | 9000 | 1,206,746 | 9,888,982 | 363,233 | 383,094 | 18 |

| Technique | Holding Cost | Ordering Cost | Variable Order Cost | Total Profit | Total Demand | Total Procured |
|---|---|---|---|---|---|---|
| EOQ | 120,998 | 8000 | 1,140,551 | 9,877,461 | 363,233 | 363,233 |
| Q-Learning | 83,026 | 17,000 | 1,162,031 | 9,998,166 | 363,233 | 364,175 |

| KPI | FR (%) | AIL (qty.) | THC | # of Stockouts | MAE | MAPE (%) | RMSE |
|---|---|---|---|---|---|---|---|
| Item-1 (cV = 36.94) | | | | | | | |
| SES | 89.3 | −87 | 0 | 12 | 88.5 | 1107 | 104.57 |
| ARIMA | 98.56 | 12.25 | 61,200 | 6 | 73.91 | 1016 | 94.56 |
| ANN | 66.46 | −315 | 0 | 12 | 106.25 | 710.28 | 138.21 |
| LSTM | 100 | 308 | 441,529 | 0 | 173.91 | 3795 | 208.39 |
| SVR | 15.42 | −797 | 0 | 12 | 125.66 | 82.84 | 164.69 |
| RF | 92.69 | −17.5 | 20,519 | 6 | 100.25 | 1232 | 116.39 |
| W-ANN | 96.24 | 14.5 | 81,124 | 5 | 99 | 1212 | 127.09 |
| W-LSTM | 100 | 150 | 216,290 | 0 | 127.08 | 1496 | 152.94 |
| Item-2 (cV = 64.95) | | | | | | | |
| SES | 63 | −107 | 0 | 12 | 17.5 | 204.18 | 29.5 |
| ARIMA | 73.85 | −83 | 0 | 12 | 19.16 | 271.80 | 30.5 |
| ANN | 83.35 | −30 | 210,023 | 10 | 19.75 | 282.87 | 29.91 |
| LSTM | 52.06 | −117 | 0 | 12 | 21.83 | 169.93 | 28.5 |
| SVR | 90.14 | 373 | 15,490,057 | 2 | 90.16 | 836.03 | 130.57 |
| RF | 62.23 | −111 | 0 | 12 | 17.5 | 221.79 | 30.87 |
| W-ANN | 92.57 | 5.75 | 946,825 | 6 | 34.58 | 129.85 | 41.12 |
| W-LSTM | 98.42 | 18 | 960,597 | 2 | 30.91 | 275.46 | 39.62 |
| Item-3 (cV = 44.91) | | | | | | | |
| SES | 71.61 | −27,103 | 0 | 12 | 4543 | 31.4 | 5644 |
| ARIMA | 69.52 | −28,458 | 0 | 12 | 5094 | 35.82 | 6188 |
| ANN | 61.90 | −41,384 | 0 | 12 | 4794 | 33.22 | 6485 |
| LSTM | 96.85 | 2508 | 14,395 | 5 | 3669 | 30.53 | 4055 |
| SVR | 90.56 | 16,679 | 26,270 | 7 | 9905 | 90.62 | 13,307 |
| RF | 57.58 | −39,752 | 0 | 12 | 6283 | 45.03 | 7338 |
| W-ANN | 93 | 1742 | 15,985 | 4 | 5562 | 43.38 | 5768 |
| W-LSTM | 100 | 16,016 | 60,543 | 0 | 11,434 | 83 | 12,611 |
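For reference, the accuracy columns can be computed as in the sketch below, which uses the standard definitions of MAE, MAPE, and RMSE. The function names are hypothetical. Treating FR as the fill rate (share of demand served from available stock) and skipping zero-demand periods in MAPE are both assumptions; the source does not state how either was handled.

```python
import math


def forecast_errors(actual, forecast):
    """Return MAE, MAPE (%), and RMSE for paired actuals and forecasts."""
    if len(actual) != len(forecast) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [a - f for a, f in zip(actual, forecast)]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    # Assumption: periods with zero actual demand are left out of MAPE.
    ratios = [abs(e / a) for a, e in zip(actual, errors) if a != 0]
    mape = 100.0 * sum(ratios) / len(ratios) if ratios else float("nan")
    return {"MAE": mae, "MAPE": mape, "RMSE": rmse}


def fill_rate(demand, available):
    """Percentage of total demand that could be served from stock."""
    served = sum(min(d, max(s, 0)) for d, s in zip(demand, available))
    return 100.0 * served / sum(demand)
```

Because MAPE divides by the actual value, periods with very small demand can dominate the average, which is one reason MAPE and MAE or RMSE can rank the same methods differently.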

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wahedi, H.J.; Heltoft, M.; Christophersen, G.J.; Severinsen, T.; Saha, S.; Nielsen, I.E. Forecasting and Inventory Planning: An Empirical Investigation of Classical and Machine Learning Approaches for Svanehøj’s Future Software Consolidation. Appl. Sci. 2023, 13, 8581. https://doi.org/10.3390/app13158581
