1. Introduction

Molding processes based on fiber prepreg are currently in wide use. In these processes, the prepreg is usually first formed into a preform, which is then molded and cured. Various defects, such as wrinkles and bubbles, may appear on the preform surface. They have become one of the most critical failure modes in composite manufacturing and have attracted the attention of many researchers [1,2]. Such defects may reduce the strength, modulus, and service life of composites [3], which seriously hinders the application and wider adoption of composites.

For defect detection in composites, nondestructive testing techniques such as ultrasonic testing, radiographic testing, thermal imaging testing, and terahertz testing have been applied [4]. However, these methods have limitations and often cannot support online inspection or timely process adjustment. To detect surface defects on the preform in real time and adjust process parameters promptly, vision-based online acquisition of preform surface images combined with deep-learning defect detection models can detect surface defects quickly and accurately, thereby improving the forming quality of composite materials. According to their characteristics and development, defect detection methods can be divided into traditional methods and deep-learning-based methods.

Traditional defect detection methods mainly use image processing to identify defects by their shape, size, color, and other characteristics, or combine image processing with traditional machine learning for classification. Because the application scenarios vary widely, many image processing methods exist, including edge detection algorithms such as Prewitt, Sobel, and Canny [5]. When the defect area is large, the scale-invariant feature transform (SIFT) and grayscale histogram features have been applied to improve recognition [6,7], and frequency-domain methods based on the Fourier, wavelet, and Gabor transforms have also been used [8]. After image processing, defects can be classified with traditional machine learning methods such as support vector machines (SVM), k-nearest neighbors (KNN), and random forests. These classifiers have been combined with image processing to classify defects in industrial products and fabrics, and several classifiers have also been combined for defect classification [9]. Defect recognition and classification based on image processing and traditional machine learning rely heavily on manual experience for sample collection, and recognition accuracy depends on the quality of the extracted features, which results in low versatility.
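To make the edge-detection step concrete, the following is a minimal pure-Python sketch of the Sobel operator applied to a small grayscale image stored as a list of rows. It is illustrative only: the function name `sobel_magnitude` is hypothetical, border pixels are simply left at zero, and a practical pipeline would use an image-processing library instead.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (GX) and vertical (GY) gradients.
GX = [[-1, 0, 1],
      [-2, 0, 2],
      [-1, 0, 1]]
GY = [[-1, -2, -1],
      [0, 0, 0],
      [1, 2, 1]]


def sobel_magnitude(img):
    """Return the gradient magnitude of a grayscale image (list of rows).

    Border pixels stay at 0 because the 3x3 window does not fit there.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += GX[dy][dx] * p
                    gy += GY[dy][dx] * p
            out[y][x] = math.hypot(gx, gy)
    return out


# A vertical step edge: dark left half, bright right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_magnitude(image)
# Interior rows respond only on the two columns straddling the step (value 1020).
```

A thresholded version of such a magnitude map is the kind of hand-crafted feature whose quality, as noted above, limits the versatility of traditional methods.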

In recent years, deep-learning-based defect detection methods have been applied in many fields, among which convolutional neural networks (CNNs) are the most widely used [10]. According to the type of data labels, deep-learning models can be roughly divided into supervised, unsupervised, semi-supervised, and weakly supervised models [11]. Current research focuses mostly on supervised representation learning, especially for defect detection. Defect detection can be framed as classification at three levels, namely image-label classification, region classification, and pixel classification, which correspond to classification, localization, and segmentation problems, respectively. For localization, the goal is to obtain accurate location and category information for each defect. Detection networks can be divided into two-stage and single-stage networks. A common two-stage network is Faster R-CNN [12], and common single-stage networks include SSD [13] and the YOLO series [14]. A single-stage network takes the entire image as input and directly regresses the bounding box location and category of each defect at the output layer. The YOLO series is one of the current research hotspots and has been applied to metal surface defects, fabric and prepreg surface defects, underwater target detection, agricultural product recognition, and other areas [15,16,17]. Moreover, the YOLO series of algorithms is still developing rapidly.
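As an illustration of how a single-stage detector regresses boxes at its output layer, the sketch below decodes one raw prediction into a box using the parameterization found in the public YOLOv5 implementation (sigmoid outputs, a grid-cell offset, and anchor scaling). The function name and arguments are hypothetical, and the anchor and stride values in the example are placeholders rather than values from this work.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_yolov5_box(t, grid_xy, anchor_wh, stride):
    """Decode raw outputs t = (tx, ty, tw, th) for one anchor at one grid cell.

    Center: (2*sigmoid(t) - 0.5 + cell index) * stride, so the center may fall
    slightly outside its own cell. Size: (2*sigmoid(t))**2 * anchor, which is
    bounded between 0 and 4 times the anchor size.
    Returns (cx, cy, w, h) in input-image pixels.
    """
    tx, ty, tw, th = t
    gx, gy = grid_xy
    cx = (2.0 * sigmoid(tx) - 0.5 + gx) * stride
    cy = (2.0 * sigmoid(ty) - 0.5 + gy) * stride
    w = (2.0 * sigmoid(tw)) ** 2 * anchor_wh[0]
    h = (2.0 * sigmoid(th)) ** 2 * anchor_wh[1]
    return cx, cy, w, h


# With all raw outputs equal to 0, sigmoid = 0.5: the center lands in the
# middle of cell (3, 5) and the box size equals the anchor size.
box = decode_yolov5_box((0.0, 0.0, 0.0, 0.0), grid_xy=(3, 5),
                        anchor_wh=(30.0, 61.0), stride=16)
# box == (56.0, 88.0, 30.0, 61.0)
```

Because every grid cell and anchor produces such a box in one forward pass, no separate region-proposal stage is needed, which is the source of the speed advantage of single-stage networks.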

Li L et al. [18] proposed an image restoration algorithm based on the repetition features of surface patterns to effectively identify the woven structure, wrinkles, bubbles, and other features of carbon fiber prepreg. Pourkaramdel Z et al. [19] proposed a fabric defect detection method based on completed local quartet patterns, which extracts local texture features from fabric images to detect fabric surface defects. Li L et al. [20] proposed a method for detecting bubbles and wrinkles in carbon fiber plain-woven prepreg based on image texture feature compression, which further defines defect types from texture features through k-means clustering. Lin G et al. [21] used a Swin Transformer module to replace the main module of the original YOLOv5 algorithm to detect fabric defects. Ho C C et al. [22] used Bayesian optimization to optimize a deep convolutional neural network for fabric defects, greatly improving defect recognition accuracy. Sacco C et al. [23] introduced a ResNet structure into a network model for laser-profiler-based defect detection in prepreg placement, reducing network complexity, alleviating overfitting, and achieving better defect recognition results.

In surface defect detection, besides recognition accuracy, attention must also be paid to small-sample and real-time issues. Current solutions to the small-sample problem mainly either increase the number of samples or reduce the dependence of the algorithm on samples. Methods that increase the number of samples generally use data augmentation and defect generation algorithms to enlarge the dataset [24]. Such methods increase the sample count only to a limited extent and produce non-real samples, so their improvement is limited. Research on reducing the dependence of algorithms on sample size follows three main directions. The first is network pre-training or transfer learning, which is currently widely used: the common features and weights in a pre-trained model help small-sample training achieve better results. This approach has been applied to liquid crystal panel defects, PCB defects, and metal part surface defect detection with good results [24,25,26]. The second is optimization of the network structure, since a well-designed network structure can also greatly reduce the demand for samples [27,28]. The third is unsupervised or semi-supervised models [29,30]. An unsupervised model is trained only on normal samples, so no defective samples are needed, whereas semi-supervised methods address small-sample training by exploiting unlabeled samples. In addition, meta-learning-based methods are another solution to small-sample problems and have been applied in many fields [31,32]. For real-time issues, lightweight network design, such as model pruning and weight quantization, can accelerate model inference and improve detection speed. Based on the YOLOv5 model, many researchers have applied methods such as the Ghost Bottleneck, improved feature fusion modules, improved data augmentation, and edge attention fusion networks to the detection of surface defects on wood, bamboo, and rubber sealing rings, and have achieved good results [33,34,35]. Furthermore, to maintain or even improve detection accuracy while keeping the model lightweight, most of these researchers have introduced attention mechanisms or improved loss functions, which has become the basic approach to lightweight design [36,37].
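Data augmentation enlarges a small dataset by transforming existing images, and the box labels must be transformed consistently with the pixels. The sketch below shows one common case, a horizontal flip, for boxes stored in the normalized YOLO text format (class, x_center, y_center, width, height). It is a hypothetical helper for illustration, not the augmentation pipeline of any cited work, and the class ids in the example are arbitrary.

```python
def hflip_yolo_labels(labels):
    """Mirror normalized YOLO boxes for a horizontally flipped image.

    Each label is (class_id, x_center, y_center, width, height) with
    coordinates in [0, 1]. Only x_center changes; the box size is preserved.
    The image pixels themselves must be flipped separately.
    """
    return [(cls, 1.0 - xc, yc, w, h) for cls, xc, yc, w, h in labels]


labels = [(4, 0.25, 0.40, 0.10, 0.20), (5, 0.90, 0.10, 0.05, 0.05)]
flipped = hflip_yolo_labels(labels)
# flipped[0] == (4, 0.75, 0.40, 0.10, 0.20)
```

Because the new sample is only a mirrored copy of a real one, it illustrates why such augmentation adds diversity only to a limited extent.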

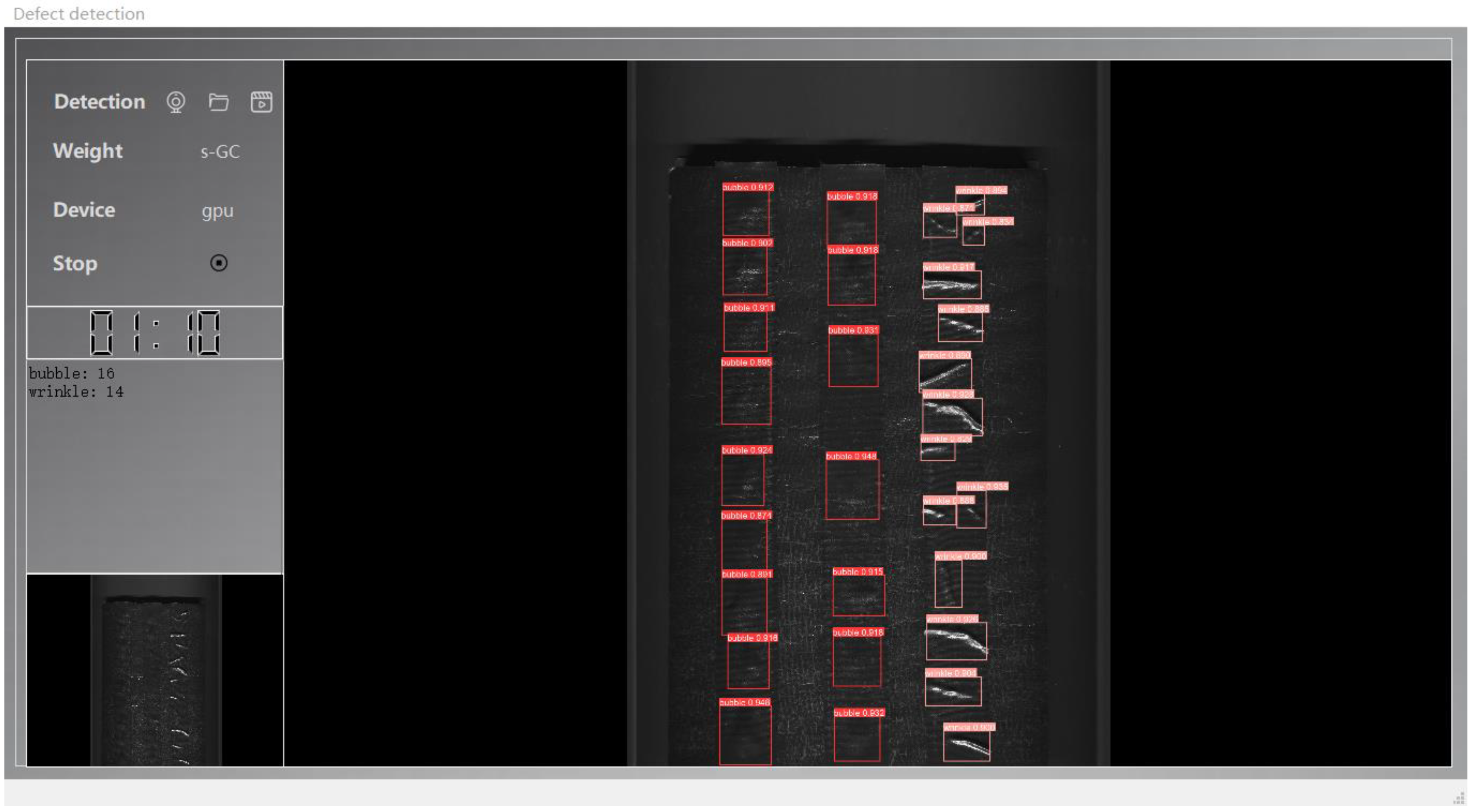

At present, no reports on the detection of surface defects in preforms have been found in the literature, and corresponding research is lacking. To address the highly reflective surface of fiber-prepreg preforms and the need for online, high-precision recognition of various defects during preforming, we propose a method for identifying preform surface defects based on machine vision and an improved YOLOv5 network model. Surface images of the preform during preforming are acquired with the established machine vision platform. In actual preform surface defect detection, the samples are extremely few and imbalanced. To cope with this, we adopt transfer learning and introduce the Ghost Bottleneck module to make the YOLOv5 model lightweight. To improve defect recognition accuracy, the coordinate attention (CA) module is introduced, achieving a good balance between recognition accuracy and recognition speed. Compared with the original model, the improved model recognizes defects faster and more accurately.

3. YOLOv5 Network and Improvement

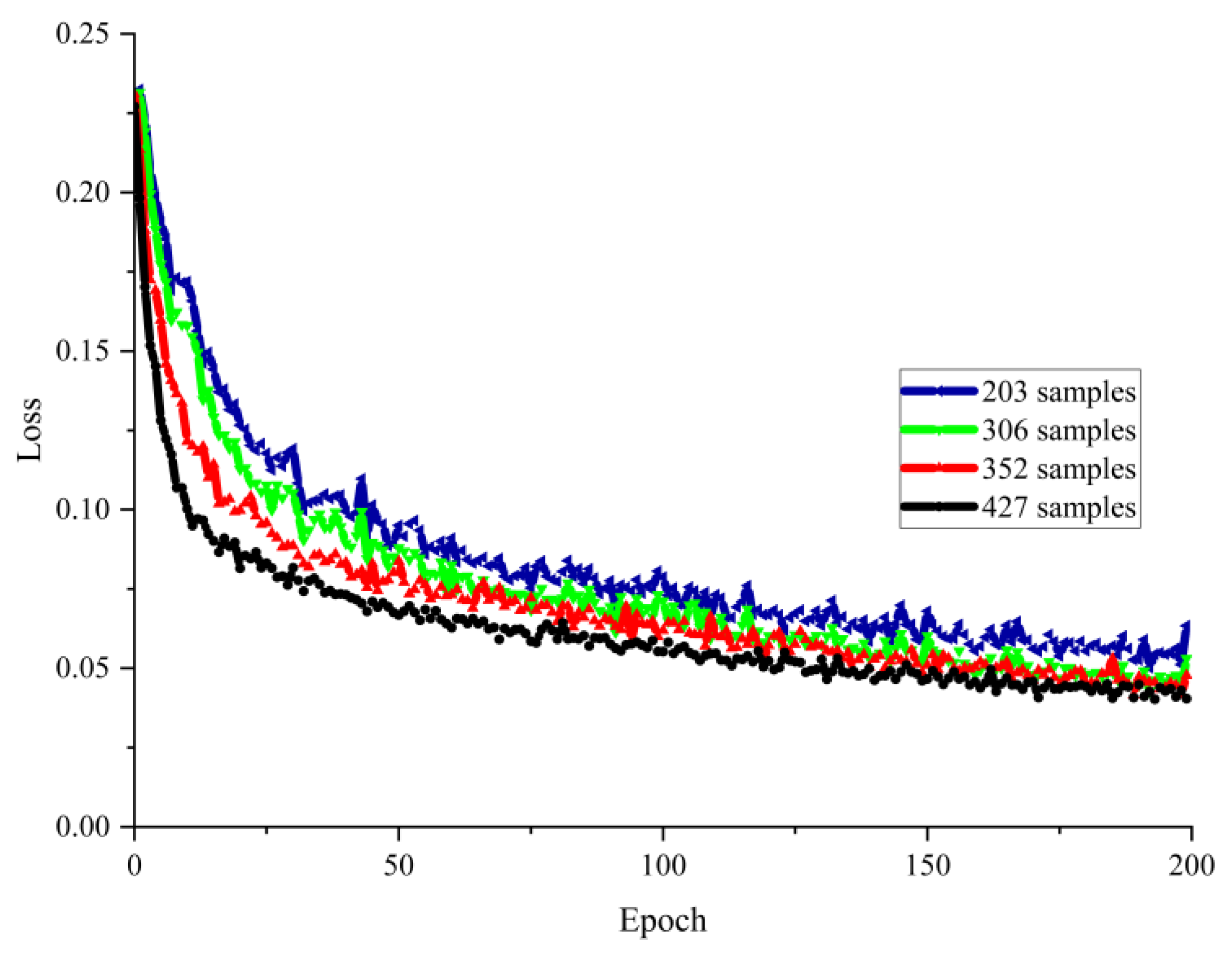

In this article, surface images of the preform are obtained with the machine vision platform and annotated with the online annotation tool Make Sense. After annotation, the labels are saved as .txt files in YOLO format to create the dataset. Following the idea of transfer learning, a pre-trained YOLOv5 model is trained on this dataset to identify defects. A total of 427 images containing defects were collected, with an original resolution of 8192 × 13,000 pixels. Each image may contain multiple defects, giving 2157 annotations in total: 162 overall prepreg dislocations, 168 overall prepreg non-dislocations, 158 stiffener dislocations, 162 normal stiffeners, 855 wrinkles, and 652 bubbles. That is, wrinkle defects account for about 40%, bubble defects for about 30%, stiffener dislocation defects for about 7.5%, and overall prepreg dislocation defects for about 7.5% of the annotations. The numbers of samples therefore differ considerably between defect types. Compared with public datasets, the dataset established in this article has very few samples and exhibits several forms of class imbalance, which poses a great challenge to defect identification.
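The class shares quoted above can be checked directly from the annotation counts. The sketch below also shows how one pixel-space box would be written as a line of a YOLO-format .txt label (class index, then normalized center and size). Only the counts and the image size come from the text; the short class keys, the index order, the orientation of the image (width 8192, height 13,000), and the example box are assumptions for illustration.

```python
# Annotation counts per class, as reported in the text (2157 marks in total).
counts = {
    "prepreg_dislocation": 162,
    "prepreg_no_dislocation": 168,
    "stiffener_dislocation": 158,
    "stiffener_normal": 162,
    "wrinkle": 855,
    "bubble": 652,
}

total = sum(counts.values())
shares = {name: n / total for name, n in counts.items()}


def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    xc = (x_min + x_max) / 2 / img_w
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


# Hypothetical class index (dictionary order) and a hypothetical wrinkle box,
# assuming the image is 8192 pixels wide and 13,000 pixels high.
wrinkle_id = list(counts).index("wrinkle")
line = to_yolo_line(wrinkle_id, (1024, 2600, 3072, 3900), img_w=8192, img_h=13000)
# line == "4 0.250000 0.250000 0.250000 0.100000"
```

The computed shares (about 39.6% wrinkles versus about 7.3% stiffener dislocations) make the imbalance explicit: the largest class has more than five times as many annotations as the smallest.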

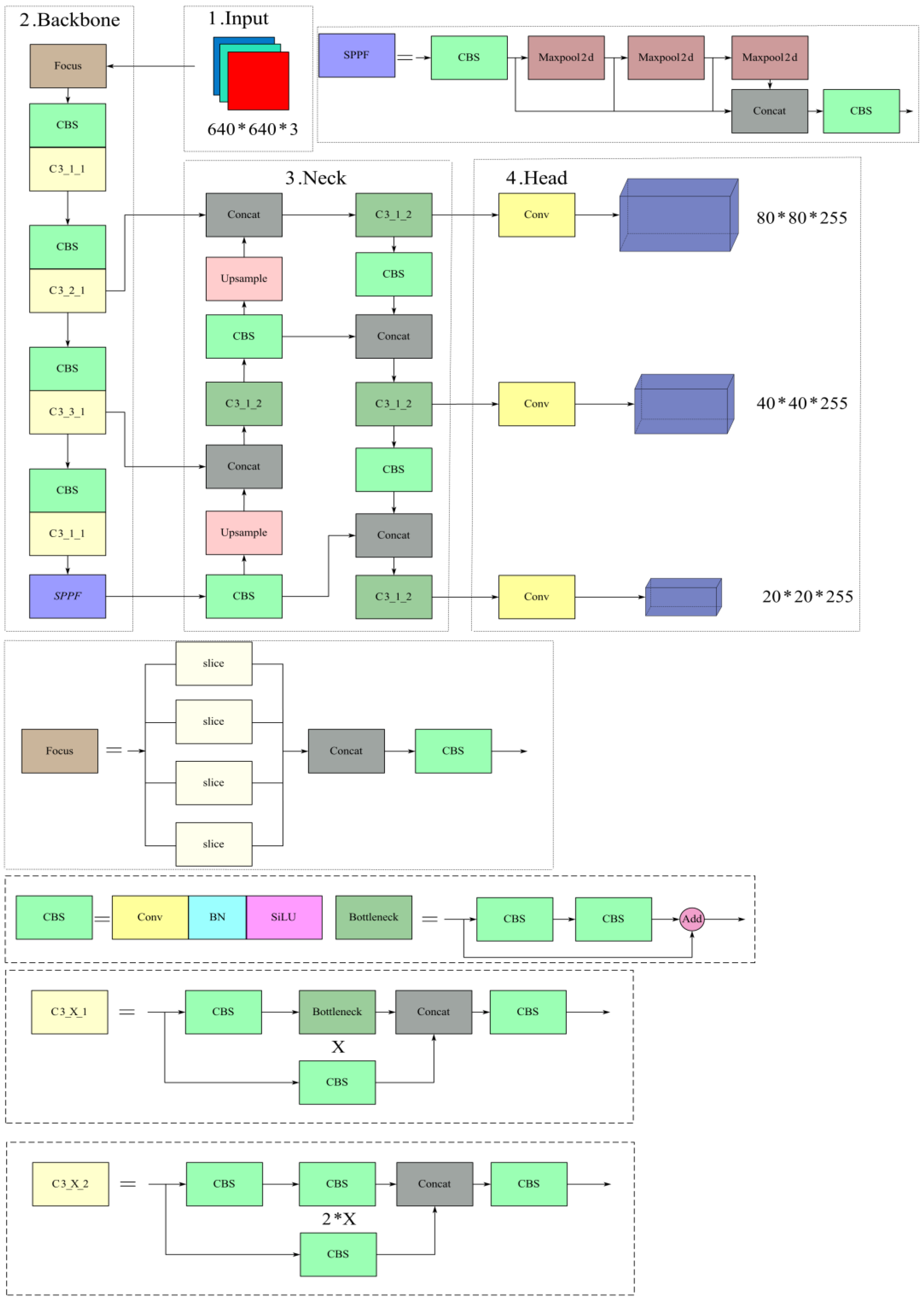

3.1. Network Structure of YOLOv5

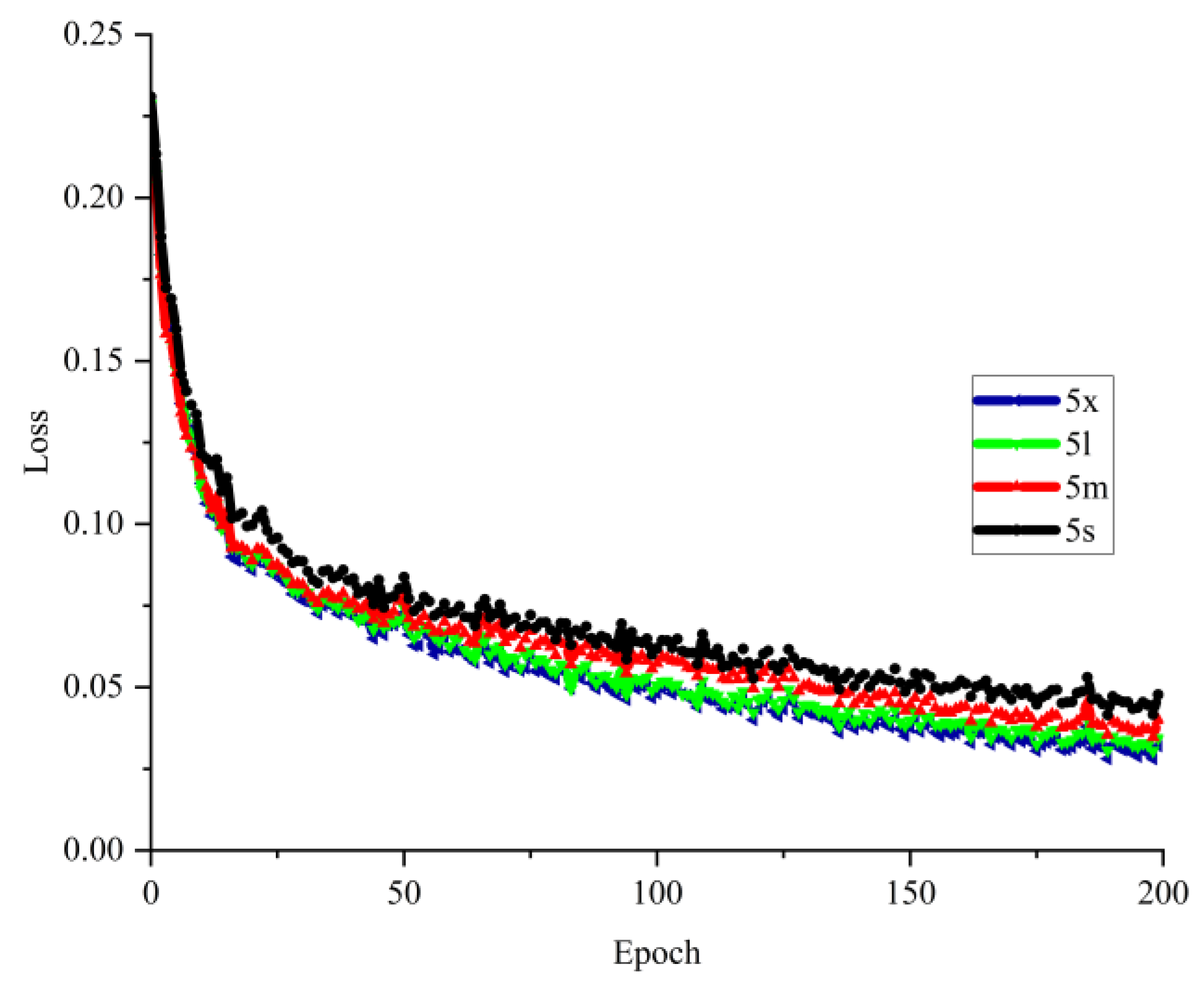

YOLO series algorithms offer fast detection, high mean detection accuracy, and strong transferability, so they can be applied to new fields. YOLOv5 builds on YOLOv4 and is currently widely used in object detection [16,17,21]. YOLOv5 is an object detection architecture with models pre-trained on the COCO dataset, available in several sizes. In the official code, four mature and widely used versions are provided, namely YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x. YOLOv5 uses C3 and SPPF modules in the backbone, FPN + PANet as the neck, and the YOLOv3 head as the detection head. It also uses various data augmentation methods and some modules from YOLOv4 to optimize performance. The network structure of YOLOv5s is shown in Figure 3.
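The four YOLOv5 variants share one architecture and differ mainly in two scaling factors applied to a base configuration: a depth multiple that scales the number of repeated bottlenecks in each C3 block and a width multiple that scales the channel counts. The sketch below is modeled on the rounding logic of the public YOLOv5 code (repeats rounded with a minimum of one, channels rounded up to a multiple of 8). The multiples and the simplified backbone list follow the official model YAML files as we know them and should be checked against the version actually used.

```python
import math

# (depth_multiple, width_multiple) per variant, as in the official YOLOv5 YAMLs.
SCALES = {
    "yolov5s": (0.33, 0.50),
    "yolov5m": (0.67, 0.75),
    "yolov5l": (1.00, 1.00),
    "yolov5x": (1.33, 1.25),
}

# Base backbone before scaling: (module, repeats, output channels).
BASE_BACKBONE = [
    ("Conv", 1, 64), ("Conv", 1, 128), ("C3", 3, 128),
    ("Conv", 1, 256), ("C3", 6, 256), ("Conv", 1, 512),
    ("C3", 9, 512), ("Conv", 1, 1024), ("C3", 3, 1024), ("SPPF", 1, 1024),
]


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def scale_backbone(variant):
    """Apply the depth and width multiples of a variant to the base backbone."""
    gd, gw = SCALES[variant]
    scaled = []
    for name, n, c in BASE_BACKBONE:
        n_scaled = max(round(n * gd), 1) if n > 1 else n
        scaled.append((name, n_scaled, make_divisible(c * gw)))
    return scaled


small = scale_backbone("yolov5s")
# small[2] == ("C3", 1, 64); small[-1] == ("SPPF", 1, 512)
```

Since YOLOv5s has the fewest repeats and channels of the four variants, it is the natural starting point for the lightweight design pursued here.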

3.2. Improved Methods

In practical industrial applications, the surface images of preforms are noisy and the defects closely resemble the background, which makes defect recognition difficult. To make the model focus on the feature information of preform surface defects, we introduce the coordinate attention (CA) module into the YOLOv5 model. Introducing an attention mechanism increases the number of parameters and the model size and may increase the inference time. To meet the requirements of real-time detection and embedded deployment, we therefore also apply lightweight design to the network structure that contains the CA modules by introducing the Ghost Bottleneck module.

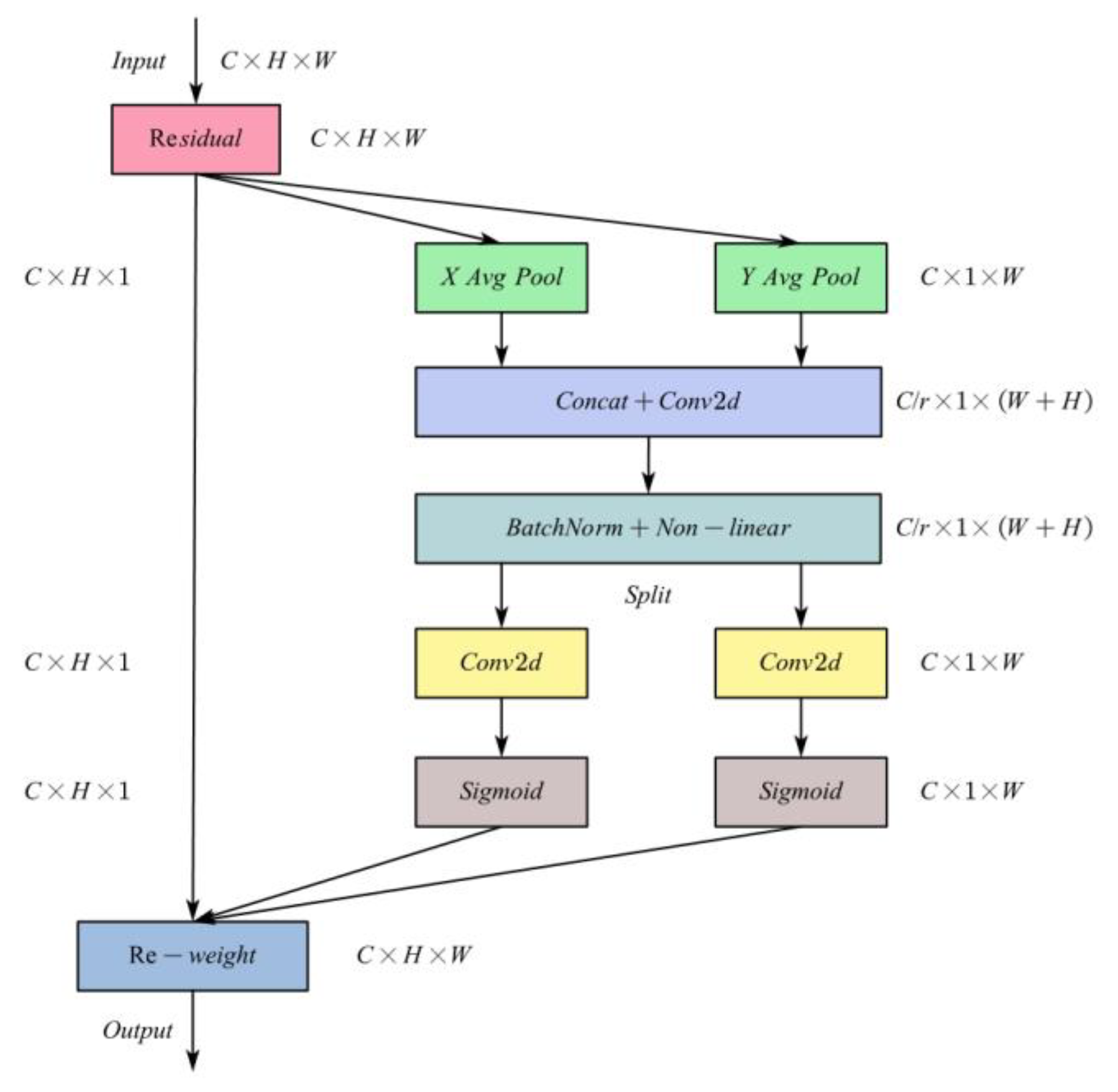

3.2.1. Coordinate Attention Module

Attention mechanisms are effective for general classification tasks and are widely used in various neural networks. Channel attention mechanisms such as the SE module and hybrid attention mechanisms such as the CBAM module have been applied [38,39]. However, SE only encodes inter-channel information and ignores equally important spatial relationships. The CBAM module obtains positional information by reducing the channel dimension of the input tensor and then uses convolution to compute spatial attention. However, it can only capture local relationships in the feature map and cannot model long-range dependencies.
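For contrast with the coordinate attention described next, here is a minimal pure-Python sketch of SE-style channel attention: each channel is squeezed to a single number by global average pooling, passed through two small fully connected layers, and used as a sigmoid gate. Because the whole H × W map collapses into one value per channel, all positional information is lost, which is the shortcoming noted above. The function name is illustrative, weights are passed in explicitly, and biases are omitted as a simplification.

```python
import math


def se_attention(x, w1, w2):
    """SE-style channel attention on x indexed as x[c][i][j] (C x H x W).

    w1: (C/r) x C weights of the reduction layer; w2: C x (C/r) weights of
    the expansion layer (biases omitted).
    """
    # Squeeze: one global average per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> sigmoid.
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * v for w, v in zip(row, hidden))))
             for row in w2]
    # Scale: every position of channel c is multiplied by the same gates[c].
    return [[[v * gates[c] for v in row] for row in ch] for c, ch in enumerate(x)]


x = [[[1.0, 2.0], [3.0, 4.0]], [[-1.0, 0.0], [2.0, 5.0]]]  # C = 2, H = W = 2
y = se_attention(x, w1=[[1.0, -1.0]], w2=[[2.0], [-2.0]])
```

In this example, channel 0 is scaled by sigmoid(2) and channel 1 by sigmoid(-2) at every position, so the gate can reweight channels but cannot emphasize where in the image a defect lies.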

The attention module takes any intermediate feature tensor $X = [x_1, x_2, \ldots, x_C] \in \mathbb{R}^{C \times H \times W}$ as input and outputs a transformed tensor $Y = [y_1, y_2, \ldots, y_C]$ of the same size as $X$, where $C$ is the number of channels and $H$ and $W$ are the height and width of the input feature map, respectively. The CA module structure is shown in Figure 4. Its calculation consists of two parts: coordinate information embedding and coordinate attention generation [40].

Other attention modules use global pooling to encode spatial information globally for each channel, which discards positional information. To overcome this drawback, the CA module uses coordinate information embedding to factorize global pooling into a pair of one-dimensional feature encoding operations. Specifically, given the input $X$, two pooling kernels with spatial extents $(H, 1)$ and $(1, W)$ are used to encode each channel along the horizontal and vertical coordinates, respectively. Therefore, the output of the $c$-th channel at height $h$ and the output of the $c$-th channel at width $w$ can be written as:

$$z_c^h(h) = \frac{1}{W}\sum_{0 \le i < W} x_c(h, i)$$

$$z_c^w(w) = \frac{1}{H}\sum_{0 \le j < H} x_c(j, w)$$

These two transformations aggregate features along the two spatial directions, respectively, and yield a pair of direction-aware feature maps. Each map captures long-range dependencies along one spatial direction while preserving precise positional information along the other, which helps the network locate the objects of interest more accurately.

The second part is coordinate attention generation. First, the two aggregated feature maps are concatenated and sent to a shared 1 × 1 convolutional transformation function $F_1$, giving:

$$f = \delta\left(F_1\left(\left[z^h, z^w\right]\right)\right)$$

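where $\delta$ is a nonlinear activation function, $f \in \mathbb{R}^{C/r \times (H+W)}$ is the intermediate feature map that encodes spatial information in both the horizontal and vertical directions, and $r$ is the channel reduction ratio. Then, $f$ is split along the spatial dimension into two separate tensors, $f^h \in \mathbb{R}^{C/r \times H}$ and $f^w \in \mathbb{R}^{C/r \times W}$. Two further 1 × 1 convolutional transformations, $F_h$ and $F_w$, transform $f^h$ and $f^w$, respectively, into tensors with the same number of channels as the input $X$:

$$g^h = \sigma\left(F_h\left(f^h\right)\right), \qquad g^w = \sigma\left(F_w\left(f^w\right)\right)$$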

where $\sigma$ is the sigmoid function. The outputs $g^h$ and $g^w$ are then expanded and used as attention weights. Finally, the output of the CA module can be written as:

$$y_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j)$$

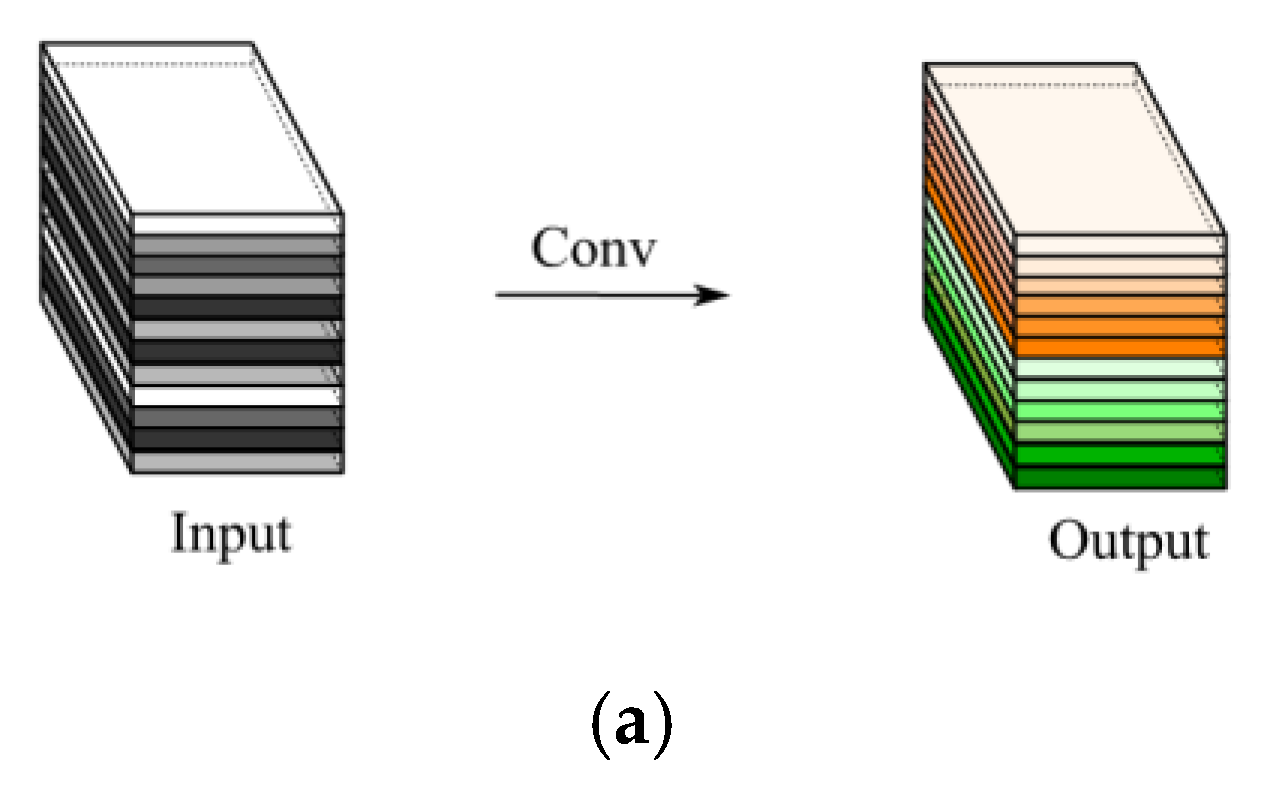

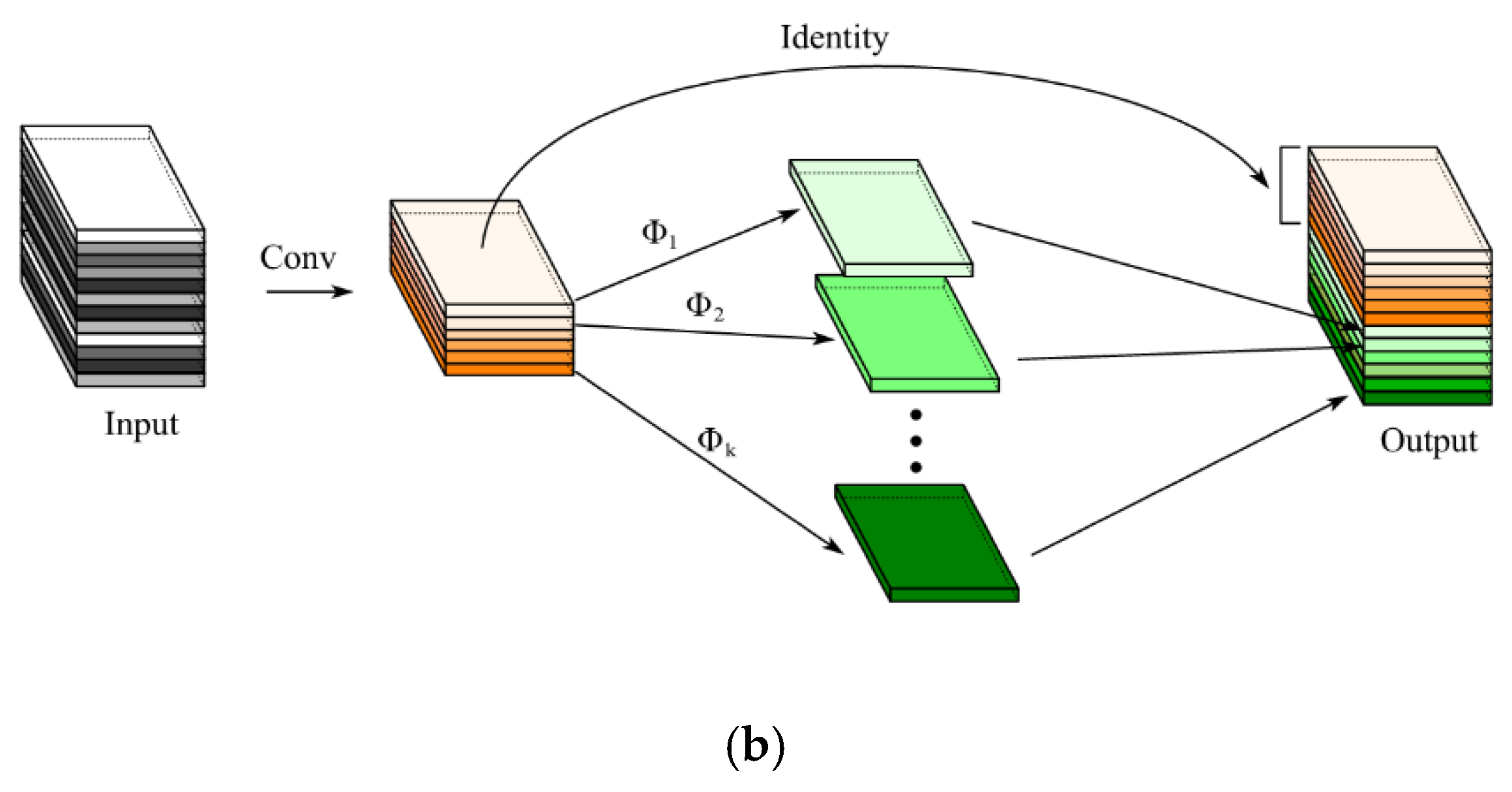
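The two steps of coordinate attention can be traced end to end in a minimal pure-Python sketch on a C × H × W feature map stored as nested lists. Assumptions: ReLU stands in for the nonlinearity δ, batch normalization and biases are omitted, and the weights are random placeholders; names such as `coordinate_attention` and `conv1x1` are illustrative, not the authors' implementation.

```python
import math
import random


def conv1x1(weight, feat):
    """1x1 convolution on a C_in x L map: out[o][l] = sum_c weight[o][c] * feat[c][l]."""
    length = len(feat[0])
    return [[sum(w * feat[c][l] for c, w in enumerate(row)) for l in range(length)]
            for row in weight]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def coordinate_attention(x, w1, w_h, w_w):
    """Coordinate attention on x indexed as x[c][i][j] (C x H x W).

    w1: (C/r) x C shared weights (F1); w_h, w_w: C x (C/r) weights (F_h, F_w).
    """
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # Coordinate information embedding: average along W -> z^h (C x H),
    # average along H -> z^w (C x W).
    z_h = [[sum(x[c][i]) / W for i in range(H)] for c in range(C)]
    z_w = [[sum(x[c][i][j] for i in range(H)) / H for j in range(W)] for c in range(C)]
    # Concatenate along the spatial axis (C x (H + W)), apply F1, then delta (ReLU).
    f = [[max(0.0, v) for v in row]
         for row in conv1x1(w1, [z_h[c] + z_w[c] for c in range(C)])]
    # Split into f^h and f^w, apply F_h / F_w, then the sigmoid.
    g_h = [[sigmoid(v) for v in row] for row in conv1x1(w_h, [row[:H] for row in f])]
    g_w = [[sigmoid(v) for v in row] for row in conv1x1(w_w, [row[H:] for row in f])]
    # Reweight: y_c(i, j) = x_c(i, j) * g^h_c(i) * g^w_c(j).
    return [[[x[c][i][j] * g_h[c][i] * g_w[c][j] for j in range(W)] for i in range(H)]
            for c in range(C)]


# Example with C = 4, r = 2 and a 3 x 5 feature map (random values, illustration only).
random.seed(0)
C, H, W, r = 4, 3, 5, 2
x = [[[random.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
w1 = [[random.uniform(-1, 1) for _ in range(C)] for _ in range(C // r)]
w_h = [[random.uniform(-1, 1) for _ in range(C // r)] for _ in range(C)]
w_w = [[random.uniform(-1, 1) for _ in range(C // r)] for _ in range(C)]
y = coordinate_attention(x, w1, w_h, w_w)
```

Unlike the SE gate, the weight applied at position (i, j) depends on both the row gate and the column gate, so the module can emphasize a defect at a particular location rather than a whole channel.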

3.2.2. Ghost Bottleneck Module

The Ghost lightweight module is the core component of the GhostNet model proposed by Huawei Noah’s Ark Lab in 2020 [41]. GhostNet also applies a channel attention mechanism, and the Ghost module has been widely used [35,38]. In Figure 5, (a) shows an ordinary convolution module and (b) a Ghost module. The Ghost module first obtains the intrinsic features of the input with a 1 × 1 convolution, then generates similar feature maps (ghosts) from these features with a cheap depthwise convolution, and finally concatenates the two sets of feature maps along the channel dimension. The Ghost module has two hyperparameters, s and d: s determines how many feature maps are generated from each intrinsic feature map, so that 1/s of the output channels come from the primary convolution, and d is the kernel size of the depthwise convolution. Their settings affect the accuracy and size of the improved network. Based on the comparative experiments in [41], we set s = 2 and d = 3.
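The saving offered by the Ghost module can be estimated by counting weights. The sketch below compares an ordinary k × k convolution producing n output channels with a Ghost module whose primary convolution produces n/s intrinsic maps and whose cheap d × d depthwise operations produce the remaining (s − 1)·n/s maps, following the GhostNet formulation. Bias and BN parameters are ignored, and the channel counts are arbitrary examples.

```python
def conv_params(c_in, n_out, k):
    """Weights of an ordinary k x k convolution (bias ignored)."""
    return n_out * c_in * k * k


def ghost_params(c_in, n_out, k, s=2, d=3):
    """Weights of a Ghost module: primary conv plus depthwise 'cheap' operations.

    The primary k x k conv yields m = n_out / s intrinsic maps; each of them is
    expanded into (s - 1) ghost maps by d x d depthwise filters.
    """
    if n_out % s:
        raise ValueError("n_out must be divisible by s")
    m = n_out // s
    return m * c_in * k * k + (s - 1) * m * d * d


c_in, n_out = 64, 128
for k in (1, 3):
    plain = conv_params(c_in, n_out, k)
    ghost = ghost_params(c_in, n_out, k, s=2, d=3)
    print(f"k={k}: plain={plain}, ghost={ghost}, ratio={plain / ghost:.3f}")
# k=1: plain=8192, ghost=4672, ratio=1.753
# k=3: plain=73728, ghost=37440, ratio=1.969
```

When k = d, the ratio reduces to s·c/(s + c − 1), which approaches s for large input channel counts c; with a 1 × 1 primary convolution, the cheap 3 × 3 depthwise filters cost relatively more, so the saving is somewhat smaller.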

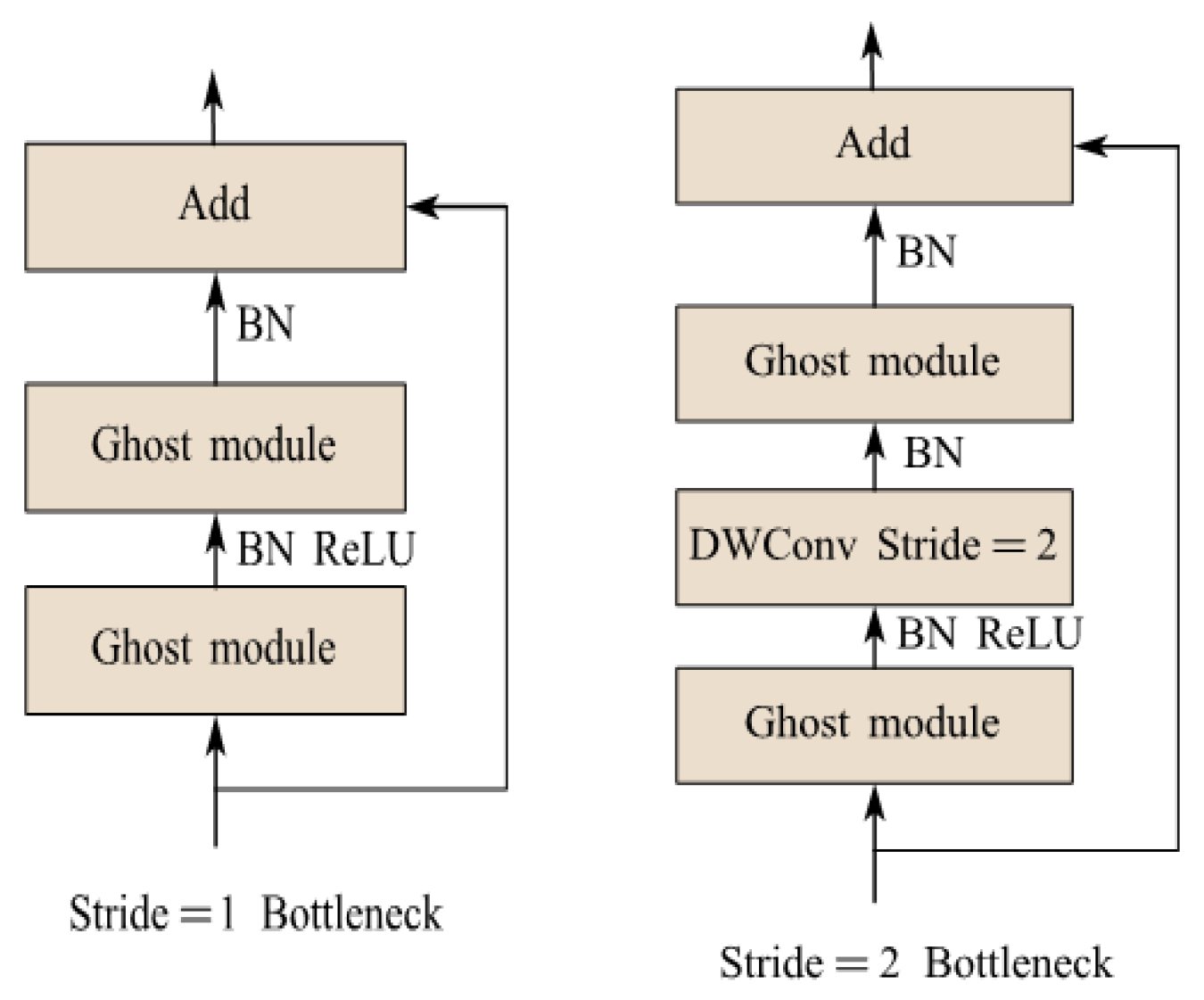

Built on the Ghost module, the Ghost Bottleneck retains the residual structure, and there are two types of Ghost Bottleneck. As shown in Figure 6, the Ghost Bottleneck mainly consists of two stacked Ghost modules. The first Ghost module acts as an expansion layer that increases the number of channels, while the second Ghost module reduces the number of channels to match the shortcut path. BN and ReLU are applied after each layer, except that ReLU is not used after the second Ghost module. If stride = 1, the feature map size remains unchanged. If stride = 2, the shortcut path is implemented by a downsampling layer, and a depthwise convolution with stride 2 is inserted between the two Ghost modules to reduce the spatial dimensions.

Because the stride = 2 structure adds an extra depthwise convolution and a downsampling shortcut, it detects more slowly. Therefore, this article uses the Ghost Bottleneck structure with stride = 1 for the lightweight design, which improves detection speed.
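The layer ordering described above can be summarized as a small plan-building function. The sketch below builds a plain list describing a Ghost Bottleneck for a given stride and tracks the spatial size of the feature map. It encodes only the rules stated in this section (an expansion Ghost module with BN and ReLU, an optional stride-2 depthwise convolution, a reduction Ghost module with BN but no ReLU, and an identity or projection shortcut); the layer labels, the 3 × 3 depthwise kernel with padding 1, and the channel widths in the example are illustrative assumptions, not a framework API.

```python
def ghost_bottleneck_plan(c_in, c_hidden, c_out, stride, size):
    """Describe a Ghost Bottleneck as (layers, shortcut, output_size).

    layers is a list of (layer, in_ch, out_ch, post_ops); size is the input (H, W).
    """
    if stride not in (1, 2):
        raise ValueError("stride must be 1 or 2")
    h, w = size
    layers = [("GhostModule", c_in, c_hidden, "BN+ReLU")]  # expansion
    if stride == 2:
        # 3x3 depthwise conv, stride 2, padding 1: output is ceil(size / 2).
        layers.append(("DepthwiseConv(stride=2)", c_hidden, c_hidden, "BN"))
        h, w = (h + 1) // 2, (w + 1) // 2
    layers.append(("GhostModule", c_hidden, c_out, "BN"))  # reduction, no ReLU
    # The residual path must match the main path in channels and spatial size.
    shortcut = "identity" if stride == 1 and c_in == c_out else "projection"
    return layers, shortcut, (h, w)


plan_s1 = ghost_bottleneck_plan(128, 256, 128, stride=1, size=(80, 80))
plan_s2 = ghost_bottleneck_plan(128, 256, 256, stride=2, size=(80, 80))
```

The stride = 1 plan has one layer fewer and an identity shortcut, which is why it is the cheaper choice for the lightweight design.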

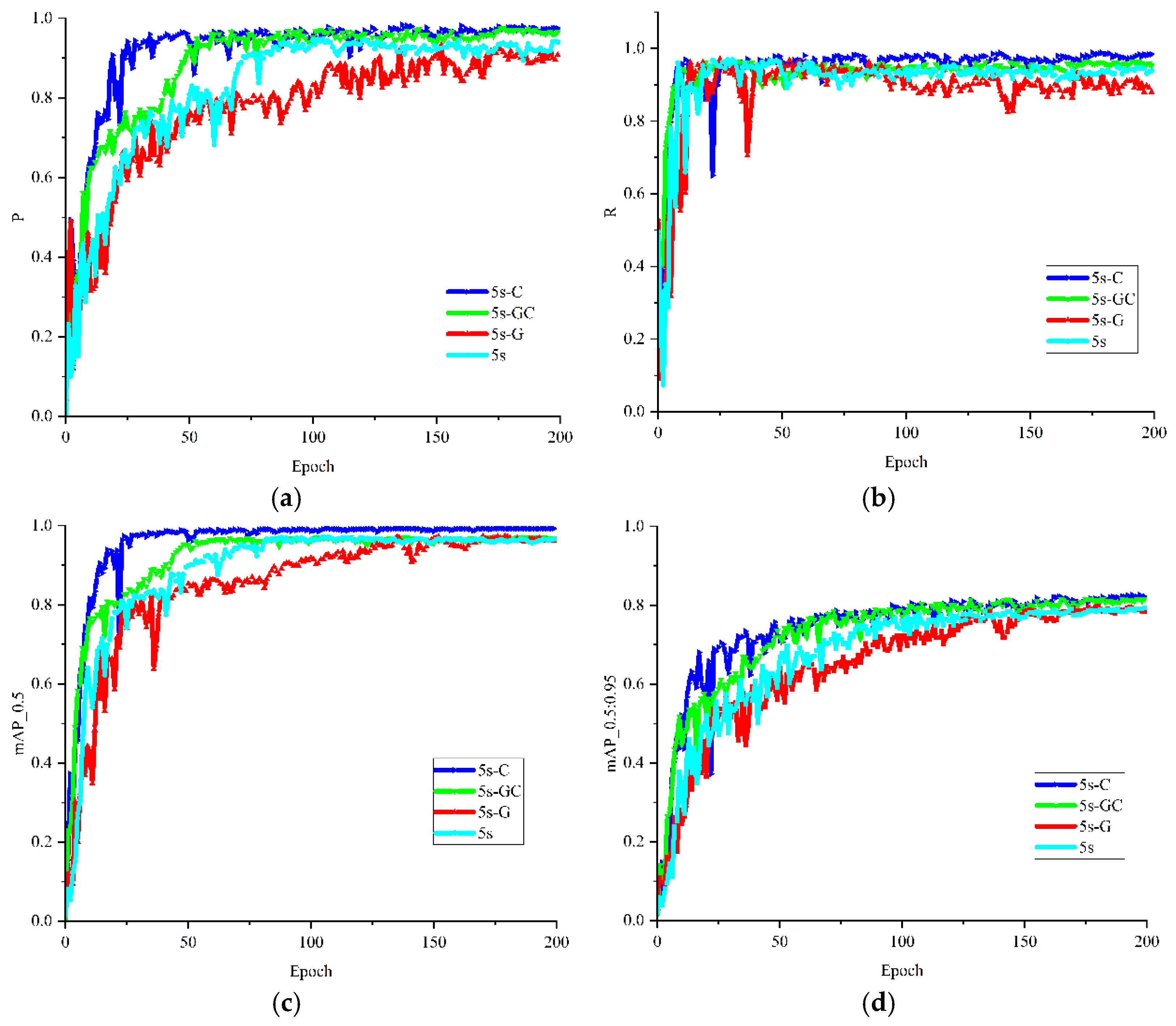

3.2.3. Improved Network Structure of YOLOv5-GC

We carry out lightweight design on the YOLOv5s network structure while also improving detection accuracy. For the lightweight design, considering model size and detection speed, a Ghost Bottleneck module with stride = 1 replaces the Bottleneck module inside the C3_X_1 modules of the backbone, forming a C3Ghost_X module that retains the CSP architecture; in the neck, the Ghost Bottleneck module replaces the C3_X_2 modules to reduce model complexity and make the network lightweight. Because feature extraction in YOLOv5s is mainly completed in the backbone, and an attention module can enhance the ability of the network to obtain initial features, this article adds the CA module to the backbone after the C3Ghost_X module. The CA module extracts more informative features along the channels and improves the detection accuracy of the improved model. The improved network model is named YOLOv5s-GC, and its network structure is shown in Figure 7.
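The module substitutions can be expressed as a simple rewrite of the YOLOv5s layer list. The sketch below uses a simplified, name-only version of the public YOLOv5s (v6.x) layer sequence and applies the three rules stated above: backbone C3 → C3Ghost, a CA module after the C3Ghost module, and neck C3 → GhostBottleneck. Placing CA after every C3Ghost module is our assumption for illustration; Figure 7 is authoritative for the exact placement, and in a real configuration the layer indices referenced by Concat and Detect would also have to be updated.

```python
# Simplified YOLOv5s v6.x layer sequences (module names only, Detect omitted).
BACKBONE = ["Conv", "Conv", "C3", "Conv", "C3", "Conv", "C3", "Conv", "C3", "SPPF"]
NECK = ["Conv", "Upsample", "Concat", "C3",
        "Conv", "Upsample", "Concat", "C3",
        "Conv", "Concat", "C3",
        "Conv", "Concat", "C3"]


def to_yolov5s_gc(backbone, neck):
    """Apply the YOLOv5s-GC substitutions to lists of module names."""
    new_backbone = []
    for name in backbone:
        if name == "C3":
            # C3 built from stride-1 Ghost Bottlenecks (CSP kept), then CA (assumed placement).
            new_backbone += ["C3Ghost", "CA"]
        else:
            new_backbone.append(name)
    # Neck C3 blocks are replaced by Ghost Bottleneck modules.
    new_neck = ["GhostBottleneck" if name == "C3" else name for name in neck]
    return new_backbone, new_neck


gc_backbone, gc_neck = to_yolov5s_gc(BACKBONE, NECK)
```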

3.2.4. Evaluation Indicators

Many indicators are commonly used to measure algorithm performance in object detection. To facilitate comparison, this article uses precision, recall, mean average precision (mAP), and inference time to measure prediction performance.

Precision evaluates how reliably a classifier identifies positive examples, that is, the proportion of predicted positives that are actually positive. The formula is as follows:

$$\text{Precision} = \frac{TP}{TP + FP}$$

where TP (true positive) is the number of positive samples correctly predicted as positive, and FP (false positive) is the number of negative samples incorrectly predicted as positive.

Recall evaluates the ability of the model to find all positive samples, that is, the proportion of actual positive samples that the model detects. Its calculation formula is:

$$\text{Recall} = \frac{TP}{TP + FN}$$

where FN (false negative) is the number of positive samples incorrectly predicted as negative.
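The two formulas translate directly into code. In the sketch below, returning 0.0 when a denominator is zero is our own convention (libraries differ on this edge case), and the counts in the example are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), Recall = TP / (TP + FN); 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical counts: 40 correct detections, 10 false alarms, 20 missed defects.
p, r = precision_recall(tp=40, fp=10, fn=20)
# p == 0.8, r is about 0.667
```

The example shows why both indicators are needed: this detector rarely raises false alarms (high precision) yet still misses one third of the defects (lower recall).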

AP is an important performance indicator in object detection. Precision and recall constrain each other, and as single-point values neither can fully evaluate the overall performance of a model. AP therefore summarizes the trade-off between precision and recall to evaluate the model comprehensively. Plotting precision on the vertical axis against recall on the horizontal axis gives the P–R curve, and the area under this curve is the AP value. The expression is:

$$\text{AP} = \int_{0}^{1} p(r)\,dr \approx \frac{1}{N_{\text{pos}}}\sum_{k=1}^{n} P(k)\,\mathrm{rel}(k)$$

where $p(r)$ is precision as a function of recall $r$, $P(k)$ is the precision of the top $k$ prediction results ranked by confidence, $\mathrm{rel}(k)$ is a relevance function equal to 1 if the $k$-th prediction is a true positive and 0 otherwise, $k$ ranges from 1 to $n$, $n$ is the total number of prediction results, and $N_{\text{pos}}$ is the number of ground-truth positive samples.
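The discrete form of AP can be computed from a list of ranked predictions, as in the minimal sketch below. It implements the non-interpolated sum over true positives; the function name and example are illustrative. The official YOLOv5 evaluation code instead interpolates a monotone precision envelope at 101 recall points (COCO style), so its values can differ slightly.

```python
def average_precision(rel, n_pos):
    """Non-interpolated AP from predictions ranked by descending confidence.

    rel[k - 1] is 1 if the k-th prediction is a true positive, else 0;
    n_pos is the number of ground-truth objects of this class.
    """
    if n_pos == 0:
        return 0.0
    hits = 0
    total = 0.0
    for k, is_tp in enumerate(rel, start=1):
        if is_tp:
            hits += 1
            total += hits / k  # P(k), counted only where rel(k) = 1
    return total / n_pos


# Five ranked detections for a class with 4 ground-truth defects (one never found).
ap = average_precision([1, 0, 1, 1, 0], n_pos=4)
# ap == (1 + 2/3 + 3/4) / 4, about 0.604
```

Dividing by the number of ground-truth objects rather than by the number of hits is what penalizes the missed defect: a detector that found all four at the top of its ranking would score 1.0.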

mAP is used to measure the accuracy of the whole system. In a multi-class task, an AP value is calculated for each category, and averaging these values gives the mAP of the entire dataset. The mAP calculated at an intersection-over-union (IoU) threshold of 0.5, known as mAP@0.5, is commonly used to evaluate model performance. mAP@0.5:0.95 is obtained by calculating the mAP at each IoU threshold from 0.5 to 0.95 in steps of 0.05 and averaging all of these values, which evaluates the model comprehensively under different IoU thresholds. The calculation formula is:

$$\text{mAP} = \frac{1}{N}\sum_{i=1}^{N} \text{AP}_i$$

where $N$ is the number of categories and $\text{AP}_i$ is the average precision of the $i$-th category; that is, the AP values of all categories are summed and divided by the number of categories to obtain the mAP.
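The IoU threshold decides whether a detection counts as a true positive, and the mAP variants differ only in which thresholds they use. The sketch below shows the IoU of two axis-aligned boxes, the mAP average over categories, and the averaging over the ten thresholds of mAP@0.5:0.95. It is a simplified illustration: matching predictions to ground truth (which produces the per-class AP values at each threshold) is omitted, and the example numbers are hypothetical.

```python
def iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mean_ap(ap_per_class):
    """mAP = (1 / N) * sum of per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# IoU thresholds for mAP@0.5:0.95: 0.50, 0.55, ..., 0.95 (10 values).
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(ap_table):
    """ap_table[t] holds the per-class AP values computed at IOU_THRESHOLDS[t]."""
    return sum(mean_ap(row) for row in ap_table) / len(ap_table)


# Two boxes overlapping by half their width: IoU = 50 / 150 = 1/3, which is
# below 0.5, so this detection would not count as a true positive for mAP@0.5.
overlap = iou((0, 0, 10, 10), (5, 0, 15, 10))
```

Because mAP@0.5:0.95 also includes strict thresholds such as 0.9 and 0.95, it rewards precise box localization much more than mAP@0.5 does.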

To evaluate the performance of the model more comprehensively, inference time is also used. It is the time the algorithm takes from receiving an input image to recognizing the targets and outputting the results, and it is an important indicator of the practicality and real-time performance of YOLO-based defect recognition.
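Inference time is easy to mis-measure, so a minimal timing harness is sketched below. It assumes `infer` is any callable that runs the detector on one image; `dummy_infer` is a hypothetical stand-in. Warm-up calls exclude one-off initialization costs, and the median is reported because it is less sensitive to occasional system hiccups than the mean. For GPU models, asynchronous execution means the device should be synchronized (for example with `torch.cuda.synchronize()` in PyTorch) before each clock reading, and whether pre-processing and NMS are included should be stated explicitly.

```python
import statistics
import time


def measure_inference_ms(infer, image, warmup=5, runs=50):
    """Median wall-clock time in milliseconds of infer(image) after warm-up calls."""
    for _ in range(warmup):
        infer(image)  # warm-up: caches, lazy initialization, device kernels
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(image)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


# Hypothetical stand-in for a detector; replace with the real model call.
def dummy_infer(image):
    return sum(sum(row) for row in image)


ms = measure_inference_ms(dummy_infer, [[1] * 640 for _ in range(64)])
```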