Customer Sentiment Recognition in Conversation Based on Contextual Semantic and Affective Interaction Information

Abstract

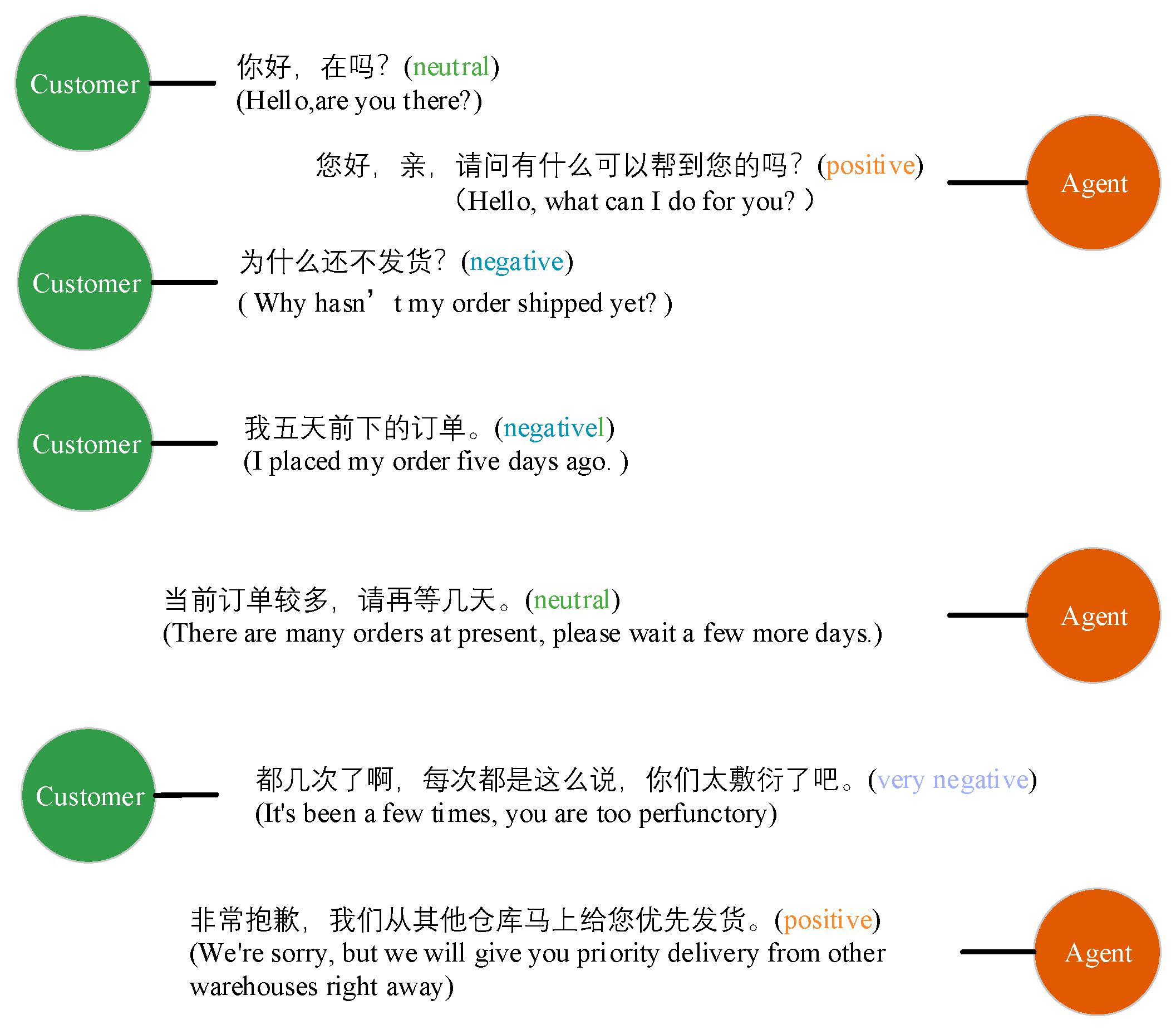

1. Introduction

2. Related Work

3. Methods

3.1. Conversation Text Encoder

3.2. Context Semantic Encoder

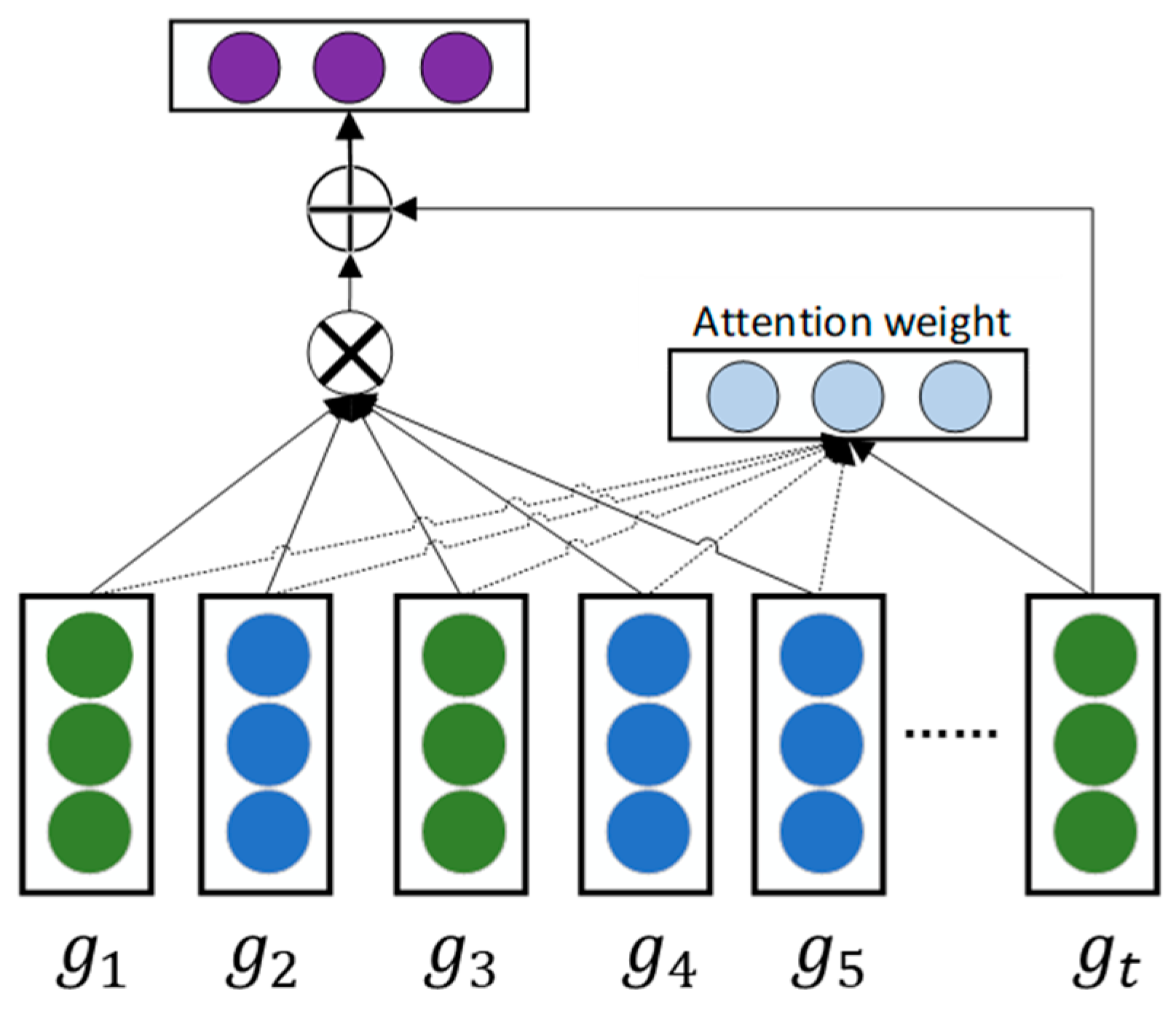

3.3. Affective Interaction Encoder

3.4. Sentiment Classifier

4. Experiments

4.1. Experimental Setup

4.1.1. Datasets

4.1.2. Parameter Settings

4.1.3. Evaluation Criteria

4.2. Baseline Methods

- (1) BiGRU [25]: This model ignores the contextual information in the conversation, treats each utterance as an independent instance, and uses a bidirectional GRU to encode the utterance and classify its sentiment.

- (2) BERT [26]: This model constructs the utterance representations with BERT and feeds them to a two-layer perceptron with a final softmax layer for sentiment classification.

- (3) ERNIE [22]: This model treats each utterance in the dialogue as an independent instance and uses ERNIE to encode the utterance and classify its sentiment.

- (4) c-LSTM [9]: This model uses a context-sensitive LSTM for sentiment classification.

- (5) BiGRU-Att [27]: This model uses a BiGRU network to encode the context information for sentiment classification.

- (6) CMN [13]: This model uses two GRUs to extract contextual features from the conversation histories of the two speakers and passes the current utterance as input to two separate memory networks, obtaining one utterance representation per speaker for sentiment classification.

- (7) DialogueRNN [15]: This recurrent neural network (RNN)-based model uses a BiGRU to model the speaker states, global states, and sentiment states.

- (8) DialogueGCN [17]: This graph-neural-network-based model represents the conversation as a graph whose nodes are utterances and whose edge types are determined by speaker information. The graph captures the temporal and speaker dependencies in multi-party dialogue and is then used for sentiment classification.
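
The graph construction described for DialogueGCN can be illustrated with a minimal sketch. It assumes that each utterance is linked to its neighbours within a fixed window and that an edge type is defined by the ordered speaker pair plus the temporal direction. The function name `build_edges`, the window parameters, and the speaker labels `"customer"` and `"agent"` are hypothetical and chosen only for illustration. Treating a self-loop as a "future" edge is likewise an assumption of this sketch, not a detail taken from the text.

```python
from itertools import product


def build_edges(speakers, past_window, future_window):
    """Return (edges, relation_ids) for a windowed conversation graph.

    speakers[i] is the speaker label of utterance i (one node per utterance).
    Each edge is a (source, target, relation_id) triple. The relation id
    encodes the ordered speaker pair and the temporal direction of the edge.
    """
    labels = sorted(set(speakers))
    # One relation per (source speaker, target speaker, direction) triple.
    relation_ids = {
        key: idx
        for idx, key in enumerate(
            product(labels, labels, ("past", "future")), start=1
        )
    }
    edges = []
    n = len(speakers)
    for i in range(n):
        first = max(0, i - past_window)
        last = min(n - 1, i + future_window)
        for j in range(first, last + 1):
            # Assumption: a self-loop (j == i) is counted as "future".
            direction = "past" if j < i else "future"
            key = (speakers[i], speakers[j], direction)
            edges.append((i, j, relation_ids[key]))
    return edges, relation_ids


# Hypothetical four-turn dialogue between two speakers.
edges, relation_ids = build_edges(
    ["customer", "agent", "customer", "agent"], past_window=1, future_window=1
)
print(len(relation_ids), "relation types,", len(edges), "edges")
```

With two speakers, this scheme yields 2 × 2 × 2 = 8 relation types. Widening the window adds edges but leaves the number of relation types unchanged.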

4.3. Results and Analysis

4.3.1. Effectiveness Analysis of Contextual Semantic Information and Affective Interaction Information

4.3.2. Affective Interaction-Directed Graph Window Size

4.3.3. The Impact Analysis of Feature Fusion Mode

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chung, H.; Iorga, M.; Voas, J.; Lee, S. Alexa, Can I Trust You? Computer 2017, 50, 100–104.

2. Zhu, X. Case II (Part A): JIMI’s Growth Path: Artificial Intelligence Has Redefined the Customer Service of JD.Com. In Emerging Champions in the Digital Economy; Springer: Singapore, 2019.

3. Li, F.L.; Qiu, M.; Chen, H.; Wang, X.; Gao, X.; Huang, J.; Ren, J.; Zhao, Z.; Zhao, W.; Wang, L.; et al. AliMe Assist: An Intelligent Assistant for Creating an Innovative E-commerce Experience. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; pp. 2495–2498.

4. Sun, X.; Zhang, C.; Li, L. Dynamic emotion modelling and anomaly detection in conversation based on emotional transition tensor. Inf. Fusion 2019, 46, 11–22.

5. Majid, R.; Santoso, H.A. Conversations Sentiment and Intent Categorization Using Context RNN for Emotion Recognition. In Proceedings of the 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 19–20 March 2021; pp. 46–50.

6. Wei, J.; Feng, S.; Wang, D.; Zhang, Y.; Li, X. Attentional Neural Network for Emotion Detection in Conversations with Speaker Influence Awareness. In Natural Language Processing and Chinese Computing: 8th CCF International Conference, NLPCC 2019, Dunhuang, China, 9–14 October 2019; Springer International Publishing: Berlin/Heidelberg, Germany, 2019.

7. Huang, X.; Ren, M.; Han, Q.; Shi, X.; Nie, J.; Nie, W.; Liu, A.-A. Emotion Detection for Conversations Based on Reinforcement Learning Framework. IEEE Multimed. 2021, 28, 76–85.

8. Hu, D.; Wei, L.; Huai, X. DialogueCRN: Contextual Reasoning Networks for Emotion Recognition in Conversations. arXiv 2021, arXiv:2106.01978.

9. Poria, S.; Cambria, E.; Hazarika, D.; Majumder, N.; Zadeh, A.; Morency, L.-P. Context-Dependent Sentiment Analysis in User-Generated Videos. In Proceedings of the 55th Annual Meeting of the Association-for-Computational-Linguistics (ACL), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 873–883.

10. Zhong, P.; Wang, D.; Miao, C. Knowledge-Enriched Transformer for Emotion Detection in Textual Conversations. In Proceedings of the Conference on Empirical Methods in Natural Language Processing/9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 165–176.

11. Tang, D.; Qin, B.; Feng, X.; Liu, T. Effective LSTMs for Target-Dependent Sentiment Classification. In Proceedings of the COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, Osaka, Japan, 11–16 December 2016; pp. 3298–3307.

12. Basiri, M.E.; Nemati, S.; Abdar, M.; Cambria, E.; Acharya, U.R. ABCDM: An Attention-based Bidirectional CNN-RNN Deep Model for sentiment analysis. Future Gener. Comput. Syst. Int. J. Escience 2021, 115, 279–294.

13. Hazarika, D.; Poria, S.; Zadeh, A.; Cambria, E.; Morency, L.P.; Zimmermann, R. Conversational Memory Network for Emotion Recognition in Dyadic Dialogue Videos. Proc. Conf. 2018, 2018, 2122–2132.

14. Hazarika, D.; Poria, S.; Mihalcea, R.; Cambria, E.; Zimmermann, R. ICON: Interactive Conversational Memory Network for Multimodal Emotion Detection. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Brussels, Belgium, 31 October–4 November 2018; pp. 2594–2604.

15. Majumder, N.; Poria, S.; Hazarika, D.; Mihalcea, R.; Gelbukh, A.; Cambria, E. DialogueRNN: An Attentive RNN for Emotion Detection in Conversations. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence/31st Innovative Applications of Artificial Intelligence Conference/9th AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 17 July 2019; pp. 6818–6825.

16. Zhang, D.; Wu, L.; Sun, C.; Li, S.; Zhu, Q.; Zhou, G. Modeling both Context- and Speaker-Sensitive Dependence for Emotion Detection in Multi-speaker Conversations. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 5415–5421.

17. Ghosal, D.; Majumder, N.; Poria, S.; Chhaya, N.; Gelbukh, A. DialogueGCN: A Graph Convolutional Neural Network for Emotion Recognition in Conversation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing/9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 30 August 2019; pp. 154–164.

18. Wang, Y.; Zhang, J.; Ma, J.; Wang, S.; Xiao, J. Contextualized Emotion Recognition in Conversation as Sequence Tagging. In Proceedings of the 21st Annual Meeting of the Special-Interest-Group-on-Discourse-and-Dialogue (SIGDIAL), Electr Network, 1st Virtual Meeting, Virtual, 1–3 July 2020; pp. 186–195.

19. Gao, Q.; Cao, B.; Guan, X.; Gu, T.; Bao, X.; Wu, J.; Liu, B.; Cao, J. Emotion recognition in conversations with emotion shift detection based on multi-task learning. Knowl.-Based Syst. 2022, 248, 108861.

20. Zhu, L.; Pergola, G.; Gui, L.; Zhou, D.; He, Y. Topic-Driven and Knowledge-Aware Transformer for Dialogue Emotion Detection. In Proceedings of the Joint Conference of 59th Annual Meeting of the Association-for-Computational-Linguistics (ACL)/11th International Joint Conference on Natural Language Processing (IJCNLP)/6th Workshop on Representation Learning for NLP (RepL4NLP), Electr Network, Online, 2 June 2021; pp. 1571–1582.

21. Liu, R.; Ye, X.; Yue, Z. Review of pre-trained models for natural language processing tasks. J. Comput. Appl. 2021, 41, 1236–1246.

22. Sun, Y.; Wang, S.; Feng, S.; Ding, S.; Pang, C.; Shang, J.; Liu, J.; Chen, X.; Zhao, Y.; Lu, Y.; et al. Ernie 3.0: Large-scale knowledge enhanced pre-training for language understanding and generation. arXiv 2021, arXiv:2107.02137.

23. Khiabani, P.J.; Basiri, M.E.; Rastegari, H. An improved evidence-based aggregation method for sentiment analysis. J. Inf. Sci. 2020, 46, 340–360.

24. Liu, B. Sentiment Analysis: Mining Opinions, Sentiments, and Emotions; Cambridge University Press: Singapore, 2020.

25. Shen, J.; Liao, X.; Tao, Z. Sentence-Level Sentiment Analysis via BERT and BiGRU. In Proceedings of the 2nd International Conference on Image and Video Processing, and Artificial Intelligence (IPVAI), Shanghai, China, 23–25 August 2019; p. 11321.

26. Yenduri, G.; Rajakumar, B.R.; Praghash, K.; Binu, D. Heuristic-Assisted BERT for Twitter Sentiment Analysis. Int. J. Comput. Intell. Appl. 2021, 20, 2150016.

27. Zhou, L.; Bian, X. Improved text sentiment classification method based on BiGRU-Attention. J. Phys. Conf. Ser. 2019, 1345, 032097.

| Number | Relationship between Speakers |
|---|---|
| 1 | |
| 2 | |
| 3 | |
| 4 | |
| 5 | |
| 6 | |
| 7 | |
| 8 | |

| Sentiment | Train | Dev. | Test | Total (Proportion) |
|---|---|---|---|---|
| Very negative | 1038 | 163 | 282 | 1483 (2.10%) |
| Negative | 3466 | 613 | 1019 | 5098 (7.22%) |
| Neutral | 31,625 | 3953 | 8345 | 43,923 (62.20%) |
| Positive | 12,794 | 2482 | 3819 | 19,095 (27.04%) |
| Very positive | 722 | 102 | 193 | 1017 (1.44%) |
| Total utterances | 49,645 | 7313 | 13,658 | 70,616 |
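
The proportions in the dataset table can be re-derived from the per-split counts. The sketch below is a minimal check, not part of the original experiments. The names `COUNTS` and `class_proportions` are chosen here for illustration, and the counts are copied from the table.

```python
# Utterance counts per sentiment class as (train, dev, test), from the table.
COUNTS = {
    "very negative": (1038, 163, 282),
    "negative": (3466, 613, 1019),
    "neutral": (31625, 3953, 8345),
    "positive": (12794, 2482, 3819),
    "very positive": (722, 102, 193),
}


def class_proportions(counts):
    """Return ({label: percentage of all utterances}, total utterance count)."""
    totals = {label: sum(splits) for label, splits in counts.items()}
    grand_total = sum(totals.values())
    shares = {
        label: round(100 * total / grand_total, 2)
        for label, total in totals.items()
    }
    return shares, grand_total


shares, grand_total = class_proportions(COUNTS)
for label, share in shares.items():
    print(f"{label}: {share:.2f}%")
print("total utterances:", grand_total)
```

The two extreme classes together account for under 4% of the utterances, while the neutral class alone covers more than 60%. This imbalance is one reason to report per-class F1 and Macro-F1 rather than accuracy alone.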

| Model | Very Negative F1 (%) | Negative F1 (%) | Neutral F1 (%) | Positive F1 (%) | Very Positive F1 (%) | Macro-F1 (%) |
|---|---|---|---|---|---|---|
| BiGRU | 50.5 | 55.1 | 78.1 | 72.3 | 63.3 | 63.86 |
| BERT | 52.1 | 56.6 | 82.2 | 74.2 | 64.6 | 65.94 |
| ERNIE | 52.3 | 56.6 | 82.5 | 74.3 | 64.8 | 66.10 |
| c-LSTM | 55.2 | 59.7 | 82.2 | 76.1 | 67.2 | 68.08 |
| BiGRU-Att | 55.7 | 60.1 | 82.7 | 76.5 | 67.8 | 68.56 |
| CMN | 56.9 | 60.9 | 82.8 | 76.7 | 69.1 | 69.28 |
| DialogueRNN | 58.5 | 61.9 | 83.3 | 77.5 | 70.4 | 70.32 |
| DialogueGCN | 59.2 | 62.5 | 83.3 | 77.6 | 71.1 | 70.74 |
| Our model | 60.3 | 64.6 | 83.2 | 78.9 | 72.5 | 71.90 |
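
The Macro-F1 column in the table above is consistent with the unweighted mean of the five per-class F1 values. The sketch below reproduces it for three rows under that assumption; the text of the Evaluation Criteria section is not reproduced here, so the definition is inferred from the numbers. The names `PER_CLASS_F1` and `macro_f1` are illustrative.

```python
from statistics import mean

# Per-class F1 values (%) copied from the results table, in the order:
# very negative, negative, neutral, positive, very positive.
PER_CLASS_F1 = {
    "BiGRU": [50.5, 55.1, 78.1, 72.3, 63.3],
    "DialogueGCN": [59.2, 62.5, 83.3, 77.6, 71.1],
    "Our model": [60.3, 64.6, 83.2, 78.9, 72.5],
}


def macro_f1(per_class_f1):
    """Unweighted mean of the per-class F1 scores, rounded to two decimals."""
    return round(mean(per_class_f1), 2)


for model, scores in PER_CLASS_F1.items():
    print(f"{model}: {macro_f1(scores):.2f}")
```

Because every class carries equal weight, a gain on the small "very negative" class moves Macro-F1 as much as the same gain on the much larger "neutral" class.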

| Model | Conversation Text Encoder | Context Semantic Encoder | Affective Interaction Encoder |
|---|---|---|---|
| Our model (a) | √ | × | × |
| Our model (b) | √ | × | √ |
| Our model (c) | √ | √ | × |
| Our model | √ | √ | √ |

| Model | Very Negative F1 (%) | Negative F1 (%) | Neutral F1 (%) | Positive F1 (%) | Very Positive F1 (%) | Macro-F1 (%) |
|---|---|---|---|---|---|---|
| Our model (a) | 52.3 | 56.6 | 82.5 | 74.3 | 64.8 | 66.10 |
| Our model (b) | 56.5 | 60.1 | 81.2 | 75.8 | 68.4 | 68.40 |
| Our model (c) | 56.8 | 61.4 | 81.9 | 76.6 | 69.7 | 69.28 |
| Our model | 60.3 | 64.6 | 83.5 | 78.9 | 72.5 | 71.96 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, Z.; Liu, H.; Zhu, J.; Min, J. Customer Sentiment Recognition in Conversation Based on Contextual Semantic and Affective Interaction Information. Appl. Sci. 2023, 13, 7807. https://doi.org/10.3390/app13137807
