Abstract

With the rise in piano teaching in recent years, many people have joined the ranks of piano learners. However, the high cost of traditional manual instruction and the exclusive one-on-one teaching model have made learning the piano an extravagant endeavor. Most existing approaches, based on the audio modality, aim to evaluate piano players’ skills. Unfortunately, these methods overlook the information contained in videos, resulting in a one-sided and simplistic evaluation of the piano player’s skills. More recently, multimodal-based methods have been proposed to assess the skill level of piano players by using both video and audio information. However, existing multimodal approaches use shallow networks to extract video and audio features, which limits their ability to extract complex spatio-temporal and time-frequency characteristics from piano performances. Furthermore, the fingering and pitch-rhythm information of the piano performance is embedded within the spatio-temporal and time-frequency features, respectively. Therefore, we propose a ResNet-based audio-visual fusion model that is able to extract both the visual features of the player’s finger movement track and the auditory features, including pitch and rhythm. The joint features are then obtained through the feature fusion technique by capturing the correlation and complementary information between video and audio, enabling a comprehensive and accurate evaluation of the player’s skill level. Moreover, the proposed model can extract complex temporal and frequency features from piano performances. Firstly, ResNet18-3D is used as the backbone network for our visual branch, allowing us to extract feature information from the video data. Then, we utilize ResNet18-2D as the backbone network for the aural branch to extract feature information from the audio data. The extracted video features are then fused with the audio features, generating multimodal features for the final piano skill evaluation. 
The experimental results on the PISA dataset show that our proposed audio-visual fusion model, with a validation accuracy of 70.80% and an average training time of 74.02 s, outperforms the baseline model in both accuracy and computational efficiency. Furthermore, we explore the impact of different ResNet depths on the model’s performance. In general, the model achieves its best performance when the numbers of video and audio features are balanced; when the ratio differs significantly, the best accuracy achieved is 68.70%.

1. Introduction

The automated assessment of skills involves quantifying how proficient a person is in the task at hand. Automated skill assessment can be used in many areas such as physical movement assessment and music education. Owing to the high cost of manual instruction and space limitations, face-to-face piano instruction between teacher and student has become difficult, which makes automated skill assessment particularly valuable.

As we know, piano performances comprise both visual and auditory aspects. Therefore, the assessment of piano players’ skills can also be conducted from both visual and auditory perspectives. For the visual aspect, a piano performance judge can score the performance by observing the player’s fingering. Similarly, for the aural aspect, the judge scores the performance by assessing the rhythm of the music played by the performer.

At present, most studies on piano performance evaluation have been conducted based on a single audio mode [1,2,3], disregarding the information contained in the video mode, such as playing technique and playing posture, resulting in a one-sided assessment of the player’s skill level and an inability to conduct a comprehensive assessment from multiple aspects.

However, most existing studies on multimodal piano skill assessment are based on shallow networks that are deficient in extracting complex spatio-temporal and time-frequency features. There is also a significant gap between the number of extracted video features and audio features during feature fusion, resulting in models that do not fully utilize feature information from both modalities.

Consequently, we propose an audio-visual fusion model that extracts feature information from both video and audio data and combines it into multimodal features for a more comprehensive and accurate assessment of the skill levels of piano players. As noted above, existing multimodal studies are limited in extracting complex spatio-temporal and time-frequency features and do not fully utilize the feature information from both modalities. Therefore, we adopt ResNet as the backbone network for the learning model, as its residual connections allow the network to be made deeper and thus to better extract complex features. ResNet-3D [4] and ResNet-2D [5] are used to extract the video features and audio features, respectively. Specifically, ResNet-3D models both the spatial and temporal relationships between video frames, enabling it to better capture motion and dynamic changes in videos and extract spatio-temporal features. For audio data that have been converted into a time-frequency spectrogram, ResNet-2D can effectively extract time-frequency features and capture the frequency and time information in the audio. To fully utilize both the video and audio features, we employ ResNet18-3D and ResNet18-2D for feature extraction, which keeps the numbers of video and audio features balanced.

The main contributions of this paper are as follows:

(1) We present a novel ResNet-based audio-visual fusion model for evaluating piano players’ proficiencies by effectively utilizing both video and audio information. This approach addresses the problem where the unimodal approaches fail to utilize video information and the multimodal approaches fail to fully utilize both video and audio information. Firstly, we extract video and audio features using ResNet-3D and ResNet-2D, respectively. Then, the extracted features are fused to generate multimodal features that will be used for piano skills evaluation.

(2) We propose an effective method that fully utilizes information from both the visual and aural modalities to improve the accuracy and comprehensiveness of piano skills assessment. By incorporating visual and aural information, we can obtain richer and more comprehensive features, enabling a more precise assessment of the skill levels of the performers. Moreover, we maintain a balance between the number of visual and aural features, thereby ensuring that the information from both modalities is fully utilized.

(3) We conduct experiments on the PISA dataset, and the results show that our proposed ResNet-based audio-visual fusion model, with an accuracy of 70.80% and an average training time of 74.02 s, outperforms the state-of-the-art models in the evaluation of piano players’ skill levels, as well as in computational efficiency.

2. Related Works

2.1. Traditional Skills Assessment

Recently, there have been significant advances in the field of skills assessment. Seo et al. [6] proposed an autoencoder with an LSTM layer as its intermediate layer to identify runners’ skills, including their characteristics and habits, with the aim of improving the performance of runners. Li et al. [7] constructed a novel RNN-based spatial attention model for evaluating human operational skills from videos of a task being performed. Their model considers the accumulated attention state from previous frames and incorporates high-level information about the progress of the ongoing task. Doughty et al. [8] proposed a model called Rank-Aware Attention, which utilizes a learnable temporal attention module to determine relative skill in long videos by focusing on skill-related components. Lee et al. [9] proposed a novel dual-stream convolutional neural network that combines video and audio inputs to determine the keys being played on the piano at any given time and identify the fingers used to press those keys. Doughty et al. [10] proposed a pairwise deep ranking model that utilizes both spatial and temporal streams in combination with a novel loss function to determine and rank skill levels. In addition, Afshangian et al. [11] evaluated motor skills using the Bilingual Aphasia Test (BAT) and conducted appropriate behavioral assessments, including coordination tests, visual-motor tests, and speech processing. Baeyens et al. [12] employed surface electromyography (sEMG) to capture the activity of the upper trapezius muscle (UT) during the prolonged playing of fast and slow music in 10 conservatory piano students. Their aim was to explore the effects of repetitive piano playing on the neck and shoulder muscles.

2.2. Piano Performance in Unimodality

The most widely used approach to piano skill evaluation is based on the aural modality. Chang and Peng [1] proposed an LSTM-based strategy for evaluating piano performance that incorporates three indicators: overall evaluation, rhythm, and expressiveness. Wang et al. [3] proposed two different audio-based systems for piano performance evaluation. The first is a sequential and modular system that includes three steps: acoustic feature extraction, dynamic time warping (DTW), and performance score regression. The second is an end-to-end system that uses a CNN and attention mechanisms to directly predict performance scores. Phanichraksaphong and Tsai [13] evaluated piano playing using four different approaches: Support Vector Machine (SVM), Naive Bayes (NB), Convolutional Neural Network (CNN), and Long Short-Term Memory (LSTM). The evaluated results were classified as “good”, “normal”, and “bad”, and they found that the CNN approach outperformed the other methods. Liao [14] presented a musical instrument digital interface (MIDI) piano evaluation scheme based on an RNN structure and the Spark computational engine to compensate for the shortcomings of rule-based evaluation methods, which cannot consider the coherence and expressiveness of music.

2.3. Piano Performance in Multimodality

Every source or form of information can be called a modality. For example, humans have the senses of touch, sight, hearing, and smell, whereas the communication media for information include speech, video, text, etc. Multimodal machine learning aims to develop the ability to process and understand multisource modal information through machine learning methods. Multimodal machine learning has been used in a wide variety of fields. The authors of [15] showed that combining audio and video data can improve transcriptions compared to using each modality alone. Parmar et al. [16] were the first to propose combining video and audio for assessing piano skills. They used C3D and ResNet18-2D to process video and audio information separately, achieving promising results on their proposed PISA dataset.

2.4. Transfer Learning

Transfer learning reuses a model that has been trained on one task with a large amount of data to tackle a similar task, using the knowledge it has learned to extract useful features for the new task. In recent years, transfer learning has demonstrated exceptional performance in a variety of research tasks. Models pre-trained on large datasets such as ImageNet have been widely used in various fields such as image segmentation [17,18] and medical image analysis [19,20]. In [21], a C3D [22] model trained from scratch on UCF101 achieved an accuracy of 88%. However, by using a model pre-trained on Kinetics, an accuracy of 98% was achieved.

In general, most existing studies on piano skills assessment are based on the audio modality, disregarding the video information, while the few studies based on multimodality, such as [16], suffer from an inability to capture complex spatio-temporal features that encompass fingering and pitch information and fail to fully utilize video and audio features. Therefore, we propose a ResNet-based audio-visual fusion model that is able to capture complex spatio-temporal features and fully utilize the extracted features.

3. Methodology

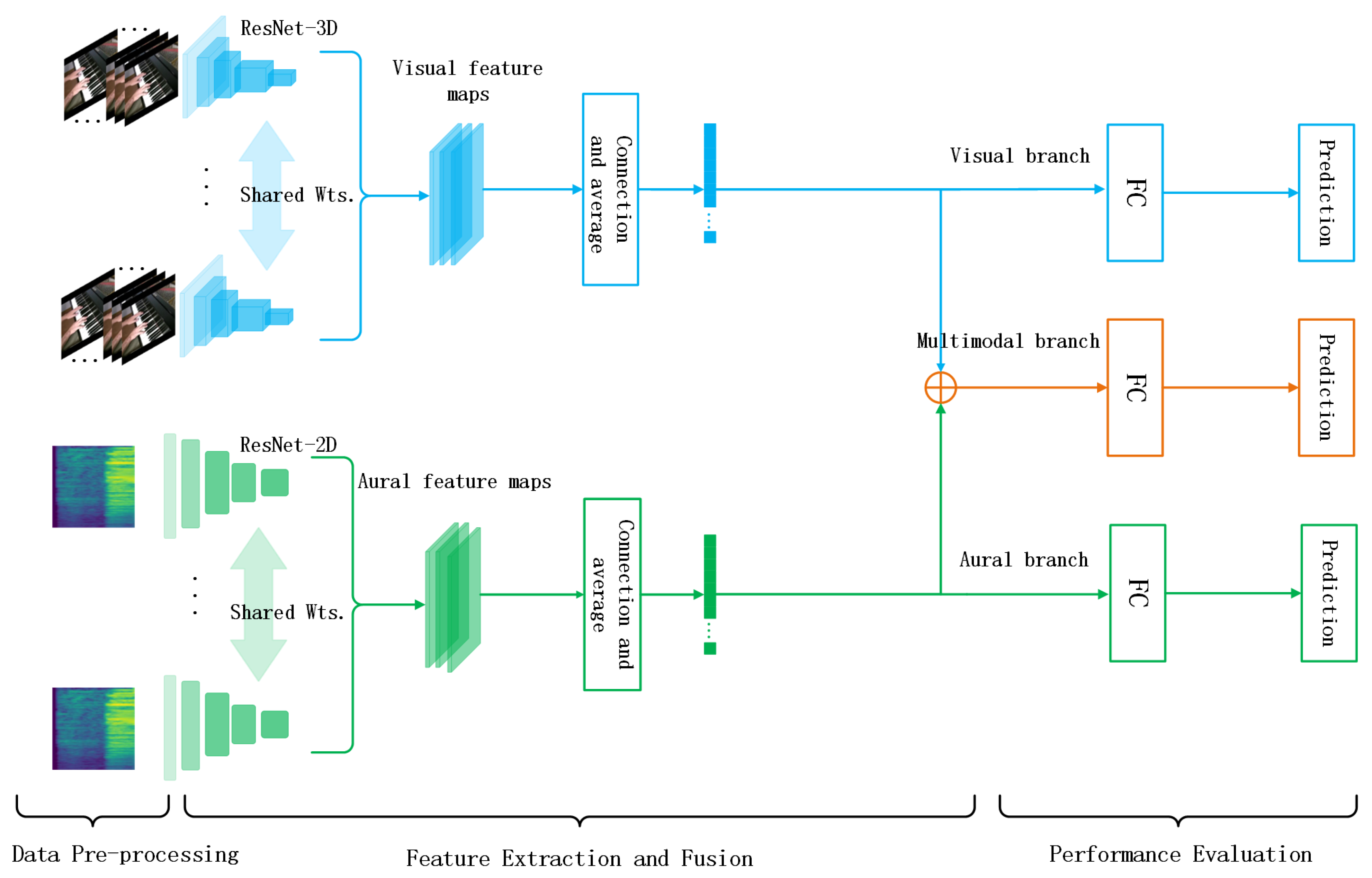

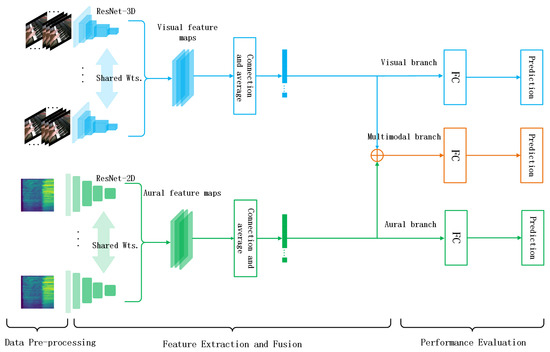

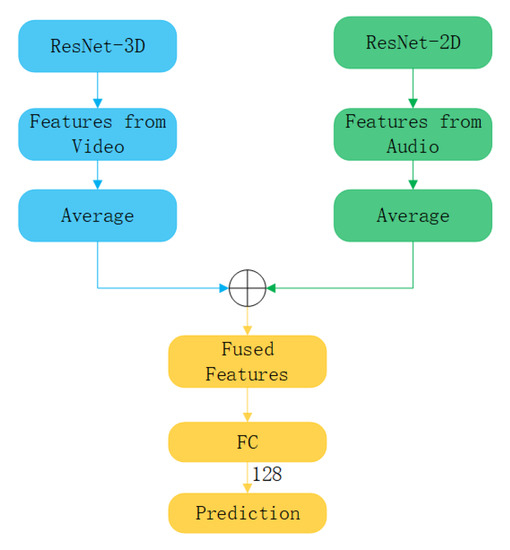

In this section, we detail the audio-visual fusion model used for assessing the skill levels of piano performers. Figure 1 shows the framework of our proposal. It consists of three main components: data pre-processing, feature extraction and fusion, and performance evaluation. First, the video data are framed and cropped to serve as the input for the visual branch. The raw audio is then converted to the corresponding Mel-spectrogram using signal processing techniques and spectral analysis methods. Second, we feed the processed video and audio data into the audio-visual fusion model to extract their respective features and fuse the extracted features to form multimodal representations. Finally, we pass the multimodal features as input to the fully connected layer and then perform prediction.

Figure 1.

Framework of audio-visual fusion model for piano skills evaluation.

3.1. Data Pre-Processing

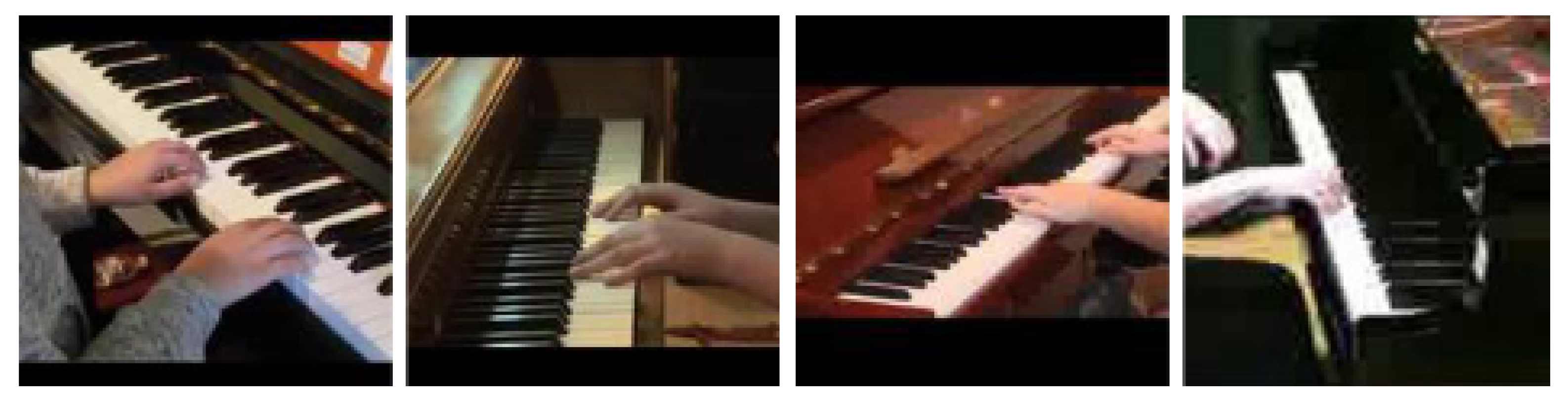

For the visual input, the background, the player’s face, and other irrelevant information contained in the video are discarded by cropping the video data before feeding them into the model. The resulting visual information includes the forearm, hand, and piano of the player, as shown in Figure 2.

Figure 2.

Samples from the dataset. Images after cropping (only part of the complete sample is shown).

For the auditory input, we convert the raw audio to the corresponding Mel-spectrogram, which helps us to better extract information such as pitch that is embedded in the audio data. Firstly, we convert the raw audio into the corresponding spectrogram using the STFT (Short-Time Fourier Transform):

$$X(t, f) = \int_{-\infty}^{\infty} x(\tau)\, w(\tau - t)\, e^{-j 2 \pi f \tau}\, d\tau$$

where $X(t, f)$ represents the outcome of the STFT, $w(\cdot)$ represents the window function, $x(\cdot)$ refers to the time-domain waveform of the original signal, f represents the frequency, t indicates the time, and j is the imaginary unit, which satisfies $j^2 = -1$. Then, the obtained spectrogram is mapped to the corresponding Mel-spectrogram using the Mel-scale [23]:

$$m = 2595 \log_{10}\left(1 + \frac{f}{700}\right)$$

where m represents the Mel frequency and f means the original frequency. Finally, the Mel-spectrogram information is expressed in decibels.
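The frequency-to-Mel mapping and the final decibel conversion can be illustrated with a minimal sketch. It assumes the widely used form m = 2595 log10(1 + f/700) for the Mel scale and a power-to-decibel conversion of 10 log10(P / ref); the function names, the reference power, and the numerical floor are illustrative choices, not taken from the paper.

```python
import math


def hz_to_mel(f_hz: float) -> float:
    """Map a frequency in Hz to Mel (assumed form: 2595 * log10(1 + f / 700))."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(m: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def power_to_db(power: float, ref: float = 1.0, floor: float = 1e-10) -> float:
    """Express a power value in decibels relative to `ref`.

    `floor` is a hypothetical guard that avoids log10(0) for silent bins.
    """
    return 10.0 * math.log10(max(power, floor) / ref)
```

Under this mapping, equal steps in Mel correspond to progressively wider steps in Hz at higher frequencies, so the Mel-spectrogram devotes more resolution to the lower pitch range.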

3.2. Feature Extraction and Fusion

Visual branch: Some skills of performers, such as fingering, can only be observed and evaluated visually rather than by hearing. For example, a professional pianist playing a piece at high speed may use their index and ring fingers to play octaves, which is difficult for an average pianist. For a pianist, the lack of such skills does not necessarily indicate a low proficiency level. However, a pianist who possesses these skills has evidently reached a high level of technical ability.

The movements of fingers captured in videos involve both the appearance and temporal dynamics of the video sequences. The efficient modeling of the spatio-temporal dynamics of these sequences is crucial for extracting robust features, which, in turn, improves the performance of the model. Three-dimensional CNNs are efficient in capturing the spatio-temporal dynamics in videos. Specifically, we use ResNet-3D [4] to extract the spatio-temporal features of the performance clips from a video sequence. Compared to conventional 3D CNNs, ResNet-3D can effectively capture the spatio-temporal dynamics of the video modality with higher computational efficiency. In ResNet-3D, multiple 3D convolutional layers are stacked to model motion features in the temporal dimension, and 3D pooling layers and fully connected layers are used for feature dimensionality reduction and combination. In this way, we can extract rich visual features from the video data, including information such as the object’s shape and color, to capture finger motion patterns. In addition, we can utilize a pre-trained model to improve the model’s performance, as shown in Algorithm 1. Finally, we employ the averaging method as our aggregation scheme (see Aggregation option).

Algorithm 1.

Model Initialization Algorithm.

Aural branch: We can also obtain a lot of information about the piano from the audio. The rhythm, notes, and pitches of the piano can be perceived through listening, and this is a common, simple, and practical way to evaluate a piano piece. In fact, different scores vary greatly in terms of style, rhythm, etc. This requires the judges to have a high level of proficiency in the piece being played by the performer in a piano competition or performance, and judges who are not familiar with the piece may encounter difficulties in evaluating the skills of the performer.

Information such as the pitch and rhythm of a piano performance is contained in the audio data, and both raw audio waveforms [24,25] and spectrograms [26,27] can be used to extract the auditory features. However, the spectrogram can provide more detailed and accurate audio features. Specifically, we convert the raw audio data into the corresponding Mel-spectrogram, which can be regarded as image data, due to its two-dimensional matrix form. We then feed the obtained Mel-spectrogram to the auditory network. Further, ResNet-2D [5] outperforms the traditional 2D CNN in terms of computational efficiency and feature extraction. Additionally, it can utilize a pre-trained model to improve performance. Therefore, we prefer to use ResNet-2D for feature extraction from the Mel-spectrogram. By stacking 2D convolutional layers, we can capture the patterns and variations in the audio data in the frequency and time dimensions. Moreover, the extracted auditory features include information such as the audio spectrum’s shape, pitch, and rhythm, which can reflect the content and characteristics of the audio data. Finally, we employ the averaging method as our aggregation scheme (see Aggregation option).

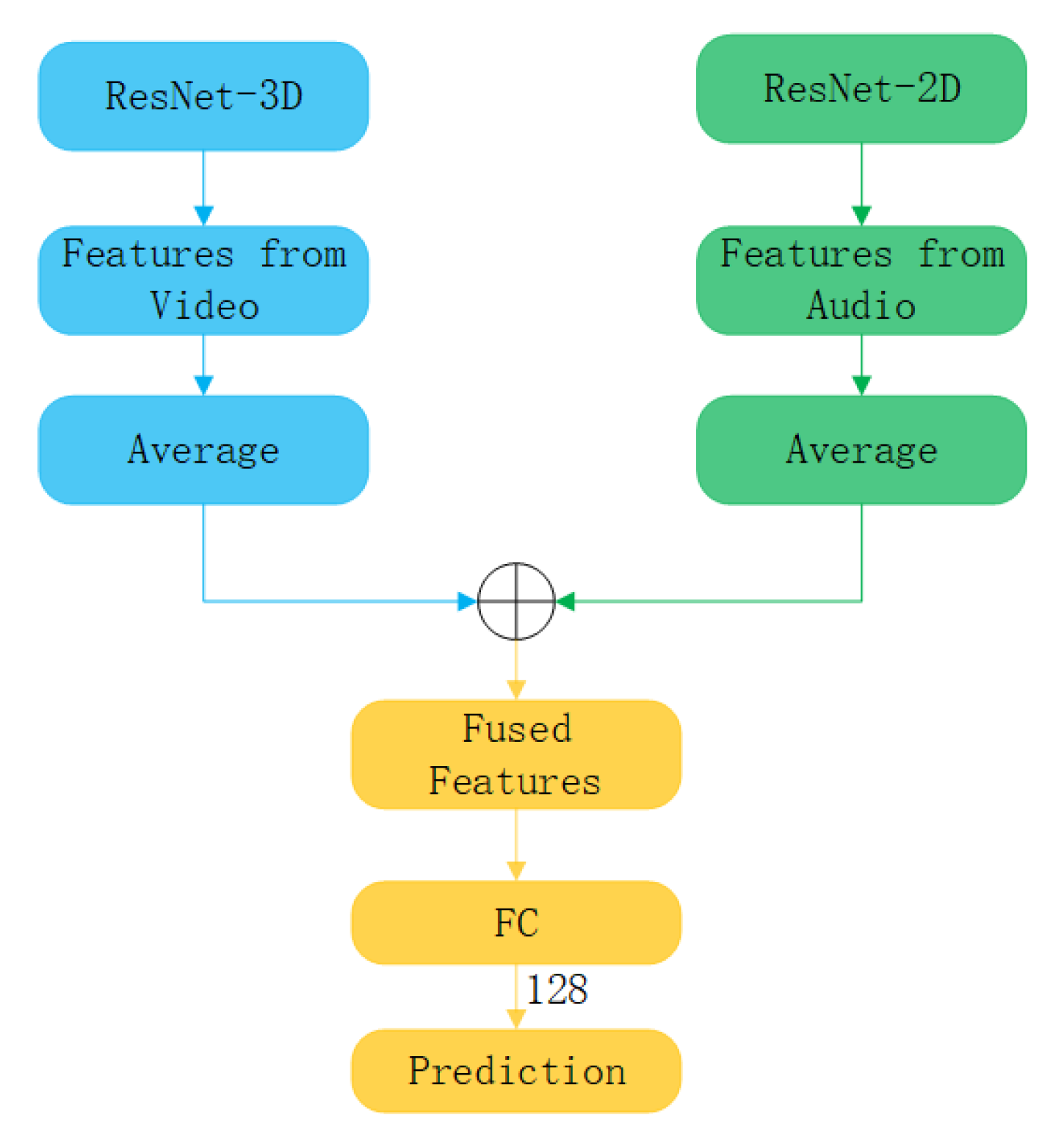

Multimodal branch: By utilizing the ResNet-3D and ResNet-2D networks, we have obtained visual and aural features. To better capture the semantic association and complementary information between the video and audio modalities, we adopt a joint representation approach for the features extracted from the video and audio data. This helps to create a more comprehensive and accurate feature representation. Let $F_v$ and $F_a$ represent two sets of deep feature vectors extracted for the visual and aural modalities, where $F_v \in \mathbb{R}^{d \times M}$ and $F_a \in \mathbb{R}^{d \times N}$. d represents the dimension of the visual and aural feature representations, and M and N denote the number of extracted visual and aural features, respectively. The multimodal features, $F_m$, are obtained by splicing $F_v$ with $F_a$ in the corresponding dimensions using Algorithm 2:

$$F_m = [F_v, F_a] \in \mathbb{R}^{d \times L}$$

where L = M + N denotes the number of fused features, as shown in Figure 3.

Algorithm 2.

Feature Fusion Algorithm.

Figure 3.

The structure of feature fusion.
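The splicing step can be sketched in a few lines. This is a simplified illustration that treats each modality's output for one sample as a flat vector of M (visual) or N (aural) values, so that the fused vector has L = M + N values; the function names are hypothetical, and the paper's Algorithm 2 may differ in detail.

```python
from typing import List, Sequence


def fuse_features(visual: Sequence[float], aural: Sequence[float]) -> List[float]:
    """Splice a length-M visual vector with a length-N aural vector (L = M + N)."""
    if len(visual) == 0 or len(aural) == 0:
        raise ValueError("both modalities must contribute at least one feature")
    return list(visual) + list(aural)


def video_to_audio_ratio(visual: Sequence[float], aural: Sequence[float]) -> float:
    """Ratio V:A of the number of video features to the number of audio features."""
    return len(visual) / len(aural)
```

For instance, two 512-value vectors (the width of the final ResNet18 stage) give a 1024-value fused vector with a V:A ratio of 1, whereas pairing a 2048-value vector with a 512-value vector gives a ratio of 4.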

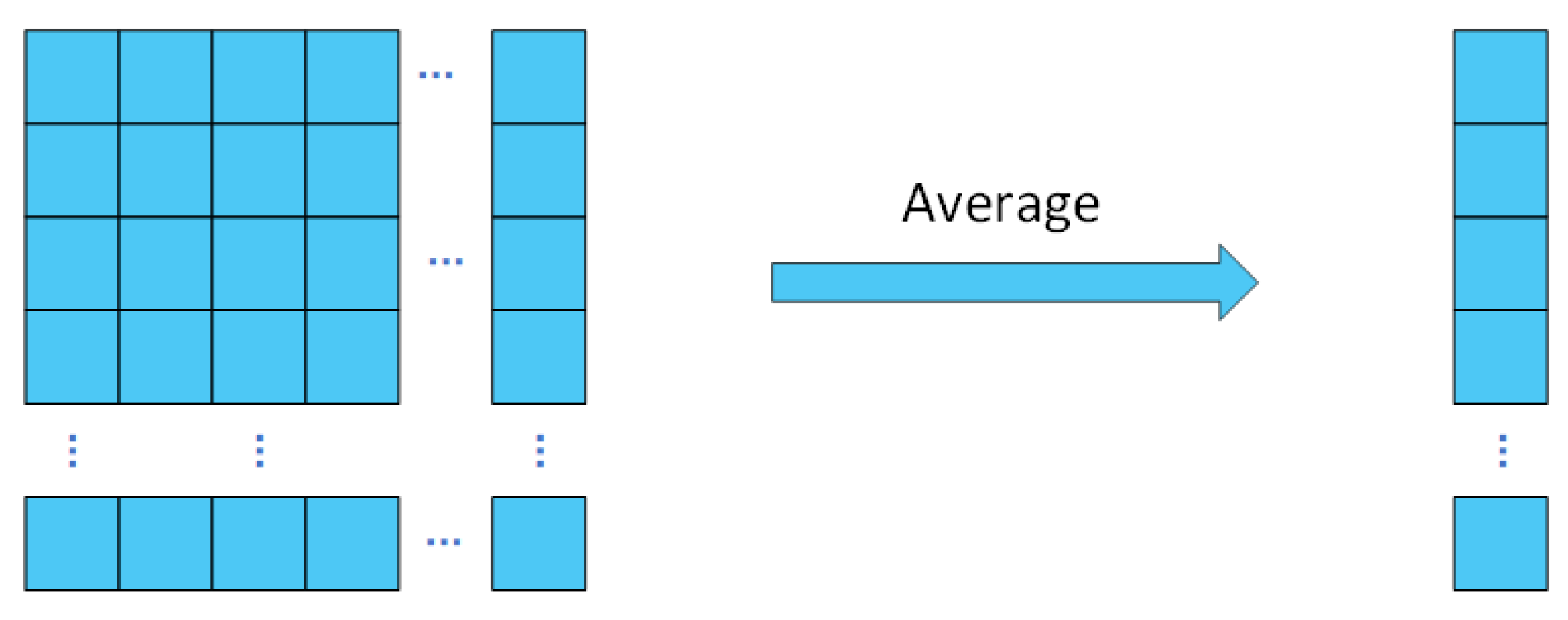

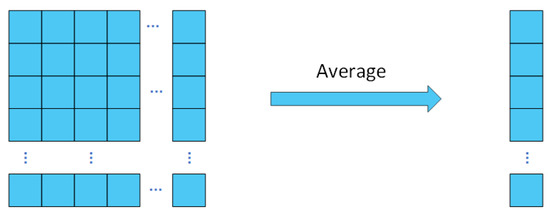

Aggregation option: During the piano performance, the score obtained by the players can be perceived as an additive operation. It is often advantageous to perform linear operations on the learned features, which enhances the interpretability and expressiveness of the learned features. Linear operations can also be utilized to reduce the dimensionality of the features, which enhances the efficiency and generalization capabilities of the model. Consequently, we propose the utilization of linear averaging as the preferred aggregation scheme. The application of linear averaging is detailed in Algorithm 3 and depicted in Figure 4.

Algorithm 3.

Feature Average Algorithm.

Figure 4.

Feature average option.
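Linear averaging as an aggregation scheme can be sketched as follows. The sketch assumes that each sample yields one feature vector per clip and that the sample-level feature is the element-wise mean over clips; the function name and the list-of-lists representation are illustrative and not taken from the paper's Algorithm 3.

```python
from typing import List, Sequence


def average_features(clip_features: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of per-clip feature vectors that share one dimension."""
    if not clip_features:
        raise ValueError("at least one clip feature vector is required")
    dim = len(clip_features[0])
    if any(len(vec) != dim for vec in clip_features):
        raise ValueError("all clip feature vectors must have the same length")
    n = len(clip_features)
    return [sum(vec[i] for vec in clip_features) / n for i in range(dim)]
```

Because the mean is a linear operation, the aggregated feature keeps the dimensionality of a single clip feature regardless of how many clips a sample contains.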

3.3. Performance Evaluation

In the visual and aural branches, the features are passed through a linear layer that reduces their dimensionality to 128, as shown in Figure 3, and are then input into the prediction layer. In the multimodal branch, the operations are the same, except that we do not back-propagate from the multimodal branch to the individual modality backbones, to avoid cross-modal contamination.

4. Experiments

In this section, we conduct experiments on the real dataset and evaluate the performance of our proposed model. In Section 4.1, we discuss the details of the PISA dataset. In Section 4.2, we present the evaluation metric that we used, and the implementation details are presented in Section 4.3. In Section 4.4 and Section 4.5, we discuss the experimental results and the ablation study, respectively.

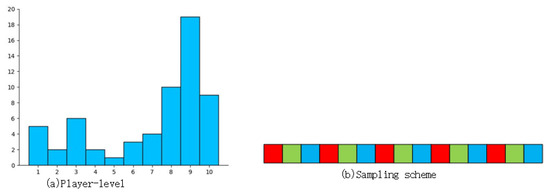

4.1. Multimodal PISA Dataset

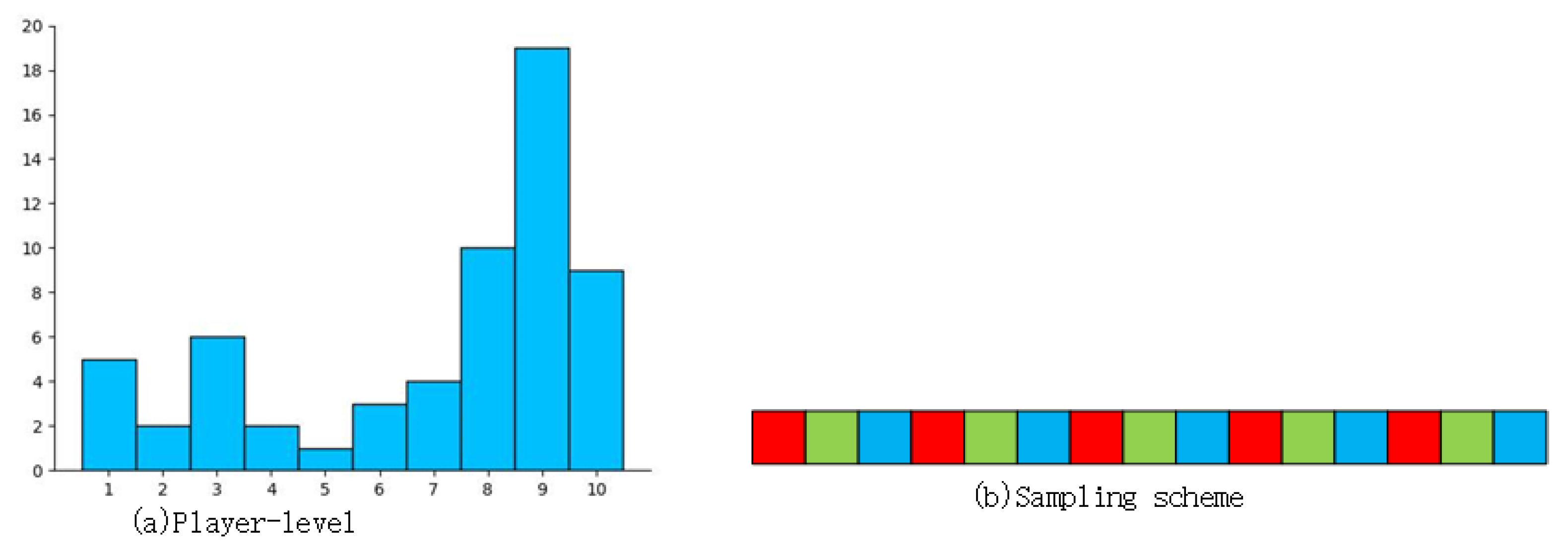

PISA: This is the Piano Skills Assessment (PISA) dataset. It consists of 61 videos of piano performances collected from YouTube and the performers’ skill levels are rated on a scale of 1–10, as shown in Figure 5a. Non-overlapping samples were obtained through uniform sampling, as shown in Figure 5b. In addition, there was no overlap between the training set and the test set. The details are shown in Table 1.

Figure 5.

(a) Histograms of player levels. (b) Sampling scheme: Time is represented along the x-axis. Each sample consists of squares with the same color, where each square represents a clip containing 16 frames.

Table 1.

Overview of the dataset.

4.2. Evaluation Metric

To evaluate the performance of the proposed model, we used accuracy (expressed as a percentage) as the performance metric:

$$\mathrm{Accuracy} = \frac{TP}{TP + FP} \times 100\%$$

where $TP$ represents the number of samples that are labeled as positive samples and also classified as positive samples, and $FP$ refers to the number of samples labeled as negative samples but classified as positive samples.
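For single-label, multi-class predictions such as skill levels, the metric amounts to the share of samples whose predicted level matches the label. The following minimal sketch computes it that way; the function name and the use of integer skill levels are assumptions made for illustration.

```python
from typing import Sequence


def accuracy_percent(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Percentage of samples whose predicted skill level equals the label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("at least one sample is required")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return 100.0 * correct / len(y_true)
```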

4.3. Implementation Details

The entire model was built using PyTorch [28]. To regularize the network, dropout with p = 0.5 was applied to the linear layers. The initial learning rate of the network was set to and the Adam optimizer [29] was employed for all experiments. In addition, a weight decay of was used. Due to hardware limitations and memory constraints, the batch size of the model was set to 4. The number of epochs was set to 100, and the training time of each epoch was recorded.

Visual branch: The finger images were resized to 112 × 112 and, during training, a horizontal flip was applied to them. The images were then fed into the ResNet-3D model. In order to generate more samples, the videos in the dataset were converted to sequences of 160 frames, with each subsequence containing 16 frames, resulting in 516 training samples and 476 validation samples. The structure of ResNet-3D, which had been pre-trained on the KM dataset [4] (a combination of the Kinetics-700 [30] and MiT [31] datasets), is shown in Table 2. Finally, to explore the effect of different layers in ResNet-3D on the multimodal fusion model, we conducted experiments using networks with 18, 34, and 50 layers.
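The relationship between a 160-frame sample and its 16-frame subsequences can be made concrete with a short sketch. It assumes that a sample is cut into consecutive, non-overlapping clips, which yields 10 clips per sample; the function name is hypothetical, and frames are represented by their indices.

```python
from typing import List, Sequence


def split_into_clips(frames: Sequence[int], clip_len: int = 16) -> List[List[int]]:
    """Cut a frame sequence into consecutive, non-overlapping clips of clip_len frames."""
    if clip_len <= 0:
        raise ValueError("clip_len must be positive")
    if len(frames) % clip_len != 0:
        raise ValueError("sequence length must be a multiple of clip_len")
    return [list(frames[i:i + clip_len]) for i in range(0, len(frames), clip_len)]
```

With `split_into_clips(list(range(160)))`, each of the 10 resulting clips could be passed through the 3D backbone separately before the clip-level features are aggregated.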

Table 2.

ResNet-3D for visual branch. All conv. layers are followed by Batch Normalization (BN) and Rectified Linear Units (ReLU). “[ ] × m” means repeat this block m times.

Aural branch: The audio signal was extracted from the corresponding video sequence and re-sampled to 44.1 kHz. We then split the extracted audio signal into sub-audio segments, with durations ranging from 5.33 s to 6.67 s, each corresponding to a sequence of 160 frames in the visual branch. The spectrogram was first obtained by applying the Short-Time Fourier Transform (STFT) to each sub-audio segment. It was then filtered to obtain the Mel-spectrogram, using a window length of 2048, a step size of 512, and 128 Mel-bands. The resulting Mel-spectrogram was converted to decibels (dB) and resized to 224 × 224. These Mel-spectrograms were fed into ResNet-2D, as described in Table 3, where the weights of the network were initialized using the values from the ImageNet [32] pre-trained model. To align the pre-trained model with our model, we modified the input channels of the first convolutional layer of the pre-trained network from 3 to 1. Similar to the visual branch, to explore the effects of different layers in ResNet-2D on the multimodal fusion model, we conducted experiments using networks with 18, 34, and 50 layers.
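The stated segment durations can be checked with simple arithmetic. The sketch below assumes video frame rates of 30 fps and 24 fps, which are not given in the text but reproduce the 5.33 s and 6.67 s bounds for 160 frames, and it assumes a centered STFT that yields 1 + floor(samples / hop) time frames; both function names are hypothetical.

```python
def segment_duration_s(num_frames: int, fps: float) -> float:
    """Duration in seconds of an audio segment spanning num_frames video frames."""
    return num_frames / fps


def num_stft_frames(duration_s: float, sample_rate: int = 44100, hop_length: int = 512) -> int:
    """Number of STFT time frames, assuming centered framing: 1 + floor(samples / hop)."""
    num_samples = int(round(duration_s * sample_rate))
    return 1 + num_samples // hop_length
```

Under these assumptions, a 160-frame segment produces roughly 460 to 575 spectrogram columns across 128 Mel-bands, which explains why the Mel-spectrogram is resized to a fixed 224 × 224 input before it is fed into the 2D network.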

Table 3.

ResNet-2D for aural branch. All conv. layers are followed by Batch Normalization (BN) and Rectified Linear Units (ReLU). “[ ] × m” means repeat this block m times.

4.4. Results of Experiments

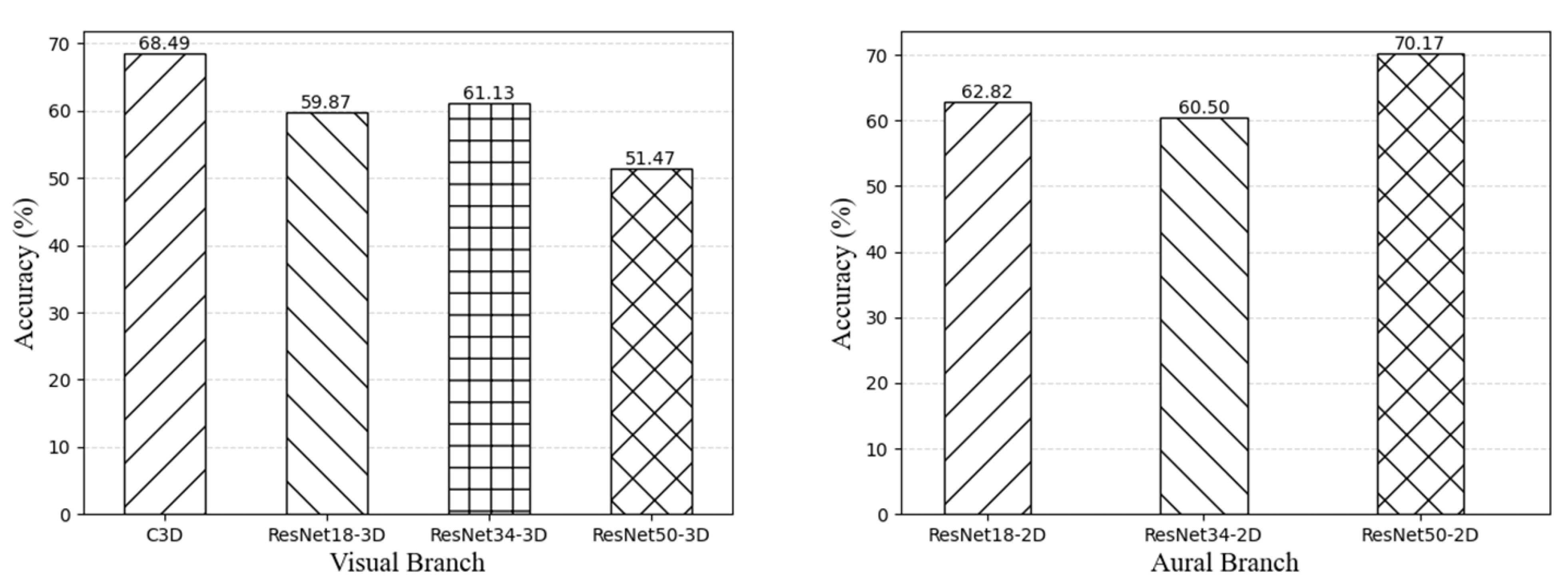

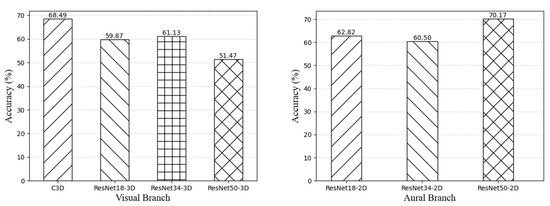

Table 4 and Figure 6 present the results of the unimodal and audio-visual fusion models on the PISA dataset. Both models achieved good results; however, the audio-visual fusion model achieved better results than the unimodal model. This indicates that the multimodal method can effectively compensate for the inability of the audio model alone to utilize visual information, leading to more accurate and comprehensive evaluation results. In addition, our audio-visual fusion model obtained the best experimental results, achieving an accuracy rate of 70.80%.

Table 4.

Performance (% accuracy) of multimodal evaluation. V:A: the ratio of the number of video features to audio features.

Figure 6.

Performance (% accuracy) of unimodal evaluation.

As shown in Table 5, our audio-visual fusion model outperformed the other models in terms of computational efficiency. This was due to the residual structure that accelerated the convergence speed of the network, as well as the small convolutional kernel that reduced the complexity of the convolutional operation, thus enhancing the computational efficiency of the model.

Table 5.

Average time to train 100 epochs per model.

The results in Table 6 show that the accuracy improvement of the audio-visual fusion model was smaller when the ratio of the number of video features to audio features was relatively large. However, when the numbers of features in the two modalities were closer, the accuracy improvement was larger. The reason may be that, when one modality had significantly more features than the other, the model placed more emphasis on the modality with more features and overlooked the modality with fewer features.

Table 6.

Performance (% accuracy). V:A: the ratio of the number of video features to audio features.

4.5. Ablation Study

Impact of Pretraining. We compared the experimental results of the randomly initialized audio-visual fusion model with those of the audio-visual fusion model initialized with the KM and ImageNet pre-trained models. As shown in Table 7, the audio-visual fusion model utilizing the pre-trained model significantly outperformed the randomly initialized audio-visual fusion model on the PISA dataset, and the computational times for the two methods were similar. Transferring a pre-trained model trained on a large dataset effectively improved the accuracy of our model.

Table 7.

Performance impact due to pretraining. KM: the combined Kinetics-700 and Moments in Time (MiT) dataset.

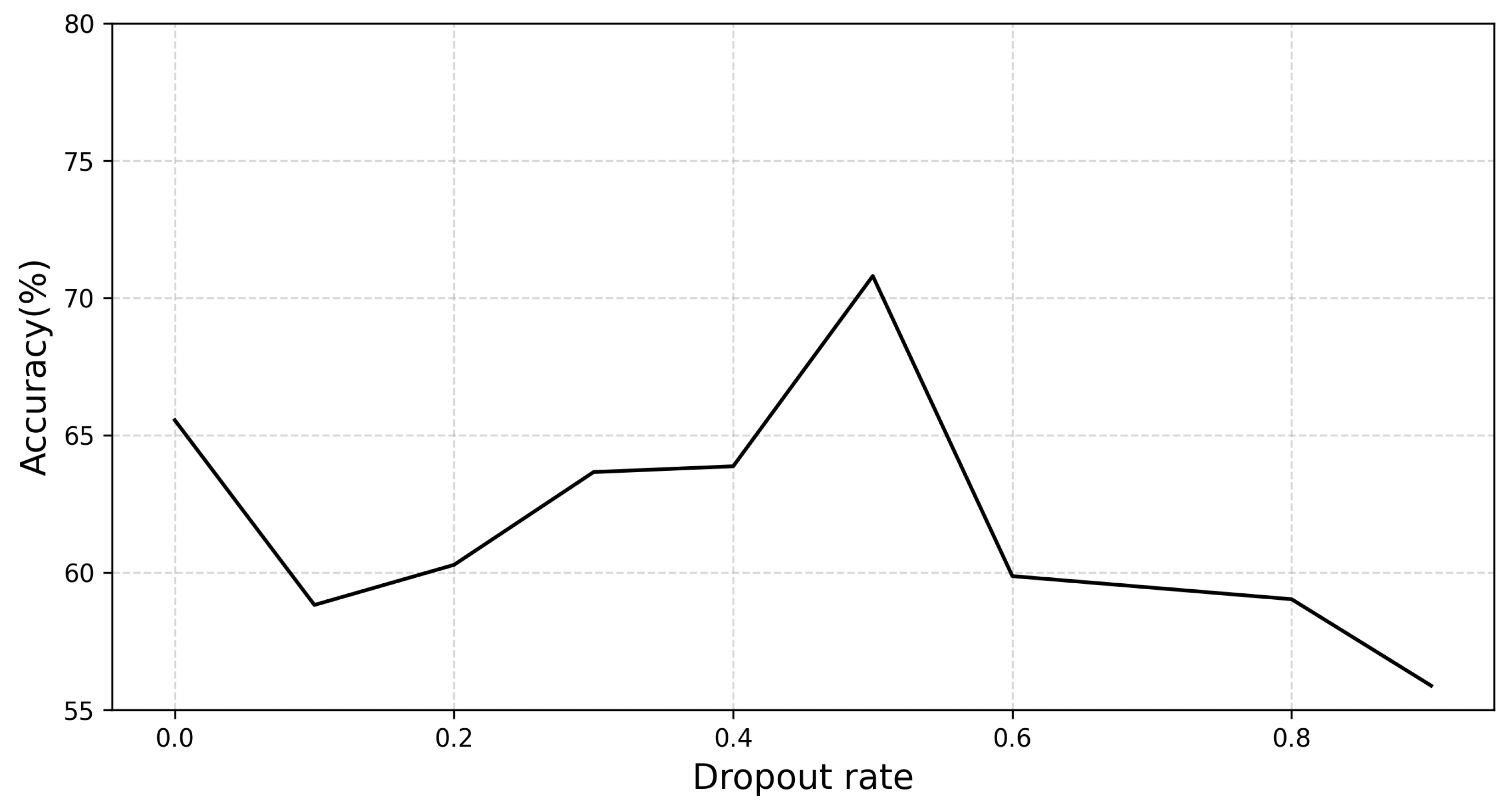

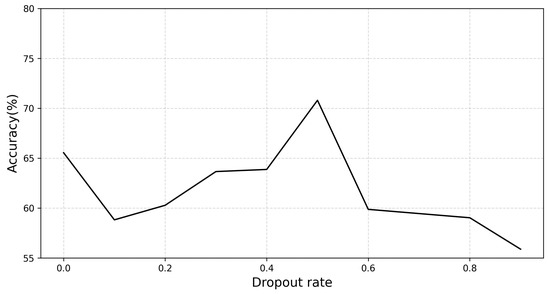

Impact of Dropout Rate. After extracting the multimodal features, we applied dropout to them and investigated the effect of different dropout rates on the performance of the audio-visual fusion model. The results are shown in Figure 7. When we trained the audio-visual fusion model without dropout, the accuracy was only 65.55%. As the dropout rate increased, the model accuracy gradually improved, reaching its highest point at a rate of 0.5, and then gradually decreased.

Figure 7.

Performance impact due to dropout rate.

5. Conclusions

In this work, we propose a ResNet-based audio-visual fusion model for evaluating piano skills. The main focus of our study is to use feature information from both the video and audio modalities to comprehensively assess the skill levels of piano players. Our study leverages the features of both modalities by fusing visual and aural information, which enhances the overall assessment of piano players’ skills.

The fusion of visual and auditory features enables the discovery of correlations and complementarities between audio and visual information, resulting in a more comprehensive and accurate feature representation. By utilizing ResNet as the backbone network, the proposed model leverages ResNet-3D and ResNet-2D to extract visual and auditory features from finger motions (visual) and audio features (auditory), respectively. Then, the visual and auditory features are combined by feature stitching to form multimodal features. Finally, the multimodal features are fed to the linear layer to predict the piano player’s skill level.

We conducted experiments on the PISA dataset and achieved an accuracy of 70.80% in the assessment of piano skills, surpassing the performance of state-of-the-art methods. We also explored the impact of different layers in ResNet on the model, providing a reference for the further optimization of the model. Our work provides piano learners with a more accurate and comprehensive skill evaluation, while also providing a deeper understanding of the piano playing process and performance.

Author Contributions

Conceptualization, X.Z. and Y.W.; methodology, Y.W.; validation, X.Z. and Y.W.; resources, Y.W.; data curation, Y.W.; writing—original draft preparation, Y.W.; writing—review and editing, X.Z. and X.C.; supervision, X.Z. and X.C.; funding acquisition, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This paper is supported by the Humanities and Social Sciences Foundation of the Ministry of Education (17YJCZH260) and the Sichuan Science and Technology Program (2020YFS0057).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chang, X.; Peng, L. Evaluation strategy of the piano performance by the deep learning long short-term memory network. Wirel. Commun. Mob. Comput. 2022, 2022, 6727429.

- Zhang, Y. An Empirical Analysis of Piano Performance Skill Evaluation Based on Big Data. Mob. Inf. Syst. 2022, 2022, 8566721.

- Wang, W.; Pan, J.; Yi, H.; Song, Z.; Li, M. Audio-based piano performance evaluation for beginners with convolutional neural network and attention mechanism. IEEE/ACM Trans. Audio, Speech Lang. Process. 2021, 29, 1119–1133.

- Hara, K.; Kataoka, H.; Satoh, Y. Can spatiotemporal 3D CNNs retrace the history of 2D CNNs and ImageNet? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6546–6555.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Seo, C.; Sabanai, M.; Ogata, H.; Ohya, J. Understanding sprinting motion skills using unsupervised learning for stepwise skill improvements of running motion. In Proceedings of the International Conference on Pattern Recognition Applications and Methods, Prague, Czech Republic, 19–21 February 2019.

- Li, Z.; Huang, Y.; Cai, M.; Sato, Y. Manipulation-skill Assessment from Videos with Spatial Attention Network. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019.

- Doughty, H.; Mayol-Cuevas, W.W.; Damen, D. The Pros and Cons: Rank-Aware Temporal Attention for Skill Determination in Long Videos. In Proceedings of the Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Lee, J.; Doosti, B.; Gu, Y.; Cartledge, D.; Crandall, D.J.; Raphael, C. Observing Pianist Accuracy and Form with Computer Vision. In Proceedings of the Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Doughty, H.; Damen, D.; Mayol-Cuevas, W.W. Who’s Better? Who’s Best? Pairwise Deep Ranking for Skill Determination. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2019. [Google Scholar]

- Afshangian, F.; Wellington, J.; Pashmoforoosh, R.; Farzadfard, M.T.; Noori, N.K.; Jaberi, A.R.; Ostovan, V.R.; Soltani, A.; Safari, H.; Abolhasani Foroughi, A.; et al. The impact of visual and motor skills on ideational apraxia and transcortical sensory aphasia. Appl. Neuropsychol. Adult 2023, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Baeyens, J.P.; Flix Díez, L.; Serrien, B.; Goossens, M.; Veekmans, K.; Baeyens, R.; Daems, W.; Cattrysse, E.; Hohenauer, E.; Clijsen, R. Effects of Rehearsal Time and Repertoire Speed on Upper Trapezius Activity in Conservatory Piano Students. Med. Probl. Perform. Artist. 2022, 37, 1–12. [Google Scholar] [CrossRef] [PubMed]

- Phanichraksaphong, V.; Tsai, W.H. Automatic Evaluation of Piano Performances for STEAM Education. Appl. Sci. 2021, 11, 11783. [Google Scholar] [CrossRef]

- Liao, Y. Educational Evaluation of Piano Performance by the Deep Learning Neural Network Model. Mob. Inf. Syst. 2022, 2022, 6975824. [Google Scholar] [CrossRef]

- Koepke, A.S.; Wiles, O.; Moses, Y.; Zisserman, A. Sight to Sound: An End-to-End Approach for Visual Piano Transcription. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020. [Google Scholar]

- Parmar, P.; Reddy, J.; Morris, B. Piano skills assessment. In Proceedings of the 2021 IEEE 23rd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, 6–8 October 2021; pp. 1–5. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Iglovikov, V.; Shvets, A. Ternausnet: U-net with vgg11 encoder pre-trained on imagenet for image segmentation. arXiv 2018, arXiv:1801.05746. [Google Scholar]

- Majkowska, A.; Mittal, S.; Steiner, D.F.; Reicher, J.J.; McKinney, S.M.; Duggan, G.E.; Eswaran, K.; Cameron Chen, P.H.; Liu, Y.; Kalidindi, S.R.; et al. Chest radiograph interpretation with deep learning models: Assessment with radiologist-adjudicated reference standards and population-adjusted evaluation. Radiology 2020, 294, 421–431. [Google Scholar] [CrossRef] [PubMed]

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016, 316, 2402–2410. [Google Scholar] [CrossRef] [PubMed]

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308. [Google Scholar]

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497. [Google Scholar]

- O’Shaughnessy, D. Speech Communication: Human and Machine; Addison-Wesley Series in Electrical Engineering; Addison-Wesley Publishing Company: New York, NY, USA, 1987. [Google Scholar]

- Lee, J.; Park, J.; Kim, K.L.; Nam, J. Sample-level deep convolutional neural networks for music auto-tagging using raw waveforms. arXiv 2017, arXiv:1703.01789. [Google Scholar]

- Zhu, Z.; Engel, J.H.; Hannun, A. Learning multiscale features directly from waveforms. arXiv 2016, arXiv:1603.09509. [Google Scholar]

- Choi, K.; Fazekas, G.; Sandler, M. Automatic tagging using deep convolutional neural networks. arXiv 2016, arXiv:1606.00298. [Google Scholar]

- Nasrullah, Z.; Zhao, Y. Music artist classification with convolutional recurrent neural networks. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8. [Google Scholar]

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. Neural Inf. Process. Syst. 2019, 32. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

- Carreira, J.; Noland, E.; Hillier, C.; Zisserman, A. A short note on the kinetics-700 human action dataset. arXiv 2019, arXiv:1907.06987. [Google Scholar]

- Abu-El-Haija, S.; Kothari, N.; Lee, J.; Natsev, P.; Toderici, G.; Varadarajan, B.; Vijayanarasimhan, S. Youtube-8m: A large-scale video classification benchmark. arXiv 2016, arXiv:1609.08675. [Google Scholar]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).