Deep-Learning-Powered GRU Model for Flight Ticket Fare Forecasting

Abstract

1. Introduction

- The GRU model achieved the lowest prediction errors of all the models compared (MAE 3.76, RMSE 5.93, and R² 0.98, against MLP, LSTM, ARIMA, and SVR baselines), which provides useful evidence for ongoing research on fare forecasting.

- The research introduces a novel approach employing a deep-learning GRU model for fare prediction. This method addresses the limitations of traditional machine learning techniques that rely heavily on statistical variables in their models.

- The GRU model uses its gating architecture (update and reset gates) to capture temporal dependencies in flight data, which improves predictive performance.
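The GRU architecture mentioned above controls its memory with two gates. As an illustration only (not the authors' implementation), the sketch below computes one GRU time step in NumPy using the gate equations documented for PyTorch's `nn.GRU`: a reset gate r, an update gate z, and a candidate state n. The dictionary layout of the weights `W`, `U`, and biases `b` is an assumption made for readability.

```python
import numpy as np


def sigmoid(a):
    """Logistic function applied element-wise."""
    return 1.0 / (1.0 + np.exp(-a))


def gru_step(x, h, W, U, b):
    """One GRU time step using the gate equations of PyTorch's nn.GRU.

    x: input at time t, shape (input_size,)
    h: hidden state from time t-1, shape (hidden_size,)
    W: input-to-hidden weights {"r", "z", "n"}, each (hidden_size, input_size)
    U: hidden-to-hidden weights {"r", "z", "n"}, each (hidden_size, hidden_size)
    b: biases {"r", "z", "in", "hn"}, each (hidden_size,)
    """
    r = sigmoid(W["r"] @ x + U["r"] @ h + b["r"])                    # reset gate
    z = sigmoid(W["z"] @ x + U["z"] @ h + b["z"])                    # update gate
    n = np.tanh(W["n"] @ x + b["in"] + r * (U["n"] @ h + b["hn"]))   # candidate state
    return (1.0 - z) * n + z * h                                     # blend old and new state


# Toy check with all weights zero: both gates sit at 0.5 and the candidate is 0,
# so the new state is exactly half of the previous one.
hidden_size, input_size = 3, 2
W = {k: np.zeros((hidden_size, input_size)) for k in ("r", "z", "n")}
U = {k: np.zeros((hidden_size, hidden_size)) for k in ("r", "z", "n")}
b = {k: np.zeros(hidden_size) for k in ("r", "z", "in", "hn")}
h_prev = np.array([1.0, -2.0, 4.0])
h_next = gru_step(np.ones(input_size), h_prev, W, U, b)
print(h_next.tolist())  # [0.5, -1.0, 2.0]
```

Setting the update-gate bias to a large positive value pushes z toward 1, so the cell carries its previous state forward almost unchanged; this is the mechanism that lets a GRU retain information across many time steps.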

2. Related Work

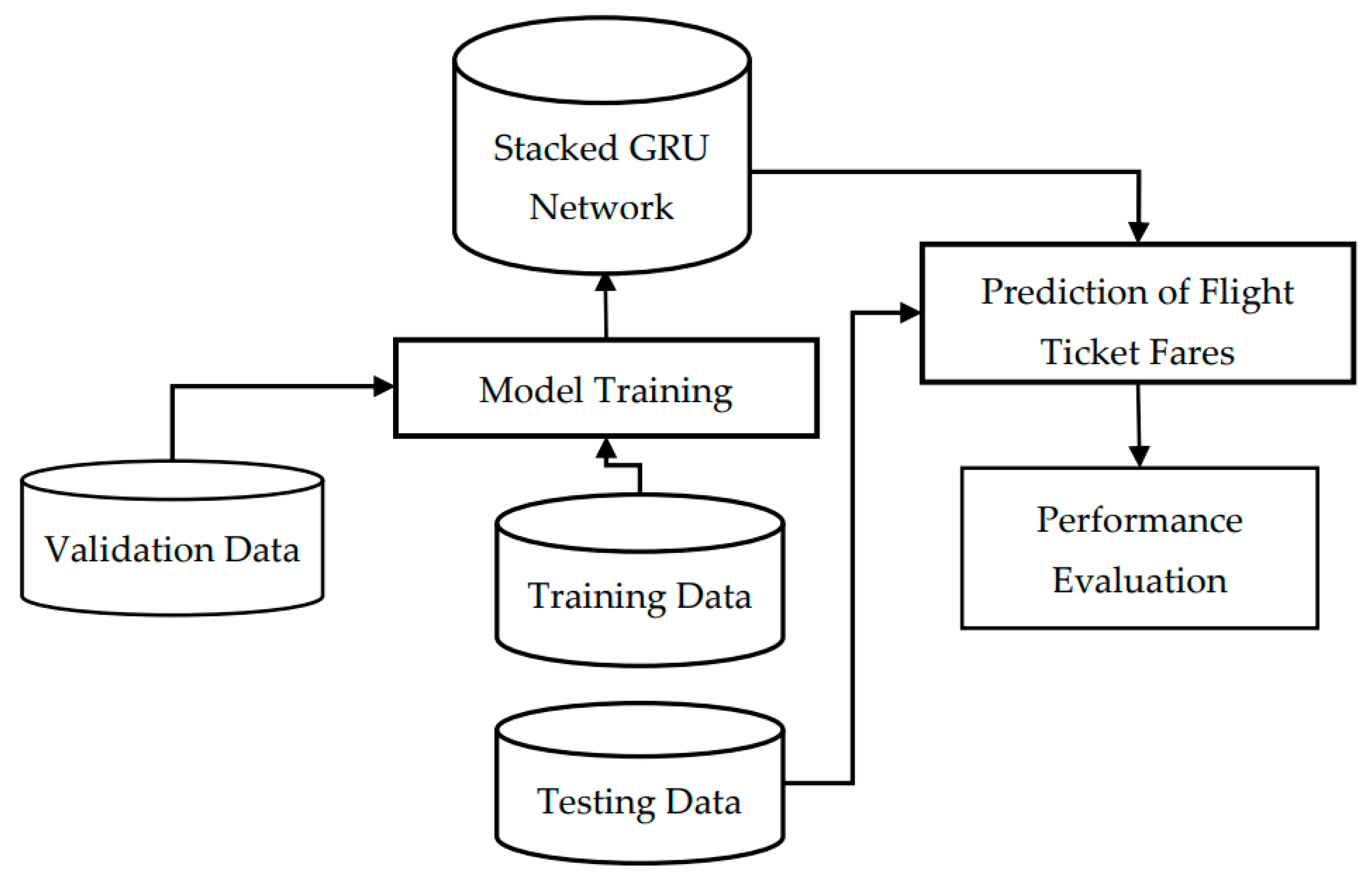

3. Methodology

3.1. Data Source

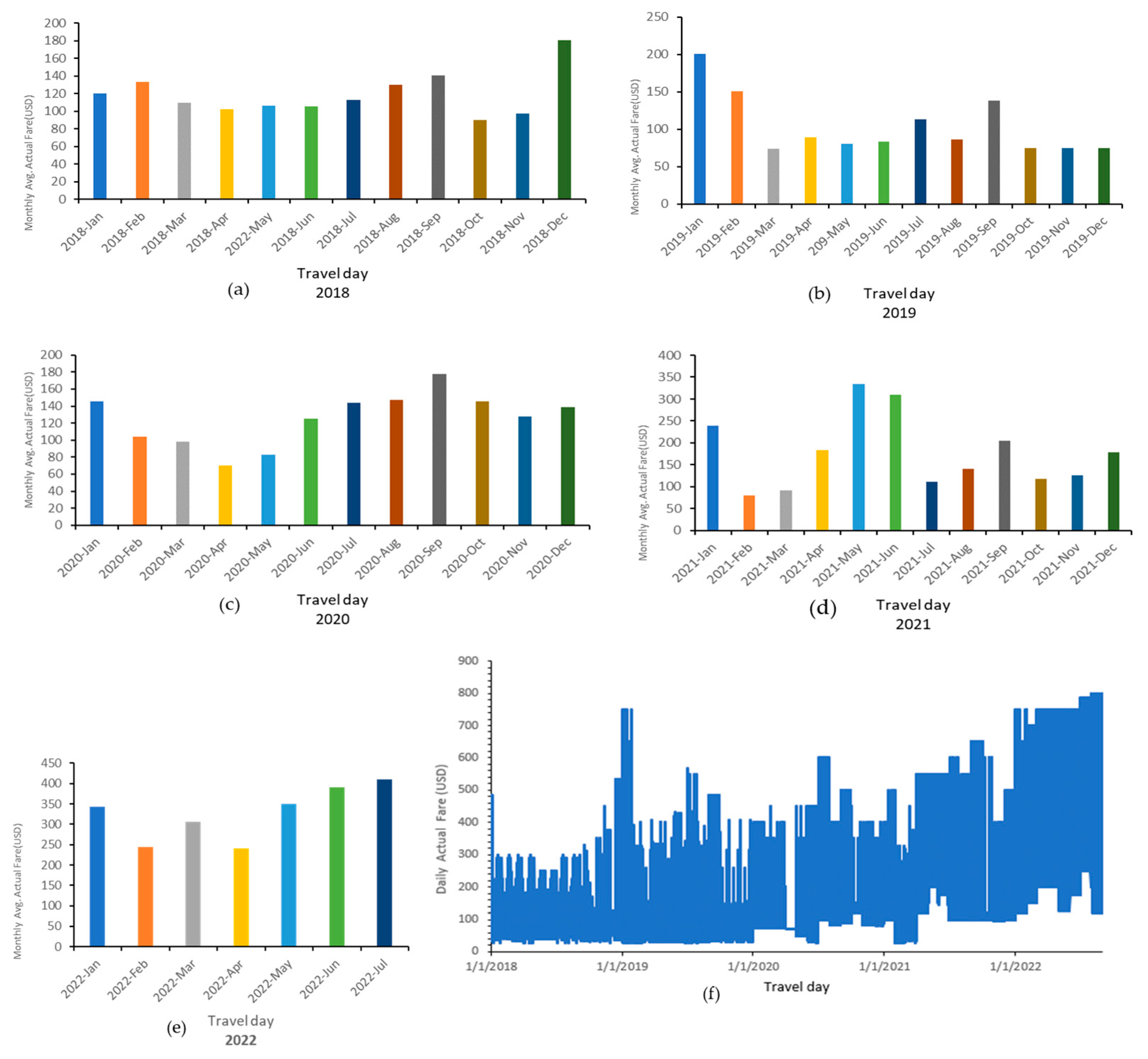

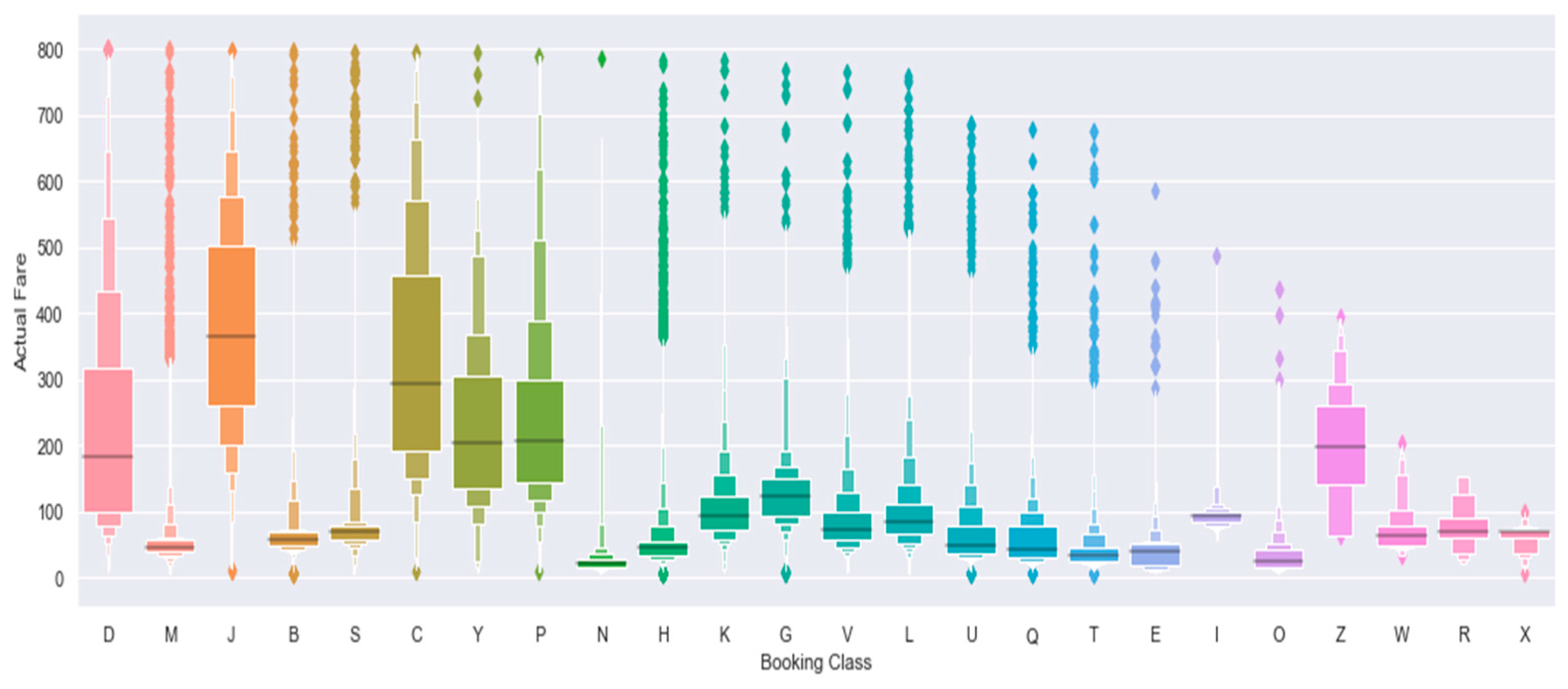

3.2. Preliminary Data Analysis

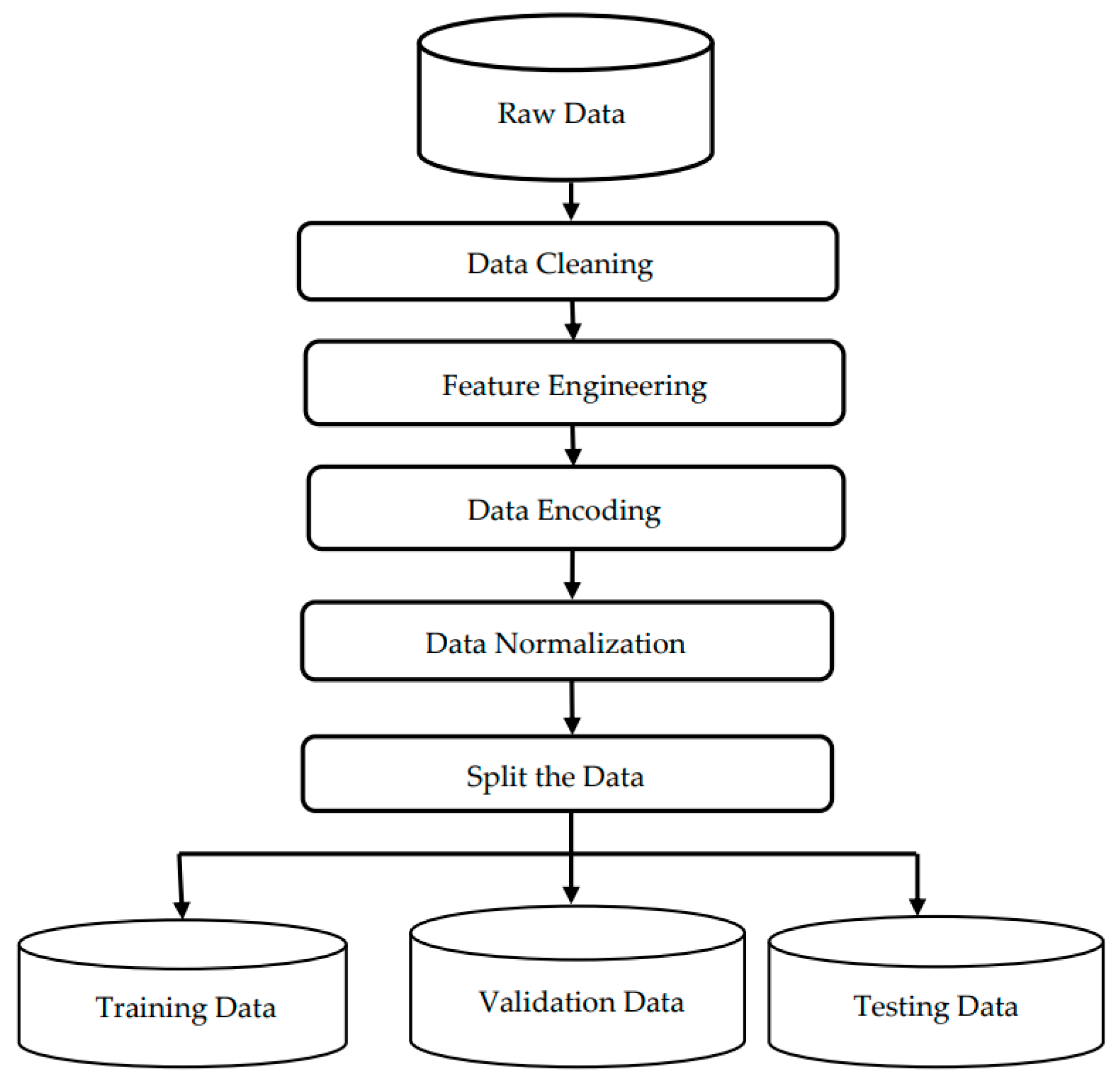

3.3. Data Preprocessing

3.4. Long Short-Term Memory

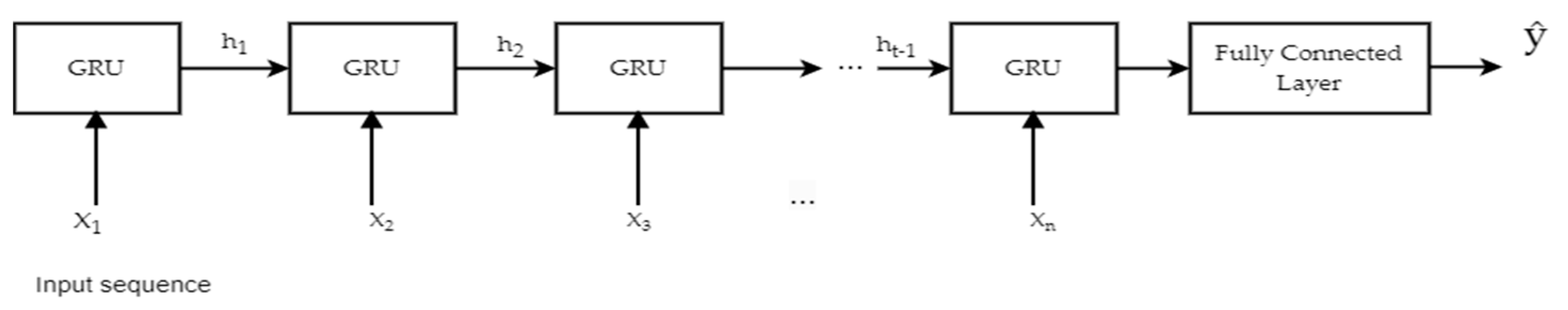

3.5. GRU Model

Algorithm 1. Stacked GRU flight fare prediction model.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


# Step 1: Define the StackedGRU class
class StackedGRU(nn.Module):
    # Step 2: Define the constructor to initialize the model parameters.
    # seq_len (not in the original listing): time steps per window, i.e. the
    # sequence_length of Steps 10-14; it sizes the output layer.
    def __init__(self, input_size, hidden_size, output_size, num_layers,
                 dropout, window_size=1, seq_len=1):
        super(StackedGRU, self).__init__()
        # Step 3: Set the model parameters
        self.input_size = input_size    # Size of input layer
        self.hidden_size = hidden_size  # Size of hidden layers
        self.output_size = output_size  # Size of output layer
        self.num_layers = num_layers    # Number of layers in the stacked GRU model
        self.dropout = dropout          # Dropout probability
        self.window_size = window_size  # Number of time steps to include in each window
        self.seq_len = seq_len          # Time steps per window
        # Step 4: Create a list of GRU layers
        self.gru_layers = nn.ModuleList()
        # Step 5: Add the first GRU layer. Each nn.GRU here has a single layer,
        # so dropout is applied explicitly in forward() instead of being passed
        # to nn.GRU (PyTorch warns when dropout > 0 and num_layers == 1).
        self.gru_layers.append(nn.GRU(input_size, hidden_size, batch_first=True))
        # Step 6: Add additional GRU layers if num_layers > 1
        for _ in range(num_layers - 1):
            self.gru_layers.append(nn.GRU(hidden_size, hidden_size, batch_first=True))
        # Step 7: Create the output layer; after Step 14 each row holds
        # hidden_size * seq_len features
        self.linear = nn.Linear(hidden_size * seq_len, output_size)

    # Step 8: Define the forward pass through the stacked GRU model
    def forward(self, x):
        # x: (batch_size, window_size * seq_len, input_size)
        # Step 9: Reshape the input into windows of size self.window_size
        x = x.view(x.shape[0], -1, self.window_size, self.input_size)
        # Step 10: Transpose to (batch_size, window_size, sequence_length, input_size)
        x = x.transpose(1, 2)
        # Step 11: Flatten to (batch_size * window_size, sequence_length, input_size)
        x = x.contiguous().view(-1, x.shape[2], x.shape[3])
        # Step 12: Pass the input through each GRU layer in the stacked model
        for i in range(self.num_layers):
            # Step 12a: Run the current GRU layer
            output, _ = self.gru_layers[i](x)
            # Step 12b: Apply dropout to the output (active only in training mode)
            output = F.dropout(output, p=self.dropout, training=self.training)
            # Step 12c: The output of this layer is the input to the next layer
            x = output
        # Step 13: Reshape to (batch_size, window_size, hidden_size * sequence_length)
        output = output.contiguous().view(-1, self.window_size, output.shape[2] * output.shape[1])
        # Step 14: Flatten to (batch_size * window_size, hidden_size * sequence_length)
        output = output.view(-1, output.shape[2])
        # Step 15: Pass the final output through the output layer
        out = self.linear(output)
        # Step 16: Reshape to (batch_size, window_size, output_size)
        out = out.view(-1, self.window_size, self.output_size)
        return out
```
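The windowing in Steps 9 to 11 interleaves time steps: with window_size = w, window k collects steps k, k + w, k + 2w, and so on. The NumPy trace below reproduces the reshapes stated in the step comments on a toy input (a batch of 1, six time steps, one feature, w = 2); these sizes are illustrative assumptions, and NumPy's `reshape` on a transposed array plays the role of `contiguous().view()`.

```python
import numpy as np

batch_size, time_steps, input_size, window_size = 1, 6, 1, 2

# Toy input (batch_size, time_steps, input_size); the values 0..5 label each time step
x = np.arange(batch_size * time_steps * input_size, dtype=float).reshape(
    batch_size, time_steps, input_size)

# Step 9: (batch_size, sequence_length, window_size, input_size)
x9 = x.reshape(batch_size, -1, window_size, input_size)
# Step 10: swap axes 1 and 2 -> (batch_size, window_size, sequence_length, input_size)
x10 = x9.transpose(0, 2, 1, 3)
# Step 11: (batch_size * window_size, sequence_length, input_size)
x11 = x10.reshape(-1, x10.shape[2], x10.shape[3])

print(x11.shape)              # (2, 3, 1)
print(x11[..., 0].tolist())   # [[0.0, 2.0, 4.0], [1.0, 3.0, 5.0]]
```

Each of the w strided sub-sequences is then fed to the GRU stack as a separate sequence, which is why the leading dimension grows to batch_size × window_size.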

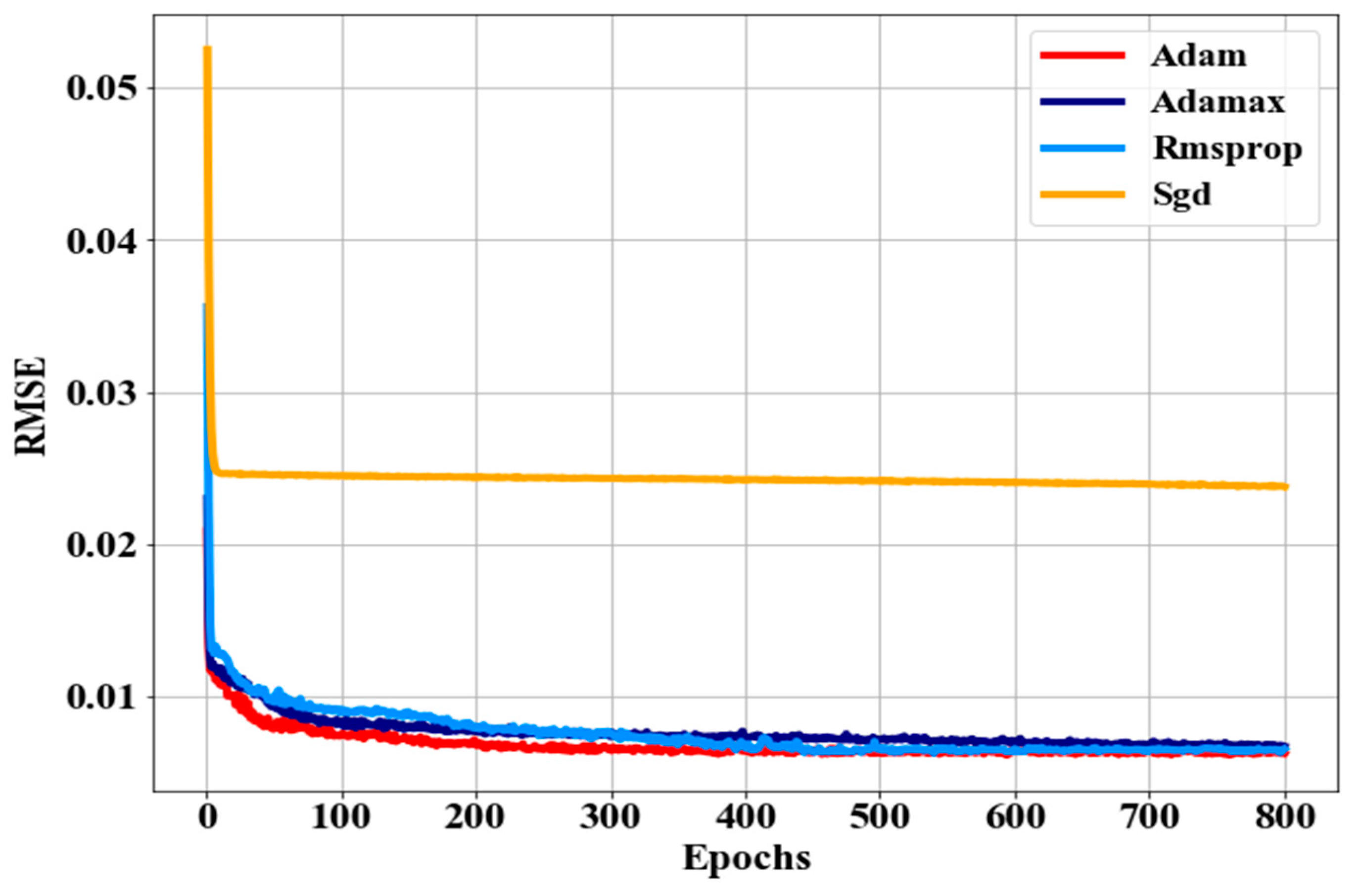

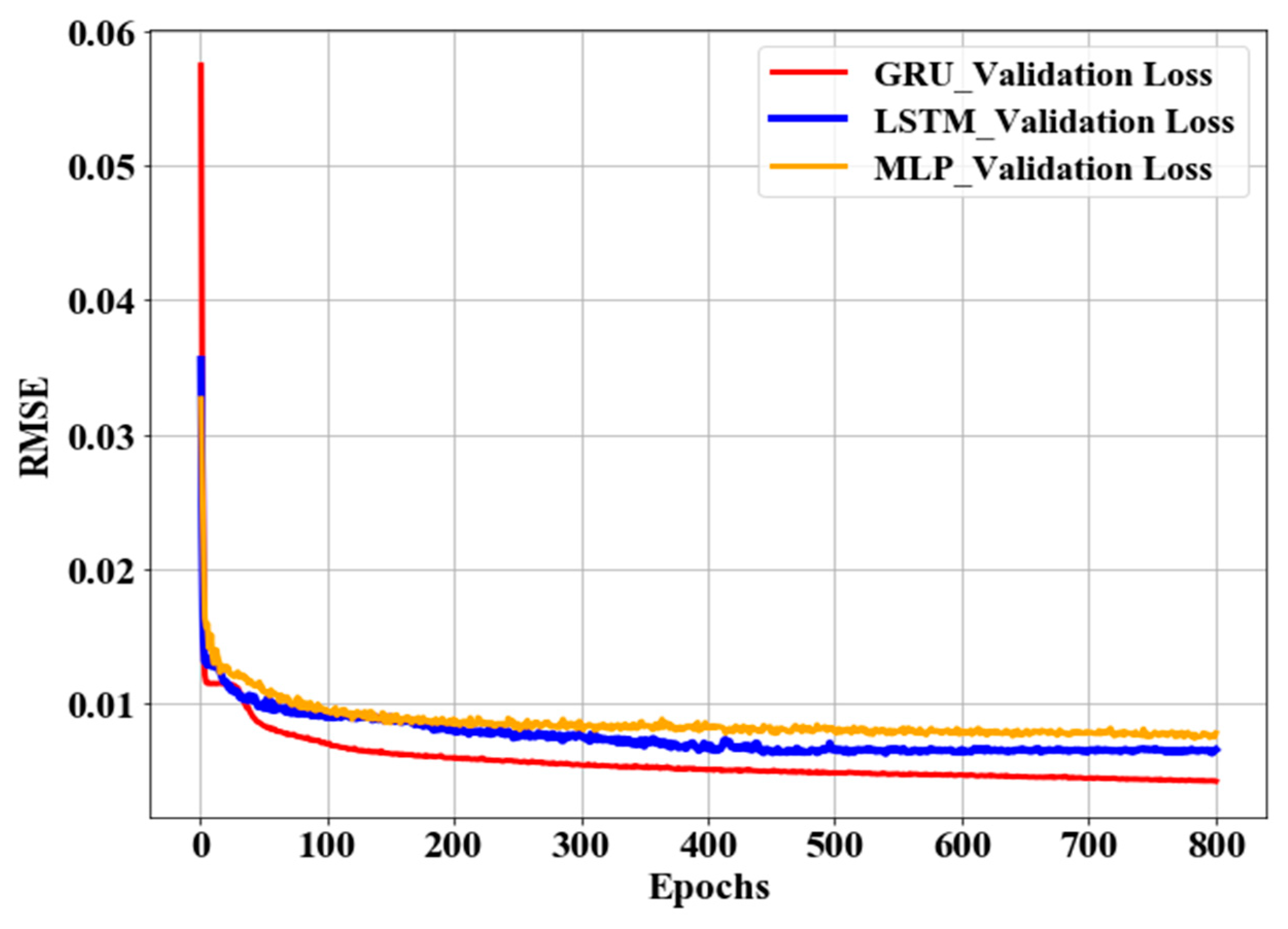

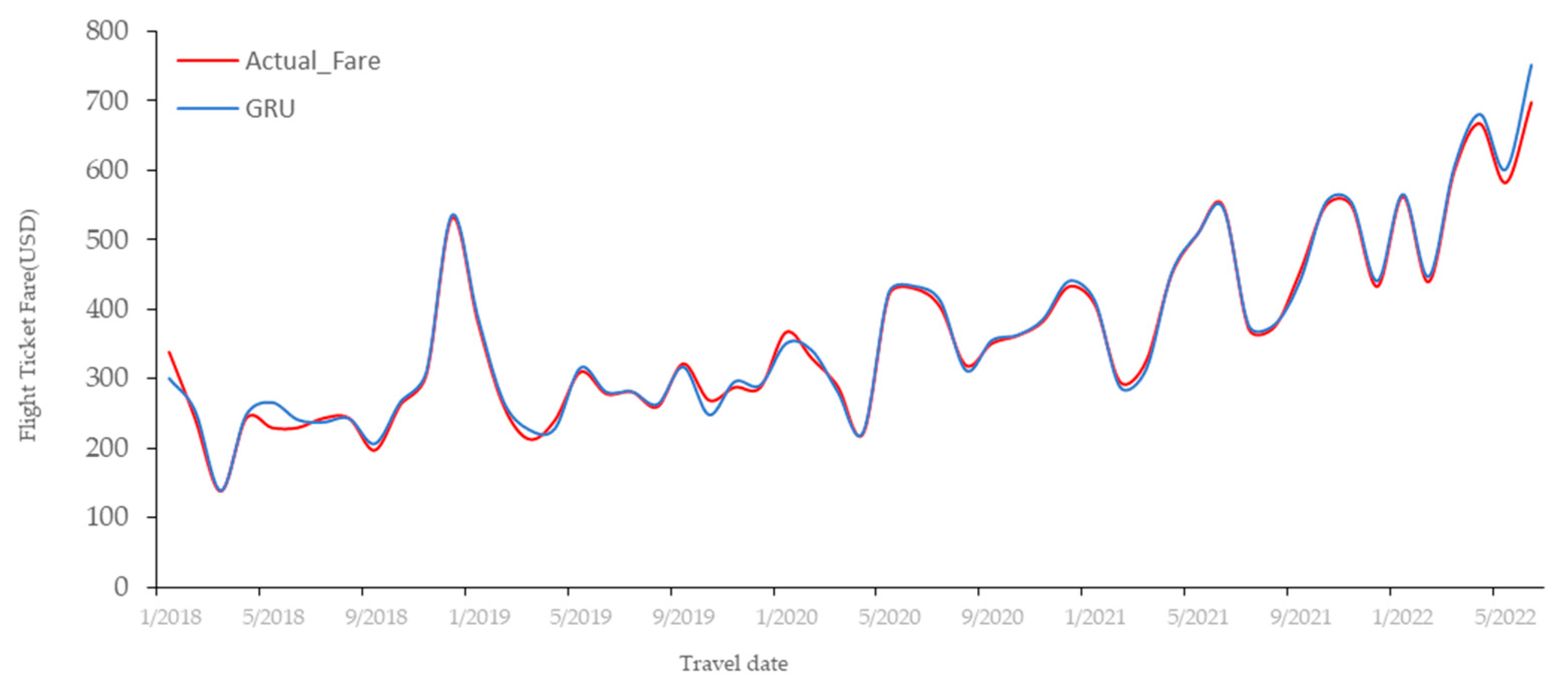

4. Experiments and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Das, R.; Bo, R.; Chen, H.; Rehman, W.U.; Wunsch, D. Forecasting Nodal Price Difference Between Day-Ahead and Real-Time Electricity Markets Using Long-Short Term Memory and Sequence-to-Sequence Networks. IEEE Access 2022, 10, 832–843.

2. Jing, Y.; Guo, S.; Chen, F.; Wang, X.; Li, K. Dynamic Differential Pricing of High-Speed Railway Based on Improved GBDT Train Classification and Bootstrap Time Node Determination. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16854–16866.

3. Wu, Y.; Cao, J.; Tan, Y.; Xiao, Q. A Data-Driven Agent-Based Simulator for Air Ticket Sales. In Computer Supported Cooperative Work and Social Computing; Springer: Berlin/Heidelberg, Germany, 2019; pp. 212–226.

4. Yazdi, M.F.; Kamel, S.R.; Chabok, S.J.M.; Kheirabadi, M. Flight delay prediction based on deep learning and Levenberg-Marquart algorithm. J. Big Data 2020, 7, 1–28.

5. Bukhari, A.H.; Raja, M.A.Z.; Sulaiman, M.; Islam, S.; Shoaib, M.; Kumam, P. Fractional Neuro-Sequential ARFIMA-LSTM for Financial Market Forecasting. IEEE Access 2020, 8, 71326–71338.

6. Henrique, B.M.; Sobreiro, V.A.; Kimura, H. Literature review: Machine learning techniques applied to financial market prediction. Expert Syst. Appl. 2019, 124, 226–251.

7. Sahu, S.K.; Mokhade, A.; Bokde, N.D. An Overview of Machine Learning, Deep Learning, and Reinforcement Learning-Based Techniques in Quantitative Finance: Recent Progress and Challenges. Appl. Sci. 2023, 13, 1956.

8. Fayek, H.M.; Lech, M.; Cavedon, L. Evaluating deep learning architectures for Speech Emotion Recognition. Neural Netw. 2017, 92, 60–68.

9. Xiao, C.; Sutanto, D.; Muttaqi, K.M.; Zhang, M.; Meng, K.; Dong, Z.Y. Online Sequential Extreme Learning Machine Algorithm for Better Predispatch Electricity Price Forecasting Grids. IEEE Trans. Ind. Appl. 2021, 57, 1860–1871.

10. Minh, D.L.; Sadeghi-Niaraki, A.; Huy, H.D.; Min, K.; Moon, H. Deep Learning Approach for Short-Term Stock Trends Prediction Based on Two-Stream Gated Recurrent Unit Network. IEEE Access 2018, 6, 55392–55404.

11. Xu, X.; Zhang, Y. Soybean and Soybean Oil Price Forecasting through the Nonlinear Autoregressive Neural Network (NARNN) and NARNN with Exogenous Inputs (NARNN–X). Intell. Syst. Appl. 2022, 13, 200061.

12. Jianwei, E.; Ye, J.; Jin, H. A novel hybrid model on the prediction of time series and its application for the gold price analysis and forecasting. Phys. A Stat. Mech. Its Appl. 2019, 527, 121454.

13. Branda, F.; Marozzo, F.; Talia, D. Ticket Sales Prediction and Dynamic Pricing Strategies in Public Transport. Big Data Cogn. Comput. 2020, 4, 36.

14. Al Shehhi, M.; Karathanasopoulos, A. Forecasting hotel room prices in selected GCC cities using deep learning. J. Hosp. Tour. Manag. 2019, 42, 40–50.

15. Zhou, H.; Li, W.; Jiang, Z.; Cai, F.; Xue, Y. Flight Departure Time Prediction Based on Deep Learning. Aerospace 2022, 9, 394.

16. Meng, T.L.; Khushi, M. Reinforcement Learning in Financial Markets. Data 2019, 4, 110.

17. Ugurlu, U.; Oksuz, I.; Tas, O. Electricity Price Forecasting Using Recurrent Neural Networks. Energies 2018, 11, 1255.

18. Tanwar, S.; Patel, N.P.; Patel, S.N.; Patel, J.R.; Sharma, G.; Davidson, I.E. Deep Learning-Based Cryptocurrency Price Prediction Scheme With Inter-Dependent Relations. IEEE Access 2021, 9, 138633–138646.

19. Qi, L.; Khushi, M.; Poon, J. Event-Driven LSTM For Forex Price Prediction. In Proceedings of the 2020 IEEE Asia-Pacific Conference on Computer Science and Data Engineering (CSDE), Gold Coast, Australia, 16–18 December 2020; pp. 1–6.

20. Hamayel, M.J.; Owda, A.Y. A Novel Cryptocurrency Price Prediction Model Using GRU, LSTM and bi-LSTM Machine Learning Algorithms. AI 2021, 2, 477–496.

21. Kim, G.I.; Jang, B. Petroleum Price Prediction with CNN-LSTM and CNN-GRU Using Skip-Connection. Mathematics 2023, 11, 547.

22. Subramanian, R.R.; Murali, M.S.; Deepak, B.; Deepak, P.; Reddy, H.N.; Sudharsan, R.R. Airline Fare Prediction Using Machine Learning Algorithms. In Proceedings of the 2022 4th International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 January 2022; pp. 877–884.

23. Guo, Y.; Han, S.; Shen, C.; Li, Y.; Yin, X.; Bai, Y. An Adaptive SVR for High-Frequency Stock Price Forecasting. IEEE Access 2018, 6, 11397–11404.

24. Abdella, J.A.; Zaki, N.M.; Shuaib, K.; Khan, F. Airline ticket price and demand prediction: A survey. J. King Saud Univ. Comput. Inf. Sci. 2021, 33, 375–391.

25. Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

26. Chen, Y.; Cao, J.; Feng, S.; Tan, Y. An ensemble learning based approach for building airfare forecast service. In Proceedings of the 2015 IEEE International Conference on Big Data (Big Data), Santa Clara, CA, USA, 29 October–1 November 2015; pp. 964–969.

27. Lantseva, A.; Mukhina, K.; Nikishova, A.; Ivanov, S.; Knyazkov, K. Data-driven Modeling of Airlines Pricing. Procedia Comput. Sci. 2015, 66, 267–276.

28. Liu, Y.; Gong, C.; Yang, L.; Chen, Y. DSTP-RNN: A dual-stage two-phase attention-based recurrent neural network for long-term and multivariate time series prediction. Expert Syst. Appl. 2020, 143, 113082.

29. Liu, J.; Huang, X. Forecasting Crude Oil Price Using Event Extraction. IEEE Access 2021, 9, 149067–149076.

30. Busari, G.A.; Lim, D.H. Crude oil price prediction: A comparison between AdaBoost-LSTM and AdaBoost-GRU for improving forecasting performance. Comput. Chem. Eng. 2021, 155, 107513.

31. Zhang, Y.; Na, S. A Novel Agricultural Commodity Price Forecasting Model Based on Fuzzy Information Granulation and MEA-SVM Model. Math. Probl. Eng. 2018, 2018, 2540681.

32. Tuli, M.; Singh, L.; Tripathi, S.; Malik, N. Prediction of Flight Fares Using Machine Learning. In Proceedings of the 2023 13th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 19–20 January 2023.

33. Tziridis, K.; Kalampokas, T.; Papakostas, G.A.; Diamantaras, K.I. Airfare prices prediction using machine learning techniques. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017.

34. Vu, V.H.; Minh, Q.T.; Phung, P.H. An airfare prediction model for developing markets. In Proceedings of the 2018 International Conference on Information Networking (ICOIN), Chiang Mai, Thailand, 10–12 January 2018.

35. Prasath, S.N.; Eliyas, S. A Prediction of Flight Fare Using K-Nearest Neighbors. In Proceedings of the 2022 2nd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), Greater Noida, India, 28–29 April 2022; pp. 1347–1351.

36. Al-Kwifi, O.S.; Frankwick, G.L.; Ahmed, Z.U. Achieving rapid internationalization of sub-Saharan African firms: Ethiopian Airlines’ operations under challenging conditions. J. Bus. Res. 2020, 119, 663–673.

37. Singh, G.; Singh, J.; Prabha, C. Data visualization and its key fundamentals: A comprehensive survey. In Proceedings of the 2022 7th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 22–24 June 2022.

38. Wu, A.; Wang, Y.; Shu, X.; Moritz, D.; Cui, W.; Zhang, H.; Zhang, D.; Qu, H. AI4VIS: Survey on Artificial Intelligence Approaches for Data Visualization. IEEE Trans. Vis. Comput. Graph. 2021, 28, 5049–5070.

39. He, Z.; Zhou, J.; Dai, H.N.; Wang, H. Gold Price Forecast Based on LSTM-CNN Model. In Proceedings of the 2019 IEEE Intl Conference on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), Fukuoka, Japan, 5–8 August 2019; pp. 1046–1053.

40. Chen, S.; Zhou, C. Stock Prediction Based on Genetic Algorithm Feature Selection and Long Short-Term Memory Neural Network. IEEE Access 2021, 9, 9066–9072.

41. Wang, D.; Fan, J.; Fu, H.; Zhang, B. Research on Optimization of Big Data Construction Engineering Quality Management Based on RNN-LSTM. Complexity 2018, 2018, 1–16.

42. Jovanovic, L.; Jovanovic, D.; Bacanin, N.; Stakic, A.J.; Antonijevic, M.; Magd, H.; Thirumalaisamy, R.; Zivkovic, M. Multi-Step Crude Oil Price Prediction Based on LSTM Approach Tuned by Salp Swarm Algorithm with Disputation Operator. Sustainability 2022, 14, 14616.

43. Li, C.; Qian, G. Stock Price Prediction Using a Frequency Decomposition Based GRU Transformer Neural Network. Appl. Sci. 2022, 13, 222.

44. Zhu, D.; Wang, Y.; Zhang, F. Energy Price Prediction Integrated with Singular Spectrum Analysis and Long Short-Term Memory Network against the Background of Carbon Neutrality. Energies 2022, 15, 8128.

45. Yurtsever, M. Gold Price Forecasting Using LSTM, Bi-LSTM and GRU. Eur. J. Sci. Technol. 2021, 31, 341–347.

46. Dehnaw, A.M.; Manie, Y.C.; Chen, Y.Y.; Chiu, P.H.; Huang, H.W.; Chen, G.W.; Peng, P.C. Design Reliable Bus Structure Distributed Fiber Bragg Grating Sensor Network Using Gated Recurrent Unit Network. Sensors 2020, 20, 7355.

47. Tolosana, R.; Vera-Rodriguez, R.; Fierrez, J.; Ortega-Garcia, J. Exploring Recurrent Neural Networks for On-Line Handwritten Signature Biometrics. IEEE Access 2018, 6, 5128–5138.

48. Deng, Y.; Wang, L.; Jia, H.; Tong, X.; Li, F. A Sequence-to-Sequence Deep Learning Architecture Based on Bidirectional GRU for Type Recognition and Time Location of Combined Power Quality Disturbance. IEEE Trans. Ind. Inform. 2019, 15, 4481–4493.

49. Zhang, S.; Luo, J.; Wang, S.; Liu, F. Oil price forecasting: A hybrid GRU neural network based on decomposition–reconstruction methods. Expert Syst. Appl. 2023, 218, 119617.

50. Willmott, C.J.; Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 2005, 30, 79–82.

51. Zhang, D. A Coefficient of Determination for Generalized Linear Models. Am. Stat. 2017, 71, 310–316.

| Ref. | Methods | Features | Dataset | Metrics |
|---|---|---|---|---|
| Tuli et al. [32] | linear regression, support vector regressor, k-neighbor regressor, decision tree regressor, bagging regressor, XGBoost regressor, light gradient boosting machine, extra tree regressor, and artificial neural network | airline, date of journey, source, destination, route, departure time, arrival time, duration, and total stops | 10,683 records | mean absolute error and R² |
| Prasath et al. [35] | k-nearest neighbors | origin, destination, date of departure, time of departure, time of arrival, total fare, and airways | not given | root mean square error and R² |
| Tziridis et al. [33] | multilayer perceptrons, generalized regression neural network, extreme learning machine, random forest regression tree, regression tree, bagging regression tree, regression SVM, and linear regression | days before departure, arrival time, amount of free luggage, departure time, number of intermediate stops, holiday, time of day, day of the week, and number of intermediates | 1814 flights for an international route | mean square error, mean absolute error, and R² |
| Vu et al. [34] | random forest and multilayer perceptrons | departure date, arrival date, departure time, arrival time, fare class, date of purchase, number of stops, price, departure airport, arrival airport, airline, and flight number | 51,000 records | mean absolute percentage error and R² |
| Chen et al. [26] | ensemble-based learning algorithm | prices of the same itinerary, prices of recent itineraries before the target day, prices of itineraries with the same day of the week, and prices of itineraries with the same date of the month | five international routes over 110 days | mean absolute percentage error |

| No. | Origin | Destination | Domestic Flight | International Flight |
|---|---|---|---|---|
| 1 | Addis Ababa (ADD) | Asosa (ASO) | Yes | No |
| 2 | Djibouti (JIB), Djibouti | Addis Ababa (ADD) | No | Yes |
| 3 | Addis Ababa (ADD) | Entebbe (EBB), Uganda | No | Yes |
| 4 | Hargeisa (HGA), Somalia | Addis Ababa (ADD) | No | Yes |
| 5 | Addis Ababa (ADD) | Hawassa (AWA) | Yes | No |
| 6 | Bahir Dar (BJR) | Addis Ababa (ADD) | Yes | No |
| 7 | Dire Dawa (DIR) | Addis Ababa (ADD) | Yes | No |

| | Holiday | Booking Class B | Week Day | Point of Ticket Issuance | Booking Class H | Seg_Dest | Season | Class of Service | Op Flt Num | Actual Fare |
|---|---|---|---|---|---|---|---|---|---|---|
| Holiday | 1 | −0.71 | 0.18 | 0.75 | 0.19 | 0.31 | 0.51 | 0.73 | 0.23 | 0.97 |
| Booking Class B | −0.71 | 1 | 0.21 | 0.36 | −0.05 | 0.63 | 0.32 | 0.67 | 0.01 | 0.44 |
| Week Day | 0.18 | 0.21 | 1 | 0.30 | 0.02 | 0.16 | 0.01 | 0.95 | −0.01 | 0.75 |
| Point of Ticket Issuance | 0.75 | 0.36 | 0.30 | 1 | 0.72 | −0.06 | 0.53 | 0.57 | 0.02 | 0.78 |
| Booking Class H | 0.19 | −0.05 | 0.02 | 0.72 | 1 | −0.16 | 0.05 | 0.16 | 0.08 | −0.11 |
| Seg_Dest | 0.31 | 0.63 | 0.16 | 0.05 | −0.16 | 1 | 0.79 | 0.08 | 0.51 | 0.92 |
| Season | 0.51 | 0.32 | 0.01 | 0.53 | 0.05 | 0.79 | 1 | 0.58 | 0.07 | 0.67 |
| Class of Service | 0.73 | 0.67 | 0.95 | 0.57 | 0.16 | 0.08 | 0.58 | 1 | 0.19 | 0.84 |
| Op Flt Num | 0.23 | 0.01 | −0.01 | 0.02 | 0.08 | 0.51 | 0.07 | 0.19 | 1 | 0.61 |
| Actual Fare | 0.97 | 0.44 | 0.75 | 0.78 | −0.11 | 0.92 | 0.67 | 0.84 | 0.61 | 1 |
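The correlation matrix does not state which coefficient it reports. Assuming Pearson's r (an assumption), the sketch below computes it in plain Python with a hypothetical helper `pearson`, then ranks the features by the absolute value of their correlation with Actual Fare, using the values copied from the matrix's last row.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Correlations with Actual Fare, copied from the last row of the matrix
fare_corr = {
    "Holiday": 0.97, "Booking Class B": 0.44, "Week Day": 0.75,
    "Point of Ticket Issuance": 0.78, "Booking Class H": -0.11,
    "Seg_Dest": 0.92, "Season": 0.67, "Class of Service": 0.84,
    "Op Flt Num": 0.61,
}

# Rank by strength of association, ignoring the sign
ranking = sorted(fare_corr, key=lambda f: abs(fare_corr[f]), reverse=True)
print(ranking[:3])  # ['Holiday', 'Seg_Dest', 'Class of Service']
```

A high coefficient indicates association only; it does not by itself show that a feature causes the fare to change.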

| Feature | Description |
|---|---|
| Travel Date | The date on which the passenger is scheduled to travel |
| Booking Class | The letter code used by the airline to identify the fare type and restrictions of the ticket purchased by a passenger |
| Class of Service | The class of service for the flight (economy or business) |
| Seg Orig. | The origin or starting point of the travel segment |
| Seg Dest. | The destination or endpoint of the travel segment |
| Distance in Miles | The distance in miles between the origin and destination of the travel segment |
| Duration in Hours | The duration of the travel segment in hours |
| Duration in Minutes | The duration of the travel segment in minutes |
| Airline Code | The code of the airline operating the flight |
| Total Stops | The total number of stops or layovers in the travel segment |
| Op Flt Num | The operating flight number of the airline that is providing the travel segment |
| Flight Type | The type of flight, such as domestic, international, or connecting |
| Pax | The number of passengers traveling on the booking |
| Weekend | Whether the travel segment falls on a weekend |
| Holiday | Whether the travel segment falls on a holiday |
| Season | The season during which the travel segment takes place, such as summer, winter, autumn, or spring |
| Marketing Airline Code | The code of the airline that is marketing the flight |
| Point of Ticket Issuance | The location where the ticket was issued |
| Actual Fare | The actual fare or cost of the travel segment |
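Three of these features (Weekend, Holiday, and Season) can be derived from Travel Date. The paper does not describe how, so the sketch below is a hypothetical helper, `calendar_features`, built on three assumptions to adjust to the real data: a Saturday/Sunday weekend, a caller-supplied set of holiday dates, and the month-to-season table `SEASON_BY_MONTH`, which uses Northern Hemisphere meteorological seasons.

```python
from datetime import date

# Hypothetical month-to-season mapping (Northern Hemisphere meteorological seasons)
SEASON_BY_MONTH = {
    12: "winter", 1: "winter", 2: "winter",
    3: "spring", 4: "spring", 5: "spring",
    6: "summer", 7: "summer", 8: "summer",
    9: "autumn", 10: "autumn", 11: "autumn",
}


def calendar_features(travel_date, holidays=frozenset()):
    """Derive Weekend, Holiday, and Season values from a travel date."""
    return {
        "Weekend": int(travel_date.weekday() >= 5),  # weekday(): 5 = Saturday, 6 = Sunday
        "Holiday": int(travel_date in holidays),     # membership in the supplied holiday set
        "Season": SEASON_BY_MONTH[travel_date.month],
    }


# 7 January 2023 was a Saturday; here it is also treated as a holiday for illustration
print(calendar_features(date(2023, 1, 7), holidays={date(2023, 1, 7)}))
# {'Weekend': 1, 'Holiday': 1, 'Season': 'winter'}
```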

| Parameter | Value |
|---|---|
| Hidden Size | 824, 512, 256, 128, 64 |
| Batch Size | 256 |
| Number of Epochs | 800 |
| Dropout | 0.5 |
| Learning Rate | 0.001 |
| Output Size | 1 |
| Optimizer | Adam |
| Loss Function | Mean Absolute Error |
| Layers | GRU × 5 |
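The table lists five hidden sizes for five GRU layers. One plausible reading, which is an interpretation rather than something the paper states, is that layer i has the i-th hidden size, so each layer's input width equals the previous layer's hidden size and a final linear layer maps the last width (64) to the single output. The hypothetical helper `layer_dims` below derives those pairs; `input_size=18` is a placeholder for the number of encoded input features.

```python
def layer_dims(input_size, hidden_sizes, output_size):
    """Return (in_features, out_features) for each GRU layer and for the output layer."""
    gru = []
    in_features = input_size
    for hidden in hidden_sizes:
        gru.append((in_features, hidden))  # GRU layer: input width -> hidden width
        in_features = hidden               # the next layer consumes this layer's output
    head = (in_features, output_size)      # final linear layer
    return gru, head


HIDDEN_SIZES = [824, 512, 256, 128, 64]  # from the hyperparameter table
gru_dims, head_dim = layer_dims(input_size=18, hidden_sizes=HIDDEN_SIZES, output_size=1)
print(gru_dims)  # [(18, 824), (824, 512), (512, 256), (256, 128), (128, 64)]
print(head_dim)  # (64, 1)
```

Under this reading, each pair would become an `nn.GRU(in_features, hidden, batch_first=True)` module, trained with `torch.optim.Adam(model.parameters(), lr=0.001)` and `nn.L1Loss()` (the mean absolute error loss in the table). Algorithm 1, as listed, instead uses a single `hidden_size` for every layer.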

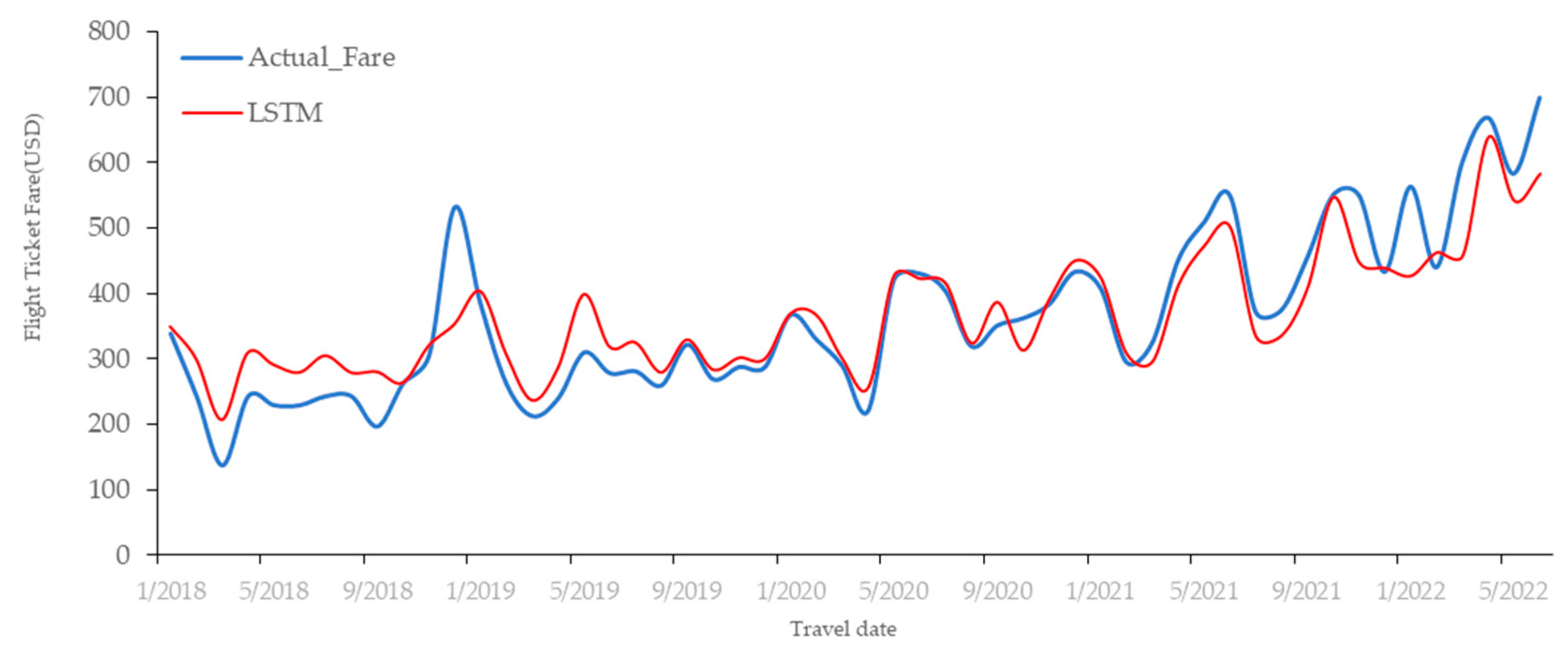

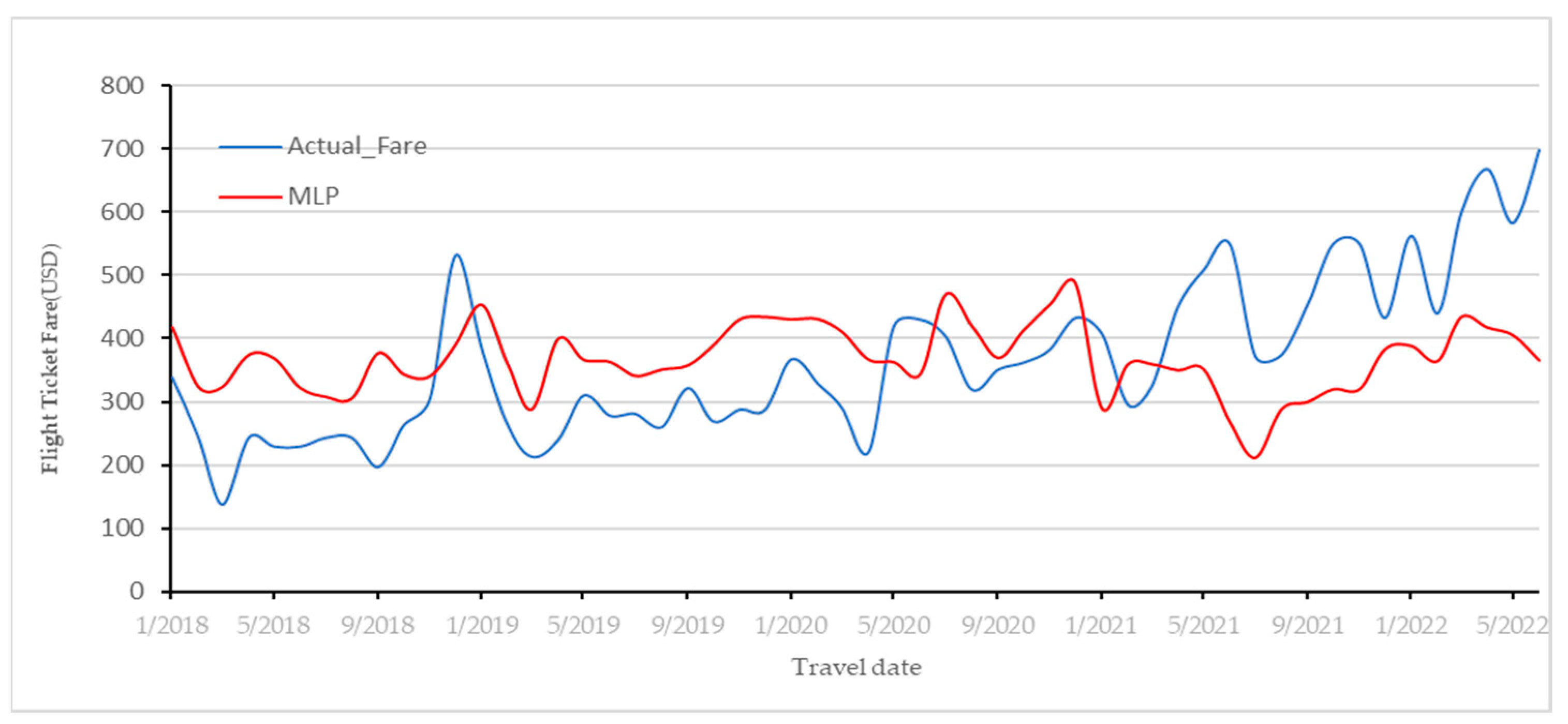

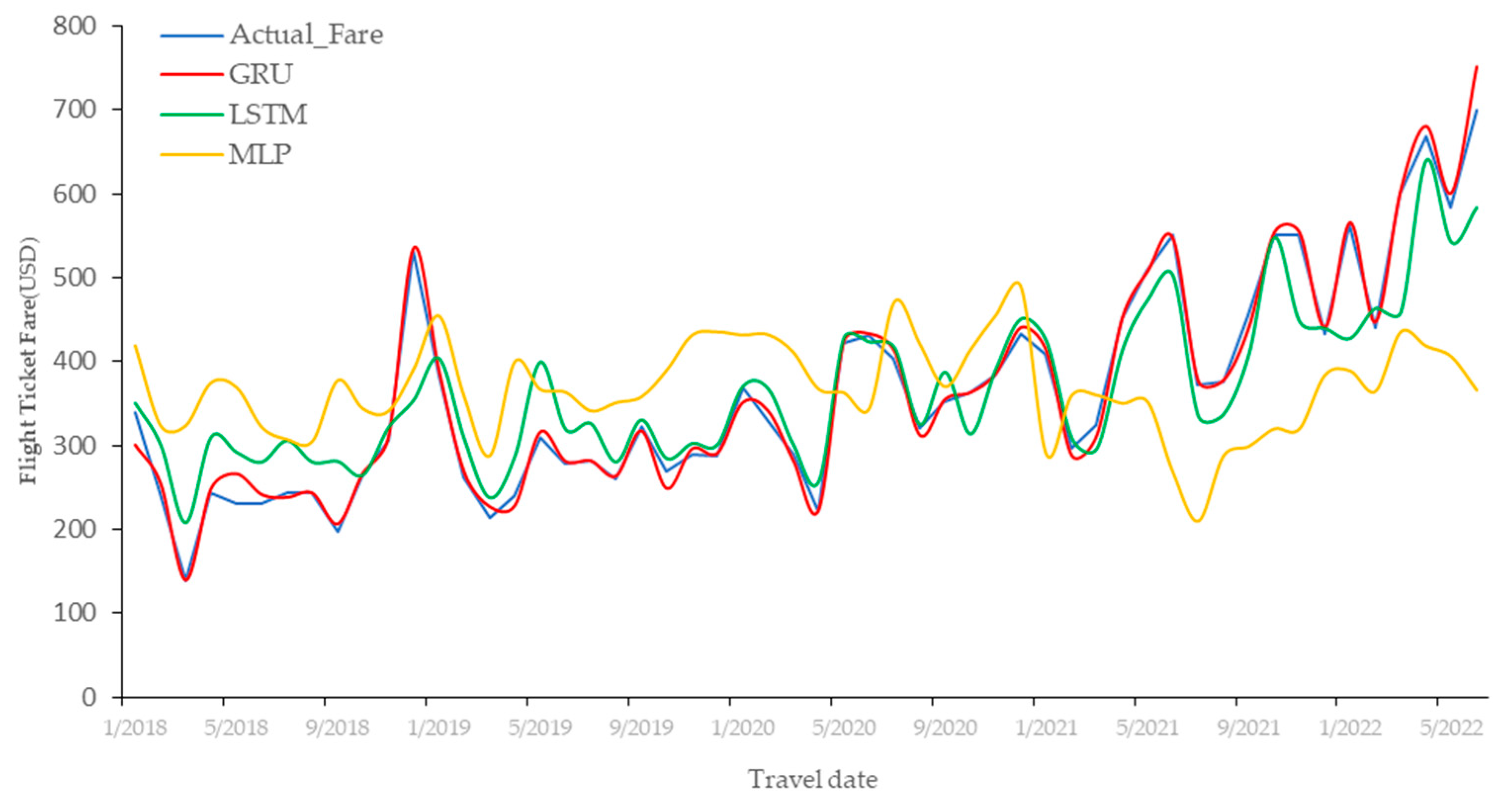

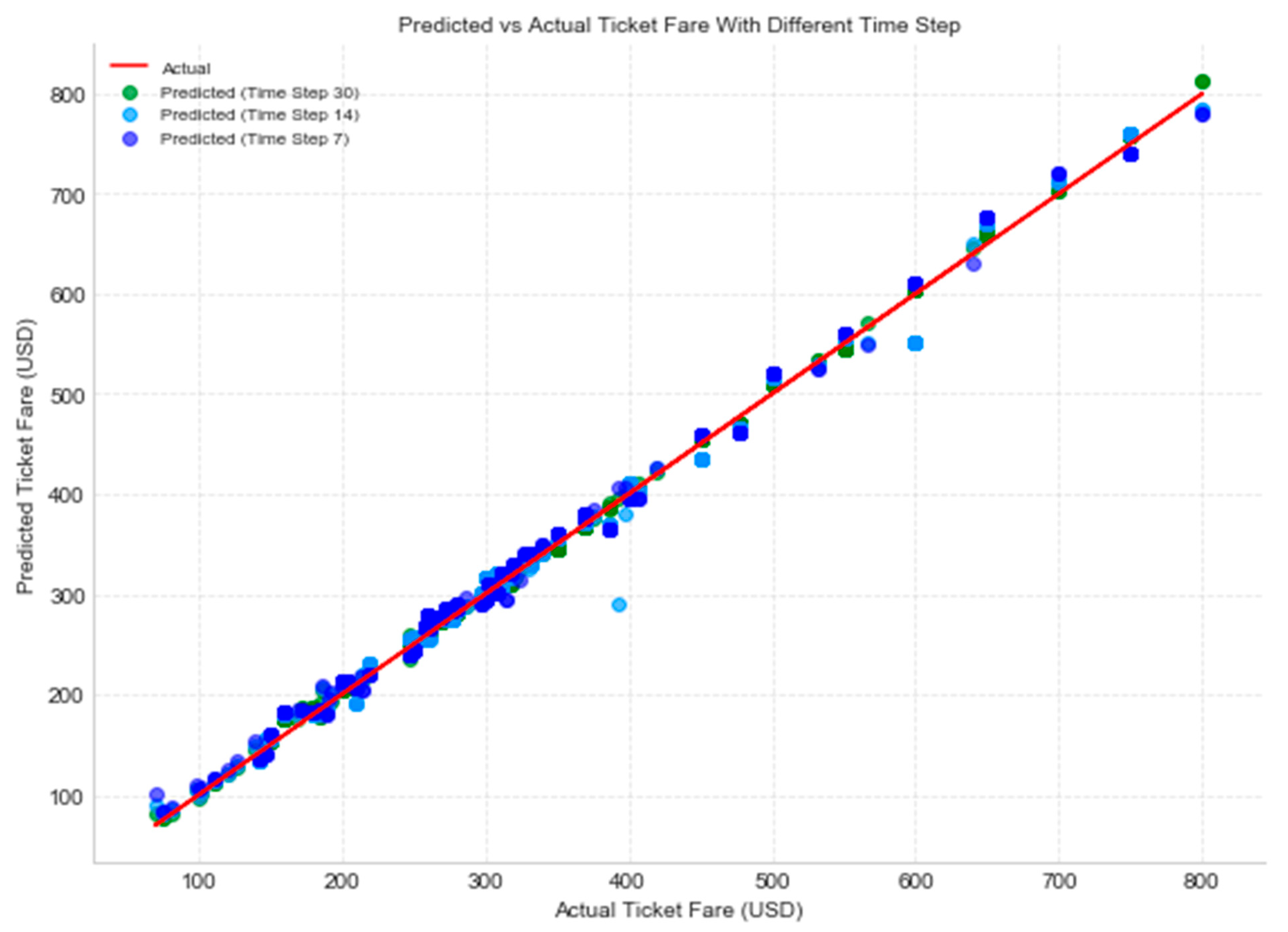

| Model | MAE | RMSE | R² |
|---|---|---|---|
| MLP | 43.50 | 64.93 | 0.60 |
| LSTM | 8.67 | 13.99 | 0.70 |
| GRU | 3.76 | 5.93 | 0.98 |
| ARIMA | 165.67 | 205.73 | 0.51 |
| SVR | 246.76 | 315.69 | 0.27 |
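The three metrics in the comparison can be computed from their standard definitions. The plain-Python sketch below implements MAE, RMSE, and the coefficient of determination R² = 1 − SS_res/SS_tot; the function names and the toy values are illustrative.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean) ** 2 for t in y_true)               # total sum of squares
    return 1.0 - ss_res / ss_tot


y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]
print(mae(y_true, y_pred), rmse(y_true, y_pred), r2(y_true, y_pred))  # 0.25 0.5 0.8
```

Because RMSE squares each error before averaging, it penalizes large misses more heavily than MAE. Applied to the table, the GRU's MAE of 3.76 versus the LSTM's 8.67 is a reduction of about 57%.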

| Average Value of Actual Ticket Fares | Average Value of Predicted Ticket Fares (GRU) | Average Value of Predicted Ticket Fares (LSTM) | Average Value of Predicted Ticket Fares (MLP) |
|---|---|---|---|
| 379.16 | 376.43 | 352.69 | 285.83 |
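Comparing each model's average predicted fare with the average actual fare gives a quick check for systematic bias, although it says nothing about per-ticket errors (which MAE and RMSE capture). The arithmetic on the averages above:

```python
actual_mean = 379.16  # Average Value of Actual Ticket Fares
predicted_means = {"GRU": 376.43, "LSTM": 352.69, "MLP": 285.83}

bias = {}
for model, pred in predicted_means.items():
    gap = pred - actual_mean          # negative: the model under-predicts on average
    pct = 100.0 * gap / actual_mean   # gap relative to the actual average
    bias[model] = (round(gap, 2), round(pct, 2))
    print(f"{model}: {gap:+.2f} ({pct:+.2f}%)")
# GRU: -2.73 (-0.72%)
# LSTM: -26.47 (-6.98%)
# MLP: -93.33 (-24.61%)
```

All three models under-predict on average, and the GRU's average is the closest to the actual value.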


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Degife, W.A.; Lin, B.-S. Deep-Learning-Powered GRU Model for Flight Ticket Fare Forecasting. Appl. Sci. 2023, 13, 6032. https://doi.org/10.3390/app13106032
