Detection of Helmet Use in Motorcycle Drivers Using Convolutional Neural Network

Abstract

1. Introduction

Related Work

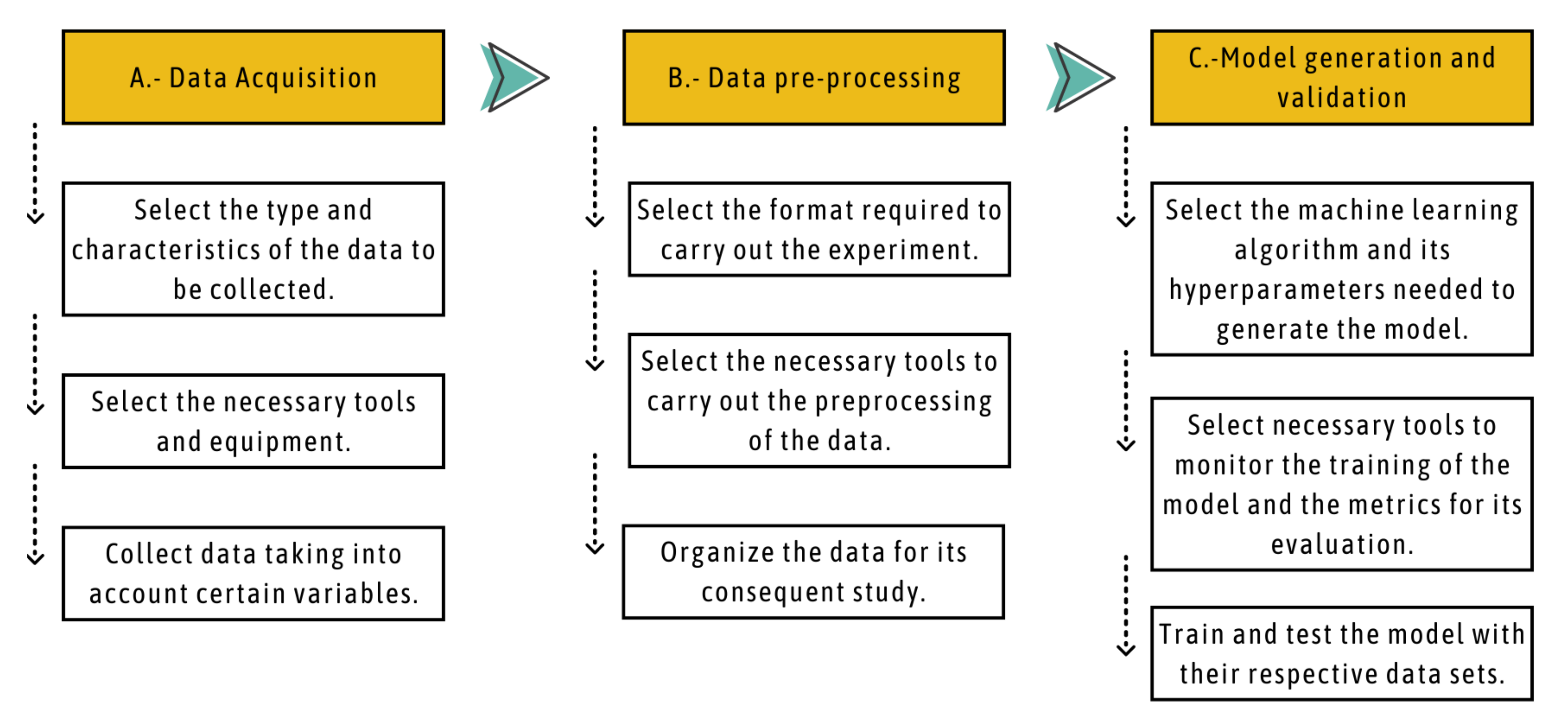

2. Materials and Methods

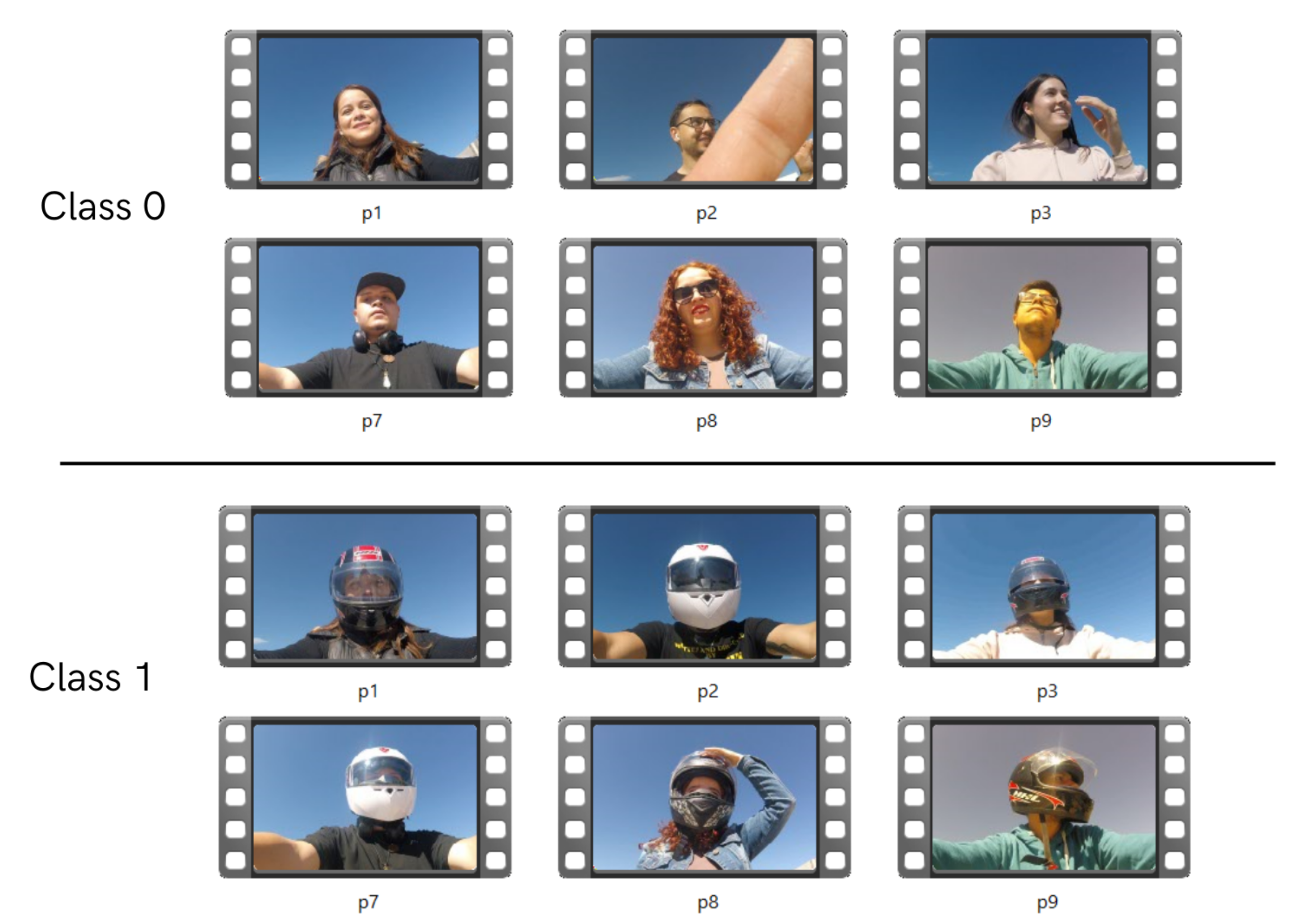

2.1. Data Acquisition

2.2. Data Pre-Processing

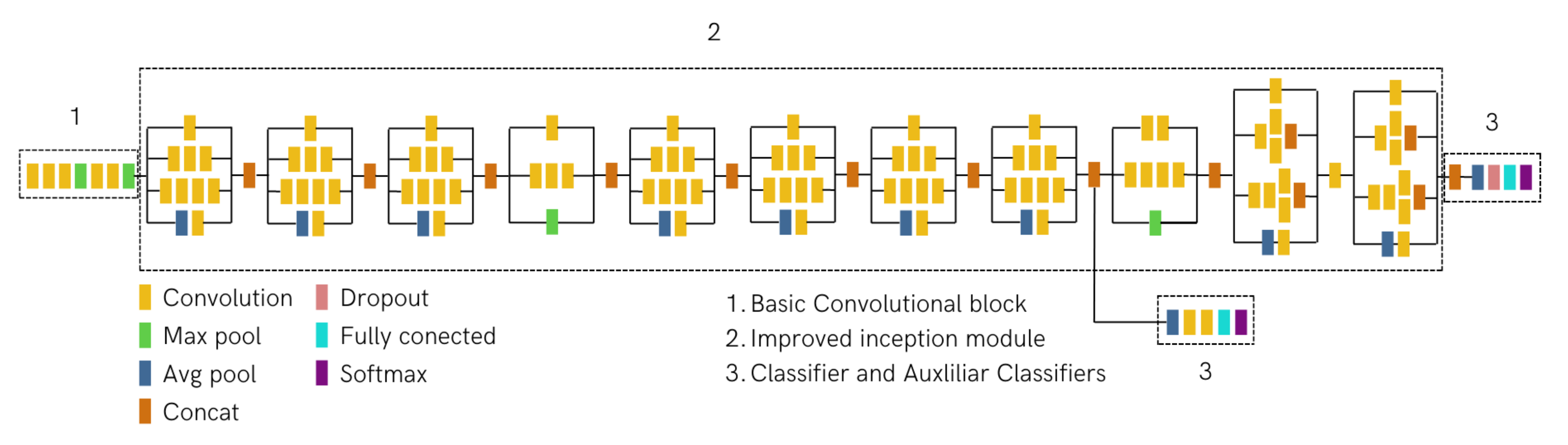

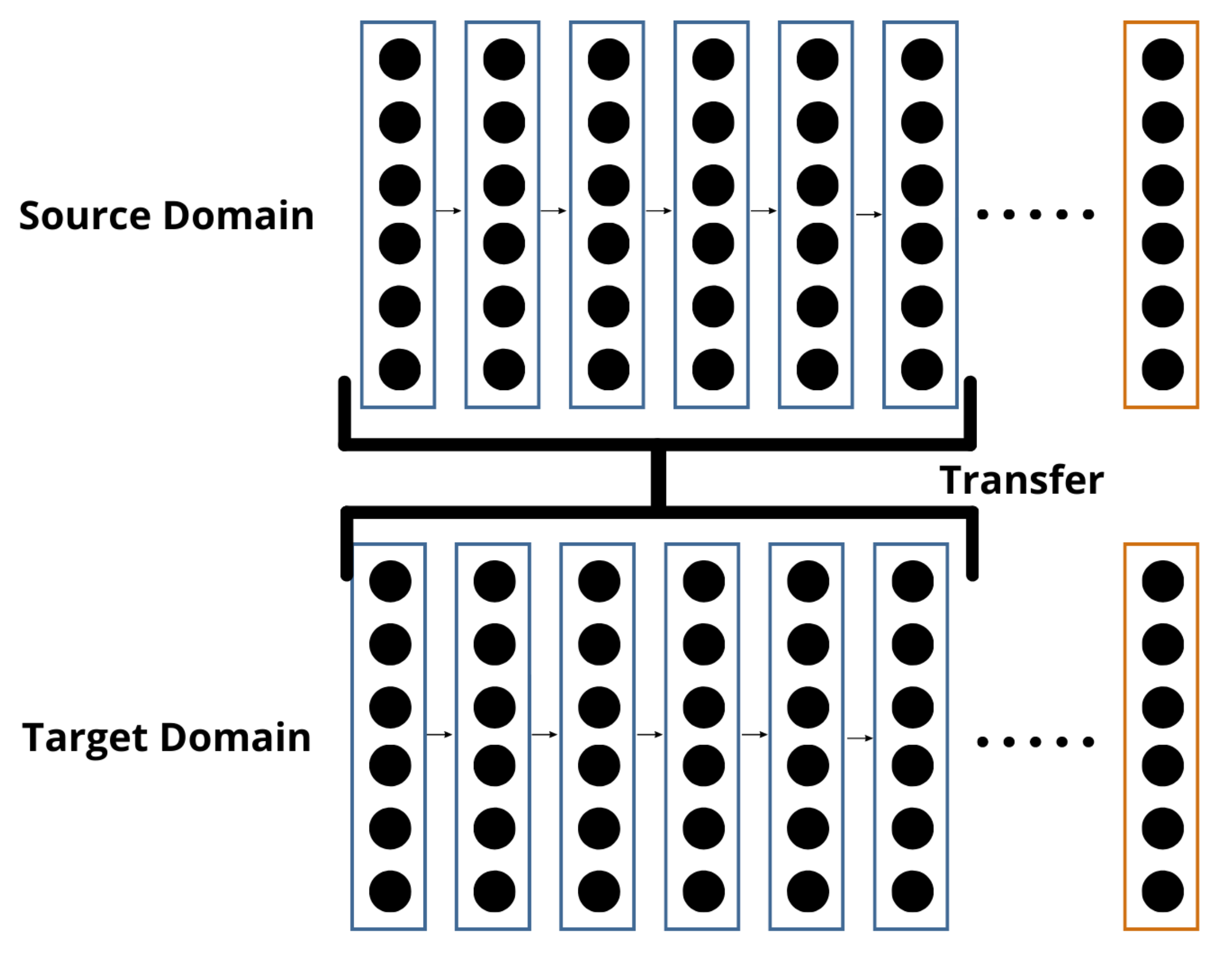

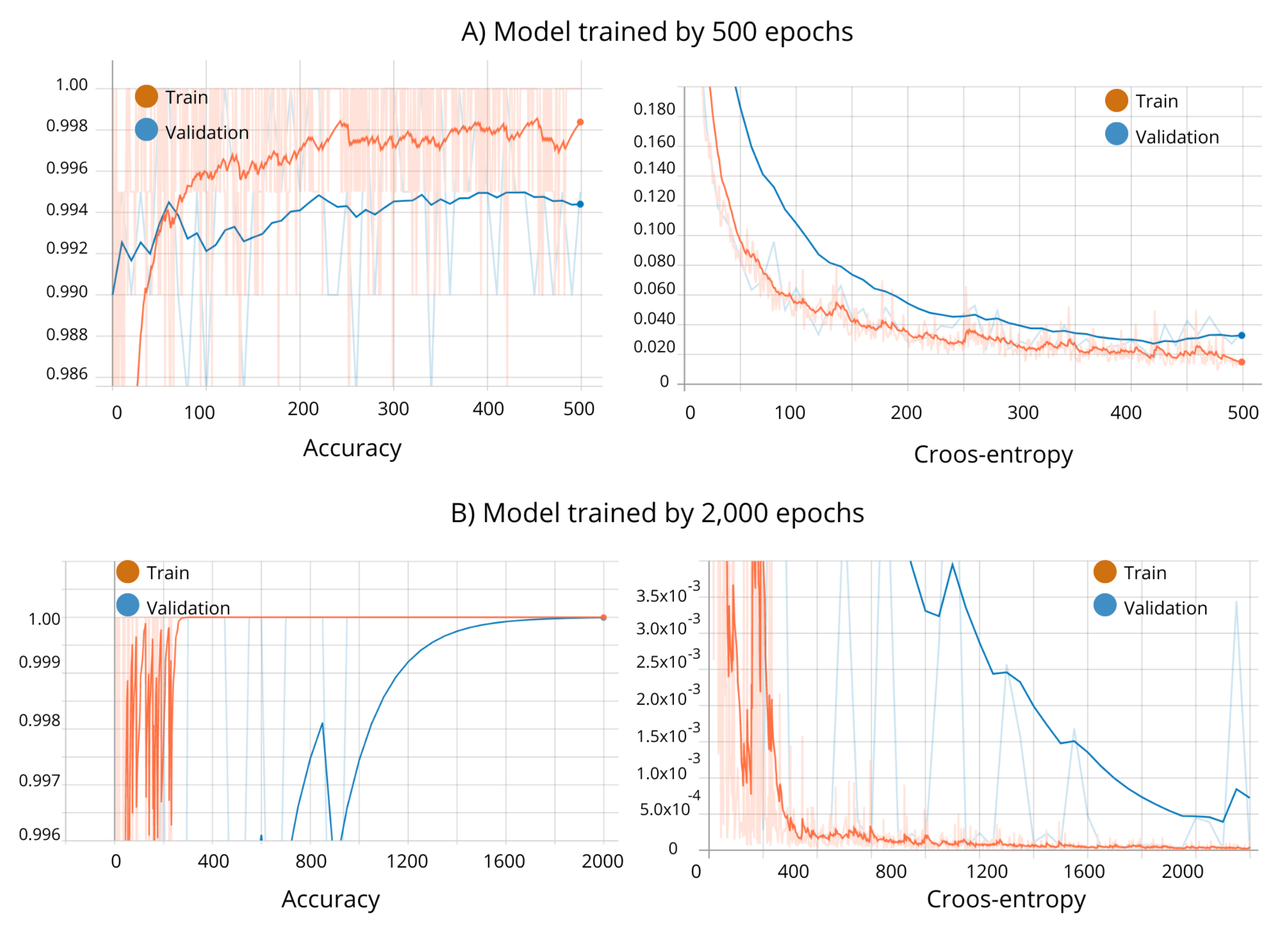

2.3. Model Generation and Validation

3. Results

3.1. Results of Data Acquisition

3.2. Results of Data Pre-Processing

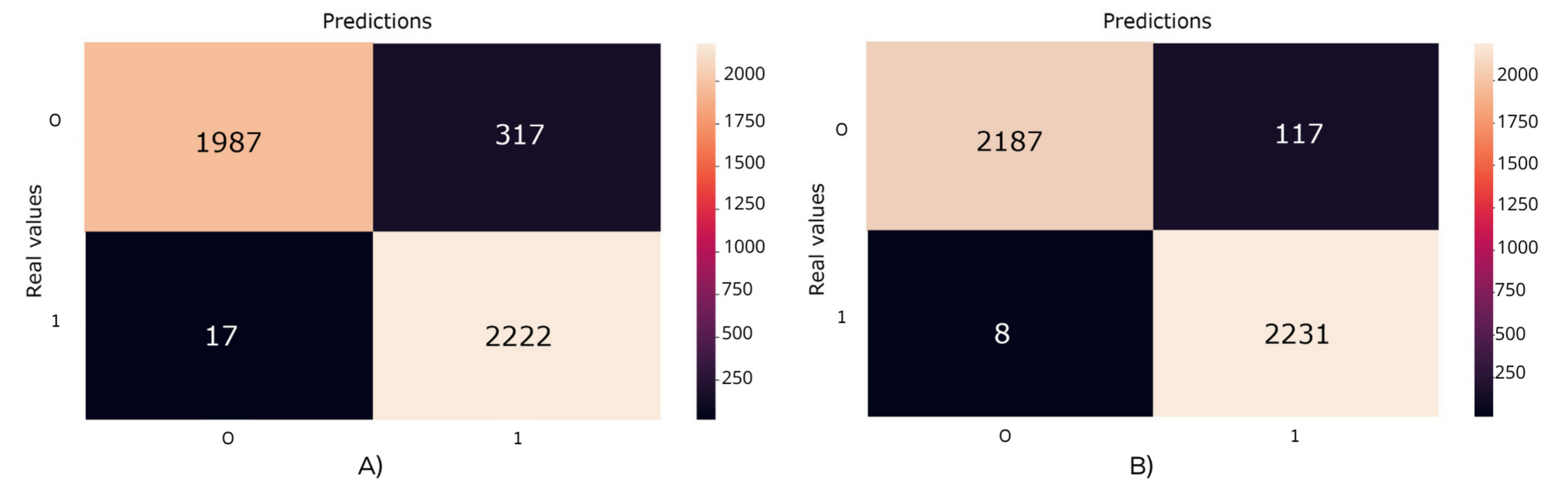

3.3. Results of Model Generation and Validation

4. Discussion

5. Conclusions

Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Cadavid, L.; Salazar-Serna, K. Mapping the Research Landscape for the Motorcycle Market Policies: Sustainability as a Trend—A Systematic Literature Review. Sustainability 2021, 13, 10813.

- Jittrapirom, P.; Knoflacher, H.; Mailer, M. The conundrum of the motorcycle in the mix of sustainable urban transport. Transp. Res. Procedia 2017, 25, 4869–4890.

- Abdi, N.; Robertson, T.; Petrucka, P.; Crizzle, A.M. Do motorcycle helmets reduce road traffic injuries, hospitalizations and mortalities in low and lower-middle income countries in Africa? A systematic review and meta-analysis. BMC Public Health 2021, 22, 824.

- Cheng, A.S.; Liu, K.P.; Tulliani, N. Relationship Between Driving-violation Behaviours and Risk Perception in Motorcycle Accidents. Hong Kong J. Occup. Ther. 2015, 25, 32–38.

- MacLeod, J.B.; Digiacomo, J.C.; Tinkoff, G. An evidence-based review: Helmet efficacy to reduce head injury and mortality in motorcycle crashes: EAST practice management guidelines. J. Trauma Inj. Infect. Crit. Care 2010, 69, 1101–1111.

- World Health Organization. Powered Two- and Three-Wheeler Safety: A Road Safety Manual for Decision-Makers and Practitioners; World Health Organization: Geneva, Switzerland, 2017.

- WHO. Global Status Report on Road Safety; WHO: Geneva, Switzerland, 2018.

- Tabary, M.; Ahmadi, S.; Amirzade-Iranaq, M.H.; Shojaei, M.; Asl, M.S.; Ghodsi, Z.; Azarhomayoun, A.; Ansari-Moghaddam, A.; Atlasi, R.; Araghi, F.; et al. The effectiveness of different types of motorcycle helmets—A scoping review. Accid. Anal. Prev. 2021, 154, 106065.

- Sharif, P.M.; Pazooki, S.N.; Ghodsi, Z.; Nouri, A.; Ghoroghchi, H.A.; Tabrizi, R.; Shafieian, M.; Heydari, S.T.; Atlasi, R.; Sharif-Alhoseini, M.; et al. Effective factors of improved helmet use in motorcyclists: A systematic review. BMC Public Health 2023, 23, 26.

- Araujo, M.; Illanes, E.; Chapman, E.; Rodrigues, E. Effectiveness of interventions to prevent motorcycle injuries: Systematic review of the literature. Int. J. Inj. Control Saf. Promot. 2017, 24, 406–422.

- WHO. WHO Kicks off a Decade of Action for Road Safety; WHO: Geneva, Switzerland, 2021.

- WHO. Helmets: A Road Safety Manual for Decision-Makers and Practitioners; WHO Library Cataloguing in Publication Data; WHO: Geneva, Switzerland, 2006; pp. 1–147.

- Craft, G.; Bui, T.V.; Sidik, M.; Moore, D.; Ederer, D.J.; Parker, E.M.; Ballesteros, M.F.; Sleet, D.A. A Comprehensive Approach to Motorcycle-Related Head Injury Prevention: Experiences from the Field in Vietnam, Cambodia, and Uganda. Int. J. Environ. Res. Public Health 2017, 14, 1486.

- International Transport Forum. Improving Motorcyclist Safety: Priority Actions for Safe System Integration. Available online: https://www.itf-oecd.org/improving-motorcyclist-safety (accessed on 3 March 2023).

- Ambak, K.; Rahmat, R.; Ismail, R. Intelligent Transport System for Motorcycle Safety and Issues. Eur. J. Sci. Res. 2009, 28, 600–611.

- Forero, M.A.V. Detection of motorcycles and use of safety helmets with an algorithm using image processing techniques and artificial intelligence models. In Proceedings of the MOVICI-MOYCOT 2018: Joint Conference for Urban Mobility in the Smart City, Medellin, Colombia, 18–20 April 2018; pp. 1–9.

- Singh, A.; Singh, D.; Singh, J.; Singh, P.; Kaur, D.A. Helmet & Number Plate Detection Using Deep Learning and Its Comparative Analysis. In Proceedings of the International Conference on Innovative Computing & Communication (ICICC) 2022, Delhi, India, 19–20 February 2022.

- Goyal, A.; Agarwal, D.; Subramanian, A.; Jawahar, C.V.; Sarvadevabhatla, R.K. Detecting, Tracking and Counting Motorcycle Rider Traffic Violations on Unconstrained Roads. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Rohith, C.A.; Nair, S.A.; Nair, P.S.; Alphonsa, S.; John, N.P. An efficient helmet detection for MVD using deep learning. In Proceedings of the International Conference on Trends in Electronics and Informatics, ICOEI 2019, Tirunelveli, India, 23–25 April 2019; pp. 282–286.

- Shine, L.; Jiji, C.V. Automated detection of helmet on motorcyclists from traffic surveillance videos: A comparative analysis using hand-crafted features and CNN. Multimed. Tools Appl. 2020, 79, 14179–14199.

- Lin, H.; Deng, J.D.; Albers, D.; Siebert, F.W. Helmet Use Detection of Tracked Motorcycles Using CNN-Based Multi-Task Learning. IEEE Access 2020, 8, 162073–162084.

- Cheng, R.; He, X.; Zheng, Z.; Wang, Z. Multi-Scale Safety Helmet Detection Based on SAS-YOLOv3-Tiny. Appl. Sci. 2021, 11, 3652.

- Jia, W.; Xu, S.; Liang, Z.; Zhao, Y.; Min, H.; Li, S.; Yu, Y. Real-time automatic helmet detection of motorcyclists in urban traffic using improved YOLOv5 detector. IET Image Process. 2021, 15, 3623–3637.

- Waris, T.; Asif, M.; Ahmad, M.B.; Mahmood, T.; Zafar, S.; Shah, M.; Ayaz, A. CNN-Based Automatic Helmet Violation Detection of Motorcyclists for an Intelligent Transportation System. Math. Probl. Eng. 2022, 2022, 8246776.

- Rasli, M.K.A.M.; Madzhi, N.K.; Johari, J. Smart Helmet with Sensors for Accident Prevention. In Proceedings of the 2013 International Conference on Electrical, Electronics and System Engineering (ICEESE), Selangor, Malaysia, 4–5 December 2013; pp. 21–26.

- Kashevnik, A.; Ali, A.; Lashkov, I.; Shilov, N. Seat Belt Fastness Detection Based on Image Analysis from Vehicle In-cabin Camera. In Proceedings of the 2020 26th Conference of Open Innovations Association (FRUCT), Yaroslavl, Russia, 20–24 April 2020; pp. 143–150.

- Sampieri, R.H. Fundamentos de Investigacion; McGraw-Hill/Interamericana: New York, NY, USA, 2017.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Team MCD. Mexico 2022. Motorcycles Market Hits The 9th Record in A String. Available online: https://www.motorcyclesdata.com/2023/02/03/mexico-motorcycles/ (accessed on 15 March 2023).

- Data Mexico. Conductores de Motocicleta: Salarios, Diversidad, Industrias e Informalidad Laboral. Available online: https://datamexico.org/es/profile/occupation/conductores-de-motocicleta (accessed on 28 April 2023).

- Padway, M. Motorcycle Accident Statistics Updated to 2023-MLF Blog. 2023. Available online: https://www.motorcyclelegalfoundation.com/motorcycle-accident-statistics-safety/ (accessed on 2 March 2023).

- Kluyver, T.; Ragan-Kelley, B.; Pérez, F.; Granger, B.; Bussonnier, M.; Frederic, J.; Kelley, K.; Hamrick, J.; Grout, J.; Corlay, S.; et al. Jupyter Notebooks—A publishing format for reproducible computational workflows. In Positioning and Power in Academic Publishing: Players, Agents and Agendas; Loizides, F., Schmidt, B., Eds.; IOS Press: Amsterdam, The Netherlands, 2016; pp. 87–90.

- Gholamy, A.; Kreinovich, V.; Kosheleva, O. Why 70/30 or 80/20 Relation Between Training and Testing Sets: A Pedagogical Explanation. Available online: https://scholarworks.utep.edu/cgi/viewcontent.cgi?article=2202&context=cs_techrep (accessed on 23 March 2023).

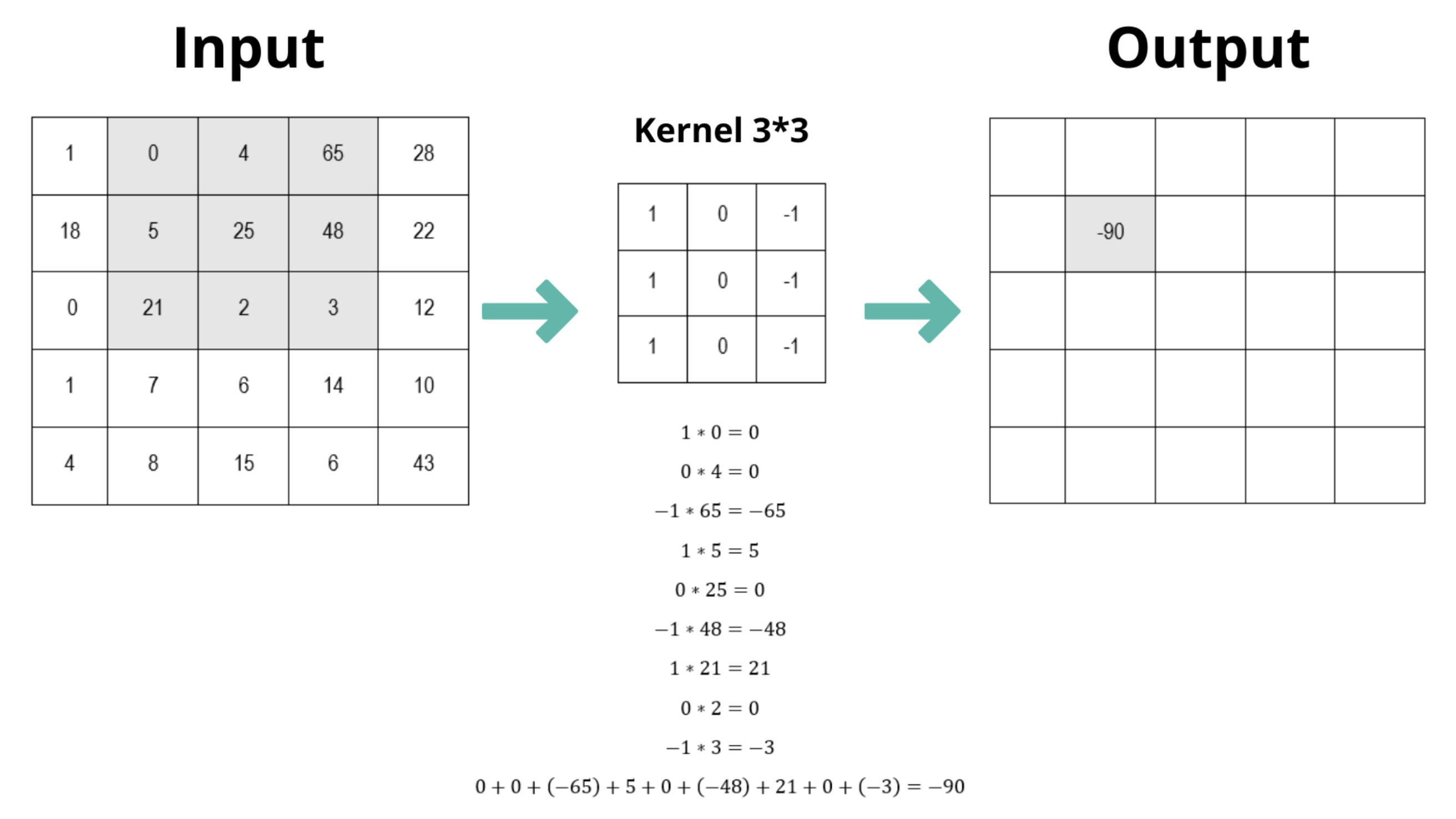

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology, ICET 2017, Antalya, Turkey, 21–23 August 2017; pp. 1–6.

- Kuo, C.C.J. Understanding Convolutional Neural Networks with A Mathematical Model. arXiv 2016, arXiv:1609.04112.

- Lin, C.; Li, L.; Luo, W.; Wang, K.; Guo, J. Transfer Learning Based Traffic Sign Recognition Using Inception-v3 Model. Period. Polytech. Transp. Eng. 2018, 47, 242–249.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems. arXiv 2016.

- Guía avanzada de Inception v3 | Cloud TPU | Google Cloud. Available online: https://cloud.google.com/tpu/docs/inception-v3-advanced?hl=es-419 (accessed on 7 March 2023).

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Nair, V.; Hinton, G.E. Rectified linear units improve Restricted Boltzmann machines. In Proceedings of the ICML 2010-Proceedings, 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010.

- Chuanqi, T.; Sun, F.; Tao, K.; Wenchang, Z.; Chao, Y.; Chunfang, L. A Survey on Deep Transfer Learning; Springer International Publishing: Cham, Switzerland, 2018; pp. 270–279.

- Cilimkovic, M. Neural Networks and Back Propagation Algorithm. Available online: https://drive.uqu.edu.sa/_/takawady/files/NeuralNetworks.pdf (accessed on 21 March 2023).

- Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. arXiv 2015, arXiv:1412.6980.

- How to Retrain an Image Classifier for New Categories | TensorFlow. Available online: https://web.archive.org/web/20180703133602/https://www.tensorflow.org/tutorials/image_retraining (accessed on 20 October 2022).

- Özbilgin, F.; Kurnaz, Ç.; Aydın, E. Prediction of Coronary Artery Disease Using Machine Learning Techniques with Iris Analysis. Diagnostics 2023, 13, 1081.

- Banaei, N.; Moshfegh, J.; Mohseni-Kabir, A.; Houghton, J.M.; Sun, Y.; Kim, B. Machine learning algorithms enhance the specificity of cancer biomarker detection using SERS-based immunoassays in microfluidic chips. RSC Adv. 2019, 9, 1859–1868.

- Bradley, A.P. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997, 30, 1145–1159.

- Pathar, R.; Adivarekar, A.; Mishra, A.; Deshmukh, A. Human Emotion Recognition using Convolutional Neural Network in Real Time. In Proceedings of the 2019 1st International Conference on Innovations in Information and Communication Technology (ICIICT), Chennai, India, 25–26 April 2019; pp. 1–7.

- Li, W.; Zhang, L.; Wu, C.; Cui, Z.; Niu, C. A new lightweight deep neural network for surface scratch detection. Int. J. Adv. Manuf. Technol. 2022, 123, 1999–2015.

| Settings | Parameters |
|---|---|
| Video Resolution | 1920 × 1080 (pixels) |
| Aspect Ratio | 16:9 |
| Field of view | Medium scope |
| Frames per Second | 30 |

| Type | Patch Size/Stride | Input Size |
|---|---|---|
| conv | 3 × 3/2 | 299 × 299 × 3 |
| conv | 3 × 3/1 | 149 × 149 × 32 |
| conv padded | 3 × 3/1 | 147 × 147 × 32 |
| pool | 3 × 3/2 | 147 × 147 × 64 |
| conv | 3 × 3/1 | 73 × 73 × 64 |
| conv | 3 × 3/2 | 71 × 71 × 80 |
| conv | 3 × 3/1 | 35 × 35 × 192 |
| 3 × Inception | Filter concat 1 | 35 × 35 × 288 |
| 5 × Inception | Filter concat 2 | 17 × 17 × 768 |
| 2 × Inception | Filter concat 3 | 8 × 8 × 1280 |
| pool | 8 × 8 | 8 × 8 × 2048 |
| linear | logits | 1 × 1 × 2048 |
| softmax | classifier | 1 × 1 × 1000 |
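
The spatial sizes in the Input Size column of the first rows can be checked with the standard output-size formula for convolution and pooling layers, out = floor((in + 2·padding − kernel) / stride) + 1. The following is a minimal sketch, not the authors' code: the helper name `conv_output_size` is hypothetical, and the padding of 1 for the "conv padded" row is an assumption, since the table does not state padding values.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Spatial output size of a square convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (layer type, kernel, stride, padding) for the first six rows of the table.
# Assumption: padding is 1 for "conv padded" and 0 elsewhere.
stem = [
    ("conv", 3, 2, 0),
    ("conv", 3, 1, 0),
    ("conv padded", 3, 1, 1),
    ("pool", 3, 2, 0),
    ("conv", 3, 1, 0),
    ("conv", 3, 2, 0),
]

size = 299  # input images are 299 x 299 x 3
sizes = [size]
for name, kernel, stride, padding in stem:
    size = conv_output_size(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # [299, 149, 147, 147, 73, 71, 35]
```

Each value after the first is the input size of the next row, which matches the table from 299 × 299 down to 35 × 35.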

| Class | Training Set (70%) | Test Set (30%) |
|---|---|---|
| C0: Without Helmet | 5376 | 2304 |
| C1: With Helmet | 5226 | 2239 |
| Total | 10,602 | 4543 |
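
As a quick consistency check, the per-class counts above can be summed and compared against the stated 70/30 proportions. This is only an illustrative sketch using the numbers in the table; the variable names are hypothetical and it does not reproduce how the split was actually performed.

```python
# Image counts per class as (training, test), copied from the table above.
counts = {
    "C0: Without Helmet": (5376, 2304),
    "C1: With Helmet": (5226, 2239),
}

train_fractions = {}
for label, (train, test) in counts.items():
    total = train + test
    train_fractions[label] = train / total
    print(f"{label}: total={total}, train fraction={train / total:.3f}")

total_train = sum(train for train, _ in counts.values())
total_test = sum(test for _, test in counts.values())
overall_fraction = total_train / (total_train + total_test)
print(f"Total: train={total_train}, test={total_test}, "
      f"train fraction={overall_fraction:.3f}")
```

Both classes and the overall totals come out at a training fraction of about 0.70, in line with the column headers.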

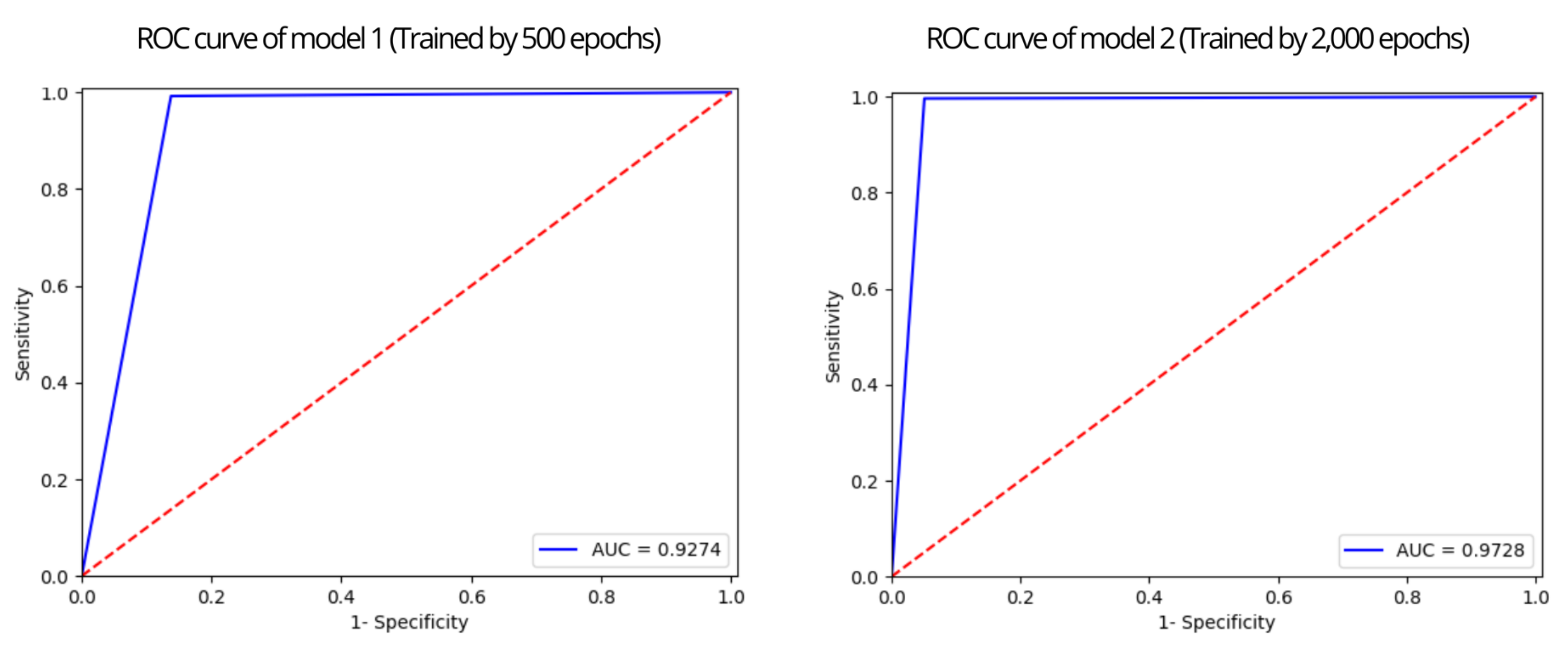

| Model | Accuracy | Sensitivity | Specificity | Precision |
|---|---|---|---|---|
| Model 1 | 0.9264 | 0.8624 | 0.9924 | 0.9915 |
| Model 2 | 0.9724 | 0.9492 | 0.9964 | 0.9963 |
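
The four metrics reported for Model 1 and Model 2 are conventionally derived from a binary confusion matrix. The sketch below assumes those usual definitions; the `ConfusionMatrix` class is hypothetical and the counts are made-up illustration values, not the confusion matrices behind the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Binary confusion-matrix counts (hypothetical helper)."""
    tp: int  # true positives
    fn: int  # false negatives
    tn: int  # true negatives
    fp: int  # false positives

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.tn + self.fp + self.fn)

    @property
    def sensitivity(self) -> float:
        # Also called recall or true positive rate.
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        # True negative rate.
        return self.tn / (self.tn + self.fp)

    @property
    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)


# Illustration values only; NOT taken from the study.
cm = ConfusionMatrix(tp=90, fn=10, tn=95, fp=5)
print(f"accuracy={cm.accuracy:.4f}")
print(f"sensitivity={cm.sensitivity:.4f}")
print(f"specificity={cm.specificity:.4f}")
print(f"precision={cm.precision:.4f}")
```

High specificity and precision combined with lower sensitivity, as in the table, means that most errors are false negatives rather than false positives.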

| Work | Model Used | Video Resolution | Accuracy | Sensitivity or Recall | Specificity | AUC | Precision |
|---|---|---|---|---|---|---|---|
| Our proposed work | Retrained Inception V3 | 1920 × 1080 | 0.9724 | 0.9492 | 0.9964 | 0.9728 | 0.9963 |
| * Singh et al. [17] | Custom CNN | N/M | 0.991 | N/M | N/M | N/M | N/M |
| Rohith et al. [19] | Retrained Inception V3 | 1920 × 1088 | 0.74 | N/M | N/M | N/M | N/M |
| Shine et al. [20] | Custom CNN | 1250 × 720 | 0.9962 | 1 | N/M | 1 | 1 |
| Lin et al. [21] | Retrained Inception V3 | 1920 × 1080 | 0.806 | N/M | N/M | N/M | N/M |
| Cheng et al. [22] | SAS-YOLOv3-tiny | N/M | 0.782 | 0.809 | N/M | N/M | 0.716 |
| Jia et al. [23] | YOLOv5-HD | 1920 × 1080 | N/M | 0.972 | N/M | N/M | 0.98 |
| Waris et al. [24] | Faster R-CNN | N/M | 0.9769 | 0.9825 | 0.9694 | N/M | 0.9770 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mercado Reyna, J.; Luna-Garcia, H.; Espino-Salinas, C.H.; Celaya-Padilla, J.M.; Gamboa-Rosales, H.; Galván-Tejada, J.I.; Galván-Tejada, C.E.; Solís Robles, R.; Rondon, D.; Villalba-Condori, K.O. Detection of Helmet Use in Motorcycle Drivers Using Convolutional Neural Network. Appl. Sci. 2023, 13, 5882. https://doi.org/10.3390/app13105882
